Report ecs group4

-

Upload

ions90j -

Category

Technology

-

view

430 -

download

3

Transcript of Report ecs group4

Francesco Cervigni Jonas Henriksson Tomas Måhlberg

Mikael Thuné Åsa Tysk

Project Report in Embedded Control Systems

LegoWay

Uppsala University 2009-09-16

Abstract: The object of this project was to build a two-wheeled self-balancing robot using the Lego Mindstorms NXT set and light sensors for detecting the tilt of the robot. The balancing was achieved with an LQG controller with a steady state Kalman filter and the implementation was done in RobotC code. Two light sensors were used. As the standard sensors included with the set did not give high enough resolution these were substituted by more advanced light sensors. The robot is sensitive to ambient lighting and surface conditions. For robots supposed to handle different locations and light settings, the use of light sensors is therefore not recommended.

2

The star of this project, Iron Buck

3

Table of Contents

1. Introduction ............................................................................................................................ 5

1.1 Problem formulation ............................................................................................................................................ 5

1.2 Group members ...................................................................................................................................................... 5

2. State of the art analysis ........................................................................................................... 5

3. Problem analysis .................................................................................................................... 6

3.1 Project goals ............................................................................................................................................................. 7

3.2 Subtasks ..................................................................................................................................................................... 7

3.2.1 The model ......................................................................................................................................................... 7

3.2.2 The sensor ........................................................................................................................................................ 7

3.2.3 The controller ................................................................................................................................................. 7

3.2.4 Robot design .................................................................................................................................................... 8

4. Our solutions .......................................................................................................................... 8

4.1 Building the robot .................................................................................................................................................. 8

4.2 Modelling ................................................................................................................................................................... 8

4.3 Sensors .................................................................................................................................................................... 10

4.3.1 Converting raw sensor data into an angle with one sensor ..................................................... 10

4.3.2 Converting raw sensor data into an angle with two sensors ................................................... 11

4.4 Controllers ............................................................................................................................................................. 12

4.4.1 LQG controller ............................................................................................................................................. 12

4.5 Simulations ............................................................................................................................................................ 17

4.5.1 Modelling in Simulink ............................................................................................................................... 17

4.5.2 Simulation results ...................................................................................................................................... 18

4.6 System verification ............................................................................................................................................. 21

4.7 Line tracking ......................................................................................................................................................... 23

4.7.1 Line tracking algorithm ........................................................................................................................... 23

4.7.2 The search algorithm ................................................................................................................................ 24

4.7.3 Line tracking not achieved ..................................................................................................................... 24

4.8 Programming and RobotC implementation ............................................................................................. 24

4.8.1 Concurrent processes ............................................................................................................................... 24

4.8.2 Sampling time .............................................................................................................................................. 25

5. Practical issues ..................................................................................................................... 25

4

5.1 Synchronization and varying power of the motors .............................................................................. 26

5.2 Motor malfunctions ............................................................................................................................................ 26

5.3 Sensor sensitive to background lighting ................................................................................................... 26

5.4 Logging data .......................................................................................................................................................... 26

5.5 Joystick driver interferes with the integer conversion ....................................................................... 27

6. Discussion ............................................................................................................................ 27

6.1 Goal Assesment .................................................................................................................................................... 27

6.2 Experiences with the RobotC environment ............................................................................................. 27

6.2.1 The Environment ........................................................................................................................................ 28

6.2.2 Debugging ...................................................................................................................................................... 28

6.2.3 OS and Tasks ................................................................................................................................................ 28

6.3 Limitations due to lack of time ...................................................................................................................... 28

6.3.1 Kalman filter estimations not good enough .................................................................................... 29

6.3.2 Not able to perform the line tracking ................................................................................................. 29

6.3.3 Better evaluation of sensor readings ................................................................................................. 29

6.4 Future work .......................................................................................................................................................... 29

Acknowledgements .................................................................................................................. 30

References ................................................................................................................................ 31

Appendix A – C code ............................................................................................................... 32

Appendix B – Matlab code ....................................................................................................... 36

Appendix C - Model blueprints ................................................................................................ 39

Appendix D – Poetry ................................................................................................................ 47

5

1. Introduction The Lego Mindstorms NXT set is a big box full of opportunities; the parts can be assembled into a wide range of shapes, which with the aid of the control brick can be put to use in an endless variety of ways. It is relatively cheap and the standard set can be extended with additional sensors and building parts. This makes it a candidate for laboratory work where the existing equipment might be expensive or for one use only; the Lego parts can be reassembled for course work with other purposes as well. They are also durable, making them ideal not only for children but also for harsh testing by engineering students.

The IT department is looking at possibilities of using the NXT in control theory education, where it might be able to illustrate the use of controllers. One laboratory assignment in a basic course of control theory is to keep an inverted pendulum balancing. The NXT parts can be assembled into a segway imitation where the control brick is supported by two wheels. This is an unstable system, which is very hard to control with just simple control theory or, even worse, by hand.

1.1 Problem formulation

The assignment is to build a two-wheeled self-balancing robot out of a Lego Mindstorms NXT. The robot should be able to follow some predefined path, preferably represented by a dark-coloured line on a white surface, while keeping upright. The idea is that this robot should be used in a control theory course, as a demonstration of what you can achieve with control theory. Since these kinds of robots already have been built quite a few times at different universities or even as hobby projects around the world the robot should also present improvements in some way - cheaper, faster or more robust.

There are some guidelines as to how this is to be solved:

• The robot must be built using only major mechanical and electronic equipment from the Lego Mindstorms NXT, with the exception of some 3rd party Lego-compatible sensors.

• The robot should be user friendly. Meaning it should contain as few parts as possible and have a robust construction design.

• The control system of the robot should be model-based.

1.2 Group members

Mikael Thuné - Group leader. Head of organization and planning. Worked with modeling and system identification.

Jonas Henriksson - Worked with programming and sensor issues. Also responsible for group communication and public relations.

Åsa Tysk - Worked with simulation and controller design. Responsible for proof reading the final report and obtaining coffee.

Tomas Måhlberg - Worked with simulation, controller and the robot design.

Francesco Cervigni - Responsible for the line tracking and the programming structure.

2. State of the art analysis Browsing the Internet reveals that building self-balancing robots is nothing new under the sun. Everyone, from amateur hobby programmer to advanced control theory researcher, seems fascinated with this simple yet elegant idea.

Material found can be broadly categorized into two categories: simple PID-based light sensor robots able to balance, and more advanced model-based gyro sensor robots able to balance and

6

move. While the actual results of the former are in some cases quite good the actual PID parameters seem to be found by trial and error and not by any kind of mathematical derivation.

More interesting are the model-based approaches. By deriving a model of the robot it is possible to either use a model-based controller or use it to design a hopefully better non-model-based controller. The most common way is to first derive a model of the system and in the case of the system not being linear, linearize it around some working point. It might also be possible to directly control the nonlinear model without linearization, for example by using partial feedback linearization. None of us in the group had any previous experience with nonlinear models and we therefore chose to concentrate on the linear models.

The most famous of the self-balancing robot is probably the Segway PT (where PT stands for personal transporter) invented by Dean Kamen and now produced by Segway Inc [1]. Being used in traffic by fragile human beings means the PT needs to be very safe and robust. For example, a failure of the electronics should not allow the machine to go out of control but slow down to a safe stop. To achieve this it incorporates two accelerometers, five gyroscopic sensors and two controller circuit boards. The large number of sensors also adds redundant input which can be used to obtain more accurate readings.

Trevor Blackwell built his own self-balancing scooter using a wheelchair motor, RC car batteries and an 8-bit microcontroller [2]. The controller used is a PD written in C. Blackwell compares the Segway with his own creation and calls it “Rolls Royce vs Model T”. While it seems to ride just fine it totally lacks safety features and makes “startlingly loud squelching sounds on tile floors”.

Another two-wheeled robot is the neat looking nBot by David P. Anderson [3]. Much of its hardware is homebuilt which is quite unusual in this kind of project. The nBot is controlled with simple a simple LQ controller and seems to be very robust to disturbances.

With the introduction of Lego Mindstorms the numbers of self-balancing robots around the world have increased rapidly. Possibly the first self-balancing Lego robot is the LegWay by Steve Hassenplug, built using the original Lego Mindstorms RCX kit [4]. The LegWay uses two advanced light sensors called EOPD (Electro Optical Proximity Detector) to estimate its tilt angle and is controlled with a simple PID controller. The LegWay is quite stable on flat surfaces but does not handle disturbances very well.

Another interesting robot is the NXTway-G by Ryo Watanabe. It uses a linearized model and state feedback control. Unlike the LegWay, and many similar light sensor based designs, it uses the engines rotary encoders to keep track of the robots position which is necessary for internal stability [5].

One of the best models available on the internet is the NXTway-GS by Yorihisa Yamamoto [6]. The results of this prototype are quite similar to the ones of the NXTway-G. Both designs use an LQ controller for balancing. The NXTway-GS is also well documented and uses known system tools. However it does not take advantage of a Kalman filter which possibly might improve the results further.

3. Problem analysis

In this section we define our goals with the project more in depth and try to divide the full problem into smaller parts.

7

3.1 Project goals

After the state of the art analysis, some project meetings and a few cups of coffee we set up some goals of our project:

To try some model based controller together with the EOPD - We have only found EOPD robots balancing with PID controllers and are curious to see if a state space controller would fare any better.

To build an EOPD based robot able to move and turn - The EOPD robots we have seen have only been able to stand still and balance or move in a very crude manner.

To build simple and modular code - There exist a number of code generating tools for LegoNXT but we wanted to program everything from scratch in RobotC. We also wanted to make the code modular to make it easy to switch between and compare different controllers.

3.2 Subtasks

The problem of balancing the two-wheeled robot can be divided into a set of subtasks, where the main one is to keep the robot upright and moving with some kind of controller. This in turn needs a sensor for estimating the body tilt angle so that the robot is aware of when it is balancing or falling. Since it is the motors that balance the robot; they are required to be synchronized and it is also important that they work regardless of how well the battery is charged. To balance the robot and having it follow a path at the same time, the controlling program has to be able to do simultaneous tasking.

3.2.1 The model

The robot will be modelled as a rectangular block on wheels. The model only needs to describe the behaviour of the robot under all the conditions it will "normally" operate under; moving, turning and falling. If the robot ends up in a state not described by our model (e.g. when disturbed so that the body tilt angle becomes too large or struck by lightning) we do not expect it to continue to balance.

3.2.2 The sensor

The sensor readings will be nonlinear with respect to the tilt angle and needs to be converted into angles if they are to be of any use in the controller. The angles need to be as accurate and precise as possible but should be very lightweight to calculate.

3.2.3 The controller

The controller is possibly the most important part of the robot. Foremost it needs to be fast enough to balance the robot before it falls. If the reference signal is the body angle of the robot then the stationary error needs to be suppressed or the robot will move in one direction. If the oscillation of the controller is too big it will be susceptible to disturbances and will probably end up falling. All of these cases need to be taken into account by the controller.

8

3.2.4 Robot design

The robot needs to be designed in very stable way to make sure it does not break. The design should also be quite simple, so the behaviour of the robot is easier to model. The centre of gravity should be placed as low as possible to make it easier to balance and we should use as few parts as possible to make it easy to build and maintain.

4. Our solutions

4.1 Building the robot

The robot is built strictly with parts from the original Lego Mindstorms NXT set apart from the sensors which were produced by a third party company. The main part is the actual computer of the NXT robot, constructed like a big Lego brick. It will from hereon be referred to as just the brick. When designing our robot prototype we focused on making it easy to build and to replicate. Building a robust robot is somewhat in conflict with building a simple one, as it takes lots of parts to make it really robust. One advantage of using only a few parts is that Lego parts are very prone to disappear. It is therefore not wise having a model dependant on some not easily available or replaceable parts. The full blueprint of our robots is available in Appendix A.

Main parts of the BUCK model are:

• The NXT brick, with a 32-bit ARM7 microcontroller with 256 Kbytes FLASH and 64 Kbytes RAM

• One original touch sensor • Two original light sensors (used only in the defunct line following version) • Two EOPD (Electro Optical Proximity Detector) sensors, an enhanced light sensor

produced by HiTechnic Products

4.2 Modelling

After having spent some time trying to model the system of the robot ourselves we decided to instead proceed by basing our model on the NXTway-GS, by Yorihisa Yamamoto. The robot is modelled as a rectangular block on two wheels. The full NXTway-GS model is three-dimensional, ours however is reduced to two dimensions. From the beginning we did this in order to simplify the model so we could get it working with minimal effort. In the end we were forced to keep this model due to lack of time to fully complete the project. The simplified physical model is illustrated in Figure 1 below.

9

Figure 1: A simplified schematic drawing of the robot in two dimensions.

Ψ depicts the body yaw angle and Ѳ is the angle of the wheels relative to their starting position.

The linearized motion of the system is described by equation 1.

equation 1

where

� = �ℎ��� ���ℎ

� = � �� ���ℎ

� = �ℎ��� ������

� = ������� ����� � ���� �� � �ℎ��� ����

�� = �ℎ��� ������ � ���

�� = �� � � ������ � ���

�� = � �� ��ℎ ������ � ���

Changes had to be made to the original model in order to take into account the differences between our robot and the NXTWay-GS. These changes include modifications prompted by the use of smaller wheels, a different placement of the centre of mass and anything else that was different on our robot. To determine what adjustments would have to be made, the radius of the wheels was measured and the parts weighed. The centre of mass was estimated by balancing to robot on a thin stick and measuring the position of this point. All physical properties were

10

written into a MATLAB m-file that can be found in Appendix B where the state space matrices were calculated.

4.3 Sensors

The sensor used for this project was the EOPD (Electro Optical Proximity Detector), a light sensor with higher resolution than the one included in the NXT kit. The tilt of the robot can be estimated by measuring the intensity of the reflected light from the sensor. In the beginning we only used one sensor which was positioned at the front of the robot. When the robot tilts forward more light is reflected and when falling backwards the sensor reads a lower intensity value. This makes it possible to establish a relationship between the light intensity and the robot tilt angle.

The EOPD is less sensitive to background lighting than the standard sensor, which is a good quality since the lighting conditions then do not impact the measurements. However, the EOPD is sensitive to colours - a light surface is a better reflector than a dark one. This means that for every new surface the robot should handle, the intensity-angle relation has to be re-evaluated.

Another drawback of using the EOPD, or any light sensor, is that the resolution of the measurements will not be equal for all angles. The mapping between intensity and angle is nonlinear and the intensity readings are integer valued resulting in non-uniform resolution. Therefore the detection of forward tilting is more accurate than that the tilt in the backwards direction. This was however solved later when we received a second EOPD which we then could mount at the back of the robot, thus making it equally accurate in forward and backward tilting. Another problem arises when the sensor is placed too close to the underlying surface. When the robot tilts the sensor also tilts. If the robot falls forward there comes a point when the intensity measurements starts to decline, instead of increasing as expected, because the sensor is angled so that it does not catch enough of the reflected light. Another limitation of using the light sensor is the fact that the surface needs to be of a uniform colour.

4.3.1 Converting raw sensor data into an angle with one sensor

To form a function for converting sensor data into an angle, direct measurements of the angle and the light value were made. This was done by holding the robot at different angles measured with a large protractor while reading the EOPD value from the RobotC debugger. These data were then plotted in MATLAB and fitted to a polynomial. To reduce the measuring errors the mean of several readings were used. We tried both the 2nd and 3rd order polynomials shown in Figure 2 and Figure 3 of which the 3rd order works the best. The polynomial coefficients were specified in our RobotC code and used to convert the sensor readings into actual angles.

11

Figure 2: 2:nd degree polynomial approximation of data

Figure 3: 3:rd degree polynomial approximation of data

This offline method gives very accurate results. However, a drawback problem is that the polynomial fitting only can be done offline. If we take into account that the EOPD is both sensitive to the background lighting and the surface material this drawback actually turns into a major problem, since it is necessary to recalibrate the function quite often.

4.3.2 Converting raw sensor data into an angle with two sensors

In order to make it easier to move the robot from one surface to another we devised a calibration scheme that is carried out every time the program starts. Taking the cubic root of the

12

measurements from a light sensor yields a relationship between the sensor values and the angle that it is linear. This made it possible to easily evaluate this relationship from the robot program at startup. First the sensor values at two known angles are measured, then a system of two equations is solved to determine two parameters, a and b. The angle is then given by the parameters multiplied with the cubic root of the sensors readings.

A stand was mounted on the robot, allowing it to rest at two known angles. These angles were large enough for the stand not to interfere with the self-balancing, i.e. by hitting the ground helping it to balance, so that they could be kept mounted on the robot at all times.

At startup measure the light intensity from the front, sensor Ifront, and back sensor, Iback sensor at two known angles ψ1 and ψ2. We want to determine the parameters a and b where

equation 2

Which is done by solving the system of equations for a and b

equation 3

Solving this system is reduced to finding the inverse of a 2x2-matrix. A lightweight operation even on the limited CPU of the NXT brick.

4.4 Controllers

The model describes an unstable system. To stabilize it and keep the robot from falling over, some kind of controller feedback needs to be applied. There are many possible controller types, more or less suitable for solving our problem. Because of the restrictions inherent to our robot and the choice of platform, RobotC, the selection narrowed a bit. The execution of RobotC code in the processor is not that fast, this left all computation-heavy controllers out of our reach. In the end we decided to try a non-model based PID controller and one that uses knowledge of the model - the LQG controller.

4.4.1 LQG controller

The LQG controller consists of two building blocks. An observer, a Kalman filter, for estimation of the unknown states and noise reduction in the measured states, and an optimal LQ gain for feedback control from the estimated states from the Kalman filter.

4.4.1.1 The Kalman filter

For our Kalman filter we chose to use the steady-state Kalman gain, for a cheap implementation with fewer computations. This meant we could obtain the Kalman filter as a discrete linear time-invariant system, i.e. on state-space form, by using functions in MATLAB's Control System toolbox. The only measurable states in our system are the angles ψ and Ѳ. Also the motor signal, u, from the previous time step is known. Hence, these signals were chosen as the input signals to our Kalman filter. The equations that need to be evaluated for each cycle can be seen in equation 4:

13

equation 4

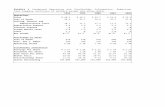

Finding the right covariance matrices for the Kalman filter proved to be rather tedious work. Using what could be considered reasonable noise variances assuming the model was correct resulted in a filter with such bad performance that the output was more or less completely useless. The solution was to instead add a lot of process noise and at the same time reduce the measurement noise. As can be seen in Figure 4&5, when using a small process noise variance the robot would still balance but oscillate quite a bit because of the inaccuracy of the state estimates. When increasing the process noise variance the estimated states would follow the measured states more closely, but the derivatives would become very noisy. This behaviour can be seen in Figure 6&7. The final version was decided by logging data on the robot and evaluating the performance of the filter. For our final Kalman filter we used the covariance matrices found in equation 5:

equation 5

14

Figure 4: Measured ψ and output from Kalman filter. Blue is the measured value and red the Kalman estimate. Q = [50 0; 0 50], R = [0.01 0; 0 0.001].

Figure 5: Measured Ѳ and output from Kalman filter. Blue is the measured value and red the Kalman estimate. Q = [50 0; 0 50], R = [0.01 0; 0 0.001].

15

Figure 6: Measured ψ and output from Kalman filter. Blue is the measured value and red the Kalman estimate. Q = [5 0; 0 1], R = [0.01 0; 0 0.001].

Figure 7: Measured Ѳ and output from Kalman filter. Blue is the measured value and red the Kalman estimate. Q = [5 0; 0 1], R = [0.01 0; 0 0.001].

16

4.4.1.2 The LQ gain

For the inverted pendulum problem it is crucial that the body tilt angle is kept small to keep the system from becoming unstable. Hence, when choosing the design parameters for the LQ controller our choice was to put a heavy penalty on the ψ angle. Additionally we have a limitation on the amount of power that can be sent to the motors. The signal can only be in the interval [-100, 100] pwm. To avoid clipping of the input signal we therefore also chose to put a heavy penalty on the input signal of the system, i.e. the motor signal. Based on these premises a first LQ controller was derived by using functions from MATLAB's Control System toolbox. The penalty matrices were then fine-tuned by analysing a series of logging of the data and evaluation of the behaviour of the robot for a large number of choices. The penalty matrices that are used for the final version of our controller can be seen in equation 6. This led to the LQ gain in equation 7.

Since the performance of our Kalman filter is not perfect, especially for the derivatives which fluctuate quite a bit, the feedback gain for the Ѳ derivative was manually adjusted to a smaller value. It was then adjusted to 30% of the calculated value, so the final LQ gain vector can be seen in equation 8.

equation 6

equation 7

equation 8

The performance of this LQG controller can be seen in Figure 8.

17

Figure 8: Plots of Kalman estimates of ψ and Ѳ, as well as the motor signal. The robot starts from leaning position (approximately 13 degrees) and automatically stands up and starts balancing.

4.5 Simulations

After obtaining a state-space model of our robot we wanted to evaluate the performance of different Kalman filters and LQ gains. For this purpose we built a model of the system in Simulink to simulate the behaviour of the robot.

4.5.1 Modelling in Simulink

The Simulink model of our system with the physical model of our robot and the LQG controller can be seen in Figure 9.

18

Figure 9: Simulink model of the system with an LQG controller.

An important limitation to our robot, which is not present in our state space of the physical system is the fact that the signal sent to the motor cannot exceed 100 pwm or be any lower than -100 pwm. This is represented by a saturation put on the input signal to the model in our simulations.

To further approximate the real environment, noise was added to the input signal and to the measurements. The noise on the input signal, the process noise, was chosen to have the signal power 1. The measurements of ψ were deemed by us to be rather accurate and the power of the noise estimated to be in the order of 0.001. The angle Ѳ is determined by a built-in function in RobotC, which outputs an integer value. This led us to choose a larger noise power, 0.1 for the Ѳ measurement.

We had great difficulty reconciling the performance of the Kalman filter in simulations with the actual behaviour on the robot. It would pass the simulations admirably but when the filter was implemented on the robot it failed the testing; the Kalman estimates of the angles would be wrong from the start and continually worsening so that the error of the estimates would finally (after a few seconds) be so large that the robot would fall over. With this discrepancy between simulations and reality, we began to believe that our model was faulty. Since the simulations are entirely dependant upon an accurate model we decided to not be overly trusting of the results. What we mostly used Simulink for was to examine if the controller would be able to stabilize the system, not how well, and checking if the motor signal would be within the allowed -100 pwm and 100 pwm values, so that the saturation property of the motor signal would not give rise to too many problems.

4.5.2 Simulation results

In this section some of the results of the simulations for the LQG controller that performed best on the actual robot are presented. Many choices of penalty matrices for the Kalman filter and LQ gain were tested and the final one, managing to balance the robot well, was designed with the parameters in equation 9 and 10:

19

The results from a 5 second simulation with the LQG controller based on the penalty choices described above are shown in the

Figure 10: The true Ѳ value and the

equation 9

equation 10

The results from a 5 second simulation with the LQG controller based on the penalty choices described above are shown in the Figures 10-12.

value and the Kalman estimation plotted for a 5 second simulation.

The results from a 5 second simulation with the LQG controller based on the penalty choices

estimation plotted for a 5 second simulation.

20

Figure 11: The true ψ value and the Kalman estimation plotted for a 5 second simulation.

Figure 12: The control signal sent to the motors plotted for a 5 second simulation.

21

4.6 System verification

Since the PID parameters obtained from simulations in Simulink did not stabilize the robot, an error in the theoretical model was suspected to be the cause. The model structure had itself been proven to work in earlier Lego robots [6] so the problem probably was the actual parameters of the model. Some parameters which were considered hardware constant were taken from previous experiments on the Lego NXT [6] and the rest were measured or approximated.

The parameters measured by us were the masses of the chassis and the wheels, the wheel radius and the distance to the centre of mass. These were all measured using a letter scales and a standard ruler and were therefore considered to be reliable enough. Most of the parameters concerning the NXT motors were taken from an experiment made by Ryo Watanabe [5] and one was taken from the report of the NXTway-GS [6].

Even though the NXT motors are mass produced there is the possibility that these parameters are different on our robot. However, the parameters most likely to be incorrect are the ones estimated by approximation. These are the different moments of inertia. Since both the shape and the mass distribution of the robot is very complex, an accurate calculation of the moments of inertia would be very hard and not worth the effort. Instead the robot was approximated as a rectangular block with uniform mass distribution and the calculations were based on that. Hence, the parameters that are most likely to be incorrect are the moments of inertia.

So in order to investigate if the theoretical model was inaccurate or not a grey box modelling approach was chosen. To accomplish this, MATLAB’s System Identification Toolbox was used. To use this toolbox a state space representation of the theoretical model is needed and also a set of gathered data. This data is the input and output signals from the actual robot during a test run. This might seem very straightforward. The problem is that the theoretical model needs to be stable in order for the toolbox to work, but the open loop system modelled is naturally unstable. The solution is to use the stabilized closed loop system instead. Which brings us to the next problem, the closed loop system needs to be represented in state space form.

To get the state space representation of the system with a PI-controller some derivations had to be made. A helpful webpage was used as a reference [7], and the resulting system can be seen in Figure 13.

Figure 13: State space representation of the close loop system with a PI-controller

A0, B0 and C0 are the state space matrices for the open loop system. So the measured signal y consists of the control error and the integral of the control error.

22

Now almost all the preparations needed for the system identification were done. What was left was logged data from a test run. In order to make the data as informative as possible, the system was excited with a reference signal consisting of three summed sinusoids. The frequencies of the sinusoids were chosen with respect to the frequency gain of the system, see Figure 14, i.e. they were placed where the system had a significant gain. The actual logging of the data ran into some problems since the PI parameters that stabilized the robot did not stabilize the theoretical model and vice versa. A compromise was therefore chosen. A set of parameters that barely stabilized both systems were found and data from test runs could now be logged.

Figure 14: Bode diagram for the closed loop system with Kp=25 and Ki=130

With the gathered data and the state space representation, the toolbox could now be run. The function used is called pem() and uses an iterative prediction-error minimization method to find the value of the specified parameters that best fits the data. After a few runs with different parameters specified for identification, some rather mixed results were collected. The moment of inertia for the wheels was identified as ten times larger than our approximated value and this was proven to be correct, since we had made some miscalculations. As for the rest of the parameters no helpful results were gained. Some were chosen up to 104 times larger and some were given a different sign. This seemed like a too big difference and when the logged outputs were compared with the outputs from the validated model, given the same reference signal and starting values, they did not match.

Given that we had little time left on the project we decided to try using system identification on the LQG controller instead. We thought the very last days would be better spent on our latest controller which finally was working properly. As before, a state space representation of the closed loop system was needed to perform the identification. First we divided the system into three parts and modelled them separately, the robot, the Kalman filter and the Feedback gain. We then used MATLAB's built in functions series() and feedback() to connect these systems in a closed loop and got a state space representation. We then used the same procedure as with the PID controller to perform the system identification. Unfortunately we did not get any satisfying

23

results at all. The parameters were way off and the loss function was huge. This pointerror in the model structure given to the toolbox and we think the problem was that we might have forgotten to update the Kalman filter when the model parameteattempts were performed on the last day and we did not have time to find and correct the problems.

4.7 Line tracking

One of the goals of the project printed in a dark colour on a white paper and formed to make an irregular track.

4.7.1 Line tracking algorithm

In order to perform the line detection we used two light sensors of the original type that came with the NXT. The two EOPD light sensors we used for approximatisensitive to changes in the light values. This means they need to be on a surface of uniform colour. If one of them should be placed over the track less light would be reflected and this would be registered as a big change in thesensor implementation. Our thoughts on how to solve this was that the line detection sensors should be mounted far from the balancing sensors, on the side of the robot. In this way our robot would move along the line and not over it, while still being able to follow it. It is however still likely that the sensors at some point is executing the search algorithm or if the track at some point int

The reference value BLACK represents the value coming from the sensor when over the line. Using this value as a threshold it is possible to detect from the incoming sensor readings if they are over the line or not.

The robot's state with respect to the line can be

• OUT: None of the sensors are • IN: Both sensors are • P_IN: Just one sensor is

using 2 sensors.

Figure

The parameters were way off and the loss function was huge. This pointerror in the model structure given to the toolbox and we think the problem was that we might have forgotten to update the Kalman filter when the model parameters changed. However, these attempts were performed on the last day and we did not have time to find and correct the

of the project was to make the robot autonomously follow a line. The line was k colour on a white paper and formed to make an irregular track.

4.7.1 Line tracking algorithm

In order to perform the line detection we used two light sensors of the original type that came with the NXT. The two EOPD light sensors we used for approximating the tilt angle are very sensitive to changes in the light values. This means they need to be on a surface of uniform

be placed over the track less light would be reflected and this would be registered as a big change in the body tilt angle. This is a major limitation of the light

Our thoughts on how to solve this was that the line detection sensors far from the balancing sensors, on the side of the robot. In this way our robot

ove along the line and not over it, while still being able to follow it. It is however still likely that the sensors at some point would be positioned over the track. Either when the robot

the search algorithm or if the track at some point intersects with itself.

The reference value BLACK represents the value coming from the sensor when over the line. Using this value as a threshold it is possible to detect from the incoming sensor readings if they are over the line or not.

robot's state with respect to the line can be (see figure 15)

sensors are over the line. Both sensors are positioned over the line. Just one sensor is over the line. Having this state is the main advantage of

Figure 15: Possible states of the line tracking

The parameters were way off and the loss function was huge. This pointed to some error in the model structure given to the toolbox and we think the problem was that we might

rs changed. However, these attempts were performed on the last day and we did not have time to find and correct the

was to make the robot autonomously follow a line. The line was k colour on a white paper and formed to make an irregular track.

In order to perform the line detection we used two light sensors of the original type that came ng the tilt angle are very

sensitive to changes in the light values. This means they need to be on a surface of uniform be placed over the track less light would be reflected and this

body tilt angle. This is a major limitation of the light Our thoughts on how to solve this was that the line detection sensors

far from the balancing sensors, on the side of the robot. In this way our robot ove along the line and not over it, while still being able to follow it. It is however still

be positioned over the track. Either when the robot ersects with itself.

The reference value BLACK represents the value coming from the sensor when entirely placed over the line. Using this value as a threshold it is possible to detect from the incoming sensor

the line. Having this state is the main advantage of

24

It is possible from the third state to know exactly what movement that has to be performed in order to achieve a better position; it can be determined on which side of the line the

The line tracking is performed as state [IN , P_IN, OUT] the action perform

• OUT : The search algorithm is performed.• IN: The robot moves slowly forward, and the search algorithm variables ar• P_IN: A movement is performed to the left or right,

the line.

4.7.2 The search algorithm

The search algorithm requires an undefined number of steps before finperformed atomically but in a sequence of single separate movements. This is because we have to continuously run the balance function. A set of global variables represent the search state. Every time the tracking function is calaccording to the search state is performed.

For each attempt to find the line, the robot searches within a given range ATTEMPT * TURNING_STEP. The variable ATTEMPT is first set to 1 and increased for each failed attempt to find the line, making the robot search in a line first the robot will know in what direction to look first.

Figure

4.7.3 Line tracking not achieved

Trouble with getting the robot to balance caused work on aside in favour of controller testing. In the end this meant that the line tracking was never actually implemented on the robot.

4.8 Programming and RobotC implementation

To get a working and balancing robot we had tRobotC-code. As always when programminglanguage used.

4.8.1 Concurrent processes

Our implementation does not make use of multiple concurrent processes. Efparallelization is impossible because the brick is a single processor system though the

It is possible from the third state to know exactly what movement that has to be performed in order to achieve a better position; it can be determined on which side of the line the

acking is performed as a continuous polling of the light sensors, returning the current state [IN , P_IN, OUT] the action performed depends on the current state:

OUT : The search algorithm is performed. slowly forward, and the search algorithm variables ar

A movement is performed to the left or right, depending on which sensor is

4.7.2 The search algorithm

The search algorithm requires an undefined number of steps before finding the line, so it is performed atomically but in a sequence of single separate movements. This is because we have to continuously run the balance function. A set of global variables represent the search state. Every time the tracking function is called and if the robot is in the state OUT, the next movement according to the search state is performed.

For each attempt to find the line, the robot searches within a given range ATTEMPT * TURNING_STEP. The variable ATTEMPT is first set to 1 and increased for each failed attempt to find the line, making the robot search in a larger area. Since it is also known which sensor left the line first the robot will know in what direction to look first.

Figure 16: Search ranges for different attempts.

4.7.3 Line tracking not achieved

Trouble with getting the robot to balance caused work on the line tracking algorithm to be set aside in favour of controller testing. In the end this meant that the line tracking was never actually implemented on the robot.

4.8 Programming and RobotC implementation

To get a working and balancing robot we had to implement all our theories and solutions and in code. As always when programming, the solutions have to be adapted somewhat to the

4.8.1 Concurrent processes

Our implementation does not make use of multiple concurrent processes. Effective parallelization is impossible because the brick is a single processor system though the

It is possible from the third state to know exactly what movement that has to be performed in order to achieve a better position; it can be determined on which side of the line the robot is.

polling of the light sensors, returning the current

slowly forward, and the search algorithm variables are reset. depending on which sensor is over

ding the line, so it is not performed atomically but in a sequence of single separate movements. This is because we have to continuously run the balance function. A set of global variables represent the search state.

led and if the robot is in the state OUT, the next movement

For each attempt to find the line, the robot searches within a given range ATTEMPT * TURNING_STEP. The variable ATTEMPT is first set to 1 and increased for each failed attempt to

wn which sensor left the

the line tracking algorithm to be set aside in favour of controller testing. In the end this meant that the line tracking was never

o implement all our theories and solutions and in the solutions have to be adapted somewhat to the

fective parallelization is impossible because the brick is a single processor system though the

25

programming environment supports multitasking. During the course of the project we tried to implement concurrent solutions anyway, earning us some experience in that field but when we realised that it would not help us we reverted to the use of a single process for all the tasks.

We made one attempt to implement concurrency in our robot, consisting of five tasks in addition o the main task. Two control tasks:

- Line_check : polling data from the light_sensors for the line tracking, switched. - Balance_check : polling data from the light_sensors used for balancing.

These would work as switches, activating and deactivating three operative tasks, giving the actual directions to the motors:

- Run_forward which would make the robot move straight forward. - Find_line which would move the robot in search of the line. - Balance which would hold the robot upright.

We abandoned this implementation, using single tasking instead for the following reasons:

- The mathematical model required an constant time step. With concurrent tasks we would not be entirely certain of exactly when the actions would have been performed, especially because of the lack of documentation available about the scheduler (a Priority-Round Robin) and the context switch.

- Complicated, to achieve the right scheduling we would have to manually change the tasks priorities.

- It would be inefficient since the continual context switches between tasks would steal time that would be much needed for computations in the balance task.

4.8.2 Sampling time

The robot balances in continuous time whereas the controller works in discrete time. Therefore the system needs to be sampled. For good performance the sampling frequency of the system should be high. This is limited by the fact that both the sensors and the motors need some time between value readings and output to the wheels. Also the computations in RobotC require some processor time every cycle of the program in between readings. Since the RobotC system functions only return the time stamps in multiples of milliseconds, 1 ms is the smallest unit by which time is measured on the robot. The EOPD sensor readings will only be updated every 3 ms and the motor output every other millisecond. This puts a lower limit of 3 ms for the sampling period. In order to allow for the time it takes to calculate the Kalman estimates and conversion of the angle, currently at 4 ms, we decided to use a time slot of 10 ms for the program and controller.

5. Practical issues During the course of the project we encountered some problems that are related to the Lego Mindstorms and sensor hardware and also the RobotC programming environment. The motor outputs from the Lego bricks output ports were not synchronized and sometimes worked in an unpredictable way. The fact that the power of the motors varied with the battery level had to be addressed and the sensor values were dependant on the lighting conditions which meant some form of calibration needed to be performed.

26

5.1 Synchronization and varying power of the motors

The robot is reliant upon the wheels for balancing and moving. Unfortunately the motors rotating the wheels were not synchronized properly, one motor was rotating at a higher pace than the other. This resulted in the robot turning slightly when it should have moved straight ahead. The RobotC language includes a function that is supposed to counteract this problem, however it did not work in a satisfactory manner. In fact, there was no apparent difference between having it turned on or off. The problem likely was a slight mechanical variation between the motors. We also noticed that the speed difference changed with varying reference speed so we solved the problem with a P controller where the faster motor was assigned to follow the slower motor, effectively synchronizing them.

When the power level in the battery of the NXT brick was reduced, the output power to the motors also decreased. This meant that the same control signal did not give the same response from the motors when the battery was well charged as when it had lost some of its capacity, making the balancing very dependant on the battery level. To remedy the problem we scaled the motor signals with a constant equal to the current battery voltage divided with the maximum battery voltage.

5.2 Motor malfunctions

Much time was spent on problems caused by the motors not functioning as they should. The outputs from the motors are dependant on how well the batteries are charged. Then there is the previously discussed matter of synchronizing the two motors. Also, when one of the motors was connected to port C, both the motors would stop dead for several samples, regain their proper functions, only to stop dead again after another short period of time. We have found no explanation for this behaviour. But it did not occur with any of the other motor ports on the NXT. This was deduced to being a bug in the firmware of the brick.

5.3 Sensor sensitive to background lighting

After many measurements and adjustments we have concluded that the EOPD sensor is not as insensitive to ambient light as the supplier describes. Our measurements gave different results, which we could only be accredited to lighting conditions since this was the only factor that changed between the measurements. We noticed a small improvement when we changed the underlying surface to a paper of a duller colour. This less reflective material seemed to be more consistent for different background lighting, though not as much as we would have wanted.

5.4 Logging data

There are some limitations to what data is possible to log with the NXT brick. First of all it is only possible to log positive integers. To be able to log negative numbers we added a large number to make all negative numbers positive, subtracting it again in MATLAB to convert back to the original values. This is not a very pretty solution unless you know what values to expect, but in the end it was good enough for us. To log floating numbers it is possible to multiply the value by a factor of 10 before type converting it into an integer, dividing it with the same number later to convert it back.

Another nuisance of using the data log on the brick is that it has to be restarted in between each run in order for the right data to be logged. The logging is done by writing variables to a log file on the brick. There is a RobotC function that saves the log file as a new file on the brick which can then be uploaded to the work station for analysis. The log file is not flushed at this command however and logging new values means adding them to the end of that file. In order to properly remove all earlier logged variables in the log file the brick has to be restarted.

27

We tried to bypass the inefficient RobotC way of logging variables and made an attempt at live logging the data straight to MATLAB via Bluetooth, using the RWTH Mindstorms NXT Toolbox. This was unsuccessful because of the limitations of the USB dongle we used (Abe UB22S [8]). It turned out that it is either not bi-directional or we could not get it to work in bi-directional mode. This was a necessary condition for using the aforementioned toolbox. Since we did a lot of analysing of data, having live logging working would have sped up the logging process considerably and saved us a lot of time.

5.5 Joystick driver interferes with the integer conversion

When using the standard RobotC driver for a joystick, which is convenient when you want to try the performance of a servo controller for the robot, the integer conversion in RobotC is somehow changed. This means you cannot typecast float variables to the integer type. Many functions in RobotC require values to be integers, e.g. the logging function(see the previous section) and the function for sending directions to the motors. This peculiarity of the driver took some time for us to figure out. The motor synchronisation solution we had devised that made one of the motors follow the other used an integer typecast which caused the command for that motor to fail. Meanwhile, what we observed was the motor port A ceasing to function. We wrongly assumed this to be a problem with the port, not the program, since we had already had some experience with port malfunctions, see section 5.2.

6. Discussion We had much fun working on this project and learned a great deal. In this section we would like to present some final thoughts on what we achieved as compared to what we initially stated as our objectives. We also present a brief evaluation of the programming environment and an account of what we did not have time to do, things that could be improved and some suggestions for future work.

6.1 Goal Assesment

In section 3.1 we defined some goals for the project: to use EOPD sensors and a model-based controller, to have the robot being able to move and turn and to make all the code for this assignment simple and modular.

The controller that we designed for our robot was indeed model-based, an LQG controller with steady state Kalman filter. The second goal of having the robot move and turn was not reached however, since the controller did not work in servo mode and the robot was only able to stand and balance. As for the code, it is simple and easily modified. Due to its rather compact form we decided that splitting it up for the sake of modularity would not enhance the readability or ease of use.

6.2 Experiences with the RobotC environment

All the programming was done in RobotC. We also had the option of using a graphical programming environment, the Embedded Coder Robot NXT, but we chose to do the implementation in RobotC instead.

28

6.2.1 The Environment

Programming of the brick was done using the RobotC programming environment by Carnegie Mallon Robotics Academy. Our summarised experience with this IDE:

RobotC pros:

• An easy language, C-like and without the need of pointers managing. • There are a many system calls available to get information from and managing the brick.

These are easy to call since their specifications and signatures are mostly available in a side frame of the environment.

• The programs are compiled and automatically downloaded to the brick via USB or Bluetooth.

• A very accurate debugger. • Good documentation.

RobotC cons:

• Frequently crashes on the computer, especially when Bluetooth communication with the brick fails.

• Some drivers (joystick drivers in particular) can cause errors with the typecasting affecting the motors and the logging behaviour.

• Some function specifications are inaccurate, or sometimes even missing.

6.2.2 Debugging

Many control windows are offered during this phase. Real-time variables can be observed during a step-by-step execution or live during runtime. The second option was very useful to observe the behaviour of the robot during the balancing without having to log data from the run. The combined use of off-line analysing of logged data and on-line variable watching in the debugger windows provides a good view of the robot behaviour and sensor values. Though not as convenient as live logging certain variables in MATLAB would have been, which we tried but were unable to implement, see section 5.4.

6.2.3 OS and Tasks

In the RobotC specification of the bricks firmware there is some documentation of how multithreading in the OS works. The scheduler uses a Round-Robin with assigned priorities where the threads are commonly called tasks.

Very important are the task control functions, a task can:

• start or stop another task. • give up its time slice. • dynamically set its own priority.

6.3 Limitations due to lack of time

When making our initial plans for how the project assignment should be solved we were very optimistic in our estimation of how much time it would take to derive a model of the system ourselves. Half of the work force was employed for several weeks on developing a model, which was ultimately scrapped in favour of the NXTway-GS model. In retrospect it would have been wiser to just skip the modelling altogether and use an already available model. This would have saved us some time, which could have been put to good use elsewhere.

29

6.3.1 Kalman filter estimations not good enough

We made rapid progress during the last few weeks of the project but in the end we really ran out of time and were unable to implement all that we wished. The main thing left to complete is the Kalman filter, which at the moment is not good enough for implementation with a servo controller. This is partly connected with the issues of the system identification - with a better model we think we would be able to build a better filter. The next step after this would be to implement the full three dimensional model in order to improve the turning capabilities of our robot.

6.3.2 Not able to perform the line tracking

We also started developing the line tracking algorithm very early. Our project group consisted of four people having studied some control theory and one person with a background in computer programming. Since the RobotC program we designed had a fairly simple structure and no advanced algorithms were needed our programmer soon ran out of things to do. So he was put to the task of making the robot follow the line. In the end we were not able to implement the line following because the servo controller of our robot does not work and it cannot balance while moving.

6.3.3 Better evaluation of sensor readings

With more time available we would like to find some better way of setting the initial values of the EOPD sensor. It should be possible to develop a good method for finding all needed settings online without the need to manually tune the parameters. We tried some solutions but none of them worked in a satisfactory way.

6.4 Future work

For future projects we do not recommend the EOPD sensors for balancing robots. While we do think it is possible to build a very stable robot far better than ours there are quite a few unavoidable problems which makes them less suitable then the gyro sensor. Using light sensors will require the surface to be of uniform colour and the robot will have difficulty balancing when the surface is not perfectly flat. These problems do not arise when working with a gyro sensor.

At one point we had an idea of using the standard NXT light sensors with a Kalman filter and state feedback for balancing. These sensors do have a lower resolution compared to the EOPD sensors but they are much less costly as they come included with the standard box. If this solution worked it would make a cheap set up for lab experiments. However, since the Kalman filter performed rather poorly we never attempted an implementation with the standard light sensors.

30

Acknowledgements We would like to thank the people working with the course for providing great help, extensive availability, equipment procurement and superb feedback on the project: Alexander Medvedev, Romeo Buenaflor, Karl Marklund and Egi Hidayat.

31

References � [1]: Segway.com <http://segway.com/> � [2]: Trevor Blackwell <http://www.tlb.org/> � [3]: nBot Balancing Robot <http://geology.heroy.smu.edu/~dpa-www/robo/nbot/> � [4]: Steve´s LegWay <http://www.teamhassenplug.org/robots/legway/> � [5]: Ryo´s Holiday <http://web.mac.com/ryo_watanabe> � [6]: NXTway-GS <http://www.mathworks.com/matlabcentral/fileexchange/19147> � [7]: PID vs. Linear Control <http://home.earthlink.net/~ltrammell/tech/pidvslin.htm> � [8]: Bluetooth adapter

<http://www.mindstorms.rwth-aachen.de/trac/wiki/BluetoothAdapter >

32

Appendix A – C code #pragma config(Sensor, S2, EOPDSensor_front, sensorHighSpeed)

#pragma config(Sensor, S3, EOPDSensor_back, sensorHighSpeed)

#pragma config(Sensor, S4, touchSensor1, sensorTouch)

#pragma config(Motor, motorA, mot_A, tmotorNormal, openLoop, )

#pragma config(Motor, motorB, mot_B, tmotorNormal, openLoop, )

//*!!Code automatically generated by 'ROBOTC' configuration wizard

!!*//

void initiate();

void balance();

float controller();

float get_angle();

// Kalman filter and LQG controller parameters, generated by MATLAB

const float A_kalman[4][4] = {0.62306648, 0.85921357, 0.00441865, 0.00553713,

0.08679139, 0.55889584, 0.00194059, 0.00808854,

-0.56002930, -0.45173857, 0.27036566, 0.71947306,

0.05872239, -0.57039578, 0.25410188, 0.75356152};

const float B_kalman[4][4] = {0.00542480, 0.00542480, 0.37693352, -0.86937486,

-0.00188616, -0.00188616, -0.08679139, 0.44876757,

0.70916911, 0.70916911, 0.56002930, -0.66609073,

-0.24697468, -0.24697468, -0.05872239, 1.78653831};

const float C_kalman[4][4] = {0.66520288, 0.73866618, 0.00000000, 0.00000000,

0.07386662, 0.61037187, 0.00000000, 0.00000000,

-16.13045327, 53.45637964, 1.00000000, 0.00000000,

5.39792503, -19.76754552, 0.00000000, 1.00000000};

const float D_kalman[4][4] = {0.00000000, 0.00000000, 0.33479712, -0.73866618,

0.00000000, 0.00000000, -0.07386662, 0.38962813,

0.00000000, 0.00000000, 16.13045327, -53.45637964,

0.00000000, 0.00000000, -5.39792503, 19.76754552};

const float L_theta = -0.00000056;

const float L_psi = -32.23874313;

const float L_theta_dot = -1.00138809*0.3;

const float L_psi_dot = -2.96306334;

const float dt = 0.010; // Sampling time of system (10 ms)

float equi = 1.4; // Equilibrium angle of the robot, needed to adjust the psi

value

// psi=0 when the robot is perpendicular to the surface

float u_front = 0; // Sensor value read from the EOPD sensor in front

float u_back = 0; // Sensor value read from the EOPD sensor in back

float motor_signal = 0; // Signal power to be sent to motors

float psi = 0; // Body yaw

float theta = 0; // Wheel angle

float psi_1 = 13; // Body yaw angle for front measurments during initiate

float psi_2 = -19; // Body yaw angle for back measurments during initiate

float sens_front = 0; // Constants used for mapping sensor readings to psi angle

float sens_back = 0;

float x_est[4] = {0,0,0,0}; // Kalman filter state estimates

float x_prev[4] = {0,0,0,0}; // Kalman filter state estimates from previous time

step

float u_kalman[4] = {0,0,0,0}; // Input signal to Kalman filter

float x_new[4] = {0,0,0,0}; // Updated states

float V_max_full = 7740; // Maximum voltage when battery is fully charged (in mV)

float V_max_current = 0; // Current maximum voltage

float battery_scale = 1; // Scale the motor signals with respect to battery level

33

//MAIN

//---------------------------------------------

task main() {

/* main() executes the intiate function and then enters the main loop. */

// Calculate battery scaling from current maximum voltage

V_max_current = nAvgBatteryLevel;

battery_scale = V_max_full / V_max_current;

// Execute initiate to calibrate sensors->angle mapping

initiate();

// Wait for button to be pressed before starting main loop

eraseDisplay();

nxtDisplayTextLine(1, "Waiting", );

while(SensorValue(touchSensor1) == 0);

wait1Msec(1000);

eraseDisplay();

nxtDisplayTextLine(1, "Running", );

// Read body yaw angle to give the Kalman filter a good start guess

// if the robot is not held upright at start

x_prev[1] = get_angle();

// Run main loop until touch sensor pressed

while(SensorValue(touchSensor1) == 0) {

ClearTimer(T1);

balance(); // Balance the robot

while(time1[T1] < (dt*1000)); // Stay idle until next time slice

}

// Save data log

SaveNxtDatalog();

}

//---------------------------------------------

//INITIATE

//---------------------------------------------

void initiate() {

/* initiate() takes two readings at known psi angles from the

two EOPD sensors, and from these values evaluates two constants

used for the sensors->psi angle mapping. The first position is

when the robot is tilted forward psi_1 degrees and for the second

it is tilted backwards psi_2 degrees. */

nxtDisplayCenteredTextLine(1, "When ready,", );

nxtDisplayCenteredTextLine(3, "press button for", );

nxtDisplayCenteredTextLine(5, "front measurement", );

// Wait for button to be pressed before taking first measurement

while(SensorValue(touchSensor1) == 0);

wait1Msec(1000);

float u_front_1 = exp(0.33333*log(1023-SensorValue(EOPDSensor_front)));

float u_back_1 = exp(0.33333*log(1023-SensorValue(EOPDSensor_back)));

nxtDisplayCenteredTextLine(1, "When ready,", );

nxtDisplayCenteredTextLine(3, "press button for", );

nxtDisplayCenteredTextLine(5, "back measurement", );

// Wait for button to be pressed before taking second measurement

while(SensorValue(touchSensor1) == 0);

wait1Msec(1000);

float u_front_2 = exp(0.33333*log(1023-SensorValue(EOPDSensor_front)));

float u_back_2 = exp(0.33333*log(1023-SensorValue(EOPDSensor_back)));

float det = 1/(u_front_1*u_back_2-u_front_2*u_back_1);

34

// Evalute the two contants used for the sensors->psi mapping

sens_front = det*(u_back_2*psi_1-u_back_1*psi_2);

sens_back = det*(u_front_1*psi_2-u_front_2*psi_1);

}

//---------------------------------------------

//BALANCE

//---------------------------------------------

void balance() {

/* balance() essentially reads control signal and sends power to the

motors accordingly. */

// Get new motor signal from controller

motor_signal = controller();

// Clip signal if it exceeds max or min values

if (motor_signal<-100)

motor_signal = -100;

else if (motor_signal>100)

motor_signal = 100;

// Send motor signals to motors, the signal sent to motor A is corrected

// by a simple P controller to make the robot move straight

motor[mot_A] = battery_scale*(motor_signal+(1.2*(nMotorEncoder[mot_B]-

nMotorEncoder[mot_A])));

motor[mot_B] = battery_scale*motor_signal;

}

//---------------------------------------------

//GET_ANGLE

//---------------------------------------------

float get_angle() {

/* get_angle() reads the current sensor values from the two EOPD sensors

and maps these to a psi angle. */

u_front = 1023-SensorValue(EOPDSensor_front);

u_back = 1023-SensorValue(EOPDSensor_back);

psi = sens_front*exp(0.33333*log(u_front)) + sens_back*exp(0.33333*log(u_back));

// Correct psi for robot equlibrium angle

return psi - equi;

}

//---------------------------------------------

//CONTROLLER

//---------------------------------------------

float controller() {

/* controller() calucluates the Kalman filter state estimates and returns

the LQ control signal to caller. */

// Get psi (body yaw angle)

psi = get_angle();

// Get theta (wheel angle)

theta = 0.5*(nMotorEncoder[motorA] + nMotorEncoder[motorB]) + psi;

// Set input signals to Kalman filter

u_kalman[0] = motor_signal;

u_kalman[1] = motor_signal;

u_kalman[2] = theta;

u_kalman[3] = psi;

// Reset old variables

for (int i=0;i<4;i++) {

x_new[i] = 0;

x_est[i] = 0;

}

35

// x_est = C*x_prev + D*u

for (int r=0;r<4;r++)

for (int k=0;k<2;k++)

x_est[r] += x_prev[k]*C_kalman[r][k];

x_est[2] += x_prev[2];

x_est[3] += x_prev[3];

for (int r=0;r<4;r++)

for (int k=2;k<4;k++)

x_est[r] += u_kalman[k]*D_kalman[r][k];

// x_prev = A*x_prev + B*u

for (int r=0;r<4;r++)

for (int k=0;k<4;k++)

x_new[r] += x_prev[k]*A_kalman[r][k] + u_kalman[k]*B_kalman[r][k];

for (int i=0;i<4;i++)

x_prev[i] = x_new[i];

// Uncomment these lines if a log is needed. The data need to be corrected

// accordingly when read in MATLAB.

/*AddToDatalog(0,(int)(theta)+10000);

AddToDatalog(0,(int)(x_est[0])+10000);

AddToDatalog(0,(int)(psi*100)+10000);

AddToDatalog(0,(int)(x_est[1]*100)+10000);

AddToDatalog(0,(int)(x_est[2]*10)+10000);

AddToDatalog(0,(int)(x_est[3]*10)+10000);*/

// return LQ control signal

return -

(L_theta*x_est[0]+L_psi*x_est[1]+L_theta_dot*x_est[2]+L_psi_dot*x_est[3]);

}

//---------------------------------------------

36

Appendix B – Matlab code clc

clear all

%% NXTway-GS Parameters and State-Space Matrix Calculation %%

% Physical Constant

g = 9.82; % gravity acceleration [m/sec^2]

% NXTway-GS Parameters

m = 0.017; % wheel weight [kg]

R = 0.028; % wheel radius [m]

Jw = 6.7e-5; % wheel inertia moment [kgm^2]

M = 0.57; % body weight [kg]

W = 0.14; % body width [m]

D = 0.06; % body depth [m]

H = 0.144; % body height [m]

L = 0.08; % distance of the center of mass from the wheel

axle [m]

Jpsi = 0.5e-3;%M*L^2/3%3e-3; % body pitch inertia moment [kgm^2]

Jphi = 9.67e-4; % body yaw inertia moment [kgm^2]

fm = 0.0022; % friction coefficient between body & DC motor

fw = 0; % friction coefficient between wheel & floor

% DC Motor Parameters

Jm = 1e-6; % DC motor inertia moment [kgm^2]

Rm = 6.69; % DC motor resistance [Ħ]

Kb = 0.468; % DC motor back EMF constant [Vsec/rad]

Kt = 0.317; % DC motor torque constant [Nm/A]

n = 1; % gear ratio

% NXTway-GS State-Space Matrix Calculation

alpha = n * Kt / Rm;

beta = n * Kt * Kb / Rm + fm;

tmp = beta + fw;

E_11 = (2 * m + M) * R^2 + 2 * Jw + 2 * n^2 * Jm;

E_12 = M * L * R - 2 * n^2 * Jm;

E_22 = M * L^2 + Jpsi + 2 * n^2 * Jm;

detE = E_11 * E_22 - E_12^2;

A1_32 = -g * M * L * E_12 / detE;

A1_42 = g * M * L * E_11 / detE;

A1_33 = -2 * (tmp * E_22 + beta * E_12) / detE;

A1_43 = 2 * (tmp * E_12 + beta * E_11) / detE;

A1_34 = 2 * beta * (E_22 + E_12) / detE;

A1_44 = -2 * beta * (E_11 + E_12) / detE;

B1_3 = alpha * (E_22 + E_12) / detE;

B1_4 = -alpha * (E_11 + E_12) / detE;

A1 = [

0 0 1 0

0 0 0 1

0 A1_32 A1_33 A1_34

0 A1_42 A1_43 A1_44

];

B1 = [

0 0

0 0

B1_3 B1_3

B1_4 B1_4

];

C1 = eye(4);

D1 = zeros(4, 2);

I = m * W^2 / 2 + Jphi + (Jw + n^2 * Jm) * W^2 / (2 * R^2);

J = tmp * W^2 / (2 * R^2);

K = alpha * W / (2 * R);

37

A2 = [

0 1

0 -J / I

];

B2 = [

0 0

-K / I K / I

];

C2 = eye(2);

D2 = zeros(2);

A = [[A1 zeros(4,2)]; [zeros(2,4) A2]];

B = [B1; B2];

C = [[C1 zeros(4,2)]; [zeros(2,4) C2]];

D = [D1; D2];

Ts = 0.010; % sampling time

sys_c = ss(A1,B1,C1,D1); % continuous system

sys_d = c2d(sys_c,Ts); % time-discrete system

%% Kalman filter calculations %%

Ck = C1(1:2,:); % only two first states (psi and theta) measureable

Dk = zeros(2,4);

plant = ss(A1,[B1 B1],Ck,Dk,'InputName',{'u1','u2','w1','w2'},'OutputName',{'theta'

'psi'});

plant_d = c2d(plant,Ts); % discrete state-space model of plant

Q = [50 0; % process noise

0 50];

R = [0.01 0; % measurement noise

0 0.001];

[kalmf,L,P,M,Z] = kalman(plant_d,Q,R); % create steady-state Kalman filter

kalmf = kalmf(3:end,:) % only interested in outputting plant states

% (last four states in Kalman filter)

%% LQG regulator computation %%

Ql = [1 0 0 0; 0 1e6 0 0;0 0 1 0; 0 0 0 1];

Rl = [1e12 0;0 1e12];

[X_,L_,LQG] = dare(sys_d.a,sys_d.b,Ql,Rl); % compute LQG parameters

% %% LQG with servo

% Ck = C1(1:2,:); % only two first states (psi and theta) measureable

% Dk = zeros(2,2);

% plant = ss(A1,B1,Ck,Dk,'InputName',{'u1','u2'},'OutputName',{'theta' 'psi'});

% plant_d = c2d(plant,Ts); % discrete state-space model of plant

%

% nx = 4; %Number of states

% ny = 2; %Number of outputs

% Q = 0.5*eye(nx);

%

% Ql = [1 0 0 0; 0 1e9 0 0; 0 0 1 0; 0 0 0 1];

% Rl = [1e12 0;0 1e12];

%

% R = [1 0; % measurement noise

% 0 0.1];

% QXU = blkdiag(Ql,Rl);

% QWV = blkdiag(Q,R);

% QI = [1 1e2; 1e2 1e6];

%

% KLQG = lqg(plant_d,QXU,QWV,QI)

%% Generate C code for Kalman & LGQ parameters

38

fprintf(1,'const float A_kalman[%d][%d] = {',[size(kalmf.a,1),size(kalmf.a,2)]);

for i=1:size(kalmf.a,1)

for j=1:size(kalmf.a,2)

fprintf(1,'%0.8f, ',kalmf.a(i,j));

end

if i~=size(kalmf.a,1)

fprintf(1,'\n ');

else

fprintf(1,'\b\b};\n\n');

end

end