Setup of Swiss CMS Tier-3


Zhiling Chen (IPP-ETHZ), PhD students' seminar (Doktorandenseminar)

June 4, 2009


Outline
• Introduction to the CMS Computing Model
• Setup of the Swiss CMS Tier-3 at PSI
• Working on the Swiss CMS Tier-3
• Operational Experience

LCG Tier Organization


Tier-0: CERN
Tier-1 centres: ASGC, RAL, FNAL, IN2P3, FZK, CNAF, PIC
Tier-2 centres shown in the diagram: India, KNU, Taiwan, Russian sites, Helsinki, London, Rutherford PPD, Bristol, Caltech, Wisconsin, UCSD, MIT, Purdue, Nebraska, Florida, UERJ, SPRACE, Beijing, Belgium, IN2P3-AF, GRIF, Warsaw, CSCS, DESY, RWTH, Rome, Bari, Legnaro, Pisa, Hungary, LIP-Lisbon, CIEMAT, IFCA, LIP-Coimbra
Tier-3 highlighted: Swiss CMS Tier-3 at PSI

• T0 (CERN): filter farm; custodial storage of raw data; prompt reconstruction
• 7 T1s: custodial storage of raw data (shared); re-reconstruction; skimming and calibration
• ~40 T2s: centrally scheduled MC production; analysis and MC simulation for all CMS users
• Many T3s at institutes: serve the local institutes' users; final-stage analysis and MC simulation; optimized for the users' analysis needs

CSCS: Swiss Tier-2 for ATLAS, CMS, LHCb …

PSI: Tier-3 for the Swiss CMS community

CMS Data Organization

Physicist’s View
• Event collection
• Dataset: a set of event collections that would naturally be grouped for analysis
• To process events: find, transfer, access

System View
• Files
• File blocks: files grouped into blocks of reasonable size or logical content
• To operate on files: store file blocks in the Grid; transfer and access files in different storage systems; manage replicas
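Grouping files into blocks "of reasonable size" can be pictured as packing files under a size cap. The following is a minimal Python sketch assuming a simple greedy cap; the `File` and `FileBlock` types, the cap value, the LFNs and the simplified `dataset#index` block names are hypothetical illustrations, not the DBS schema or the actual CMS block-closing policy.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class File:
    lfn: str          # logical file name (hypothetical values below)
    size_gb: float


@dataclass
class FileBlock:
    name: str         # simplified "dataset#index" naming (hypothetical)
    files: List[File] = field(default_factory=list)

    @property
    def size_gb(self) -> float:
        return sum(f.size_gb for f in self.files)


def group_into_blocks(dataset: str, files: List[File], max_block_gb: float) -> List[FileBlock]:
    """Greedily pack files, in order, into blocks no larger than max_block_gb.

    A single file bigger than the cap still gets a block of its own.
    """
    blocks: List[FileBlock] = []
    current = FileBlock(name=f"{dataset}#0")
    for f in files:
        # Close the current block if adding this file would exceed the cap.
        if current.files and current.size_gb + f.size_gb > max_block_gb:
            blocks.append(current)
            current = FileBlock(name=f"{dataset}#{len(blocks)}")
        current.files.append(f)
    if current.files:
        blocks.append(current)
    return blocks


# Five 2 GB files with a (hypothetical) 4 GB cap give blocks of 2, 2 and 1 files.
files = [File(f"/store/example/file_{i}.root", 2.0) for i in range(5)]
blocks = group_into_blocks("/Example/Dataset/RECO", files, max_block_gb=4.0)
print([len(b.files) for b in blocks])
```

Greedy in-order packing keeps files of one production run together, which matches the idea of blocks as units of "logical content" as well as size.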


Mapping Physicist and System Views

Physicist’s view -> CMS data management
• Find
  • "What data exist?" -> Data Bookkeeping System (DBS): standardized, queryable information on event data; maps event collections to files/file blocks
  • "Where are data located?" -> Data Location Service (DLS): maps file blocks to locations
• Transfer -> PhEDEx: Data Transfer and Placement System
• Access -> LCG commands: SRM and POSIX I/O

CMS Analysis Workflow

Components in the workflow diagram:
• Global CMS data management services: Data Bookkeeping DB (DBS), Data Location DB (DLS), PhEDEx global data transfer agents and database
• CMS Tier-3 local site: Tier-3 User Interface with the CRAB analysis tool, Tier-3 local cluster, Tier-3 Storage Element, PhEDEx local data transfer agents
• Also shown: LHC Grid computing, remote SE, File Transfer Service

CRAB is a Python program that simplifies the creation and submission of CMS analysis jobs to a Grid environment.
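CRAB reads the description of an analysis task from a configuration file. The following is a minimal Python sketch that writes such a file with `configparser`, assuming the CRAB 2 `crab.cfg` layout with [CRAB], [CMSSW] and [USER] sections; the key names are given as recalled and should be checked against the CRAB documentation, and the scheduler name `sge` for the Tier-3's SGE module, the dataset path and the pset file name are assumptions.

```python
import configparser
import io


def make_crab_cfg(datasetpath: str, pset: str, events_per_job: int,
                  scheduler: str = "sge") -> configparser.ConfigParser:
    """Build a CRAB-2-style task configuration (key names assumed, see lead-in)."""
    cfg = configparser.ConfigParser()
    cfg["CRAB"] = {"jobtype": "cmssw", "scheduler": scheduler}
    cfg["CMSSW"] = {
        "datasetpath": datasetpath,
        "pset": pset,                         # CMSSW configuration of the analysis
        "total_number_of_events": "-1",       # -1: process all events
        "events_per_job": str(events_per_job),
        "output_file": "output.root",
    }
    cfg["USER"] = {"return_data": "1"}        # copy job output back to the UI
    return cfg


# Hypothetical dataset and pset names.
cfg = make_crab_cfg("/Example/Dataset/RECO", "analysis_cfg.py", 10000)
buf = io.StringIO()
cfg.write(buf)                                # same text that would go into crab.cfg
print(buf.getvalue())
```

Generating the file from a function makes it easy to switch the same task between the Grid and the local cluster by changing only the scheduler value.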

Overview of Swiss CMS Tier-3


• For CMS members of ETHZ, the University of Zurich and PSI
• Located at PSI
• Tries to adapt as closely as possible to the users' analysis needs
• Ran in test mode in October 2008; in production mode since November 2008
• 30 registered physicist users
• Manager: Dr. Derek Feichtinger; assistant: Zhiling Chen

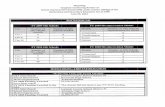

Hardware of Swiss CMS Tier-3


Present computing power

No. of Worker Nodes   Processors       Cores/Node   Total Cores
8                     2 × Xeon E5410   8            64

Present storage

No. of File Servers   Type        Space/Node (TB)   Total Space (TB)
6                     SUN X4500   17.5              107

Layout of Swiss CMS Tier-3 at PSI


Components:
• User Interface
• CMS VoBox (PhEDEx)
• Storage Element (t3se01.psi.ch): dCache admin, dcap, SRM, gridftp, resource information provider
• NFS server: home and shared software directories (CMSSW, CRAB, gLite)
• DB server: PostgreSQL, PNFS, dCache PNFS cell
• File servers: dCache pool cells, gridftp, dcap, gsidcap
• Computing Element: Sun Grid Engine
• Worker nodes: Sun Grid Engine clients
• Monitoring: Ganglia collector, Ganglia web front end

Interactions shown: user login; submit/retrieve batch jobs; dispatch/collect batch jobs; submit/retrieve LCG jobs; access to home/software directories; access to the local SE (SRM, gridftp, dcap …); access to the PhEDEx central DB; access to remote SEs; the CMS Tier-3 at PSI is accessed by the LCG.

Network connectivity: PSI has a 1Gb/s uplink to CSCS.
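The 1 Gb/s uplink bounds how quickly data can be moved between CSCS and PSI. A back-of-the-envelope Python sketch; the `efficiency` factor is an assumption, since real throughput depends on the storage systems at both ends and on competing traffic.

```python
def transfer_hours(size_tb: float, link_gbps: float = 1.0, efficiency: float = 1.0) -> float:
    """Hours to move size_tb terabytes (10**12 bytes) over a link_gbps (10**9 bit/s) link.

    efficiency is the assumed fraction of the nominal bandwidth actually achieved.
    """
    bits = size_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600.0


# 1 TB at the full 1 Gb/s takes 8000 s, i.e. about 2.2 hours.
print(round(transfer_hours(1.0), 2))
# The same transfer if only half of the link is usable (assumed efficiency).
print(round(transfer_hours(1.0, efficiency=0.5), 2))
```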

Setup of Swiss CMS Tier-3

User Interface (8 cores): t3ui01.psi.ch
A fully operational LCG UI. It enables users to:
• log in from outside
• submit and manage local jobs on the Tier-3 local cluster
• interact with the LCG Grid: submit Grid jobs, access storage elements, etc.
• interact with AFS, CVS …
• test their jobs

Local batch cluster (8 worker nodes × 8 cores):
• Batch system: Sun Grid Engine 6.1

Setup of Swiss CMS Tier-3 (cont.)

Storage Element (SE): t3se01.psi.ch
A fully equipped LCG storage element running dCache. It allows users to:
• access files from local jobs in the Tier-3 (dcap, srmcp, gridftp, etc.)
• access files from other sites (srmcp, gridftp)
• get extra space in addition to their space at the CSCS Tier-2

NFS server (for small storage):
• hosts users' home directories: analysis code, job output
• hosts shared software: CMSSW, CRAB, gLite …
• easy to access, but not suited to huge files

Note: if you need large storage space for a longer time, use the SE.
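Local jobs reach files on the SE through several protocols, each with its own URL form. A Python sketch of turning a CMS logical file name (LFN) into per-protocol URLs; the `/pnfs/...` namespace prefix, the example LFN and the port numbers are assumptions for illustration (the ports are commonly used dCache defaults), not the documented PSI configuration, which in CMS is defined by the site's file catalogue.

```python
# Hypothetical namespace prefix on the dCache SE.
PNFS_PREFIX = "/pnfs/psi.ch/cms/trivcat"
SE_HOST = "t3se01.psi.ch"

# Commonly used dCache default ports (assumption: check the site configuration).
PORTS = {"dcap": 22125, "gsidcap": 22128, "gsiftp": 2811, "srm": 8443}


def lfn_to_pfn(lfn: str) -> str:
    """Map a CMS LFN (/store/...) to a physical path in the SE namespace."""
    if not lfn.startswith("/store/"):
        raise ValueError("expected a CMS LFN starting with /store/")
    return PNFS_PREFIX + lfn


def access_url(lfn: str, protocol: str) -> str:
    """Build the URL a job would use for the given protocol."""
    pfn = lfn_to_pfn(lfn)
    port = PORTS[protocol]
    if protocol == "srm":
        # SRM v2 endpoint form with the file path passed as the SFN parameter.
        return f"srm://{SE_HOST}:{port}/srm/managerv2?SFN={pfn}"
    return f"{protocol}://{SE_HOST}:{port}{pfn}"


# Hypothetical user output file.
for proto in ("dcap", "gsiftp", "srm"):
    print(access_url("/store/user/jdoe/out_1.root", proto))
```

Keeping only LFNs in analysis code and deriving URLs in one place means a change of prefix or door only has to be made once.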

Setup of Swiss CMS Tier-3 (cont.)

CMS VoBox (PhEDEx):
• Users can order datasets to the Tier-3 SE
• Admins can manage datasets with PhEDEx

Monitoring: status of the batch system, accounting, worker node load, free storage space, network activity …

Working on Swiss CMS Tier-3

Before submitting jobs: order datasets
• Check which datasets are currently stored at the Tier-3 on the DBS Data Discovery page.
• If the datasets are not stored at the Tier-3, order them to T3_CH_PSI on the PhEDEx central web page.

Work Flow on Tier-3
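The check-then-order step amounts to two catalogue lookups: the bookkeeping system maps a dataset to its file blocks, and the location information maps each block to the sites holding a replica. A Python sketch with hypothetical in-memory dictionaries standing in for those services (no real DBS or PhEDEx interface is called); the blocks reported as missing at T3_CH_PSI are the ones that would have to be ordered through the PhEDEx web page.

```python
from typing import Dict, List, Set

# Hypothetical stand-ins: dataset -> blocks (bookkeeping) and block -> sites (location).
DBS: Dict[str, List[str]] = {
    "/Example/Dataset/RECO": ["/Example/Dataset/RECO#a", "/Example/Dataset/RECO#b"],
}
DLS: Dict[str, Set[str]] = {
    "/Example/Dataset/RECO#a": {"T2_CH_CSCS", "T3_CH_PSI"},
    "/Example/Dataset/RECO#b": {"T2_CH_CSCS"},
}


def missing_blocks(dataset: str, site: str) -> List[str]:
    """Blocks of `dataset` with no replica at `site` (candidates for a transfer request)."""
    return [b for b in DBS.get(dataset, []) if site not in DLS.get(b, set())]


todo = missing_blocks("/Example/Dataset/RECO", "T3_CH_PSI")
if todo:
    print(f"order via PhEDEx: {len(todo)} block(s) missing at T3_CH_PSI")
else:
    print("dataset fully available at T3_CH_PSI")
```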

Working on Swiss CMS Tier-3

Submit and manage batch jobs
• CRAB
  • CRAB module for SGE
  • Simplifies creation and submission of CMS analysis jobs
  • Consistent way to submit jobs to the Grid or to the Tier-3 local cluster
• Sun Grid Engine
  • More flexible
  • More powerful controls: priority, job dependency …
  • Command line and GUI
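The priority and job-dependency controls correspond to standard Sun Grid Engine `qsub` options: `-p` sets the job priority and `-hold_jid` holds a job until the named job has finished. A Python sketch that only builds the command lines (it does not submit anything); the script names and job names are hypothetical, and this shows plain SGE usage, not the Tier-3's CRAB SGE module.

```python
import shlex
from typing import List, Optional


def qsub_command(script: str, name: str, priority: int = 0,
                 hold_jid: Optional[str] = None, queue: Optional[str] = None) -> List[str]:
    """Build an SGE qsub command line without running it.

    Ordinary SGE users may only lower their job priority (values <= 0),
    so positive values are rejected here.
    """
    if priority > 0:
        raise ValueError("ordinary users can only lower the priority (<= 0)")
    cmd = ["qsub", "-N", name, "-cwd", "-p", str(priority)]
    if hold_jid:
        cmd += ["-hold_jid", hold_jid]   # wait for the named job to finish
    if queue:
        cmd += ["-q", queue]
    cmd.append(script)
    return cmd


# Hypothetical two-step workflow: a low-priority analysis job, then a merge
# job that starts only after the job named "ana" has completed.
ana = qsub_command("run_analysis.sh", "ana", priority=-10)
merge = qsub_command("merge_output.sh", "merge", hold_jid="ana")
print(shlex.join(ana))
print(shlex.join(merge))
```

Building the argument list separately from running it (for example with `subprocess.run`) keeps the submission logic easy to inspect and test.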


Operational Experience
• User acceptance of the T3 services seems to be quite good.
• Our CRAB SGE-scheduler module works well with the SGE batch system.
• SGE provides a flexible and versatile way to submit and manage jobs on the Tier-3 local cluster.
• Typical problems with "bad" jobs:
  • CMSSW jobs produce huge output files full of debug messages -> home directories fill up quickly and the cluster stalls. Remedy: set a quota for every user.
  • Jobs issue too many requests to the SE in parallel -> the SE is overloaded and jobs wait. Remedy: users should keep the number of parallel SE requests low.


Upgrade Plan

Phase          Year          CPU (kCINT2000)   Disk (TB)
A (planned)    2008          180               75
A (achieved)   2008          213.76            107.1
B (planned)    End of 2009   500               250


Hardware Upgrade

Software Upgrade:
• Regular upgrades: gLite, CMS software (CMSSW, CRAB) …
• Upgrade under discussion: using a parallel file system instead of NFS
  • better performance than NFS
  • well suited to operations on large ROOT files

Documents and User Support
• Request an account: send an email to [email protected]
• Users' mailing list: cms-tier3-[email protected]
• Swiss CMS Tier-3 wiki page: https://twiki.cscs.ch/twiki/bin/view/CmsTier3/WebHome


CMS Event Data Flow


Format   Event size [MB]   Content
RAW      1.5               Detector data after online formatting; result of HLT selections (~5 PB/year)
RECO     0.25              CMSSW data format containing the relevant output of reconstruction (tracks, vertices, jets, electrons, muons, hits/clusters)
AOD      0.05              Derived from the RECO information; a convenient, compact format with enough information about the event to support all the typical usage patterns of a physics analysis
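The per-event sizes in the table translate directly into storage estimates. A small Python sketch of that arithmetic using the table's values and decimal units (1 TB = 10^6 MB, 1 PB = 10^9 MB); the event counts are illustrative inputs only.

```python
# Per-event sizes from the table above, in MB.
EVENT_SIZE_MB = {"RAW": 1.5, "RECO": 0.25, "AOD": 0.05}


def storage_tb(n_events: float, fmt: str) -> float:
    """Disk needed for n_events in format fmt, in TB (1 TB = 10**6 MB)."""
    return n_events * EVENT_SIZE_MB[fmt] / 1e6


def events_for_volume(volume_pb: float, fmt: str) -> float:
    """Number of events that fit into volume_pb petabytes (1 PB = 10**9 MB)."""
    return volume_pb * 1e9 / EVENT_SIZE_MB[fmt]


# 10 million events in each format: RAW needs 30x the space of AOD.
for fmt in ("RAW", "RECO", "AOD"):
    print(fmt, storage_tb(1e7, fmt), "TB")

# ~5 PB/year of RAW corresponds to roughly 3.3e9 events per year.
print(f"{events_for_volume(5, 'RAW'):.2e}")
```

The factor of 30 between RAW and AOD is why Tier-2 and Tier-3 analysis is normally done on RECO or AOD rather than RAW.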

Event Data Flow
• Tier-0: online system -> first-pass reconstruction; RAW, RECO and AOD written to tape; O(10) streams (RAW), O(50) primary datasets
• Tier-1s: scheduled data processing (skimming and reprocessing); RAW, RECO and AOD on tape; RECO and AOD delivered to the Tier-2s
• Tier-2s: analysis, MC simulation
• Tier-3s: analysis, MC simulation

Based on the hierarchy of computing tiers from the LHC Computing Grid.