Scalable Database Logging for MulticoresScalable Database Logging for Multicores Hyungsoo Jung...

Transcript of Scalable Database Logging for MulticoresScalable Database Logging for Multicores Hyungsoo Jung...

Scalable Database Logging for Multicores

Hyungsoo Jung˚

Hanyang UniversitySeoul, South Korea

Hyuck HanDongduk Women’s University

Seoul, South [email protected]

Sooyong KangHanyang UniversitySeoul, South Korea

ABSTRACTModern databases, guaranteeing atomicity and durability, store trans-action logs in a volatile, central log buffer and then flush the logbuffer to non-volatile storage by the write-ahead logging principle.Buffering logs in central log store has recently faced a severe mul-ticore scalability problem, and log flushing has been challengedby synchronous I/O delay. We have designed and implemented afast and scalable logging method, ELEDA, that can migrate a surgeof transaction logs from volatile memory to stable storage withoutrisking durable transaction atomicity. Our efficient implementa-tion of ELEDA is enabled by a highly concurrent data structure,GRASSHOPPER, that eliminates a multicore scalability problem ofcentralized logging and enhances system utilization in the presenceof synchronous I/O delay. We implemented ELEDA and plugged itto WiredTiger and Shore-MT by replacing their log managers. Ourevaluation showed that ELEDA-based transaction systems improveperformance up to 71ˆ, thus showing the applicability of ELEDA.

PVLDB Reference Format:Hyungsoo Jung, Hyuck Han, and Sooyong Kang. Scalable Database Log-ging for Multicores. PVLDB, 11(2): 135 - 148, 2017.DOI: https://doi.org/10.14778/3149193.3149195

1. INTRODUCTIONA logging and recovery subsystem has been an indispensable

part of databases, since System R [4] was designed in the mid-1970s. To ensure atomicity and durability, the write-ahead log-ging (WAL) protocol [21, 22] was defined and later codified inARIES [39], a de facto transaction recovery method widely de-ployed in relational database systems. Databases relying on ARIESuse centralized logging that in general consists of two operations;(1) building an in-memory image of a log file by storing transactionlogs into a volatile, central log buffer and (2) appending the imageto the log file by flushing the log buffer to non-volatile storage (fol-lowing the WAL protocol).

The widespread, decades-long use of centralized logging posesa significant challenge in the landscape of multicore hardware. Thekey challenge is to find a fast, scalable logging method that can∗Contact author and principal investigatorPermission to make digital or hard copies of all or part of this work forpersonal or classroom use is granted without fee provided that copies arenot made or distributed for profit or commercial advantage and that copiesbear this notice and the full citation on the first page. To copy otherwise, torepublish, to post on servers or to redistribute to lists, requires prior specificpermission and/or a fee. Articles from this volume were invited to presenttheir results at The 44th International Conference on Very Large Data Bases,August 2018, Rio de Janeiro, Brazil.Proceedings of the VLDB Endowment, Vol. 11, No. 2Copyright 2017 VLDB Endowment 2150-8097/17/10... $ 10.00.DOI: https://doi.org/10.14778/3149193.3149195

solve the classic problem of multiple producers (i.e., transactions)and a single consumer (i.e., log flusher), with WAL being strictlyenforced. Two technical obstacles are known at present:Multicore scalability for multiple producers. As multicore plat-forms allow more transactions to run in parallel, storing concur-rently generated logs into a central log buffer faces the serializationbottleneck [8] that hinders the entire database from being scalable.Prior studies [31, 32] have addressed this problem with noticeableimprovements, but scalability bottlenecks still exist due to the non-scalable lock used in proposed solutions.Synchronous I/O delay by a single consumer. Flushing a logbuffer to stable storage by the WAL protocol has long been chal-lenged by synchronous I/O delay. In this regard, latency-hidingtechniques, originally proposed by DeWitt et al. [15] and Nightin-gale et al. [42], have successfully been used to increase system-wide throughput in the presence of synchronous I/O delay. Fur-thermore, emerging non-volatile memory (NVM) technologies areworth considering for low access latency. We believe that combin-ing these can be a promising solution.

Important to notice is the intrinsic difficulty of the challengethat whichever of two salient issues remains unaddressed will be aculprit responsible for performance bottlenecks. Although a largebody of work has focused on this challenge, what still remains un-resolved yet is the multicore scalability that may render severaldatabases vulnerable to performance problems on hardware withdozens of cores, which appears in the cloud [1]. This constitutesthe primary motivation for the present work.

As a general solution to the performance bottlenecks of cen-tralized logging, we present ELEDA (Express Logging EnsuringDurable Atomicity) that can smoothly shepherd a surge of trans-action logs from volatile memory to stable storage, without riskingatomicity and durability. The centerpiece of ELEDA is a highly con-current data structure, called GRASSHOPPER, which is designed toresolve the multicore scalability issues arising in centralized log-ging. To enhance system-wide utilization in the presence of syn-chronous I/O delay, we follow the claim of DeWitt et al. [15] andapply proven implementation techniques to ELEDA. We tightly in-tegrate all these into a three-stage logging pipeline of ELEDA thatcan be applicable to any transaction systems suffering performancebottlenecks of centralized logging.

We implemented a prototype of the ELEDA design and pluggedit to WiredTiger [41] and Shore-MT [17] that use consolidation ar-ray techniques [31,32] but still exhibit the performance bottlenecksunder high loads on multicores. We evaluate the performance ofELEDA on a high-end computing platform equipped with 36 physi-cal cores, 488 GiB memory and two enterprise NVMe SSDs. Withthe key-value workload, ELEDA-based systems improve transac-tion throughput by a wide margin, thus some achieving higher than

135

„ 3.9 million Txn/s with guaranteed atomicity and durability. Withthe online transaction processing (OLTP) workload, ELEDA-basedsystems also show significant improvements.

This paper makes the following contributions:– We confirm that the performance bottlenecks in centralized

logging still exist and reframe the key challenge to be thedesign of concurrent data structures (§2.2).

– We design ELEDA that is a fast, scalable logging method forhigh performance transaction systems with guaranteed atom-icity and durability (§3 and §4).

– We build ELEDA and plug it to WiredTiger and Shore-MT(§5). We then evaluate ELEDA-based systems, demonstrat-ing that our design avoids the performance bottleneck andimproves performance compared to the baseline systems.

2. BACKGROUND AND RELATED WORKIn this section, we point to a few essential concepts needed for

understanding our contribution.

2.1 Database Logging and RecoveryTransactional recovery is of importance to databases that are ex-

pected to guarantee ACID (Atomicity, Consistency, Isolation andDurability) properties. Authoritative coverage on the field of trans-action management and its subfield of transactional recovery canbe found in the textbooks by Bernstein et al. [6] and Weikum andVossen [53]. The internal design of database systems is describedby Hellerstein et al. [24].

Database logging in this context is used to capture every mod-ification made to data by a transaction. A log sequence number(LSN) is associated with each log to establish the happen-beforerelation [36] between logs pertaining to the same database page,or between logs spanning multiple data pages. LSN-stamped logsare stored in a volatile, central log buffer and later synchronouslywritten to non-volatile storage by the WAL protocol. In a nut-shell, given all log records that are totally-ordered, ARIES [39],upon failures, replays all redo logs in LSN order to reconstruct thestate of databases as of the crash, then it applies undo logs in re-verse LSN order to rollback the effects of uncommitted transac-tions. Hence a globally unique LSN in ARIES (or its variants) ispivotal to imposing proper ordering on transaction logs.

2.2 Multicore Scalability

2.2.1 Sequentiality of loggingA fundamental restriction imposed on database logs is the se-

quentiality of logging, strictly requiring that the sequence of LSNand the log flushing order be the same. In other words, the LSNorder should also match the log order in an in-memory image ofa log file. Any violation would definitely risk the correctness ofdatabase recovery. Note that the sequentiality condition held forlogs in a volatile buffer must be retained prior to the volatile logbuffer being sequentially flushed to non-volatile storage. To honorthe sequentiality of logging, the widespread use of a global lockbegan early days in many database engines, such that (1) a trans-action acquires a lock, (2) allocates an LSN for its log and embedsthe LSN in the log, (3) copies the log to the central log buffer, and(4) releases the lock. The use of such a global lock undoubtedlylimits the scalability of database logging on multicore systems.

2.2.2 Related workTo improve the scalability of centralized logging, Johnson et

al. [31, 32] proposed the consolidation array technique that is ro-bustly implemented in two publicly available database engines (i.e.,

Table 1: Control options for transaction durability.Databases or Durability/Slow Non-Durability/Fast

storage engines (no data loss) (data loss)Oracle [44] force wait no-wait

MySQL [43] flush log at commit delayed flushSQL Server [38] full durability delayed durabilityMongoDB [40] commit-level durability checkpoint durability

PostgreSQL [50] synchronous commit asynchronous commitShore-MT [17] flush log at commit lazy flush

Shore-MT [17] and WiredTiger [41]). But we observe that thereis still a chance to improve the multicore scalability of central-ized logging in these systems, as the author of prior work also re-cently confirmed the same issue and proposed distributed loggingon NVM instead in [52]. Distributed logging and recovery [49, 52,54], owing to performance benefits, have been studied as such, butdesign complexity added to recovery and log space partitioning,which may restrict transactions to access multiple database tablesbelonging to different log partitions, remains to be addressed fordatabase vendors to change the status quo. For instance, Silo [51]avoids centralized logging bottleneck by letting each worker threadcopy transaction-local redo logs to per-thread log buffer after val-idation (following optimistic concurrency control (OCC)). SinceSilo stores redo logs only for committed transactions, epoch-baseddecentralized logging ensures correctness and works well for OCC-based transaction engines. Despite this attractive feature, it is non-trivial to directly apply Silo’s design to ARIES (or its variants)-based databases adopting the steal and no-force policies that re-quire undo/redo logs.

2.2.3 ChallengeThe key challenge in eliminating the non-scalable global lock for

scalable logging on multicores is to allow transactions to concur-rently buffer their logs with the sequentiality being guaranteed. Asprior work attempted, concurrent log buffering itself is not tech-nically challenging. The essence of the challenge is to guaranteethe sequentiality for those concurrently buffered logs, without us-ing locks. Since the assigned LSN tells a log where to be copied inthe log buffer, the sequentiality for the log being buffered cannot beensured until its preceding logs are completely buffered. Here wedefine an LSN already assigned to a log not being buffered yet asan LSN hole, and also define the maximum LSN of which no LSNholes are behind as a sequentially buffered LSN (SBL). Since LSNholes undoubtedly stall the sequential log flushing, advancing SBLwith LSN holes being chased is critical to the entire logging sys-tem. Hence the core challenge we address is to design concurrentdata structures that perform required operations while transactionswrite logs to the central log buffer concurrently.

2.3 Synchronous I/O DelayThe strict enforcement of the WAL protocol incurs synchronous

I/O delay, which is detrimental to high performance reliable trans-action processing. The synchronous I/O delay therefore has placedsubstantial pressure on database designers for pursuing alternativeoptions that are intended to trade transaction durability for betterperformance. As shown in Table 1, well-known database enginesprovide such options that control balance between transaction dura-bility and performance.

2.3.1 NVM is close at handThe advent of fast, non-volatile memory technologies has changed

the age-old belief in our mind that non-volatile storage is slow. Thishas driven a substantial body of proposals that have attempted toreduce or to avoid the synchronous I/O delay in databases, either

136

by rearchitecting subcomponents of databases [2, 13, 52], or by thenovel use of NVM [9,18,20,23,34]. Closer to our work is the pro-posal by Fang et al. [18] that combines logging in NVM with holedetection being executed during database recovery. Although thehole detection uses linear scanning of log space in NVM, the pro-posal retains efficiency during normal operations mainly because(i) logs are quickly hardened to fast NVM with some LSN holesbeing left unresolved and (ii) the linear scanning is performed onlyduring the recovery for detecting any holes left due to the systemcrash. Write-behind logging (WBL) [3] is recent work designed forhybrid storage with DRAM and NVM. With WBL, database man-agement systems write updates to NVM-resident databases prior toa log being flushed. Updates are made visible after a log is hard-ened. WBL can ensure good performance only if databases resideon fast, byte-addressable NVM that is again an essential part of thescheme. All in all, despite performance gain proved by these tech-niques, non-scalable locks in software, if left unaddressed, wouldraise undesirable performance issues on high-end computing plat-forms with dozens of cores, like what prior works [7,10–12,16,29,30, 33, 37, 48] have addressed for improving multicore scalabilityof components of operating systems and databases.

2.3.2 Latency-hiding techniquesRadically different from NVM-based approaches are some pro-

posals that are intended to increase system-wide utilization visibleto users in the presence of synchronous I/O delay. The databaseand operating systems communities call these latency-hiding tech-niques, and they are widely adopted in both research and commer-cial fields. The key idea for improving system-wide utilization isswitching waiting threads/processes with runnable ones. The elab-oration required here is to control when to release the computationresults to external users.

Early lock release. Three decades ago, DeWitt et al. [15] pro-posed the early lock release (ELR) in the database community, stat-ing that database locks can be released before its commit recordis hardened to the disk, as long as the transaction does not returnresults to the client. This means, other transactions may see themodifications made by the pre-committed transaction, and thesetransactions are not allowed to return results to the clients. Whilematerializing ELR, Johnson et al. [31] proposed the flush pipelin-ing technique that allows the thread to detach the pre-committedtransaction and to attach another transactions to reduce schedul-ing bottleneck. Other examples of adopting ELR are Silo [51]and SiloR [54] that have proposed high-performance in-memorydatabase engines, based on optimistic concurrency control and dis-tributed logging.

External synchrony. In the systems community, Nightingale etal. [42] proposed the external synchrony that may share the samedesign goals of increasing system-wide utilization in the presenceof synchronous file I/O. The external synchrony is a model of localfile I/O to provide the reliability and simplicity of synchronous I/O,while providing the performance of asynchronous I/O. The essenceof the external synchrony is, a process/thread is not allowed to de-liver any output to an external user until the file system transac-tion on which the pending output depends commits, meaning thepending output is released only after the corresponding file systemtransaction writes all contents to the non-volatile storage.

3. OVERVIEWIn this section, we provide an overview of ELEDA. We first de-

scribe the overall architecture and then explain how we approachtechnical challenges for achieving the goals of making centralizedlogging fast and scalable, with guaranteed atomicity and durability.

Log Sequence Number (LSN)

Stable Storage

Central Log Buffer

Flushing logs

Storage Durable LSN (SDL)

: Storage durable log : Fully buffered log : Partially buffered log : Unused log buffer

Sequentially Buffered LSN (SBL)

Tx1 Tx2

122

NN

1

Transactions

DB threads

Logs

TxN

Copying

void eleda_invoke_callback (int thd_id);long eleda_reserve_lsn (size_t len);void eleda_write_log (int thd_id,

void* log_ptr, size_t len, long lsn,void (*callback) (void*), void* arg);

ELEDA APIs

Figure 1: ELEDA logging architecture.

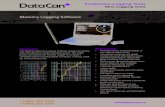

3.1 Overall ArchitectureThe logging architecture of ELEDA is based on a three-stage log-

ging pipeline, and it consists of three types of threads: databasethreads, an ELEDA worker thread and an ELEDA flusher thread.Figure 1 depicts the overall architecture. The central log buffer inELEDA is one contiguous memory logically divided into chunks byI/O unit size. Database threads do the memory copy to store theirlogs to the log buffer.

In the first stage of the logging pipeline, database threads, on be-half of its transaction, reserve log space (i.e., LSNs) and copy logsto the reserved buffer via ELEDA APIs, all done concurrently. LSNallocation is done by using the atomic fetch-add instruction1,and concurrent space allocation and copying inevitably creates so-called LSN holes (i.e., partially buffered logs in Figure 1). Thatbeing said, database threads can make rapid progress because theELEDA worker takes the responsibility for tracking such LSN holesand advancing SBL. Among transactions is a committing one thatmust provide a callback function to ELEDA and needs wait until itslog and all preceding logs become durable on stable storage.

In the second stage, the ELEDA worker tracks the LSN holesand advances SBL efficiently using carefully designed concurrentdata structures (i.e., GRASSHOPPER) (§4.2). In the third stage,the ELEDA flusher keeps flushing all sequentially buffered logs upto SBL to non-volatile storage. Here we define the most recentlyflushed LSN as a storage durable LSN (SDL), which is a durabilityindicator for database logs in stable storage (§4.3). And databasethreads wake up waiting transactions whose commit LSNs becamedurable (i.e., commit LSN ď SDL). This is done by invoking call-back functions associated with waiting transactions, and it makesuse of the latency-hiding technique (§3.3). As shown in Figure 1,ELEDA provides APIs for this purpose. Although ELEDA is obliv-ious to host engine’s logging and recovery policies, it permits thehost engine to pre-allocate in-memory log space at their disposal(e.g., reserving log buffer space for logs generated upon rollback).But such pre-allocated buffer space works as a large LSN hole thatmay hinder log flushing.

3.2 Scalable LoggingThe widespread use of a non-scalable global lock to protect log

buffering is now liable for the serialization bottleneck [8] on mul-ticores. The naive elimination of a global shared lock for scalablelogging however poses a challenge that requires sequentiality oflogging to be preserved. The essence in addressing this challengeis to design shared data structures allowing concurrent accesses,and to devise an algorithm for fast tracking of LSN holes.1This will surely hit a hardware-based synchronization bottleneckonce latch contention is eliminated, and research on new designsfor surpassing the limitation is ongoing in our community [47].

137

2H

2H

i x 2H

2H 2Hix2H (i+1)x2H (i+2)x2H

SBL

(i+1) x 2H

(i+2) x 2H

(i+3) x 2Hhopping

X

crawlingX (<2H )

Hopping Index

(i+3)x2H

Log Buffer

Copied bytesH = hopping exponent

Figure 2: Grasshopper algorithm for advancing SBL.

To address the challenge, we eliminate non-scalable locks bydesigning concurrent data structures intended to enable multipletransactions to store their logs to the log buffer concurrently whiletracking LSN holes fast and thus advancing SBL accordingly. Wecall this GRASSHOPPER. GRASSHOPPER advances SBL in twoways - hopping and crawling - depending on how LSN holes aretraced. To put it in a nutshell, a grasshopper on grass hops usingits hind legs when it feels threatened, and crawls otherwise. OurGRASSHOPPER works likewise, except that the threat we are con-cerned here is the gap between the current SBL and the most re-cently allocated LSN. The wider the gap is, the longer it takes totrace LSN holes and to flush sequentially buffered logs.

Figure 2 depicts how SBL is advanced in two different ways:hopping is used when the gap is too wide while crawling is acti-vated otherwise. The question is how to figure out when to com-mence or to stop hopping. We address this by maintaining a table,called a hopping index (or H-index in short), whose entry book-keeps the cumulative byte count of buffered logs for the LSN rangeof [i ¨ 2H , pi` 1q ¨ 2H ), where i and 2H are the index to the tableand a hopping distance, respectively. As shown in Figure 2, entriesin the hopping index are used to provide more accurate informa-tion to GRASSHOPPER. If an entry equals to 2H , we can assert thatall logs in the corresponding LSN range are buffered without LSNholes. Otherwise (ă 2H ), one or more holes exist in the range.

3.2.1 SBL-hoppingWhen the current SBL - a precise indicator of the tip of the se-

quentially buffered logs - is far behind the most recently allocatedLSN because transactions generate logs too fast, we advance SBLby hopping the hopping distance, provided that logs in a given LSNrange are all sequentially buffered. SBL-hopping continues untilGRASSHOPPER detects any LSN holes, by checking the byte countof the hopping index entry (i.e., byte count ă 2H ). Hence SBL-hopping can be viewed as fast-path SBL advancement which workswell when logs are surging fast. But the hopping is unable to pre-cisely spot LSN holes since the hopping index does never recordeach individual LSN value. We cope with this issue by chasingLSN holes accurately (i.e., SBL-crawling).

3.2.2 SBL-crawlingThe method of advancing SBL switches from SBL-hopping to

SBL-crawling when we detect the presence of LSN holes. Once weknow the presence of LSN holes, GRASSHOPPER advances SBL bychasing LSN holes thoroughly (i.e., SBL-crawling). SBL-crawling,despite of its relatively slow nature of advancing SBL, can handlea wide range of operating conditions efficiently.

3.3 Latency-hiding DesignTo address the synchronous I/O delay, we use latency-hiding

techniques in ELEDA for pursuing better system-wide utilization.To this end, we leverage the proven techniques used in the priorstudies [32, 42, 51, 54]. This is done by first letting synchronouswaiting be switched over other useful transaction processing work.Once I/O is completed, the computation results held by this I/Ocan be delivered to an external user asynchronously through the

BEGIN;UPDATE ..;...

COMMIT;

T1

SDL

BEGIN;UPDATE ..;...

COMMIT;

T1

waitingrunnable

BEGIN;DELETE ..;...COMMIT;

T2

switchT1 T1 T2

BEGIN;UPDATE ..;...

COMMIT;

T1

BEGIN;DELETE ..;...

COMMIT;

T2

T2

SDL

callback

< <T1’s LSN T1’s LSN

1 2 3

runnablewait

T1 is committing. DB Thread detaches T1 and attaches T2.

DB Thread runs T2 and T1 is woken up by its callback.

Transaction

Thread

Figure 3: Latency-hiding techniques in ELEDA.

callback. Since database engines we tested (or assumed) are allbased on multi-threaded architecture, we use a limited number ofdatabase threads (i.e., „ the number of available cores) as workerthreads, and each of them binds itself to an active transaction amongmultiple candidates. We therefore assume that there is one-to-one relationship between a transaction object and an external user,though a database connection object may have such relationshipwith an external user instead in other engines (e.g., MariaDB).

Figure 3 shows how we implement proven techniques in ELEDA.Suppose there is a committing transaction T1 who just writes acommit log to the log buffer, but the SBL is not advanced to itsLSN due to the LSN holes. Then, it is unsafe for T1 to return re-sults to the client because its commit log is not durable yet. At thispoint, the database thread detaches T1 (pre-committed transaction)and put it to the waiting state, and then the database thread attachesa runnable transaction T2, which has useful operations to execute.When all LSN holes preceding T1’s commit LSN are completelyfilled (i.e., SBL passes T1’s commit LSN), then the log flusher ad-vances SDL to SBL by hardening logs in between. This makes T1’scommit log durable (i.e., T1’s commit LSNď SDL), and this is themoment ELEDA can put T1 to a running state, thereby letting T1return its results to the external user. A callback registered for T1 isinvoked to deliver an asynchronous notification to an external user.

4. ELEDA DESIGNIn this section, we describe designs and the approach we take

to address the challenge in §3.2, especially a highly concurrentdata structure that resolves the serialization bottleneck caused bythe non-scalable global lock used in centralized logging of severaldatabase systems. In particular, we focus on explaining how we de-sign shared data structures and make concurrent operations actingon the data structures correct.

4.1 Data StructuresELEDA uses concurrent data structures (i.e., a hopping index ta-

ble and GRASSHOPPER) that are intended to chase LSN holes andto advance SBL, the tip of sequentially buffered logs, without usingnon-scalable locks. GRASSHOPPER is designed based on two basicdata structures: an augmented shared FIFO queue (i.e., a two-levellist) and a binary min-heap.

Grasshopper list. An augmented shared FIFO queue is called agrasshopper list that manages LSNs that have been buffered but notconfirmed to be durable yet. We call these LSNs pending LSNs. Agrasshopper list is created for each database thread, and it is sharedbetween the owner database thread and the ELEDA worker threadwith different access privileges. Since a grasshopper list is basi-cally a FIFO queue, enqueuing is solely done by the owner databasethread and dequeuing is performed by the ELEDA worker thread.

138

cbi×2H+1 cb cb

i × 2H (i+1) ×2H (i+2) ×2H

cb

Hoppinglist

Crawlinglist

tailhead

gc

tailhead

Per-threadgrasshopper list

callbackstart_lsn

callback

hoppingcrawling

cb cb cb

: pending LSN node

Figure 4: Per-thread grasshopper list.

A per-thread grasshopper list internally consists of two lists; acrawling list (or c-list) and a hopping list (or h-list). Figure 4 showsa per-thread grasshopper list with some pointer fields being sharedby a database thread and the ELEDA worker thread. The figuredepicts how we organize the crawling list with the augmented hop-ping list. Note that none of shared variables are protected by a lock.

The crawling list is a list of all pending LSN nodes, and a pend-ing LSN node is a triple of ăstart lsn, end lsn, callbacką, wherethe callback is valid when the LSN is for a commit log that re-quires a committing transaction to wait until it becomes durable.The crawling list has three pointer fields: head, tail and gc. Since agrasshopper list is created for each database thread, pending LSNsin each grasshopper list are totally ordered in ascending order.

The hopping list is a list of pointer pairs, one pointing to the firstpending LSN node in a given LSN range of [i ¨ 2H , pi ` 1q ¨ 2H ),and the other one pointing to the next pair. The hopping list hastwo pointer fields: head and tail. The hopping list augments thecrawling list in that the head pointer is updated after hopping 2H

distance when GRASSHOPPER finds itself lagging too behind thecurrent log buffer offset, and the head pointer is used to place thehead pointer of the crawling list to the LSN node where the crawl-ing should commence afterward. Any other succinct data structuremeeting the requirement can be used.

LSN-heap. A binary min-heap is called an LSN-heap that isexclusively owned by a dedicated ELEDA worker thread, and anLSN-heap is constructed from a collection of first (and smallest)pending LSNs of all grasshopper lists. For SBL-crawling, we canremove the top element (i.e., the smallest LSN) from the currentLSN-heap if and only if the top LSN, which is the smallest pendingLSN (ăSBL) in all grasshopper lists, is contiguous to the currentSBL. Then we update the old SBL (i.e., advancing SBL).

4.1.1 Access rules on shared data structuresWe have presented main shared data structures and variables so

far. These shared data structures in ELEDA are accessed by threetypes of threads: database threads, ELEDA worker and ELEDAflusher. Since shared accesses allow several concurrent executionsto be exposed to shared data structures, access rules must be clearlyset to avoid undefined behaviors due to malicious data races.

Design principles. In this regard, we use two design princi-ples in making access rules. The first design principle is, ELEDA’slogging pipeline architecture solely consists of producer/consumerstructures, which means that every pair of a reader and a writeraccessing the same shared data structure is mapped to a pair ofa producer and a consumer. This makes designing concurrent al-gorithms straightforward since concurrent algorithms for the pro-ducer/consumer problem have been discussed thoroughly in manytextbooks (e.g., Herlihy and Shavit [25]).

As shown in Figure 5, there are three such relationships. Thefirst one is, a database thread and an ELEDA worker thread access-ing a grasshopper list are mapped to a producer enqueuing pending

DBThread

ELEDAWorker

ELEDAFlusher

DBThread

ELEDAWorker

Producer Consumer

LSN2 LSN1

ELEDAWorker

ELEDAFlusherSBL2 SBL1

ELEDAFlusher

DBThread

SDL2 SDL1

P

C

Track LSN holes&

Advance SBL

Flush logs < SBL&

Advance SDL

Invokecallbacks < SDL

Work

P

P

C

C

Figure 5: The producer/consumer structures in ELEDA.

Table 2: Allowed operations (W: Write, R: Read and Ø: none)for different threads on variables in shared data structures.

Thread Type Shared Variableshead tail gc SBL SDL h-index

Database thread Ø W W Ø R WELEDA worker thread W R Ø W Ø RELEDA flusher thread Ø Ø Ø R W Ø

LSN nodes to the list, and a consumer dequeuing nodes from thelist, respectively. The second one is, the ELEDA worker and theELEDA flusher are also mapped to a producer advancing SBL, anda consumer advancing SDL by flushing logs up to SBL. The thirdone is, the ELEDA flusher and a database thread are mapped to aproducer advancing SDL and a consumer invoking callbacks up toSDL and advancing the garbage-collection point.

The second principle is in accordance with the first one: wenever allow concurrent write privileges since arbitrating concurrentwrites on shared variables complicates synchronization methods,and the performance cannot usually be guaranteed. Table 2 showsaccess privileges that clearly represent who can access which vari-ables with what access privileges. By the access privileges, there isonly one writer who can modify any variables in shared data struc-tures. In ELEDA, there is only one producer thread who can modifythe shared variables, which can be read by the consumer thread todetect overrunning. We resort to Table 2 in explaining concurrentalgorithms for tracking LSN holes in §4.2.General invariant. A general invariant enforced on a shared, con-ceptual FIFO structure supporting a single producer and a singleconsumer is, the consumer can never overrun the producer. Thisinvariant however does never mandate that the consumer shoulddo spin-waiting until the waiting condition is cleared. Instead, inELEDA the consumer-side thread skips consuming work and pro-cesses other useful work to retain high utilization. The consumerlater revisits and processes the work whenever it is safe. The formaldescriptions of algorithm-specific invariants will be fully discussedin §4.5 after we explain complete algorithms.

4.1.2 Starting point: enqueuing an LSN nodeThe starting point of our logging pipeline is when a transaction

needs to store its logs to the central log buffer. A database threadfirst gets an LSN for its logs by atomically incrementing the LSNsequencer. The allocated LSN is used as an offset inside the centrallog buffer and tells where to copy given logs. After embedding theassigned LSN in the logs, the database thread copies the logs to theoffset in the log buffer. Once the copy is done, the database threadcreates a new pending LSN node for the logs and enqueues the nodeto its grasshopper list. At that time, if the pending LSN node is fora commit log, then we follow the protocol to mask synchronousI/O delay (see Figure 3 in §3.3) such that the database thread mustdetach the current transaction by changing the state to waiting, andthen the pending LSN node is enqueued to the grasshopper list. Thecallback in the pending LSN node will be used to put the waiting

139

tailheadgc

new_node1

2 cb(i+1)×2H+1

3

(i+1) ×2H

c-list

tailheadh-list

4

Figure 6: Enqueuing a new pending LSN node.

transaction to the runnable state once SDL passes the registeredstart lsn, meaning all preceding LSNs are made durable. In linewith this protocol, a database thread first inserts a pending LSNnode into the crawling list and then adds a node to the hopping listif and only if the start lsn of the node is the first (i.e., smallest) onein the list for a given LSN range.

Enqueuing a new pending LSN to the grasshopper list can beseparated into three constituent steps in detail:

1. A database thread appends a new pending LSN node into thec-list by resetting the c-list.tail to the new node.

2. IF the start lsn of the new pending LSN node is the first onein the range of [pi` 1q ¨ 2H , pi` 2q ¨ 2H ), a database threadinserts a hopping node to the h-list by resetting the h-list.tailto the new hopping node. ELSE this step can be skipped.

3. Increment the cumulative byte count of the correspondingentry of the hopping index by the log size.

Figure 6 describes the steps needed to complete enqueuing a newpending LSN node to a grasshopper list, assuming that a databasethread obtained the LSN of pi` 1q ¨ 2H ` 1 for its logs.

Note that a database thread, while enqueuing a new pendingLSN node, changes the tail fields of two lists, not changing othervariables (following access rules in Table 2). We enforce that thepointer manipulation order should strictly abide by the order shownabove, not to have malicious data race with the ELEDA workerthread who is advancing SBL by changing head fields of the grasshop-per list concurrently.

4.2 Tracking LSN HolesWith GRASSHOPPER data structures, ELEDA performs the fast

advancement of SBL that inevitably relies on how fast it can trackLSN holes. In this section, we explain how we advance SBL ef-ficiently by the following key procedures: SBL-hopping (§4.2.1)and SBL-crawling (§4.2.2). Note that none of procedures use anytype of locks in performing operations. When advancing SBL, adedicated ELEDA worker thread2 is used to chase LSN holes, andtracking the holes involves moving head pointer fields of grasshop-per lists. Moving head pointers has two implications in that movingc-list.head means that we advance SBL by SBL-crawling, whilemoving the h-list.head implies that SBL-hopping advances SBL.SBL-crawling is by far the most important workhorse under a va-riety of workloads. The main switch to control the mode is deter-mined by whether or not the current SBL falls into the same entryof the hopping index with the most recently allocated LSN. We firstexplain how we do SBL-hopping.

4.2.1 SBL-hopping algorithmIf ELEDA finds itself lagging too behind the most recently al-

located LSN, meaning that the ELEDA cannot advance SBL fastenough to process all pending LSNs being produced by databasetransactions, then SBL-hopping is used. To decide whether or not2The performance of a single worker thread can be limited by inter-processor communication overhead in NUMA machines. Over-coming such a limitation will be left as future work.

Algorithm 1: SBL-Hopping() and SBL-Crawling()Data: DBT // a group of database threadsData: H-Index[] // hopping indexData: H // hopping exponent

1 Procedure SBL-Hopping()2 h-size Ð H-Index.table size3 high Ð (SBL ąą H) // high-order (64-H) bits4 index Ð high modulo h-size5 while H-Index[index].bytes == 2H do6 H-Index[index].bytes Ð 07 high Ð high + 18 index Ð (index + 1) modulo h-size9 end

10 HB Ð (high ăă H) // hopping boundary11 adjust heads12 SBL Ð min

@kPDBT{e lsn | h-listk .head.e lsn ě HB}

13 rebuild the LSN-heap14 Procedure SBL-Crawling()15 top Ð LSN-heap.peek()16 while top.start lsn == SBL and H-Index[index].bytes != 2H do17 SBL Ð top.e lsn18 LSN-heap.pop()19 adjust head of top.c-list20 LSN-heap.insert(head of top.c-list)21 top Ð LSN-heap.peek()22 end23 LSN-heap.insert(heads of c-lists)

Per-thread crawling lists

ELEDA

Threadhead

LSN-heap

tail

New SBL

DB Threads

3

1 13

22

Figure 7: The overall flow of SBL-crawling.

to perform SBL-hopping, the ELEDA worker thread first checks theentry of the hopping index that current SBL belongs to. If the en-try has a value of 2H , meaning the corresponding LSN range ofthe log buffer is already filled up, then we guarantee that there isno LSN hole in that range. Then the thread checks the next entryof the hopping index. Checking entries of the hopping index con-tinues until the ELEDA worker confronts with an entry whose bytecount is smaller than 2H . Then it advances the head pointers ofboth the h-list and c-list of all per-thread grasshopper lists, to ad-vance SBL. In principle, we are able to advance SBL to the LSNrange boundary (i.e., i ˆ 2H ) at which hopping stops. However,we have to be careful here since there can be a log placed acrossthe LSN range boundary, which must not be partially flushed forcorrectness. In that case, SBL is set to the end lsn of the log (seeline 21 of algorithm 1).

After the ELEDA worker thread advances the head of the h-list,it deletes all pointer pairs preceding the new head in the list. Itis worth noting that the SBL-Hopping algorithm modifies only thehead fields of the grasshopper lists, by the access rules in Table 2.SBL-Hopping() of algorithm 1 describes the general algorith-mic sequences of SBL-hopping.

4.2.2 SBL-crawling algorithmFor SBL-crawling, we use the LSN-heap built from the head

nodes of per-thread crawling lists. Hence, the maximum number ofnodes in the LSN heap is equal to the number of database threads.

As shown in Figure 7, the LSN-heap is exclusively managed bythe ELEDA worker thread, and no other threads can access it. Assummarized in Table 2, the head LSN nodes in crawling lists are

140

also modified by the ELEDA worker. These access restrictions onconcerned shared data structures enable the ELEDA worker threadto manipulate the LSN-heap in a thread-safe way, especially whenadvancing SBL while other database threads run. Figure 7 depictsthe concurrent operations; while database threads insert newly cre-ated pending LSN nodes to their own crawling list by modifyingthe tail pointer, the ELEDA worker thread can insert new head LSNnodes to the LSN-heap and adjust the head pointers of crawlinglists concurrently, as long as heads do never overrun tails.

The top of the LSN-heap is guaranteed to be the smallest pendingLSN among all pending ones, owing to both the total order propertyof each crawling list and the very nature of min-heap. The top ele-ment of the heap can be removed when its start lsn is contiguous tothe current SBL. As the ELEDA worker thread pops the top pendingLSN from the LSN-heap, the end lsn of the top element will be thenew SBL. Once the top element is removed, the ELEDA worker ad-justs the head of the c-list from which the top pending LSN came,to the next pending LSN node. Once the head of the c-list is ad-justed, we insert the new head node of the c-list to the LSN-heap.When the start lsn of the head of the c-list crosses the LSN rangeboundary (i.e., i ¨ 2H ), then the head of the h-list should also be ad-justed to the next node, which is the first/smallest pending LSN inthe next LSN range. The ELEDA worker performs SBL-crawlingfor advancing SBL as long as SBL falls in the same entry of thehopping index with the most recently allocated LSN. Otherwise ifthe hopping index entry equals 2H , we switch to SBL-hopping.

4.3 Flushing LogsRecall that the last stage of ELEDA logging pipeline is to flush

all sequentially buffered logs to stable storage. For this purpose,ELEDA bookkeeps the most recently flushed SBL, defined as stor-age durable LSN (SDL). The SDL is stored in stable storage. Theflushing operation is straightforward in that an ELEDA flusher threadkeeps flushing the logs, starting from the current SDL to SBL.Since SBL is always reset to the end of a log, no logs can be par-tially written, leaving the remaining part in the volatile log buffer.Once the thread receives a completion notification, then it advancesSDL to SBL. Once SDL is advanced, the flusher thread stores SDLto stable storage and frees the log buffer area in between SDL andSBL to be used for incoming logs. Since we use NVMe SSD de-vices for stable storage, the I/O unit is tailored to maximize the I/Othroughput of sequential writes for given devices. This inevitablybrings us a tradeoff between high bandwidth utilization and lowlatency. Choosing a right point on this tradeoff depends on theworkload characteristics, such as an average size of a log and themaximum degree of concurrency.

4.4 Garbage-collection & CallbacksWhile the ELEDA worker thread moves the heads of h- and c-

lists, a database thread should move the gc pointer (i.e., garbagecollection point) to collect garbage LSN nodes in the c-list andto invoke callback functions to inform waiting transactions of thecompletion. The physical removal of pending LSN nodes is doneafter the callback is invoked. Moving the gc pointer continues untilthe gc and SDL logically point to the same pending LSN. Note thatgc pointer is exclusively accessed by the owner database thread.

4.5 Invariants for CorrectnessIn ELEDA, there are three types of the producer/consumer struc-

tures, all of them allow concurrent executions on concerned shareddata structures. Notoriously hard to reason are these concurrentexecutions on shared data structures that may yield undefined be-haviors. We therefore specify three important invariants that must

be preserved throughout the concurrent executions. Three invari-ants are algorithm-specific refinements of the general invariant dis-cussed in §4.1.1, and operations on shared variables strictly followthe access rules in Table 2. We express the specifications of invari-ants using Hoare logic [27, 28]: {P} C {Q} where P and Q arepre- and post-states satisfying relevant conditions, and C is a se-quence of commands. We abbreviate SBL advancement, garbage-collection and log flushing as SA, GC and LF, respectively. Threeinvariants are specified as follows:Spec1. {head ÞÑ v

Ź

tail ÞÑ v} SA {head ÞÑ v}: If both headand tail point to the same pending LSN node (v), the ELEDA workerthread (consumer) is not permitted to move the head while a databasethread (producer) is allowed to update the tail.Spec2. {gc ÞÑ v

Ź

SDL ÞÑ v} GC {gc ÞÑ v}: If both gc and SDLlogically point to the same pending LSN (v), the database thread(consumer) is not permitted to move the gc while the ELEDA flusherthread (producer) is allowed to update the SDL.Spec3. {SDL ÞÑ v

Ź

SBL ÞÑ v} LF {SDL ÞÑ v}: If both SDLand SBL have the same value (v), the ELEDA flusher thread (con-sumer) is not permitted to flush anymore while the ELEDA workerthread (producer) is allowed to update SBL.

Three invariants forbid one of concurrent threads from mutatingconcerned data, and these specifications indeed work as lineariza-tion points [26] that are used as a correctness condition for ourGRASSHOPPER data structures. We strictly enforce these invariantsin concerned producer/consumer structures to ensure correctness ofconcurrent executions on shared data structures in ELEDA.Memory model. Modern microprocessors allow out-of-order exe-cution to enhance performance of programs, and compilers do sim-ilar work (i.e., instruction reordering) when generating machinecode. This inevitably brings memory ordering issues to multi-threaded programs. To enforce memory ordering constraints oncode that reads or updates shared variables in ELEDA, we explicitlyuse memory barriers (i.e., mfence) to guarantee the read-after-write (RAW) order for correct synchronization.

5. IMPLEMENTATIONSWe have implemented a prototype of ELEDA as a shared library

and then plugged it to two storage engines, WiredTiger storage en-gine and the official EPFL branch of Shore-MT, with proper mod-ifications being made. WiredTiger and Shore-MT use the consoli-dation array technique [31, 32] in their log manager.

WiredTiger storage engine. WiredTiger has been adopted re-cently as a default storage engine for MongoDB and offers multi-version concurrency control via a concurrent skip-list for maxi-mizing update concurrency. Unlike conventional database engines,WiredTiger’s transaction creates a single log for all updates it madewhen it commits. This limits the highest isolation level to SNAP-SHOT ISOLATION [5]. Owing to the absence of database lockmanagement, WiredTiger storage engine achieves higher perfor-mance than Shore-MT engine. ELEDA replaced WiredTiger’s logmanager implementing the consolidation array technique. Whilewe implement latency-hiding techniques, we add thread-local park-ing slots to park pre-committing transactions. Parked transactionswill later be woken up and be allowed to deliver results to the client.

Shore-MT storage engine. The EPFL branch of Shore-MTfully implemented several techniques [30,32,45–47] offered to im-prove the multicore scalability of database locking, latching, andlogging. In the context of this paper, we have only enabled thelogging optimizations from this codebase. We have implementedELEDA to Shore-MT with Aether [31]. We replaced its consolida-tion array-based logging subsystem and modified its flush pipelin-ing implementation for transaction switching.

141

Table 3: Supermicro Server 6028R-TR specifications.Component SpecificationProcessor 18-Core Intel Xeon E5-2699 v3Processor Sockets 2 SocketsHardware Threads 36 (HyperThreading Disabled)Clock Speed 2.3 GHzL3 Cache 45 MiB (per socket)Memory 488 GiB DDR4 2400 MHzStorage Samsung SM1725 NVMe SSD (3.5 TB)

6. EVALUATIONFor evaluation, we use three workloads; the key-value work-

load (YCSB) [14], the online transaction processing workload (sys-bench) [35] and a logging microbenchmark.

6.1 System SetupAll storage engines are running on a 36-core server specified in

Table 3. To reduce irrelevant lock contention, we run all exper-iments with Hyperthreading being disabled. Two NVMe SSDs,each of which has the peak I/O speed of 3.5 GiB/s with 50 µs de-lay for the sequential writes of 16 KiB block, are used. ELEDAstripes log data across them with 512 KiB of stripe unit. The I/Ounit for flushing logs is set to 64 KiB throughout all experiments,unless stated otherwise. For all systems, we use three variants; 1) avanilla system on NVMe SSDs (NVMe), 2) a vanilla system on thetmpfs in-memory file system (tmpfs) and 3) an ELEDA-based sys-tem on NVMe SSDs (ELEDA). We used tmpfs, although durabilityis broken, to focus on the multicore scalability of log buffering invanilla systems. Hopping distance is set to 4 MiB.

We run both vanilla and ELEDA-based Shore-MT storage sys-tems with the default configuration, except that we provide 200GiB memory for a buffer pool and a 10 GiB log file. The logbuffer size is set to 32 MiB. For log flushing policy, we use the de-fault setting which forces the log buffer to the log file by the WALprinciple. This means that it ensures full ACID compliance. Alltransactions in Shore-MT experiments are committed after flush-ing logs. WiredTiger is configured with 60 GiB of cache size. Atransaction in WiredTiger flushes its log to the stable storage whenit commits (i.e., commit-level durability). To expose performancebottlenecks in database logging and preclude irrelevant overheadarising from other components, we use the isolation level READUNCOMMITTED in all storage engines. By doing this, we focus ourattention to the impact of non-scalable locks and the performanceupper bound of ELEDA-based systems, not on discovering latentbottlenecks in other places. Note that workloads are configured toaccess disjoint records or key-values to make the resulting transac-tion history SERIALIZABLE.

6.2 Key-value WorkloadThe YCSB benchmark consists of representative transactions han-

dled by web-based companies, as can be seen in many studies [3,37, 51, 54]. To demonstrate that ELEDA-based systems can per-form fast, scalable logging under high update workloads, we runELEDA-based WiredTiger and Shore-MT on the YCSB benchmarkwith workload A(50% Reads and 50% Writes) and 1 KiB payload.

6.2.1 ThroughputFigure 8 shows the throughput results. In all systems, ELEDA-

based systems improved performance with a wide margin. Notice-able is the wide performance gap („10ˆ) between ELEDA-basedand vanilla systems with 1 database thread that is primarily ow-ing to the latency-hiding technique allowing a database thread toexecute transactions not conflicting with parked ones. The benefitof the latency-hiding technique under low load can be consistentlyobserved throughout all experiments. In Figure 8(a), WiredTiger

0

1000

2000

3000

4000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

WiredTiger (NVMe)WiredTiger (tmpfs)

WiredTiger (ELEDA)

34 35 30 36 43 55

27

(a) WiredTiger

0

200

400

600

800

1000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

Shore-MT (NVMe)Shore-MT (tmpfs)

Shore-MT (ELEDA)

(b) Shore-MT

Figure 8: Throughput on the key-value workload.

(ELEDA) peaks at 3.9 million Txn/s with 32 database threads. Mean-while, the throughput of WiredTiger (NVMe) is not commensuratewith the number of database threads: the throughput almost flattensout from the beginning. Interestingly, WiredTiger (tmpfs) severelysuffers the scalability problem after it peaked at 740 kTxn/s (with 4database threads). The throughput improvement that ELEDA con-tributes with 32 threads is 71ˆ. The I/O bandwidth consumed byWiredTiger (ELEDA) peaks at 2.2 GiB/s with 32 database threads,while WiredTiger (NVMe) peaks at 30 MiB/s with 32 databasethreads. Noticeable is the performance drop in WiredTiger (tmpfs),due to the non-scalable lock in the consolidation array technique.

Figure 8(b) shows that Shore-MT (ELEDA) improves the trans-action throughput compared to the other two systems. We notethat Shore-MT (ELEDA) exhibits slight performance drop-off afterit peaks at 898 kTxn/s with 16 threads. In-depth looking throughprofiling reveals that it is due to the non-scalable algorithm for in-serting a new transaction into the transaction list to get a transactionID, which was also spotted as the main culprit for scalability prob-lems in [33]. Although Shore-MT (ELEDA) showed saturated per-formance due to other cause that is beyond the scope of the presentwork, the performance behaviour observed up to 16 threads indi-cates that ELEDA can scale as more threads join if the non-scalablealgorithm is resolved. Shore-MT (NVMe) and Shore-MT (tmpfs)peak at 143 kTxn/s (32 threads) and 492 kTxn/s (16 threads). TheI/O bandwidth consumed by Shore-MT (ELEDA) peaks at „190MiB/s with 16 threads, while Shore-MT (NVMe) also peaks at„35MiB/s with 32 threads. The throughput improvement ELEDA con-tributed to the Shore-MT engine with 32 threads is „6.3ˆ.

6.2.2 Commit latencyFigure 9 shows the CDF of the commit latencies for all stor-

age engines with different logging methods. WiredTiger (ELEDA)

0

20

40

60

80

100

0 2 4 6 8 10

CD

F (

%)

Latency (ms)

ELEDANVMetmpfs

(a) WiredTiger

0

20

40

60

80

100

0 2 4 6 8 10

CD

F (

%)

Latency (ms)

ELEDANVMetmpfs

(b) Shore-MT

Figure 9: CDFs for the commit latencies on the key-value work-load: WiredTiger and Shore-MT with 32 threads.

142

0

200

400

600

800

1000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

WiredTiger (NVMe)WiredTiger (tmpfs)

WiredTiger (ELEDA)

19 26 14 27 27 3165 13

(a) WiredTiger

0

200

400

600

800

1000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

Shore-MT (NVMe)Shore-MT (tmpfs)

Shore-MT (ELEDA)

(b) Shore-MT

Figure 10: Throughput on the OLTP workload.

has the average commit latency of 1.3 ms, whereas WiredTiger(NVMe) has 576 µs. Shore-MT (ELEDA) shows worse behaviour(the average commit latency of 6.4 ms) than Shore-MT (NVMe)(171 µs for the commit latency). In Shore-MT, a transaction gen-erates 3 logs, and 2 out of 3 logs have a 40 bytes payload. This in-curs nontrivial processing overhead in maintaining a crawling list.In terms of the average commit latency, Shore-MT (tmpfs) is betterthan ELEDA-based and vanilla systems. However, the average com-mit latency of WiredTiger (tmpfs) is 1.1 ms, which is worse thanWiredTiger (NVMe) or comparable to WiredTiger (ELEDA) with32 database threads because of heavy lock contention in logging.

6.3 Online Transaction Processing WorkloadFor experiments with the OLTP workload, we first use a variant

of sysbench OLTP workloads and measure throughput and commitlatency of ELEDA-based systems under high loads. For evaluation,we wrote sysbench-like programs using transaction APIs exportedfrom Shore-MT and WiredTiger storage engines.

6.3.1 ThroughputFigure 10 shows the transaction throughput results. WiredTiger

(ELEDA) showed similar behavior as was shown in Figure 8 in thatit scales well as we increase the number of database threads. In Fig-ure 10(a), WiredTiger (ELEDA) peaks at 1 million Txn/s with 32threads, while WiredTiger (NVMe) and WiredTiger (tmpfs) peakat 30 kTxn/s (32 threads) and 421 kTxn/s (4 threads), respectively.Note that WiredTiger (tmpfs) again shows performance collapsesas load increases due to the non-scalable lock. The I/O band-width consumed by WiredTiger (ELEDA) peaks at 606 MiB/s whileWiredTiger (NVMe) peaks at 84 MiB/s, both with 32 threads. Thethroughput improvement in WiredTiger with 32 threads is „32ˆ.

Shore-MT also shows similar performance behavior likewise.The throughput of Shore-MT (ELEDA) peaks at 810 kTxn/s with32 threads, while Shore-MT (NVMe) and Shore-MT (tmpfs) peakat 67 kTxn/s (16 threads) and 248 kTxn/s (16 threads), respectively.The I/O bandwidth consumed by Shore-MT (ELEDA) peaks at 315MiB/s with 32 database threads while Shore-MT (NVMe) peaks at269 MiB/s with 16 database threads. The throughput improvementin Shore-MT with 32 database threads is „12ˆ.

6.3.2 Commit latencyFigure 11 shows the CDF of the commit latencies with the OLTP

workload. WiredTiger (ELEDA) has an average commit latency of

0

20

40

60

80

100

0 1 2 3 4 5 6

CD

F (

%)

Latency (ms)

ELEDANVMetmpfs

(a) WiredTiger

0

20

40

60

80

100

0 2 4 6 8 10 12 14

CD

F (

%)

Latency (ms)

ELEDANVMetmpfs

(b) Shore-MT

Figure 11: CDFs for the commit latencies on the OLTP work-load: WiredTiger and Shore-MT with 32 threads.

3.7ms, whereas WiredTiger (NVMe) and WiredTiger (tmpfs) have1 ms and 2.5 ms. Shore-MT (ELEDA) shows worse behaviour(the average commit latency of 10.9 ms) than Shore-MT (NVMe)and Shore-MT (tmpfs) (439 µs and 222 µs for the average commitlatencies). Although the average commit latency of WiredTiger(ELEDA) is longer than the other two systems, it scales the perfor-mance as the count of threads increases by eliminating lock con-tention observed in vanilla systems.

6.3.3 Throughput with TPC-C workloads

0

1000

2000

3000

4000

1 2 4 8 16 32

tpm

C (

x1

,00

0)

No. of Database Threads

WiredTiger (NVMe)WiredTiger (tmpfs)

WiredTiger (ELEDA)

Figure 12: TPC-C benchmark result of WiredTiger.

Next, we use TPC-C workloads for measuring transaction through-put and also discuss effects of long transactions using NEW ORDERand PAYMENT transactions. For this experiment, we implementedthe WiredTiger-based TPC-C application running under SNAPSHOTISOLATION (SI)3. The number of warehouses is 32 with eachdatabase thread executing a transaction against the dedicated ware-house, avoiding unwanted contention. Figure 12 shows tpmC aswe increase database threads. It shows that WiredTiger (tmpfs)scales tpmC throughput up to 16 threads, and then tpmC collapsesafterward due to the contention in the log manager. WiredTiger(ELEDA) shows no performance collapse and handles long transac-tions without extra processing overhead.

6.4 Experiments with Different Settings

6.4.1 Evaluation under snapshot isolationTo see the performance behavior of ELEDA-based systems under

higher isolation level, we conduct experiments measuring the per-formance of WiredTiger under SNAPSHOT ISOLATION (SI) thatis the highest isolation level supported by WiredTiger. Figure 13shows results with key-value and OLTP workloads. As shown inthe figure, WiredTiger (NVMe) is mainly affected by synchronousI/O delay, and WiredTiger (tmpfs) suffers the serialization bottle-neck by lock contention. WiredTiger (ELEDA) shows better per-formance, but the performance is suddenly limited as the count ofdatabase threads exceeds 16. In-depth profiling spotted a culpritfor this issue. Code flows intended to obtain a snapshot that de-termines the visibility of key-value records, spend „30% of timewith 32 database threads. This is engine-specific overhead, and it3Fekete at al. [19] proved that TPC-C benchmarks, running underSI, ensures serializable execution.

143

0

500

1000

1500

2000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

WiredTiger (NVMe)WiredTiger (tmpfs)

WiredTiger (ELEDA)

48 51 51 62 62 9653

(a) Key-value workload

0

200

400

600

800

1000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

WiredTiger (NVMe)WiredTiger (tmpfs)

WiredTiger (ELEDA)

29 36 36 45 61 5715

(b) OLTP workload

Figure 13: Throughput of WiredTiger under SI.

is irrelevant to ELEDA. If it were removed, WiredTiger (ELEDA)would show the same peak throughput as shown in Figure 8(a).With OLTP workloads (Figure 13(b)), WiredTiger (ELEDA) showsa similar behavior as was shown in Figure 10(a). Though further in-vestigation is needed, we believe that the overhead of WiredTiger’ssnapshot routines only matters under very high loads (ą 2M Txn/s).

6.4.2 Key-value workload with varying sizeNext, we explore the performance of WiredTiger with payload

size being varied. For the evaluation, we use key-value work-loads by varying the payload size from 512 bytes to 5 KiB. Sincethe workload is configured to use larger payload, the I/O unit isalso reset to 512 KiB for ELEDA to utilize maximum device band-width. Figure 14 shows the results of WiredTiger (ELEDA) withthe number of database threads being fixed to 32. As payload sizeincreases, the performance of WiredTiger (ELEDA) gradually de-grades mainly because the amount of log data grows. Through-put of other two systems remains the same, since they suffer eithersynchronous I/O delay or scalability bottleneck with 32 databasethreads. As shown in Figure 14, the I/O bandwidth consumed byWiredTiger (ELEDA) peaks at 6.2 GiB/s when the payload size is 5KiB, while the write bandwidth consumed by WiredTiger (NVMe)is unchanged regardless of payload size.

6.4.3 Comparison with NVM-based loggingAs discussed in §2.3.1, NVM-based logging has clear advan-

tages over other proposals owing to the attractive characteristic ofNVM itself. The proposal of Fang et al. [18] is a good example.In this section, we conduct performance comparison between theapproach of Fang et al. on the emulated NVM with ELEDA on

10

100

1000

10000

0.5 1 2 3 4 5 0

1

2

3

4

5

6

7

Th

rou

gh

pu

t (k

Txn

/s)

Write

Ba

nd

wid

th (

GiB

/s)

Payload Size (KiB)

WT-Throughput (NVMe)WT-Throughput (tmpfs)

WT-Throughput (ELEDA)

WT-Bandwidth (NVMe)WT-Bandwidth (ELEDA)

52 55 56 50 51 60

25 26 27 32 27 30

4138 3912 3682 3494 3222 2488

Figure 14: Performance of WiredTiger w/ varying payload size.

0

1000

2000

3000

4000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

WiredTiger (NVM Logging)WiredTiger (Eleda)

(a) Key-value workload

0

200

400

600

800

1000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

WiredTiger (NVM Logging)WiredTiger (Eleda)

(b) OLTP workload

Figure 15: Throughput comparison between NVM-based log-ging [18] and ELEDA

SSDs. The objective of this experiment is to shed light on ELEDA’spotential application in conventional database systems that mostlyrun on commodity hardware without NVM, by comparing perfor-mance between software-only ELEDA and hardware-assisted NVMlogging. For this experiment, we use a small segment of shared-memory and treat it as fast NVM. We also implement the approachof Fang et al. in ELEDA, by following their algorithms. We thenmeasure the performance of WiredTiger with key-value and OLTPworkloads. As shown in Figure 15, experimental results demon-strate that pure software architecture (ELEDA) on a commodityserver can attain comparable (or sometimes better) performancethan NVM-based logging. It is noteworthy that, to exploit NVM-based logging in conventional database systems, we need to ex-pense not only the cost for hardware (NVM module) but also (andmore importantly) the cost for software modification to make themNVM-aware.

6.5 In-depth Analysis

6.5.1 MicrobenchmarkTo measure various performance metrics, we build a database

logging microbenchmark that creates a transaction which is de-signed to buffer three separate logs to ELEDA, followed by thetransaction commit. For accurate measurements, transaction switch-ing and callback mechanisms are also implemented, and callbacksare invoked after ELEDA flushes logs to the storage devices. Wemeasure throughput, I/O bandwidth and the CDF of commit la-tency by varying the number of database threads and the log size.To maximize device utilization, we reset the I/O unit to 512 KiB.

Figure 16(a) shows the throughput graphs of ELEDA, as we varythe number of database threads and the log size. ELEDA’s through-put increases as we add more database threads. The peak through-put (1.34 million Txn/s) is achieved with a 128 bytes log, and sus-tained until the log size is increased to 2 KiB. After that point, thethroughput decreases as we grow the log size. Figure 16(b) showsI/O bandwidth consumed by ELEDA. Since we use two NVMeSSDs, the maximum aggregated bandwidth for sequential write isaround 6.92 GiB/s. The figure clearly shows that ELEDA can uti-lize more bandwidth as we increase the log size and the count ofdatabase threads. When the log size reaches 4 KiB, ELEDA utilizesfull device bandwidth. Figure 16(c) shows the CDF of a commit la-tency with a 128 bytes log. The CDF indicates that increased CPU

144

0 200 400 600 800

1000 1200 1400

16 32 64 128 256 512 1024 2048 4096 8192

Thro

ugh

put (k

Txn/s

)

Log Size (Bytes)

1 Thread2 Threads

4 Threads8 Threads

16 Threads32 Threads

(a) Throughput

0 1 2 3 4 5 6 7

16 32 64 128 256 512 1024 2048 4096 8192

Write

B/W

(G

iB/s

)

Log Size (Bytes)

1 Thread2 Threads4 Threads8 Threads

16 Threads32 Threads

(b) I/O bandwidth

0

20

40

60

80

100

0 1 2 3 4 5 6 7 8

CD

F (

%)

Latency (ms)

1 Thread2 Threads4 Threads8 Threads

16 Threads32 Threads

(c) CDF of the commit latency with a 128 bytes log

Figure 16: Microbenchmark measures throughput, I/O band-width and CDF of the commit latency.

utilization by more active threads widens the latency distributions.One interesting phenomenon comes to our attention; performanceresults with a single database thread are better than those with twoand four threads. This is because Linux dispatches threads evenlyto different processor sockets to maximize the use of cache mem-ory, but this increases inter-socket memory bus traffic with cacheinvalidation messages in ELEDA. Giving the processor affinity tothreads easily makes the graph look better, but we do not changethe configuration since databases would not do that.

6.5.2 Profiling CPU utilizationProfiling reveals detailed information about various system ac-

tivities, especially about where the time goes. Since WiredTigershows larger performance improvement with ELEDA than Shore-MT, we choose it for profiling.

Figure 17 shows the breakdown of profiled results of WiredTiger(tmpfs), WiredTiger (NVMe) and WiredTiger (ELEDA), with thekey-value workload. As we increase the number of database threads,vanilla engines cannot achieve commensurate CPU utilization dueto too much portion of waiting/sleeping activities primarily causedby the lock contention. With 32 threads, WiredTiger (tmpfs) spends20% time in spin-waiting while WiredTiger (NVMe) has negligibleportion. Since the modern implementation of a mutex, upon con-tention, first incurs spin-waiting, followed by sleeping, WiredTiger(tmpfs) shows substantial portion of spin-waiting due to the re-moval of synchronous I/O delay. Spin-waiting on a mutex vari-able however bursts memory bus with cache invalidation messages,thus resulting in performance collapses in WiredTiger (tmpfs). Thisdefinitely prohibits the system from gaining more utilization. Thesame explanation applies to WiredTiger (NVMe). The differenceis, WiredTiger (NVMe) with 32 database threads spends 88% oftime in doing nothing, but sleeping. At this time, the synchronousI/O delay causes lock contention to reach sleeping most time. Thisis why we observed such low throughput numbers from vanilla

0

20

40

60

80

100

1 2 4 8 16 32

CP

U U

tiliz

atio

n (

%)

No. of DB Threads

KernelLock

DB

(a) tmpfs

0

20

40

60

80

100

1 2 4 8 16 32

CP

U U

tiliz

atio

n (

%)

No. of DB Threads

KernelLock

DB

(b) NVMe

0

20

40

60

80

100

1 2 4 8 16 32

CP

U U

tiliz

atio

n (

%)

No. of DB Threads

KernelELEDA

DB

(c) ELEDA

Figure 17: Profiling CPU utilization: WiredTiger on the key-value workload.

WiredTiger systems. In contrast, WiredTiger (ELEDA) show nei-ther spin-waiting nor sleeping owing to the absence of lock con-tention, and CPU utilization is therefore commensurate with thecount of database threads. Figure 17(c) shows time spent in ELEDA.Note that WiredTiger (ELEDA), unlike other systems, does havenegligible kernel time that is usually increased due to lock con-tention (e.g., futex()).

6.5.3 Effects of I/O unit sizeAs we discussed in §4.3, the commit latency is critically influ-

enced by the I/O unit. A larger I/O unit for higher device utilizationtakes longer to fill the I/O buffer, but this increases the commit la-tency. To analyze the effect of I/O unit on commit latency and band-width utilization, we use different I/O units - 64 KiB and 512 KiB- for ELEDA-based systems, all running 32 database threads withkey-value and OLTP workloads. In all results shown in Figure 18,the smaller I/O unit provides much shorter commit latencies thanthe larger I/O unit. Hence, if there is a strict service-level agree-ment on response time, adjusting the I/O unit for different typesof workloads is required. We then measure the max bandwidthutilization for different I/O units by using large size logs, althoughresults are not plotted here. The max bandwidth utilization ELEDA-based systems can achieve for 64 KiB I/O unit is„3.2 GiB/s, whileELEDA with 512 KiB I/O unit utilizes full device bandwidth (i.e.,6.9 GiB/s). However, the commit latency for 64 KiB I/O unit isan order of magnitude shorter than that for 512 KiB I/O unit. Onemay think that multiple flusher threads may help increasing the uti-lization with a smaller I/O unit, but preserving the sequentiality oflogging with multiple flusher threads would be very challenging.We leave this issue open to our community.

0

20

40

60

80

100

0 2 4 6 8 10

CD

F (

%)

Latency (ms)

64 KiB I/O Unit512 KiB I/O Unit

(a) Key-value, WiredTiger

0

20

40

60

80

100

0 2 4 6 8 10

CD

F (

%)

Latency (ms)

64 KiB I/O Unit512 KiB I/O Unit

(b) Key-value, Shore-MT

0

20

40

60

80

100

0 1 2 3 4

CD

F (

%)

Latency (ms)

64 KiB I/O Unit512 KiB I/O Unit

(c) OLTP, WiredTiger

0

20

40

60

80

100

0 2 4 6 8 10 12 14 16 18

CD

F (

%)

Latency (ms)

64 KiB I/O Unit512 KiB I/O Unit

(d) OLTP, Shore-MT

Figure 18: Effects of the I/O unit to commit latencies.

145

0

1000

2000

3000

4000

1 2 4 8 16 32

Thro

ughput (k

Txn/s

)

No. of DB Threads

WT w/ ELEDAWT w/ Latency-hiding

WT (Vanilla)

(a) Key-value workload

0

200

400

600

800

1000

1200

1 2 4 8 16 32

Thro

ughput (k

Txn/s

)

No. of DB Threads

WT w/ ELEDAWT w/ Latency-hiding

WT (Vanilla)

(b) OLTP workload

Figure 19: Performance breakdown of WiredTiger (ELEDA).

6.5.4 Performance breakdown of ELEDAThe next experiment focuses on ELEDA and measures the con-

tribution of GRASSHOPPER and the latency-hiding technique onthe achieved throughput. The contribution of GRASSHOPPER isfurther broken down by SBL-crawling and SBL-hopping. For theevaluation, we selectively enable one or both of the latency-hidingtechnique and GRASSHOPPER in WiredTiger (ELEDA); they aredenoted as “WT w/ Latency-hiding” and “WT w/ ELEDA”, respec-tively. For comparison, we use vanilla WiredTiger (NVMe) as abaseline (i.e., “WT (Vanilla)”).

Figure 19 shows the performance breakdown of ELEDA withkey-value and OLTP workloads. The contribution of the latency-hiding technique is dominant when the count of database threads islow because a small number of threads incur negligible lock con-tention overhead. As load increases, the sole use of the latency-hiding technique reveals its limitation in scaling the performance,primarily due to lock contention with multiple threads of execu-tion. If lock contention is left unaddressed, the effect of the latency-hiding technique diminishes, and the system suffers the serializa-tion bottleneck (see the throughput collapse of “WT w/ Latency-hiding” in Figure 19).

Once the latency-hiding technique reaches its limitation (i.e.,„4database threads), GRASSHOPPER plays a major role in achiev-ing scalable performance by eliminating the scalability bottleneck.Hence GRASSHOPPER contributes to achieving commensurate per-formance as the count of database threads increases (i.e., the resultsof “WT w/ ELEDA”). Since GRASSHOPPER is designed to resolveserialization bottleneck on multicores, it is of use to the systemthat shows a clear indication of performance bottlenecks causedby lock contention; in other words it is of no use to the system ifsynchronous I/O delay remains unresolved. Overall, performancebreakdown shows that ELEDA successfully resolves whichever ofthe synchronous I/O delay and the scalability bottleneck is a matterof pressing concern.

We further break down the impact of GRASSHOPPER on ELEDAto have a deeper understanding of interplay between SBL-crawlingand SBL-hopping. For this evaluation, we use WiredTiger (ELEDA)

4 8 12 16 20 24 28 32 0.5 1 2

3 4

5 0

20

40

60

80

100

Cra

wlin

g R

atio (

%)

No. of DB ThreadsPayload (K

iB)Cra

wlin

g R

atio (

%)

40 50 60 70 80 90 100

Figure 20: Performance breakdown of GRASSHOPPER.

0

1000

2000

3000

4000

1 2 4 8 16 32

Th

rou

gh

pu

t (k

Txn

/s)

No. of Database Threads

WiredTiger (ELEDA w/ SCAN)WiredTiger (ELEDA w/ GRASSPHOPPER)

128 170 96 91 81 47

Figure 21: Throughput comparison between GRASSHOPPERand a linear scanning approach on the key-value workload.

on the key-value workload and measure the contribution of SBL-crawling on the measured throughput. The contribution of crawl-ing is quantified by the ratio of the SBL increment conducted bySBL-crawling to the total, and it is denoted as “Crawling Ratio”in Figure 20. SBL-crawling makes a principal contribution to theperformance when the thread count is low where the overhead ofmanaging the LSN-heap can be neglected. As the count of threadsincreases and payload size is decreased, SBL-crawling incurs sig-nificant overhead in manipulating the LSN-heap. This causes SBL-crawling to start falling behind, and this is the moment when SBL-hopping plays a key role in advancing SBL in a timely manner. Theeffect of this interplay is well presented in Figure 20.

Finally, we compare GRASSHOPPER with a simple scanning ap-proach for tracking LSN holes to see the clear benefit of GRASSHOP-PER. For the evaluation, we use a bitmap with each bit representinga single byte of a log buffer. A dedicated worker simply scans abitmap to advance SBL while database threads set bits after copy-ing their logs, except that database threads stop before they outrunthe dedicated worker thread. We run the same YCSB workloadused before against the WiredTiger engine. As shown in Figure 21,the scanning approach hits its max speed quickly (i.e., „40 MiB/s)even with 2 database threads. We believe that designing an efficientscanning mechanism would require nontrivial effort and warrantmeaningful research outcomes.

7. CONCLUSIONSWe have observed that contemporary database systems relying

on ARIES (or its variants) have faced significant performance bot-tlenecks in centralized logging on computing platforms with dozensof processor cores and fast storage devices. With two open-sourcesystems, we have identified non-scalable locks (or algorithms) usedin the centralized logging as the main bottleneck. To address theproblem, we proposed a fast, scalable logging method, ELEDA,that is based on highly concurrent data structures. ELEDA alsointegrated transaction switching and asynchronous callback mech-anisms to address synchronous I/O delay. Our evaluation demon-strated that ELEDA is modular and applicable to any transactionsystems suffering the bottleneck in centralized logging. As hard-ware vendors provision more cores, scalability issues in systemsoftware deserve careful consideration so as not to have undesir-able performance problems. In this regard, ELEDA provides onemethod to address the key challenge in database logging, that is anessential prerequisite for high performance transaction systems.

8. ACKNOWLEDGMENTSThis work was supported by the National Research Foundation

of Korea grants (2017R1A2B4006134 and 2015M3C4A7065645)funded by the Korea government. This work was also supportedby the R&D program of MOTIE/KEIT (10077609). We would liketo thank the anonymous PVLDB reviewers for valuable commentswhich helped to improve the paper.

146

9. REFERENCES[1] Amazon Web Services Inc. SAP HANA on the AWS X1

Instance with 2 TiB Memory and 4-way Intel R© Xeon R©

E7-8880 v3. https://aws.amazon.com/sap/solutions/saphana/.

[2] J. Arulraj, A. Pavlo, and S. R. Dulloor. Let’s talk aboutstorage & recovery methods for non-volatile memorydatabase systems. In Proceedings of the 2015 ACM SIGMODInternational Conference on Management of Data, SIGMOD’15, pages 707–722, New York, NY, USA, 2015. ACM.

[3] J. Arulraj, M. Perron, and A. Pavlo. Write-behind logging.PVLDB, 10(4):337–348, Nov. 2016.