Midterm exam, Mathematics 504

CSUF, spring 2008

by Nasser Abbasi

April 2, 2008

Contents

1 Questions

2 Problem 1

2.1 Part (A)

2.2 Part (B)

2.3 Extra/not part of exam requirement

3 Problem 2

3.1 Part (A)

3.2 Part (b)

3.3 Part (c)

4 Problem 3

5 Problem 4

5.1 Part (A)

5.2 Part (b)

5.3 Part (c)

5.4 Part (d)

5.5 Extra/not part of exam requirement

1 Questions

Math 504 Exam 1

Show your work. The grade for a problem will be based on the work and reasoning shown, not just the answer.

1. A stochastic process has states $0, 1, 2, \ldots, r$. If the process is in state $i \ge 1$, then during the next time step, it goes to a state that is selected at random from among the states $0, 1, 2, \ldots, i-1$. If the process is in state 0, it stays there. This process can be modelled as a Markov chain. (a) Specify the one-step transition probability matrix. (b) Let $Y_i$ be the number of steps that the process takes to reach state 0, given that it starts in state $i$. Derive a linear system of equations that determines the expected values $E(Y_i)$, for $i = 1, 2, \ldots, r$. Write the linear system in the form $Ax = b$, where the components of the vector $x$ are the unknown expected values. Specify the matrices $A$ and $b$.

2. A coin shows heads (H) with probability $p$ and tails (T) with probability $q = 1 - p$, where $0 < p < 1$. The coin is tossed repeatedly and independently until either the pattern HH occurs, or the pattern TH occurs. (a) Model the problem as a Markov chain. Specify the states and the one-step probability transition matrix. (b) Let $T$ denote the set of transient states. For $i \in T$, let $y_i$ denote the probability that state TH is reached eventually, given that the process starts in state $i$. Derive a linear system of equations that determines the probabilities $y_i$ for $i \in T$. (c) Solve this system of equations, and select the appropriate component of $y$ to determine the probability of eventually reaching state TH. Help: It would be useful to draw the graph of the chain. Also, number the states in a convenient way.

3. Suppose $N$ red balls and $N$ white balls are placed in two urns so that each urn contains $N$ balls. Label one urn A and the other B. The following action is performed repeatedly and independently: A ball is selected at random from urn A and independently a ball is selected at random from urn B. Each ball is then transferred to the other urn. The state of the system at the beginning of each trial is the number of white balls in urn B. This process can be modelled as a regular Markov chain. Determine the one-step transition probability matrix.

4. A machine component has a random lifetime $L$ with given discrete distribution $P(L = k) = \beta_k$, for $k = 1, 2, \ldots$. Assume $\beta_k > 0$ for all $k$. A component is put into operation, and replaced when it burns out. However, if the component in operation has age $N$, it is instantly replaced by a new component. This process can be modelled as a regular Markov chain, where the state $X_n$ at time $n = 0, 1, 2, \ldots$ is defined by $X_n = i$ if the component in operation has age $i$. The possible states are $i = 0, 1, 2, \ldots, N-1$. The state $i = 0$ means that a new component has just been placed into operation. (a) Determine the one-step transition probabilities. Help: For $i = 0, 1, 2, \ldots, N-2$,

$$a_i = P_{i,i+1} = P(L > i+1 \mid L > i), \quad \text{and} \quad P_{N-1,0} = 1.$$

First, find the probabilities $\{a_i\}$, $i = 0, 1, 2, \ldots, N-2$. Then, in terms of the $\{a_i\}$, show the one-step transition probability matrix. (b) Suppose we wish to study the time between replacements. Interpret this time as a first entrance time. (c) Derive a linear system of equations that determines the expected first entrance times from states $i = 1, 2, \ldots, N-1$ to state zero. Express these equations in terms of the $\{a_i\}$. (d) Suppose the expected first entrance times in part (c) have been determined. Find a single formula for the expected first entrance time from state 0 to state 0. Express this equation in terms of the $\{a_i\}$ and the expected first entrance times from part (c).

2 Problem 1

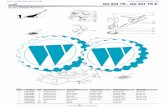

The following diagram helps to illustrate the Markov chain layout

P=1

2.1 Part (A)

P=1/3

Markov Chain for problem one

····0

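From the above diagram we see that the one-step transition probability matrix $P$ is the following. For states $i = 1, 2, \ldots, r$,

$$P_{ij} = \begin{cases} \dfrac{1}{i} & j < i \\ 0 & \text{otherwise} \end{cases}$$

and for state $i = 0$,

$$P_{0j} = \begin{cases} 1 & j = 0 \\ 0 & \text{otherwise} \end{cases}$$

In matrix form, with rows and columns indexed by the states $0, 1, 2, \ldots, r-1, r$:

$$P = \begin{pmatrix}
1 & 0 & 0 & \cdots & 0 & 0 \\
1 & 0 & 0 & \cdots & 0 & 0 \\
\frac{1}{2} & \frac{1}{2} & 0 & \cdots & 0 & 0 \\
\vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\
\frac{1}{r-1} & \frac{1}{r-1} & \frac{1}{r-1} & \cdots & 0 & 0 \\
\frac{1}{r} & \frac{1}{r} & \frac{1}{r} & \cdots & \frac{1}{r} & 0
\end{pmatrix}$$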
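The piecewise definition of $P_{ij}$ can be checked numerically. The following is a minimal Python sketch, not part of the exam answer; the function name `transition_matrix` is made up for illustration, and exact fractions are used so that the row sums can be compared with 1 without rounding.

```python
from fractions import Fraction

def transition_matrix(r):
    """One-step matrix for states 0..r: from i >= 1 jump uniformly to 0..i-1."""
    P = [[Fraction(0)] * (r + 1) for _ in range(r + 1)]
    P[0][0] = Fraction(1)              # state 0 is absorbing
    for i in range(1, r + 1):
        for j in range(i):             # only lower-numbered states are reachable
            P[i][j] = Fraction(1, i)
    return P

P = transition_matrix(4)
for row in P:
    print([str(x) for x in row])
```

Each row of the result sums to 1, which is a quick sanity check that the matrix is a valid transition matrix.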

2.2 Part (B)

Using next-state conditioning we write

$$E(Y_i) = \sum_{k \in I} E(Y_i \mid X_1 = k, X_0 = i)\, P_{ik}$$

where $I$ is the set of all states $0, 1, \ldots, r$. However, for states $k > i$, $P_{ik} = 0$, since the Markov chain only switches to lower-numbered states, and when it is in state 0 it switches to state 0 always. Then the above becomes

$$E(Y_i) = \sum_{k=0}^{i} E(Y_i \mid X_1 = k, X_0 = i)\, P_{ik}$$

But due to the Markov property we can remove the conditioning on earlier states, so the above simplifies to

$$E(Y_i) = \sum_{k=0}^{i} E(Y_i \mid X_1 = k)\, P_{ik}$$

But $E(Y_i \mid X_1 = k) = 1 + E(Y_k)$, since we took one step to reach state $X_1 = k$, so the above becomes

$$E(Y_i) = \sum_{k=0}^{i} \left[1 + E(Y_k)\right] P_{ik} = \sum_{k=0}^{i} P_{ik} + \sum_{k=0}^{i} E(Y_k)\, P_{ik}$$

But $\sum_{k=0}^{i} P_{ik} = 1$ from the Markov chain description, hence the above becomes

$$E(Y_i) = 1 + \sum_{k=0}^{i} E(Y_k)\, P_{ik}$$

Now if state $k = 0$ then $E(Y_0) = 0$, since the Markov chain is already in state 0 and so zero steps are needed. Hence the summation index in the above needs to start only from 1:

$$E(Y_i) = 1 + \sum_{k=1}^{i} E(Y_k)\, P_{ik}, \qquad i = 1, 2, \ldots, r \tag{1}$$

Expanding a few terms to see the form of the matrices involved, we see the following

$$E(Y_i) = 1 + E(Y_1) P_{i1} + E(Y_2) P_{i2} + \cdots + E(Y_i) P_{ii}$$

Looking at a few rows, we see

$$\begin{aligned}
E(Y_1) &= 1 + E(Y_1) P_{11} \\
E(Y_2) &= 1 + E(Y_1) P_{21} + E(Y_2) P_{22} \\
E(Y_3) &= 1 + E(Y_1) P_{31} + E(Y_2) P_{32} + E(Y_3) P_{33} \\
E(Y_4) &= 1 + E(Y_1) P_{41} + E(Y_2) P_{42} + E(Y_3) P_{43} + E(Y_4) P_{44}
\end{aligned}$$

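Hence in matrix form, letting $x = [E(Y_1)\ E(Y_2)\ \cdots\ E(Y_r)]^T$ and

$$A = \begin{pmatrix}
P_{11} & 0 & 0 & \cdots & 0 \\
P_{21} & P_{22} & 0 & \cdots & 0 \\
P_{31} & P_{32} & P_{33} & \cdots & 0 \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
P_{r1} & P_{r2} & P_{r3} & \cdots & P_{rr}
\end{pmatrix}$$

then we write

$$x = b + Ax$$

where $b$ is the $e$ vector (column vector of 1's). Note that $P_{ii} = 0$ for $i \ge 1$, so the diagonal entries of $A$ vanish and $A$ is strictly lower triangular. In full form the above can be written as

$$\begin{pmatrix} E(Y_1) \\ E(Y_2) \\ E(Y_3) \\ \vdots \\ E(Y_r) \end{pmatrix}
= \begin{pmatrix} 1 \\ 1 \\ 1 \\ \vdots \\ 1 \end{pmatrix}
+ \begin{pmatrix}
P_{11} & 0 & 0 & \cdots & 0 \\
P_{21} & P_{22} & 0 & \cdots & 0 \\
P_{31} & P_{32} & P_{33} & \cdots & 0 \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
P_{r1} & P_{r2} & P_{r3} & \cdots & P_{rr}
\end{pmatrix}
\begin{pmatrix} E(Y_1) \\ E(Y_2) \\ E(Y_3) \\ \vdots \\ E(Y_r) \end{pmatrix}$$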

Now we can solve the above for $x$:

$$\begin{aligned}
x - Ax &= b \\
(I - A)\,x &= b \\
x &= (I - A)^{-1} b
\end{aligned} \tag{2}$$

So, if we write $B = (I - A)^{-1}$ then $x = Bb$; in other words, $E(Y_i)$ is the sum of the $i$th row of the $B$ matrix.
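Because $P_{ik} = 0$ for $k \ge i$, the system $(I - A)x = b$ is triangular and can be solved row by row without forming an inverse. The sketch below is one possible way to do that in Python with exact fractions; the name `expected_steps` is made up for illustration and forward substitution is swapped in here for the matrix inverse in equation (2).

```python
from fractions import Fraction

def expected_steps(r):
    """Solve (I - A) x = b by forward substitution; returns [E(Y_1), ..., E(Y_r)]."""
    E = [Fraction(0)] * (r + 1)        # E[0] = 0: already in state 0
    for i in range(1, r + 1):
        # E(Y_i) = 1 + sum_{k=1}^{i-1} E(Y_k) * P_ik, with P_ik = 1/i
        E[i] = 1 + sum(E[1:i], Fraction(0)) / i
    return E[1:]

for i, e in enumerate(expected_steps(10), start=1):
    print(i, e, float(e))
```

The values satisfy $E(Y_i) = E(Y_{i-1}) + 1/i$, so they are the harmonic numbers; for $r = 10$ the last entry is $7381/2520 \approx 2.928968$.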

2.3 Extra/not part of exam requirement

This is a small program which shows the result (the expected number of steps to reach state 0 starting at state $i = 1, 2, \ldots, 10$), in other words, for $r = 10$. The program sets up the $I - A$ matrix, and then solves equation (2) above, showing $E(Y_i)$ for all the states.

Display the one step transition matrix (part A)

    (* one-step transition probabilities for states 0..r *)
    p[i_, j_] := 1/i /; (i >= 1 && j < i)
    p[i_, j_] := 1 /; (i == 0 && j == 0)
    p[i_, j_] := 0
    r = 10;
    A = Table[p[i, j], {i, 0, r}, {j, 0, r}];
    MatrixForm[A]

Output (rows and columns are states 0 to 10):

    1     0     0     0     0     0     0     0     0     0     0
    1     0     0     0     0     0     0     0     0     0     0
    1/2   1/2   0     0     0     0     0     0     0     0     0
    1/3   1/3   1/3   0     0     0     0     0     0     0     0
    1/4   1/4   1/4   1/4   0     0     0     0     0     0     0
    1/5   1/5   1/5   1/5   1/5   0     0     0     0     0     0
    1/6   1/6   1/6   1/6   1/6   1/6   0     0     0     0     0
    1/7   1/7   1/7   1/7   1/7   1/7   1/7   0     0     0     0
    1/8   1/8   1/8   1/8   1/8   1/8   1/8   1/8   0     0     0
    1/9   1/9   1/9   1/9   1/9   1/9   1/9   1/9   1/9   0     0
    1/10  1/10  1/10  1/10  1/10  1/10  1/10  1/10  1/10  1/10  0

Solve the expected number of steps (part B)

    (* drop state 0 to obtain the r x r matrix A of equation (1) *)
    B = A[[2 ;; -1, 2 ;; -1]];
    MatrixForm[B];
    expectedSteps = Inverse[IdentityMatrix[r] - B] . Table[1, {r}];
    expectedSteps = Table[{i, N[expectedSteps[[i]], 16]}, {i, r}];
    TableForm[expectedSteps, TableHeadings -> {None, {"i", "E(Yi)"}}]

Output:

    i     E(Yi)
    1     1.000000000000000
    2     1.500000000000000
    3     1.833333333333333
    4     2.083333333333333
    5     2.283333333333333
    6     2.450000000000000
    7     2.592857142857143
    8     2.717857142857143
    9     2.828968253968254
    10    2.928968253968254


3 Problem 2

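3.1 Part (A)

The following diagram shows the Markov chain for this problem.

Figure: Markov chain diagram for problem 2, with $\Pr(H) = p$ and $\Pr(T) = q$.

The states are numbered as follows: state 0 is the pattern HH and state 1 is the pattern TH, state 2 is the start (no toss made yet), state 3 means the last toss was H with no pattern completed yet, and state 4 means the last toss was T. Transient states are $\{2, 3, 4\}$ and absorbing states are $\{0, 1\}$.

The one-step probability transition matrix, with the absorbing states listed first (rows and columns in the order $0, 1, 2, 3, 4$), is

$$P = \begin{pmatrix}
1 & 0 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 & 0 \\
0 & 0 & 0 & p & q \\
p & 0 & 0 & 0 & q \\
0 & p & 0 & 0 & q
\end{pmatrix}$$

The lower-left $3 \times 2$ block (rows 2 to 4, columns 0 and 1) is the $R$ matrix, and the lower-right $3 \times 3$ block (rows 2 to 4, columns 2 to 4) is the $Q$ matrix.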
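The block structure of this matrix can be made concrete with a short Python sketch. It is only an illustration: the function name `coin_chain` and the reading of the transient states (2 = start, 3 = last toss H, 4 = last toss T) are assumptions inferred from the entries of the $Q$ and $R$ matrices used in part (c).

```python
from fractions import Fraction

def coin_chain(p):
    """Transition matrix over states 0=HH, 1=TH, 2=start, 3=H, 4=T (assumed labels)."""
    q = 1 - p
    return [
        [1, 0, 0, 0, 0],   # HH is absorbing
        [0, 1, 0, 0, 0],   # TH is absorbing
        [0, 0, 0, p, q],   # start: H -> state 3, T -> state 4
        [p, 0, 0, 0, q],   # after H: another H completes HH, T -> state 4
        [0, p, 0, 0, q],   # after T: H completes TH, T stays in state 4
    ]

P = coin_chain(Fraction(1, 3))
R = [row[:2] for row in P[2:]]   # transient -> absorbing block
Q = [row[2:] for row in P[2:]]   # transient -> transient block
print(R)
print(Q)
```

Slicing the rows of the transient states gives the $R$ and $Q$ blocks directly, which is why listing the absorbing states first is convenient.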

3.2 Part (b)

Let $T = \{2, 3, 4\}$ be the set of transient states, let $I$ be the set of all states $\{0, 1, 2, 3, 4\}$, and let $A$ be the set of absorbing states $\{0, 1\}$.

Let $E$ be the event of reaching state 1 (which is TH) eventually. Then we need to find $y_i = \Pr(E \mid X_0 = i)$. Using conditioning on the next state, we write

$$y_i = \sum_{k \in I} \Pr(E \mid X_1 = k, X_0 = i)\, \Pr(X_1 = k \mid X_0 = i)$$

But $\Pr(X_1 = k \mid X_0 = i) = P_{ik}$ and $\Pr(E \mid X_1 = k, X_0 = i) = \Pr(E \mid X_1 = k)$ due to the Markov property, hence the above becomes

$$y_i = \sum_{k \in I} \Pr(E \mid X_1 = k)\, P_{ik}$$

But $\Pr(E \mid X_1 = k) = y_k$, hence the above becomes

$$y_i = \sum_{k \in I} y_k P_{ik}$$

Now consider all the states. The above can be written as

$$y_i = \sum_{k \in A} y_k P_{ik} + \sum_{k \in T} y_k P_{ik} \tag{1}$$

When $k \in T$, $P_{ik}$ is the same as $q_{ik}$, since this is an element of the $Q$ matrix, and when $k \in A$, $P_{ik}$ is the same as $r_{ik}$, since now we are in the $R$ matrix portion of the $P$ matrix. Then (1) can be written as

$$y_i = \sum_{k \in A} y_k r_{ik} + \sum_{k \in T} y_k q_{ik}$$

When $k \in A$ and $k = 0$, then $y_k = 0$, since once the chain is in state 0 it can never switch to state 1, because state 0 is an absorbing state. And when $k = 1$, then $y_k = 1$. Hence the above becomes

$$y_i = r_{i1} + \sum_{k \in T} y_k q_{ik}, \qquad i \in T$$

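In matrix form, the above can be written as

$$x = b + Qx \tag{2}$$

In full form

$$\overbrace{\begin{pmatrix} y_2 \\ y_3 \\ y_4 \end{pmatrix}}^{x}
= \overbrace{\begin{pmatrix} r_{21} \\ r_{31} \\ r_{41} \end{pmatrix}}^{b}
+ \overbrace{\begin{pmatrix} q_{22} & q_{23} & q_{24} \\ q_{32} & q_{33} & q_{34} \\ q_{42} & q_{43} & q_{44} \end{pmatrix}}^{Q}
\overbrace{\begin{pmatrix} y_2 \\ y_3 \\ y_4 \end{pmatrix}}^{x}$$

3.3 Part (c)

From (2),

$$\begin{aligned}
x - Qx &= b \\
(I - Q)\,x &= b
\end{aligned}$$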

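Now solving this for $x$. First we note that

$$Q = \begin{pmatrix} 0 & p & q \\ 0 & 0 & q \\ 0 & 0 & q \end{pmatrix}, \qquad b = \begin{pmatrix} 0 \\ 0 \\ p \end{pmatrix}$$

so that

$$(I - Q) = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} - \begin{pmatrix} 0 & p & q \\ 0 & 0 & q \\ 0 & 0 & q \end{pmatrix} = \begin{pmatrix} 1 & -p & -q \\ 0 & 1 & -q \\ 0 & 0 & 1-q \end{pmatrix}$$

Let $(I - Q) = B$, hence

$$B^{-1} = \frac{1}{\det(B)} \operatorname{adjoint}(B) = \frac{1}{\det(B)} \left[\text{cofactor matrix}(B)\right]^T$$

Now the determinant of $(I - Q)$ is $1 \times (1 - q) + p\,(0) - q\,(0) = 1 - q$, and the minors $b_{ij}$ (the determinant left after deleting row $i$ and column $j$ of $B$) are

$$\begin{aligned}
b_{11} &= \begin{vmatrix} 1 & -q \\ 0 & 1-q \end{vmatrix} = 1 - q, &
b_{12} &= \begin{vmatrix} 0 & -q \\ 0 & 1-q \end{vmatrix} = 0, &
b_{13} &= \begin{vmatrix} 0 & 1 \\ 0 & 0 \end{vmatrix} = 0, \\
b_{21} &= \begin{vmatrix} -p & -q \\ 0 & 1-q \end{vmatrix} = -p\,(1-q), &
b_{22} &= \begin{vmatrix} 1 & -q \\ 0 & 1-q \end{vmatrix} = 1 - q, &
b_{23} &= \begin{vmatrix} 1 & -p \\ 0 & 0 \end{vmatrix} = 0, \\
b_{31} &= \begin{vmatrix} -p & -q \\ 1 & -q \end{vmatrix} = pq + q, &
b_{32} &= \begin{vmatrix} 1 & -q \\ 0 & -q \end{vmatrix} = -q, &
b_{33} &= \begin{vmatrix} 1 & -p \\ 0 & 1 \end{vmatrix} = 1.
\end{aligned}$$

Hence

$$\left[\text{cofactor matrix}(B)\right]^T
= \begin{pmatrix} b_{11} & -b_{12} & b_{13} \\ -b_{21} & b_{22} & -b_{23} \\ b_{31} & -b_{32} & b_{33} \end{pmatrix}^T
= \begin{pmatrix} 1-q & 0 & 0 \\ p\,(1-q) & 1-q & 0 \\ pq+q & q & 1 \end{pmatrix}^T
= \begin{pmatrix} 1-q & p\,(1-q) & pq+q \\ 0 & 1-q & q \\ 0 & 0 & 1 \end{pmatrix}$$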

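Hence

$$B^{-1} = \frac{1}{\det(B)} \operatorname{adjoint}(B) = \frac{1}{1-q} \begin{pmatrix} 1-q & p\,(1-q) & pq+q \\ 0 & 1-q & q \\ 0 & 0 & 1 \end{pmatrix}$$

Hence

$$(I - Q)^{-1} = \begin{pmatrix} 1 & p & \frac{pq+q}{1-q} \\ 0 & 1 & \frac{q}{1-q} \\ 0 & 0 & \frac{1}{1-q} \end{pmatrix}$$

Then

$$\begin{pmatrix} y_2 \\ y_3 \\ y_4 \end{pmatrix} = (I - Q)^{-1} b
= \begin{pmatrix} 1 & p & \frac{pq+q}{1-q} \\ 0 & 1 & \frac{q}{1-q} \\ 0 & 0 & \frac{1}{1-q} \end{pmatrix} \begin{pmatrix} 0 \\ 0 \\ p \end{pmatrix}
= \begin{pmatrix} \frac{p\,(pq+q)}{1-q} \\ \frac{pq}{1-q} \\ \frac{p}{1-q} \end{pmatrix}$$

Since $1 - q = p$, this gives

$$\begin{pmatrix} y_2 \\ y_3 \\ y_4 \end{pmatrix} = \begin{pmatrix} q\,(1+p) \\ q \\ 1 \end{pmatrix}$$

The process starts in state 2, so the probability of eventually reaching state TH is $y_2 = q\,(1 + p) = 1 - p^2$.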
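The system $(I - Q)\,y = b$ is upper triangular, so it can also be solved by back substitution instead of inverting the matrix. The following Python sketch does this with exact fractions; the function name `prob_reach_TH` and the test value $p = 1/3$ are chosen only for illustration.

```python
from fractions import Fraction

def prob_reach_TH(p):
    """Solve (I - Q) y = b for transient states 2, 3, 4 by back substitution."""
    q = 1 - p
    y4 = p / (1 - q)          # row 4: y4 = p + q*y4
    y3 = q * y4               # row 3: y3 = q*y4 (the p branch ends in HH, contributing 0)
    y2 = p * y3 + q * y4      # row 2: y2 = p*y3 + q*y4
    return y2, y3, y4

p = Fraction(1, 3)
y2, y3, y4 = prob_reach_TH(p)
print(y2, y3, y4)
print(y2 == 1 - p**2)
```

The only way to miss TH is for the first two tosses to be HH, which has probability $p^2$; this agrees with the first component returned by the sketch.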

4 Problem 3

Let $x$ represent the number of white balls in urn B at the start of each trial. Therefore $x$ can take on the values $0, 1, 2, \ldots, N$, and these will be the states of the Markov chain as well.

$x$ can change by at most one ball at each step, since urn B can gain at most one white ball, or it can lose at most one white ball, or the number of white balls in B can remain unchanged.

Hence when the chain is in state $x$ the next state can be $x - 1$ (unless $x = 0$ already, see below), or the next state can remain at $x$, or the next state can be $x + 1$ (unless $x = N$ already, see below).

We consider the 2 special cases of $x = 0$ and $x = N$ and then consider the general case of $0 < x < N$.

1. Case $x = 0$: In this state, there are no white balls in B, hence all balls in urn B are red and all balls in urn A must therefore be white. Hence one can only select a red ball from B and a white ball from A, and this occurs with certainty. Hence after the switch, urn B will gain a white ball in exchange for the red ball it lost. Hence $P_{0,1} = 1$ and all other probabilities are zero, since no other switches are possible.

2. Case $x = N$: In this state, all balls in urn B are white and all balls in urn A are red. Hence one can only select a white ball from B and a red ball from A, and this is done with certainty. Hence after the switch, urn B will lose one white ball and gain a red ball in its place. Hence $P_{N,N-1} = 1$ and all other probabilities are zero, since no other switches are possible.

3. Case $0 < x < N$: In this state, urn B has $x$ white balls and $N - x$ red balls, and urn A has $N - x$ white balls and $x$ red balls. Now we calculate the probabilities $P_{x,x-1}$, $P_{x,x}$, and $P_{x,x+1}$.

(a) $P_{x,x-1}$: Here, urn B loses a white ball. This can only happen if B loses a white ball (which can happen with probability $\frac{x}{N}$) and it is replaced by a red ball from A (which can happen with probability $\frac{x}{N}$). Hence due to independence we obtain

$$P_{x,x-1} = \frac{x}{N} \cdot \frac{x}{N} = \left(\frac{x}{N}\right)^2$$

(b) $P_{x,x}$: Here the number of white balls in B remains unchanged. This can only happen if B loses a white ball (which can happen with probability $\frac{x}{N}$) and it is replaced by a white ball from A (which can happen with probability $\frac{N-x}{N}$), or if B loses a red ball (which can happen with probability $\frac{N-x}{N}$) and it is replaced by a red ball from A (which can happen with probability $\frac{x}{N}$). Hence

$$P_{x,x} = \frac{x}{N} \cdot \frac{N-x}{N} + \frac{N-x}{N} \cdot \frac{x}{N} = 2 \left(\frac{N-x}{N} \cdot \frac{x}{N}\right)$$

(c) $P_{x,x+1}$: Here the number of white balls in B increases by one. This can only happen if B loses a red ball (which can happen with probability $\frac{N-x}{N}$) and it is replaced by a white ball from A (which can happen with probability $\frac{N-x}{N}$). Hence

$$P_{x,x+1} = \frac{N-x}{N} \cdot \frac{N-x}{N} = \left(\frac{N-x}{N}\right)^2$$

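Now that we have found all possible one-step transition probabilities, we can also show the $P$ matrix for a few states for better illustration:

$$\begin{array}{c|ccccccccc}
\text{state} & 0 & 1 & \cdots & x-1 & x & x+1 & \cdots & N-1 & N \\
\hline
0 & 0 & 1 & \cdots & 0 & 0 & 0 & \cdots & 0 & 0 \\
\vdots & & & & & & & & & \\
x & 0 & 0 & \cdots & \left(\frac{x}{N}\right)^2 & 2\left(\frac{N-x}{N} \cdot \frac{x}{N}\right) & \left(\frac{N-x}{N}\right)^2 & \cdots & 0 & 0 \\
\vdots & & & & & & & & & \\
N & 0 & 0 & \cdots & 0 & 0 & 0 & \cdots & 1 & 0
\end{array}$$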
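The three cases can be combined into one routine that fills the whole matrix. The following Python sketch is an illustration only (the function name `urn_matrix` is made up); it uses exact fractions so that each row can be checked to sum to 1.

```python
from fractions import Fraction

def urn_matrix(N):
    """Transition matrix for the number of white balls in urn B (states 0..N)."""
    P = [[Fraction(0)] * (N + 1) for _ in range(N + 1)]
    for x in range(N + 1):
        if x > 0:
            P[x][x - 1] = Fraction(x, N) ** 2          # B gives white, receives red
        if x < N:
            P[x][x + 1] = Fraction(N - x, N) ** 2      # B gives red, receives white
        P[x][x] = 2 * Fraction(x * (N - x), N * N)     # colours exchanged are equal
    return P

for row in urn_matrix(3):
    print([str(v) for v in row])
```

The boundary rows need no special handling: for $x = 0$ and $x = N$ the general formulas already give $P_{0,1} = 1$ and $P_{N,N-1} = 1$.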

5 Problem 4

5.1 Part (A)

$$a_i = P_{i,i+1} = P(L > i+1 \mid L > i) = \frac{P(L > i+1,\ L > i)}{P(L > i)}$$

But $P(L > i+1,\ L > i) = P(L > i+1)$, hence the above becomes

$$a_i = \frac{P(L > i+1)}{P(L > i)} \tag{1}$$

Now, since $L$ takes the values $1, 2, \ldots$,

$$P(L > i+1) = P(L = i+2) + P(L = i+3) + \cdots = \beta_{i+2} + \beta_{i+3} + \cdots \tag{2}$$

and

$$P(L > i) = P(L = i+1) + P(L = i+2) + \cdots = \beta_{i+1} + \beta_{i+2} + \cdots \tag{3}$$

Substituting (2) and (3) into (1) we obtain

$$a_i = \frac{\beta_{i+2} + \beta_{i+3} + \cdots}{\beta_{i+1} + \beta_{i+2} + \cdots} \tag{4}$$

Now the probability of switching from state $i$ to state 0 in the next step is the probability of the component failing at age $i$. Hence

$$P_{i,0} = P\{\text{component fails at age } i\} = 1 - P\{\text{component does not fail at age } i\}$$

But $P\{\text{component does not fail at age } i\}$ is the same as the probability that the component lives to age $i + 1$, hence

$$P_{i,0} = 1 - P_{i,i+1} = 1 - a_i \tag{5}$$

Using (4) and (5) we can now draw the Markov chain, and set up the one-step transition matrix, as shown in the diagram below.

Figure: Markov chain for problem 4. State $i$ moves to state $i + 1$ with probability $a_i$ and returns to state 0 with probability $1 - a_i$; state $N - 1$ returns to state 0 with probability 1.

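And the $P$ matrix is

$$\begin{array}{c|ccccccc}
\text{state} & 0 & 1 & 2 & 3 & \cdots & N-2 & N-1 \\
\hline
0 & 1 - a_0 & a_0 & 0 & 0 & \cdots & 0 & 0 \\
1 & 1 - a_1 & 0 & a_1 & 0 & \cdots & 0 & 0 \\
2 & 1 - a_2 & 0 & 0 & a_2 & \cdots & 0 & 0 \\
\vdots & \vdots & & & & \ddots & & \vdots \\
N-2 & 1 - a_{N-2} & 0 & 0 & 0 & \cdots & 0 & a_{N-2} \\
N-1 & 1 & 0 & 0 & 0 & \cdots & 0 & 0
\end{array}$$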
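To see the matrix for concrete numbers, the sketch below computes the $a_i$ from a lifetime distribution and fills $P$. It is a Python illustration under stated assumptions: the names `survival_ratios` and `age_chain` are made up, and the list `beta` is a hypothetical distribution with finite support (lifetimes 1 to 4), chosen so that the tail sums are finite and exact.

```python
from fractions import Fraction

def survival_ratios(beta, N):
    """a_i = P(L > i+1) / P(L > i) for i = 0..N-2, where beta[k-1] = P(L = k)."""
    tail = lambda i: sum(beta[i:], Fraction(0))    # P(L > i)
    return [tail(i + 1) / tail(i) for i in range(N - 1)]

def age_chain(beta, N):
    """Transition matrix over ages 0..N-1 with forced replacement at age N."""
    a = survival_ratios(beta, N)
    P = [[Fraction(0)] * N for _ in range(N)]
    for i in range(N - 1):
        P[i][0] = 1 - a[i]        # component fails, a new one starts at age 0
        P[i][i + 1] = a[i]        # component survives one more step
    P[N - 1][0] = Fraction(1)     # age N is never reached: replace for sure
    return P

# Hypothetical lifetime distribution on {1, 2, 3, 4}, for illustration only.
beta = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 8), Fraction(1, 8)]
for row in age_chain(beta, 3):
    print([str(v) for v in row])
```

The sketch requires $P(L > N-2) > 0$ so that every ratio is defined, which holds whenever all $\beta_k$ up to age $N - 1$ are positive.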

5.2 Part (b)

A component is replaced when it fails or when it reaches age $N$. When a component is replaced, the chain will be in state 0, and it will return to this state when the component fails and has to be replaced again, or when it reaches age $N$. Hence the average time a component remains operational is the average time between replacements. This time is the number of steps to reach state 0 for the first time, when starting from state 0. Or, in the notation we used in class, we are looking at calculating $E(T_{i,j})$ where $i = 0$ and $j = 0$ in this case.

5.3 Part (c)

Using conditioning on the next state, we write

$$E(T_{i,0}) = \sum_{k \in I} E(T_{i,0} \mid X_1 = k, X_0 = i)\, P(X_1 = k \mid X_0 = i)$$

where $I = \{0, 1, \ldots, N-1\}$ is the set of all states. By the Markov property, $E(T_{i,0} \mid X_1 = k, X_0 = i) = E(T_{i,0} \mid X_1 = k)$, and also $P(X_1 = k \mid X_0 = i)$ is the same as $P_{ik}$, hence the above becomes

$$E(T_{i,0}) = \sum_{k \in I} E(T_{i,0} \mid X_1 = k)\, P_{ik} \tag{1}$$

But for $k \ne 0$,

$$E(T_{i,0} \mid X_1 = k) = 1 + E(T_{k,0})$$

since the chain has already made one switch. When $k = 0$ the chain has entered state 0 after this one step, so no further steps are needed and $E(T_{i,0} \mid X_1 = 0) = 1$. Then (1) can be written as

$$E(T_{i,0}) = 1 \times P_{i,0} + \sum_{k=1}^{N-1} \left[1 + E(T_{k,0})\right] P_{ik}
= \underbrace{P_{i,0} + \sum_{k=1}^{N-1} P_{ik}}_{=1} + \sum_{k=1}^{N-1} E(T_{k,0})\, P_{ik}$$

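Hence

$$E(T_{i,0}) = 1 + \sum_{k=1}^{N-1} P_{ik}\, E(T_{k,0})$$

Writing out a few equations to see the pattern, we obtain the following set of equations

$$\begin{aligned}
E(T_{1,0}) &= 1 + P_{11} E(T_{1,0}) + P_{12} E(T_{2,0}) + \cdots + P_{1,N-1} E(T_{N-1,0}) \\
E(T_{2,0}) &= 1 + P_{21} E(T_{1,0}) + P_{22} E(T_{2,0}) + \cdots + P_{2,N-1} E(T_{N-1,0}) \\
E(T_{3,0}) &= 1 + P_{31} E(T_{1,0}) + P_{32} E(T_{2,0}) + \cdots + P_{3,N-1} E(T_{N-1,0}) \\
&\ \ \vdots \\
E(T_{N-2,0}) &= 1 + P_{N-2,1} E(T_{1,0}) + P_{N-2,2} E(T_{2,0}) + \cdots + P_{N-2,N-1} E(T_{N-1,0}) \\
E(T_{N-1,0}) &= 1 + P_{N-1,1} E(T_{1,0}) + P_{N-1,2} E(T_{2,0}) + \cdots + P_{N-1,N-1} E(T_{N-1,0})
\end{aligned}$$

Now many of the above terms vanish, since $P_{ik} = 0$ unless $k = 0$ or $k = i + 1$, hence we obtain

$$\begin{aligned}
E(T_{1,0}) &= 1 + P_{12} E(T_{2,0}) \\
E(T_{2,0}) &= 1 + P_{23} E(T_{3,0}) \\
E(T_{3,0}) &= 1 + P_{34} E(T_{4,0}) \\
&\ \ \vdots \\
E(T_{N-2,0}) &= 1 + P_{N-2,N-1} E(T_{N-1,0}) \\
E(T_{N-1,0}) &= 1
\end{aligned}$$

Using $a_i$, the above becomes

$$\begin{aligned}
E(T_{1,0}) &= 1 + a_1 E(T_{2,0}) \\
E(T_{2,0}) &= 1 + a_2 E(T_{3,0}) \\
E(T_{3,0}) &= 1 + a_3 E(T_{4,0}) \\
&\ \ \vdots \\
E(T_{N-2,0}) &= 1 + a_{N-2} E(T_{N-1,0}) = 1 + a_{N-2} \\
E(T_{N-1,0}) &= 1
\end{aligned}$$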
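Each equation involves only the next unknown, so the system can be solved from the last row upwards. The following Python sketch shows that back substitution; the function name `first_entrance_times` and the sample values of $a_i$ are hypothetical.

```python
from fractions import Fraction

def first_entrance_times(a):
    """Back-substitute E(T_i0) = 1 + a_i * E(T_{i+1,0}), given a = [a_0, ..., a_{N-2}].

    Returns [E(T_10), ..., E(T_{N-1,0})].
    """
    N = len(a) + 1
    E = [Fraction(0)] * N
    E[N - 1] = Fraction(1)               # state N-1 always jumps to state 0
    for i in range(N - 2, 0, -1):        # rows N-2 down to 1
        E[i] = 1 + a[i] * E[i + 1]
    return E[1:]

# Hypothetical survival ratios a_0, a_1, a_2 (so N = 4), for illustration only.
a = [Fraction(1, 2), Fraction(1, 2), Fraction(1, 2)]
print(first_entrance_times(a))
```

Note that $a_0$ is not used here: it only enters when the chain starts in state 0.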

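In matrix form, we can write the above as

$$\overbrace{\begin{pmatrix} E(T_{1,0}) \\ E(T_{2,0}) \\ E(T_{3,0}) \\ \vdots \\ E(T_{N-2,0}) \\ E(T_{N-1,0}) \end{pmatrix}}^{x}
= \overbrace{\begin{pmatrix} 1 \\ 1 \\ 1 \\ \vdots \\ 1 \\ 1 \end{pmatrix}}^{e}
+ \overbrace{\begin{pmatrix}
0 & a_1 & 0 & 0 & \cdots & 0 \\
0 & 0 & a_2 & 0 & \cdots & 0 \\
0 & 0 & 0 & a_3 & \cdots & 0 \\
\vdots & & & & \ddots & \vdots \\
0 & 0 & 0 & 0 & \cdots & a_{N-2} \\
0 & 0 & 0 & 0 & \cdots & 0
\end{pmatrix}}^{A}
\overbrace{\begin{pmatrix} E(T_{1,0}) \\ E(T_{2,0}) \\ E(T_{3,0}) \\ \vdots \\ E(T_{N-2,0}) \\ E(T_{N-1,0}) \end{pmatrix}}^{x}$$

Or

$$\begin{aligned}
x &= e + Ax \\
x - Ax &= e \\
(I - A)\,x &= e
\end{aligned}$$

Hence

$$x = (I - A)^{-1} e$$

5.4 Part (d)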

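The chain leaves state 0 in one step: it either returns to state 0 directly (with probability $1 - a_0$), or it moves to state 1 (with probability $a_0$), after which $E(T_{1,0})$ further steps are needed on average. Conditioning on the next state as in part (c),

$$E(T_{0,0}) = (1 - a_0) \cdot 1 + a_0 \left[1 + E(T_{1,0})\right] = 1 + a_0 E(T_{1,0}) \tag{2}$$

But from part (c), we found that $E(T_{1,0}) = 1 + a_1 E(T_{2,0})$, hence (2) becomes

$$E(T_{0,0}) = 1 + a_0 \left[1 + a_1 E(T_{2,0})\right]$$

and also from part (c), $E(T_{2,0}) = 1 + a_2 E(T_{3,0})$, hence the above becomes

$$E(T_{0,0}) = 1 + a_0 \left(1 + a_1 \left(1 + a_2 E(T_{3,0})\right)\right)$$

and we continue this way:

$$E(T_{0,0}) = 1 + a_0 \left(1 + a_1 \left(1 + a_2 \left(\cdots + a_{N-3} \left(1 + a_{N-2} E(T_{N-1,0})\right)\right)\right)\right)$$

But $E(T_{N-1,0}) = 1$, hence we obtain

$$E(T_{0,0}) = 1 + a_0 \left(1 + a_1 \left(1 + a_2 \left(\cdots + a_{N-3} \left(1 + a_{N-2}\right)\right)\right)\right)$$

which can be simplified to

$$\begin{aligned}
E(T_{0,0}) &= 1 + a_0 + a_0 a_1 \left(1 + a_2 \left(\cdots + a_{N-3} \left(1 + a_{N-2}\right)\right)\right) \\
&= 1 + a_0 + a_0 a_1 + a_0 a_1 a_2 \left(\cdots + a_{N-3} \left(1 + a_{N-2}\right)\right) \\
&= 1 + a_0 + a_0 a_1 + a_0 a_1 a_2 + \cdots + a_0 a_1 a_2 \cdots a_{N-3} + a_0 a_1 a_2 \cdots a_{N-3} a_{N-2}
\end{aligned}$$

Hence

$$E(T_{0,0}) = 1 + a_0 + a_0 a_1 + a_0 a_1 a_2 + \cdots + a_0 a_1 a_2 \cdots a_{N-3} a_{N-2}$$

or

$$E(T_{0,0}) = 1 + \prod_{i=0}^{0} a_i + \prod_{i=0}^{1} a_i + \prod_{i=0}^{2} a_i + \cdots + \prod_{i=0}^{N-2} a_i = 1 + \sum_{k=0}^{N-2} \prod_{i=0}^{k} a_i$$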
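A numerical cross-check of the return time is possible because the time between replacements is $\min(L, N)$, whose mean is $\sum_{k=0}^{N-1} P(L > k)$. The Python sketch below computes $E(T_{0,0})$ by unrolling the recursion $E(T_{i,0}) = 1 + a_i E(T_{i+1,0})$ down to state 0 and compares it with that mean. The function names and the list `beta` are hypothetical; `beta` is a made-up distribution with finite support so that the tails are exact.

```python
from fractions import Fraction

def mean_return_time(beta, N):
    """E(T_00) by unrolling E(T_i0) = 1 + a_i * E(T_{i+1,0}) down to state 0."""
    tail = lambda i: sum(beta[i:], Fraction(0))      # P(L > i), beta[k-1] = P(L = k)
    a = [tail(i + 1) / tail(i) for i in range(N - 1)]
    E = Fraction(1)                                  # E(T_{N-1,0}) = 1
    for i in range(N - 2, -1, -1):                   # states N-2 down to 0
        E = 1 + a[i] * E
    return E

def mean_truncated_lifetime(beta, N):
    """E[min(L, N)] = sum_{k=0}^{N-1} P(L > k), computed directly from beta."""
    return sum((sum(beta[k:], Fraction(0)) for k in range(N)), Fraction(0))

# Hypothetical lifetime distribution on {1, 2, 3, 4}, for illustration only.
beta = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 8), Fraction(1, 8)]
for N in (1, 2, 3, 4):
    print(N, mean_return_time(beta, N), mean_truncated_lifetime(beta, N))
```

The two columns agree because the product $a_0 a_1 \cdots a_k$ telescopes to $P(L > k+1)$.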

5.5 Extra/not part of exam requirement

This is a small program which evaluates the sum of products from part (d) for different values of $N$. It uses the uniform random number generator to generate the probabilities $\beta$'s needed to compute the $a$'s in the above equation. The sum in the code starts at $k = 1$, so the single-factor term $a_0$ is not included in the values listed below.

A small code to show the implementation of the analytical answer for problem 4, part (d)

    SeedRandom[1234];
    expectedTimeFromZeroToZero[maxN_] := Module[{},
      (* random weights β_1..β_maxN; only ratios of their sums are used *)
      β = Table[RandomReal[], {i, maxN}];
      a[i_] := Sum[β[[k]], {k, i + 2, maxN}]/Sum[β[[k]], {k, i + 1, maxN}];
      (* products a_0 a_1 ... a_k for k = 1..maxN-2 *)
      tbl = Table[Product[a[i], {i, 0, k}], {k, 1, maxN - 2}];
      expected = 1 + Total[tbl]
    ]
    data = Table[{n, expectedTimeFromZeroToZero[n]}, {n, 1, 50}];
    ListPlot[data, Frame -> True, FrameLabel -> {"N", "E(T0,0)"}, Joined -> True]

Figure: plot of E(T0,0) against N.

This is a listing of the above data in table format

    TableForm[data[[1 ;; 20, All]], TableHeadings -> {None, {"N", "E(T0,0)"}}]

Output:

    N     E(T0,0)
    1     1
    2     1
    3     1.70417
    4     1.87024
    5     2.27172
    6     2.37608
    7     2.73195
    8     3.90093
    9     4.06677
    10    4.60066
    11    5.70291
    12    5.46519
    13    4.45642
    14    6.48258
    15    7.07532
    16    7.68007
    17    9.0457
    18    8.3827
    19    9.16481
    20    9.44079