Lab4 Camera Calibration

description

Transcript of Lab4 Camera Calibration

EE125 Lab 4: Image Manipulation & Camera Calibration

Original Authors: Nikhil Naikal and Victor ShiaEditted: Dan Calderone and Aaron Bestick

September 19, 2013

1 IntroductionIn this lab, we will learn how to interface with a simple webcam in ROS, read in images, convert them to OpenCVimages, perform some basic image processing, compensate for the perspective change between the floor of the laband the camera thereby calibrating it, and, lastly, use the camera calibration to compute distances on the floor just bytaking an image.

2 Interfacing With A Webcam

Streaming From The WebcamThe package usb_cam provides functionality for interfacing with usb webcams. To start a node that streams animage from the camera, create a launch file with the following text that will launch a usb_cam_node node fromthe package usb_cam and a image_view node from the package image_view. Launch files are simple xml filesused to start multiple nodes at a time in ROS. They have the extension .launch and they are run using the commandroslaunch.

<launch><node name="usb_cam" pkg="usb_cam" type="usb_cam_node" output="screen" >

<param name="video_device" value="/dev/video0" /><param name="image_width" value="640" /><param name="image_height" value="472" /><param name="pixel_format" value="mjpeg" /><param name="camera_frame_id" value="usb_cam" /><param name="io_method" value="mmap" />

</node>

<node name="image_view" pkg="image_view" type="image_view" respawn="false" output="screen"><remap from="image" to="/usb_cam/image_raw" /><param name="autosize" value="true" />

</node></launch>

The node usb_cam_node pulls a video stream from the webcam /dev/video0 and publishes it to the topic/usb_cam/image_raw. You will need to make sure that your webcam is mapped to the device /dev/video0.In order to do this, type

ls /dev/video*

This will list all the video devices in your /dev folder. If there are multiple video devices in the folder, unplug yourwebcam and re-list them to figure out which device is your webcam. You may also need to check that the size of yourimage is 640x472.

For this lab, we will want the ability to request a single image from the webcam. This can be done in ROS us-ing something called a Service/Client architecture (http://wiki.ros.org/ROS/Tutorials/WritingServiceClient(python));

1

however, usb_cam does not provide this functionality. Thus we will need to create a node that subscribes to the/usb_cam/image_raw/ topic and then provides a single image as service when requested. We will also want tocreate a node that requests an image from the image service node and does the image processing that we want to do.Create a package in a folder contained in your ROS_PACKAGE_PATH that depends on rospy and sensor_msgs.We will refer to this package as lab3_cam. You will need to create subfolders /src to contain source code for thenodes and /srv to contain a new service type inside the package. You will then need to create an image service nodeand a image processing node.

Image Servicing NodeCreate a node called camera_srv.py that subscribes to the /usb_cam/image_raw/ topic, waits for a requestfor an image, and then returns the last image when that request is received. You will need to define a new service typein the folder /srv. Call the file ImageSrv.srv. It should contain the following:

---sensor_msgs/Image last_image

Note that the --- line must be included in the service definition. Most service definitions contain two sets of datatypes. Data types above the --- are request data types that are passed to the service when it is requested. Data typesbelow the --- are return data types that the service returns. In this case, we are requesting a service without sendingit any data and we expect to get back a message of type sensor_msgs/Image.

In the image service node, make sure to include the following modules:

#!/usr/bin/env pythonimport roslib; roslib.load_manifest(’lab3_cam’)import rospyfrom sensor_msgs.msg import Imagefrom lab3_cam import ImageSrv, ImageSrvResponse

Your node should do the following things:

• Initialize the node

• Initialize a variable lastImage that will store the most recent image from the camera.

• Subscribe to the /usb_cam/image_raw/ topic and when an image is received save it to the variable lastImage.

• Initialize a service that waits for a request of type ImageSrv and returns the image stored in lastImage. Aservice is initialized similar to a subscriber.

The main part of the code could look like the following.

class ImgService:#Callback for when an image is receiveddef imgReceived(self, message):

#Save the image in the instance variableself.lastImage = message

#Print an alert to the console#print(rospy.get_name() + ":Image received!")

#When another node calls the service, return the last imagedef getLastImage(self, request):

#Print an alert to the console#print("Image requested!")

2

#Return the last imagereturn ImageSrvResponse(self.lastImage)

def __init__(self):#Create an instance variable to store the last image receivedself.lastImage = None;

#Initialize the noderospy.init_node(’cam_listener’)

#Subscribe to the image topicrospy.Subscriber("/usb_cam/image_raw", Image, self.imgReceived)

#Create the servicerospy.Service(’last_image’, ImageSrv, self.getLastImage)

def run(self):rospy.spin()

#Python’s syntax for a main() methodif __name__ == ’__main__’:

node = ImgService()node.run()

Image Processing NodeCreate an node called image_process.py. This node will need to import several modules.

#!/usr/bin/env pythonimport roslib; roslib.load_manifest(’lab3_cam’)import rospyfrom sensor_msgs.msg import Imagefrom lab3_cam.srv import ImageSrv, ImageSrvResponseimport cv, cv2, time, sysfrom cv_bridge import CvBridge, CvBridgeErrorimport numpy as npfrom numpy.linalg import *

The main part of the image processing node should contain the following code. The comments tell you what each partdoes.

if __name__ == ’__main__’:

# Waits for the image service to become availablerospy.wait_for_service(’last_image’)# Initializes the image processing noderospy.init_node(’image_processing_node’)# Creates a function used to call the# image capture service: ImageSrv is the service typelast_image = rospy.ServiceProxy(’service_name’, ImageSrv)

3

while not rospy.is_shutdown():# Waits for a key input to continueraw_input(’Press enter to capture an image:’)

try:#Request the last image from the image servicerequest = last_image()# Extracts the ROS Image from the ImageSrv service# Remember that ImageSrv.last_image was# defined to be of type sensor_msgs.msg.Imageimgmsg = request.last_image

except rospy.ServiceException, e:print "Service call failed: %s"%e

imgmsg is now an image stored in the ROS Image format. This node is complete for now. (We will modify it in thenext section to do more image processing.) Don’t forget to change the permissions so that camera_srv.py andimage_process.py are executable.

chmod +x camera_srv.pychmod +x image_process.py

Also you will need to edit the CMakeLists.txt so that rosbuild_gensrv() is uncommented. Build thepackage and add the following to the launch file.

<node name="camera_srv" pkg="lab3_cam" type="camera_srv.py"respawn="false" output="screen" />

<node name="image_process" pkg="lab3_cam" type="image_process.py"respawn="false" output="screen" />

3 Simple Image ProcessingIn order to process the image, the first thing we will want to do is to convert the ROS image into a format that OpenCVcan handle. We do this using a special package called cv_bridge. We will first want to create an instance of theCvBridge class.

bridge = CvBridge()

We can then use bridge to convert between ROS images and OpenCV images.

cvimage=bridge.imgmsg_to_cv(imgmsg,’rgb8’)

Note that ’rgb8’ refers to the order of the red, green, and blue channels in the image. rgb8 may actually be thewrong ordering for the color channels. If so the image will appear too red or green or blue when we display it. If theimage doesn’t look right, change the order of ’r’, ’g’, and ’b’ until it does. cvimage is now an image in the OpenCVformat iplimage. OpenCV can also store images as matrices or arrays which we will use later. To show an imagein the iplimage format, we can use the commmands

cv.NamedWindow("CV Image",cv.CV_WINDOW_AUTOSIZE)cv.ShowImage("CV Image",cvimage)cv.WaitKey()

4

The last command is required to make the image continually display. (If the process is dying after a short amount oftime, try changing cv.WaitKey() to cv.WaitKey(1000).) For the next part of the lab, we will want to storethe image as a numpy array. In order to transform the image from the iplimage format to an array, we can use thecommand

cvimage_array = np.asarray(cvimage, dtype=np.uint8)

If we want to display the image when it is in the array format, we can use the command

cv2.imshow(’CV Image (array)’,cvimage_array)cv2.waitKey()

The array format for an image is a 3-dimensional array with dimensions hxwx3 where h is the number of pixels inthe vertical direction, w is the number of pixels in the horizontal direction, and the third dimension has a place for thered color value, the blue color value, and the green color value. By convention, the (0, 0) pixel location is defined tobe the upper left hand corner and pixel values increase as you move to the right and down. Usually, color values rangefrom 0 to 255 where 0 is totally dark and 255 is totally light. Thus [0 0 0] would be a black pixel and [255 255 255]would be a white pixel. By indexing into the image array, we can select a part of the image or change values in thearray in order to change the image.

Add the following section to your code that will allow you to click on points in the image and store their pixellocations.

#Define two global variablesglobal n_clicks, pointsn_clicks = 0tot_clicks = 4# preallocates a list to hold the locations of the points we selectpoints = (np.nan*np.zeros(tot_clicks)).tolist()while n_clicks <= tot_clicks-1:

# Displays the imagecv.ShowImage("CV Image",cvimage)# Calls the callback function ’on_mouse_click’# when the mouse is clicked in the window "CV Image"cv.SetMouseCallback("CV Image",on_mouse_click,param=1)cv.WaitKey()

# Callback function for ’cv.SetMouseCallback’def on_mouse_click(event,x,y,flag,param):

global n_clicks, pointsif(event==cv.CV_EVENT_FLAG_LBUTTON):

print "Point Captured: (%s,%s)" %(x,y)points[n_clicks] = [x,y]n_clicks = n_clicks + 1

This code will allow you to click on points in the ”CV Image” window and it will store the pixel coordinates in thelist points.

4 Floor Plane CalibrationWe will now consider the problem of calibrating the camera pixels corresponding to the floor of the lab. The objectiveis to determine a projective transform H ∈ R3×3 that transforms the ground plane to camera pixels. This mappingis bijective and can also map pixels in the camera back to floor coordinates. For this part of the lab, you will need tocapture an image like the one in Figure 1 that shows an empty rectangular region on the floor.

5

Figure 1: Sample image of floor plane.

Let us consider a point on the lab floor given by X = [x, y]T ∈ R2. In order to keep the transformations linear(instead of dealing with affine projections), we will use homogeneous coordinates, where we append a ”1” to thecoordinates of X as,

X =

[X1

]=

xy1

∈ R3.

We are trying to determine the linear transform H ∈ R3×3 that maps the point X to the pixel Y = [u v 1]T ∈R3. This transform is called a homography and takes on the form,

H :=

h11 h12 h13

h21 h22 h23

h31 h32 1

. (1)

Notice that the last element of the homography is 1. This means that only 8 parameters of the matrix need to beestimated. Once the matrix is estimated, the pixel coordinates, Y = (u, v) can be determined using the followingequations,

u =h11x+ h12y + h13

h31x+ h32y + 1, v =

h21x+ h22y + h23

h31x+ h32y + 1. (2)

These 2 equations can be rewritten in linear form as,

[x y 1 0 0 0 −u.x −u.y0 0 0 x y 1 −v.x −v.y

].

h11

h12

h13

h21

h22

h23

h31

h32

=

[uv

]. (3)

Since Eqn. 3 has 8 unknowns, in order to uniquely determine the unknowns, we will need N ≥ 4 floor point↔image pixel pairs. With these N points, the equation becomes,

A.x = b, (4)

6

where,

A =

x1 y1 1 0 0 0 −u1.x1 −u1.y10 0 0 x1 y1 1 −v1.x1 −v1.y1x2 y2 1 0 0 0 −u2.x2 −u2.y20 0 0 x2 y2 1 −v2.x2 −v2.y2x3 y3 1 0 0 0 −u3.x3 −u3.y30 0 0 x3 y3 1 −v3.x3 −v3.y3x4 y4 1 0 0 0 −u4.x4 −u4.y40 0 0 x4 y4 1 −v4.x4 −v4.y4

...

∈ R2N×8, x =

h11

h12

h13

h21

h22

h23

h31

h32

∈ R8, b =

u1

v1u2

v2u3

v3u4

v4...

∈ R2N .

(5)note: [u, v]T are pixel coordinates and [x, y]T are ground coordinates with respect to the origin that you have defined.

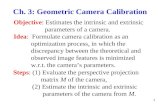

Modify your code to compute the homography matrix between the floor plane and the camera plane. Define the [x, y]T

floor coordinates of the first point you click on to be [0, 0]T . Calculate the [x, y]T coordinates of the other 3 pointsyou click on using the fact that the ground tiles are 30.48 cm (1ft) per side. (See Figure 2 for reference.) Modifyyour code to create and solve the linear equations above for the values of H . (You can use the inv function from thenp.linalg module.) You can check that you calculated the homography correctly by using the following function.(If you calculated the homography correctly, running this function should draw black dots on the intersections of thetiles.)

def check_homography(H,nx,ny,length,image):# H should be a 3x3 numpy.array# nx is the number of tiles in the x direction# ny is the number of tiles in the y direction# length is the length of one side of a tile# image is an image arrayfor i in range(nx):

for j in range(ny):xbar = np.array([[i*length],[j*length],[1]])ubar = np.dot(H,xbar).T[0]u = ubar[0]/ubar[2]v = ubar[1]/ubar[2]cv2.circle(image,(u,v),5,0,-1)

cv2.imshow(’Check Homography’,image)cv2.waitKey()

Lab Report Assignment: In your lab report, include a picture of the floor with black dots at the intersections of thetiles and give an explanation for what the check_homography function is doing. You can save the image in opencvby changing it back from an array to an iplimage and saving it using the cv.SaveImage function.

image_to_save = cv.fromarray(image)cv.SaveImage(’/absolute/path/to/folder/image_name.jpg’, picture)

You should also include any new code that you wrote to compute the homography. (You don’t need to include thecode that was given to you.)

5 Mapping Pixels to Floor CoordinatesNow that we have computed the homography to map points from the Floor coordinates to pixel coordinates, we willconsider the inverse mapping from pixel coordinates, [u, v, 1]T back to floor coordinates [x, y, 1]T . This can simply

7

(a) (b)

Figure 2: (a) Corner selection for ground plane (b) Calibrated image with projected grid.

be done by using the inverse of the homography matrix, H−1. Let this matrix be,

H−1 := Q =

q11 q12 q13q21 q22 q23q31 q32 q33

. (6)

With this inverse transform, the floor coordinates of any pixel can be computed as,

x =q11u+ q12v + q13q31u+ q32v + q33

, y =q21u+ q22v + q23q31u+ q32v + q33

. (7)

Modify your code to compute the distance between two points on the floor when you click on them in the image.Test your code by placing an object of known length on the floor and use your code to measure it. Try measuring thelength of an object that does not lie in the floor plane. Are the measurements still accurate? Why or why not?

Lab Report Assignment: Place an object of known length on the floor and measure it by clicking on the ends ofthe object in the picture from the webcam, recording the pixel points, and use the inverse homography to determinethe length of the object. Save a picture of the object with the points you clicked shown as block dots. (Look at thecheck_homography function to see how to draw the dots.) Compare the known length of the object with thelength you measured using the inverse homography. Are they the same? (If the object is lying directly on the floor,they should be very close.) Now try measuring an object of known length that doesn’t lie in the plane of the floor.Again, include a picture with the points you clicked shown as black dots. Compare the known length of this objectwith the length you measured using the inverse homography. Are they the same? Why or why not? Again, make sureyou include any new code you wrote for this section in your lab report.

8