History and Use of Relative Importance Indices


http://orm.sagepub.com

Organizational Research Methods

DOI: 10.1177/1094428104266510
Organizational Research Methods 2004; 7; 238

Jeff W. Johnson and James M. LeBreton
History and Use of Relative Importance Indices in Organizational Research

The online version of this article can be found at: http://orm.sagepub.com/cgi/content/abstract/7/3/238

Published by:

http://www.sagepublications.com

On behalf of:

The Research Methods Division of The Academy of Management


Downloaded from http://orm.sagepub.com at UNIV OF TEXAS AUSTIN on January 25, 2010

ORGANIZATIONAL RESEARCH METHODS, 10.1177/1094428104266510
Johnson, LeBreton / HISTORY AND USE OF RELATIVE IMPORTANCE

History and Use of Relative Importance Indices in Organizational Research

JEFF W. JOHNSON, Personnel Decisions Research Institutes

JAMES M. LEBRETON, Wayne State University

The search for a meaningful index of the relative importance of predictors in multiple regression has been going on for years. This type of index is often desired when the explanatory aspects of regression analysis are of interest. The authors define relative importance as the proportionate contribution each predictor makes to $R^2$, considering both the unique contribution of each predictor by itself and its incremental contribution when combined with the other predictors. The purposes of this article are to introduce the concept of relative importance to an audience of researchers in organizational behavior and industrial/organizational psychology and to update previous reviews of relative importance indices. To this end, the authors briefly review the history of research on predictor importance in multiple regression and evaluate alternative measures of relative importance. Dominance analysis and relative weights appear to be the most successful measures of relative importance currently available. The authors conclude by discussing how importance indices can be used in organizational research.

Keywords: relative importance; multiple regression analysis; dominance analysis; relative weights; organizational research

Multiple regression analysis has two distinct applications: prediction and explanation (Courville & Thompson, 2001). When multiple regression is used for a purely predictive purpose, a regression equation is derived within a sample to predict scores on a criterion variable from scores on a set of predictor variables. This equation can be applied to predictor scores within a similar sample to make predictions of the unknown criterion scores in that sample. The elements of the equation are regression coefficients, which indicate the amount by which the criterion score would be expected to increase as the result of a unit increase in a given predictor score, with no change in any of the other predictor scores. The extent to which the criterion can be predicted by the predictor variables (indicated by $R^2$) is of much greater interest than is the relative magnitude of the regression coefficients.

Authors’ Note: Correspondence concerning this article should be addressed to Jeff W. Johnson, Personnel Decisions Research Institutes, 43 Main Street SE, Suite 405, Minneapolis, MN 55414; e-mail: [email protected].

Organizational Research Methods, Vol. 7 No. 3, July 2004 238-257
DOI: 10.1177/1094428104266510
© 2004 Sage Publications

The other use of multiple regression is for explanatory or theory-testing purposes. In this case, we are interested in the extent to which each variable contributes to the prediction of the criterion. For example, we may have a theory that suggests that one variable is relatively more important than another. Interpretation is the primary concern, such that substantive conclusions can be drawn regarding one predictor with respect to another. Although there are many possible definitions of importance (Bring, 1994; Kruskal & Majors, 1989), this is what is typically meant by the relative importance of predictors in multiple regression.

Achen (1982) discussed three different meanings of variable importance. Theoretical importance refers to the change in the criterion based on a given change in the predictor variable, which can be measured using the regression coefficient. Level importance refers to the increase in the mean criterion score that is contributed by the predictor, which corresponds to the product of a variable’s mean and its unstandardized regression coefficient. This is a popular measure in economics (Kruskal & Majors, 1989). Finally, dispersion importance refers to the amount of the criterion variance explained by the regression equation that is attributable to each predictor variable. This is the interpretation of importance that most often corresponds to measures of importance in the behavioral sciences, when the explanatory aspects of regression analysis are of interest (Thomas & Decady, 1999).

To draw conclusions about the relative importance of predictors, researchers often examine the regression coefficients or the zero-order correlations with the criterion. When predictors are uncorrelated, zero-order correlations and standardized regression coefficients are equivalent. The squares of these indices sum to $R^2$, so the relative importance of each variable can be expressed as the proportion of predictable variance for which it accounts. When predictor variables are correlated, however, these indices have long been considered inadequate (Budescu, 1993; Green & Tull, 1975; Hoffman, 1960). In the presence of multicollinearity, squared correlations and squared standardized regression coefficients are no longer equivalent, do not sum to $R^2$, and take on very different meanings. Correlations represent the unique contribution of each predictor by itself, whereas regression coefficients represent the incremental contribution of each predictor when combined with all remaining predictors.
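A minimal sketch of the point above for two standardized predictors, using the standard closed-form solution for two-predictor regression. The function name `two_predictor_summary` and the correlation values (.50 and .30 with the criterion) are hypothetical, chosen only for illustration. With uncorrelated predictors, the squared correlations, the squared betas, and $R^2$ all agree; once the predictors correlate at .50, they no longer do.

```python
def two_predictor_summary(r1, r2, r12):
    """Standardized betas, R^2, and two candidate decompositions of R^2.

    r1, r2: correlations of x1 and x2 with y; r12: correlation between x1 and x2.
    """
    denom = 1.0 - r12 ** 2
    b1 = (r1 - r2 * r12) / denom          # standardized regression weight for x1
    b2 = (r2 - r1 * r12) / denom          # standardized regression weight for x2
    r_sq = b1 * r1 + b2 * r2              # R^2 = sum of beta_j * r_yj
    return {
        "betas": (b1, b2),
        "R2": r_sq,
        "sum_r2": r1 ** 2 + r2 ** 2,      # sum of squared zero-order correlations
        "sum_beta2": b1 ** 2 + b2 ** 2,   # sum of squared betas
    }


for r12 in (0.0, 0.5):
    s = two_predictor_summary(0.5, 0.3, r12)
    print(f"r12={r12:.1f}  R2={s['R2']:.4f}  "
          f"sum r^2={s['sum_r2']:.4f}  sum beta^2={s['sum_beta2']:.4f}")
# r12=0.0  R2=0.3400  sum r^2=0.3400  sum beta^2=0.3400
# r12=0.5  R2=0.2533  sum r^2=0.3400  sum beta^2=0.2222
```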

To illustrate the concept of relative importance and the inadequacy of these indices for reflecting it, consider a situation in which a relative importance index is frequently of interest. Imagine that a customer satisfaction survey is given to bank customers and that the researcher wants to determine how each specific aspect of bank satisfaction contributes to customers’ overall satisfaction judgments. In other words, what relative importance do bank customers place on teller service, loan officer service, phone representative service, the convenience of the hours, and the interest rates offered in determining their overall satisfaction with the bank? Regression coefficients are inadequate because customers do not consider the incremental amount of satisfaction they derive from each bank aspect while holding the others constant. Zero-order correlations are also inadequate because customers do not consider each bank aspect independent of the others. Rather, they consider all the aspects that are important to them simultaneously and implicitly weight each aspect relative to the others in determining their overall satisfaction.


Because neither index alone tells the full story of a predictor’s importance, Courville and Thompson (2001) recommended that both regression coefficients and correlations (or the equivalent structure coefficients) be examined when interpreting relative importance (see also Thompson & Borrello, 1985). Examining two different indices to try to determine an ordering of relative importance is highly subjective, which is why the search for a single meaningful index of relative importance has been going on for years. Although a number of different definitions have been offered over the years, we offer the following definition of relative importance:

Relative importance: The proportionate contribution each predictor makes to $R^2$, considering both its direct effect (i.e., its correlation with the criterion) and its effect when combined with the other variables in the regression equation.

This definition integrates previous definitions, highlights the multidimensional nature of the relative importance construct, and is consistent with contemporary research and thought on this topic (cf. Budescu, 1993; J. W. Johnson, 2000a, 2001a).

A rich literature has developed in the areas of statistics, psychology, marketing, economics, and medicine on determining the relative importance of predictor variables in multiple regression (e.g., Azen & Budescu, 2003; Budescu, 1993; Gibson, 1962; Goldberger, 1964; Green, Carroll, & DeSarbo, 1978; Healy, 1990; J. W. Johnson, 2000a; Kruskal, 1987). Because there is no unique mathematical solution to the problem, these indices must be evaluated on the basis of the logic behind their development, the apparent sensibility of the results they provide, and whatever shortcomings can be identified.

The purposes of this article are (a) to introduce the concept of relative importance to an audience of researchers in organizational behavior and industrial/organizational psychology and (b) to update previous reviews of relative importance indices (Budescu, 1993; Kruskal & Majors, 1989). To this end, we (a) briefly review the history of research on predictor importance in multiple regression, (b) evaluate alternative measures of relative importance, (c) discuss how importance indices can be used in organizational research, (d) present issues to consider before applying a relative importance measure, and (e) suggest directions for future research in this area.

Brief History of Relative Importance Research

Although the proper method of measuring the relative importance of predictors in multiple regression has been of interest to researchers for years (e.g., Englehart, 1936), the first debate on the issue in the psychology literature appeared in Psychological Bulletin in the early 1960s. Hoffman (1960) sought to statistically describe the cognitive processes used by clinicians when making judgments about patients. He introduced the term relative weight, which referred to the proportionate contribution each predictor makes to the squared multiple correlation coefficient when that coefficient is expressed as the sum of contributions from the separate predictors. He showed that the products of each variable’s ($x$) standardized regression coefficient ($\beta_x$) and its associated zero-order correlation with the criterion ($r_{xy}$) summed to $R^2$, and he asserted that these products represented the “independent contribution of each predictor” (p. 120). This was an unfortunate choice of words, as the term independent can have many different meanings. Ward (1962) objected to the use of this term, stating that the independent contribution of a predictor refers to the amount by which $R^2$ increases when the predictor is added to the model and all other predictors are held constant. Hoffman (1962) replied that his relative weights were not intended to measure independent contribution in this sense but did not reply to Ward’s criticism that negative weights were uninterpretable.

This exchange led Gibson (1962) to suggest a possible resolution to this difference of opinion. Noting that both conceptions of the independent contribution of a variable are the same when all predictors are uncorrelated, he suggested a transformation of the original variables to the set of orthogonal factors with which they have the highest degree of one-to-one correspondence in the least-squares sense. The squared regression coefficients from a regression of the criterion on these orthogonal factors would represent a proxy for both types of independent contribution.

A few years later, Darlington (1968) published his influential review of the use of multiple regression. He reviewed five possible measures of predictor importance and concluded that no index unambiguously reflects the contribution to variance of a variable when variables are correlated. He further concluded that certain indices have value in specific situations, but Hoffman’s (1960) index has very little practical value. Despite the criticisms of Hoffman’s index, arguments for its use have persisted (e.g., Pratt, 1987; Thomas, Hughes, & Zumbo, 1998).

Customer satisfaction researchers have long been interested in determining how customers’ perceptions of specific attributes measured on a survey contribute to their ratings of overall satisfaction (Heeler, Okechuku, & Reid, 1979; Jaccard, Brinberg, & Ackerman, 1986; Myers, 1996). Multiple regression is a frequently used technique, although its limitations have been recognized (Green & Tull, 1975; McLauchlan, 1992). When attempts have been made to deal with multicollinearity, principal components analysis has been commonly used to create uncorrelated variables or factors (Green & Tull, 1975; Grisaffe, 1993). Green et al. (1978) used this approach to create an index that better reflected relative importance, but there is little evidence that this index has ever been used.

Kruskal and Majors (1989) reviewed the concept of relative importance in many scientific disciplines, concluding that there is widespread interest in assigning degrees of importance in most or all scholarly fields. The correlation-type importance indices that they reviewed were nothing out of the ordinary or particularly clever, and statistical significance was used as a measure of importance to an alarming degree. Kruskal and Majors reviewed several measures but made no recommendations other than a call for broader statistical discussion of relative importance.

In psychology, the topic of relative importance of predictors in multiple regression was resurrected somewhat when Budescu (1993) introduced dominance analysis. This is a technique for determining first whether predictor variables can be ranked in terms of importance. If dominance relationships can be established for all predictors, Budescu suggested the average increase in $R^2$ associated with a variable across all possible submodels as a quantitative measure of importance. This measure was computationally equivalent to a measure suggested by Lindeman, Merenda, and Gold (1980), which had not received much attention. This was a major breakthrough in relative importance research because it was the first measure that was theoretically meaningful and consistently provided sensible results.

More recently, J. W. Johnson (2000a) presented a measure of relative importance that was based on the Gibson (1962) technique of transforming predictors to their orthogonal counterparts but allowed importance weights to be assigned to the original correlated variables. Despite being based on entirely different mathematical models, Johnson’s epsilon and Budescu’s dominance measures provide nearly identical results when applied to the same data (J. W. Johnson, 2000a; LeBreton, Ployhart, & Ladd, 2004 [this issue]). The convergence between these two mathematically different approaches suggests that substantial progress has been made toward furnishing meaningful estimates of relative importance among correlated predictors.
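The following is a two-predictor sketch of the epsilon idea, written from our reading of J. W. Johnson (2000a) and worth checking against that source: replace the correlated predictors by the orthogonal variables closest to them in the least-squares sense (Gibson, 1962), which for standardized data are given by the symmetric square root of the predictor correlation matrix; regress the criterion on those orthogonal variables; then map the squared weights back to the original predictors through the squared predictor-orthogonal correlations. The function names and the correlations (.50 and .30 with the criterion, .50 between predictors) are hypothetical. For comparison, the sketch also computes the average increase in $R^2$ over the two possible entry orders (the dominance-based measure); in this example the two sets of weights coincide.

```python
import math


def epsilon_two_predictors(r1, r2, r12):
    """Relative weights (epsilon) for two standardized predictors (illustrative sketch)."""
    # Symmetric square root of [[1, r12], [r12, 1]]; entry (j, k) = corr(x_j, z_k).
    a = (math.sqrt(1 + r12) + math.sqrt(1 - r12)) / 2
    b = (math.sqrt(1 + r12) - math.sqrt(1 - r12)) / 2
    det = a * a - b * b
    # Weights of y on the orthogonal z's: Lambda^{-1} r_xy.
    g1 = (a * r1 - b * r2) / det
    g2 = (a * r2 - b * r1) / det
    # epsilon_j = sum_k lambda_jk^2 * g_k^2
    eps1 = a * a * g1 ** 2 + b * b * g2 ** 2
    eps2 = b * b * g1 ** 2 + a * a * g2 ** 2
    return eps1, eps2


def general_dominance_two_predictors(r1, r2, r12):
    """Average increase in R^2 for each predictor over the two entry orders."""
    r_sq = (r1 ** 2 + r2 ** 2 - 2 * r1 * r2 * r12) / (1 - r12 ** 2)
    return (r1 ** 2 + (r_sq - r2 ** 2)) / 2, (r2 ** 2 + (r_sq - r1 ** 2)) / 2


eps = epsilon_two_predictors(0.5, 0.3, 0.5)
dom = general_dominance_two_predictors(0.5, 0.3, 0.5)
print([round(e, 4) for e in eps], [round(d, 4) for d in dom])
# [0.2067, 0.0467] [0.2067, 0.0467]
```

Both sets of weights sum to the model $R^2$ (about .2533 here). With more than two predictors the symmetric square root would normally come from an eigendecomposition, which this pure-Python sketch deliberately avoids.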

The relative importance of predictors has been of interest in organizational research (e.g., Dunn, Mount, Barrick, & Ones, 1995; Hobson & Gibson, 1983; Zedeck & Kafry, 1977), although importance has typically been determined by examining regression coefficients (e.g., Lehman & Simpson, 1992), by examining path coefficients (e.g., Borman, White, & Dorsey, 1995), or by creating uncorrelated variables in paper-people studies and interpreting the correlations or standardized regression coefficients (e.g., Hobson, Mendel, & Gibson, 1981; Rotundo & Sackett, 2002). Recent symposia at the annual conference of the Society for Industrial and Organizational Psychology have introduced the dominance (Budescu, 1993) and epsilon (J. W. Johnson, 2000a) indices to an audience of organizational researchers (J. W. Johnson, 2000b; LeBreton & Johnson, 2001, 2002). This special issue of Organizational Research Methods represents the most visible and current attempt to communicate how relative importance indices can be used in organizational research.

Alternative Measures of Importance

Numerous measures of variable importance in multiple regression have been proposed. Each measure can be placed into one of three broad categories. Single-analysis methods use the output from a single regression analysis, either by choosing a single index to represent the importance of the predictors or by combining multiple indices to compute a measure of importance. Multiple-analysis methods compute importance indices by combining the results from more than one regression analysis involving different combinations of the same variables. Variable transformation methods transform the original predictors to a set of uncorrelated variables, regress the criterion on the uncorrelated variables, and either use those results as a proxy for inferring the importance of the original variables or further analyze those data to yield results that are directly tied to the original variables. In this section, we review the methods that fall into these three categories, presenting the logic behind them and the benefits and shortcomings of each.

Single-Analysis Methods

Zero-order correlations. The simplest measure of importance is the zero-order correlation of a predictor with the criterion ($r_{xy}$) or the squared correlation ($r_{xy}^2$). Importance is then defined as the direct predictive ability of the predictor variable when all other variables in the model are ignored. Individual predictor $r_{xy}^2$ values sum to the full-model $R^2$ when the predictors are uncorrelated, but Darlington (1990) argued that importance is proportional to $r_{xy}$, not $r_{xy}^2$. In fact, whether $r_{xy}$ or $r_{xy}^2$ more appropriately represents importance depends on how importance is defined. If importance is defined as the amount by which a unit increase in the predictor increases the criterion score, importance is proportional to $r_{xy}$. If importance is defined as the extent to which variation in the predictor coincides with variation in the criterion, importance is proportional to $r_{xy}^2$. Neither measure, however, adequately reflects importance when predictors are correlated because they fail to consider the effect of each predictor in the context of the other predictors.

Thompson and Borrello (1985) and Courville and Thompson (2001) recommended examining the structure coefficients in a multiple regression analysis, along with the standardized regression coefficients, to make judgments about variable importance. A structure coefficient is the correlation between a predictor and the predicted criterion score. Because structure coefficients are simply zero-order correlations divided by the multiple correlation $R$ (the square root of the model $R^2$), they are proportional to the zero-order correlations, so examining structure coefficients is really no different than examining zero-order correlations (Thompson & Borrello, 1985).
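A small sketch of why structure coefficients carry no information beyond the zero-order correlations, assuming standardized variables and two predictors (the function name and correlation values are hypothetical). The covariance of $x_j$ with the predicted score is $\sum_k r_{jk}\beta_k$, which equals $r_{x_j y}$ because the betas solve the normal equations; dividing by the standard deviation of the predicted score, $R$, gives $r_{x_j y}/R$.

```python
import math


def structure_coefficients(r1, r2, r12):
    """Correlations of x1 and x2 with the predicted score y-hat (standardized data)."""
    denom = 1.0 - r12 ** 2
    b1 = (r1 - r2 * r12) / denom
    b2 = (r2 - r1 * r12) / denom
    R = math.sqrt(b1 * r1 + b2 * r2)      # multiple correlation = sd of y-hat
    # cov(x_j, y-hat) = sum_k r_jk * beta_k; dividing by sd(y-hat) gives the correlation
    s1 = (b1 + r12 * b2) / R
    s2 = (r12 * b1 + b2) / R
    return (s1, s2), R


(s1, s2), R = structure_coefficients(0.5, 0.3, 0.5)
# Each structure coefficient divided by its zero-order correlation gives the same 1/R.
print(s1 / 0.5, s2 / 0.3, 1 / R)
```

Because every predictor is rescaled by the same factor $1/R$, any ordering based on structure coefficients is identical to the ordering based on zero-order correlations.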

Standardized regression coefficients. Standardized regression coefficients (or beta weights) are the most common measure of relative importance when multiple regression is used (Darlington, 1990). When predictors are uncorrelated, betas are equal to zero-order correlations, and squared betas sum to $R^2$. When predictors are correlated, however, the size of the beta weight depends on the other predictors included in the model. A predictor that has a large zero-order correlation with the criterion may have a near-zero beta weight if that predictor’s predictive ability is assigned to one or more other correlated predictors. In fact, it is not unusual for a predictor to have a positive zero-order correlation but a negative beta (Darlington, 1968), making interpretation of the beta impossible in terms of importance.
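A minimal two-predictor sketch of a positive zero-order correlation paired with a negative beta. The values are hypothetical: $x_2$ correlates .40 with the criterion but .80 with the stronger predictor $x_1$, so the regression assigns its predictive ability to $x_1$.

```python
def standardized_betas(r1, r2, r12):
    """Standardized regression weights for two predictors, from correlations only."""
    denom = 1.0 - r12 ** 2
    return (r1 - r2 * r12) / denom, (r2 - r1 * r12) / denom


# Hypothetical correlations: both predictors relate positively to y.
b1, b2 = standardized_betas(r1=0.6, r2=0.4, r12=0.8)
print(f"beta1={b1:.3f}, beta2={b2:.3f}")   # beta1=0.778, beta2=-0.222
```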

Unstandardized regression coefficients. Policy capturing is a method for statistically describing decision-making processes by regressing quantitative judgments on the cue values representing the information available to the judge. The cues are typically designed to be uncorrelated so that regression coefficients can be used to unambiguously interpret importance, but this threatens the construct validity of the results if uncorrelated cues do not represent the real-world situation (Hobson & Gibson, 1983). Lane, Murphy, and Marques (1982) argued that unstandardized regression coefficients are the most appropriate measure of importance because they are invariant across changes in cue intercorrelations. This is because, assuming the linear model holds, changes in cue intercorrelations lead to changes in the standard deviations of the judgments and in the correlations between judgments and cues. Lane et al. conducted a study in which 14 participants rated 144 profiles that were generated from three different intercorrelation matrices (48 profiles from each). They found no significant differences in mean unstandardized regression coefficients across cue structures but significant differences for zero-order correlations, betas, and semipartial correlations. It is not clear how generalizable these results are, however, because (a) only three predictors were included, (b) larger differences in cue intercorrelations could have a larger effect, and (c) the results are limited to the policy-capturing paradigm.

Usefulness. The usefulness of a predictor is defined as the increase in $R^2$ that is associated with adding the predictor to the other predictors in the model (Darlington, 1968). Like regression coefficients, this measure is highly influenced by multicollinearity. For example, if two predictors are highly correlated with each other and with the criterion, and a third is only moderately correlated with the criterion and has low correlations with the other two predictors, the third predictor will have the largest usefulness simply because of its lack of association with the other predictors.
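A sketch of the usefulness example, computed entirely from a correlation matrix in plain Python (no NumPy) so it stays self-contained. The correlations are hypothetical values chosen to match the verbal description: $x_1$ and $x_2$ correlate .80 with each other and .60 with the criterion; $x_3$ correlates only .10 with them and .40 with the criterion. The helpers `solve` and `r_squared` are illustrative, not from any library.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(v)] for row, v in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(n):
            if i != c:
                f = M[i][c] / M[c][c]
                M[i] = [a - f * m for a, m in zip(M[i], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def r_squared(Rxx, ryx, subset):
    """R^2 of y on the predictors in `subset`, computed from correlations only."""
    subset = list(subset)
    if not subset:
        return 0.0
    A = [[Rxx[i][j] for j in subset] for i in subset]
    b = [ryx[i] for i in subset]
    return sum(w * r for w, r in zip(solve(A, b), b))


def usefulness(Rxx, ryx):
    """Drop in R^2 when each predictor is removed from the full model."""
    full = list(range(len(ryx)))
    r2_full = r_squared(Rxx, ryx, full)
    return [r2_full - r_squared(Rxx, ryx, [k for k in full if k != j]) for j in full]


Rxx = [[1.0, 0.8, 0.1],
       [0.8, 1.0, 0.1],
       [0.1, 0.1, 1.0]]
ryx = [0.6, 0.6, 0.4]
print([round(u, 3) for u in usefulness(Rxx, ryx)])   # [0.036, 0.036, 0.112]
```

The weakest predictor by zero-order correlation ($x_3$) has roughly three times the usefulness of the other two, exactly as the text describes.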

Semipartial correlation and t statistic. The semipartial correlation is equal to the square root of the usefulness (Darlington, 1990). Darlington (1990) and Bring (1994) showed that this measure is superior to the standardized regression coefficient as a measure of relative importance. Rather than being based on the variable’s standard deviation, the semipartial correlation is based on the variable’s standard deviation conditional on the other predictors in the model. This measure is proportional to the $t$ statistic used to determine the significance of the regression coefficient, so $t$ can be used as an easily available measure of relative importance (Bring, 1994; Darlington, 1990). This is still not an ideal measure, however, as it is affected by multicollinearity to the same extent as are regression coefficients, and it can take on small or negative values even when predictors have large zero-order correlations with the criterion.
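A sketch of the proportionality between semipartial correlations and $t$, assuming standardized variables, two predictors, and a hypothetical sample size of $n = 100$. It relies on the standard results $sr_j = \beta_j\sqrt{1 - R^2_{(j)}}$ and $\mathrm{SE}(\beta_j) = \sqrt{(1 - R^2) / [(n - p - 1)(1 - R^2_{(j)})]}$, so that $t_j = sr_j\sqrt{(n - p - 1)/(1 - R^2)}$: the same multiplier for every predictor in the model. The function name and correlations are illustrative.

```python
import math


def semipartial_and_t(r1, r2, r12, n):
    """Semipartial correlations and coefficient t statistics for two standardized predictors."""
    denom = 1.0 - r12 ** 2
    betas = ((r1 - r2 * r12) / denom, (r2 - r1 * r12) / denom)
    r_sq = betas[0] * r1 + betas[1] * r2
    df = n - 2 - 1                                   # residual df with p = 2 predictors
    out = []
    for b in betas:
        # With two predictors, the R^2 of each predictor on the other is r12^2.
        sr = b * math.sqrt(1.0 - r12 ** 2)           # semipartial correlation
        se = math.sqrt((1.0 - r_sq) / (df * (1.0 - r12 ** 2)))  # standard error of beta
        out.append((sr, b / se))
    return out, r_sq, df


results, r_sq, df = semipartial_and_t(0.5, 0.3, 0.5, n=100)
for sr, t in results:
    print(f"sr={sr:.3f}  t={t:.2f}  t/sr={t / sr:.3f}")   # t/sr is identical for both
```

Squaring each semipartial correlation also recovers the usefulness of that predictor, that is, $R^2$ minus the $R^2$ of the model without it.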

The product measure. Hoffman’s (1960) measure ($\beta_x r_{xy}$), termed the product measure by Bring (1996), has been criticized extensively because it shares the disadvantages of both measures of which it is composed (Bring, 1996; Darlington, 1968; Green & Tull, 1975; Ward, 1962, 1969). Like regression coefficients, this measure can easily be zero or negative even when a variable contributes substantially to the prediction of the criterion (Darlington, 1968). Pratt (1987) presented a theoretical justification for this index as a measure of relative importance and showed that it has a number of desirable properties. Three of these properties are particularly noteworthy:

1. The sum of the importance weights is equal to R2.

2. When all predictors are equally correlated and have equal regression coefficients, the importance of the sum of a subset of predictors is equal to the sum of the importance weights of the subset.

3. When a subset of predictors is replaced by a linear combination of those predictors, importance weights for the remaining predictors remain unchanged.

Bring (1996) noted that variables that are uncorrelated with the criterion but add to the predictive value of the model (i.e., suppressor variables; Cohen & Cohen, 1983) have no importance according to the product measure. He described this result as counterintuitive. Thomas et al. (1998) argued that suppressor variables should be treated differently from nonsuppressors and that the contribution of the suppressors should be assessed separately by measuring their contribution to $R^2$. Treating suppressors and nonsuppressors separately, however, ignores the fact that the two types of variables are complexly intertwined. The importance of the nonsuppressors depends on the presence of the suppressors in the model, so treating them separately may also be considered counterintuitive.

By the same token, variables that have meaningful correlations with the criterion but do not add to the predictive value of the model also have no importance under the product measure. This is counter to our definition of relative importance, which suggests that a measure of importance should consider both the effect a predictor has in isolation from the other predictors (i.e., the predictor-criterion correlation) and in conjunction with the other predictors (i.e., the beta weight). The product measure essentially ignores the magnitude of one of its components if the magnitude of the other component is very low.
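A two-predictor sketch of the suppressor case, with hypothetical correlations: $x_2$ is uncorrelated with the criterion but correlates .50 with $x_1$ (which correlates .50 with the criterion). The product measures sum to $R^2$ (Pratt’s first property), yet $x_2$ receives zero importance even though adding it raises $R^2$ from .25 to about .33.

```python
def product_measure(r1, r2, r12):
    """Product measure beta_j * r_yj for two standardized predictors."""
    denom = 1.0 - r12 ** 2
    b1 = (r1 - r2 * r12) / denom
    b2 = (r2 - r1 * r12) / denom
    # "+ 0.0" turns a signed -0.0 (negative beta times a zero correlation) into 0.0.
    return b1 * r1 + 0.0, b2 * r2 + 0.0


# Hypothetical suppressor: x2 is uncorrelated with y but correlates .50 with x1.
d1, d2 = product_measure(r1=0.5, r2=0.0, r12=0.5)
r2_full = d1 + d2                 # products always sum to the model R^2
r2_x1_only = 0.5 ** 2             # R^2 from x1 alone
print(f"R2 with x1 only = {r2_x1_only:.3f}, with both = {r2_full:.3f}")
print(f"product measure: x1 = {d1:.3f}, x2 = {d2:.3f}")
# R2 with x1 only = 0.250, with both = 0.333
# product measure: x1 = 0.333, x2 = 0.000
```

The mirror-image case in the preceding paragraph (a sizable correlation but a near-zero beta) produces a near-zero product for the same reason: one factor of the product is close to zero.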


Another aspect of the product measure that limits its utility considerably is the fact that negative importance values are possible and not unusual. Pratt (1987) stated that both $\beta_x$ and $r_{xy}$ must be of the same sign for this measure to be a valid measure of importance. Thomas et al. (1998), however, argued that negative importance occurs only under conditions of high multicollinearity. They note that negative importance “of ‘large’ magnitude can occur only if the variance inflation factor (a standard measure of multicollinearity) for the j’th variable is large” (p. 264). The variance inflation factor (VIF) is given by

$$\mathrm{VIF}_j = \frac{1}{1 - R^2_{(j)}},$$

where $R^2_{(j)}$ is the squared multiple correlation from the regression of variable $x_j$ on the remaining $x$’s. Removing the variable(s) with the large VIF would eliminate redundancy in the model and leave only positive importance values. It is relatively easy, however, to identify situations in which a variable has negative importance even when multicollinearity is low. For example, consider a scenario in which five predictors are equally intercorrelated at .30 and have the following criterion correlations:

$r_{yx_1} = .40$

$r_{yx_2} = .50$

$r_{yx_3} = .20$

$r_{yx_4} = .40$

$r_{yx_5} = .50$.

In this case, the beta weight for $x_3$ is –.104, so $\beta_{x_3} r_{yx_3} = -.021$. Although the extent to which this could be considered of “large” magnitude is debatable, it is a result that is very difficult to interpret. Because the predictors are all equally intercorrelated, each predictor has the same, modest VIF (about 1.23). There is no a priori reason for excluding $x_3$ based on multicollinearity, so the only reason for excluding it is because the importance weight does not make sense. An appropriate measure of predictor importance should be able to provide an interpretable importance weight for all variables in the model and should be able to do this regardless of the extent to which the variables are intercorrelated. Pratt (1987) showed that the product measure is not arbitrary, but we believe it still leaves much to be desired as a measure of predictor importance.
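The five-predictor scenario above can be reproduced from the correlations alone. This is a plain-Python sketch (the helpers `solve` and `vif` are illustrative, not from any library): it solves $R_{xx}\beta = r_{xy}$ for the betas, forms the product measures, and computes each VIF from the regression of $x_j$ on the other four predictors.

```python
def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(v)] for row, v in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(n):
            if i != c:
                f = M[i][c] / M[c][c]
                M[i] = [a - f * m for a, m in zip(M[i], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def vif(Rxx, j):
    """VIF_j = 1 / (1 - R^2 of x_j regressed on the other predictors)."""
    others = [k for k in range(len(Rxx)) if k != j]
    A = [[Rxx[a][c] for c in others] for a in others]
    b = [Rxx[a][j] for a in others]
    r2_j = sum(w * r for w, r in zip(solve(A, b), b))
    return 1.0 / (1.0 - r2_j)


p, rho = 5, 0.30
Rxx = [[1.0 if i == k else rho for k in range(p)] for i in range(p)]
ryx = [0.40, 0.50, 0.20, 0.40, 0.50]

betas = solve(Rxx, ryx)
products = [b * r for b, r in zip(betas, ryx)]
print("beta_3 =", round(betas[2], 3))                        # -0.104
print("product_3 =", round(products[2], 3))                  # -0.021
print("VIFs =", [round(vif(Rxx, j), 3) for j in range(p)])   # all 1.234
```

The VIFs are all close to 1, so high multicollinearity cannot explain the negative product for $x_3$.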

Multiple-Analysis Methods

Average squared semipartial correlation. When the predictors have a relevant, known ordering, Lindeman et al. (1980) recommended using the squared semipartial correlation of each predictor as it is added to the model as the measure of importance. In other words, if a theoretically meaningful order was $x_1, x_2, x_3$, the importance measures would be $r^2_{yx_1}$, $r^2_{y(x_2 \cdot x_1)}$, and $r^2_{y(x_3 \cdot x_1 x_2)}$, respectively. This is simply the progression of usefulness indices. Lindeman et al. pointed out that a relevant ordering of predictors rarely exists, so they suggested the average of each predictor’s squared semipartial correlation across all $p!$ possible orderings of the $p$ predictors as a more general importance index. This defines predictor importance as the average contribution to $R^2$ across all possible orderings. This index has several desirable properties, including (a) the sum of the average squared semipartial correlations across all predictors is equal to $R^2$, (b) any predictor that is positively related to the criterion will receive a positive importance weight, and (c) the definition of importance is intuitively meaningful.
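A brute-force sketch of the Lindeman et al. (1980) index: for every one of the $p!$ orderings, record each predictor’s increase in $R^2$ (its squared semipartial correlation) at the step where it enters, then average. Everything is computed from a correlation matrix in plain Python; the helpers and the three-predictor correlations are hypothetical illustrations.

```python
from itertools import permutations
from math import factorial


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(v)] for row, v in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(n):
            if i != c:
                f = M[i][c] / M[c][c]
                M[i] = [a - f * m for a, m in zip(M[i], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def r_squared(Rxx, ryx, subset):
    """R^2 of y on the predictors in `subset`, computed from correlations only."""
    subset = list(subset)
    if not subset:
        return 0.0
    A = [[Rxx[i][j] for j in subset] for i in subset]
    b = [ryx[i] for i in subset]
    return sum(w * r for w, r in zip(solve(A, b), b))


def lmg(Rxx, ryx):
    """Average squared semipartial correlation of each predictor over all p! orderings."""
    p = len(ryx)
    totals = [0.0] * p
    for order in permutations(range(p)):
        entered, prev = [], 0.0
        for j in order:
            entered.append(j)
            cur = r_squared(Rxx, ryx, entered)
            totals[j] += cur - prev          # increase in R^2 when x_j enters here
            prev = cur
    return [t / factorial(p) for t in totals]


Rxx = [[1.0, 0.8, 0.1],
       [0.8, 1.0, 0.1],
       [0.1, 0.1, 1.0]]
ryx = [0.6, 0.6, 0.4]
w = lmg(Rxx, ryx)
print([round(x, 3) for x in w], round(sum(w), 4), round(r_squared(Rxx, ryx, [0, 1, 2]), 4))
```

The weights are all positive and sum to the full-model $R^2$, matching properties (a) and (b).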

Average squared partial correlation. Independent of Lindeman et al. (1980), Kruskal (1987) suggested averaging each predictor’s squared partial correlation over all $p!$ possible orderings. This measure does not sum to $R^2$ and is not as intuitive as Lindeman et al.’s (1980) average increase in $R^2$. Theil (1987) and Theil and Chung (1988) built on Kruskal’s (1987) approach by suggesting using a function from statistical information theory to transform the average partial correlations to average bits of information provided by each variable. This does allow an additive decomposition of the total information, but it does little to add to the understanding of the measure for the typical user.
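A sketch contrasting Kruskal’s (1987) average squared partial correlation with the average squared semipartial. At each entry step the squared partial correlation is the $R^2$ increase divided by $1 - R^2$ of the model before the step, so it is never smaller than the squared semipartial; averaged over orderings, the weights therefore sum to more than $R^2$. The helpers and correlations are hypothetical illustrations in plain Python.

```python
from itertools import permutations
from math import factorial


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(v)] for row, v in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(n):
            if i != c:
                f = M[i][c] / M[c][c]
                M[i] = [a - f * m for a, m in zip(M[i], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def r_squared(Rxx, ryx, subset):
    """R^2 of y on the predictors in `subset`, computed from correlations only."""
    subset = list(subset)
    if not subset:
        return 0.0
    A = [[Rxx[i][j] for j in subset] for i in subset]
    b = [ryx[i] for i in subset]
    return sum(w * r for w, r in zip(solve(A, b), b))


def avg_squared_partial(Rxx, ryx):
    """Average squared partial correlation of each predictor over all p! orderings."""
    p = len(ryx)
    totals = [0.0] * p
    for order in permutations(range(p)):
        entered, prev = [], 0.0
        for j in order:
            entered.append(j)
            cur = r_squared(Rxx, ryx, entered)
            totals[j] += (cur - prev) / (1.0 - prev)   # squared partial correlation
            prev = cur
    return [t / factorial(p) for t in totals]


Rxx = [[1.0, 0.8, 0.1],
       [0.8, 1.0, 0.1],
       [0.1, 0.1, 1.0]]
ryx = [0.6, 0.6, 0.4]
k = avg_squared_partial(Rxx, ryx)
print("sum of averaged partials:", round(sum(k), 4),
      " full-model R2:", round(r_squared(Rxx, ryx, [0, 1, 2]), 4))
```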

Dominance analysis. The approach taken by Budescu (1993) differs from previous approaches in that it is not assumed that all variables can be ordered in terms of importance. His dominance analysis is a method of determining whether predictor variables can be ranked. In other words, Budescu contended that there may well be situations in which it is impossible to determine an ordering, so a dominance analysis should be undertaken prior to any quantitative analysis. For any two predictor variables, $x_i$ and $x_j$, let $x_h$ stand for any subset of the remaining $p - 2$ predictors in the set. Variable $x_i$ dominates variable $x_j$ if, and only if,

$$R^2_{y \cdot x_i x_h} \ge R^2_{y \cdot x_j x_h} \qquad (1)$$

for all possible choices of $x_h$. This can also be stated as follows: $x_i$ dominates $x_j$ if adding $x_i$ to each of the possible subset models always results in a greater increase in $R^2$ than would be obtained by adding $x_j$. If the predictive ability of one variable does not exceed that of another in all subset regressions, a dominance relationship cannot be established and the variables cannot be rank ordered meaningfully (Budescu, 1993).
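A direct check of Equation 1 in plain Python, as a sketch: compare $R^2_{y \cdot x_i x_h}$ with $R^2_{y \cdot x_j x_h}$ for every subset $x_h$ of the other predictors, including the empty set. The function `dominates` and the three-predictor correlations are hypothetical; a small tolerance treats floating-point ties as equal.

```python
from itertools import combinations


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(v)] for row, v in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(n):
            if i != c:
                f = M[i][c] / M[c][c]
                M[i] = [a - f * m for a, m in zip(M[i], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def r_squared(Rxx, ryx, subset):
    """R^2 of y on the predictors in `subset`, computed from correlations only."""
    subset = list(subset)
    if not subset:
        return 0.0
    A = [[Rxx[i][j] for j in subset] for i in subset]
    b = [ryx[i] for i in subset]
    return sum(w * r for w, r in zip(solve(A, b), b))


def dominates(Rxx, ryx, i, j, tol=1e-12):
    """Equation 1: x_i dominates x_j if R^2(x_i, x_h) >= R^2(x_j, x_h) for every x_h."""
    others = [k for k in range(len(ryx)) if k not in (i, j)]
    for size in range(len(others) + 1):
        for h in combinations(others, size):
            if r_squared(Rxx, ryx, [i, *h]) < r_squared(Rxx, ryx, [j, *h]) - tol:
                return False
    return True


Rxx = [[1.0, 0.8, 0.1],
       [0.8, 1.0, 0.1],
       [0.1, 0.1, 1.0]]
ryx = [0.6, 0.6, 0.4]
print("x1 dominates x3:", dominates(Rxx, ryx, 0, 2))
print("x3 dominates x1:", dominates(Rxx, ryx, 2, 0))
```

Here $x_1$ beats $x_3$ on its own ($.36$ vs. $.16$) but loses when paired with $x_2$, so neither dominates the other: the strictness the next paragraph discusses.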

The idea behind dominance analysis is attractive, but Budescu’s (1993) definition of dominance is very strict. Consequently, it is usually not possible to order all predictor variables when there are more than a few predictors in the model. Recently, however, this strict definition of dominance has been relaxed somewhat (Azen & Budescu, 2003). Azen and Budescu (2003) defined three levels of dominance: (a) complete, (b) conditional, and (c) general. Complete dominance corresponds to the original definition of dominance. Conditional dominance occurs when, for every model size, the average additional contribution across all models of that size is greater for one predictor than for another. General dominance occurs when the average additional contribution across all models is greater for one predictor than for another.
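A sketch of conditional dominance as described above: for each model size $k = 0, \dots, p - 1$, average the increase in $R^2$ from adding a predictor to every model of that size built from the other predictors. The helper names and correlations are hypothetical; the computation is plain Python from a correlation matrix.

```python
from itertools import combinations


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(v)] for row, v in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[p] = M[p], M[c]
        for i in range(n):
            if i != c:
                f = M[i][c] / M[c][c]
                M[i] = [a - f * m for a, m in zip(M[i], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def r_squared(Rxx, ryx, subset):
    """R^2 of y on the predictors in `subset`, computed from correlations only."""
    subset = list(subset)
    if not subset:
        return 0.0
    A = [[Rxx[i][j] for j in subset] for i in subset]
    b = [ryx[i] for i in subset]
    return sum(w * r for w, r in zip(solve(A, b), b))


def conditional_contributions(Rxx, ryx, j):
    """Average increase in R^2 from adding x_j to models of each size k = 0..p-1."""
    others = [k for k in range(len(ryx)) if k != j]
    out = []
    for size in range(len(others) + 1):
        gains = [r_squared(Rxx, ryx, [*h, j]) - r_squared(Rxx, ryx, h)
                 for h in combinations(others, size)]
        out.append(sum(gains) / len(gains))
    return out


Rxx = [[1.0, 0.8, 0.1],
       [0.8, 1.0, 0.1],
       [0.1, 0.1, 1.0]]
ryx = [0.6, 0.6, 0.4]
c1 = conditional_contributions(Rxx, ryx, 0)
c3 = conditional_contributions(Rxx, ryx, 2)
print("x1 by model size:", [round(c, 3) for c in c1])
print("x3 by model size:", [round(c, 3) for c in c3])
print("x1 conditionally dominates x3:", all(a >= b for a, b in zip(c1, c3)))
```

In this example $x_1$ leads at sizes 0 and 1 but trails in the full model, so conditional dominance fails as well, although general dominance can still order the two.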

The general dominance measure is the same as the quantitative measure Budescu (1993) suggested be computed if all $p(p - 1)/2$ pairs of predictors can be ordered (i.e., the average increase in $R^2$ associated with a predictor across all possible submodels, $C_{x_j}$). The $C_{x_j}$’s sum to the model $R^2$, so the relative importance of each predictor can be expressed as the proportion of predictable criterion variance accounted for by that predictor. The measure is computationally equivalent to Lindeman et al.’s (1980) average squared semipartial correlation over all $p!$ orderings.
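The equivalence with Lindeman et al.’s (1980) average over orderings, and the fact that the weights sum to $R^2$, can be checked numerically. The following Python sketch averages each predictor’s increase in $R^2$ (its squared semipartial correlation at the point of entry) over all $p!$ orderings of the Table 1 predictors; the function names are hypothetical, and the printed values may differ from Table 1 in the last reported digit because of rounding.

```python
from itertools import permutations

import numpy as np

# Table 1 correlations (assumed input).
R = np.array([[1.00, 0.40, 0.40, 0.40],
              [0.40, 1.00, 0.60, 0.30],
              [0.40, 0.60, 1.00, 0.40],
              [0.40, 0.30, 0.40, 1.00]])
r = np.array([0.50, 0.40, 0.35, 0.25])


def r2_subset(R, r, subset):
    """R^2 of the criterion regressed on the predictors in `subset` (0 for the empty set)."""
    idx = list(subset)
    if not idx:
        return 0.0
    b = np.linalg.solve(R[np.ix_(idx, idx)], r[idx])
    return float(r[idx] @ b)


def average_over_orderings(R, r):
    """Average increase in R^2 when each predictor enters, over all p! orderings."""
    p = len(r)
    totals = np.zeros(p)
    orders = list(permutations(range(p)))
    for order in orders:
        entered, previous = [], 0.0
        for j in order:
            entered.append(j)
            current = r2_subset(R, r, entered)
            totals[j] += current - previous  # squared semipartial correlation of x_j at entry
            previous = current
    return totals / len(orders)


C = average_over_orderings(R, r)
full_r2 = r2_subset(R, r, range(len(r)))
print(C.round(3), round(float(C.sum()), 3), round(full_r2, 3))
print((100 * C / full_r2).round(1))  # percentage of the model R^2
```

Within every ordering the increases telescope to the full-model $R^2$, which is why the averaged weights must sum to $R^2$.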

The methods developed by Budescu (1993) and Lindeman et al. (1980) seem to be effective in quantifying relative importance. The average increase in $R^2$ associated with the presence of a variable across all possible models is a meaningful measure that fits our definition of relative importance presented earlier. This measure averages a variable’s direct effect (considered by itself), total effect (conditional on all predictors in the full model), and partial effect (conditional on all subsets of predictors; Budescu, 1993).

Although these methods are theoretically and intuitively appealing, they have at least one major shortcoming: They become computationally prohibitive as the number of predictors increases. Specifically, these methods require the computation of $R^2$ for all possible submodels. Computational requirements increase exponentially (with $p$ predictors, there are $2^p - 1$ submodels) and are staggering for models with more than 10 predictors (Neter, Wasserman, & Kutner, 1985). Lindeman et al. (1980) stated that their method may not be feasible when $p$ is larger than five or six, although programs have been written to conduct the analysis with as many as 14 predictors. Azen and Budescu (2003) offer a SAS macro available for download that performs the dominance analysis calculations, but the maximum number of predictors allowed is 10. Although dominance represents an improvement over traditional relative importance methods, the computational requirements of the procedure make it difficult to apply to many situations for which it is valuable.
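The growth in computational burden is easy to tabulate. This small Python sketch (the function name is hypothetical) counts the $2^p - 1$ submodels that an all-subsets method must fit and, for comparison, the $p!$ orderings that Lindeman et al.’s (1980) definition averages over; because the ordering average can be assembled from the subset $R^2$ values, the subset count is the binding constraint.

```python
from math import factorial


def n_submodels(p):
    """Number of non-empty subsets of p predictors (one R^2 computation each)."""
    return 2 ** p - 1


for p in (4, 6, 10, 14, 20):
    print(f"p = {p:2d}: {n_submodels(p):>9,} submodels, {factorial(p):>25,} orderings")
```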

Criticality. Azen, Budescu, and Reiser (2001) proposed a new approach to comparing predictors in multiple regression, which they termed predictor criticality. Traditional measures of importance assume that the given model is the best-fitting model, whereas criticality analysis does not depend on the choice of a particular model. A predictor’s criticality is defined as the probability that it is included in the best-fitting model given an initial set of predictors. The first step in determining predictor criticality is to bootstrap (i.e., resample with replacement; Efron, 1979) a large number of samples from the original data set. Within each bootstrap sample, all $2^p - 1$ submodels are evaluated according to some criterion (e.g., adjusted $R^2$). Each predictor’s criticality is then estimated as the proportion of bootstrap samples in which the predictor was included in the best-fitting model. Criticality analysis has the advantage of not requiring the assumption of a single best-fitting model, and it has a clear definition. Research comparing predictor criticality to various measures of predictor importance should be conducted to gain an understanding of how the two concepts are related. Criticality analysis requires even more computational effort than dominance analysis does, however, because $2^p - 1$ submodels must be computed within each of 100 or more bootstrap samples. This severely limits the applicability of criticality analysis to situations in which only a few predictors are evaluated.
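A bootstrap estimate of criticality can be sketched as follows. This is a minimal Python/NumPy illustration under stated assumptions, not the procedure as implemented by Azen et al. (2001): the data are simulated, adjusted $R^2$ is used as the selection criterion (the example criterion mentioned above), 200 bootstrap samples are drawn, and the names `adjusted_r2` and `criticality` are hypothetical.

```python
from itertools import combinations

import numpy as np


def adjusted_r2(X, y, cols):
    """Adjusted R^2 of an OLS regression (with intercept) of y on the columns in `cols`."""
    n, k = len(y), len(cols)
    A = np.column_stack([np.ones(n), X[:, cols]])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


def criticality(X, y, n_boot=200, seed=0):
    """Share of bootstrap samples in which each predictor appears in the best subset model."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    subsets = [list(c) for k in range(1, p + 1) for c in combinations(range(p), k)]
    counts = np.zeros(p)
    for _ in range(n_boot):
        rows = rng.integers(0, n, size=n)  # resample cases with replacement
        Xb, yb = X[rows], y[rows]
        best = max(subsets, key=lambda cols: adjusted_r2(Xb, yb, cols))
        counts[best] += 1
    return counts / n_boot


# Hypothetical data: x0 has a strong effect, x1 a modest one, x2 and x3 none.
rng = np.random.default_rng(42)
X = rng.standard_normal((200, 4))
y = 1.0 * X[:, 0] + 0.3 * X[:, 1] + rng.standard_normal(200)
crit = criticality(X, y)
print(crit.round(2))
```

Each bootstrap sample requires $2^p - 1$ regressions, so run time grows with both the number of bootstrap samples and $2^p$, which is the limitation noted above.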

Variable Transformation Methods

Transform to maximally related orthogonal variables. Gibson (1962) and R. M. Johnson (1966) suggested that the relative importance of a set of predictors can be approximated by first transforming the predictors to their maximally related orthogonal counterparts. In other words, one creates a set of variables that are as highly related as possible to the original set of predictors but are uncorrelated with each other. The criterion can then be regressed on the new orthogonal variables, and the squared standardized regression coefficients approximate the relative importance of the original predictors. This approach has a certain appeal because relative importance is unambiguous when variables are uncorrelated, and the orthogonal variables can be very highly related to the original predictors. The obvious problem with this approach is that the orthogonal variables are only approximations of the original predictors and may not be close representations if two or more original predictors are highly correlated.
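One way to obtain maximally related orthogonal counterparts is the symmetric transformation $Z = X R^{-1/2}$, computed from the eigendecomposition of the predictor correlation matrix $R$. We assume here that this corresponds to the least-squares minimal transformation of R. M. Johnson (1966); the data and helper names in this Python sketch are hypothetical. The sketch checks that the $Z$’s are uncorrelated, that each $Z_j$ remains highly correlated with its $X_j$, and that the squared standardized weights of $y$ on the $Z$’s sum to the $R^2$ obtained from the original predictors.

```python
import numpy as np


def inverse_sqrt(M):
    """Symmetric inverse square root M^(-1/2) of a positive definite matrix."""
    w, V = np.linalg.eigh(M)
    return V @ np.diag(1.0 / np.sqrt(w)) @ V.T


# Hypothetical data: three correlated predictors and one criterion.
rng = np.random.default_rng(1)
n = 500
cov = np.array([[1.0, 0.7, 0.3],
                [0.7, 1.0, 0.4],
                [0.3, 0.4, 1.0]])
X = rng.multivariate_normal(np.zeros(3), cov, size=n)
y = X @ np.array([0.5, 0.3, 0.2]) + rng.standard_normal(n)

# Standardize with population SDs so that X'X / n is exactly the correlation matrix.
Xs = (X - X.mean(axis=0)) / X.std(axis=0)
ys = (y - y.mean()) / y.std()
Rxx = Xs.T @ Xs / n

Z = Xs @ inverse_sqrt(Rxx)  # maximally related orthogonal counterparts
beta_z = Z.T @ ys / n       # standardized weights of y on the uncorrelated Z's

# R^2 of y on the original predictors, for comparison.
rxy = Xs.T @ ys / n
r2_x = float(rxy @ np.linalg.solve(Rxx, rxy))

print(np.round(Z.T @ Z / n, 6))                                            # identity matrix
print([round(np.corrcoef(Xs[:, j], Z[:, j])[0, 1], 3) for j in range(3)])  # X_j vs. Z_j
print(np.round(beta_z ** 2, 3), round(float((beta_z ** 2).sum()), 3), round(r2_x, 3))
```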

Green, Carroll, and DeSarbo’s (1978) $\delta^2$. Green et al. (1978) recognized the limitations of inferring importance from orthogonal variables that may not be highly related to the original predictors and suggested a method by which the orthogonal variables could be related back to the original predictors to better estimate their relative importance. In their procedure, the orthogonal variables are regressed on the original predictors. Then the squared regression weights of the original predictors for predicting each orthogonal variable are converted to relative contributions by dividing them by the sum of the squared regression weights for that orthogonal variable. These relative contributions are then multiplied by the corresponding squared regression weight of each orthogonal variable for predicting the criterion and summed across orthogonal variables to arrive at the importance weight, called $\delta^2$. The sum of the $\delta^2$’s is equal to $R^2$.
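Green et al.’s (1978) computation can be written compactly in terms of the correlation matrix. In this Python sketch the orthogonal variables are assumed to be $Z = X R^{-1/2}$, so the weights for predicting each $Z_k$ from the original predictors are the columns of $R^{-1/2}$ and the weights of $y$ on the $Z$’s are $R^{-1/2} r_{xy}$; the Table 1 correlations are used as input, and the function names are hypothetical.

```python
import numpy as np

# Table 1 correlations (assumed input).
R = np.array([[1.00, 0.40, 0.40, 0.40],
              [0.40, 1.00, 0.60, 0.30],
              [0.40, 0.60, 1.00, 0.40],
              [0.40, 0.30, 0.40, 1.00]])
r = np.array([0.50, 0.40, 0.35, 0.25])


def sym_power(M, power):
    """M**power for a symmetric positive definite matrix, via its eigendecomposition."""
    w, V = np.linalg.eigh(M)
    return V @ np.diag(w ** power) @ V.T


def green_delta2(R, r):
    """delta^2 of Green et al. (1978), assuming the orthogonal variables are Z = X R^(-1/2)."""
    B = sym_power(R, -0.5)                  # column k: weights of the X_j's for predicting Z_k
    beta_z = B @ r                          # weights of y on the uncorrelated Z_k
    share = B ** 2 / (B ** 2).sum(axis=0)   # relative contribution of X_j to Z_k; columns sum to 1
    return share @ beta_z ** 2              # sum over k of share[j, k] * beta_z[k]^2


delta2 = green_delta2(R, r)
print(delta2.round(3), round(float(delta2.sum()), 3))
```

The matrix `B` holds coefficients from regressions on the correlated original predictors, which is the feature Jackson (1980) criticized.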

Green et al. (1978) showed that this procedure yields more intuitive importance weights under high multicollinearity than do the Gibson (1962) and R. M. Johnson (1966) methods. It has the further advantages of allowing importance to be assigned to the original predictors and being much simpler computationally than dominance analysis. It has a very serious shortcoming, however, in that the regression weights obtained by regressing the orthogonal variables on the original predictors are still coefficients from regressions on correlated variables (Jackson, 1980). The weights obtained by regressing the orthogonal variables on the original predictors to determine the relative contribution of each original predictor to each orthogonal variable are just as ambiguous in terms of importance as regression weights obtained by a regression of the dependent variable on the original variables. Green, Carroll, and DeSarbo (1980) acknowledged this criticism but could respond only that their measure was at least better than previous methods of allocating importance. Boya and Cramer (1980) also pointed out that this method is not invariant to orthogonalizing procedures. In other words, if an orthogonalizing procedure other than the one suggested by Gibson (1962) and R. M. Johnson (1966) were used (e.g., principal components), the procedure would not yield the same importance weights.

Johnson’s relative weights. J. W. Johnson (2000a) proposed an alternative solution to the problem of correlated variables. Green et al. (1978) attempted to relate the orthogonal variables back to the original variables by using the set of coefficients for deriving the orthogonal variables from the original correlated predictors. Because the goal is to go from the orthogonal variables back to the original predictors, however, the more appropriate set of coefficients is the set that derives the original predictors from the orthogonal variables. In other words, instead of regressing the orthogonal variables on the original predictors, the original predictors are regressed on the orthogonal variables. Because regression coefficients are assigned to the uncorrelated variables rather than to the correlated original predictors, the problem of correlated predictors is not reintroduced with this method. Johnson termed the weights resulting from the combination of the two sets of squared regression coefficients epsilons ($\varepsilon$). They have been more commonly referred to as relative weights (e.g., J. W. Johnson, 2001a), which is consistent with the original use of the term by Hoffman (1960, 1962).

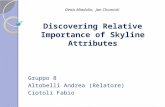

A graphic representation of J. W. Johnson’s (2000a) relative weights is presented in Figure 1. In this three-variable example, the original predictors ($X_j$) are transformed to their maximally related orthogonal counterparts ($Z_k$), which are then used to predict the criterion ($Y$). The regression coefficients of $Y$ on $Z_k$ are represented by $\beta_k$, and the regression coefficients of $X_j$ on $Z_k$ are represented by $\lambda_{jk}$. Because the $Z_k$’s are uncorrelated, the regression coefficients of $X_j$ on $Z_k$ are equal to the correlations between $X_j$ and $Z_k$. Thus, each squared $\lambda_{jk}$ represents the proportion of variance in $Z_k$ accounted for by $X_j$ (J. W. Johnson, 2000a). To compute the relative weight for $X_j$, multiply the proportion of variance in each $Z_k$ accounted for by $X_j$ by the proportion of variance in $Y$ accounted for by each $Z_k$ and sum the products. For example, the relative weight for $X_1$ would be calculated as

$$\varepsilon_1 = \lambda_{11}^2 \beta_1^2 + \lambda_{12}^2 \beta_2^2 + \lambda_{13}^2 \beta_3^2. \qquad (2)$$

Epsilon is an attractive index that has a simple logic behind its development. The relative importance of the $Z_k$’s to $Y$, represented by $\beta_k^2$, is unambiguous because the $Z_k$’s are uncorrelated. The relative contribution of $X_j$ to each $Z_k$, represented by $\lambda_{jk}^2$, is also unambiguous because the $Z_k$’s are determined entirely by the $X_j$’s, and the $\lambda_{jk}$’s are regression coefficients on uncorrelated variables. For each $Z_k$, the $\lambda_{jk}^2$’s sum to 1 across the original predictors, so each represents the proportion of $\beta_k^2$ that is attributable to $X_j$. Multiplying these terms ($\lambda_{jk}^2 \beta_k^2$) yields the proportion of variance in $Y$ that is associated with $X_j$ through its relationship with $Z_k$, and summing across all $Z_k$’s yields the total proportion of variance in $Y$ that is associated with $X_j$.
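Equation (2) generalizes to $\varepsilon_j = \sum_k \lambda_{jk}^2 \beta_k^2$ for any number of predictors. The following Python sketch assumes, following our understanding of J. W. Johnson (2000a), that the $\lambda_{jk}$ are the elements of the symmetric square root $\Lambda = R^{1/2}$ of the predictor correlation matrix, so that $\beta = \Lambda^{-1} r_{xy}$; it uses the Table 1 correlations, and the function name is hypothetical.

```python
import numpy as np

# Table 1 correlations (assumed input).
R = np.array([[1.00, 0.40, 0.40, 0.40],
              [0.40, 1.00, 0.60, 0.30],
              [0.40, 0.60, 1.00, 0.40],
              [0.40, 0.30, 0.40, 1.00]])
r = np.array([0.50, 0.40, 0.35, 0.25])


def relative_weights(R, r):
    """Epsilon: eps_j = sum over k of lambda_jk^2 * beta_k^2."""
    w, V = np.linalg.eigh(R)
    lam = V @ np.diag(np.sqrt(w)) @ V.T  # lambda_jk: weights (= correlations) of X_j on Z_k
    beta = np.linalg.solve(lam, r)       # beta_k: weights of y on the orthogonal Z_k
    return (lam ** 2) @ (beta ** 2)


eps = relative_weights(R, r)
full_r2 = float(r @ np.linalg.solve(R, r))
print(eps.round(3), round(float(eps.sum()), 3), round(full_r2, 3))
print((100 * eps / full_r2).round(1))  # percentage of the model R^2
```

Only one eigendecomposition and one linear solve are needed, which is why relative weights stay fast as the number of predictors grows.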

Relative weights have an advantage over dominance analysis in that they can be easily and quickly computed with any number of predictors. As noted earlier, dominance analysis requires considerable computational effort that typically limits the number of predictors to 10 or fewer. A possible criticism is that Boya and Cramer’s (1980) point about Green et al.’s (1978) measure not being invariant to orthogonalizing procedures also applies to relative weights.

Illustration and Interpretation of Relative Importance Methods

To illustrate the use and interpretation of relative importance methods, we generated a correlation matrix and analyzed it using several of the indices described above. Although this is a contrived correlation matrix, the range and magnitude of the values are consistent with the research literature. The upper portion of Table 1 contains the correlation matrix; the lower portion contains the results of several importance analyses. In this example, organizational commitment was the criterion variable predicted by job satisfaction, leader communication, participative leadership, and worker motivation. It is important to note that this correlation matrix was intentionally designed to yield identical rank orders of the predictors across all importance methods. This was done to illustrate the advantages of the newer statistics, even when the rank orders were identical.

Importance indexed via squared correlations indicated that all four predictors were important. Examination of the magnitude of the estimates indicated that job satisfaction was roughly 1.5 to 2 times as important as leader communication and participative leadership, and job satisfaction was approximately 4 times as important as worker motivation. However, the conclusions drawn using squared beta weights and the product measure were radically different. Using squared betas, only job satisfaction and leader communication emerged as important predictors, with job satisfaction being approximately 4 times as important as leader communication. Using the product measure, job satisfaction and leader communication again emerged as important predictors, but now job satisfaction was approximately twice as important as leader communication. Two sets of discrepancies are illustrated in these analyses. The first set of discrepancies was that different predictors emerged as important depending on which statistics were applied to the correlation matrix. The second set of discrepancies was that the magnitude of relative importance shifted dramatically depending on which statistics were applied to the correlation matrix. Job satisfaction was less than twice as important as leader communication according to the squared correlations, but job satisfaction was nearly 4 times as important according to the squared betas. In contrast to the ambiguous and inconsistent conclusions obtained using traditional methods of importance, clearer and more consistent conclusions were obtained using dominance and epsilon. These estimates were nearly identical and indicated that job satisfaction was approximately twice as important as leader communication and 4 times as important as participative leadership. Worker motivation was a relatively unimportant predictor of commitment.

Figure 1: Graphic Representation of J. W. Johnson’s (2000a) Relative Weights for Three Predictors. [Path diagram: predictors $X_1$, $X_2$, $X_3$ linked to orthogonal variables $Z_1$, $Z_2$, $Z_3$ by weights $\lambda_{jk}$; each $Z_k$ linked to the criterion $Y$ by weight $\beta_k$.]

Table 1
Example Correlation Matrix and Relative Importance Weights Calculated by Different Methods

Variable                           1     2     3     4     5
1. Organizational commitment    1.00
2. Job satisfaction              .50  1.00
3. Leader communication          .40   .40  1.00
4. Participative leadership      .35   .40   .60  1.00
5. Worker motivation             .25   .40   .30   .40  1.00

Model $R^2$ = .301.

Predictor                   r²_yj   β²_j  β_j r_yj    C_j    ε_j  β_j r_yj (%)  C_j (%)  ε_j (%)
Job satisfaction             .250   .151      .195   .163   .163          64.6     54.0     54.1
Leader communication         .160   .040      .080   .075   .075          26.5     24.8     24.9
Participative leadership     .123   .005      .025   .045   .044           8.5     14.8     14.5
Worker motivation            .063   .000      .001   .020   .020           0.5      6.5      6.5
Sum                                           .301   .301   .301         100.0    100.0    100.0

Note. r²_yj = squared correlation with the criterion; β²_j = squared standardized regression weight; β_j r_yj = product measure; C_j = general dominance weight; ε_j = relative weight. Percentage columns express each weight as a percentage of the model $R^2$.
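The traditional indices in Table 1 can be reproduced from the correlation matrix alone. This Python sketch (variable names are illustrative) computes squared correlations, squared standardized regression weights, and the product measure $\beta_j r_{yj}$, and prints the ratio of job satisfaction to leader communication for each index, which is the comparison discussed above.

```python
import numpy as np

# Table 1 correlations (assumed input).
R = np.array([[1.00, 0.40, 0.40, 0.40],
              [0.40, 1.00, 0.60, 0.30],
              [0.40, 0.60, 1.00, 0.40],
              [0.40, 0.30, 0.40, 1.00]])
r = np.array([0.50, 0.40, 0.35, 0.25])

beta = np.linalg.solve(R, r)  # standardized regression weights
indices = {
    "squared r": r ** 2,
    "squared beta": beta ** 2,
    "product (beta * r)": beta * r,
}
for label, values in indices.items():
    ratio = values[0] / values[1]  # job satisfaction relative to leader communication
    print(f"{label:20s} {np.round(values, 3)}  JS/LC = {ratio:.1f}")
```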

Table 1 shows that importance weights computed using the product measure, dominance, and epsilon sum to the model $R^2$. Therefore, when these importance estimates are rescaled by dividing them by the model $R^2$ and multiplying by 100, they may be interpreted as the percentage of the model $R^2$ associated with each predictor. For example, job satisfaction accounted for 54% of the predictable variance in organizational commitment according to both dominance and epsilon but 65% according to the product measure. The characteristic of importance weights summing to $R^2$ greatly enhances the interpretability of these weights, making them much easier to present to people who do not possess a great understanding of statistics.
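The rescaling described above amounts to dividing each weight by the sum of the weights, which equals the model $R^2$ for these three additive measures. The following Python sketch applies a hypothetical helper, `percent_of_r2`, to the product measure computed from the Table 1 correlations.

```python
import numpy as np

# Table 1 correlations (assumed input).
R = np.array([[1.00, 0.40, 0.40, 0.40],
              [0.40, 1.00, 0.60, 0.30],
              [0.40, 0.60, 1.00, 0.40],
              [0.40, 0.30, 0.40, 1.00]])
r = np.array([0.50, 0.40, 0.35, 0.25])


def percent_of_r2(weights):
    """Rescale importance weights that sum to R^2 into percentages of R^2."""
    weights = np.asarray(weights, dtype=float)
    return 100.0 * weights / weights.sum()


beta = np.linalg.solve(R, r)
product = beta * r  # product measure; sums to the model R^2
print(round(float(product.sum()), 3), percent_of_r2(product).round(1))
```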

Summary

Considering all the relative importance indices just reviewed, we suggest that the preferred methods among those currently available are Budescu’s (1993) dominance analysis and J. W. Johnson’s (2000a) relative weights. These indices do not have logical flaws in their development that make it impossible to consider them as reasonable measures of predictor importance. Both methods yield importance weights that represent the proportionate contribution each predictor makes to $R^2$, and both consider a predictor’s direct effect and its effect when combined with other predictors. Also, they yield estimates of importance that make conceptual sense. This is of course highly subjective, but it is relatively easy to eliminate other indices from consideration based solely on this criterion.

Both indices yield remarkably similar results when applied to the same data. J. W. Johnson (2000a) computed relative weights and the quantitative dominance analysis measure in 31 different data sets. Each index was converted to a percentage of $R^2$, and the mean absolute deviation between importance indices computed using the two methods was only 0.56%. The fact that these two indices, which are based on very different approaches to determining predictor importance, yield results that differ only trivially provides some impressive convergent validity evidence that they are measuring the same construct. Either index can therefore be considered equally appropriate as a measure of predictor importance. Relative weights can be computed much more quickly than can dominance analysis weights, however, and are the only available choice when the number of predictors is greater than 15.
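The agreement statistic reported by J. W. Johnson (2000a) is a mean absolute deviation between two sets of percentages. The Python sketch below shows that computation for the single data set in Table 1, using the dominance and epsilon percentage columns as printed; it does not reproduce the 31-data-set result, and the function name is hypothetical.

```python
# Percentages of R^2 for the four Table 1 predictors, copied from the table.
dominance_pct = [54.0, 24.8, 14.8, 6.5]
epsilon_pct = [54.1, 24.9, 14.5, 6.5]


def mean_abs_deviation(a, b):
    """Mean absolute difference between two equally long sets of importance percentages."""
    if len(a) != len(b):
        raise ValueError("both sets must cover the same predictors")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


print(f"{mean_abs_deviation(dominance_pct, epsilon_pct):.3f}")  # prints 0.125
```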

Applications of Relative Importance Methodologies in Organizational Research

Although dominance analysis and relative weights are fairly recent developments, they have been applied in several studies relevant to organizational research. For example, James (1998) used dominance analysis to determine the relative importance of cognitive ability, self-report measures of achievement motivation, and conditional reasoning tests of achievement motivation for predicting academic performance indexed via college grade point average. Similarly, Bing (1999) used dominance analysis to gain a better understanding of the relative importance of three personality attributes to the criteria of academic honors and college grade point average.

Dominance analysis has also been applied to the predictors of a wide range of job attitudes and job behaviors. Behson (2002) used dominance analysis to assess the relative importance of several work-family and organizational constructs to a variety of employee outcome variables (e.g., job satisfaction, work-to-family conflict). Similarly, LeBreton, Binning, Adorno, and Melcher (2004 [this issue]) used dominance analysis to test the relative importance of job-specific affect and the Big Five personality traits for predicting job satisfaction, withdrawal cognitions, and withdrawal behavior. Baltes, Parker, Young, Huff, and Altmann (2004 [this issue]) used dominance analysis to identify which climate dimensions were most important for predicting criteria including job satisfaction, intentions to quit, and job motivation. Eby, Adams, Russell, and Gaby (2000) used dominance analysis to gain a better understanding of how various employee attitudes and contextual variables relate to employees’ perceptions of their organization’s readiness for large-scale change. Whanger (2002) analyzed meta-analytic correlation matrices using dominance analysis to explore the relative importance of job autonomy, skill variety, performance feedback, supervisor satisfaction, and pay satisfaction in the prediction of affective organizational commitment, general job satisfaction, and intrinsic motivation. These studies illustrate how relative importance indices can be used to examine how various types of predictors relate to a wide range of organizational criteria.

One of the most frequent uses of relative importance indices has been to model the cognitive processes associated with evaluating employees. J. W. Johnson (2001b) used relative weights to evaluate the extent to which supervisors within each of eight job families consider different dimensions of task performance and contextual performance when making overall evaluations. Lievens, Highhouse, and De Corte (2003) used relative weights to evaluate the relative importance of applicants’ Big Five and general mental ability scores to managers’ hirability decisions. Some studies have examined different types of raters who have rated the same ratees to determine if characteristics of the rater influence perceptions of importance. For example, J. W. Johnson and Johnson (2001) used relative weights to evaluate the relative importance of 24 specific dimensions of performance to overall ratings of executives made by their supervisors, peers, and subordinates. They found that the type of performance that was important to supervisors tended to be very different from the type of performance that was important to subordinates. Cochran (1999) examined how characteristics of the rater and the ratee interact to influence the relative importance of performance on specific dimensions to overall evaluations. Using relative weights, she found that male and female raters had similar perceptions of what is important to advancement potential when the ratee was male but that perceptions differed when the ratee was female.

Some studies have investigated differences in relative importance across cultures. Using relative weights, J. W. Johnson and Olson (1996) found that the relative importance of individual supervisor attributes to overall performance was related to differences between countries in Hofstede’s (1980) cultural value dimensions of power distance, uncertainty avoidance, individualism, and masculinity. Similarly, Robie, Johnson, Nilsen, and Hazucha (2001) used relative weights to examine differences between countries in the relative importance of 24 performance dimensions to ratings of overall performance. Suh, Diener, Oishi, and Triandis (1998) used dominance analysis to investigate the relative importance of emotions, cultural norms, and extraversion in predicting life satisfaction across cultures.

There are many ways to apply relative importance indices when analyzing survey data. Relative importance analysis can reveal the specific areas that contribute the most to employee or customer satisfaction, which helps decision makers set priorities for where to apply scarce organizational resources (Lundby & Fenlason, 2000; Whanger, 2002). It can also shorten surveys by eliminating the need for direct ratings of importance. Lundby and Fenlason (2000) compared relative weights to direct ratings of importance and employee comments. An examination of employee comments supported the notion that relative weights better reflected the importance employees placed on different issues because a greater proportion of written comments was devoted to those issues that received higher relative weights. Most direct ratings of importance tend to cluster around the high end of the scale, with very little variability. Especially with employee opinion surveys, respondents are likely to rate every issue as being important for fear that anything not given high importance ratings will be taken away from them. Relative weights allow decision makers to allocate scarce resources to the issues that are actually most highly related to respondent satisfaction.

There are, of course, many other substantive questions that can be addressed by relative importance methods across a broad spectrum of organizational research domains. Some examples include employee selection (e.g., which exercises in an assessment center are most important for predicting criteria such as job performance, salary, and promotion?), training evaluation (e.g., what are the most important predictors of successful transfer of training?), culture and climate (e.g., how important are the various dimensions of culture and climate in predicting organizationally valued criteria such as job satisfaction, turnover, organizational commitment, job performance, withdrawal cognitions, and/or perceived organizational support?), and leader effectiveness (e.g., which dimensions of transactional and transformational leadership are most predictive of subordinate ratings of leader effectiveness or overall firm effectiveness?).

Conclusion

We believe that research on relative importance methods is still in its infancy but has progressed tremendously in recent years. Additional work is needed on refining the existing methods as well as developing multivariate extensions of methods such as dominance analysis and relative weights. Furthermore, myriad avenues exist for integrating relative importance methods into a wide range of substantive and methodological areas of research. We hope that the research reviewed in this article and the results of the articles presented in this feature topic act as a catalyst for additional work using relative importance methods.

References

Achen, C. H. (1982). Interpreting and using regression. Beverly Hills, CA: Sage.

Azen, R., & Budescu, D. V. (2003). The dominance analysis approach for comparing predictors in multiple regression. Psychological Methods, 8, 129-148.

Azen, R., Budescu, D. V., & Reiser, B. (2001). Criticality of predictors in multiple regression. British Journal of Mathematical and Statistical Psychology, 54, 201-225.

Baltes, B. B., Parker, C. P., Young, L. M., Huff, J. W., & Altmann, R. (2004). The practical utility of importance measures in assessing the relative importance of work-related perceptions and organizational characteristics on work-related outcomes. Organizational Research Methods, 7, 326-340.

Behson, S. J. (2002). Which dominates? The relative importance of work-family organizational support and general organizational context on employee outcomes. Journal of Vocational Behavior, 61, 53-72.

Bing, M. N. (1999). Hypercompetitiveness in academia: Achieving criterion-related validity from item context specificity. Journal of Personality Assessment, 73, 80-99.

Borman, W. C., White, L. A., & Dorsey, D. W. (1995). Effects of ratee task performance and interpersonal factors on supervisor and peer performance ratings. Journal of Applied Psychology, 80, 168-177.

Boya, Ö. Ü., & Cramer, E. M. (1980). Some problems in measures of predictor variable importance in multiple regression. Unpublished manuscript, University of North Carolina at Chapel Hill.

Bring, J. (1994). How to standardize regression coefficients. American Statistician, 48, 209-213.

Bring, J. (1996). A geometric approach to compare variables in a regression model. American Statistician, 50, 57-62.

Budescu, D. V. (1993). Dominance analysis: A new approach to the problem of relative importance of predictors in multiple regression. Psychological Bulletin, 114, 542-551.

Cochran, C. C. (1999). Gender influences on the process and outcomes of rating performance. Unpublished doctoral dissertation, University of Minnesota, Minneapolis.

Cohen, J., & Cohen, P. (1983). Applied multiple regression/correlation analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum.

Courville, T., & Thompson, B. (2001). Use of structure coefficients in published multiple regression articles: β is not enough. Educational and Psychological Measurement, 61, 229-248.

Darlington, R. B. (1968). Multiple regression in psychological research and practice. Psychological Bulletin, 69, 161-182.

Darlington, R. B. (1990). Regression and linear models. New York: McGraw-Hill.

Dunn, W. S., Mount, M. K., Barrick, M. R., & Ones, D. S. (1995). Relative importance of personality and general mental ability in managers’ judgments of applicant qualifications. Journal of Applied Psychology, 80, 500-509.

Eby, L. T., Adams, D. M., Russell, J. E. A., & Gaby, S. H. (2000). Perceptions of organizational readiness for change: Factors related to employees’ reactions to the implementation of team-based selling. Human Relations, 53, 419-442.


Efron, B. (1979). Bootstrap methods: Another look at the jackknife. Annals of Statistics, 7, 1-26.

Englehart, M. D. (1936). The technique of path coefficients. Psychometrika, 1, 287-293.

Gibson, W. A. (1962). Orthogonal predictors: A possible resolution of the Hoffman-Ward controversy. Psychological Reports, 11, 32-34.

Goldberger, A. S. (1964). Econometric theory. New York: John Wiley & Sons.

Green, P. E., Carroll, J. D., & DeSarbo, W. S. (1978). A new measure of predictor variable importance in multiple regression. Journal of Marketing Research, 15, 356-360.

Green, P. E., Carroll, J. D., & DeSarbo, W. S. (1980). Reply to “A comment on a new measure of predictor variable importance in multiple regression.” Journal of Marketing Research, 17, 116-118.

Green, P. E., & Tull, D. S. (1975). Research for marketing decisions (3rd ed.). Englewood Cliffs, NJ: Prentice Hall.

Grisaffe, D. (1993, February). Appropriate use of regression in customer satisfaction analyses: A response to William McLauchlan. Quirk’s Marketing Research Review, 11-17.

Healy, M. J. R. (1990). Measuring importance. Statistics in Medicine, 9, 633-637.

Heeler, R. M., Okechuku, C., & Reid, S. (1979). Attribute importance: Contrasting measurements. Journal of Marketing Research, 16, 60-63.

Hobson, C. J., & Gibson, F. W. (1983). Policy capturing as an approach to understanding and improving performance appraisal: A review of the literature. Academy of Management Review, 8, 640-649.

Hobson, C. J., Mendel, R. M., & Gibson, F. W. (1981). Clarifying performance appraisal criteria. Organizational Behavior and Human Performance, 28, 164-188.

Hoffman, P. J. (1960). The paramorphic representation of clinical judgment. Psychological Bulletin, 57, 116-131.

Hoffman, P. J. (1962). Assessment of the independent contributions of predictors. Psychological Bulletin, 59, 77-80.

Hofstede, G. (1980). Culture’s consequences: International differences in work-related values. Beverly Hills, CA: Sage.

Jaccard, J., Brinberg, D., & Ackerman, L. J. (1986). Assessing attribute importance: A comparison of six methods. Journal of Consumer Research, 12, 463-468.

Jackson, B. B. (1980). Comment on “A new measure of predictor variable importance in multiple regression.” Journal of Marketing Research, 17, 113-115.

James, L. R. (1998). Measurement of personality via conditional reasoning. Organizational Research Methods, 1, 131-163.

Johnson, J. W. (2000a). A heuristic method for estimating the relative weight of predictor variables in multiple regression. Multivariate Behavioral Research, 35, 1-19.

Johnson, J. W. (2000b, April). Practical applications of relative importance methodology in I/O psychology. Symposium presented at the 15th annual conference of the Society for Industrial and Organizational Psychology, New Orleans, LA.

Johnson, J. W. (2001a). Determining the relative importance of predictors in multiple regression: Practical applications of relative weights. In F. Columbus (Ed.), Advances in psychology research (Vol. 5, pp. 231-251). Huntington, NY: Nova Science.

Johnson, J. W. (2001b). The relative importance of task and contextual performance dimensions to supervisor judgments of overall performance. Journal of Applied Psychology, 86, 984-996.

Johnson, J. W., & Johnson, K. M. (2001, April). Rater perspective differences in perceptions of executive performance. In M. Rotundo (Chair), Task, citizenship, and counterproductive performance: The determination of organizational decisions. Symposium conducted at the 16th annual conference of the Society for Industrial and Organizational Psychology, San Diego, CA.

Johnson, J. W., & Olson, A. M. (1996, April). Cross-national differences in perceptions of supervisor performance. In D. Ones & C. Viswesvaran (Chairs), Frontiers of international I/O psychology: Empirical findings for expatriate management. Symposium conducted at the 11th annual conference of the Society for Industrial and Organizational Psychology, San Diego, CA.

Johnson, R. M. (1966). The minimal transformation to orthonormality. Psychometrika, 31, 61-66.

Kruskal, W. (1987). Relative importance by averaging over orderings. American Statistician, 41, 6-10.

Kruskal, W., & Majors, R. (1989). Concepts of relative importance in recent scientific literature. American Statistician, 43, 2-6.

Lane, D. M., Murphy, K. R., & Marques, T. E. (1982). Measuring the importance of cues in policy capturing. Organizational Behavior and Human Performance, 30, 231-240.

LeBreton, J. M., Binning, J. F., Adorno, A. J., & Melcher, K. M. (2004). Importance of personality and job-specific affect for predicting job attitudes and withdrawal behavior. Organizational Research Methods, 7, 300-325.

LeBreton, J. M., & Johnson, J. W. (2001, April). Use of relative importance methodologies in organizational research. Symposium presented at the 16th annual conference of the Society for Industrial and Organizational Psychology, San Diego, CA.

LeBreton, J. M., & Johnson, J. W. (2002, April). Application of relative importance methodologies to organizational research. Symposium presented at the 17th annual conference of the Society for Industrial and Organizational Psychology, Toronto, Canada.

LeBreton, J. M., Ployhart, R. E., & Ladd, R. T. (2004). A Monte Carlo comparison of relative importance methodologies. Organizational Research Methods, 7, 258-282.

Lehman, W., & Simpson, D. (1992). Employee substance use and on-the-job behaviors. Journal of Applied Psychology, 77, 309-321.

Lievens, F., Highhouse, S., & De Corte, W. (2003, April). The importance of traits and abilities in managers’ hirability decisions as a function of method of assessment. Poster presented at the 18th annual conference of the Society for Industrial and Organizational Psychology, Orlando, FL.

Lindeman, R. H., Merenda, P. F., & Gold, R. Z. (1980). Introduction to bivariate and multivariate analysis. Glenview, IL: Scott, Foresman and Company.

Lundby, K. M., & Fenlason, K. J. (2000, April). An application of relative importance analysis to employee attitude research. In J. W. Johnson (Chair), Practical applications of relative importance methodology in I/O psychology. Symposium conducted at the 15th annual conference of the Society for Industrial and Organizational Psychology, New Orleans, LA.

McLauchlan, W. G. (1992, October). Regression-based satisfaction analyses: Proceed with caution. Quirk’s Marketing Research Review, 11-13.

Myers, J. H. (1996). Measuring attribute importance: Finding the hot buttons. Canadian Journal of Marketing Research, 15, 23-37.

Neter, J., Wasserman, W., & Kutner, M. H. (1985). Applied linear statistical models (2nd ed.). Homewood, IL: Irwin.

Pratt, J. W. (1987). Dividing the indivisible: Using simple symmetry to partition variance explained. In T. Pukilla & S. Duntaneu (Eds.), Proceedings of second Tampere conference in statistics (pp. 245-260). University of Tampere, Finland.

Robie, C., Johnson, K. M., Nilsen, D., & Hazucha, J. (2001). The right stuff: Understanding cultural differences in leadership performance. Journal of Management Development, 20, 639-650.

Rotundo, M., & Sackett, P. R. (2002). The relative importance of task, citizenship, and counterproductive performance to global ratings of job performance: A policy capturing approach. Journal of Applied Psychology, 87, 66-80.

Suh, E., Diener, E., Oishi, S., & Triandis, H. C. (1998). The shifting basis of life satisfaction judgments across cultures: Emotions versus norms. Journal of Personality and Social Psychology, 74, 482-493.

Theil, H. (1987). How many bits of information does an independent variable yield in a multiple regression? Statistics and Probability Letters, 6, 107-108.

Theil, H., & Chung, C. F. (1988). Information-theoretic measures of fit for univariate and multivariate linear regressions. American Statistician, 42, 249-252.

Thomas, D. R., & Decady, Y. J. (1999). Point and interval estimates of the relative importance of variables in multiple linear regression. Unpublished manuscript, Carleton University, Ottawa, Canada.

Thomas, D. R., Hughes, E., & Zumbo, B. D. (1998). On variable importance in linear regression. Social Indicators Research, 45, 253-275.

Thompson, B., & Borrello, G. M. (1985). The importance of structure coefficients in regression research. Educational and Psychological Measurement, 45, 203-209.

Ward, J. H. (1962). Comments on “The paramorphic representation of clinical judgment.” Psychological Bulletin, 59, 74-76.

Ward, J. H. (1969). Partitioning of variance and contribution or importance of a variable: A visit to a graduate seminar. American Educational Research Journal, 6, 467-474.

Whanger, J. C. (2002, April). The application of multiple regression dominance analysis to organizational behavior variables. In J. M. LeBreton & J. W. Johnson (Chairs), Application of relative importance methodologies to organizational research. Symposium presented at the 17th annual conference of the Society for Industrial and Organizational Psychology, Toronto, Canada.

Zedeck, S., & Kafry, D. (1977). Capturing rater policies for processing evaluation data. Organizational Behavior and Human Performance, 18, 269-294.

Jeff W. Johnson is a senior staff scientist at Personnel Decisions Research Institutes (PDRI). He received his Ph.D. in industrial/organizational psychology from the University of Minnesota. He has directed and carried out many applied organizational research projects for a variety of government and private-sector clients, with a particular emphasis on the development and validation of personnel assessment and selection systems for many different jobs. His primary research interests are in the areas of personnel selection, performance measurement, research methods, and statistics.

James M. LeBreton is an assistant professor of psychology at Wayne State University in Detroit, Michigan. He received his Ph.D. in industrial and organizational psychology with a minor in statistics from the University of Tennessee. He also received his B.S. in psychology and his M.S. in industrial and organizational psychology from Illinois State University. His research focuses on the application of social cognition to personality theory and assessment, applied psychometrics, and the development of new research methods and statistics and their application to personnel selection and work motivation.