Dual Skew Divergence Loss for Neural Machine Translation · 2019. 8. 23. · (Huszr,2015) showed...

Transcript of Dual Skew Divergence Loss for Neural Machine Translation · 2019. 8. 23. · (Huszr,2015) showed...

-

ARXIV, APRIL 2021 1

Controllable Dual Skew Divergence Loss forNeural Machine Translation

Zuchao Li, Hai Zhao∗, Yingting Wu, Fengshun Xiao, and Shu Jiang

Abstract—In sequence prediction tasks like neural machine translation, training with cross-entropy loss often leads to models thatovergeneralize and plunge into local optima. In this paper, we propose an extended loss function called dual skew divergence (DSD) thatintegrates two symmetric terms on KL divergences with a balanced weight. We empirically discovered that such a balanced weight playsa crucial role in applying the proposed DSD loss into deep models. Thus we eventually develop a controllable DSD loss forgeneral-purpose scenarios. Our experiments indicate that switching to the DSD loss after the convergence of ML training helps modelsescape local optima and stimulates stable performance improvements. Our evaluations on the WMT 2014 English-German andEnglish-French translation tasks demonstrate that the proposed loss as a general and convenient mean for NMT training indeed bringsperformance improvement in comparison to strong baselines.

Index Terms—Dual Skew Divergence, Controllable Optimization, Neural Machine Translation.

F

1 INTRODUCTION

N EURAL machine translation (NMT) [1], [2], [3] hasshown remarkable performance for diverse languagepairs by using the sequence-to-sequence learning framework.Unlike the statistical machine translation (SMT) [4], whichexplicitly models linguistic features of training corpora,NMT aims at building an end-to-end model that directlytransforms a source language sequence into the target one[5], [6].

During NMT training, maximum likelihood (ML) isthe most commonly used strategy, and it maximizes thelikelihood of a target sentence conditioned on the sourcethroughout training corpus. In practice, ML-based loss isoften implemented in a word-level cross entropy form, whichhas proven to be effective for NMT modeling; however, [7]pointed out that ML training suffers from two drawbacks.First, the model is only exposed to training distribution andignores its own prediction errors during training. Second,the model parameters are optimized by a word-level lossduring training, while during inference, the model predictionis evaluated using a sequence-level metric such as BLEU [8].To mitigate such problems, several recent works focused onthe research of more effective and direct training strategies.[9] advocated a curriculum learning approach that graduallyforces the model to take its mistakes into account duringtraining predictions as it must do during inference. [10]proposed a sequence-level loss function based on errors madeduring beam search. [11] applied minimum risk training

• This paper was partially supported by National Key Research andDevelopment Program of China (No. 2017YFB0304100), Key Projects ofNational Natural Science Foundation of China (U1836222 and 61733011),Huawei-SJTU long term AI project, Cutting-edge Machine ReadingComprehension and Language Model (Corresponding author: Hai Zhao).

• Z. Li, H. Zhao, Y. Wu, F. Xiao, and S. Jiang are with the Department ofComputer Science and Engineering, Shanghai Jiao Tong University, andalso with Key Laboratory of Shanghai Education Commission for IntelligentInteraction and Cognitive Engineering, Shanghai Jiao Tong University,and also with MoE Key Lab of Artificial Intelligence, AI Institute, ShanghaiJiao Tong University. E-mail: [email protected], [email protected].

(MRT) from SMT to optimize NMT modeling directly interms of BLEU score. Some other works resorted to reinforce-ment learning based approaches [7], [12].

In this work, we introduce a novel loss called dual skewdivergence (DSD) to alleviate the issues of the original ML-based loss. It can be proved that maximizing the likelihoodis equal to minimizing the Kullback-Leibler (KL) divergence[13] KL(Q||P ) between the real data distribution Q andthe model prediction P . According to [14], minimizingKL(Q||P ) tends to find a P that covers the entire true datadistribution and ignores the rest of incorrect candidates,which leads to models that overgeneralize and generateimplausible samples during inference. As the counterpartof the original KL(Q||P ) loss, minimizing the form ofKL(P ||Q) result in P as the probability mass accordingto the model’s prediction which may correspondingly makethe model less trust the data distribution of training data.To benefit from both of these divergence measures andbalance the the overfitting and underfitting in model training,we interpolate KL(Q||P ) and KL(P ||Q) to form a newDSD loss. As the two symmetric KL-divergence terms areinterpolated by a preset constant (called balanced weight) inthe DSD loss, it is shown that such simple setting cannot wellhandle the case when model training focus may alternate ondeep and complicated models. Thus we further propose anadaptive balanced weighting approach by sampling on thedivergence, which results in the controllable DSD loss forgeneral model training scenarios.

We carry out experiments on the English-German andEnglish-French translation tasks from WMT 2014 and com-pare our models to other works with similar model sizeand matching datasets. Our early experiments indicate thatswitching to DSD loss after the convergence of ML trainingintroduces a simulated annealing like mechanism and helpsmodels quickly escape local optima. Evaluations on the testsets show that our DSD-extended models both outperformtheir ML-only counterparts and significantly outperform aseries of strong baselines.

arX

iv:1

908.

0839

9v2

[cs

.CL

] 1

7 A

pr 2

021

-

ARXIV, APRIL 2021 2

2 NEURAL MACHINE TRANSLATIONNMT generally adopts an encoder-decoder model architec-ture. These architectures roughly fall into three categories:RNN-based [5], CNN-based [15], and Transformer-basedmodels [3]. In our experiments for this work, we includerelevant models for these three categories. This section willgive a brief introduction to these models.

In an RNN-based NMT model, the encoder is a bidi-rectional recurrent neural network (RNN) such as one thatuses Gated Recurrent Units (GRUs) [16] or a Long Short-Term Memory (LSTM) network [17]. The forward RNN readsan input sequence x = (x1, ..., xm) from left to right andcalculates a forward sequence of hidden states (

−→h 1, ...,

−→hm)

as the representation of the source sentence. Similarly, thebackward RNN reads the input sequence in the oppositedirection and learns a backward sequence (

←−h 1, ...,

←−hm). The

hidden states of the two RNNs−→h i and

←−h i are concatenated

to obtain the source annotation vector hi = [−→h i,←−h i]

T as theinitial state of the decoder.

The decoder is a forward RNN that predicts a correspond-ing translation y = (y1, ..., yn) step by step. The translationprobability can be formulated as follows:

p(yj |y

-

ARXIV, APRIL 2021 3

Obviously, DKL(Q||P ) is not identical to its inverse formDKL(P ||Q). DKL(Q||P ) and DKL(P ||Q) on N trainingsamples can be written as:

DKL(Q||P ) =n∑

i=1

Q(xi) logQ(xi)

P (xi),

DKL(P ||Q) =N∑

i=n

P (xi) logP (xi)

Q(xi).

In minimizing the DKL(Q||P ) divergence, since Q is a truedata distribution, it is one-hot when without smoothing, thatis, the correct place is 1 and the wrong place is 0. The meaningis to drive the model’s prediction distributed similarlyin the correct place while ignoring all the wrong places.Q(x) in DKL(Q||P ) as a probability mass is determinedby the true data distribution in DKL(Q||P ) formula. Inother words, the model’s focus on the similarity of thedistribution is determined by the probability quality. While inminimizing theDKL(Q||P ) divergence, the model’s focus onthe distribution similarity is determined by the distribution ofmodel’s prediction P (x) instead of the true data distribution.

In the training of NMT model, the model is essentiallyrequired to predict a high probability on the correct word asmuch as possible, which can be done through DKL(Q||P ).Meanwhile, there are some facts that need to be considered inNMT. First, the presence of noise in the training data samplesleads to inaccuracy of Q and the existence of multipletarget candidates in translation leads to inadequate of Q.Overemphasizing the distribution similarity of the correctposition will lead to overfitting or poor generalization ability.Second, if we completely rely on the predictive distributionas the probability mass in DKL(P ||Q), it is likely that themodel cannot converge, because the model cannot make acorrect estimate of the data distribution in the early trainingperiod. In general, the two different objectives have their ownadvantages and disadvantages, so it is hard to say which isactually better. Despite this, their differences motivate us tofind a more proper loss function.

3.4 Skew DivergenceThe above analysis shows that DKL(P ||Q) may be also agood alternative loss function for NMT training. However,DKL(P ||Q) is only well-defined when Q is not equal tozero, which is not guaranteed in NMT training. To overcomethe this, we will use two different approximation functionsinstead. One such approximation function is the α-skewdivergence [18]:

sα(P,Q) = DKL(P ||αP + (1− α)Q),where α controls the degree to which the function approxi-mates DKL(P ||Q), and 0 ≤ α ≤ 1.

By assigning a small value to α, we can simulate the be-havior of minimizing DKL(P ||Q) with an α-skew divergenceapproximation. In our experiments, α is set to to a constant0.01.

3.5 Dual Skew DivergenceFinally, we introduce a new loss with the aim of retainingthe strengths of the ML objective while minimizing its

weaknesses. In order to obtain a symmetrical form of theloss, we also approximate DKL(Q||P ) with sα(Q,P ). Byinterpolating between both directions of α-skew divergence,we derive the new loss function, which we refer to as dualskew divergence (DSD), below. The interpolated DSD functionis given by

DDS = βsα(Q,P ) + (1− β)sα(P,Q), (2)in which β is called balanced weight as the interpolationcoefficient, and 0 ≤ β ≤ 1.

In terms of notations in Eq.(3.1), we rewrite Eq.(2) in acomputationally implementable form as below:

JDS = −1

n

n∑

i=1

[βyi log((1− α)ŷi + αyi)

−(1− β)ŷi log(ŷi)+(1− β)ŷi log((1− α)yi + αŷi)],

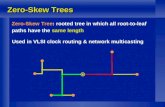

where n is the length of target sequence, and the term aboutH(Q) is omitted since it does not affect the gradient asdiscussed above. Note that in addition to the above equation,we also add a small constant (10−12 in our implementation)to each log term for numerical stability. Our derivation ofthe proposed DSD loss is summarized in the step by stepdiagram of Figure 1.

In machine translation, the DSD loss is ideally usedto reduce overfitting of the model from the target in thetraining set and robustness losing as compared to word-levelML training. In the mainstream ML training in machinetranslation, a smoothing mechanism [19] is usually adoptedfor similar purposes. There is an inconsistency with thereality of machine translation since ML+smoothing actuallygives all incorrect tokens an equal probability of being correctdue to special phenomena like synonyms and synonyms. As-signing equal probability to incorrect tokens is also referredto as negative diversity ignorance problem, which is proposedin [20]. Compared with the D2GPo loss in [20], althoughthe motivation is similar, the D2GPo loss mainly relieson the prior knowledge from the pre-training embeddingfor incorrect tokens, which provide a more flexible andrealistic distribution; while the DSD loss does not rely onthe external distribution at all, it only relies on the model’sown estimation of the predicted token distribution. In DSDloss, symmetrical loss calculations are built, one is optimizedbased on the correct token, and the other is optimized basedon the model’s own estimation. Through adversarial-liketraining between loss items, the ultimate goal is achieved.

3.6 Controllable Dual Skew DivergenceOur proposed dual skew divergence consists of two terms:symmetric skew divergence sα(Q,P ) and sα(P,Q). The firstterm is intended to focus the model on the correct wordonly, while the second term uses the focus distribution,estimated by itself, to learn the distribution of all words.These two influence each other and sometimes conflict. Whensα(Q,P ) is weighted more heavily, the model bases itspredictions more on the true distribution Q of the trainingdata, and when sα(P,Q) is weighted more heavily, themodel assigns the weight of the loss of each word based onits own prediction distribution. This conflict and influence

-

ARXIV, APRIL 2021 4

∑

e(t)

β(t)

+

+ +

-

P

set point

Feedback

(a) (b)

cDSD Loss

I

∑

Kp1 + exp e(t)

−Kit∑

j=0

e(j)

1

Kp1 + exp e(t)

−Kit∑

j=0

e(j)

1

Symmetry

Approximation

Interpolation

Expansion

Skew Divergence

Kullback-Leibler Divergence

Dual Skew Divergence

PI ControlControllable Dual Skew Divergence

DKL(Q||P ) = Ex∼Q[logQ(x)− logP (x)]

DKL(P ||Q) = Ex∼P [− logQ(x) + logP (x)]

sα(Q,P ) = DKL(Q||αQ+ (1− α)P )

sα(P,Q) = DKL(P ||αP + (1− α)Q)

DDS = βsα(Q,P ) + (1− β)sα(P,Q)

JDS = −1

n

n∑

i=1

[βyi log((1−α)ŷi+αyi)−(1−β)ŷi log(ŷi)+(1−β)ŷi log((1−α)yi+αŷi)]

JCDS = −1

n

n∑

i=1

[β(t)yi log((1−α)ŷi+αyi)−(1−β(t))ŷi log(ŷi)+(1−β(t))ŷi log((1−α)yi+αŷi)]

1

DKL(Q||P ) = Ex∼Q[logQ(x)− logP (x)]

DKL(P ||Q) = Ex∼P [− logQ(x) + logP (x)]

sα(Q,P ) = DKL(Q||αQ+ (1− α)P )

sα(P,Q) = DKL(P ||αP + (1− α)Q)

DDS = βsα(Q,P ) + (1− β)sα(P,Q)

JDS = −1

n

n∑

i=1

[βyi log((1−α)ŷi+αyi)−(1−β)ŷi log(ŷi)+(1−β)ŷi log((1−α)yi+αŷi)]

JCDS = −1

n

n∑

i=1

[β(t)yi log((1−α)ŷi+αyi)−(1−β(t))ŷi log(ŷi)+(1−β(t))ŷi log((1−α)yi+αŷi)]

1

DKL(Q||P ) = Ex∼Q[logQ(x)− logP (x)]

DKL(P ||Q) = Ex∼P [− logQ(x) + logP (x)]

sα(Q,P ) = DKL(Q||αQ+ (1− α)P )

sα(P,Q) = DKL(P ||αP + (1− α)Q)

DDS = βsα(Q,P ) + (1− β)sα(P,Q)

JDS = −1

n

n∑

i=1

[βyi log((1−α)ŷi+αyi)−(1−β)ŷi log(ŷi)+(1−β)ŷi log((1−α)yi+αŷi)]

JCDS = −1

n

n∑

i=1

[β(t)yi log((1−α)ŷi+αyi)−(1−β(t))ŷi log(ŷi)+(1−β(t))ŷi log((1−α)yi+αŷi)]

1

DKL(Q||P ) = Ex∼Q[logQ(x)− logP (x)]

DKL(P ||Q) = Ex∼P [− logQ(x) + logP (x)]

sα(Q,P ) = DKL(Q||αQ+ (1− α)P )

sα(P,Q) = DKL(P ||αP + (1− α)Q)

DDS = βsα(Q,P ) + (1− β)sα(P,Q)

JDS = −1

n

n∑

i=1

[βyi log((1−α)ŷi+αyi)−(1−β)ŷi log(ŷi)+(1−β)ŷi log((1−α)yi+αŷi)]

JCDS = −1

n

n∑

i=1

[β(t)yi log((1−α)ŷi+αyi)−(1−β(t))ŷi log(ŷi)+(1−β(t))ŷi log((1−α)yi+αŷi)]

1

DKL(Q||P ) = Ex∼Q[logQ(x)− logP (x)]

DKL(P ||Q) = Ex∼P [− logQ(x) + logP (x)]

sα(Q,P ) = DKL(Q||αQ+ (1− α)P )

sα(P,Q) = DKL(P ||αP + (1− α)Q)

DDS = βsα(Q,P ) + (1− β)sα(P,Q)

JDS = −1

n

n∑

i=1

[βyi log((1−α)ŷi+αyi)−(1−β)ŷi log(ŷi)+(1−β)ŷi log((1−α)yi+αŷi)]

JCDS = −1

n

n∑

i=1

[β(t)yi log((1−α)ŷi+αyi)−(1−β(t))ŷi log(ŷi)+(1−β(t))ŷi log((1−α)yi+αŷi)]

1

DKL(Q||P ) = Ex∼Q[logQ(x)− logP (x)]

DKL(P ||Q) = Ex∼P [− logQ(x) + logP (x)]

sα(Q,P ) = DKL(Q||αQ+ (1− α)P )

sα(P,Q) = DKL(P ||αP + (1− α)Q)

DDS = βsα(Q,P ) + (1− β)sα(P,Q)

JDS = −1

n

n∑

i=1

[βyi log((1−α)ŷi+αyi)−(1−β)ŷi log(ŷi)+(1−β)ŷi log((1−α)yi+αŷi)]

JCDS = −1

n

n∑

i=1

[β(t)yi log((1−α)ŷi+αyi)−(1−β(t))ŷi log(ŷi)+(1−β(t))ŷi log((1−α)yi+αŷi)]

1

DKL(Q||P ) = Ex∼Q[logQ(x)− logP (x)]

DKL(P ||Q) = Ex∼P [− logQ(x) + logP (x)]

sα(Q,P ) = DKL(Q||αQ+ (1− α)P )

sα(P,Q) = DKL(P ||αP + (1− α)Q)

DDS = βsα(Q,P ) + (1− β)sα(P,Q)

JDS = −1

n

n∑

i=1

[βyi log((1−α)ŷi+αyi)−(1−β)ŷi log(ŷi)+(1−β)ŷi log((1−α)yi+αŷi)]

JCDS = −1

n

n∑

i=1

[β(t)yi log((1−α)ŷi+αyi)−(1−β(t))ŷi log(ŷi)+(1−β(t))ŷi log((1−α)yi+αŷi)]

1

Fig. 1. (a) The derivation of our proposed DSD and cDSD loss function. (b) PI controller in cDSD loss.

between the two divergences makes the training somewhatadversarial. To balance the two terms, the balanced weight βis introduced to control the training process.

Since the training is dynamic, a fixed β in the dualskew divergence loss cannot effectively adapt to the trainingfor deep and hyperparameter-sensitive CNN-based andTransformer-based models. The key challenge in applyingDSD loss to deeper and hyperparameter-sensitive modelslies in the difficulty of tuning the β of the divergence termduring model training. Inspired by control systems andControlVAE [21], we further propose a controllable dualskew divergence (cDSD) loss with a feedback control toaddress this inconvenience in the original DSD.

Specifically, in the cDSD loss, we replaced the balanceweight β with a time step related version β(t). Duringtraining, we sample the divergence term sα(Q,P ) at eachtraining step t. We denote this sample as u(t); and tune β(t)with a controller to stabilize the divergence at a desired valueu∗, called the set point. The cDSD loss becomes:

JCDS = −1

n

n∑

i=1

[β(t)yi log((1− α)ŷi + αyi)

−(1− β(t))ŷi log(ŷi)+(1− β(t))ŷi log((1− α)yi + αŷi)],

PID control [22] is a basic (and the most prevalent) form ofthe feedback control algorithm in a wide range of industrialand software performance controls. The general form of thePID controller is defined as:

β(t) = Kpe(t) +Ki

∫ t

0

e(τ)dτ +Kdde(t)

dt,

in which an error e(t) between the set point and the value intime step t is calculated to potentially trigger a correction andreduce the error through the output of the controller β(t);Kp,Ki, and Kd are the coefficients for the P term (changes

with the error), I term (changes with the integral of theerror) and D term (changes with the derivative of the error),respectively.

Following existing practices in the control of noisysystems, we adopt a variant of the PID controller: a nonlinearPI controller for β(t) tuning, which eliminates the use of thederivative (D) term in our controller. Our controller can beexpressed as follows:

β(t) = min(βmax,Kp

1 + exp(e(t))−Ki

t∑

j=0

e(j) + βmin),

where Kp and Ki are both constants. With β(t), when anerror is large and positive, sα(Q,P ) is below the set point;that is, the likelihood loss of the model on the training setis very small, and the model is very likely to overfit. Thus,the first term in controller approaches 0, leading to a lowerβ(t) that encourages the model focus on the word estimatedby the model itself instead of the distribution given by thetraining data . When the error is large and negative, thefirst term approaches its maximum value (i.e. Kp) and theresultant higher β(t) value leads to the model focusing moreon the correct word given by the training data. For thesecond term, errors within T training steps (we use T = 1in this work) are summed to create a progressively strongercorrection. βmin and βmax are application-specific constantsthat effectively constrain the range within which β(t) canvary.

As discussed in [21], constants Kp and Ki in the PIcontroller should guarantee that error reactions are suffi-ciently smooth to allow for gradual convergence. Followingthe settings in ControlVAE [21], in this work, we also letKp = 0.01 and Ki = 0.0001. For the set point of sα(Q,P ),its upper bound is the divergence value when the modelconverges with fixed β = βmin in the original DSD loss.Similarly, its lower bound is the divergence value whenβ = βmax in the original DSD loss. Since the value of set point

-

ARXIV, APRIL 2021 5

is highly customizable to satisfy its different applications,we use the same divergence value that ML’s cross entropyloss with label smoothing calculates as the set point in ourempirical study.

To conform to the training scenarios of deeper andparameter-sensitive models, we propose the cDSD loss.Although model training with DSD loss is not adversarial,the two KL terms in DSD play an adversarial role for themodel in essential. Similar to the adversarial training in aGAN [23] model, it may be challenging to train a stablemodel, especially when there are many parameters that needto be optimized. The reason is that the training process isinherently unstable, resulting in the simultaneous dynamictraining of two competing items (models/objectives). In otherwords, it is a dynamic system in which the optimizationprocess seeks an equilibrium between two forces rather thana minimum. Since the skew divergence in DSD is a term thatintroduces smoothing or noise to the target, when we keepthis noise term at a close to a constant value, the model canrun in a stable manner. PI control is to control the loss ofsmoothing within a reasonable range, which is why cDSDloss is more suitable for deeper models. In the DSD loss, thefixed β makes it impossible to adjust according to the changesin the training of the model; an inappropriate smoothing lossvalue will make the training unstable.

4 EXPERIMENTS4.1 Data Preparation

We perform all experiments on data from the shared task ofWMT 2014 and report results on the English-German andEnglish-French translation tasks. The translation quality ismeasured by case-sensitive 4-gram BLEU score [8], and weuse the sign test [24] to test the statistical significance of ourresults.

For the English-German task, the training set consistsof 4.5M sentence pairs with 91M English words and 87MGerman words. For the English-French task, the training setcontains 39M sentence pairs with 988M English words and1131M French words. The models are evaluated on the WMT2014 test set news-test 2014, and the concatenation of news-test2012 and news-test 2013 is used as the development set.

The preprocessing on both training sets includes a jointbyte pair encoding [25] with 32K merge operations aftertokenization. The final joint vocabulary sizes are around37K and 37.2K for the English-German and English-Frenchtranslation tasks, respectively. Every out-of-vocabulary wordis replaced with a special 〈UNK〉 token.

4.2 Models and Training Details

We train both a baseline SMT system and serveral NMTbaseline systems. For the SMT baseline, we use the phrase-based SMT system MOSES [26]. The log-linear model of MOSESis trained by minimum error rate training (MERT) [27],which directly optimizes model parameters with respectto evaluation metrics. Our SMT baseline is trained with thedefault configurations in MOSES, and it is trained togetherwith a trigram language model trained on the target languageusing SRILM [28]. For the RNN-based NMT baseline, we usethe model architecture of the attention-based RNNSearch [5].

Our RNN-based NMT baseline model is generally similarto [5], except we apply an input feeding approach, and theattention layer is built on top of a LSTM layer instead of aGRU layer. For the CNN-based NMT baseline, we use thesame architecture of model ConvS2S which is introducedin [15]. For the Transformer-based NMT baseline, we adoptthe Transformer model presented in [3] without other modelstructure changes.

As in our RNN-based baseline model, each direction ofthe LSTM encoder and the LSTM decoder has dimension1000. The word embedding and attention sizes are both setto 620. The batch size is set to 128, and no dropout is used forany model. The CNN-based baseline has 20 convolutionallayers in both the encoder and the decoder, and both usekernels of width 3 and hidden size 512 throughout. In theTransformer-based model, there are two commonly usedparameter settings: Transformer-base and Transformer-big. Wechoose the best performing Transformer-big for experiments,consisting of 6 layers each for the encoder and decoder with ahidden size of 1024, 16 attention heads, and 4096-dimensionalfeed-forward inner-layers.

The models trained with our DSD loss and cDSD loss arereferred to DSD-NMT and cDSD-NMT, respectively, hereafter.To apply the proposed DSD loss, we adopt a hybrid trainingstrategy. To provide a reliable initialization, we start trainingthe model with cross entropy loss and then switch to theDSD loss at different switching points. The training set isreshuffled at the beginning of each epoch. 8 Nvidia TeslaV100 GPUs are used to train all the NMT models. For theEnglish-German task, training lasts for 9 epochs in total. Weuse the Adam optimizer for the first 5 epochs with a learningrate of 3.0 × 10−4; and then switch to plain SGD with alearning rate of 0.1. At the beginning of epoch 8, we decaythe learning rate to 0.05. For the English-French task, themodels are trained for 4 epochs. The Adam optimizer and alearning rate of 3.0× 10−4 are used for the first 2 epochs. Wethen switch to SGD with a learning rate of 0.1; and finallydecay the learning rate to 0.05 at the beginning of epoch 4.In the training of cDSD-NMT, beta(0) is initialized to 1, andthe set point for divergence sα(Q,P ) is set to 35 and 33 forthe English-German and English-French tasks, respectively.βmin is set to 0.85, and βmax is set to 0.95 for both tasks.

To demonstrate the source of improvement with ourapproach, we mainly use the RNN-based model as the basisof analysis, we therefore compare our DSD-NMT model toseveral important RNN-based NMT systems with the samedataset and similar model size.

• RNNSearch-LV [29]: a modified version ofRNNSearch based on importance sampling,which allows the model to have a very large targetvocabulary without any substantial increase incomputational cost.

• Local-Attn [6]: a system that applied a local attentionmechanism that focuses only on a small subset of thesource positions when predicting each target word.

• MRT [11]: a system optimized by a loss functionfor minimum risk training. The model parametersare directly optimized with respect to the evaluationmetrics.

• Bahdanau-LL [12]: this model closely followed the

-

ARXIV, APRIL 2021 6

architecture of [5] with ML training and achieved ahigher performance by annealing the learning rateand penalizing insufficiently long output sequencesduring beam search.

• Bahdanau-AC+LL [12]: a neural sequence predictionmodel that combines the actor-critic from reinforce-ment learning with the original ML training.

4.3 Effect of Balanced Weight βAs shown in Section 3.5, the balanced weight β controls thedegree of conservativeness of the model. When β is close toone, the loss function behaves more like DKL(Q||P ), whichtends to predict the correct target on the training datasetas accurate as possible. When β is close to zero, the lossfunction behaves more like DKL(P ||Q), which prefers toconservatively ignore words that are considered correct inthe training set and to choose correct words that matchthe model’s predictions. In RNN-based DSD-NMT, in orderto find an optimal value of β, we study the effect of βon the translation quality of the English-German task. Inaddition, we also include the results of RNN-based cDSD-NMT for better analysis. Table 1 reports the BLEU scoreswith different β in RNN-based DSD-NMT and cDSD-NMTon the development set with greedy search. When onlytaking the DSD-NMT results into consideration, the resultsseem to show that the model trained with β = 0 performsthe best; and suggest that the skew inverse KL divergenceoutperforms the interpolation form in our loss function-applying strategy, but when comparing DSD-NMT with thatwith cDSD-NMT, we see that cDSD-NMT obtains slightlybetter results with the same model. On the one hand, thisshows that dynamic β adjustment has advantages over thefixed β setting, and on the other hand, it also shows thatthe interpolation form is actually a more general loss thana single divergence. It is worth noting that when β = 1,optimizing DSD is actually equivalent to MLE loss, and aperformance improvement of 0.4 is still observed comparedto the baseline. The source of these improvements may beattributed to the Adam-SGD switching training method. Thisswitching of optimizer and learning rate has a possibility ofbreaking optimization from a local optimum.

TABLE 1BLEU scores with different β in RNN-based DSD-NMT and cDSD-NMT

on English-German dev set.

Model Baseline DSD cDSDβ = 1 β = 0.5 β = 0

BLEU 20.51 20.91 21.34 21.72 21.96

4.4 Switching Point and BLEUSince the DSD loss contains two adversarial loss items, ifDSD is leveraged in the early training stage when the modelhas not yet converged yet, the model may oscillate at a lowerperformance level, which will affect the convergence speedand final convergence performance. Therefore, we first usethe ML training model to achieve rapid convergence andthen switch to DSD loss for better performance finetuning.Since we start the training of DSD-NMT and cDSD-NMT

0 50K 100K 150K 200K 250K 300K 350K 400K 450K 500KSteps

4

8

12

16

20

24

BLEU

Sco

re

Baseline BLEU100K-BLEU200K-BLEU245K-BLEU313K-BLEU

40

60

80

100

120

140

160

180

200

Trai

ning

Los

s

Xent lossDSD loss

Fig. 2. BLEU scores and training loss on English-German dev set withDSD switching after 100K, 200K, 245K and 313K steps (β = 0, greedysearch).

TABLE 2BLEU scores with different model architectures and loss functions on the

English-German dev set.

RNN-based CNN-based Transformer-based

Baseline 20.11 22.64 24.76DSD 21.32 22.60 24.55cDSD 21.44 22.98 25.13

with an initial ML-trained model, the model performancewith this training strategy will be influenced by when themodel switches to the new loss function. To intuitively showthe relationship between the switching point and BLEU,we plot the curves of BLEU score and training loss againsttraining steps from the actual training process of RNN-basedDSD-NMT for the English-German task with greedy searchat different switching positions (after 100K, 200K, 245K, and313K steps) in Figure 2, . From the figure, it shows that whenswitching to our new loss function, the BLEU scores of bothsettings are improved. In particular, there is a more than onepoint improvement at steps 245K and 313K. Comparing allthe training curves shows that a better DSD switching pointshould be located around the convergence of the standardML training. After switching to DSD, the cross entropy loss(which should theoretically be minimized) actually increases,as do the BLEU scores, which demonstrates that cross entropyfails to reflect translation quality near the end of training.Sufficiently long time training may cause the model toplunge into a local optimum, but the loss switching operationresembles a simulated annealing mechanism1 and helps themodel move to a better optimum.

4.5 DSD and cDSD in CNN-based and Transformer-based Models

In order to illustrate the effects of DSD and cDSD on differentbaselines, we conducted experiments on English-German

1. We also tried an automatically loss function switching strategysimilar to simulated annealing by switching the loss according to thegrowth rate of BLEU; however, switching DSD back to cross entropydoes not further increase BLEU over the original score.

-

ARXIV, APRIL 2021 7

TABLE 3Performance on the WMT14 English-German task

Model Method BLEU

RNNsearch-LV ML+beam 19.40Local-Attn ML+beam 20.90MRT MRT+beam 20.45Baseline-SMT MERT+greedy 18.83

MERT+beam 19.91

RNN-based NMT

ML+greedy 20.89ML+beam 22.13ML+deep 24.64DSD+greedy 22.02++

DSD+beam 22.60+

DSD+deep 25.00++

CNN-based NMT ML+beam 26.43cDSD+beam 26.72++

Transformer-based NMT ML+beam 28.32cDSD+beam 28.64+

TABLE 4Performance on the WMT14 English-French task

Model Method BLEU

RNNsearch-LV ML+beam 34.60MRT MRT+beam 34.23Bahdanau-LL ML+greedy 29.33

ML+beam 30.71Bahdanau-AC+LL ML+AC+greedy 30.85

ML+AC+beam 31.13

Baseline-SMT MERT+greedy 31.55MERT+beam 33.82

RNN-based NMT

ML+greedy 32.10ML+beam 34.70DSD+greedy 33.56++

DSD+beam 35.04+

CNN-based NMT ML+beam 41.44cDSD+beam 41.72+

Transformer-based NMT ML+beam 41.79cDSD+beam 42.00+

translation; and performed general ML training, DSD train-ing, and cDSD training using the three baselines. The resultsare shown in Table 2. From the results, first, cDSD is generallystronger than DSD in all three model architectures. In RNN-based NMT, the gap between cDSD and DSD is relativelysmall. Therefore, in order to simplify training, we only useDSD on the RNN-based model in subsequent experiments.On the CNN-based and Transformer-based NMT models,the results of using DSD are even inferior to the baseline, butthe cDSD continues to bring improvements. We speculatethat the reason is that as the models deepened, the structurebecame more complex, making the training process moresensitive, so the static β in DSD loss was no longer applicable.Therefore, we used the cDSD loss for training the CNN andTransformer models.

4.6 Results on Test SetsThe results2 on the English-German and English-Frenchtranslation test sets are reported in Tables 3 and 4. Forprevious works, the best BLEU scores of single modelsfrom the original papers are listed. From Tables 3 and 4,we see that RNN-based DSD-NMT model outperforms allthe other models and our own baselines that use standardcross entropy loss with greedy search or beam search. Forthe English-German task, Table 3 shows that even our RNN-based NMT baseline models achieve better performance thanmost of the listed systems, though this result may be due tothe use of joint BPE, input feeding, and the mixed trainingstrategy using the Adam and SGD algorithms. When usinggreedy search, our DSD model outperforms the SMT andNMT baselines by 3.19 and 1.13 BLEU , respectively. This iseven better than the best listed system, Local-Attn [6], whichuses beam search. When using beam search, RNN-basedDSD-NMT outperforms the SMT baseline with improvementof 2.69 BLEU points; however, it only provides a 0.47 BLEUincrease over the NMT baseline. We also test our DSD loss ona deep NMT model where the encoder and decoder are bothstacked 4-layer LSTMs. The result indicates a comparativelyless 0.36 point gain, which illustrates both the usefulness ofour DSD loss and that the improvement decreases as thedepth increases.

For the English-French task, with greedy search, theperformance of DSD-NMT is still superior to other systemslisted in Table 4. It achieves an increase of 1.46 and 2.01 BLEUpoints compared to the NMT and SMT baselines, respectively.With beam search, our DSD-NMT outperforms the SMT andNMT baselines by 1.22 and 0.34 BLEU, respectively. Bothtables’ sigh test results also indicate that DSD-NMT indeedsignificantly enhances the translation quality in comparisonbaselines trained with only cross entropy loss.

The CNN-based and Transformer-based NMT models,whose network depth reaches 20 and 12 layers, respectively,have stronger performance than the RNN-based NMT model.Additionally, on these strong baselines, our cDSD loss stillbrings a stable improvement in translation performance forboth English-German and English-French, indicating that theDSD we proposed is a general loss for NMT model training.

5 ABLATION STUDY5.1 Greedy and Beam SearchBeam search is a commonly used method to jump out of thelocal optimal in the inference stage. In order to compare thesynergy between beam search and DSD loss during training,we plot the variation curves of BLEU scores for the proposedDSD model and the NMT baseline with different beam sizeson the English-German development set in Figure 3. Withincreasing beam size, the BLEU score also increases, andthe best score is given by the beam size of 10 for both theNMT baseline and DSD-NMT. When beam search is used,however, the margin between the proposed DSD methodand ML training becomes smaller, as beam search allowsfor non-greedy local decisions that can potentially lead to asequence with a higher overall probability, therefore is also

2. “++” indicates a statistically significant difference from the NMTbaseline at p < 0.01 and “+” at p < 0.05.

-

ARXIV, APRIL 2021 8

TABLE 5Translation performance for different beam widths on English-German

and English-French test sets.

Model Dataset B=1 B=3 B=5 B=25 B=100

Baseline En-De 27.48 28.19 28.32 27.22 25.27En-Fr 41.18 41.73 41.79 41.55 39.70

+cDSD En-De 28.00 28.46 28.64 27.87 26.11En-Fr 41.79 41.92 42.00 41.91 40.45

tolerable to temporary mistakes. And even with the help ofbeam search, DSD loss can still bring improvement, whichshows the effectiveness of DSD training in jumping out ofthe local optimum.

1 2 3 4 5 6 7 8 9 10Beam Size

20.0

20.5

21.0

21.5

22.0

BLEU

Sco

re

BaselineDSD

Fig. 3. BLEU scores with different beam sizes on English-German devset(β = 0.5).

Since the neural machine translation model only penalizesthe model with the top-1 prediction loss during training, theessence of DSD loss training is to optimize the loss in theopposite direction, so that the model does not trust themodel’s top-1 prediction too much, thereby improving thetop-1 prediction in the inference stage. But in the inferencestage, due to the adoption of beam search, top-n predictionare considered, thus the advantage of DSD on top-1 isreduced. In order to verify our hypothesis, we conducted ex-periments on the Transformer-based NMT model optimizedby ML+smoothing and cDSD under different beam sizes,respectively.

Table 5 present the performance comparison. When thebeam width is 1 (greedy search), we found that our cDSDtraining has the most obvious effect improvements comparedto ML+smoothing training, which verifies our hypothesis inwhich cDSD can more effectively improve the prediction oftop-1, thereby improving the final translation performance.When the beam size increases, because beam search hasthe ability to tolerate top-1 errors, the advantage of cDSDover ML+smoothing becomes smaller. Additionally, thetranslation performance degrades with large beam widthdue to the increasing beam width leads to sequences thatare disproportionately based on early, very low probabilitytokens that are followed by a sequence of tokens with higher(conditional) probability, which is consistent with previous

TABLE 6BLEU scores with models enhanced with back-translation on the

English-German test set.

B=1 B=5 ∆

Baseline 28.55 29.37 0.82cDSD 29.15 29.70 0.55

reports of similar performance degradation [30], [31], [32].

5.2 Improvement over Back-translationBack-translation is one of the most powerful enhancementstrategies for machine translation. We also performed experi-ments on a stronger baseline enhanced with back-translationto demonstrate the effects of the DSD training rather thanthe variance of the model. We sampled 4.5M monolingualsentences from German news-crawl 2019 for back-translation.We first used the original WMT14 4.5M parallel corpus totrain a backward German→English translation model, andthen based on the obtained backward translation model, the4.5M monolingual was decoded and then combined with theparallel data for English→German translation training. Inthe experiment, two settings: greedy search (B=1)and beamsearch (B=5) are adopted respectively (the respective beamwidth is also used in the backward decoding), and the finalresults are shown in Table 6.

After enhanced with back-translation, the performanceof the baseline model has been improved significantly forboth greedy search and beam search. On this strong baseline,cDSD has achieved similar improvements as on the originalbaseline. The performance difference between greedy searchand beam search under cDSD training has become smaller,which shows that cDSD can improve the prediction of top-1,and further verify the source of improvements with cDSDtraining. In addition, the consistent improvement under avariety of experimental settings shows that the improvementof model performance does not come from the variance oftraining, but the better model robustness.

5.3 Comparison between DSD and Optimizer SwitchFinetuningIn the use of DSD loss, we first use XENT+smoothingand Adam optimizer for fast convergence training, andthen switch to DSD to train with the SGD optimizer. Thisoptimizer switch finetuning also has the potential to makethe model jump out of the local optimum. In order to showthat the enhancement of DSD loss comes from the actual lossdesign rather than the optimizer switching finetuning, weintroduce another baseline - OSF, in which the same steps areused to switch the Adam to SGD optimizer, but the originalXENT+smoothing loss function is maintained.

TABLE 7Difference of cDSD training and Optimizer Switch Finetuning (OSF) on

English-German test set.

Model Baseline cDSD OSF

BLEU 28.32 28.64 28.35

-

ARXIV, APRIL 2021 9

As shown in the comparison in Table 7, OSF has a veryslight performance improvement over the baseline, but it isnot significant, while the cDSD loss is relatively improved.Therefore, we can conclude that the source of DSD loss doesnot depend on the optimizer switch finetuning, but the betterand robust convergence.

6 RELATED WORKStandard NMT systems commonly adopt word-level crossentropy loss to learn model parameters; however, this typeof ML learning has been shown to be a suboptimal methodfor sequence model training [7]. A number of recent workshave sought different training strategies or improvements forthe loss function. One of these approaches was by [9], whoproposed gently changing the training process from a fully-guided scheme that uses true previous tokens in predictionto a scheme that mostly uses previously generated tokensinstead. Some other works focused on the study of sequence-level training algorithms. For instance, [11] applied minimumrisk training (MRT) in end-to-end NMT. [10] introduced asequence-level loss function in terms of errors made duringthe beam search. Another sequence-level training algorithm,proposed by [7], directly optimized the evaluation metricsand was built on the REINFORCE algorithm. Similarly, witha reinforcement learning-style scheme, [12] introduced a criticnetwork to predict the value of an output token; given thepolicy of an actor network. This results in a training procedurethat is much closer in practice to the test phase; and allowsthe model to directly optimize for a task-specific score suchas BLEU. [33] presented a comprehensive comparison ofclassical structured prediction losses for seq2seq models.Different from these works, we intend to optimize thetraining loss while allowing for easy implementation andnot introducing more complexities.

In terms of using symmetric KL divergence as loss, [34],[35] improves ML training with additional symmetric KLdivergence as a smoothing-inducing adversarial regularizerto achieve more robust fine-tuning purposes. Different fromboth of the above motivations, in this work, we proposea general-purpose loss approach to cope with sequence-to-sequence tasks like NMT rather than providing an auxiliarydistance measure. We give a new and effective loss forsuch broad range of tasks by integrating two symmetricKL-divergence terms with clear enough model training focus.Our suggested solution in this work is more general andmore convenient for use. In terms of implementation, the lossform of [36] is somewhat similar to ours. By introducing twoKullback-Leibler divergence regularization terms into theNMT training objective, a novel model regularization methodfor NMT training is proposed to improve the agreementbetween translations generated by left-to-right (L2R) andright-to-left (R2L) NMT decoders. Unlike their aims, our DSDloss is to improve the distribution of predicted tokens ratherthan improve the agreement problem between bidirectionaldecoding.

7 CONCLUSIONThis work proposes a general and balanced loss function forNMT training called dual skew divergence. Adopting a hybrid

training strategy with both cross entropy and DSD training,we empirically verify that switching to a DSD loss after theconvergence of ML training gives an effect similar to that ofsimulated annealing and allows the model move to a betteroptimum. While the proposed DSD loss effectively enhancesthe RNN-based NMT, it suffers from unsatisfactorily balanc-ing two symmetrical loss terms for deeper models like theTransformer, thus we further propose a controllable DSD andmitigate this issue. Our proposed DSD loss enhancementmethods improve our diverse baselines, demonstrating avery general loss improvement.

REFERENCES

[1] N. Kalchbrenner and P. Blunsom, “Recurrent continuous translationmodels,” in Proceedings of the 2013 Conference on Empirical Methodsin Natural Language Processing, Seattle, Washington, USA, October2013, pp. 1700–1709.

[2] I. Sutskever, O. Vinyals, and Q. V. Le, “Sequence to sequencelearning with neural networks,” in Advances in Neural InformationProcessing Systems, 2014, pp. 3104–3112.

[3] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N.Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,”in Advances in Neural Information Processing Systems, 2017, pp. 6000–6010.

[4] P. Koehn, F. J. Och, and D. Marcu, “Statistical phrase-basedtranslation,” in Proceedings of the 2003 Conference of the NorthAmerican Chapter of the Association for Computational Linguistics onHuman Language Technology - Volume 1, Stroudsburg, PA, USA, 2003,pp. 48–54.

[5] D. Bahdanau, K. Cho, and Y. Bengio, “Neural machine translationby jointly learning to align and translate,” in Proceedings of 3rdInternational Conference on Learning Representations, 2015.

[6] T. Luong, H. Pham, and C. D. Manning, “Effective approaches toattention-based neural machine translation,” in Proceedings of the2015 Conference on Empirical Methods in Natural Language Processing,Lisbon, Portugal, September 2015, pp. 1412–1421.

[7] M. Ranzato, S. Chopra, M. Auli, and W. Zaremba, “Sequence leveltraining with recurrent neural networks,” in Proceedings of 4rdInternational Conference on Learning Representations, 2016.

[8] K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “Bleu: a methodfor automatic evaluation of machine translation,” in Proceedings of40th Annual Meeting of the Association for Computational Linguistics,Philadelphia, Pennsylvania, USA, July 2002, pp. 311–318.

[9] S. Bengio, O. Vinyals, N. Jaitly, and N. Shazeer, “Scheduledsampling for sequence prediction with recurrent neural networks,”in Advances in Neural Information Processing Systems, 2015, pp. 1171–1179.

[10] S. Wiseman and A. M. Rush, “Sequence-to-sequence learning asbeam-search optimization,” in Proceedings of the 2016 Conferenceon Empirical Methods in Natural Language Processing, Austin,Texas, November 2016, pp. 1296–1306. [Online]. Available:https://aclweb.org/anthology/D16-1137

[11] S. Shen, Y. Cheng, Z. He, W. He, H. Wu, M. Sun, andY. Liu, “Minimum risk training for neural machine translation,”in Proceedings of the 54th Annual Meeting of the Associationfor Computational Linguistics, Berlin, Germany, August 2016,pp. 1683–1692. [Online]. Available: http://www.aclweb.org/anthology/P16-1159

[12] D. Bahdanau, P. Brakel, K. Xu, A. Goyal, R. Lowe, J. Pineau, A. C.Courville, and Y. Bengio, “An actor-critic algorithm for sequenceprediction,” in Proceedings of 5rd International Conference on LearningRepresentations, 2017.

[13] S. Kullback and R. A. Leibler, “On information and sufficiency,”Ann. Math. Statist., vol. 22, no. 1, pp. 79–86, 03 1951.

[14] F. Huszár, “How (not) to train your generative model: Scheduledsampling, likelihood, adversary?” arXiv e-print arXiv:1511.05101,2015.

[15] J. Gehring, M. Auli, D. Grangier, D. Yarats, and Y. N. Dauphin,“Convolutional sequence to sequence learning,” arXiv preprintarXiv:1705.03122, 2017.

https://aclweb.org/anthology/D16-1137http://www.aclweb.org/anthology/P16-1159http://www.aclweb.org/anthology/P16-1159

-

ARXIV, APRIL 2021 10

[16] K. Cho, B. van Merrienboer, C. Gulcehre, D. Bahdanau, F. Bougares,H. Schwenk, and Y. Bengio, “Learning phrase representationsusing rnn encoder–decoder for statistical machine translation,”in Proceedings of the 2014 Conference on Empirical Methods in NaturalLanguage Processing, Doha, Qatar, October 2014, pp. 1724–1734.

[17] S. Hochreiter and J. Schmidhuber, “Long short-term memory,”Neural Comput., vol. 9, pp. 1735–1780, 1997.

[18] L. Lee, “Measures of distributional similarity,” in Proceedings of the37th Annual Meeting of the Association for Computational Linguistics,College Park, Maryland, USA, June 1999, pp. 25–32.

[19] S. F. Chen and J. Goodman, “An empirical study ofsmoothing techniques for language modeling,” in 34thAnnual Meeting of the Association for Computational Linguistics.Santa Cruz, California, USA: Association for ComputationalLinguistics, Jun. 1996, pp. 310–318. [Online]. Available: https://www.aclweb.org/anthology/P96-1041

[20] Z. Li, R. Wang, K. Chen, M. Utiyama, E. Sumita, Z. Zhang,and H. Zhao, “Data-dependent gaussian prior objective forlanguage generation,” in International Conference on LearningRepresentations, 2020. [Online]. Available: https://openreview.net/forum?id=S1efxTVYDr

[21] H. Shao, S. Yao, D. Sun, A. Zhang, S. Liu, D. Liu, J. Wang, andT. Abdelzaher, “Controlvae: Controllable variational autoencoder,”in International Conference on Machine Learning. PMLR, 2020, pp.8655–8664.

[22] K. J. Åström, T. Hägglund, and K. J. Astrom, Advanced PIDcontrol. ISA-The Instrumentation, Systems, and AutomationSociety Research Triangle Park, 2006, vol. 461.

[23] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley,S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,”Advances in Neural Information Processing Systems, vol. 27, pp. 2672–2680, 2014.

[24] M. Collins, P. Koehn, and I. Kučerová, “Clause restructuring forstatistical machine translation,” in Proceedings of the 43rd annualmeeting on association for computational linguistics. Association forComputational Linguistics, 2005, pp. 531–540.

[25] R. Sennrich, B. Haddow, and A. Birch, “Neural machine translationof rare words with subword units,” in Proceedings of the 54thAnnual Meeting of the Association for Computational Linguistics, Berlin,Germany, August 2016, pp. 1715–1725.

[26] Koehn, Philipp, Hoang, Hieu, Alexandra, CallisonBurch, Chris,Federico, and Marcello, “Moses: open source toolkit for statisticalmachine translation,” in Proceedings of the 45th Annual Meeting ofthe ACL on Interactive Poster and Demonstration Sessions, 2007, pp.177–180.

[27] F. J. Och, “Minimum error rate training in statistical machine trans-lation,” in Proceedings of the 41st Annual Meeting of the Association forComputational Linguistics, Sapporo, Japan, July 2003, pp. 160–167.

[28] A. Stolcke, “Srilm — an extensible language modeling toolkit,”in International Conference on Spoken Language Processing, 2002, pp.901–904.

[29] S. Jean, K. Cho, R. Memisevic, and Y. Bengio, “On using very largetarget vocabulary for neural machine translation,” in Proceedingsof the 53rd Annual Meeting of the Association for ComputationalLinguistics and the 7th International Joint Conference on NaturalLanguage Processing, Beijing, China, July 2015, pp. 1–10.

[30] P. Koehn and R. Knowles, “Six challenges for neuralmachine translation,” in Proceedings of the First Workshopon Neural Machine Translation. Vancouver: Association forComputational Linguistics, Aug. 2017, pp. 28–39. [Online].Available: https://www.aclweb.org/anthology/W17-3204

[31] M. Ott, M. Auli, D. Grangier, and M. Ranzato, “Analyzing uncer-tainty in neural machine translation,” in International Conference onMachine Learning. PMLR, 2018, pp. 3956–3965.

[32] E. Cohen and C. Beck, “Empirical analysis of beam search perfor-mance degradation in neural sequence models,” in InternationalConference on Machine Learning. PMLR, 2019, pp. 1290–1299.

[33] S. Edunov, M. Ott, M. Auli, D. Grangier, and M. Ranzato,“Classical structured prediction losses for sequence to sequencelearning,” in Proceedings of the 2018 Conference of the North AmericanChapter of the Association for Computational Linguistics: HumanLanguage Technologies, Volume 1 (Long Papers). Association forComputational Linguistics, 2018, pp. 355–364. [Online]. Available:http://aclweb.org/anthology/N18-1033

[34] H. Jiang, P. He, W. Chen, X. Liu, J. Gao, and T. Zhao,“SMART: Robust and efficient fine-tuning for pre-trained naturallanguage models through principled regularized optimization,”

in Proceedings of the 58th Annual Meeting of the Association forComputational Linguistics. Online: Association for ComputationalLinguistics, Jul. 2020, pp. 2177–2190. [Online]. Available:https://www.aclweb.org/anthology/2020.acl-main.197

[35] A. Aghajanyan, A. Shrivastava, A. Gupta, N. Goyal, L. Zettlemoyer,and S. Gupta, “Better fine-tuning by reducing representationalcollapse,” in International Conference on Learning Representations,2021. [Online]. Available: https://openreview.net/forum?id=OQ08SN70M1V

[36] Z. Zhang, S. Wu, S. Liu, M. Li, M. Zhou, and T. Xu, “Regularizingneural machine translation by target-bidirectional agreement,” inProceedings of the AAAI Conference on Artificial Intelligence, vol. 33,no. 01, 2019, pp. 443–450.

https://www.aclweb.org/anthology/P96-1041https://www.aclweb.org/anthology/P96-1041https://openreview.net/forum?id=S1efxTVYDrhttps://openreview.net/forum?id=S1efxTVYDrhttps://www.aclweb.org/anthology/W17-3204http://aclweb.org/anthology/N18-1033https://www.aclweb.org/anthology/2020.acl-main.197https://openreview.net/forum?id=OQ08SN70M1Vhttps://openreview.net/forum?id=OQ08SN70M1V

1 Introduction2 Neural Machine Translation3 Dual Skew Divergence Loss for NMT3.1 Cross Entropy3.2 Kullback-Leibler Divergence3.3 Asymmetry of KL divergence3.4 Skew Divergence3.5 Dual Skew Divergence3.6 Controllable Dual Skew Divergence

4 Experiments4.1 Data Preparation4.2 Models and Training Details4.3 Effect of Balanced Weight 4.4 Switching Point and BLEU4.5 DSD and cDSD in CNN-based and Transformer-based Models4.6 Results on Test Sets

5 Ablation Study5.1 Greedy and Beam Search5.2 Improvement over Back-translation5.3 Comparison between DSD and Optimizer Switch Finetuning

6 Related Work7 ConclusionReferences