DETECTING SHADOWS AND LOW-LYING OBJECTS IN INDOOR AND OUTDOOR SCENES USING HOMOGRAPHIES

Philip Kelly∗, Paul Beardsley±, Eddie Cooke∗, Noel O’Connor∗, Alan Smeaton∗

∗ Centre for Digital Video Processing, Adaptive Information Cluster, Dublin City University, Ireland {kellyp, ej.cooke, oconnorn}@eeng.dcu.ie [email protected]

± Mitsubishi Electric Research Laboratories, 201 Broadway, Cambridge MA 02139, [email protected]

Keywords: Homography, Shadow Removal, Low-Lying Regions, Stereo, 2D Analysis

Abstract

Many computer vision applications apply background suppression techniques for the detection and segmentation of moving objects in a scene. While these algorithms tend to work well in controlled conditions, they often fail when applied to unconstrained real-world environments. This paper describes a system that detects and removes erroneously segmented foreground regions that are close to a ground plane. These regions include shadows, changing background objects and other low-lying objects such as leaves and rubbish. The system uses a set-up of two or more cameras and requires no 3D reconstruction or depth analysis of the regions. Therefore, a strong camera calibration of the set-up is not necessary. A geometric constraint called a homography is exploited to determine if foreground points are on or above the ground plane. The system takes advantage of the fact that regions in images off the homography plane will not correspond after a homography transformation. Experimental results using real-world scenes from a pedestrian tracking application illustrate the effectiveness of the proposed approach.

1 Introduction

Numerous computer vision applications depend on accurate detection and segmentation of moving objects from a background model as a first step in their algorithmic process. Many of the approaches implemented to achieve this are based on background suppression techniques that work well in controlled conditions. However, when these are applied to unconstrained real-world environments containing dynamic background conditions, they often fail. Shadows, ghosts (introduced when objects that initially belonged to the background model move) and other small objects such as leaves or rubbish entering an outdoor scene can be incorrectly identified as valid foreground objects. While techniques exist to detect and remove shadows and ghosts by exploiting colour and motion information [7, 8, 9], this paper describes a method to remove not only ground plane shadows and ghosts but also other low-lying objects on a plane using a purely 2D geometrical approach.

The technique described in this paper makes use of a geometric constraint called a homography between multiple views of the same scene taken from slightly different viewpoints. The homography is used to map points on the ground plane in one image to the corresponding ground plane points in another image of the same scene. Other published works using homographies include obstacle detection and avoidance [1, 2, 6] and road region extraction [12]. Although 3D depth information could be computed to determine shadows and low-lying regions across image pairs, it is computationally expensive. Computing depth using stereo is of the order of $O(m \cdot n \cdot d)$, where $m$ is the number of image pixels, $n$ is the size of the correlation window, and $d$ is the number of disparities searched [2]. Stereo-based homography, on the other hand, is computationally cheap. While it does not provide depth information, it does provide enough information to determine whether a particular image point is on the ground plane or above it. Therefore, the algorithm described in this paper has two purposes: (i) it identifies and removes shadows and low-lying objects from segmented foreground regions and (ii) it can be used in a preprocessing phase to remove such objects before depth analysis and thereby improve the speed and reliability of the 3D reconstruction.

This paper is organized as follows: Section 2 presents the details of the developed algorithmic approach. Firstly, the homographic transformation and a robust estimation technique are described; we then illustrate how the background modelling is implemented and how both low-lying and rising low-lying regions are detected. In Section 3 we present experimental results from both a controlled indoor environment and a real-world outdoor situation. Finally, Section 4 details conclusions and future work.

2 Algorithm Details

A homography, $H$, also referred to as a projective transformation or collineation, is an invertible mapping within 2D projective space, $\mathbb{P}^2$, such that three homogeneous points $\mathbf{x}_1$, $\mathbf{x}_2$ and $\mathbf{x}_3$ lie on the same line if and only if $H(\mathbf{x}_1)$, $H(\mathbf{x}_2)$ and $H(\mathbf{x}_3)$ are collinear [10]. A $3 \times 3$ homography matrix can be used to describe both the relationship between a real-world plane and a camera’s image plane and the relationship between two perspective views of a planar object.

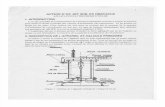

Figure 1: (a) Homography $H_{1\pi}$ between a world plane and the plane’s image; (b) Homography $H_{21}$ induced by a plane.

Assuming a point, $\mathbf{X}$, on a planar surface, $\pi$, is projected to the point $\mathbf{x}$ in the image plane $I_1$, a homography $H_{1\pi}$ exists from $\pi$ to $I_1$, as shown in Figure 1(a). Taking a second camera and image, $I_2$, of the same plane, a second distinct homography $H_{2\pi}$ exists from $\pi$ to $I_2$. The composition of the two homographies, $H_{1\pi}$ and $H_{2\pi}$, results in a third homography [16], which exists from $I_1$ to $I_2$:

$$\mathbf{x}' \cong H_{2\pi} H_{1\pi}^{-1} \mathbf{x} \qquad (1)$$

$$\mathbf{x}' \cong H_{21} \mathbf{x} \qquad (2)$$

where $\cong$ denotes equality up to a scale factor.

This homography from $I_1$ to $I_2$, illustrated in Figure 1(b), is known as a plane induced homography, as $H_{21}$ depends on the position of the real-world plane $\pi$. The effect of $H_{21}$ is to match an image point $\mathbf{x} = (x, y, 1)^T$ on $I_1$ with its corresponding point $\mathbf{x}' = (x', y', 1)^T$ on $I_2$ iff both $\mathbf{x}$ and $\mathbf{x}'$ are images of the same point $\mathbf{X}$ on the plane $\pi$. In other words, Equation (2) is true if $\mathbf{x}$ and $\mathbf{x}'$ are images of the same point $\mathbf{X}$ that is on the plane $\pi$; otherwise Equation (2) fails. The farther the point $\mathbf{X}$ is off the homography plane, the greater the disparity between $H_{21}\mathbf{x}$ and the actual corresponding point of $\mathbf{x}$ in $I_2$.
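The plane test implied by Equation (2) can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the function names, the pixel tolerance `tol` and the example matrix are all hypothetical, and the homography is assumed to be given as a 3 × 3 list of rows.

```python
import math

def apply_homography(H, point):
    """Map an inhomogeneous 2D point (x, y) through a 3x3 homography H."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to a point at infinity")
    # Dividing by w removes the arbitrary scale factor of Equation (2).
    return (u / w, v / w)

def transfer_disparity(H21, x1, x2):
    """Distance between H21 * x1 and the observed corresponding point x2 in I2."""
    px, py = apply_homography(H21, x1)
    return math.hypot(px - x2[0], py - x2[1])

def on_plane(H21, x1, x2, tol=1.0):
    """Treat the pair as lying on the plane if the disparity is within tol pixels.

    tol is a hypothetical tolerance, not a value taken from the paper.
    """
    return transfer_disparity(H21, x1, x2) <= tol

# Hypothetical homography: a pure 5-pixel horizontal shift of the plane.
H21 = [[1.0, 0.0, 5.0],
       [0.0, 1.0, 0.0],
       [0.0, 0.0, 1.0]]
```

A pair of image points that satisfies Equation (2) gives a disparity near zero, while a point above the plane gives a disparity that grows with its height, which is the property the rest of the algorithm relies on.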

2.1 Homography Estimation

The $3 \times 3$ homography matrix, $H$, has 9 entries, but it has only 8 degrees of freedom as it is a homogeneous matrix and therefore defined only up to scale. Each 2D homogeneous image point $\mathbf{x}_i = (x_i, y_i, 1)^T$ has two degrees of freedom corresponding to the $x_i$ and $y_i$ components. A point correspondence $\mathbf{x}_i \rightarrow \mathbf{x}'_i$ between the two projective planes gives two constraints on $H$, since for each point $\mathbf{x}_i$ on one projective plane the two degrees of freedom of the second point must correspond to the mapped point $H\mathbf{x}_i \cong (x'_i, y'_i, 1)^T$. Therefore at least four point correspondences are needed to constrain $H$ fully. The point correspondences are found by detecting a calibration shape in an image and matching the found image points to real-world points on the calibration shape. The calibration shape is placed at multiple positions on the ground plane in order to ensure a good distribution of corresponding points across the whole real-world plane. This is necessary as one important source of errors is the uneven distribution of input points [6]. Work has been carried out on automatic extraction of feature points for planar homography estimation; see [15, 11, 4, 12] for more details. The normalised DLT algorithm [10] is used to compute a linear solution to the homography. The DLT algorithm generates a $2 \times 9$ matrix $A_i$ for each point correspondence $\mathbf{x}_i \rightarrow \mathbf{x}'_i$, where

$$A_i = \begin{bmatrix} \mathbf{0}^T & -\mathbf{x}_i^T & y'_i \mathbf{x}_i^T \\ \mathbf{x}_i^T & \mathbf{0}^T & -x'_i \mathbf{x}_i^T \end{bmatrix} \qquad (3)$$

Figure 2: Iterative DLT flow diagram.

If there are $n$ point correspondences then the $n$ $A_i$ matrices are composed into a single $2n \times 9$ matrix $A$. A linear solution to $A\mathbf{h} = \mathbf{0}$ is found using the least-squares solution [10] to a homogeneous system of linear equations, where $\mathbf{h}$ is a 9-vector made up of the entries of the matrix $H$.
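As a check on Equation (3), the two rows of $A_i\mathbf{h} = \mathbf{0}$ can be expanded. The symbols $\mathbf{h}_1^T$, $\mathbf{h}_2^T$ and $\mathbf{h}_3^T$ are introduced here only for illustration and denote the three rows of $H$, with $\mathbf{h}$ assumed to stack them in row order as in [10]:

```latex
A_i \mathbf{h} =
\begin{bmatrix}
  -\mathbf{h}_2^T \mathbf{x}_i + y'_i \, \mathbf{h}_3^T \mathbf{x}_i \\
   \mathbf{h}_1^T \mathbf{x}_i - x'_i \, \mathbf{h}_3^T \mathbf{x}_i
\end{bmatrix}
= \mathbf{0}
\quad \Longleftrightarrow \quad
x'_i = \frac{\mathbf{h}_1^T \mathbf{x}_i}{\mathbf{h}_3^T \mathbf{x}_i},
\qquad
y'_i = \frac{\mathbf{h}_2^T \mathbf{x}_i}{\mathbf{h}_3^T \mathbf{x}_i}
\qquad (\mathbf{h}_3^T \mathbf{x}_i \neq 0)
```

Each correspondence therefore contributes exactly the two scalar constraints counted in Section 2.1, and they are the inhomogeneous form of $\mathbf{x}'_i \cong H\mathbf{x}_i$.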

2.1.1 Robust Homography Estimation

Due to incorrect matches in corresponding feature points, called outliers, and also due to noise, errors arise in the homography estimation process. For a given point correspondence $\mathbf{x}_i \rightarrow \mathbf{x}'_i$ an error value, $\delta_i$, can be calculated as the perpendicular distance between the real corresponding point to $\mathbf{x}_i$, namely $\mathbf{x}'_i$, and the corresponding point induced by $H$, namely $H\mathbf{x}_i$. The total error value, $\delta_H$, for a given $H$ can then be calculated as the sum of the errors that $H$ induces on the point correspondences $\mathbf{x}_i \rightarrow \mathbf{x}'_i$. If there are more than four point correspondences, this error value can be reduced by applying the normalised DLT algorithm iteratively. Figure 2 shows the process flow diagram of the iterative normalised DLT algorithm. The iterative process weights each of the $n$ $A_i$ matrices that correspond to the $n$ point correspondences to improve the estimation accuracy. The value of this weighting, $\alpha$, is inversely proportional to the error:

$$\alpha A_i = A_i \cdot \frac{1}{\delta_i} \qquad (4)$$

Figure 3: (a) Reference image, $I_1$; (b) Other image, $I_2$; (c) Transformed $I_2$ overlaid onto $I_1$.

As such, if the point correspondence represented by $A_i$ gives a large error, i.e. the point is an outlier, then $A_i$ is scaled down. This means that the error induced by the homography for that point correspondence will also be very small, and it will therefore be ignored to a large extent by the minimization algorithm. Alternatively, if the point correspondence is found to fit well then $A_i$ is scaled up. Finally, once a linear solution to $H$ is found, it is enhanced using Powell minimization [13] to obtain a non-linear solution. This is necessary as linear methods generally suffer from more errors than non-linear methods [6].
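The construction of the blocks in Equation (3) and the reweighting of Equation (4) can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, the guard `eps` against a zero error is an addition not described in the paper, and the solve step (the least-squares solution of the stacked system) and the Powell refinement are deliberately left out.

```python
def dlt_block(x, x_prime):
    """Return the 2x9 block A_i of Equation (3) for x = (x, y) -> x' = (x', y')."""
    xi = (float(x[0]), float(x[1]), 1.0)      # homogeneous point with w = 1
    xp, yp = float(x_prime[0]), float(x_prime[1])
    zero = [0.0, 0.0, 0.0]
    row1 = zero + [-v for v in xi] + [yp * v for v in xi]
    row2 = list(xi) + zero + [-xp * v for v in xi]
    return [row1, row2]

def reweight(blocks, errors, eps=1e-6):
    """Scale each block A_i by 1 / delta_i, as in Equation (4).

    eps is a hypothetical lower bound on delta_i that avoids division by
    zero when a correspondence is fitted exactly.
    """
    return [[[v / max(d, eps) for v in row] for row in block]
            for block, d in zip(blocks, errors)]

def stack(blocks):
    """Compose the n blocks into the single 2n x 9 matrix A."""
    return [row for block in blocks for row in block]
```

With errors of 0.5 and 2.0 pixels, for example, the first block is doubled and the second is halved, so a poorly fitting correspondence contributes less to the next linear solve.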

2.2 Background Modelling

Before extracting foreground regions, each of the images is transformed via $H$ into the same image plane for comparison. Let $I_1$ represent the reference image and let $I_{2 \ldots n}$ be the $n - 1$ other images of the same scene taken from different viewpoints. To transform each image, $I_i$, to the reference image $I_1$, Equation (2) is used, where $H$ is the homography from image $I_i$ to image $I_1$; see Figure 3 for an illustrative result. This is implemented to take advantage of the fact that two corresponding points on the ground plane transform to the same point in the reference view, whereas points above or below the ground plane do not transform consistently. This can be seen in the zoomed section of the images in Figure 4; note how the disparity along the edge of the building, which is perpendicular to the ground plane, increases with height above the ground plane.

Figure 4: (a) Zoomed section of reference image, $I_1$; (b) Zoomed section of other image, $I_2$; (c) Zoomed section of transformed $I_2$ overlaid onto $I_1$.

After transforming the images, an edge-based background subtraction technique [3] is employed to extract foreground edge pixels. The technique assumes a static background and camera and incorporates the background subtraction technique directly into the edge detection process, producing a modified version of the standard Canny edge detector [5] which computes only those edges which differ from the static background. An edge-based approach is used as it provides the input features that would be needed to generate a full 3D model, which will be necessary in future work. Each background and foreground edge pixel has the following data associated with it:

1. A Gaussian for the pixel RGB value;

2. A Gaussian for the gradient magnitude, $g^{mag}$, which is the absolute gradient value of the RGB channel with the strongest gradient;

3. A Gaussian for the gradient direction, $g^{dir}$.

Using this technique, the foreground edges are obtained in each of the $n$ images. See Figures 7(a)-(d) and 9(a)-(d) for the results of the background subtraction technique in two different scenarios. The use of a background suppression technique can lead to a number of problems. The background may change due to two factors [7]:

1. Variations in the lighting conditions, for example, clouds covering the sun in outdoor scenes or lights turned on or off in indoor scenes.

2. Objects that modify their status from stopped to moving or vice versa.

Background suppression has an inherent trade-off between high responsiveness to changes and reliable background model computation, which can lead to erroneous foreground region segmentation. An example of this is shadows cast by both foreground and background objects being detected as foreground objects. This is especially true in a dynamic real-world environment where the occluded sun can suddenly emerge from behind clouds, casting strong shadows. In addition, background suppression can pick up ghosts as foreground regions. Ghosts are introduced when objects belonging to the background model move. When this occurs, two new foreground regions are detected: the region where the object has moved to, and also the region where the object was previously located in the background model. The second region is referred to as a ghost, since it does not correspond to any real moving object [7]. An example of a ghost can be found at the back right-hand corner of Figure 9(d) and of a shadow in Figure 7(d). These are typical problems that occur with background suppression algorithms.

Work has been done on suppressing shadows and ghosts by exploiting colour information [7, 8, 9]. However, our approach is purely geometrical and aims to detect objects on or close to the ground plane. Ground plane objects, such as rubbish, cast shadows, and ghosts that are on the ground plane can therefore be eliminated, as they will closely abide by the homography constraint set out in Equation (2). By using the homography information, the background subtraction technique can be supplemented in order to develop a more robust background subtraction algorithm.

2.3 Low-Lying Region Detection

Let $I_1$ be the reference image and let $I_{2 \ldots n}$ be $n - 1$ other images of the same scene taken from different viewpoints that have been warped into the reference frame of $I_1$. Let the segmented foreground edge pixels in $I_1$ be $e_{11}, e_{12}, \ldots, e_{1n}$; similarly, let the foreground edge pixels in $I_2$ range over $e_{21}, e_{22}, \ldots, e_{2m}$. From the background edge subtraction technique described in Section 2.2, each foreground edge pixel $e_{ri}$ has data associated with it. This data is used in conjunction with the homography information to localise low-lying regions from all detected foreground regions.

If a point $e_{ri}$ actually does lie on the real-world ground plane, then the edge data for the pixel at location $i$ in each $I_{2 \ldots n}$ will closely match the edge data for the corresponding pixel in $I_1$. Alternatively, if a point $e_{ri}$ lies above the real-world ground plane, then the corresponding edge data in each $I_{2 \ldots n}$ should not be matched by the edge data for $I_1$ at the location $i$, and this discrepancy can be detected. However, for a number of reasons such as noise, image quantisation, an uneven ground plane, lighting and incorrect homography estimation, this will not always be the case. A match to $e_{ri}$ is therefore allowed to occur in a small local neighbourhood of $e_{ri}$. The size of this neighbourhood depends on both the position of the camera above the plane and the distance above this plane at which pixels should still be detected as low-lying; see Figure 10 for an illustration of this. An edge pixel $e_{sj}$ is acceptable as a match to $e_{ri}$ if:

$$\sqrt{(g^{dir}_{ri} - g^{dir}_{sj})^2} < t_{seed} \qquad (5)$$

where $g^{dir}_{ri}$ is the edge gradient direction at $e_{ri}$, $g^{dir}_{sj}$ is the edge gradient direction at $e_{sj}$ and $t_{seed}$ is a threshold value for the gradient directions of seed pixels. If a match to $e_{ri}$ is found in all other images, then $e_{ri}$ is labelled as a low-lying seed pixel; otherwise it is labelled as a pixel off the ground plane. By applying this algorithm with a relatively low threshold for $t_{seed}$, reliable low-lying region seed pixels are obtained. Information on how to determine $t_{seed}$ is provided in Section 3, and Figures 7(e) and 9(e) illustrate results for two different camera setups.
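The seed test built around Equation (5) can be sketched as below. This is a minimal sketch under several assumptions that are not taken from the paper: each image's foreground edges are held in a dictionary mapping a `(row, col)` pixel to its gradient direction, the images have already been warped into the reference frame, the function names are hypothetical, and angular wrap-around of the gradient direction is ignored.

```python
def has_match(pixel, gdir, edges, threshold, dy=1, dx=1):
    """True if `edges` holds a pixel within +/-dy rows and +/-dx columns of
    `pixel` whose gradient direction differs from gdir by less than threshold."""
    r, c = pixel
    for rr in range(r - dy, r + dy + 1):
        for cc in range(c - dx, c + dx + 1):
            other = edges.get((rr, cc))
            # sqrt((a - b)^2) in Equation (5) is the absolute difference.
            if other is not None and abs(gdir - other) < threshold:
                return True
    return False

def find_seeds(ref_edges, other_images, t_seed, dy=1, dx=1):
    """Label a reference edge pixel as a seed only if it is matched in
    every other image."""
    seeds = set()
    for pixel, gdir in ref_edges.items():
        if all(has_match(pixel, gdir, edges, t_seed, dy, dx)
               for edges in other_images):
            seeds.add(pixel)
    return seeds

# Hypothetical data: two reference edge pixels and two other views.
ref = {(5, 5): 30.0, (10, 10): 90.0}
img2 = {(5, 6): 30.5, (10, 10): 120.0}
img3 = {(4, 5): 29.8}
seeds = find_seeds(ref, [img2, img3], t_seed=1.0)
```

Here only the pixel at `(5, 5)` becomes a seed, because the pixel at `(10, 10)` has no direction-consistent partner in the second image.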

The generation of reliable seed edge pixels is integral to this technique. However, at this stage there are cases when spurious low-lying seed pixels are detected. The removal of these seed pixels is a two-stage process. Firstly, the knowledge that ground plane regions should have clusters of seed pixels is applied. The numbers of seed and non-seed foreground pixels in the neighbourhood of each seed $e_{ri}$ are computed. If $e_{ri}$ does not have above a certain percentage, $t_{perc}$, of seed to non-seed foreground pixels then $e_{ri}$ is reclassified as a non-seed pixel. However, the removal of seed pixels from one image implies that corresponding seed pixels in other images may no longer be valid. The second stage is therefore to check that each $e_{ri}$ has a matching edge pixel in each other image, within the allowed neighbourhood; if it does not, then $e_{ri}$ is reclassified as a non-seed pixel. Information on how to determine $t_{perc}$ is provided in Section 3.

Finally, each seed pixel is grown as follows. Each pixel $j$ in the small local neighbourhood of each seed pixel $e_{ri}$ is checked to see if it can be reclassified as a seed pixel. If $j$ is a foreground pixel but not a seed or grown pixel then an attempt is made to find a match to pixel $e_{cj}$ in any other image within the local neighbourhood of $e_{ri}$. Equation (5) is used with an increased threshold, $t_{grow}$, to determine if two edge pixels are a match. A match is allowed to occur in any other image instead of every other image, as is the case when obtaining seed pixels. The reason for this is that matches to all low-lying edge pixels will not be available in every image, due to occlusion induced by the different viewpoints of the cameras. The growing of seed pixels is done iteratively, as initial seed pixels may be sparsely spread throughout the image. The number of iterations, $t_{iter}$, is dependent on $t_{seed}$, since seed pixels become sparser as this threshold is lowered. By applying this algorithm with relatively high thresholds for $t_{grow}$, reliable low-lying edge pixels are obtained. Information on how to determine both $t_{grow}$ and $t_{iter}$ is provided in Section 3, and Figures 7(f) and 9(f) illustrate results of low-lying pixels for two different camera setups.
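The iterative growth step can be sketched as follows, under the same assumed representation as before (a dictionary from `(row, col)` to gradient direction per image). The function names are hypothetical, the candidate matches are searched in the neighbourhood of the seed being grown, which is one reading of the description above, and the loop stops early once no pixel is added.

```python
def neighbourhood(pixel, dy=1, dx=1):
    """All positions within +/-dy rows and +/-dx columns of pixel."""
    r, c = pixel
    return [(rr, cc)
            for rr in range(r - dy, r + dy + 1)
            for cc in range(c - dx, c + dx + 1)]

def grow_seeds(seeds, ref_edges, other_images, t_grow, t_iter, dy=1, dx=1):
    """Grow seed pixels for at most t_iter iterations.

    A foreground pixel j next to a low-lying pixel is accepted when ANY
    other image holds a direction-consistent edge pixel in the window.
    """
    low = set(seeds)
    frontier = set(seeds)          # pixels added in the previous iteration
    for _ in range(t_iter):
        added = set()
        for seed in frontier:
            window = neighbourhood(seed, dy, dx)
            for j in window:
                if j not in ref_edges or j in low or j in added:
                    continue
                matched = any(
                    q in edges and abs(ref_edges[j] - edges[q]) < t_grow
                    for edges in other_images
                    for q in window)
                if matched:
                    added.add(j)
        if not added:
            break                  # nothing grew, so further passes are idle
        low |= added
        frontier = added
    return low

# Hypothetical data: a row of edge pixels, the last with a different direction.
ref_edges = {(0, 0): 10.0, (0, 1): 12.0, (0, 2): 14.0, (0, 3): 80.0}
other = [{(0, 1): 11.0, (0, 2): 15.0, (0, 3): 15.0}]
grown = grow_seeds({(0, 0)}, ref_edges, other, t_grow=5.0, t_iter=10)
```

In this example the region spreads from `(0, 0)` to `(0, 2)` over two iterations and stops at `(0, 3)`, whose gradient direction has no consistent match.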

2.4 Rising Low-Lying Region Detection

The low-lying edge pixels detected will include objects that should be ignored, such as shadows on the ground plane and objects near to the ground plane such as leaves and paper. However, there will also be low-lying edge pixels that are part of objects that rise above the ground plane; these include pedestrians’ feet, parts of prams or bicycles, etc. These are not regions to ignore and so must be reclassified as foreground regions.

A modified version of the iterative connected components 4-neighbourhood algorithm [14] is applied to group the foreground edge pixels into regions. The algorithm is altered so that a new region, $R$, is grown according to gradient direction and pixel colour. A foreground edge pixel, $e_{ri}$, is part of the region of one of its neighbours, $e_{sj}$, only if both of the following inequalities hold; if either fails, $e_{ri}$ is not part of that region:

$$\sqrt{(g^{dir}_{ri} - g^{dir}_{sj})^2} < t_{dir} \qquad (6)$$

$$\sqrt{(g^{mag}_{ri} - g^{mag}_{sj})^2} < t_{mag} \qquad (7)$$

where $g^{dir}_{ri}$ and $g^{mag}_{ri}$ are the edge gradient direction and magnitude at $e_{ri}$ respectively, $g^{dir}_{sj}$ and $g^{mag}_{sj}$ are the edge gradient direction and magnitude at $e_{sj}$ respectively, and both $t_{dir}$ and $t_{mag}$ are threshold values. The thresholds $t_{dir}$ and $t_{mag}$ are not critical; in our experiments $t_{dir}$ and $t_{mag}$ are set to 15 and 50 respectively. These conditions force pixels with abrupt changes in gradient direction or magnitude to become new regions. They do, however, allow for gradual changes in both gradient direction, which permits curved regions, and gradient magnitude.

Once connected regions are formed, the following statistics for each region, $R$, are obtained: the percentage of low-lying to non low-lying region pixels, $R_{perc}$; the centre of gravity of the low-lying edge pixels, $\zeta_{low}$; and the centre of gravity of all the other foreground edge pixels, $\zeta_{non}$. We use these statistics to determine if a region is low-lying, rising or non low-lying. The thresholds $t_{low}$ and $t_{high}$, used in the following rules, both represent percentages of low-lying to non low-lying region pixels in a region. These thresholds are not highly critical; in our experiments $t_{low}$ is set to 25% and $t_{high}$ is set to 75%. For each $R$ that has at least one low-lying region edge pixel the following is applied:

1. If $R_{perc} < t_{low}$, $R$ is classified as a false positive low-lying region and every foreground edge pixel in $R$ is reclassified as a non low-lying region pixel.

2. If $R_{perc} > t_{high}$, $R$ is classified as a true positive low-lying region and every foreground edge pixel in $R$ is reclassified as a low-lying region pixel.

3. If $R_{perc} > t_{low}$ and $R_{perc} < t_{high}$, then the orientation of $\zeta_{low}$ relative to $\zeta_{non}$ is examined. This information is used to indicate if the region is a low-lying region that is rising above the ground. If the ground plane occurs at the bottom of the image and an object rises above the ground towards the top of the image, then the $y$ value of $\zeta_{non}$ should be above the $y$ value of $\zeta_{low}$, and the absolute difference between the $y$ values of $\zeta_{non}$ and $\zeta_{low}$ should be significantly larger than the absolute difference between the $x$ values of $\zeta_{non}$ and $\zeta_{low}$. If this is true then every foreground edge pixel in $R$ is reclassified as a rising low-lying region pixel.
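The three rules above can be sketched as a single classification function. Several choices here are assumptions rather than details from the paper: $R_{perc}$ is computed as the share of the region's pixels that are low-lying, image $y$ coordinates are taken to increase downwards (so "above" means a smaller $y$), the factor `ratio` stands in for "significantly larger", and a region that satisfies none of the rules is reported as `"unchanged"`.

```python
def centroid(pixels):
    """Centre of gravity of a non-empty list of (x, y) pixels."""
    n = len(pixels)
    return (sum(x for x, _ in pixels) / n, sum(y for _, y in pixels) / n)

def classify_region(low, non, t_low=25.0, t_high=75.0, ratio=2.0):
    """Classify a region from its low-lying and other foreground edge pixels."""
    if not low:
        raise ValueError("rules apply only to regions with a low-lying pixel")
    r_perc = 100.0 * len(low) / (len(low) + len(non))   # assumed definition
    if r_perc < t_low:
        return "non-low-lying"      # rule 1: false positive low-lying region
    if r_perc > t_high:
        return "low-lying"          # rule 2: true positive low-lying region
    if t_low < r_perc < t_high and non:
        (lx, ly), (nx, ny) = centroid(low), centroid(non)
        # Rule 3: the other pixels sit above the low-lying ones, and the
        # offset is predominantly vertical.
        if ny < ly and abs(ny - ly) > ratio * abs(nx - lx):
            return "rising"
    return "unchanged"

# Hypothetical region: low-lying pixels at the feet, other pixels above them.
feet = [(10, 100), (11, 100), (12, 101)]
legs = [(10, 60), (11, 70), (11, 80)]
label = classify_region(feet, legs)
```

For this region half of the pixels are low-lying and the remaining pixels lie almost directly above them, so it is labelled as rising and would be kept as foreground.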

By applying this technique, spurious low-lying regions will be eliminated, real low-lying regions will have missing sections filled in and rising low-lying regions will be reclassified as relevant foreground regions. See Figures 7(f)-(g) and 9(f)-(g); note how the problems of the shadow in Figure 7(d) and the ghost at the back right-hand corner in Figure 9(d) are eliminated. This method also has the added advantage that it becomes possible to tell if part of an object is close to the ground plane but the object rises above it, or if the object is never near the ground plane, such as a person’s head and shoulders when their feet are not visible. This information will be valuable in our future work.

3 Experimental Results

The crux of this work is to obtain good low-lying region pixels. This means finding as many correctly identified pixels as possible while minimizing the number of false positives for a given setup. The technique defined in this paper is therefore dependent on four major threshold values: $t_{seed}$ and $t_{perc}$, which are needed to obtain good seed pixels, and $t_{grow}$ and $t_{iter}$, which are used to constrain the growth of the seed pixels. In order to obtain the threshold values the system is put through a training stage. This training involves using manually masked input images, such as the one shown in Figure 5, to obtain a ground truth that allows the selection of the best thresholds for a given setup.

For a given threshold configuration, $tc_i$, the low-lying edge pixels are found in a single image via the defined algorithm. These pixels are then compared to the masked image and the number of correctly identified low-lying pixels, $c_i$, and the number of false positives, $fp_i$, are determined for each $tc_i$. Various $tc_i$ are tested in a coarse-to-fine manner in order to obtain an optimal threshold configuration. A graph such as Figure 6(a) is the result of this process; it shows the values of $c_i$ and $fp_i$ for a number of configurations for a given image. The graph has been sorted using the $fp_i$ values. Analysis of this graph indicates that the value of $t_{seed}$ is the dominating factor for both $c_i$ and $fp_i$, and that in general position along the graph’s x-axis tracks the value of $t_{seed}$, which ranges from 0.25 to 2.5 moving away from the origin. To determine how $tc_i$ compares to the other threshold configurations tested, $tc_i$ must be benchmarked against them. A suitability value, $sv_i$, is associated with $tc_i$, which takes into account both how well the configuration finds accurate low-lying pixels and how well it controls the generation of false positives in a certain image.

$$sv_i = \underbrace{\frac{100 \times n \times c_i}{\sum_{x=1}^{n} c_x}}_{A} - \underbrace{\frac{100 \times n \times fp_i}{\sum_{x=1}^{n} fp_x}}_{B} \qquad (8)$$

Figure 5: (a) Original foreground image; (b) Masked foreground image (Red: low-lying regions; Blue: non low-lying regions).

The first part of Equation (8), $A$, represents the number of correct low-lying pixels found by $tc_i$ as a percentage of the average number found over all configurations for that image; part $B$ of the equation is similar to the first but calculates a percentage for false positives. Both $A$ and $B$ are given an equal weighting as we are interested in the solution that returns the greatest number of accurate low-lying pixels with the fewest false positives. If a threshold setup is required for higher precision rather than recall then the equation can be modified simply by weighting $A$ at a higher value relative to $B$. The best $tc_i$ is found by maximizing the value of $sv_i$. The graph in Figure 6(b) shows $sv_i$ for the configurations sorted in the same order as Figure 6(a). Figure 6(c) illustrates $c_i$, $fp_i$ and $sv_i$ in the same coordinate system. It can be seen that the graph for $sv_i$ closely mirrors that of $c_i$ when $fp_i$ is low, but as $fp_i$ becomes greater, $sv_i$ rapidly declines. The region where $sv_i$ peaks defines the boundaries of the area of interest of the coarse iteration process. A fine iteration can then be applied between these boundaries to determine the optimal threshold setup. This process is repeated for a number of images (we implement it for 10 images of varying conditions), and the $tc_i$ with the highest $sv_i$ averaged over the number of frames is the configuration used in a given setup.
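Equation (8) and the selection of the best configuration can be sketched as follows. The function name and the optional `weight_a` and `weight_b` parameters are hypothetical (they default to the equal weighting used in the paper), and the counts in the example are invented purely to exercise the arithmetic.

```python
def suitability(correct, false_pos, weight_a=1.0, weight_b=1.0):
    """Return sv_i of Equation (8) for every threshold configuration.

    correct[i] and false_pos[i] are c_i and fp_i for configuration tc_i,
    all measured on the same image.
    """
    n = len(correct)
    if n == 0 or n != len(false_pos):
        raise ValueError("need one c_i and one fp_i per configuration")
    sum_c, sum_fp = sum(correct), sum(false_pos)
    if sum_c == 0 or sum_fp == 0:
        raise ValueError("Equation (8) is undefined when a sum is zero")
    return [weight_a * 100 * n * c / sum_c - weight_b * 100 * n * fp / sum_fp
            for c, fp in zip(correct, false_pos)]

# Invented counts for three configurations on one image.
sv = suitability([50, 100, 150], [10, 20, 70])
best = max(range(len(sv)), key=lambda i: sv[i])
```

With these counts the suitability values are 20, 40 and -60, so the second configuration is selected: the third finds the most correct pixels but is penalised heavily for its false positives.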

We define two different setups: setup one consists of three cameras placed approximately 4 metres above the ground plane monitoring real-world conditions, and setup two consists of three cameras at a height of 1.5 metres above the ground plane monitoring indoor scenarios. The cameras are in a 45°-45°-90° triangular configuration with a baseline of approximately 10 cm between each camera and a camera focal length of 3.8 mm. The image resolutions are $640 \times 480$ and $320 \times 240$ respectively. For setup one it was found that a threshold configuration of $t_{seed} = 1$, $t_{perc} = 33$, $t_{grow} = 10$ and $t_{iter} = 75$ works best, and for setup two a threshold configuration of $t_{seed} = 2$, $t_{perc} = 25$, $t_{grow} = 5$ and $t_{iter} = 10$ was used. Both setups used a neighbourhood of 1 vertical and 1 horizontal pixel, and this can be increased to obtain pixels that lie at a greater height from the ground plane. The difference in the thresholds found for these two setups reflects the fact that setup two was in a controlled environment with little clutter, little background activity and consistent lighting conditions. This meant that the thresholds on seeds and growth could be relaxed and therefore fewer iterations of growth were necessary. See Figures 7, 8 and 9 for detection results from both setups.

Figure 6: Graphs for a number of different threshold configurations: (a) Graph of $c_i$ (in blue) and $fp_i$ (in red); (b) Graph of $sv_i$; (c) Graph of $c_i$ (in blue), $fp_i$ (in red) and scaled-up $sv_i$ (in yellow).

Figure 7: Results for setup one: (a) Background image; (b) Background model edge points; (c) Foreground image; (d) Segmented foreground edge points; (e) Seed points (Blue points); (f) Grown seed points (Blue points); (g) Rising low-lying regions (Green points); (h) Only non low-lying regions.

Figure 8: Results for setup one: (a) and (d) Foreground image; (b) and (e) Segmented foreground edge points; (c) and (f) Only non low-lying regions.

Figure 9: Results for setup two: (a) Background image; (b) Background model edge points; (c) Foreground image; (d) Segmented foreground edge points; (e) Seed points (Blue points); (f) Grown seed points (Blue points); (g) Rising low-lying regions (Green points); (h) Only non low-lying regions.

Figure 10: Effects of a changing neighbourhood size (results shown are after growing seed edge points). The neighbourhood region consists of an offset of ±1 vertical pixel and (a) ±0 horizontal pixels; (b) ±1 horizontal pixel (we use this size of neighbourhood for the two setups); (c) ±2 horizontal pixels; (d) ±3 horizontal pixels.

4 Conclusions and Future Work

Many computer vision applications require background suppression techniques for the detection and segmentation of moving objects in a scene. While these algorithms tend to work well in controlled conditions, they often fail when applied to unconstrained real-world environments. This paper described a system that successfully detects and removes erroneously segmented foreground regions in both constrained and unconstrained scenarios. The algorithm accurately detects regions such as shadows, ghosts and other low-lying objects. The approach avoids a costly computation of 3D depth values and instead uses an easily computed 2D geometrical constraint called a homography, defined between multiple images of the same planar scene. Experimental results are presented that indicate the correctness of the approach for both an indoor scenario and the outdoor environment of a pedestrian tracking application.

The long-term goal of this research is to develop a multiple camera system that can robustly track pedestrians in 3D. In that sense, the algorithm described may be regarded as a 2D preprocessing step designed to remove erroneous foreground information that would otherwise be tracked. Future work entails computing a full multi-camera calibration, allowing the computation of fundamental matrices between camera pairs. The derived epipolar geometry will enable the generation of stereo correspondences within the images and hence 3D tracking. The creation of virtual views would also be possible and would therefore allow users to view the scene from a chosen pedestrian’s point of view.

Acknowledgements

This material is based on works supported by Science Foundation Ireland under Grant No. 03/IN.3/I361.

References

[1] R. Alix, F. Le Coat, and D. Aubert. Flat world homography for non-flat world on-road obstacle detection. In Proceedings of the IEEE Symposium on Intelligent Vehicles, pages 310–315, 2003.

[2] P. H. Batavia and S. Singh. Obstacle detection using adaptive color segmentation and color stereo homography. In Proceedings of the IEEE International Conference on Robotics and Automation, pages 705–710, 2001.

[3] P. Beardsley and E. Bourrat. Wheelchair detection using stereo vision. Technical report, MERL, August 2002.

[4] B. Boufama and D. O’Connell. Region segmentation and matching in stereo images. In Proceedings of the 16th International Conference on Pattern Recognition, pages 631–634, 2002.

[5] J. Canny. A computational approach to edge detection. In IEEE Transactions on Pattern Analysis and Machine Intelligence, pages 679–698, 1986.

[6] Y. H. Chow and R. Chung. Obstacle avoidance of legged robot without 3D reconstruction of the surroundings. In Proceedings of the IEEE International Conference on Robotics and Automation, pages 2316–2321, 2000.

[7] R. Cucchiara, C. Grana, M. Piccardi, and A. Prati. Detecting objects, shadows and ghosts in video streams by exploiting color and motion information. In 11th International Conference on Image Analysis and Processing, pages 360–365, 2001.

[8] R. Cucchiara, C. Grana, M. Piccardi, A. Prati, and S. Sirotti. Improving shadow suppression in moving object detection with HSV color information. In Proceedings of IEEE Intelligent Transportation System Conference, pages 334–339, 2001.

[9] I. Haritaoglu, D. Harwood, and L. S. Davis. W4: Real-time surveillance of people and their activities. In IEEE Trans. on Pattern Analysis and Machine Intelligence, number 8, pages 809–830, 2000.

[10] R. I. Hartley and A. Zisserman. Multiple View Geometry in Computer Vision, Second Edition. Cambridge University Press, 2003.

[11] K. Okuma, J. J. Little, and D. G. Lowe. Automatic rectification of long image sequences. In Asian Conference on Computer Vision, 2004.

[12] M. Okutomi and S. Noguchi. Extraction of road region using stereo images. In Proceedings of the 14th International Conference on Pattern Recognition, pages 853–856, 1998.

[13] M. J. D. Powell. An efficient method for finding the minimum of a function of several variables without calculating derivatives. The Computer Journal, 1964.

[14] M. Sonka, V. Hlavac, and R. Boyle. Image Processing, Analysis and Machine Vision, Second Edition. PWS Publishing, 1999.

[15] E. Vincent and R. Laganiere. Detecting planar homographies in an image pair. In Proceedings of the 2nd International Symposium on Image and Signal Processing and Analysis, pages 182–187, 2001.

[16] Q.-B. Zhang, H.-X. Wang, and S. Wei. A new algorithm for 3D projective reconstruction based on infinite homography. In International Conference on Machine Learning and Cybernetics, pages 2882–2886, 2003.