Assif Ziv (Cofounder, Fringefy) Machines and the City

MACHINES AND THE CITY

AWE USA 2016, 1-2 June 2016, Santa Clara, California

Hi, my name is Assif. I'm one of the founders of Fringefy. We do visual search for places.

A few months ago I returned from a trip to Japan. While I was walking in the streets of Tokyo, I saw so many cool places, but couldn't tell what many of them were. I also couldn't look them up online because I don't know Japanese.

I wish I could look up places online even when there is a language barrier.

I'm sure something similar has happened to anyone who has traveled abroad.

When my grandma was a teenager, she lived in a fancy house in the Marina, here in San Francisco. The house still stands there to this day.

When I saw it, the first thing I thought was, "How much does it cost today?" I wish I could uncover the hidden layer of real estate prices just by looking at a place. Unfortunately, when there's no sign, it's hard to search for information online.

Imagine visiting your friend in her hometown. While you're looking at a cool cafe, she might tap your shoulder and say: "Hey, this cafe does look cool, but the one just around the corner has much better coffee."

How did she supply you with the right information at the right time? You were just behaving naturally, looking at a cafe when you felt like having a coffee. She recognized what you were looking at, and used her knowledge of the area to give you the most relevant help.

I wish machines could help us in such an intuitive way, just like a local.

1 in 3 smartphone searches occur right before consumers visit a store (Google, 2014)

MANY people hope that their mobile devices will provide such a service. Google reported in 2014 that many people search for information about places in front of them JUST before entering.

But currently, there's no app that answers the question "What's that place?". Today, people use non-intuitive ways to access local content:

When standing in front of a place, people usually type its name into the search bar. Another option is to scroll through a list of nearby places, or to look it up on a map. It is not convenient. Sometimes it can't be done at all, when there's poor signage or a language barrier.

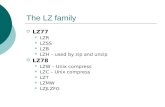

It takes me 7 seconds to find a place in front of me on Yelp. Why so long? Because today we are the middleman, translating what we see into a language the computer can understand. In the past, some attempts have been made to fix this.

Since 2010, various AR browsers have used the phone's geo-sensors to estimate the relative direction of places from the user's location. Such apps had millions of downloads, but no retention. They did not work: the estimate was not accurate enough to answer the question "What's that?".

Geo-sensors cannot help at close range. While we walk down the street, the buildings around us fall within that close range.
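The close-range problem can be made concrete with a little geometry. The sketch below is illustrative only and is not Fringefy's or any AR browser's actual code: it computes the direction to a place from GPS coordinates using the standard initial great-circle bearing formula, then the worst-case angle by which that direction can be off if the user's estimated position is wrong by a given radius. The 5 m position error in the example and the optional compass (heading) error parameter are assumed, hypothetical figures; neither comes from the talk.

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2,
    in degrees clockwise from true north (0 = north, 90 = east)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def worst_case_bearing_error_deg(position_error_m, distance_m, heading_error_deg=0.0):
    """Worst-case angle between the true direction to a place and the direction
    computed from an estimated position up to position_error_m away.

    The geometric part is asin(r / d): the tangent from the place to the circle
    of possible user positions. heading_error_deg (a hypothetical compass error)
    is simply added as a crude upper bound.
    """
    if position_error_m >= distance_m:
        return 180.0  # the user may be past the place: any direction is possible
    geometric = math.degrees(math.asin(position_error_m / distance_m))
    return min(180.0, geometric + heading_error_deg)


if __name__ == "__main__":
    # Hypothetical GPS error for illustration; real error varies widely,
    # especially between tall buildings.
    GPS_ERROR_M = 5.0
    for d in (10, 20, 50, 200, 1000):
        err = worst_case_bearing_error_deg(GPS_ERROR_M, d)
        print(f"{d:>5} m away: up to {err:.1f} deg off")
```

With a 5 m position error, a storefront 10 m away can appear up to 30° off, while a landmark 1 km away is off by less than a third of a degree. The storefronts lining a street are exactly the places where direction estimates from geo-sensors break down.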

12% of Android apps use the camera

60% of the brain is involved in vision

How did my local friend, who tapped me on the shoulder, know that I wanted coffee?She visually recognized the cafe that I was looking at.

Vision is THE prime human sense. Mobile devices already have cameras. Why aren't they using their visual sensors to recognize buildings?

---

Sources:
http://www.pewinternet.org/files/2015/11/PI_2015-11-10_apps-permissions_FINAL.pdf
https://www.quora.com/How-much-of-the-brain-is-involved-with-vision

It turns out that general-purpose image recognition algorithms fail to recognize storefronts. They have been developed mostly for objects, and work especially well indoors.

They fail in the outdoor urban environment because storefronts usually have a lot of reflections, and their appearance changes dramatically at different times of day. In addition, people in the shops and on the streets, cars, and bicycles frequently block the view of the storefronts.

Point phone to get info about what's in front of you

At Fringefy we've been developing an image recognition technology that is specifically focused on solving this problem.

This is a video of our demo app in action. All you need to do is aim your phone's camera at a place, and we recognize it. Take a look:

It's accurate, fast, convenient, very robust to occlusions, and works well at different times of day.

In the future, this technology will serve as infrastructure to support any connected device with a camera, such as smart glasses, drones, and even autonomous vehicles.

"The [real] world is the new platform." (Angela Ahrendts, Apple)

Paving the way for such technologies, the big companies have invested heavily in mapping and imagery over the past year.

During the PC era, Google became so big by indexing text on the internet. Today, in the mobile era, it's clear the big players are racing to visually index the real world.

CLICK HERE

The web economy is driven by the value of a click. Click-through rate translates directly into dollars.

With the mobile revolution, clicks are moving from the virtual world into the real world. We allow people to click on bricks!

It's inevitable that AR will be everywhere. We should all think about building the infrastructure to get there, while offering value to consumers today, even on the mobile devices they already use.

A friend once asked me: "Can you imagine a machine that knows the answer to any question?" Before I could answer, he said, "It already exists. I work for Google."

Today, I think the machine can't answer every question yet. But if we give it sight, it can drive the car for us, fly a drone to our window, or understand what we want just by looking at us.

@fringefy

Please visit us at booth 602 and check out our demo. Thank you!