7/28/2019 01 Linearization of matrix polynomials expressed in polynomial bases.pdf

1/15

LINEARIZATION OF MATRIX POLYNOMIALS

EXPRESSED IN POLYNOMIAL BASES

A. Amiraslani^{a,1}, R. M. Corless^{b}, and P. Lancaster^{a}

a Department of Mathematics and Statistics, University of Calgary

Calgary, AB T2N 1N4, Canada

{amiram, lancaste}@math.ucalgary.ca

b Department of Applied Mathematics, University of Western Ontario

London, ON N6A 5B7, Canada

Abstract

Companion matrices of matrix polynomials $L(\lambda)$ (with possibly singular leading coefficient) are a familiar tool in matrix theory and numerical practice, leading to so-called linearizations $\lambda B - A$ of the polynomials. Matrix polynomials as approximations to more general matrix functions lead to the study of matrix polynomials represented in a variety of classical systems of polynomials, including orthogonal systems and Lagrange polynomials, for example. For several such representations, it is shown how to construct (strong) linearizations via analogous companion matrix pencils. In case $L(\lambda)$ has Hermitian or alternatively complex symmetric coefficients, the determination of linearizations $\lambda B - A$ with $A$ and $B$ Hermitian or complex symmetric is also discussed.

1 Introduction

An $s \times s$ matrix polynomial $P(\lambda)$ of degree $n$ has $s^2$ entries, each of which is a scalar (complex) polynomial in $\lambda$ with degree not exceeding $n$. Grouping like powers of $\lambda$ together determines the representation $P(\lambda) = \sum_{j=0}^{n} \lambda^j A_j$, where the coefficients $A_j \in \mathbb{C}^{s \times s}$ and $A_n \neq 0$. Clearly, the polynomial could also be uniquely determined by $n + 1$ samples of the function: $P_j := P(z_j)$, where the points $z_0, z_1, \ldots, z_n \in \mathbb{C}$ are distinct.

The process of gathering the $n + 1$ matrices of coefficients of the successive powers of $\lambda$ could be described as interpolation by monomials. Indeed, the matrices $P_0, P_1, \ldots, P_n$ may be samples of a function $\hat{P}(\lambda)$ of a more general type; analytic, for example, and one may be interested in how the interpolant $P(\lambda)$ approximates $\hat{P}(\lambda)$.

We consider only matrix polynomials which are regular in the sense that the determinant, $\det P(\lambda)$, does not vanish identically. Practical and algorithmic concerns with such polynomials frequently involve the determination of eigenvalues; namely, those $\lambda_0 \in \mathbb{C}$ for which the rank of $P(\lambda_0)$ is less than $s$. Thus, the eigenvalue multiplicity properties (geometric and algebraic) have a role to play.

^1 Correspondence to: Amirhossein Amiraslani, Department of Mathematics and Statistics, University of Calgary, 2500 University Dr. NW, Calgary, AB T2N 1N4, Canada

It is natural to study spectral properties of the polynomial via the associated pencil $\lambda C_1 - C_0$, where (when $n = 4$, for example)

$$
C_1 = \begin{bmatrix} I & 0 & 0 & 0 \\ 0 & I & 0 & 0 \\ 0 & 0 & I & 0 \\ 0 & 0 & 0 & A_4 \end{bmatrix}, \qquad
C_0 = \begin{bmatrix} 0 & 0 & 0 & -A_0 \\ I & 0 & 0 & -A_1 \\ 0 & I & 0 & -A_2 \\ 0 & 0 & I & -A_3 \end{bmatrix}. \tag{1}
$$

This has been extensively used and recognised; see [12] and [13], for example, among many other sources. The vital property of this pencil is that it forms a strong linearization of $P(\lambda)$ in the sense that it reproduces the multiplicity structures of the eigenvalues of $P(\lambda)$, both finite and infinite. An infinite eigenvalue is said to exist when $\det A_n = 0$ (see [12] and [18], in particular).
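As an illustration of the pencil (1), the following minimal sketch builds $C_1$ and $C_0$ for a random polynomial with $n = 4$, $s = 2$ and checks that each eigenvalue of the pencil makes $P(\lambda)$ numerically singular. The helper names (`monomial_companion`, `evaluate`) are hypothetical, and the sketch assumes that $A_n$ is nonsingular so that the pencil can be reduced to a standard eigenvalue problem; it is not the authors' code.

```python
import numpy as np

def monomial_companion(coeffs):
    """Return (C1, C0) as in (1) for P(lam) = sum_j lam**j * coeffs[j]."""
    n, s = len(coeffs) - 1, coeffs[0].shape[0]
    C1 = np.eye(n * s)
    C1[-s:, -s:] = coeffs[n]                     # last diagonal block is A_n
    C0 = np.zeros((n * s, n * s))
    for i in range(1, n):                        # identity blocks on the block subdiagonal
        C0[i*s:(i+1)*s, (i-1)*s:i*s] = np.eye(s)
    for i in range(n):                           # last block column: -A_0, ..., -A_{n-1}
        C0[i*s:(i+1)*s, -s:] = -coeffs[i]
    return C1, C0

def evaluate(coeffs, lam):
    """Evaluate P(lam) in the monomial basis."""
    return sum(lam**j * A for j, A in enumerate(coeffs))

rng = np.random.default_rng(0)
coeffs = [rng.standard_normal((2, 2)) for _ in range(5)]   # A_0, ..., A_4
C1, C0 = monomial_companion(coeffs)

# Assumption: A_4 is nonsingular, so lam*C1 - C0 is singular exactly at eig(C1^{-1} C0).
eigenvalues = np.linalg.eigvals(np.linalg.solve(C1, C0))

# Relative smallest singular value of P(lam) at each computed eigenvalue.
rel_residuals = []
for lam in eigenvalues:
    P = evaluate(coeffs, lam)
    rel_residuals.append(np.linalg.svd(P, compute_uv=False)[-1] / np.linalg.norm(P))
```

When $A_n$ is singular, a generalized eigenvalue solver that reports infinite eigenvalues would be needed instead of the reduction used here.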

A major objective of this paper is to consider the analogous problems which arise when $P(\lambda)$ is represented in other bases (i.e. other than monomials) for the linear space of scalar polynomials with degree not exceeding $n$. Applications of matrix polynomials in other bases occur in Computer-Aided Geometric Design (where Bernstein bases are used) and in the Lagrange basis (see e.g. [4]). In this present paper, analogues of (1) are to be formulated, and the property of strong linearization is to be investigated, i.e. linearizations which preserve the invariant polynomials of both $P(\lambda)$ and its reverse $P^{\#}(\lambda) := \lambda^n P(1/\lambda)$.

The details of this program depend on a particular property of the polynomial basis employed: whether it is degree-graded (consists of polynomials of degrees $0, 1, 2, \ldots, n$, like the monomials), or whether all polynomials have the same degree (as with the Lagrange interpolating polynomials). The paper is organised accordingly: Sections 2 and 3 are concerned with degree-graded bases. Sections 4 and 5 discuss interpolation with Bernstein and Lagrange bases, respectively.

It will be seen that the strategy adopted below (as in [1]) involves the determination of $\lambda$-dependent (triangular) LU-decompositions of $\lambda C_1 - C_0$ (and its various analogues). We remark that in some algorithms (especially of Rayleigh-quotient type) it is necessary to solve linear systems $(\lambda_0 C_1 - C_0)x = b$ (with a fixed $\lambda_0$) many times. The LU decompositions used here can also play a useful role in this algorithmic context. There is an exhaustive study of these LU factorizations in [3].

Bases other than the monomials find many applications. For problems in computer-aided geometric design, the Bernstein-Bezier basis and the Lagrange basis are most useful (see [10], for example). There are problems in partial differential equations with symmetries in the boundary conditions where Legendre polynomials are the most natural. Finally, in approximation theory, Chebyshev polynomials have a special place due to their minimum-norm property (see e.g. [22]).

2 Degree-graded polynomial bases

2.1 Linearization

Real polynomials $\{\phi_n(\lambda)\}_{n=0}^{\infty}$ with $\phi_n(\lambda)$ of degree $n$ which are orthonormal on an interval of the real line (with respect to some nonnegative weight function) necessarily satisfy a three-term recurrence relation (see Chapter 10 of [8], for example). These relations can be written in the form

$$
\lambda \phi_j(\lambda) = \alpha_j \phi_{j+1}(\lambda) + \beta_j \phi_j(\lambda) + \gamma_j \phi_{j-1}(\lambda), \qquad j = 0, 1, 2, \ldots, \tag{2}
$$

where the $\alpha_j$, $\beta_j$, $\gamma_j$ are real, $\phi_{-1}(\lambda) = 0$, $\phi_0(\lambda) = 1$, and, if $k_j$ is the leading coefficient of $\phi_j(\lambda)$,

$$
0 \neq \alpha_j = \frac{k_j}{k_{j+1}}, \qquad j = 0, 1, 2, \ldots. \tag{3}
$$

The choices of coefficients $\alpha_j$, $\beta_j$, $\gamma_j$ defining three well-known sets of orthogonal polynomials (associated with the names of Chebyshev and Legendre) are summarised in Table 1. Such orthogonal polynomials have well-established significance in mathematical physics and numerical analysis (see e.g. [11]). More generally, any sequence of polynomials $\{\phi_j(\lambda)\}_{j=0}^{\infty}$ with $\phi_j(\lambda)$ of degree $j$ is said to be degree-graded and obviously forms a linearly independent set; but is not necessarily orthogonal.

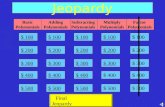

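Table 1: Three well-known orthogonal polynomials

| Polynomial | $T_n(x)$ | $P_n(x)$ | $C_n(x)$ |
|---|---|---|---|
| Name of polynomial | Chebyshev (1st kind) | Legendre (Spherical) | Chebyshev (2nd kind) |
| Weight function | $(1 - x^2)^{-1/2}$ | $1$ | $(1 - x^2)^{1/2}$ |
| Orthogonality interval | $[-1, 1]$ | $[-1, 1]$ | $[-1, 1]$ |
| Leading coefficient $k_n$ | $2^{n-1}$ | $\dfrac{(2n)!}{2^n (n!)^2}$ | $2^n$ |
| $\alpha_n$ | $\tfrac{1}{2}$ | $\dfrac{n+1}{2n+1}$ | $\tfrac{1}{2}$ |
| $\beta_n$ | $0$ | $0$ | $0$ |
| $\gamma_n$ | $\tfrac{1}{2}$ | $\dfrac{n}{2n+1}$ | $\tfrac{1}{2}$ |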
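The recurrence (2) gives a direct way to evaluate a degree-graded basis. The sketch below is illustrative only: the function name `eval_basis` is hypothetical, and it assumes the Table 1 coefficients for $n \geq 1$ together with $\alpha_0 = 1$ for the Chebyshev polynomials of the first kind (since $T_1(x) = x$). It compares the recurrence values with the closed form $\cos(j \arccos x)$ and with NumPy's Legendre evaluation.

```python
import numpy as np
from numpy.polynomial import legendre

def eval_basis(lam, n, alpha, beta, gamma):
    """Return [phi_0(lam), ..., phi_n(lam)] using recurrence (2), phi_{-1} = 0, phi_0 = 1."""
    phi = [1.0]
    prev = 0.0                                   # phi_{-1}
    for j in range(n):
        nxt = ((lam - beta(j)) * phi[j] - gamma(j) * prev) / alpha(j)
        prev = phi[j]
        phi.append(nxt)
    return np.array(phi)

x, n = 0.3, 6

# Chebyshev (1st kind): alpha_j = gamma_j = 1/2 for j >= 1; alpha_0 = 1 is an assumption
# made here so that T_1(x) = x.
cheb = eval_basis(x, n, lambda j: 1.0 if j == 0 else 0.5, lambda j: 0.0, lambda j: 0.5)
cheb_exact = np.cos(np.arange(n + 1) * np.arccos(x))

# Legendre: alpha_j = (j+1)/(2j+1), beta_j = 0, gamma_j = j/(2j+1).
leg = eval_basis(x, n, lambda j: (j + 1) / (2 * j + 1), lambda j: 0.0,
                 lambda j: j / (2 * j + 1))
leg_exact = np.array([legendre.legval(x, np.eye(n + 1)[j]) for j in range(n + 1)])
```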

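An $s \times s$ matrix polynomial $P(\lambda)$ of degree $n$ can now be written in terms of a set of degree-graded polynomials:

$$
P(\lambda) = A_n \phi_n(\lambda) + A_{n-1} \phi_{n-1}(\lambda) + \cdots + A_1 \phi_1(\lambda) + A_0 \phi_0(\lambda). \tag{4}
$$

For convenience, let us assume $n = 5$; the generalization to all positive $n$ will be clear. Define block matrices

$$
C_0 = \begin{bmatrix}
\beta_0 I_s & \gamma_1 I_s & 0 & 0 & -k_4 A_0 \\
\alpha_0 I_s & \beta_1 I_s & \gamma_2 I_s & 0 & -k_4 A_1 \\
0 & \alpha_1 I_s & \beta_2 I_s & \gamma_3 I_s & -k_4 A_2 \\
0 & 0 & \alpha_2 I_s & \beta_3 I_s & -k_4 A_3 + k_5 \gamma_4 A_5 \\
0 & 0 & 0 & \alpha_3 I_s & -k_4 A_4 + k_5 \beta_4 A_5
\end{bmatrix}, \tag{5}
$$

$$
C_1 = \begin{bmatrix}
I_s & 0 & 0 & 0 & 0 \\
0 & I_s & 0 & 0 & 0 \\
0 & 0 & I_s & 0 & 0 \\
0 & 0 & 0 & I_s & 0 \\
0 & 0 & 0 & 0 & k_5 A_5
\end{bmatrix} \tag{6}
$$

(and observe how the matrices of (1) fit into this scheme). This construction is essentially that of a comrade matrix introduced by Barnett; see Chapter 5 of [5] and [6].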


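A little computation shows that

$$
\begin{bmatrix} \phi_0(\lambda) I_s & \phi_1(\lambda) I_s & \phi_2(\lambda) I_s & \phi_3(\lambda) I_s & \phi_4(\lambda) I_s \end{bmatrix} (\lambda C_1 - C_0)
= \begin{bmatrix} 0 & 0 & 0 & 0 & k_4 P(\lambda) \end{bmatrix}. \tag{7}
$$

The first $n - 1$ row-into-column products simply reproduce some of the relations (2). For the last such product use equations (2), (3), and (4). In the more suggestive notation of [21] this equation reads:^2

$$
\left( \Phi^T(\lambda) \otimes I \right) (\lambda C_1 - C_0) = k_{n-1}\, e_n^T \otimes P(\lambda),
$$

where $\Phi^T(\lambda) = [\phi_0(\lambda), \phi_1(\lambda), \ldots, \phi_{n-1}(\lambda)]$.

Now suppose that $\lambda_0$ is an eigenvalue of $P(\lambda)$ with left eigenvector $y$, i.e. $y^H P(\lambda_0) = 0$ (where the superscript $(\cdot)^H$ denotes the Hermitian (complex-conjugate) transpose of a matrix or vector). Then evaluating (7) at $\lambda_0$ and premultiplying by $y^H$ gives:

$$
\begin{bmatrix} \phi_0(\lambda_0) y^H & \phi_1(\lambda_0) y^H & \phi_2(\lambda_0) y^H & \phi_3(\lambda_0) y^H & \phi_4(\lambda_0) y^H \end{bmatrix} (\lambda_0 C_1 - C_0) = 0. \tag{8}
$$

This shows that every finite eigenvalue of $P(\lambda)$ is also an eigenvalue of $\lambda C_1 - C_0$ and also shows how left eigenvectors of $\lambda C_1 - C_0$ can be generated from those of $P(\lambda)$. (This is a generalization of part (ii) of Theorem 5.2 of [5]; special cases have appeared in [1].) The left eigenvectors do not have a special role in this discussion. A similar explicit characterization of the relationship of a right eigenvector $w$ of $P(\lambda)$ corresponding to a finite eigenvalue with a right eigenvector of the pencil $\lambda C_1 - C_0$ can be made (see [1]).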
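The identities (7) and (8) are easy to check numerically. The following sketch assembles the pencil of (5) and (6) for general $n$ and tests both relations in the Chebyshev basis of the first kind. The function names are hypothetical, the values $\alpha_0 = 1$, $k_0 = 1$ are assumptions consistent with (3), and a nonsingular $A_n$ is assumed so that the eigenvalues can be computed with a standard solver.

```python
import numpy as np

def basis_values(lam, n, alpha, beta, gamma):
    """phi_0(lam), ..., phi_n(lam) from recurrence (2), with phi_{-1} = 0 and phi_0 = 1."""
    phi, prev = [1.0 + 0.0j], 0.0
    for j in range(n):
        nxt = ((lam - beta[j]) * phi[j] - gamma[j] * prev) / alpha[j]
        prev = phi[j]
        phi.append(nxt)
    return np.array(phi)

def comrade_pencil(A, alpha, beta, gamma, k):
    """C1, C0 of (5)-(6) for general n >= 2; A = [A_0, ..., A_n]."""
    n, s = len(A) - 1, A[0].shape[0]
    I = np.eye(s)
    blk = lambda i, j: (slice(i*s, (i+1)*s), slice(j*s, (j+1)*s))
    C1 = np.eye(n * s)
    C1[blk(n-1, n-1)] = k[n] * A[n]
    C0 = np.zeros((n * s, n * s))
    for j in range(n - 1):                       # block columns carrying the recurrence
        if j > 0:
            C0[blk(j-1, j)] = gamma[j] * I
        C0[blk(j, j)] = beta[j] * I
        C0[blk(j+1, j)] = alpha[j] * I
    for i in range(n):                           # last block column carries the coefficients
        C0[blk(i, n-1)] = -k[n-1] * A[i]
    C0[blk(n-2, n-1)] += k[n] * gamma[n-1] * A[n]
    C0[blk(n-1, n-1)] += k[n] * beta[n-1] * A[n]
    return C1, C0

n, s = 5, 2
rng = np.random.default_rng(2)
A = [rng.standard_normal((s, s)) for _ in range(n + 1)]
alpha = [1.0] + [0.5] * n                        # Chebyshev T_j (alpha_0 = 1 assumed)
beta = [0.0] * (n + 1)
gamma = [0.0] + [0.5] * n                        # gamma_0 multiplies phi_{-1} = 0
k = [1.0] + [2.0 ** (j - 1) for j in range(1, n + 1)]
C1, C0 = comrade_pencil(A, alpha, beta, gamma, k)

# Identity (7) at an arbitrary point.
lam = 0.3 + 0.2j
phi = basis_values(lam, n, alpha, beta, gamma)
P = sum(phi[j] * A[j] for j in range(n + 1))
lhs7 = np.hstack([phi[j] * np.eye(s) for j in range(n)]) @ (lam * C1 - C0)
rhs7 = np.hstack([np.zeros((s, (n - 1) * s)), k[n - 1] * P])

# Identity (8): a left eigenvector of the pencil built from one of P(lam0).
lam0 = np.linalg.eigvals(np.linalg.solve(C1, C0))[0]
phi0 = basis_values(lam0, n, alpha, beta, gamma)
P0 = sum(phi0[j] * A[j] for j in range(n + 1))
y = np.linalg.svd(P0)[0][:, -1]                  # y^H P(lam0) is (numerically) zero
left = np.hstack([phi0[j] * y.conj() for j in range(n)])
residual8 = np.linalg.norm(left @ (lam0 * C1 - C0)) / np.linalg.norm(left)
```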

This argument shows that $P(\lambda)$ and $\lambda C_1 - C_0$ have the same spectrum, but more is true. To establish this a lemma on linearizations is required. A linearization of the regular matrix polynomial $P(\lambda)$ is generally defined to be an $sn \times sn$ pencil $\lambda A - B$ for which

$$
E(\lambda)(\lambda A - B)F(\lambda) = \begin{bmatrix} I_{(n-1)s} & 0 \\ 0 & P(\lambda) \end{bmatrix}, \tag{9}
$$

for some unimodular matrix polynomials $E(\lambda)$ and $F(\lambda)$. We need a more general characterization of a linearization as follows:

Lemma 1 If (9) holds for functions $E(\lambda)$ and $F(\lambda)$ which are unimodular and analytic on a neighbourhood of the spectrum of $P(\lambda)$, then $\lambda A - B$ is a linearization of $P(\lambda)$.

Proof: A linearization $\lambda A - B$ can be characterized by the property that all of its eigenvalues and their partial multiplicities (including the eigenvalue at infinity if $A_n$ is singular) are the same as those of $P(\lambda)$ (Theorem A.6.2 of [13], for example). The fact that these properties are preserved by the more general matrix functions, $E(\lambda)$ and $F(\lambda)$, follows immediately from Theorem A.6.6 of [13].

Remark. Let $Z$ be the set of all zeros of $\phi_1(\lambda), \ldots, \phi_{n-1}(\lambda)$. This set is necessarily finite. We will see that to use the above lemma correctly, we will have to block pivot whenever $\lambda$ is in a small enough neighbourhood of any of these zeros. It follows from the work of [1, 3] that this can always be done.

^2 The authors thank a helpful reviewer for pointing out the connections with work of [16] and [21]. This equation shows a clear connection with the left ansatz of [21, eq. (3.9)]. This analogy suggests that, as in [21], for each polynomial basis $\Phi(\lambda)$ two vector spaces of linearizations may be defined, and that, as in [16], these vector spaces may be explored for linearizations that preserve structure, or are particularly well-suited for the task at hand. These considerations deserve further study.


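Theorem 2 Let $P(\lambda)$ be a matrix polynomial of degree $n$ and $\{\phi_n(\lambda)\}_{n=0}^{\infty}$ be a degree-graded system of polynomials satisfying the recurrence relation (2). Then the pencil $\lambda C_1 - C_0$ defined by (5) and (6) is a strong linearization of $P(\lambda)$.

Proof: First, assume that $Z$, the set of all zeros of $\phi_1(\lambda), \ldots, \phi_{n-1}(\lambda)$, does not intersect the set of all eigenvalues of $P(\lambda)$. In [3] the $\lambda$-dependent block LU factors of $\lambda C_1 - C_0$ for a pencil of the form (5), (6) and of degree $n$ are explicitly given as follows:

$$
L(\lambda) = \begin{bmatrix}
I_s & & & \\
-\dfrac{\phi_0(\lambda)}{\phi_1(\lambda)} I_s & I_s & & \\
 & \ddots & \ddots & \\
 & & -\dfrac{\phi_{n-2}(\lambda)}{\phi_{n-1}(\lambda)} I_s & I_s
\end{bmatrix}, \tag{10}
$$

$$
U(\lambda) = \begin{bmatrix}
\dfrac{\alpha_0 \phi_1(\lambda)}{\phi_0(\lambda)} I_s & -\gamma_1 I_s & & & U_{1,n}(\lambda) \\
 & \ddots & \ddots & & \vdots \\
 & & \dfrac{\alpha_{n-3} \phi_{n-2}(\lambda)}{\phi_{n-3}(\lambda)} I_s & -\gamma_{n-2} I_s & U_{n-2,n}(\lambda) \\
 & & & \dfrac{\alpha_{n-2} \phi_{n-1}(\lambda)}{\phi_{n-2}(\lambda)} I_s & U_{n-1,n}(\lambda) \\
 & & & & U_{n,n}(\lambda)
\end{bmatrix}, \tag{11}
$$

where

$$
U_{i,n}(\lambda) = \begin{cases}
k_{n-1} A_0, & i = 1 \\[4pt]
k_{n-1} A_{i-1} + \dfrac{\phi_{i-2}(\lambda)}{\phi_{i-1}(\lambda)} U_{i-1,n}(\lambda), & i = 2:(n-2) \\[4pt]
k_{n-1} A_{n-2} + \dfrac{\phi_{n-3}(\lambda)}{\phi_{n-2}(\lambda)} U_{n-2,n}(\lambda) - k_n \gamma_{n-1} A_n, & i = n-1 \\[4pt]
\dfrac{\phi_0(\lambda)}{(\alpha_0 \cdots \alpha_{n-2})\, \phi_{n-1}(\lambda)} P(\lambda), & i = n.
\end{cases} \tag{12}
$$

Clearly, $L(\lambda)$ is nonsingular for all $\lambda \notin Z$. For such $\lambda$, $\det(L(\lambda)) \equiv 1$. Thus, $U(\lambda)$ is singular at the eigenvalues of $P(\lambda)$. If we define $\tilde{U}(\lambda)$ to be the same as $U(\lambda)$ except for its last block entry which is replaced by

$$
\tilde{U}_{n,n}(\lambda) = \frac{\phi_0(\lambda)}{(\alpha_0 \cdots \alpha_{n-2})\, \phi_{n-1}(\lambda)} I_s, \tag{13}
$$

then $\tilde{U}(\lambda)$ is also nonsingular and $\det(\tilde{U}(\lambda)) \equiv 1$. Now, we can construct the unimodular matrices $E(\lambda) = L^{-1}$ and $F(\lambda) = \tilde{U}^{-1}$ as follows: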

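$$
E(\lambda) = \begin{bmatrix}
I_s & & & & \\
\dfrac{\phi_0(\lambda)}{\phi_1(\lambda)} I_s & I_s & & & \\
\dfrac{\phi_0(\lambda)}{\phi_2(\lambda)} I_s & \dfrac{\phi_1(\lambda)}{\phi_2(\lambda)} I_s & I_s & & \\
\vdots & \vdots & & \ddots & \\
\dfrac{\phi_0(\lambda)}{\phi_{n-1}(\lambda)} I_s & \dfrac{\phi_1(\lambda)}{\phi_{n-1}(\lambda)} I_s & \cdots & \dfrac{\phi_{n-2}(\lambda)}{\phi_{n-1}(\lambda)} I_s & I_s
\end{bmatrix}, \tag{14}
$$

$$
F_{i,j}(\lambda) = \begin{cases}
\dfrac{\phi_{i-1}(\lambda)}{\alpha_{i-1} \phi_i(\lambda)} I_s, & i = j = 1:(n-1) \\[6pt]
\dfrac{\phi_{n-1}(\lambda)}{\phi_0(\lambda)} (\alpha_0 \cdots \alpha_{n-2}) I_s, & i = j = n \\[6pt]
\dfrac{\gamma_{j-1} \phi_{j-1}(\lambda)}{\alpha_{j-1} \phi_j(\lambda)} F_{i,j-1}(\lambda), & i = 1:(n-2);\ j = (i+1):(n-1) \\[6pt]
-\dfrac{\alpha_0 \cdots \alpha_{n-2}}{\phi_0(\lambda)} \left( k_{n-1} \displaystyle\sum_{k=0}^{n-2} A_k \phi_k(\lambda) - k_n \gamma_{n-1} A_n \phi_{n-2}(\lambda) \right), & i = n-1;\ j = n \\[6pt]
-\dfrac{\alpha_0 \cdots \alpha_{n-2}}{\alpha_{i-1}} \dfrac{k_{n-1} \phi_{n-1}(\lambda)}{\phi_0(\lambda) \phi_i(\lambda)} \displaystyle\sum_{k=0}^{i-1} A_k \phi_k(\lambda) + \hat{F}_{i,n}(\lambda), & i = (n-2):1;\ j = n
\end{cases} \tag{15}
$$

where

$$
\hat{F}_{i,n}(\lambda) = \begin{cases}
F_{n-1,n}(\lambda), & i = n-1 \\[4pt]
\dfrac{\gamma_i \phi_{i-1}(\lambda)}{\alpha_i \phi_i(\lambda)} \hat{F}_{i+1,n}(\lambda), & i = (n-2):1.
\end{cases} \tag{16}
$$

Now a straightforward computation shows that:

$$
\begin{bmatrix} I_{(n-1)s} & 0 \\ 0 & P(\lambda) \end{bmatrix} = E(\lambda)(\lambda C_1 - C_0)F(\lambda) \tag{17}
$$

and, using Lemma 1, this shows that $\lambda C_1 - C_0$ is a linearization of $P(\lambda)$.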
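The block LU factors of the degree-graded pencil can be checked numerically. The sketch below is a hedged illustration rather than the construction of [3]: it derives $L(\lambda)$ and $U(\lambda)$ by block Gaussian elimination on the pencil built from (5) and (6), writes the last diagonal block as $k_{n-1} P(\lambda)/\phi_{n-1}(\lambda)$ (which coincides with the form in (12) when $k_0 = \phi_0 = 1$), and uses the Legendre coefficients of Table 1. The function names are hypothetical.

```python
import numpy as np
from math import factorial

def phi_values(lam, n, alpha, beta, gamma):
    """phi_0(lam), ..., phi_n(lam) from recurrence (2)."""
    phi, prev = [1.0 + 0.0j], 0.0
    for j in range(n):
        nxt = ((lam - beta[j]) * phi[j] - gamma[j] * prev) / alpha[j]
        prev = phi[j]
        phi.append(nxt)
    return np.array(phi)

def pencil_and_lu(A, lam, alpha, beta, gamma, k):
    """Return (lam*C1 - C0, L(lam), U(lam)) for the pencil of (5)-(6)."""
    n, s = len(A) - 1, A[0].shape[0]
    I = np.eye(s)
    phi = phi_values(lam, n, alpha, beta, gamma)
    P = sum(phi[j] * A[j] for j in range(n + 1))
    # Scalar skeletons of the first n-1 block columns, lifted with Kronecker products.
    Ms = np.zeros((n, n), dtype=complex)
    Ls = np.eye(n, dtype=complex)
    Us = np.zeros((n, n), dtype=complex)
    for j in range(n - 1):
        Ms[j, j] = lam - beta[j]
        Ms[j + 1, j] = -alpha[j]
        Ls[j + 1, j] = -phi[j] / phi[j + 1]
        Us[j, j] = alpha[j] * phi[j + 1] / phi[j]
        if j > 0:
            Ms[j - 1, j] = -gamma[j]
            Us[j - 1, j] = -gamma[j]
    M, L, U = np.kron(Ms, I), np.kron(Ls, I), np.kron(Us, I)
    # Last block column of the pencil.
    col = [k[n - 1] * A[i] for i in range(n)]
    col[n - 2] = col[n - 2] - k[n] * gamma[n - 1] * A[n]
    col[n - 1] = col[n - 1] + k[n] * (lam - beta[n - 1]) * A[n]
    M[:, -s:] = np.vstack(col)
    # Last block column of U, following the recursion in (12).
    ucol = [k[n - 1] * A[0]]
    for i in range(1, n - 1):
        ucol.append(k[n - 1] * A[i] + phi[i - 1] / phi[i] * ucol[i - 1])
    ucol[n - 2] = ucol[n - 2] - k[n] * gamma[n - 1] * A[n]
    ucol.append(k[n - 1] / phi[n - 1] * P)
    U[:, -s:] = np.vstack(ucol)
    return M, L, U

n, s = 5, 2
rng = np.random.default_rng(3)
A = [rng.standard_normal((s, s)) for _ in range(n + 1)]
alpha = [(j + 1) / (2 * j + 1) for j in range(n + 1)]        # Legendre
beta = [0.0] * (n + 1)
gamma = [j / (2 * j + 1) for j in range(n + 1)]
k = [factorial(2 * j) / (2 ** j * factorial(j) ** 2) for j in range(n + 1)]

lam = 0.7 + 0.4j              # not a zero of any Legendre polynomial (those are real)
M, L, U = pencil_and_lu(A, lam, alpha, beta, gamma, k)
lu_error = np.linalg.norm(L @ U - M)
```

Choosing $\lambda$ away from the zeros of the $\phi_j$ keeps the divisions well defined; near such zeros the text prescribes block pivoting instead.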

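To show that this linearization is strong, we must show that unimodular matrices $H(\lambda)$ and $K(\lambda)$ exist such that:

$$
\begin{bmatrix} I_{(n-1)s} & 0 \\ 0 & P^{\#}(\lambda) \end{bmatrix} = H(\lambda)(C_1 - \lambda C_0)K(\lambda) \tag{18}
$$

where $P^{\#}(\lambda) = \lambda^n P(1/\lambda)$. In fact, considering the LU factors of $\lambda C_1 - C_0$, we can write the LU factors of the reverse pencil $C_1 - \lambda C_0$ as follows:

$$
L_1(\lambda) = L(1/\lambda) \quad \text{and} \quad U_1(\lambda) = \lambda\, U(1/\lambda). \tag{19}
$$

Now we can let $H(\lambda) = L_1^{-1}(\lambda) = L^{-1}(1/\lambda) = E(1/\lambda)$ where $E(\lambda)$ is given in (14), and $K(\lambda) = \tilde{U}_1^{-1}$ where $\tilde{U}_1$ is the same as $U_1$ except for the very last block entry which is replaced by:

$$
\tilde{U}_{1\,n,n}(\lambda) = \frac{\phi_0(1/\lambda)}{(\alpha_0 \cdots \alpha_{n-2})\, \lambda^{n-1} \phi_{n-1}(1/\lambda)} I_s. \tag{20}
$$

Using (15), we can construct $K(\lambda)$ as follows:

$$
K_{i,j}(\lambda) = \begin{cases}
\dfrac{1}{\lambda} F_{i,j}(1/\lambda), & i = 1:(n-1);\ j = i:(n-1) \\[6pt]
\lambda^{n-1} F_{i,n}(1/\lambda), & i = 1:n;\ j = n
\end{cases} \tag{21}
$$

and now it can be verified that (18) holds.

If instead $\lambda \in Z$, then, from the results of [1, 3], there exist a unimodular block pivot matrix, a neighbourhood $B(\lambda)$ of $\lambda$, and matrices $E(\lambda)$ and $F(\lambda)$ analytic in $B(\lambda)$ such that all the factorings above may be computed mutatis mutandis.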


2.2 Symmetrizing the linearization

If the data matrices $A_0, A_1, \ldots, A_n$ are Hermitian, then the resulting polynomial $P(\lambda)$ is Hermitian for real $\lambda$. Although the symmetry appears to be lost in the pencil $\lambda C_1 - C_0$, it can be recovered in the monomial case (when $A_n$ is nonsingular) on postmultiplication of the companion matrix

$$
C_0 C_1^{-1} = \begin{bmatrix}
0 & 0 & 0 & -A_0 A_4^{-1} \\
I & 0 & 0 & -A_1 A_4^{-1} \\
0 & I & 0 & -A_2 A_4^{-1} \\
0 & 0 & I & -A_3 A_4^{-1}
\end{bmatrix}
$$

by the Hermitian symmetrizer,

$$
H_0 := \begin{bmatrix}
A_1 & A_2 & A_3 & A_4 \\
A_2 & A_3 & A_4 & 0 \\
A_3 & A_4 & 0 & 0 \\
A_4 & 0 & 0 & 0
\end{bmatrix}. \tag{22}
$$

In this way the eigenvalue problem for the Hermitian matrix polynomial $P(\lambda)$ can be examined in terms of the Hermitian pencil $\lambda H_0 - (C_0 C_1^{-1}) H_0$. This also works if the data matrices are not Hermitian but rather complex symmetric, $A_j^T = A_j$. Such matrices occur in practice, for example with the symmetric Bezout matrix of a pair of bivariate polynomials with complex coefficients. In either case, the block symmetries of such a pencil can provide computational advantages and, as well, there is an extensive theory for problems of this kind developed in [13].
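A small numerical check of the symmetrizer (22) in the monomial case, with $n = 4$ and random Hermitian coefficients, is sketched below. The variable names are hypothetical; the only assumption is that $A_4$ is nonsingular, as required in the text.

```python
import numpy as np

rng = np.random.default_rng(1)
s = 3

def random_hermitian(size):
    """Random Hermitian matrix, used only as test data."""
    X = rng.standard_normal((size, size)) + 1j * rng.standard_normal((size, size))
    return X + X.conj().T

A = [random_hermitian(s) for _ in range(5)]      # A_0, ..., A_4
Z, I = np.zeros((s, s)), np.eye(s)
A4inv = np.linalg.inv(A[4])

# Companion matrix C0 C1^{-1} for n = 4.
companion = np.block([
    [Z, Z, Z, -A[0] @ A4inv],
    [I, Z, Z, -A[1] @ A4inv],
    [Z, I, Z, -A[2] @ A4inv],
    [Z, Z, I, -A[3] @ A4inv],
])

# Hermitian symmetrizer (22).
H0 = np.block([
    [A[1], A[2], A[3], A[4]],
    [A[2], A[3], A[4], Z],
    [A[3], A[4], Z, Z],
    [A[4], Z, Z, Z],
])

S = companion @ H0                               # should again be Hermitian
```

Multiplying out the blocks shows that `S` is block diagonal, with $-A_0$ in the leading block and a block-Hankel matrix built from $A_2, A_3, A_4$ in the trailing block, which makes the Hermitian structure explicit.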

It turns out that, in some cases, this symmetrizing property extends to the pencils generated by other bases. Indeed, the following proposition is easily verified:

Proposition 3 Let $\{\phi_n(\lambda)\}_{n=0}^{\infty}$ be a degree-graded system of polynomials satisfying a recurrence relation (2) in which $\alpha_j = \alpha \neq 0$, $\beta_j = \beta$, and $\gamma_j = \gamma$ for all $j$. Moreover, let $P(\lambda)$ be a Hermitian matrix polynomial defined in that basis with $A_n$ nonsingular. Then, when the generalized companion matrix $C_0 C_1^{-1}$ (formed by (5) and (6)) of $P(\lambda)$ is multiplied on the right by the Hermitian symmetrizer (22), the result is also Hermitian. A similar result holds in the complex symmetric case.

Clearly, under the hypotheses of the proposition, $\lambda H_0 - (C_0 C_1^{-1}) H_0$ is a Hermitian linearization of $P(\lambda)$. For cases when $A_n$ is singular, Hermitian linearizations can be found in [16].

3 Special degree-graded bases

As mentioned above, the family of degree-graded polynomials with recurrence relations of the form (2) includes all the orthogonal bases, but is not limited to them. In this section, we discuss some well-known non-orthogonal bases of this kind, for which, consequently, the linearization $\lambda C_1 - C_0$ is strong.

3.1 Monomial basis

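If in (2), we let $\alpha_j = 1$ and $\beta_j = \gamma_j = 0$, we get the monomial basis. Plugging these values into (14) and (15), we get:

$$
E(\lambda) = \begin{bmatrix}
I_s & & & & \\
\dfrac{1}{\lambda} I_s & I_s & & & \\
\dfrac{1}{\lambda^2} I_s & \dfrac{1}{\lambda} I_s & I_s & & \\
\vdots & \vdots & & \ddots & \\
\dfrac{1}{\lambda^{n-1}} I_s & \dfrac{1}{\lambda^{n-2}} I_s & \cdots & \dfrac{1}{\lambda} I_s & I_s
\end{bmatrix}, \tag{23}
$$

and

$$
F_{i,j}(\lambda) = \begin{cases}
\dfrac{1}{\lambda} I_s, & i = j = 1:(n-1) \\[4pt]
\lambda^{n-1} I_s, & i = j = n \\[4pt]
0_s, & i = 1:(n-2);\ j = (i+1):(n-1) \\[4pt]
-\displaystyle\sum_{k=0}^{n-2} A_k \lambda^k, & i = n-1;\ j = n \\[4pt]
-\lambda^{n-i-1} \displaystyle\sum_{k=0}^{i-1} A_k \lambda^k, & i = (n-2):1;\ j = n,
\end{cases} \tag{24}
$$

and similarly from the fact that $H(\lambda) = E(1/\lambda)$, we get:

$$
H(\lambda) = \begin{bmatrix}
I_s & & & & \\
\lambda I_s & I_s & & & \\
\lambda^2 I_s & \lambda I_s & I_s & & \\
\vdots & \vdots & & \ddots & \\
\lambda^{n-1} I_s & \lambda^{n-2} I_s & \cdots & \lambda I_s & I_s
\end{bmatrix}, \tag{25}
$$

and (21) gives $K(\lambda)$. In this case $Z = \{0\}$, and for $\lambda$ near $0$ block pivoting must be used [3].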
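For the monomial basis, the equivalence (17) can be verified directly from (23) and (24). The sketch below does this for $n = 4$, $s = 2$ at a point $\lambda \neq 0$. The function names are hypothetical, and the minus signs on the last block column of $F$ follow from $F = \tilde{U}^{-1}$; they are an assumption about the intended formula rather than a quotation of it.

```python
import numpy as np

def monomial_pencil(A, lam):
    """lam*C1 - C0 for the monomial companion form (1), general n."""
    n, s = len(A) - 1, A[0].shape[0]
    M = np.zeros((n * s, n * s), dtype=complex)
    for i in range(n):                            # last block column: A_0, ..., A_{n-1}
        M[i*s:(i+1)*s, -s:] = A[i]
    for i in range(n - 1):
        M[i*s:(i+1)*s, i*s:(i+1)*s] = lam * np.eye(s)
        M[(i+1)*s:(i+2)*s, i*s:(i+1)*s] = -np.eye(s)
    M[-s:, -s:] += lam * A[n]
    return M

def monomial_transformations(A, lam):
    """E(lam) of (23) and F(lam) of (24); requires lam != 0."""
    n, s = len(A) - 1, A[0].shape[0]
    I = np.eye(s)
    blk = lambda i, j: (slice(i*s, (i+1)*s), slice(j*s, (j+1)*s))
    E = np.zeros((n * s, n * s), dtype=complex)
    F = np.zeros((n * s, n * s), dtype=complex)
    for i in range(n):
        for j in range(i + 1):
            E[blk(i, j)] = lam ** (j - i) * I     # lower triangular, powers of 1/lam
    for i in range(n - 1):                        # zero-based row i is row i+1 in (24)
        F[blk(i, i)] = I / lam
        partial = sum(lam ** m * A[m] for m in range(i + 1))
        F[blk(i, n - 1)] = -lam ** (n - i - 2) * partial
    F[blk(n - 1, n - 1)] = lam ** (n - 1) * I
    return E, F

n, s = 4, 2
rng = np.random.default_rng(4)
A = [rng.standard_normal((s, s)) for _ in range(n + 1)]
lam = 0.8 - 0.3j

E, F = monomial_transformations(A, lam)
product = E @ monomial_pencil(A, lam) @ F

P = sum(lam ** j * A[j] for j in range(n + 1))
target = np.eye(n * s, dtype=complex)
target[-s:, -s:] = P                              # diag(I_{(n-1)s}, P(lam))
```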

3.2 Newton basis

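Let an $s \times s$ matrix polynomial $P(\lambda)$ be specified by the data $\{(z_j, P_j)\}_{j=0}^{n}$ where the $z_j$'s are distinct. Then, $P(\lambda)$ can be expressed in the Newton basis. This basis has the following ordered form for $k = 0, \ldots, n$:

$$
N_k(\lambda) = \prod_{j=0}^{k-1} (\lambda - z_j) \tag{26}
$$

with $N_0(\lambda) = 1$. Therefore $Z = \{z_j\}_{j=0}^{n-1}$. Then the polynomial can be written in the form:

$$
P(\lambda) = A_0 N_0(\lambda) + A_1 N_1(\lambda) + \cdots + A_n N_n(\lambda), \tag{27}
$$

where the $A_j$'s can be found either by divided differences or, equivalently, by solving this system:

$$
\begin{bmatrix}
I & & & & \\
I & N_1(z_1) I & & & \\
I & N_1(z_2) I & N_2(z_2) I & & \\
\vdots & \vdots & \vdots & \ddots & \\
I & N_1(z_n) I & N_2(z_n) I & \cdots & N_n(z_n) I
\end{bmatrix}
\begin{bmatrix} A_0 \\ A_1 \\ A_2 \\ \vdots \\ A_n \end{bmatrix}
=
\begin{bmatrix} P_0 \\ P_1 \\ P_2 \\ \vdots \\ P_n \end{bmatrix}. \tag{28}
$$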
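The system (28) is block lower triangular with scalar multiples of the identity in each block, so it can be solved through the scalar matrix $[N_k(z_j)]$. The sketch below is one hypothetical way to do this (the names `newton_basis` and `newton_coefficients` are not from the paper); it samples a known cubic matrix polynomial and checks that the Newton form reproduces it.

```python
import numpy as np

def newton_basis(lam, nodes, n):
    """Return [N_0(lam), ..., N_n(lam)] with N_k(lam) = prod_{j<k} (lam - z_j)."""
    vals = [1.0]
    for k in range(n):
        vals.append(vals[-1] * (lam - nodes[k]))
    return np.array(vals)

def newton_coefficients(nodes, samples):
    """Solve system (28) for A_0, ..., A_n given P_j = P(z_j)."""
    n, s = len(nodes) - 1, samples[0].shape[0]
    # V[j, k] = N_k(z_j): lower triangular with nonzero diagonal for distinct nodes.
    V = np.array([newton_basis(z, nodes, n) for z in nodes])
    rhs = np.stack(samples).reshape(n + 1, s * s)
    return np.linalg.solve(V, rhs).reshape(n + 1, s, s)

# Test data: a cubic matrix polynomial given by monomial coefficients B_0..B_3.
rng = np.random.default_rng(5)
s, n = 2, 3
B = [rng.standard_normal((s, s)) for _ in range(n + 1)]
nodes = np.array([0.0, 1.0, -1.0, 2.0])
samples = [sum(z ** j * B[j] for j in range(n + 1)) for z in nodes]

A = newton_coefficients(nodes, samples)

lam = 0.37
newton_value = np.tensordot(newton_basis(lam, nodes, n), A, axes=1)
monomial_value = sum(lam ** j * B[j] for j in range(n + 1))
```

Because the Newton polynomials are monic, the leading coefficient `A[n]` coincides with the leading monomial coefficient `B[n]`, which gives a quick sanity check.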


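For more details see [3].

If in (2), we let $\alpha_j = 1$, $\beta_j = z_j$ and $\gamma_j = 0$, we get the Newton basis. Plugging these values into (14) and (15), we get:

$$
E(\lambda) = \begin{bmatrix}
I_s & & & & \\
\dfrac{1}{\lambda - z_0} I_s & I_s & & & \\
\dfrac{1}{(\lambda - z_0)(\lambda - z_1)} I_s & \dfrac{1}{\lambda - z_1} I_s & I_s & & \\
\vdots & \vdots & & \ddots & \\
\dfrac{1}{(\lambda - z_0) \cdots (\lambda - z_{n-2})} I_s & \dfrac{1}{(\lambda - z_1) \cdots (\lambda - z_{n-2})} I_s & \cdots & \dfrac{1}{\lambda - z_{n-2}} I_s & I_s
\end{bmatrix}, \tag{29}
$$

and

$$
F_{i,j}(\lambda) = \begin{cases}
\dfrac{1}{\lambda - z_{i-1}} I_s, & i = j = 1:(n-1) \\[6pt]
(\lambda - z_0) \cdots (\lambda - z_{n-2}) I_s, & i = j = n \\[6pt]
-\displaystyle\sum_{k=0}^{n-2} A_k N_k(\lambda), & i = n-1;\ j = n \\[6pt]
-(\lambda - z_i) \cdots (\lambda - z_{n-2}) \displaystyle\sum_{k=0}^{i-1} A_k N_k(\lambda), & i = (n-2):1;\ j = n \\[6pt]
0_s, & \text{otherwise},
\end{cases} \tag{30}
$$

and similarly from the fact that $H(\lambda) = E(1/\lambda)$, (21) gives $K(\lambda)$ and we also have

$$
H(\lambda) = \begin{bmatrix}
I_s & & & & \\
\dfrac{\lambda}{1 - \lambda z_0} I_s & I_s & & & \\
\dfrac{\lambda^2}{(1 - \lambda z_0)(1 - \lambda z_1)} I_s & \dfrac{\lambda}{1 - \lambda z_1} I_s & I_s & & \\
\vdots & \vdots & & \ddots & \\
\dfrac{\lambda^{n-1}}{(1 - \lambda z_0) \cdots (1 - \lambda z_{n-2})} I_s & \dfrac{\lambda^{n-2}}{(1 - \lambda z_1) \cdots (1 - \lambda z_{n-2})} I_s & \cdots & \dfrac{\lambda}{1 - \lambda z_{n-2}} I_s & I_s
\end{bmatrix}. \tag{31}
$$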

3.3 Pochhammer basis

The Pochhammer basis is just a special Newton basis with nodes $z_j = -(a + j)$, $j = 0, \ldots, n - 1$. The Pochhammer basis is used in combinatorial applications and in the solution of difference equations. Some good sparse polynomial interpolation algorithms have been developed using this basis (see [20], for example). If in (2), we let $\alpha_j = 1$, $\beta_j = -(a + j)$ and $\gamma_j = 0$, then the Pochhammer basis is generated.

4 Interpolating with Bernstein polynomials

Bernstein polynomials have the form:

$$
b_{j,n}(\lambda; a, b) = \frac{1}{(b - a)^n} \binom{n}{j} (\lambda - a)^j (b - \lambda)^{n-j} \tag{32}
$$

for $n = 1, 2, \ldots$ and $j = 0, 1, \ldots, n$, and have good (uniform) convergence properties to continuous functions on $(a, b)$ (see [8]). They are widely used in geometric computing (see [9] and [10]) and, clearly, they are not degree-graded. Here $Z = \{a, b\}$ contains only two elements.
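A short sketch of definition (32) may help fix the notation. The function name `bernstein` and the default interval $[0, 1]$ are illustrative choices, not taken from the paper. The checks used are standard facts: the $b_{j,n}$ sum to one (binomial theorem), every $b_{j,n}$ has the same degree $n$, and only $b_{0,n}$ is nonzero at $\lambda = a$.

```python
from math import comb

def bernstein(j, n, lam, a=0.0, b=1.0):
    """Bernstein polynomial b_{j,n}(lam; a, b) as defined in (32)."""
    return comb(n, j) * (lam - a) ** j * (b - lam) ** (n - j) / (b - a) ** n

n, a, b = 5, -1.0, 2.0
lam = 0.4

values = [bernstein(j, n, lam, a, b) for j in range(n + 1)]
partition_of_unity = sum(values)                 # equals 1 by the binomial theorem

# Consecutive ratio used when building the Bernstein companion pencil:
# b_{j,n} / b_{j-1,n} = ((n - j + 1) / j) * (lam - a) / (b - lam).
ratios = [values[j] / values[j - 1] for j in range(1, n + 1)]
expected_ratios = [(n - j + 1) / j * (lam - a) / (b - lam) for j in range(1, n + 1)]
```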


4.1 Linearization

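An $s \times s$ matrix polynomial $P(\lambda)$ of degree $n$ can be written in terms of a set of Bernstein polynomials:

$$
P(\lambda) = A_n b_{n,n}(\lambda; a, b) + A_{n-1} b_{n-1,n}(\lambda; a, b) + \cdots + A_1 b_{1,n}(\lambda; a, b) + A_0 b_{0,n}(\lambda; a, b). \tag{33}
$$

For convenience, let us assume $n = 5$; the generalization to all positive $n$ will be clear. Define block matrices

$$
C_0 = \begin{bmatrix}
\dfrac{5a}{b-a} I_s & 0 & 0 & 0 & -\dfrac{b}{b-a} A_0 \\[6pt]
\dfrac{b}{b-a} I_s & \dfrac{4a}{2(b-a)} I_s & 0 & 0 & -\dfrac{b}{b-a} A_1 \\[6pt]
0 & \dfrac{b}{b-a} I_s & \dfrac{3a}{3(b-a)} I_s & 0 & -\dfrac{b}{b-a} A_2 \\[6pt]
0 & 0 & \dfrac{b}{b-a} I_s & \dfrac{2a}{4(b-a)} I_s & -\dfrac{b}{b-a} A_3 \\[6pt]
0 & 0 & 0 & \dfrac{b}{b-a} I_s & \dfrac{a}{5(b-a)} A_5 - \dfrac{b}{b-a} A_4
\end{bmatrix}, \tag{34}
$$

$$
C_1 = \begin{bmatrix}
\dfrac{5}{b-a} I_s & 0 & 0 & 0 & -\dfrac{1}{b-a} A_0 \\[6pt]
\dfrac{1}{b-a} I_s & \dfrac{4}{2(b-a)} I_s & 0 & 0 & -\dfrac{1}{b-a} A_1 \\[6pt]
0 & \dfrac{1}{b-a} I_s & \dfrac{3}{3(b-a)} I_s & 0 & -\dfrac{1}{b-a} A_2 \\[6pt]
0 & 0 & \dfrac{1}{b-a} I_s & \dfrac{2}{4(b-a)} I_s & -\dfrac{1}{b-a} A_3 \\[6pt]
0 & 0 & 0 & \dfrac{1}{b-a} I_s & \dfrac{1}{5(b-a)} A_5 - \dfrac{1}{b-a} A_4
\end{bmatrix}. \tag{35}
$$

For more details see [17, 23, 1].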

A little computation shows that

$$
\left( \begin{bmatrix} b_{0,5}(\lambda; a, b) & b_{1,5}(\lambda; a, b) & b_{2,5}(\lambda; a, b) & b_{3,5}(\lambda; a, b) & b_{4,5}(\lambda; a, b) \end{bmatrix} \otimes I_s \right) (\lambda C_1 - C_0)
= \begin{bmatrix} 0 & 0 & 0 & 0 & \dfrac{b - \lambda}{b - a} P(\lambda) \end{bmatrix}.
$$

This is an obvious analogue of equation (7) for degree-graded polynomials. As in that case, it can be seen that $\lambda C_1 - C_0$ and $P(\lambda)$ have the same eigenvalues. For $\lambda \in Z$ again block pivoting can be used; but in this case it turns out that we may cover the case $\lambda = b$ together with all $\lambda \neq a$ (in practice we would use the block pivoting if $\lambda$ is near to $a$, not just equal to it). An analogue of Theorem 2 also holds:

Theorem 4 Let $P(\lambda)$ be a matrix polynomial of degree $n$ and $\{b_{i,n}(\lambda; a, b)\}_{i=0}^{n}$ be a system of Bernstein polynomials. If $\lambda = a$ is not an eigenvalue of $P(\lambda)$, then the pencil $\lambda C_1 - C_0$ defined by (34) and (35) is a strong linearization of $P(\lambda)$. If $\lambda = a$ is an eigenvalue, then block pivoting can be used to get a strong linearization.

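Proof: The proof is very similar to the proof of Theorem 2, so we give only a brief outline. In [3], the $\lambda$-dependent LU factors of $\lambda C_1 - C_0$ corresponding to a pencil of the form (34), (35) and of degree $n$ are explicitly given as follows:

$$
L(\lambda) = \begin{bmatrix}
I_s & & & \\
\dfrac{\lambda - b}{n(\lambda - a)} I_s & I_s & & \\
 & \ddots & \ddots & \\
 & & \dfrac{(n-1)(\lambda - b)}{2(\lambda - a)} I_s & I_s
\end{bmatrix}, \tag{36}
$$

$$
U(\lambda) = \begin{bmatrix}
\dfrac{n(\lambda - a)}{b - a} I_s & & & & U_{1,n}(\lambda) \\
 & \ddots & & & \vdots \\
 & & \dfrac{3(\lambda - a)}{(n-2)(b - a)} I_s & & U_{n-2,n}(\lambda) \\
 & & & \dfrac{2(\lambda - a)}{(n-1)(b - a)} I_s & U_{n-1,n}(\lambda) \\
 & & & & U_{n,n}(\lambda)
\end{bmatrix}, \tag{37}
$$

where

$$
U_{i,n}(\lambda) = \begin{cases}
\dfrac{b - \lambda}{b - a} A_0, & i = 1 \\[6pt]
\dfrac{b - \lambda}{b - a} A_{i-1} + \dfrac{(i-1)(b - \lambda)}{(n-i+2)(\lambda - a)} U_{i-1,n}(\lambda), & i = 2:(n-1) \\[6pt]
\dfrac{(b - a)^{n-1}}{n(\lambda - a)^{n-1}} P(\lambda), & i = n.
\end{cases} \tag{38}
$$

As in the degree-graded case (Theorem 2), we now replace the last block entry of (37) by

$$
\tilde{U}_{n,n}(\lambda) = \frac{b_{0,n}(\lambda; a, b)\,(b - a)^{n-1}}{b_{n-1,n}(\lambda; a, b)\,(b - \lambda)^{n-1}} I_s = \frac{(b - a)^{n-1}}{n(\lambda - a)^{n-1}} I_s. \tag{39}
$$

Moreover, looking at (15), we only need $\alpha_j$, $\gamma_j$ and $k_{n-1}$ and $k_n$ to construct $F(\lambda)$. By comparison, it turns out that in this case $\alpha_j(\lambda) = \dfrac{b - \lambda}{b - a}$, $\gamma_j(\lambda) = 0$ and $k_{n-1}(\lambda) = \dfrac{b - \lambda}{b - a}$. Here, as opposed to (2), $\alpha_j$ and $k_{n-1}$ are $\lambda$-dependent, and (3) is no longer valid. Now, we can compute a unimodular matrix $F(\lambda)$ analogous to (15).

For the reverse case, instead of (20), we now use

$$
\tilde{U}_{1\,n,n}(\lambda) = \frac{(b - a)^{n-1}}{n(1 - a\lambda)^{n-1}} I_s. \tag{40}
$$

The rest of the proof is exactly the same as that of Theorem 2.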

4.2 Symmetrizing the linearization

The idea discussed in Section 2.2 applies to the Bernstein case as well. Indeed, the following proposition is easily verified:

Proposition 5 Let $\{b_{i,n}(\lambda; a, b)\}_{i=0}^{n}$ be a system of Bernstein polynomials as in (32). Moreover, let $P(\lambda)$ be a Hermitian matrix polynomial defined in that basis. Then, when the generalized companion matrix $C_0 C_1^{-1}$ (formed by (34) and (35)) of $P(\lambda)$ is multiplied on the right by the Hermitian symmetrizer (22), the result is also Hermitian.

5 Interpolating with Lagrange polynomials

5.1 Linearization

Lagrange polynomial interpolation is traditionally viewed as a tool for theoretical analysis; however, recent work reveals several advantages to computation in the Lagrange basis (see e.g. [7, 15]). As above, suppose that an $s \times s$ matrix polynomial $P(\lambda)$ of degree $n$ is sampled at $n + 1$ distinct points $z_0, z_1, \ldots, z_n$, and write $P_j := P(z_j)$. Lagrange polynomials are defined by

$$
\ell_j(\lambda) = w_j \prod_{k=0,\ k \neq j}^{n} (\lambda - z_k), \qquad j = 0, 1, \ldots, n \tag{41}
$$

(and so $Z = \{z_j\}_{j=0}^{n}$) where the weights $w_j$ are

$$
w_j = \prod_{k=0,\ k \neq j}^{n} \frac{1}{z_j - z_k}. \tag{42}
$$

Then $P(\lambda)$ can be expressed in terms of its samples in the form $P(\lambda) = \sum_{j=0}^{n} \ell_j(\lambda) P_j$.

The companion pencil $\lambda C_1 - C_0$ as formulated in Section 3.2 of [1], or equations (4.5) of [2], has (when $n = 3$):

$$
\lambda C_1 - C_0 = \begin{bmatrix}
(\lambda - z_0) I & 0 & 0 & 0 & P_0 \\
0 & (\lambda - z_1) I & 0 & 0 & P_1 \\
0 & 0 & (\lambda - z_2) I & 0 & P_2 \\
0 & 0 & 0 & (\lambda - z_3) I & P_3 \\
-w_0 I & -w_1 I & -w_2 I & -w_3 I & 0
\end{bmatrix}. \tag{43}
$$

The extension to general $n$ is obvious.
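The Lagrange companion pencil can be tested without computing eigenvalues by comparing determinants: with the sign convention assumed here (samples $P_j$ in the last block column, $-w_j I$ in the last block row), a Schur complement argument gives $\det(\lambda C_1 - C_0) = \det P(\lambda)$. The sketch below uses hypothetical function names and is only an illustration of that identity for $n = 3$, $s = 2$.

```python
import numpy as np

def barycentric_weights(z):
    """Weights w_j = prod_{k != j} 1 / (z_j - z_k), as in (42)."""
    m = len(z)
    return np.array([1.0 / np.prod([z[j] - z[k] for k in range(m) if k != j])
                     for j in range(m)])

def lagrange_pencil(z, samples, lam):
    """lam*C1 - C0 in the arrowhead form (43); sign convention is an assumption."""
    m, s = len(z), samples[0].shape[0]            # m = n + 1 nodes
    w = barycentric_weights(z)
    I = np.eye(s)
    M = np.zeros(((m + 1) * s, (m + 1) * s), dtype=complex)
    for j in range(m):
        M[j*s:(j+1)*s, j*s:(j+1)*s] = (lam - z[j]) * I
        M[j*s:(j+1)*s, m*s:] = samples[j]         # last block column: P_j
        M[m*s:, j*s:(j+1)*s] = -w[j] * I          # last block row: -w_j I
    return M

# Test data: a cubic matrix polynomial known through monomial coefficients B_0..B_3.
rng = np.random.default_rng(6)
s, n = 2, 3
B = [rng.standard_normal((s, s)) for _ in range(n + 1)]
z = np.array([-1.0, -0.25, 0.5, 1.5])
samples = [sum(zj ** j * B[j] for j in range(n + 1)) for zj in z]

lam = 0.2 + 0.6j
det_pencil = np.linalg.det(lagrange_pencil(z, samples, lam))
det_P = np.linalg.det(sum(lam ** j * B[j] for j in range(n + 1)))
```

The pencil has size $(n + 2)s$, so besides the $ns$ eigenvalues of $P(\lambda)$ it carries $2s$ infinite eigenvalues, which is the situation the following paragraphs address.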

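Let us define a polynomial $\tilde{P}(\lambda)$ by the (apparently) trivial device of adding terms in $\lambda^{n+1}$ and $\lambda^{n+2}$ with zero matrix coefficients to $P(\lambda)$ (see [12]). This introduces infinite eigenvalues that are defective. The following result then determines the nature of the infinite eigenvalue of $\tilde{P}(\lambda)$ via that of the zero eigenvalue of $\tilde{P}^{\#}(\lambda)$.

Proposition 6 Let $P(\lambda) = \sum_{j=0}^{n} A_j \lambda^j$ with $\det(A_n) = 0$, $A_n \neq 0$, so that $P(\lambda)$ has an infinite eigenvalue. If this infinite eigenvalue of $P(\lambda)$ has partial multiplicities $m_1 \geq \cdots \geq m_t > 0$ then $t = n - \operatorname{rank}(A_n)$ and $\tilde{P}(\lambda)$ has an infinite eigenvalue with partial multiplicities $m_1 + 2, \ldots, m_t + 2, 2, \ldots, 2$ (the $2$ being repeated $n - t$ times).

Proof: The partial multiplicities of the eigenvalues of $P(\lambda)$ at infinity coincide with those of the zero eigenvalue of $P^{\#}(\lambda) = \lambda^n P(1/\lambda)$. By Theorem A.3.4 of [14]

$$
P^{\#}(\lambda) = E_0(\lambda) \operatorname{diag}\left[ \lambda^{m_1}, \ldots, \lambda^{m_t}, 1, \ldots, 1 \right] F_0(\lambda) \tag{44}
$$

for matrix polynomials $E_0(\lambda)$, $F_0(\lambda)$ invertible at $\lambda = 0$ and since $P^{\#}(0) = A_n$, it follows that $n - t = \operatorname{rank}(A_n)$, or $t = n - \operatorname{rank}(A_n)$.

For the reverse polynomial of $\tilde{P}(\lambda)$,

$$
\tilde{P}^{\#}(\lambda) = \lambda^{n+2} \tilde{P}(1/\lambda) = \lambda^{n+2} P(1/\lambda) = \lambda^2 \left( \lambda^n P(1/\lambda) \right) = \lambda^2 P^{\#}(\lambda). \tag{45}
$$

It follows from (44) that

$$
\tilde{P}^{\#}(\lambda) = E_0(\lambda) \operatorname{diag}\left[ \lambda^{m_1 + 2}, \ldots, \lambda^{m_t + 2}, \lambda^2, \ldots, \lambda^2 \right] F_0(\lambda). \tag{46}
$$

But this is just a Smith form for $\tilde{P}^{\#}(\lambda)$ and shows that $\tilde{P}(\lambda)$ itself has an infinite eigenvalue with the multiplicities claimed.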

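Theorem 7 The pencil $\lambda C_1 - C_0$ of equation (43) is a strong linearization of $\tilde{P}(\lambda)$.

Proof: Again the proof is very similar to the proof of Theorem 2. Assume first that $Z$ does not intersect the set of all eigenvalues of $\tilde{P}(\lambda)$ and $\tilde{P}^{\#}(\lambda)$.

In [3], the $\lambda$-dependent LU factors of $\lambda C_1 - C_0$ corresponding to a pencil of the form (43) and of degree $n$ are explicitly given as follows:

$$
L = \begin{bmatrix}
I_s & & & \\
 & \ddots & & \\
 & & I_s & \\
-\dfrac{w_0}{\lambda - z_0} I_s & \cdots & -\dfrac{w_n}{\lambda - z_n} I_s & I_s
\end{bmatrix}, \tag{47}
$$

$$
U = \begin{bmatrix}
(\lambda - z_0) I_s & & & P_0 \\
 & \ddots & & \vdots \\
 & & (\lambda - z_n) I_s & P_n \\
 & & & \dfrac{1}{(\lambda - z_0) \cdots (\lambda - z_n)} P(\lambda)
\end{bmatrix}. \tag{48}
$$

Here, to get $\tilde{U}(\lambda)$, we replace the last block entry of (48) by

$$
\tilde{U}_{n+2,n+2}(\lambda) = \frac{1}{(\lambda - z_0) \cdots (\lambda - z_n)} I_s. \tag{49}
$$

It turns out that

$$
E(\lambda) = \begin{bmatrix}
I_s & & & & \\
 & I_s & & & \\
 & & \ddots & & \\
 & & & I_s & \\
\dfrac{w_0}{\lambda - z_0} I_s & \dfrac{w_1}{\lambda - z_1} I_s & \cdots & \dfrac{w_n}{\lambda - z_n} I_s & I_s
\end{bmatrix}, \tag{50}
$$

$$
F_{i,j}(\lambda) = \begin{cases}
\dfrac{1}{\lambda - z_{i-1}} I_s, & i = j = 1:(n+1) \\[6pt]
(\lambda - z_0) \cdots (\lambda - z_n) I_s, & i = j = n+2 \\[6pt]
-(\lambda - z_0) \cdots (\lambda - z_{i-2})(\lambda - z_i) \cdots (\lambda - z_n) P_{i-1}, & i = 1:(n+1);\ j = n+2 \\[6pt]
0_s, & \text{otherwise},
\end{cases} \tag{51}
$$

where $P_i$ are the values of $P(\lambda)$ evaluated at the nodes.

For the reverse case, we use

$$
\tilde{U}_{1\,n+2,n+2}(\lambda) = \frac{1}{(1 - \lambda z_0) \cdots (1 - \lambda z_n)} I_s, \tag{52}
$$

instead of (20). As with the other bases, we can use (51) to construct $K(\lambda)$:

$$
K_{i,j}(\lambda) = \begin{cases}
\dfrac{1}{\lambda} F_{i,j}(1/\lambda), & i = j = 1:(n+1) \\[6pt]
\lambda^{n+1} F_{i,n+2}(1/\lambda), & i = 1:(n+2);\ j = n+2.
\end{cases} \tag{53}
$$

The rest of the proof is exactly the same as the proof of Theorem 2 (including the reference to [3] for the block pivoting case).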

Remark. Computation of the right eigenvectors of the pencil (43) allows one to recover the right eigenvectors of $P(\lambda)$ in the following manner: the right eigenvectors of the pencil (43) are of the form $[\ell_0(\lambda) v, \ell_1(\lambda) v, \ldots, \ell_n(\lambda) v, 0]^T$, and since $1 = \sum_{k=0}^{n} \ell_k(\lambda)$, simply adding these subvectors gives $v$ (see [1] for details). The numerical stability of this procedure has not been established.


5.2 Symmetrizing the Lagrangian companion pencil

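Multiplying $\lambda C_1 - C_0$ of (43) on the right by the block-diagonal

$$
A := \begin{bmatrix}
w_0^{-1} P_0 & 0 & 0 & 0 \\
0 & w_1^{-1} P_1 & 0 & 0 \\
0 & 0 & w_2^{-1} P_2 & 0 \\
0 & 0 & 0 & -I
\end{bmatrix}
$$

we obtain

$$
(\lambda C_1 - C_0) A = \begin{bmatrix}
\dfrac{\lambda - z_0}{w_0} P_0 & 0 & 0 & -P_0 \\
0 & \dfrac{\lambda - z_1}{w_1} P_1 & 0 & -P_1 \\
0 & 0 & \dfrac{\lambda - z_2}{w_2} P_2 & -P_2 \\
-P_0 & -P_1 & -P_2 & 0
\end{bmatrix}.
$$

As in Section 2.2, the reason for doing this is that the pencil on the right is now block-symmetric. This can provide computational advantages, but it is particularly interesting when, as in many applications, the $z_j$ (and hence $w_j$) are real and $P_0, \ldots, P_n$ are Hermitian ($P_j^H = P_j$), or when the data are complex symmetric ($P_j^T = P_j$).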

References

[1] Amiraslani A., New Algorithms for Matrices, Polynomials and Matrix Polynomials, Ph.D. Dissertation, Applied Math., University of Western Ontario, 2006.

[2] Amiraslani A., Aruliah D. A., and Corless R. M., The Rayleigh quotient iteration for generalised companion matrix pencils. Submitted, 2006.

[3] Amiraslani A., Aruliah D. A., and Corless R. M., Block LU Factors of Generalized Companion Matrix Pencils. Submitted, 2006.

[4] Aruliah D. A., Corless R. M., Gonzalez-Vega L., Shakoori A., Geometric Applications of the Bezout Matrix in the Bivariate Tensor-Product Lagrange Basis, submitted, 2007.

[5] Barnett S., Polynomials and Linear Control Systems, Dekker, New York, 1983.

[6] Corless R. M., and Litt G., Generalized companion matrices for polynomials not expressed in monomial bases, Applied Math, University of Western Ontario, Tech Report, 2001.

[7] Berrut J., and Trefethen L., Barycentric Lagrange Interpolation, SIAM Review, 46:3, 2004, 501-517.

[8] Davis P. J., Interpolation and Approximation, Blaisdell, New York, 1963.

[9] Farin G., Curves and Surfaces for Computer-Aided Geometric Design, Academic Press, San Diego, 1997.

[10] Farouki R. T., Goodman T. N. T., and Sauer T., Construction of orthogonal bases for polynomials in Bernstein form on triangular simplex domains, Comput. Aided Geom. Design, 20, 2003, 209-230.

[11] Gautschi W., Orthogonal Polynomials: Computation and Approximation, Clarendon, Oxford, 2004.

[12] Gohberg I., Kaashoek M. A., and Lancaster P., General theory of regular matrix polynomials and band Toeplitz operators, Int. Eq. & Op. Theory, 11, 1988, 776-882.

[13] Gohberg I., Lancaster P., and Rodman L., Indefinite Linear Algebra and Applications, Birkhauser, Basel, 2005.

[14] Gohberg I., Lancaster P., and Rodman L., Invariant Subspaces of Matrices with Applications, SIAM, 2006.

[15] Higham N., The numerical stability of barycentric Lagrange interpolation, IMA Journal of Numerical Analysis, 24, 2004, 547-556.

[16] Higham N. J., Mackey D. S., Mackey N., and Tisseur F., Symmetric linearizations for matrix polynomials, SIAM J. Matrix Anal. Appl., 29, 2006, 143-159.

[17] Jonsson G. F., and Vavasis S., Solving polynomials with small leading coefficients, SIAM Journal on Matrix Analysis and Applications, 26, 2005, 400-414.

[18] Lancaster P., and Psarrakos P., A note on weak and strong linearizations of regular matrix polynomials, Manchester Center for Computational Mathematics: nareport 470, June, 2005.

[19] Lancaster P., and Rodman L., Canonical forms for hermitian matrix pairs under strict equivalence and congruence, SIAM Review, 47, 2005, 407-443.

[20] Lakshman Y., and Saunders B., Sparse Polynomial Interpolation in Non-standard Bases, SIAM J. Comput., 24:2, 1995, 387-397.

[21] Mackey D. S., Mackey N., Mehl C., and Mehrmann V., Vector spaces of linearizations for matrix polynomials, SIAM J. Matrix Anal. Appl., 28, 2006, 971-1004.

[22] Rivlin T., Chebyshev Polynomials, Wiley, New York, 1990.

[23] Winkler J. R., A companion matrix resultant for Bernstein polynomials, Linear Algebra Appl., 362, 2003, 153-175.