
-

Virtualization

Adam Belay

-

What is a virtual machine?

• Simulation of a computer
• Running as an application on a host computer
• Accurate
• Isolated
• Fast

-

Why use a virtual machine?

• To run multiple operating systems (e.g. Windows and Linux)
• To manage big machines (allocate cores and memory at O/S granularity)
• Kernel development (e.g. like QEMU + JOS)
• Better fault isolation (defense in depth)
• To package applications with a specific kernel version and environment
• To improve resource utilization

-

How accurate do we have to be?

• Must handle weird quirks in existing OSes
  • Even bug-for-bug compatibility

• Must maintain isolation with malicious software
  • Guest cannot break out of VM!

• Must be impossible for guest to distinguish VM from real machine
  • Some VMs compromise, modifying the guest kernel to reduce accuracy requirement

-

VMs are an old idea

• 1960s: IBM used VMs to share big machines
• 1970s: IBM specialized CPUs for virtualization
• 1990s: VMware repopularized VMs for x86 HW
• 2000s: AMD & Intel specialized CPUs for virtualization

-

Process Architecture

[Diagram: processes (vi, gcc, firefox) run on top of the OS, which runs on top of the hardware]

-

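VM Architecture

• What if the process abstraction looked just like HW?

[Diagram: the OS (VMM) runs on top of the hardware; on top of it run ordinary processes (vi, gcc, firefox) and guest OSes, each guest OS on its own virtual HW]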

-

Comparing a process and HW

Process
• Nonprivileged registers and instructions
• Virtual memory
• Signals
• File system and sockets

Hardware
• All registers and instructions
• Virt. mem. and MMU
• Traps and interrupts
• I/O devices and DMA

-

Can a CPU be virtualized?

Requirements to be “classically virtualizable” defined by Popek and Goldberg in 1974:

1. Fidelity: Software on the VMM executes identically to its execution on hardware, barring timing effects.

2. Performance: An overwhelming majority of guest instructions are executed by the hardware without the intervention of the VMM.

3. Safety: The VMM manages all hardware resources.

-

Why not simulation?

• VMM interprets each instruction (e.g. BOCHS)
• Maintain machine state for each register
• Emulate I/O ports and memory
• Violates performance requirement
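
To make the performance cost concrete, here is a minimal sketch of an interpreter loop. The toy instruction set, `struct cpu`, and `interp_run` are hypothetical names invented for illustration (this is not BOCHS code and not x86). The point is only that every guest instruction pays for a software fetch, decode, and dispatch, and that all register state lives in memory.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical toy ISA, for illustration only (not x86, not BOCHS). */
enum opcode { OP_HALT = 0, OP_LOADI = 1, OP_ADD = 2 };

struct insn {
    uint8_t op;   /* one of enum opcode */
    uint8_t dst;  /* destination register index */
    uint8_t src;  /* source register index (used by OP_ADD) */
    int32_t imm;  /* immediate value (used by OP_LOADI) */
};

struct cpu {
    int32_t regs[4];  /* simulated register file, kept in memory */
    size_t pc;        /* simulated program counter */
    uint64_t steps;   /* number of instructions interpreted so far */
};

/* Interpret until OP_HALT, an unknown opcode, or the end of the program. */
void interp_run(struct cpu *c, const struct insn *prog, size_t len)
{
    while (c->pc < len) {
        const struct insn *i = &prog[c->pc++];  /* fetch */
        c->steps++;
        switch (i->op) {                        /* decode and dispatch */
        case OP_LOADI:
            c->regs[i->dst & 3] = i->imm;
            break;
        case OP_ADD:
            c->regs[i->dst & 3] += c->regs[i->src & 3];
            break;
        default:                                /* OP_HALT or unknown */
            return;
        }
    }
}
```

Even this three-opcode loop spends several host instructions per guest instruction, which is why interpretation cannot meet the performance requirement.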

-

Idea: Execute guest instructions on real CPU whenever possible

• Works fine for most instructions
  • E.g. add %eax, %ebx
• But privileged instructions could be harmful
  • Would violate safety property

-

Idea: Run guest kernels at CPL 3

• Ordinary instructions work fine
• Privileged instructions should trap to VMM (general protection fault)
• VMM can apply privileged operations on “virtual” state, not to real hardware
• This is called “trap-and-emulate”

-

Trap and emulate example

• CLI/STI: disable and enable interrupts
• EFLAGS IF bit tracks current status
• VMM maintains virtual copy of EFLAGS register
• VMM controls hardware EFLAGS
  • Probably leave interrupts enabled even if VM runs CLI

• VMM looks at virtual EFLAGS register to decide when to interrupt guest
• VMM must make sure guest only sees virtual EFLAGS
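
The CLI/STI case can be sketched as follows. This is a minimal model under stated assumptions: `struct vcpu`, `vmm_emulate`, and `vmm_raise_irq` are hypothetical names, the general protection fault plumbing is omitted, and the one-instruction interrupt shadow after STI on real hardware is ignored. Only the position of IF (bit 9 of EFLAGS) is an architectural fact.

```c
#include <stdbool.h>
#include <stdint.h>

#define EFLAGS_IF (1u << 9)  /* interrupt-enable flag is bit 9 of EFLAGS */

/* Hypothetical per-guest state kept by the VMM. */
struct vcpu {
    uint32_t veflags;    /* virtual EFLAGS, the only copy the guest sees */
    bool irq_pending;    /* a virtual interrupt is waiting for delivery */
    unsigned delivered;  /* number of interrupts injected into the guest */
};

enum priv_insn { INSN_CLI, INSN_STI };

/* Deliver a pending interrupt only if the guest's virtual IF allows it. */
static void maybe_inject(struct vcpu *v)
{
    if (v->irq_pending && (v->veflags & EFLAGS_IF)) {
        v->irq_pending = false;
        v->delivered++;
    }
}

/* Called after the guest, running at CPL 3, traps on CLI or STI.
 * Only the virtual flag changes; hardware interrupts stay enabled. */
void vmm_emulate(struct vcpu *v, enum priv_insn insn)
{
    if (insn == INSN_CLI)
        v->veflags &= ~EFLAGS_IF;
    else
        v->veflags |= EFLAGS_IF;
    maybe_inject(v);
}

/* Called when a device interrupt destined for this guest arrives. */
void vmm_raise_irq(struct vcpu *v)
{
    v->irq_pending = true;
    maybe_inject(v);
}
```

An interrupt raised while the guest has run CLI stays pending in the VMM and is injected only once the guest runs STI.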

-

What about virtual memory?

• Want to maintain illusion that each VM has dedicated physical memory
• Guest wants to start at PA 0, use all of RAM
• VMM needs to support many guests, they can’t all really use the same physical addresses
• Idea:
  • Claim RAM is smaller than real RAM
  • Keep paging enabled
  • Maintain a “shadow” copy of guest page table
  • Shadow maps VAs to different PA than guest requests
  • Real %CR3 points to shadow table
  • Virtual %CR3 points to guest page table

-

Virtualization memory diagram

[Diagram: Host Page Table maps Host Virtual Address -> Host Physical Address]

-

Virtualization memory diagram

[Diagram:
Host Page Table: Host Virtual Address -> Host Physical Address
Guest PT: Guest Virtual Address -> Guest Physical Address
VMM Map: Guest Physical Address -> Host Physical Address
Shadow Page Table: Guest Virtual Address -> Host Physical Address]

-

Example:

• Guest wants guest-physical page @ 0x1000000
• VMM map redirects guest-physical 0x1000000 to host-physical 0x2000000
• VMM traps if guest changes %cr3 or writes to guest page table
• Transfers each guest PTE to shadow page table
• Uses VMM map to translate guest-physical page addresses in page table to host-physical addresses
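
The PTE transfer step in this example can be sketched as below. This is an illustrative model, not VMware’s implementation: `struct vmm_region`, `vmm_map_lookup`, and `shadow_pte` are hypothetical names, the VMM map is assumed to be a small list of page-aligned contiguous regions, and PTEs are assumed to be 32 bits with flags in the low 12 bits.

```c
#include <stddef.h>
#include <stdint.h>

#define PTE_FLAGS_MASK 0xfffu  /* low 12 bits of a PTE hold flags */
#define PTE_PRESENT    0x1u

/* Hypothetical VMM map entry: a contiguous, page-aligned region. */
struct vmm_region {
    uint32_t guest_pa;  /* start of region in guest-physical space */
    uint32_t host_pa;   /* where the VMM actually placed it */
    uint32_t len;       /* region length in bytes */
};

/* Translate guest-physical to host-physical. Returns 1 on success. */
int vmm_map_lookup(const struct vmm_region *map, size_t n,
                   uint32_t gpa, uint32_t *hpa)
{
    for (size_t i = 0; i < n; i++) {
        if (gpa >= map[i].guest_pa && gpa - map[i].guest_pa < map[i].len) {
            *hpa = map[i].host_pa + (gpa - map[i].guest_pa);
            return 1;
        }
    }
    return 0;
}

/* Build the shadow PTE for one guest PTE: keep the guest's flag bits,
 * replace the guest-physical frame with the host-physical frame.
 * Returns 0 (not present) if the guest PTE is not present or points
 * outside the memory the VMM gave this guest. */
uint32_t shadow_pte(const struct vmm_region *map, size_t n,
                    uint32_t guest_pte)
{
    uint32_t hpa;

    if (!(guest_pte & PTE_PRESENT))
        return 0;
    if (!vmm_map_lookup(map, n, guest_pte & ~PTE_FLAGS_MASK, &hpa))
        return 0;
    return (hpa & ~PTE_FLAGS_MASK) | (guest_pte & PTE_FLAGS_MASK);
}
```

With a map entry from guest-physical 0x1000000 to host-physical 0x2000000, a guest PTE pointing at frame 0x1000000 becomes a shadow PTE pointing at frame 0x2000000 with the same flag bits.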

-

Why can’t the VMM modify the guest page table in-place?

-

Need shadow copy of all privileged state

• So far discussed EFLAGS and page tables
• Also need GDT, IDT, LDTR, %CR*, etc.

-

Unfortunately trap-and-emulate is not possible on x86

Two problems:

1. Some instructions behave differently in CPL 3 instead of trapping
2. Some registers leak state that reveals if the CPU is running in CPL 3
  • Violates fidelity property

-

x86 isn’t classically virtualizable

Problems -> CPL 3 versus CPL 0:

• mov %cs, %ax
  • %cs contains the CPL in its lower two bits

• popfl/pushfl
  • Privileged bits, including EFLAGS.IF, are masked out

• iretq
  • No ring change, so doesn’t restore SS/ESP
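
Two of these problems can be modeled in a few lines. The sketch below is a simplification under stated assumptions: `cpl_from_cs` and `popf_model` are hypothetical helper names, IOPL is assumed to be 0, and only the IF bit is modeled (real popf protects other privileged bits as well).

```c
#include <stdint.h>

#define EFLAGS_IF (1u << 9)  /* interrupt-enable flag is bit 9 of EFLAGS */

/* The low two bits of the %cs selector hold the current privilege
 * level, so a deprivileged guest kernel can read them and notice that
 * it is not running at CPL 0. No trap occurs. */
unsigned cpl_from_cs(uint16_t cs)
{
    return cs & 3u;
}

/* Simplified model of popf's effect on EFLAGS, assuming IOPL = 0.
 * At CPL 0 the popped value is loaded as-is. At CPL 3 the IF bit is
 * silently left unchanged instead of trapping, so the VMM never gets
 * a chance to emulate the guest's intent. */
uint32_t popf_model(unsigned cpl, uint32_t old_eflags, uint32_t popped)
{
    if (cpl == 0)
        return popped;
    return (popped & ~EFLAGS_IF) | (old_eflags & EFLAGS_IF);
}
```

A guest kernel that tries to disable interrupts with popf at CPL 3 keeps running with IF unchanged and never notices, which is exactly the failure mode that trap-and-emulate cannot catch.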

-

Two possible solutions

1. Binary translation
  • Rewrite offending instructions to behave correctly

2. Hardware virtualization
  • CPU maintains shadow state internally and directly executes privileged guest instructions

-

Strawman binary translation

• Replace all instructions that cause violations with INT $3, which traps
• INT $3 is one byte, so can fit inside any x86 instruction without changing size/layout
• But unrealistic
  • Don’t know the difference between code and data or where instruction boundaries lie
• VMware’s solution is much more sophisticated

-

VMware’s binary translator

• Kernel translated dynamically like a JIT
  • Idea: scan only as executed, since execution reveals instruction boundaries
  • When VMM first loads guest kernel, rewrite from entry to first jump
  • Most instructions translate identically

• Need to translate instructions in chunks
  • Called a basic block
  • Either 12 instructions or the control flow instruction, whichever occurs first

• Only guest kernel code is translated
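
The chunking rule (12 instructions or a control flow instruction, whichever occurs first) can be sketched as follows. `struct decoded` and `unit_length` are hypothetical names; the sketch assumes instructions have already been decoded, which on real x86 is the hard part.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_UNIT_INSNS 12  /* size cap stated on the slide */

/* Hypothetical pre-decoded instruction; real x86 decoding is far more
 * involved than a single flag. */
struct decoded {
    bool is_control_flow;  /* jmp, jcc, call, ret, ... */
};

/* Number of instructions in the chunk that starts at insns[start].
 * The chunk ends after the first control flow instruction or after
 * MAX_UNIT_INSNS instructions, whichever occurs first. */
size_t unit_length(const struct decoded *insns, size_t n, size_t start)
{
    size_t count = 0;

    while (start + count < n && count < MAX_UNIT_INSNS) {
        count++;
        if (insns[start + count - 1].is_control_flow)
            break;
    }
    return count;
}
```

The translator would call this repeatedly as execution reaches new addresses, so instruction boundaries are discovered only along paths that actually run.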

-

Guest kernel shares address space with VMM

• Uses segmentation to protect VMM memory
• VMM loaded at high virtual addresses, translated guest kernel at low addresses
• Program segment limits to “truncate” address space, preventing all segments from accessing VMM except %GS
• What if guest kernel instruction uses %GS selector?
• %GS provides fast access to data shared between guest kernel and VMM

• Assumption: Translated code can’t violate isolation
  • Can never directly access %GS, %CR3, GDT, etc.

-

Why put guest kernel and VMM in same address space?

-

Why put guest kernel and VMM in same address space?

• Shared state becomes inexpensive to access, e.g. cli -> “vcpu.flags.IF := 0”
• Translated code is safe, can’t violate isolation after translation

-

Translation example

• All control flow requires indirection

Original: isPrime()

    mov %ecx, %edi    # %ecx = %edi (a)
    mov %esi, $2      # %esi = 2
    cmp %esi, %ecx    # is i >= a?
    jge prime         # if yes jump (end of basic block)
    …

C source:

    int isPrime(int a) {
        for (int i = 2; i < a; i++) {
            if (a % i == 0) return 0;
        }
        return 1;
    }

-

Translation example

• All control flow requires indirection
• Original: isPrime()

    mov %ecx, %edi    # %ecx = %edi (a)
    mov %esi, $2      # %esi = 2
    cmp %esi, %ecx    # is i >= a?
    jge prime         # if yes jump
    …

Translated: isPrime()’

    mov %ecx, %edi    # IDENT
    mov %esi, $2
    cmp %esi, %ecx
    jge [takenAddr]   # JCC
    jmp [fallthrAddr]
    …

-

Translation example

• Brackets represent continuations
  • First time they are executed, jump into BT and generate the next basic block
  • Can elide “jmp [fallthrAddr]” if it’s the next address translated
• Indirect control flow is harder
  • “(jmp, call, ret) does not go to a fixed target, preventing translation-time binding. Instead, the translated target must be computed dynamically, e.g., with a hash table lookup. The resulting overhead varies by workload but is typically a single-digit percentage.” (from paper)
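
The hash table lookup mentioned in the quote could look roughly like this. It is a minimal sketch, not VMware’s data structure: `struct tcache`, `tc_insert`, and `tc_lookup` are hypothetical names, the table is a fixed-size open-addressing table with linear probing, and a return value of 0 is assumed to mean "not translated yet".

```c
#include <stddef.h>
#include <stdint.h>

#define TC_SLOTS 64  /* must stay a power of two matching the hash shift */

struct tc_entry {
    uint32_t guest_pc;       /* address in the original guest code */
    uint32_t translated_pc;  /* address of its translation */
    int valid;
};

struct tcache {
    struct tc_entry slot[TC_SLOTS];
};

/* Multiplicative hash; the top 6 bits select one of 64 slots. */
static size_t tc_hash(uint32_t pc)
{
    return (size_t)((uint32_t)(pc * 2654435761u) >> 26);
}

/* Record that guest_pc has been translated to translated_pc. */
void tc_insert(struct tcache *tc, uint32_t guest_pc, uint32_t translated_pc)
{
    size_t i = tc_hash(guest_pc);

    for (size_t probe = 0; probe < TC_SLOTS; probe++) {
        struct tc_entry *e = &tc->slot[(i + probe) % TC_SLOTS];
        if (!e->valid || e->guest_pc == guest_pc) {
            e->guest_pc = guest_pc;
            e->translated_pc = translated_pc;
            e->valid = 1;
            return;
        }
    }
    /* Table full: this sketch simply drops the entry. */
}

/* Executed on every indirect jmp/call/ret in translated code. Returns
 * the translated target, or 0 if the target has no translation yet
 * (the caller would then invoke the translator). */
uint32_t tc_lookup(const struct tcache *tc, uint32_t guest_pc)
{
    size_t i = tc_hash(guest_pc);

    for (size_t probe = 0; probe < TC_SLOTS; probe++) {
        const struct tc_entry *e = &tc->slot[(i + probe) % TC_SLOTS];
        if (!e->valid)
            return 0;
        if (e->guest_pc == guest_pc)
            return e->translated_pc;
    }
    return 0;
}
```

Direct branches pay this cost at most once, because the continuation can be patched to point at the translated target; indirect branches pay for a lookup on every execution, which is where the overhead in the quote comes from.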

-

Hardware virtualization

• CPU maintains guest-copy of privileged state in special region called the virtual machine control structure (VMCS)
• CPU operates in two modes
  • VMX non-root mode: runs guest kernel
  • VMX root mode: runs VMM
  • Hardware saves and restores privileged register state to and from the VMCS as it switches modes
  • Each mode has its own separate privilege rings

• Net effect: Hardware can run most privileged guest instructions directly without emulation

-

What about MMU?

• Hardware effectively maintains two page tables
• Normal page table controlled by guest kernel
• Extended page table (EPT) controlled by VMM
• EPT didn’t exist when VMware published paper

[Diagram: Guest Virtual Address -> (Guest PT) -> Guest Physical Address -> (EPT) -> Host Physical Address]
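
The two-stage translation in the diagram can be sketched with toy tables. The assumptions are significant: `struct toy_table`, `toy_walk`, and `nested_translate` are hypothetical names, each table is a single-level array of eight page numbers rather than a real multi-level x86 page table, and the sentinel `UNMAPPED` stands in for a fault.

```c
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_MASK  0xfffu
#define NPAGES     8            /* toy address space: 8 pages */
#define UNMAPPED   UINT32_MAX   /* sentinel: no translation */

/* Toy single-level table: index is a page number, value is the page
 * number it maps to, or UNMAPPED. */
struct toy_table {
    uint32_t next[NPAGES];
};

/* One translation stage: look up the page, keep the page offset. */
static uint32_t toy_walk(const struct toy_table *t, uint32_t addr)
{
    uint32_t pn = addr >> PAGE_SHIFT;

    if (pn >= NPAGES || t->next[pn] == UNMAPPED)
        return UNMAPPED;
    return (t->next[pn] << PAGE_SHIFT) | (addr & PAGE_MASK);
}

/* Guest virtual -> guest physical via the guest's own page table,
 * then guest physical -> host physical via the VMM-controlled EPT. */
uint32_t nested_translate(const struct toy_table *guest_pt,
                          const struct toy_table *ept, uint32_t gva)
{
    uint32_t gpa = toy_walk(guest_pt, gva);

    if (gpa == UNMAPPED)
        return UNMAPPED;        /* would be a guest page fault */
    return toy_walk(ept, gpa);  /* UNMAPPED here: EPT violation */
}
```

Because the guest table is used as-is, the guest can edit its own page table without trapping; the VMM only maintains the second table.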

-

What’s better, HW or SW virt?

-

What’s better, HW or SW virt?

• Software virtualization advantages
  • Trap emulation: Most traps can be replaced with callouts
  • Emulation speed: BT can generate purpose-built emulation code, hardware traps must decode the instruction, etc.
  • Callout avoidance: Sometimes BT can even inline callouts

• Hardware virtualization advantages
  • Code density: Translated code requires more instructions and larger opcodes
  • Precise exceptions: BT must perform extra work to recover guest state
  • System calls: Don’t require VMM intervention

-

What’s better, HW or SW virt?

[Figure 4. Virtualization nanobenchmarks. Bar chart on a logarithmic scale from 0.1 to 100000; y-axis: CPU cycles (smaller is better); x-axis: syscall, in, cr8wr, call/ret, pgfault, divzero, ptemod; series: Native, Software VMM, Hardware VMM.]

…between the two VMMs, the hardware VMM inducing approximately 4.4 times greater overhead than the software VMM. Still, this program stresses many divergent paths through both VMMs, such as system calls, context switching, creation of address spaces, modification of traced page table entries, and injection of page faults.

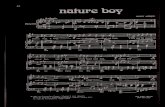

6.3 Virtualization nanobenchmarks

To better understand the performance differences between the two VMMs, we wrote a series of “nanobenchmarks” that each exercise a single virtualization-sensitive operation. Often, the measured operation is a single instruction long. For precise control over the executed code, we repurposed a custom OS, FrobOS, that VMware developed for VMM testing.

Our modified FrobOS boots, establishes a minimal runtime environment for C code, calibrates its measurement loops, and then executes a series of virtualization-sensitive operations. The test repeats each operation many times, amortizing the cost of the binary translator’s adaptations over multiple iterations. In our experience, this is representative of guest behavior, in which adaptation converges on a small fraction of poorly behaving guest instructions. The results of these nanobenchmarks are presented in Figure 4. The large spread of cycle counts requires the use of a logarithmic scale.

syscall. This test measures round-trip transitions from user-level to supervisor-level via the syscall and sysret instructions. The software VMM introduces a layer of code and an extra privilege transition, requiring approximately 2000 more cycles than a native system call. In the hardware VMM, system calls execute without VMM intervention, so as we expect, the hardware VMM executes system calls at native speed.

in. We execute an in instruction from port 0x80, the BIOS POST port. Native execution accesses an off-CPU register in the chipset, requiring 3209 cycles. The software VMM, on the other hand, translates the in into a short sequence of instructions that interacts with the virtual chipset model. Thus, the software VMM executes this instruction fifteen times faster than native. The hardware VMM must perform a vmm/guest round trip to complete the I/O operation. This transition causes in to consume 15826 cycles in the tested system.

cr8wr. %cr8 is a privileged register that determines which pending interrupts can be delivered. Only %cr8 writes that reduce %cr8 below the priority of the highest pending virtual interrupt cause an exit [24]. Our FrobOS test never takes interrupts so no %cr8 write in the test ever causes an exit. As with syscall, the hardware VMM’s performance is similar to native. The software VMM translates %cr8 writes into a short sequence of simple instructions, completing the %cr8 write in 35 cycles, about four times faster than native.

[Figure 5. Sources of virtualization overhead in an XP boot/halt. Bar chart; y-axis: Overhead (seconds), 0 to 10; x-axis: syscall, in/out, cr8wr, call/ret, pgfault, ptemod, translate; series: Software VMM, Hardware VMM.]

call/ret. BT slows down indirect control flow. We target this overhead by repeatedly calling a subroutine. Since the hardware VMM executes calls and returns without modification, the hardware VMM and native both execute the call/return pair in 11 cycles. The software VMM introduces an average penalty of 40 cycles, requiring 51 cycles.

pgfault. In both VMMs, the software MMU interposes on both true and hidden page faults. This test targets the overheads for true page faults. While both VMM paths are logically similar, the software VMM (3927 cycles) performs much better than the hardware VMM (11242 cycles). This is due mostly to the shorter path whereby the software VMM receives control; page faults, while by no means cheap natively (1093 cycles on this hardware), are faster than a vmrun/exit round-trip.

divzero. Division by zero has fault semantics similar to those of page faults, but does not invoke the software MMU. While division by zero is uncommon in guest workloads, we include this nanobenchmark to clarify the pgfault results. It allows us to separate out the virtualization overheads caused by faults from the overheads introduced by the virtual MMU. As expected, the hardware VMM (1014 cycles) delivers near native performance (889 cycles), decisively beating the software VMM (3223 cycles).

ptemod. Both VMMs use the shadowing technique described in Section 2.4 to implement guest paging with trace-based coherency. The traces induce significant overheads for PTE writes, causing very high penalties relative to the native single cycle store. The software VMM adaptively discovers the PTE write and translates it into a small program that is cheaper than a trap but still quite costly. This small program consumes 391 cycles on each iteration. The hardware VMM enters and exits guest mode repeatedly, causing it to perform approximately thirty times worse than the software VMM, requiring 12733 cycles.

To place this data in context, Figure 5 shows the total overheads incurred by each nano-operation during a 64-bit Windows XP Professional boot/halt. Although the pgfault nanobenchmark has much higher cost on the hardware VMM than the software VMM, the boot/halt workload took so few true page faults that the difference does not affect the bottom line materially. In contrast, the guest performed over 1 million PTE modifications, causing high overheads for the hardware VMM. While the figure may suggest that in/out dominates the execution profile of the hardware VMM, the vast majority of these instructions originate in atypical BIOS code that is unused after initial boot.
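
As a quick check on the ratios quoted above, the cycle counts from the text can be tabulated and divided. The table holds only the benchmarks for which the excerpt states all three numbers; `struct nano`, `nanos`, and `hw_over_sw` are names made up for this sketch.

```c
/* Cycle counts exactly as quoted in the excerpt above. */
struct nano {
    const char *name;
    double native;  /* cycles on bare hardware */
    double sw;      /* cycles under the software VMM */
    double hw;      /* cycles under the hardware VMM */
};

static const struct nano nanos[] = {
    { "call/ret", 11.0,   51.0,   11.0    },
    { "pgfault",  1093.0, 3927.0, 11242.0 },
    { "divzero",  889.0,  3223.0, 1014.0  },
    { "ptemod",   1.0,    391.0,  12733.0 },
};

/* How many times slower the hardware VMM is than the software VMM;
 * values below 1 mean the hardware VMM is faster. */
double hw_over_sw(const struct nano *n)
{
    return n->hw / n->sw;
}
```

For ptemod this gives 12733 / 391, a bit over 32, matching the "approximately thirty times worse" in the text; for divzero the ratio is below 1, matching the hardware VMM's win there.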

-

What’s better, shadow page table or EPT?

-

What’s better, shadow page table or EPT?

• EPT is faster when page table contents change frequently
  • Fewer traps

• Shadow page table is faster when page table is stable
  • Less TLB miss overhead
  • One page table to walk through instead of two
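
The TLB miss cost difference can be put in numbers with a short worked formula. This sketch assumes a radix page table with one memory reference per level and no paging-structure caches; `one_dim_refs` and `nested_refs` are hypothetical helper names.

```c
/* Memory references needed to resolve one TLB miss.
 *
 * Shadow (or native) paging walks a single table: one reference per
 * level.
 *
 * With EPT, each of the g guest page-table levels is addressed by a
 * guest-physical pointer, which itself needs an e-level EPT walk
 * before the guest entry can be read (e + 1 references per guest
 * level). The final guest-physical address then needs one more
 * e-level EPT walk. Total: g * (e + 1) + e = (g + 1) * (e + 1) - 1. */
unsigned one_dim_refs(unsigned levels)
{
    return levels;
}

unsigned nested_refs(unsigned g, unsigned e)
{
    return g * (e + 1) + e;
}
```

For four-level guest and EPT tables this is 24 references per miss with EPT versus 4 with a shadow page table, which is why a stable page table favors shadowing.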

-

Conclusion

• Virtualization transformed cloud computing, had a tremendous impact
  • Virtualization on PCs was also big, but less significant

• VMware made virtualization possible on an architecture that couldn’t be virtualized (x86) through BT
• Prompted Intel and AMD to change hardware, sometimes faster, sometimes slower than BT

-

A decade later, what’s changed?

• HW virtualization became much faster
  • Fewer traps, better microcode, more dedicated logic
  • Almost all CPU architectures support HW virt.
  • EPT widely available

• VMMs became commoditized
  • BT technology was hard to build
  • VMMs based on HW virt. are much easier to implement
  • Xen, KVM, Hyper-V, etc.

• I/O devices aren’t just emulated, they can be exposed directly
  • IOMMU provides paging protection for DMA