UNBIASED LOOK AT DATASET BIAS

Antonio Torralba

Massachusetts Institute of Technology

Alexei A. Efros

Carnegie Mellon University

CVPR 2011

Outline

1. Introduction

2. Measuring Dataset Bias

3. Measuring Dataset’s Value

4. Discussion

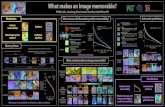

Name That Dataset!

Let’s play a game!

Answer

1 Caltech-101

2 UIUC

3 MSRC

4 Tiny Images

5 ImageNet

6 PASCAL VOC

7 LabelMe

8 SUNS-09

9 15 Scenes

10 Corel

11 Caltech-256

12 COIL-100

UIUC test set is not the same as its training set

COIL is a lab-based dataset

Caltech-101 and Caltech-256 are predictably confused with each other

Caltech-101 and Caltech-256

Pictures of objects belonging to 101 categories

About 40 to 800 images per category

Most categories have about 50 images

Collected in September 2003

The size of each image is roughly 300 x 200 pixels

LabelMe

A project created by the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL)

A dataset of digital images with annotations

The most applicable use of LabelMe is in computer vision research

As of October 31, 2010, LabelMe has 187,240 images, 62,197 annotated images, and 658,992 labeled objects

Bias

Urban scenes vs. rural landscapes

Professional photographs vs. amateur snapshots

Entire scenes vs. single objects

The Rise of the Modern Dataset

COIL-100 dataset (a hundred household objects on a black background)

Corel and 15 Scenes were professional collections that embraced visual complexity

Caltech-101 (101 objects collected using Google and cleaned by hand) embraced the wilderness of the Internet

MSRC and LabelMe (both researcher-collected sets) embraced complex scenes with many objects

The Rise of the Modern Dataset

PASCAL Visual Object Classes (VOC) was a reaction against the lax training and testing standards of previous datasets

The batch of very-large-scale, Internet-mined datasets (Tiny Images, ImageNet, and SUN09) can be considered a reaction against the inadequacies of training and testing on datasets that are just too small for the complexity of the real world

Outline

2. Measuring Dataset Bias

2.1. Cross-dataset generalization

2.2. Negative Set Bias

Cross-dataset generalization

Negative Set Bias

Evaluate the relative bias in the negative sets of different datasets (e.g. is a “not car” in PASCAL different from “not car” in MSRC?).

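For each dataset, we train a classifier on its own set of positive and negative instances. Then, during testing, the positives come from that dataset, but the negatives come from all datasets combined. A drop in performance relative to testing on the dataset's own negatives indicates that its negative set is not representative of the rest of the visual world.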
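The protocol above can be sketched in a few lines. This is only an illustration under loud assumptions: the 2-D Gaussian "features", the dataset names `A` and `B`, the nearest-centroid scorer, and the helper names (`blob`, `train`, `average_precision`, `negative_set_bias`) are all hypothetical stand-ins, not the detectors or data used in the talk.

```python
import random

def blob(rng, center, n=50, std=0.3):
    # Hypothetical toy features: n points drawn around `center`.
    return [[rng.gauss(c, std) for c in center] for _ in range(n)]

def centroid(points):
    dims = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dims)]

def train(pos, neg):
    # Nearest-centroid scorer standing in for a real detector (assumption).
    cp, cn = centroid(pos), centroid(neg)
    def score(x):
        dist_pos = sum((a - b) ** 2 for a, b in zip(x, cp))
        dist_neg = sum((a - b) ** 2 for a, b in zip(x, cn))
        return dist_neg - dist_pos  # higher means "more like a positive"
    return score

def average_precision(score, pos, neg):
    # Rank all test samples by score and average precision at each positive.
    ranked = [(score(x), 1) for x in pos] + [(score(x), 0) for x in neg]
    ranked.sort(key=lambda t: -t[0])
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            total += hits / rank
    return total / len(pos)

def negative_set_bias(datasets, target):
    # Train on the target dataset only; test its positives against
    # (a) its own negatives and (b) negatives pooled from all datasets.
    d = datasets[target]
    score = train(d["train_pos"], d["train_neg"])
    own = average_precision(score, d["test_pos"], d["test_neg"])
    pooled_neg = [x for other in datasets.values() for x in other["test_neg"]]
    pooled = average_precision(score, d["test_pos"], pooled_neg)
    return own, pooled

rng = random.Random(0)
toy = {
    # Dataset A: negatives sit far from positives, so A looks easy on its own.
    "A": {"train_pos": blob(rng, (2, 0)), "train_neg": blob(rng, (-2, 0)),
          "test_pos": blob(rng, (2, 0)), "test_neg": blob(rng, (-2, 0))},
    # Dataset B: negatives of a kind that A never showed during training.
    "B": {"train_pos": blob(rng, (2, 0)), "train_neg": blob(rng, (2, 3)),
          "test_pos": blob(rng, (2, 0)), "test_neg": blob(rng, (2, 3))},
}
own_ap, pooled_ap = negative_set_bias(toy, "A")
print(f"own negatives AP: {own_ap:.2f}, pooled negatives AP: {pooled_ap:.2f}")
```

In this toy setup the gap between `own_ap` and `pooled_ap` plays the role of the performance drop used to compare negative sets; a larger gap would suggest a more biased negative set.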

Outline

3. Measuring Dataset’s Value

Measuring Dataset’s Value

Given a particular detection task and benchmark, there are two basic ways of improving the performance:

The first solution is to improve the features, the object representation and the learning algorithm for the detector

The second solution is to simply enlarge the amount of data available for training

Market Value for a car sample across datasets

Outline

4. Discussion

Discussion

Caltech-101 is extremely biased with virtually no observed generalization, and should have been retired long ago (as argued by [14] back in 2006)

MSRC has also fared very poorly

PASCAL VOC, ImageNet and SUN09 have fared comparatively well