TRELLISES AND TRELLIS-BASED

DECODING ALGORITHMS FOR

LINEAR BLOCK CODES

Part 3

Shu Lin and Marc Fossorier

April 20, 1998

https://ntrs.nasa.gov/search.jsp?R=19990014066 2020-05-08T01:51:51+00:00Z

13 THE MAP AND RELATED DECODING

ALGORITHMS

In a coded communication system with equiprobable signaling, MLD minimizes

the word error probability and delivers the most likely codeword associated with

the corresponding received sequence. This decoding has two drawbacks. First,

minimization of the word error probability is not equivalent to minimization of

the bit error probability. Therefore, MLD becomes suboptimum with respect

to the bit error probability. Second, MLD delivers a hard-decision estimate of

the received sequence, so that information is lost between the input and output

of the ML decoder. This information is important in coded schemes where the

decoded sequence is further processed, such as concatenated coding schemes,

multi-stage and iterative decoding schemes.

In this chapter, we first present a decoding algorithm which both minimizes

bit error probability, and provides the corresponding soft information at the

output of the decoder. This algorithm is referred to as the MAP (maximum a-

posteriori probability) decoding algorithm [1]. Unfortunately, the trellis-based

implementation of the MAP algorithm is much more complex than that of the

trellis-based MLD algorithms presented in the previous chapters. Consequently,

suboptimum versions of the MAP algorithm with reduced decoding complex-

ity must be considered for many practical applications. In Section 13.2, we

present a near-optimum modification of the MAP algorithm, referred to as the

Max-Log-MAP (or SOVA) decoding algorithm [34, 39, 40]. This near-optimum

algorithm performs within only a few tenths of a dB of the optimum MAP decoding algorithm while reducing decoding complexity drastically. Finally, the

minimization of the bit error probability in trellis-based MLD is discussed in

the last section.

13.1 THE MAP DECODING ALGORITHM

Consider a binary (N, K) linear block code C. Let $u = (u_1, u_2, \dots, u_N)$ be a codeword in C. Define $P(A \mid B)$ as the conditional probability of the event A given the occurrence of the event B. The MAP decoding algorithm evaluates the most likely bit value $u_i$ at a given bit position i based on the received sequence $r = (r_1, r_2, \dots, r_N)$. It first computes the log-likelihood ratio

$$L_i \triangleq \log \frac{P(u_i = 1 \mid r)}{P(u_i = 0 \mid r)} \tag{13.1}$$

for $1 \le i \le N$, and then compares this value to a zero-threshold to decode $u_i$ as

$$u_i = \begin{cases} 1 & \text{for } L_i > 0, \\ 0 & \text{for } L_i \le 0. \end{cases} \tag{13.2}$$

The value Li represents the soft information associated with the decision on ui.

It can be used for further processing of the sequence u delivered by the MAP

decoder.

In the N-section trellis diagram for the code, let $B_i(C)$ denote the set of all branches $(\sigma_{i-1}, \sigma_i)$ that connect the states in the state space $\Sigma_{i-1}(C)$ at time-$(i-1)$ and the states in the state space $\Sigma_i(C)$ at time-$i$ for $1 \le i \le N$. Let $B_i^0(C)$ and $B_i^1(C)$ denote the two disjoint subsets of $B_i(C)$ that correspond to the output code bits $u_i = 0$ and $u_i = 1$, respectively, given by (3.3). Clearly

$$B_i(C) = B_i^0(C) \cup B_i^1(C) \tag{13.3}$$

for $1 \le i \le N$. For $(\sigma', \sigma) \in B_i(C)$, we define the joint probability

$$\lambda_i(\sigma', \sigma) \triangleq P(\sigma_{i-1} = \sigma';\ \sigma_i = \sigma;\ r) \tag{13.4}$$

for $1 \le i \le N$. Then

$$P(u_i = 0;\ r) = \sum_{(\sigma', \sigma) \in B_i^0(C)} \lambda_i(\sigma', \sigma), \tag{13.5}$$

$$P(u_i = 1;\ r) = \sum_{(\sigma', \sigma) \in B_i^1(C)} \lambda_i(\sigma', \sigma). \tag{13.6}$$

The MAP decoding algorithm computes the probabilities $\lambda_i(\sigma', \sigma)$ which are then used to evaluate $P(u_i = 0 \mid r)$ and $P(u_i = 1 \mid r)$ in (13.1) from (13.5) and (13.6).

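For $1 \le i \le N$, $1 \le l \le m \le N$, and $r_l^m \triangleq (r_l, r_{l+1}, \dots, r_m)$, we define the probabilities

$$\alpha_i(\sigma) \triangleq P(\sigma_i = \sigma;\ r_1^i), \tag{13.7}$$

$$\beta_i(\sigma) \triangleq P(r_{i+1}^N \mid \sigma_i = \sigma), \tag{13.8}$$

$$\gamma_i(\sigma', \sigma) \triangleq P(\sigma_i = \sigma;\ r_i \mid \sigma_{i-1} = \sigma') = P(r_i \mid (\sigma_{i-1}, \sigma_i) = (\sigma', \sigma))\, P(\sigma_i = \sigma \mid \sigma_{i-1} = \sigma'). \tag{13.9}$$

Then, for a memoryless channel,

$$\lambda_i(\sigma', \sigma) = \alpha_{i-1}(\sigma')\, \gamma_i(\sigma', \sigma)\, \beta_i(\sigma) \tag{13.10}$$

for $1 \le i \le N$, which shows that the values $\lambda_i(\sigma', \sigma)$ can be evaluated by computing all values $\alpha_i(\sigma)$, $\beta_i(\sigma)$ and $\gamma_i(\sigma', \sigma)$. Based on the total probability theorem, we can express $\alpha_i(\sigma)$, for $1 \le i \le N$, as follows:

$$\alpha_i(\sigma) = \sum_{\sigma' \in \Sigma_{i-1}(C)} P(\sigma_{i-1} = \sigma';\ \sigma_i = \sigma;\ r_1^{i-1};\ r_i) = \sum_{\sigma' \in \Sigma_{i-1}(C)} \alpha_{i-1}(\sigma')\, \gamma_i(\sigma', \sigma). \tag{13.11}$$

Similarly, for $1 \le i < N$,

$$\beta_i(\sigma) = \sum_{\sigma' \in \Sigma_{i+1}(C)} P(r_{i+1};\ r_{i+2}^N;\ \sigma_{i+1} = \sigma' \mid \sigma_i = \sigma) = \sum_{\sigma' \in \Sigma_{i+1}(C)} \beta_{i+1}(\sigma')\, \gamma_{i+1}(\sigma, \sigma'). \tag{13.12}$$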

From (13.11), we see that the probabilities $\alpha_i(\sigma)$ with $1 \le i \le N$ can be computed recursively from the initial state $\sigma_0$ to the final state $\sigma_f$ of the N-section trellis for code C, once the $\gamma_i(\sigma', \sigma)$'s are computed. This is called the forward recursion. From (13.12), we see that the probabilities $\beta_i(\sigma)$ with $1 \le i < N$ can be computed recursively in the backward direction from the final state $\sigma_f$ to the initial state $\sigma_0$ of the N-section trellis of C. This is called the backward recursion.

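For the AWGN channel with BPSK transmission, we have

$$P(r_i \mid \sigma_{i-1} = \sigma';\ \sigma_i = \sigma) = (\pi N_0)^{-1/2} \exp\!\big(-(r_i - c_i)^2 / N_0\big)\, \delta_i(\sigma', \sigma), \tag{13.13}$$

where

$$\delta_i(\sigma', \sigma) = \begin{cases} 1 & \text{if } (\sigma', \sigma) \in B_i(C), \\ 0 & \text{if } (\sigma', \sigma) \notin B_i(C). \end{cases} \tag{13.14}$$

For $\delta_i(\sigma', \sigma) = 1$, $c_i = 2u_i - 1$ is the transmitted signal corresponding to the label $u_i$ of the branch $(\sigma', \sigma)$. Based on (13.13), $\gamma_i(\sigma', \sigma)$ is proportional to the value

$$w_i(\sigma', \sigma) = \exp\!\big(-(r_i - c_i)^2 / N_0\big)\, \delta_i(\sigma', \sigma)\, P(\sigma_i = \sigma \mid \sigma_{i-1} = \sigma'). \tag{13.15}$$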

Note that in many applications such as MAP trellis-based decoding of linear codes, the a-priori probability of each information bit is the same, so that all states $\sigma \in \Sigma_i(C)$ are equiprobable. Consequently, $P(\sigma_i = \sigma \mid \sigma_{i-1} = \sigma')$ becomes a constant that can be discarded in the definition of $w_i(\sigma', \sigma)$. However, this is not true in general. For example, in iterative or multi-stage decoding schemes $P(\sigma_i = \sigma \mid \sigma_{i-1} = \sigma')$ has to be evaluated after the first iteration, or after the first decoding stage. Since in (13.1), we are interested only in the ratio between $P(u_i = 1 \mid r)$ and $P(u_i = 0 \mid r)$, $\lambda_i(\sigma', \sigma)$ can be scaled by any value without modifying the decision on $u_i$. Based on these definitions, $\alpha_i(\sigma)$ can be computed recursively based on (13.11) using the trellis diagram from the initial state $\sigma_0$ to the final state $\sigma_f$ as follows:

(1) Assume that $\alpha_{i-1}(\sigma')$ has been computed for all states $\sigma' \in \Sigma_{i-1}(C)$.

(2) In the i-th section of the trellis diagram, associate the weight $w_i(\sigma', \sigma)$ with each branch $(\sigma', \sigma) \in B_i(C)$.

(3) For each state $\sigma \in \Sigma_i(C)$, evaluate and store the weighted sum

$$\alpha_i(\sigma) = \sum_{\sigma' \in \Sigma_{i-1}(C):\ \delta_i(\sigma', \sigma) = 1} w_i(\sigma', \sigma)\, \alpha_{i-1}(\sigma'). \tag{13.16}$$

The initial conditions for this recursion are $\alpha_0(\sigma_0) = 1$ and $\alpha_0(\sigma) = 0$ for $\sigma \ne \sigma_0$.

Similarly, $\beta_i(\sigma)$ can be computed recursively based on (13.12) using the trellis diagram from the final state $\sigma_f$ to the initial state $\sigma_0$ as follows:

(1) Assume that $\beta_{i+1}(\sigma')$ has been computed for all states $\sigma' \in \Sigma_{i+1}(C)$.

(2) In the (i + 1)-th section of the trellis diagram, associate the weight $w_{i+1}(\sigma, \sigma')$ with each branch $(\sigma, \sigma') \in B_{i+1}(C)$.

(3) For each state $\sigma \in \Sigma_i(C)$, evaluate and store the weighted sum

$$\beta_i(\sigma) = \sum_{\sigma' \in \Sigma_{i+1}(C):\ \delta_{i+1}(\sigma, \sigma') = 1} w_{i+1}(\sigma, \sigma')\, \beta_{i+1}(\sigma'). \tag{13.17}$$

The corresponding initial conditions are $\beta_N(\sigma_f) = 1$ and $\beta_N(\sigma) = 0$ for $\sigma \ne \sigma_f$.

The MAP decoding algorithm requires one forward recursion from $\sigma_0$ to $\sigma_f$, and one backward recursion from $\sigma_f$ to $\sigma_0$ to evaluate all values $\alpha_i(\sigma)$ and $\beta_i(\sigma)$ associated with all states $\sigma_i \in \Sigma_i(C)$, for $1 \le i \le N$. These two recursions are independent of each other. Therefore, the forward and backward recursions can be executed simultaneously in both directions along the trellis of the code C. This bidirectional decoding reduces the decoding delay. Once all values of $\alpha_i(\sigma)$ and $\beta_i(\sigma)$ for $1 \le i \le N$ have been determined, the values $L_i$ in (13.1) can be computed from (13.5), (13.6), (13.9) and (13.10).
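The forward and backward recursions can be illustrated with a minimal Python sketch. It is not the authors' implementation: the trellis encoding (a list of `(previous_state, next_state, bit)` branches per section), the function name `map_decode`, and the toy (3, 2) single-parity-check trellis are all assumptions made for illustration. Equiprobable signaling is assumed, so the transition probability in (13.15) is dropped from the branch weight.

```python
import math

def map_decode(trellis, r, n0):
    """Log-likelihood ratios L_i of (13.1) via the forward/backward recursions.

    trellis[i] lists the branches (s_prev, s_next, bit) of section i+1.
    The initial and final states are both assumed to be labelled 0.
    """
    n = len(trellis)

    def w(i, bit):
        c = 2 * bit - 1                      # BPSK mapping c_i = 2 u_i - 1
        return math.exp(-(r[i] - c) ** 2 / n0)

    # Forward recursion (13.16), alpha_0(sigma_0) = 1.
    alpha = [{0: 1.0}]
    for i in range(n):
        a = {}
        for sp, sn, bit in trellis[i]:
            if sp in alpha[i]:
                a[sn] = a.get(sn, 0.0) + w(i, bit) * alpha[i][sp]
        alpha.append(a)

    # Backward recursion (13.17), beta_N(sigma_f) = 1.
    beta = [None] * (n + 1)
    beta[n] = {0: 1.0}
    for i in range(n - 1, -1, -1):
        b = {}
        for sp, sn, bit in trellis[i]:
            if sn in beta[i + 1]:
                b[sp] = b.get(sp, 0.0) + w(i, bit) * beta[i + 1][sn]
        beta[i] = b

    # Combine as in (13.10), then (13.5), (13.6) and (13.1).
    llrs = []
    for i in range(n):
        p = [0.0, 0.0]
        for sp, sn, bit in trellis[i]:
            p[bit] += alpha[i].get(sp, 0.0) * w(i, bit) * beta[i + 1].get(sn, 0.0)
        llrs.append(math.log(p[1] / p[0]))
    return llrs

# Toy trellis of the (3, 2) single-parity-check code; the state is the running parity.
SPC_TRELLIS = [
    [(0, 0, 0), (0, 1, 1)],
    [(0, 0, 0), (0, 1, 1), (1, 1, 0), (1, 0, 1)],
    [(0, 0, 0), (1, 0, 1)],
]
llrs = map_decode(SPC_TRELLIS, [0.8, -0.3, 0.5], n0=1.0)
```

Because the weights are unnormalized, long trellises would need scaling or a log-domain formulation to avoid underflow; the sketch omits that for brevity.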

13.2 THE SOVA DECODING ALGORITHM

The MAP decoding algorithm presented in the previous section requires a large number of computations and a large storage to compute and store the probabilities $\alpha_i(\sigma)$, $\beta_i(\sigma)$ and $\gamma_i(\sigma', \sigma)$ for all the states $\sigma$ and state pairs $(\sigma', \sigma)$ in the trellis for the code to be decoded. For a long code with a large trellis, the implementation of the MAP decoder is practically impossible. Also, the MAP decoding algorithm computes probability values, which require much more complicated real value operations than the real additions performed by trellis-based MLD algorithms, such as the Viterbi decoding and RMLD algorithms.

In this section, we present an algorithm for which the optimum bit error

performance associated with the MAP algorithm is traded with a significant

reduction in decoding complexity. This algorithm is known as the Max-Log-

MAP algorithm or soft-output Viterbi algorithm (SOVA), as it performs

the same operations as the Viterbi algorithm, with additional real additions

and storages.

For BPSK transmission, (13.1) can be rewritten as

$$L_i = \log \frac{\sum_{c:\, c_i = 1} P(c \mid r)}{\sum_{c:\, c_i = -1} P(c \mid r)} \tag{13.18}$$

for $1 \le i \le N$ and $c_i = 2u_i - 1$. Based on the approximation

$$\log\Big(\sum_{j=1}^{N} \delta_j\Big) \approx \log\Big(\max_{j \in \{1, \dots, N\}} \{\delta_j\}\Big), \tag{13.19}$$

we obtain from (13.18)

$$L_i \approx \log\Big(\max_{c:\, c_i = 1} P(c \mid r)\Big) - \log\Big(\max_{c:\, c_i = -1} P(c \mid r)\Big). \tag{13.20}$$

For each code bit $u_i$, the Max-Log-MAP algorithm [40] approximates the corresponding log-likelihood ratio $L_i$ based on (13.20).

For the AWGN channel with BPSK transmission, we have

$$P(c \mid r) = P(r \mid c)\,P(c)/P(r) = (\pi N_0)^{-N/2}\, e^{-\sum_{j=1}^{N} (r_j - c_j)^2 / N_0}\, P(c)/P(r). \tag{13.21}$$

If $c^1 = (c_1^1, c_2^1, \dots, c_{i-1}^1, c_i^1, \dots)$ and $c^0 = (c_1^0, c_2^0, \dots, c_{i-1}^0, c_i^0, \dots)$ represent the codewords corresponding to the first term and the second term of (13.20), respectively, it follows from (13.21) that for equiprobable signaling, the approximation of $L_i$ given in (13.20) is proportional to the value

$$r_i + \sum_{j \ne i:\ c_j^1 \ne c_j^0} c_j^1\, r_j. \tag{13.22}$$
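The step from (13.21) to (13.22) can be spelled out as follows. This is a sketch under the stated assumptions only: equiprobable codewords, so that $P(c)$ and $P(r)$ cancel, and BPSK symbols with $(c_j)^2 = 1$, $c_i^1 = +1$ and $c_i^0 = -1$.

```latex
\begin{align*}
\log P(c^1 \mid r) - \log P(c^0 \mid r)
  &= -\frac{1}{N_0} \sum_{j=1}^{N} \Big[ (r_j - c_j^1)^2 - (r_j - c_j^0)^2 \Big] \\
  &= \frac{2}{N_0} \sum_{j=1}^{N} r_j \,\big(c_j^1 - c_j^0\big) \\
  &= \frac{4}{N_0} \Big( r_i + \sum_{j \ne i:\ c_j^1 \ne c_j^0} c_j^1\, r_j \Big).
\end{align*}
```

The last line uses $c_j^1 - c_j^0 = 2c_j^1$ wherever the two codewords differ and $0$ elsewhere, which gives the constant of proportionality $4/N_0$.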


We observe that one of the terms in (13.20) corresponds to the MLD solution, while the other term corresponds to the most likely codeword which differs from the MLD solution in $u_i$. Consequently, in Max-Log-MAP or SOVA decoding, the hard-decision codeword corresponding to (13.20) is the MLD codeword and (13.22) is proportional to the difference of squared Euclidean distances (SED) $\|r - c^1\|^2 - \|r - c^0\|^2$. For any two codewords $c$ and $c'$, we define $\big|\, \|r - c\|^2 - \|r - c'\|^2 \,\big|$ as the reliability difference between $c$ and $c'$.

For simplicity, we consider the trellis diagram of a rate-1/2 antipodal convolutional code C. Hence, the two branches that merge into each state have different branch labels, as described in Figure 10.1. Also, we assume that the trellis diagram for the code C is terminated so that N encoded bits are transmitted. Generalization of the derived results to other trellis diagrams is straightforward, after proper modification of the notations. At each state $\sigma_{i-1}$ of the state space $\Sigma_{i-1}(C)$ at time-$(i-1)$, the SOVA stores the cumulative correlation metric value $M(\sigma_{i-1})$ and the corresponding decoded sequence

$$\hat{c}(\sigma_{i-1}) = \big(\hat{c}_1(\sigma_{i-1}), \hat{c}_2(\sigma_{i-1}), \dots, \hat{c}_{2(i-1)-1}(\sigma_{i-1}), \hat{c}_{2(i-1)}(\sigma_{i-1})\big), \tag{13.23}$$

as for the Viterbi algorithm. In addition, it also stores the reliability measures

$$\hat{L}(\sigma_{i-1}) = \big(\hat{L}_1(\sigma_{i-1}), \hat{L}_2(\sigma_{i-1}), \dots, \hat{L}_{2(i-1)-1}(\sigma_{i-1}), \hat{L}_{2(i-1)}(\sigma_{i-1})\big), \tag{13.24}$$

associated with the corresponding decision $\hat{c}(\sigma_{i-1})$.

At the decoding time-i, for each state $\sigma_i$ in the state space $\Sigma_i(C)$, the SOVA first evaluates the two cumulative correlation metric candidates $M(\sigma_{i-1}^1, \sigma_i)$ and $M(\sigma_{i-1}^2, \sigma_i)$ corresponding to the two paths terminating in state $\sigma_i$ with transitions from states $\sigma_{i-1}^1$ and $\sigma_{i-1}^2$, respectively. As for the Viterbi algorithm, the SOVA selects the cumulative correlation metric

$$M(\sigma_i) = \max_{l \in \{1, 2\}} \{M(\sigma_{i-1}^l, \sigma_i)\}, \tag{13.25}$$

and updates the corresponding pair $(\hat{c}_{2i-1}(\sigma_i), \hat{c}_{2i}(\sigma_i))$ in the surviving path $\hat{c}(\sigma_i)$ at state $\sigma_i$. Next, $\hat{L}(\sigma_i)$ has to be updated. To this end, we define

$$\Delta_i \triangleq \max_{l \in \{1, 2\}} \{M(\sigma_{i-1}^l, \sigma_i)\} - \min_{l \in \{1, 2\}} \{M(\sigma_{i-1}^l, \sigma_i)\}. \tag{13.26}$$

Based on (13.22) and the fact that the code considered is antipodal, we set

$$\hat{L}_{2i-1}(\sigma_i) = \hat{L}_{2i}(\sigma_i) = \Delta_i, \tag{13.27}$$

since $\Delta_i$ represents the reliability difference between the two most likely code-sequences terminating at state $\sigma_i$ with different values for both $\hat{c}_{2i-1}$ and $\hat{c}_{2i}$. The remaining values $\hat{L}_j(\sigma_i)$ for $j = 1, \dots, 2(i-1)$ of the surviving $\hat{L}(\sigma_i)$ at state $\sigma_i$ have to be updated.

In the following, we simplify the above notations and define

$$\hat{L}(\sigma_{i-1}^l) = \big(\hat{L}_1^l, \hat{L}_2^l, \dots, \hat{L}_{2(i-1)-1}^l, \hat{L}_{2(i-1)}^l\big) \tag{13.28}$$

for $l = 1, 2$, as the two sets of reliability measures corresponding to the two candidate paths merging into state $\sigma_i$ with transitions from states $\sigma_{i-1}^1$ and $\sigma_{i-1}^2$, respectively. We refer to these two paths as path-1 and path-2, and without loss of generality assume that path-1 is the surviving path. Similarly, for $l = 1, 2$,

$$\hat{c}(\sigma_{i-1}^l) = \big(\hat{c}_1^l, \hat{c}_2^l, \dots, \hat{c}_{2(i-1)-1}^l, \hat{c}_{2(i-1)}^l\big) \tag{13.29}$$

represent the two sets of decisions corresponding to path-1 and path-2, respectively.

First, we consider the case $\hat{c}_j^1 \ne \hat{c}_j^2$, for some $j \in \{1, \dots, 2(i-1)\}$, and recall that path-1 and path-2 have a reliability difference equal to $\Delta_i$. Also, $\hat{L}_j^1$ represents the reliability difference between path-1 and a code-sequence represented by a path-m merging with path-1 between the decoding steps j and (i - 1), with $\hat{c}_j^m \ne \hat{c}_j^1$, as shown in Figure 13.1. On the other hand, $\hat{L}_j^2$ represents the reliability difference between path-2 and a code-sequence represented by a path-n merging with path-2 between the decoding steps j and (i - 1), with $\hat{c}_j^n = \hat{c}_j^1$. Hence, $\hat{L}_j^2$ does not need to be considered to update $\hat{L}_j(\sigma_i)$ in $\hat{L}(\sigma_i)$. Since no additional reliability information is available at state $\sigma_i$, we update

$$\hat{L}_j(\sigma_i) = \min\{\Delta_i, \hat{L}_j^1\}. \tag{13.30}$$

Next, we consider the case $\hat{c}_j^1 = \hat{c}_j^2$, for some $j \in \{1, \dots, 2(i-1)\}$, so that path-2 is no longer considered to update $\hat{L}_j(\sigma_i)$ in $\hat{L}(\sigma_i)$. However, path-n previously defined now satisfies $\hat{c}_j^n \ne \hat{c}_j^1$. Since the reliability difference between path-1 and path-n is $\Delta_i + \hat{L}_j^2$ (i.e. the reliability difference between path-1 and path-2 plus the reliability difference between path-2 and path-n), we obtain

$$\hat{L}_j(\sigma_i) = \min\{\Delta_i + \hat{L}_j^2, \hat{L}_j^1\}. \tag{13.31}$$

The first version of SOVA that is equivalent to the above development was introduced by Battail in 1987 [3]. This algorithm was later reformulated in conjunction with the MAP algorithm in [9, 61] and formally shown to be equivalent to the Max-Log-MAP decoding algorithm in [34]. Consequently, the Max-Log-MAP or SOVA decoding algorithm can be summarized as follows.

For each state $\sigma_i$ of the state space $\Sigma_i(C)$:

Step 1: Perform the Viterbi decoding algorithm to determine the survivor metric $M(\sigma_i)$ and the corresponding code-sequence $\hat{c}(\sigma_i)$.

Step 2: For $j \in \{1, \dots, 2(i-1)\}$, set $\hat{L}_j(\sigma_i)$ in $\hat{L}(\sigma_i)$ either to the value $\min\{\Delta_i, \hat{L}_j^1\}$ if $\hat{c}_j^1 \ne \hat{c}_j^2$, or to the value $\min\{\Delta_i + \hat{L}_j^2, \hat{L}_j^1\}$ if $\hat{c}_j^1 = \hat{c}_j^2$.

Step 3: Set $\hat{L}_{2i-1}(\sigma_i)$ and $\hat{L}_{2i}(\sigma_i)$ to the value $\Delta_i$.

In [39], a simplified version of SOVA is presented. It is proposed to update $\hat{L}_j(\sigma_i)$, for $j = 1, 2, \dots, 2(i-1)$, only when $\hat{c}_j^1 \ne \hat{c}_j^2$. Hence (13.30) remains unchanged while (13.31) simply becomes:

$$\hat{L}_j(\sigma_i) = \hat{L}_j^1. \tag{13.32}$$

Consequently, the values $\hat{L}_j^2$ are no longer needed in the updating rule, so that this simplified version of SOVA is easier to implement. At the BER $10^{-4}$, its performance degradation with respect to the MAP decoding algorithm is about 0.6 - 0.7 dB coding gain loss, against 0.3 - 0.4 dB loss for the Max-Log-MAP decoding algorithm.
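The reliability update rules (13.27) and (13.30) to (13.32) can be sketched in a few lines of Python. The function name `sova_update` and its argument layout are hypothetical, chosen only for illustration: `c1`, `l1` hold the decisions and reliabilities of the surviving path-1, `c2`, `l2` those of the discarded path-2, and `delta` is the metric difference of (13.26). The rate-1/2 setting of the text is assumed, so two new positions are appended per step.

```python
def sova_update(delta, c1, c2, l1, l2, simplified=False):
    """Update the reliabilities of the surviving path at one trellis state.

    Returns the new reliability list for the survivor, extended by the two
    positions decided at the current step.
    """
    updated = []
    for d1, d2, r1, r2 in zip(c1, c2, l1, l2):
        if d1 != d2:
            updated.append(min(delta, r1))        # decisions differ: (13.30)
        elif simplified:
            updated.append(r1)                    # simplified SOVA: (13.32)
        else:
            updated.append(min(delta + r2, r1))   # decisions agree: (13.31)
    # The two code bits decided at this step both get reliability delta (13.27).
    return updated + [delta, delta]

full = sova_update(1.0, [1, -1, 1], [1, 1, 1], [3.0, 2.0, 0.5], [0.5, 4.0, 0.2])
simple = sova_update(1.0, [1, -1, 1], [1, 1, 1], [3.0, 2.0, 0.5], [0.5, 4.0, 0.2],
                     simplified=True)
```

In this toy call the first position shows the difference between the two variants: the full rule lowers its reliability to `delta + l2[0]`, while the simplified rule leaves it at `l1[0]`.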

13.3 BIT ERROR PROBABILITY OF MLD

In many practical applications, soft output information is not needed and only

the binary decoded codeword is delivered by the decoder. However, it is still

desirable to minimize the bit error probability rather than the word error prob-

ability. In such cases, the SOVA has no advantage over MLD since both algo-

rithms deliver the same binary decoded sequence. Although the MAP decoding

algorithm minimizes the decoding bit error probability, no significant improve-

ment is observed over the bit error probability associated with MLD if properly

implemented. Consequently, MLD remains the practical solution due to

its much lower computational cost and implementation flexibility. However,

when a word is in error, different mappings between the information sequences

and the code sequences may result in a different number of bits in error, and

hence a different average bit error probability.

As described in Chapter 3, the trellis diagram for an (N, K) linear block

code is constructed from its TOGM. A trellis-based ML decoder simply finds

the most likely path and its corresponding codeword among the $2^K$ possible paths that represent all the codewords generated by the TOGM. Therefore, a trellis-based decoder can be viewed as a device which searches for the most likely codeword out of the set of the $2^K$ codewords generated by the TOGM, independent of the mapping between information sequences and codewords. It follows that the mapping between information sequences and the $2^K$ codewords

generated by the TOGM, or equivalently the encoder, can be modified without

modifying the trellis-based determination of the most likely codeword. The

corresponding information sequence is then retrieved from the knowledge of

the mapping used by the encoder. Consequently, a trellis-based ML decoder

can be viewed as the cascade of two elements: (1) a trellis search device which delivers the most likely codeword of the code generated by the TOGM; and (2) an inverse mapper which retrieves the information sequence from the delivered codeword. Although all mappings produce the same word error probability, different mappings may produce different bit error probabilities.

Different mappings can be obtained from the TOGM by applying elementary row additions and row permutations to this matrix. Let $G_t$ denote the TOGM of the code considered, and let $G_m$ denote the new matrix. If $G_m$ is used for encoding, then the inverse mapper is represented by the right inverse of $G_m$. Since this mapping is bijective (or equivalently, $G_m$ has full rank K) and thus invertible, the right inverse of $G_m$ is guaranteed to exist. In [33], it is shown that for many good codes, the best strategy is to have the K columns of the K x K identity matrix $I_K$ in $G_m$ (in reduced echelon form). Based on the particular structure of the TOGM $G_t$, this is readily realized in K steps of Gaussian elimination as follows: For $1 \le i \le K$, assume that $G_m(i-1)$ is the matrix obtained at step-$(i-1)$, with $G_m(0) = G_t$ and $G_m(K) = G_m$. Let $c^i = (c_1^i, c_2^i, \dots, c_K^i)^T$ denote the column of $G_m(i-1)$ that contains the leading '1' of the i-th row of $G_m(i-1)$. Then $c_i^i = 1$ and $c_{i+1}^i = c_{i+2}^i = \dots = c_K^i = 0$. For $1 \le j \le i-1$, add row-i to row-j in $G_m(i-1)$ if $c_j^i = 1$. This results in matrix $G_m(i)$. For i = K, $G_m(K) = G_m$ contains the K columns of $I_K$ with the same order of appearance. This matrix is said to be in reduced echelon form (REF), and is referred to as the REF matrix.
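The K-step elimination just described can be sketched in Python over GF(2). The helper names `togm_to_ref`, `encode` and `span` are hypothetical, and the matrix `G_T` is assumed here to be the TOGM of the (8, 4) RM code referred to in Example 3.1; treat its entries as an assumption of this sketch.

```python
from itertools import product

def togm_to_ref(gt):
    """Clear, for each row i, the column of its leading '1' in all rows above it."""
    g = [row[:] for row in gt]
    for i in range(len(g)):
        lead = g[i].index(1)                 # column holding the leading '1' of row i
        for j in range(i):
            if g[j][lead] == 1:              # add row-i to row-j (XOR over GF(2))
                g[j] = [a ^ b for a, b in zip(g[j], g[i])]
    return g

def encode(a, g):
    """Codeword a*G over GF(2)."""
    word = [0] * len(g[0])
    for bit, row in zip(a, g):
        if bit:
            word = [x ^ y for x, y in zip(word, row)]
    return tuple(word)

def span(g):
    """Set of all codewords generated by g."""
    return {encode(a, g) for a in product((0, 1), repeat=len(g))}

# Assumed TOGM of the (8, 4) RM code.
G_T = [
    [1, 1, 1, 1, 0, 0, 0, 0],
    [0, 1, 0, 1, 1, 0, 1, 0],
    [0, 0, 1, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 1, 1, 1, 1],
]
G_REF = togm_to_ref(G_T)
INFO_POSITIONS = [row.index(1) for row in G_T]   # 0-based columns of I_K in G_REF
```

With `G_REF` as the encoder, the inverse mapper reduces to reading the decoded codeword at `INFO_POSITIONS`. Applied literally, the K steps also clear the column under the leading '1' of the last row, so the result exposes the identity in columns 1, 2, 3 and 5 and can differ from a matrix obtained by stopping as soon as K identity columns are present, as Example 13.1 does after the row-1 additions.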

Now we perform the encoding based on $G_m$ in REF instead of the TOGM $G_t$. Since both matrices generate the same $2^K$ codewords, any trellis-based decoder using the trellis diagram constructed from the TOGM $G_t$ can still be used to deliver the most likely codeword. From the knowledge of this codeword and the fact that $G_m$ in REF was used for encoding, the corresponding information sequence is easily recovered by taking only the positions which correspond to the columns of $I_K$. Note that this strategy is intuitively correct since whenever a code sequence delivered by the decoder is in error, the best strategy to recover the information bits is simply to determine them independently. Otherwise, errors propagate.


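Example 13.1 In Example 3.1, the TOGM of the (8, 4) RM code is given by

$$G_t = \begin{bmatrix} 1 & 1 & 1 & 1 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 1 & 1 & 0 & 1 & 0 \\ 0 & 0 & 1 & 1 & 1 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 \end{bmatrix}.$$

After adding rows 2 and 3 to row-1, we obtain

$$G_m = \begin{bmatrix} 1 & 0 & 0 & 1 & 0 & 1 & 1 & 0 \\ 0 & 1 & 0 & 1 & 1 & 0 & 1 & 0 \\ 0 & 0 & 1 & 1 & 1 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 1 & 1 & 1 \end{bmatrix}.$$

We can readily verify that $G_t$ and $G_m$ generate the same 16 codewords, so that there is a one-to-one correspondence between the codewords generated by $G_m$ and the paths in the trellis diagram constructed from $G_t$. Once the trellis-based decoder delivers the most likely codeword $\hat{u} = (\hat{u}_1, \hat{u}_2, \dots, \hat{u}_8)$, the corresponding information sequence $\hat{a} = (\hat{a}_1, \hat{a}_2, \hat{a}_3, \hat{a}_4)$ is retrieved by identifying

$$\hat{a}_1 = \hat{u}_1, \quad \hat{a}_2 = \hat{u}_2, \quad \hat{a}_3 = \hat{u}_3, \quad \hat{a}_4 = \hat{u}_8.$$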

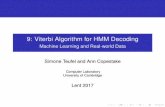

Figure 13.2 depicts the bit error probabilities for the (32, 26) RM code with

encoding based on the TOGM and the REF matrix, respectively. The corre-

sponding union bounds obtained in [33] are also shown in this figure. We see

that there is a gap in error performance of about 1.0 dB and 0.2 dB at the BERs $10^{-1.5}$ and $10^{-5}$, respectively. Similar results have been observed for other good

block codes [33, 71]. The situation is different for good convolutional codes of

short to medium constraint lengths, for which the feedforward non-systematic

realizations outperform their equivalent feedback systematic realizations [80].

This can be explained by the fact that for short to medium constraint length

convolutional codes in feedforward form, the bit error probability is dominated

by error events of the same structures. Due to the small number of such structures, an efficient mapping that minimizes the bit error probability can be devised. This is no longer possible when the error performance is dominated by numerous unstructured error events, such as for long constraint length good convolutional codes or good block codes.

Figure 13.2. The bit error probabilities for the (32, 26) RM code. [Plot not reproduced; horizontal axis: SNR (in dB).]

Based on these results, we may conclude: (1) although modest, the differ-

ence in bit error performance between encoding with the TOGM and the REF

is of the same order as the difference in bit error performances between MLD

and some sub-optimum low-complexity decoding methods; (2) the overall er-

ror performance of a conventional concatenated scheme with a RS outer code

performing algebraic decoding is subject to these differences; and most impor-

tantly (3) the gain is free, since only the encoding circuit and the retrieving

of the information sequence have to be modified. Furthermore this approach

can be used for trellis-based MAP or SOVA decodings if a likelihood measure

associated with each bit of the decoded information sequence, rather than each

bit of the decoded codeword is needed, as in [34, 39, 40].

This approach can be generalized to any soft decision decoding method.

In general, a particular decoding algorithm is based on a particular structure

of the code considered. For example, majority-logic-decoding of RM codes is

based on the generator matrices of these codes in their original form (presented

in Section 2.5), or trellis-based decoding of linear codes is based on the TOGM

of the code considered. Two cases are possible depending on whether the de-

coder delivers a codeword as in trellis-based decoding or directly an information

sequence as in majority-logic-decoding of RM codes. In the first case, the pro-

cedure previously described is generalized in a straightforward way, while in

the second case, the row additions performed to obtain $G_m$ from the generator

matrix corresponding to the decoding method considered are applied to the

delivered information sequence by the inverse mapper [33].

APPENDIX A: A Trellis Construction Procedure

To decode a linear block code with a trellis-based decoding algorithm, the code trellis must be constructed to be used effectively in the decoding process. Therefore, the construction must meet a number of basic requirements. In the implementation of a trellis-based decoder, every state in the code trellis is labeled. The label of a state is used as the index to the memory where the state metric and the survivor into the state are stored. For efficient indexing, the sequence required to label a state must be as short as possible. Furthermore, the labels of two states at two boundary locations of a trellis section must provide complete information regarding the adjacency of the two states and the label of the composite branch connecting the two states, if they are adjacent, in a simple way. In general, a composite branch label appears many times in a section (see (6.13)). In order to compute the branch metrics efficiently, all the distinct composite branch labels in a trellis section must be generated systematically without duplication and stored in a block of memory. Then, for each pair of adjacent states, the index to the memory storing the composite branch label (or composite branch metric) between the two adjacent states must be derived readily from the labels of the two states. To achieve this, we must derive a condition that two composite branches have the same label, and partition the parallel components in a trellis section into blocks such that the parallel components in a block have the same set of composite branch labels (see Section 6.4). The composite branch label sets for two different blocks are disjoint. This localizes the composite branch metric computation. We can compute the composite branch metrics for each representative parallel component in a block independently. In this appendix, we present an efficient procedure for constructing a sectionalized trellis for a linear block code that meets all the above requirements. The construction makes use of the parallel structure of a trellis section presented in Section 6.4.

A.1 A BRIEF REVIEW OF THE TRELLIS ORIENTED GENERATOR

MATRIX FOR A BINARY LINEAR BLOCK CODE

We first give a brief review of the trellis oriented generator matrix (TOGM) for a binary linear block code introduced in Section 3.4. Let G be a binary K x N generator matrix of a binary (N, K) linear code C. For $1 \le i \le K$, let ld(i) and tr(i) denote the column numbers of the leading '1' and the trailing '1' of the i-th row of G, respectively. G is called a TOGM if and only if for $1 \le i < i' \le K$,

$$\mathrm{ld}(i) < \mathrm{ld}(i'), \tag{A.1}$$

$$\mathrm{tr}(i) \ne \mathrm{tr}(i'). \tag{A.2}$$

Let M be a matrix with r rows. Hereafter, for a submatrix M' of M consisting of a subset of the rows in M, the order of rows in M' is assumed to be the same as in M. For a submatrix M' of M consisting of the $i_1$-th row, the $i_2$-th row, ..., the $i_p$-th row of M, let us call the set $\{i_1, i_2, \dots, i_p\}$ the row number set of M' (as a submatrix of M). M is said to be partitioned into the submatrices $M_1, M_2, \dots, M_\mu$ if each row of M is contained in exactly one submatrix $M_i$ with $1 \le i \le \mu$.

In Section 3.4, a TOGM G of C is partitioned into three submatrices, $G_h^p$, $G_h^f$, and $G_h^s$ (also shown in Figure 6.3), for $0 \le h \le N$. The row number sets of $G_h^p$, $G_h^f$, and $G_h^s$ are $\{i : \mathrm{tr}(i) \le h\}$, $\{i : h < \mathrm{ld}(i)\}$, and $\{i : \mathrm{ld}(i) \le h < \mathrm{tr}(i)\}$, respectively. $G_h^p$ and $G_h^f$ generate the past and future codes at time-h, $C_{0,h}$ and $C_{h,N}$, respectively (see Section 3.7). That is,

$$C_{0,h} = \Gamma(G_h^p), \tag{A.3}$$

$$C_{h,N} = \Gamma(G_h^f), \tag{A.4}$$

where for a matrix M, $\Gamma(M)$ denotes the linear space generated by M.
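The definitions of ld(i), tr(i), conditions (A.1) and (A.2), and the three row number sets translate directly into code. The following Python sketch uses hypothetical helper names (`ld`, `tr`, `is_togm`, `partition_rows`) and, as an assumed example, the TOGM of the (8, 4) RM code; row and column numbers are 1-based to match the text.

```python
def ld(row):
    """Column number (1-based) of the leading '1' of a row."""
    return row.index(1) + 1

def tr(row):
    """Column number (1-based) of the trailing '1' of a row."""
    return len(row) - row[::-1].index(1)

def is_togm(g):
    """Check (A.1): ld strictly increasing, and (A.2): all tr values distinct."""
    lds = [ld(row) for row in g]
    trs = [tr(row) for row in g]
    increasing = all(a < b for a, b in zip(lds, lds[1:]))
    return increasing and len(set(trs)) == len(trs)

def partition_rows(g, h):
    """Row number sets of the past, state-defining and future submatrices at time-h."""
    past = [i for i, row in enumerate(g, 1) if tr(row) <= h]
    state = [i for i, row in enumerate(g, 1) if ld(row) <= h < tr(row)]
    future = [i for i, row in enumerate(g, 1) if h < ld(row)]
    return past, state, future

# Assumed example: TOGM of the (8, 4) RM code.
G = [
    [1, 1, 1, 1, 0, 0, 0, 0],
    [0, 1, 0, 1, 1, 0, 1, 0],
    [0, 0, 1, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 1, 1, 1, 1],
]
past, state, future = partition_rows(G, 4)
```

At h = 4 the state-defining submatrix consists of rows 2 and 3, so the state space at that time has dimension 2.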

Since from (A.1) $\mathrm{ld}(i) < \mathrm{ld}(i')$ for $1 \le i < i' \le K$, the order of information bits corresponds to the order of rows in G, and therefore, the row number sets of $G_h^p$, $G_h^s$ and $G_h^f$ as submatrices of G correspond to $A_h^p$, $A_h^s$ and $A_h^f$ (refer to Section 3.4), respectively. In this appendix, we put the TOGM in reverse direction such that for $1 \le i < i' \le K$,

$$\mathrm{ld}(i) \ne \mathrm{ld}(i'), \tag{A.5}$$

$$\mathrm{tr}(i) > \mathrm{tr}(i'). \tag{A.6}$$

Using a TOGM in reverse order, we can store the states (or state metrics) at the left end of a parallel component in consecutive memory cells using a simple addressing method. This method is very useful for designing an IC decoder. It also reduces the actual computation time for a software decoder, since (the metrics of) the states at the left ends are accessed consecutively and computers have cache memories.

For a binary m-tuple $u = (u_1, u_2, \dots, u_m)$ and a set $I = \{i_1, i_2, \dots, i_p\}$ of p positive integers with $1 \le i_1 < i_2 < \dots < i_p \le m$, define

$$p_I(u) \triangleq (u_{i_1}, u_{i_2}, \dots, u_{i_p}). \tag{A.7}$$

For convenience, for $I = \emptyset$, $p_I(u) \triangleq \epsilon$ (the null sequence). For a set U of m-tuples, define

$$p_I(U) \triangleq \{p_I(u) : u \in U\}. \tag{A.8}$$

For $I = \{h+1, h+2, \dots, h'\}$, $p_I$ is denoted by $p_{h,h'}$.

Then, for a partition $\{M_1, M_2, \dots, M_\mu\}$ of M with r rows and $v \in \{0, 1\}^r$, it follows from the definition of a row number set and (A.7) that

$$vM = p_{I_1}(v)M_1 + p_{I_2}(v)M_2 + \dots + p_{I_\mu}(v)M_\mu, \tag{A.9}$$

where $I_i$ denotes the row number set of $M_i$ for $1 \le i \le \mu$. If $M_i$ is further partitioned into submatrices $M_{i_1}, M_{i_2}, \dots$, then

$$p_{I_i}(v)M_i = p_{I_{i_1}}(p_{I_i}(v))M_{i_1} + p_{I_{i_2}}(p_{I_i}(v))M_{i_2} + \dots, \tag{A.10}$$

where $I_{i_1}, I_{i_2}, \dots$ denote the row number sets of $M_{i_1}, M_{i_2}, \dots$ as submatrices of $M_i$.
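Identity (A.9) can be checked exhaustively on a small matrix. The Python sketch below uses hypothetical helper names (`proj`, `submatrix`, `vec_mat`, `check_a9`); index sets are 1-based as in the text, arithmetic is over GF(2), and the example matrix is assumed to be the (8, 4) RM code TOGM.

```python
from itertools import product

def proj(u, index_set):
    """p_I(u): the components of u at the (1-based) positions in index_set."""
    return tuple(u[i - 1] for i in sorted(index_set))

def submatrix(m, row_set):
    """Rows of m whose (1-based) numbers are in row_set, in their original order."""
    return [m[i - 1] for i in sorted(row_set)]

def vec_mat(v, m, n):
    """Product v*m over GF(2); an empty product is the all-zero n-tuple."""
    out = [0] * n
    for bit, row in zip(v, m):
        if bit:
            out = [a ^ b for a, b in zip(out, row)]
    return tuple(out)

def check_a9(m, parts):
    """Verify vM = sum_i p_{I_i}(v) M_i for every binary vector v."""
    n = len(m[0])
    for v in product((0, 1), repeat=len(m)):
        total = (0,) * n
        for row_set in parts:
            term = vec_mat(proj(v, row_set), submatrix(m, row_set), n)
            total = tuple(a ^ b for a, b in zip(total, term))
        if total != vec_mat(v, m, n):
            return False
    return True

# Assumed example matrix and a partition of its rows.
G = [
    [1, 1, 1, 1, 0, 0, 0, 0],
    [0, 1, 0, 1, 1, 0, 1, 0],
    [0, 0, 1, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 1, 1, 1, 1],
]
holds = check_a9(G, [{1}, {2, 3}, {4}])
```

An empty row set contributes the zero vector, which mirrors the convention that the product of the null sequence and the empty matrix is the zero vector.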

In the construction of the trellis section from time-h to time-h', the submatrix $G_h^s$ of G takes a key role. Let $I_h$ denote the row number set of $G_h^s$ as a submatrix of G. Then, $\rho_h \triangleq |I_h|$, and the following property of state labeling by the state defining information set (Section 4.1) holds.

The Key Property of State Labeling: Let $L(\sigma_0, \sigma_h, \sigma_f) \triangleq L(\sigma_0, \sigma_h) \circ L(\sigma_h, \sigma_f)$ be the set of paths in a code trellis for C that connects the initial state $\sigma_0$ to the final state $\sigma_f$ through the state $\sigma_h$ at time-h. There is a one-to-one mapping $l$ from $\Sigma_h(C)$ to $\{0, 1\}^{\rho_h}$ with $0 \le h \le N$ such that for $\sigma_h \in \Sigma_h(C)$ and $a \in \{0, 1\}^K$,

$$aG \in L(\sigma_0, \sigma_h, \sigma_f), \tag{A.11}$$

if and only if

$$p_{I_h}(a) = l(\sigma_h). \tag{A.12}$$

△△

Here, $l(\sigma_h) \in \{0, 1\}^{\rho_h}$ is called the label of state $\sigma_h$. This state labeling is simply the state labeling by the state defining information set given in Section 4.1. We can readily see that

$$L(\sigma_0, \sigma_h, \sigma_f) = l(\sigma_h)G_h^s \oplus C_{0,h} \oplus C_{h,N}. \tag{A.13}$$

Note that if $\rho_h = 0$, $G_h^s$ is the empty matrix and $l(\sigma_h) = \epsilon$. For convenience, we define the product of $\epsilon$ and the empty matrix as the zero vector.

A.2 STATE LABELING BY THE STATE DEFINING INFORMATION

SET AND COMPOSITE BRANCH LABEL

For ah E _,(C) and oh, E _h,(C) with L(ah, Crh,) # 0, the composite branch

label L(ah, O'h,) can be expressed in terms of the labels of crh and ah,. Since

L(ao,_h,ah,,al) _- L(c_0,crh) o L(ah,aW)o L(a,,,,al)

= L(ao,O'h,o'l) n L(ao,O'h,,o'f),

it follows from the key property of state labeling that for a E {0, 1} K,

aGe L(ao,ah,aw,al), (A.14)

APPENDIX A: A TRELLIS CONSTRUCTION PROCEDURE 261

if and only if

p,,(a) = l(,h), (A.15)

p,,,(,,) = t(--h,). (^.16)

Define Ih,h, and Ph,h, as follows:

Ih,h, = IhNIh, (A.17)

Ph,h' = [Ih,h' I. (A.18)

Then Ih,a, = (i : ld(i) < h < h' < tr(i)} is the row number set of the submatrix

of G which consists of those rows in both G_ and G_,. Let G_'_, denote thiss,p f,P

submatrLx. Let Gh,h,, Gfh',sh, and Gh. h, denote the submatrices of G whose row

number sets as submatrices of e are /h\/h,h' = {i : ld(i) < h < tr(i) < h'},

Ih,\Ih,w = (i : h < ld(i) < h' < tr(i)} and _i: h < ld(i) < tr(i) < h'),

respectively. Then,I,p

Ch,h, = F(Gh,h,), (A.19)

..... p f,s f'P and Gh/, (see Figure 6.3).and G is partitioned into G_, Gh.h,, Gh,h,, Gh.h,, Gh, h,

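From (A.3), (A.4), (A.9) and (A.19), we have that for $a \in \{0,1\}^K$, $aG \in C$ can be expressed as

$aG = p_{I_{h,h'}}(a)G_{h,h'}^{s,s} + p_{I_h \setminus I_{h,h'}}(a)G_{h,h'}^{s,p} + p_{I_{h'} \setminus I_{h,h'}}(a)G_{h,h'}^{f,s} + u$, (A.20)

where $u \in C_{0,h} \oplus C_{h,h'} \oplus C_{h',N}$.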

Since $h$ and $h'$ are fixed hereafter, we abbreviate $p_{h,h'}(G_{h,h'}^{x,y})$ as $G^{x,y}$, where $x \in \{s,f\}$ and $y \in \{s,p\}$. If $aG \in L(\sigma_0,\sigma_h,\sigma_{h'},\sigma_f)$, then $p_{h,h'}(aG) = a\,p_{h,h'}(G) \in L(\sigma_h,\sigma_{h'})$. Since $L(\sigma_h,\sigma_{h'})$ is a coset in $p_{h,h'}(C)/C_{h,h'}^{tr}$ (see (3.18)), it follows from (A.20) that

$L(\sigma_h,\sigma_{h'}) = p_{I_{h,h'}}(a)G^{s,s} + p_{I_h \setminus I_{h,h'}}(a)G^{s,p} + p_{I_{h'} \setminus I_{h,h'}}(a)G^{f,s} + C_{h,h'}^{tr}$. (A.21)

In the following, we will derive a relation between $L(\sigma_h,\sigma_{h'})$ and the labels of states $\sigma_h$ and $\sigma_{h'}$, $l(\sigma_h)$ and $l(\sigma_{h'})$. Lemma A.1 gives a simple relation between $I_{h,h'}$ and $I_h$.

Lemma A.1 $I_{h,h'}$ consists of the smallest $\rho_{h,h'}$ integers in $I_h$.


Proof: For $i \in I_{h,h'}$, we have that $h' < \mathrm{tr}(i)$. For $i' \in I_h \setminus I_{h,h'}$, we have that $\mathrm{tr}(i') \le h'$. Hence, $\mathrm{tr}(i') < \mathrm{tr}(i)$. From (A.6), we have $i < i'$.

△△

In general, there is no such simple relation between $I_{h,h'}$ and $I_{h'}$.

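Let $I_{h'} = \{i_1, i_2, \ldots, i_{\rho_{h'}}\}$ with $1 \le i_1 < i_2 < \cdots < i_{\rho_{h'}}$ be the row number set of $G_{h'}^s$, and let $I_{h,h'} = \{i_{j_1}, i_{j_2}, \ldots, i_{j_{\rho_{h,h'}}}\}$ with $1 \le j_1 < j_2 < \cdots < j_{\rho_{h,h'}} \le \rho_{h'}$ be the row number set of $G_{h,h'}^{s,s}$. By definition, the $p$-th row of $G_{h'}^s$ is the $i_p$-th row of $G$ for $1 \le p \le \rho_{h'}$ and the $\mu$-th row of $G_{h,h'}^{s,s}$ is the $i_{j_\mu}$-th row of $G$ for $1 \le \mu \le \rho_{h,h'}$. Hence, the $\mu$-th row of $G_{h,h'}^{s,s}$ is the $j_\mu$-th row of $G_{h'}^s$. That is, the row number set of $G_{h,h'}^{s,s}$ as a submatrix of $G_{h'}^s$, denoted $J_{h'}$, is $J_{h'} = \{j_1, j_2, \ldots, j_{\rho_{h,h'}}\}$, and $\bar{J}_{h'} \triangleq \{1,2,\ldots,\rho_{h'}\} \setminus J_{h'}$ is the row number set of $G_{h,h'}^{f,s}$.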

Suppose (A.14) holds. Then, from (A.15), (A.16) and Lemma A.1,

(A.22)

(A.23)

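For simplicity, define

$l^{(s)}(\sigma_h) \triangleq p_{0,\rho_{h,h'}}(l(\sigma_h))$, (A.25)

$l^{(p)}(\sigma_h) \triangleq p_{\rho_{h,h'},\rho_h}(l(\sigma_h))$, (A.26)

$l^{(s)}(\sigma_{h'}) \triangleq p_{J_{h'}}(l(\sigma_{h'}))$, (A.27)

$l^{(f)}(\sigma_{h'}) \triangleq p_{\bar{J}_{h'}}(l(\sigma_{h'}))$. (A.28)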

From (A.25) and (A.26), the label $l(\sigma_h)$ of the state $\sigma_h$ with $l^{(s)}(\sigma_h) = \alpha$ and $l^{(p)}(\sigma_h) = \beta$ is given by

$l(\sigma_h) = \alpha \circ \beta$. (A.29)

The label $l(\sigma_{h'})$ of the state $\sigma_{h'}$ with $l^{(s)}(\sigma_{h'}) = \alpha$ and $l^{(f)}(\sigma_{h'}) = \gamma$ can be easily obtained from $\alpha$ and $\gamma$ using (A.27) and (A.28).

By summarizing (A.14) to (A.16), (A.22) to (A.26) and (A.28), a condition for the adjacency between two states at time-$h$ and time-$h'$, and the composite branch label between them (shown in Section 6.3), are given in Theorem A.1.


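Theorem A.1 For $\sigma_h \in \Sigma_h(C)$ and $\sigma_{h'} \in \Sigma_{h'}(C)$ with $0 \le h < h' \le N$, $L(\sigma_h,\sigma_{h'}) \neq \emptyset$ if and only if

$l^{(s)}(\sigma_h) = l^{(s)}(\sigma_{h'})$.

Also, if $L(\sigma_h,\sigma_{h'}) \neq \emptyset$, then $L(\sigma_h,\sigma_{h'})$ is given by

$L(\sigma_h,\sigma_{h'}) = l^{(s)}(\sigma_h)G^{s,s} + l^{(p)}(\sigma_h)G^{s,p} + l^{(f)}(\sigma_{h'})G^{f,s} + C_{h,h'}^{tr}$. (A.30)

△△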

We call $l^{(s)}(\sigma_h)$ (or $l^{(s)}(\sigma_{h'})$) the first label part of $\sigma_h$ (or $\sigma_{h'}$) and $l^{(p)}(\sigma_h)$ (or $l^{(f)}(\sigma_{h'})$) the second label part of $\sigma_h$ (or $\sigma_{h'}$). Now we partition $\Sigma_h(C)$ and $\Sigma_{h'}(C)$ into $2^{\rho_{h,h'}}$ blocks of the same size, respectively, in such a way that two states are in the same block if and only if they have the same first label part. For $\alpha \in \{0,1\}^{\rho_{h,h'}}$, a block, denoted $\Sigma_h^\alpha$ (or $\Sigma_{h'}^\alpha$), in the above partition of $\Sigma_h(C)$ (or $\Sigma_{h'}(C)$) is defined as

$\Sigma_h^\alpha \triangleq \{\sigma_h \in \Sigma_h(C) : l^{(s)}(\sigma_h) = \alpha\}$, (A.31)

$\Sigma_{h'}^\alpha \triangleq \{\sigma_{h'} \in \Sigma_{h'}(C) : l^{(s)}(\sigma_{h'}) = \alpha\}$. (A.32)

The blocks $\Sigma_h^\alpha$ and $\Sigma_{h'}^\alpha$ correspond to $S_L(\sigma_h)$ and $S_R(\sigma_h)$ in Section 6.4, respectively. That is, for any $\alpha \in \{0,1\}^{\rho_{h,h'}}$, a subgraph which consists of the set of states at time-$h$, $\Sigma_h^\alpha$, the set of states at time-$h'$, $\Sigma_{h'}^\alpha$, and all the composite branches between them forms a parallel component. This parallel component is denoted $\Lambda_\alpha$. The total number of parallel components in a trellis section from time-$h$ to time-$h'$ is given by $2^{\rho_{h,h'}}$.

Since all states in $\Sigma_h^\alpha \cup \Sigma_{h'}^\alpha$ have the same first label part $\alpha$, there is a one-to-one mapping, denoted $s_{h,\alpha}$, from the set of the second label parts of the states in $\Sigma_h^\alpha$, denoted $L_h$, to $\Sigma_h^\alpha$, and there is a one-to-one mapping, denoted $s_{h',\alpha}$, from the set of the second label parts of the states in $\Sigma_{h'}^\alpha$, denoted $L_{h'}$, to $\Sigma_{h'}^\alpha$. Then, $L_h = \{0,1\}^{\rho_h-\rho_{h,h'}}$, $L_{h'} = \{0,1\}^{\rho_{h'}-\rho_{h,h'}}$, and $s_{h,\alpha}$ and $s_{h',\alpha}$ are the inverse mappings of $l^{(p)}$ and $l^{(f)}$, respectively.
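The grouping of states into parallel components by their first label part can be sketched as follows. This is a minimal Python illustration, not the authors' implementation: it assumes that the first label part of a state at time-h is the leading part of its label, that the first label part of a state at time-h' sits at the 1-based positions listed in a set J, and it uses small hypothetical parameters.

```python
from itertools import product

def first_part_h(label, rho_hh):
    """First label part of a state at time-h: the leading rho_hh label bits."""
    return tuple(label[:rho_hh])

def first_part_hp(label, J):
    """First label part of a state at time-h': the label bits at positions J."""
    return tuple(label[j - 1] for j in J)

def parallel_components(rho_h, rho_hp, rho_hh, J):
    """Map each first label part alpha to (states at time-h, states at time-h')."""
    comps = {alpha: ([], []) for alpha in product((0, 1), repeat=rho_hh)}
    for lab in product((0, 1), repeat=rho_h):
        comps[first_part_h(lab, rho_hh)][0].append(lab)
    for lab in product((0, 1), repeat=rho_hp):
        comps[first_part_hp(lab, J)][1].append(lab)
    return comps

# Hypothetical section: 8 states at time-h, 4 states at time-h', one shared row.
comps = parallel_components(rho_h=3, rho_hp=2, rho_hh=1, J=[2])
for alpha, (left, right) in comps.items():
    print(alpha, len(left), len(right))
```

Two states are adjacent exactly when they land in the same dictionary entry, so the number of composite branches in the section is the sum over entries of the product of the two list lengths.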

A.3 TRELLIS CONSTRUCTION

Now consider how to construct a trellis section. When we use a trellis diagram

for decoding, we have to store the state metric for each state. Therefore, we


must assign memory for each state. For a state $\sigma$, we use the label $l(\sigma)$ as the index of the allocated memory, because: (1) $l(\sigma)$ is the shortest possible label for $\sigma$ and is easy to obtain, and (2) as shown in Theorem A.1, the complete information on $L(\sigma_h,\sigma_{h'})$ is provided in a very simple way by $l^{(s)}(\sigma_h)$, $l^{(p)}(\sigma_h)$, $l^{(s)}(\sigma_{h'})$ and $l^{(f)}(\sigma_{h'})$, which can be readily derived from $l(\sigma_h)$ and $l(\sigma_{h'})$ by (A.25) to (A.28).

If instead we use the state labeling by parity-check matrix for the states at time-$h$, the label length becomes $N-K$. Since $\rho_h \le \min\{K, N-K\}$ (see (5.4)), if $\rho_h < N-K$, a linear mapping from $\{0,1\}^{N-K}$ to $\{0,1\}^{\rho_h}$, which depends on $h$ in general, is necessary to obtain the index of the memory.

The next problem is how to generate branch metrics of adjacent state pairs and give access to them. The set of composite branch labels of the section from time-$h$ to time-$h'$, denoted $L_{h,h'}$, is the set of the following cosets (see (3.18)):

$L_{h,h'} = p_{h,h'}(C)/C_{h,h'}^{tr}$. (A.33)

It follows from (A.30) that

$L_{h,h'} = \{\alpha G^{s,s} + \beta G^{s,p} + \gamma G^{f,s} + C_{h,h'}^{tr} : \alpha \in \{0,1\}^{\rho_{h,h'}}, \beta \in \{0,1\}^{\rho_h-\rho_{h,h'}}, \gamma \in \{0,1\}^{\rho_{h'}-\rho_{h,h'}}\}$. (A.34)

Each composite branch label appears

$2^{K-k(C_{0,h})-k(C_{h',N})-k(p_{h,h'}(C))}$

times in the trellis section (see (6.13)).

Next we consider how to generate all the composite branch labels in $L_{h,h'}$ given by (A.34) without duplication. Suppose we choose submatrices $G_1^{s,s}$ of $G^{s,s}$, $G_1^{s,p}$ of $G^{s,p}$ and $G_1^{f,s}$ of $G^{f,s}$ such that the rows in $G_1^{s,s}$, $G_1^{s,p}$, $G_1^{f,s}$ and $G^{f,p}$ are linearly independent and

$L_{h,h'} = \{\alpha_1 G_1^{s,s} + \beta_1 G_1^{s,p} + \gamma_1 G_1^{f,s} + C_{h,h'}^{tr} : \alpha_1 \in \{0,1\}^{r_{s,s}}, \beta_1 \in \{0,1\}^{r_{s,p}}, \gamma_1 \in \{0,1\}^{r_{f,s}}\}$, (A.35)

where $r_{s,s}$, $r_{s,p}$ and $r_{f,s}$ denote the numbers of rows of $G_1^{s,s}$, $G_1^{s,p}$ and $G_1^{f,s}$, respectively. If we generate composite branch labels by using the right-hand side of (A.35) and store $\alpha_1 G_1^{s,s} + \beta_1 G_1^{s,p} + \gamma_1 G_1^{f,s} + C_{h,h'}^{tr}$ into a memory indexed


with $(\alpha_1, \beta_1, \gamma_1)$, then the duplication can be avoided. The next requirement is that the index $(\alpha_1, \beta_1, \gamma_1)$ can be readily obtained from $\alpha$, $\beta$ and $\gamma$. To provide a good solution of the above problem, we first analyze the linear dependency of rows in $p_{h,h'}(G)$.

For a TOGM $G$, $p_{h,h'}(G)$ consists of the disjoint submatrices $G^{s,s}$, $G^{s,p}$, $G^{f,s}$, $G^{f,p}$ and the all-zero submatrices $p_{h,h'}(G_h^p)$ and $p_{h,h'}(G_{h'}^f)$.

Lemma A.2 In $p_{h,h'}(G)$: (1) the rows in submatrices $G^{f,s}$ and $G^{f,p}$ are linearly independent; and (2) the rows in submatrices $G^{s,p}$ and $G^{f,p}$ are linearly independent.

Proof: If the $i$-th row of $G$ is in $G^{f,s}$ or $G^{f,p}$, then

$h < \mathrm{ld}(i) \le h'$. (A.36)

Similarly, if the $i$-th row of $G$ is in $G^{s,p}$ or $G^{f,p}$, then

$h < \mathrm{tr}(i) \le h'$. (A.37)

Hence, (1) and (2) of the lemma follow from (A.1) and (A.2), respectively.

△△

For two binary $r \times m$ matrices $M$ and $M'$ and a binary linear block code $C_0$ of length $m$, we write

$M \equiv M' \pmod{C_0}$

if and only if every row in the matrix $M - M'$ is in $C_0$, where "$-$" denotes the component-wise subtraction.

Partition $G^{f,s}$ into two submatrices $G_0^{f,s}$ and $G_1^{f,s}$ (see Figure A.1) by partitioning the rows of $G^{f,s}$ in such a way that

(1) the rows in $G_1^{f,s}$, $G^{s,p}$ and $G^{f,p}$ are linearly independent, and

(2) each row of $G_0^{f,s}$ is a linear combination of rows in $G_1^{f,s}$, $G^{s,p}$ and $G^{f,p}$.

Let $\nu_1$ denote the number of rows of $G_1^{f,s}$, and define $\nu_0 \triangleq \rho_{h'} - \rho_{h,h'} - \nu_1$, which is the number of rows of $G_0^{f,s}$.

Let $e_1, e_2, \ldots, e_{\nu_0}$ be the first to the last rows of $G_0^{f,s}$. From conditions (1) and (2) of the partitioning of $G^{f,s}$, there are unique $v_i^{(1)} \in \{0,1\}^{\nu_1}$, $v_i^{(2)} \in \{0,1\}^{\rho_h-\rho_{h,h'}}$ and $u_i \in C_{h,h'}^{tr}$ such that

$e_i = v_i^{(1)} G_1^{f,s} + v_i^{(2)} G^{s,p} + u_i$, for $1 \le i \le \nu_0$.


Figure A.1. Further partitioning of the TOGM $G$.

Let $E^{(1)}$ denote the $\nu_0 \times \nu_1$ matrix whose $i$-th row is $v_i^{(1)}$ and $E^{(2)}$ denote the $\nu_0 \times (\rho_h - \rho_{h,h'})$ matrix whose $i$-th row is $v_i^{(2)}$. Then, we have

$G_0^{f,s} \equiv E^{(1)} G_1^{f,s} + E^{(2)} G^{s,p} \pmod{C_{h,h'}^{tr}}$. (A.38)

$G_0^{f,s}$, $G_1^{f,s}$, $E^{(1)}$ and $E^{(2)}$ can be efficiently derived by using standard row operations.
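One way to carry out such a row partition is a greedy pass of Gaussian elimination over GF(2). The Python sketch below is an assumption-laden illustration, not the procedure of the original text: rows are encoded as integer bit masks, `base` stands for the rows that must stay independent of the chosen ones (for example the rows of the s,p and f,p submatrices), and the function names are hypothetical. It returns only the two row number sets, not the coefficient matrices.

```python
def reduce_vec(v, pivots):
    """Reduce the GF(2) vector v (an int bit mask) by the stored pivot rows."""
    while v:
        top = v.bit_length() - 1
        if top not in pivots:
            return v          # leading bit has no pivot: v is independent
        v ^= pivots[top]      # cancel the leading bit and continue
    return 0                  # v reduced to zero: v is a linear combination

def split_rows(rows, base):
    """Return (independent, dependent) 1-based row numbers of rows, given base."""
    pivots = {}
    for b in base:
        r = reduce_vec(b, pivots)
        if r:
            pivots[r.bit_length() - 1] = r
    independent, dependent = [], []
    for idx, row in enumerate(rows, start=1):
        r = reduce_vec(row, pivots)
        if r:
            pivots[r.bit_length() - 1] = r
            independent.append(idx)   # joins the "1" submatrix
        else:
            dependent.append(idx)     # joins the "0" submatrix
    return independent, dependent

# Third row is the sum of the first two, so it is dependent.
print(split_rows([0b1100, 0b0110, 0b1010], base=[0b0011]))  # ([1, 2], [3])
```

Tracking which pivot rows were combined during each reduction would additionally yield the coefficient rows; that bookkeeping is omitted here to keep the sketch short.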

Next, partition $G^{s,s}$ into two submatrices $G_0^{s,s}$ and $G_1^{s,s}$ (see Figure A.1) by partitioning the rows of $G^{s,s}$ in such a way that:

(1) the rows in $G_1^{s,s}$, $G_1^{f,s}$, $G^{s,p}$ and $G^{f,p}$ are linearly independent; and

(2) each row of $G_0^{s,s}$ is a linear combination of rows in $G_1^{s,s}$, $G_1^{f,s}$, $G^{s,p}$ and $G^{f,p}$.


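Let $\lambda_1$ denote the number of rows of $G_1^{s,s}$, and define $\lambda_0 \triangleq \rho_{h,h'} - \lambda_1$, which is the number of rows of $G_0^{s,s}$. In a similar way as the derivation of (A.38), we can find a unique $\lambda_0 \times \lambda_1$ matrix $F^{(1)}$, a unique $\lambda_0 \times \nu_1$ matrix $F^{(2)}$ and a unique $\lambda_0 \times (\rho_h - \rho_{h,h'})$ matrix $F^{(3)}$ such that

$G_0^{s,s} \equiv F^{(1)} G_1^{s,s} + F^{(2)} G_1^{f,s} + F^{(3)} G^{s,p} \pmod{C_{h,h'}^{tr}}$. (A.39)

Let $R$ and $Q$ denote the row number sets of $G_1^{f,s}$ and $G_1^{s,s}$, respectively. Define $\bar{R} \triangleq \{1,2,\ldots,\rho_{h'}-\rho_{h,h'}\} \setminus R$ and $\bar{Q} \triangleq \{1,2,\ldots,\rho_{h,h'}\} \setminus Q$. Then, from (A.9),

$\alpha G^{s,s} = p_{\bar{Q}}(\alpha) G_0^{s,s} + p_Q(\alpha) G_1^{s,s}$, for $\alpha \in \{0,1\}^{\rho_{h,h'}}$, (A.40)

$\gamma G^{f,s} = p_{\bar{R}}(\gamma) G_0^{f,s} + p_R(\gamma) G_1^{f,s}$, for $\gamma \in \{0,1\}^{\rho_{h'}-\rho_{h,h'}}$. (A.41)

It follows from (A.38) to (A.41) that for $\alpha \in \{0,1\}^{\rho_{h,h'}}$, $\beta \in \{0,1\}^{\rho_h-\rho_{h,h'}}$ and $\gamma \in \{0,1\}^{\rho_{h'}-\rho_{h,h'}}$,

$\alpha G^{s,s} + \beta G^{s,p} + \gamma G^{f,s} + C_{h,h'}^{tr}$
$= (p_Q(\alpha) + p_{\bar{Q}}(\alpha) F^{(1)}) G_1^{s,s}$
$+ (p_{\bar{Q}}(\alpha) F^{(3)} + p_{\bar{R}}(\gamma) E^{(2)} + \beta) G^{s,p}$
$+ (p_{\bar{Q}}(\alpha) F^{(2)} + p_R(\gamma) + p_{\bar{R}}(\gamma) E^{(1)}) G_1^{f,s} + C_{h,h'}^{tr}$. (A.42)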

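Define

$f^{(1)}(\alpha) \triangleq p_Q(\alpha) + p_{\bar{Q}}(\alpha) F^{(1)}$, (A.43)

$f^{(2)}(\alpha,\beta,\gamma) \triangleq p_{\bar{Q}}(\alpha) F^{(3)} + p_{\bar{R}}(\gamma) E^{(2)} + \beta$, (A.44)

$f^{(3)}(\alpha,\gamma) \triangleq p_{\bar{Q}}(\alpha) F^{(2)} + p_R(\gamma) + p_{\bar{R}}(\gamma) E^{(1)}$. (A.45)

When $\alpha$, $\beta$ and $\gamma$ run over $\{0,1\}^{\rho_{h,h'}}$, $\{0,1\}^{\rho_h-\rho_{h,h'}}$ and $\{0,1\}^{\rho_{h'}-\rho_{h,h'}}$, respectively, $f^{(1)}(\alpha)$, $f^{(2)}(\alpha,\beta,\gamma)$ and $f^{(3)}(\alpha,\gamma)$ run over $\{0,1\}^{\lambda_1}$, $\{0,1\}^{\rho_h-\rho_{h,h'}}$ and $\{0,1\}^{\nu_1}$, respectively. Hence, the set $L_{h,h'}$ of all composite branch labels is given by

$L_{h,h'} = \{\alpha_1 G_1^{s,s} + \beta_1 G^{s,p} + \gamma_1 G_1^{f,s} + C_{h,h'}^{tr} : \alpha_1 \in \{0,1\}^{\lambda_1}, \beta_1 \in \{0,1\}^{\rho_h-\rho_{h,h'}}, \gamma_1 \in \{0,1\}^{\nu_1}\}$. (A.46)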

Since the rows in $G_1^{s,s}$, $G^{s,p}$, $G_1^{f,s}$ and $G^{f,p}$ are linearly independent, for $(\alpha_1,\beta_1,\gamma_1) \neq (\alpha_1',\beta_1',\gamma_1')$, the cosets $\alpha_1 G_1^{s,s} + \beta_1 G^{s,p} + \gamma_1 G_1^{f,s} + C_{h,h'}^{tr}$ and $\alpha_1' G_1^{s,s} + \beta_1' G^{s,p} + \gamma_1' G_1^{f,s} + C_{h,h'}^{tr}$ are disjoint. Consequently, the set of composite branch labels of a parallel component $\Lambda_\alpha$ with $\alpha \in \{0,1\}^{\rho_{h,h'}}$, denoted $L_\alpha$, is given by

$L_\alpha = \{\alpha_1 G_1^{s,s} + \beta_1 G^{s,p} + \gamma_1 G_1^{f,s} + C_{h,h'}^{tr} : \beta_1 \in \{0,1\}^{\rho_h-\rho_{h,h'}}, \gamma_1 \in \{0,1\}^{\nu_1}\}$, (A.47)

where $\alpha_1 = f^{(1)}(\alpha) = p_Q(\alpha) + p_{\bar{Q}}(\alpha) F^{(1)}$.

Theorem A.2 Let $Q$ denote the row number set of $G_1^{s,s}$, and define $\bar{Q} \triangleq \{1,2,\ldots,\rho_{h,h'}\} \setminus Q$. Two parallel components $\Lambda_\alpha$ and $\Lambda_{\alpha'}$ with $\alpha$ and $\alpha'$ in $\{0,1\}^{\rho_{h,h'}}$ are isomorphic up to composite branch labels, if and only if

$p_Q(\alpha + \alpha') = p_{\bar{Q}}(\alpha + \alpha') F^{(1)}$, (A.48)

where $F^{(1)}$ is defined in (A.39). If (A.48) does not hold, $L_\alpha$ and $L_{\alpha'}$ are disjoint.

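Proof: (1) The only-if part: If $\alpha_1 \triangleq p_Q(\alpha) + p_{\bar{Q}}(\alpha) F^{(1)} \neq \alpha_1' \triangleq p_Q(\alpha') + p_{\bar{Q}}(\alpha') F^{(1)}$, then $L_\alpha$ and $L_{\alpha'}$ are mutually disjoint from (A.47).

(2) The if part: Suppose that (A.48) holds, that is, $\alpha_1 = \alpha_1'$. Let $l_{\alpha+\alpha'}$ denote the binary $(\rho_{h'}-\rho_{h,h'})$-tuple such that

$p_R(l_{\alpha+\alpha'}) = p_{\bar{Q}}(\alpha + \alpha') F^{(2)}$, (A.49)

$p_{\bar{R}}(l_{\alpha+\alpha'}) = 0$. (A.50)

For any given state pair $(\sigma_h, \sigma_{h'}) \in \Sigma_h^\alpha \times \Sigma_{h'}^\alpha$, define $(\sigma_h', \sigma_{h'}') \in \Sigma_h^{\alpha'} \times \Sigma_{h'}^{\alpha'}$ as

$l^{(p)}(\sigma_h') = l^{(p)}(\sigma_h) + p_{\bar{Q}}(\alpha + \alpha') F^{(3)}$, i.e., $\sigma_h' = s_{h,\alpha'}(l^{(p)}(\sigma_h) + p_{\bar{Q}}(\alpha + \alpha') F^{(3)})$, (A.51)

$l^{(f)}(\sigma_{h'}') = l^{(f)}(\sigma_{h'}) + l_{\alpha+\alpha'}$, i.e., $\sigma_{h'}' = s_{h',\alpha'}(l^{(f)}(\sigma_{h'}) + l_{\alpha+\alpha'})$. (A.52)

Then, $f^{(1)}(\alpha) = f^{(1)}(\alpha')$, $f^{(2)}(\alpha, l^{(p)}(\sigma_h), l^{(f)}(\sigma_{h'})) = f^{(2)}(\alpha', l^{(p)}(\sigma_h'), l^{(f)}(\sigma_{h'}'))$ and $f^{(3)}(\alpha, l^{(f)}(\sigma_{h'})) = f^{(3)}(\alpha', l^{(f)}(\sigma_{h'}'))$. Hence, it follows from (A.30), and (A.42) to (A.45) that

$L(\sigma_h, \sigma_{h'}) = L(\sigma_h', \sigma_{h'}')$.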


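Note that when $\sigma_h$ runs over $\Sigma_h^\alpha$, $l^{(p)}(\sigma_h)$ runs over $L_h = \{0,1\}^{\rho_h-\rho_{h,h'}}$ and therefore, $s_{h,\alpha'}(l^{(p)}(\sigma_h) + p_{\bar{Q}}(\alpha + \alpha') F^{(3)})$ defines a permutation of $\Sigma_h^{\alpha'}$. Similarly, $s_{h',\alpha'}(l^{(f)}(\sigma_{h'}) + l_{\alpha+\alpha'})$ defines a permutation of $\Sigma_{h'}^{\alpha'}$.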

Corollary A.1 The block of the isomorphic parallel components containing $\Lambda_\alpha$ is given by

$\{\Lambda_{\alpha+\alpha'} : p_Q(\alpha') = p_{\bar{Q}}(\alpha') F^{(1)}, p_{\bar{Q}}(\alpha') \in \{0,1\}^{\lambda_0}\}$. (A.53)

Each block of the partition consists of $2^{\lambda_0}$ identical parallel components, where $\lambda_0$ is the number of rows of $G_0^{s,s}$.

△△

It is shown in [44] that $\lambda_0$ is equal to $\lambda_{h,h'}(C)$ defined by (6.36).

Example A.1 Consider the RM$_{3,6}$ code, which is a (64, 42) code. The second section of the 8-section minimal trellis diagram $T(\{0,8,16,\ldots,64\})$ for this code consists of 16 parallel components, and they are partitioned into two blocks. Each block of the partition consists of $2^3$ identical parallel components. Each parallel component has 8 states at time 8 and 64 states at time 16. Hence, there are $2^{13} = 16 \times 8 \times 64$ composite branches in this trellis section. However, there are only $2^{10} = 2 \times 8 \times 64$ different composite branch labels.

△△
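The counts quoted in Example A.1 can be checked with a few lines of arithmetic. The Python sketch below only restates the numbers given in the example (16 parallel components, 2 blocks, 8 and 64 states per component); the function name `section_counts` is hypothetical.

```python
def section_counts(components, blocks, states_h, states_hp):
    """Return (total composite branches, distinct labels, components per block)."""
    total = components * states_h * states_hp   # every state pair in a component
    distinct = blocks * states_h * states_hp    # one label set per block
    return total, distinct, components // blocks

total, distinct, per_block = section_counts(16, 2, 8, 64)
print(total == 2 ** 13, distinct == 2 ** 10, per_block == 2 ** 3)  # True True True
```

Storing one label set per block therefore needs one eighth of the memory that storing a label for every composite branch would need in this section.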

A.4 AN EFFICIENT TRELLIS CONSTRUCTION PROCEDURE

In this section, an efficient procedure for constructing the trellis section from time-$h$ to time-$h'$ is presented. First, we present a subprocedure, denoted GenerateCBL($\alpha_1$), that generates the set of composite branch labels for a representative parallel component $\Lambda_\alpha$ in a block. From Corollary A.1, we can choose the parallel component $\Lambda_\alpha$ with $p_{\bar{Q}}(\alpha) = 0$ as the representative (in (A.53), for any $\alpha$, $\Lambda_{\alpha+\alpha'}$ with $p_{\bar{Q}}(\alpha') = p_{\bar{Q}}(\alpha)$ is such one). Let $\alpha_1 \triangleq p_Q(\alpha)$. Then, the subprocedure, GenerateCBL($\alpha_1$), generates the set of the composite branch labels of the parallel component $\Lambda_\alpha$, denoted $L_{\alpha_1}$:

$L_{\alpha_1} = \{\alpha_1 G_1^{s,s} + \gamma_1 G_1^{f,s} + \beta G^{s,p} + C_{h,h'}^{tr} : \gamma_1 \in \{0,1\}^{\nu_1}, \beta \in \{0,1\}^{\rho_h-\rho_{h,h'}}\}$,


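and stores each composite branch label $\alpha_1 G_1^{s,s} + \gamma_1 G_1^{f,s} + \beta G^{s,p} + C_{h,h'}^{tr}$ in the memory at the index $\alpha_1 \circ \gamma_1 \circ \beta$. This makes it possible to store the composite branch labels in the parallel component at consecutive memory locations.

It follows from (A.42) that for $\sigma_h \in \Sigma_h^\alpha$ and $\sigma_{h'} \in \Sigma_{h'}^\alpha$ with $l^{(s)}(\sigma_h) = \alpha$, $l^{(p)}(\sigma_h) = \beta$ and $l^{(f)}(\sigma_{h'}) = \gamma$, the composite branch label, $L(\sigma_h,\sigma_{h'}) = \alpha G^{s,s} + \gamma G^{f,s} + \beta G^{s,p} + C_{h,h'}^{tr}$ (or the maximum metric of $L(\sigma_h,\sigma_{h'})$) is stored in the memory with the following index:

$\mathrm{indx}(\alpha,\beta,\gamma) \triangleq (p_Q(\alpha) + p_{\bar{Q}}(\alpha) F^{(1)}) \circ (p_{\bar{Q}}(\alpha) F^{(2)} + p_R(\gamma) + p_{\bar{R}}(\gamma) E^{(1)}) \circ (p_{\bar{Q}}(\alpha) F^{(3)} + p_{\bar{R}}(\gamma) E^{(2)} + \beta)$. (A.54)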

[Trellis Construction Procedure Using Isomorphic Parallel Components]

For every $\alpha_1 \in \{0,1\}^{\lambda_1}$ {
    Construct $L_{\alpha_1}$ by executing GenerateCBL($\alpha_1$).
    (* Construct isomorphic parallel components. *)
    For every $\alpha_0 \in \{0,1\}^{\lambda_0}$ {
        Let $\alpha$ be an element in $\{0,1\}^{\rho_{h,h'}}$ such that $p_Q(\alpha) = \alpha_1 + \alpha_0 F^{(1)}$ and $p_{\bar{Q}}(\alpha) = \alpha_0$.
        Construct $\Lambda_\alpha$ by executing the ConstructΛ($\alpha$) subprocedure stated below.
    }
}

△△

The following subprocedure ConstructΛ($\alpha$) to construct $\Lambda_\alpha$ is one to list

$(l(\sigma_h), l(\sigma_{h'}), \mathrm{indx}(l^{(s)}(\sigma_h), l^{(p)}(\sigma_h), l^{(f)}(\sigma_{h'})))$

for every state pair $(\sigma_h, \sigma_{h'}) \in \Sigma_h^\alpha \times \Sigma_{h'}^\alpha$.

Subprocedure ConstructΛ($\alpha$):
(* Construct a parallel component $\Lambda_\alpha$. *)
For every $\gamma \in \{0,1\}^{\rho_{h'}-\rho_{h,h'}}$ {
    For every $\beta \in \{0,1\}^{\rho_h-\rho_{h,h'}}$ {
        Output $(l(s_{h,\alpha}(\beta)), l(s_{h',\alpha}(\gamma)), \mathrm{indx}(\alpha,\beta,\gamma))$.
    }
}

△△

Note that the labels, $l(s_{h,\alpha}(\beta))$, $l(s_{h',\alpha}(\gamma))$ and $\mathrm{indx}(\alpha,\beta,\gamma)$ are given by (A.29), (A.27) and (A.28), and (A.54), respectively.
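The outer two loops of the construction procedure, which enumerate the parallel components block by block, can be sketched in Python as follows. This is a hedged illustration rather than the authors' code: `F1` stands for the matrix F^(1) given as a list of rows, `Q` and `Qbar` are 1-based position lists, and the small values used in the example are hypothetical.

```python
from itertools import product

def vec_mat(v, M, ncols):
    """Row vector v times matrix M over GF(2); M is a list of rows."""
    out = [0] * ncols
    for bit, row in zip(v, M):
        if bit:
            out = [o ^ r for o, r in zip(out, row)]
    return tuple(out)

def isomorphic_blocks(F1, Q, Qbar, rho_hh):
    """Group the first label parts alpha into blocks indexed by alpha_1."""
    lam1, lam0 = len(Q), len(Qbar)
    blocks = {}
    for a1 in product((0, 1), repeat=lam1):
        members = []
        for a0 in product((0, 1), repeat=lam0):
            shift = vec_mat(a0, F1, lam1)     # alpha_0 F^(1)
            alpha = [0] * rho_hh
            for pos, x, s in zip(Q, a1, shift):
                alpha[pos - 1] = x ^ s        # p_Q(alpha) = alpha_1 + alpha_0 F^(1)
            for pos, x in zip(Qbar, a0):
                alpha[pos - 1] = x            # p_Qbar(alpha) = alpha_0
            members.append(tuple(alpha))
        blocks[a1] = members
    return blocks

blocks = isomorphic_blocks(F1=[[1, 0]], Q=[1, 3], Qbar=[2], rho_hh=3)
print(blocks[(0, 0)])  # [(0, 0, 0), (1, 1, 0)]
```

Each dictionary entry lists the components that share one set of composite branch labels, so a label generator needs to run only once per key.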

REFERENCES

[1] L.R. Bahl, J. Cocke, F. Jelinek, and J. Raviv, "Optimal decoding of linear codes for minimizing symbol error rate," IEEE Trans. Inform. Theory, vol.IT-20, pp.284-287, 1974.

[2] A.H. Banihashemi and I.F. Blake, "Trellis complexity and minimal trellis diagrams of lattices," IEEE Trans. Inform. Theory, submitted for publication, 1996.

[3] G. Battail, "Pondération des symboles décodés par l'algorithme de Viterbi," Ann. Télécommun., vol.42, pp.31-38, 1987.

[4] E. Berlekamp, Algebraic Coding Theory, Aegean Park Press, Laguna Hills, Revised edition, 1984.

[5] Y. Berger and Y. Be'ery, "Bounds on the trellis size of linear block codes," IEEE Trans. Inform. Theory, vol.39, pp.203-209, 1993.

[6] Y. Berger and Y. Be'ery, "Soft trellis-based decoder for linear block codes," IEEE Trans. Inform. Theory, vol.40, pp.764-773, 1994.

[7] Y. Berger and Y. Be'ery, "Trellis-oriented decomposition and trellis-complexity of composite length cyclic codes," IEEE Trans. Inform. Theory, vol.41, pp.1185-1191, 1995.

[8] Y. Berger and Y. Be'ery, "The twisted squaring construction, trellis complexity and generalized weights of BCH and QR codes," IEEE Trans. Inform. Theory, vol.42, pp.1817-1827, 1996.

[9] C. Berrou, P. Adde, E. Angui and S. Faudeil, "A low complexity soft-output Viterbi decoder architecture," in Proc. of ICC, pp.737-740, 1993.

[10] P.J. Black and T.H. Meng, "A 140-Mb/s, 32-state, radix-4, Viterbi decoder," IEEE J. Solid-State Circuits, vol.27, December 1992.

[11] R.E. Blahut, Theory and Practice of Error Correcting Codes, Addison-Wesley, Reading MA, 1984.

[12] I.F. Blake and V. Tarokh, "On the trellis complexity of the densest lattice packings in $R^n$," SIAM J. Discrete Math., vol.9, pp.597-601, 1996.

[13] A.R. Calderbank, "Multilevel codes and multi-stage decoding," IEEE Trans. Commun., vol.37, no.3, pp.222-229, March 1989.

[14] D. Chase, "A class of algorithms for decoding block codes with channel measurement information," IEEE Trans. Inform. Theory, vol.18, no.1, pp.170-181, January 1972.

[15] J.F. Cheng and R.J. McEliece, "Near capacity codes for the Gaussian channel based on low-density generator matrices," in Proc. 34-th Allerton Conf. on Comm., Control, and Computing, Monticello, IL., pp.494-503, October 1996.

[16] G.C. Clark and J.B. Cain, Error Correcting Codes for Digital Communications, Plenum Press, New York, 1981.

[17] Y. Desaki, T. Fujiwara and T. Kasami, "A Method for Computing the Weight Distribution of a Block Code by Using Its Trellis Diagram," IEICE Trans. Fundamentals, vol.E77-A, no.8, pp.1230-1237, August 1994.

[18] S. Dolinar, L. Ekroot, A.B. Kiely, R.J. McEliece, and W. Lin, "The permutation trellis complexity of linear block codes," in Proc. 32-nd Allerton Conf. on Comm., Control, and Computing, Monticello, IL., pp.60-74, September 1994.

[19] A. Engelhart, M. Bossert, and J. Maucher, "Heuristic algorithms for ordering a linear block code to reduce the number of nodes of the minimal trellis," IEEE Trans. Inform. Theory, submitted for publication, 1996.

[20] J. Feigenbaum, G.D. Forney Jr., B.H. Marcus, R.J. McEliece, and A. Vardy, Special issue on "Codes and Complexity," IEEE Trans. Inform. Theory, vol.42, November 1996.

[21] G. Fettweis and H. Meyr, "Parallel Viterbi algorithm implementation: breaking the ACS-bottleneck," IEEE Trans. Commun., vol.37, no.8, pp.785-789, August 1989.

[22] G.D. Forney Jr., Concatenated Codes, MIT Press, Cambridge, MA, 1966.

[23] G.D. Forney Jr., "The Viterbi algorithm," Proc. IEEE, vol.61, pp.268-278, 1973.

[24] G.D. Forney Jr., "Coset codes II: Binary lattices and related codes," IEEE Trans. Inform. Theory, vol.34, pp.1152-1187, 1988.

[25] G.D. Forney Jr., "Dimension/length profiles and trellis complexity of linear block codes," IEEE Trans. Inform. Theory, vol.40, pp.1741-1752, 1994.

[26] G.D. Forney Jr., "Dimension/length profiles and trellis complexity of lattices," IEEE Trans. Inform. Theory, vol.40, pp.1753-1772, 1994.

[27] G.D. Forney Jr., "The forward-backward algorithm," in Proc. 34-th Allerton Conf. on Comm., Control, and Computing, Monticello, IL., pp.432-446, October 1996.

[28] G.D. Forney, Jr. and M.D. Trott, "The dynamics of group codes: state spaces, trellis diagrams and canonical encoders," IEEE Trans. Inform. Theory, vol.39, pp.1491-1513, 1993.

[29] G.D. Forney, Jr. and M.D. Trott, "The dynamics of group codes: dual group codes and systems," preprint.

[30] M.P.C. Fossorier and S. Lin, "Coset codes viewed as terminated convolutional codes," IEEE Trans. Comm., vol.44, pp.1096-1106, September 1996.

[31] M.P.C. Fossorier and S. Lin, "Some decomposable codes: the $|a + x|b + x|a + b + x|$ construction," IEEE Trans. Inform. Theory, vol.IT-43, pp.1663-1667, September 1997.

[32] M.P.C. Fossorier and S. Lin, "Differential trellis decoding of convolutional codes," IEEE Trans. Inform. Theory, submitted for publication.

[33] M.P.C. Fossorier, S. Lin, and D. Rhee, "Bit error probability for maximum likelihood decoding of linear block codes," in Proc. International Symposium on Inform. Theory and its Applications, Victoria, Canada, pp.606-609, September 1996, and submitted to IEEE Trans. Inform. Theory, June 1996.

[34] M.P.C. Fossorier, F. Burkert, S. Lin, and J. Hagenauer, "On the equivalence between SOVA and Max-Log-MAP decoding," IEEE Comm. Letters, submitted for publication, August 1997.

[35] B.J. Frey and F.R. Kschischang, "Probability propagation and iterative decoding," in Proc. 34-th Allerton Conf. on Comm., Control, and Computing, Monticello, IL., pp.482-493, October 1996.

[36] T. Fujiwara, T. Kasami, R.H. Morelos-Zaragoza, and S. Lin, "The state complexity of trellis diagrams for a class of generalized concatenated codes," in Proc. 1994 IEEE International Symposium on Inform. Theory, Trondheim, Norway, p.471, June/July, 1994.

[37] T. Fujiwara, H. Yamamoto, T. Kasami, and S. Lin, "A trellis-based recursive maximum likelihood decoding algorithm for linear codes," IEEE Trans. Inform. Theory, vol.44, to appear, 1998.

[38] P.G. Gulak and T. Kailath, "Locally connected VLSI architectures for the Viterbi algorithms," IEEE J. Select. Areas Commun., vol.6, pp.526-637, April 1988.

[39] J. Hagenauer and P. Hoeher, "A Viterbi algorithm with soft-decision outputs and its applications," in Proc. IEEE Globecom Conference, Dallas, TX, pp.1680-1686, November 1989.

[40] J. Hagenauer, E. Offer, and L. Papke, "Iterative decoding of binary block and convolutional codes," IEEE Trans. Inform. Theory, vol.42, pp.429-445, March 1996.

[41] B. Honary and G. Markarian, Trellis decoding of block codes: a practical approach, Kluwer Academic Publishers, Boston, MA, 1997.

[42] G.B. Horn and F.R. Kschischang, "On the intractability of permuting a block code to minimize trellis complexity," IEEE Trans. Inform. Theory, vol.42, pp.2042-2048, 1996.

[43] H. Imai and S. Hirakawa, "A new multilevel coding method using error correcting codes," IEEE Trans. Inform. Theory, vol.23, no.3, May 1977.

[44] T. Kasami, T. Fujiwara, Y. Desaki, and S. Lin, "On branch labels of parallel components of the L-section minimal trellis diagrams for binary linear block codes," IEICE Trans. Fundamentals, vol.E77-A, no.6, pp.1058-1068, June 1994.

[45] T. Kasami, T. Takata, T. Fujiwara, and S. Lin, "On the optimum bit orders with respect to the state complexity of trellis diagrams for binary linear codes," IEEE Trans. Inform. Theory, vol.39, no.1, pp.242-243, January 1993.

[46] T. Kasami, T. Takata, T. Fujiwara, and S. Lin, "On complexity of trellis structure of linear block codes," IEEE Trans. Inform. Theory, vol.39, no.3, pp.1057-1064, May 1993.

[47] T. Kasami, T. Takata, T. Fujiwara, and S. Lin, "On structural complexity of the L-section minimal trellis diagrams for binary linear block codes," IEICE Trans. Fundamentals, vol.E76-A, no.9, pp.1411-1421, September 1993.

[48] T. Kasami, T. Koumoto, T. Fujiwara, H. Yamamoto, Y. Desaki and S. Lin, "Low weight subtrellises for binary linear block codes and their applications," IEICE Trans. Fundamentals, vol.E80-A, no.11, pp.2095-2103, November 1997.

[49] T. Kasami, T. Sugita and T. Fujiwara, "The split weight $(w_L, w_R)$ enumeration of Reed-Muller codes for $w_L + w_R < 2d_{\min}$," in Lecture Notes in Comput. Sci. (Proc. 12th International Symposium, AAECC-12), vol.1255, pp.197-211, Springer-Verlag, June 1997.

[50] T. Kasami, H. Tokushige, T. Fujiwara, H. Yamamoto and S. Lin, "A recursive maximum likelihood decoding algorithm for Reed-Muller codes and related codes," Technical Report of NAIST, no.NAIST-IS-97003, January 1997.

[51] A.B. Kiely, S. Dolinar, R.J. McEliece, L. Ekroot, and W. Lin, "Trellis decoding complexity of linear block codes," IEEE Trans. Inform. Theory, vol.42, pp.1687-1697, 1996.

[52] T. Komura, M. Oka, T. Fujiwara, T. Onoye, T. Kasami, and S. Lin, "VLSI architecture of a recursive maximum likelihood decoding algorithm for a (64, 35) subcode of the (64, 42) Reed-Muller code," in Proc. International Symposium on Inform. Theory and Its Applications, Victoria, Canada, pp.709-712, September 1996.

[53] A.D. Kot and C. Leung, "On the construction and dimensionality of linear block code trellises," in Proc. IEEE Int. Symp. Inform. Theory, San Antonio, TX., p.291, 1993.

[54] F.R. Kschischang, "The combinatorics of block code trellises," in Proc. Biennial Symp. on Commun., Queen's University, Kingston, ON., Canada, May 1994.

[55] F.R. Kschischang, "The trellis structure of maximal fixed-cost codes," IEEE Trans. Inform. Theory, vol.42, pp.1828-1838, 1996.

[56] F.R. Kschischang and G.B. Horn, "A heuristic for ordering a linear block code to minimize trellis state complexity," in Proc. 32-nd Allerton Conf. on Comm., Control, and Computing, pp.75-84, Monticello, IL., September 1994.

[57] F.R. Kschischang and V. Sorokine, "On the trellis structure of block codes," IEEE Trans. Inform. Theory, vol.41, pp.1924-1937, 1995.

[58] A. Lafourcade and A. Vardy, "Asymptotically good codes have infinite trellis complexity," IEEE Trans. Inform. Theory, vol.41, pp.555-559, 1995.

[59] A. Lafourcade and A. Vardy, "Lower bounds on trellis complexity of block codes," IEEE Trans. Inform. Theory, vol.41, pp.1938-1954, 1995.

[60] A. Lafourcade and A. Vardy, "Optimal sectionalization of a trellis," IEEE Trans. Inform. Theory, vol.42, pp.689-703, 1996.

[61] L. Lin and R.S. Cheng, "Improvements in SOVA-based decoding for turbo codes," Proc. of ICC, pp.1473-1478, 1997.

[62] S. Lin and D.J. Costello, Jr., Error Control Coding: Fundamentals and Applications, Prentice Hall, Englewood Cliffs, NJ, 1983.

[63] S. Lin, G.T. Uehara, E. Nakamura, and W.P. Chu, "Circuit design approaches for implementation of a subtrellis IC for a Reed-Muller subcode," NASA Technical Report 96-001, February 1996.

[64] C.C. Lu and S.H. Huang, "On bit-level trellis complexity of Reed-Muller codes," IEEE Trans. Inform. Theory, vol.41, pp.2061-2064, 1995.

[65] H.H. Ma and J.K. Wolf, "On tail biting convolutional codes," IEEE Trans. Commun., vol.34, pp.104-111, 1985.

[66] F.J. MacWilliams and N.J.A. Sloane, The Theory of Error Correcting Codes, North-Holland, Amsterdam, 1977.

[67] J.L. Massey, "Foundation and methods of channel encoding," in Proc. Int. Conf. Inform. Theory and Systems, NTG-Fachberichte, Berlin, 1978.

[68] J.L. Massey and M.K. Sain, "Inverses of linear sequential circuits," IEEE Trans. Computers, vol.COMP-17, pp.330-337, April 1968.

[69] R.J. McEliece, "On the BCJR trellis for linear block codes," IEEE Trans. Inform. Theory, vol.42, pp.1072-1092, 1996.

[70] R.J. McEliece and W. Lin, "The trellis complexity of convolutional codes," IEEE Trans. Inform. Theory, vol.42, pp.1855-1864, 1996.

[71] A.M. Michelson and D.F. Freeman, "Viterbi decoding of the (63,57) Hamming codes -- Implementation and performance results," IEEE Trans. Comm., vol.43, pp.2653-2656, 1995.

[72] H.T. Moorthy and S. Lin, "On the labeling of minimal trellises for linear block codes," in Proc. International Symposium on Inform. Theory and its Applications, Sydney, Australia, vol.1, pp.33-38, November 20-24, 1994.

[73] H.T. Moorthy, S. Lin, and G.T. Uehara, "Good trellises for IC implementation of Viterbi decoders for linear block codes," IEEE Trans. Comm., vol.45, pp.52-63, 1997.

[74] H.T. Moorthy and S. Lin, "On the trellis structure of (64, 40, 8) subcode of the (64, 42, 8) third-order Reed-Muller code," NASA Technical Report, 1996.

[75] H.T. Moorthy, S. Lin, and T. Kasami, "Soft-decision decoding of binary linear block codes based on an iterative search algorithm," IEEE Trans. Inform. Theory, vol.43, no.3, pp.1030-1040, May 1997.

[76] R.H. Morelos-Zaragoza, T. Fujiwara, T. Kasami, and S. Lin, "Constructions of generalized concatenated codes and their trellis-based decoding complexity," IEEE Trans. Inform. Theory, submitted for publication, 1996.

[77] D.J. Muder, "Minimal trellises for block codes," IEEE Trans. Inform. Theory, vol.34, pp.1049-1053, 1988.

[78] D.E. Muller, "Application of Boolean algebra to switching circuit design and to error detection," IEEE Trans. Computers, vol.3, pp.6-12, 1954.

[79] J.K. Omura, "On the Viterbi decoding algorithm," IEEE Trans. Inform. Theory, vol.IT-15, pp.177-179, 1969.

[80] L.C. Perez, "Coding and modulation for space and satellite communications," Ph.D Thesis, University of Notre Dame, December 1994.

[81] W.W. Peterson and E.J. Weldon, Jr., Error Correcting Codes, 2nd edition, MIT Press, Cambridge, MA, 1972.

[82] J.G. Proakis, Digital Communications, 3rd edition, McGraw-Hill, New York, 1995.

[83] I.S. Reed, "A class of multiple-error-correcting codes and the decoding scheme," IEEE Trans. Inform. Theory, vol.IT-4, pp.38-49, 1954.

[84] I. Reuven and Y. Be'ery, "Entropy/length profiles and bounds on trellis complexity of nonlinear codes," IEEE Trans. Inform. Theory, submitted for publication, 1996.

[85] D. Rhee and S. Lin, "A low complexity and high performance concatenated scheme for high speed satellite communications," NASA Technical Report, October 1993.

[86] R.Y. Rubinstein, Simulation and the Monte Carlo Method, New York, Wiley, 1981.

[87] G. Schnabl and M. Bossert, "Soft decision decoding of Reed-Muller codes and generalized multiple concatenated codes," IEEE Trans. Inform. Theory, vol.41, no.1, pp.304-308, January 1995.

[88] P. Schuurman, "A table of state complexity bounds for binary linear codes," IEEE Trans. Inform. Theory, vol.42, pp.2034-2042, 1996.

[89] V.R. Sidorenko, "The minimal trellis has maximum Euler characteristic $|V| - |E|$," Problemy Peredachi Informatsii, to appear.

[90] V.R. Sidorenko, G. Markarian, and B. Honary, "Minimal trellis design for linear block codes based on the Shannon product," IEEE Trans. Inform. Theory, vol.42, pp.2048-2053, 1996.

[91] V.R. Sidorenko, I. Martin, and B. Honary, "On separability of nonlinear block codes," IEEE Trans. Inform. Theory, submitted for publication, 1996.

[92] G. Solomon and H.C.A. van Tilborg, "A connection between block and convolutional codes," SIAM J. Appl. Math., vol.37, pp.358-369, 1979.

[93] V. Sorokine, F.R. Kschischang, and V. Durand, "Trellis-based decoding of binary linear block codes," in Lecture Notes in Comput. Sci. (Proc. 3rd Canadian Workshop on Inform. Theory), vol.793, pp.270-286, Springer-Verlag, 1994.

[94] D.J. Taipale and M.B. Pursley, "An improvement to generalized minimum distance decoding," IEEE Trans. Inform. Theory, vol.37, no.1, pp.167-172, 1991.

[95] T. Takata, S. Ujita, T. Kasami, and S. Lin, "Multistage decoding of multilevel block M-PSK modulation codes and its performance analysis," IEEE Trans. Inform. Theory, vol.39, no.4, pp.1024-1218, 1993.

[96] T. Takata, Y. Yamashita, T. Fujiwara, T. Kasami, and S. Lin, "Suboptimum decoding of decomposable block codes," IEEE Trans. Inform. Theory, vol.40, no.5, pp.1392-1405, 1994.

[97] V. Tarokh and I.F. Blake, "Trellis complexity versus the coding gain of lattices I," IEEE Trans. Inform. Theory, vol.42, pp.1796-1807, 1996.

[98] V. Tarokh and I.F. Blake, "Trellis complexity versus the coding gain of lattices II," IEEE Trans. Inform. Theory, vol.42, pp.1808-1816, 1996.

[99] V. Tarokh and A. Vardy, "Upper bounds on trellis complexity of lattices," IEEE Trans. Inform. Theory, vol.43, pp.1294-1300, 1997.

[100] H. Thapar and J. Cioffi, "A block processing method for designing high-speed Viterbi detectors," in Proc. ICC, vol.2, pp.1096-1100, June 1989.

[101] H. Thirumoorthy, "Efficient near-optimum decoding algorithms and trellis structure for linear block codes," Ph.D dissertation, Dept. of Elec. Engrg., University of Hawaii at Manoa, November 1996.

[102] A. Vardy and Y. Be'ery, "Maximum-likelihood soft decision decoding of BCH codes," IEEE Trans. Inform. Theory, vol.40, pp.546-554, 1994.

[103] A. Vardy and F.R. Kschischang, "Proof of a conjecture of McEliece regarding the expansion index of the minimal trellis," IEEE Trans. Inform. Theory, vol.42, pp.2027-2033, 1996.

[104] V.V. Vazirani, H. Saran, and B. Sunder Rajan, "An efficient algorithm

for constructing minimal trellises for codes over finite abelian groups,"

IEEE Trans. Inform. Theory, vol.42, pp.1839-1854, 1996.

[105] A.J. Viterbi, "Error bounds for convolutional codes and asymptotically

optimum decoding algorithm," IEEE Trans. Inform. Theory, vol.IT-13,

pp.260-269, 1967.

[106] Y.-Y. Wang and C.-C. Lu, "The trellis complexity of equivalent binary

(17,9) quadratic residue code is five," in Proc. IEEE Int. Symp. Inform.

Theory, San Antonio, TX., p.200, 1993.

[107] Y.-Y. Wang and C.-C. Lu, "Theory and algorithm for optimal equivalent

codes with absolute trellis size," preprint.

[108] X.A. Wang and S.B. Wicker, "The design and implementation of trellis

decoding of some BCH codes," IEEE Trans. Commun., submitted for

publication, 1997.

[109] J.K. Wolf, "Efficient maximum-likelihood decoding of linear block codes

using a trellis," IEEE Trans. Inform. Theory, vol.24, pp.76-80, 1978.

[110] J. Wu, S. Lin, T. Kasami, T. Fujiwara, and T. Takata, "An upper bound

on effective error coefficient of two-stage decoding and good two level

decompositions of some Reed-Muller codes," IEEE Trans. Comm., vol.42,

pp.813-818, 1994.

[111] H. Yamamoto, "Evaluating the block error probability of trellis codes

and designing a recursive maximum likelihood decoding algorithm of linear

block codes using their trellis structure," Ph.D dissertation, Osaka

University, March 1996.

[112] H. Yamamoto, H. Nagano, T. Fujiwara, T. Kasami, and S. Lin, "Recursive

MLD algorithm using the detail trellis structure for linear block

codes and its average complexity analysis," in Proc. 1996 International

Symposium on Inform. Theory and Its Applications, Victoria, Canada,

pp.704-708, September 1996.

[113] O. Ytrehus, "On the trellis complexity of certain binary linear block

codes," IEEE Trans. Inform. Theory, vol.41, pp.559-560, 1995.

[114] V.A. Zinoviev, "Generalised cascade codes," Problemy Peredachi

Informatsii, vol.12.1, pp.2-9, 1976.

[115] V.V. Zyablov and V.R. Sidorenko, "Bounds on complexity of trellis

decoding of linear block codes," Problemy Peredachi Informatsii, vol.29,

pp.3-9, 1993 (in Russian).

INDEX

active span, see span

add-compare-select (ACS), see decoding

antipodal convolutional code, 187

bipartite graph, 85

block code, 6

concatenated code, 139-144

coset, 9

cyclic code, 86-68

decoding, 13

decomposable code, 120

direct sum, 10, 127, 131

dual code, 8

encoding, 7

extended BCH, 111-113, 216-219

interleaved, 136

minimum distance, 11

non-binary code, 68-69

product code, 137-138

punctured code, 39

Reed-Muller,

Boolean representation, 17-22

concatenation, 144-147

minimum weight subtrellis, 110-111

squaring construction, 118-127

terminated convolutional code,

165-174

|u|u + v| construction, 20-22

Reed-Solomon, 69

squaring construction, 115-120

weight distribution, 12

weight profile, 12

bound

Wolf, 60-61

branch

branch complexity,

N-section trellis, 64

sectionalized trellis, 74-78

branch complexity profile, 77

branch dimension profile, 77

parallel branches, 72, 74

butterfly subtrellis, 188

Cartesian product, see trellis

code decomposition, 120

coded modulation, 137

compare-select-add (CSA), 189-191

composite branches, see parallel branches

concatenated code, see block code

connectivity, see state connectivity

constraint length, 30, 151

convolutional code, 150-152

antipodal, 187

punctured, 156-159

puncturing matrix, 156

termination,

tailbiting, 162-164

zero-tail, 159-181

correlation, 15-17

coset, 9

coset representative, 10

decoding

bidirectional decoding, 73

block code, 13

Chase decoding algorithm-II, 227

convolutional code, 175

differential trellis decoding, 186-193

compare-select-add (CSA), 189-191

divide and conquer, 195

iterative low-weight search, 221-241

maximum a-posteriori (MAP), 243-247

maximum likelihood (ML), 14-17

bit error probability, 252-256

Max-Log-MAP decoding, 247-252

recursive MLD, 195-219

CombCBT procedure, 202-208

CombCBT-U procedure, 211-215

CombCBT-V procedure, 208-209

composite branch metric table,

198

composite branch label set, 211

MakeCBT procedure, 201-202

RMLD-(G,U) algorithm, 215-216

RMLD-(G,V) algorithm, 209-210

RMLD-(I,V) algorithm, 209-210

SOVA decoding, 247-252

Viterbi decoding, 176-177

add-compare-select (ACS), 176-177

IC implementation, 179-188

differential trellis decoding, see decoding

direct sum, see block code

discrepancy, 224

distance

Euclidean, 15

Hamming, 11

divide-and-conquer, 195

edge, see branch

encoding

block code, 7

convolutional code, 152

extended BCH code, see block code

Gaussian elimination, 29

generating pattern, 152

generator matrix

block code, 6

convolutional code, 151-152

trellis oriented generator matrix, 29

reduced echelon form, 253

hard-decision decoding, 13

iterative low-weight search, see decoding

Kronecker product, 19, 117

labeling, see state labeling

linear block code, 6

log-likelihood function, 14

low-weight subtrellis, see subtrellis

maximum a-posteriori probability (MAP)

decoding, see decoding

maximum likelihood (MLD) decoding, see

decoding

Max-Log-MAP decoding, see decoding

memory order, 151

minimal trellis,

construction, 78-84

N-section trellis, 62-65

sectionalized trellis, 74

minimum distance, 11

minimum-weight trellis, 101-103

mirror symmetry, 55-57

multilevel concatenation, 139-147

nearest neighbor test threshold, 226-227

non-binary code, see block code

optimality test threshold, 226-227

optimum sectionalization, see sectionalization

parallel branches, 72

parallel decomposition of trellises, 93-100

parity-check matrix, 8

permutation, see symbol permutation

profile, 28

branch complexity profile, 77

branch dimension profile, 77

state space complexity profile, 28

state space dimension profile, 28

punctured convolutional code, see convolutional code

purging procedure, see subtrellis

radix number, 76

rate, 9

recursive MLD, see decoding

reduced echelon form matrix, 253

Reed-Muller codes, see block code

row number set, 258

sectionalization, 71

optimum, 177-178, 217-219

uniform, 72

Shannon product, see trellis

soft-decision decoding, 13

SOVA decoding, see decoding

span, 30

active span, 30

squaring construction, see block code

state

adjacent states, 28

final state, 24

initial state, 24

state complexity, 60-62

state connectivity, 76

state labeling, 43

information set, 44-47, 260-263

parity-check matrix, 48-55

state space, 24, 31,

state space complexity, 28

state space dimension, 28

state transition, 24, 32

subcode, 9

subtrellis, 72

butterfly subtrellis, 188

low-weight subtrellis, 100-113

purging procedure, 102-104

symbol permutation, 64, 217

terminated convolutional code, see convolutional code

test

optimality test, 226-227

test error pattern, 227-228

threshold

nearest neighbor test threshold,

226-227

optimality test threshold, 226-227

trellis

Cartesian product, 135-137

concatenated code, 144-147

construction procedure, 43-47, 48-55, 78-84, 263-271

convolutional code, 152-155

minimal, see minimal trellis

minimum-weight trellis, 101-103

construction, 104-113

mirror symmetry, 55-57

N-section trellis, 27

parallel structure, 73, 85-91

sectionalized trellis, see sectionalization

Shannon product, 127-136

squaring construction, 120-127

time invariant trellis, 25

time varying trellis, 27, 35-36

trellis complexity, 59

trellis diagram, 2, 24