Tradeoffs in Automatic Provenance Capture

Trade-offs in Automatic Provenance Capture

Manolis Stamatogiannakis, Hasanat Kazmi, Hashim Sharif, Remco Vermeulen, Ashish Gehani, Herbert Bos, and Paul Groth

Capturing Provenance

Disclosed Provenance
+ Accuracy
+ High-level semantics
– Intrusive
– Manual effort
Examples: CPL (Macko ’12), Trio (Widom ’09), PrIME (Miles ’09), Taverna (Oinn ’06), VisTrails (Freire ’06)

Observed Provenance
– False positives
– Semantic gap
+ Non-intrusive
+ Minimal manual effort
Examples: ES3 (Frew ’08), Trec (Vahdat ’98), PASSv2 (Holland ’08), DTrace Tool (Gessiou ’12), OPUS (Balakrishnan ’13)

SPADEv2 – Provenance Collection

• Strace Reporter
– Programs run under strace. The produced log is parsed to extract provenance.
• LLVMTrace
– Instrumentation is added at function boundaries at compile time.
• DataTracker
– Dynamic Taint Analysis. Bytes are associated with metadata, which is propagated as the program executes.

https://github.com/ashish-gehani/SPADE/wiki
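The Strace Reporter idea, turning a system call log into provenance, can be sketched roughly as follows. This is not SPADE's actual parser: the simplified log lines, the `extract_edges` helper, the `proc` label, and the `used` / `wasGeneratedBy` edge names (borrowed from W3C PROV terminology) are all illustrative assumptions.

```python
import re

# Matches simplified strace-style lines, e.g.: open("/tmp/in.txt", O_RDONLY) = 3
SYSCALL_RE = re.compile(r'^(?P<name>\w+)\((?P<args>.*)\)\s+=\s+(?P<ret>-?\d+)')


def extract_edges(log_lines, process="proc"):
    """Turn open/read/write/close records into (source, relation, target) edges."""
    fd_to_path = {}  # currently open file descriptors -> file paths
    edges = []
    for line in log_lines:
        match = SYSCALL_RE.match(line.strip())
        if match is None:
            continue  # not a line this sketch understands
        name, args, ret = match["name"], match["args"], int(match["ret"])
        if ret < 0:
            continue  # failed call: no data flow to record
        if name in ("open", "openat"):
            path = re.search(r'"([^"]*)"', args)
            if path:
                fd_to_path[ret] = path.group(1)
        elif name in ("read", "write"):
            fd = int(args.split(",", 1)[0])
            path = fd_to_path.get(fd)
            if path is None:
                continue  # descriptor was not opened within this log
            if name == "read":
                edge = (process, "used", path)
            else:
                edge = (path, "wasGeneratedBy", process)
            if edge not in edges:
                edges.append(edge)
        elif name == "close":
            fd_to_path.pop(int(args), None)
    return edges


# Hypothetical log excerpt, written by hand for illustration.
sample_log = [
    r'open("/tmp/in.txt", O_RDONLY) = 3',
    r'read(3, "hello\n", 4096) = 6',
    r'open("/tmp/missing", O_RDONLY) = -1 ENOENT (No such file or directory)',
    r'open("/tmp/out.txt", O_WRONLY|O_CREAT, 0644) = 4',
    r'write(4, "hello\n", 6) = 6',
    r'close(3) = 0',
    r'close(4) = 0',
]
edges = extract_edges(sample_log)
for edge in edges:
    print(edge)
```

Note that the sketch only sees system calls. It cannot tell whether the bytes written to `/tmp/out.txt` actually came from `/tmp/in.txt`, which is the semantic gap listed for observed provenance.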

SPADEv2 flow

Current Intuition

Incomplete Picture

• Faster, but how much?
• What is the performance “price” for fewer false positives?
• Is a compile-time solution worth the effort?

How can one get more insight?

Run a benchmark!

Which one?

• LMBench, UnixBench, Postmark, BLAST, SPECint…
• [Traeger 08]: “Most popular benchmarks are flawed.”
• No matter what you choose, there will be blind spots.

Start simple: UnixBench

• Well-understood sub-benchmarks.
• Emphasizes the performance of system calls.
• System calls are commonly used for the extraction of provenance.
• More insight into which collection backend would suit specific applications.
• We’ll have a performance baseline for improving the specific implementations.
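To make the system call emphasis concrete, here is a minimal timing sketch, loosely in the spirit of a system call overhead micro-benchmark. It is not UnixBench code. The helper names `time_syscalls` and `slowdown` are hypothetical, and the idea is simply to run the same loop with and without a capture backend attached and compare the timings.

```python
import os
import time


def time_syscalls(n_calls=100_000):
    """Time a tight loop of cheap system calls (getpid) and return seconds."""
    start = time.perf_counter()
    for _ in range(n_calls):
        os.getpid()
    return time.perf_counter() - start


def slowdown(baseline_seconds, traced_seconds):
    """Relative cost of a traced run compared to an untraced baseline run."""
    if baseline_seconds <= 0:
        raise ValueError("baseline must be positive")
    return traced_seconds / baseline_seconds


if __name__ == "__main__":
    elapsed = time_syscalls()
    print(f"{elapsed:.4f} s for 100000 getpid() calls")
```

Running the script once natively and once under a tracer, then passing both timings to `slowdown`, gives a rough per-backend overhead factor. Interpreter overhead dominates such a loop in Python, so the absolute numbers mean little; only the ratio between runs is informative.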

UnixBench Results

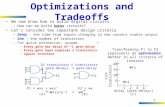

TRADEOFFS

Performance vs. Integration Effort

• Capturing provenance from completely unmodified programs may degrade performance.

• Modification of either the source (LLVMTrace) or the platform (LPM, Hi-Fi) should be considered for a production deployment.

Performance vs. Provenance Granularity

• We couldn’t verify this intuition for the case of Strace Reporter compared to LLVMTrace.
– The Strace Reporter implementation is not optimal.
• Tracking fine-grained provenance may interfere with existing optimizations.
– E.g. buffering I/O does not benefit DataTracker.

Performance vs. False Positives/Analysis Scope

• “Brute-forcing” a low false-positive ratio with the “track everything” approach of DataTracker is prohibitively expensive.
• Limiting the analysis scope gives a performance boost.
• If we exploit known semantics, we can have the best of both worlds.
– Pre-existing semantic knowledge: LLVMTrace
– Dynamically acquired knowledge: ProTracer [Ma 2016]
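The false-positive trade-off can be illustrated with a toy comparison between a coarse, system-call-level view and byte-level taint tracking. This is a simplified sketch, not DataTracker's implementation; every function name and file name below is a hypothetical chosen for illustration.

```python
def coarse_provenance(events):
    """Syscall-level view: each write depends on every file read so far."""
    read_so_far = set()
    deps = {}
    for kind, path in events:
        if kind == "read":
            read_so_far.add(path)
        elif kind == "write":
            deps.setdefault(path, set()).update(read_so_far)
    return deps


def read_tainted(path, data):
    """Attach a taint tag (the source path) to every byte that is read."""
    return [(byte, frozenset([path])) for byte in data]


def combine(a, b):
    """XOR two tainted bytes; the result carries the union of both tag sets."""
    return (a[0] ^ b[0], a[1] | b[1])


def taint_of(tainted_bytes):
    """Collect all source tags that reached the given bytes."""
    tags = set()
    for _, byte_tags in tainted_bytes:
        tags |= byte_tags
    return tags


# The program reads two files but copies only bytes from a.txt to its output.
a = read_tainted("a.txt", b"abc")
b = read_tainted("b.txt", b"xyz")
copied = a[:2]
mixed = [combine(x, y) for x, y in zip(a, b)]

coarse = coarse_provenance([("read", "a.txt"), ("read", "b.txt"), ("write", "out.txt")])
print(coarse)            # out.txt is linked to both inputs
print(taint_of(copied))  # only a.txt
print(taint_of(mixed))   # both inputs, because the bytes really were combined
```

The coarse view reports `b.txt` as an input of `out.txt` even though none of its bytes were written, which is a false positive. The taint view avoids that, at the cost of carrying a tag set with every byte through every operation.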

TAKEAWAYS

Takeaway: System Event Tracing

• A good start for quick deployments
• Simple versions may be expensive
• What happens in the binary?

Takeaway: Compile-time Instrumentation

• Middle ground between disclosed and automatic provenance collection.
• But you need access to the source code.

Takeaway: Taint Analysis

• Prohibitively expensive for computation-intensive programs.

• Likely to remain so, even after optimizations.

• Reserved for provenance analysis of unknown/legacy software.

• Offline approach (Stamatogiannakis TAPP’15)

Generalizing the Results

• Only one implementation was tested for each method.
• Repeating the tests with alternative implementations will increase confidence in the insights gained.
• More confidence when choosing a specific collection method.

[Figure: axes labeled “Different methods” and “Different implementations”]

Implementation Details Matter

• Our results are influenced by the specifics of the implementation.

• Anecdote: The initial implementation of LLVMTrace was actually slower than strace reporter.

Provenance Quality

• Qualitative features of the provenance are also very important.
• How many vertices/edges are contained in the generated provenance graph?
• Precision/Recall based on provenance ground truth.

[Figure: axes labeled “Performance Benchmarks” and “Qualitative Benchmarks”]
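The two quality measures named on this slide, graph size and precision/recall against a ground truth, could be computed along these lines. This is a minimal sketch that assumes a provenance graph is represented as a collection of (source, target) edge pairs; the function names and the example edges are hypothetical.

```python
def graph_size(edges):
    """Return (number of vertices, number of distinct edges) of a graph."""
    unique_edges = set(edges)
    vertices = {v for edge in unique_edges for v in edge}
    return len(vertices), len(unique_edges)


def precision_recall(captured_edges, ground_truth_edges):
    """Compare captured edges with the ground truth, edge by edge."""
    captured = set(captured_edges)
    truth = set(ground_truth_edges)
    true_positives = len(captured & truth)
    precision = true_positives / len(captured) if captured else 0.0
    recall = true_positives / len(truth) if truth else 0.0
    return precision, recall


# Hypothetical example: the capture tool reports one spurious dependency.
ground_truth = [("out.txt", "a.txt")]
captured = [("out.txt", "a.txt"), ("out.txt", "b.txt")]

print(graph_size(captured))
print(precision_recall(captured, ground_truth))
```

Here every true edge is found (recall 1.0), but half of the reported edges are false positives (precision 0.5). This is the kind of result a performance benchmark alone would not reveal.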

Where to go next?

• UnixBench is a basic benchmark.
• SPEC: Comprehensive in terms of performance evaluation.
– Hard to get the provenance ground truth needed to assess the quality of captured provenance.
• Better directions:
– Coreutils-based micro-benchmarks.
– Macro-benchmarks (e.g. Postmark, compilation benchmarks).

Conclusion

• Automatic provenance capture is an important part of the ecosystem
• Trade-offs in different capture modes
• Benchmarking – to inform
• Common platforms are essential

The End
