Toward Interactive and Intelligent Decision Support System


7/26/2019 Toward Interactive and Intelligent Decision Support System


ELSEVIER

European Journal of Operational Research 107 (1998) 507-529


Theory and Methodology

Multi-attribute decision making:

A simulation comparison of select methods

Stelios H. Zanakis a,*, Anthony Solomon b, Nicole Wishart a, Sandipa Dublish c

a Decision Sciences and Information Systems Department, College of Business Administration, Florida International University, Miami, FL 33199, USA

b Decision & Information Science Department, Oakland University, Rochester, MI 48309, USA

c Marketing Department, Fairleigh Dickinson University, Teaneck, NJ 07666, USA

Received 7 August 1996; accepted 18 February 1997

Abstract

Several methods have been proposed for solving multi-attribute decision making (MADM) problems. A major criticism of MADM is that different techniques may yield different results when applied to the same problem. The problem considered in this study consists of a decision matrix input of N criteria weights and ratings of L alternatives on each criterion. The comparative performance of some methods has been investigated in a few, mostly field, studies. In this simulation experiment we investigate the performance of eight methods: ELECTRE, TOPSIS, Multiplicative Exponential Weighting (MEW), Simple Additive Weighting (SAW), and four versions of AHP (original vs. geometric scale and right eigenvector vs. mean transformation solution). Simulation parameters are the number of alternatives, criteria and their distribution. The solutions are analyzed using twelve measures of similarity of performance. Similarities and differences in the behavior of these methods are investigated. Dissimilarities in weights produced by these methods become stronger in problems with few alternatives; however, the corresponding final rankings of the alternatives vary across methods more in problems with many alternatives. Although less significant, the distribution of criterion weights affects the methods differently. In general, all AHP versions behave similarly and closer to SAW than the other methods. ELECTRE is the least similar to SAW (except for closer matching the top-ranked alternative), followed by MEW. TOPSIS behaves closer to AHP and differently from ELECTRE and MEW, except for problems with few criteria. A similar rank-reversal experiment produced the following performance order of methods: SAW and MEW (best), followed by TOPSIS, AHPs and ELECTRE. It should be noted that the ELECTRE version used was adapted to the common MADM problem and therefore it did not take advantage of the method's capabilities in handling problems with ordinal or imprecise information. © 1998 Elsevier Science B.V.

Keywords:

Multiple criteria analysis; Decision theory; Utility theory; Simulation

1. Introduction

Multiple criteria decision making (MCDM) refers to making decisions in the presence of multiple, usually conflicting criteria. MCDM problems are commonly categorized as continuous or discrete, depending on the domain of alternatives. Hwang and Yoon (1981) classify them as (i) Multiple Attribute Decision Making (MADM), with a discrete, usually limited, number of prespecified alternatives, requiring inter- and intra-attribute comparisons, involving implicit or explicit tradeoffs; and (ii) Multiple Objective Decision Making (MODM), with decision variable values to be determined in a continuous or integer domain, of infinite or large number of choices, to best satisfy the decision maker's (DM's) constraints, preferences or priorities. MADM methods have also been used for combining good MODM solutions based on DM preferences (Kok, 1986; Kok and Lootsma, 1985).

* Corresponding author. Fax: +1-305-348-4126; e-mail:

0377-2217/98/$19.00 © 1998 Elsevier Science B.V. All rights reserved
PII S0377-2217(97)00147-1

In this paper we focus on MADM, which is used in a finite selection or choice problem. In the literature, the term MCDM is often used to indicate MADM, and sometimes MODM methods. To avoid any ambiguity we will henceforth use the term MADM when referring to a discrete MCDM problem. Methods involving only ranking discrete alternatives with equal criteria weights, like voting choices, will not be examined in this paper.

Churchman et al. (1957) were among the earlier academicians to look at the MADM problem formally using a simple additive weighting method. Over the years different behavioral scientists, operational researchers and decision theorists have proposed a variety of methods describing how a DM might arrive at a preference judgment when choosing among multiple attribute alternatives. For a survey of MCDM methods and applications see Stewart (1992) and Zanakis et al. (1995).

Gershon and Duckstein (1983) state that the major criticism of MADM methods is that different techniques yield different results when applied to the same problem, apparently under the same assumptions and by a single DM. Comparing 23 cardinal and 9 qualitative aggregation methods, Voogd (1983) found that, at least 40% of the time, each technique produced a different result from any other technique. The inconsistency in such results occurs because:

(a) the techniques use weights differently in their calculations;

(b) algorithms differ in their approach to selecting the best solution;

(c) many algorithms attempt to scale the objectives, which affects the weights already chosen;

(d) some algorithms introduce additional parameters that affect which solution will be chosen.

This is compounded by the inherent differences in experimental conditions and human information processing between DMs, even under similar preferences. Other researchers have argued the opposite; namely that, given a type of problem, the solutions obtained by different MADM methods are essentially the same (Belton, 1986; Timmermans et al., 1989; Karni et al., 1990; Goicoechea et al., 1992; Olson et al., 1995). Schoemaker and Waid (1982) found that different additive utility models produce generally different weights, but predicted equally well on the average. Practitioners seem to prefer simple and transparent methods, which, however, are unlikely to represent weight trade-offs that users are willing to make (Hobbs et al., 1992).

The wide variety of available techniques, of varying complexity and possibly solutions, confuses potential users. Several MADM methods may appear to be suitable for a particular decision problem. Hence the user faces the task of selecting the most appropriate method from among several alternative feasible methods.

The need for comparing MCDM methods and the importance of the selection problem were probably first recognized by MacCrimmon (1973), who suggested a taxonomy of MCDM methods. More recently several authors have outlined procedures for the selection of an appropriate MCDM method, such as Ozernoy (1992), Hwang and Yoon (1981), Hobbs (1986) and Ozernoy (1987). These classifications are primarily driven by the input requirements of the method (type of information that the DM must provide and the form in which it must be provided). Very often these classifications serve more as a tool for elimination rather than selection of the right method. The use of expert systems has also been advocated for selecting MCDM methods (Jelassi and Ozernoy, 1988).

Our literature search revealed that a limited amount of work has been done in terms of comparing and integrating the different methods. Denpontin et al. (1983) developed a comprehensive catalogue of the different methods, but concluded that it was difficult to fit the methods in a classification schema since decision studies varied so much in quantity, quality and precision of information. Many authors stress the validity of the method as the key criterion for choosing it. Validity implies that the method is likely to yield choices that accurately reflect the values of the user (Hobbs et al., 1992). However, there is no absolute, objective standard of validity as preferences can be contradictory when articulated in different ways. Researchers often measure validity by checking how well a given method predicts the unaided decisions made independently of judgments used to fit the model (Schoemaker and Waid, 1982; Currim and Sarin, 1984). Decision scientists question the applicability of this criterion, particularly in complex problems that will cause users to adopt less rational heuristics and to be inconsistent. Studies in decision making have shown that the efficiency of a decision made has an inverted U-shaped relationship with the amount of information provided (Kok, 1986; Gemunden and Hauschildt, 1985).

Researchers who have attempted the task of comparing the different MADM methods have used either real-life cases or formulated a real-life-like problem and presented it to a selected group of users (Currim and Sarin, 1984; Gemunden and Hauschildt, 1985; Belton, 1986; Roy and Bouyssou, 1986; Hobbs, 1986; Buchanan and Daellenbach, 1987; Lockett and Stratford, 1987; Stillwell et al., 1987; Karni et al., 1990; Stewart, 1992; Goicoechea et al., 1992). Such field experiments are valuable tools for comparing MADM methods, based on user reactions. If properly designed, they assess the impact of human information processing and judgmental decision making, beyond the nature of the methods employed. Users may compare these methods along different dimensions, such as perceived simplicity, trustworthiness, robustness and quality. However, field studies have the following limitations and disadvantages:

(a) The sample size and range of problems studied is very limited.

(b) The subjects are often students, rather than real decision makers.

(c) The way the information is elicited may influence the results more than the model used (Olson et al., 1995).

(d) The learning effect biases outcomes, especially when a subject employs various methods sequentially (Kok, 1986).

(e) Inherent human differences led Hobbs et al. (1992) to conclude that decisions can be as or more sensitive to the method used as to which person applies it. However, in a similar study, Goicoechea et al. (1992) concluded that rankings are not affected significantly by the choice of decision maker or which of these methods is used. The fact that judgments were elicited from working professionals in one study and graduate students in the other may partially explain the discrepancy.

(f) It is impossible or difficult to answer questions like:

1. Which method is more appropriate for what type of problem?

2. What are the advantages/disadvantages of using one method over another?

3. Does a decision change when using different methods? If yes, why and to what extent?

The above limitations may be overcome via simulation. However, since simulations cannot capture human idiosyncrasies, their findings should supplement rather than substitute those of the field experiments. We have found only three simulation studies, comparing solely AHP type methods.

Zahedi (1986) generated symmetric and asymmetric AHP matrices of size 6 and 22 from uniform, gamma and lognormal distributions, with multiplicative error term. Criteria weights were derived using six methods: right eigenvalue, row and column geometric means, harmonic mean, simple row average, and row average of columns normalized first by their sum (called mean transformation method). The accuracy of the corresponding weight and rank estimators was evaluated using MAE, MSE, variance and Theil's coefficient. She concluded that, when the input matrix is symmetric, the mean transformation method outperformed all other methods in accuracy, rank preservation and robustness toward error distribution. Differences between methods were noticeable only under a gamma error distribution, where the eigenvalue method did poorly, while the row geometric mean exhibited better rank preservation with large-size matrices. All methods performed equally well (except simple row average) and much better when errors had a uniform rather than lognormal distribution.

Takeda et al. (1987) conducted an AHP simulation study, with multiplicative random errors, to evaluate different eigen-weight vectors. They advocate using their graded eigenvector method over Saaty's simpler right eigenvector approach.


Triantaphyllou and Mann (1989) simulated random AHP matrices of 3-21 criteria and alternatives. Each problem was solved using four methods: weighted sum model (WSM), weighted product model (WPM), right-eigenvector AHP and AHP revised by normalizing each column by the maximum rather than the sum of its elements, according to Belton and Gear's (1984) suggestion for reducing rank reversals. Solutions were compared against the WSM benchmark and the rate of change in best alternative when a nonoptimal alternative is replaced by a worse one. They concluded that the revised AHP appears to perform closest to the WSM; AHP tends to behave like WSM as the number of alternatives increases; and that the rate of change does not depend on the number of criteria.

The first two studies are limited to a single AHP matrix; i.e. different methods for deriving weights only for the criteria or only for the alternatives under a single criterion - not simultaneously for the entire MADM problem. And all three are limited to variants of the AHP. A further limitation of the third study is that it employs only two measures of performance: the percentage contradiction between a method's rankings and those of WSM, and the rate of rank reversal of top priority. There is clearly a need for a simulation study comparing also other MADM type methods, using various measures of performance. Our work in that regard is explained in the next section. The MADM problem under consideration is depicted by the following DM matrix of preferences for L alternatives rated on N criteria:

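\[
\begin{array}{c|cccccc}
\text{Alternative} & \multicolumn{6}{c}{\text{Criterion}} \\
 & c_1 & c_2 & \cdots & c_j & \cdots & c_N \\
\hline
1 & r_{11} & r_{12} & \cdots & r_{1j} & \cdots & r_{1N} \\
2 & r_{21} & r_{22} & \cdots & r_{2j} & \cdots & r_{2N} \\
\vdots & \vdots & \vdots & & \vdots & & \vdots \\
i & r_{i1} & r_{i2} & \cdots & r_{ij} & \cdots & r_{iN} \\
\vdots & \vdots & \vdots & & \vdots & & \vdots \\
L & r_{L1} & r_{L2} & \cdots & r_{Lj} & \cdots & r_{LN}
\end{array}
\]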

where $c_j$ is the importance (weight) of the jth criterion and $r_{ij}$ is the rating of the ith alternative on the jth criterion. As commonly done, we will assume that the latter are column normalized, to also add to one. Different MADM methods will be examined for eliciting these judgments and aggregating them into an overall score $S_i$ for each alternative. Then, the overall evaluation (weight) of each alternative will be $W_i = S_i / \sum_i S_i$, leading to a final ranking of all alternatives. Development of a cardinal measure of overall preference of alternatives ($S_i$) has been criticized by advocates of outranking methods as not reliably portraying true or incomplete preferences. Such methods establish measures of outranking relationships among pairs of alternatives, leading to a complete or partial ordering of alternatives.

2. Methods compared

Of the many MADM methods available we have chosen the following five for comparison in our research, when applied to solve the same problem with the decision matrix information stated earlier:

1. Simple Additive Weighting (SAW): $S_i = \sum_j c_j r_{ij}$.

2. Multiplicative Exponent Weighting (MEW): $S_i = \prod_j r_{ij}^{c_j}$.

3. Analytic Hierarchy Process (AHP) - four versions.

4. ELECTRE.

5. TOPSIS (Technique for Preference by Similarity to the Ideal Solution).
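The two closed-form scores in the list above can be illustrated with a minimal Python sketch. It assumes NumPy, benefit-type ratings stored as an L x N array, and criteria weights that sum to one; the function names and the small numeric example are hypothetical and are not taken from the paper.

```python
import numpy as np

def normalize_columns(ratings):
    """Scale each criterion column so that its ratings add to one."""
    ratings = np.asarray(ratings, dtype=float)
    return ratings / ratings.sum(axis=0, keepdims=True)

def saw_scores(ratings, weights):
    """Simple Additive Weighting: S_i = sum_j c_j * r_ij."""
    return np.asarray(ratings, dtype=float) @ np.asarray(weights, dtype=float)

def mew_scores(ratings, weights):
    """Multiplicative Exponent Weighting: S_i = prod_j r_ij ** c_j."""
    ratings = np.asarray(ratings, dtype=float)
    return np.prod(ratings ** np.asarray(weights, dtype=float), axis=1)

def final_weights(scores):
    """Overall evaluation W_i = S_i / sum_i S_i."""
    scores = np.asarray(scores, dtype=float)
    return scores / scores.sum()

# Hypothetical problem: 3 alternatives rated on 2 criteria (illustrative values).
ratings = normalize_columns([[0.6, 0.2], [0.3, 0.3], [0.1, 0.5]])
weights = np.array([0.7, 0.3])
saw_w = final_weights(saw_scores(ratings, weights))
mew_w = final_weights(mew_scores(ratings, weights))
```

Both methods rank the first alternative highest in this example, but the final weights differ, which is the kind of disagreement the study measures.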

The rationale for selection has been that most of these are among the more popular and widely used methods and each method reflects a different approach to solving MADM problems. SAW's simplicity makes it very popular with practitioners (Hobbs et al., 1992; Zanakis et al., 1995). MEW is a theoretically attractive contrast to SAW. However, it has not been applied often, because of its practitioner-unattractive mathematical concept, in spite of its scale-invariant property (it depends only on the ratio of ratings of alternatives). TOPSIS (Hwang and Yoon, 1981) is an exception in that it is not widely used; we have included it because it is unique in the way it approaches the problem and is intuitively appealing and easy to understand. Its fundamental premise is that the best alternative, say the ith, should have the shortest Euclidean distance $S_i^* = [\sum_j (r_{ij} - r_j^*)^2]^{1/2}$ from the ideal solution ($r_j^*$, made up of the best value for each attribute regardless of alternative) and the farthest distance $S_i^- = [\sum_j (r_{ij} - r_j^-)^2]^{1/2}$ from the negative-ideal solution ($r_j^-$, made up of the worst value for each attribute). The alternative with the highest relative closeness measure $S_i^-/$
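As an illustration of the two distances just described, the sketch below computes them with NumPy and combines them with the relative closeness ratio from the standard Hwang and Yoon formulation, distance to the negative-ideal divided by the sum of both distances. That ratio, the optional multiplication of ratings by criteria weights, and the assumption that larger ratings are better are assumptions of this sketch, since the passage above is cut off before stating them.

```python
import numpy as np

def topsis_closeness(ratings, weights=None):
    """Relative closeness of each alternative to the ideal solution.

    ratings: L x N array of benefit-type ratings (larger is better).
    weights: optional length-N criteria weights (assumption: ratings are
             multiplied by the weights before distances are measured).
    """
    ratings = np.asarray(ratings, dtype=float)
    if weights is not None:
        ratings = ratings * np.asarray(weights, dtype=float)
    ideal = ratings.max(axis=0)            # best value on each criterion
    negative_ideal = ratings.min(axis=0)   # worst value on each criterion
    d_ideal = np.sqrt(((ratings - ideal) ** 2).sum(axis=1))
    d_negative = np.sqrt(((ratings - negative_ideal) ** 2).sum(axis=1))
    # Undefined (0/0) only when all alternatives have identical ratings.
    return d_negative / (d_ideal + d_negative)

# Hypothetical ratings for 3 alternatives on 2 criteria.
closeness = topsis_closeness([[0.6, 0.2], [0.3, 0.3], [0.1, 0.5]])
best = int(np.argmax(closeness))
```

A closeness of 1 means the alternative coincides with the ideal solution and 0 means it coincides with the negative-ideal one.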


and U.S. Army Corps of Engineers evaluate AHP, ELECTRE, SAW and other methods on water supply planning studies. Their results were contradictory; the first found perceived differences across methods and users, while the latter study did not. Finally, Gomes (1989) compared ELECTRE to his method TODIM (a combination of direct rating, AHP weighting and dominance ordering rules) on a transportation problem and concluded that both methods produced essentially the same ranking of alternatives. The above findings highlight our motivation and justification for undertaking this simulation study. Our major objective was to conduct an extensive numerical comparison of several MCDA methods, contrasted in several field studies, when applied to a common problem (a decision matrix of explicitly rated alternatives and criteria weights) and determine when and how their solutions differ.

3. Simulation experiment

According to Hobbs et al. (1992) a good experiment should satisfy the following conditions:

(a) Compare methods that are widely used, represent divergent philosophies of decision making or are claimed to represent important methodological improvements.

(b) Address the question of appropriateness, ease of use and validity.

(c) Be well controlled, use large samples and be replicable.

(d) Compare methods across a variety of problems.

(e) Involve realistic problems.

Our simulation experiment satisfies all conditions except the second one.

Computer simulation was used for the purpose of comparing the MADM methods. The reason for using simulation was that it is a flexible and versatile method which allows us to generate a range of problems, and replicate them several times. This provides a vast database of results from which we can study the patterns of solutions provided by the different methods.

The following parameters were chosen for our simulation:

1. Number of criteria N: 5, 10, 15, 20.

2. Number of alternatives L: 3, 5, 7, 9.

3. Ratings of alternatives $r_{ij}$: randomly generated from a uniform distribution in 0-1.

4. Weights of criteria $c_j$: set all equal (1/N), randomly generated from a uniform distribution in 0-1 (std. dev. 1/12) or from a beta U-shaped distribution in 0-1 (std. dev. 1/24).

5. Number of replications: 100 for each combination, thus producing 4 criteria levels × 4 alternative levels × 3 weight distributions × 100 replications = 4800 problems, resulting in a total of 38,400 solutions, across eight approaches - four methods plus AHP with four versions.
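The factorial design above can be sketched in Python to confirm the problem and solution counts and to show one way of drawing a single problem. NumPy is assumed; the Beta(0.5, 0.5) shape parameters for the U-shaped case, the seed and all names are illustrative assumptions, since the source does not give them. The ratio bound and dominance filter described later in this section are not applied here.

```python
import itertools
import numpy as np

CRITERIA_LEVELS = (5, 10, 15, 20)                        # N
ALTERNATIVE_LEVELS = (3, 5, 7, 9)                        # L
WEIGHT_DISTRIBUTIONS = ("equal", "uniform", "beta_u")
REPLICATIONS = 100
N_APPROACHES = 8   # SAW, MEW, TOPSIS, ELECTRE and four AHP versions

def draw_weights(kind, n_criteria, rng):
    """Draw criteria weights and normalize them to add to one."""
    if kind == "equal":
        return np.full(n_criteria, 1.0 / n_criteria)
    if kind == "uniform":
        raw = rng.uniform(0.0, 1.0, n_criteria)
    elif kind == "beta_u":
        # Assumption: Beta(0.5, 0.5) is one U-shaped choice; the paper's
        # actual shape parameters are not stated in this text.
        raw = rng.beta(0.5, 0.5, n_criteria)
    else:
        raise ValueError(f"unknown weight distribution: {kind}")
    return raw / raw.sum()

def generate_problem(n_criteria, n_alternatives, kind, rng):
    """Return (column-normalized L x N ratings, length-N weights)."""
    ratings = rng.uniform(0.0, 1.0, (n_alternatives, n_criteria))
    ratings = ratings / ratings.sum(axis=0, keepdims=True)
    return ratings, draw_weights(kind, n_criteria, rng)

design = list(itertools.product(CRITERIA_LEVELS, ALTERNATIVE_LEVELS,
                                WEIGHT_DISTRIBUTIONS))
n_problems = len(design) * REPLICATIONS      # 48 cells x 100 replications
n_solutions = n_problems * N_APPROACHES

rng = np.random.default_rng(0)               # hypothetical seed
example_ratings, example_weights = generate_problem(5, 3, "beta_u", rng)
```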

An explanation of these choices is in order. The range for the number of criteria and alternatives is typical of those found in many applications. This is representative of a typical MADM problem, where a few alternatives are evaluated on the basis of a wide set of criteria, as explained below. Many empirical studies on the size of the evoked set in the consumer and industrial market context have shown that the number of intensely discussed alternatives does not exceed 4-5 (Gemunden and Hauschildt, 1985). In practice a simple check-list of desirable features will rule out unacceptable alternatives early, thus leaving for consideration only a small number. The number of criteria, though, can be considerably higher. Three distributions for weights were assumed: no distribution, i.e. all weights equal to 1/N (class of problems where criteria are replaced by judges or voters of equal impact); uniform distribution, which may reflect an unbiased, indecisive or uninformed user; and a U-shaped distribution, which may typify a biased user, strongly favoring some issues while rigidly opposing others. Under group pressure, a similar situation may not arise often in openly supporting pet projects. For this reason and in order to keep the simulation size manageable, we considered only one distribution (uniform) for ratings under each criterion.

Additional care was taken during the data generation phase. The ratio of any two criteria weights or alternative ratings should not be extremely high or extremely low; this will avoid pathological cases or scale-induced imbalances between methods, whose performance then deteriorates (Zahedi, 1986). After some experimentation, the bound on this ratio was set at 75 (and 1/75), one step beyond the maximum $e^4$ of the geometric AHP scale. Symmetric reciprocal matrices were obtained from these ratio entries for the AHP methods. No alternative was kept if it was dominating all others on every criterion, or if it was dominated by another alternative on all criteria. For each criterion, all weights were normalized to add up to one. Similar normalization was applied to the final weights of the alternatives over all criteria in each problem. The AHP pairwise comparisons $a_{ij}$ (> 1) were generated by selecting the closest original (Saaty) or geometric scale value to the ratio $c_i/c_j$ for two criteria and $r_{ik}/r_{jk}$ for two alternative ratings under criterion k; and then filling the symmetric entries using the reciprocal ratio condition $a_{ji} = 1/a_{ij}$.
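The construction of a reciprocal pairwise comparison matrix, and the two solution approaches compared in the AHP versions, might be sketched as follows. The sketch assumes NumPy, uses only the original 1-9 Saaty scale (a geometric scale could be passed as a different `scale` array, whose values are not reproduced here), and takes "closest" to mean smallest absolute difference, which is an assumption. Function names are hypothetical.

```python
import numpy as np

SAATY_SCALE = np.arange(1.0, 10.0)   # original 1-9 scale

def pairwise_matrix(values, scale=SAATY_SCALE):
    """Build a reciprocal matrix whose entries approximate values[i] / values[j]."""
    values = np.asarray(values, dtype=float)
    n = len(values)
    a = np.ones((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            ratio = values[i] / values[j]
            larger = max(ratio, 1.0 / ratio)          # the comparison that is >= 1
            snapped = scale[np.argmin(np.abs(scale - larger))]
            if ratio >= 1.0:
                a[i, j], a[j, i] = snapped, 1.0 / snapped
            else:
                a[i, j], a[j, i] = 1.0 / snapped, snapped
    return a

def eigenvector_weights(a):
    """Right principal eigenvector, normalized to add to one."""
    eigenvalues, eigenvectors = np.linalg.eig(a)
    principal = np.abs(eigenvectors[:, np.argmax(eigenvalues.real)].real)
    return principal / principal.sum()

def mean_transformation_weights(a):
    """Row average of the matrix after normalizing each column by its sum."""
    return (a / a.sum(axis=0, keepdims=True)).mean(axis=1)

# Hypothetical weights whose ratios (2, 6, 3) fall exactly on the 1-9 scale.
a = pairwise_matrix([0.6, 0.3, 0.1])
w_eig = eigenvector_weights(a)
w_mean = mean_transformation_weights(a)
```

For a perfectly consistent matrix such as this one, both solution approaches recover the same weights; they differ only when snapping to the scale introduces inconsistency.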

The generated data were also altered subsequently to simulate rank reversal conditions, when a non-optimal new alternative is introduced. This is a primary criticism of AHP and has created a long and intense controversy among researchers (Belton and Gear, 1984; Saaty, 1984; Saaty, 1990; Dyer, 1990; Harker and Vargas, 1990; Stewart, 1992). This experimentation was applied to each method solution and initial problem, say of L alternatives, as follows: (i) a new alternative is introduced in the problem by randomly generating N ratings, one for each criterion, from the uniform distribution; (ii) the ranks of the L + 1 alternatives in the new problem are determined; (iii) if the new (L + 1)th alternative gets the first rank, it is rejected and another alternative is generated as in step (i); (iv) if the new alternative gets any other rank, the new rank order of the old alternatives is determined after removing the new alternative's rank. Thus an original array of ranks and a new array of ranks are produced for each problem and method. These two rank arrays are used in computing the rank reversal measures.

The reason for selecting SAW as the benchmark is that its simplicity makes it extremely popular in practice. For each method, the following measures of similarity were computed on its final evaluation (weights or ranks) against those of the SAW method, averaged over all alternatives in the problem:

1. Mean squared error of weights (MSEW) and the same for ranks (MSER).

2. Mean absolute error of weights (MAEW) and the same for ranks (MAER).

3. Theil's coefficient U for weights (UW) and the same for ranks (UR).

4. Kendall's correlation Tau for weights (KWC).

5. Spearman's correlation for ranks (SRC).

6. Weighted rank crossing 1 (WRC1).

7. Weighted rank crossing 2 (WRC2).

8. Top rank matched count (TOP).

9. Number of ranks matched, as % of number of alternatives L (MATCH%).

The reason for looking at measures for both final weights and ranks is that methods may produce different final weights for alternatives, but these can result in the same or a different rank order of alternatives. Our last four measures capture this rank disagreement (crossings of rank order); two of them give more weight to higher rank differences:

$\mathrm{WRC} = \sum_i W_i \ldots R_i^{\mathrm{SAW}} \ldots R_i^{\mathrm{METH}} \ldots$
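Several of the similarity measures listed in this section have standard definitions and can be sketched directly. The NumPy sketch below covers MSEW, MAEW, MSER, MAER, KWC (computed here as Kendall's tau-a, an assumption), SRC, TOP and MATCH%. Theil's U and the two weighted rank crossing measures are left out because their exact definitions do not survive in this text. Ties are not handled, and all names are hypothetical.

```python
import numpy as np

def ranks_from_weights(weights):
    """Rank 1 goes to the largest weight (ties are not handled)."""
    order = np.argsort(-np.asarray(weights, dtype=float))
    ranks = np.empty(len(order), dtype=int)
    ranks[order] = np.arange(1, len(order) + 1)
    return ranks

def similarity_to_benchmark(method_weights, saw_weights):
    """Compare one method's final weights and ranks against those of SAW."""
    w_m = np.asarray(method_weights, dtype=float)
    w_s = np.asarray(saw_weights, dtype=float)
    r_m, r_s = ranks_from_weights(w_m), ranks_from_weights(w_s)
    n = len(w_s)
    # Kendall's tau-a: (concordant - discordant pairs) / total pairs.
    pair_sum = sum(np.sign(w_m[i] - w_m[j]) * np.sign(w_s[i] - w_s[j])
                   for i in range(n) for j in range(i + 1, n))
    return {
        "MSEW": float(np.mean((w_m - w_s) ** 2)),
        "MAEW": float(np.mean(np.abs(w_m - w_s))),
        "MSER": float(np.mean((r_m - r_s) ** 2)),
        "MAER": float(np.mean(np.abs(r_m - r_s))),
        "KWC": float(pair_sum / (n * (n - 1) / 2)),
        "SRC": float(np.corrcoef(r_m, r_s)[0, 1]),   # Pearson on ranks
        "TOP": int(np.argmax(w_m) == np.argmax(w_s)),
        "MATCH%": float(100.0 * np.mean(r_m == r_s)),
    }

# Hypothetical final weights from SAW and from another method.
measures = similarity_to_benchmark([0.50, 0.20, 0.30], [0.48, 0.30, 0.22])
```

In this example the two methods agree on the top alternative but swap the second and third, so TOP is 1 while only one of three ranks matches.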


introduction of a new nonoptimal alternative (TOP); and the total number of ranks not altered as a percent of the number of alternatives (MATCH%) for that problem.
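The rank reversal procedure of this section (add a randomly rated alternative, reject it if it ranks first, then compare the old alternatives' ranks before and after) can be sketched as below. NumPy is assumed. SAW on column-normalized ratings is used only as an example ranking function; any method returning ranks could be passed instead. The retry cap, seed and names are illustrative assumptions.

```python
import numpy as np

def saw_ranks(ratings, weights):
    """Ranks (1 = best) from SAW applied to column-normalized ratings."""
    normalized = ratings / ratings.sum(axis=0, keepdims=True)
    scores = normalized @ weights
    order = np.argsort(-scores)
    ranks = np.empty(len(scores), dtype=int)
    ranks[order] = np.arange(1, len(scores) + 1)
    return ranks

def rank_reversal_trial(ratings, weights, rank_fn, rng, max_tries=1000):
    """Return (original ranks, ranks of the old alternatives after the addition)."""
    original = rank_fn(ratings, weights)
    for _ in range(max_tries):
        # Step (i): one uniform rating per criterion for the new alternative.
        newcomer = rng.uniform(0.0, 1.0, (1, ratings.shape[1]))
        # Step (ii): rank all L + 1 alternatives.
        extended = rank_fn(np.vstack([ratings, newcomer]), weights)
        # Step (iii): reject a newcomer that takes the first rank.
        if extended[-1] == 1:
            continue
        # Step (iv): drop the newcomer and close the gap its rank leaves.
        old = extended[:-1]
        reranked = old - (old > extended[-1]).astype(int)
        return original, reranked
    raise RuntimeError("no non-optimal new alternative found")

rng = np.random.default_rng(1)   # hypothetical seed
ratings = rng.uniform(0.0, 1.0, (4, 3))
weights = np.array([0.5, 0.3, 0.2])
original, reranked = rank_reversal_trial(ratings, weights, saw_ranks, rng)
top_preserved = int(np.argmin(original) == np.argmin(reranked))
match_percent = 100.0 * np.mean(original == reranked)
```

The last two lines correspond to the TOP and MATCH% measures applied to the two rank arrays.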

Here we would like to clarify that the efficiency of a method is not merely a function of the theory supporting it or how rigorous it is mathematically. Other aspects, which are also very important, relate to its ease of use, user understanding and faith in the results, and method reliability (consistency) vs. variety. These have been tackled by some authors (Buchanan and Daellenbach, 1987; Hobbs et al., 1992; Stewart, 1992). Such issues cannot be studied in a simulation experiment.

4. Analysis of experimental results

The simulation results were analyzed using the SAS package. Each measure of performance was analyzed via parametric ANOVA and nonparametric (Kruskal-Wallis) tests. The results are summarized in Tables 1 and 3. The nonparametric tests reveal that N, L and distribution type affect all performance measures at the 95% confidence level, except by distribution type for KWC, SRC, MSER, UR, and marginally for MAER, WRC1 and WRC2. According to the parametric ANOVA, the number of alternatives, number of criteria and method, as well as most of their interactions, affect significantly all measures of performance. However, the distribution type and a few of its interactions do not influence significantly four performance measures; namely KWC and UR (as was the case with the nonparametric tests), SRC and MSER at the 95% level.

Table 1
Summary of ANOVA significance levels for factors and interactions

Factor KWC MATCH WRC1 WRC2 SRC MSER MAER MSEW MAEW UW UR
L 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001
V 0.0019 0.0410 0.0373 - - 0.0607 0.0001 0.0001 0.0001 -
METH 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001
N 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001
L*V 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 - 0.0001
L*METH 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001
N*L 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001
V*METH 0.0410 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0010 0.0079 0.0001 0.0001
N*V 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0787 0.0577 0.0138 0.0001
N*METH 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001
N*L*V 0.0071 0.0001 0.0058 0.0025 0.0015 0.0998 0.0155 0.0013 0.0004 0.0001 0.0094
N*L*METH 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001 - - 0.0001
N*V*METH 0.0001 0.0498 - - 0.0503 0.0204 - 0.0329 0.0001 0.0253
L*V*METH - 0.0002 0.0030 - 0.0001 0.0002 - - - -
N*L*V*METH - -

-: Indicates not significant result (P-value > 0.10).
L: Number of alternatives.
N: Number of criteria.
V: Type of distribution = 1 equal weights; 2 uniform; 3 beta U.
METH: Method = 1 Simple Additive Weighting (SAW); 2 AHP with original scale using eigenvector; 3 AHP with geometric scale using eigenvector; 4 AHP with original scale using mean transformation; 5 AHP with geometric scale using mean transformation; 6 Multiplicative Exponential Weighting; 7 TOPSIS; 8 ELECTRE.

Table 5 portrays the average performance measure for each method, along with Tukey's studentized range test of mean differences. Performance measures on weights are not given for ELECTRE, since it only rank-orders the alternatives. The four AHP methods produce indistinguishable results on all measures, and they were always closer to SAW than the other three methods. The only exception is the TOP result for ELECTRE, indicating that it matched the top ranked alternative produced by SAW 90% of the time, vs. 82% for the AHPs. Any differences among the four AHP version results are affected more by the scale (original vs. geometric) than


Table 2
Summary of ANOVA significance levels for factors and interactions: rank reversal experiment

MATCH WRC1 WRC2 SRC MSER MAER

L 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001

V
METH
N
L*V
L*METH
N*L
V*METH
N*V
N*METH
N*L*V
N*L*METH
N*V*METH
L*V*METH
N*L*V*METH

0.0001

0.0001

0.0001

0.0001

0.0001

0.0001

0.0001

0.0226

0.0001

0.0055

0.0001

0.0146

0.0001

0.0001

0.0001

0.0753

0.0001

0.0001

0.0001

0.0039

0.0001

0.0077

0.0001

0.0051

0.0001

0.0181

0.0001

0.0001

0.0001

0.0001

0.0001

0.0030

0.0001

0.0185

0.0001

0.0001

0.0261

0.0001

0.0433

0.0001

0.0001

0.0001

0.0001

0.0001

0.0071

0.0001

0.0126

0.0001

0.0796

0.0001

0.0001

0.0001 0.0001

0.0001 0.0001

0.0001 0.0089

0.0001 0.0001

0.0001

0.0001

0.0001

0.0001

0.0006

0.0041

0.0001 0.0001

0.0110 0.0161

0.0001

0.0001

0.0001

0.0004

0.0001 0.0001

0.0001 0.0175

-: Indicates not significant result (P-value > 0.10).

L: Number of alternatives.

N: Number of criteria.

V: Type of distribution = 1 equal weights; 2 uniform; 3 beta U.

METH: Method = 1 Simple Additive Weighting (SAW); 2 AHP with original scale using eigenvector; 3 AHP with geometric scale using

eigenvector; 4 AHP with original scale using mean transformation; 5 AHP with geometric scale using mean transformation; 6 Multiplicative

Exponential Weighting; 7 TOPSIS; 8 ELECTRE.

by the solution approach (eigenvector vs. mean transformation). The latter contradicts Zahedi's (1986) study that examined single AHP matrices, possibly due to the aggregating effect of looking at criteria and alternatives together. The MAEW for each AHP version was only about 0.008, implying weights of about ±0.8% away from those of SAW on the average. The most dissimilar method to SAW is ELECTRE followed by MEW, and TOPSIS to a lesser extent. More specifically, the MEW method produces significantly different results from all AHP versions on all measures. MEW and ELECTRE behave similarly in SRC and MSER, but differ according to MAER, UR, WRC1 and WRC2. TOPSIS differs from ELECTRE and MEW on all measures; and agrees with AHP only on SRC and UR (only for original scale). The rank-order results of all methods mostly agree with those of SAW, as indicated by their high correlations (all SRC > 0.80). In light of the prior comments, SRC gives a stronger impression

Table 3

Summary of Kruskal-Wallis nonparametric ANOVA significance levels

SRC

MSER

MAER UR

WRCl

WRC2 MAEW

MSEW UW KWC

MATCH

Alternatives O.oool 0.0001 0.0001 0.0001

0.0001 0.0001 0.0001 0.0001 O.OQOl O.Oc@l 0.0001

Criteria o.ooo3 0.0006 0.0004 0.0004

0.0010 0.0005 0.0001 0.0001 0.0001 0.0002 O.OflOl

Distribution 0.0473 0.0151 0.0177 0.0518

0.0260 0.0464 O.OtlOl O.oool 0.0001 0.1021 0.0234

Method 0.0001 0.0001 0.0001 0.0001

0.0001 O.oool 0.0001 0.0001 0.0001 0.0001 0.0001

-

7/26/2019 Toward Interactive and Intelligent Decision Support System

10/23

516

S.H. Zanak is et al. European Journal of Operat ional Research 107 1998) 507-529

Table 4

Summary of Kruskal-Wallis nontwametric ANOVA significance levels rank reversal exoeriment

SRC

MSER MAER

WRC 1

WRC2

MATCH

Alternatives 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001

Criteria 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001

Distribution 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001

Method 0.0001 0.0001 0.0001 0.0001 0.0001 0.0001

of similarity than it actually exists. F or the large

sample sizes involved, SRC should be below 0.04

approxim ately to imply no correlation or above 0.96

to imply perfect rank agreement, neither o f which is

the case here. SRC results sometimes contradicted

those of the other rank performance measures. In

those cases we lean towards the latter, since SRC

does not consider rank importance , unlike our mea-

sures WRC l and WR C2 (the former giving larger

values than the later by design). Comparing SRC to

WRC l or WCR 2, one may observe that although

TOPSIS and the four AHPs have similar SRC, the

higher WRC values imply that TOPSIS differs from

the AHPs more in higher ranked than lower ranked

alternatives. Similarly, E LECTR E differs from MEW

also more in higher ranked alternatives than lower

ones. An interesting finding is that although ELEC -

TRE matches SAW top rank more often (90%) than

the other methods, its match of all SAW ranks

(MAT CH% ) is far smaller than any of the other

methods. Many graphs w ere also drawn to further

identify parameter value impacts, mean differences

and important interactions. How ever, space limita-

tions prevent showing all of them.
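The point that SRC ignores rank importance can be illustrated with a small sketch. It assumes that SRC is the Spearman rank correlation, that MAER and MSER are the mean absolute and mean squared differences between a method's ranks and the SAW ranks, that TOP flags a matched top rank, and that MATCH flags agreement on all ranks. The exact definitions used in the experiment, including those of WRC1 and WRC2, are given earlier in the paper and are not reproduced here; the function name and the example rankings are hypothetical.

```python
def rank_agreement(ref_ranks, ranks):
    """Compare one method's ranks with reference (e.g. SAW) ranks.

    Both arguments are lists of ranks 1..L without ties.
    """
    L = len(ref_ranks)
    diffs = [r - s for r, s in zip(ranks, ref_ranks)]
    sum_sq = sum(d * d for d in diffs)
    return {
        # Spearman rank correlation for untied ranks
        "SRC": 1 - 6 * sum_sq / (L * (L * L - 1)),
        # assumed definitions: mean absolute / mean squared rank difference
        "MAER": sum(abs(d) for d in diffs) / L,
        "MSER": sum_sq / L,
        "TOP": ranks.index(1) == ref_ranks.index(1),
        "MATCH": ranks == ref_ranks,
    }

saw = [1, 2, 3, 4, 5]
swap_top = [2, 1, 3, 4, 5]      # disagreement on the two best alternatives
swap_bottom = [1, 2, 3, 5, 4]   # disagreement on the two worst alternatives

a = rank_agreement(saw, swap_top)
b = rank_agreement(saw, swap_bottom)
print(a["SRC"], b["SRC"])   # the two SRC values are identical
print(a["TOP"], b["TOP"])   # only the second ranking keeps the SAW top rank
```

Both hypothetical rankings differ from the reference by a single swap and therefore receive the same SRC, although only one of them changes the top-ranked alternative. A measure that weights rank positions would separate the two cases.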

ESfect of number of alternatives L): As the num-

ber of alternatives L increases, all metho ds tend to

produce overall weights closer to SAWs (especially

TOPS IS). This is reflected in higher corre lations

KWC (except for the insensitive method MEW ) and

SRC, higher Theils UW (only for AHPs), and lower

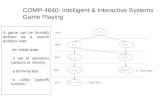

0.65 I

2 3 4 5 6 7

Method

Fig. 1. KWC by number of alternatives.

-

7/26/2019 Toward Interactive and Intelligent Decision Support System

11/23

S.H. Zanuki.s et al. / European Journal ~fOperutionu1 Research 107 1998) 507-529

517

Table 5

Average performance measures by method and Tukeys test on differences

SRC

WRC I

Methods

Mean

Tukey Mean

AHP, Original, eigen 0.8967 A

0.362 1

AHP, Geometric, eigen 0.8992 A

0.3507

AHP, Original, MTM 0.8969 A

0.3626

AHP, Geometric, MTM 0.8992 A

0.3500

MEW 0.8045 B

0.6278

TOPSIS 0.8921 A

0.4047

ELECTRE 0.8078 B

0.7267

WRC2

Tukey Mean

Tukey

D 0.3253 D

D 0.3142 D

D 0.3258 D

D 0.3138 D

B 0.5726 B

C 0.3723 C

A 0.686 I A

Methods

KWC

Mean

Tukey

MSEW

Mean

Tukey

MAEW

Mean

Tukey

AHP, Original, eigen

0.8257

A

O.OQOl7

B 0.0085 C

AHP, Geometric, eigen 0.8280 A

0.00019 B 0.0087 C

AHP, Original, MTM

0.8257

A

0.ooo17 B

0.0084 C

AHP, Geometric, MTM 0.827 1 A

0.00019 B 0.0087 C

MEW 0.7329 C

0.00074 A 0.0194 A

TOPSIS 0.7764 B

o.OOQ77 A 0.0158 B

ELECTRE

Methods

MSER

Mean

Tukey

MAER

Mean Tukey

uw

Mean Tukey

AHP, Original, eigen 0.4972 C

0.3590 D 0.023 C

AHP, Geometric, eigen 0.4784 C

0.348 1 D 0.0236 C

AHP, Original, MTM 0.4974 C

0.3592 D 0.0232 C

AHP, Geometric, MTM 0.4779 C

0.3474 D 0.0235 C

MEW 1.1820 A

0.6376 B 0.0565 A

TOPSIS 0.6747 B

0.4093 C 0.0416 B

ELECTRE 1.2132 A

0.7250 A

Methods

UR

Mean Tukey

TOP

Mean Tukey

MATCH

Mean Tukey

AHP, Original, eigen 0.0663

CD

0.8215 B 0.6910 A

AHP, Geometric, eigen 0.0647 D 0.8246 B 0.6966 A

AHP, Original, MTM 0.0663

CD

0.8206

B 0.6908 A

AHP, Geometric, MTM 0.0646 D 0.8254 B 0.6950 A

MEW 0.1055

B

0.7548

C 0.567 1 C

TOPSIS 0.0690 C 0.7549 C 0.6343 B

ELECTRE

0.1168 A

0.9035 A 0.3537 D

Note: The same letter (A, B, C, D) indicates no significant average difference between methods, based on Tukeys test. Letter order A to D

is from largest to smallest average value.

MSEW and MAE W. However, when the number of

alternatives is large, rank discrepancies are amplified

(to a lesser extent for TOPSIS), as evident by higher

rank performance measures MAER, MSER , WRC l,

WR C2 and to some extent UR. In contrast to the

clear rank results of MAT CH% , WRC l and WRC 2,

SRC produces mixed results as L increases; this

demon strates further its inability to account for dif-

ferent rank importance. ELECT RE matched the SAW

top (all) ranked alternatives more (less) often than

-

7/26/2019 Toward Interactive and Intelligent Decision Support System

12/23

518

S.H. Zanaki s et al ./ European Journal of Operat ional Research 107 1998) 507-529

0.035

0.03

0.025

3

B

P t

p 0.02

E

t

z

0.015

z

P

0.01

I

f

OC

2 3 4 5 6 7

Method

Fig. 2. MAE W by number of alternatives.

any other method, resulting in larger WRC s, regard-

less of the number of alternatives. The change in L

affects each AH P version the same way. See Figs.

1-6.

Fig. 3. MAER by number of alternatives.

Fig. 4. TOP by number of alternatives.

Fig. 5. MATCH by number of alternatives.

Fig. 6. WRC1 by number of alternatives.

Effect of number of criteria (N): Most performance measures (MAER, MSER, SRC, KWC, UR, WRC1, WRC2) for most methods changed slightly with N, but significantly according to ANOVA. This is because MEW and the four AHPs are hardly sensitive to changes in N (no change in KWC and all rank performance measures). As the number of criteria N increases, the methods (especially ELECTRE but not TOPSIS) tend to produce different rankings of the alternatives from those of SAW, as documented by higher MAER, MSER, UR, WRC1, WRC2 and lower SRC; and to some extent different weights of alternatives, as implied by somewhat smaller KWC. However, differences in the final weights for alternatives were larger in problems with fewer criteria, as shown by increased MAEW, MSEW, UW and lower KWC. TOPSIS behaved differently from the other methods, more so in its final rankings than its final weights. TOPSIS rankings differ from those of SAW and the AHPs when N is large (= 20) and, to a lesser extent, when N is small (= 5), where it behaved more like ELECTRE and MEW. This is evident from its increased MAER, MSER, UR, WRC1, WRC2 and reduced TOP, MATCH% and SRC. Again, ELECTRE matched the SAW top (all) ranked alternatives more (less) often than any other method, resulting in larger WRCs, regardless of the number of criteria. The change in N affects each AHP version the same way. See Figs. 7-11.

Fig. 7. MAEW by number of criteria.

Fig. 8. MAER by number of criteria.

Fig. 9. TOP by number of criteria.

Fig. 10. MATCH by number of criteria.

Fig. 11. WRC1 by number of criteria.

Fig. 12. MAEW by criterion weight distribution.

Effect of distribution of criteria weights (V): It does not significantly affect several weight measures (UW, MAEW, MSEW - except TOPSIS), while the effect is mixed according to rank measures. As expected, equal criteria weights (V = 1) reduce alternative weight differences between methods. Surprisingly, however, final weight dissimilarities between methods were higher under the uniform than beta distribution. In the case of AHP, the uniform distribution differentiates slightly more its final rankings and weights from SAW when using the original scale rather than the geometric scale. TOPSIS final rankings differ from those of SAW more (least) under the beta (equal constant) distribution. ELECTRE and MEW methods differentiate their final rankings more (least) under the equal constant (uniform) distribution. See Figs. 12-15.
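As a rough illustration of the three weight settings (V = 1, 2, 3), the sketch below draws criterion weights and normalizes them to sum to one. The beta shape parameters (0.5, 0.5), which give a U-shaped density, the normalization step and the function name are assumptions made only for this example; the generation scheme actually used in the simulation is the one described in the paper's experimental design.

```python
import random

def criteria_weights(n, kind, rng):
    """Return n criterion weights that sum to 1.

    kind: "equal", "uniform", or "beta_u" (U-shaped beta, assumed a = b = 0.5).
    """
    if kind == "equal":
        return [1.0 / n] * n
    if kind == "uniform":
        raw = [rng.random() for _ in range(n)]
    elif kind == "beta_u":
        raw = [rng.betavariate(0.5, 0.5) for _ in range(n)]
    else:
        raise ValueError(f"unknown distribution: {kind}")
    total = sum(raw)
    return [w / total for w in raw]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
for kind in ("equal", "uniform", "beta_u"):
    w = criteria_weights(5, kind, rng)
    print(kind, [round(x, 3) for x in w])
```

Under the assumed U-shaped beta, individual draws concentrate near 0 and 1, so a few criteria tend to dominate after normalization, whereas equal weights remove any dominance.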

Fig. 13. MAER by criterion weight distribution.

Fig. 14. TOP by criterion weight distribution.

Fig. 15. WRC1 by criterion weight distribution.

4.1. Rank reversal results

Similar analyses were performed on the rank reversal experimental results. Here each method's results were compared to its own (not SAW's), before and after the introduction of a new (not best) alternative. The major findings are summarized in Tables 2, 4 and 6. The parametric and non-parametric ANOVAs reveal that all factors (number of alternatives, number of criteria, distribution and method), and most of their interactions, are highly significant (Tables 2 and 4).


Table 6

Average performance measures by method and Tukey's test on differences: rank reversal experiment

Methods                 SRC             WRC1            WRC2
                        Mean    Tukey   Mean    Tukey   Mean    Tukey
SAW                     1.0     A       0       D       0       D
AHP, Original, eigen    0.9530  C       0.1532  B       0.1361  B
AHP, Geometric, eigen   0.9499  C       0.1595  B       0.1421  B
AHP, Original, MTM      0.9560  C       0.1520  B       0.1351  B
AHP, Geometric, MTM     0.9511  C       0.1610  B       0.1446  B
MEW                     1.0     A       0       D       0       D
TOPSIS                  0.9692  B       0.1116  C       0.097   C
ELECTRE                 0.9356  D       0.2138  A       0.1996  A

Methods                 MSER    MAER    TOP     MATCH
                        Mean    Mean    Mean    Mean
SAW                     0       0       1.0     1.0
AHP, Original, eigen    0.1752  0.1522  0.9258  0.8584
AHP, Geometric, eigen   0.1854  0.1581  0.9235  0.8544
AHP, Original, MTM      0.1740  0.1515  0.9258  0.8590
AHP, Geometric, MTM     0.1820  0.1568  0.9165  0.8551
MEW                     0       0       1.0     1.0
TOPSIS                  0.1379  0.1104  0.9531  0.9005
ELECTRE                 0.3479  0.2347  0.4402  0.7501

Note: The same letter (A, B, C, D) indicates no significant average difference between methods, based on Tukey's test. Letter order A to D is from largest to smallest average value.

Fig. 16. Rank reversal MAER by number of alternatives.

Fig. 17. Rank reversal MATCH by number of alternatives.

Fig. 18. Rank reversal MAER by number of criteria.

As summarized in Table 6, the MEW and SAW methods did not produce any rank reversals, which was expected. The next best method was TOPSIS, followed by the four AHPs, according to all rank reversal performance measures (larger TOP, MATCH%, SRC, and smaller RMSER, RMAER, WRC1 and WRC2). The rank reversal performance of each AHP version was statistically not different from the other three AHPs. ELECTRE exhibited the worst rank reversal performance of all the methods in this experiment, and more so in TOP than all ranks (MATCH%). The last finding should be interpreted with caution, since it does not reflect ELECTRE's versatile capabilities when used directly by a human; it is only indicative of its restrictive ability to discriminate among several alternatives, based on prespecified threshold parameters.
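The rank reversal check used in this experiment (rank the alternatives, introduce a new alternative that is not the best, rank again, and compare the order of the original alternatives) can be sketched as follows. The sketch assumes that SAW is a weighted sum and MEW a weighted product of ratings that are already on a common scale and are not re-normalized when an alternative is added. Under that assumption each original alternative keeps its score, so its position relative to the other originals cannot change. All data and function names are hypothetical.

```python
import math

def saw_scores(ratings, weights):
    """Simple additive weighting: weighted sum of ratings per alternative."""
    return [sum(w * r for w, r in zip(weights, row)) for row in ratings]

def mew_scores(ratings, weights):
    """Multiplicative exponential weighting: product of ratings ** weights."""
    return [math.prod(r ** w for w, r in zip(weights, row)) for row in ratings]

def order(scores):
    """Indices of alternatives from best (highest score) to worst."""
    return sorted(range(len(scores)), key=lambda i: -scores[i])

def has_rank_reversal(score_fn, ratings, weights, new_row):
    """True if adding new_row changes the relative order of the originals."""
    before = order(score_fn(ratings, weights))
    after = order(score_fn(ratings + [new_row], weights))
    new_index = len(ratings)
    return before != [i for i in after if i != new_index]

weights = [0.5, 0.3, 0.2]
ratings = [[0.9, 0.4, 0.6],   # alternative 0
           [0.6, 0.8, 0.5],   # alternative 1
           [0.3, 0.7, 0.9]]   # alternative 2
new_row = [0.5, 0.5, 0.5]     # a new alternative that is not the best

print(has_rank_reversal(saw_scores, ratings, weights, new_row))  # False
print(has_rank_reversal(mew_scores, ratings, weights, new_row))  # False
```

Methods whose scores depend on the whole set of alternatives (for example through re-normalization or pairwise comparison with the new alternative) do not share this property, which is why they can exhibit reversals in the same test.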

Effect of number of alternatives (L) on rank reversal: In general, more rank reversals occur in problems with more alternatives. This is evident from lower MATCH% and higher MAER, WRC1 and WRC2. Among AHPs, that increase was a little faster for the AHP with original scale and MTM solution. The MTM AHP has a slight advantage over the eigenvector AHP when there are not many alternatives. Reversals of the top rank occur more often in problems with more alternatives for the AHPs, but fewer alternatives for ELECTRE. TOPSIS top rank reversals seem to be insensitive to L. See Figs. 16 and 17.

Effect of number of criteria (N) on rank reversal: The number of rank reversals was influenced less by the number of criteria than by the number of alternatives. For all AHP versions, rank reversals for top (all) ranks remained at about 9% (14%) of L, regardless of the number of criteria. However, the geometric scale in AHP seems to reduce rank reversals when the number of criteria is small, as documented by smaller MAER and higher MATCH%. According to the SRC criterion, rank reversals for TOPSIS and the AHPs with original scale are not sensitive to N. Interestingly enough, TOPSIS exhibits its worst rank reversals when N is small, while ELECTRE does the same when N is large. See Fig. 18.

Effect of distribution of criteria weights (V) on rank reversal: In general, more rank reversals were observed under constant weights, and fewer under uniformly distributed weights. This was negligible for TOPSIS, but most pronounced for ELECTRE. See Fig. 19.

Fig. 19. Rank reversal MAER by criterion weight distribution.

5. Conclusion and recommendations

This simulation experiment evaluated eight MADM methods (including four variants of AHP) under different numbers of alternatives (L), criteria (N) and distributions. The final results are affected by these three factors in that order. In general, as the number of alternatives increases, the methods tend to produce similar final weights, but dissimilar rankings, and more rank reversals (fewer top rank reversals for ELECTRE). The number of criteria had little effect on AHPs, MEW and ELECTRE. TOPSIS rankings differ from those of SAW more when N is large, when it also exhibits its fewest rank reversals. ELECTRE produces more rank reversals in problems with many criteria.

The distribution of criteria weights affects fewer performance measures than does the number of alternatives or the number of criteria. However, it affects the methods examined differently. Equal criterion weights reduce final weight differences between methods, differentiate further the rankings produced by ELECTRE and MEW, and produce more rank reversals than the other distributions. Surprisingly, however, final weight dissimilarities between methods were higher under the uniform than beta distribution, while the latter produced the fewest rank reversals. A uniform distribution of criteria weights differentiates more the AHP final rankings from SAW when using the original scale rather than the geometric scale. Finally, a beta distribution of criterion weights affects TOPSIS more, whose final rankings differ even more from those of SAW.

In general, all AHP versions behave similarly and closer to SAW than the other methods. ELECTRE is the least similar to SAW (except for best matching the top-ranked alternative), followed by the MEW method. TOPSIS behaves closer to AHP and differently from ELECTRE and MEW, except for problems with few criteria. In terms of rank reversals, the four AHP versions were uniformly worse than TOPSIS, but more robust than ELECTRE.
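To make method-to-method comparisons of this kind concrete, the sketch below ranks a small hypothetical decision matrix with SAW and with a textbook form of TOPSIS: vector normalization of each criterion, Euclidean distances to the ideal and anti-ideal points, and all criteria treated as benefits. These choices, the data and the function names are assumptions for illustration only, and the implementations used in the simulation may differ in detail. The sketch also assumes that the alternatives are not all identical, since the closeness ratio would otherwise be undefined.

```python
import math

def saw(ratings, weights):
    """Simple additive weighting: weighted sum of ratings per alternative."""
    return [sum(w * r for w, r in zip(weights, row)) for row in ratings]

def topsis(ratings, weights):
    """Relative closeness to the ideal solution (all criteria are benefits)."""
    n = len(weights)
    # Vector-normalize each criterion column, then apply the criterion weights.
    norms = [math.sqrt(sum(row[j] ** 2 for row in ratings)) for j in range(n)]
    v = [[weights[j] * row[j] / norms[j] for j in range(n)] for row in ratings]
    ideal = [max(row[j] for row in v) for j in range(n)]
    anti = [min(row[j] for row in v) for j in range(n)]
    closeness = []
    for row in v:
        d_plus = math.dist(row, ideal)   # distance to the ideal point
        d_minus = math.dist(row, anti)   # distance to the anti-ideal point
        closeness.append(d_minus / (d_plus + d_minus))
    return closeness

def ranks(scores):
    """Rank 1 = highest score (ties broken by position)."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    result = [0] * len(scores)
    for position, i in enumerate(order, start=1):
        result[i] = position
    return result

weights = [0.4, 0.35, 0.25]
ratings = [[7, 9, 4],
           [8, 5, 6],
           [5, 6, 9],
           [6, 7, 7]]

print("SAW ranks:   ", ranks(saw(ratings, weights)))
print("TOPSIS ranks:", ranks(topsis(ratings, weights)))
```

Comparing the two rank lists for many randomly generated matrices, rather than for one hand-picked matrix, is the kind of comparison that the similarity measures in this study summarize.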

The detailed findings of this simulation study can provide useful insights to researchers and practitioners of MADM. A user's interest in evaluating alternatives may be in one or more of the final outputs, namely their weights, ranking or rank reversals. This experiment reveals when a user's results are likely to be practically the same, regardless of the subset of methods employed; or when and by how much the solutions may differ, thus guiding a user in selecting an appropriate method. SAW was selected as the basis to which to compare the other methods, because its simplicity makes it used often by practitioners. Some researchers even argue that SAW should be the standard for comparisons, because it gives the most acceptable results for the majority of single-dimensional problems (Triantaphyllou and Mann, 1989).

Some caution, however, must be used when considering our findings. They should not be extrapolated beyond the type of MADM problem considered in this study; namely a decision matrix input of N criteria weights and explicit ratings of L alternatives on each criterion. Therefore, method variations capable of handling different problems were not considered in this simulation. This standardization hampers ELECTRE more than any of the other methods. It unavoidably did not consider the variety of features of the many versions of this method developed to handle different problem types. It did not take advantage of the method's capabilities in handling problems with ordinal or imprecise information. Even in the form used here, ELECTRE may produce different results for different thresholds of concordance and discordance indexes (which of course leaves open the question of which index the user should select). Finally, no MADM method can be considered a tool for discovering an objective truth. Such models should function within a DSS context to aid the user to learn more about the problem and solutions to reach the ultimate decision. Such insight-gaining methods are better termed decision aids rather than decision making. MADM methods should not be considered as single-pass techniques, without a posteriori robustness analysis. A sensitivity (robustness) analysis is essential for any MADM method, but this is clearly beyond the scope of this simulation experiment.

References

Belton, V., 1986. A comparison of the analytic hierarchy process and a simple multi-attribute value function. European Journal of Operational Research 26, 7-21.

Belton, V., Gear, T., 1984. The legitimacy of rank reversal - A comment. Omega 13, 143-144.

Buchanan, J.T., Daellenbach, H.G., 1987. A comparative evaluation of interactive solution methods for multiple objective decision models. European Journal of Operational Research 29, 353-359.

Churchman, C.W., Ackoff, R.L., Arnoff, E.L., 1957. Introduction to Operations Research. Wiley, New York.

Currim, I.S., Sarin, R.K., 1984. A comparative evaluation of multiattribute consumer preference models. Management Science 30, 543-561.

Denpontin, M., Mascarola, H., Spronk, J., 1983. A user oriented listing of MCDM. Revue Belge de Recherche Operationelle 23, 3-11.

Dyer, J., 1990. Remarks on the analytic hierarchy process. Management Science 36, 249-258.

Dyer, J., Fishburn, P., Steuer, R., Wallenius, J., Zionts, S., 1992. Multiple criteria decision making, multiattribute utility theory: The next ten years. Management Science 38, 645-654.

Gemunden, H.G., Hauschildt, J., 1985. Number of alternatives and efficiency in different types of top-management decisions. European Journal of Operational Research 22, 178-190.

Gershon, M.E., Duckstein, L., 1983. Multiobjective approaches to river basin planning. Journal of Water Resource Planning 109, 13-28.

Goicoechea, A., Stakhiv, E.Z., Li, F., 1992. Experimental evaluation of multiple criteria decision making models for application to water resources planning. Water Resources Bulletin 28, 89-102.

Gomes, L.F.A.M., 1989. Comparing two methods for multicriteria ranking of urban transportation system alternatives. Journal of Advanced Transportation 23, 217-219.

Harker, P.T., Vargas, L.G., 1990. Reply to "Remarks on the analytic hierarchy process" by J.S. Dyer. Management Science 36, 269-273.

Hobbs, B.F., 1986. What can we learn from experiments in multiobjective decision analysis. IEEE Transactions on Systems, Man, and Cybernetics 16, 384-394.

Hobbs, B.J., Chankong, V., Hamadeh, W., Stakhiv, E., 1992. Does choice of multicriteria method matter? An experiment in water resource planning. Water Resources Research 28, 1767-1779.

Hwang, C.L., Yoon, K.L., 1981. Multiple Attribute Decision Making: Methods and Applications. Springer-Verlag, New York.

Jelassi, M.T.J., Ozernoy, V.M., 1988. A framework for building an expert system for MCDM models selection. In: Lockett, A.G., Islei, G. (Eds.), Improving Decision Making in Organizations. Springer-Verlag, New York, pp. 553-562.

Karni, R., Sanchez, P., Tummala, V., 1990. A comparative study of multiattribute decision making methodologies. Theory and Decision 29, 203-222.

Kok, M., 1986. The interface with decision makers and some experimental results in interactive multiple objective programming methods. European Journal of Operational Research 26, 96-107.

Kok, M., Lootsma, F.A., 1985. Pairwise-comparison methods in multiple objective programming, with applications in a long-term energy-planning model. European Journal of Operational Research 22, 44-55.

Lockett, G., Stratford, M., 1987. Ranking of research projects: Experiments with two methods. Omega 15, 395-400.

Legrady, K., Lootsma, F.A., Meisner, J., Schellemans, F., 1984. Multicriteria decision analysis to aid budget allocation. In: Grauer, M., Wierzbicki, A.P. (Eds.), Interactive Decision Analysis. Springer-Verlag, pp. 164-174.

Lootsma, F.A., 1990. The French and American school in multi-criteria decision analysis. Recherche Operationelle 24, 263-285.

MacCrimmon, K.R., 1973. An overview of multiple objective decision making. In: Cochrane, J.L., Zeleny, M. (Eds.), Multiple Criteria Decision Making. University of South Carolina Press, Columbia.

Olson, D.L., Moshkovich, H.M., Schellenberger, R., Mechitov, A.I., 1995. Consistency and accuracy in decision aids: Experiments with four multiattribute systems. Decision Sciences 26, 723-748.

Ozernoy, V.M., 1987. A framework for choosing the most appropriate discrete alternative MCDM in decision support and expert systems. In: Sawaragi, Y., et al. (Eds.), Toward Interactive and Intelligent Decision Support Systems. Springer-Verlag, Heidelberg, pp. 56-64.

Ozernoy, V.M., 1992. Choosing the best multiple criteria decision-making method. INFOR 30, 159-171.

Pomerol, J., 1993. Multicriteria DSS: State of the art and problems. Central European Journal for Operations Research and Economics 2, 197-212.

Roy, B., Bouyssou, D., 1986. Comparison of two decision-aid models applied to a nuclear power plant siting example. European Journal of Operational Research 25, 200-215.

Saaty, T.L., 1984. The legitimacy of rank reversal. OMEGA 12, 513-516.

Saaty, T.L., 1990. An exposition of the AHP in reply to the paper "Remarks on the analytic hierarchy process". Management Science 36, 259-268.

Schoemaker, P.J., Waid, C.C., 1982. An experimental comparison of different approaches to determining weights in additive utility models. Management Science 28, 182-196.

Stewart, T.J., 1992. A critical survey on the status of multiple criteria decision making theory and practice. OMEGA 20, 569-586.

Stillwell, W., Winterfeldt, D., John, R., 1987. Comparing hierarchical and nonhierarchical weighting methods for eliciting multiattribute value models. Management Science 33, 442-450.

Takeda, E., Cogger, K.O., Yu, P.L., 1987. Estimating criterion weights using eigenvectors: A comparative study. European Journal of Operational Research 29, 360-369.

Timmermans, D., Vlek, C., Hendrickx, L., 1989. An experimental study of the effectiveness of computer-programmed decision support. In: Lockett, A.G., Islei, G. (Eds.), Improving Decision Making in Organizations. Springer-Verlag, Heidelberg, pp. 13-23.

Triantaphyllou, E., Mann, S.H., 1989. An examination of the effectiveness of multi-dimensional decision-making methods: A decision-making paradox. Decision Support Systems 5, 303-312.

Voogd, H., 1983. Multicriteria Evaluation for Urban and Regional Planning. Pion, London.

Zahedi, F., 1986. A simulation study of estimation methods in the analytic hierarchy process. Socio-Economic Planning Sciences 20, 347-354.

Zanakis, S., Mandakovic, T., Gupta, S., Sahay, S., Hong, S., 1995. A review of program evaluation and fund allocation methods within the service and government sectors. Socio-Economic Planning Sciences 29, 59-79.