Thesis Sadri

8/8/2019 Thesis Sadri

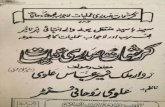

University of California

Los Angeles

Optimization of Sequence Queries in Database

Systems

A dissertation submitted in partial satisfaction

of the requirements for the degree

Doctor of Philosophy in Computer Science

by

Mohammad Reza Sadri

2001

© Copyright by

Mohammad Reza Sadri

2001

The dissertation of Mohammad Reza Sadri is approved.

D. Stott Parker

Richard Muntz

Dominique Hanssens

Carlo Zaniolo, Committee Chair

University of California, Los Angeles

2001

To the Memory of My Father, Who Inspired Me to Start This Path

and

To Katerina, Who Stood by Me to Finish It

Table of Contents

1 Introduction
    1.1 State of the Art
        1.1.1 Procedural Extensions
        1.1.2 SEQUIN and SRQL
        1.1.3 Tangram
        1.1.4 SQL/LPP
        1.1.5 Composite Events in Active Databases
    1.2 Optimization of Sequence Searches

2 The SQL-TS Language

3 Search Optimization
    3.1 Searching Simple Text Strings
    3.2 General Predicates
    3.3 Optimized Pattern Search
    3.4 Implications between elements

4 Patterns with Stars and Disjunctions
    4.1 Finding next and shift for the Star Case
    4.2 Complexity of Calculating next and shift
    4.3 Disjunctive Patterns

5 Calculating and
    5.1 GSW Algorithm
        5.1.1 Satisfiability
        5.1.2 Implication
    5.2 Calculating and for Disjunctive Pattern Elements

6 Experimental Results

7 Improvements and Generalizations
    7.1 Mapping between Set Theoretic and Logical Domains
    7.2 Interval Patterns
        7.2.1 One Dimensional Intervals
        7.2.2 Multi-dimensional Intervals
    7.3 Multi Dimensional Patterns
        7.3.1 Patterns on Multi Dimensional Ordered Sets
        7.3.2 Vector Time Series
        7.3.3 Multi-dimensional SQL-TS Queries

8 Syntax and Formal Semantics of SQL-TS
    8.1 SQL-TS Syntax
    8.2 Formal Semantics
    8.3 All Answers Semantics
    8.4 Left Maximality Semantics
    8.5 Aggregates

9 Further Work & Conclusion

A SQL-TS Syntax

References

List of Figures

2.1 Effects of SEQUENCE BY and CLUSTER BY on data

3.1 The meaning of next(j)

3.2 Shifting the pattern k positions to the right

3.3 Next and Shift definitions for OPS

3.4 Comparison between path curve of the naive search (top chart) and OPS (bottom chart)

5.1 Directed weighted graph for determining the satisfiability of a set of inequalities

6.1 The relaxed double bottom pattern

6.2 Double bottoms found in the DJIA data are shown by boxes. The bottom picture is zoomed for the area pointed by arrow in the top picture and shows one of the matches.

7.1 Table for the height of the surface water

7.2 Illustration of example 14 over a two-dimensional ordered set

7.3 Illustration of the two-dimensional vector series in example 15

7.4 Table for the trajectory of molecules

7.5 Illustration of the two-dimensional vector series in example 16

List of Tables

5.1 Logic matrix elements for disjunction of pattern elements

7.1 Mapping between set theoretic logic relations among pattern elements and corresponding OPS speedups for scanning left to right

7.2 Detailed mapping between set theoretic logic inclusions among pattern elements and the logic matrices elements

Acknowledgments

Writing this thesis marks the conclusion of a journey that I started many

years ago. Throughout this journey I enjoyed and benefited from the support

and companionship of many people.

First I would like to thank Carlo Zaniolo, my advisor, whose invaluable guidance in both academic and personal issues provided me with much needed direction and support. I am as much indebted to him for his understanding of my personal circumstances as I am for his intellectual contribution to my academic growth. I would also like to thank Dominique Hanssens, Dick Muntz, and Stott Parker for agreeing to serve on my committee.

I am indebted to my dear friends Jafar (Iman) Adibi and Amir Masoud Zarkesh for their help in prototyping this work and utilizing it in a real application. Their support, along with numerous discussions that cleared up many ambiguities, was instrumental in the advancement of my work to this stage. I would also like to thank my friend Bahram Fazeli for reviewing parts of this manuscript and for sharing many happy moments at UCLA.

During the seven years that I was at UCLA, my family went through hard times. Most difficult of all was the illness and loss of my father. In spite of all that, their uninterrupted love and support has enabled me to reach my goal. The memory of my last conversation with my father, when he asked me to finish my PhD, is always fresh in my mind. My mother endured an unbelievable amount of pressure in all this, and her love and prayers have given me the strength to persevere.

My wife, Katerina, has been my main support during these years. Dealing

with all the ups and downs of this journey every day and giving me her love and

support has kept me on my feet. I am also grateful to my brother, Mohsen, my

sister, Mojgan, their families, my younger brothers Majid and Saeid, my nephew

Nima, and my niece Dimitra, who bring joy to our life.

There are many that I am indebted to for their help: Henderson Yeung and Shigako Seki at CSU Fresno, who helped me through my first experience with academic life in the US; my uncle Ahmad Tadayon and his family, the Alipour family, the Peters family, Medy Sanadidi, my supervisor and my good friend at NCR; Reza Ahmadi, Hamid Jafarkhani, Rasool Jalili, Hadi Moradi, Reza Sadaghiani, Vali Tadayon, Homayoun Yousefi-Zadeh, who shared their friendships with me; Alex Razmjoo, Aktay Aydin, Frank Alaghband, Dan Cossack, and many other friends and coworkers at Procom who showed great understanding and flexibility, allowing me to continue my PhD while working full time.

Vita

1967 Born, Eghlid, Iran.

1988 B.S., Electrical Engineering, Tehran University, Tehran, Iran.

1988-91 Software Engineer, Sanyo Electric, Tehran, Iran

1991-93 Teaching Assistant, Computer Science, California State Univer-

sity, Fresno, California

1993 M.S., Computer Science, California State University, Fresno,

California

1993-95 Research Assistant, Medical Imaging Department, UCLA, Los

Angeles, California

1995-96 Intern, AT&T GIS (Now NCR), El Segundo, California

1996-2000 Principal Software Engineer, Procom Technology, Irvine, Cali-

fornia

2000-Present Chief Technologist, Procom Technology, Irvine, California

Publications

Reza Sadri, Carlo Zaniolo, Amir M. Zarkesh, Jafar Adibi, Optimization of Se-

quence Queries in Database Systems, Accepted for publication in PODS 2001.

Amir M. Zarkesh, Jafar Adibi, Cyrus Shahabi, Reza Sadri, Vishal Shah, Analysis

and Design of Server Informative WWW-Sites, in CIKM 1997 pages 254-261.

Bruce K.T. Ho, Zheng Chen, Ramesh Panwar, Reza Sadri, Pongskorn Saipetch,

Marco Ma, Medical Imaging Supercomputing in a PACS infrastructure, in Pro-

ceedings of SPIE, PACS Design and Evaluation 2435: 536-546, 1995.

Bruce K.T. Ho, Reza Sadri, Woodrew Chao, Lu Huang, Ricky Taira, Henry Shih,

Data clustering and other archive retrieval strategies for teleradiology and picture

archiving communication systems, Journal of Digital Imaging, 8(4): 180-190,

1995.

Woodrew Chao, Bruce K. T. Ho, John T. Chao, Reza Sadri, Lu Huang, Ricky Taira, Implementation of System Intelligence in a 3-tier Tele-Medicine PACS Hierarchical Storage Management System, Proceedings of SPIE, PACS Design and Evaluation 2435: 423-429, 1995.

Bruce Ho, Woodrew Chao, Johnny Chao, Reza Sadri, Richard Steckel, Hooshang Kangarloo, A Multi-Client Global Teleradiology System, Proceedings of SPIE, PACS Design and Evaluation 2435: 479-490, 1995.

Carlo Zaniolo, Reza Sadri, A Simple Model for Active Rules and their Behavior

in Deductive Databases, in Workshop on Deductive Databases and Logic Pro-

gramming, pages 13-25, 1994.

Abstract of the Dissertation

Optimization of Sequence Queries in Database

Systems

by

Mohammad Reza Sadri

Doctor of Philosophy in Computer Science

University of California, Los Angeles, 2001

Professor Carlo Zaniolo, Chair

The need to search for complex and recurring patterns in database sequences is

shared by many applications. In this work, we discuss how to express and support

efficiently sophisticated sequential pattern queries in relational database systems.

Thus, we first introduce SQL-TS, an extension of SQL, to express these patterns,

and then we study how to optimize search queries for this language. We take

the optimal text search algorithm of Knuth, Morris and Pratt, and generalize

it to handle complex queries on sequences. Our algorithm exploits the inter-

dependencies between the elements of a sequential pattern to minimize repeated

passes over the same data. We then present extensions of the algorithm for

detecting repeated patterns and disjunctive patterns. We also provide methods

for finding the inter-dependencies between the pattern elements for important

domains including intervals and vector time-series. In addition, a logic based

semantics for SQL-TS is given. Experimental results on typical sequence queries,

such as double bottom queries, confirm that substantial speedups are achieved

by our new optimization techniques.

CHAPTER 1

Introduction

Many applications require processing and analyzing sequential data. Examples

include the analysis of stock market prices [3], meteorological events [13], and the

identification of patterns of purchases by customers over time [1, 12]. These ap-

plications focus on finding patterns and trends in sequential data. The patterns

of interest in actual applications range from very simple ones, such as finding

three consecutive sunny days, to the more complex patterns used in datamining

applications [1, 4, 8]. These applications have motivated researchers to extend

database query languages with the ability of searching for and manipulating se-

quential patterns.

The time-series datablades [8] introduced by Informix provide a library of

functions that can be called from an SQL query, and most commercial DBMSs

support similar extensions. But datablades lack in expressive power, flexibility

and integration with DB query languages; thus, DB researchers have been seeking

time-series tools that are more powerful, more flexible, and more integrated with

DB query languages. In particular, the PREDATOR system proposed an SQL

extension called SEQUIN [22, 23, 21] for querying sequences. Then, SRQL [19]

extended the relational algebra with sequence operators for sorted relations, and

added constructs for querying sequences to SQL.

In this thesis, we view sorted relations as sequences as in SRQL, but propose

a new and more powerful SQL-like language for pattern searching, and advanced

techniques for optimizing queries in such a language.

1.1 State of the Art

SQL is not a good candidate for expressing sequential queries since it is based on

a set oriented model with a limited expressive power. There has been much effort

for developing database systems that can handle sequential data more efficiently

than SQL. Previous research efforts are discussed below.

1.1.1 Procedural Extensions

Informix time-series datablade [8] is a set of functions written for time-series ap-

plications, which can be called from SQL queries. These functions are mostly

statistical functions particularly useful for business applications. Therefore, In-

formix time-series datablade is meant for a known set of business applications

and problems. Other commercial systems support similar extensions: for in-

stance Red Brick system RISQL [26] is similar in nature to Informix datablades.

It provides a set of functions that can be used in time-series analysis, and suf-

fers from the same problems as Informix time-series datablades: It has weak

expressive power and it is useful only for specific applications. Oracle cartridges

and IBM DB2 universal database extenders are other examples of procedural

extensions to relational databases.

Besides limited expressive power, the datablades approach also suffers from

problems with respect to optimization. Indeed, while the individual functions

that exist in these systems are highly optimized for their specific tasks, there is

no optimization between these functions and the rest of the query.

1.1.2 SEQUIN and SRQL

The SEQ and PREDATOR database systems [23, 21] use different data models and different database languages for different EADTs (Enhanced Abstract Data Types). They use SQL for querying sets and SEQUIN [22, 23, 21] for querying sequences. Query blocks from different languages can be nested inside each other with the help of directives that convert data between these data types. SEQUIN has its own algebra that makes the optimization of sequence queries possible. There are two major drawbacks to this approach. One is that it does not support optimization between blocks of sequence and set queries, and the other is that it lacks the expressive power needed for many important applications.

SEQ provides three different techniques for query optimization:

• Using meta-information about the input sequence (range of sequence, density of sequence, distribution of values and empty positions). This information is used to calculate the range of the output sequence, and then eliminate parts of input sequences that cannot affect the output.

• Heuristic query transformations, such as pushing selections inside joins. These can be used to create different query plans.

• Using cost-based decisions to choose between different plans.

While some of the SEQ methods could also be used in the language proposed in this thesis, we will provide a more general and extensible approach.

SRQL [19] introduces a solution that augments relational algebra with a se-

quential model using sorted relations. This approach is better than SEQ since

sequences are expressed in the same framework as sets, thus enabling more effi-

cient optimization of queries that involve both. SRQL also extends SQL with a

few constructs for querying sequences.

An overview of the optimization techniques used in SRQL, including the well-known heuristic of pushing selections inside joins, is given in [19].

1.1.3 Tangram

Tangram is a stream processing system from UCLA [17]. The basic constructs in

Tangram are transducers. Transducers are operators that transform input data

streams to output data streams. Transducers can be combined to make more

complex operators for answering ad-hoc queries on streams. Tangram is a Prolog

based system, implemented on a functional language called Log(F) that can be

translated to Prolog. The major issue remaining in terms of databases is the

impedance mismatch between Prolog that returns the results one by one and

RDBMSs that return all the results at once as a set.

1.1.4 SQL/LPP

Another system that adds time-series extensions to SQL is discussed in [18].

It models time-series as attributed queues (queues augmented with attributes

that are used to hold aggregate values and are updated upon modifications to

the queue). Segments of time series data are ordered and there are directives

for the search engines that specify the next segment that should be checked

against the query. To search for the desired time series, the criteria for the segments that qualify are first specified, and then they are used in the main SQL query. The language for specifying the desired patterns is somewhat decoupled from the main query; this is similar to the separation between the sequential part and the relational part in SEQ. This separation also introduces problems similar to those of SEQ (such as complexity, added difficulty to learn and use, and lack of optimization between the sequential and relational parts). Also, SQL/LPP does not report recursive patterns, and this limits its expressive power. It also has a limited number of basic aggregate functions. It is possible to build more complex aggregate functions using the basic aggregate functions, but new functions cannot be introduced from scratch. Also, SQL/LPP cannot be applied directly to existing tables because it requires a specific structure in the underlying table.

SQL/LPP optimization uses pattern length analysis to prune the search space

and to deduce properties of composite patterns based on properties of the simple

patterns. These properties are used at runtime to reduce the search time.

1.1.5 Composite Events in Active Databases

There has been some work in active databases for implementing complex events.

Examples are ODE [6], SAMOS [5], and TREPL [15]. These systems are for

tracking complex events. Here we use similar ideas to detect complex patterns in

sequential data. Our method has the following similarities to these languages:

• Both utilize a good marriage of regular expressions and database query languages.

• Both have good support for temporal aggregations.

• Both have strong formal bases. ODE uses finite state machines, SAMOS uses Petri nets, and TREPL and our extensions use logic-based semantics.

Many of the language constructs of TREPL are relevant to the design of SQL-TS;

however the implementation and optimization techniques are very different since

event-based languages for active databases work on the live real time data and

need to generate the answers as soon as the corresponding events happen.

1.2 Optimization of Sequence Searches

Current database systems that support some sort of sequential queries follow one of the following approaches for optimization:

• A fixed set of optimized functions that work on sequences. This approach is taken in ADT-based systems. These functions do not fit in the traditional model of relational databases.

• Modeling some time-series queries based on an extended relational algebra and applying traditional optimization approaches (like pushing selections into joins). SEQ and SRQL use this approach.

In this thesis we explore a new approach based on the observation that finding sequential patterns in databases is somewhat similar to finding a phrase in a text. However, instead of searching for a sequence of letters, usually from a finite alphabet, we search for a sequence of tuples with rich structure and with infinite possibilities. Since our approach is motivated by the text searching problem, we give a short overview of some existing text searching algorithms, their performance, and their limitations. These algorithms are used to find the occurrences of a given pattern string of length m within a given text string of length n. The brute-force search has a worst-case time complexity of O(mn). The Karp-Rabin [9] algorithm has a worst-case time complexity of O(nm) and an expected running time of O(n + m). It works by creating hash values for the possible substrings of size m, and its efficiency depends on the alphabet size. The Boyer-Moore pattern matcher [14] (also found independently by Gosper) works best where the pattern is long and the alphabet is large. It has been used in some versions of the Unix grep string searching utility. Its worst-case performance is O(nm), and its best-case performance is O(n/m). An undesirable characteristic of these algorithms is that they assume a finite alphabet size and their performance depends on the size of the alphabet. The Knuth-Morris-Pratt (KMP) algorithm [11] creates a prefix function from the pattern that allows the building of a transition function to expedite the search. The prefix function is built in O(m) time, and the algorithm has a worst-case time complexity of O(n + m), independent of the alphabet size. A performance comparison by Wright, Cumberland, and Fang [29] shows that in general KMP has the best performance. This, along with the fact that KMP is independent of the alphabet size, makes it the best candidate for generalization to handle sequential database pattern searches.

CHAPTER 2

The SQL-TS Language

Our Simple Query Language for Time Series (SQL-TS) adds to SQL simple con-

structs for specifying complex sequential patterns. For instance, say that we have

the following table of closing prices for stocks:

CREATE TABLE quote ( name Varchar(8),

date Date,

price Integer )

Now, to find stocks that went up by 15% or more one day, and then down by 20% or more the next day, we can write the SQL-TS query of Example 1:

Example 1 Using the FROM clause to define patterns

SELECT X.name, Z.price - X.price, Z.date

FROM quote

CLUSTER BY name

SEQUENCE BY date

AS (X, Y, Z)

WHERE Y.price > 1.15 * X.price

AND Z.price < 0.80 * Y.price

name    price     date
...     ...       ...
INTC    $60       1/25/99
INTC    $63.5     1/26/99
INTC    $62       1/27/99
...     ...       ...
IBM     $81       1/25/99
IBM     $80.50    1/26/99
IBM     $84       1/27/99
...     ...       ...

Figure 2.1: Effects of SEQUENCE BY and CLUSTER BY on data

Thus, SQL-TS is basically identical to SQL, except for the following additions to the FROM clause:

• A CLUSTER BY clause specifying that data for each stock is processed separately (i.e., as if it were a separate stream).

• A SEQUENCE BY clause specifying that the data must be traversed by ascending date. Figure 2.1 shows how SEQUENCE BY and CLUSTER BY affect the input. Rows are grouped by their CLUSTER BY attribute(s) (not necessarily ordered), and data in each group are sorted by their SEQUENCE BY attribute(s). This is similar to SRQL, where we have GROUP BY and SEQUENCE BY attributes [19].

• The AS clause, which in SQL is mostly used to assign aliases to the table names, is here used to specify a sequence of tuple variables from the specified table. By (X, Y, Z) we mean three tuples that immediately follow each other. Tuple variables from this sequence can be used in the WHERE clause to specify the conditions and in the SELECT clause to specify the output.

Expressing the same query using SQL would require three joins and would be more complex, less intuitive, and much harder to optimize.
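As a rough illustration of these semantics (not the SQL-TS implementation), the following Python sketch evaluates the query of Example 1 over an in-memory list of rows. The function name `find_up_then_down`, the tuple layout, and the sample rows (including the made-up ticker ACME) are assumptions introduced only for this illustration.

```python
from collections import defaultdict
from datetime import date


def find_up_then_down(rows):
    """rows: iterable of (name, date, price) tuples.

    Returns (name, Z.price - X.price, Z.date) for every window (X, Y, Z)
    of three adjacent tuples that satisfies the WHERE clause of Example 1.
    """
    clusters = defaultdict(list)
    for name, day, price in rows:            # CLUSTER BY name
        clusters[name].append((day, price))

    results = []
    for name, seq in clusters.items():
        seq.sort()                           # SEQUENCE BY date
        # AS (X, Y, Z): three tuples that immediately follow each other
        for x, y, z in zip(seq, seq[1:], seq[2:]):
            if y[1] > 1.15 * x[1] and z[1] < 0.80 * y[1]:
                results.append((name, z[1] - x[1], z[0]))
    return results


# Hypothetical sample data; rows arrive unordered on purpose.
rows = [
    ("ACME", date(1999, 1, 27), 90),
    ("ACME", date(1999, 1, 25), 100),
    ("ACME", date(1999, 1, 26), 120),
    ("IBM", date(1999, 1, 25), 81),
    ("IBM", date(1999, 1, 26), 80),
    ("IBM", date(1999, 1, 27), 84),
]
matches = find_up_then_down(rows)
print(matches)
```

The sketch tests every window independently, which is exactly the repeated work that the optimizations discussed later aim to avoid.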

A key feature of SQL-TS is its ability to express recurring patterns by using

a star operator. Take the following example:

Example 2 Find the maximal periods in which the price of a stock fell more

than 50%, and return the stock name and these periods

SELECT X.name, X.date AS start_date,

Z.previous.date AS end_date

FROM quote

CLUSTER BY name

SEQUENCE BY date

AS (X, *Y, Z)

WHERE Y.price < Y.previous.price

AND Z.previous.price < 0.5 * X.price

In SQL-TS, each tuple is viewed as containing two additional fields that refer to the previous and the next tuple in the sequence within the same cluster. Thus, for instance, Z.previous (X.next) delivers the last tuple (the first tuple) in the *Y sequence, and Z.previous.date is the date of this last tuple (the SQL3 syntax Z.previous->date is also supported). Here the star construct *Y is used to specify a sequence of one or more Ys of decreasing price, as per the condition Y.price < Y.previous.price. In general, a star denotes a sequence of one or more (not zero or more!) tuples that satisfy all applicable conditions in the WHERE clause. Thus, Z here is the first tuple where the price of the stock is no longer smaller than the previous one. Constructs similar to the star have been proposed previously in several query languages, and their semantics is easily formalized using recursive Datalog programs [15]. Also observe that a left maximality condition is implicit in the SQL-TS semantics, meaning that when two overlapping sequences satisfy the query, we return only the one that starts first.
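The star semantics of Example 2 can be sketched in Python as follows. This is only an illustrative reading of the description above, not the SQL-TS evaluator: the function name `maximal_falls` is hypothetical, the input is assumed to be the prices of a single cluster already in date order, and resuming the scan at Z after a match is an assumption about how left maximality plays out for this particular query.

```python
def maximal_falls(prices):
    """Return (start, end) index pairs for matches of (X, *Y, Z) in Example 2.

    start is the index of X and end is the index of Z.previous, i.e. the
    last tuple of the *Y run. prices must already be in SEQUENCE BY order.
    """
    matches = []
    n = len(prices)
    x = 0
    while x < n - 2:                      # need X, at least one Y, and Z
        y_end = x
        # *Y: extend greedily while Y.price < Y.previous.price
        while y_end + 1 < n and prices[y_end + 1] < prices[y_end]:
            y_end += 1
        has_y = y_end > x                 # one or more Ys, not zero
        has_z = y_end + 1 < n             # Z is the first non-falling tuple
        if has_y and has_z and prices[y_end] < 0.5 * prices[x]:
            matches.append((x, y_end))
            x = y_end + 1                 # skip overlapping later starts
        else:
            x += 1
    return matches


print(maximal_falls([100, 80, 60, 45, 50, 40, 30, 19, 25]))
```

Starting a new attempt inside a falling run can only make the 50% condition harder to meet, since the starting price is lower; this is the kind of dependency between pattern elements that the optimizations of the following chapters exploit.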

CHAPTER 3

Search Optimization

Since SQL-TS is a superset of SQL, all the well-known techniques for query

optimization remain available, but in addition to those we find new query op-

timization opportunities using techniques akin to those used for text searching.

For instance, take the following example:

Example 3 Find companies whose closing stock price on three consecutive days was 10, 11, and 15.

SELECT X.name

FROM quote

CLUSTER BY name

SEQUENCE BY date

AS (X, Y, Z)

WHERE X.price =10 AND Y.price=11

AND Z.price=15

The text searching algorithm by Knuth, Morris and Pratt (KMP), discussed below, provides a solution of proven optimality for this query [11, 29]. Unfortunately, the KMP algorithm is only applicable when the qualifications in the query are equalities with constants, such as those of Example 3.

Therefore, in this thesis, we extend the KMP algorithm to handle the conditions that are found in general queries, in particular inequalities between terms involving variables such as those in the next example.

Example 4 For IBM stock prices, find all instances of a pattern of two drops followed by two increases, where the drops take the price to a value between 40 and 50, and the first increase does not move the price beyond 52.

SELECT X.date AS start_date, X.price,
       U.date AS end_date, U.price
FROM quote
    CLUSTER BY name
    SEQUENCE BY date
    AS (X, Y, Z, T, U)
WHERE X.name = 'IBM'
    AND Y.price < X.price
    AND Z.price < Y.price
    AND 40 < Z.price < 50
    AND Z.price < T.price
    AND T.price < 52
    AND T.price < U.price

3.1 Searching Simple Text Strings

The KMP algorithm takes a sequence pattern of length m, $P = p_1 \ldots p_m$, and a text sequence of length n, $T = t_1 \ldots t_n$, and finds all occurrences of P in T. Using an example from [11], let abcabcacab be our search pattern, and babcbabcabcaabcabcabcacabc be our text sequence. The algorithm starts from the left and compares successive characters until the first mismatch occurs. At each step, the i-th element in the text is compared with the j-th element in the pattern (i.e., $t_i$ is compared with $p_j$). We keep increasing i and j until a mismatch occurs.

i,j   1  2  3  4  5  6  7  8  9 10 11 12 13 14 15 16 17
t_i   a  b  c  b  a  b  c  a  b  c  a  a  b  c  a  b  c
p_j   a  b  c  a  b  c  a  c  a  b
               ↑

For the example at hand, the arrow denotes the point where the first mismatch occurs. At this point, a naive algorithm would reset j to 1 and i to 2, and restart the search by comparing $p_1$ to $t_2$, and then proceed with the next input character. But instead, the KMP algorithm avoids backtracking by using the knowledge acquired from the fact that the first three characters in the text have been successfully matched with those in the pattern. Indeed, since $p_1 \neq p_2$, $p_1 \neq p_3$, and $p_1 p_2 p_3 = t_1 t_2 t_3$, we can conclude that $t_2$ and $t_3$ cannot be equal to $p_1$, and we can thus jump to $t_4$. Then, the KMP algorithm resumes by comparing $p_1$ with $t_4$; since the comparison fails, we increment i and compare $t_5$ with $p_1$:

i     1  2  3  4  5  6  7  8  9 10 11 12 13 14 15 16 17
t_i   a  b  c  b  a  b  c  a  b  c  a  a  b  c  a  b  c
j                 1  2  3  4  5  6  7  8  9 10
p_j               a  b  c  a  b  c  a  c  a  b
                                       ↑

Now we have the mismatch where j = 8 and i = 12. Here, since we know that $p_1 \ldots p_4 = p_4 \ldots p_7$ and $p_4 \ldots p_7 = t_8 \ldots t_{11}$, $p_1 \neq p_2$, and $p_1 \neq p_3$, we conclude that we can move the pattern three positions to the right, and resume by comparing $p_5$ to $t_{12}$. Therefore, by exploiting the relationship between elements of the pattern, we can continue our search without moving back in the text (i.e., without changing the value of i). As shown in [11], the KMP algorithm never requires backtracking on the text. Moreover, the index on the pattern can be reset to a new value next(j), where next(j) depends only on the current value of j, and is independent of the text. For a pattern of size m, next(j) can be stored in an array of size m. (Thus this array can be computed once as part of the query compilation, and then used repeatedly to search the database and its time-varying content.)

Figure 3.1: The meaning of next(j)

The array next(j) can be defined as follows:

1. Find all integers k, 0 < k < j, for which $p_k \neq p_j$ and such that for every positive integer s < k, $p_s = p_{j-k+s}$ (i.e., $p_1 = p_{j-k+1}, \ldots, p_{k-1} = p_{j-1}$).

2. If no such k exists, then next(j) = 0; otherwise next(j) is the largest of these k's.
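To make the definition concrete, here is a minimal Python sketch that computes next(j) by testing each candidate k directly, taking the largest qualifying k as in the KMP construction of [11]. It is deliberately a brute-force transcription rather than the efficient method of [11], and the function name `next_from_definition` is an assumption of this sketch.

```python
def next_from_definition(pattern):
    """Return next[1..m] (index 0 is unused) computed straight from the definition."""
    m = len(pattern)
    p = [None] + list(pattern)            # 1-indexed, as in the text
    nxt = [0] * (m + 1)                   # next(j) = 0 when no k qualifies
    for j in range(1, m + 1):
        for k in range(j - 1, 0, -1):     # try the largest k first
            prefix_matches = all(p[s] == p[j - k + s] for s in range(1, k))
            if p[k] != p[j] and prefix_matches:
                nxt[j] = k
                break
    return nxt


# The running example pattern from [11].
print(next_from_definition("abcabcacab")[1:])
```

For the running example this yields next(8) = 5, which agrees with the trace above, where the search resumes by comparing the fifth pattern element with the twelfth text element.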

This definition is clarified by Figure 3.1. The upper line shows the pattern,

and the lower line shows the pattern shifted by k; the thick segments show where

the two are identical. When no shift exists for which the shifted pattern can

match the original one, we have next(j) = 0, and the pattern is shifted to the

right till its first element is at position j + 1, i.e., one after the current position

in the text. In the KMP algorithm, this is the only situation in which the search

pattern is advanced following a failure. (Of course, the search pattern is always

advanced after success.)

The KMP Algorithm:

j = 1; i = 1;

while j ≤ m ∧ i ≤ n do {

    while j > 0 ∧ t_i ≠ p_j do

        j = next[j];

    i = i + 1; j = j + 1; }

if i > n then failure

else success;

The KMP algorithm is shown above. An efficient algorithm for computing the array next is given in [11]. The complexity of the complete algorithm, including both the calculation of next for the pattern and the search of the pattern over the text, is O(m + n), where m is the size of the pattern and n is the size of the text [11]. When success occurs, the input text $t_{i-m+1} \dots t_i$ matches the pattern.
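For concreteness, the search loop can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: the helper names `build_next` and `kmp_search` are hypothetical, the linear-time table construction follows the standard scheme from the KMP literature, and the sample text extends the 17 characters shown in the example with an assumed suffix so that a match exists. The sketch reports success when j exceeds m and returns the 1-based start of the match.

```python
def build_next(pattern):
    """Linear-time construction of next(1..m); index 0 is unused."""
    m = len(pattern)
    p = " " + pattern  # 1-based indexing
    nxt = [0] * (m + 1)
    j, t = 1, 0
    while j < m:
        while t > 0 and p[j] != p[t]:
            t = nxt[t]
        t += 1
        j += 1
        nxt[j] = nxt[t] if p[j] == p[t] else t
    return nxt


def kmp_search(text, pattern):
    """Return the 1-based start of the first match, or 0 if none."""
    n, m = len(text), len(pattern)
    t, p = " " + text, " " + pattern
    nxt = build_next(pattern)
    i = j = 1
    while j <= m and i <= n:
        # On a mismatch only the pattern index moves; i never decreases.
        while j > 0 and t[i] != p[j]:
            j = nxt[j]
        i += 1
        j += 1
    return i - m if j > m else 0


# First 17 characters as in the example; the suffix is an assumption.
text = "abcbabcabcaabcabc" + "abcacab"
print(kmp_search(text, "abcabcacab"))
```

Because i is never decreased, each input character is fetched once, which is what makes the method attractive for scanning stored sequences.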

3.2 General Predicates

The original KMP algorithm can be used to optimize simple queries, such as that

of Example 3, in which conditions in the WHERE clause are equality predicates and

t is a tuple variable:

p1(t) = (t.price = 10)

p2(t) = (t.price = 11)

p3(t) = (t.price = 15)

However, for the powerful sequence queries of SQL-TS we need to support:

1. General Predicates: In particular we need to support systems of equali-

ties and inequalities such as those of Example 4 where we have the following

predicates:

p1(t) = (t.price < t.previous.price)

p2(t) = (t.price < t.previous.price) ∧ (40 < t.price < 50)

p3(t) = (t.price > t.previous.price) ∧ (52 < t.price)

p4(t) = (t.price > t.previous.price)

2. Recursive pattern expressions: The KMP algorithm assumes that the

pattern consists of a fixed number of elements. To support queries such

as that of Example 2, we need to optimize searches involving recurring

patterns expressed by the star.

3. More general objects: In modern database systems we store many dif-

ferent types of objects, such as images, text, and XML objects, along with

user-defined methods and predicates on these objects.

3.3 Optimized Pattern Search

In this section we provide the Optimized Pattern Search (OPS) algorithm, which is an extension of the KMP algorithm. The OPS algorithm is directly applicable to the optimization of SQL-TS queries, since it handles the much more general conditions that occur in time series applications, including repeating patterns that can be expressed by the star construct.

Say that we are searching the input stream for a sequential pattern, and

a mismatch occurs at the j-th position of the pattern. Then, we can use the

following two sources of information to optimize our next steps in the search:

• Conditions for elements 1 through j − 1 in the search pattern were satisfied by the corresponding items in the input sequence, and

• The condition for the j-th element in the search pattern was not satisfied by its corresponding input element.

Therefore, much as in the KMP algorithm, we can capture the logical rela-

tionship between the elements of the pattern, and then infer which shifts in the

pattern can possibly succeed; also, for a given shift, we can decide which condi-

tions need not be checked (since their validity can be inferred from the two kinds

of information described above).

Therefore, we assume that the pattern has been satisfied for all positions

before j and failed at position j, and we want to compute the following two

items,

• shift(j): this determines how far the pattern should be advanced in the input, and

• next(j): this determines from which element in the pattern the checking of conditions should be resumed after the shift.

Observe that the KMP algorithm only used the next(j) information. Indeed, for KMP, the search pattern was never shifted in the text (except for the case where next(j) = 0 and the pattern was shifted by j). The richer set of possibilities that can occur in OPS demands the use of explicit shift(j) information. Furthermore, the computation of next and shift is now significantly more complex and requires the derivation of several three-valued logic matrices.

3.4 Implications between elements

The OPS algorithm begins by capturing all the logical relations among pairs of the pattern elements using a positive precondition logic matrix $\theta$, and a negative precondition logic matrix $\phi$. These matrices are of size m, where m is the length of the search pattern. The $\theta_{ij}$ and $\phi_{ij}$ elements of these matrices are only defined for $i \geq j$; thus we have lower-triangular matrices of size m. We define:

$$\theta_{jk} = \begin{cases} 1 & \text{if } p_j \Rightarrow p_k \wedge p_j \not\equiv F \\ 0 & \text{if } p_j \Rightarrow \neg p_k \\ U & \text{otherwise} \end{cases}$$

$$\phi_{jk} = \begin{cases} 1 & \text{if } \neg p_j \Rightarrow p_k \\ 0 & \text{if } \neg p_j \Rightarrow \neg p_k \wedge p_j \not\equiv T \\ U & \text{otherwise} \end{cases}$$

We have added the terms $p_j \not\equiv F$ in the definition of $\theta$, and $p_j \not\equiv T$ in the definition of $\phi$, to make sure that the left sides of the implication relationships are not equivalent to false, because in that case the value of the corresponding element in the matrix could be both 0 and 1. By excluding those cases, we have removed the ambiguity.

Logic matrices $\theta$ and $\phi$ contain all the possible pairwise logical relations between pattern elements. For instance, for Example 4 we have:

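Example 5 Computing the matrices $\theta$ and $\phi$ for Example 4

$p_2 \Rightarrow p_1$, therefore $\theta_{21} = 1$

$p_3 \Rightarrow \neg p_1$, therefore $\theta_{31} = 0$

$p_3 \Rightarrow \neg p_2$, therefore $\theta_{32} = 0$

$p_4 \Rightarrow \neg p_2$, therefore $\theta_{42} = 0$

$p_4 \Rightarrow \neg p_1$, therefore $\theta_{41} = 0$

$\neg p_4 \Rightarrow \neg p_3$, therefore $\phi_{43} = 0$

Therefore we have

$$\theta = \begin{pmatrix} 1 \\ 1 & 1 \\ 0 & 0 & 1 \\ 0 & 0 & U & 1 \end{pmatrix} \qquad \phi = \begin{pmatrix} 0 \\ U & 0 \\ U & U & 0 \\ U & U & 0 & 0 \end{pmatrix}$$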
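The entries of Example 5 can be reproduced experimentally. The sketch below is only an illustration: instead of a symbolic implication test it evaluates the four predicates of Example 4 on a finite grid of (price, previous price) pairs, which is an assumption of this sketch and can only approximate true implication. The names `PREDICATES`, `GRID`, `theta_entry` and `phi_entry` are hypothetical.

```python
from itertools import product

U = "U"

# Example 4 predicates over (price p, previous price q).
PREDICATES = [
    lambda p, q: p < q,
    lambda p, q: p < q and 40 < p < 50,
    lambda p, q: p > q and 52 < p,
    lambda p, q: p > q,
]

# Finite sample: prices 0, 0.5, ..., 100 for both tuples (assumption).
GRID = [(p / 2, q / 2) for p, q in product(range(201), repeat=2)]


def theta_entry(pj, pk):
    """1 if pj => pk (pj satisfiable), 0 if pj => not pk, else U."""
    hits = [pk(p, q) for p, q in GRID if pj(p, q)]
    if hits and all(hits):
        return 1
    if not any(hits):
        return 0
    return U


def phi_entry(pj, pk):
    """1 if not pj => pk, 0 if not pj => not pk (pj not a tautology), else U."""
    hits = [pk(p, q) for p, q in GRID if not pj(p, q)]
    if all(hits):
        return 1
    if not any(hits):
        return 0
    return U


theta = [[theta_entry(PREDICATES[j], PREDICATES[k]) for k in range(j + 1)]
         for j in range(4)]
phi = [[phi_entry(PREDICATES[j], PREDICATES[k]) for k in range(j + 1)]
       for j in range(4)]
print(theta)
print(phi)
```

A sampling test of this kind can miss counterexamples that fall between grid points, so it is suitable for checking small examples by hand but not as a replacement for a sound implication procedure.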

From matrices $\theta$ and $\phi$, we can now derive another triangular matrix S that describes the logical relationships between whole patterns. The $S_{jk}$ entries in the matrix, which are only defined for j > k, are computed as follows:

$$S_{jk} = \theta_{k+1,1} \wedge \theta_{k+2,2} \wedge \dots \wedge \theta_{j-1,j-k-1} \wedge \phi_{j,j-k}$$

Thus, say that the pattern was satisfied up to, and excluding, element j; then, $S_{jk} = 0$ means that the pattern cannot be satisfied if shifted k positions. Moreover, $S_{jk} = 1$ ($S_{jk} = U$) means that the pattern is certainly (possibly) satisfied after a shift of k. Figure 3.2 illustrates the situation. In calculating matrix S, we use standard 3-valued logic, where $\neg U = U$, $U \wedge 1 = U$, and $U \wedge 0 = 0$. For the example at hand we have:

Figure 3.2: Shifting the pattern k positions to the right

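Example 6 Computing the matrix S for Example 5

$S_{2,1} = \phi_{2,1} = U$

$S_{3,1} = \theta_{2,1} \wedge \phi_{3,2} = 1 \wedge U = U$

$S_{3,2} = \phi_{3,1} = U$

$S_{4,1} = \theta_{2,1} \wedge \theta_{3,2} \wedge \phi_{4,3} = 0$

$S_{4,2} = \theta_{3,1} \wedge \phi_{4,2} = 0$

$S_{4,3} = \phi_{4,1} = U$

$$S = \begin{pmatrix} U \\ U & U \\ 0 & 0 & U \end{pmatrix}$$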
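The computation of S is mechanical once the two logic matrices are known. The following sketch hard-codes the matrices of Example 5 and applies the three-valued conjunction; the names `and3` and `s_entry` are hypothetical, and the 1-based list layout with `None` padding is a choice made only for readability.

```python
U = "U"


def and3(a, b):
    """Three-valued conjunction: 0 dominates, then U, otherwise 1."""
    if a == 0 or b == 0:
        return 0
    if a == U or b == U:
        return U
    return 1


# Matrices of Example 5, 1-based: THETA[j][k] for k <= j (index 0 unused).
THETA = [None, [None, 1], [None, 1, 1], [None, 0, 0, 1], [None, 0, 0, U, 1]]
PHI = [None, [None, 0], [None, U, 0], [None, U, U, 0], [None, U, U, 0, 0]]


def s_entry(j, k):
    """S_jk: theta_{k+1,1} ^ ... ^ theta_{j-1,j-k-1} ^ phi_{j,j-k}."""
    value = PHI[j][j - k]
    for t in range(1, j - k):  # t = 1 .. j-k-1
        value = and3(value, THETA[k + t][t])
    return value


# Row j of S holds the entries for shifts k = 1 .. j-1.
S = {j: [s_entry(j, k) for k in range(1, j)] for j in range(2, 5)}
print(S)
```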

We can now compute shift(j), which is the least shift to the right for which the overlapping sub-patterns do not contradict each other (Figure 3.3). Thus, shift(j) is the column number of the leftmost non-zero entry in row j of S. When all these entries are equal to zero, then a failure will occur for any shift up

Figure 3.3: Next and Shift definitions for OPS

to j. In this case, we set shift(j) = j; thus, the pattern is shifted to the right till its first position coincides with the current position of the cursor in the text. More formally:

$$\mathit{shift}(j) = \begin{cases} j & \text{if } \forall k < j,\ S_{jk} = 0 \\ \min(\{k \mid S_{jk} \neq 0\}) & \text{otherwise} \end{cases}$$

Thus, shift(j) tells us how much the pattern can be shifted to the right before we have to start testing the input. We can now compute next(j), which denotes the element in the pattern from which checking against the input should be resumed. There are basically three cases. The first case is when shift(j) = j, and thus the first element in the pattern must be checked next against the current element in the input. The second case is when shift(j) < j and $S_{j,\mathit{shift}(j)} = 1$; in this case we only need to begin our checking from the element in the pattern that is aligned with the first input element after the current input position; thus, next(j) = j − shift(j) + 1. The third case occurs when neither of the previous cases holds; then the first pattern element should be applied to the input element i − j + shift(j) + 1; but if $\theta_{\mathit{shift}(j)+1,1} = 1$, then the comparison becomes unnecessary (and similar conditions might hold for the elements that follow). Thus, we set next(j) to the leftmost element in the pattern that must be tested against the input. Figure 3.3 shows how this works. Now we can formally define next as follows:

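1. if shift(j) = j then next(j) = 0, else

2. if $S_{j,\mathit{shift}(j)} = 1$ then next(j) = j − shift(j) + 1, else

3. if neither condition is true, then

$$\mathit{next}(j) = \min\big(\{t \mid 1 \leq t < j - \mathit{shift}(j) \wedge \theta_{\mathit{shift}(j)+t,\,t} \neq 1\} \cup \{j - \mathit{shift}(j) \mid \phi_{j,\,j-\mathit{shift}(j)} \neq 1\}\big)$$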

For the example at hand we have:

Example 7 Calculate the arrays next and shift for Example 5

shift(1) = 1

shift(2) = 1 since $S_{21} \neq 0$

shift(3) = 1 since $S_{31} \neq 0$

shift(4) = 3 since $S_{41} = 0 \wedge S_{42} = 0 \wedge S_{43} \neq 0$

next(1) = 0 since shift(1) = 1

next(2) = 1 since $\phi_{21} \neq 1$

next(3) = 2 since $\theta_{21} = 1 \wedge \phi_{32} \neq 1$

next(4) = 1 since $\phi_{41} \neq 1$
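The two definitions translate almost literally into code. The sketch below hard-codes the matrices of Example 5 and the matrix S of Example 6 and recomputes the arrays of Example 7; the function names `shift` and `next_index` are hypothetical, and the 1-based layouts are a readability choice of this sketch.

```python
U = "U"

# Matrices of Example 5, 1-based: THETA[j][k] for k <= j (index 0 unused).
THETA = [None, [None, 1], [None, 1, 1], [None, 0, 0, 1], [None, 0, 0, U, 1]]
PHI = [None, [None, 0], [None, U, 0], [None, U, U, 0], [None, U, U, 0, 0]]
# Matrix S of Example 6: S[j][k-1] is the entry for a shift of k.
S = {1: [], 2: [U], 3: [U, U], 4: [0, 0, U]}


def shift(j):
    """Column of the leftmost non-zero entry in row j of S, else j."""
    for k in range(1, j):
        if S[j][k - 1] != 0:
            return k
    return j


def next_index(j):
    s = shift(j)
    if s == j:                      # case 1: no overlap can succeed
        return 0
    if S[j][s - 1] == 1:            # case 2: the whole overlap is implied
        return j - s + 1
    # case 3: leftmost element whose truth cannot be inferred
    candidates = [t for t in range(1, j - s) if THETA[s + t][t] != 1]
    if PHI[j][j - s] != 1:
        candidates.append(j - s)
    return min(candidates)


print([shift(j) for j in range(1, 5)])
print([next_index(j) for j in range(1, 5)])
```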

The calculation of arrays next and shift is done as part of the query compi-

lation. This is discussed in Section 5.

The main algorithm. We can use the values stored in arrays next and shift to optimize the pattern search at run time. Consider a predicate pattern $P = p_1 p_2 \dots p_m$. Now, $p_j(t_i)$ is equal to one when the i-th element in sequence T satisfies pattern element $p_j$; otherwise, it is zero.

The OPS Algorithm

j = 1; i = 1;

while j ≤ m ∧ i ≤ n do {

    while j > 0 ∧ ¬p_j(t_i) do {

        i = i − j + shift(j) + next(j);

        j = next(j); }

    i = i + 1; j = j + 1; }

if i > n then failure

else success;

Here, as in the KMP algorithm, success denotes that $t_{i-m+1} \dots t_i$ satisfies the pattern. However, we see the following generalizations with respect to KMP:

• The equality predicate $t_i = p_j$ is replaced by $p_j(t_i)$, which tests if $p_j$ holds for the i-th element in the input (i.e., the i-th tuple of the sorted cluster).

• When there is a mismatch, we modify both j and i, which, respectively, index the pattern and the input. The new value for j is next(j) and the new value for i is i − j + shift(j) + next(j).

For instance, we used the pattern in the query of Example 4 to search the following sequence:

55 50 45 57 54 50 47 49 45 42 55 57 59 60 57.

Figure 3.4 shows the values of j and i as the algorithm progresses for the naive approach versus the OPS approach. Clearly, the backtracking episodes are less frequent and less deep, and therefore the length of the search path is significantly shorter for the OPS algorithm.

Figure 3.4: Comparison between path curve of the naive search (top chart) and OPS (bottom chart). Both charts plot the pattern index j against the input index i.
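The comparison can be replayed on the price sequence above. The following sketch is an illustration under stated assumptions: it uses the shift and next arrays of Example 7, treats a predicate that refers to a missing previous tuple as not satisfied, reports success when j exceeds m, and counts predicate evaluations as a rough proxy for the length of the search path. All function and variable names are hypothetical.

```python
PRICES = [55, 50, 45, 57, 54, 50, 47, 49, 45, 42, 55, 57, 59, 60, 57]


def prev(i):
    """Previous price of the i-th tuple (1-based), or None for the first."""
    return PRICES[i - 2] if i > 1 else None


# Example 4 predicates on the i-th tuple (assumption: no previous => False).
def p1(i): return prev(i) is not None and PRICES[i - 1] < prev(i)
def p2(i): return p1(i) and 40 < PRICES[i - 1] < 50
def p4(i): return prev(i) is not None and PRICES[i - 1] > prev(i)
def p3(i): return p4(i) and 52 < PRICES[i - 1]


PATTERN = [None, p1, p2, p3, p4]   # 1-based
SHIFT = [None, 1, 1, 1, 3]         # Example 7
NEXT = [None, 0, 1, 2, 1]          # Example 7


def ops_search():
    """Return (1-based start of the match or 0, number of predicate tests)."""
    n, m = len(PRICES), len(PATTERN) - 1
    i = j = 1
    tests = 0
    while j <= m and i <= n:
        while j > 0 and i <= n:
            tests += 1
            if PATTERN[j](i):
                break
            i = i - j + SHIFT[j] + NEXT[j]
            j = NEXT[j]
        i += 1
        j += 1
    return (i - m if j > m else 0), tests


def naive_search():
    """Restart from every input position and test the pattern from p1."""
    n, m = len(PRICES), len(PATTERN) - 1
    tests = 0
    for start in range(1, n - m + 2):
        for j in range(1, m + 1):
            tests += 1
            if not PATTERN[j](start + j - 1):
                break
        else:
            return start, tests
    return 0, tests


print(ops_search())
print(naive_search())
```

Both searches locate the same occurrence (tuples 9 through 12); in this run the OPS loop performs 16 predicate evaluations against 20 for the naive restart strategy.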

CHAPTER 4

Patterns with Stars and Disjunctions

An important advantage of the OPS algorithm is that it can be easily generalized

to handle recurrent input patterns which, in SQL-TS, are expressed using the star.

For example if $p_j$ is

$t_i.price < t_{i-1}.price$

then $*p_j$ matches sequences of records with decreasing prices.

The calculation of logic matrices $\theta$ and $\phi$ remains unchanged in the presence of star patterns; thus, the formulas given in Section 3.4 will still be used. However, the calculation of the arrays next and shift must be generalized for star patterns as described next.

At run time we maintain an array of counters (one per pattern element) to keep track of the cumulative number of input objects that have matched the pattern sequence so far. Take the following SQL-TS example:

Example 8 Find patterns consisting of a period of rising prices, followed by a period of falling prices, followed by another period of rising prices.

SELECT X.name, FIRST(X).date AS sdate,

       LAST(Z).date AS edate

FROM quote

    CLUSTER BY name

    SEQUENCE BY date

    AS (*X, *Y, *Z)

WHERE X.price > X.previous.price

    AND Y.price < Y.previous.price

    AND Z.price > Z.previous.price

Therefore we have three star predicates that must be satisfied by their input tuples:

p1(X) = (X.price > X.previous.price)

p2(Y) = (Y.price < Y.previous.price)

p3(Z) = (Z.price > Z.previous.price)

These will be called star predicates because they are prefixed with a star in the FROM clause of the query, which searches for the pattern: p1(X), p2(Y), p3(Z).

Assume our input data for t.price is:

20 21 23 24 22 20 18 15 14 18 21

Let us represent the counter for the j-th element of the pattern by count(j). After matching the pattern with the input we have:

count(1) = 4

count(2) = 9 since 5 elements satisfy p2

count(3) = 11 since 2 elements satisfy p3
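The cumulative counters can be reproduced with a few lines of code. The sketch below is only an illustration of how count(j) accumulates on this input: it matches each star element greedily, assumes that a star element must match at least one tuple, lets the first tuple (which has no predecessor) open the first rising run, and re-tests the tuple that ends one run against the next pattern element. It performs no backtracking, and the names `rising`, `falling` and `star_counts` are hypothetical.

```python
PRICES = [20, 21, 23, 24, 22, 20, 18, 15, 14, 18, 21]


def rising(i):
    # Assumption: the first tuple has no predecessor and opens the run.
    return i == 0 or PRICES[i] > PRICES[i - 1]


def falling(i):
    return i > 0 and PRICES[i] < PRICES[i - 1]


def star_counts(predicates):
    """Greedy left-to-right matching of star elements (0-based input index).

    Returns the cumulative counts, or None if some element matches nothing.
    """
    counts, i = [], 0
    for pred in predicates:
        start = i
        while i < len(PRICES) and pred(i):
            i += 1
        if i == start:
            return None
        counts.append(i)  # cumulative number of tuples matched so far
    return counts


print(star_counts([rising, falling, rising]))
```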

We update and use these counters at run time. These are the modifications that we need to support the star. When the current input element satisfies the current pattern element, we move to the next input, and

1. if the current pattern element is not a star element then move to the next one, otherwise

2. update the current count.

When the current input element does not satisfy the current pattern element then:

1. If this is a star element whose predicate has already been satisfied by the previous input element, move to the next pattern element and the next input.

2. If this is not a star element, or is a star predicate tested for the first time, then:

• reset j (the index in the pattern) to next(j), and

• reset i (the index in the input) to i − count(j − 1) + count(shift(j) + next(j) − 1).

In the presence of stars, the computation of shift(j) and next(j) is more complex and it is discussed next.

4.1 Finding next and shift for the Star Case

Consider the following graph based on the matrix $\theta$ (excluding the main diagonal):

$\theta_{21}$

$\theta_{31}$ $\theta_{32}$

$\theta_{41}$ $\theta_{42}$ $\theta_{43}$

The entry $\theta_{jk}$ in our matrix correlates pattern predicates $p_j$ with $p_k$, k < j, when these are evaluated on the same input element. Therefore, we can picture the simultaneous processing of the input on the original pattern, and on the same pattern shifted back by j − k. Thus the arcs between nodes in our matrix above show the combined transitions in the original pattern and in the shifted pattern. In particular, consider $\theta_{kj}$ where neither $p_k$ nor $p_j$ are star predicates; then after success in $p_j$ and $p_k$, we transition to $p_{j+1}$ in the original pattern, and to $p_{k+1}$ in the shifted pattern: this transition is represented by an arc $\theta_{kj} \to \theta_{k+1,j+1}$. However, if $p_j$ is a star predicate, while $p_k$ is not, then the success of both will move $p_k$ to $p_{k+1}$, but can leave $p_j$ unchanged: this is represented by the arc $\theta_{kj} \to \theta_{k+1,j}$. In general, it is clear that only a subset of the arcs listed in the previous matrix represent valid transitions and should be considered, and this set is also limited by the values of $\theta$. In particular, since all the predicates in the pattern must be satisfied by the shifted input, every $\theta_{kj} = 0$ entry must be removed with all its incoming and departing arcs: we only retain entries that are either 1 or U.

Considering all possible situations, and assuming that all the neighbors are non-zero entries, we conclude that only the following transitions are needed when building the graph:

1. If both elements j and k of the pattern sequence are star predicates and $\theta_{jk} = U$, then we have three outgoing arcs from $\theta_{jk}$: one to $\theta_{j+1,k}$, one to $\theta_{j+1,k+1}$ and one to $\theta_{j,k+1}$.

2. If both element j and element k of the pattern are stars and $\theta_{jk} = 1$, we have two outgoing arcs from $\theta_{jk}$: one to $\theta_{j+1,k+1}$ and the other to $\theta_{j+1,k}$. There is no arc to $\theta_{j,k+1}$, because $\theta_{j,k} = 1$; thus all input tuples that satisfy $p_j$ must also satisfy $p_k$.

3. If both elements j and k of the pattern are non-star predicates, then we have only one arc from $\theta_{jk}$, to $\theta_{j+1,k+1}$.

4. If element j of the pattern is a star predicate, but element k is not, then we have two arcs from $\theta_{jk}$: one to $\theta_{j+1,k+1}$ and the other to $\theta_{j,k+1}$.

5. If element k of the pattern is a star predicate but element j is not, then we have two arcs from $\theta_{jk}$: one to $\theta_{j+1,k+1}$ and the other to $\theta_{j+1,k}$.

These rules assume that the end nodes of the arcs are either U or 1; but when such nodes are 0 the incoming arcs will be dropped.

The directed graph produced by this construction will be called the Implication Graph for pattern sequence P, and is denoted as $G_P$. For each value of j this graph must be further modified with entries from $\phi$ to account for the fact that the j-th element of the pattern failed on the input.

Therefore, we replace the j-th row of this graph (the row that starts with $\theta_{j,1}$) with the j-th row of matrix $\phi$ and remove rows greater than j. In addition we update the arcs between elements in row j − 1 and row j according to the new values of elements in row j. We use the same rules that we used for arcs between $\theta$ elements. If element k is a star, there are up to two arcs from $\theta_{j-1,k}$ to row j: one to $\phi_{jk}$ and one to $\phi_{j,k+1}$. This is in addition to the possible existing arc from $\theta_{j-1,k}$ to $\theta_{j-1,k+1}$. If element k is not a star, then there will be only one arc from $\theta_{j-1,k}$ to row j, which goes to $\phi_{jk}$, again in addition to the possible existing arc from $\theta_{j-1,k}$ to $\theta_{j-1,k+1}$. Again we assume that the end nodes of the arcs are either U or 1; but when such nodes are 0 the incoming arcs will be dropped. The resulting graph will be called the Implication Graph for pattern element j, denoted $G_P^j$.

Take the following example where we want to find occurrences of this pattern in IBM's stock price: increasing price to between 30 and 40, followed by a period of decreasing price, followed by another period of increasing price to a value between 35 and 40, followed by a decreasing period to a value below 30. The query written in SQL-TS is:

SELECT X.NEXT.date, X.NEXT.price,

       S.previous.date, S.previous.price

FROM quote

    CLUSTER BY name

    SEQUENCE BY date

    AS (*X, Y, *Z, *T, U, *V, S)

WHERE X.name = 'IBM'

    AND X.price > X.previous.price

    AND 30 < Y.price

    AND Y.price < 40

    AND Z.price < Z.previous.price

    AND T.price > T.previous.price

    AND 35 < U.price

    AND U.price < 40

    AND V.price < V.previous.price

    AND S.price < 30

Therefore our pattern predicates (on an input tuple t) are:

p1(t) = (t.price > t.previous.price)

p2(t) = (30 < t.price < 40)

p3(t) = (t.price < t.previous.price)

p4(t) = (t.price > t.previous.price)

p5(t) = (35 < t.price < 40)

p6(t) = (t.price < t.previous.price)

p7(t) = (t.price < 30)

Observe that p1, p3, p4, and p6 are star predicates, and the others are not. Our matrices $\theta$ and $\phi$ are:

$$\theta = \begin{pmatrix} 1 \\ U & 1 \\ 0 & U & 1 \\ 1 & U & 0 & 1 \\ U & 1 & U & U & 1 \\ 0 & U & 1 & 0 & U & 1 \\ U & 0 & U & U & 0 & U & 1 \end{pmatrix}$$

$$\phi = \begin{pmatrix} 0 \\ U & 0 \\ U & U & 0 \\ 0 & U & U & 0 \\ U & U & U & U & 0 \\ U & U & 0 & U & U & 0 \\ U & U & U & U & U & U & 0 \end{pmatrix}$$

Since p1, p3, p4, and p6 are star predicates, and p2 and p5 are not, we can connect the elements of $\theta$ (after excluding the main diagonal) as follows:

$$G_P = \begin{matrix} U \\ 0 & U \\ 1 & U & 0 \\ U & 1 & U & U \\ 0 & U & 1 & 0 & U \\ U & 0 & U & U & 0 & U \end{matrix}$$

Say now that we want to build $G_P^6$. We replace row 6 of $G_P$ with row 6 of $\phi$ and update the paths from the 5th row to the 6th row according to the new values. Then, we have the following graph:

$$G_P^6 = \begin{matrix} U \\ 0 & U \\ 1 & U & 0 \\ U & 1 & U & U \\ U & U & 0 & U & U \end{matrix}$$

Consider now the node $\theta_{41}$ in this graph. Observe that there are several paths consisting of either 1 nodes or U nodes that take us to nodes in the last row of the matrix. Therefore, the pattern shifted by 3 can succeed along any of these paths. However, there is no path to the last row starting from node $\theta_{31}$: thus, 2 is not a possible shift. Also there is no path to the last row starting from $\theta_{21}$; thus a shift of size 1 can never succeed. Therefore, we conclude that shift(6) = 3.

In general, we define shift(j) as follows:

Definition 1 For a pattern P

$$\mathit{shift}(j) = \min\{s \mid \exists t \text{ where there is a path from } \theta_{s+1,1} \text{ to } \phi_{j,t} \text{ in } G_P^j\}$$

For the case that the above set is empty, we have:

if $\phi_{j1} \neq 0$ then shift(j) = j − 1 else shift(j) = j

Now we can define next. Note that there might be more than one path found in the definition of shift, but next must return a unique value to be used in restarting the search. Therefore let us say that a node in our $G_P^j$ graph is deterministic if there is exactly one arc leaving this node, and the end-node of this arc has value 1 (thus a deterministic node cannot take us to a U node or to several 1 nodes). Thus, we start from $\theta_{\mathit{shift}(j)+1,1}$, and if this is not deterministic, we set next(j) = 1. Otherwise we move to the unique successor of this deterministic node and repeat the test. When the first non-deterministic node is found in this process, next(j) is set to the value of its column. If the search takes us to the last row in $G_P^j$, that means that none of the input elements previously visited needs to be tested again: thus next(j) = j − shift(j).

For the example at hand, there is a non-zero path from node $\theta_{41}$ to $\phi_{61}$, thus shift(6) = 3. We now consider $\theta_{41} = 1$ and see that this is not a deterministic node, since there is more than one arc leaving the node. Thus, we conclude that next(6) = 1.
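The graph construction and the two searches can be sketched compactly for the seven-element example. This is a simplified illustration, not a definitive implementation: it applies the five arc rules uniformly to every row (including the arcs entering the last row), it represents the graph implicitly through a successor function, and it leaves the fallback cases of shift without a next value. The first index of a node is the row and the second the column; all names are hypothetical.

```python
U = "U"
STAR = {1: True, 2: False, 3: True, 4: True, 5: False, 6: True, 7: False}
THETA = {  # THETA[j][k-1] for k <= j
    1: [1], 2: [U, 1], 3: [0, U, 1], 4: [1, U, 0, 1],
    5: [U, 1, U, U, 1], 6: [0, U, 1, 0, U, 1], 7: [U, 0, U, U, 0, U, 1],
}
PHI = {
    1: [0], 2: [U, 0], 3: [U, U, 0], 4: [0, U, U, 0],
    5: [U, U, U, U, 0], 6: [U, U, 0, U, U, 0], 7: [U, U, U, U, U, U, 0],
}


def value(j, r, c):
    """Node value in G_P^j: row j comes from phi, earlier rows from theta."""
    return PHI[r][c - 1] if r == j else THETA[r][c - 1]


def successors(j, r, c):
    """Arcs leaving node (r, c), following rules 1 to 5 of the text."""
    if r == j:
        return []
    if STAR[r] and STAR[c]:
        targets = [(r + 1, c), (r + 1, c + 1)]
        if value(j, r, c) == U:
            targets.append((r, c + 1))
    elif STAR[r]:
        targets = [(r + 1, c + 1), (r, c + 1)]
    elif STAR[c]:
        targets = [(r + 1, c + 1), (r + 1, c)]
    else:
        targets = [(r + 1, c + 1)]
    # Keep off-diagonal end nodes whose value is 1 or U.
    return [(a, b) for a, b in targets if b < a and value(j, a, b) != 0]


def reaches_last_row(j, node, seen):
    if node[0] == j:
        return True
    seen.add(node)
    return any(reaches_last_row(j, nxt, seen)
               for nxt in successors(j, *node) if nxt not in seen)


def shift(j):
    for s in range(1, j - 1):
        start = (s + 1, 1)
        if value(j, *start) != 0 and reaches_last_row(j, start, set()):
            return s
    return j - 1 if PHI[j][0] != 0 else j


def next_index(j):
    s = shift(j)
    if s >= j - 1:
        return None  # fallback cases are not covered by this sketch
    node = (s + 1, 1)
    while node[0] < j:
        succ = successors(j, *node)
        if len(succ) == 1 and value(j, *succ[0]) == 1:
            node = succ[0]      # deterministic node: follow its only arc
        else:
            return node[1]      # first non-deterministic node: its column
    return j - s


print(shift(6), next_index(6))
```

Because every arc either moves down one row or right one column, the graph is acyclic and the depth-first search terminates; the `seen` set only avoids revisiting nodes already known to be dead ends.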

4.2 Complexity of Calculating next and shift

The $G_P$ graph built in the last section has at most m(m − 1)/2 nodes and each node has a constant number (at most 3) of outgoing edges. Thus in the worst case, traversing the graph in depth-first manner for finding shift(j) will have complexity $O(j^2)$, and the worst case complexity for finding all the shift values will be $O(m^3)$. Note that the first j − 2 levels of graph $G_P^{j-1}$ are the same as the first j − 2 levels of graph $G_P^j$; therefore we can use the results of the previous traversal for j − 1 in the current traversal for j. If we store the indexes of the paths from the first column to the (j − 2)nd row in $G_P^j$, then for calculating shift(j) we only need to take the starting points from the (j − 2)nd row. This way the complexity will be reduced to $O(m^2)$ because the complexity of going from the (j − 2)nd row to the j-th row is constant for each element (since the branching factor is less than or equal to 3).

4.3 Disjunctive Patterns

We will next consider queries that search for the disjunction of two patterns,

meaning that the input sequence should satisfy either one of the two pattern

sequences, or both. In effect it is equivalent to two independent queries, but we

can execute them in one scan of the database. Take the following example:

Example 9 Find four consecutive rises in the stock price or four consecutive closing prices between 55 and 57 for IBM

SELECT X.NEXT.date, X.NEXT.price,

       S.previous.date, S.previous.price

FROM quote

    SEQUENCE BY date

    AS (X, Y, Z, T)

WHERE X.name = 'IBM'

    AND (

        (     X.previous.price < X.price

          AND X.price < Y.price

          AND Y.price < Z.price

          AND Z.price < T.price

        )

        OR

        (     X.price > 55

          AND X.price < 57

          AND Y.price > 55

          AND Y.price < 57

          AND Z.price > 55

          AND Z.price < 57

          AND T.price > 55

          AND T.price < 57

        )

    )

A naive approach to processing this query would consist in making a first pass through the data to satisfy the first pattern, followed by a second pass to satisfy the second pattern. This approach will not be considered since it is likely to require each page in the secondary store to be retrieved twice. We next consider two other approaches that do not suffer from this drawback. These are:

• Multiple Stream model, and

• Single Stream model.

Multiple Data Stream. In this model, the starting i and j values for each pattern are kept in a queue. When the pattern being tested fails, its new i and j values are computed and replace the old values in the queue. Then, the scheduler looks at the values in the queue and selects a pattern for processing according to some optimization criteria. The queue can be prioritized based on different criteria for optimization. For instance, by selecting the pattern which has the least value of i, we can ensure that all patterns are served fairly and no pattern stays behind. This in turn minimizes the size of data that needs to be kept in temporary memory.

An advantage of this method is its simplicity and amenability to both serial

and parallel processing. It can be implemented as a client-server model where

the server provides the next values for i and j to each client process. Thus, this

method is amenable to parallel execution based on multiple data streams.

Single Data Stream. This model assumes that all the patterns are tested in parallel against the current element in the input being scanned. Only patterns that are known to be false or true from the matrices are excused. Take the query in Example 9. That query is equivalent to two queries, one that finds occurrences of four consecutive closing prices between 55 and 57 and the other that finds four consecutive rises in the price. We calculate $\theta$, $\phi$, shift and next independently for each pattern, but at run time we handle both queries simultaneously. The run time algorithm can be revised as follows.

The OPS Algorithm For Concurrent Disjunctive Patterns

j1 = 1; j2 = 1; i = 1;

while j1 ≤ m1 ∧ j2 ≤ m2 ∧ i ≤ n do {

    while p_{j1}(t_i) ∧ q_{j2}(t_i) do {

        j1 = j1 + 1; j2 = j2 + 1; i = i + 1;

    }

    iO = i;

    if ¬p_{j1}(t_i) then {

        while i ≤ iO do {

            while j1 > 0 ∧ ¬p_{j1}(t_i) do {

                i = i − j1 + next1(j1) + shift1(j1);

                j1 = next1(j1);

            }

            j1 = j1 + 1; i = i + 1;

        }

    } else { /* ¬q_{j2}(t_i) */

        while i ≤ iO do {

            while j2 > 0 ∧ ¬q_{j2}(t_i) do {

                i = i − j2 + next2(j2) + shift2(j2);

                j2 = next2(j2);

            }

            j2 = j2 + 1; i = i + 1;

        }

    }

}

if i > n then failure

else success;

As the algorithm shows, we keep proceeding until one of the concurrent patterns fails. At this point we save the current value of i in iO and reset i and j for the failed pattern and keep searching only for the failed pattern until i becomes greater than iO (since we know the other pattern does not have to be checked against the input up to the point iO). In this way, we only scan the input once and we need only one buffer to keep recent values of the input.

Disjunctive Normal Form. Consider now the following query:

Example 10 Find patterns in IBM stock price where there is a sequence of decreases of more than 2% in price until it goes below 50 or it starts increasing, followed by a period of price increases of more than 2% until it goes above 100 or it starts decreasing:

SELECT X.NEXT.date, X.NEXT.price,

       S.previous.date, S.previous.price

FROM quote

    CLUSTER BY name

    SEQUENCE BY date

    AS (X, *Y, Z, *T, U)

WHERE X.name = 'IBM'

    AND X.price > 0.98 * X.previous.price

    AND Y.price < 0.98 * Y.previous.price

    AND (Z.price > Z.previous.price OR Z.price < 50)

    AND T.price > 1.02 * T.previous.price

    AND (U.price < U.previous.price OR U.price > 100)

The pattern in the where clause is:

$p_1 \wedge p_2 \wedge (p_{3a} \vee p_{3b}) \wedge p_4 \wedge (p_{5a} \vee p_{5b})$

where

p1(t) = t.price > 0.98 * t.previous.price

p2(t) = t.price < 0.98 * t.previous.price

p3a(t) = t.price > t.previous.price

p3b(t) = t.price < 50

p4(t) = t.price > 1.02 * t.previous.price

p5a(t) = t.price < t.previous.price

p5b(t) = t.price > 100

This can be computed by expanding the where clause into its normal form, consisting of the following four patterns, and processed accordingly:

$p_1 \wedge p_2 \wedge p_{3a} \wedge p_4 \wedge p_{5a}$

$p_1 \wedge p_2 \wedge p_{3a} \wedge p_4 \wedge p_{5b}$

$p_1 \wedge p_2 \wedge p_{3b} \wedge p_4 \wedge p_{5a}$

$p_1 \wedge p_2 \wedge p_{3b} \wedge p_4 \wedge p_{5b}$
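The expansion into alternative patterns is a Cartesian product over the alternatives available at each pattern position. A minimal sketch, using only the predicate names of the example as labels (the names `POSITIONS` and `expand` are hypothetical):

```python
from itertools import product

# Each pattern position lists its alternative predicates.
POSITIONS = [["p1"], ["p2"], ["p3a", "p3b"], ["p4"], ["p5a", "p5b"]]


def expand(positions):
    """Enumerate every conjunctive pattern of the disjunctive normal form."""
    return [list(choice) for choice in product(*positions)]


for pattern in expand(POSITIONS):
    print(" ".join(pattern))
```

The number of generated patterns is the product of the numbers of alternatives per position, which is why the expansion is attractive only when few positions carry disjunctions.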

This approach is preferable as long as it does not lead to too many alternative patterns.

A second approach consists in evaluating this query as if it contained only one conjunctive pattern. In this case, the $\theta$ and $\phi$ matrices will have to be constructed for implications between disjunctive clauses. This problem is discussed in Section 5.2.

For very complex disjunctive conditions the two approaches can be combined.

CHAPTER 5

Calculating $\theta$ and $\phi$

Elements of $\theta$ and $\phi$ are calculated according to the semantics of the pattern elements. Satisfiability and implication results in databases [7, 27, 10, 20, 24, 25] are relevant to the computation of $\theta$ and $\phi$ for a class of patterns that involve inequalities in a totally ordered domain (such as real numbers). Ullman [27] has given an algorithm for solving the implication problem between two queries S and T. His algorithm works for queries which are conjunctions of terms of the form X op Y, where op is a comparison operator, and has complexity $O(|S|^3 + |T|)$. Klug [10] has studied the implication problem in a broader range of queries that are conjunctions of terms of the form X op C and X op Y. Rosenkrantz and Hunt [20] provided an algorithm for the satisfiability problem with complexity $|S|^3$, where S is the query that is tested for satisfiability and is a conjunction of terms of the form X op C, X op Y, and X op Y + C. In our implementation, we compute matrices $\theta$ and $\phi$ using the algorithms by Guo, Sun and Weiss (GSW) [7] for computing implication and satisfiability of conjunctions of inequalities, as explained below.

5.1 GSW Algorithm

The GSW algorithm deals with inequalities of the form X op C, X op Y, and X op Y + C where X and Y are variables, C is a constant, and op ∈ {=, ≠, ≤, ≥, <, >}. The complexity of their algorithm is $O(|S| n^2 + |T|)$ for testing implication (for the 1 entries in our matrices) and $O(|S| + n^3)$ for testing satisfiability (for the 0 entries); n is the number of variables in S, and |S| and |T| are the numbers of inequalities in S and T. Given the limited number of variables and inequalities used in queries, these compilation costs are quite reasonable. GSW starts by applying the following transformations:

1. (X ≥ Y + C) ≡ (Y ≤ X − C)

2. (X < Y + C) ≡ (X ≤ Y + C) ∧ (X ≠ Y + C)

3. (X > Y + C) ≡ (Y ≤ X − C) ∧ (X ≠ Y + C)

4. (X = Y + C) ≡ (Y ≤ X − C) ∧ (X ≤ Y + C)

5. (X < C) ≡ (X ≤ C) ∧ (X ≠ C)

6. (X > C) ≡ (X ≥ C) ∧ (X ≠ C)

7. (X = C) ≡ (X ≤ C) ∧ (X ≥ C)

After these transformations, for all the inequalities of the form X op Y + C, we have op ∈ {≤, ≠}, and for all the inequalities of the form X op C, op ∈ {≤, ≠, ≥} (X op Y is a special form of X op Y + C where C = 0).
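The two-variable transformations (rules 1 to 4) amount to a small rewriting table. The sketch below is an illustration only: the tuple encoding `(a, rel, b, d)`, read as `a rel b + d`, and the function name `normalize` are assumptions of this sketch, and the constant-only forms of rules 5 to 7 are not handled.

```python
def normalize(x, op, y, c):
    """Rewrite `x op y + c` using only '<=' and '!='.

    Each result tuple (a, rel, b, d) stands for `a rel b + d`.
    """
    le = (x, "<=", y, c)
    ge = (y, "<=", x, -c)   # x >= y + c  is the same as  y <= x - c
    ne = (x, "!=", y, c)
    table = {
        "<=": [le],
        "!=": [ne],
        ">=": [ge],
        "<": [le, ne],
        ">": [ge, ne],
        "=": [ge, le],
    }
    return table[op]


print(normalize("X", ">", "Y", 4))
```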

5.1.1 Satisfiability

For determining the satisfiability of a conjunctive query S, a directed weighted graph $G_S = (V_S, E_S)$ is built where $V_S$ is the set of variables in S, and there is a directed edge from X to Y with weight C in $E_S$ if and only if (X < Y + C) ∈ S. Inequalities of the form (X < C) are transformed to the form (X < $V_0$ + C) by introducing a dummy variable $V_0$. The following results are proven in [7]: If there is a negative weighted cycle, i.e., a cycle for which the sum of the weights of its edges is negative, then S is unsatisfiable. If all the cycles are positive weighted, then S is satisfiable. For the case that there are zero weighted cycles, the necessary and sufficient condition for satisfiability is that for any two variables X and Y on the same cycle, if the path from X to Y has a cost C, then (X ≠ Y + C) ∉ S. As shown in [7], this algorithm has time complexity $O(|S| + n^3)$ where |S| is the number of inequalities in S and n is the number of variables (the size of $V_S$). The following example clarifies how the algorithm works:

Example 11 Assume that we want to find out if $\theta_{jk}$ is zero or not where the two pattern elements $p_j$ and $p_k$ are as follows:

$p_j$ = (X < Y + 4) ∧ (Y < Z)

$p_k$ = (Z < X + 2) ∧ (X < 6) ∧ (Z > 7)

To see if $p_j \wedge p_k$ is satisfiable or not, we first build a graph for $p_j \wedge p_k$ as in Figure 5.1. There are two cycles in the graph. Cycle XYZX has weight 6 and cycle X$V_0$ZX has weight 1. Since there are no negative weighted cycles, $p_j \wedge p_k$ is satisfiable and the value of $\theta_{jk}$ is not zero.
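The cycle weights of Example 11 can be checked with an all-pairs shortest-path computation. The sketch below uses the Floyd-Warshall recurrence, which is a technique chosen for this illustration and not necessarily the procedure of [7]; the edge list encodes the five inequalities of the example (with Z > 7 rewritten as $V_0$ < Z − 7), and the function name `min_cycle_weights` is hypothetical. It only covers the positive and negative cycle cases, not the additional test required for zero weighted cycles.

```python
INF = float("inf")

# Edges of Example 11: X < Y + 4, Y < Z, Z < X + 2, X < V0 + 6, V0 < Z - 7.
EDGES = [("X", "Y", 4), ("Y", "Z", 0), ("Z", "X", 2),
         ("X", "V0", 6), ("V0", "Z", -7)]


def min_cycle_weights(edges):
    """Weight of the lightest cycle through each node (INF if none)."""
    nodes = sorted({v for a, b, _ in edges for v in (a, b)})
    dist = {a: {b: INF for b in nodes} for a in nodes}
    for a, b, w in edges:
        dist[a][b] = min(dist[a][b], w)
    # Floyd-Warshall; the diagonal starts at INF so dist[v][v] ends up
    # holding the lightest closed walk through v.
    for k in nodes:
        for a in nodes:
            for b in nodes:
                if dist[a][k] + dist[k][b] < dist[a][b]:
                    dist[a][b] = dist[a][k] + dist[k][b]
    return {v: dist[v][v] for v in nodes}


weights = min_cycle_weights(EDGES)
print(weights)
print("negative cycle" if min(weights.values()) < 0 else "no negative cycle")
```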

5.1.2 Implication

The implication problem takes two queries S and T and determines if S implies T. S and T are assumed to be conjunctions of inequalities of the form X op Y + C. For the inequalities of type X op C, a dummy variable $V_0$ is defined that can take only the value zero, and the inequality is transformed to X op $V_0$ + C. It can be proven [7] that under this transformation the implication problem does not change. The algorithm starts by introducing the closure of S, i.e., a complete

46

-

8/8/2019 Thesis Sadri

61/108

X

ZY

V0

4

6

-7

0

2

X

ZY

V0

4

6

-7

0

2

Figure 5.1: Directed weighted graph for determining the satisfiability of a set of

inequalities

47

-

8/8/2019 Thesis Sadri

62/108

set that contains all the inequalities implied by S. Then, T is implied by S Iff

T is a subset of closure of S. Since the number of inequalities implied by S

is boundless, a set called modulo closure of S is defined that contains only non

redundant inequalities that belong to closure of S. For example if Y < X + C1

is in closure of S, for any C2 > C1, inequality Y < X + C2 would be a redundant

member of closure of S. The modulo closure of S can be computed by applying

the following set of axioms to S:

A1. $X \le X + 0$;

A2. $X \ne Y + C$ implies $Y \ne X - C$, where Y and X are distinct variables;

A3. $X \le Y + C$ and $Y \le V + C'$ imply $X \le V + C + C'$;

A4. $X \le W + C_1$, $W \le Y + C_2$, $X \le Z + C_3$, $Z \le Y + C_4$, $W \ne C + Z$, and $C = C_3 - C_1 = C_2 - C_4$ imply $X \ne Y + C_1 + C_2$, where X and Y are distinct variables. Also, Z and W are distinct variables.

GSW proves that the size of the modulo closure, called $S_{closure}$, is finite and that calculating it has time complexity $O(|S|\,n^2)$. After calculating $S_{closure}$, S implies T if and only if S is unsatisfiable or:

for every $(X \le Y + C) \in T$, there exists $(X \le Y + C_0) \in S_{closure}$ such that $C_0 \le C$; and

for any $(X \ne Y + C) \in T$,

1. $(X \ne Y + C) \in S_{closure}$, or

2. there exists $(X \le Y + C_1) \in S_{closure}$ such that $C_1 < C$, or

3. there exists $(Y \le X + C_2) \in S_{closure}$ such that $C_2 < -C$.
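The implication test can be sketched as follows. This is a simplified illustration, not the GSW algorithm: the `<=` part of the closure is computed as all-pairs shortest paths (which corresponds to axioms A1 and A3), `!=` facts are only closed under A2, and axiom A4 is deliberately omitted, so a `False` answer for a `!=` target is inconclusive. The function names and the tuple encoding `(x, y, c)` are assumptions of this sketch, and a real-valued domain is assumed.

```python
from itertools import product

INF = float("inf")


def le_closure(variables, s_le):
    """Tightest c with x <= y + c derivable from A1 and A3."""
    d = {(u, v): 0 if u == v else INF
         for u, v in product(variables, repeat=2)}
    for x, y, c in s_le:
        d[(x, y)] = min(d[(x, y)], c)
    for k, i, j in product(variables, repeat=3):
        d[(i, j)] = min(d[(i, j)], d[(i, k)] + d[(k, j)])
    return d


def implies(variables, s_le, s_ne, t_le, t_ne):
    """Return True when S (s_le, s_ne) is shown to imply T (t_le, t_ne)."""
    d = le_closure(variables, s_le)
    # Axiom A2: x != y + c also gives y != x - c.
    ne = set(s_ne) | {(y, x, -c) for x, y, c in s_ne}
    # An unsatisfiable S implies every T.
    unsat = (any(d[(v, v)] < 0 for v in variables)
             or any(d[(x, y)] == c and d[(y, x)] == -c for x, y, c in ne))
    if unsat:
        return True
    # Every '<=' target needs a bound in the closure that is at least as tight.
    if any(d[(x, y)] > c for x, y, c in t_le):
        return False
    # Every '!=' target needs condition 1, 2, or 3 from the text.
    return all((x, y, c) in ne or d[(x, y)] < c or d[(y, x)] < -c
               for x, y, c in t_ne)


variables = ["X", "Y", "Z"]
s_le = [("X", "Y", 1), ("Y", "Z", 2)]   # X <= Y + 1 and Y <= Z + 2
print(implies(variables, s_le, [], [("X", "Z", 5)], []))
print(implies(variables, s_le, [], [("X", "Z", 2)], []))
```

Here S yields $X \le Z + 3$, so the target $X \le Z + 5$ is implied while $X \le Z + 2$ is not.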

This step takes O(|T|) [7]; therefore, the complexity of the whole algorithm is $O(|S|\,n^2 + |T|)$. While the GSW algorithm is sufficient to handle the examples listed so far, a minor extension is needed to handle the next query, Example 13. In this query, inequalities have the form $X \; op \; C \cdot Y$. We therefore introduce a new variable $Z = X/Y$ and use $Z \; op \; C$, given that the domain of Y is the positive numbers (stock prices). Even though the GSW algorithm covers a broad range of queries, it is still limited, since it only covers conjunctive queries of very restricted forms. In the next section we consider disjunctive patterns.

5.2 Calculating $\theta$ and $\phi$ for Disjunctive Pattern Elements

We next discuss the problem of how to determine the $\theta$ and $\phi$ matrices for conjunctive patterns that include disjunctive terms. Assume we have the pattern $P = p_1, p_2, p_3, \ldots, p_m$, where $p_j = p_{ja} \lor p_{jb}$ for some $1 \le j \le m$. We can first calculate the implication and satisfiability relations between $p_{ja}$ and $p_{jb}$ and every other element of the pattern P using the methods described in the previous sections. For every k where $1 \le k < j$, we need to calculate $\theta_{jk}$, i.e., we must calculate the logical value of $p_j \Rightarrow p_k$. If $p_{ja} \Rightarrow p_k$ and $p_{jb} \Rightarrow p_k$ are true, then $p_j \Rightarrow p_k$ is true and the value of $\theta_{jk}$ is 1. In a similar way, if $p_{ja} \Rightarrow \neg p_k$ and $p_{jb} \Rightarrow \neg p_k$, then $\theta_{jk}$ is 0. In other cases, the information available is not enough and we need to set the value of $\theta_{jk}$ to U. For each value k where $j < k \le m$, we need to calculate the truth value of $p_k \Rightarrow p_j$. In this case, if either $p_k \Rightarrow p_{ja}$ or $p_k \Rightarrow p_{jb}$ is always true, then $p_k \Rightarrow p_j$ is true and the value of $\theta_{kj}$ is 1. Also, since $\neg p_j = \neg(p_{ja} \lor p_{jb}) = \neg p_{ja} \land \neg p_{jb}$, if $p_k \Rightarrow \neg p_{ja}$ and $p_k \Rightarrow \neg p_{jb}$ are both true, then we can conclude that $p_k \Rightarrow \neg p_j$ is true and $\theta_{kj}$ is 0.

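The same arguments can be used for calculating $\phi$. For calculating $\phi_{jk}$ when k < j, we want to see whether $\neg p_j \Rightarrow p_k$ is always true. Since $\neg p_j = \neg(p_{ja} \lor p_{jb}) = \neg p_{ja} \land \neg p_{jb}$, if $\neg p_{ja} \Rightarrow p_k$ or $\neg p_{jb} \Rightarrow p_k$, then we can conclude that $\phi_{jk}$ is one.

k < j    $\theta_{ja,k} = 1 \land \theta_{jb,k} = 1 \Rightarrow \theta_{jk} = 1$
         $\theta_{ja,k} = 0 \land \theta_{jb,k} = 0 \Rightarrow \theta_{jk} = 0$
         else $\theta_{jk} = U$
         $\phi_{ja,k} = 1 \lor \phi_{jb,k} = 1 \Rightarrow \phi_{jk} = 1$
         $\phi_{ja,k} = 0 \lor \phi_{jb,k} = 0 \Rightarrow \phi_{jk} = 0$
         $\phi_{ja,k} = U \land \phi_{jb,k} = U \Rightarrow \phi_{jk} = U$
k > j    $\theta_{k,ja} = 1 \lor \theta_{k,jb} = 1 \Rightarrow \theta_{kj} = 1$
         $\theta_{k,ja} = 0 \land \theta_{k,jb} = 0 \Rightarrow \theta_{kj} = 0$
         else $\theta_{kj} = U$
         $\phi_{k,ja} = 1 \lor \phi_{k,jb} = 1 \Rightarrow \phi_{kj} = 1$
         $\phi_{k,ja} = 0 \land \phi_{k,jb} = 0 \Rightarrow \phi_{kj} = 0$
         else $\phi_{kj} = U$

Table 5.1: Logic matrix elements for disjunction of pattern elements.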

Table 5.1 summarizes the different possibilities for $\theta$ and $\phi$. In this table, by $\theta_{ja,k}$ we mean the value of $\theta_{jk}$ if we replaced $p_j$ with $p_{ja}$. The same notational conventions are used for $\phi_{ja,k}$ and $\theta_{k,ja}$. When we calculate $\theta_{jk}$ and both $p_j$ and $p_k$ are disjunctive elements, we can first decompose one of the elements, use the technique just described for calculating $\theta$, and then combine the results. Obviously, we can use the same technique for calculating $\phi$. Furthermore, even though we only used two terms in the disjunctive elements, the number of terms in the disjunctions does not have to be limited to two. As an example of disjunctive elements, let us consider the following SQL-TS query:

Example 12 Calculate $\theta$ and $\phi$ for the following query:

SELECT X.NEXT.date, X.NEXT.price,
       S.previous.date, S.previous.price
FROM djia
SEQUENCE BY date
AS (X, *Y, Z, *T, U)
WHERE X.price > 0.98 * X.previous.price
  AND Y.price < 0.98 * Y.previous.price
  AND (0.98 * Z.previous.price < Z.price OR Z.price > 50)
  AND T.price > 1.02 * T.previous.price
  AND (0.98 * U.previous.price < U.price OR U.price > 50)

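This can be represented as a pattern sequence P:

$P = p_1, p_2, p_3, p_4, p_5$, where:

$p_1(t) = \text{t.price} > 0.98 \cdot \text{t.previous.price}$

$p_2(t) = \text{t.price} < 0.98 \cdot \text{t.previous.price}$

$p_3(t) = (\text{t.price} > 0.98 \cdot \text{t.previous.price}) \lor (\text{t.price} > 50)$

$p_4(t) = \text{t.price} < 0.98 \cdot \text{t.previous.price}$

$p_5(t) = (\text{t.price} > 0.98 \cdot \text{t.previous.price}) \lor (\text{t.price} > 50)$

We can break $p_3$ and $p_5$ into $p_3 = p_{3a} \lor p_{3b}$ and $p_5 = p_{5a} \lor p_{5b}$, where:

$p_{3a}(t) = p_{5a}(t) = \text{t.price} > 0.98 \cdot \text{t.previous.price}$ and

$p_{3b}(t) = p_{5b}(t) = \text{t.price} > 50$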

As an example, in order to calculate $\theta_{32}$, notice that $p_{3a}(t) \Rightarrow p_2(t)$, $p_{3b}(t) \Rightarrow p_2(t)$, and $p_{3b}(t) \Rightarrow \neg p_2(t)$ are not equivalent to true; however, $p_{3a}(t) \Rightarrow \neg p_2(t)$ is equivalent to true. In other words, $\theta_{3a,2} = 0$ and $\theta_{3b,2} = U$; therefore, $\theta_{32} = U$. On the other hand, to calculate $\theta_{43}$, we have $\theta_{4,3a} = 0$ and $\theta_{4,3b} = U$; therefore $\theta_{43} = 0$. In conclusion we obtain the following matrix:

$$P = \begin{bmatrix} 1 & & & & \\ 0 & 1 & & & \\ 0 & 0 & 1 & & \\ 0 & 0 & 0 & 1 & \\ 0 & 0 & 1 & 0 & 1 \end{bmatrix}$$
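The combination rules for a two-term disjunction $p_j = p_{ja} \lor p_{jb}$ can be written as three small functions. This is a sketch following the argument in the text; the function names and the use of the string "U" for the unknown value are assumptions of this illustration, and the rules for the 0 entries of $\phi$ are derived by the same reasoning as the rules stated explicitly for $\theta$.

```python
U = "U"  # the "unknown" value of a logic matrix entry


def theta_disjunct_as_antecedent(a, b):
    """theta_jk for k < j, given theta_{ja,k} and theta_{jb,k}."""
    if a == 1 and b == 1:
        return 1
    if a == 0 and b == 0:
        return 0
    return U


def phi_disjunct_as_antecedent(a, b):
    """phi_jk for k < j, given phi_{ja,k} and phi_{jb,k}.

    The negated disjunction is a conjunction, so one term suffices.
    """
    if a == 1 or b == 1:
        return 1
    if a == 0 or b == 0:
        return 0
    return U


def disjunct_as_consequent(a, b):
    """theta_kj or phi_kj for k > j, given the entries for p_ja and p_jb."""
    if a == 1 or b == 1:
        return 1
    if a == 0 and b == 0:
        return 0
    return U


# theta_32 from Example 12: theta_{3a,2} = 0 and theta_{3b,2} = U.
print(theta_disjunct_as_antecedent(0, U))
```

For disjunctions with more than two terms, the same functions can be applied repeatedly, combining one additional term at a time.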

CHAPTER 6

Experimental Results

In order to measure performance, we count the number of times that input elements are tested against pattern elements. In our experiments, we measured speedups ranging from the modest accelerations obtained for simple search patterns, such as that of Figure 3.4, to speedups of more than two orders of magnitude for the more complex patterns used in practical applications. For instance, a common search in stock market data analysis is for a double-bottom pattern, where the stock price has two consecutive local minima. We searched the closing price of the DJIA (Dow Jones Industrial Average) over the last 25 years for relaxed double-bottoms. By a relaxed double-bottom we mean a local maximum surrounded by two local minima, where we only consider increases or decreases of more than 2%. In other words, if the price moves less than 2%, we consider it as if it has not changed (Figure 6.1).
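The performance metric can be made concrete with a small counting harness. The sketch below is only an assumed baseline, not the code used in the experiments: it runs a naive search for a fixed-length pattern (no star elements) and counts every test of an input element against a pattern element. The names `naive_search`, `up`, and `down` and the sample prices are hypothetical.

```python
def naive_search(seq, pattern):
    """Return (match start positions, number of predicate tests)."""
    tests = 0
    matches = []
    for start in range(len(seq) - len(pattern) + 1):
        for offset, pred in enumerate(pattern):
            tests += 1  # one input element tested against one pattern element
            if not pred(seq, start + offset):
                break
        else:
            matches.append(start)
    return matches, tests


def up(seq, i):
    """True when the value at position i is above its predecessor."""
    return i > 0 and seq[i] > seq[i - 1]


def down(seq, i):
    """True when the value at position i is below its predecessor."""
    return i > 0 and seq[i] < seq[i - 1]


prices = [10, 11, 12, 11, 12, 13]
matches, tests = naive_search(prices, [up, up, down])
print(matches, tests)
```

Under this metric, a speedup would be the ratio between the test count of such a baseline and the test count of the optimized search on the same input.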

Example 13 expresses the relaxed double-bottom pattern in SQL-TS; Z, U, and W represent the areas where changes are less than 2% and the curve is considered approximately flat (Figure 6.1). For the relaxed double-bottom query,