Tesserae: addressing scalability & flexibility concerns



Chris Eberle

Background

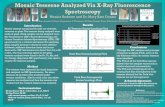

Tesserae: a linguistics project to compare intertextual similarities. Collaboration between the University at Buffalo and UCCS.

Live version at http://tesserae.caset.buffalo.edu/

Source code at https://github.com/tesserae/tesserae


Background

The good: Well-designed, proven, robust algorithm

See “Intertextuality in the Digital Age” by Neil Coffee, J.-P. Koenig, Shakthi Poornima, Roelant Ossewaarde, Christopher Forstall, and Sarah Jacobson

See “The Tesserae Project: intertextual analysis of Latin poetry” by Neil Coffee, Jean-Pierre Koenig, Shakthi Poornima, Christopher W. Forstall, Roelant Ossewaarde and Sarah L. Jacobson

Simple website, intuitive operations, meaningful scores (user friendly)

Multi-language support. Large corpus (especially Latin).

Background

The bad: Perl outputs PHP, which outputs HTML. Deployments are error-prone (Perl scripts must be hand-edited).

The ugly: data and display layers are mixed together.

Custom file formats: Perl nested dictionaries are serialized to external text files, which is slow.

Results must be partially pre-computed: statistics are pre-computed at ingest time, and text-vs-text comparisons are done all at once, in memory, with results written to disk and paginated by another script. Searches therefore represent a “snapshot in time”, not a live search.

No online ingest: everything happens offline, involving multiple scripts to massage incoming data.

Only one text can be compared to another; there are no per-section, per-paragraph, per-line, or per-author comparisons.

Goals

Tesserae-NG: the next generation of Tesserae

Performance: use live caches and lazy computation where appropriate, with no more bulk computation. Make certain operations threaded or parallel.

Scalability: use a proven storage backend (Solr) rather than custom binary formats. Use industry-standard practices to separate data and display, allowing for clustering, load-balancing, caching, and horizontal scaling as necessary. Make all operations as parallel as possible.

Flexibility: use Solr’s extensible configuration to support more advanced, flexible searches (more than simple “Text A” vs. “Text B” searches).

Ease of deployment: create a virtual environment that anyone can easily use to stand up their own instance.

User interface: create a modern, user-friendly interface that both improves on the original design AND gives administrators web-based tools to manage their data.

Goals

In short: rewrite Tesserae to address scalability and flexibility concerns

(with a secondary focus on ease of development and a nicer UI)

Architecture

Frontend: Django-powered website with an online uploader.

Middleware: asynchronous ingest engine that keeps the frontend responsive.

Backend: Solr-powered database for data storage and search.

Architecture: Frontend

Powered by Django, jQuery, Twitter Bootstrap, and Haystack.

Simple MVC paradigm with separation of concerns (no more data logic in the frontend). Nice template engine, free admin interface, and free input filtering and forgery protection.

Responsive, modern HTML5 UI thanks to jQuery and Twitter Bootstrap. Python-based, modular, and well-documented. Solr searches are very easy thanks to Haystack.

Scalability is provided by uWSGI and Nginx. The interpreter is only started once; bytecode is cached and kept alive. Scaling across multiple cores or multiple machines is automatic. Static content isn’t handled by Python at all, so serving it is now very cheap.

Architecture: Middleware

Celery accepts texts to ingest. Each text is split into 100-line chunks, which are distributed among workers. Each worker translates its chunk into something Solr can ingest and makes the required ingest call to Solr.

Highly parallel and fairly robust: interrupted jobs are automatically re-run. This ensures that large texts ingested from the frontend can’t degrade the frontend experience. RabbitMQ queues up any unprocessed texts.
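The chunking step can be sketched in plain Python. This is a minimal sketch, not the project's code: the job fields (`text_id`, `chunk`, `first_line`, `lines`) are hypothetical, and in a Celery deployment each job would be handed to a task (for example through a hypothetical `ingest_chunk.delay(job)`) instead of being returned as a list.

```python
from typing import Dict, Iterator, List

CHUNK_SIZE = 100  # lines per chunk, as described above


def chunk_lines(lines: List[str], size: int = CHUNK_SIZE) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` lines."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(lines), size):
        yield lines[start:start + size]


def make_jobs(text_id: str, lines: List[str], size: int = CHUNK_SIZE) -> List[Dict[str, object]]:
    """Describe one ingest job per chunk; a worker would turn each job into a Solr ingest call."""
    jobs = []
    for index, chunk in enumerate(chunk_lines(lines, size)):
        jobs.append({
            "text_id": text_id,
            "chunk": index,
            "first_line": index * size + 1,  # 1-based number of the chunk's first line
            "lines": chunk,
        })
    return jobs
```

A 250-line text yields three jobs of 100, 100, and 50 lines. Because each job carries its own `first_line`, workers can process chunks in any order, or re-run an interrupted one, and still index correct line numbers.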

Architecture: Backend

Apache Solr for storage and search: a proven, fast, efficient search engine, well suited to large quantities of text. Storage is efficient, well tested, easily cacheable, and scales well.

Flexible schema configuration supports any kind of query we wish to perform on the data.

Solr does not have a built-in text-vs-text comparison tool, so a custom Solr plugin was written to provide one, based on the original Tesserae algorithm.

Tomcat serves as the application container; a load-balanced cluster can quickly be created if the need arises.

Architecture: Other concerns

Web-based ingest is tedious for batch jobs. Command-line tools are provided to ingest large quantities of texts for the initial setup (use of these tools is optional).

Solr’s storage engine can’t or won’t handle some of the metadata that the current Tesserae format expects (e.g. per-text frequency data). A secondary key-value database (LevelDB, which offers very fast lookups) sits to the side to store this extra information.

Tesserae’s CSV-based lexicon database is too slow and won’t fit into memory. An offline, one-time transformer ingests the CSV file into a LevelDB database that is quicker to read.

Metrics: where are the slow points? Carbon / Graphite collects metrics, both stack-wide and in-code.

Texts may also need to be accessed directly (view-only mode, no search). PostgreSQL provides simple storage for this.
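The one-time CSV-to-key-value transformation might look roughly like the sketch below. It assumes, hypothetically, that each CSV row holds an inflected form followed by one or more stems, and it uses a plain dict as a stand-in for LevelDB; with a real LevelDB binding the same keys and values would be written as bytes. Storing both directions (form to stems, stem to forms) lets a single lookup answer each question the ingest step asks.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def build_lexicon(rows: Iterable[List[str]]) -> Dict[str, str]:
    """Flatten (form, stem, stem, ...) rows into key-value pairs.

    Keys are prefixed so both directions fit in one store:
      "form:<form>" -> comma-separated stems of that form
      "stem:<stem>" -> comma-separated forms of that stem
    """
    form_to_stems: Dict[str, Set[str]] = defaultdict(set)
    stem_to_forms: Dict[str, Set[str]] = defaultdict(set)
    for row in rows:
        if len(row) < 2:
            continue  # skip blank or malformed rows
        form = row[0].strip().lower()
        for stem in (s.strip().lower() for s in row[1:]):
            if form and stem:
                form_to_stems[form].add(stem)
                stem_to_forms[stem].add(form)

    store: Dict[str, str] = {}
    for form, stems in form_to_stems.items():
        store["form:" + form] = ",".join(sorted(stems))
    for stem, forms in stem_to_forms.items():
        store["stem:" + stem] = ",".join(sorted(forms))
    return store


def build_lexicon_from_csv_text(text: str) -> Dict[str, str]:
    """Convenience wrapper: parse CSV text, then build the key-value pairs."""
    return build_lexicon(csv.reader(io.StringIO(text)))
```

For example, the rows `cano,cano` and `canimus,cano` produce `"form:canimus" -> "cano"` and `"stem:cano" -> "canimus,cano"`.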

Architecture

Solr Plugin

There is no built-in capability in Solr to compare one document to another. Solr is a simple web wrapper with configuration files; it uses Lucene under the covers for all the heavy lifting. Lucene has no built-in support for comparisons either, but writing a Solr wrapper to do this is possible.

Solr Plugin: Design decisions

What will be searched? One whole document vs. another? Portions of a document vs. another? Actual text within a document? And what is a “document”: a text, or a volume of texts?

General approach: treat each line in the original text as its own document. This “minimal unit” is configurable at install time. Two “texts” are then assembled dynamically at runtime, based on whatever parameters the user wishes.

This makes it possible to compare two texts, two volumes, two authors, a single line vs. a whole text, a portion of a text vs. an entire author, and so on. Comparisons are limited only by the expressive power of Solr’s search syntax and by the schema.

Solr Plugin: Schema Example

| Author | Title | Volume | Line # | Text |
|---|---|---|---|---|
| Lucan | Bellum Civile | 1 | 1 | Bella per Emathios plus quam civilia campos |
| Lucan | Bellum Civile | 1 | 2 | Iusque datum sceleri canimus, populumque potentem |
| Lucan | Bellum Civile | 1 | 3 | In sua victrici conversum viscera dextra, |
| … | … | … | … | … |
| Vergil | Aeneid | 12 | 950 | hoc dicens ferrum adverso sub pectore condit |
| Vergil | Aeneid | 12 | 951 | fervidus. Ast illi solvuntur frigore membra |
| Vergil | Aeneid | 12 | 952 | vitaque cum gemitu fugit indignata sub umbras. |

Each row, in Solr parlance, is called a “document”. Strictly speaking, these are document fragments from the user’s perspective. Each “document” has a unique ID and can be addressed individually. At runtime, documents can be combined into two “pools”, which are compared to one another for similarity.
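To make the “pool” idea concrete, here is a small in-memory model. It is only an illustration: the `LineDoc` fields mirror the schema example, and the Python predicates stand in for the Solr queries the real plugin would run.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class LineDoc:
    """One Solr "document": a single line plus the metadata from the schema example."""
    doc_id: str
    author: str
    title: str
    volume: int
    line: int
    text: str


Query = Callable[[LineDoc], bool]  # stand-in for a Solr query string


def pool(corpus: Iterable[LineDoc], query: Query) -> List[LineDoc]:
    """Collect the line-documents matching `query`, in reading order."""
    matches = [doc for doc in corpus if query(doc)]
    return sorted(matches, key=lambda d: (d.author, d.title, d.volume, d.line))


def meta_document(docs: List[LineDoc]) -> str:
    """Join a pool back into one text (a "meta-document")."""
    return "\n".join(doc.text for doc in docs)
```

A “line 2 of Bellum Civile vs. all of Vergil” comparison then amounts to `pool(corpus, lambda d: d.title == "Bellum Civile" and d.line == 2)` against `pool(corpus, lambda d: d.author == "Vergil")`; changing the predicates changes the granularity without touching the stored documents.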

Solr Plugin: Ingest Logic

The plugin receives a batch of lines plus metadata. For each line, it does the following:

1. Split the line into words (done automatically by Solr’s tokenizer).
2. Normalize each word and look up its stem word in a Latin lexicon DB.
3. Look up all forms of the stem word in the DB.
4. Place the original word and all other forms of the word in the Solr index, encoding the form into each word so that it can be determined at search time which form it is. This allows the line to match no matter which form of a word is used.
5. Update a global (language-wide) frequency database with the original word and all other forms of the word.

Metadata is associated automatically, with no intervention required. The final “document” is stored and indexed by Solr, and term vectors are calculated automatically.
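A rough Python model of the per-line ingest steps follows. It is a sketch under several assumptions: the regex tokenizer stands in for Solr's tokenizer, normalization here is only lower-casing and stripping diacritics, the lexicon is passed in as two dicts, and the `form#original` packing is a hypothetical encoding, not necessarily the one the plugin uses.

```python
import re
import unicodedata
from collections import Counter
from typing import Dict, List, Set

SEP = "#"  # hypothetical separator between the indexed form and the word actually on the line


def normalize(word: str) -> str:
    """Lower-case and strip diacritics (e.g. macrons); real Latin rules may do more."""
    decomposed = unicodedata.normalize("NFD", word.lower())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def tokenize(line: str) -> List[str]:
    """Stand-in for Solr's tokenizer: runs of letters, punctuation dropped."""
    return re.findall(r"[^\W\d_]+", line)


def expand_forms(word: str, form_to_stems: Dict[str, Set[str]],
                 stem_to_forms: Dict[str, Set[str]]) -> Set[str]:
    """All forms that share a stem with `word`, including `word` itself."""
    forms = {word}
    for stem in form_to_stems.get(word, set()):
        forms |= stem_to_forms.get(stem, set())
    return forms


def ingest_line(line: str, form_to_stems: Dict[str, Set[str]],
                stem_to_forms: Dict[str, Set[str]], global_freq: Counter) -> List[str]:
    """Return the packed tokens to index for one line and update global frequencies."""
    tokens: List[str] = []
    for raw in tokenize(line):
        word = normalize(raw)
        for form in sorted(expand_forms(word, form_to_stems, stem_to_forms)):
            tokens.append(form + SEP + word)  # indexed form, plus the original it came from
            global_freq[form] += 1  # the original word and every other form are counted
    return tokens
```

Indexing every form means a query containing *cano* also matches a line that reads *canimus*, while the part after `#` lets the search step recover which word actually appeared on the line.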

Solr Plugin: Search Logic

Take in two queries from the user: a source query and a target query.

Gather together the Solr documents that match each query. Both result sets are collected in parallel, as the “source set” and the “target set”, and each is treated as one large meta-document.

Frequency statistics are built dynamically for each meta-document, and a stop-list is constructed dynamically from global statistics. Global statistics must persist from one run to the next, so they live in an external DB; since they don’t change from one search to the next, they are cached.
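The preparation phase (collecting both result sets in parallel, counting terms per meta-document, and caching a stop-list derived from global statistics) can be sketched as follows. `fetch` is a hypothetical callable standing in for a Solr query, `GLOBAL_FREQ` stands in for the external statistics DB, and the stop-list size of 3 is arbitrary, chosen only to keep the example small.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache
from typing import Callable, FrozenSet, List, Tuple

MetaDoc = List[List[str]]  # a meta-document: a list of tokenized lines

# Hypothetical stand-in for the external global-statistics database.
GLOBAL_FREQ = {
    "latin": Counter({"et": 500, "in": 400, "que": 350, "non": 300, "arma": 12, "cano": 9}),
}


def gather(fetch: Callable[[str], MetaDoc], source_q: str, target_q: str) -> Tuple[MetaDoc, MetaDoc]:
    """Run the source and target queries concurrently."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        source_future = pool.submit(fetch, source_q)
        target_future = pool.submit(fetch, target_q)
        return source_future.result(), target_future.result()


def term_counts(meta_doc: MetaDoc) -> Counter:
    """Frequency statistics for one meta-document, rebuilt for every search."""
    return Counter(word for line in meta_doc for word in line)


@lru_cache(maxsize=32)
def stop_list(language: str, size: int = 3) -> FrozenSet[str]:
    """The `size` most frequent words globally; cached because global stats rarely change."""
    return frozenset(word for word, _ in GLOBAL_FREQ[language].most_common(size))
```

Because `stop_list` is memoized, repeated searches in the same language skip the statistics lookup entirely, whereas `term_counts` has to run again for every new pair of meta-documents.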

Run the core Tesserae algorithm on the two meta-documents:

1. Compare all vs. all, keeping only line pairs that share 2 or more terms. Words found in the stop-list above are ignored.
2. Calculate the distance for each pair, and throw away distances above some threshold.
3. Calculate a score based on distance and frequency statistics.
4. Order results by this final score (high to low).
5. Format the results, trying to determine which words need highlighting.
6. Stream the results to the caller (pagination is automatic thanks to Solr).
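The all-vs-all scoring loop can be sketched like this. The formula is an assumption modeled on the published Tesserae description (the natural log of the summed inverse frequencies of the shared words, divided by the combined distance between the two rarest shared words in each line). The exact distance metric, the use of relative frequencies within each meta-document, and the default threshold of 10 are illustrative choices, not taken from the plugin's source; highlighting and streaming are omitted.

```python
import math
from collections import Counter
from typing import Dict, FrozenSet, List, Set, Tuple

Line = List[str]  # a tokenized line-document


def relative_freq(meta_doc: List[Line]) -> Dict[str, float]:
    """Relative frequency of each word within one meta-document."""
    counts = Counter(word for line in meta_doc for word in line)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def rare_pair_distance(line: Line, shared: Set[str], freq: Dict[str, float]) -> int:
    """Distance between the first occurrences of the two rarest shared words in `line`."""
    first_pos: Dict[str, int] = {}
    for pos, word in enumerate(line):
        if word in shared and word not in first_pos:
            first_pos[word] = pos
    rarest = sorted(first_pos, key=lambda w: (freq[w], w))[:2]  # ties broken alphabetically
    return abs(first_pos[rarest[0]] - first_pos[rarest[1]])


def score_pairs(source: List[Line], target: List[Line], stop: FrozenSet[str],
                max_distance: int = 10) -> List[Tuple[float, int, int]]:
    """All-vs-all comparison; returns (score, source_index, target_index), best first."""
    f_src, f_tgt = relative_freq(source), relative_freq(target)
    results = []
    for i, s_line in enumerate(source):
        for j, t_line in enumerate(target):
            shared = (set(s_line) & set(t_line)) - stop
            if len(shared) < 2:  # keep only pairs sharing 2+ non-stop terms
                continue
            distance = (rare_pair_distance(s_line, shared, f_src)
                        + rare_pair_distance(t_line, shared, f_tgt))
            if distance > max_distance:  # discard pairs whose matches are too spread out
                continue
            weight = (sum(1 / f_src[w] for w in s_line if w in shared)
                      + sum(1 / f_tgt[w] for w in t_line if w in shared))
            results.append((math.log(weight / distance), i, j))
    results.sort(key=lambda r: r[0], reverse=True)  # high to low
    return results
```

The two nested loops are exactly the all-vs-all step, so the cost grows with the product of the two meta-documents' line counts.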

Solr Plugin: Flexible Query Language

Compare “Bellum Civile” with the “Aeneid” (all volumes):
http://solrhost:8080/solr/latin?tess.sq=title:Bellum%20Civile&tess.tq=title:Aeneid

Compare line 6 of “Bellum Civile” with all of Vergil’s works:
http://solrhost:8080/solr/latin?tess.sq=title:Bellum%20Civile%20AND%20line:6&tess.tq=author:Vergil

Compare line 3 of Aeneid part 1 with line 10 of Aeneid part 1:
http://solrhost:8080/solr/latin?tess.sq=title:Aeneid%20AND%20volume:1%20AND%20line:3&tess.tq=title:Aeneid%20AND%20volume:1%20AND%20line:10

Solr provides a rich query language, so most queries are easily supported: https://wiki.apache.org/solr/SolrQuerySyntax
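Building these URLs by hand is error-prone. A small helper using Python's standard `urllib.parse` reproduces the encoding shown above (spaces as `%20`, colons left as-is). The host and core name come from the examples; the helper itself is hypothetical.

```python
from urllib.parse import quote, urlencode

SOLR_CORE_URL = "http://solrhost:8080/solr/latin"  # host and core taken from the examples above


def comparison_url(source_query: str, target_query: str, base: str = SOLR_CORE_URL) -> str:
    """Build a comparison URL from a source query (tess.sq) and a target query (tess.tq)."""
    params = {"tess.sq": source_query, "tess.tq": target_query}
    # quote (rather than the default quote_plus) encodes spaces as %20; safe=":" keeps colons readable
    return base + "?" + urlencode(params, safe=":", quote_via=quote)
```

With Solr's standard query parser, `title:Bellum Civile` applies the `title` field only to `Bellum` (the second word is searched in the default field); quoting the phrase, as in `title:"Bellum Civile"`, is the usual way to require the full title, although how the plugin parses `tess.sq` and `tess.tq` is not shown here.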

Solr Plugin: Difficulties

Solr is optimized for text search, not text comparison. Bulk reads of too many documents can be very slow because the index isn’t used. Rather than loading the actual documents, the plugin uses an experimental feature called “Term Vectors”, which store frequency information for each row directly in the index. Term vectors are used exclusively until the actual document is needed.

The meta-document approach makes it impossible to pre-compute statistics, and calculating them at runtime is somewhat costly. A cache partially mitigates this problem for related searches.

The original Tesserae has a multi-layered index: actual word + location -> stemmed word + all other forms. This allows the engine to decide which word form to use at each stage of the search. Solr is flat: word + location.

The above hierarchy had to be “faked” by packing extra information into each word. This means each word must still be split apart and parsed, which can be slow for large document collections. Fixing this would require a custom Solr storage engine (yes, this is possible; Solr is very pluggable), as well as a custom Term Vector implementation (also possible).

Easy deployment: Vagrant

Many components, a complicated build process, multiple languages, and dozens of configuration files: this needs to be easy to deploy, or no one will use it.

Solution: Vagrant. A Linux image was created by hand with some pre-installed software (Java, Tomcat, Postgres, Maven, Ant, Sbt, Nginx, Python, Django, RabbitMQ, etc.). All code, setup scripts, and configuration are stored in git. The Linux image is downloaded and provisioned automatically, and the custom software and configuration are laid down on it. All services are then started automatically, and the base corpora are ingested.

The entire deployment boils down to one command: vagrant up. Average deployment time: 10 minutes.

This encourages more participation (a lower barrier to entry).

The final product

Step 1: Clone the project.

Step 2: vagrant up (automatic provisioning, install, configuration, and ingest).

Step 3: Search.

Live Demo

Results

Results are generated within a similar time frame to the original (a couple of seconds on average on one core). Scores are nearly identical (many thanks to Walter Scheirer and his team for their help translating and explaining the original algorithm, as well as testing the implementation).

Results are truly dynamic: there is no need to pre-compute or pre-sort, and no temporary or session files are used.

Related accesses are very fast (tens of milliseconds), faster than the original site. This is possible thanks to Solr’s ability to cache search results.

Scales very well: timings stay relatively constant regardless of how many other documents occupy the database (storage volume doesn’t impede speed). Searches can be made noticeably faster by deploying on a multi-core machine. The biggest factor determining speed is the size of the two “meta-documents”. The search can’t be made truly parallel, because each phase relies on the previous one being done.

Only data that will be displayed is actually transmitted, so no bandwidth is wasted per search.

Analysis

Success! Both primary and secondary goals were met. Single searches on single-core setups won’t see any improvement, but using multiple cores definitely improves speed.

All original simple-search functionality is intact, and new functionality has been added: sub/super-document comparisons via the custom plugin; single-document text search (a given with Solr); Solr multi-core support, which lets multiple instances of Solr run at the same time, allowing not only multiple languages but also multiple arbitrary configurations; online asynchronous ingest; search and storage caching; and web-based administration.

Because Solr runs on the JVM, there is no need to start a costly interpreter for every search; the JVM compiles the most-used pieces of code to near-native speed.

The original scoring algorithm is O(m*n) (a result of the all-vs-all comparison), so parallelism only helps so much.
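One widely used way to soften the O(m*n) cost, not something the talk describes, is to index the target lines by word so that only pairs sharing at least one non-stop word are ever examined. The sketch below is illustrative: `brute_force_pairs` mirrors the all-vs-all filter, and `candidate_pairs` returns the same pairs via an inverted index. The worst case is still O(m*n) (for example, when a very common word slips past the stop-list), but line pairs with no vocabulary in common are never touched.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Tuple

Line = List[str]  # a tokenized line-document


def brute_force_pairs(source: List[Line], target: List[Line], stop: FrozenSet[str],
                      min_shared: int = 2) -> List[Tuple[int, int]]:
    """The all-vs-all check: visits every one of the m*n pairs."""
    return sorted((i, j)
                  for i, s in enumerate(source)
                  for j, t in enumerate(target)
                  if len((set(s) & set(t)) - stop) >= min_shared)


def candidate_pairs(source: List[Line], target: List[Line], stop: FrozenSet[str],
                    min_shared: int = 2) -> List[Tuple[int, int]]:
    """Same result, but only pairs sharing at least one non-stop word are visited."""
    index: Dict[str, List[int]] = defaultdict(list)  # word -> target lines containing it
    for j, line in enumerate(target):
        for word in set(line) - stop:
            index[word].append(j)
    shared: Dict[Tuple[int, int], int] = defaultdict(int)
    for i, line in enumerate(source):
        for word in set(line) - stop:
            for j in index.get(word, ()):
                shared[(i, j)] += 1  # one more shared word for this pair
    return sorted(pair for pair, count in shared.items() if count >= min_shared)
```

Only the surviving pairs would then go on to the distance and scoring steps, so the savings depend on how sparse the shared vocabulary is.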

Conclusion

The results speak for themselves. It is unfortunate that Solr doesn’t have a built-in comparison endpoint, but writing one turned out to be necessary anyway: it is doubtful that a built-in endpoint would have a scoring scheme based on the original Tesserae algorithm. The Lucene API provided everything needed for this comparison, with very few “hacks” necessary.

This should provide the Tesserae team with a nice framework moving forward. It is easy to deploy, has a clean separation of concerns, and has a nice UI.

The frontend is a simple, scriptable MVC application written against a well-documented set of APIs.

The backend is robust and scales better than the Perl version. It uses a formal, type-checked, thread-safe, compiled language for the core algorithm and is also written against a well-documented set of APIs.

Rich batch tools are included.

Future work

UI frontend: add more advanced search types to the frontend; full UI management of ingested texts (view, update, delete); free-text search of available texts.

Solr backend: word highlighting (expensive right now); address the O(n*m) implementation of the core algorithm; refactor the code (a tad jumbled right now); address slow ingest speed; add support for index rebuilds.

Vagrant / installer: flesh out “automatic” corpora selection; multi-VM installer (automatic load balancing).

Further information

Source code at https://github.com/eberle1080/tesserae-ng

Documentation at https://github.com/eberle1080/tesserae-ng/wiki

Live version at http://tesserae-ng.chriseberle.net/

SLOC statistics: 3205 lines of Python, 3119 lines of Scala, 2034 lines of XML, 719 lines of Bash, 548 lines of HTML, 237 lines of Java.

Questions?