tdt4260


TDT 4260 – lecture 1 – 2011

Lasse Natvig

• Course introduction
– course goals
– staff
– contents
– evaluation
– web, ITSL
• Textbook
– Computer Architecture, A Quantitative Approach, Fourth Edition
• by John Hennessy & David Patterson (HP90 - 96 – 03) - 06
• Today: Introduction (Chapter 1)
– Partly covered

Course goal
• To get a general and deep understanding of the organization of modern computers and the motivation for different computer architectures. Give a base for understanding of research themes within the field.
• High level
• Mostly HW and low-level SW
• HW/SW interplay
• Parallelism
• Principles, not details
– inspire to learn more

Contents
• Computer architecture fundamentals, trends, measuring performance, quantitative principles. Instruction set architectures and the role of compilers. Instruction-level parallelism, thread-level parallelism, VLIW.
• Memory hierarchy design, cache. Multiprocessors, shared memory architectures, vector processors, NTNU/Notur supercomputers, distributed shared memory, synchronization, multithreading.
• Interconnection networks, topologies
• Multicores, homogeneous and heterogeneous, principles and product examples
• Green computing (introduction)
• Miniproject - prefetching

TDT-4260 / DT8803
• Recommended background
– Course TDT4160 Computer Fundamentals, or equivalent.
• http://www.idi.ntnu.no/emner/tdt4260/
– And It's Learning
• Friday 1215-1400
– And/or some Thursdays 1015-1200
– 12 lectures planned
– some exceptions may occur
• Evaluation
– Obligatory exercise (counts 20%). Written exam counts 80%. Final grade (A to F) given at end of semester. If there is a re-sit examination, the examination form may change from written to oral.

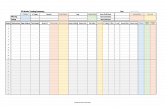

Lecture plan (subject to change)

Date and lecturer Topic

1: 14 Jan (LN, AI) Introduction, Chapter 1 / Alex: PfJudge
2: 21 Jan (IB) Pipelining, Appendix A; ILP, Chapter 2
3: 28 Jan (IB) ILP, Chapter 2; TLP, Chapter 3
4: 4 Feb (LN) Multiprocessors, Chapter 4
5: 11 Feb (MG(?)) Prefetching + Energy Micro guest lecture
6: 18 Feb (LN) Multiprocessors continued
7: 25 Feb (IB) Piranha CMP + Interconnection networks
8: 4 Mar (IB) Memory and cache, cache coherence (Chap. 5)
9: 11 Mar (LN) Multicore architectures (Wiley book chapter) + Hill Marty Amdahl multicore ... Fedorova ... asymmetric multicore ...
10: 18 Mar (IB) Memory consistency (4.6) + more on memory
11: 25 Mar (JA, AI) (1) Kongull and other NTNU and NOTUR supercomputers (2) Green computing
12: 1 Apr (IB/LN) Wrap up lecture, remaining stuff
13: 8 Apr Slack – no lecture planned

EMECS, new European Master's Course in Embedded Computing Systems


Preliminary reading list, subject to change!!!
• Chap.1: Fundamentals, sections 1.1 - 1.12 (pages 2-54)
• Chap.2: ILP, sections 2.1 - 2.2 and parts of 2.3 (pages 66-81), section 2.7 (pages 114-118), parts of section 2.9 (pages 121-127, stop at speculation), section 2.11 - 2.12 (pages 138 - 141). (Sections 2.4 - 2.6 are covered by similar material in our computer design course)
• Chap.3: Limits on ILP, section 3.1 and parts of section 3.2 (pages 154 - 159), section 3.5 - 3.8 (pages 172-185).
• Chap.4: Multiprocessors and TLP, sections 4.1 - 4.5, 4.8 - 4.10
• Chap.5: Memory hierarchy, section 5.1 - 5.3 (pages 288 - 315).
• App A: section A.1 (Expected to be repetition from other courses)
• Appendix E, interconnection networks, pages E2-E14, E20-E25, E29-E37 and E45-E51.
• App. F: Vector processors, sections F1 - F4 and F8 (pages F-2 - F-32, F-44 - F-45)
• Data prefetch mechanisms (ACM Computing Survey)
• Piranha, (To be announced)
• Multicores (New bookchapter) (To be announced)
• (App. D; embedded systems?) see our new course TDT4258 Mikrokontroller systemdesign

People involved

Lasse Natvig
Course responsible, [email protected]

Ian Bratt
Lecturer (Also at Tilera.com) [email protected]

Alexandru Iordan
Teaching assistant (Also PhD-student) [email protected]

http://www.idi.ntnu.no/people/

research.idi.ntnu.no/multicore

A few highlights:
- Green computing, 2xPhD + master students
- Multicore memory systems, 3 x PhD theses
- Multicore programming and parallel computing
- Cooperation with industry

Prefetching ---pfjudge

”Computational computer architecture”
• Computational science and engineering (CSE)
– Computational X, X = comp.arch.
• Simulates new multicore architectures
– Last level, shared cache fairness (PhD-student M. Jahre)
– Bandwidth aware prefetching (PhD-student M. Grannæs)
• Complex cycle-accurate simulators
– 80 000 lines C++, 20 000 lines python
– Open source, Linux-based
• Design space exploration (DSE)
– one dimension for each arch. parameter
– DSE sample point = specific multicore configuration
– performance of a selected set of configurations evaluated by simulating the execution of a set of workloads
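
The design space described above can be sketched as a Cartesian product of parameter values. This is only an illustration: the parameter names and values below (`cores`, `l2_size_kb`, `prefetcher`) are hypothetical and not taken from the course simulator.

```python
from itertools import product

# Hypothetical architecture parameters; each key is one dimension of the space.
PARAMETERS = {
    "cores": [2, 4, 8],
    "l2_size_kb": [512, 1024],
    "prefetcher": ["none", "sequential"],
}


def design_space(parameters):
    """Return every sample point, i.e. every specific configuration."""
    names = list(parameters)
    value_lists = (parameters[name] for name in names)
    return [dict(zip(names, values)) for values in product(*value_lists)]


if __name__ == "__main__":
    points = design_space(PARAMETERS)
    print(len(points), "configurations, first one:", points[0])
```

The number of sample points is the product of the dimension sizes, which is why only a selected set of configurations is normally simulated.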

Experiment Infrastructure
• Stallo compute cluster
– 60 Teraflop/s peak
– 5632 processing cores
– 12 TB total memory
– 128 TB centralized disk
– Weighs 16 tons
• Multi-core research
– About 60 CPU years allocated per year to our projects
– Typical research paper uses 5 to 12 CPU years for simulation (extensive, detailed design space exploration)

The End of Moore’s law for single-core microprocessors

But Moore’s law still holds for FPGA, memory and multicore processors

Motivational background
• Why multicores
– in all market segments from mobile phones to supercomputers
• The ”end” of Moore’s law
• The power wall
• The memory wall
• The bandwidth problem
• ILP limitations
• The complexity wall

Energy & Heat Problems
• Large power consumption
– Costly
– Heat problems
– Restricted battery operation time
• Google ”Open House Trondheim 2006”
– ”Performance/Watt is the only flat trend line”

The Memory Wall

[Figure: performance (log scale, 1 to 1000) versus year (1980 to 2000). CPU performance grows 60%/year (“Moore’s Law”), DRAM performance grows 9%/year; the processor-memory (P-M) gap grows 50% / year.]

• The Processor Memory Gap
• Consequence: deeper memory hierarchies
– P – Registers – L1 cache – L2 cache – L3 cache – Memory - - -
– Complicates understanding of performance
• cache usage has an increasing influence on performance
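
The gap figure follows from compound growth: if CPU performance grows 60% per year and DRAM 9% per year, their ratio grows by 1.60/1.09 per year, which is a little under the rounded 50% quoted on the slide. A minimal sketch of that arithmetic (the function names are illustrative, not from the course material):

```python
def gap_growth(cpu_rate: float, dram_rate: float) -> float:
    """Yearly growth of the CPU/DRAM performance ratio, as a fraction."""
    return (1.0 + cpu_rate) / (1.0 + dram_rate) - 1.0


def gap_after(years: int, cpu_rate: float = 0.60, dram_rate: float = 0.09) -> float:
    """Size of the gap after `years`, relative to a gap of 1 at year 0."""
    return ((1.0 + cpu_rate) / (1.0 + dram_rate)) ** years


if __name__ == "__main__":
    print(f"gap grows {gap_growth(0.60, 0.09):.1%} per year")
    print(f"gap after 10 years: {gap_after(10):.0f}x")
```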

The I/O pin or Bandwidth problem
• # I/O signaling pins
– limited by physical technology
– speeds have not increased at the same rate as processor clock rates
• Projections
– from ITRS (International Technology Roadmap for Semiconductors)

[Huh, Burger and Keckler 2001]

The limitations of ILP (Instruction Level Parallelism) in Applications

[Figure: two plots. Left: fraction of total cycles (%) versus number of instructions issued (0 to 6+). Right: speedup versus instructions issued per cycle (0 to 15).]

Reduced Increase in Clock Frequency


Solution: Multicore architectures (also called Chip Multi-processors - CMP)
• More power-efficient
– Two cores with clock frequency f/2 can potentially achieve the same speed as one at frequency f with 50% reduction in total energy consumption [Olukotun & Hammond 2005]
• Exploits Thread Level Parallelism (TLP)
– in addition to ILP
– requires multiprogramming or parallel programming
• Opens new possibilities for architectural innovations

Why heterogeneous multicores?
• Specialized HW is faster than general HW
– Math co-processor
– GPU, DSP, etc…
• Benefits of customization
– Similar to ASIC vs. general purpose programmable HW
• Amdahl’s law
– Parallel speedup limited by serial fraction
• 1 super-core

[Figure: Cell BE processor]

CPU – GPU – convergence (Performance – Programmability)

Processors: Larrabee, Fermi, …
Languages: CUDA, OpenCL, …

Parallel processing – conflicting goals

The P6-model: Parallel Processing challenges: Performance, Portability, Programmability and Power efficiency

• Examples:
– Performance tuning may reduce portability
• E.g. data structures adapted to cache block size
– New languages for higher programmability may reduce performance and increase power consumption

Multicore programming challenges
• Instability, diversity, conflicting goals … what to do?
• What kind of parallel programming?
– Homogeneous vs. heterogeneous
– DSL vs. general languages
– Memory locality
• What to teach?
– Teaching should be founded on active research
• Two layers of programmers
– The Landscape of Parallel Computing Research: A View from Berkeley [Asan+06]
• Krste Asanovic presentation at ACACES Summerschool 2007
– 1) Programmability layer (Productivity layer) (80 - 90%)
• ”Joe the programmer”
– 2) Performance layer (Efficiency layer) (10 - 20%)
• Both layers involved in HPC
• Programmability an issue also at the performance-layer

Parallel Computing Laboratory, U.C. Berkeley (Slide adapted from Dave Patterson)

Easy to write correct programs that run efficiently on manycore

[Figure: software stack diagram. Boxes shown: Personal Health, Image Retrieval, Hearing, Music, Speech, Parallel Browser; Design Patterns/Motifs; Composition & Coordination Language (C&CL); C&CL Compiler/Interpreter; Parallel Libraries; Parallel Frameworks; Sketching; Autotuners; Efficiency Languages; Efficiency Language Compilers; Legacy Code; Schedulers; Communication & Synch. Primitives; Legacy OS; OS Libraries & Services; Hypervisor; Multicore/GPGPU; RAMP Manycore. Side label: Diagnosing Power/Performance.]

Classes of computers
• Servers
– storage servers
– compute servers (supercomputers)
– web servers
– high availability
– scalability
– throughput oriented (response time of less importance)
• Desktop (price 3000 NOK – 50 000 NOK)
– the largest market
– price/performance focus
– latency oriented (response time)
• Embedded systems
– the fastest growing market (”everywhere”)
– TDT 4258 Microcontroller system design
– ATMEL, Nordic Semic., ARM, EM, ++

Falanx (Mali) ARM Norway

Borgar FXI Technologies
”An independent compute platform to gather the fragmented mobile space and thus help accelerate the proliferation of content and applications eco-systems (i.e. build an ARM based SoC, put it in a memory card, connect it to the web, and voila, you got iPhone for the masses).”
• http://www.fxitech.com/
– ”Headquartered in Trondheim
• But also an office in Silicon Valley …”

Trends
• For technology, costs, use
• Help predicting the future
• Product development time
– 2-3 years
– design for the next technology
– Why should an architecture live longer than a product?

Comp. Arch. is an Integrated Approach
• What really matters is the functioning of the complete system
– hardware, runtime system, compiler, operating system, and application
– In networking, this is called the “End to End argument”
• Computer architecture is not just about transistors (not at all), individual instructions, or particular implementations
– E.g., Original RISC projects replaced complex instructions with a compiler + simple instructions

Computer Architecture is Design and Analysis

Architecture is an iterative process:
• Searching the space of possible designs
• At all levels of computer systems

[Figure: iterative loop between Design and Analysis. Creativity feeds the design step; Cost/Performance Analysis sorts the results into Good Ideas, Mediocre Ideas and Bad Ideas.]

TDT4260 Course Focus

Understanding the design techniques, machine structures, technology factors, evaluation methods that will determine the form of computers in 21st Century

[Figure: Computer Architecture (Organization, Hardware/Software Boundary) surrounded by Technology, Programming Languages, Parallelism, Operating Systems, History, Applications, Interface Design (ISA), Measurement & Evaluation and Compilers.]

Holistic approach
e.g., to programmability

• Multicore, interconnect, memory
• Operating System & system software
• Parallel & concurrent programming

Moore’s Law: 2X transistors / “year”
• “Cramming More Components onto Integrated Circuits”
– Gordon Moore, Electronics, 1965
• # of transistors / cost-effective integrated circuit double every N months (12 ≤ N ≤ 24)

Tracking Technology Performance Trends
• 4 critical implementation technologies:
– Disks,
– Memory,
– Network,
– Processors
• Compare for Bandwidth vs. Latency improvements in performance over time
• Bandwidth: number of events per unit time
– E.g., M bits/second over network, M bytes / second from disk
• Latency: elapsed time for a single event
– E.g., one-way network delay in microseconds, average disk access time in milliseconds

Latency Lags Bandwidth (last ~20 years)

[Figure: log-log plot of relative bandwidth improvement (1 to 10000) versus relative latency improvement (1 to 100) for Processor, Memory, Network and Disk, with a reference line where latency improvement = bandwidth improvement. CPU high, Memory low (“Memory Wall”).]

• Performance Milestones (latency improvement, bandwidth improvement)
• Processor: ‘286, ‘386, ‘486, Pentium, Pentium Pro, Pentium 4 (21x, 2250x)
• Ethernet: 10Mb, 100Mb, 1000Mb, 10000 Mb/s (16x, 1000x)
• Memory Module: 16bit plain DRAM, Page Mode DRAM, 32b, 64b, SDRAM, DDR SDRAM (4x, 120x)
• Disk: 3600, 5400, 7200, 10000, 15000 RPM (8x, 143x)

(Processor latency = typical # of pipeline-stages * time pr. clock-cycle)

COST and COTS
• Cost
– to produce one unit
– include (development cost / # sold units)
– benefit of large volume
• COTS
– commodity off the shelf

Speedup
• General definition:

$\mathrm{Speedup}(p\ \text{processors}) = \dfrac{\mathrm{Performance}(p\ \text{processors})}{\mathrm{Performance}(1\ \text{processor})}$

• For a fixed problem size (input data set), performance = 1/time
– $\mathrm{Speedup}_{\text{fixed problem}}(p\ \text{processors}) = \dfrac{\mathrm{Time}(1\ \text{processor})}{\mathrm{Time}(p\ \text{processors})}$
• Note: use best sequential algorithm in the uni-processor solution, not the parallel algorithm with p = 1

Superlinear speedup?

Amdahl’s Law (1967) (fixed problem size)
• “If a fraction s of a (uniprocessor) computation is inherently serial, the speedup is at most 1/s”
• Total work in computation
– serial fraction s
– parallel fraction p
– s + p = 1 (100%)
• $S(n) = \dfrac{\mathrm{Time}(1)}{\mathrm{Time}(n)} = \dfrac{s + p}{s + p/n} = \dfrac{1}{s + (1 - s)/n} = \dfrac{n}{1 + (n - 1)s}$
• ”pessimistic and famous”
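
The formula above can be tried out numerically. A minimal sketch, assuming only the definitions on the slide (serial fraction s, n processors); the function name is illustrative:

```python
def amdahl_speedup(s: float, n: int) -> float:
    """Speedup on n processors when a fraction s of the work is serial."""
    if not 0.0 <= s <= 1.0:
        raise ValueError("serial fraction s must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1.0 / (s + (1.0 - s) / n)


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(n, round(amdahl_speedup(0.1, n), 2))
```

With s = 0.1 the speedup never reaches 10 however many processors are added, which is the "at most 1/s" bound.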

Gustafson’s “law” (1987) (scaled problem size, fixed execution time)
• Total execution time on parallel computer with n processors is fixed
– serial fraction s’
– parallel fraction p’
– s’ + p’ = 1 (100%)
• $S'(n) = \dfrac{\mathrm{Time}'(1)}{\mathrm{Time}'(n)} = \dfrac{s' + p'n}{s' + p'} = s' + p'n = s' + (1 - s')n = n + (1 - n)s'$
• Reevaluating Amdahl's law, John L. Gustafson, CACM May 1988, pp 532-533. ”Not a new law, but Amdahl’s law with changed assumptions”
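
For comparison with the fixed-size case, the scaled speedup can be computed the same way. A minimal sketch, assuming s’ is the serial fraction measured on the parallel machine as defined above; the function name is illustrative:

```python
def gustafson_speedup(s_prime: float, n: int) -> float:
    """Scaled speedup n + (1 - n) * s' for serial fraction s' on n processors."""
    if not 0.0 <= s_prime <= 1.0:
        raise ValueError("serial fraction s' must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return n + (1 - n) * s_prime


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(n, gustafson_speedup(0.1, n))
```

Unlike the fixed-size formula, this grows linearly in n, because the parallel part of the problem is assumed to grow with the machine.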

How the serial fraction limits speedup

[Figure: plot illustrating Amdahl’s law; the legend identifies the serial fraction]

• Amdahl’s law
• Work hard to reduce the serial part of the application
– remember IO
– think different (than traditionally or sequentially)

TDT4260 Computer architecture
Mini-project

PhD candidate Alexandru Ciprian Iordan
Institutt for datateknikk og informasjonsvitenskap

What is it…? How much…?

• The mini-project is the exercise part of the TDT4260 course

• This year the students will need to develop and evaluate a PREFETCHER

• The mini-project accounts for 20 % of the final grade in TDT4260

• 80 % for report
• 20 % for oral presentation

What will you work with…

• Modified version of M5 (for development and evaluation)

• Computing time on Kongull cluster (for benchmarking)

• More at: http://dm-ark.idi.ntnu.no/

M5

• Initially developed by the University of Michigan

• Enjoys a large community of users and developers

• Flexible object-oriented architecture

• Has support for 3 ISAs: ALPHA, SPARC and MIPS

Team work…

• You need to work in groups of 2-4 students

• Grade is based on written paper AND oral presentation (choose your best speaker)

Time Schedule and Deadlines

More on It’s learning

Web page presentation

TDT 4260
App A.1, Chap 2

Instruction Level Parallelism

Contents

• Instruction level parallelism Chap 2

• Pipelining (repetition) App A

▫ Basic 5-step pipeline

• Dependencies and hazards Chap 2.1

▫ Data, name, control, structural

• Compiler techniques for ILP Chap 2.2

• (Static prediction Chap 2.3)

▫ Read this on your own

• Project introduction

Instruction level parallelism (ILP)

• A program is a sequence of instructions typically written to be executed one after the other

• Poor usage of CPU resources! (Why?)

• Better: Execute instructions in parallel

▫ 1: Pipeline: Partial overlap of instruction execution

▫ 2: Multiple issue: Total overlap of instruction execution

• Today: Pipelining

Pipelining (1/3)

Pipelining (2/3)

• Multiple different stages executed in parallel

▫ Laundry in 4 different stages

▫ Wash / Dry / Fold / Store

• Assumptions:

▫ Task can be split into stages

▫ Storage of temporary data

▫ Stages synchronized

▫ Next operation known before last finished?

Pipelining (3/3)

• Good Utilization: All stages are ALWAYS in use

▫ Washing, drying, folding, ...

▫ Great usage of resources!

• Common technique, used everywhere

▫ Manufacturing, CPUs, etc

• Ideal: time_stage = time_instruction / stages

▫ But stages are not perfectly balanced

▫ But transfer between stages takes time

▫ But pipeline may have to be emptied

▫ ...
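
The ideal case can be made concrete with a small cycle count. This sketch assumes perfectly balanced stages, no stalls and no transfer overhead between stages, i.e. none of the "But ..." items above apply; the function names are illustrative:

```python
def pipelined_cycles(instructions: int, stages: int) -> int:
    """Cycles to run `instructions` through an ideal pipeline with `stages` stages."""
    if instructions < 1 or stages < 1:
        raise ValueError("instructions and stages must be at least 1")
    # The first instruction needs `stages` cycles; each later one finishes 1 cycle after.
    return stages + instructions - 1


def pipeline_speedup(instructions: int, stages: int) -> float:
    """Speedup over running each instruction to completion before starting the next."""
    unpipelined = instructions * stages
    return unpipelined / pipelined_cycles(instructions, stages)


if __name__ == "__main__":
    for n in (1, 10, 1000):
        print(n, pipelined_cycles(n, 5), round(pipeline_speedup(n, 5), 2))
```

The speedup approaches the number of stages only for long instruction streams, since the pipeline first has to be filled.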

Example: MIPS64 (1/2)

• RISC

• Load/store

• Few instruction formats

• Fixed instruction length

• 64-bit

▫ DADD = 64 bits ADD

▫ LD = 64 bits L(oad)

• 32 registers (R0 = 0)

• EA = offset(Register)

• Pipeline

▫ IF: Instruction fetch

▫ ID: Instruction decode / register fetch

▫ EX: Execute / effective address (EA)

▫ MEM: Memory access

▫ WB: Write back (reg)

Example: MIPS64 (2/2)

[Figure: pipeline diagram with instruction order on the vertical axis and time (clock cycles 1 to 7) on the horizontal axis; each of four instructions passes through Ifetch, Reg, ALU, DMem, Reg.]

Big Picture:

• What are some real world examples of pipelining?

• Why do we pipeline?

• Does pipelining increase or decrease instruction throughput?

• Does pipelining increase or decrease instruction latency?

Big Picture (continued):

• Computer Architecture is the study of design tradeoffs!!!!

• There is no “philosophy of architecture” and no “perfect architecture”. This is engineering, not science.

• What are the costs of pipelining?

• For what types of devices is pipelining not a good choice?

Improve speedup?

• Why not perfect speedup?
▫ Sequential programs
▫ One instruction dependent on another
▫ Not enough CPU resources

• What can be done?
▫ Forwarding (HW)
▫ Scheduling (SW / HW)
▫ Prediction (SW / HW)

• Both hardware (dynamic) and compiler (static) can help

Dependencies and hazards

• Dependencies
▫ Parallel instructions can be executed in parallel
▫ Dependent instructions are not parallel
– I1: DADD R1, R2, R3
– I2: DSUB R4, R1, R5
▫ Property of the instructions

• Hazards
▫ Situation where a dependency causes an instruction to give a wrong result
▫ Property of the pipeline
▫ Not all dependencies give hazards
– Dependencies must be close enough in the instruction stream to cause a hazard

Dependencies

• (True) data dependencies

▫ One instruction reads what an earlier has written

• Name dependencies

▫ Two instructions use the same register / mem loc

▫ But no flow of data between them

▫ Two types: Anti and output dependencies

• Control dependencies

▫ Instructions dependent on the result of a branch

• Again: Independent of pipeline implementation

Hazards

• Data hazards

▫ Overlap will give different result from sequential

▫ RAW / WAW / WAR

• Control hazards

▫ Branches

▫ Ex: Started executing the wrong instruction

• Structural hazards

▫ Pipeline does not support this combination of instr.

▫ Ex: Register with one port, two stages want to read

Data dependency → Hazard? (Figure A.6, Page A-16)

[Figure: pipeline diagram (Ifetch, Reg, ALU, DMem, Reg) in instruction order for:]
add r1,r2,r3
sub r4,r1,r3
and r6,r1,r7
or r8,r1,r9
xor r10,r1,r11

Data Hazards (1/3)

• Read After Write (RAW): InstrJ tries to read operand before InstrI writes it

I: add r1,r2,r3
J: sub r4,r1,r3

• Caused by a true data dependency

• This hazard results from an actual need for communication.

Data Hazards (2/3)

• Write After Read (WAR): InstrJ writes operand before InstrI reads it

I: sub r4,r1,r3
J: add r1,r2,r3

• Caused by an anti dependency. This results from reuse of the name “r1”

• Can’t happen in MIPS 5 stage pipeline because:
▫ All instructions take 5 stages, and
▫ Reads are always in stage 2, and
▫ Writes are always in stage 5

Data Hazards (3/3)

• Write After Write (WAW): InstrJ writes operand before InstrI writes it.

I: sub r1,r4,r3
J: add r1,r2,r3

• Caused by an output dependency

• Can’t happen in MIPS 5 stage pipeline because:
▫ All instructions take 5 stages, and
▫ Writes are always in stage 5

• WAR and WAW can occur in more complicated pipes
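
The three cases can be told apart by comparing the destination and source registers of the two instructions. A minimal sketch, assuming each instruction has one destination and a tuple of sources; the `Instr` type and the function name are illustrative. Note that it reports dependencies (a property of the instructions); whether a dependency becomes a hazard depends on the pipeline.

```python
from typing import NamedTuple, Set, Tuple


class Instr(NamedTuple):
    op: str
    dest: str
    srcs: Tuple[str, ...]


def dependencies(i: Instr, j: Instr) -> Set[str]:
    """Potential data hazards when instruction i comes before instruction j."""
    found = set()
    if i.dest in j.srcs:
        found.add("RAW")  # true data dependency: j reads what i writes
    if j.dest in i.srcs:
        found.add("WAR")  # anti dependency: j overwrites what i reads
    if i.dest == j.dest:
        found.add("WAW")  # output dependency: both write the same register
    return found


if __name__ == "__main__":
    add = Instr("add", "r1", ("r2", "r3"))
    print(dependencies(add, Instr("sub", "r4", ("r1", "r3"))))
```

The three I/J instruction pairs from the slides above give RAW, WAR and WAW respectively.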

Forwarding (Figure A.7, Page A-18)

[Figure: pipeline diagram (stages IF, ID/RF, EX, MEM, WB) in instruction order for:]
add r1,r2,r3
sub r4,r1,r3
and r6,r1,r7
or r8,r1,r9
xor r10,r1,r11

Can all data hazards be solved via forwarding???

[Figure: pipeline diagram (stages IF, ID/RF, EX, MEM, WB) in instruction order for:]
Ld r1,r2
add r4,r1,r3
and r6,r1,r7
or r8,r1,r9
xor r10,r1,r11

Structural Hazards (Memory Port) (Figure A.4, Page A-14)

[Figure: pipeline diagram (Ifetch, Reg, ALU, DMem, Reg) over clock cycles 1 to 7, in instruction order: Load, Instr 1, Instr 2, Instr 3, Instr 4]

Hazards, Bubbles (Similar to Figure A.5, Page A-15)

[Figure: pipeline diagram over clock cycles 1 to 7 with the row labels Load, Instr 1, Ld r1, r2, Stall, Add r1, r1, r1; the stalled row is filled with bubbles]

How do you “bubble” the pipe? How can we avoid this hazard?

Control hazards (1/2)

• Sequential execution is predictable, (conditional) branches are not

• May have fetched instructions that should not be executed

• Simple solution (figure): Stall the pipeline (bubble)
▫ Performance loss depends on number of branches in the program and pipeline implementation
▫ Branch penalty

[Figure: pipeline diagram marking the possibly wrong instruction and the correct instruction]

Control hazards (2/2)

• What can be done?
▫ Always stop (previous slide)
– Also called freeze or flushing of the pipeline
▫ Assume no branch (=assume sequential)
– Must not change state before branch instr. is complete
▫ Assume branch
– Only smart if the target address is ready early
▫ Delayed branch
– Execute a different instruction while branch is evaluated
– Static techniques (fixed rule or compiler)

Example

• Assume branch conditionals are evaluated in the EX stage, and determine the fetch address for the following cycle.

• If we always stall, how many cycles are bubbled?
• Assume branch not taken, how many bubbles for an incorrect assumption?
• Is stalling on every branch ok?
• What optimizations could be done to improve stall penalty?

Dynamic scheduling

• So far: Static scheduling

▫ Instructions executed in program order

▫ Any reordering is done by the compiler

• Dynamic scheduling

▫ CPU reorders to get a better order

– Fewer hazards, fewer stalls, ...

▫ Must preserve order of operations where reordering could change the result

▫ Covered by TDT 4255 Hardware design

Compiler techniques for ILP

• For a given pipeline and superscalarity
▫ How can these be best utilized?
▫ As few stalls from hazards as possible

• Dynamic scheduling
▫ Tomasulo’s algorithm etc. (TDT4255)
▫ Makes the CPU much more complicated

• What can be done by the compiler?
▫ Has ”ages” to spend, but less knowledge
▫ Static scheduling, but what else?

Example

Source code:

for (i = 1000; i > 0; i = i - 1)
    x[i] = x[i] + s;

Notice:

• Lots of dependencies

• No dependencies between iterations

• High loop overhead

→ Loop unrolling

MIPS:

Loop: L.D F0,0(R1) ; F0 = x[i]

ADD.D F4,F0,F2 ; F2 = s

S.D F4,0(R1) ; Store x[i] + s

DADDUI R1,R1,#-8 ; x[i] is 8 bytes

BNE R1,R2,Loop ; R1 = R2?

Static scheduling

Without scheduling:

Loop: L.D F0,0(R1)
stall
ADD.D F4,F0,F2
stall
stall
S.D F4,0(R1)
DADDUI R1,R1,#-8
stall
BNE R1,R2,Loop

With scheduling:

Loop: L.D F0,0(R1)
DADDUI R1,R1,#-8
ADD.D F4,F0,F2
stall
stall
S.D F4,8(R1)
BNE R1,R2,Loop

Result: From 9 cycles per iteration to 7 (Delays from table in figure 2.2)

Loop unrolling

Loop: L.D F0,0(R1)

ADD.D F4,F0,F2

S.D F4,0(R1)

DADDUI R1,R1,#-8

BNE R1,R2,Loop

Loop: L.D F0,0(R1)

ADD.D F4,F0,F2

S.D F4,0(R1)

L.D F6,-8(R1)

ADD.D F8,F6,F2

S.D F8,-8(R1)

L.D F10,-16(R1)

ADD.D F12,F10,F2

S.D F12,-16(R1)

L.D F14,-24(R1)

ADD.D F16,F14,F2

S.D F16,-24(R1)

DADDUI R1,R1,#-32

BNE R1,R2,Loop

• Reduced loop overhead

• Requires number of iterations divisible by n (here n=4)

• Register renaming

• Offsets have changed

• Stalls not shown

Loop: L.D F0,0(R1)

L.D F6,-8(R1)

L.D F10,-16(R1)

L.D F14,-24(R1)

ADD.D F4,F0,F2

ADD.D F8,F6,F2

ADD.D F12,F10,F2

ADD.D F16,F14,F2

S.D F4,0(R1)

S.D F8,-8(R1)

DADDUI R1,R1,#-32

S.D F12,16(R1) ; R1 is already decremented: 16 - 32 = -16

S.D F16,8(R1) ; 8 - 32 = -24

BNE R1,R2,Loop

Loop: L.D F0,0(R1)

ADD.D F4,F0,F2

S.D F4,0(R1)

L.D F6,-8(R1)

ADD.D F8,F6,F2

S.D F8,-8(R1)

L.D F10,-16(R1)

ADD.D F12,F10,F2

S.D F12,-16(R1)

L.D F14,-24(R1)

ADD.D F16,F14,F2

S.D F16,-24(R1)

DADDUI R1,R1,#-32

BNE R1,R2,Loop

Avoids stall after: L.D(1), ADD.D(2), DADDUI(1)

Loop unrolling: Summary

• Original code 9 cycles per element

• Scheduling 7 cycles per element

• Loop unrolling 6.75 cycles per element

▫ Unrolled 4 iterations

• Combination 3.5 cycles per element

▫ Avoids stalls entirely

Compiler reduced execution time by 61%
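
The numbers in the summary can be reproduced with a few lines of arithmetic. The loop-body cycle counts 27 and 14 below are not stated on the slides; they are inferred here from 6.75 x 4 and 3.5 x 4, so treat them as an assumption:

```python
def cycles_per_element(cycles_per_loop_body: int, elements_per_loop_body: int) -> float:
    """Average cycles spent on each array element."""
    return cycles_per_loop_body / elements_per_loop_body


original = cycles_per_element(9, 1)    # unscheduled loop, one element per iteration
scheduled = cycles_per_element(7, 1)   # scheduled loop
unrolled = cycles_per_element(27, 4)   # unrolled 4 times, stalls remain (inferred count)
combined = cycles_per_element(14, 4)   # unrolled and scheduled, no stalls (inferred count)

# Fraction of the original execution time removed by the compiler.
reduction = 1.0 - combined / original

if __name__ == "__main__":
    print(original, scheduled, unrolled, combined, f"{reduction:.0%}")
```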

Loop unrolling in practice

• Do not usually know upper bound of loop
• Suppose it is n, and we would like to unroll the loop to make k copies of the body
• Instead of a single unrolled loop, we generate a pair of consecutive loops:
▫ 1st executes (n mod k) times and has a body that is the original loop
▫ 2nd is the unrolled body surrounded by an outer loop that iterates (n/k) times

• For large values of n, most of the execution time will be spent in the unrolled loop
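
The pair of loops described above can be sketched for the running example x[i] = x[i] + s. This is a hand-written illustration with k fixed to 4, not compiler output; the names `K` and `add_scalar` are made up for the example:

```python
K = 4  # unroll factor, fixed so the unrolled body can be written out


def add_scalar(x: list, s: float) -> None:
    """Add s to every element of x in place, using a remainder loop and an unrolled loop."""
    n = len(x)
    i = 0
    # 1st loop: executes (n mod K) times, body is the original loop body.
    for _ in range(n % K):
        x[i] += s
        i += 1
    # 2nd loop: iterates (n // K) times, body is K copies of the original body.
    for _ in range(n // K):
        x[i] += s
        x[i + 1] += s
        x[i + 2] += s
        x[i + 3] += s
        i += K


if __name__ == "__main__":
    data = [float(v) for v in range(10)]
    add_scalar(data, 1.0)
    print(data)
```

Running the remainder loop first means the unrolled loop always sees a multiple of K elements, so no bounds check is needed inside it.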

TDT 4260
Chap 2, Chap 3

Instruction Level Parallelism (cont)

Review

• Name real-world examples of pipelining

• Does pipelining lower instruction latency?

• What is the advantage of pipelining?

• What are some disadvantages of pipelining?

• What can a compiler do to avoid processor stalls?

• What are the three types of data dependences?

• What are the three types of pipeline hazards?

Contents

• Very Long Instruction Word Chap 2.7

▫ IA-64 and EPIC

• Instruction fetching Chap 2.9

• Limits to ILP Chap 3.1/2

• Multi-threading Chap 3.5

Getting CPI below 1

• CPI ≥ 1 if issue only 1 instruction every clock cycle

• Multiple-issue processors come in 3 flavors:

1. Statically-scheduled superscalar processors
• In-order execution
• Varying number of instructions issued (compiler)

2. Dynamically-scheduled superscalar processors
• Out-of-order execution
• Varying number of instructions issued (CPU)

3. VLIW (very long instruction word) processors
• In-order execution
• Fixed number of instructions issued

VLIW: Very Long Instruction Word (1/2)

• Each VLIW has explicit coding for multiple operations
▫ Several instructions combined into packets
▫ Possibly with parallelism indicated

• Tradeoff instruction space for simple decoding
▫ Room for many operations
▫ Independent operations => execute in parallel
▫ E.g., 2 integer operations, 2 FP ops, 2 Memory refs, 1 branch

VLIW: Very Long Instruction Word (2/2)

• Assume 2 load/store, 2 fp, 1 int/branch
▫ VLIW with 0-5 operations.
▫ Why 0?

• Important to avoid empty instruction slots
▫ Loop unrolling
▫ Local scheduling
▫ Global scheduling
– Scheduling across branches

• Difficult to find all dependencies in advance
▫ Solution1: Block on memory accesses
▫ Solution2: CPU detects some dependencies

Recall: Unrolled Loop that minimizes stalls for Scalar

Source code:

for (i = 1000; i > 0; i = i - 1)
    x[i] = x[i] + s;

Register mapping:

s → F2

i → R1

Loop: L.D F0,0(R1)

L.D F6,-8(R1)

L.D F10,-16(R1)

L.D F14,-24(R1)

ADD.D F4,F0,F2

ADD.D F8,F6,F2

ADD.D F12,F10,F2

ADD.D F16,F14,F2

S.D F4,0(R1)

S.D F8,-8(R1)

DADDUI R1,R1,#-32

S.D F12,16(R1) ; R1 is already decremented: 16 - 32 = -16

S.D F16,8(R1) ; 8 - 32 = -24

BNE R1,R2,Loop

Loop Unrolling in VLIW

Clock | Memory reference 1 | Memory reference 2 | FP operation 1 | FP op. 2 | Int. op / branch
1 | L.D F0,0(R1) | L.D F6,-8(R1) | - | - | -
2 | L.D F10,-16(R1) | L.D F14,-24(R1) | - | - | -
3 | L.D F18,-32(R1) | L.D F22,-40(R1) | ADD.D F4,F0,F2 | ADD.D F8,F6,F2 | -
4 | L.D F26,-48(R1) | - | ADD.D F12,F10,F2 | ADD.D F16,F14,F2 | -
5 | - | - | ADD.D F20,F18,F2 | ADD.D F24,F22,F2 | -
6 | S.D 0(R1),F4 | S.D -8(R1),F8 | ADD.D F28,F26,F2 | - | -
7 | S.D -16(R1),F12 | S.D -24(R1),F16 | - | - | -
8 | S.D -32(R1),F20 | S.D -40(R1),F24 | - | - | DSUBUI R1,R1,#48
9 | S.D -0(R1),F28 | - | - | - | BNEZ R1,LOOP

Unrolled 7 iterations to avoid delays

7 results in 9 clocks, or 1.3 clocks per iteration (1.8X)

Average: 2.5 ops per clock, 50% efficiency

Note: Need more registers in VLIW (15 vs. 6 in SS)
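
The averages quoted above can be checked by counting the filled slots in the schedule. The sketch below encodes the nine VLIW instructions as rows of five slots, with `None` for an empty slot; the slot assignment is read off the table as printed, which is an assumption about the original slide layout:

```python
# Slot order: memory ref 1, memory ref 2, FP op 1, FP op 2, integer op / branch.
SCHEDULE = [
    ("L.D", "L.D", None, None, None),
    ("L.D", "L.D", None, None, None),
    ("L.D", "L.D", "ADD.D", "ADD.D", None),
    ("L.D", None, "ADD.D", "ADD.D", None),
    (None, None, "ADD.D", "ADD.D", None),
    ("S.D", "S.D", "ADD.D", None, None),
    ("S.D", "S.D", None, None, None),
    ("S.D", "S.D", None, None, "DSUBUI"),
    ("S.D", None, None, None, "BNEZ"),
]
ITERATIONS = 7  # loop iterations covered by one pass through the schedule


def vliw_stats(schedule, iterations):
    """Clocks per iteration, operations per clock and fraction of slots used."""
    clocks = len(schedule)
    slots = sum(len(row) for row in schedule)
    ops = sum(op is not None for row in schedule for op in row)
    return {
        "clocks_per_iteration": clocks / iterations,
        "ops_per_clock": ops / clocks,
        "slot_utilization": ops / slots,
    }


if __name__ == "__main__":
    for name, value in vliw_stats(SCHEDULE, ITERATIONS).items():
        print(f"{name}: {value:.2f}")
```

With 23 operations in 45 slots the utilization comes out just above one half, in line with the roughly 2.5 operations per clock and 50% efficiency stated on the slide.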

Problems with 1st Generation VLIW

• Increase in code size

▫ Loop unrolling

▫ Partially empty VLIW

• Operated in lock-step; no hazard detection HW

▫ A stall in any functional unit pipeline causes entire processor to stall, since all functional units must be kept synchronized

▫ Compiler might predict function units, but caches hard to predict

▫ Modern VLIWs are “interlocked” (identify dependences between bundles and stall).

• Binary code compatibility

▫ Strict VLIW => different numbers of functional units and unit latencies require different versions of the code

VLIW Tradeoffs

• Advantages
▫ “Simpler” hardware because the HW does not have to identify independent instructions.

• Disadvantages
▫ Relies on smart compiler
▫ Code incompatibility between generations
▫ There are limits to what the compiler can do (can’t move loads above branches, can’t move loads above stores)

• Common uses
▫ Embedded market where hardware simplicity is important, applications exhibit plenty of ILP, and binary compatibility is a non-issue.

IA-64 and EPIC

• 64 bit instruction set architecture
▫ Not a CPU, but an architecture
▫ Itanium and Itanium 2 are CPUs based on IA-64

• Made by Intel and Hewlett-Packard (Itanium 2 and 3 designed in Colorado)

• Uses EPIC: Explicitly Parallel Instruction Computing
• Departure from the x86 architecture
• Meant to achieve out-of-order performance with in-order HW + compiler-smarts
▫ Stop bits to help with code density
▫ Support for control speculation (moving loads above branches)
▫ Support for data speculation (moving loads above stores)

Details in Appendix G.6

Instruction bundle (VLIW)

Functional units and template

• Functional units:▫ I (Integer), M (Integer + Memory), F (FP), B (Branch),

L + X (64 bit operands + special inst.)

• Template field:▫ Maps instruction to functional unit

▫ Indicates stops: Limitations to ILP

Code example (1/2)

Code example (2/2)

Control Speculation

• Can the compiler schedule an independent load above a branch?

Bne R1, R2, TARGET

Ld R3, R4(0)

• What are the problems?

• EPIC provides speculative loads

Ld.s R3, R4(0)

Bne R1, R2, TARGET

Check R4(0)

Data Speculation

• Can the compiler schedule an independent load above a store?

St R5, R6(0)

Ld R3, R4(0)

• What are the problems?

• EPIC provides “advanced loads” and an ALAT (Advanced Load Address Table)

Ld.a R3, R4(0) ← creates entry in ALAT

St R5, R6(0) ← looks up ALAT, if match, jump to fixup code
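The ALAT idea above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the class and method names (`ALAT`, `advanced_load`, `store`, `check`) are hypothetical, the table is a plain dictionary, and real hardware is more involved.

```python
class ALAT:
    """Toy Advanced Load Address Table: destination register -> load address."""

    def __init__(self):
        self.entries = {}

    def advanced_load(self, reg, addr):
        # Ld.a: remember that `reg` was speculatively loaded from `addr`
        self.entries[reg] = addr

    def store(self, addr):
        # St: every entry with a matching address is now stale, so drop it
        stale = [reg for reg, a in self.entries.items() if a == addr]
        for reg in stale:
            del self.entries[reg]
        return stale  # registers that need fix-up code

    def check(self, reg):
        # True if the speculative load is still valid
        return reg in self.entries
```

A store to a different address leaves the entry alone; a store to the same address removes it, which is the case where the fix-up code must reload the value.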

EPIC Conclusions

• Goal of EPIC was to maintain advantages of VLIW, but achieve performance of out-of-order.

• Results:

▫ Complicated bundling rules save some space, but make the hardware more complicated

▫ Add special hardware and instructions for scheduling loads above stores and branches (new complicated hardware)

▫ Add special hardware to remove branch penalties (predication)

▫ End result is a machine as complicated as an out-of-order, but now also requiring a super-sophisticated compiler.

Instruction fetching

• Want to issue >1 instruction every cycle

• This means fetching >1 instruction
▫ E.g. 4-8 instructions fetched every cycle

• Several problems
▫ Bandwidth / Latency
▫ Determining which instructions
  - Jumps
  - Branches

• Integrated instruction fetch unit

Branch Target Buffer (BTB)

• Predicts next instruction address, sends it out before decoding instruction

• PC of branch sent to BTB

• When match is found, Predicted PC is returned

• If branch predicted taken, instruction fetch continues at Predicted PC
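A minimal Python sketch of the BTB lookup described above. The class name, the dictionary-based table, and the 4-byte instruction size are assumptions made for illustration; a real BTB is a small tagged hardware table with limited capacity.

```python
class BranchTargetBuffer:
    """Toy BTB: branch PC -> (predicted target PC, predicted taken?)."""

    def __init__(self, instruction_size=4):
        self.table = {}
        self.instruction_size = instruction_size  # assumed fixed-size instructions

    def predict(self, pc):
        entry = self.table.get(pc)
        if entry is not None and entry[1]:
            return entry[0]                       # match and predicted taken
        return pc + self.instruction_size         # no match or not taken: fall through

    def update(self, pc, target, taken):
        # Called when the branch resolves, so later fetches can use the outcome
        self.table[pc] = (target, taken)
```

The prediction is available from the PC alone, which is why the fetch unit can redirect before the instruction has been decoded.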


Possible Optimizations????

Return Address Predictor

• Small buffer of return addresses acts as a stack

• Caches most recent return addresses

• Call ⇒ Push a return address on stack

• Return ⇒ Pop an address off stack & predict as new PC

[Figure: Misprediction frequency (0% to 70%) versus number of return address buffer entries (0, 1, 2, 4, 8, 16) for the benchmarks go, m88ksim, cc1, compress, xlisp, ijpeg, perl, and vortex]
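The return address predictor can be sketched as a small bounded stack. This is an illustrative Python model; the class name and the choice to silently drop the oldest entry on overflow are assumptions, not something stated on the slide.

```python
from collections import deque


class ReturnAddressStack:
    """Toy return address predictor with a fixed number of entries."""

    def __init__(self, entries=8):
        # With maxlen set, appending to a full deque drops the oldest entry
        self.stack = deque(maxlen=entries)

    def on_call(self, return_addr):
        self.stack.append(return_addr)

    def on_return(self):
        # Predicted next PC, or None if the buffer holds no prediction
        return self.stack.pop() if self.stack else None
```

With too few entries, deep call chains overwrite older return addresses, which is one way the buffer size shows up in the misprediction frequency.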

Integrated Instruction Fetch Units

• Recent designs have implemented the fetch stage as a separate, autonomous unit

▫ Multiple-issue in one simple pipeline stage is too complex

• An integrated fetch unit provides:

▫ Branch prediction

▫ Instruction prefetch

▫ Instruction memory access and buffering

Limits to ILP

• Advances in compiler technology + significantly new and different hardware techniques may be able to overcome limitations assumed in studies

• However, it is unlikely that such advances, when coupled with realistic hardware, will overcome these limits in the near future

• How much ILP is available using existing mechanisms with increasing HW budgets?

Chapter 3

Ideal HW Model

1. Register renaming – infinite virtual registers; all register WAW & WAR hazards are avoided

2. Branch prediction – perfect; no mispredictions

3. Jump prediction – all jumps perfectly predicted

2 & 3 ⇒ no control dependencies; perfect speculation & an unbounded buffer of instructions available

4. Memory-address alias analysis – addresses known & a load can be moved before a store provided addresses not equal

1 & 4 eliminate all but RAW

5. Perfect caches; 1 cycle latency for all instructions; unlimited instructions issued/clock cycle

Upper Limit to ILP: Ideal Machine (Figure 3.1)

Instructions per clock for each program:

Program    IPC
gcc        54.8
espresso   62.6
li         17.9
fpppp      75.2
doduc      118.7
tomcatv    150.1

Integer: 18 - 60

FP: 75 - 150

Instruction window

• Ideal HW needs to know the entire code

• Obviously not practical
▫ Register dependencies scale quadratically

• Window: The set of instructions examined for simultaneous execution

• How does the size of the window affect IPC?
▫ Too small window => Can’t see whole loops
▫ Too large window => Hard to implement

More Realistic HW: Window Impact (Figure 3.2)

Instructions per clock (IPC) by instruction window size:

Window     gcc  espresso  li  fpppp  doduc  tomcatv
Infinite   55   63        18  75     119    150
2048       36   41        15  61     59     60
512        10   15        12  49     16     45
128        10   13        11  35     15     34
32         8    8         9   14     9      14

Integer: 8 - 63

FP: 9 - 150

Thread Level Parallelism (TLP)

• ILP exploits implicit parallel operations within a loop or straight-line code segment

• TLP explicitly represented by the use of multiple threads of execution that are inherently parallel

• Use multiple instruction streams to improve:
1. Throughput of computers that run many programs
2. Execution time of a single application implemented as a multi-threaded program (parallel program)

Multi-threaded execution

• Multi-threading: multiple threads share the functional units of 1 processor via overlapping
▫ Must duplicate independent state of each thread, e.g., a separate copy of register file, PC and page table
▫ Memory shared through virtual memory mechanisms
▫ HW for fast thread switch; much faster than full process switch ≈ 100s to 1000s of clocks

• When to switch?
▫ Alternate instruction per thread (fine grain)
▫ When a thread is stalled, perhaps for a cache miss, another thread can be executed (coarse grain)

Fine-Grained Multithreading

• Switches between threads on each instruction
▫ Multiple threads interleaved

• Usually round-robin fashion, skipping stalled threads

• CPU must be able to switch threads every clock

• Hides both short and long stalls
▫ Other threads executed when one thread stalls

• But slows down execution of individual threads
▫ Thread ready to execute without stalls will be delayed by instructions from other threads

• Used on Sun’s Niagara
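The round-robin selection with skipping of stalled threads can be sketched as follows. This is a toy Python model under stated assumptions: one instruction is issued per cycle, and `stalled[t]` is a hypothetical set of cycle numbers in which thread `t` cannot issue.

```python
def fine_grained_schedule(stalled, num_cycles):
    """Return, per cycle, the id of the thread that issues (None = idle cycle)."""
    num_threads = len(stalled)
    issued = []
    next_thread = 0  # thread to try first in the current cycle
    for cycle in range(num_cycles):
        chosen = None
        for offset in range(num_threads):
            t = (next_thread + offset) % num_threads
            if cycle not in stalled[t]:   # skip threads that are stalled
                chosen = t
                break
        issued.append(chosen)
        if chosen is not None:
            next_thread = (chosen + 1) % num_threads
    return issued
```

In the sketch a stalled thread simply loses its turn, so the stall is hidden as long as some other thread is ready, while every ready thread still has to wait for its slot.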

Coarse-Grained Multithreading

• Switch threads only on costly stalls (L2 cache miss)

• Advantages
▫ No need for very fast thread-switching
▫ Doesn’t slow down thread, since switches only when thread encounters a costly stall

• Disadvantage: hard to overcome throughput losses from shorter stalls, due to pipeline start-up costs
▫ Since CPU issues instructions from 1 thread, when a stall occurs, the pipeline must be emptied or frozen
▫ New thread must fill pipeline before instructions can complete

• => Better for reducing penalty of high cost stalls, where pipeline refill << stall time

Do both ILP and TLP?

• TLP and ILP exploit two different kinds of parallel structure in a program

• Can a high-ILP processor also exploit TLP?
▫ Functional units often idle because of stalls or dependences in the code

• Can TLP be a source of independent instructions that might reduce processor stalls?

• Can TLP be used to employ functional units that would otherwise lie idle with insufficient ILP?

• => Simultaneous Multi-threading (SMT)
▫ Intel: Hyper-Threading

Simultaneous Multi-threading

[Figure: Issue slots per cycle (cycles 1 to 9) across 8 functional units (M, M, FX, FX, FP, FP, BR, CC), shown first for one thread and then for two threads]

M = Load/Store, FX = Fixed Point, FP = Floating Point, BR = Branch, CC = Condition Codes

Simultaneous Multi-threading (SMT)

• A dynamically scheduled processor already has many HW mechanisms to support multi-threading
▫ Large set of virtual registers
  - Virtual = not all visible at ISA level
  - Register renaming
▫ Dynamic scheduling

• Just add a per-thread renaming table and keep separate PCs
▫ Independent commitment can be supported by logically keeping a separate reorder buffer for each thread

Multi-threaded categories

[Figure: Issue slots over time (processor cycles) for Superscalar, Fine-Grained, Coarse-Grained, Multiprocessing, and Simultaneous Multithreading; legend: Thread 1 to Thread 5 and idle slot]

Design Challenges in SMT

• SMT makes sense only with fine-grained implementation
▫ How to reduce the impact on single thread performance?
▫ Give priority to one or a few preferred threads

• Large register file needed to hold multiple contexts

• Not affecting clock cycle time, especially in
▫ Instruction issue - more candidate instructions need to be considered
▫ Instruction completion - choosing which instructions to commit may be challenging

• Ensuring that cache and TLB conflicts generated by SMT do not degrade performance

TDT 4260 – lecture 4 – 2011

Lasse Natvig

• Contents
– Computer architecture introduction
  • Trends
  • Moore’s law
  • Amdahl’s law
  • Gustafson’s law
– Why multiprocessor? Chap 4.1
  • Taxonomy
  • Memory architecture
  • Communication
– Cache coherence Chap 4.2
  • The problem
  • Snooping protocols

Updated lecture plan pr. 4/2

Date and lecturer Topic

1: 14 Jan (LN, AI) Introduction, Chapter 1 / Alex: PfJudge

2: 21 Jan (IB) Pipelining, Appendix A; ILP, Chapter 2

3: 3 Feb (IB) ILP, Chapter 2; TLP, Chapter 3

4: 4 Feb (LN) Multiprocessors, Chapter 4

5: 11 Feb (MG) Prefetching + Energy Micro guest lecture by Marius Grannæs & pizza

6: 18 Feb (LN) Multiprocessors continued

7: 24 Feb (IB) Memory and cache, cache coherence (Chap. 5)

8: 4 Mar (IB) Piranha CMP + Interconnection networks

9: 11 Mar (LN) Multicore architectures (Wiley book chapter) + Hill Marty Amdahl multicore ... Fedorova ... asymmetric multicore ...

10: 18 Mar (IB) Memory consistency (4.6) + more on memory

11: 25 Mar (JA, AI) (1) Kongull and other NTNU and NOTUR supercomputers (2) Green computing

12: 7 Apr (IB/LN) Wrap up lecture, remaining stuff

13: 8 Apr Slack – no lecture planned

Trends

• For technology, costs, use

• Help predicting the future

• Product development time
– 2-3 years
– design for the next technology
– Why should an architecture live longer than a product?

Comp. Arch. is an Integrated Approach

• What really matters is the functioning of the complete system
– hardware, runtime system, compiler, operating system, and application
– In networking, this is called the “End to End argument”

• Computer architecture is not just about transistors (not at all), individual instructions, or particular implementations
– E.g., Original RISC projects replaced complex instructions with a compiler + simple instructions

Computer Architecture is Design and Analysis

Architecture is an iterative process:
• Searching the huge space of possible designs
• At all levels of computer systems

[Figure: Iterative loop between Design and Analysis. Creativity feeds ideas in; Cost/Performance Analysis sorts them into Good Ideas, Mediocre Ideas, and Bad Ideas]

TDT4260 Course Focus

Understanding the design techniques, machine structures, technology factors, evaluation methods that will determine the form of computers in 21st Century

[Figure: Computer Architecture (Organization, Hardware/Software Boundary) in the center, surrounded by Technology, Programming Languages, Parallelism, Operating Systems, History, Applications, Interface Design (ISA), Measurement & Evaluation, and Compilers]

Holistic approach

e.g., to programmability combined with performance

NTNU-principle: Teaching based on research, example, PhD-project of Alexandru Iordan:

[Figure labels: Energy aware task pool implementation; TBP (Wool, TBB); Parallel & concurrent programming; Operating System & system software; Multicore, interconnect, memory; Multicore memory systems (Dybdahl-PhD, Grannæs-PhD, Jahre-PhD, M5-sim, pfJudge)]

Moore’s Law: 2X transistors / “year”

• “Cramming More Components onto Integrated Circuits”
– Gordon Moore, Electronics, 1965

• # of transistors / cost-effective integrated circuit doubles every N months (12 ≤ N ≤ 24)

Tracking Technology Performance Trends

• 4 critical implementation technologies:
– Disks
– Memory
– Network
– Processors

• Compare for Bandwidth vs. Latency improvements in performance over time

• Bandwidth: number of events per unit time
– E.g., M bits/second over network, M bytes/second from disk

• Latency: elapsed time for a single event
– E.g., one-way network delay in microseconds, average disk access time in milliseconds

Latency Lags Bandwidth (last ~20 years)

[Figure: Log-log plot of relative bandwidth improvement (1 to 10000) versus relative latency improvement (1 to 100) for Processor, Memory, Network, and Disk, with a reference line where latency improvement = bandwidth improvement. Annotation: CPU high, Memory low (“Memory Wall”)]

• Performance Milestones (latency improvement, bandwidth improvement)

• Processor: ‘286, ‘386, ‘486, Pentium, Pentium Pro, Pentium 4 (21x, 2250x)

• Ethernet: 10Mb, 100Mb, 1000Mb, 10000 Mb/s (16x, 1000x)

• Memory Module: 16bit plain DRAM, Page Mode DRAM, 32b, 64b, SDRAM, DDR SDRAM (4x, 120x)

• Disk: 3600, 5400, 7200, 10000, 15000 RPM (8x, 143x)

(Processor latency = typical # of pipeline-stages * time pr. clock-cycle)

COST and COTS

• Cost
– to produce one unit
– include (development cost / # sold units)
– benefit of large volume

• COTS
– commodity off the shelf
  • much better performance/price pr. component
  • strong influence on the selection of components for building supercomputers for more than 20 years

Speedup

• General definition:

Speedup (p processors) = Performance (p processors) / Performance (1 processor)

• For a fixed problem size (input data set), performance = 1/time
– Speedup fixed problem (p processors) = Time (1 processor) / Time (p processors)

• Note: use best sequential algorithm in the uni-processor solution, not the parallel algorithm with p = 1

Superlinear speedup ?

Amdahl’s Law (1967) (fixed problem size)

• “If a fraction s of a (uniprocessor) computation is inherently serial, the speedup is at most 1/s”

• Total work in computation
– serial fraction s
– parallel fraction p
– s + p = 1 (100%)

• S(n) = Time(1) / Time(n)

= (s + p) / [s + (p/n)]

= 1 / [s + (1-s) / n]

= n / [1 + (n - 1)s]

• “pessimistic and famous”
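As a quick check of the formula, Amdahl's law can be evaluated in a few lines of Python. The function name is hypothetical and the numbers are illustrative only.

```python
def amdahl_speedup(s, n):
    """Speedup on n processors for serial fraction s (fixed problem size)."""
    return 1.0 / (s + (1.0 - s) / n)


# With 10% serial work, 10 processors give about 5.26x,
# and no number of processors can exceed 1/s = 10x.
speedup_10 = amdahl_speedup(0.1, 10)
speedup_huge = amdahl_speedup(0.1, 10**9)
```

The second value approaches but never reaches 1/s, which is the upper bound quoted on the slide.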

Gustafson’s “law” (1987) (scaled problem size, fixed execution time)

• Total execution time on parallel computer with n processors is fixed
– serial fraction s’
– parallel fraction p’
– s’ + p’ = 1 (100%)

• S’(n) = Time’(1) / Time’(n)

= (s’ + p’n) / (s’ + p’)

= s’ + p’n

= s’ + (1-s’)n

= n + (1-n)s’

• Reevaluating Amdahl's law, John L. Gustafson, CACM May 1988, pp 532-533. “Not a new law, but Amdahl’s law with changed assumptions”
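The scaled speedup can be evaluated the same way. A minimal Python sketch; the function name is hypothetical, and `s_prime` is the serial fraction measured on the parallel machine, as on the slide.

```python
def gustafson_speedup(s_prime, n):
    """Scaled speedup on n processors for serial fraction s' (fixed execution time)."""
    return n + (1 - n) * s_prime


# With s' = 0.1, the scaled speedup on 10 processors is about 9.1,
# and it keeps growing linearly with n.
scaled_10 = gustafson_speedup(0.1, 10)
scaled_100 = gustafson_speedup(0.1, 100)
```

Unlike the fixed-size case, there is no 1/s ceiling here, because the parallel part of the work grows with the machine.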

How the serial fraction limits speedup

• Amdahl’s law

• Work hard to reduce the serial part of the application
– remember IO
– think different (than traditionally or sequentially)

[Figure: Speedup curves for different values of the serial fraction]

Single/ILP → Multi/TLP

• Uniprocessor trends
– Getting too complex
– Speed of light
– Diminishing returns from ILP

• Multiprocessor
– Focus in the textbook: 4-32 CPUs
– Increased performance through parallelism
– Multichip
– Multicore ((Single) Chip Multiprocessors – CMP)
– Cost effective

• Right balance of ILP and TLP is unclear today
– Desktop vs. server?

Other Factors → Multiprocessors

• Growth in data-intensive applications
– Databases, file servers, multimedia, …

• Growing interest in servers, server performance

• Increasing desktop performance less important
– Outside of graphics

• Improved understanding in how to use multiprocessors effectively
– Especially in servers where significant natural TLP

• Advantage of leveraging design investment by replication
– Rather than unique design

• Power/cooling issues → multicore

Multiprocessor – Taxonomy

• Flynn’s taxonomy (1966, 1972)
– Taxonomy = classification
– Widely used, but perhaps a bit coarse

• Single Instruction Single Data (SISD)
– Common uniprocessor

• Single Instruction Multiple Data (SIMD)
– “ = Data Level Parallelism (DLP)”

• Multiple Instruction Single Data (MISD)
– Not implemented?
– Pipeline / Stream processing / GPU ?

• Multiple Instruction Multiple Data (MIMD)
– Used today
– “ = Thread Level Parallelism (TLP)”

Flynn’s taxonomy (1/2)

Single/Multiple Instruction/Data Stream

[Figure: SISD uniprocessor; SIMD w/distributed memory; MIMD w/shared memory]

Flynn’s taxonomy (2/2), MISD

Single/Multiple Instruction/Data Stream

[Figure: MISD (software pipeline)]

Advantages to MIMD

• Flexibility
– High single-user performance, multiple programs, multiple threads
– High multiple-user performance
– Combination

• Built using commercial off-the-shelf (COTS) components
– 2 x Uniprocessor = Multi-CPU
– 2 x Uniprocessor core on a single chip = Multicore

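MIMD: Memory architecture

[Figure: Two organizations side by side. Centralized Memory: processors P1 to Pn, each with a cache ($), reach shared memory modules (Mem) through an interconnection network (IN). Distributed Memory: processors P1 to Pn, each with a cache ($) and a local memory (Mem), are connected by an interconnection network (IN)]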

Centralized Memory Multiprocessor

• Also called
  • Symmetric Multiprocessors (SMPs)
  • Uniform Memory Access (UMA) architecture

• Shared memory becomes bottleneck

• Large caches → single memory can satisfy memory demands of small number of processors

• Can scale to a few dozen processors by using a switch and by using many memory banks

• Scaling beyond that is hard

Distributed (Shared) Memory Multiprocessor

• Pro: Cost-effective way to scale memory bandwidth
  • If most accesses are to local memory

• Pro: Reduces latency of local memory accesses

• Con: Communication becomes more complex

• Pro/Con: Possible to change software to take advantage of memory that is close, but this can also make SW less portable
– Non-Uniform Memory Access (NUMA)

MP (MIMD), cluster of SMPs

[Figure: SMP nodes, each with several processors and caches plus memory and I/O on a node interconnection network; the nodes are joined by a cluster interconnection network]

• Combination of centralized and distributed

• Like an early version of the kongull-cluster

Distributed memory

1. Shared address space
• Logically shared, physically distributed
• Distributed Shared Memory (DSM)
• NUMA architecture

[Figure: Conceptual model: processors (P) sharing one memory (M) over a network]

2. Separate address spaces
• Every P-M module is a separate computer
• Multicomputer
• Clusters
• Not a focus in this course

[Figure: Conceptual model and implementation: P-M modules connected by a network]

Communication models

• Shared memory
– Centralized or Distributed Shared Memory
– Communication using LOAD/STORE
– Coordinated using traditional OS methods
  • Semaphores, monitors, etc.
– Busy-wait more acceptable than for uniprocessor

• Message passing
– Using send (put) and receive (get)
  • Asynchronous / Synchronous
– Libraries, standards
  • …, PVM, MPI, …

Limits to parallelism

• We need separate processes and threads!
– Can’t split one thread among CPUs/cores

• Parallel algorithms needed
– Separate field
– Some problems are inherently serial
  • P-complete problems
– Part of parallel complexity theory

• See minicourse TDT6 - Heterogeneous and green computing
  • http://www.idi.ntnu.no/emner/tdt4260/tdt6

• Amdahl’s law
– Serial fraction of code limits speedup
– Example: speedup = 80 with 100 processors requires at most 0.25% of the time spent on serial code
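The example can be checked by solving Amdahl's law, S = n / [1 + (n - 1)s], for the serial fraction s. A short Python sketch; the function name is hypothetical.

```python
def max_serial_fraction(target_speedup, n):
    """Largest serial fraction s that still allows target_speedup on n processors.

    Derived from S = n / (1 + (n - 1) * s)  =>  s = (n / S - 1) / (n - 1).
    """
    return (n / target_speedup - 1.0) / (n - 1)


# Speedup 80 on 100 processors: s = 0.25 / 99, i.e. roughly 0.25 %
s_max = max_serial_fraction(80, 100)
s_max_percent = 100 * s_max
```

So with 100 processors, only about a quarter of a percent of the execution time may be serial if a speedup of 80 is the target.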

SMP: Cache Coherence Problem

[Figure: Processors P1, P2, and P3, each with its own cache, share memory and I/O devices. Memory initially holds u = 5. Events 1 and 2: two processors read u and cache u = 5. Event 3: one processor writes u = 7. Events 4 and 5: reads of u (u = ?)]

• Processors see different values for u after event 3

• Old (stale) value read in event 4 (hit)

• Event 5 (miss) reads
– correct value (if write-through caches)
– old value (if write-back caches)

• Unacceptable to programs, and frequent!
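The five events can be replayed with a toy cache model that has no coherence protocol at all. This Python sketch is an illustration only: the class and function names are hypothetical, and the assignment of events to processors (P1 and P3 read, P3 writes, then P1 and P2 read) is an assumption about the figure.

```python
class Cache:
    """Private cache with no coherence protocol."""

    def __init__(self, memory, write_through):
        self.memory = memory
        self.write_through = write_through
        self.lines = {}

    def read(self, addr):
        if addr not in self.lines:              # miss: fetch from memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]                 # hit: may return a stale copy

    def write(self, addr, value):
        self.lines[addr] = value
        if self.write_through:                  # write-back would delay this
            self.memory[addr] = value


def run_events(write_through):
    memory = {"u": 5}
    p1, p2, p3 = (Cache(memory, write_through) for _ in range(3))
    p1.read("u")                                # event 1
    p3.read("u")                                # event 2
    p3.write("u", 7)                            # event 3
    return p1.read("u"), p2.read("u")           # events 4 and 5
```

With write-through caches the result is `(5, 7)`: the hit in event 4 is stale, the miss in event 5 is correct. With write-back caches it is `(5, 5)`: both readers see the old value.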

Enforcing coherence

• Separate caches make multiple copies frequent
– Migration
  • Moved from shared memory to local cache
  • Speeds up access, reduces memory bandwidth requirements
– Replication
  • Several local copies when item is read by several
  • Speeds up access, reduces memory contention

• Need coherence protocols to track shared data
– Directory based
  • Status in shared location (Chap. 4.4)
– (Bus) snooping
  • Each cache maintains local status
  • All caches monitor broadcast medium
  • Write invalidate / Write update

Snooping: Write invalidate

• Several reads or one write: No change

• Writes require exclusive access

• Writes to shared data: All other cache copies invalidated
– Invalidate command and address broadcast
– All caches listen (snoop) and invalidate if necessary

• Read miss:
– Write-Through: Memory always up to date
– Write-Back: Caches listen and any exclusive copy is put on the bus

Snooping: Write update

• Also called write broadcast

• Must know which cache blocks are shared

• Usually Write-Through
– Write to shared data: Broadcast, all caches listen and update their copy (if any)
– Read miss: Main memory is up to date

Snooping: Invalidate vs. Update

• Repeated writes to the same address (no reads) require several updates, but only one invalidate

• Invalidates are done at cache block level, while updates are done on individual words

• Delay from when a word is written until it can be read is shorter for updates

• Invalidate most common
– Less bus traffic
– Less memory traffic
– Bus and memory bandwidth typical bottleneck

An Example Snoopy Protocol

• Invalidation protocol, write-back cache

• Each cache block is in one state
– Shared: Clean in all caches and up-to-date in memory, block can be read
– Exclusive: One cache has the only copy, it is writeable, and dirty
– Invalid: block contains no data

Snooping: Invalidation protocol (1/6)

[Figure: Processors 0 to N-1 with caches, main memory, and I/O system on an interconnection network. Main memory holds x = 0. One processor issues "read x", which causes a read miss]

Snooping: Invalidation protocol (2/6)

[Figure: The block x = 0 is now in that processor's cache, in state shared]

Snooping: Invalidation protocol (3/6)

[Figure: A second processor issues "read x", which causes a read miss]

Snooping: Invalidation protocol (4/6)

[Figure: Both caches hold x = 0, each in state shared]

Snooping: Invalidation protocol (5/6)

[Figure: One processor issues "write x"; an invalidate is sent on the interconnection network]

Snooping: Invalidation protocol (6/6)

[Figure: The writing processor's cache holds x = 1 in state exclusive; main memory still holds x = 0]
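The six steps above can be replayed with a toy model of the three-state protocol. This Python sketch is only an illustration under simplifying assumptions: one word per block, a bus modeled as a list of caches, and hypothetical names (`Bus`, `SnoopyCache`). Which processors read and write is also an assumption.

```python
INVALID, SHARED, EXCLUSIVE = "invalid", "shared", "exclusive"


class Bus:
    def __init__(self, memory):
        self.memory = memory
        self.caches = []


class SnoopyCache:
    """Write-back cache with a write-invalidate snooping protocol."""

    def __init__(self, bus):
        self.bus = bus
        self.state = {}  # address -> INVALID / SHARED / EXCLUSIVE
        self.data = {}
        bus.caches.append(self)

    def _others(self):
        return [c for c in self.bus.caches if c is not self]

    def read(self, addr):
        if self.state.get(addr, INVALID) == INVALID:      # read miss
            for c in self._others():
                if c.state.get(addr) == EXCLUSIVE:        # owner supplies the dirty block
                    self.bus.memory[addr] = c.data[addr]
                    c.state[addr] = SHARED
            self.data[addr] = self.bus.memory[addr]
            self.state[addr] = SHARED
        return self.data[addr]

    def write(self, addr, value):
        for c in self._others():                          # broadcast invalidate
            c.state[addr] = INVALID
        self.data[addr] = value
        self.state[addr] = EXCLUSIVE                      # memory is updated later
```

After two reads and one write the writer holds the only valid copy in state exclusive while memory is stale; a later read miss by another processor forces the owner to put the block on the bus.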

Prefetching

Marius Grannæs

Feb 11th, 2011

www.ntnu.no M. Grannæs, Prefetching

About Me

• PhD from NTNU in Computer Architecture in 2010
• “Reducing Memory Latency by Improving Resource Utilization”
• Supervised by Lasse Natvig
• Now working for Energy Micro
• Working on energy profiling, caching and prefetching
• Software development

About Energy Micro

• Fabless semiconductor company
• Founded in 2007 by ex-Chipcon founders
• 50 employees
• Offices around the world
• Designing the world's most energy friendly microcontrollers
• Today: EFM32 Gecko
• Next Friday: EFM32 Tiny Gecko (cache)
• May(ish): EFM32 Giant Gecko (cache + prefetching)
• Ambition: 1% market share...
• of a $30 bn market.

What is Prefetching?

Prefetching

Prefetching is a technique for predicting future memory accesses and fetching the data into the cache

The Memory Wall

[Figure: CPU performance and memory performance (log scale, 1 to 100000) versus year, 1980 to 2010]

W. Wulf and S. McKee, "Hitting the Memory Wall: Implications of the Obvious"

A Useful Analogy

• An Intel Core i7 can execute 147600 Million Instructions per second.
• ⇒ A carpenter can hammer one nail per second.
• DDR3-1600 RAM can perform 65 Million transfers per second.
• ⇒ The carpenter must wait 38 minutes per nail.
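The 38 minutes follow from the ratio of the two rates. A short Python check of the arithmetic; the function name is hypothetical, and the rates are the ones quoted in the analogy.

```python
def wait_seconds_per_nail(instructions_per_second, transfers_per_second):
    # If one instruction is scaled to one second (one nail),
    # one memory transfer takes this many seconds on the same scale.
    return instructions_per_second / transfers_per_second


wait_minutes = wait_seconds_per_nail(147600e6, 65e6) / 60
```

The ratio is about 2271 instructions per transfer, which is a little under 38 minutes at one nail per second.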

Solution

Solution outline:
1 You bring an entire box of nails.
2 Keep the box close to the carpenter

Analysis: Carpentry

How long (on average) does it take to get one nail?

Nail latency

$L_{\text{Nail}} = L_{\text{Box}} + p_{\text{Box is empty}} \cdot (L_{\text{Shop}} + L_{\text{Traffic}})$

$L_{\text{Nail}}$: Time to get one nail.
$L_{\text{Box}}$: Time to check and fetch one nail from the box.
$p_{\text{Box is empty}}$: Probability that the box you have is empty.
$L_{\text{Shop}}$: Time to go to the shop (38 minutes).
$L_{\text{Traffic}}$: Time lost due to traffic.

Solution: (For computers)

• Faster, but smaller memory closer to the processor.
• Temporal locality
  • If you needed X in the past, you are probably going to need X in the near future.
• Spatial locality
  • If you need X, you probably need X + 1

⇒ If you need X, put it in the cache, along with everything else close to it (cache line)

Analysis: Caches

System latency

$L_{\text{System}} = L_{\text{Cache}} + p_{\text{Miss}} \cdot (L_{\text{Main Memory}} + L_{\text{Congestion}})$

$L_{\text{System}}$: Total system latency.
$L_{\text{Cache}}$: Latency of the cache.
$p_{\text{Miss}}$: Probability of a cache miss.
$L_{\text{Main Memory}}$: Main memory latency.
$L_{\text{Congestion}}$: Latency due to main memory congestion.
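The latency formula is easy to evaluate for a few cases. A minimal Python sketch; the function name and all numbers are illustrative assumptions, not measurements.

```python
def system_latency(l_cache, p_miss, l_main_memory, l_congestion=0.0):
    """Average latency: cache latency plus the miss probability times the miss penalty."""
    return l_cache + p_miss * (l_main_memory + l_congestion)


# Assumed example: 2-cycle cache, 400-cycle main memory, no congestion
latency_10_percent = system_latency(2, 0.10, 400)   # about 42 cycles
latency_1_percent = system_latency(2, 0.01, 400)    # about 6 cycles
```

Even a small miss probability dominates the average when the miss penalty is hundreds of cycles, which is why lowering the miss probability pays off so well.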

DRAM in perspective

• “Incredibly slow” DRAM has a response time of 15.37 ns.
• Speed of light is $3 \cdot 10^{8}$ m/s.
• Physical distance from processor to DRAM chips is typically 20 mm.

$\frac{2 \cdot 20 \cdot 10^{-3}\,\text{m}}{3 \cdot 10^{8}\,\text{m/s}} = 0.13\,\text{ns}$ (1)

• Just 2 orders of magnitude!
• Intel Core i7 - 147600 Million Instructions per second.
• Ultimate laptop - $5 \cdot 10^{50}$ operations per second/kg.

Lloyd, Seth, “Ultimate physical limits to computation”

When does caching not work?

The four Cs:
• Cold/Compulsory:
  • The data has not been referenced before
• Capacity
  • The data has been referenced before, but has been thrown out, because of the limited size of the cache.
• Conflict
  • The data has been thrown out of a set-associative cache because it would not fit in the set.
• Coherence
  • Another processor (in a multi-processor/core environment) has invalidated the cache line.

We can buy our way out of Capacity and Conflict misses, but not Cold or Coherence misses!

Cache Sizes

[Figure: Cache size (kB, log scale from 1 to 10000) versus year (1985 to 2010) for the 80486DX, Pentium, Pentium Pro, Pentium II, Pentium III, Pentium 4, Pentium 4E, Core 2, and Core i7]

Core i7 (Lynnfield) - 2009

Pentium M - 2003

Prefetching

Prefetching increases the performance of caches by predicting what data is needed and fetching that data into the cache before it is referenced. Need to know:
• What to prefetch?
• When to prefetch?
• Where to put the data?
• How do we prefetch? (Mechanism)

Prefetching Terminology

Good Prefetch
A prefetch is classified as Good if the prefetched block is referenced by the application before it is replaced.

Bad Prefetch
A prefetch is classified as Bad if the prefetched block is not referenced by the application before it is replaced.

Accuracy

The accuracy of a given prefetch algorithm that yields G good prefetches and B bad prefetches is calculated as:

Accuracy

$\text{Accuracy} = \frac{G}{G + B}$

Coverage

If a conventional cache has M misses without using any prefetch algorithm, the coverage of a given prefetch algorithm that yields G good prefetches and B bad prefetches is calculated as:

Coverage

$\text{Coverage} = \frac{G}{M}$
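Both metrics are simple ratios. A small Python sketch, with hypothetical function names and made-up counts, shows how they differ.

```python
def prefetch_accuracy(good, bad):
    """Fraction of issued prefetches that were useful: G / (G + B)."""
    return good / (good + bad)


def prefetch_coverage(good, misses_without_prefetching):
    """Fraction of the original misses removed by good prefetches: G / M."""
    return good / misses_without_prefetching


# Assumed example: 30 good and 10 bad prefetches, 120 misses without prefetching
accuracy = prefetch_accuracy(30, 10)    # 0.75
coverage = prefetch_coverage(30, 120)   # 0.25
```

A cautious prefetcher can have high accuracy but low coverage; an aggressive one tends to trade accuracy for coverage.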

Prefetching

System Latency

$L_{\text{System}} = L_{\text{Cache}} + p_{\text{Miss}} \cdot (L_{\text{Main Memory}} + L_{\text{Congestion}})$

• If a prefetch is good:
  • $p_{\text{Miss}}$ is lowered
  • ⇒ $L_{\text{System}}$ decreases

• If a prefetch is bad:
  • $p_{\text{Miss}}$ becomes higher because useful data might be replaced
  • $L_{\text{Congestion}}$ becomes higher because of useless traffic
  • ⇒ $L_{\text{System}}$ increases

21

Prefetching Techniques

Types of prefetching:
• Software
  • Special instructions.
  • Most modern high-performance processors have them.
  • Very flexible.
  • Can be good at pointer chasing.
  • Requires compiler or programmer effort.
  • Processor executes prefetches instead of computation.
  • Static (performed at compile-time).
• Hardware
  • Dedicated hardware analyzes memory references.
  • Most modern high-performance processors have them.
  • Fixed functionality.
  • Requires no effort by the programmer or compiler.
  • Off-loads prefetching to hardware.
  • Dynamic (performed at run-time).
• Hybrid
  • Dedicated hardware unit.
  • Hardware unit programmed by software.
  • Some effort required by the programmer or compiler.


Software Prefetching

for (i = 0; i < 10000; i++) {
    acc += data[i];
}

       MOV  r1, #0           ; acc
       MOV  r0, #0           ; i
Label: LOAD r2, r0(#data)    ; Cache miss! (400 cycles!)
       ADD  r1, r2           ; acc += data[i]
       INC  r0               ; i++
       CMP  r0, #10000       ; i < 10000
       BL   Label            ; branch if less


Software Prefetching II

for (i = 0; i < 10000; i++) {
    acc += data[i];
}

Simple optimization using __builtin_prefetch()

for (i = 0; i < 10000; i++) {
    __builtin_prefetch(&data[i + 10]);
    acc += data[i];
}

Why add 10 (and not 1)?
Prefetch distance: memory latency >> computation latency, so the prefetch must be issued several iterations before the data is needed.
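One common rule of thumb, sketched below, is to pick the distance as the memory latency divided by the time of one loop iteration, rounded up. The helper names are hypothetical, and the 40 cycles per iteration used in the note is an assumption picked only so that the 400-cycle miss from the earlier slide yields a distance of 10. The loop is split in two so that no address past the end of the array is formed. `__builtin_prefetch` is a GCC/Clang builtin.

```c
#include <stddef.h>

/* Smallest distance d (in iterations) such that d * cycles_per_iteration
 * covers the memory latency. Both inputs are estimates. */
size_t prefetch_distance(size_t memory_latency, size_t cycles_per_iteration) {
    if (cycles_per_iteration == 0)
        return 0;
    return (memory_latency + cycles_per_iteration - 1) / cycles_per_iteration;
}

/* Sum data[0..n-1], prefetching `distance` elements ahead. */
long sum_with_prefetch(const int *data, size_t n, size_t distance) {
    long acc = 0;
    size_t i = 0;
    /* Main loop: data[i + distance] is still inside the array. */
    for (; i + distance < n; i++) {
        __builtin_prefetch(&data[i + distance]);
        acc += data[i];
    }
    /* Tail: nothing left to prefetch, just finish the sum. */
    for (; i < n; i++)
        acc += data[i];
    return acc;
}
```

With these assumed numbers, `prefetch_distance(400, 40)` returns 10. The right value on a real machine depends on the actual latency and loop body, so it is best found by measurement.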


Software Prefetching III

for (i = 0; i < 10000; i++) {
    __builtin_prefetch(&data[i + 10]);
    acc += data[i];
}

Note:
• data[0] → data[9] will not be prefetched.
• data[10000] → data[10009] will be prefetched, but not used.

With G = 9990 good prefetches, B = 10 bad prefetches and M = 10000 misses without prefetching:

$$\text{Accuracy} = \frac{G}{G + B} = \frac{9990}{10000} = 0.999 = 99.9\%$$

$$\text{Coverage} = \frac{G}{M} = \frac{9990}{10000} = 0.999 = 99.9\%$$
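The counts behind these figures can be reproduced by walking the loop. The sketch below assumes, as the slide's numbers imply, that every element is its own cache block and that every access would miss without prefetching. The type and function names are made up for the example.

```c
#include <stddef.h>

typedef struct {
    size_t good;    /* G: prefetched and later referenced */
    size_t bad;     /* B: prefetched but never referenced */
    size_t misses;  /* M: misses without prefetching */
} prefetch_counts;

/* Models a loop over i in [0, n) that prefetches element i + distance. */
prefetch_counts count_prefetches(size_t n, size_t distance) {
    prefetch_counts c = {0, 0, n};   /* assumed: one miss per element */
    for (size_t i = 0; i < n; i++) {
        if (i + distance < n)
            c.good++;   /* data[i + distance] is read by a later iteration */
        else
            c.bad++;    /* target lies past the end of the loop range */
    }
    return c;
}
```

For n = 10000 and a distance of 10 this gives G = 9990, B = 10 and M = 10000.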


Complex Software

for (i = 0; i < 10000; i++) {
    __builtin_prefetch(&data[i + 10]);
    if (someFunction(i)) {
        acc += data[i];
    }
}

Does prefetching pay off in this case?
• How many times is someFunction(i) true?
• How much memory bus access is performed in someFunction(i)?
• Does power matter?

We have to profile the program to know!
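One number such a profile could report is how often the guarded load actually executes, since an unconditional prefetch is only useful for those iterations. A minimal sketch follows. The predicate is a hypothetical stand-in for someFunction(i), and the helper name is an assumption.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for someFunction(i): true for every fourth i. */
static bool some_function(size_t i) {
    return i % 4 == 0;
}

/* Fraction of iterations in [0, n) in which data[i] would be read. */
double used_fraction(size_t n) {
    size_t used = 0;
    for (size_t i = 0; i < n; i++) {
        if (some_function(i))
            used++;
    }
    return n ? (double)used / (double)n : 0.0;
}
```

With this stand-in predicate only a quarter of the elements are ever read, so if each element were its own cache block, roughly three out of four prefetches would be bad. The memory traffic inside the real someFunction(i) and the power cost still have to be measured separately.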


Dynamic Data Structures I

typedef struct node {
    int data;
    struct node *next;
} node_t;

while ((node = node->next) != NULL) {
    acc += node->data;
}


Dynamic Data Structures II

typedef struct node {
    int data;
    struct node *next;
    struct node *jump;
} node_t;

while ((node = node->next) != NULL) {
    __builtin_prefetch(node->jump);
    acc += node->data;
}
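The slide does not show how `jump` is initialised. One possibility, sketched here as an assumption, is to let it point a fixed number of nodes ahead, playing the role that `i + 10` plays for arrays. The function names are hypothetical, and unlike the slide's loop this traversal starts at the head node itself.

```c
#include <stddef.h>

typedef struct node {
    int data;
    struct node *next;
    struct node *jump;   /* assumed: node `distance` links ahead, or NULL */
} node_t;

/* Point every node's jump field at the node `distance` links further on. */
void set_jump_pointers(node_t *head, size_t distance) {
    node_t *ahead = head;
    for (size_t k = 0; k < distance && ahead != NULL; k++)
        ahead = ahead->next;
    for (node_t *n = head; n != NULL; n = n->next) {
        n->jump = ahead;
        if (ahead != NULL)
            ahead = ahead->next;
    }
}

/* Sum the list while prefetching through the jump pointers. */
long sum_list(const node_t *head) {
    long acc = 0;
    for (const node_t *n = head; n != NULL; n = n->next) {
        if (n->jump != NULL)
            __builtin_prefetch(n->jump);
        acc += n->data;
    }
    return acc;
}
```

The extra pointer costs memory in every node and has to be kept up to date when the list changes, which is part of the programmer effort that software prefetching requires.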


Hardware Prefetching

Software prefetching:
• Needs programmer effort to implement
• Prefetch instructions do no computation
• Compile-time
• Very flexible

Hardware prefetching:
• No programmer effort
• Does not displace compute instructions
• Run-time
• Not flexible


Sequential Prefetching

The simplest prefetcher, but surprisingly effective due to spatial locality.

Miss on address X ⇒ Fetch X+n, X+n+1, ..., X+n+j

n  Prefetch distance
j  Prefetch degree

Collectively known as prefetch aggressiveness.
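The rule can be written as a small address generator. This is a sketch only: the function name is an assumption, and addresses are taken to be cache block numbers rather than byte addresses. It follows the formula literally, so a degree of j produces the j + 1 addresses X+n through X+n+j.

```c
#include <stddef.h>
#include <stdint.h>

/* On a miss to block x, write the block addresses x+n, x+n+1, ..., x+n+j
 * into out (capacity out_len). Returns the number of addresses written. */
size_t sequential_prefetch(uint64_t x, uint64_t n, uint64_t j,
                           uint64_t *out, size_t out_len) {
    size_t count = 0;
    for (uint64_t k = 0; k <= j && count < out_len; k++)
        out[count++] = x + n + k;
    return count;
}
```

For example, a miss on block 100 with distance n = 1 and degree j = 3 requests blocks 101, 102, 103 and 104.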


Sequential Prefetching II

[Figure: bar chart. x-axis: Benchmark (libquantum, milc, leslie3d, GemsFDTD, lbm, sphinx3); y-axis: Speedup (1 to 5); series: Sequential.]