SWENG 505 Lecture 2: Basic concepts, by Dr. Phil Laplante, PE


Today’s topics

• The software crisis?
• Software uncertainty
• Life cycle models
• Software artifacts
• Some basic software management principles


The software crisis?

• The Chaos report
• Patterns of software systems failure and success
• State of the practice survey by Neill and Laplante


The Chaos report (Standish Group 95)

The study has been conducted every two years since 1994, drawing on data accumulated from a major survey on project success and failure.

This is a widely cited report (the 1994 edition can be found on the Web).

It predicted that US companies would spend $81 billion on cancelled software projects, out of a total of $250 billion in software spending in 1995.

31% of software projects studied would be cancelled before they were completed.

53% of software projects would overrun by more than 50%.

Only 9% of software projects for large companies would be delivered on time and within budget. For medium-sized and small companies, the numbers improved to 16% and 28%, respectively.


The Chaos report (2004)

In 2004, project success rates had increased to just over a third (34%) of all projects, more than double the 16.2% success rate reported for 1994.

Project failures have declined to 15%, versus 31% in 1994.

The lost dollar value for US projects in 2002 is estimated at $38 billion, with another $17 billion in cost overruns (out of $255 billion in project spending).

Time overruns have significantly increased to 82% from a low of 63% in the year 2000.

52% of required features and functions make it to the released product. This compares with 67% in the year 2000.

Source: www.standish.com, September 2004.

Chaos Report 2006

35% of software projects started in 2006 can be categorized as successful (vs. 16.2% in 1994), meaning they were completed on time, on budget, and met user requirements.

9% of projects begun were outright failures, compared with 31.1% in 1994.

46% of projects were described as challenged, meaning they had cost or time overruns or didn’t fully meet the user’s needs, down from 52.7% in 1994.

In 2006, software value was measured at 59 cents on the dollar. In 1998, that figure was 25 cents on the dollar.

45% of an application's features are never used, 19% are rarely used, 16% are sometimes used, 13% are often used, and finally, 7% are always used.
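Several of the Chaos figures quoted above can be cross-checked with simple arithmetic. The Python sketch below only recomputes shares from the reported numbers (the helper name `share` is hypothetical); it says nothing about how Standish collected the data, which is the subject of the criticism that follows.

```python
def share(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100.0 * part / whole

# 1995 prediction: $81B on cancelled projects out of $250B total spending
cancelled_1995 = share(81, 250)

# 2002 estimate: $38B lost plus $17B in cost overruns, out of $255B spending
lost_2002 = share(38 + 17, 255)

# 2006 feature-usage percentages as reported on the slide
usage_2006 = {"never": 45, "rarely": 19, "sometimes": 16, "often": 13, "always": 7}
never_or_rarely = usage_2006["never"] + usage_2006["rarely"]

if __name__ == "__main__":
    print(f"1995: {cancelled_1995:.1f}% of spending on cancelled projects")
    print(f"2002: {lost_2002:.1f}% of spending lost or overrun")
    print(f"2006: {never_or_rarely}% of features never or rarely used")
    print(f"2006 usage categories sum to {sum(usage_2006.values())}%")
```

Read this way, roughly a third of 1995 spending was predicted to go to cancelled projects, versus a little over a fifth lost or overrun in 2002.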


Do you believe the Standish Report?

A critical examination of the 1998 Chaos report revealed several flaws:
– It showed an improvement in cost overruns of 69% over the 1994 report, which is hardly likely.
– It didn’t define how cost overrun was measured, and it described cost overrun inconsistently.
– It didn’t report how projects were selected for inclusion (likely only failure stories made it in).

Source: Dyba, Kitchenham, Jorgensen


Payoff: dealing with failed projects

1. Prepare a communication plan
2. Perform a post-mortem audit
3. Form a contingency plan jointly with the sponsor
4. Modify the current development process to reflect the lessons learned
5. Reflect on your own role and responsibilities
6. Ensure continuity of service
7. Provide staff counseling and appropriate new assignments
8. Learn from mistakes
9. Review related project decisions and long-range IT plans
10. Determine responsibility of vendors

Source: Iacovou and Dexter


State of the practice survey by Neill and Laplante (Neill 2003)

• Web-based survey instrument
• Participation invited by email
• Reminder sent
• Data collected for several weeks
• Data cleaning
• Data analysis

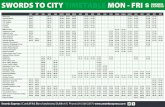

[Figure: Location of survey participants in Pennsylvania, New Jersey, Delaware, and Maryland, with the Penn State Great Valley campus marked.]


Response statistics (2003)

| | Invitation sent 3/14/02 | % of those sent | Reminder sent 3/21/02 | % |
|---|---|---|---|---|
| Number sent | 1917 | -- | 1519 | -- |
| Bounced back | 420 | 21.9 | 30 | 2.0 |
| Received | 1497 | 78.1 | 1489 | 98.0 |
| Viewed survey | 886 | 57.8 | 606 | 40.7 |
| Interacted | 378 | 25.3 | 176 | 11.8 |
| Did not interact | 1119 | 74.7 | 1318 | 88.2 |
| Opted out | 10 | 0.7 | 5 | 0.3 |

Yield = 194/1519 = 12.77%
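The yield is a simple ratio of completed responses to invitations. A minimal Python sketch, assuming (as the slide's own division does) that the denominator is the 1519 invitations counted in the reminder round; the function name `response_yield` is hypothetical. The same function reproduces the 21.9% bounce rate in the table and the 2008 participation rate (93/1855) quoted later.

```python
def response_yield(responses: int, invited: int) -> float:
    """Responses as a percentage of the invitations counted in the denominator."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return 100.0 * responses / invited

if __name__ == "__main__":
    print(f"2003 survey yield: {response_yield(194, 1519):.2f}%")
    print(f"Initial bounce rate: {response_yield(420, 1917):.1f}%")
    print(f"2008 participation rate: {response_yield(93, 1855):.2f}%")
```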


Surprise

It is not true that software projects are always late and over budget. On the contrary, they are frequently on time and on budget.

About 42% of the respondents thought that project costs were within budget estimates,

• but 35% believed that their projects were not.

Roughly 45% of respondents thought that projects were finished on time,

• but an equal number thought that their projects overran.


About 15% of the respondents thought that “project goals were achieved earlier than predicted,”

• but 60% disagreed with this statement.

However, 41% of the respondents thought that the project could have been completed faster, but at the expense of quality.

• About 40% disagreed with that statement.


Other findings

43% reported that error severity was significant.

• 34% believed that the corrective hours needed to resolve run-time problems were not minimal.

• 42% thought that the corrective hours needed were minimal.

2008 Survey

Repeated the 2003 survey (V. Marinelli* and P. Laplante)

Same questions, plus new questions on tools, software type, frameworks, and quality management

Relatively low response rate, but most of the previous findings prevailed

*Vincent Marinelli, “An Analysis of Current Trends in Requirements Engineering Practice,” Master of Software Engineering Thesis, Penn State, October 2008. Also to appear in Proceedings of the Hawaii Conference on Systems and Software, 2010.

Response Statistics


Participation rate 93/1855 ≈ 5%

Main Findings (1)

Use cases and scenarios are still the most popular requirements elicitation techniques.

Semi-formal notations such as UML are used about 30% of the time and formal methods are still infrequently used.

Storyboarding and interviews have emerged as popular requirements elicitation methods.

Perception of product capability and ease of use has improved since the previous survey.


Main Findings (2)

Delivery delays seem to be a bigger problem in the 2008 survey than they were in the 2002 survey.

The present survey also provided a number of new findings:

The commitment to quality and process dictated by the adoption of an SQM framework has a positive effect on the outcome of a project.


Main Findings (3)

Respondents who used an ALM tool reported no significant improvement in quality and productivity compared with those who either used no ALM tool or were unaware whether one was used.

The selection of a given SDLC methodology does not correlate well with quality and productivity measures, nor does the selection of methodology change the subjective assessments provided by the respondents.

[Survey result charts:
• Life Cycle Model Used
• Software Quality Management
• Software Development Frameworks
• Project Costs Were Within Budget
• The Duration of the Project was Within Schedule
• The Quality of the Development Team's Work was Acceptable
• The Project Could Have Been Completed Faster, But That Would Have Meant A Lower Quality Product]

Patterns of software systems failure and success (Jones 96)

| Technologies on unsuccessful projects | Technologies on successful projects |
|---|---|
| No historical software measurement data | Accurate software measurement |
| Failure to use automated estimating tools | Early use of estimating tools |
| Failure to use automated planning tools | Continuous use of planning tools |
| Failure to monitor progress or milestones | Formal progress reporting |
| Failure to use effective architecture | Formal architecture planning |
| Failure to use effective development methods | Formal development methods |
| Failure to use design reviews | Formal design reviews |
| Failure to use code inspections | Formal code inspections |
| Failure to include formal risk management | Formal risk management |
| Informal, inadequate testing | Formal testing methods |
| Manual design and specification | Automated design and specifications |
| Failure to use formal configuration control | Automated configuration control |
| More than 30% creep in user requirements | Less than 10% creep in user requirements |
| Inappropriate use of 4GLs | Use of suitable languages |
| Excessive and unmeasured complexity | Controlled and measured complexity |
| Little or no reuse of certified materials | Significant reuse of certified materials |
| Failure to define database elements | Formal database planning |

| Social factors observed on unsuccessful projects | Social factors observed on successful projects |
|---|---|
| Excessive schedule pressure | Realistic schedule expectation |
| Executive rejection of estimates | Executive understanding of estimates |
| Severe friction with clients | Cooperation with clients |
| Divisive corporate politics | Congruent management goals |
| Poor team communications | Excellent team communications |
| Naïve senior management | Experienced senior executives |
| Project management malpractice | Capable project management |
| Unqualified technical staff | Capable technical staff |


Why do projects fail?

• Unrealistic or unarticulated goals
• Inaccurate estimates of needed resources
• Badly defined system requirements
• Poor reporting of the project’s status
• Unmanaged risks
• Poor communication
• Use of immature technology
• Inability to handle complexity
• Sloppy development practices
• Poor project management
• Stakeholder politics
• Commercial pressures

Source: Charette


Software uncertainty

There are two main reasons why software project management is so difficult:
– Project management of anything is difficult.
– Software development is fraught with uncertainty.

In a general sense, we can view the management of any process as a two-part problem: people problems and domain-specific problems.

Let’s deal with the uncertainty aspect of software.

“The future is uncertain; you can count on it.” (Murphy)


Some sources of software uncertainty

| Software quality | Possible measurement approach | Sources of uncertainty |
|---|---|---|
| correctness | probabilistic measures, MTBF, MTFF | Dispersion about the mean; confidence intervals are uncertain. |
| interoperability | compliance with open standards | Open standards may not exist, and compliance with them is uncertain. |
| maintainability | anecdotal observation of resources spent | No direct measures other than person-hours after the fact. |
| performance | algorithmic complexity analysis, direct measurement, simulation | Guaranteeing performance for non-trivial systems is an NP-hard or NP-complete problem. |
| portability | anecdotal observation | Anecdotes are unreliable and therefore uncertain. |
| reliability | probabilistic measures, MTBF, MTFF, heuristic measures | Dispersion about the mean; confidence intervals are uncertain. |
| usability | user feedback from surveys and problem reports | These are based on human observation and hence uncertain. |
| verifiability | software monitors | Measures the absence of these properties, which is inherently uncertain; does not capture process or life cycle issues. |
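To make "dispersion about the mean" concrete for the correctness and reliability rows, here is a minimal Python sketch that estimates MTBF from observed times between failures and attaches an approximate 95% confidence interval. The normal approximation (mean ± 1.96 standard errors), the function name, and the sample data are assumptions for illustration; exact intervals for exponentially distributed failure times would use the chi-square distribution instead. With only a handful of failures the interval is wide, which is the uncertainty the table describes.

```python
import math
import statistics

def mtbf_with_interval(times_between_failures, z=1.96):
    """Estimate MTBF with an approximate confidence interval (normal approximation)."""
    n = len(times_between_failures)
    if n < 2:
        raise ValueError("need at least two observed intervals")
    mean = statistics.mean(times_between_failures)
    # Standard error of the mean, from the sample standard deviation
    stderr = statistics.stdev(times_between_failures) / math.sqrt(n)
    return mean, (mean - z * stderr, mean + z * stderr)

if __name__ == "__main__":
    # Hypothetical hours between successive failures observed during test
    observed = [10, 20, 30, 40]
    mtbf, (low, high) = mtbf_with_interval(observed)
    print(f"MTBF ~ {mtbf:.1f} h, approx. 95% CI ({low:.1f}, {high:.1f}) h")
```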


Uncertainty ≠ risk

Technical uncertainties
– We’ve discussed some of these.

Some personnel uncertainties
– Will your project really get all of the best people?
– Are there critical skills for which nobody is identified?
– Are there pressures to staff with available warm bodies?
– Are there pressures to overstaff in the early phases?
– Are the key people compatible?

Virtually all of this course is about risk identification, anticipation, and mitigation.


Boehm’s top 10 software risks (Boehm 1991)

1. Personnel shortfall
2. Unrealistic schedules and budgets
3. Developing the wrong functions and properties
4. Developing the wrong user interface
5. Gold-plating
6. Continuing stream of requirements changes
7. Shortfalls in externally furnished components
8. Shortfalls in externally performed tasks
9. Real-time performance shortfalls
10. Straining computer-science capabilities
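Boehm’s 1991 paper prioritizes risks by risk exposure: the probability of an unsatisfactory outcome multiplied by the loss if it occurs. The Python sketch below applies that product to three items from the list above. The probabilities, the loss figures (in person-weeks), and the `Risk`/`prioritize` names are hypothetical, chosen only to show how the ranking works.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    name: str
    probability: float  # hypothetical chance the risk materializes (0..1)
    loss: float         # hypothetical loss if it does, in person-weeks

    @property
    def exposure(self) -> float:
        # Risk exposure = probability of unsatisfactory outcome x loss
        return self.probability * self.loss

def prioritize(risks):
    """Return the risks sorted from highest to lowest exposure."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)

if __name__ == "__main__":
    risks = [
        Risk("Personnel shortfall", 0.3, 40),
        Risk("Unrealistic schedules and budgets", 0.5, 30),
        Risk("Gold-plating", 0.4, 10),
    ]
    for r in prioritize(risks):
        print(f"{r.name}: exposure {r.exposure:.1f} person-weeks")
```

With these made-up estimates, unrealistic schedules and budgets (exposure 15) outrank personnel shortfall (12) even though the latter has the larger loss, which is the point of weighting loss by probability.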


Gilb’s risk principles

The risk principle: if you don’t actively attack the risks, they will actively attack you.

The risk sharing principle: The real professional is one who knows the risks, their degree, their causes, and the action necessary to counter them and shares this knowledge with his colleagues and clients.

The risk prevention principle: risk prevention is more cost-effective than risk detection.


The principle of risk exposure: the degree of risk, and its causes, must never be hidden from decision makers.

The asking principle: if you don’t ask for risk information, you are asking for trouble.


Twelve tough questions to ask about risk (Gilb)

1. Why isn't the improvement quantified?

2. What is the degree of the risk or uncertainty, and why?

3. Are you sure? If not, why not?

4. Where did you get that from? How can I check it out?

5. How does your idea affect my goals and budgets, measurably?

6. Did we forget anything critical to survival?


7. How do you know it works that way? Did it before?

8. Have we got a complete solution? Are all requirements satisfied?

9. Are we planning to do the 'profitable things' first?

10. Who is responsible for failure or success?

11. How can we be sure the plan is working, during the project, early?

12. Is it ‘no cure, no pay’ in a contract? Why not?


Life cycle models

• The Waterfall model
• V model
• Evolutionary model
• Incremental model
• The spiral model
• Agile methodologies
• Unified Process Model (UPM)


The Waterfall model

Attributed to Winston Royce

The V Model

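[Figure: the V model plotted against time. The left leg descends from User Need through System Requirements, Software Requirements, and Software Design to Software Implementation; the right leg rises through Unit Testing, Integration Testing, Software Testing, and System Testing to Product Release. The system, software, integration, and unit test plans link each left-leg level to its corresponding test level.]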

Problem: if we rely on waterfall testing alone, the defects created first are detected last.

Managing and Leading Software Projects, by R. Fairley, © Wiley, 2009
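The point that defects created first are detected last can be made concrete by numbering the V-model stages in time order. In this minimal Python sketch, the stage names come from the figure, but the one-step-per-stage timeline and the names `STAGES`, `VERIFIED_BY`, and `detection_latency` are assumptions for illustration. A defect introduced in a left-leg stage is assumed to surface only at the test level that verifies that stage.

```python
# V-model stages in the order they occur over time (left leg, then right leg).
STAGES = [
    "System requirements",
    "Software requirements",
    "Software design",
    "Software implementation",
    "Unit testing",
    "Integration testing",
    "Software testing",
    "System testing",
]

# Each development stage is verified by the test level opposite it on the V.
VERIFIED_BY = {
    "System requirements": "System testing",
    "Software requirements": "Software testing",
    "Software design": "Integration testing",
    "Software implementation": "Unit testing",
}

def detection_latency(stage: str) -> int:
    """Stages elapsed between introducing a defect and its matching test level."""
    return STAGES.index(VERIFIED_BY[stage]) - STAGES.index(stage)

if __name__ == "__main__":
    for stage in VERIFIED_BY:
        print(f"{stage}: detected {detection_latency(stage)} stages later")
```

Under this simplification a system-requirements defect waits seven stages before system testing can expose it, while an implementation defect waits only one.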


Evolutionary model

Iteratively defines requirements for new system increments based on experience from previous iterations.

Also known as Evolutionary Prototyping, Rapid Delivery, Evolutionary Delivery Cycle and Rapid Application Delivery (RAD).

Cautions:
– Difficulty in estimating costs and schedule.
– Overall project completion time may be greater than if the scope and requirements are established completely before design.
– Care must be taken to ensure that the system does not resemble a patchwork of afterthought add-ons.
– Additional time must also be planned for integration and regression testing as increments are developed and added to the system.


Incremental model

Involves a series of detailed system increments, each incorporating new or improved functionality to the system.

Increments may be built serially or in parallel.

Difference between the Incremental and Evolutionary models:
– The Incremental model allows for parallel increments, and multiple releases are pre-planned.
– The Evolutionary model is sequential, and each release is based on the previous release.

Incremental model advantages:
– ease of understanding,
– early development of initial functionality,
– project management is more manageable for each increment.

May lead to increased system development effort because of necessary rework.

The Spiral Approach

A meta-level model for iterative/evolutionary development models
– earlier activities are revisited, revised, and refined on each pass of the spiral

Each cycle of a spiral model involves four steps:
Step 1 - determine objectives, alternatives, and constraints
Step 2 - identify risks for each alternative and choose one of the alternatives
Step 3 - implement the chosen alternative
Step 4 - evaluate results and plan for the next cycle of the spiral

The cycles continue until the desired objectives are achieved (or until time and resources are used up).

Can be implemented in both an evolutionary and iterative fashion.
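The four-step cycle and its stopping rule can be sketched as a loop. This is a minimal Python illustration under stated assumptions, not a prescribed implementation: the `Alternative`/`Project` types, the numeric risk scores and costs, and the Step 2 rule "pick the lowest-risk affordable alternative" are all hypothetical. The loop stops when the objectives are met or when no affordable alternative remains, mirroring the "time and resources are used up" condition.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Alternative:
    name: str
    risk: float   # hypothetical risk score; lower is safer
    cost: int     # hypothetical resources one cycle consumes (person-weeks)
    value: int    # hypothetical progress the cycle delivers toward the objectives

@dataclass
class Project:
    objective: int   # progress needed to meet the desired objectives
    budget: int      # time and resources still available (person-weeks)
    progress: int = 0
    log: List[tuple] = field(default_factory=list)

def run_spiral(project: Project,
               alternatives_for: Callable[[int], List[Alternative]]) -> Project:
    cycle = 0
    # Keep cycling until objectives are met or resources are used up.
    while project.progress < project.objective and project.budget > 0:
        cycle += 1
        # Step 1: determine objectives, alternatives, and constraints
        # (here the only constraint is the remaining budget).
        options = [a for a in alternatives_for(cycle) if a.cost <= project.budget]
        if not options:
            break  # no affordable alternative remains
        # Step 2: identify risks and choose one alternative (lowest risk).
        choice = min(options, key=lambda a: a.risk)
        # Step 3: implement the chosen alternative.
        project.budget -= choice.cost
        project.progress += choice.value
        # Step 4: evaluate results; the log feeds planning of the next cycle.
        project.log.append((cycle, choice.name, project.progress, project.budget))
    return project

if __name__ == "__main__":
    def options(cycle: int) -> List[Alternative]:
        return [Alternative("prototype", 0.2, 4, 3), Alternative("full build", 0.7, 10, 8)]

    for entry in run_spiral(Project(objective=9, budget=20), options).log:
        print(entry)
```

With the options shown, the loop takes three low-risk prototype cycles and meets the objective with 8 person-weeks left; with a larger objective and a 10-week budget it stops after two cycles because no affordable alternative remains.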


The spiral model (meta)

[Figure: the spiral model. Labels include planning, risk analysis, prototypes 1 to 3, simulations and benchmarks, and the activities concept, requirements, design, code, test, and maintenance.]

An Evolutionary Spiral Model

Each Cycle is One Month or Less

[Figure: an evolutionary spiral unfolding over time, marked "start here" and "end here". Each cycle passes through four steps:
1. ANALYZE: determine objectives, alternatives, and constraints
2. DESIGN: evaluate alternatives, identify the risks of each alternative, and choose one
3. DEVELOP: implement and test the chosen alternative
4. EVALUATE: examine results and decide what to do next]

Managing and Leading Software Projects, by R. Fairley, © Wiley, 2009

An Incremental Spiral Model

Each Cycle is One Week or Less

[Figure: an incremental spiral unfolding over time. Each cycle passes through four steps:
1. DESIGN: revise as necessary; select algorithms, data structures, and interface details for the next incremental build
2. IMPLEMENT: determine ways to obtain the code, evaluate the risk of each, obtain the code, and integrate it into the present version
3. VERIFY & VALIDATE: determine acceptability of the build; rework as necessary
4. DEMONSTRATE: evaluate the build with customer, users, and other stakeholders]

Managing and Leading Software Projects, by R. Fairley, © Wiley, 2009


Agile methodologies

• Adaptive rather than predictive
• People-oriented, not process-oriented
• A response to the problem of constantly changing requirements
• Also known as “lightweight” methodologies

More on these later.


Unified Process Model (UPM)

A metamodel for defining processes and their components.

Closely associated with the development of systems using object-oriented techniques.

Models a family of related software processes via the Unified Modeling Language (UML)

Developed to support the Rational Unified Process.

Consists of four phases, each with one or more iterations in which some technical capability is produced and demonstrated against a set of criteria.

Any tool based on UPM would be a tool for process authoring and customizing.


Phases of UPM

Inception
– Establish software scope, use cases, candidate architecture, risk assessment

Elaboration
– Baseline vision, baseline architecture, select components

Construction
– Component development, resource management and control

Transition
– Integration of components, deployment engineering, acceptance testing


Software artifacts

• Management
• Requirements
• Design
• Implementation
• Deployment


Management artifacts

• Work breakdown structure
• Business case
• Release specifications
• Software development plan
• Release descriptions
• Status assessments
• Software change order database
• Deployment documents
• Environment


Requirements artifacts

• Concept document
• Requirements model(s)
  – RFPs
  – User stories
  – Use cases
  – …

What else?


Design artifacts

• Design model(s)
• Test model
• Software architecture description

What else?


Implementation artifacts

• Source code baselines
• Associated compile-time files
• Component executables

Anything else?


Deployment artifacts

• Integrated product executable baselines
• Associated run-time files
• After-the-fact reports
• User manual

What else?


Some basic software management principles

• Boehm (old?)
• Davis (newer?)
• Royce (newest?)


Boehm’s top 10 list of industrial software metrics (Boehm 87)

Not really a set of metrics, but some rules of thumb:

1. Finding and fixing a software problem after delivery costs 100 times more than finding and fixing the problem in early design phases.

2. You can compress software development schedules up to 25% of nominal, but no more.

3. For every $1 you spend on development, you will spend $2 on maintenance.

4. Software development and maintenance costs are primarily a function of the number of source lines of code.

5. Variations among people account for the biggest differences in software productivity.


6. The overall ratio of software to hardware costs is still growing: in 1955 it was 15:85; in 1985, 85:15.

7. Only about 15% of software development effort is devoted to programming.

8. Software systems and products typically cost 3 times as much per SLOC as individual software programs. Software-systems products cost 9 times as much.

9. Walkthroughs catch 60% of the errors.

10. 80% of the contribution comes from 20% of the contributors.
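Rules 2, 3, and 10 are concrete enough to turn into quick checks. The Python sketch below reads rule 2 as "a schedule can shrink to no less than 75% of nominal" and rule 3 as "life-cycle cost is about three times development cost"; those readings, the function names, and the sample data are assumptions for illustration, and the rules themselves remain rules of thumb rather than laws.

```python
def min_feasible_schedule(nominal_months: float) -> float:
    """Rule 2 (as read here): compress by at most 25% of nominal."""
    return 0.75 * nominal_months

def schedule_is_feasible(requested_months: float, nominal_months: float) -> bool:
    return requested_months >= min_feasible_schedule(nominal_months)

def life_cycle_cost(development_cost: float) -> float:
    """Rule 3: every $1 of development implies about $2 of maintenance."""
    return development_cost + 2 * development_cost

def top_contributor_share(contributions, share=0.8):
    """Rule 10: smallest fraction of contributors producing `share` of the total."""
    ranked = sorted(contributions, reverse=True)
    total = sum(ranked)
    running = 0.0
    for count, c in enumerate(ranked, start=1):
        running += c
        if running >= share * total:
            return count / len(ranked)
    return 1.0

if __name__ == "__main__":
    print(min_feasible_schedule(12))          # shortest plausible schedule, in months
    print(schedule_is_feasible(8, 12))        # an 8-month demand on a 12-month nominal
    print(life_cycle_cost(1_000_000))         # development plus expected maintenance
    # Hypothetical contribution counts for a ten-person team
    print(top_contributor_share([40, 40, 5, 5, 2, 2, 2, 2, 1, 1]))
```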


Davis’ software management principles (Davis 95)

1. Make quality #1
2. High-quality software is possible
3. Give products to customers early
4. Determine the problem before writing the requirements
5. Evaluate design alternatives
6. Use an appropriate process model
7. Use different languages for different places
8. Minimize intellectual distance (software structure should be close to real-world structure)


9. Put techniques before tools
10. Get it right before you make it faster
11. Inspect code
12. Good management is more important than good technology
13. People are the key to success
14. Follow with care (beware of what you adopt)
15. Take responsibility


16. Understand the customer’s priorities

17. The more they see, the more they need

18. Plan to throw one away (from Brooks)

19. Design for change

20. Design without documentation is not design

21. Use tools, but be realistic

22. Avoid tricks

23. Encapsulate


24. Use coupling and cohesion

25. Use the McCabe complexity measure*

26. Don’t test your own software

27. Analyze causes for errors

28. Realize that software’s entropy increases

29. People and time are not interchangeable

30. Expect excellence

*While Royce agrees with many of Davis’ principles, he seems to dismiss metrics as relatively unimportant. This is a failing of UPM.
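Davis’ principle 25 refers to McCabe’s cyclomatic complexity, V(G) = E − N + 2P, where E is the number of edges in a module’s control-flow graph, N the number of nodes, and P the number of connected components. A minimal Python sketch follows; the example graph (a loop containing one if/else) is hypothetical, and inferring the node set from the edges assumes the graph has no isolated nodes.

```python
from typing import Iterable, Optional, Set, Tuple

Edge = Tuple[str, str]

def cyclomatic_complexity(edges: Iterable[Edge],
                          nodes: Optional[Set[str]] = None,
                          components: int = 1) -> int:
    """McCabe's V(G) = E - N + 2P for a control-flow graph."""
    edge_list = list(edges)
    if nodes is None:
        # Infer the node set from the edges (assumes no isolated nodes).
        nodes = {n for edge in edge_list for n in edge}
    return len(edge_list) - len(nodes) + 2 * components

# Hypothetical control-flow graph: a loop whose body contains one if/else.
#   start -> test; test -> branch (loop body) or end; branch -> a or b; a, b -> test
LOOP_WITH_IF = [
    ("start", "test"), ("test", "branch"), ("test", "end"),
    ("branch", "a"), ("branch", "b"), ("a", "test"), ("b", "test"),
]

if __name__ == "__main__":
    # 7 edges, 6 nodes, 1 component: two decisions plus one
    print(cyclomatic_complexity(LOOP_WITH_IF))
```

For a single-entry, single-exit module this equals the number of binary decisions plus one, which is why a straight-line module scores 1.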


Royce’s software management principles (Royce 98)

1. Base the process on an architecture-first approach

2. Establish an iterative life-cycle process

3. Transition design methods to emphasize component-based development

4. Establish a change management environment

5. Enhance change freedom through tools that support round-trip engineering


6. Capture design artifacts in rigorous, model-based notation (e.g. UML – is this rigorous?)

7. Instrument the process for objective quality control and progress assessment

8. Use a demonstration-based approach

9. Plan intermediate releases in groups of usage scenarios in evolving levels of detail

10. Establish a configurable process

The Main Points of Chapter 2 (1)

A development-process framework is a generic process model that can be tailored and adapted to fit the needs of various projects.

The development process for each software project must be designed with the same care used to design the product.

Process design is best accomplished by tailoring and adapting well-known development process models and process frameworks, just as product design is best accomplished by tailoring and adapting well-known architectural styles and architectural frameworks.

There are several well-known and widely used software development process models, including waterfall, incremental, evolutionary, agile, and spiral models.

The Main Points of Chapter 2 (2)

There are various ways to obtain the needed software components; different ways of obtaining software components require different mechanisms of planning, measurement, and control.

The development phases of a software project can be interleaved and iterated in various ways.

Iterative development processes provide the advantages of:
– continuous integration,
– iterative verification and validation of the evolving product,
– frequent demonstrations of progress,
– early detection of defects,
– early warning of process problems,
– systematic incorporation of the inevitable rework that occurs in software development, and
– early delivery of subset capabilities (if desired).

The Main Points of Chapter 2 (3)

Depending on the iterative development process used, the duration of iterations ranges from 1 day to 1 month.

Prototyping is a technique for gaining knowledge; it is not a development process.

SEI, ISO, IEEE, and PMI provide frameworks, standards, and guidelines relevant to software development process models (see Appendix 2A to Chapter 2).


References

Barry Boehm, “Industrial Software Metrics Top 10 List,” IEEE Software, vol. 4, no. 5, 1987.

Barry Boehm, “Software Risk Management: Principles and Practice,” IEEE Software, vol. 8, no. 1, 1991, pp. 32-41.

R. Charette, “Why Software Fails,” IEEE Spectrum, September 2005, pp. 42-49.

Alan Davis, “Fifteen Principles of Software Engineering,” IEEE Software, vol. 11, no. 6, 1994, pp. 94-96, 101.

Richard Fairley, Managing and Leading Software Projects, Wiley, 2009.

Tom Gilb, “Principles of Risk Management,” http://www.gilb.com/Download/Risk.pdf.

C. Iacovou and A. Dexter, “Surviving IT Project Cancellations,” Communications of the ACM, vol. 48, no. 4, April 2005, pp. 83-86.


Capers Jones, Patterns of Software Systems Failure and Success, International Thomson Computer Press, Boston MA, 1996.

T. Dyba, B. Kitchenham, and M. Jorgensen, “Evidence-Based Software Engineering for Practitioners,” IEEE Software, January/February 2005, pp. 58-65.

Colin J. Neill and Phillip A. Laplante, “Requirements Engineering: The State of the Practice,” IEEE Software, vol. 20, no. 6, November 2003, pp. 40-46.

Dave Pultorak, “An Introduction to IT Service Management: ITIL, MOF, and the ITSM Reference Model,” presentation to the CIO Institute, http://www.techcouncil.org/whitepapers/davidpultorak.pdf, 2/18/04.

Standish Group, Chaos, 1995 (can be found on the Web).