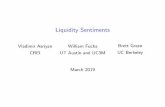

Sentiments Improvement

-

Upload

misha-kozik -

Category

Engineering

-

view

99 -

download

4

description

Transcript of Sentiments Improvement

Sentiment improvements

Proposed ideas:

Part I. Data preprocessing

Part II. PMI-IR approach

Team members:

Denys Astanin, Mykhailo Kozik

Data preprocessing

Raw data → preprocessing steps → Preprocessed data

Narrowing long words: goooood → good

Emoticons decoding: :'( → cry

Spell correction: I am shure that is realy exsellent plece → I am sure that is really excellent place

Abbreviations decoding: lol → laughing out loud

Tags detection: @Alex nice photo #photoworld

Narrowing long words

This hotel so goooooooood! (NEUTRAL) → This hotel so good! (POSITIVE)

This place not coooooool! (NEUTRAL) → This place not cool! (NEGATIVE)

Using a regexp, narrow every run of more than 2 identical letters in a word down to just 2:

goooooood → good (correct narrowing)

baaaaaad → baad (incorrect narrowing, but the spell-checker will correct it)

Try this regexp: http://regexr.com?30abm
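The narrowing rule can be sketched in a few lines. This is a minimal Python illustration, not the authors' Clojure code or the regexp behind the link above; the name `narrow` and the choice to match ASCII letters only are assumptions.

```python
import re

# A letter followed by two or more copies of itself (a run of 3+).
# Assumption: only ASCII letters are narrowed, so "???" and "..." stay intact.
_LONG_RUN = re.compile(r"([A-Za-z])\1{2,}")

def narrow(text: str) -> str:
    """Collapse every run of 3 or more identical letters down to exactly 2."""
    return _LONG_RUN.sub(r"\1\1", text)
```

For example, `narrow("baaaaaad")` gives `baad`, which still needs the spell-correction step. Note that a rule this simple also rewrites `www` to `ww`, so URLs would have to be protected before it is applied.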

Narrowing long words. Examples

dancing with the stars and two and a half men toniiiight

@BrunoMars you were AMAZINGGGGGG at the vma's need to see you!

RT @BriannaStull13: I hateeeeeee pandora ads....

It was sooo badddd

Woooooooooooow I Like that, very nice and big like

Thts cooool

i hack any thing but for moneyyyy

who know how hacked one add fb??? pleaseeee

Narrowing long words. Performance

| | 10K | 100K | 1M |
|---|---|---|---|
| Long words | 83.13 msec | 828.30 msec | 8370.97 msec (~8 sec) |
| Normal words | 31.92 msec | 275.34 msec | 2763.77 msec (~3 sec) |
| Mixed words* | 35.23 msec | 339.23 msec | 3370.31 msec (~3 sec) |

* assuming that 1% of words are long words

Emoticons decoding

Using a map of smile meanings, convert each smile to the word it stands for:

<3 → love

:( → sad

Look at her http://t.co/12345 <3 (NEUTRAL) → Look at her http://t.co/12345 love (POSITIVE)

I will be out of work tomorrow :( (NEUTRAL) → I will be out of work tomorrow sad (NEGATIVE)

List of emoticons: http://en.wikipedia.org/wiki/List_of_emoticons

Emoticons decoding. Examples

Awww He is Too cute :) Thanks bae next weekend..

@LenovoDoTour I have missed these two days in Belgrade :(

Katie Holmes <3 #VMA

ahaha just to warn you!! ;)

it's amazing how Oracle can do so much! I'm loving it <3

please someone help me i need to finish this im out of time!! thank!! :D

Boa noite, viajantes! Menos um diazinho nessa semana =)

:-( don't have my Mcard number required to fill out form

Emoticons decoding. Performance

| | 10K | 100K | 1M |
|---|---|---|---|
| 1-smile list | 45.03 msec | 444.62 msec | 4426.74 msec (~4 sec) |
| 5-smile list | 189.87 msec | 1304.10 msec (~1 sec) | 12355.37 msec (~12 sec) |
| 10-smile list | 227.26 msec | 2325.23 msec (~2 sec) | 26954.26 msec (~27 sec) |

Performance degrades sharply as the smile list grows because of the method used to perform the replacements. Better results can be achieved by using state machines or regexps.
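One way to follow the regexp suggestion is to compile the whole smile map into a single alternation, so the text is scanned once no matter how many smiles the map holds. The sketch below is in Python and only illustrates the idea; the map contains just the three smiles quoted in this deck, and the names `SMILES` and `decode_smiles` are hypothetical.

```python
import re

# Smile meanings taken from the examples in this deck.
SMILES = {"<3": "love", ":(": "sad", ":'(": "cry"}

# One alternation for the whole map. Longer smiles come first so that a
# short smile can never shadow a longer one that starts the same way.
_SMILE_RE = re.compile(
    "|".join(re.escape(s) for s in sorted(SMILES, key=len, reverse=True))
)

def decode_smiles(text: str) -> str:
    """Replace every known smile with its meaning in a single pass."""
    return _SMILE_RE.sub(lambda m: SMILES[m.group(0)], text)
```

The difference from replacing one smile at a time is that the number of passes over the text no longer grows with the size of the map.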

Abbreviations decoding

Using a map of abbreviations, convert each abbreviation to the phrase it stands for:

lol → laughing out loud

thx → thanks

Got it! lol (NEUTRAL) → Got it! laughing out loud (POSITIVE)

I was DWI, haha (NEUTRAL) → I was driving while intoxicated, haha (NEGATIVE)

List of abbreviations: http://www.smartdefine.org/internet_slang/abbreviations/r
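A minimal Python sketch of this lookup, assuming case-insensitive matching on whole words only (so `lol` inside `lollipop` is left alone). The table holds only the abbreviations quoted above, and `decode_abbreviations` is a hypothetical name.

```python
import re

# Abbreviations quoted in this deck; a real table would be much larger.
ABBREVIATIONS = {
    "lol": "laughing out loud",
    "thx": "thanks",
    "dwi": "driving while intoxicated",
}

_WORD = re.compile(r"[A-Za-z]+")

def decode_abbreviations(text: str, table=ABBREVIATIONS) -> str:
    """Expand whole-word abbreviations, ignoring case; leave other words as-is."""
    return _WORD.sub(lambda m: table.get(m.group(0).lower(), m.group(0)), text)
```

Because the lookup happens per word, the cost per word does not depend on the size of the table.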

Abbreviation decoding. Examples

No offense though.. Lol

O lmao!

http://t.co/Evvh4hj ROFL

JFYI #blackcarpet

Nice code LOL

TNX you Rose! We appreciate it!

OMG, FML!

Wait me, i will be AFK

Emoticons and Abbreviations

Alternative approach:

● Abbreviations, acronyms, and slang words are already parsed as tokens

● Parse smiles as tokens in FX as well

● "Tune sentiments" can then be used on these tokens

Spell correction

Perform spell correction on data before sentiment calculation

I lov this hotel! (NEUTRAL) → I love this hotel! (POSITIVE)

They have terryble servic (NEUTRAL) → They have terrible service (NEGATIVE)

Spell correction. Examples

i hope @ladygaga will take some rest now becauce of...

But its still also hilarioouss

Shoukd i wast my money?

Business eviroment

It's impossibru!

I like dansing! <3

You can dowload the data from http://to.download/file

Coleguaues, lets keep it clean.

Spell correction. Edit distance

Edit types:

Deletion: beauetiful → beautiful

Insertion: speling → spelling

Substitution: performanse → performance

Swapping: yaer → year

Examples

unsucesful → unsuccesful → unsuccessful (2 edits)

wardoub → wardroub → wardrobu → wardrobe (3 edits)
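The edit counts above can be checked with a distance function that allows all four edit types. The Python sketch below uses the optimal string alignment variant of Damerau-Levenshtein distance; that choice of variant and the name `edit_distance` are assumptions, since the deck does not say how distances were computed.

```python
def edit_distance(a: str, b: str) -> int:
    """Minimum number of deletions, insertions, substitutions, and adjacent
    swaps needed to turn a into b (optimal string alignment variant)."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i                      # delete all i characters of a
    for j in range(cols):
        d[0][j] = j                      # insert all j characters of b
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution (or match)
            )
            # Swap of two adjacent characters.
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]
```

With this function, `edit_distance("unsucesful", "unsuccessful")` is 2 and `edit_distance("wardoub", "wardrobe")` is 3, matching the examples.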

Spell correction. Algorithm

Peter Norvig's spelling corrector:

● Bayes rule approach

● Train data

● Simple implementation

● High performance

● Low accuracy

More theory: http://norvig.com/spell-correct.html

Train data: http://norvig.com/big.txt
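The core of that approach fits in a short sketch. The Python below follows the structure of Norvig's published corrector (generate candidates by edit distance, keep the known ones, pick the most frequent), but it is not the authors' Clojure implementation. The toy corpus is made up from the example sentences in this deck and stands in for big.txt; `make_corrector` is a hypothetical helper name.

```python
import re
from collections import Counter

LETTERS = "abcdefghijklmnopqrstuvwxyz"

def words(text):
    return re.findall(r"[a-z]+", text.lower())

def edits1(word):
    """All strings one deletion, swap, substitution, or insertion away."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [l + r[1:] for l, r in splits if r]
    swaps = [l + r[1] + r[0] + r[2:] for l, r in splits if len(r) > 1]
    replaces = [l + c + r[1:] for l, r in splits if r for c in LETTERS]
    inserts = [l + c + r for l, r in splits for c in LETTERS]
    return set(deletes + swaps + replaces + inserts)

def make_corrector(counts):
    """Build a correct(word) function from a word-frequency Counter."""
    def known(candidates):
        return {w for w in candidates if w in counts}

    def correct(word):
        candidates = (
            known([word])
            or known(edits1(word))
            or known(e2 for e1 in edits1(word) for e2 in edits1(e1))
            or {word}                      # nothing known: keep the input
        )
        return max(candidates, key=lambda w: counts[w])

    return correct

# Toy training text (an assumption; the deck trains on big.txt).
TOY_CORPUS = "i love this hotel they have terrible service i love the service"
correct = make_corrector(Counter(words(TOY_CORPUS)))
```

With the toy corpus, `correct("lov")` returns `love` and `correct("terryble")` returns `terrible`. Accuracy in practice depends heavily on the training text, which is why the train data appears in the improvement lists.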

Spell correction. Coverage

Candidates within edit distance 1 or 2 together cover 98% of misspellings.

Spell correction. Accuracy

| | Test data 1 | Test data 2 |
|---|---|---|
| 1 edit | 61.8% | 67.2% |
| 2 edits | 71.2% | 74.1% |

Test data 1: Wikipedia: Common misspelled words (~4k), http://en.wikipedia.org/wiki/Wikipedia:Lists_of_common_misspellings/For_machines

Test data 2: Birkbeck spelling error corpus (270), http://www.ota.ox.ac.uk/headers/0643.xml

Spell correction. Performance

| | 10K | 100K | 1M |
|---|---|---|---|
| 1 edit | 11350.52 msec (~11 sec) | 117261.12 msec (~2 min) | 1252882.23 msec (~20 min) |
| 2 edits | 4300631.29 msec (~70 min) | n/a | n/a |

n/a: due to quadratic complexity these tests make no sense.

Spell-check complexity per word:

Edit distance 1: O(C·n)

Edit distance 2: O(C²·n²)

where n is the length of the word and C ≈ 50.
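As a rough check on the constant C, assume a 26-letter alphabet (an assumption; the deck does not state one). A word of length n then has n deletions, n − 1 swaps, 26n substitutions, and 26(n + 1) insertions at edit distance 1:

$$
n + (n - 1) + 26n + 26(n + 1) = 54n + 25
$$

So C comes out near 54, in line with C ≈ 50. Expanding each of those candidates again gives on the order of $(54n + 25)^2$ strings at edit distance 2. For n = 8 that is 457 candidates at distance 1 against roughly $457^2 = 208{,}849$ at distance 2 (duplicates included), which matches the jump from seconds to over an hour in the measurements.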

Spell correction. Improvements

Performance:

● Memoize corrections (best case → O(1))

● Give the user the ability to perform spell correction

● Improve train data

Coverage & accuracy:

● Use more edit candidates

● Use common misspelling rules

● Use weights for edit operations

● Take part of speech into account

● Take context into account

● Improve train data
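Memoizing corrections could look like the Python sketch below. `expensive_correct` is a hypothetical stand-in for a real corrector: it only counts how often it is called and knows two fixed corrections, so the effect of the cache is visible. The cache size is an arbitrary assumption.

```python
from functools import lru_cache

CALLS = {"count": 0}

def expensive_correct(word: str) -> str:
    """Hypothetical stand-in for a slow corrector; counts its invocations."""
    CALLS["count"] += 1
    return {"lov": "love", "servic": "service"}.get(word, word)

@lru_cache(maxsize=100_000)  # size chosen arbitrarily for illustration
def cached_correct(word: str) -> str:
    """Same result as expensive_correct, but each distinct word is computed once."""
    return expensive_correct(word)

def correct_sentence(sentence: str) -> str:
    return " ".join(cached_correct(w) for w in sentence.split())
```

Since social-media text repeats a small set of words very often, most lookups would hit the cache and skip candidate generation entirely.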

Tags detection

Process source-specific information (Twitter) differently:

I say to @love hello! (NEUTRAL) → I say to - hello! (POSITIVE)

I mean that i #hatetwitter (NEUTRAL) → I mean that i hate twitter (NEGATIVE)

● Hashtag (#music): use a word splitter

● Username (@LadyGaga): just ignore it
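The two rules can be sketched with a pair of regexps. This Python illustration replaces usernames with `-`, as in the example above, and hands each hashtag body to a splitter function supplied by the caller. The names `strip_tags` and `split` are hypothetical, and treating `\w+` as the tag body is a simplifying assumption.

```python
import re

_MENTION = re.compile(r"@\w+")
_HASHTAG = re.compile(r"#(\w+)")

def strip_tags(text: str, split=lambda tag: tag) -> str:
    """Neutralize @usernames and expand #hashtags.

    split receives the hashtag without '#' and returns the text to put
    in its place; by default the tag body is kept unchanged.
    """
    text = _MENTION.sub("-", text)
    return _HASHTAG.sub(lambda m: split(m.group(1)), text)
```

In a full pipeline `split` would be the statistical word splitter; a fixed lookup such as `{"hatetwitter": "hate twitter"}.get` is enough to try it out.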

Tags detection. Examples

@INevaTrustEm ok :) we need to make a date for this

Watching @danieltosh #toofunny

#lovetolaugh

#sick

Avatar, #wasteofmoney

#soft #thissucks

#happytweet

RT @BriannaStull13: what do you mean?

Tags detection. Words splitting

Dynamic programming

Statistical approach due to ambiguity:

#orcore → [orc_ore], [or_core]

#expertsexchange → [expert_sex_change], [experts_exchange]

Train data

Dictionary (default Linux word list, ~100K words)
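A minimal Python sketch of such a splitter: memoized dynamic programming over split points, scoring each candidate by the product of unigram probabilities and accepting dictionary words only. The word counts below are invented purely to show how frequencies settle the ambiguity; they are not the deck's train data, and `make_splitter` is a hypothetical name.

```python
import math
from functools import lru_cache

def make_splitter(counts):
    """Build split(tag) from a dict mapping known words to frequencies."""
    total = sum(counts.values())
    max_len = max(map(len, counts))

    @lru_cache(maxsize=None)
    def best(text):
        """Return (log-probability, words) for the best split of text,
        or (-inf, None) if text cannot be split into known words."""
        if not text:
            return 0.0, ()
        options = []
        for i in range(1, min(len(text), max_len) + 1):
            head, tail = text[:i], text[i:]
            if head in counts:
                tail_score, tail_words = best(tail)
                if tail_words is not None:
                    score = math.log(counts[head] / total) + tail_score
                    options.append((score, (head,) + tail_words))
        return max(options) if options else (float("-inf"), None)

    def split(tag):
        _, found = best(tag.lower())
        return list(found) if found is not None else [tag]

    return split

# Invented counts, for illustration only.
TOY_COUNTS = {
    "experts": 5, "exchange": 5, "expert": 10, "sex": 3, "change": 20,
    "or": 50, "core": 8, "orc": 1, "ore": 2,
}
split = make_splitter(TOY_COUNTS)
```

With these counts, `split("expertsexchange")` returns `["experts", "exchange"]` and `split("orcore")` returns `["or", "core"]`. A tag that cannot be covered by dictionary words comes back unsplit.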

Tags detection. Twitter hashtags

Twitter hashtags (~800) crawled from:

http://hashtags.org/

http://kingnetforums.weebly.com/twitter-hashtags-lists.html

http://edudemic.com/2011/10/twitter-hashtag-dictionary/

http://nicolehumphrey.net/60-favorite-twitter-hashtags-for-writers-clickable-list/

http://www.dailywritingtips.com/40-twitter-hashtags-for-writers/

http://greeneconomypost.com/green-twitter-hashtag-17290.htm

Tags detection. Performance

| | 100 | 400 | 800 |
|---|---|---|---|
| Time | 4019.73 msec (~4 sec) | 6429.19 msec (~6 sec) | 7897.23 msec (~8 sec) |
| Accuracy | 83.00% | 86.25% | 84.88% |

Main problems:

● The train set often fails to resolve ambiguity

● Dictionary lookups filter out many correct candidates

#rapnotamusic → [ra_p_not_a_music]

Words splitting. Improvements

Performance:

● Memoize splitting

● Prefix tree approach

● Viterbi algorithm (http://en.wikipedia.org/wiki/Viterbi_algorithm)

● Improve train data

Accuracy:

● Use famous names, geographic locations, slang, abbreviations, acronyms, ...

● Big dictionary

● Improve train data (Twitter-specific)
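The prefix tree idea can be sketched as follows: store the dictionary in a trie, then find every dictionary word that starts at a given position with one walk down the tree instead of one lookup per candidate length. This Python sketch is an illustration under that reading of the bullet; `build_trie`, `prefixes`, and the word list are hypothetical.

```python
END = ""  # end-of-word marker; never collides with a one-character key

def build_trie(words):
    """Build a nested-dict prefix tree from an iterable of words."""
    root = {}
    for word in words:
        node = root
        for ch in word:
            node = node.setdefault(ch, {})
        node[END] = True
    return root

def prefixes(trie, text, start=0):
    """Return every dictionary word that begins at text[start], shortest first."""
    node, found = trie, []
    for i in range(start, len(text)):
        node = node.get(text[i])
        if node is None:       # no dictionary word continues this way
            break
        if END in node:
            found.append(text[start:i + 1])
    return found
```

The walk stops as soon as no dictionary word can continue, which matters for long hashtags where most substrings are not words.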

Preprocessing performance

Input conditions:

Data: 2.4K (incorrect) of 15.8K (total) from Omniture15K.xls file (15%)

Emoticons size: 14 most common smiles

Abbreviations size: 8 most common abbreviations

Spell-correction distance: 1

Train data: big.txt

Dictionary: linux-words.txt

Results:

Sentence count: 2412

Preprocessing time: 29214.88 msec (~29 sec)

Number of corrected sentences: 368

Percent of corrected to incorrect data: 15.28%

Percent of corrected to total data: 2.33%

Data preprocessing. Future.

Sentence breaker

Environment

Hardware:

CPU: 2 x Intel Pentium Dual T2370 @ 1.73GHz

RAM: 2.0 GB

Software:

OS: Ubuntu 11.04

Kernel: Linux 2.6.38-13-generic

IDE: Emacs 23.2.1

Programming: Clojure 1.3