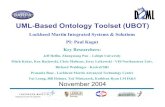

Semantic Knowledge Management : an Ontology-based Framework

Transcript of Semantic Knowledge Management : an Ontology-based Framework

Semantic Knowledge Management: An Ontology-Based Framework

Antonio ZilliUniversity of Salento, Italy

Ernesto DamianiUniversity of Milan, Italy

Paolo CeravoloUniversity of Milan, Italy

Angelo CoralloUniversity of Salento, Italy

Gianluca EliaUniversity of Salento, Italy

Hershey • New YorkInformatIon scIence reference

Director of Editorial Content: Kristin KlingerSenior Managing Editor: Jennifer NeidigManaging Editor: Jamie SnavelyAssistant Managing Editor: Carole CoulsonTypesetter: Amanda Appicello Cover Design: Lisa TosheffPrinted at: Yurchak Printing Inc.

Published in the United States of America by Information Science Reference (an imprint of IGI Global)701 E. Chocolate Avenue, Suite 200Hershey PA 17033Tel: 717-533-8845Fax: 717-533-8661E-mail: [email protected] site: http://www.igi-global.com

and in the United Kingdom byInformation Science Reference (an imprint of IGI Global)3 Henrietta StreetCovent GardenLondon WC2E 8LUTel: 44 20 7240 0856Fax: 44 20 7379 0609Web site: http://www.eurospanbookstore.com

Copyright © 2009 by IGI Global. All rights reserved. No part of this publication may be reproduced, stored or distributed in any form or by any means, electronic or mechanical, including photocopying, without written permission from the publisher.

Product or company names used in this set are for identification purposes only. Inclusion of the names of the products or companies does not indicate a claim of ownership by IGI Global of the trademark or registered trademark.

Library of Congress Cataloging-in-Publication Data

Semantic knowledge management : an ontology-based framework / Antonio Zilli ... [et al.], editor.

p. cm.

Summary: "This book addresses the Semantic Web from an operative point of view using theoretical approaches, methodologies, and software applications as innovative solutions to true knowledge management"--Provided by publisher.

Includes bibliographical references and index.

ISBN 978-1-60566-034-9 (hardcover) -- ISBN 978-1-60566-035-6 (ebook)

1. Knowledge management. 2. Semantic Web. 3. Semantic networks (Information theory) I. Zilli, Antonio.

HD30.2.S457 2009

658.4'038--dc22

2008009117

British Cataloguing in Publication DataA Cataloguing in Publication record for this book is available from the British Library.

All work contributed to this book set is original material. The views expressed in this book are those of the authors, but not necessarily of the publisher.

If a library purchased a print copy of this publication, please go to http://www.igi-global.com/agreement for information on activating the library's complimentary electronic access to this publication.

Editorial Advisory Board

Emanuele CaputoUniversity of Salento, Italy

Maria Chiara CascheraCNR Institute of Research on Population and Social Policies, Italy

Paolo CeravoloUniversity of Milan, Italy

Virginia CisterninoUniversity of Salento, Italy

Angelo CoralloUniversity of Salento, Italy

Maurizio De TommasiUniversity of Salento, Italy

Gianluca EliaUniversity of Salento, Italy

Fernando FerriCNR Institute of Research on Population and Social Policies, Italy

Cristiano FugazzaUniversity of Milan, Italy

Marcello LeidaUniversity of Milan, Italy

Carlo MastroianniInstitute of High Performance Computing and Net-working, CNR-ICAR, Italy

Davy MonticoloSeT Laboratory, University of Technology UTBM, France

Gianfranco PedoneComputer and Automation Research Institute of the Hungarian Academy of Sciences, Hungary

Giuseppe PirròUniversità della Calabria, Italy

Karl ReedLa Trobe University, Australia

Monica ScannapiecoUniversità di Roma “La Sapienza”, Italy

Giusy SecundoUniversity of Salento, Italy

Nico SicaPolimetrica Publisher, Italy

Cesare TaurinoUniversity of Salento, Italy

Giuseppe TurrisiUniversity of Salento, Italy

Marco VivianiUniversity of Milan, Italy

Dario ZaUniversity of Salento, Italy

Antonio ZilliUniversity of Salento, Italy

Preface ...............................................................................................................................................xvii

Acknowledgment ................................................................................................................................ xxi

Section IKnowledge-Based Innovations for the Web Infrastructure

Chapter IKIWI: A Framework for Enabling Semantic Knowledge Management ................................................ 1 Ernesto Damiani, University of Milan, Italy Paolo Ceravolo, University of Milan, Italy Angelo Corallo, University of Salento, Italy Gianluca Elia, University of Salento, Italy Antonio Zilli, University of Salento, Italy

Chapter IIIntroduction to Ontology Engineering ................................................................................................. 25 Paolo Ceravolo, University of Milan, Italy Ernesto Damiani, University of Milan, Italy

Chapter IIIOntoExtractor: A Tool for Semi-Automatic Generation and Maintenance of Taxonomies from Semi-Structured Documents ................................................................................................................ 51 Marcello Leida, University of Milan, Italy

Chapter IVSearch Engine: Approaches and Performance ..................................................................................... 74 Eliana Campi, University of Salento, Italy Gianluca Lorenzo, University of Salento, Italy

Table of Contents

Chapter VTowards Semantic-Based P2P Reputation Systems ............................................................................ 101 Ernesto Damiani, University of Milan, Italy Marco Viviani, University of Milan, Italy

Chapter VISWELS: A Semantic Web System Supporting E-Learning ................................................................ 120 Gianluca Elia, University of Salento, Italy Giustina Secundo, University of Salento, Italy Cesare Taurino, University of Salento, Italy

Chapter VIIApproaches to Semantics in Knowledge Management ...................................................................... 146 Cristiano Fugazza, University of Milan, Italy Stefano David, Polytechnic University of Marche, Italy Anna Montesanto, Polytechnic University of Marche, Italy Cesare Rocchi, Polytechnic University of Marche, Italy

Chapter VIIIA Workflow Management System for Ontology Engineering ........................................................... 172 Alessandra Carcagnì, University of Salento, Italy Angelo Corallo, University of Salento, Italy Antonio Zilli, University of Salento, Italy NunzioIngraffia,EngineeringIngegneriaInformaticaS.p.A.,Italy Silvio Sorace, Engineering Ingegneria Informatica S.p.A., Italy

Section IISemantic in Organizational Knowledge Management

Chapter IXActivity Theory for Knowledge Management in Organisations ........................................................ 201 Lorna Uden, Staffordshire University, UK

Chapter XKnowledge Management and Interaction in Virtual Communities .................................................... 216 Maria Chiara Caschera, Institute for Research on Population and Social Policies, Italy Arianna D’Ulizia, Institute for Research on Population and Social Policies, Italy Fernando Ferri, Institute for Research on Population and Social Policies, Italy Patrizia Grifoni, Institute for Research on Population and Social Policies, Italy

Chapter XIAn Ontological Approach to Managing Project Memories in Organizations ..................................... 233 Davy Monticolo, SeT Laboratory, University of Technology UTBM, France Vincent Hilaire, SeT Laboratory, University of Technology UTBM, France Samuel Gomes, SeT Laboratory, University of Technology UTBM, France AbderrafiaaKoukam,SeTLaboratory,UniversityofTechnologyUTBM,France

Section IIISemantic-Based Applications

Chapter XIIK-link+: A P2P Semantic Virtual Office for Organizational Knowledge Management .................... 262 Carlo Mastroianni, Institute of High Performance Computing and Networking CNR-ICAR, Italy Giuseppe Pirrò, University of Calabria, Italy Domenico Talia, EXEURA S.r.l., Italy, & University of Calabria, Italy

Chapter XIIIFormalizing and Leveraging Domain Knowledge in the K4CARE Home Care Platform ................ 279 Ákos Hajnal, Computer and Automation Research Institute of the Hungarian Academy of Sciences, Hungary Antonio Moreno, University Rovira i Virgili, Spain Gianfranco Pedone, Computer and Automation Research Institute of the Hungarian Academy of Sciences, Hungary David Riaño, University Rovira i Virgili, Spain László Zsolt Varga, Computer and Automation Research Institute of the Hungarian Academy of Sciences, Hungary

Chapter XIVKnowledge Management Implementation in a Consultancy Firm .................................................... 303 Kuan Yew Wong, Universiti Teknologi Malaysia, Malaysia Wai Peng Wong, Universiti Sains Malaysia, Malaysia

Chapter XVFinancial News Analysis Using a Semantic Web Approach .............................................................. 311 Alex Micu, Erasmus University Rotterdam, The Netherlands Laurens Mast, Erasmus University Rotterdam, The Netherlands Viorel Milea, Erasmus University Rotterdam, The Netherlands Flavius Frasincar, Erasmus University Rotterdam, The Netherlands Uzay Kaymak, Erasmus University Rotterdam, The Netherlands

Chapter XVIEnhancing E-Business on the Semantic Web through Automatic Multimedia Representation .......... 329 Manjeet Rege, Wayne State University, USA Ming Dong, Wayne State University, USA Farshad Fotouhi, Wayne State University, USA

Chapter XVIIUtilizing Semantic Web and Software Agents in a Travel Support System ....................................... 341 Maria Ganzha, EUH-E and IBS Pan, Poland Maciej Gawinecki, IBS Pan, Poland Marcin Paprzycki, SWPS and IBS Pan, Poland RafałGąsiorowski,WarsawUniversityofTechnology,Poland Szymon Pisarek, Warsaw University of Technology, Poland Wawrzyniec Hyska, Warsaw University of Technology, Poland

Chapter XVIIIPersonalized Information Retrieval in a Semantic-Based Learning Environment ............................ 370 Antonella Carbonaro, University of Bologna, Italy Rodolfo Ferrini, University of Bologna, Italy

Compilation of References .............................................................................................................. 390

About the Contributors ................................................................................................................... 419

Index ................................................................................................................................................ 427

Preface ...............................................................................................................................................xvii

Acknowledgment ................................................................................................................................ xxi

Section IKnowledge-Based Innovations for the Web Infrastructure

Chapter IKIWI: A Framework for Enabling Semantic Knowledge Management ................................................ 1 Ernesto Damiani, University of Milan, Italy Paolo Ceravolo, University of Milan, Italy Angelo Corallo, University of Salento, Italy Gianluca Elia, University of Salento, Italy Antonio Zilli, University of Salento, Italy

Research on semantic-aware knowledge management provides new solutions, technologies, and methods to manage organizational knowledge. These solutions open new opportunities to “virtual challenges” as e-collaboration, e-business, e-learning, and e-government. The research carried out for the KIWI (Knowledge-based Innovation for the Web Infrastructure) project is focused on the strategies for the current Web evolution in the more powerful Semantic Web, where formal semantic representation of resources enables a more effective knowledge sharing. The first pillar of the KIWI framework concerns development of ontologies as a metadata layer. Resources can be formally and semantically annotated with these metadata, while search engines or software agents can use them for retrieving the right in-formation item or applying their reasoning capabilities. The second pillar of the KIWI framework is focused on the semantic search engine. Their capabilities and functionalities have to be improved in order to take advantage of the new semantic descriptions. A set of prototypal tools that enable knowl-edge experts to produce a semantic knowledge management system was delivered by the project. The KIWI framework and tools are applied in some projects for designing and developing knowledge-based platforms with positive results.

Chapter IIIntroduction to Ontology Engineering ................................................................................................. 25 Paolo Ceravolo, University of Milan, Italy Ernesto Damiani, University of Milan, Italy

Detailed Table of Contents

This chapter provides an introduction to ontology engineering discussing the role of ontologies in infor-mative systems, presenting a methodology for ontology design, and introducing ontology languages. The chapter starts by explaining why ontology is needed in informative system, then it introduces the reader to ontologies by leading the reader in a stepwise guide to ontology design. It concludes by introducing ontology languages and standards. This is a primer reading aimed at preparing novice readers of this book to understanding more complex dissertations, for this reason it can be avoided by expert readers.

Chapter IIIOntoExtractor: A Tool for Semi-Automatic Generation and Maintenance of Taxonomies from Semi-Structured Documents ................................................................................................................ 51 Marcello Leida, University of Milan, Italy

This chapter introduces OntoExtractor, a tool for semi-automatic generation of taxonomy from a set of documents or data sources. The tool generates the taxonomy in a bottom-up fashion: starting from structural analysis of the documents, it generates a set of clusters, which can be refined by a further grouping generated by content analysis. Metadata describing the content of each cluster is automati-cally generated and analysed by the tool for generating the final taxonomy. A simulation of a tool, based on implicit and explicit voting mechanism, for the maintenance of the taxonomy is also described. The author describes a system that can be used to generate taxonomy from a heterogeneous source of information, using wrappers for converting the original format of the document to a structured one. This way OntoExtractor can virtually generate taxonomy from any source of information just adding the proper wrapper. Moreover, the trust mechanism allows a reliable method for maintaining the taxonomy and for overcoming the unavoidable generation of wrong classes in the taxonomy.

Chapter IVSearch Engine: Approaches and Performance ..................................................................................... 74 Eliana Campi, University of Salento, Italy Gianluca Lorenzo, University of Salento, Italy

This chapter summarizes technologies and approaches that permit to search information in a knowledge base. The chapter suggests that the search based on both keywords and ontology allows a more effective search, which understands the semantic of the information in a variety of data. The chapter starts with the presentation of current methods to build taxonomy, fill the taxonomy with documents, and search docu-ments. Then, we describe our experience related to the creation of an informal taxonomy, the automatic classification and the validation of search results with traditional measures, which are precision, recall and f-measure. We intend to show that the use of ontology for domain representation and knowledge search offers a more efficient approach for knowledge management. This approach focuses on the meaning of the word, and thus becoming an important element in the building of the Semantic Web.

Chapter VTowards Semantic-Based P2P Reputation Systems ............................................................................ 101 Ernesto Damiani, University of Milan, Italy Marco Viviani, University of Milan, Italy

Peer-to-peer (P2P) systems represent nowadays a large portion of Internet traffic, and are fundamental data sources. In a pure P2P system, since no peer has the power or responsibility to monitor and restrain others behaviours, there is no method to verify the trustworthiness of shared resources, and malicious peers can spread untrustworthy data objects to the system. Furthermore, data descriptions are often simple features directly connected to data or annotations based on heterogeneous schemas, a fact that makes difficult to obtain a single coherent trust value on a resource. This chapter describes techniques where the combination of Semantic Web and peer-to-peer technologies is used for expressing the knowledge shared by peers in a well-defined and formal way. Finally, dealing with Semantic-based P2P networks, the chapter suggests a research effort in this direction, where the association between cluster-based overlay networks and reputation systems based on numerical approaches seems to be promising.

Chapter VISWELS: A Semantic Web System Supporting E-Learning ................................................................ 120 Gianluca Elia, University of Salento, Italy Giustina Secundo, University of Salento, Italy Cesare Taurino, University of Salento, Italy

This chapter presents a prototypal e-Learning system based on the Semantic Web paradigm, called SWELS (Semantic Web E-Learning System). The chapter starts by introducing e-Learning as an efficient and just-in-time tool supporting the learning processes; then a brief description of the evolution of distance learning technologies will be provided, starting from first generation e-Learning systems until the current Virtual Learning Environments and Managed Learning Environments, by underling the main differences between them and the need to introduce standards for e-Learning with which to manage and overcome problems related to learning content personalization and updating. Furthermore, some limits of the tra-ditional approaches and technologies for e-Learning will be provided by proposing the Semantic Web as an efficient and effective tool for implementing new generation e-Learning systems. In the last section of the chapter the SWELS system is proposed, by describing the methodology adopted for organizing and modelling its knowledge base, by illustrating its main functionalities, and by providing the design of the tool together with the implementation choices. Finally, future developments of SWELS will be presented, together with some remarks regarding the benefits for the final user in using such system.

Chapter VIIApproaches to Semantics in Knowledge Management ...................................................................... 146 Cristiano Fugazza, University of Milan, Italy Stefano David, Polytechnic University of Marche, Italy Anna Montesanto, Polytechnic University of Marche, Italy Cesare Rocchi, Polytechnic University of Marche, Italy

There are different approaches to modelling a computational system, each providing a different seman-tics. We present a comparison between different approaches to semantics and aim at identifying which peculiarities are needed to provide a system with a uniquely interpretable semantics. We discuss different approaches, namely, description logics, artificial neural networks, and relational database management systems. We identify classification (the process of building a taxonomy) as common trait. However, in this paper we also argue that classification is not enough to provide a system with a Semantics, which

emerges only when relations between classes are established and used among instances. Our contribu-tion also analyses additional features of the formalisms that distinguish the approaches: closed vs. open world assumption, dynamic versus. static nature of knowledge, the management of knowledge, and the learning process.

Chapter VIIIA Workflow Management System for Ontology Engineering ........................................................... 172 Alessandra Carcagnì, University of Salento, Italy Angelo Corallo, University of Salento, Italy Antonio Zilli, University of Salento, Italy NunzioIngraffia,EngineeringIngegneriaInformaticaS.p.A.,Italy Silvio Sorace, Engineering Ingegneria Informatica S.p.A., Italy

The Semantic Web approach based on the ontological representation of knowledge domains seems very useful for improving document management practices, the formal and machine-mediated communication among people and work team and supports knowledge based productive processes. The effectiveness of a semantic information management system is set by the quality of the ontology. The development of ontologies requires experts on the application domain and on the technical issues as representation formalism, languages and tools. In this chapter a methodology for ontology developing is presented. It is structured in six phases (feasibility study, explication of the knowledge base, logic modelling, implementation, test, extension, and maintaining) and highlights the flow of information among phases and activities, the external variables required for completing the project, the human and structural resources involved in the process. The defined methodology is independent of any particular knowledge field, so it can be used whenever an ontology is required. The methodology for ontology developing was implemented in a prototypal workflow management system that will deployed in the back office area of the SIMS--Semantic Information Management System, a technological platform that is going to be developed for the research project DISCoRSO founded by the Italian Minister of University and Research. The main components of the workflow management system are the editor and the runtime environment. The Enhydra JaWE and Enhydra Shark are well suited as they implement the workflow management standards (languages), are able to manage complex projects (many tasks, activities, people) are open source.

Section IISemantic in Organizational Knowledge Management

Chapter IXActivity Theory for Knowledge Management in Organisations ........................................................ 201 Lorna Uden, Staffordshire University, UK

Current approaches to knowledge management systems (KMS) tend to concentrate development mainly on the technical aspects, but ignore the social organisational issues. Effective KMS design requires that the role of technologies should be that of supporting business knowledge processes rather than storing data. Cultural historical activity theory (CHAT) can be used as a theoretical model to analyse the devel-

opment of knowledge management systems and knowledge sharing. Activity theory as a philosophical and cross disciplinary framework for studying different forms of human practices is well suited for study research within a community of practice such as knowledge management in collaborative research. This paper shows how activity theory can be used as a kernel theory for the development of a knowledge management design theory for collaborative work.

Chapter XKnowledge Management and Interaction in Virtual Communities .................................................... 216 Maria Chiara Caschera, Institute for Research on Population and Social Policies, Italy Arianna D’Ulizia, Institute for Research on Population and Social Policies, Italy Fernando Ferri, Institute for Research on Population and Social Policies, Italy Patrizia Grifoni, Institute for Research on Population and Social Policies, Italy

This chapter provides a classification of virtual communities of practice according to methods and tools offered to virtual community’s members for knowledge management and for the interaction process. It underlines how these methods and tools support users during the exchange of knowledge, enable learning, and increase user ability to achieve individual and collective goals. In this chapter virtual communities are classified in virtual knowledge-sharing communities of practice and virtual learning communities of practice according to the collaboration strategy. A further classification defines three kinds of virtual communities according to the knowledge structure: ontology-based VCoP; digital library-based VCoP; and knowledge map-based VCoP. This chapter describes also strategies of interaction used to improve the knowledge sharing and learning in groups and organization. It shows how agent-based method sup-ports interaction among community’s members, improves the achievement of knowledge and encourages the level of participation of users. Finally this chapter describes the system’s functionalities that support browsing and searching processes in collaborative knowledge environments.

Chapter XIAn Ontological Approach to Managing Project Memories in Organizations ..................................... 233 Davy Monticolo, SeT Laboratory, University of Technology UTBM, France Vincent Hilaire, SeT Laboratory, University of Technology UTBM, France Samuel Gomes, SeT Laboratory, University of Technology UTBM, France AbderrafiaaKoukam,SeTLaboratory,UniversityofTechnologyUTBM,France

Knowledge Management (KM) is considered by many organizations to be a key aspect in sustaining competitive advantage. In the mechanical design domain, the KM facilitates the design of routine product and brings a saving time for innovation. This chapter describes the specification of a project memory as an organizational memory to specify knowledge to capitalize all along project in order to be reuse. Afterwards it presents the design of a domain ontology and a multi agent system to manage project memories all along professional activities. As a matter of fact, these activities require that engineers with different specialities collaborate to carry out the same goal. Inside professional activities, they use their know-how and knowledge in order to achieve the laid down goals. The professional actors competences and knowledge modelling allows the design and the description of agents’ know-how. Furthermore, the paper describes the design of our agent model based on an organisational approach and the role of a domain ontology called OntoDesign to manage heterogeneous and distributed knowledge.

Section IIISemantic-Based Applications

Chapter XIIK-link+: A P2P Semantic Virtual Office for Organizational Knowledge Management .................... 262 Carlo Mastroianni, Institute of High Performance Computing and Networking CNR-ICAR, Italy Giuseppe Pirrò, University of Calabria, Italy Domenico Talia, EXEURA S.r.l., Italy, & University of Calabria, Italy

This chapter introduces a distributed framework for OKM (Organizational Knowledge Management) which allows IKWs (Individual Knowledge Workers) to build virtual communities that manage and share knowledge within workspaces. The proposed framework, called K-link+, supports the emergent way of doing business of IKWs, which require allows work at any time from everywhere, by exploiting the VO (Virtual Office) model. Moreover, since semantic aspects represent a key point in dealing with organizational knowledge, K-link+ is supported by an ontological framework composed of: (i) an UO (Upper Ontology), which defines a shared common background on organizational knowledge domains; (ii) a set of UO specializations, namely workspace ontologies or personal ontologies, that can be used to manage and search content; (iii) a set of COKE (Core Organizational Knowledge Entities) which provide a shared definition of human resources, technological resources, knowledge objects, services and (iv) an annotation mechanism that allows one to create associations between ontology concepts and knowledge objects. K-link+ features a hybrid (partly centralized and partly distributed) protocol to guarantee the consistency of shared knowledge and a distributed voting mechanism to foster the evolu-tion of ontologies on the basis of user needs.

Chapter XIIIFormalizing and Leveraging Domain Knowledge in the K4CARE Home Care Platform ................ 279 Ákos Hajnal, Computer and Automation Research Institute of the Hungarian Academy of Sciences, Hungary Antonio Moreno, University Rovira i Virgili, Spain Gianfranco Pedone, Computer and Automation Research Institute of the Hungarian Academy of Sciences, Hungary David Riaño, University Rovira i Virgili, Spain László Zsolt Varga, Computer and Automation Research Institute of the Hungarian Academy of Sciences, Hungary

This chapter proposes an agent-based architecture for home care support whose main capability is to continuously admit and apply new medical knowledge, entered into the system, capturing and codifying implicit knowledge deriving from the medical staff. Knowledge is the fundamental catalyst in all ap-plication domains and this is particularly true especially for the medical context. Knowledge formaliza-tion, representation, exploitation, creation and sharing are some of the most complex issues related to Knowledge Management. Moreover, Artificial Intelligence techniques and Multi-Agent System (MAS) in health care are increasingly justifying the large demand for their application since traditional techniques are often not suitable to manage complex tasks or to adapt to unexpected events. The manuscript presents

also a methodology for approaching medical knowledge management, from its representation symbolism to the implementation details. The codification of health care treatments, as well as the formalization of domain knowledge, serves as an explicit, a priori asset for the agent platform implementation. The system has the capability of applying new, implicit knowledge emerging from physicians.

Chapter XIVKnowledge Management Implementation in a Consultancy Firm .................................................... 303 Kuan Yew Wong, Universiti Teknologi Malaysia, Malaysia Wai Peng Wong, Universiti Sains Malaysia, Malaysia

KM has become an important strategy for improving organisational competitiveness and performance. Organisations can certainly benefit from the lessons learnt and insights gained from those that have adopted it. This chapter presents the results of a case study conducted in a consultancy firm and the major aim is to identify how KM has been developed and implemented. Specifically, the elements investigated in the case study include the following KM aspects: strategies and activities; leadership and coordination; systems and tools; training; culture and motivation; outcomes and measurement; and implementation approach. Hopefully, the information extracted from this study will be beneficial to other organisations that are embarking on the KM journey.

Chapter XVFinancial News Analysis Using a Semantic Web Approach .............................................................. 311 Alex Micu, Erasmus University Rotterdam, The Netherlands Laurens Mast, Erasmus University Rotterdam, The Netherlands Viorel Milea, Erasmus University Rotterdam, The Netherlands Flavius Frasincar, Erasmus University Rotterdam, The Netherlands Uzay Kaymak, Erasmus University Rotterdam, The Netherlands

In this chapter we present StockWatcher, an OWL-based Web application that enables the extraction of relevant news items from RSS feeds concerning the NASDAQ-100 listed companies. The application’s goal is to present a customized, aggregated view of the news categorized by different topics. We distin-guish between four relevant news categories: i) news regarding the company itself; ii) news regarding direct competitors of the company; iii) news regarding important people of the company; and iv) news regarding the industry in which the company is active. At the same time, the system presented in this chapter is able to rate these news items based on their relevance. We identify three possible effects that a news message can have on the company, and thus on the stock price of that company: i) positive; ii) negative; and iii) neutral. Currently, StockWatcher provides support for the NASDAQ-100 companies. The selection of the relevant news items is based on a customizable user portfolio that may consist of one or more of these companies.

Chapter XVIEnhancing E-Business on the Semantic Web through Automatic Multimedia Representation .......... 329 Manjeet Rege, Wayne State University, USA Ming Dong, Wayne State University, USA Farshad Fotouhi, Wayne State University, USA

With the evolution of the next generation Web—the Semantic Web—e-business can be expected to grow into a more collaborative effort in which businesses compete with each other by collaborating to provide the best product to a customer. Electronic collaboration involves data interchange with multimedia data being one of them. Digital multimedia data in various formats have increased tremendously in recent years on the Internet. An automated process that can represent multimedia data in a meaningful way for the Semantic Web is highly desired. In this chapter, we propose an automatic multimedia representation system for the Semantic Web. The proposed system learns a statistical model based on the domain specific training data and performs automatic semantic annotation of multimedia data using eXtensible Markup Language (XML) techniques. We demonstrate the advantage of annotating multimedia data using XML over the traditional keyword based approaches and discuss how it can help e-business.

Chapter XVIIUtilizing Semantic Web and Software Agents in a Travel Support System ....................................... 341 Maria Ganzha, EUH-E and IBS Pan, Poland Maciej Gawinecki, IBS Pan, Poland Marcin Paprzycki, SWPS and IBS Pan, Poland RafałGąsiorowski,WarsawUniversityofTechnology,Poland Szymon Pisarek, Warsaw University of Technology, Poland Wawrzyniec Hyska, Warsaw University of Technology, Poland

The use of Semantic Web technologies in e-business is hampered by the lack of large, publicly-available sources of semantically-demarcated data. In this chapter, we present a number of intermediate steps on the road toward the Semantic Web. Specifically, we discuss how Semantic Web technologies can be adapted as the centerpiece of an agent-based travel support system. First, we present a complete description of the system under development. Second, we introduce ontologies developed for, and utilized in, our system. Finally, we discuss and illustrate through examples how ontologically demarcated data collected in our system is personalized for individual users. In particular, we show how the proposed ontologies can be used to create, manage, and deploy functional user profiles.

Chapter XVIIIPersonalized Information Retrieval in a Semantic-Based Learning Environment ............................ 370 Antonella Carbonaro, University of Bologna, Italy Rodolfo Ferrini, University of Bologna, Italy

Active learning is the ability of learners to carry out learning activities in such a way that they will be able to effectively and efficiently construct knowledge from information sources. Personalized and customiz-able access on digital materials collected from the Web according to one’s own personal requirements and interests is an example of active learning. Moreover, it is also necessary to provide techniques to locate suitable materials. In this chapter, we introduce a personalized learning environment providing intelligent support to achieve the expectations of active learning. The system exploits collaborative and semantic approaches to extract concepts from documents, and maintaining user and resources profiles based on domain ontologies. In such a way, the retrieval phase takes advantage of the common knowledge base used to extract useful knowledge and produces personalized views of the learning system.

Compilation of References .............................................................................................................. 390

About the Contributors ................................................................................................................... 419

Index ................................................................................................................................................ 427

xvii

Preface

In the last few years, many international organizations and enterprises have designed, developed, and deployed advanced knowledge management systems that are now vital for their daily operations. Multi-faceted, complex content is increasingly important for companies and organizations’ successful operation and competitiveness.

The Semantic Web perspective has added to knowledge management systems a new capability-- rea-soning on ontology-based metadata. In many application fields, however, data semantics is getting more and more context- and time-dependent, and cannot be fixed once and for all at design time.

Recently, some novel knowledge generation and access paradigms such as augmented cognition, case-study-based reasoning, and episodic games have shown the capability of accelerating the kinetics of ideas and competence transmission in creative communities, allowing organizations to exploit the high interactive potential of broadband and mobile network access.

In this new scenario, traditional design-time data semantics frozen in database schemata or other metadata is only a starting point. Online, emergent semantics are playing an increasingly important role. The supply of semantics is twofold: firstly, human designers are responsible for providing initial semantic mappings between information and its environment used for context-aware access. Secondly, the meaning of data is dynamically augmented and adapted taking into account organizational processes and, in general, human cognition.

The Semantic Web paradigm was proposed to tackle some of the problems related to implicit rep-resentation of data semantics affecting Web-related data items (e.g., email messages or HTML pages), providing the capability of updating and modifying ontology-based semantic annotations. Today, ad-vanced knowledge management platforms incorporate on-demand production of Semantic-Web style metadata based on explicit, shared reference systems such as ontology vocabularies, which consist of explicit though partial definitions of the intended meaning for a domain of discourse. However, provid-ing a consistent Semantic-Web style explicit representation of an organization’s data semantics is only a first step for leveraging organizational knowledge.

It is widely recognized that business ontologies can be unstable and that managing ontology evolution and alignment is a constant challenge, as well as a heavy computational burden. Indeed, this problem cannot be tackled without realizing that integrating initial, design-time semantics with emergent, interac-tion-time semantics is as much an organizational, business-related process as a technology-based one.

The KIWI VIsIon

The KIWI (Knowledge-based Innovation for the Web Infrastructure) vision was born out of an interdisci-plinary research project involving a computer science research group, SESAR lab (http://ra.crema.unimi.it)

xviii

and eBMS, an advanced business school working in the e-business management area (http://www.ebms.unile.it). The project was funded by the Italian Ministry of Research - Basic Research Fund (FIRB).

KIWI envisioned a distributed community composed of information agents sharing content, for example, in advanced knowledge management platforms, corporate universities or peer-to-peer mul-timedia content sharing systems. In this community, agents and human actors are able to cooperate in building new semantics based on their interaction and adding it to content, irrespective of the source (and vocabulary) of the initial semantics of the information.

The KIWI vision considers emergent semantics constructed incrementally in this way as a powerful tool for increasing content validity and impact.

The observation that emergent semantics result from a self-organizing process has also some interest-ing consequences on the stability of the content from the business management and social sciences point of view. Also, this perspective promises to address some of the inherently hard problems of classical ways of building semantics in information systems. Emergent semantics provides a natural solution as its definition is based on a process of finding stable agreements; constant evolution is part of the model and stable states, provided they exist, are autonomously detected. Also, emergent semantics techniques can be applied to detect and even predict changes and evolution in the state of an organization or a community.

ThIs BooK’s sTrucTure

This book contains a number of contributions from well-recognized international researchers who, although working independently, share at least some of the aims and the interdisciplinary approach of the original KIWI project.

The contents are structured in three sections. The first one is completely related to the KIWI project; the activities, the theoretical results, and the prototypes developed are presented and discussed. The work was developed in a methodological framework that represents the phases and the tools for an effective introduction of a semantic-based knowledge management platform in a community. The second one presents other theoretical works related to the introduction of the semantic description of knowledge resource in organization or in technological environment. The third section instead is devoted to the description of technological systems and applications that are planned and developed for improving with the semantic aspect the management of knowledge resources.

More in particular, Chapter I, “KIWI: A Framework for Enabling Semantic Knowledge Manage-ment,” by Paolo Ceravolo, Angelo Corallo, Ernesto Damiani, Gianluca Elia, and Antonio Zilli, provides a general overview of the KIWI vision and approach, while Chapter II, “Introduction to Ontology Engi-neering,” written by Paolo Ceravolo and Ernesto Damiani, provides a no-prerequisites introduction to Semantic-Web style explicit representation of data semantics. Thanks to these introductory chapters the reader will be able to understand the basic techniques of data annotation by means of ontology-based vocabularies. Semantic-Web style annotations give an explicit representation of the data semantics as perceived at design-time by the data owners and creators. However, in many cases semantic annotations are not created manually, but extracted from existing data.

Chapter III, “OntoExtractor: A Tool for Semi-Automatic Generation and Maintenance of Taxonomies from Semi-Structured Documents,” by Marcello Leida, and Chapter IV, “Search Engine: Approaches and Performance” written by Eliana Campi and Gianluca Lorenzo, respectively discuss semi-automatic techniques and tools for generating semantic annotations, and the performance of classic (as opposed to semantics-aware) access and search techniques. These chapters identify many potential advantages

xix

and pitfalls of a “straightforward” application of Semantic Web techniques to add semantics-aware an-notations to business data.

Then, the scope of the book broadens, taking into account later additions to annotations expressing data semantics due to interactions. Chapter V“Toward Semantic-based P2P Reputation Systems,” by Ernesto Damiani and Marco Viviani, shows how peer-to-peer interaction at different levels of anonymity can be used to superimpose new annotations to existing metadata, assessing their reliability and trustworthi-ness. An important field for exploiting online, emergent semantics based on interactions is Web-based e-learning, where the learner patterns of behavior when interacting with content can be captured and transformed into additional annotations to the content itself or to its original metadata.

Chapter VI, “SWELS: A Semantic Web System Supporting e-Learning,” by Gianluca Elia, Giustina Secundo, and Cesare Taurino, explores this semantics-aware perspective on e-learning, while Chapter VII, “Approaches to Semantics in Knowledge Management,” by Cristiano Fugazza, Stefano David, Anna Montesanto, and Cesare Rocchi, discusses some fundamental problems raised by the adoption of explicit semantics representation techniques as the basis of knowledge management systems.

The next two chapters deal with the relation between design-time and emergent data semantics on one side and the definition of the business processes where data are used on the other side. Namely, Chapter VIII, “A Workflow Management System for Ontology Engineering,” by Alessandra Carcagnì, Angelo Corallo, Antonio Zilli, Nunzio Ingraffia, and Silvio Sorace, describes a methodology and its implementation in a workflow management system for producing ontology-based representations. Chapter IX, “Activity Theory for Knowledge Management in Organizations,” by Lorna Uden, proposes a theoretical foundation to workflows for generating knowledge.

The following chapters discuss in detail the application of the KIWI vision to specific business-related scenarios. Namely, Chapter X, “Knowledge Management and Interaction in Virtual Communities,” by Maria Chiara Caschera, Arianna D’Ulizia, Fernando Ferri, and Patrizia Grifoni, is about the practical integration of design-time and emergent semantics in the context of the highly dynamic virtual com-munities of Web 2.0. Chapter XI, “An Ontological Approach to Manage Project Memories in Orga-nizations,” by Davy Monticalo, Vincent Hilaire, Samuel Gomes, and Abderrafiaa Koukam, goes back to organizational knowledge management, elaborating on the specific problems posed by managing semantically rich content such as project memories. Chapter XII “K-link+: A P2P Semantic Virtual Of-fice for Organizational Knowledge Management,” by Carlo Mastroianni, Giuseppe Pirrò, and Domenico Talia describes a practical solution relying on peer-to-peer technology and protocols supporting different levels of anonymity.

The book’s concluding chapters contain highly interesting and practical case-studies. Namely, Chap-ter XIII, “Formalizing and Leveraging Domain Knowledge in the K4CARE Home Care Platform,” by Ákos Hajnal, Antonio Moreno, Gianfranco Pedone and David Riaño, deals with the increasingly important scenario of knowledge management supporting healthcare and assisted living environments. Chapter XIV, “Knowledge Management Implementation in a Consultancy Firm”, by Kuan Yew Wong and Wai Peng Wong, presents a case study related to managing the information produced in a consul-tancy activity, which present interesting problems related to intellectual rights management. Chapter XV, “Financial News Analysis Using a Semantic Web Approach,” by Alex Micu, Laurens Mast, Viorel Milea, Flavius Frasincar, and Uzay Kaymak, discusses the user centered extraction of semantics from financial newsfeeds.

In Chapter XVI, “Enhancing E-Business on the Semantic Web through Automatic Multimedia Representation,” by Manjeet Rege, Ming Dong, and Farshad Fotouhi, a Semantic Web automatic data description process is applied to multimedia content; the system is aimed at improving electronic col-laboration between firms and customers.

xx

Chapter XVII, “Utilizing Semantic Web and Software Agents in a Travel Support System,” by Maria Ganzha, Maciej Gawinecki, Marcin Paprzycki, Rafal Gasiorowski, Szymon Pisarek, and Wawrzyniec Hyska, presents an ontology based e-business application: ontologies are used to make functioning an agent-based travel support system, the ontology , used to demarcate data, enable to manage user profiles. In the end, Chapter XVIII, “Personalized Information Retrieval in a Semantic-based Learning Environ-ment,” by Antonella Carbonaro and Rodolfo Ferrini, discusses a learning system able to arrange course using an ontological description of contents and users.

conclusIon

With the rapid emergence of social applications on the Web, self-organization effects have once again proven their interest as a way to add semantics to existing business knowledge.This book discusses how identifying emerging relationships among previously unrelated content items (e.g., based on user and community interaction) may dramatically increase the content’s business value. ES (Emergent Semantics) techniques enrich content via a self-organizing process performed by distributed agents adaptively developing the proper interpretation via multi-party cooperation and conflict resolu-tion. Emergent content semantics is dynamically dependent on the collective behavior of communities of agents, which may have different and even conflicting interests and agendas.

According to the KIWI overall vision, a new generation of content will self-organize around end-us-ers semantic input, increasing its business value and timeliness. The KIWI approach envisions a more decentralized, user-driven “imperfect,” time-variant Web of semantics that self-organizes dynamically, tolerating conflicts.

Prof. Ernesto Damiani, University of Milan, Italy Prof. Giuseppina Passiante, University of Salento, Italy

xxi

Acknowledgment

We would like to thank the team that worked at the KIWI project, some of whom contrib-uted chapters to this book. In particular, we would like to thank Prof. E. Damiani (Uni-versità degli Studi di Milano, Milano, Italia) and Prof. Passiante (Università del Salen-to, Lecce, Italia) who coordinated the two research units involved in the KIWI project. The KIWI project was funded by the Italian Minister for Scientific Research (MIUR) through the Basic Research Fund (FIRB).

Special thanks to Dott Cristina Monteverdi (Università degli Studi di Milano, Milano, Italia) for her effective and energetic help in revising the English spelling of this book.

We would like to express our gratitude to all authors of this book, to all reviewers who participated in the process to improve the contents, and to all those who gave us the possibility to publish this book.

The EditorsP. Ceravolo, A. Corallo, E. Damiani, G. Elia, A. Zilli

Section IKnowledge-Based Innovations

for the Web Infrastructure

�

Chapter IKIWI:

A Framework for Enabling Semantic Knowledge Management

Ernesto DamianiUniversity of Milan, Italy

Paolo CeravoloUniversity of Milan, Italy

Angelo CoralloUniversity of Salento, Italy

Gianluca EliaUniversity of Salento, Italy

Antonio ZilliUniversity of Salento, Italy

Copyright © 2009, IGI Global, distributing in print or electronic forms without written permission of IGI Global is prohibited.

ABsTrAcT

Research on semantic-aware knowledge management provides new solutions, technologies, and methods to manage organizational knowledge. These solutions open new opportunities to “virtual challenges” as e-collaboration, e-business, e-learning and e-government. The research carried out for the KIWI (Knowledge-based Innovation for the Web Infrastructure) project is focused on the strategies for the current Web evolution in the more powerful Semantic Web, where formal semantic representation of resourcesenablesamoreeffectiveknowledgesharing.Thefirstpillarofthe KIWI framework concerns development of ontologies as a metadata layer. Resources can be formally and semantically annotated with these metadata, while search engines or software agents can use them for retrieving the right information item or applying their reasoning capabilities. The second pillar of the KIWI framework is

�

KIWI

InTroducTIon And MoTIVATIons

The widespread diffusion of Internet, broadband availability and accessing devices have changed the way human beings develop their professional lives, the way people work and look for informa-tion, the way people book and have entertainment, and the way people live their personal relation-ships. This new “hardware” context (i.e., cabled and wireless networks) has opened the doors to a new way of content diffusion and to a new generation of applications, called generally Web 2.0, which is enabling Web surfers to be direct protagonists of the content creation (Anderson, 2007).

This powerful technological context and this wider and wider content availability have put a new question: how can we use them? This is the context the project “KIWI” tried to face. The strategy and the solutions provided by the research carried out for this project contribute to the “Semantic Web” research stream (Berners-Lee et al., 2001).

While the new “hardware” conditions enable new software capabilities, society and all its business processes require users to exploit them to obtain new and more powerful results. Tech-nological innovations enable Web surfers to put into practice their creativity and imagination. In the end, all aspects of the everyday life are nicked by this technological trend. “Knowledge workers” (Drucker, 1994) have now an extremely powerful tool for their work. Being information reachable in a few clicks, the knowledge worker can focus on its more valuable activities: extracting knowledge from the information space, creating new knowl-edge from the assembled information, planning

and carrying out knowledge-based projects, con-figuring connections among data and information. That is, the knowledge worker can be focused more on reasoning and applying knowledge than looking for data and information.

Another important aspect of the life of orga-nizations which changed with Internet concerns team management and the collaborative behaviour in a team and among teams. Today, people can collaborate on a global scale, expert communi-ties have emerged and are glued together using Internet-based collaboration; networks of prac-titioners meet on virtual squares (Gloor, 2006). Wider teams and communities mean more (tacit and explicit) knowledge, more perspectives, more expertise, and then more creative capabilities (Nonaka & Takeuchi, 1995). Being so, organiza-tions started to use Web to improve collaboration in their teams, and as a by-product, even free interests-based communities emerged, generally called CoP (Wenger, 1999).

The Web is a platform even for other types of social networks: user and consumer communi-ties that are communities that have as main aim the exchange of knowledge on specific topics, as the performance and the usability of a software or the behaviour of firms. In the end, people are experiencing a new way of collaborating, sharing knowledge, obtaining helps and suggestions, so that today very few questions cannot be answered via Internet.

From an organizational point of view, proj-ects can be carried out without any regional limits in this way organizations are transforming themselves from being “multinational” to being really “global,” from a stage where each factory

focused on the semantic search engine. Their capabilities and functionalities have to be improved in order to take advantage of the new semantic descriptions. A set of prototypal tools that enable knowl-edge experts to produce a semantic knowledge management system was delivered by the project. The KIWI framework and tools are applied in some projects for designing and developing knowledge-based platforms with positive results.

�

KIWI

builds its relations and partnerships on a local or national level to a stage where supply chains connect actors from many nations. From this point of view, the Internet is the infrastructure on which globalization spreads over, while com-munication is cheaper, simpler and more complete day by day; working side by side is not a location problem but a band problem and this makes the “global company” real (Malone, 2004; Tapscott & Williams, 2006).

At the same time (and substantially for the same reasons), directors, managers, workers, all people need more and more information and knowledge, and they need them “now.” But while the Web contains quite surely the needed information, the question to answer is: where is it? It might be already organized in a Web page (then the problem is to find this page), or it must be assembled by aggregating data and informa-tion from different sources (then the problem is even more complex: recognizing the elements composing the answers, finding each of them and aggregating them together). Perhaps it is in an of-ficial Web site, or it is in a forum of experts or in a blog, and so on; in other words, is it trustful? The knowledge worker does not have to work hard in looking for knowledge, but he has to work hard for recognizing and extracting the right knowledge in the ocean of overflowing information.

The evolution of Web applications in its firsts 15 years proposes this wide range of issues. Web users live in a closed loop of needs and solutions. Web developers propose each day a huge quantity of applications under any type of licenses for the most diverse problems. The researcher and practitioner communities are defining standards for data and applications, and a new horizon for the future.

The KIWI project is aimed at participating at this research stream by facing the semantic aspects of data and information, that is, how to build a metadata layer that formalizes semantic descrip-tions of the resources useful for improving the managing capabilities of resources themselves.

seMAnTIc WeB: ProBleMs And soluTIons

The concept of “Semantic Web” was introduced by Tim Berners-Lee in 2001 with a pioneering paper on Scientific American (Berners-Lee et al., 2001). After this publication the “Semantic Web” concept was applied to quite all the different as-pects of the Web, from information management to Web services. The W3C (World Wide Web Consortium) is facing the topic and some stan-dards were already suggested as RDF (Recourse Description Framework) or OWL (Ontology Web Language). These are descriptive languages for building semantic schemas to which data and in-formation resources can refer. But many different approaches are continuously tested with the aim of improving the machine capabilities. The obvious limits the Web had in 2001 concern the capability of surfing automatically through Web pages and Web services, and to manage personal and public data. The Semantic Web is the possible answer to the need of a fast interaction among human users who have the personal goal of browsing the Web, and Internet as a whole composed of many ac-tors (Web sites) and services (Web services) . As stated in (Berners-Lee et al., 2001), the Semantic Web is a place in which users have to think, and applications have to act.

The Semantic Web approach is applied in many business sectors but no clear results are available. One of the most appealing applicative contexts is the tourist sector; many projects are on going for developing technological systems based on ontologies, (see www.teschet.net.). From a technological point of view, this applicative context shows interesting challenges: many ac-tors and services to coordinate (housing service, transportation service, cultural services, payment services); a huge amount of data (user personal and private data, payment data, timing data, etc.); many services to distribute (information service, booking services and ticketing service, etc.). From the business point of view, the innovation

�

KIWI

introduced by semantic technologies changed the way organizations (especially tourist firms) interact with customers, and, mainly, customers take significant advantages of these technologi-cal innovations. Anyway, the lacking of globally accepted standards reduces the range of techno-logical innovations.

The other most important applicative context is Web itself, that is the “Semantic Web” should be a Web where reaching the right information is simpler. The Web is growing at exponential rate. Figure 1 shows the number of the hostnames from 1995 to early 2007 (Netcraft, 2007). A similar trend is recognizable for the number of pages for a Web site (Huberman & Adamic, 1999). It is obvious that each Web surfer knows only an extremely low percentage of the Web, and search engines are his compass. A lot of search engines are available on the Web. They differ in many features. The two most important elements are

the algorithm for indexing and ranking pages and the presentation of query’s results.

Search engines designed in the ‘90s indexed Web pages using keywords extracted from documents while the ranking (the importance of document with respect to each keyword) was built on the number of similar words it contains, the distance among them, and other topological data of the resource.

The first revolutionary search engine was (or is) Googlea. Its indexing and ranking process is managed by the software PageRank™, the most important innovation it introduced concerns the page ranking. It assumes a link from the page A to the page B as a “vote” for the page B, so the page B is more important and trustful, and its rank is higher.

Another interesting search engine is Vivisimob; its power lies in the capability of clustering in real time the query results: they are aggregated

Figure 1. Total sites across all domains December 1995–September 2007, source: (Netcraft, 2007)

�

KIWI

at query time according to their keywords, and the user can browse the cluster structure refining the query’s terms.

A fascinating search engine is Kartooc for its graphical interface. Results are showed in maps. Each Web page (the query result) is a node in the map, and, what is more interesting, nodes are connected by “labelled links” that reflect the connection semantic between the two Web sites. Then, users can browse the map and after each action the search engine refine the query and then the map.

While Google is the most powerful and used search engine, the other two engines mentioned above underline what it totally missing--the ca-pability of showing connections among the query results. Vivisimo and Kartoo use the keywords provided by the user for extracting a Web section, then they point out connections among Web pages and make them usable for a more aware analysis of the results.

The KIWI ProjecT

The KIWI project was aimed to study the pos-sibilities to manage huge amounts of data and information through ontologies, that is, through their semantic descriptions.

An ontology is an “explicit specification of a conceptualization” (Gruber, 1993). Ontologies have to be public and reachable by users (explicit); they represent a formal and logical description (specification) of a view of the world (conceptu-alization) that users are committed to. Ontologies are schemas of the world in which every items (class, relationship, attribute) are described using a natural language vocabulary, the explicit and formal triple composed of a class, a relation-ship and another class (as relationship value) or composed of a class, attribute and value (of the attribute) are expressed by a declarative language like RDF (Beckett, D. 2004) or OWL (Smith et al., 2004).

The first problem that comes to mind is related to the definition of the ontology. To be useful, an ontology needs to represent a shared conceptualization of the knowledge domain, that is, users recognize the interpretation of the world the ontology states.

Ontologies show their power in two main applicative contexts: (a) in managing data by different application exchanging and processing them, that is, when many applications are (or might be) integrated in a technological platform or system; and (b) in searching data, information and knowledge on the Web.

In the first case, ontologies may be treated as a general standard to which data and applications are adapted. Currently, quite all applications have their specific database, call data with application-specific name, use application-specific standards, and so on. If two applications or Web services (that is the most frequent case) have to exchange automatically data, integration must be organized by hand with a lot of development efforts. Instead, if shared ontologies were used for referencing data during the application’s development phase, data could retain the same name and the same relations and attributes for every application and could be exchanged without effort.

The Semantic Web is not only ontology. Data, information and service and whatever type of resources should be indexed using metadata extracted from the ontology to be manageable through their semantics. This is the most com-plex task for Semantic Web researchers. While automatic indexing tools are quick but do not guarantee precision (it depends on the number of resources to be processed), manual indexing tools can be very precise but they are so extremely time consuming that only a small quantity of resources can be processed.

Furthermore, indexed documents must be opportunely managed. A semantic-based data structure needs right tools to be accessed and made usable by users. Search engines should access and manage new forms of data embed-

�

KIWI

ded in (Web) documents, generally RDF-based tags and their values; databases should represent not only a simple and unlabeled relationship but labeled, structured, and directed connections among resources. Moreover, the representation of the metadata structure and ontological con-cepts and their relationship need to be effective to improve the user experience. Search engines are trying many different approaches to this last topic as already explained for the examples about Vivisimo.com and Kartoo.com.

The main research questions pursued for the KIWI project are listed below:

1. Are there any methodologies for developing and maintaining ontologies? Are there any useful tools to this aim?

2. Which are the best strategies for indexing resources? And in which context?

3. How semantic search functionalities can be presented to users?

These research questions are clearly con-nected in a workflow that starts from non-digital knowledge about a specific domain and aims at providing to users a “semantic search” interface where looking for knowledge resources in a wide pool of documents and services.

Applications and methodologies developed for the KIWI project are conceived to be integrated in a knowledge management platform in order to introduce a semantic layer between knowledge resources and applications. In this way, resources can be managed (by push or pull engine) automati-cally respect their effective contents.

The AIM of KIWI

Today, communities and organizations need technological systems and internet connections in order to manage their documents, their processes, and their knowledge archives (goods, services, customers, etc.). Even collaborations and inter-

actions among project teams can be mediated by Web applications that enable synchronous and asynchronous communications. Moreover, the latest Web applications, generally called Web 2.0 (Anderson, 2007), try to promote and sustain individual creativity and to encourage a virtual meeting and social network among users based on their individual interests and jobs.

Technological platforms are used as medium on which communication flows and knowledge is stored; they may be simply a passive system that users manage, or active systems that sup-port users in their “on line life.” The innovations connected with the Semantic Web research are focused on the development of active technologi-cal platforms. The assumption at the base of the Semantic Web research is if a clear, standardized and semantic-based description of resources and users is available, then it is possible to automate “reasoning,” to aggregate data and information resources and to provide the right resource to the right user.

This assumption is very simple and reasonable, but it is not straightforward, it needs to develop a complex system of components:

1. a description of knowledge domain: the development of ontologies is a complex and time consuming activity, moreover today there are not any clear standard for the user description while ontologies that describe knowledge domains are extremely “application focused,” that is not reusable out of the its specific context;

2. a standardized, semantic-based and clear description of the resources (data and in-formation): a. “standardized” means that the de-

scription must be developed using a standard language (RDF, OWL, etc.) in order to be accessible by any “reader,”

b. “semantic-based” means that the de-scription must be referred to an ontol-

�

KIWI

ogy (or at least a taxonomy), a world statement able to define elements and relationships among them, then to define a semantic for each term,

c. “clear” means that the description must be unambiguous. Logical properties of the language and the ontological description of the knowledge domain can help in achieving a high level of clearness;

3. then it is possible “to reason”: the “artificial intelligence” research stream has a long history on this issue but no human like “reasoning” capability has been obtained.

The KIWI project is focused principally on the issues of defining ontologies and on develop-ing a semantic search engine able to access the ontology itself and to extract resources described with semantic metadata. Any study on descrip-tive languages is devoted only to acquiring their structure as we assume the importance to use a widespread standard that only international bodies like the W3C can guarantee.

The final aim of the project is to sketch a methodology for developing ontology and de-scribing knowledge resources and to develop an integrated set of tools for enabling users without deep technological skills to use them for build-ing their semantic knowledge base. The strategy that is pursued is based on the awareness that the Semantic Web needs two types of skills: a technical expertise for using languages, tools and systems and knowledge expertise in order to develop effective ontologies.

The APPlIcATIVe conTexTs

Two major applicative contexts of the project are “local business districts” and “public administra-tion and decision makers.”

In business districts built by several actors (firms, associations, workers, etc.) working in the

same industry or in a supply chain it is extremely important to have a knowledge platform on which to share insights, business strategies, technologi-cal trends, opportunities, and, at the same time, on which to debate about productive strategies and techniques, marketing solutions, customer care, political strategies, and similar issues. Ac-tors involved in a supply chain can improve their productive coordination if they share information about warehouse, forecasts and limits. Moreover in the knowledge society these issues concern not only business actors but even public administra-tions and then decision makers, so they should be involved as users of the platform in order to be aware about needs, trends, and opportunities on going. In this context, the speed in recognizing information is a determinant performance factor for the network as whole.

Actually, people to whom we are referring as possible users of our technological system prefer direct face to face contacts for building their information network and avoid wasting time in looking for data and information.

A semantic knowledge platform should reduce difficulties in sharing information and knowledge through an exact description of the resource meaning. Actors of the district, as users of the platform, can browse the ontology for reaching the resources they are interested in. The ontology, the language spoken by the platform, will make the information system more familiar, while the simplicity of searching, retrieving, recognizing, and accessing the information will make the system more appealing for busy people.

The most important platform aspects are the quality of the ontology and the capability to browse it, that is, the same as browsing the knowledge base.

The KIWI project wants to provide knowledge domain experts with simple and user friendly tools to build ontologies implemented in the RDF language. This language was chosen for its simplicity and its diffusion on the Web (even in the form of “dialect” as RSS), so the future

�

KIWI

inclusion of a new type of resources for extending the knowledge base of the system will be easy to execute. The ontologies are stored in databases that represent the “triplet” structure of the RDF and are accessed by a tool (Assertion Maker) for describing the knowledge resources. The Asser-tion Maker is able to open an ontology and a docu-ment (in a “xml” format), the user can associate to each section of the document (title, paragraphs or others) a concept or a triple (subject-predicate-object) extracted from the ontology. In this way, a set of “semantic keywords” (simple assertion) or complex assertions are stored in the database for each document. The ontology and the assertions the knowledge experts created are used by the “Semantic Navigator,” a semantic search engine. The Semantic Navigator proposes the ontology in two views. The first shows the taxonomy based on the relation “Is_A” as an indented list of concepts, and the second one enables user to build on line assertions from the ones already available in the RDF implementation of the ontology.

The described process is not feasible as stated. The indexing phase as described before is a very long hand-made process and it could be performed only on a limited set of resources. A necessary improvement is to introduce a semiautomatic system for pre-processing information resources.

Specifically it is possible to assign them to one or more concepts of the “Is_A” taxonomy extracted from the ontology, and in a second phase expert users can improve assertions associated to the resources. In this way, a wide pool of docu-ments can be indexed with semantic keywords (that are triples such as “document,” “speak of,” “concept/instance”), while a reduced number of resources (that need it) are indexed with the more detailed complex assertions (“document,” “speak of,” “concept/instance,” “relation/attribute,” “con-cept/instance/value”).

This strategy was applied to the Virtual eBMSd experiment and it will be discussed in the Chapter 5 of this book.

Semantic Navigator, at the top of the presented process, empowers the search and retrieval capa-bilities. Users can be quite sure that the results’ list contains documents related to the assertion built. The assurance is based on the preciseness of the classification process. But our system can be further improved.

A “trust evaluation” component is planned to be added in the Semantic Navigator. It is expected that users that retrieve a knowledge resource with Semantic Navigator will assign a vote to the sen-tences the resources is indexed by. So, time after time, the assertion’s base may be extended and

Figure 2. Structure of the tools developed for the KIWI projects and their inputs and outputs

�

KIWI

refined directly by knowledge domain experts (users of the platform that hosts the KIWI tools). In the end, Semantic Navigator gives as result of a query a list of knowledge items built on the ontological representation of their contents and ranked respect to the votes users gave to all the assertions associated to each of them.

This strategy is well suited for the knowledge society. The quick changes of the cultural and scientific background of people and the applica-tion of existing concepts and theory to new and different cases need dynamic knowledge bases, and the descriptive layer (ontology and resource metadata) is an integral part of it. Knowledge workers have to evolve their skills and compe-tences day by day. Their daily work is a constant application of knowledge to new problems through a focused learning process. In the first case, ex-periences improve the worker’s understanding and it is fundamentally peer-recognized, while in the second case there is a formal evaluation (the exam) and the profile of the worker can be officially extended.

Similarly, innovations emerge from the appli-cation of old knowledge items to new applicative contexts, or from the creation of new knowledge items for solving new problems (Afuah, 2002). Innovations change the view of the world, or, in the Semantic Web words, change ontologies, and then resources’ metadata. To apply these changes we need to update ontologies and metadata, but this task need strong efforts and in a knowledge-based platform each change generally impacts on a wide part of the knowledge base. Instead, if an evolutionary approach is used, as we described before, the evolution of the knowledge base is managed by the user community itself: experts introduce simple changes (new concepts or new relations) and start to update metadata of resources and readers assign trust values to them. In this way, the knowledge base (ontologies and meta-data) is updated, but old versions are not deleted. Therefore, structural updates of the knowledge bases rarely are necessary.

In the following sections, a deeper description of the framework developed for the KIWI project is presented. Methodologies and tools are discussed from the conceptual and usability point of view. The developing of ontologies is only partially a technological task. The most important phase of this task is the conceptualization of the world the ontology is going to represent, the definition of the context in which the ontology will be used, the user needs, and technological context in which it will be inserted. All these issues will define the characteristic the ontology should show. The technological application details are discussed in other chapters of this book.

The same approach is used for the other tools that compose the KIWI framework: the Assertion Maker and the Semantic Navigator.

At the end of this chapter, the main applica-tions of the KIWI framework we are working on are discussed. The framework is applied in three projects of technological and Web-based innovation in communities of experts. In these cases, the Semantic Web approach to knowledge sharing was chosen and the KIWI framework is effective in helping them to design by themselves their knowledge base.

Tools And MeThodologIes