Rui Tuo and C. F. Jeff Wu CAS and Oak Ridge Nat Labs Georgia Institute of Technology


1

A Theoretical Framework for Calibration in Computer Models: Parametrization, Estimation and Convergence Properties

Rui Tuo and C. F. Jeff Wu

CAS and Oak Ridge Nat Labs

Georgia Institute of Technology

• Mathematical Framework for Calibration Problems

• Kennedy-O’Hagan Method

• Least $L_2$ Distance Calibration

• Concluding Remarks

Supported by NSF DMS and DOE ASCR programs

2

Calibration Parameters

• Consider a computer experiment problem with both computer output and physical response.

– The physical experiment has control variables.

– The computer/simulation code is deterministic.

– The computer input involves control variables and calibration parameters.

• Calibration parameters represent inherent attributes of the physical system that are not observed or controllable in the physical experiment, e.g., material porosity or permeability in comp. material design.

3

Calibration Problems

• In many cases, the true value of the calibration parameters cannot be measured physically.

• Kennedy and O’Hagan (2001) described the calibration problem as:

– “Calibration is the activity of adjusting the unknown (calibration) parameters until the outputs of the (computer) model fit the observed data.”

4

A Spot Welding Example

• Consider a spot welding example from Bayarri et al. (2007). Two sheets of metal are compressed by water-cooled copper electrodes under an applied load.

• Control variables

– Applied load

– Direct current of magnitude

• Calibration parameter

– Contact resistance at the faying surface

5

Notation and Assumptions

• Denote the control variable by $x$, the calibration parameter by $\theta$, the physical response by $y^p(x)$, and the computer output by $y^s(x, \theta)$.

• For simplicity, assume that the physical response has no random error.

• Calibration problems can be formulated as

$$y^p(x) = y^s(x, \theta_0) + \delta(x),$$

where $\theta_0$ is the true value of the calibration parameter and $\delta$ is the discrepancy between the physical response and the computer model.

• Usually $\delta$ is nonzero and possibly highly nonlinear because the computer model is built under assumptions that do not match reality. Classical parametric modeling of $\delta$ does not work well in many problems.

6

Parameter identifiability

• The true calibration parameter $\theta_0$ is unidentifiable because both $\theta_0$ and $\delta$ are unknown.

– “A lack of (likelihood) identifiability, ..., persists independently of the prior assumptions and will typically lead to inconsistent estimation in the asymptotic sense.” (Wynn, 2001).

• The identifiability issue is also observed by Bayarri et al. (2007) and Han et al. (2009), among others.

• The parameter is identifiable if parametric assumptions are imposed (as done by Kennedy and O’Hagan).

7

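$L_2$ distance projection

• Let $\epsilon(x, \theta) = y^p(x) - y^s(x, \theta)$.

• Define the $L_2$ distance projection of $\theta$ by

$$\theta^* = \operatorname*{argmin}_{\theta \in \Theta} \|\epsilon(\cdot, \theta)\|_{L_2(\Omega)},$$

where $\Theta$ is the domain for $\theta$ and $\Omega$ is the experimental region for $x$.

• We treat $\theta^*$ as the “true” calibration parameter, and the problem becomes well defined.

• The value of $\theta^*$ is not known because $y^p$ is an unknown function. An estimator $\hat\theta_n$ is called consistent if $\hat\theta_n \to \theta^*$ as $n \to \infty$.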
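
To make the projection concrete, here is a minimal sketch on a toy problem. Everything in it is an assumption for illustration: the region $\Omega = [0, 1]$, the domain $\Theta = [0, 2]$, and the stand-in functions `y_p` and `y_s` (in a real problem the physical response is unknown). For these choices the minimizer can be found by hand: the objective $\int_0^1 (x^2 - \theta x)^2 dx$ is minimized at $\theta = 3/4$.

```python
# Toy setup (all hypothetical): Omega = [0, 1], Theta = [0, 2].
def y_p(x):
    """Stand-in for the physical response, which is unknown in practice."""
    return x * x


def y_s(x, theta):
    """Stand-in for a cheap computer model with calibration parameter theta."""
    return theta * x


def l2_norm_sq(theta, n_quad=400):
    """Midpoint-rule approximation of the squared L2(Omega) norm of
    epsilon(., theta) = y_p - y_s(., theta)."""
    h = 1.0 / n_quad
    total = 0.0
    for i in range(n_quad):
        x = (i + 0.5) * h
        total += (y_p(x) - y_s(x, theta)) ** 2
    return total * h


def l2_projection(theta_grid):
    """Grid-search approximation of the L2 distance projection."""
    return min(theta_grid, key=l2_norm_sq)


theta_grid = [i / 200 for i in range(401)]  # step 0.005 on [0, 2]
theta_star = l2_projection(theta_grid)
print(theta_star)
```

A grid search is used only because it is easy to read; any one-dimensional optimizer would serve the same purpose.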

8

Physical and Computer Experiments

• Physical experiments are costly and thus can only be conducted on finitely many points of $\Omega$, denoted as $D = \{x_1, \ldots, x_n\}$.

• A computer experiment is said to be cheap if for each $\theta$, the functional form of $y^s(\cdot, \theta)$ is known.

• Usually computer experiments are expensive, so that only finitely many computer runs can be taken and $y^s$ needs to be estimated.

9

Fill distance

• Suppose $D = \{x_1, \ldots, x_n\} \subset \Omega$ is a set of design points.

• As a property of the design, we define the fill distance as

$$h(D) = \max_{x \in \Omega} \min_{x_i \in D} \|x - x_i\|.$$

• The design minimizing the fill distance is known as the minimax distance design.

• To develop an asymptotic theory, assume a sequence of designs, denoted by $D_n$, with fill distances $h(D_n)$.
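
The fill distance is the largest distance from any point of the region to its nearest design point. The sketch below approximates the outer maximum by a dense candidate grid. The region $[0, 1]$, the two example designs, and the function name `fill_distance` are assumptions made for illustration.

```python
import math


def fill_distance(design, candidates):
    """Approximate the fill distance: the largest distance from a candidate
    point to its nearest design point. `candidates` is a dense stand-in for
    the whole region, so the result is an approximation of the true maximum."""
    return max(min(math.dist(x, xi) for xi in design) for x in candidates)


# Dense grid standing in for the region [0, 1]; points are 1-tuples so the
# same function also works in higher dimensions.
candidates = [(i / 1000,) for i in range(1001)]

coarse_design = [(0.0,), (0.5,), (1.0,)]
fine_design = [(i / 10,) for i in range(11)]

h_coarse = fill_distance(coarse_design, candidates)
h_fine = fill_distance(fine_design, candidates)
print(h_coarse, h_fine)
```

Adding design points can only shrink the fill distance, which is why a sequence of designs with decreasing fill distance is the natural setting for asymptotic statements.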

10

Kernel Interpolation and Gaussian Process Models

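• Suppose $\Phi$ is a symmetric positive definite function. Let $D = \{x_1, \ldots, x_n\}$. For a function $f$, let $y = (y_1, \ldots, y_n)^T$ with $y_i = f(x_i)$.

• The linear system $\mathbf{\Phi} u = y$, with $\mathbf{\Phi} = (\Phi(x_i, x_j))_{ij}$, has a unique solution $u = \mathbf{\Phi}^{-1} y$. Denote $u = (u_1, \ldots, u_n)^T$. For any $x \in \Omega$, let

$$\mathcal{I}_{\Phi, D} f(x) = \sum_{i=1}^n u_i \Phi(x, x_i). \qquad (1)$$

• $\mathcal{I}_{\Phi, D} f$ defines an interpolator of $f$, called the kernel interpolator.

• Suppose $z(x)$ is a Gaussian process on $\Omega$ with mean zero and covariance function $\Phi$. Then given $z(x_i) = y_i$, $i = 1, \ldots, n$, the predictive mean of $z(x)$ has the same form as (1).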
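
The kernel interpolator amounts to one linear solve followed by a weighted sum of kernel translates. The following sketch assumes a Gaussian kernel with a hypothetical `scale` parameter, a seven-point design on $[-1, 1]$, and a test function $\sin(\pi x)$. None of these choices come from the slides.

```python
import numpy as np


def gauss_kernel(x, xp, scale=0.5):
    """Gaussian kernel matrix with entries exp(-((x_i - xp_j) / scale)^2).
    The kernel family and the scale are assumptions for this sketch."""
    d = np.asarray(x, dtype=float)[:, None] - np.asarray(xp, dtype=float)[None, :]
    return np.exp(-(d / scale) ** 2)


def kernel_interpolator(design, values, kernel=gauss_kernel):
    """Solve (kernel matrix) u = values and return the interpolant
    x -> sum_i u_i * kernel(x, x_i)."""
    Phi = kernel(design, design)
    u = np.linalg.solve(Phi, np.asarray(values, dtype=float))

    def interpolant(x_new):
        return kernel(np.atleast_1d(x_new), design) @ u

    return interpolant


def f(x):
    return np.sin(np.pi * x)


design = np.linspace(-1.0, 1.0, 7)
f_hat = kernel_interpolator(design, f(design))

# By construction the interpolant reproduces the data at the design points.
max_resid = float(np.max(np.abs(f_hat(design) - f(design))))
print(max_resid)
```

The same formula is the predictive mean of a zero-mean Gaussian process with this covariance, so the sketch serves both readings of the slide.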

11

Kennedy-O’Hagan Method

• Kennedy and O’Hagan (2001) proposed a modeling framework for calibration problems.

• Main idea:

– Consider the model

$$y^p(\cdot) = y^s(\cdot, \theta_0) + \delta(\cdot).$$

– Choose a prior distribution for $\theta_0$.

– Assume that $y^s$ and $\delta$ are independent realizations of Gaussian processes. Then the posterior distribution of $\theta_0$ can be obtained.

• By imposing such a stochastic structure, there is no identifiability problem.

12

Technical Assumptions

• For ease of mathematical analysis, we make the following assumptions:

– The computer code is cheap (relaxed later).

– $\delta$ is a realization of a Gaussian process with mean 0 and covariance function $\sigma^2 \Phi$, where $\sigma^2$ is an unknown parameter and $\Phi$ is known.

– Maximum likelihood estimation (MLE) is used to estimate $(\theta_0, \sigma^2)$ instead of Bayesian analysis.

13

Simplified Kennedy-O’Hagan Method

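• Up to an additive constant, the log-likelihood function is given by

$$\ell(\theta, \sigma^2) = -\frac{n}{2} \log \sigma^2 - \frac{1}{2} \log |\mathbf{\Phi}| - \frac{1}{2\sigma^2}\, \epsilon(D, \theta)^T \mathbf{\Phi}^{-1} \epsilon(D, \theta),$$

where $\epsilon(D, \theta) = (\epsilon(x_1, \theta), \ldots, \epsilon(x_n, \theta))^T$ with $\epsilon(x, \theta) = y^p(x) - y^s(x, \theta)$, and $\sigma^2 \mathbf{\Phi}$, with $\mathbf{\Phi} = (\Phi(x_i, x_j))_{ij}$, is the covariance matrix.

• The MLE for $\theta$ is

$$\hat\theta^{KO} = \operatorname*{argmin}_{\theta \in \Theta} \epsilon(D, \theta)^T \mathbf{\Phi}^{-1} \epsilon(D, \theta).$$

• We refer to this method as the likelihood calibration. Denote the likelihood calibration under design $D_n$ as $\hat\theta^{KO}(D_n)$.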
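
Under the simplifying assumptions (cheap code, known correlation function, no observation error), the likelihood calibration reduces to minimizing a quadratic form in the residual vector at the design points. The sketch below illustrates this on a toy problem. The Gaussian kernel, its `scale`, the functions `y_p` and `y_s`, and the five-point design are all hypothetical choices, not taken from the slides.

```python
import numpy as np


def gauss_kernel(x, xp, scale=0.5):
    """Gaussian correlation matrix; kernel family and scale are assumptions."""
    d = np.asarray(x, dtype=float)[:, None] - np.asarray(xp, dtype=float)[None, :]
    return np.exp(-(d / scale) ** 2)


def y_p(x):
    """Stand-in for the physical response, observed only on the design."""
    return x ** 2


def y_s(x, theta):
    """Stand-in for a cheap computer model."""
    return theta * x


def ko_objective(theta, design, Phi):
    """Quadratic form eps^T Phi^{-1} eps with eps = y_p(D) - y_s(D, theta)."""
    eps = y_p(design) - y_s(design, theta)
    return float(eps @ np.linalg.solve(Phi, eps))


design = np.linspace(0.0, 1.0, 5)
Phi = gauss_kernel(design, design)

theta_grid = np.linspace(0.0, 2.0, 401)  # step 0.005 on Theta = [0, 2]
objective = np.array([ko_objective(t, design, Phi) for t in theta_grid])

theta_ko = float(theta_grid[np.argmin(objective)])
# Profile MLE of the variance: the minimized quadratic form divided by n.
sigma2_hat = float(objective.min() / len(design))
print(theta_ko, sigma2_hat)
```

Maximizing the log-likelihood over the variance first gives the profile estimate shown in the last lines, which is why only the quadratic form matters for the calibration parameter.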

14

Reproducing Kernel Hilbert Space

• To study the asymptotics, we need the reproducing kernel Hilbert spaces, aka the native spaces.

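• Suppose $\Phi$ is a symmetric positive definite function on $\Omega \times \Omega$. Define the linear space

$$F_\Phi(\Omega) = \left\{ \sum_{i=1}^N \alpha_i \Phi(\cdot, x_i) : N \in \mathbb{N},\ \alpha_i \in \mathbb{R},\ x_i \in \Omega \right\}$$

and equip this space with the bilinear form

$$\left\langle \sum_{i=1}^N \alpha_i \Phi(\cdot, x_i), \sum_{j=1}^M \beta_j \Phi(\cdot, y_j) \right\rangle_\Phi = \sum_{i=1}^N \sum_{j=1}^M \alpha_i \beta_j \Phi(x_i, y_j).$$

• The native Hilbert function space is defined as the closure of $F_\Phi(\Omega)$ under the inner product $\langle \cdot, \cdot \rangle_\Phi$, denoted as $N_\Phi(\Omega)$.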

15

Limiting Value of Likelihood Calibration

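• By some calculations and using the definition of the native norm, we have

$$\hat\theta^{KO}(D_n) = \operatorname*{argmin}_{\theta \in \Theta} \|\hat\epsilon_n(\cdot, \theta)\|_{N_\Phi(\Omega)},$$

where $\hat\epsilon_n(\cdot, \theta)$ is the kernel interpolate of $\epsilon(\cdot, \theta)$ under design $D_n$ given by (1).

• Theorem 1. If there exists a unique $\theta' \in \Theta$ such that

$$\|\epsilon(\cdot, \theta')\|_{N_\Phi(\Omega)} = \inf_{\theta \in \Theta} \|\epsilon(\cdot, \theta)\|_{N_\Phi(\Omega)},$$

then under certain conditions $\hat\theta^{KO}(D_n) \to \theta'$ as $h(D_n) \to 0$.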
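
The "some calculations" linking the likelihood criterion to the native norm can be sketched as follows. The notation is an assumption of this note: $e$ is the vector of discrepancies at the design points, $\mathbf{\Phi}$ is the kernel matrix on the design, and $\hat\epsilon_n$ is the kernel interpolate of the discrepancy. The second line uses only the bilinear form that defines the native space.

```latex
\begin{aligned}
\hat\epsilon_n(\cdot,\theta) &= \sum_{i=1}^n u_i\, \Phi(\cdot, x_i),
  \qquad u = \mathbf{\Phi}^{-1} e,
  \quad e = \bigl(\epsilon(x_1,\theta), \ldots, \epsilon(x_n,\theta)\bigr)^T, \\
\|\hat\epsilon_n(\cdot,\theta)\|^2_{N_\Phi(\Omega)}
  &= \sum_{i=1}^n \sum_{j=1}^n u_i u_j\, \Phi(x_i, x_j)
   = u^T \mathbf{\Phi}\, u
   = e^T \mathbf{\Phi}^{-1} e .
\end{aligned}
```

So minimizing the quadratic form in the likelihood over the calibration parameter is the same as minimizing the native norm of the interpolated discrepancy.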

16

Insight on calibration inconsistency

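• In general, the limiting value $\theta'$ of the likelihood calibration differs from the $L_2$ distance projection $\theta^*$. Thus $\hat\theta^{KO}$ is inconsistent.

• To study the difference $\theta' - \theta^*$, consider the difference between $\|\cdot\|_{N_\Phi(\Omega)}$ and $\|\cdot\|_{L_2(\Omega)}$.

• Define the integral operator $\kappa f(x) = \int_\Omega \Phi(x, y) f(y)\, dy$ for $f \in L_2(\Omega)$. Denote the eigenvalues of $\kappa$ by $\lambda_1 \ge \lambda_2 \ge \cdots$.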

17

Comparison between two norms

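• Let $f_i$ be the eigenfunction associated with $\lambda_i$, with $\|f_i\|_{L_2(\Omega)} = 1$. Then

$$\|f_i\|^2_{L_2(\Omega)} = \langle \kappa f_i, f_i \rangle_{N_\Phi(\Omega)} = \lambda_i \|f_i\|^2_{N_\Phi(\Omega)},$$

where the first equality follows from $\langle f, g \rangle_{L_2(\Omega)} = \langle \kappa f, g \rangle_{N_\Phi(\Omega)}$ for any $f \in L_2(\Omega)$ and $g \in N_\Phi(\Omega)$ (Wendland, 2005).

• Because $\kappa$ is a compact operator, $\lambda_i \to 0$ as $i \to \infty$. This yields $\|f_i\|_{N_\Phi(\Omega)} / \|f_i\|_{L_2(\Omega)} = \lambda_i^{-1/2} \to \infty$.

• There are some functions with very small $L_2$ norm whose native norm is bounded away from zero. Therefore, the likelihood calibration can give results that are far from the $L_2$ projection.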
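
The gap between the two norms can be seen numerically by discretizing the integral operator. The sketch below uses a midpoint-rule (Nyström-type) discretization, which is an approximation technique chosen for this note and not something the slides prescribe. The region $[-1, 1]$ and the Gaussian kernel $\exp\{-(x - y)^2\}$ are likewise assumptions.

```python
import numpy as np


def gauss_kernel(x, xp):
    """Kernel exp(-(x - x')^2); the kernel choice is an assumption."""
    d = np.asarray(x, dtype=float)[:, None] - np.asarray(xp, dtype=float)[None, :]
    return np.exp(-d ** 2)


# Midpoint quadrature on [-1, 1]: m nodes, each with weight 2 / m.
m = 200
nodes = -1.0 + (np.arange(m) + 0.5) * (2.0 / m)
weight = 2.0 / m

# Eigenvalues of the discretized operator approximate those of the
# integral operator; eigvalsh returns them in ascending order.
eigvals = np.linalg.eigvalsh(gauss_kernel(nodes, nodes) * weight)[::-1]

top = eigvals[:4]
# For an eigenfunction with unit L2 norm, the native norm is lambda^(-1/2).
native_over_l2 = 1.0 / np.sqrt(top)
print(top)
print(native_over_l2)
```

Only the leading eigenvalues are used, since the smallest ones fall below floating-point resolution. The rapid decay of the leading eigenvalues already shows the ratio of native norm to $L_2$ norm growing quickly.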

18

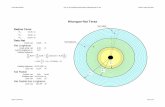

An Illustrative Example

• Let and .• Consider a calibration

problem with a three-level calibration parameter, corresponding to computer codes with discrepancy functions $\epsilon_1$, $\epsilon_2$ and $\epsilon_3$.

• and are the first and second eigenfunctions of with .

• . . • The third computer code is

the $L_2$ distance projection.

The solid and dashed lines are the first and second eigenfunctions of $\kappa$. The dotted line shows the function $\epsilon_3$.

19

An Illustrative Example (cont’d)

• The likelihood calibration with the correlation function $\Phi$ gives a different ranking:

The likelihood calibration wrongly chooses the first code.

• $|\epsilon_3(x)|$ is smaller than $|\epsilon_1(x)|$ and $|\epsilon_2(x)|$ for every $x$, i.e., the point-wise predictive error for the third computer code is uniformly smaller than that of the first two. This gives a good justification for choosing the $L_2$ norm rather than the native norm.

20

Estimation Efficiency

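• Wendland (2004) gives a convergence rate for kernel interpolation. If $\Phi$ has $2k$ continuous derivatives, there is an error bound

$$\|f - \mathcal{I}_{\Phi, D} f\|_{L_\infty(\Omega)} \le C_\Phi\, h^k(D)\, \|f\|_{N_\Phi(\Omega)},$$

where $\mathcal{I}_{\Phi, D} f$ is the kernel interpolator of $f$ under design $D$ and $h(D)$ is the fill distance.

• Given this rate, we call an estimator $\hat\theta_n$ efficient if $\|\hat\theta_n - \theta^*\| = O(h^k(D_n))$.

• A natural question is: does there exist a consistent and efficient estimator?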

21

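Least $L_2$ Distance Calibration

• Let $\hat y^p_n$ be the kernel interpolator for $y^p$ under design $D_n$. The least $L_2$ distance calibration is defined by

$$\hat\theta^{L_2}(D_n) = \operatorname*{argmin}_{\theta \in \Theta} \|\hat y^p_n(\cdot) - y^s(\cdot, \theta)\|_{L_2(\Omega)}.$$

• The following theorem shows that $\hat\theta^{L_2}(D_n)$ is efficient.

• Theorem 2. Suppose $\Phi$ has $2k$ continuous derivatives. Under some regularity conditions, $\|\hat\theta^{L_2}(D_n) - \theta^*\| = O(h^k(D_n))$.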
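
A minimal sketch of this estimator for a cheap code: interpolate the physical response from the design, then minimize the $L_2$ distance to the computer model over a grid. The toy functions `y_p` and `y_s`, the Gaussian kernel with its `scale`, the six-point design, and the grid search are all assumptions for illustration. For these toy functions the $L_2$ distance projection is $3/4$, so the estimate can be compared against it.

```python
import numpy as np


def gauss_kernel(x, xp, scale=0.5):
    """Gaussian kernel matrix; kernel family and scale are assumptions."""
    d = np.asarray(x, dtype=float)[:, None] - np.asarray(xp, dtype=float)[None, :]
    return np.exp(-(d / scale) ** 2)


def y_p(x):
    """Stand-in for the physical response, evaluated only on the design."""
    return x ** 2


def y_s(x, theta):
    """Stand-in for a cheap computer model, known everywhere."""
    return theta * x


# Step 1: kernel interpolation of the physical response from n = 6 runs.
design = np.linspace(0.0, 1.0, 6)
u = np.linalg.solve(gauss_kernel(design, design), y_p(design))

# Step 2: evaluate the interpolant on midpoint quadrature nodes of [0, 1].
m = 400
quad_nodes = (np.arange(m) + 0.5) / m
y_p_hat = gauss_kernel(quad_nodes, design) @ u


def l2_objective(theta):
    """Midpoint-rule estimate of the squared L2 distance between the
    interpolated physical response and the computer model."""
    return float(np.mean((y_p_hat - y_s(quad_nodes, theta)) ** 2))


# Step 3: grid search over Theta = [0, 2].
theta_grid = np.linspace(0.0, 2.0, 401)
theta_l2 = float(theta_grid[np.argmin([l2_objective(t) for t in theta_grid])])
print(theta_l2)
```

The only difference from the likelihood calibration is the norm being minimized: the $L_2$ norm of the fitted discrepancy instead of its native norm.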

22

Calibration for Expensive Code

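• Let $G_n$ be a design for the computer experiment. Denote its fill distance as $h(G_n)$.

• Choose a positive definite function $\Psi$ over $(\Omega \times \Theta) \times (\Omega \times \Theta)$. For kernel $\Psi$ and design $G_n$, let $\hat y^s_n$ be the interpolate for $y^s$.

• Define the $L_2$ calibration by

$$\hat\theta^{L_2}(D_n, G_n) = \operatorname*{argmin}_{\theta \in \Theta} \|\hat y^p_n(\cdot) - \hat y^s_n(\cdot, \theta)\|_{L_2(\Omega)},$$

where $\hat y^p_n$ is the kernel interpolator for $y^p$ under the physical design $D_n$.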

23

Convergence Rate

• Theorem 3. Suppose has continuous derivatives and has continuous derivatives. Under some regularity conditions, .

• This convergence rate is slower than , because it is dominated by .

24

Conclusions

• We establish the first theoretical framework for KO-type calibration problems.

• Through theoretical analysis and numerical illustration, we show the inconsistency of the likelihood calibration. The same holds for the Bayesian version.

• We propose a novel calibration method, called the $L_2$ calibration, which is consistent and efficient.

• Conclusions should be similar if $\Phi$ is estimated, but the analysis would be very messy.