Robotics: Science and Systems 2020 Corvalis, Oregon, USA ... · takes as input a task instruction...

Transcript of Robotics: Science and Systems 2020 Corvalis, Oregon, USA ... · takes as input a task instruction...

Robotics: Science and Systems 2020Corvalis, Oregon, USA, July 12-16, 2020

1

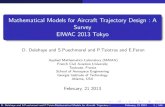

Concept2Robot: Learning Manipulation Conceptsfrom Instructions and Human Demonstrations

Lin Shao, Toki Migimatsu, Qiang Zhang, Karen Yang, and Jeannette Bohg

Abstract—We aim to endow a robot with the ability to learnmanipulation concepts that link natural language instructions tomotor skills. Our goal is to learn a single multi-task policy thattakes as input a natural language instruction and an image ofthe initial scene and outputs a robot motion trajectory to achievethe specified task. This policy has to generalize over different in-structions and environments. Our insight is that we can approachthis problem through Learning from Demonstration by leveraginglarge-scale video datasets of humans performing manipulationactions. Thereby, we avoid more time-consuming processes suchas teleoperation or kinesthetic teaching. We also avoid having tomanually design task-specific rewards. We propose a two-stagelearning process where we first learn single-task policies throughreinforcement learning. The reward is provided by scoring howwell the robot visually appears to perform the task. This score isgiven by a video-based action classifier trained on a large-scalehuman activity dataset. In the second stage, we train a multi-taskpolicy through imitation learning to imitate all the single-taskpolicies. In extensive simulation experiments, we show that themulti-task policy learns to perform a large percentage of the 78different manipulation tasks on which it was trained. The tasksare of greater variety and complexity than previously consideredrobot manipulation tasks. We show that the policy generalizesover variations of the environment. We also show examples ofsuccessful generalization over novel but similar instructions.

I. INTRODUCTION

Humans have gradually developed language, mastered com-plex motor skills, created and utilized sophisticated tools,and built scientific theories to understand and explain theirenvironment. Concepts are fundamental to these abilities asthey allow humans to mentally represent, summarize, andabstract diverse knowledge and skills [42]. By means ofabstraction, concepts that are learned from a limited numberof examples can be extended to a potentially infinite set ofnew and unseen entities. Current robots lack this generalizationability which hampers progress towards deploying them to realdomestic, industrial or logistic environments.

Most related work in cognitive science and robotics havefocused on lexical concepts that correspond to words in naturallanguage [42]. A large body of work in robotics has studiedhow robots can acquire concepts that relate to objects, theirattributes (like color, shape, and material), and their spatialrelations [17, 43, 51, 1]. In this paper, we endow a robot with

L. Shao, T. Migimatsu, K. Yang and J.Bohg are with the Stan-ford AI Lab, Stanford University, Stanford, California 94305. Email:{lins2,takatoki, kaiyuany, bohg}@stanford.edu. Q. Zhang is with the ZhiyuanCollege, Shanghai Jiao Tong University, 200240 Shanghai, China. Email:[email protected]. Toyota Research Institute (”TRI”) providedfunds to assist the authors with their research but this article solely reflectsthe opinions and conclusions of its authors and not TRI or any other Toyotaentity.

Put sth behind sth

Put sth into sth

Put sth in front of sth

Fig. 1: We propose a method that learns manipulation concepts from videos of humandemonstrations labelled with natural language instructions. After training, our networktakes as input a task instruction and image of the start scene, and then outputs a robotmotion trajectory that will succeed at the task.

the ability to acquire manipulation concepts [25] that can beseen as mental representations of verbs in a sentence.

The way concepts are represented and acquired is debatedwithin Cognitive science. In the classical view, concepts re-flect contingencies in the environment that can be perceivedthrough different sensors [28]. In this paper, we follow thisclassical view and propose a robot learning framework toacquire manipulation concepts from human video demonstra-tions. Here, manipulation concepts reflect contingencies in thedemonstration videos that instantiate specific natural languageinstructions. By using videos, we can leverage large-scaledatasets such as 20BN-something-something (Sth Sth) [13]instead of providing demonstrations through the more time-consuming processes of kinesthetic teaching or teleoperation.

Specifically, we train a model that takes as input an image ofthe environment and a natural language instruction. The outputof the model is a robot motion trajectory that is executed open-loop. During training, we compute a reward for the executedtrajectory by scoring the resulting robot video using the videoclassifier trained on Sth Sth. In experiments, we show howour framework is capable of learning 78 different single-taskpolicies from human video demonstrations that generalize over

variations of the environment—in our case, varying objectposes. Given these single-task policies, we can then learn amulti-task model. For this part, we use an imitation learningapproach where the multi-task policy imitates the trajectoriesof the single-task policies. This multi-task model can then notonly generalize over variations of the environment but alsoaccept instructions that are similar to those it was trained on.

Due to the complex nature of learning manipulation con-cepts from human visual demonstrations and natural languageinstructions, our learning framework is composed of manysubcomponents that each plays a critical role in the workingof the entire system. The focus of our paper is not thespecific algorithms used for each subcomponent, but ratherthe combination of these subcomponents into a whole learningframework. Our primary contributions are as follows: 1) anovel learning framework to acquire a unified model of diversemanipulation concepts from human visual demonstrations andlanguage instructions, 2) a suite of 78 diverse simulated ma-nipulation tasks for multi-task learning, 3) extensive evaluationand demonstrations on our approach in simulation on the 78manipulation tasks.

II. RELATED WORK

A. Vision and Language Grounding

In this paper, we are interested in associating natural lan-guage, especially verbs, with robot motion skills for variousmanipulation scenarios. Bridging vision with language hasbeen extensively studied for captioning images or videoswith natural language [8, 9, 49, 50, 52]. Using naturallanguage and vision to guide mobile robot behaviors also hasa rich literature, ranging from pioneering work on connectinglanguage instructions such as “pointing left” and “pointingright” with the behavior experiences of a mobile robot [43] tomore recent work on vision-language navigation [51, 1].

Recently, the use of natural language in robot learning hasgained increasing interest [31]. Task descriptions formulatedin natural language are used to condition policy learning.Jiang et al. [18] use language to structure compositional tasklearning in the context of hierarchical RL, where the high-levelpolicy produces language instructions that direct the low-levelpolicy. Natural language can induce the rewards in an inversereinforcement learning context. Bahdanau et al. [4] proposedlanguage-conditional reward functions trained on (instruction,goal state) pairs from demonstrations. Transferring knowledgefrom unsupervised language learning on large web corporaallows learned policy to generalize to instructions outside ofthe training distribution.

Our approach is more related to work on using naturallanguage and vision to learn manipulation skills. Shu et al.[39] use hierarchical reinforcement learning with a stochastictemporal grammar to decide when to use previously learnedpolicies or acquire new skills based on their language descrip-tions. Jiang et al. [17] similarly use the compositional structureof language to learn hierarchical abstractions of manipulationskills. However, these works are limited to tasks such asfetching, arranging, and sorting objects. We propose a learning

framework that allows a robot to autonomously associate lan-guage instructions with a diverse set of 78 manipulation skills(Fig. 3), including complex tasks such as “scoop something upwith something” or “let something roll up a slanted surface”.

B. Learning from Visual Demonstration

Learning from Demonstration (LfD) [3] enables a robot tolearn a policy from expert demonstrations. LfD significantlyreduces human effort in robot skill learning by avoidingthe need to manually design task-specific reward functions.Because we propose to learn from human activity videos, herewe review only approaches that are based on visual data. Fora more comprehensive review of LfD, we refer to [3].

Recent advances in processing large-scale image and videodatasets with deep learning have made it possible to leveragevisual data for LfD. Fu et al. [10] propose a variational inversereinforcement learning method to learn policies when a largenumber of desired goal state samples are available. Singhet al. [41] present a follow-up work to enable a robot to learnfrom a modest number of successful examples followed byactively solicited queries, where the robot shows the user astate and asks whether the task was successfully completed.Gupta et al. [14] propose a method for solving long-horizontasks which contains an imitation learning stage to producegoal-conditioned hierarchical policies, and a reinforcementlearning phase to fine-tune these policies for task performance.The goal is to reproduce an image of the final successful state.However, the final state may not be enough to characterize amanipulation skill where the shape of the motion trajectory isimportant, such as pouring water into a cup.

In this paper, we propose to evaluate the entire video of arobot action by leveraging a video classifier trained on a large-scale video dataset [13]. This is in contrast to using singleimages to classify goal states. One concern in learning fromhuman activity videos is the domain gap between the videosthat the classifier is trained on and the videos rendered fromthe robot simulation environment. However, our hypothesisis that a video-based activity classifier captures the visualdynamics of the different actions rather than the appearanceof the environment. If the visual dynamics are shared betweenthe simulated and real environments, then a video-based actionclassifier should be able to guide the robot to successfully learnvarious manipulation concepts. In experiments, we demon-strate that reward provided by a video classifier is indeed moreinformative than an image-based classifier.

C. Motion Trajectory Representation

How to best represent motion skills is an age-old questionin robotics with a rich literature. Pastor et al. [34] proposeDynamic Movement Primitives (DMPs) to encode movementtrajectories using the attractor dynamics of a nonlinear dif-ferential system. DMPs are commonly used for representingand learning basic movements in robotics, such as swingingtennis rackets [16, 29], playing drums [48], or writing [23].Khansari-Zadeh and Billard [21] propose learning nonlineardynamical systems with Gaussian mixture models that are

robust to spatial and temporal perturbations. Paraschos et al.[32] and Meier et al. [30] present a probabilistic formulation ofDMPs that maintains a distribution over trajectories to enablecomposition and blending of multiple primitives.

In contrast to these methods, we adopt a simple second-order spring-damper system with auxiliary forces at eachtimestep to modify the shape and velocity profile of thetrajectory. The simplicity and efficiency of this attractor systemworks well for our application, where a complex neuralnetwork needs to learn and output these trajectory parametersfrom high-dimensional language and image inputs. In exper-iments, we show that the auxiliary forces are an importantingredient to this motion representation in addition to the goalto shape the trajectories of more complex manipulation tasks.

D. Multi-Task Learning

If we want robots to be broadly useful in realistic environ-ments, we need algorithms that can learn a wide variety ofskills reliably and efficiently. To this end, Yu et al. [53] re-leased a multi-task learning benchmark containing simulationenvironments for 50 different manipulation tasks. While thisbenchmark uses one-hot vectors to represent each task, we usenatural language instructions as input to our multi-task policynetwork. In this way, we can achieve generalization to variouseven unseen instructions and manipulation scenes.

In terms of learning procedures, the majority of previousmulti-task learning (MTL) work mainly falls into two families:(i) modular policy design [2], where a multi-task policymaintains separate subpolicies for different tasks, and (ii)knowledge transfer through distillation [36, 33, 45], where asingle unified policy is learned from multiple experts. Devinet al. [6] decompose network policies into task-specific androbot-specific modules to enable robots to learn multiple skillsfrom other robots. Competing objectives for individual taskscan make MTL difficult, but Sener and Koltun [37] formulateMTL as a multi-objective optimization with the goal of findinga Pareto optimal solution. Teh et al. [44] propose using ashared “distilled” policy that captures common behavior acrosstasks. Each single-task policy is trained to solve its own taskwhile constrained to stay close to the shared policy, while theshared policy is trained to be the centroid of all task policies.

Our multi-task learning method falls under the policy dis-tillation category. Single-task policies are trained to solveindividual tasks, and after their performance stabilizes, wetrain the multi-task policy to imitate the behaviors of allthe single-task policies. In experiments, we show how ourapproach outperforms related policy distillation approaches.

III. TECHNICAL APPROACH

A. Overview

Our model takes as input a natural language instructiondescribing the task along with an RGB image of the initialscene, and outputs the parameters of a motion trajectory toaccomplish the task in the given environment. These inputsare first fed into a semantic context network to combine theinformation from natural language with the visual perception

of the robot in order to produce a joint task embedding.This serves as a description of the desired task. The taskembedding is then input to a policy network, which synthesizesthe parameters of a motion trajectory. The trajectory is finallyexecuted by the robot with Operational Space Control [22].An overview of the system is given in Fig. 2.

We learn manipulation concepts from human demonstra-tions by scoring how closely a robot resembles a humanexecuting the same task. For scoring, we use a video-basedaction classifier that is trained on human activity videos in SthSth. With this classifier as a proxy reward, we first learn single-task policies through reinforcement learning. Then, we traina multi-task policy over all the tasks by performing imitationlearning on the single-task policies. The final result is a multi-task policy that can take a new natural language instructionand environment image and execute the desired task using itsknowledge base of 78 previously learned tasks.

In this section, we describe the subcomponents of ourframework. While we chose to implement each subcomponentwith certain algorithms, the focus of this paper is not thespecific algorithms. Instead, the focus is the integration ofthese subcomponents to allow learning manipulation con-cepts from rewards that are provided by a video classifiertrained to distingiush human manipulation actions. We leavethe optimization of these subcomponents to future work. Toensure reproducability, we provide a detailed description of theimplementation in an appendix in addition to the source code,both available on https://sites.google.com/view/concept2robot.

B. Semantic Context Network

1) Instruction Encoding: To represent a natural languageinstruction, we tokenize the sentence and feed it into BERT [7]to extract an instruction feature with dimension 1024. Thisfeature is fed into a Multilayer Perceptron (MLP) to reduce thedimensionality to 128. The weights of the MLP are optimizedduring training, while the BERT model is frozen.

2) Vision Encoding: To represent the image of the initialscene, we leverage ResNet18 [15]. The resulting feature is fedinto an MLP to reduce the dimensionality to 256. The weightsof both ResNet18 and the MLP are optimized during training.

We concatenate the instruction and image features to get thefinal task instance embedding of dimension 384. This vectoris fed into a policy network to produce a motion trajectory.

C. Policy Network

The objective of the policy network is to output the param-eters of an open-loop motion trajectory that, when executedby the robot, achieves the manipulation task in the currentenvironment. In this paper, we choose to represent the motiontrajectory by a second-order dynamical system of the form:

y(t) = kp(g − y(t))− kd y(t) + f(t) (1)

where y, y, and y are position, velocity, and acceleration of theend-effector, g is the goal pose, and f are the additional forcesat each timestep that modify the shape and velocity profile ofthe trajectory. kp and kd are standard PD control gains. This

InstructionsClose the drawer

128

BERT

ResNet-18

Goal pose

T ×

7

Forces

1x7

Scene image

20bn-sth-sthclassifier

Executionimage sequence

1024

128

256

1D u

pcon

v1D

upc

onv

Motiontrajectory

Posi

tion

(3D

)

Ang

le a

xis (

3D)

Grip

per (

1D)

768

256

Fig. 2: System architecture. The input to the network is an initial image of the environment and a natural language command from the 20BN Something Something Dataset.ResNet18 [15] and BERT produce feature vectors for the image and the command sentence, respectively. These feature vectors are concatenated and processed with 1D upconvolutionto produce 7-dof vectors for the trajectory goal pose and forces, where 3-dof represent position, 3-dof represent angle axis orientation, and the last dof represents the gripper state.The controller for this trajectory is executed in simulation, and a classifier trained on the 20BN Something Something Dataset classifies the task from the resulting image sequence.The classification score is treated as a proxy reward for completing the task, and is fed back to the network for training.

kind of dynamical system is analytically well understood andis widely utilized in the robotic community [21].

While the above representation is similar to a DMP, DMPshave a canonical system in which the phase variable exponen-tially decays to zero [34]. The forcing term is weighted bythe phase variable, thus also decaying to zero and ensuringconvergence. DMPs have often been used for reaching move-ments, where it makes sense to slow down towards the end.However, we are considering a variety of 78 tasks involvingcontact, such as “hit sth with sth” or “close sth with sth”,where diminishing forces is less desirable. For example, for“close sth with sth”, the robot needs to apply large forcesuntil the end to overcome the sliding friction of the drawer.While it may be possible to capture such motions with DMPsby, for example, placing the goal behind the drawer,we foundit difficult for the neural network to learn trajectories in thismanner. Thus, we choose a free parameterization of the forcingterms so the network has the freedom to apply large forces atthe end, without being hindered by exponential decay. Whilethis means we can no longer ensure convergence, the videoclassifier encourages the network to learn stable trajectories.

The motion trajectory operates in a 7-dimensional space: 3Dposition and orientation of the end effector and 1D movementof the two-fingered gripper. For orientation, we adopt the angleaxis representation, which has been shown to be effective forneural networks due to its lack of parameter constraints [38].

The final task embedding from the semantic context networkwith shape 1 × 384 is simultaneously fed into an MLP(equivalent to 1D upconvolution) to generate the goal g withshape 1× 7 and into a separate 1D upconvolution network togenerate the forces f with shape T×7, where T is the numberof timesteps in the trajectory. The force network applies 1Dupconvolution along the time dimension of the task embeddingto expand its size from 1 to T , and reduces the featurechannels in the second dimension from 384 to 7. We chooseto use upconvolutions across time because there may be tra-jectory features that are independent of time. Upconvolutionsallow us to share weights across the trajectory, reducing thenumber of parameters required and thus improving learningefficiency [27].

D. Video Classification Network

In our LfD framework, the unknown reward function for agiven task Ti is substituted by a video classifier that is trainedto classify actions in Sth Sth videos. We use 3D convolutionfor the classifier, which is reported to be effective for learningspatio-temporal relationships in videos [47].

Note that after training on the Sth Sth dataset, we fixthe weights of the video classifier. When training the policynetwork, the output trajectory is executed open-loop by therobot and rendered into a video. This video is then scored bythe video classifier. For a given task Ti and a video V , theclassification score of the corresponding category is used as areward signal R(V, Ti) to update the policy network.

E. Single-Task Learning

As a first step towards learning manipulation concepts, wetrain single-task policies πi per task Ti, for i ∈ [1 . . . 78].Given the video classifier as a substitute for the reward, weframe this problem as a reinforcement learning problem pertask Ti. The actions are the motion trajectory parametersa = [g, f ] ∈ A, which represent the goal and forces for theentire trajectory (Eq. 1). The reward is the video classificationscore R(V, Ti) as defined above. Note that since the output ofthe policy network is directly transformed into a continuousmotion trajectory, our single-task learning problem is a Con-textual bandit problem [24], where the contextual informationis the language instruction and the image of initial scene.

To solve the Contextual bandit problem, we combine DeepDeterministic Policy Gradients (DDPG) [26] with the CrossEntropy Method (CEM) [35]. The DDPG critic network is usedto approximate the Q value function. The output of the DDPGactor network is used to initialize CEM. Then we run CEM tosearch the landscape of the critic network for a better action ina broad neighborhood of the initial action [20]. For the firstiteration of CEM, we sample a batch of M points in the actionspace A and fit a Gaussian to the top N samples. In successiveiterations, we sample M points from the Gaussian and againupdate the Gaussian with the top N samples. We run CEMfor four iterations to select actions in both the training and teststages. In the training stages, we store the transition (s, a, r)

where s represents the state, a is the action selected after CEM,r is the reward from the video classifier. The critic loss is Lc =‖r − Q(s, a)‖2, and the actor loss is La = −Q(s,Actor(s)),where Actor(s) is the output of the actor network. Note that theweights of critic network are not updated when optimizing theloss of the actor network. In the contextual bandit problem, thecritic network is trained to directly approximate the Q value, sooverestimation is not an issue when using neural networks tolearn the Q value [11]. Thus, the critic learns more stably thanthe actor, and CEM allows us to leverage the faster learningspeed of the critic to improve the output of the actor. Moreanalysis of this effect is provided in the Appendix.

F. Multi-Task Learning

After training single-task policies, we now have expertmodels for each task that we can use to train a multi-taskmodel through imitation learning. We found that this stagedapproach works better than directly training a multi-task modelfrom scratch and provide supporting evaluation in Sec. V.

For each task Ti, we use the corresponding single-taskpolicy πi to produce N rollouts of state-action tuples(si, ai,yi

)Nj=1

and save them into a large replay buffer. Here,si is the feature vector that represents the language instructionand initial image of the scene, ai contains the motion trajectoryparameters provided by πi, and yi is the actual end effectortrajectory of length T recorded when the robot executes ai.

After generating rollouts for all 78 tasks, we train the multi-task policy πθ to generate an action a = [g, f ] that imitates thesingle-task policies by minimizing the following loss function:

minθ

T∑t=1

∥∥y(a)t − yit∥∥2+‖g − y(a)T ‖2+∥∥g − y (ai)T∥∥2 (2)

where y(a)T and y(ai)T

are the final end effector poses of thetrajectory predicted by the multi-task and single-task policy,respectively. The predicted trajectory is computed by forwardsimulating the dynamical system in Eq. 1. Note that y usuallydiffers from y due to physical contact or joint limits.

The first term in the loss function ensures that the predictedtrajectory y(a) from the multi-task policy πθ is similar to thetrajectory yi of the single-task policy πi. The second termensures that the goal g of the multi-task policy is not too farfrom the predicted final state of the trajectory y(a)T . Thiscould occur if the force terms f are too large towards the endof the trajectory and prevent the end-effector from reachingthe goal. The third term ensures that g is not too far from thepredicted final state of the single-task trajectory y

(ai)T

.Note that the gradients of y with respect to g and f are

non-trivial, since y is computed by integrating the dynamicalsystem in Eq. 1 twice across all timesteps. We compute thegradients based on the derivation in [12].

IV. ENVIRONMENT SETUP

A. Human Demonstration Data

At the time of writing, the Sth Sth dataset contained 108,499videos of 174 manipulation tasks, with durations of 2–6

seconds. Labels are textual descriptions based on templates,such as “dropping [something] into [something],” containingslots (“[something]”) that serve as placeholders for objects. Weselect 78 of the 174 tasks that are appropriate for our environ-ment setting. The remaining 96 tasks either require dual-armmanipulation or are difficult to simulate in PyBullet [5], suchas “tearing sth”. For the 78 chosen tasks, we adopt the sametask IDs used in Sth Sth, as listed in Fig. 3.

B. Robot Environment

We use PyBullet [5] to simulate each environment asso-ciated with one of the 78 tasks. Our robot is a simulated7-DoF Franka Panda robot arm with a two-fingered Robotiq2F-85 gripper. A camera is statically mounted to capture theenvironment state as RGB images downsampled to 120×160.Every time the environment is reset after an RL episode, themanipulation objects in each environment are initialized witha random pose within manually defined bounds for each task.

C. Evaluation Metric

The reward that the robot receives from the video clas-sification is a proxy objective that may not directly reflecthow successful a policy is with respect to its task. Therefore,we manually define task-specific success metrics to evaluate(not train) the policies. For example, “hit sth with sth” issuccessful if there is a collision between the object grasped bythe robot and the target object, and the grasped object remainsin the robot’s gripper after the collision. We report the averagesuccess rate over 100 episodes.

V. EXPERIMENTS

The core idea of this paper is to use a video classifieras a proxy reward for learning a multi-task policy and tospecify tasks with natural language. We propose a new multi-task learning algorithm that outputs an entire robot motiontrajectory. In the following experiments, we seek to justifythese choices or demonstrate a performance gain over previousapproaches. The hypotheses we want to test are:

H1. A motion trajectory representation that can capturecomplex trajectory shapes and velocity profiles isimportant for completing the tasks in our dataset.

H2. For learning complex tasks, it is important to takethe video of an entire robot trajectory into accountrather than only the before and after frames.

H3. Policies trained on the video classifier as a proxyreward for true task success perform comparably topolicies trained on true task success.

H4. Our imitation learning method for multi-task learningoutperforms other state-of-the-art methods.

H5. The multi-task policy performs comparably to single-task policies.

H6. Incorporating natural language through task embed-dings allows the multi-task policy to generalize tonovel instructions.

Fig. 3: Initial scene images for each of the 78 tasks that form the input to the single and multi-task network. The top left image shows an overhead view of the simulationenvironment. More examples are shown in the supplementary video.

A. Single-Task Policies

To conduct an ablation study of our choices for the motionrepresentation and reward signal, we first evaluate the perfor-mance of single-task policies.

1) Comparison of goal+forces to goal-only trajectories(H1): To evaluate whether a motion trajectory representationthat can capture complex trajectories is important for learningtasks, we run an ablation experiment where we remove theauxiliary forces f at each timestep from our trajectory repre-sentation in Eq. 1. We train policies on the resulting straight-line trajectories with only goal poses g, which is equivalent toPD control to the goal. The results are presented in Fig. 4. Theaverage task performance of the full trajectory representation(Video w/ Goal+Forces) is 61%, while the average of the goal-only representation (Video w/ Goal) is 54%. For most simpletasks, the goal-only representation is sufficient, but some tasks,like “show sth is empty” and “push sth so that it slightlymoves” require more complex trajectory shapes or velocityprofiles that can only be provided by the additional force term.

2) Comparison of video-based to image-based classification(H2): To demonstrate the importance of using videos to learndynamic manipulation tasks, we run an ablation experimentwhere we replace the video-based action classifier with a clas-

sifier that only takes the initial and goal image of the trajectoryas input. Because videos in Sth Sth do not necessarily endexactly when the task is finished, i.e. when the hand reachesthe goal pose, we train the image classifier by randomlyselecting a frame from the last 25% of the video to be thegoal image. The image-based action classifier feeds the initialand goal images into a pretrained ResNet50 network [40]. Theresulting features are concatenated and passed to an MLP witha softmax layer to output a probability distribution over the174 manipulation tasks. The image classifier achieves a top-1accuracy of 28% and top-5 accuracy of 43%, while the videoclassifier achieves 35% and 58%, respectively.

Fig. 4 (second column) compares the performance of thepolicies trained with the video- and image-based action clas-sifier. In general, policies trained on the image-based classifierperform worse, particularly on tasks like “hit sth with sth” or“poke sth so that it slightly moves”, which require intermediateframes in the trajectory, not just the goal image, to identify.

3) Comparison of video-based with ground truth reward(H3): In this work, we are proposing to learn manipulationconcepts from a reward signal that is provided by a video-based action classifier trained on human video demonstrations.However, there is concern that this proxy reward is noisy and

0 0.2 0.4 0.6 0.8 1

Poke sth so it slightly movesPoke sth so that it falls over

Uncover sthPull sth from right to left

Pull sth out of sthPush sth from right to leftPush sth so that it slightly

movesPush sth with sth

Take sth out of sthLet sth roll down a slanted

surfaceTip sth over

Move sth away from the cameraPick sth up

Remove sth: reveal sth behindMove sth up

Push sth from left to rightHold sth

Move sth away from sthLift sth with sth on it

Move sth downMove sth toward the camera

Open sthShow that sth is inside sth

Let sth roll along a flatsurface

Move sth closer to sthPull sth from left to right

Close sthPush sth onto sthPour sth onto sth

Tilt sth with sth on it slightlyso it doesn t fall down

Put sth on a surfaceHold sth over sth

Tilt sth with sth on it until itfalls off

Fail to put sth into sth becausesth does not fit

Drop sth behind sthPush sth so that it almost falls

off but doesn tShow sth is emptyDrop sth onto sth

Unfold sthDrop sth next to sth

Pour sth into sthPretend to poke sth

Pull sth from behind of sthPush sth off of sth

Lift sth upPut sth behind sth

Put sth underneath sthHit sth with sth

Pull sth onto sthPut sth into sth

Push sth so that it falls offHold sth in front of sth

Poke a stack of sth so the stackcollapse

Poke sth so that it spins aroundThrow sth onto a surface

Fold sthHold sth next to sth

Cover sth with sthThrow sth

Roll sth on a flat surfaceThrow sth against sth

Twist sthPretend to throw sth

Put sth in front of sthPlug sth into sth

Poke a stack of sth without thestack collapsing

Touch sthPoke sth so lightly that it

almost doesn t movePut sth next to sth

Put sth on the edge of sth so itis not supported and fails

Drop sth into sthHold sth behind sth

Lay sth on the table on its sideTurn sth upside down

Wipe sth offScoop sth up with sth

Drop sth in front of sthLet sth roll up a slanted

surface and it rolls back down

0 0.2 0.4 0.6 0.8 1 0 0.2 0.4 0.6 0.8 1 0 0.2 0.4 0.6 0.8 1

Video w/ Goal+Forces Video w/ Goal Image w/ Goal+Forces RL Multitask

Fig. 4: Task Performance of Single and Multi-task policies. The blue bars in all plotsrepresents the task performance of single-task policies when trained with reward from thevideo classifier and with the motion being represented by goal poses and forces at eachtime step. The bar plot on the left shows the effect of removing forces from the motionrepresentation (orange). The second plot from the left shows the effect of replacing thevideo classifier with an image classifier (red). These two ablation studies show that witha few exceptions, being able to shape the trajectory with forcing terms improves taskperformance, and that taking the video of the entire robot motion into account instead ofonly before and after images also improves task performance. The third plot shows theeffect of replacing the video classifier with the handcrafted RL reward that reflects truetask success (purple). For most of the high-performing tasks, the video classifier performscomparably (in some cases better), while for the low-performing tasks, the video classifierfaces a drop in performance, possibly due to video classification inaccuracies. The ploton the right compares single-task to multi-task performance, showing that the multi-taskframework is able to maintain performance on most of the tasks.

may not provide a sufficient signal to reach a high successrate. To test this, we first gauge the difficulty of each taskby training 78 single-task policies with a hand-designed, task-specific, binary reward signal that reflects real task success.To evaluate the effectiveness of using the video-based actionclassification score as a reward signal, we compare the per-formance of the resulting policies to those trained with ourhandcrafted reward functions. Ideally, there is no differencein task success. However, because the action classifier is notperfect, we expect some drop in performance. The results arepresented in the third column of Fig. 4. Most of the high-performing, video-based policies match the performance of the“handcrafted RL” policies. Some of them even perform better,e.g. “push sth so that it slightly moves” and “fail to put sth intosth because sth does not fit”. One possible explanation for thisis that the ground truth rewards are binary, whereas the video-based classifier provides a continuous classification score thatoffers some feedback for attempts that almost achieve thetask but fail. For some of the lower-performing, video-basedpolicies, the gap to the performance of the handcrafted RLpolicies is quite large, indicating that the video-based actionclassifier only provides a noisy reward signal for these tasks.For other low-performing, video-based policies, the gap to theperformance of the handcrafted RL policy is small, indicatingthat the task is hard to learn in general.

B. Multi-Task Policies

We aim to learn a unified model for all the 78 tasks such thatthis model can also generalize over novel instructions. In thissection, we evaluate the performance of our learning methodcompared to baselines and show qualitative examples of howthe model outputs reasonable actions for novel instructions.

1) Comparison of multi-task algorithms (H4): We firstselect eight tasks for a simple multi-task benchmark, shown inTable I. The tasks are grouped into complementary pairs, like“move sth away from sth”/“move sth closer to sth” and “pullsth from right to left”/“pull sth from left to right”. We run ourproposed multi task learning algorithm on the benchmark.

The first baseline, which we call Simple, trains a singlemulti-task policy on all eight environments with the corre-sponding video classification score as the reward. Becausethis model needs to learn a policy that maximizes the videoclassifier reward on all eight tasks, the objective function iscomplex, and policy gradient optimization easily gets stuckin local minima. For example, among the eight tasks, task 42dominates, while the others remain low. Overall, the Simplebaseline only achieves an average success rate of 17.4%.

We implement a second baseline based on the Distral algo-rithm [44]. Since our method generates deterministic actions,we replace the KL divergence—used to measure the differencebetween the single- and multi-task policy distributions—withthe Euclidean distance between the actions generated by thesingle- and multi-task policy. Distral greatly outperforms Sim-ple, but does not perform as well as our method. One possibleexplanation for this is that Distral requires that the multi-taskpolicy is trained in conjunction with the single-task policies.

However, jointly optimizing the policies may be difficult inour task setting. First, the reward signal from the learned videoclassifier is noisy. Second, the magnitude of rewards for theoptimal single-task policies may vary across tasks, since thevideo classifier may be more confident in scoring some tasksthan others. The noise and bias in the reward function maycause Distral to get stuck in local optima.

The reason we learn the single-task policies independentlyof the multi-task policy is that the noisy and biased rewardfrom the video classifier may cause the multi-task policy tobe unstable and perform poorly in the early stages of learning.By learning the single-task policies first, we can ensure thatthe instability of the multi-task learning does not affect thesingle-task performance, especially since single-task learningis unaffected by the reward bias when trained alone.

SuccessRate% Average T40 T42 T86 T87 T93 T94 T103 T104Simple 17.4 24 52 20 28 1 10 3 1Distral 61.5 88 78 80 100 63 36 45 3Ours 76.3 94 74 82 100 100 96 60 4

TABLE I: Comparison of multi-task baselines. The instructions for the eight tasks are“Move sth away from sth”, “Move sth closer to sth”, “Pull sth from left to right”, “Pullsth from right to left”, “Push sth from left to right”, “Push sth from right to left”, “Putsth behind sth”, and “Put sth in front of sth”. We report average success rate over fourrandom seeds. The mean and standard deviation of the success rates of the correspondingsingle-task policies are: 91±3.4, 78±4.6, 88±3.1, 96±3.8, 96±3.2, 94±3.1,51 ± 7.3, and 2 ± 2.2. This shows that the performance is stable across seeds.

2) Comparison of multi-task to single-task (H5): Learninga unified policy for multiple tasks is harder than learning apolicy for a single task. Therefore, we expect the multi-taskpolicy to perform worse than the single-task policies. Thiscomparison is shown in the fourth column of Fig. 4. The multi-task policy achieves an average success rate of 54%, whilethe single-task policies collectively achieve 61%. As expected,this indicates a drop in performance, although not too severe.In future work, we will investigate other multi-task learningframeworks, such as from the meta-learning community.

3) Generalization to new instructions (H6): We demon-strate that our model shows reasonable generalization to newnatural language instructions. We test the generalization at twolevels. First, we replace words in a known task instructionwith novel but similar words. For example, “move sth up” isreplaced with “move sth higher” or “put sth higher”. As shownin Table II, these new instructions reach comparable taskperformance. The performance of generalization decreases themore novel words are in the instruction.

Second, we try to compose tasks. For example, combining“pull sth from left to right” and “move sth up” becomes “pullsth from left to right and move sth up”. Qualitatively, thepolicy network seems to linearly interpolate between the twotasks. This can be observed in the supplementary video. Weevaluate the motion to be successful if it satisfies the successconditions in both tasks. However, the success rate drops byabout half, which may not be surprising because we do notspecifically train the multi-task network to be able to composemultiple tasks. This is a potential direction for future work.

Task Instruction SuccessRate

Known: Move sth up 88%Unseen: Move sth higher 81%Unseen: Put sth higher 79%Unseen: Put an object higher 43%Known: Move sth down 83%Unseen: Move sth lower 83%Unseen: Put sth lower 71%Unseen: Put an object lower 34%Known: Pull sth from left to right 93%Unseen: Pull sth from left to right and move sth up 45%

TABLE II: Comparison of performance between unseen and related known instructions.

VI. CONCLUSION

In this paper, we approached the problem of learning amulti-task policy that links natural language instructions tomotor skills. Specifically, we propose to learn from demon-strations that are provided in the form of videos of humanmanipulation actions. Our framework used a two-stage pro-cess. In the first stage, we trained single-task policies through areinforcement learning algorithm where the reward is providedby a video-based activity classifier. This classifier scored howmuch the robot executing the current policy appears to performthe specified task. In a second stage, we then proceeded totrain one multi-task policy by letting it imitate the single-task policies. In extensive simulated experiments, we showedhow our policy can learn to perform a large number of the78 complex manipulation tasks it was trained on. In ablationstudies, we also motivated our choice of using a video- ratherthan an image-based classifier. Furthermore, we demonstratedhow parameterizing the action through end effector goal poseand forces per time step enables the robot to learn a largernumber of the 78 tasks than when only using the goal. Finally,we showed in qualitative examples how the multi-task policygeneralizes to novel natural language instructions that aresimilar to the ones it was trained on.

Our approach opens exciting new future directions. Forexample, we would like to explore different algorithms formulti-task reinforcement learning as well as meta-learningto learn new tasks faster [53]. Furthermore, in this currentapproach, we have only learned to perform basic manipulationskills. However, for more complex manipulation tasks, wemay want to compose or sequence skills which may requireintegrating task and motion planners similar to [19, 46].Currently, we are only learning tasks on the coarsest level ofnatural language instructions that is provided by Sth Sth [13].However, some actions are qualified by the kind of objectthey are applied to. For example, ”closing a bottle” requires adifferent motion from ”closing a drawer”. Expanding manip-ulation concept learning to these more complex instructionsis also an interesting future direction. And finally, we wouldlike to execute these learned manipulation concepts on a realrobotic platform with real time closed-loop behavior.

REFERENCES

[1] Peter Anderson, Qi Wu, Damien Teney, Jake Bruce, MarkJohnson, Niko Sunderhauf, Ian Reid, Stephen Gould, and

Anton van den Hengel. Vision-and-language navigation:Interpreting visually-grounded navigation instructions inreal environments. In Proceedings of the IEEE Confer-ence on Computer Vision and Pattern Recognition, pages3674–3683, 2018.

[2] Jacob Andreas, Dan Klein, and Sergey Levine. Modularmultitask reinforcement learning with policy sketches.In Proceedings of the 34th International Conference onMachine Learning-Volume 70, pages 166–175. JMLR.org, 2017.

[3] Brenna D Argall, Sonia Chernova, Manuela Veloso,and Brett Browning. A survey of robot learning fromdemonstration. Robotics and autonomous systems, 57(5):469–483, 2009.

[4] Dzmitry Bahdanau, Felix Hill, Jan Leike, EdwardHughes, Pushmeet Kohli, and Edward Grefenstette.Learning to follow language instructions with adversarialreward induction. arXiv preprint arXiv:1806.01946,2018.

[5] Erwin Coumans and Yunfei Bai. Pybullet, a pythonmodule for physics simulation for games, robotics andmachine learning. http://pybullet.org, 2016–2019.

[6] Coline Devin, Abhishek Gupta, Trevor Darrell, PieterAbbeel, and Sergey Levine. Learning modular neuralnetwork policies for multi-task and multi-robot transfer.In 2017 IEEE International Conference on Robotics andAutomation (ICRA), pages 2169–2176. IEEE, 2017.

[7] Jacob Devlin, Ming-Wei Chang, Kenton Lee, andKristina Toutanova. Bert: Pre-training of deep bidirec-tional transformers for language understanding. arXivpreprint arXiv:1810.04805, 2018.

[8] Jeffrey Donahue, Lisa Anne Hendricks, Sergio Guadar-rama, Marcus Rohrbach, Subhashini Venugopalan, KateSaenko, and Trevor Darrell. Long-term recurrent convo-lutional networks for visual recognition and description.In Proceedings of the IEEE conference on computervision and pattern recognition, pages 2625–2634, 2015.

[9] Hao Fang, Saurabh Gupta, Forrest Iandola, Rupesh K Sri-vastava, Li Deng, Piotr Dollar, Jianfeng Gao, XiaodongHe, Margaret Mitchell, John C Platt, et al. From captionsto visual concepts and back. In Proceedings of the IEEEconference on computer vision and pattern recognition,pages 1473–1482, 2015.

[10] Justin Fu, Avi Singh, Dibya Ghosh, Larry Yang, andSergey Levine. Variational inverse control with events:A general framework for data-driven reward definition.In Advances in Neural Information Processing Systems,pages 8538–8547, 2018.

[11] Scott Fujimoto, Herke Van Hoof, and David Meger.Addressing function approximation error in actor-criticmethods. arXiv preprint arXiv:1802.09477, 2018.

[12] Andrej Gams, Ales Ude, Jun Morimoto, et al. Deepencoder-decoder networks for mapping raw images todynamic movement primitives. In 2018 IEEE Interna-tional Conference on Robotics and Automation (ICRA),pages 1–6. IEEE, 2018.

[13] Raghav Goyal, Samira Ebrahimi Kahou, Vincent Michal-ski, Joanna Materzynska, Susanne Westphal, Heuna Kim,Valentin Haenel, Ingo Frund, Peter Yianilos, MoritzMueller-Freitag, Florian Hoppe, Christian Thurau, IngoBax, and Roland Memisevic. The ”something some-thing” video database for learning and evaluating visualcommon sense. CoRR, abs/1706.04261, 2017. URLhttps://20bn.com/datasets/something-something.

[14] Abhishek Gupta, Vikash Kumar, Corey Lynch, SergeyLevine, and Karol Hausman. Relay policy learning: Solv-ing long horizon tasks via imitation and reinforcementlearning. Conference on Robot Learning (CoRL), 2019.

[15] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and JianSun. Deep residual learning for image recognition. InProceedings of the IEEE conference on computer visionand pattern recognition, pages 770–778, 2016.

[16] Auke Jan Ijspeert, Jun Nakanishi, and Stefan Schaal.Movement imitation with nonlinear dynamical systemsin humanoid robots. In Proceedings 2002 IEEE Interna-tional Conference on Robotics and Automation (Cat. No.02CH37292), volume 2, pages 1398–1403. IEEE, 2002.

[17] Yiding Jiang, Shixiang Gu, Kelvin Murphy, and ChelseaFinn. Language as an abstraction for hierarchical deep re-inforcement learning. arXiv preprint arXiv:1906.07343,2019.

[18] Yiding Jiang, Shixiang Shane Gu, Kevin P Murphy, andChelsea Finn. Language as an abstraction for hierarchicaldeep reinforcement learning. In Advances in NeuralInformation Processing Systems, pages 9414–9426, 2019.

[19] Leslie Pack Kaelbling and Tomas Lozano-Perez. Hi-erarchical task and motion planning in the now. InProceedings of the 1st AAAI Conference on Bridging theGap Between Task and Motion Planning, AAAIWS’10-01, page 33–42. AAAI Press, 2010.

[20] Dmitry Kalashnikov, Alex Irpan, Peter Pastor, JulianIbarz, Alexander Herzog, Eric Jang, Deirdre Quillen,Ethan Holly, Mrinal Kalakrishnan, Vincent Vanhoucke,et al. Qt-opt: Scalable deep reinforcement learningfor vision-based robotic manipulation. arXiv preprintarXiv:1806.10293, 2018.

[21] S Mohammad Khansari-Zadeh and Aude Billard. Learn-ing stable nonlinear dynamical systems with gaussianmixture models. IEEE Transactions on Robotics, 27(5):943–957, 2011.

[22] Oussama Khatib. A unified approach for motion andforce control of robot manipulators: The operationalspace formulation. IEEE Journal on Robotics andAutomation, 3(1), 1987.

[23] Tomas Kulvicius, KeJun Ning, Minija Tamosiunaite, andFlorentin Worgotter. Joining movement sequences: Mod-ified dynamic movement primitives for robotics applica-tions exemplified on handwriting. IEEE Transactions onRobotics, 28(1):145–157, 2011.

[24] John Langford and Tong Zhang. The epoch-greedy algo-rithm for contextual multi-armed bandits. In Proceedingsof the 20th International Conference on Neural Informa-

tion Processing Systems, NIPS’07, page 817–824, RedHook, NY, USA, 2007. Curran Associates Inc. ISBN9781605603520.

[25] Miguel Lazaro-Gredilla, Dianhuan Lin, J Swaroop Gun-tupalli, and Dileep George. Beyond imitation: Zero-shottask transfer on robots by learning concepts as cognitiveprograms. arXiv preprint arXiv:1812.02788, 2018.

[26] Timothy P Lillicrap, Jonathan J Hunt, Alexander Pritzel,Nicolas Heess, Tom Erez, Yuval Tassa, David Silver, andDaan Wierstra. Continuous control with deep reinforce-ment learning. arXiv preprint arXiv:1509.02971, 2015.

[27] Jonathan Long, Evan Shelhamer, and Trevor Darrell.Fully convolutional networks for semantic segmentation.In Proceedings of the IEEE conference on computervision and pattern recognition, pages 3431–3440, 2015.

[28] Eric Margolis and Stephen Laurence, editors. Concepts:Core readings. The MIT Press, Cambridge, MA, US,1999. ISBN 0-262-13353-9 (Hardcover); 0-262-63193-8(Paperback).

[29] Takamitsu Matsubara, Sang-Ho Hyon, and Jun Mori-moto. Learning stylistic dynamic movement primitivesfrom multiple demonstrations. In 2010 IEEE/RSJ Inter-national Conference on Intelligent Robots and Systems,pages 1277–1283. IEEE, 2010.

[30] F. Meier, E. Theodorou, F. Stulp, and S. Schaal. Move-ment segmentation using a primitive library. In 2011IEEE/RSJ International Conference on Intelligent Robotsand Systems, pages 3407–3412, 2011.

[31] Dipendra Misra, Andrew Bennett, Valts Blukis, EyvindNiklasson, Max Shatkhin, and Yoav Artzi. Mappinginstructions to actions in 3d environments with visualgoal prediction. arXiv preprint arXiv:1809.00786, 2018.

[32] Alexandros Paraschos, Christian Daniel, Jan R Peters,and Gerhard Neumann. Probabilistic movement prim-itives. In Advances in neural information processingsystems, pages 2616–2624, 2013.

[33] Emilio Parisotto, Jimmy Lei Ba, and Ruslan Salakhutdi-nov. Actor-mimic: Deep multitask and transfer reinforce-ment learning. arXiv preprint arXiv:1511.06342, 2015.

[34] Peter Pastor, Heiko Hoffmann, Tamim Asfour, and StefanSchaal. Learning and generalization of motor skills bylearning from demonstration. In 2009 IEEE InternationalConference on Robotics and Automation, pages 763–768.IEEE, 2009.

[35] Reuven Y. Rubinstein and Dirk P. Kroese. The CrossEntropy Method: A Unified Approach To CombinatorialOptimization, Monte-Carlo Simulation (Information Sci-ence and Statistics). Springer-Verlag, Berlin, Heidelberg,2004. ISBN 038721240X.

[36] Andrei A Rusu, Sergio Gomez Colmenarejo, CaglarGulcehre, Guillaume Desjardins, James Kirkpatrick, Raz-van Pascanu, Volodymyr Mnih, Koray Kavukcuoglu,and Raia Hadsell. Policy distillation. arXiv preprintarXiv:1511.06295, 2015.

[37] Ozan Sener and Vladlen Koltun. Multi-task learningas multi-objective optimization. In Advances in Neural

Information Processing Systems, pages 527–538, 2018.[38] Lin Shao, Parth Shah, Vikranth Dwaracherla, and Jean-

nette Bohg. Motion-based object segmentation based ondense rgb-d scene flow. IEEE Robotics and AutomationLetters, 3(4):3797–3804, 2018.

[39] Tianmin Shu, Caiming Xiong, and Richard Socher.Hierarchical and interpretable skill acquisition inmulti-task reinforcement learning. arXiv preprintarXiv:1712.07294, 2017.

[40] Karen Simonyan and Andrew Zisserman. Very deepconvolutional networks for large-scale image recognition.arXiv preprint arXiv:1409.1556, 2014.

[41] Avi Singh, Larry Yang, Kristian Hartikainen, ChelseaFinn, and Sergey Levine. End-to-end robotic reinforce-ment learning without reward engineering. arXiv preprintarXiv:1904.07854, 2019.

[42] Vladimir M Sloutsky. From Perceptual Categories toConcepts: What Develops? Cognitive science, 34(7):1244–1286, sep 2010. ISSN 1551-6709. doi: 10.1111/j.1551-6709.2010.01129.x.

[43] Yuuya Sugita and Jun Tani. Learning semantic com-binatoriality from the interaction between linguistic andbehavioral processes. Adaptive behavior, 13(1):33–52,2005.

[44] Yee Teh, Victor Bapst, Wojciech M Czarnecki, JohnQuan, James Kirkpatrick, Raia Hadsell, Nicolas Heess,and Razvan Pascanu. Distral: Robust multitask rein-forcement learning. In Advances in Neural InformationProcessing Systems, pages 4496–4506, 2017.

[45] Chen Tessler, Shahar Givony, Tom Zahavy, Daniel JMankowitz, and Shie Mannor. A deep hierarchicalapproach to lifelong learning in minecraft. In Thirty-First AAAI Conference on Artificial Intelligence, 2017.

[46] Marc Toussaint, Kelsey Allen, Kevin Smith, and JoshuaTenenbaum. Differentiable physics and stable modes fortool-use and manipulation planning. In Proceedings ofRobotics: Science and Systems, Pittsburgh, Pennsylvania,June 2018. doi: 10.15607/RSS.2018.XIV.044.

[47] Du Tran, Lubomir Bourdev, Rob Fergus, Lorenzo Tor-resani, and Manohar Paluri. Learning spatiotemporalfeatures with 3d convolutional networks. In Proceedingsof the IEEE international conference on computer vision,pages 4489–4497, 2015.

[48] Ales Ude, Andrej Gams, Tamim Asfour, and Jun Mori-moto. Task-specific generalization of discrete and peri-odic dynamic movement primitives. IEEE Transactionson Robotics, 26(5):800–815, 2010.

[49] Oriol Vinyals, Alexander Toshev, Samy Bengio, andDumitru Erhan. Show and tell: A neural image captiongenerator. In Proceedings of the IEEE conference oncomputer vision and pattern recognition, pages 3156–3164, 2015.

[50] Xin Wang, Wenhan Xiong, Hongmin Wang, and WilliamYang Wang. Look before you leap: Bridging model-freeand model-based reinforcement learning for planned-ahead vision-and-language navigation. In Proceedings of

the European Conference on Computer Vision (ECCV),pages 37–53, 2018.

[51] Xin Wang, Qiuyuan Huang, Asli Celikyilmaz, JianfengGao, Dinghan Shen, Yuan-Fang Wang, William YangWang, and Lei Zhang. Reinforced cross-modal match-ing and self-supervised imitation learning for vision-language navigation. In Proceedings of the IEEE Confer-ence on Computer Vision and Pattern Recognition, pages6629–6638, 2019.

[52] Licheng Yu, Patrick Poirson, Shan Yang, Alexander CBerg, and Tamara L Berg. Modeling context in referringexpressions. In European Conference on ComputerVision, pages 69–85. Springer, 2016.

[53] Tianhe Yu, Deirdre Quillen, Zhanpeng He, Ryan Ju-lian, Karol Hausman, Chelsea Finn, and Sergey Levine.Meta-world: A benchmark and evaluation for multi-task and meta reinforcement learning. arXiv preprintarXiv:1910.10897, 2019.