400-million years of 'fishes': a survey of sampling biases based on the UK record

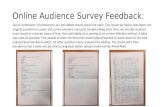

Response Biases in Survey Research


Hans Baumgartner, Smeal Professor of Marketing

Smeal College of Business, Penn State University


Response biases

when a researcher conducts a survey, the expectation is that the information collected will be a faithful representation of reality;

unfortunately, this is often not the case, and survey researchers have identified many different sources of error in surveys;

these errors may contaminate the research results and limit the managerial usefulness of the findings;

if the response provided by a respondent does not fully reflect the “true” response, a response (or measurement) error occurs (random or systematic);

response biases (systematic response errors) can happen at any of the four stages of the response process, are elicited by different aspects of the survey, and are due to a variety of psychological mechanisms;


The relationship between observed measurements and constructs of interest

[Figure: path diagram with trait (T, T1, T2), error (E, E1, E2), and method (M, M1, M2) components]

The total variability of observed scores consists of trait (substantive), random error, and systematic error (method) variance.

Random and systematic errors are likely to confound relationships between measures and constructs and between different constructs.

They also complicate the comparison of means.
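To make the variance decomposition concrete, here is a small simulation sketch (not from the talk; all parameter values are hypothetical). Each observed score is the sum of a trait score, a respondent-level method score shared by both measures, and random error. The two traits are generated independently, so any correlation between the observed measures comes only from the shared method component.

```python
import random
import statistics


def pearson(x, y):
    """Pearson correlation computed from raw sums (stdlib only)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def simulate(n=20000, method_sd=0.6, error_sd=0.5, seed=1):
    """Observed score = trait + method + random error (hypothetical values)."""
    rng = random.Random(seed)
    y1, y2 = [], []
    for _ in range(n):
        trait1 = rng.gauss(0, 1)  # substantive score for construct 1
        trait2 = rng.gauss(0, 1)  # construct 2, independent of construct 1
        method = rng.gauss(0, method_sd)  # same method score enters both measures
        y1.append(trait1 + method + rng.gauss(0, error_sd))
        y2.append(trait2 + method + rng.gauss(0, error_sd))
    return y1, y2


y1, y2 = simulate()
# Population variance: 1 (trait) + 0.36 (method) + 0.25 (error) = 1.61
print(round(statistics.variance(y1), 2))
# Population correlation: 0.36 / 1.61, about 0.22, although the traits are unrelated
print(round(pearson(y1, y2), 2))
```

Setting `method_sd=0` removes the shared component and the correlation drops to roughly zero, which is the sense in which method variance can confound relationships between constructs.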


Outline of the talk

Misresponse to reversed and negated items

□ Item reversal and negation, types of misresponse, and mechanisms

□ Reversed item bias: An integrative model

□ Eye tracking of survey responding to reversed and negated items

Item grouping and discriminant validity

Extreme and midpoint responding as satisficing strategies in online surveys

Stylistic response tendencies over the course of a survey


The issue of item reversal

Should reverse-keyed items (also called oppositely-keyed, reversed-polarity, reverse-worded, negatively worded, negatively-keyed, keyed-false, or simply reversed items) be included in multi-item summative scales?

If reversed items are to be used, does it matter whether the reversal is achieved through negation or through other means?

What’s the link between reversal and negation, what types of misresponse (MR) result, what psychological mechanisms are involved, and how can MR be avoided?


Item reversal vs. item negation

authors often fail to draw a clear distinction between reversals and negations and use ambiguous terms such as ‘negatively worded items’, which makes it unclear whether they refer to reversed or negated items, or both;

examples from the Material Values scale (Richins and Dawson 1992):

□ It sometimes bothers me quite a bit that I can't afford to buy all the things I’d like.

□ I have all the things I really need to enjoy life.

□ I wouldn't be any happier if I owned nicer things.


Item negation

items can be stated either as an assertion (affirmation) or as a denial (disaffirmation) of something (Horn 1989); negation is a grammatical issue; classification of negations in terms of two dimensions:

□ what part of speech is negated (how a word is used in a sentence: as a verb, noun/pronoun, adjective, adverb or preposition/conjunction);

□ how the negation is achieved (by means of particle negation, the addition of no, the use of negative affixes, negative adjectives and adverbs, negative pronouns, or negative prepositions);

Negated by means of (columns) by part of speech (rows); each cell gives typical negators, an example item in brackets, and the count (percentage of the total of 302)

| Part of speech | Not, n’t | No | Negative affixes | Negative adjectives and adverbs | Negative pronouns | Negative prepositions | Total |
|---|---|---|---|---|---|---|---|
| Verb | [This salesperson does not make false claims.] 135 (44.7%) | n.a. | dislike, disagree, etc. [I dislike food shopping very much.] 5 (1.7%) | reluctant, hesitant, never, rarely, seldom, hardly (ever), less, little, etc. [I seldom daydream.] 26 (8.6%) | n.a. | without, instead of, rather than, etc. [This supplier sometimes promises to do things without actually doing them later.] 5 (1.7%) | 171 (56.6%) |
| Noun/pronoun | not everyone, not (only), etc. [I and my family will consume only certain brands, not others.] 4 (1.3%) | no object, no reason, no purpose, etc. [Clipping, organizing, and using coupons is no fun.] 17 (5.6%) | discomfort, disagreement, etc. [There is considerable disagreement as to the future directions that this hospital should take.] 5 (1.7%) | little, few, a lack of, none of the, not much, neither of, etc. [Many times I feel that I have little influence over things that happen to me.] 10 (3.3%) | no-one, nobody, none, nothing, etc. [Energy is really not my problem because there is simply nothing I can do about it.] 4 (1.3%) | except for, without, with the exception of, instead of, rather than, etc. [American people should always buy American-made products instead of imports.] 14 (4.6%) | 54 (17.9%) |
| Adjective | n.a. | n.a. | uninterested, dishonest, etc. [Most charitable organizations are dishonest.] 55 (18.2%) | rarely, less, etc. [I would be less loyal to this rep firm, if my salesperson moved to a new firm.] 4 (1.3%) | n.a. | n.a. | 59 (19.5%) |
| Adverb | not much, etc. [After I meet someone for the first time, I can usually remember what they look like, but not much about them.] 2 (.7%) | no longer, etc. [Hard work is no longer essential for the well-being of society.] 1 (.3%) | [I often dress unconventionally even when it's likely to offend others.] 3 (1.0%) | rarely, less, etc. [I feel I have to do things hastily and maybe less carefully in order to get everything done.] 1 (.3%) | n.a. | n.a. | 7 (2.3%) |
| Preposition/conjunction | not for, not (just) in, not (only) as, not until, not if (incl. unless), not because, etc. [I enjoyed this shopping trip for its own sake, not just for the items I may have purchased.] 10 (3.4%) | n.a. | n.a. | n.a. | for nothing, etc. [I don't believe in giving anything away for nothing.] 1 (.3%) | n.a. | 11 (3.7%) |
| Total | 151 (50.0%) | 18 (6.0%) | 68 (22.5%) | 41 (13.5%) | 5 (1.7%) | 19 (6.3%) | 302 |


Item reversal

an item is reversed if its meaning is opposite to a relevant standard of comparison (semantic issue); three senses of reversal:

□ reversal relative to the polarity of the construct being measured;

□ reversal relative to other items measuring the same construct: reversal relative to the first item, or reversal relative to the majority of the items;

□ reversal relative to a respondent’s true position on the issue under consideration (Swain et al. 2008);


Integrating item negation and item reversal

| Item negation | Item reversal: non-reversed | Item reversal: reversed |
|---|---|---|
| Non-negated | Regular (RG) items: Talkative, enjoying talking to people | Polar opposite (PO) items: Quiet, preferring to do things by oneself |
| Negated | Negated polar opposite (nPO) items: Not quiet, preferring not to be by oneself | Negated regular (nRG) items: Not talkative, not getting much pleasure chatting with people |


Misresponse to negated and reversed items

| Item | Consistent responding | Misresponse to negated items (NMR) | Misresponse to reversed items (RMR) | Misresponse to polar opposites (POMR) |
|---|---|---|---|---|
| Talkative (RG) | A | A | A | A |
| Not talkative (nRG) | D | A | A | D |
| Quiet (PO) | D | D | A | A |
| Not quiet (nPO) | A | D | A | D |

A = agree; D = disagree.

MR → within-participant inconsistency in response to multiple items intended to measure the same construct;
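The table's patterns can be turned into a simple classifier for one respondent's answers to the four item types. This is an illustrative sketch, not a procedure from the talk: it assumes dichotomized answers (A/D) and treats the answer to the regular item as the respondent's true position, mirroring all answers when that answer is D so that the same four patterns also apply to a respondent who is truly not talkative.

```python
# Patterns (A = agree, D = disagree) for talkative (RG), not talkative (nRG),
# quiet (PO), and not quiet (nPO), copied from the table above; they describe
# a respondent whose true position agrees with the RG item.
PATTERNS = {
    ("A", "D", "D", "A"): "consistent",
    ("A", "A", "D", "D"): "NMR",
    ("A", "A", "A", "A"): "RMR",
    ("A", "D", "A", "D"): "POMR",
}

FLIP = {"A": "D", "D": "A"}


def classify_misresponse(rg, nrg, po, npo):
    """Label one RG/nRG/PO/nPO answer pattern.

    Assumption (not from the talk): the RG answer is taken as the true
    position; if it is D, all answers are mirrored before the lookup.
    """
    answers = (rg, nrg, po, npo)
    if rg == "D":
        answers = tuple(FLIP[a] for a in answers)
    return PATTERNS.get(answers, "other")


print(classify_misresponse("A", "A", "A", "A"))  # RMR
print(classify_misresponse("D", "A", "A", "D"))  # consistent
```

Patterns that match none of the four rows are returned as "other"; the sketch cannot tell whether the RG answer itself is the misresponse.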


Using reversed and negated items in surveys: Some recommendations

although responding to reversed items is error prone, wording all questions in one direction does not solve the problem;

negations should be employed sparingly, esp. if they do not result in an item reversal (note: negations come in many guises);

polar opposite reversals can be beneficial (esp. at the retrieval stage), but they have to be used with care;

An integrative model of reversed item bias: Weijters, Baumgartner, and Schillewaert (2012)

two important method effects:

□ response inconsistency between regular and reversed items;

□ difference in mean response depending on whether the first item measuring the focal construct is a regular or reversed item;

three sources of reversed item method bias:

□ acquiescence

□ careless responding

□ confirmation bias


The survey response process (Tourangeau et al. 2000)

□ Comprehension: attending to and interpreting survey questions (careless responding)

□ Retrieval: generating a retrieval strategy and retrieving relevant beliefs from memory (confirmation bias)

□ Judgment: integrating the information into a judgment

□ Response: mapping the judgment onto the response scale and answering the question (acquiescence)


Empirical studies

(net) acquiescence and carelessness were explicitly measured; confirmation bias was modeled via a manipulation of two item orders in the questionnaire, depending on the keying direction of the first item measuring the target construct;

three item arrangements:

□ grouped-alternated condition (related items are grouped together and regular and reverse-keyed items are alternated);

□ grouped-massed condition (items are grouped together, but the reverse-keyed items follow a block of regular items, or vice versa);

□ dispersed condition (items are spread throughout the questionnaire, with unrelated buffer items spaced between the target items);
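The three arrangements can be made concrete with a small ordering helper. This is a hypothetical sketch: the slide does not say where buffer items go in the grouped conditions (here they follow the target block) or how many buffers separate dispersed items (here they are spread evenly, with target keying alternating). The `reversed_first` flag corresponds to the manipulation of whether the first target item is reversed.

```python
def arrange(regular, reversed_items, buffers, condition, reversed_first=False):
    """Order target and buffer items for one of the three arrangements.

    Hypothetical helper: assumes equally many regular and reversed items.
    """
    if not regular or len(regular) != len(reversed_items):
        raise ValueError("expected a balanced, non-empty set of target items")
    buffers = list(buffers)
    first, second = (reversed_items, regular) if reversed_first else (regular, reversed_items)
    alternated = [item for pair in zip(first, second) for item in pair]
    if condition == "grouped-alternated":
        return alternated + buffers
    if condition == "grouped-massed":
        return list(first) + list(second) + buffers
    if condition == "dispersed":
        gap = len(buffers) // len(alternated)  # buffer items after each target
        order = []
        for k, item in enumerate(alternated):
            order.append(item)
            order.extend(buffers[k * gap:(k + 1) * gap])
        order.extend(buffers[len(alternated) * gap:])  # leftovers go at the end
        return order
    raise ValueError(f"unknown condition: {condition}")


print(arrange(["R1", "R2"], ["V1", "V2"], ["B1", "B2", "B3", "B4"], "dispersed"))
```

With regular items R1 and R2, reversed items V1 and V2, and buffers B1 to B4, the dispersed condition yields R1, B1, V1, B2, R2, B3, V2, B4.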


Results for Study 2

both net acquiescence (NARS; γ_NARS = .33, p < .001) and the instructional manipulation check (IMC; γ_IMC = .31, p < .001) were highly significant determinants of inconsistency bias;

the effect of NARS on inconsistency bias was invariant across item arrangement conditions, as expected;

the effect of IMC did not differ by item arrangement condition;

the manipulation of whether or not the first target item was reversed (FIR) did not affect responses (although in the first study the effect was significantly negative);

the effect of FIR did not differ by item arrangement condition;


Eye tracking of survey responding (with Weijters and Pieters)

eye-tracking data may provide more detailed insights into how respondents process survey questions and arrive at an answer;

eye movements can be recorded unobtrusively, and eye fixations show what respondents attend to while completing a survey;


Eye tracking study

101 respondents completed a Qualtrics survey and their eye movements were tracked; effective sample size is N = 90;

Design:

□ each participant completed 16 four-item scales shown in a random sequence;

□ the fourth (target) item on each screen was an RG, nRG, PO, or nPO item (4 scales each);


Areas of interest

[Figure: screen layout showing areas of interest AOI1a to AOI1e, AOI2a to AOI2d, AOI3a to AOI3d, AOI4a and AOI4b, and AOI5a and AOI5b]


| AOI | Mean fixation duration | Proportion of total fixation duration |
|---|---|---|
| aoi1 | 1.05 | 0.050 |
| aoi1a | 0.14 | 0.006 |
| aoi1b | 0.24 | 0.011 |
| aoi1c | 0.38 | 0.016 |
| aoi1d | 0.21 | 0.010 |
| aoi1e | 0.08 | 0.004 |
| aoi2 | 15.45 | 0.624 |
| aoi2a | 3.75 | 0.153 |
| aoi2b | 3.39 | 0.140 |
| aoi2c | 4.11 | 0.162 |
| aoi2d | 4.20 | 0.169 |
| aoi5a | 0.61 | 0.025 |
| aoi5b (for nRG and nPO) | 0.39 | 0.016 |
| aoi3 | 4.88 | 0.212 |
| aoi3a | 1.40 | 0.061 |
| aoi3b | 1.12 | 0.048 |
| aoi3c | 1.15 | 0.049 |
| aoi3d | 1.20 | 0.053 |
| aoi4 | 0.43 | 0.020 |
| Other areas | 2.20 | 0.099 |
| Total | 24.01 | 1.000 |

Fixation durations for various AOIs


Determinants of total fixation duration for fourth item (logaoi23dplus1)

Solutions for Fixed Effects

| Effect | Estimate | S.E. | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | 0.6203 | 0.1601 | 3.87 | 0.0015 |
| Survey completion time | 0.0013 | 0.0002 | 8.66 | <.0001 |
| Screen sequence | -0.0052 | 0.0020 | -2.65 | 0.0082 |
| Number of words | 0.0387 | 0.0112 | 3.46 | 0.0006 |
| Reversal of item | 0.0411 | 0.0185 | 2.23 | 0.0261 |
| Negation of item | 0.1249 | 0.0202 | 6.20 | <.0001 |
| Use of a PO in the item | 0.0605 | 0.0188 | 3.22 | 0.0013 |

Note: These results are based on a mixed model with respondent and construct as random effects.


Determinants of misresponse

Solutions for Fixed Effects

| Effect | Estimate | S.E. | t Value | Pr > \|t\| |
|---|---|---|---|---|
| Intercept | 0.6418 | 0.0850 | 7.55 | <.0001 |
| Fixation duration for item (logaoi23plus1) | 0.1394 | 0.0417 | 3.34 | 0.0009 |
| Reversal of item | 0.0383 | 0.0184 | 2.08 | 0.0380 |
| Negation of item | 0.0159 | 0.0185 | 0.86 | 0.3912 |
| Use of a PO in the item | 0.1197 | 0.0187 | 6.40 | <.0001 |

Note: These results are based on a mixed model with dist=gamma and construct as a random effect.


Item grouping and discriminant validity (Weijters, de Beuckelaer, and Baumgartner, forthcoming)

question whether items belonging to the same scale should be grouped or randomized:

□ grouped format is less cognitively demanding and often improves convergent validity;

□ random format may reduce demand effects, respondent satisficing, and carryover effects, as well as faking;

effect of item grouping on discriminant validity:

□ grouping of items enhances discriminant validity (Harrison and McLaughlin 1996);

□ item grouping may lead to discriminant validity even when there should be none;


Method

523 respondents from an online U.S. panel;

questionnaire contained the 8-item frugality scale of Lastovicka et al. (1999) and 32 filler items;

frugality scale presented in two random blocks of 4 items each, with the 32 filler items in between:

□ Condition 1: 1-2-3-4 vs. 5-6-7-8

□ Condition 2: 1-2-7-8 vs. 3-4-5-6

within blocks item order was randomized across respondents;
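A sketch of how one respondent's questionnaire order could be generated for either condition, with item order randomized within each block. The labels (FRUG1 to FRUG8, FILLER1 to FILLER32) are hypothetical, and the two blocks are kept in a fixed order because the slide does not say whether block order was also randomized.

```python
import random

# Frugality item numbers assigned to each block, as listed on the slide.
BLOCKS = {
    1: ([1, 2, 3, 4], [5, 6, 7, 8]),
    2: ([1, 2, 7, 8], [3, 4, 5, 6]),
}


def questionnaire_order(condition, fillers, rng):
    """Block 1 (shuffled) + fillers + block 2 (shuffled); labels are hypothetical."""
    first, second = BLOCKS[condition]
    block1 = rng.sample(first, k=len(first))  # random permutation within block 1
    block2 = rng.sample(second, k=len(second))  # random permutation within block 2
    return ([f"FRUG{i}" for i in block1] + list(fillers)
            + [f"FRUG{i}" for i in block2])


fillers = [f"FILLER{j}" for j in range(1, 33)]  # the 32 filler items
order = questionnaire_order(2, fillers, random.Random(7))
print(order[:4], order[-4:])
```

Passing a seeded `random.Random` per respondent keeps each generated order reproducible.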


Results

| Condition | Model type | Model | χ² | df | CFI | TLI | SRMR | RMSEA |
|---|---|---|---|---|---|---|---|---|
| Cond 1 | Two factors | Hypothesized model | 53.82 | 19 | .964 | .946 | .037 | .083 |
| | | Best of alternative permutations | 198.13 | 19 | .810 | .720 | .090 | .188 |
| | | Average of alternative permutations | 221.84 | 19 | .788 | .687 | .096 | .200 |
| | One factor | One-factor model | 234.04 | 20 | .776 | .686 | .095 | .200 |
| Cond 2 | Two factors | Hypothesized model | 43.67 | 19 | .961 | .943 | .040 | .071 |
| | | Best of alternative permutations | 88.24 | 19 | .880 | .823 | .069 | .120 |
| | | Average of alternative permutations | 129.93 | 19 | .825 | .743 | .076 | .151 |
| | One factor | One-factor model | 140.83 | 20 | .810 | .734 | .076 | .154 |
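The table compares each hypothesized two-factor model with "alternative permutations". Assuming (our reading, not stated on the slide) that an alternative permutation is any other assignment of the eight items to two factors of four items each, the candidate models can be enumerated as follows.

```python
from itertools import combinations


def two_factor_splits(items):
    """All distinct ways to assign the items to two factors of equal size."""
    items = sorted(items)
    splits = []
    for group in combinations(items, len(items) // 2):
        if items[0] in group:  # count the split {A, B} and {B, A} only once
            rest = tuple(i for i in items if i not in group)
            splits.append((group, rest))
    return splits


splits = two_factor_splits(range(1, 9))
print(len(splits))  # 35 = C(8, 4) / 2, i.e. 34 alternatives to each hypothesized split
```

Both hypothesized splits, (1, 2, 3, 4) vs. (5, 6, 7, 8) and (1, 2, 7, 8) vs. (3, 4, 5, 6), appear in this list.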


Results (cont’d)

[Figure: histogram of χ² values (x-axis, 40 to 240) against frequency (y-axis, 0 to 12), condition 1 vs. condition 2]


Results (cont’d)

| | | Condition 1 | Condition 2 |
|---|---|---|---|
| Factor 1 | Average Variance Extracted (AVE) | .54 | .53 |
| | Composite Reliability (CR) | .82 | .81 |
| Factor 2 | Average Variance Extracted (AVE) | .61 | .40 |
| | Composite Reliability (CR) | .86 | .71 |
| Factors 1 and 2 | Correlation | .61 | .61 |
| | Shared Variance (SV) | .38 | .38 |
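The indices in this table follow standard formulas for standardized loadings λ: AVE is the mean of λ², CR is (Σλ)² / ((Σλ)² + Σ(1 − λ²)), and shared variance is the squared factor correlation (.61² ≈ .37; the reported .38 presumably reflects an unrounded correlation). The sketch below applies the Fornell-Larcker comparison (each AVE should exceed the shared variance) to the tabled values; it is an illustration, not the authors' analysis code.

```python
def average_variance_extracted(loadings):
    """AVE: mean squared standardized loading."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR from standardized loadings, with error variance 1 - loading**2."""
    total = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error)


def fornell_larcker(ave1, ave2, shared_variance):
    """Discriminant validity is supported if each AVE exceeds the shared variance."""
    return ave1 > shared_variance and ave2 > shared_variance


# Values from the table: both conditions pass, condition 2 only narrowly (.40 vs. .38)
print(fornell_larcker(.54, .61, .38), fornell_larcker(.53, .40, .38))  # True True
```

Because both conditions pass, the criterion alone would not reveal that the factor split in condition 2 was induced by item grouping rather than by content.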


Extreme and midpoint responding as satisficing strategies in online surveys

(Weijters and Baumgartner)

when respondents minimize the amount of effort they invest in formulating responses to questionnaire items by selecting the first response that is deemed good enough, they are said to be satisficing; when respondents put in the cognitive resources required to arrive at an optimal response, they are optimizing (Krosnick 1991);

the effectiveness of procedural remedies to prevent or at least reduce satisficing (MacKenzie & Podsakoff 2012) is limited;

post hoc indices designed to identify satisficers often exhibit limited convergent validity and unambiguous cutoff values are often unavailable;


Satisficing in online surveys (cont’d)

online surveys are likely to contain data from respondents who are satisficing, but what will be the consequences?

we review satisficing and related measures that have been proposed in the literature and propose a new measure called OPTIM;

we investigate the effect of satisficing on two stylistic response tendencies (ERS and MRS) and we demonstrate that the direction of the relationship varies across individuals;


The concept of satisficing

the notion of satisficing is consistent with the view of people as cognitive misers (Fiske and Taylor 1991);

satisficing is conceptually similar to carelessness, inattentiveness, insufficient effort responding, and content-nonresponsive, content-independent, noncontingent, inconsistent, variable or random responding;

Krosnick (1991) argues that in weak forms of satisficing each of the four steps of the response process (comprehension, retrieval, judgment, response) might be compromised to some extent, whereas in strong forms of satisficing the second and third steps might be skipped entirely;


Measures of satisficing

| | Dedicated measures: special items or scales are included in the questionnaire to measure satisficing | No dedicated measures: satisficing is inferred from respondents’ answers to substantive questions |
|---|---|---|
| Direct measurement: satisficing is assessed directly by measuring respondents’ tendency to minimize time and effort when responding to a survey | Category 1: self-reported effort (e.g., I carefully read every survey item) | Category 2: response time |
| Indirect measurement: satisficing is assessed indirectly based on the presumed consequences of respondents’ attempts to minimize time and effort on the quality of responses | Category 3: quality of responses to special items or scales (e.g., bogus items, instructed response items) | Category 4: quality of responses to substantive questions (e.g., outlier analysis, lack of consistency of responses, excessive consistency of responses) |


Measures of satisficing (cont’d)

a single-category measure is unlikely to assess satisficing adequately;

direct measures of satisficing are desirable (esp. response time measures);

bogus items and IMCs have limitations;

response differentiation for unrelated items might be a good outcome-based measure;


A new measure of satisficing

optimizing as the time-intensive differentiation of responses to items that are homogeneous in form but heterogeneous in content:

OPTIM = log(TIME × DIFF)

survey duration (TIME):

□ input side of effort (indicator of the cognitive resources invested by a respondent);

□ time taken to complete the survey (in minutes), rescaled to a range of 0 to 10;

response differentiation (DIFF):

□ output side of effort (indicator of optimizing for heterogeneous items);

□ DIFF = (f1+1) × (f2+1) × (f3+1) × (f4+1) × (f5+1), rescaled to a range of 0 to 10;
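The formula can be sketched in Python. Several details are assumptions rather than the authors' specification: f1 to f5 are read as the number of items answered with each of five response categories, the log is natural, and "rescaled to a range of 0 to 10" is implemented as division by the sample maximum (a min-max rescaling would send the lowest-scoring respondent to 0 and make the log undefined).

```python
import math
from collections import Counter


def diff_raw(responses, categories=(1, 2, 3, 4, 5)):
    """DIFF before rescaling: product of (f_k + 1) over response categories."""
    counts = Counter(responses)
    product = 1
    for k in categories:
        product *= counts[k] + 1  # Counter returns 0 for unused categories
    return product


def rescale_0_10(values):
    """ASSUMPTION: divide by the sample maximum, giving scores in (0, 10]."""
    top = max(values)
    return [10 * v / top for v in values]


def optim(minutes, response_sets):
    """OPTIM = log(TIME * DIFF) per respondent; natural log assumed."""
    time_scores = rescale_0_10(minutes)
    diff_scores = rescale_0_10([diff_raw(r) for r in response_sets])
    return [math.log(t * d) for t, d in zip(time_scores, diff_scores)]


straightliner = [3] * 10                          # all midpoints
differentiator = [1, 1, 2, 2, 3, 3, 4, 4, 5, 5]   # spread evenly
print(diff_raw(straightliner), diff_raw(differentiator))  # 11 243
print(optim([10, 20], [straightliner, differentiator]))
```

The product form rewards using many categories: ten identical answers give DIFF = 11, while two answers in each of the five categories give 3⁵ = 243.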


ERS and MRS as satisficing strategies

previous research suggests that both ERS and MRS may be used as satisficing strategies (even though ERS and MRS tend to be negatively correlated), although the empirical findings have not been very consistent;

different respondents may use different satisficing strategies:

□ some respondents may simplify the rating task by only using the extreme scale positions (resulting in increased ERS);

□ others may refrain from thinking things through and taking sides (resulting in increased MRS);


Method

two online studies with Belgian (n = 320) and Dutch (n = 401) respondents;

dataset A contained 10 heterogeneous attitudinal items and dataset B Greenleaf’s (1992) ERS scale; these items were used to construct the ERS (number of extreme responses), MRS (number of midpoint responses), and DIFF measures;

survey duration was measured unobtrusively;

use of a multivariate Poisson regression mixture model of ERS and MRS on OPTIM;
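The two count variables that enter the Poisson models are straightforward to compute. A minimal sketch, assuming a 5-point scale with categories 1 to 5 (the `low`, `high`, and `midpoint` parameters can be changed for other formats); the multivariate Poisson regression mixture model itself is not reproduced here.

```python
def ers_count(responses, low=1, high=5):
    """ERS: number of responses in either extreme category."""
    return sum(1 for r in responses if r in (low, high))


def mrs_count(responses, midpoint=3):
    """MRS: number of midpoint responses."""
    return sum(1 for r in responses if r == midpoint)


answers = [5, 1, 3, 4, 5, 3, 2, 5, 1, 3]  # hypothetical respondent, 10 items
print(ers_count(answers), mrs_count(answers))  # 5 3
```

Both counts are bounded by the number of items, which is why count models such as the Poisson are a natural choice for them.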


Regression estimates by class

| Dataset | Class | DV | Intercept | B | SE | 90% CI |
|---|---|---|---|---|---|---|
| A | Class 1 (46.3%): Extreme responders | ERS | 2.64 | -.54 | .10 | [-.70, -.38] |
| | | MRS | -3.08 | 1.39 | .20 | [1.06, 1.72] |
| A | Class 2 (47.1%): Yea-sayers | ERS | -1.04 | .90 | .11 | [.72, 1.08] |
| | | MRS | -.53 | .53 | .11 | [.35, .71] |
| A | Class 3 (6.7%): Midpoint responders | ERS | -7.25 | 3.04 | .83 | [1.67, 4.41] |
| | | MRS | 2.39 | -.49 | .14 | [-.72, -.26] |
| B | Class 1 (43.7%): Midpoint responders | ERS | -1.73 | 1.13 | .12 | [.93, 1.33] |
| | | MRS | 2.59 | -.53 | .08 | [-.66, -.40] |
| B | Class 2 (35.7%): Yea-sayers | ERS | -.41 | .88 | .39 | [.24, 1.52] |
| | | MRS | .24 | .44 | .25 | [.03, .85] |
| B | Class 3 (20.6%): Extreme responders | ERS | 2.91 | -.48 | .25 | [-.89, -.07] |
| | | MRS | -.40 | .57 | .15 | [.32, .82] |


Discussion

OPTIM as an unobtrusive measure that integrates several aspects of optimizing/satisficing;

across two distinct samples, three satisficing segments emerged:

□ extreme responders

□ midpoint responders

□ acquiescent responders

OPTIM is useful if a continuous measure of satisficing is required, but it may be less useful as a screening device for careless responders;

Stylistic response tendencies over the course of a survey (Baumgartner and Weijters)

three perspectives on stylistic responding:

□ nonexistence of response styles (complete lack of consistency);

□ instability of response styles (local consistency);

□ stability of response styles (global consistency);

Weijters et al. (2010) showed that

□ the nonexistence of response styles was strongly contradicted by the empirical evidence for both extreme responding and acquiescent responding;

□ there was a strong stable component in the ratings; and

□ there was a weaker local component (as indicated by a small time-invariant autoregressive effect);


Unresolved questions

how do stylistic response tendencies evolve over the course of a questionnaire?

prior research has only considered the effect of stylistic responding on the covariance structure of items or sets of items and has ignored the mean structure;

are there individual differences in both the extent to which stylistic response tendencies occur across respondents and the manner in which stylistic response tendencies evolve over the course of a survey?

prior research has not emphasized heterogeneity in stylistic response tendencies across people;


Method

data from 523 online respondents;

each participant responded to a random selection of eight out of 16 possible four-item scales shown on eight consecutive screens in random order;

eight separate response style indices were computed for both (net) acquiescence response style or NARS (i.e., respondents’ tendency to express more agreement than disagreement) and extreme response style or ERS (i.e., respondents’ disproportionate use of more extreme response options);

the design guarantees that there is no systematic similarity in substantive content over the sequence of eight scales across respondents;


Results

| | Parameter | Estimate | SE | t | p | 95% confidence interval |
|---|---|---|---|---|---|---|
| Means | iERS | .918 | .019 | 49.03 | < .001 | [.881, .955] |
| | sERS | -.009 | .003 | -2.77 | .006 | [-.015, -.003] |
| | iNARS | 3.298 | .027 | 123.59 | < .001 | [3.246, 3.350] |
| | sNARS | -.019 | .005 | -3.77 | < .001 | [-.029, -.009] |
| Variances | iERS | .105 | .012 | 9.00 | < .001 | [.082, .128] |
| | sERS | .001 | .000 | 2.49 | .013 | [.000, .002] |
| | iNARS | .155 | .024 | 6.38 | < .001 | [.108, .203] |
| | sNARS | .001 | .001 | 1.30 | .195 | [-.001, .003] |
| Correlations | iERS with sERS | -.312 | .120 | -2.60 | .009 | [-.547, -.077] |
| | iERS with iNARS | .491 | .082 | 5.98 | < .001 | [.330, .651] |
| | iERS with sNARS | -.189 | .204 | -.93 | .352 | [-.588, .210] |
| | iNARS with sNARS | -.583 | .132 | -4.42 | < .001 | [-.842, -.325] |
| | sERS with iNARS | -.074 | .172 | -.43 | .669 | [-.411, .264] |
| | sERS with sNARS | .129 | .388 | .33 | .740 | [-.632, .889] |
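Reading iERS/iNARS as growth-curve intercepts and sERS/sNARS as linear slopes per screen (our interpretation of the labels, not stated on the slide), the implied mean trajectories follow directly. With screen 1 coded 0, NARS falls from 3.298 to 3.298 − 7 × .019 = 3.165 by screen 8, and ERS from .918 to .918 − 7 × .009 = .855. The ±1 SD slope lines use the square root of the rounded slope variance (.001), so they are only approximate.

```python
import math

# Means and slope variances from the table above (i = intercept, s = slope,
# an assumed reading of the labels).
INTERCEPT = {"NARS": 3.298, "ERS": 0.918}
SLOPE = {"NARS": -0.019, "ERS": -0.009}
SLOPE_VARIANCE = {"NARS": 0.001, "ERS": 0.001}


def implied_mean(style, screen, slope_sd=0.0):
    """Model-implied mean on screens 1..8, assuming screen 1 is coded 0.

    slope_sd shifts the slope by that many standard deviations, as in the
    "-1 SD slope" and "+1 SD slope" lines of the trajectory figure.
    """
    slope = SLOPE[style] + slope_sd * math.sqrt(SLOPE_VARIANCE[style])
    return INTERCEPT[style] + slope * (screen - 1)


for screen in (1, 8):
    print(screen, round(implied_mean("NARS", screen), 3),
          round(implied_mean("ERS", screen), 3))
```

At +1 SD the ERS slope becomes positive (about −.009 + .032), so some respondents become more extreme over the survey while the average respondent becomes less so.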


NARS and ERS trajectories

[Figure: NARS and ERS by questionnaire page (screen) number, 1 to 8; series shown: NARS, ERS, ERS at -1 SD slope, ERS at +1 SD slope]

Response distributions on the first and the last screen of the questionnaire


A comprehensive model of measurement error

$$y_{ijt} = \tau_{ijt} + \lambda_{ijt}\,\xi_{jt} + \delta_{ijt} + \varepsilon_{ijt}$$

$y_{ijt}$: a person’s observed score on the ith measure of construct j at time t

$\xi_{jt}$: a person’s unobserved score for construct j at time t

$\delta_{ijt}$: systematic error score

$\varepsilon_{ijt}$: random error score

$\lambda_{ijt}$: coefficient (factor loading) relating $y_{ijt}$ to $\xi_{jt}$

$\tau_{ijt}$: intercept term (additive bias)


Empirical data

we analyzed items from volumes 1 through 36 of JCR (1974 to the end of 2009) and volumes 1 through 46 of JMR (1964 to 2009);

we included all Likert-type scales for which the items making up the scale were reproduced in the article and factor loadings or item-total correlations were reported;

total of 66 articles in which information about 1330 items measuring 314 factors was provided;

of the 1330 distinct items in the data set, 608 came from JCR and 722 from JMR;


Item reversal (cont’d)

in our data set of 1330 items, between 83 and 86 percent of items were nonreversed (depending on the definition of reversal);

the proportion of factors (or subfactors in the case of multi-factor constructs) that do not contain reversed items was 70 percent;

only 8 percent of factors (out of 314 factors) were composed of an equal number of reversed and nonreversed items (i.e., the scale was balanced);


Cross-classification of negation and reversal

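Reversal relative to the polarity of the construct, the polarity of the first item, or the dominant keying direction:

| | Construct polarity: non-reversed | Construct polarity: reversed | First item: non-reversed | First item: reversed | Dominant keying: non-reversed | Dominant keying: balanced | Dominant keying: reversed | Total |
|---|---|---|---|---|---|---|---|---|
| No negation(s) | 71.1% | 8.7% | 71.0% | 8.7% | 70.3% | 5.2% | 4.2% | 79.7% |
| Negation(s) | 11.7% | 8.7% | 15.4% | 4.9% | 13.7% | 2.9% | 3.7% | 20.3% |
| Total | 82.8% | 17.4% | 86.4% | 13.6% | 84.0% | 8.1% | 7.9% | 100.0% |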


Theoretical explanations of MR: Reversal ambiguity and comprehension

Rs may not view antonyms as polar opposites [POMR];

contradictories vs. contraries:

□ Antonym reversals can be contradictories or contraries, depending on whether they are bounded or unbounded (Paradis and Willners 2006);

□ Negation reversals are contradictories if the core concept is the same; the situation is more complicated for the negation of bounded and unbounded antonyms;

simultaneous disagreement with contraries is more likely when items are worded extremely (McPherson and Mohr 2005);

“Buddhism’s ontology and epistemology appear to make East Asians relatively comfortable with apparent contradictions” (Wong et al. 2003, p. 86) [RMR];


Theoretical explanations of MR: Comprehension

Careless responding (Schmitt and Stults 1985):

□ respondents fail to pay careful attention to individual items and respond based on their overall position on an issue [RMR];

□ more likely when a reversed item is preceded by a block of nonreversed items;

□ Remedies: make Rs more attentive and/or explicitly alert them to the presence of reversed items; use balanced scales, alternate the keying direction, and disperse the items;


Theoretical explanations of MR: Comprehension (cont’d)

Reversal ambiguity: Rs may not view antonyms as polar opposites [POMR]; “Buddhism’s ontology and epistemology appear to make East Asians relatively comfortable with apparent contradictions” (Wong et al. 2003, p. 86) [RMR];

contradictories vs. contraries: negated statements are contradictories; antonyms can be contradictories or contraries, depending on whether they are bounded or unbounded (Paradis and Willners 2006);

simultaneous disagreement is more likely when items are worded extremely (McPherson and Mohr 2005);


Theoretical explanations of MR: Comprehension (cont’d)

□ Remedies: use more sophisticated procedures to identify appropriate antonyms (formulate linguistic contrasts in two stages; see Dickson and Albaum 1977); this may be particularly useful in cross-cultural research; bounded antonyms have to be pretested and unbounded antonyms have to be used with care; extreme statements should be avoided;


Theoretical explanations of MR: Retrieval

Item-wording effects:

□ Confirmation bias (Davies 2003; Kunda et al. 1993);

□ Directly applicable to antonymic reversals;

□ For negation reversals, confirmation bias can lead to MR if a non-negated polar opposite schema is readily available (Mayo et al. 2004);

□ Remedies: use polar opposite reversals to get richer belief samples, even though they may increase apparent MR; negation reversals have few retrieval benefits;


Theoretical explanations of MR: Retrieval

Positioning effects:

□ Dispersed PO items reduce carryover effects and can increase coverage, but the task is more taxing for Rs and internal consistency may suffer;

□ Item similarity may determine whether Rs engage in additional retrieval when items are grouped together;

□ Remedies: the use of dispersed antonyms should encourage the generation of distinct belief samples; avoid very similar (negated) statements when items are grouped;


Theoretical explanations of MR: Judgment

Item verification difficulty (Carpenter and Just 1975; Swain et al. 2008):

□ MR is a function of the complexity of verifying the truth or falsity of an item relative to one’s true beliefs, which depends on whether the item is stated as an affirmation or negation [NMR];

□ Remedies: negations are problematic because they increase the likelihood of making mistakes (remember there are many types of negations); negated polar opposites are most error-prone; a mix of regular and PO reversals should be best;


Item verification difficulty

[Figure: misresponse (MR) for affirmations and negations by truth value (true vs. false)]


Theoretical explanations of MR: Response

Acquiescence: Rs initially accept a statement and subsequently re-consider it based on extant evidence; the first stage is automatic, the second requires effort (Knowles and Condon 1999) [RMR];

Remedies:

□ Although response styles are largely individual difference variables, situational factors may be under the control of the researcher (e.g., reduce the cognitive load for Rs);

□ Problems with online surveys;


Theoretical explanations of MR: Response (cont’d)

Asymmetric scale interpretation: the midpoint of the rating scale may not be the boundary between agreement and disagreement for Rs (esp. if the response categories are not labeled; cf. Gannon and Ostrom 1996) [RMR];

Remedies:

□ Use fully labeled 5- or 7-point response scales;


Careless responding

respondents do not always pay attention to the instructions, the wording of the question, or the response options before answering survey questions because of satisficing;

respondents may form expectations about what is being measured and respond to individual items based on their overall position concerning the focal issue, rather than specific item content;

this can result in inconsistent responding to reverse-keyed items; esp. likely when constructs are labeled and when grouped-massed item positioning is used;

measurement:

□ instructional manipulation checks (IMC), bogus items, and self-report measures of response quality

□ response times

□ post hoc response consistency indices (too much or too little)


Examples of IMCs

Between 14% and 46% of respondents failed this test in Oppenheimer et al. (2009)


Examples of IMCs

About 7% of respondents (out of over 1000) failed this test (see Oppenheimer et al. 2009)


(Dis)Acquiescence

tendency to agree (ARS) or disagree (DARS) with items regardless of content (agreement or yea-saying vs. disagreement or nay-saying bias);

leads to response inconsistency for reversed items;

measurement:

□ simultaneous (dis)agreement with contradictory statements;

□ (dis)agreement with many heterogeneous items;

□ net acquiescence as the relative bias away from the midpoint;

different arrangements of the items in the questionnaire should have no differential effect on acquiescent responding;


Confirmation bias

when respondents answer a question, they tend to activate beliefs that are consistent with the way in which the item is stated (positive test strategy, inhibition of disconfirming evidence);

this leads to a bias in the direction in which the item is worded (e.g., Are you introverted? vs. Are you extraverted?) and differences in mean response;

the effect of the keying direction of the first item on confirmation bias should be strongest in the grouped-massed condition and weakest in the dispersed condition;


Example item with 4 negations

Top management in my company has let it be known in no uncertain terms that unethical behaviors will not be tolerated.
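A crude way to flag negations like these automatically is a keyword count. The sketch below is hypothetical: its word lists are a small sample taken from the six means of negation in the classification table, it ignores part of speech, and the affix pattern will misfire on words such as "under" or "discuss". It does, however, recover the four negations in the example item (no, uncertain, unethical, not).

```python
import re

# Hypothetical, deliberately small lexicon sampled from the classification table;
# it ignores part of speech and will both miss and over-count negations.
NEGATION_PATTERNS = {
    "particle": r"\bnot\b|n[’']t\b",
    "no": r"\bno\b(?!-)",  # "no", but not the "no" in "no-one"
    "affix": r"\b(?:un|dis)[a-z]+",
    "adjective/adverb": r"\b(?:never|rarely|seldom|hardly|less|little|few|reluctant|hesitant)\b",
    "pronoun": r"\b(?:nobody|no-one|none|nothing)\b",
    "preposition": r"\b(?:without|instead of|rather than|except for)\b",
}


def count_negations(item):
    """Count keyword matches per negation type in one survey item."""
    text = item.lower()
    return {label: len(re.findall(pattern, text))
            for label, pattern in NEGATION_PATTERNS.items()}


item = ("Top management in my company has let it be known in no uncertain terms "
        "that unethical behaviors will not be tolerated.")
print(count_negations(item))
print(sum(count_negations(item).values()))  # 4
```

A count like this is only a screening aid for spotting heavily negated items during scale development; it does not decide whether an item is reversed, which is a semantic question.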


Revised Life Orientation Test (LOT)

□ In uncertain times, I usually expect the best.

□ I’m always optimistic about my future.

□ Overall, I expect more good things to happen to me than bad.

□ If something can go wrong for me, it will.

□ I hardly ever expect things to go my way.

□ I rarely count on good things happening to me.
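Summative scoring of a balanced scale like this one requires recoding the reversed items before summing. A minimal sketch, assuming a 1-to-5 agreement scale (the response format is not shown on the slide) and treating the last three items as reverse-keyed, as their pessimistic wording suggests; two of them are reversed through negative adverbs (hardly, rarely).

```python
# Keying of the six LOT items in the order listed above;
# True marks the three pessimism (reverse-keyed) items.
REVERSE_KEYED = [False, False, False, True, True, True]


def reverse_score(x, low=1, high=5):
    """Mirror a rating on a low..high scale, e.g. 5 -> 1 and 2 -> 4 on 1..5."""
    return low + high - x


def lot_total(responses, low=1, high=5):
    """Summative score after recoding the reverse-keyed items."""
    if len(responses) != len(REVERSE_KEYED):
        raise ValueError("expected one response per LOT item")
    return sum(reverse_score(x, low, high) if rev else x
               for x, rev in zip(responses, REVERSE_KEYED))


print(lot_total([5, 5, 5, 1, 1, 1]))  # 30: consistent optimist
print(lot_total([5, 5, 5, 5, 5, 5]))  # 18: pure yea-saying lands at the scale midpoint
```

The second case shows why balanced scales are used: an acquiescent respondent who strongly agrees with everything ends up at the midpoint rather than at the optimistic extreme, although the inconsistency itself remains in the data.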


Weijters, Baumgartner, and Schillewaert (forthcoming)

models in which method effects are included generally yield a much better fit to the data than models in which only substantive factors are included;

it is often difficult to clearly distinguish between different method effect specifications on the basis of statistical criteria;

the psychological processes causing method effects are frequently left unspecified;

although method factors have been related to a variety of other psychological constructs, the choice of these other constructs often seems ad hoc;



Responses to bogus items (Meade and Craig, 2012)

| Item | % strongly disagree or disagree responses (1, 2) | % other responses (3 to 7) |
|---|---|---|
| I sleep less than one hour per night. | 90 | 10 |
| I do not understand a word of English. | 90 | 10 |
| I have never brushed my teeth. | 92 | 8 |
| I am paid biweekly by leprechauns. | 80 | 20 |
| All my friends are aliens. | 82 | 18 |
| All my friends say I would make a great poodle. | 73 | 27 |
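Screening on these items amounts to counting answers outside the two disagreement categories. A minimal sketch; the tolerance parameter `max_misses` is a hypothetical choice, since the slide reports response percentages rather than a cutoff.

```python
def fails_bogus_check(responses, max_misses=0):
    """Flag a respondent whose answers (1-7) to bogus items are not 1 or 2.

    Every bogus item above is absurd, so disagreement (1 or 2) is the only
    attentive answer; max_misses is a hypothetical tolerance, not a published cutoff.
    """
    misses = sum(1 for r in responses if r >= 3)
    return misses > max_misses


print(fails_bogus_check([1, 2, 1, 1, 2, 1]))  # False
print(fails_bogus_check([1, 2, 6, 1, 1, 1]))  # True
```

Allowing one miss (`max_misses=1`) trades a lower false-positive rate for letting some careless respondents through.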


Response options: Strongly Disagree (1), Disagree (2), Neither Agree nor Disagree (3), Agree (4), Strongly Agree (5)

I feel satisfied with the way my body looks right now.

I am satisfied with my weight.

I am pleased with my appearance right now.

I feel attractive.

I don’t feel attractive.

I feel ugly.

I don’t feel ugly.


Response options: Strongly Disagree (1), Disagree (2), Neither Agree nor Disagree (3), Agree (4), Strongly Agree (5)

A product is more valuable to me if it has some snob appeal.

The government should exercise more responsibility for regulating the advertising, sales and marketing activities of manufacturers.

Most retailers provide adequate service.

I feel attractive.

I don’t feel attractive.

I feel ugly.

I don’t feel ugly.