Protocol-level Reconfigurations for Autonomic Management of Distributed Network Services

K. Ravindran and M. Rabby

Department of Computer Science

City University of New York (City College)

16th April 2012

Organization of presentation

• Service model to accommodate application adaptations when network and environment change

• Protocol-level control of QoS provisioning for applications

• Dynamic protocol switching for adaptive network services

• Meta-level management model for protocol switching

• Case study of distributed network applications: (replica voting for adaptive QoS of information assurance)

• Open research issues

OUR BASIC MODEL OF SERVICE-ORIENTED NETWORKS

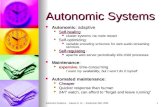

Adaptive distributed applications

Applications have the ability to:

• Determine the QoS received from system infrastructure

• Adjust their operational behavior by changing QoS expectations

[Figure: the application adjusts its QoS expectation toward the service-oriented protocol, which notifies the application of the QoS offering. The system infrastructure notifies the protocol of resource changes. The external environment brings the incidence of hostile conditions (e.g., airborne police networks, edge-managed Internet paths).]

Protocol P(S) exports only an interface behavior to client applications, hiding its internal operations on the infrastructure resources from clients

Service-oriented distributed protocols: run-time structure

[Figure: asynchronous processes p-1, p-2, p-3 implementing protocol P(S) exchange signaling messages and exercise resources {rA,rB,rC, . .} over a distributed realization of infrastructure ‘resources’. The application accesses service S{q-a,q-b, . .} through agents implementing the service interface for S, which map the protocol internal state onto the service interface state.]

{q-a, q-b, . .} : QoS parameter space --- e.g., content access latency in CDN

{rA, rB,rC, . .} : Resource control capabilities --- e.g., placement of mirror sites in a CDN

What is our granularity of network service composition?

PROTOCOL !!

A protocol exports only an interface behavior to client applications, hiding its internal operations on the infrastructure resources from clients

Examples:

1. ‘reliable data transfer’ service: TCP is the underlying protocol

2. ‘data fusion’ service: multi-sensor voting is the underlying protocol

3. ‘wide-area content distribution’: content push/pull across mirror sites is the underlying protocol

Given a network application, different types/variants of protocols are possible (they exercise network resources in different ways, while providing a given service)

A protocol good in one operating region of network may not be good in another region

“one size does not fit all”

choose an appropriate protocol based on the currently prevailing resource and environment conditions (dynamic protocol switching)

Management view of distributed protocol services

[Figure: a client application invokes service S(a) at the NETWORK SERVICE PROVIDER through a service interface (realized by agents). A service-level management module (SMM) holds reconfiguration policies and adaptation rules, performs protocol selection and QoS-to-resource mapping, and matches the QoS achieved (a’) with the desired QoS (a). Through service binding, the SMM invokes protocol P1(S) or P2(S), whose processes exercise the INFRASTRUCTURE RESOURCES with r=F(a,e) under a hostile external environment (e).]

a: desired QoS parameters

P1(S), P2(S) : Protocols capable of providing service S

pi1,pi2,pi3, . . : Distributed processes of protocol Pi(S), exercising the infrastructure resources --- i=1,2

Modeling of environment

QoS specs a, protocol parameters par, and network resource allocation R are usually controllable inputs

In contrast, environment parameters e ∈ E* are often uncontrollable and/or unobservable, but they do impact the service-level performance (e.g., component failures, network traffic fluctuations, etc)

environment parameter space: E* = E(yk) ∪ E(nk) ∪ E(ck)

E(yk): parameters that the designer knows about

E(nk): parameters that the designer does not currently know about

E(ck): parameters that the designer can never know about

Protocol-switching decisions face this uncertainty

What is the right protocol to offer a sustainable service assurance?

Service goals: Robustness against hostile environment conditions

Max. performance with currently available resources

These two goals often conflict with each other !!

A highly robust protocol is heavy-weight, because it makes pessimistic assumptions about the environment conditions: the protocol is geared to operate as if system failures are going to occur at any time, and is hence inefficient under normal cases of operations

A protocol that makes optimistic assumptions about environment conditions achieves good performance under normal cases, but is less robust to failures: the protocol operates as if failures will never occur, and is only geared to recover from a failure after-the-fact (so, recovery time may be unbounded)

Need both types of protocols, to meet the performance and robustness requirements

EXAMPLE APPLICATION 1: CONTENT DISTRIBUTION NETWORK

[Figure: layered view of a content distribution network. Application layer: latency spec, content publish-subscribe, adaptation logic. Service layer: adaptive algorithm for content push/pull to/from proxy nodes, behind the content access service interface. Network infrastructure: overlay tree as distribution topology, node/network resources, behind the infrastructure interface. Content server R sends updates of content pages p-a and p-b toward client 1, client 2, and client 3 through proxy-capable nodes and interconnections, with local access links to the clients. Nodes are either content push/pull-capable proxy nodes or content-forwarding proxy nodes. Agents 1, 2, and 3 act at the service interface, with a latency monitor. The environment contributes client traffic & mobility, content dynamics, . .]

U({x}): update message for pages {x}

[Figure: CDN control view. Clients c1, c2, c3 subscribe to pages (sub(Pa), sub(Pb)); server R issues content updates; the control logic exercises resources by pushing or pulling pa, pb across proxy nodes (q, u, v, w, x, y, z), subject to the environment (E*).]

L: latency specs to CDN system

L’: latency monitored as system output

Management-oriented control of CDN exercisable at three levels

Control dimensions:

• Application-level reporting & matching of QoS attributes (e.g., client-level latency adaptation, server-level content scaling)

• Adjusting parameters of content access protocols (e.g., proxy placement, choosing a push or pull protocol)

• Infrastructure resource adjustment (e.g., allocating more link bandwidth, increasing proxy storage capacity, increasing physical connectivity)

[Figure label: ‘Our study’ marks the scope of this work among these control dimensions.]

Client-driven update scheme (PULL protocol; time-stamps without server query)

[Figure: time-line among server S, proxy X(S), and client. The server advances a global time-stamp GTS (1, 2, 3) as the page changes; the proxy keeps a local time-stamp LTS for its copy of page p. While (LTS=1,GTS=1), each request(p) is answered with content(p) from the local copy. When the page changes, the server sends update_TS(p,2), giving (LTS=1,GTS=2). The next request(p) triggers get_page(p) and update_page(p), after which the updated local copy (LTS=2,GTS=2) serves content(p). Drawn for c >> s.]

c: client access rate; s: server update rate

Server-driven update scheme (PUSH protocol)

[Figure: time-line among server S, proxy X(S), and client. Every page change at the server triggers update_page(p) to the proxy, and each request(p) is answered with content(p) from the local copy. Drawn for c << s, with many update_page(p) messages between successive requests.]

CDN service provider goals

• Minimal service in the presence of resource depletions (say, fewer proxy nodes due to link congestion)

• Max. revenue margin under normal operating conditions

The server-driven protocol (PUSH) and the client-driven protocol (PULL) differ in their underlying premise about how current a page content p is when a client accesses p

PUSH is heavy-weight (due to its pessimistic assumptions): it operates as if client-level accesses on p are going to occur at any time, and hence is inefficient when c << s

PULL is light-weight (due to its optimistic assumptions): it operates as if p is always up-to-date, and hence incurs low overhead under normal cases, i.e., c >> s
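A rough way to see why PUSH becomes wasteful when c << s is a back-of-the-envelope transfer count. The sketch below is an illustrative model only, not the simulation behind these slides. It assumes that PUSH ships one full page per page change, and that PULL ships one small update_TS notice per page change plus one get_page request and one page transfer on the first read after a change. The function names and the relative control-message cost `ctl` are assumptions.

```python
def push_cost_per_read(c: float, s: float) -> float:
    """Page transfers per client read under PUSH.

    Assumed model: every page change (rate s) pushes one full page,
    whether or not a client reads it; reads arrive at rate c.
    """
    return s / c


def pull_cost_per_read(c: float, s: float, ctl: float = 0.001) -> float:
    """Page-transfer equivalents per client read under PULL.

    Assumed model: each page change sends one update_TS notice (cost ctl),
    and a stale copy is fetched at most once per change and at most once
    per read (one get_page request of cost ctl plus one page transfer).
    """
    fetches = min(c, s)
    return (ctl * s + (1.0 + ctl) * fetches) / c


if __name__ == "__main__":
    for c, s in [(100.0, 1.0), (1.0, 100.0)]:
        print(c, s, push_cost_per_read(c, s), pull_cost_per_read(c, s))
```

Under these assumptions the two schemes cost nearly the same when c >> s, while PUSH grows with s/c when c << s and PULL stays close to one page transfer per read.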

[Figure: simulation results comparing push and pull, plotted against the ratio of c and s (x-axis ticks from 0.0 to 0.1): (i) normalized message overhead per read (0.0 to 2.5); (ii) latency incurred per read in msec (0 to 700).]

Content distribution topology (simulated): server R sends content updates through content distributing nodes and content forwarding nodes to groups of clients, which issue read requests

content size: 2 Mbytes

link bandwidths: set between 2 Mbps and 10 Mbps

Situational-context based proxy protocol control

[Figure: control loop. A parametric description of client workloads & QoS specs [L,O] feeds a Controller, which forms an error from the QoS specs and the observed QoS. The Controller places proxies V” ⊆ V’ to reduce [tree T(V’,E’) ⊆ G(V,E), A], using optimal methods for “facility placement” (greedy, evolutionary, . .), and performs task planning & scheduling, mapping task events (based on combined client request arrival specs) to resources at proxy nodes. A context & situational assessment module supplies the set of nodes & interconnects [G(V,E), costs, policy/rules, . .] and takes in client demographics, cloud leases, QoE, node/link status, . . A CDN simulator (plug-in of CDN model) receives request arrivals from clients i, j, k (different content size/type), is subject to node/link outages and traffic bursts, returns state feedback (node/link usage), and supports model-based estimation of overhead/latency.]

[Figure: panels (a) and (b) plot the normalized cost-measure (overhead), 0 to 2.5, against the percentage of nodes used as content distributing proxies (5%, 10%, 20%, 30%), for A: greedy and A: genetic.]

Base topology (from network map of US carriers): |V’|: 280 nodes; 226 client clusters

Average # of hops traversed by a client request: 4

A: optimization algorithm employed for computing proxy placement

EXAMPLE APPLICATION 2: MULTI-SENSOR DATA FUSION

[Figure: layered view. Application layer: sensor devices, data end-user. Service layer: replica voting protocol (fault detection, asynchrony control), behind the data delivery service interface (data integrity & availability). Infrastructure layer: maintenance of device replicas (device heterogeneity, message security).]

Fault-tolerance in sensor data collection

N: degree of replication

fm: max. # of devices that are assumed as vulnerable to failure (1 ≤ fm < N/2)

fa: # of devices that actually fail (0 ≤ fa ≤ fm)

[Figure: replica voting apparatus. Voters 1 to N hold data d-1 to d-N, raw data collected from external world sensors (e.g., radar units). A voter proposes data to the vote collator over a message-transport network; the other voters return YES/NO (consent/dissent) votes, and voter 3 is marked as faulty. The vote collator delivers data (say, d-2, later) to the USER.]

QoS-oriented spec: data miss rate, i.e., how often TTC exceeds the timeliness constraint on data

TTC: observed time-to-deliver data

[Figure: three layers, from top to bottom: data fusion application, voting service, infrastructure. The voting service is realized by the modified 2-phase commit protocol (M2PC).]

environment (E*): device attacks/faults, network message loss, device asynchrony, . .

Control dimensions for replica voting

Protocol-oriented:

1. How many devices to involve

2. How long the messages are

3. How long to wait before asking for votes . .

QoS-oriented:

1. How much information quality to attain

2. How much energy in the wireless voting devices . .

System-oriented:

1. How good the devices are (e.g., fault-severity)

2. How accurate and resource-intensive the algorithms are . .

A voting scenario under faulty behavior of data collection devices

devices = {v1,v2,v3,v4,v5,v6}; faulty devices = {v3,v5}; fa=2; fm=2

[Figure: time-line of one voting round from START to delivery of good data from the buffer, with time-points A, B, C and the TTC (time-to-complete voting round) marked. Annotations in the figure:

• Attempts: attempt 1 (data ready at v6 but not at v2,v4); attempt 2 (data ready at v2,v4 as well); attempt 3

• Buffer writes: good data written in buffer by v6; bad data written in buffer by v3; good data written in buffer by v1

• Vote outcomes: “v2,v4 dissent; v6,v5 consent; omission failure at v3”; “v1,v2,v4,v6 dissent, v5 consents”; “v3,v5 dissent; v1,v2,v4 consent”

• Fault behavior: random behavior of v3 and v5; collusion-type of failure by v3 and v5 to deliver bad data; collusion-type of failure by v3 and v5 to prevent delivery of good data

• Had v3 also consented, good data delivery would have occurred at time-point A

• Had v3 proposed a good data, correct data delivery would have occurred at time-point B]

message overhead (MSG): [3 data, 14 control] messages

K: # of voting iterations (4 in this scenario)

Malicious collusions among faulty devices:

• Lead to an increase in TTC (and hence reduce data availability, i.e., 1 minus the data miss rate)

• Incur a higher MSG (and hence expend more network bandwidth B)

Observations on M2PC scenario

• Large # of control message exchanges: worst-case overhead = (2fm+1).N [too high when N is large, as in sensor networks]

Not desirable in wireless network settings, since excessive message transmissions incur a heavy drain on battery power of voter terminals

In the earlier scenario of N=6 and fa=2, # of YES messages = 7, # of NO messages = 12

• Integrity of data delivery is guaranteed even under severe failures (i.e., a bad data is never delivered)

Need solutions that reduce the number of control messages generated
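To make the growth of the worst-case bound concrete, it can be evaluated directly. The helper name below is hypothetical; the formula (2fm+1).N is the one quoted on the slide, and the range check reads the earlier constraint on fm as 1 ≤ fm < N/2, which is an assumption about the lost comparison symbol.

```python
def m2pc_worst_case_control_messages(n: int, fm: int) -> int:
    """Worst-case control message count (2*fm + 1) * N for M2PC.

    n:  degree of replication (number of voters)
    fm: assumed maximum number of faulty devices
    """
    if not (1 <= fm < n / 2):
        raise ValueError("expected 1 <= fm < N/2")
    return (2 * fm + 1) * n


if __name__ == "__main__":
    # The earlier scenario: N=6, fm=2
    print(m2pc_worst_case_control_messages(6, 2))
    # A larger, sensor-network-like setting (illustrative values)
    print(m2pc_worst_case_control_messages(100, 10))
```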

Solution 1: Selective solicitation of votes

Poll only fm voters at a time (B specifies the voter list as a bit-map)

Sample scenario for M2PC: N=5, fm=1 (need YES from 2 voters, including the proposer); actual # of faulty voters: fa=1

[Figure: two time-lines among B and voters v1 to v5, with data d proposed by v2 and one faulty voter holding d’; TTC is marked on each. ALLV: B issues vote(d,{1,3,4,5}) and receives Y, Y, Y, N before delivering d to the end-user; the extra votes are marked “wasteful messages !!”. SELV: B issues vote(d,{4}) and receives N, then issues vote(d,{3}) and receives Y, and delivers d to the end-user.]

ALLV protocol (pessimistic scheme) expends 5 messages total, K=1 iteration

SELV protocol (optimistic scheme) expends 4 messages total, K=2 iterations
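The voter list carried in a vote request can be sketched as a bit-map, with selective solicitation polling fm voters per iteration. This is a minimal illustration, assuming voters are numbered from 1 and that bit (i-1) stands for voter i; the function names and the encoding are assumptions, not the slides' wire format.

```python
from typing import Iterable, List


def voter_bitmap(voters: Iterable[int]) -> int:
    """Encode a set of voter ids (1-based) as an integer bit-map."""
    bits = 0
    for v in voters:
        if v < 1:
            raise ValueError("voter ids are assumed to start at 1")
        bits |= 1 << (v - 1)
    return bits


def poll_batches(candidates: List[int], fm: int) -> List[List[int]]:
    """Split the candidate voters into batches of fm, polled one batch per iteration."""
    if fm < 1:
        raise ValueError("fm must be at least 1")
    return [candidates[i:i + fm] for i in range(0, len(candidates), fm)]


if __name__ == "__main__":
    # ALLV-style request to every voter other than proposer v2
    print(format(voter_bitmap({1, 3, 4, 5}), "05b"))
    # SELV-style polling with fm=1, in an assumed polling order
    for batch in poll_batches([4, 3, 1, 5], 1):
        print(batch, format(voter_bitmap(batch), "05b"))
```

In a real run the polling would stop as soon as enough YES votes arrive, so later batches are often never sent.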

Analytical results

K: mean number of voting iterations per round

N=9: K (ALLV) = 8.0; K (SELV) = 1.25, 3.11, 5.70, 9.16 for fm = 1, 2, 3, 4

N=8: K (ALLV) = 7.0; K (SELV) = 1.29, 3.29, 6.20 for fm = 1, 2, 3

N=7: K (ALLV) = 6.0; K (SELV) = 1.33, 3.53, 6.95 for fm = 1, 2, 3

N=6: K (ALLV) = 5.0; K (SELV) = 1.40, 3.90 for fm = 1, 2

N=5: K (ALLV) = 4.0; K (SELV) = 1.50, 4.50 for fm = 1, 2

Solution 2: Employ implicit forms of vote inference

Implicit Consent Explicit Dissent (IC-M2PC) mode of voting: NO NEWS IS GOOD NEWS !!

A voter consents by keeping quiet; dissents by sending NO message (in earlier scenario, saving of 7 YES messages)

IC-M2PC mode lowers control message overhead significantly when:

• the spread of the data generation times Tp is small, i.e., many voters generate data at around the same time Tp

• fm « N/2, i.e., only a very few voters are bad (but we don’t know who they are !!)

worst-case control message overhead: O(fm.N^c) for 0 < c < 1.0, where c depends on choice of vote solicitation time

Protocol-level performance and correctness issues

Under strenuous failure conditions, the basic form of IC-M2PC entails safety risks (i.e., possibility of delivering incorrect data)

Normal-case performance is meaningless unless the protocols are augmented to handle correctness problems that may occasionally occur !!

[Figure: the same vote request VOT_RQ(d’) under the two modes, with voter 1 (good, holding d1), voter 2 (good, holding d2), and voter 3 (bad, proposing d’). M2PC mode (reference protocol): the buffer manager receives NO, NO, and YES, and decides to not deliver d’ to user. IC-M2PC mode: the NO messages from the good voters do not reach the buffer manager within the 2.Tnet wait, so it decides to deliver d’ to user, a ‘safety’ violation !!]

Tnet: maximum message transfer delay

optimistic protocol (i.e., ‘NO NEWS IS GOOD NEWS’)

• very efficient, when message loss is small, delays have low variance, and fm << N/2 --- as in normal cases

• need voting history checks after every M rounds before actual data delivery, where M > 1

IC-M2PC mode: message overhead O(N.fm/M); TTC is somewhat high

M2PC mode: message overhead O(N^2); TTC is low

Dealing with message loss in IC-M2PC mode

How to handle sustained message loss that prevents voter dissents from reaching the vote collator??

• Make tentative decisions on commit, based on the implicitly perceived consenting votes

• Use aggregated `voting history’ of voters for last M rounds to sanitize results before final commit (M > 1)

1. If voting history (obtained as a bit-map) does not match with the implicitly perceived voting profile of voters, B suspects a persistent message loss and hence switches to the M2PC mode

2. When YES/NO messages start getting received without a persistent loss, B switches back to IC-M2PC mode

Batched delivery of M “good” results to user

“bad” result never gets delivered (integrity goal)
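The history check can be sketched as a comparison between the dissents that B actually heard and the dissents the voters later report. This is a minimal sketch under stated assumptions: the inputs cover only rounds that B tentatively accepted, a round with any mismatch is discarded, and persistent loss is suspected once the number of mismatched rounds reaches a threshold. The threshold, the data layout, and the function name are assumptions; the slides do not specify them.

```python
from typing import Dict, List, Set, Tuple


def sanitize_batch(
    perceived_no: List[Set[str]],
    history: Dict[str, List[bool]],
    persistent_threshold: int,
) -> Tuple[List[int], bool]:
    """Check the last M tentatively accepted rounds against voter histories.

    perceived_no[r]: voters whose NO reached B in round r
    history[v][r]:   True if voter v reports that it dissented in round r
    Returns (indices of rounds safe to deliver, whether to switch to M2PC).
    """
    deliverable: List[int] = []
    mismatched = 0
    for r, heard in enumerate(perceived_no):
        reported = {v for v, votes in history.items() if votes[r]}
        if reported == heard:
            deliverable.append(r)   # B's implicit view matched the history
        else:
            mismatched += 1         # at least one dissent never reached B
    return deliverable, mismatched >= persistent_threshold


if __name__ == "__main__":
    # Four rounds; the dissent of V-x in the second round was lost.
    heard = [set(), set(), set(), set()]
    hist = {
        "V-x": [False, True, False, False],
        "V-y": [False, False, False, False],
        "V-z": [False, False, False, False],
    }
    print(sanitize_batch(heard, hist, persistent_threshold=2))
```

With one mismatched round out of four the batch is treated as sporadic loss: three results are delivered and the mode is kept.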

“history vector” based sanitization of results

[Figure: time-line among voters V-x, V-y (faulty), V-z and the buffer manager; d-i is the result tentatively decided in round i under ICED mode. With M=4, rounds 1 to 4 run in IC-M2PC mode; in round 2 (say) the dissent from V-x was lost. The history vectors shown are [y,n,y,y], [y,n,y,y], [*,y,n,n] (y: YES; n: NO; *: voter was unaware of voting, due to message loss). The check delivers d1, d3, d4 and discards d2 (sporadic message loss). With M=2, rounds 5 and 6 run in IC-M2PC mode with the dissents from V-x and V-z lost; the history vectors [n,n], [n,n] lead to discarding d5 and d6 (suspect persistent message loss) and to switching modes. Rounds 7 onward run in M2PC mode, during which consent and dissent messages are not lost (so, message loss rate has reduced). The figure also marks incorrect decisions and an omission failure (X).]

Non-delivery of data in a round, such as d2, is compensated by data deliveries in subsequent rounds (`liveness’ of voting algorithm in real-time contexts)

Control actions during voting

M2PC mode:
    if (num_YES ≥ fm)
        deliver data from tbuf to user

IC-M2PC mode:
    upon timeout 2T since start of current voting iteration
        if (num_NO < N-fm)
            optimistically treat data in tbuf as (tentatively) deliverable
        if (# of rounds completed so far = M)
            invoke “history vector”-based check for last M rounds

Both M2PC and IC-M2PC:
    if (num_NO ≥ N-fm)
        discard data in tbuf
        if (# of iterations completed so far < 2fm)
            proceed to next iteration
        else
            declare a ‘data miss’ in current round

num_YES/NO: # of voters from which YES/NO responses are received for data in proposal buffer tbuf
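The control actions above can be sketched as a single decision function. This is a hedged reading rather than the authors' implementation: the comparison operators that are missing in the transcript are assumed to be "greater than or equal", the history-vector check is left out, and the `Action` names and the `decide` signature are illustrative.

```python
from enum import Enum


class Action(Enum):
    DELIVER = "deliver data from tbuf to user"
    TENTATIVE = "treat data in tbuf as tentatively deliverable"
    NEXT_ITERATION = "discard tbuf and proceed to next iteration"
    DATA_MISS = "discard tbuf and declare a data miss"
    WAIT = "keep waiting for votes"


def decide(mode: str, num_yes: int, num_no: int, n: int, fm: int,
           iterations_done: int, timed_out: bool = False) -> Action:
    """One control decision of the buffer manager for the data in tbuf.

    mode is "M2PC" or "IC-M2PC"; timed_out means the 2T wait of the
    current voting iteration has expired (used in IC-M2PC mode only).
    """
    # Both modes: enough dissent to reject the proposal (assumed >=).
    if num_no >= n - fm:
        if iterations_done < 2 * fm:
            return Action.NEXT_ITERATION
        return Action.DATA_MISS
    # M2PC: explicit consent from enough voters (assumed >=).
    if mode == "M2PC" and num_yes >= fm:
        return Action.DELIVER
    # IC-M2PC: silence within the timeout counts as consent.
    if mode == "IC-M2PC" and timed_out:
        return Action.TENTATIVE
    return Action.WAIT


if __name__ == "__main__":
    print(decide("M2PC", num_yes=1, num_no=0, n=5, fm=1, iterations_done=0))
    print(decide("IC-M2PC", 0, 0, 5, 1, 0, timed_out=True))
```

A tentative result in IC-M2PC mode would still have to pass the history-vector check after M rounds before it reaches the user.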

Experimental study to compare M2PC and IC-M2PC

[Figure: three rows of plots comparing IC-M2PC and M2PC against fm = 1, 2, 3, 4, in three columns for network loss l=0%, l=2%, and l=4%: TTC (in msec); DAT overhead (# of data proposals); CNTRL overhead (votes, data/vote requests, etc).]

fm: assumed # of faulty devices

data size = 30 kbytes

control message size = 50 bytes

N=10; # of YES votes needed = 6; (Tp)=50 msec; (Tp)=50 msec

[Figure: Packet Loss vs Zeta for different fm [fm=1,2,3,4, M=5, r=1.0]. Data miss rate at end-user level (Zeta, 0.0 to 0.25) against message loss rate l (0.0 to 0.09), one curve per fm.]

Analytical results of IC-M2PC from probabilistic estimates: to keep Zeta < 2%, fm=1-3 requires l < 4%; fm=4 requires l < 1.75%.

N=10, Q=5, (Tp)=50 msec, Tw=125 msec (Q: # of YES votes awaited in IC-M2PC mode)

Sample switching between M2PC and IC-M2PC modes

[Figure: time series over 0 to 1203 seconds. Upper trace: message loss rate in network (0% to 10%), with changes in network state between the 2% and 6% levels and a period of sustained attacks. Lower trace: number of messages (0 to 30), annotated with the protocol mode in force, alternating between IMPLICIT mode and EXPLICIT mode.]

Situational-context based replica voting control

[Figure: control loop around the replica voting protocol (voters v1, v2, . ., vN and B). The user observes the data miss rate, and the data delivery rate is taken as 1 minus the data miss rate. A controller compares this system output with the requirement of the IA application and sets B & N. A voting QoS manager chooses [fault-severity, IC-M2PC/M2PC], supported by a situation assessment module with scripts & rules from the protocol designer, and by a global application manager that accounts for the QoS of other applications. Inputs to the protocol include external environment parameters (fm) and data-oriented parameters (size, . .); the environment E* acts on the protocol. SI: system inputs.]

Establishes the mapping of agent-observed parameter onto infrastructure-internal parameters l and fa

fa: actual # of failed devices (we assume that fa=fm)

OUR MANAGEMENT MODEL FOR AUTONOMIC PROTOCOL SWITCHING

‘resource cost’ based view of protocol behavior

MACROSCOPIC VIEW

[Figure: normalized cost incurred by protocol against external event e, for protocol p1(S(a)) with r = F_p1(a,e) and protocol p2(S(a)) with r = F_p2(a,e). The e-axis splits into a region where protocol p1 is good and a region where protocol p2 is good.]

e.g., ‘reliable data transfer’ service: e is the packet loss rate in network (‘go-back-N’ protocol is better at lower packet loss rate; ‘selective repeat’ protocol is better at higher packet loss rate)

F_p(a,e): policy function embodied in protocol p to support QoS a for service S

higher value of e: more hostile environment

a’: actual QoS achieved with resource allocation r (a’ ≤ a)

Observations:

• Resource allocation r = F(a,e) increases monotonically and convexly w.r.t. e

• The cost function of a’ is based on resource allocation r under environment condition e [assume cost(a’) = k.r for k > 0]
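The macroscopic view suggests a simple selection rule: evaluate each candidate protocol's policy function at the current (a, e) and pick the cheapest. The sketch below assumes that rule; the two cost curves are hypothetical convex functions chosen only so that they cross, and they are not measurements of go-back-N or selective repeat.

```python
from typing import Callable, Dict

# r = F_p(a, e): resource allocation needed by protocol p for QoS a under e
PolicyFn = Callable[[float, float], float]


def select_protocol(policies: Dict[str, PolicyFn], a: float, e: float) -> str:
    """Return the protocol whose projected resource allocation is lowest."""
    return min(policies, key=lambda name: policies[name](a, e))


# Hypothetical, monotonically increasing convex curves in e (illustration only).
example_policies: Dict[str, PolicyFn] = {
    "go-back-N": lambda a, e: a * (1.0 + 40.0 * e * e),
    "selective repeat": lambda a, e: a * (1.2 + 10.0 * e * e),
}

if __name__ == "__main__":
    for loss in (0.01, 0.05, 0.10, 0.20):
        print(loss, select_protocol(example_policies, a=1.0, e=loss))
```

A practical switcher would also add hysteresis around the crossover point, so that small fluctuations in e do not cause repeated reconfiguration.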

penalty measure for “service degradation”

[Figure: two curves against the service-level QoS enforced, a’, with Amin, Areq, and Amax marked on the axis: the utility value of network service u(a’), between 0.0 and 1.0, whose shortfall is the penalty measured as user-level dissatisfaction; and the normalized cost incurred by protocol for a’, shown for environment conditions e.]

user displeasure due to the actual QoS a’ being lower than the desired QoS a

infrastructure resource cost for providing service-level QoS a’ [r=F(a’,e)]

higher value of a: better QoS

Degree of service (un)availability is also modeled as a cost

net penalty assigned to service = k1.[1-u(a,a’)] + k2.cost(a’) for k1, k2 > 0
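The net penalty is a weighted sum of user displeasure and resource cost, which can be written as a small helper. The function name and argument layout are illustrative; the utility value u(a,a’) and the resource cost of a’ are passed in as numbers because the slides do not fix their functional forms.

```python
def net_penalty(u: float, cost: float, k1: float, k2: float) -> float:
    """Net penalty k1*(1 - u) + k2*cost.

    u:    utility u(a, a') of the achieved QoS, expected in [0, 1]
    cost: resource cost of providing a' (for example k*r)
    k1, k2: positive weights
    """
    if not 0.0 <= u <= 1.0:
        raise ValueError("utility must lie in [0, 1]")
    if k1 <= 0 or k2 <= 0:
        raise ValueError("k1 and k2 must be positive")
    return k1 * (1.0 - u) + k2 * cost


if __name__ == "__main__":
    # Full satisfaction, so only the resource cost contributes.
    print(net_penalty(u=1.0, cost=2.0, k1=1.0, k2=0.5))
    # Degraded QoS at no resource cost, so only displeasure contributes.
    print(net_penalty(u=0.5, cost=0.0, k1=2.0, k2=1.0))
```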

Optimal QoS control problem

Consider N applications (some of them mission-critical), sharing an infrastructure-level resource R with split allocations r1, r2, . . . , rN

Minimize:

\[ k_1 \sum_{i=1}^{N} \left[ 1 - u_i(a_i, a'_i) \right] + k_2 R \]

The first term sums the displeasure of the i-th application due to QoS degradation; the second term is the total resource costs (split across N applications)

subject to:

\[ \sum_{i=1}^{N} r_i \le R, \qquad u_i(a_i, a'_i) \ge U_i^{(\min)} \]

a_i: desired QoS for i-th application

a'_i: QoS achieved for i-th application with a resource allocation r_i
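For a handful of applications and integer resource units, the allocation problem can be explored by exhaustive search. The sketch below makes several assumptions that the slides do not state: utility grows linearly with the allocation until a per-application need is met, the resource cost is the total number of units allocated, and a hypothetical `capacity` bounds that total. All names are illustrative.

```python
from itertools import product
from typing import List, Optional, Tuple


def best_allocation(needs: List[int], u_min: List[float], capacity: int,
                    k1: float, k2: float) -> Optional[Tuple[float, Tuple[int, ...]]]:
    """Brute-force the split (r_1..r_N) that minimizes the total penalty.

    needs[i]: units at which application i reaches full utility (assumed model)
    u_min[i]: minimum acceptable utility for application i
    Returns (penalty, allocation), or None if no split is feasible.
    """
    best: Optional[Tuple[float, Tuple[int, ...]]] = None
    for alloc in product(range(capacity + 1), repeat=len(needs)):
        total = sum(alloc)
        if total > capacity:
            continue                      # resource constraint violated
        utils = [min(1.0, r / need) for r, need in zip(alloc, needs)]
        if any(u < lo for u, lo in zip(utils, u_min)):
            continue                      # minimum-utility constraint violated
        penalty = k1 * sum(1.0 - u for u in utils) + k2 * total
        if best is None or penalty < best[0]:
            best = (penalty, alloc)
    return best


if __name__ == "__main__":
    # Application 0 is mission-critical (needs full utility); application 1 may degrade.
    print(best_allocation(needs=[2, 4], u_min=[1.0, 0.5], capacity=5, k1=1.0, k2=0.1))
```

The search is exponential in N, so it only serves to illustrate the trade-off; a real management module would need a scalable optimizer.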

Policy-based realizations in our management model

• ‘Outsourced’ implementation: Network application requests policy-level decision from management module (say, a business marketing executive, or a military commander may be a part of management module)

• User-interactive implementation: Application-level user interacts with management module to load and/or modify policy functions

Design issues in supporting our management model

• Prescription of ‘cost relations’ to estimate projected resource costs of various candidate protocols

• Development of ‘protocol stubs’ that map the internal states of a protocol onto the service-level QoS parameters

• Strategies to decide on protocol selection to provide a network service

• Engineering analysis of protocol adaptation/reconfiguration overheads and ‘control-theoretic’ stability during service provisioning (i.e., QoS jitter at end-users)

• QoS and security considerations, wireless vs wired networks, etc