Preface - Open Repository arizona.openrepository.com/arizona/bitstream/10150/624917/1/azu... ·...


Taking it Home: Assessing Melodic Expectancies in 14-month Old Infants

Item Type text; Electronic Thesis

Authors Bosch, Sarah Marie

Publisher The University of Arizona.

Rights Copyright © is held by the author. Digital access to this material is made possible by the University Libraries, University of Arizona. Further transmission, reproduction or presentation (such as public display or performance) of protected items is prohibited except with permission of the author.

Download date 16/07/2018 21:15:16

Link to Item http://hdl.handle.net/10150/624917


Preface

The motivation for this research was born from my simultaneous love for music, language, and brilliant babies. Having studied the flute for 12 years and language development for the last 3, I knew for a while that I wanted to combine these interests in my honors thesis. Language and music are so wonderfully connected that it’s hard not to be curious about the similarities in their development. As one of my favorite conductors told me, music is like language in that phrases of both are full thoughts, not isolated notes or letters. The relationship between a specific pair of notes common to many musical phrases forms the backbone of this thesis.

Abstract

The purpose of this study was to explore the associative learning of statistically frequent, hierarchically derived melodic sequences in 14-month-old infants, with the ultimate goal of comparing infant musical and linguistic knowledge. Using the Head-Turn Preference Procedure (Nelson et al., 1995), we assessed infant listening preferences for Western Tonal melodies with expected endings and Western Tonal melodies with unexpected endings, specifically asking whether the infant participants would be able to discriminate the two melodic sets. Successful discrimination would indicate knowledge of the statistically frequent, expected nature of the expected-ending melodies. After comparing mean listening times for the two sets of stimuli, we found no significant difference, which would imply that, at 14 months, infants do not yet expect the statistically frequent endings of our melodies. However, female and male participants demonstrated longer listening times for different stimuli, which may indicate that 14-month-old infants can discriminate expected and unexpected melodies, but that the two genders possess opposing listening preferences. More participants would be needed to further assess this inference.

Introduction

One of the longstanding debates in cognitive science concerns theories of knowledge acquisition; specifically, cognitive scientists question whether understanding of regularities or patterns in a domain (like language) is attained through general or specific perceptual and learning mechanisms. Proponents of domain-general learning argue that a core set of cognitive mechanisms can be used to represent multiple types of information and learn a variety of skills (such as botany, language, or music), and that knowledge in each of these domains influences knowledge in others (Saffran & Thiessen, 2009). In contrast, proponents of domain-specific learning believe that individual modules are devoted to learning individual skills: language, face recognition, or mathematical reasoning, for example (Fodor, 1985; Hirschfeld & Gelman, 1994). While this proposed dichotomy is arguably a false division of the potential explanations of human cognition (Sternberg, 1989), understanding in what ways cognition is specific or general is a worthwhile objective. One recent approach to this goal has been the increased exploration of similarities and differences in the perception, representation, processing, and development of two similar cognitive domains: language and music.

Existing findings from behavioral studies support both the generality and the specificity of processing mechanisms in the domains of language and music. For example, there have been several reported cases of individuals with amusia – a disorder leading to difficulty with musical pitch processing, memory, and recognition – who also have difficulties with voice recognition, suggesting that similar mechanisms may be used to interpret and remember inputs to these domains (Trehub & Hannon, 2006). Proponents of domain specificity, however, point to instances of impairment in one of these domains alongside normal abilities in the other: for example, there are documented cases of individuals with severe aphasia who maintained the ability to compose melodies (Luria, Tsvetkova & Futer, 1965).

Again, cognition in the musical and linguistic domains is undoubtedly more nuanced than a choice between skills acquired through completely specific modules and skills acquired through entirely general mechanisms. For this reason, much current research is directed at untangling exactly which aspects of musical and linguistic processing are independent and which are shared, with the ultimate aim of understanding how the brain simultaneously supports such complicated, rich, and species-specific processes.

The current study investigates the role of statistical frequencies in generating musical expectations. Much is known about the importance of statistical frequencies and associative learning in language acquisition; therefore, the goal of this research was to determine whether statistically frequent events also drive learning in the musical domain. Evidence that they do would provide further support for the existence of certain domain-general learning mechanisms. Before discussing this in more detail, however, it is important to review some of the findings on how language and music are similar.

How are language and music similar?

Music and language are two cognitive functions unique to the human species, and both are present in all cultures (Trehub, 2001). Additionally, the development of both music and language seems to have an environmental component: though all cultures possess forms of language and music, specific structures differ between cultures (Cross, 2001; Hannon & Trainor, 2007). As a linguistic example, tone is used contrastively in Mandarin Chinese (e.g. má means ‘hemp’, and mà means ‘scold’), but not in English (e.g. cát and càt both mean ‘cat’). Musically, Chinese melodies frequently incorporate pentatonic (wusheng) scales (five notes per octave), while one of the most fundamental features of Western Tonal music is the major scale (seven notes per octave) (Lu-Ting & Kuo-huang, 1982). (It should be noted that, though the pentatonic scale is certainly deeply rooted in Chinese music, it is not the only existing or important scale.) Further, language and music both use hierarchically organized units (words and pitches) to construct larger phrases (sentences and melodies) (Patel, 2003). It can be argued that the hierarchical phrases in both domains are used to communicate ideas between individuals, be they explicitly defined sentences or the symbolic ideas, sounds, and “texts” in a piece of programmatic music (Karel Husa’s Music for Prague 1968 effectively demonstrates this idea) (Wade, 2004).

Another similarity between music and spoken language lies in their basic auditory properties: both melodies and speech consist of rising and falling sequences of pitches, and these contribute to the overall contour of the auditory stimulus. Contour in speech often conveys the implicit meaning of a sentence (whether it is a question or a command, for example), while contour in a melody is a fundamental feature of its sound (Nooteboom, 1997). Melodic contour often reflects the structural rules of a particular musical tradition. (More on common structural regularities of Western Tonal music, as well as how they might subserve melodic prediction, will be discussed in later sections.)

In addition to these auditory similarities, it has also been shown that certain aspects of language and music processing may rely on similar neural circuitry. Patel, Gibson, Ratner, Besson, & Holcomb (1998) demonstrated that the P600 event-related potential elicited by linguistic syntactic incongruities (e.g. “The child throw the toy”) is also evoked by syntactic incongruities in music (based on uncommon key and harmony features). This suggests that there might be similarities in syntactic processing across the two domains. From these data, Patel (2003) proposed the Shared Syntactic Integration Resource (SSIR) Hypothesis, which claims that a shared syntactic processing network (whose anatomical location is still uncertain) brings mental representations of both melodic and linguistic elements to the activation level necessary to integrate them into the preceding context. The more unexpected a tone or word is, the greater the activation of this network (Patel, 2003). A subsequent behavioral study supported this hypothesis by demonstrating that the time needed to read and understand a garden path sentence (a sentence whose syntax is particularly difficult to process, such as “The old man the boat.”) increases significantly when a harmonically unexpected chord progression is played during reading (Slevc, Rosenberg, & Patel, 2009). Overlap in the brain regions managing musical and linguistic syntactic processing could account for this effect.

The SSIR Hypothesis provides one example of how domain-general mechanisms might be used to extract information from musical and linguistic stimuli. This hypothesis also supports the idea that there are similarities in predictive processing in these two domains, as this type of processing is dependent upon the ability to understand and integrate input so that it can inform future expectations.

How do we study prediction in these domains?

In cognition, predictive processing is the integration of past and present information into conscious expectations for future events (Bubic, Von Cramon, & Schubotz, 2010). Recently, the predictive brain has become a topic of increasing interest, as the ability to predict the outcomes of a situation (based on learning drawn from prior experiences) is undoubtedly useful when navigating the challenges that life poses. Additionally, knowledge and skill in a particular domain, such as language or music, are often assessed through predictive capabilities (Bubic, Von Cramon, & Schubotz, 2010).

Traditionally, linguistic prediction has been studied with the linguistic cloze task. The purpose of this task is to illustrate how individual linguistic structures and combinations of structures contribute to a participant’s prediction of a missing linguistic element; different constructions can constrain the prediction of a specific element to varying degrees (Taylor, 1953). For example, a sentence such as “I needed to buy some milk, so I went to the ____” strongly constrains the syntactic, semantic, and lexical properties of the missing word “store.” However, a sentence such as “I went to my room to feed my pet ____” is less specific in its lexical constraint; the syntactic and semantic properties of a singular animal are certainly expected, but the specific animal referenced is less certain. Importantly, linguistic cloze tasks can vary syntactic relationships or semantic features to assess the weight that each contributes to the prediction of a missing word (Taylor, 1953).

Linguistic prediction has been studied in infants using the Head-Turn Preference Procedure, which allows researchers to infer implicit expectations from infant listening preferences (Nelson et al., 1995). For example, Kedar, Casasola, & Lust (2006) examined English-learning infants’ ability to use a common determiner (“the”) to form expectations about what follows in a sentence. After hearing sentences with an appropriately used “the” preceding a target noun, infants oriented visually to the correct corresponding image significantly more quickly than when an inappropriate or nonsense determiner was used. This demonstrates that 18-month-olds are able to use common English determiners to shape their expectations for the linguistic element that follows (Kedar, Casasola, & Lust, 2006). Infant linguistic expectation has also been observed in research on rule formation and listening preferences. A study by Figueroa and Gerken (in preparation) focuses on implicit knowledge of the English past-tense “-ed” rule in 16-month-old, English-acquiring infants. By assessing which types of past-tense verbs infants prefer to listen to in groups of sentences (correct, irregular past-tense verbs [e.g. threw], or incorrect, over-regularized past-tense verbs [e.g. throwed]), these researchers are inferring which rule for past-tense formation infants have learned (which informs what they predict and prefer to hear in sentences) (Figueroa & Gerken, 2016).

Until recently, musical prediction tasks have not been directly comparable to the linguistic cloze task. Certain studies have investigated the degree of relatedness participants perceive between provided continuations and melodic stems, but this methodology assesses expectations post hoc instead of capturing real-time predictions (Schmuckler, 1989). Studies that have asked participants to produce their expectations (by singing or playing) either focus specifically on musically trained individuals (Schmuckler, 1989) or provide shorter stems, only two to four preceding tones, that are less analogous to sentence fragments (Carlsen, 1981; Povel, 1996; Schellenberg, Adachi, Purdy, & McKinnon, 2002). While these studies do assess rudimentary musical expectations (which will be discussed in the following section), the broader factors that contribute to expectation in music (such as melodic and harmonic contour, rhythm, and tempo) are not well captured by sequences as short as these (Rohrmeier & Koelsch, 2012). Additionally, the oversimplified structure of the traditional “musical cloze task” makes it difficult to compare its results to those of the linguistic cloze task, because fewer melodic manipulations are available. Given the previously mentioned similarities between music and language, particularly their predicted overlap in syntactic processing, developing a methodology to test prediction comparably in the two domains became a prudent goal.

Researchers at Tufts University developed their version of the melodic cloze task in response to this need. By constructing longer melodies (5-9 notes in length) that followed either more or less predictable progressions of Western Tonal music, they created a database of musical “sentences” that can be used to study musical prediction in a manner comparable to the linguistic cloze task (Fogel, Rosenberg, Lehman, Kuperberg, & Patel, 2015).

How do adults form musical expectations?

While not everyone is a trained musician, most American adults have some level of sustained exposure to music, particularly music with features common to the Western Tonal tradition, a major influence on the melodic and harmonic structures of popular music.

In tonal music, pitches exist in hierarchical relationships, with certain pitches perceived as more or less stable than others; the most stable pitch in a melody is referred to as the tonic (Laitz, 2008). The stability of another note relative to the tonic is associated with the consonance of the harmony between that note and the tonic; consonant harmonies emerge when the ratio between the two notes’ frequencies can be expressed with small integers (Bain, 2003). Melodies often move toward ending on these stable pitches (Laitz, 2008).
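To make the “small integers” idea concrete, the following Python sketch rescales idealized frequency ratios to the smallest whole-number proportion. It assumes the textbook just-intonation values (5:4 for a major third, 3:2 for a perfect fifth, 45:32 for a tritone); equal-tempered instruments only approximate these, and the function name `whole_number_proportion` is hypothetical.

```python
from fractions import Fraction
from math import gcd, lcm

# Assumption for illustration: idealized just-intonation ratios of each
# interval's upper note to its lower note (equal temperament only approximates them).
JUST_RATIOS = {
    "unison": Fraction(1, 1),
    "major third": Fraction(5, 4),
    "perfect fifth": Fraction(3, 2),
    "tritone": Fraction(45, 32),
}


def whole_number_proportion(*ratios: Fraction) -> list[int]:
    """Rescale frequency ratios (relative to a shared lower note) to the smallest whole numbers."""
    common = lcm(*(r.denominator for r in ratios))  # clear every denominator
    ints = [int(r * common) for r in ratios]
    divisor = gcd(*ints)                              # remove any shared factor
    return [i // divisor for i in ints]


major_triad = [JUST_RATIOS["unison"], JUST_RATIOS["major third"], JUST_RATIOS["perfect fifth"]]
print(whole_number_proportion(*major_triad))                                   # [4, 5, 6]
print(whole_number_proportion(JUST_RATIOS["unison"], JUST_RATIOS["tritone"]))  # [32, 45]
```

Under these assumptions the consonant major triad reduces to 4:5:6, whereas the dissonant tritone needs the much larger 32:45.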

In Western tonal music, one of the most salient hierarchical relationships is the major scale, an ordered set of seven pitches – beginning on the tonic – related by a constant pattern of half and whole steps. Major scale patterns are so fundamental to Western tonal music that the major triad (a harmony created by the first, third, and fifth notes of the scale) has been referred to as the “prototype of tonal structure” (Trehub, Cohen, Thorpe, & Morrongiello, 1986). Further, the final note sequence of a completed major scale (scale degree 7 [^7] to scale degree 1 [^1], or “leading tone” to “tonic”) serves as a foundation for melodic completeness, as evidenced by its presence in authentic cadences. An authentic cadence, one of the most common progressions used to conclude a tonal piece of music, is a progression in which the penultimate and final chords contain ^7 and ^1, respectively (Wade, 2004). The tonic is often considered the ultimate goal of tonal melodies, and the fundamental (even referred to as “axiomatic”) ^7→^1 progression is one of the most frequent ways music achieves this goal (Laitz, 2008; Moore, 1995). (Because the overarching objective of our study was to assess expectations for statistically frequent contingencies in music, we ultimately focused on this progression.)
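The whole- and half-step pattern of the major scale can be sketched in a few lines of Python. This is an illustrative sketch that assumes pitches are encoded as MIDI note numbers (one unit per semitone, middle C = 60); the function names are hypothetical. It shows that ^7 sits one semitone below the upper ^1, the half-step proximity that gives the leading tone its name.

```python
# Assumption: pitches are MIDI note numbers, so one unit = one semitone.
# Step sizes of the major scale: whole, whole, half, whole, whole, whole, half.
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]


def major_scale(tonic: int) -> list[int]:
    """MIDI note numbers from ^1 up through the ^1 an octave higher (8 notes)."""
    notes = [tonic]
    for step in MAJOR_STEPS:
        notes.append(notes[-1] + step)
    return notes


def scale_degree(tonic: int, degree: int) -> int:
    """MIDI note number of scale degree 1-7 in the octave that starts on the tonic."""
    if not 1 <= degree <= 7:
        raise ValueError("degree must be between 1 and 7")
    return major_scale(tonic)[degree - 1]


c_major = major_scale(60)                         # tonic C4 (middle C) = MIDI 60
print(c_major)                                    # [60, 62, 64, 65, 67, 69, 71, 72]
leading_tone = scale_degree(60, 7)                # 71, i.e. B
print(c_major[-1] - leading_tone)                 # 1: ^7 sits a half step below the next ^1
print([scale_degree(60, d) for d in (1, 3, 5)])   # [60, 64, 67]: the C major triad
```

In the same encoding, ^6 of C major (the “deceptive” ending used in the present study) is `scale_degree(60, 6)`, MIDI 69 (A).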

Many of the harmonic and melodic preferences that adults have can be related to both this progression and the overall structure of the major scale. For example, adults have a perceptual preference for scale patterns with unequal steps (i.e. scales in which successive notes are not all separated by the same interval), which could derive from innate sensitivities of the human auditory system, as unequal-step scales are found in most musical contexts and equal-step scales are exceedingly rare (Trehub, Schellenberg, & Kamenetsky, 1999). The major scale is an unequal-step scale. Additionally, most adults indicate a preference for consonant harmonies (harmonies in which the frequencies of simultaneous pitches stand in a ratio of small integers), and the major triad is the prototypical exemplar of a consonant harmony (Hannon & Trainor, 2007; Tillmann, Poulin-Charronnat, & Bigand, 2014). Finally, adult listeners also prefer melodies that resolve dissonance (like that created by ^7 in the penultimate chord of an authentic cadence) with consonance (the final, major, ^1-containing chord of an authentic cadence) (Tillmann, Poulin-Charronnat, & Bigand, 2014). Movement from dissonance to consonance creates a pleasing psychological experience of released tension that is common in Western Tonal music (Tillmann, Poulin-Charronnat, & Bigand, 2014).
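The equal- versus unequal-step distinction can be checked mechanically by listing a scale’s successive step sizes. The Python sketch below measures steps in cents (100 cents per equal-tempered semitone); the whole-tone scale is included only as a familiar example of an equal-step scale and is not claimed to be a stimulus from the cited studies. Function names are hypothetical.

```python
# Successive step sizes in cents (100 cents = one equal-tempered semitone).
SCALE_STEPS = {
    "major": [200, 200, 100, 200, 200, 200, 100],
    # Illustrative equal-step example only; not necessarily a scale used in the cited studies.
    "whole-tone": [200, 200, 200, 200, 200, 200],
}


def spans_octave(steps: list[int]) -> bool:
    """True if the steps add up to exactly one octave (1200 cents)."""
    return sum(steps) == 1200


def is_equal_step(steps: list[int]) -> bool:
    """True if every step in the scale has the same size."""
    return len(set(steps)) == 1


for name, steps in SCALE_STEPS.items():
    kind = "equal-step" if is_equal_step(steps) else "unequal-step"
    print(f"{name}: {kind}, octave={spans_octave(steps)}")
# major: unequal-step, octave=True
# whole-tone: equal-step, octave=True
```

Both scales fill the same octave; what distinguishes the major scale is the mixture of 200- and 100-cent steps.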

This preference for progression from dissonance to consonance has been formalized and expanded in the Implication-Realization (IR) Model of Melodic Expectancy, which makes the general claim that Western Tonal listeners expect implicative (unfinished, open-sounding) notes in a melody to be followed by realized (finished, closed-sounding) notes. More specifically, listeners expect these realized conclusions to be close in pitch to the implicative note that precedes them (Schellenberg, 1997; Schellenberg, Adachi, Purdy, & McKinnon, 2002). While several factors can contribute to implicative and realized qualities, one of the most intuitive is stability in the musical key: less stable notes (such as ^7) are perceived as implicative, and more stable notes (such as ^1) are perceived as realized (Schellenberg, Adachi, Purdy, & McKinnon, 2002).

These preferences and expectations imply that adults exposed to Western Tonal music should have some form of expectation for the structure of a major scale (and melodies based on it), and past research has confirmed that adults do form these expectations. Adults with little musical training can consciously detect tuning discrepancies in melodies based on the major scale with high accuracy, but they cannot do the same for less common melodic contexts (Lynch & Eilers, 1992; Trainor & Trehub, 1994). As musical training increases, adults also become better able to correctly identify more complicated out-of-harmony changes to melodies (Trainor & Trehub, 1994).

In their melodic cloze task, Fogel and colleagues (2015) further probed these preferences and expectations in musically trained and untrained participants by asking them to predict the conclusions to 48 unfinished melodies. (This research is the basis of the current study.) In the study, after hearing an opening melodic sequence, participants sang the note they thought should come next. The melodic stimuli varied in how strongly the introductory phrase constrained the final note; phrases that strongly constrained the final note were referred to as “authentic cadence” (AC) melodies, and less constraining phrases were referred to as “non-cadence” (NC) melodies. Highly constraining melodies (which use implicative relationships that many adults already understand implicitly, as stated above) led to the most consistent note predictions among participants, regardless of musical training.

While adult musical preferences and processing are certainly important to consider (studying the development of musical cognition would be challenging without its adult endpoint in mind), the purpose of this research is more closely related to infant processing of frequent constructs. For that reason, I’ll now turn to a discussion of what we know young listeners understand.

What do we know about infant musical knowledge?

Even though they have less exposure to music than adults, infants are not musical novices. The amount of passive contact with music that infants receive in their everyday environments cannot be ignored: television shows and advertisements, radio programming, and even parent-produced lullabies are all examples of the common and frequent contact that infants have with music containing features of the Western Tonal tradition (Trehub & Trainor, 1998). Experimental research with infants has also demonstrated that their musical knowledge and expectations are far from negligible; in fact, infants can process patterns and perceive music in many of the ways that adults do (Trehub & Hannon, 2006).

Importantly, it is clear that infants develop musical expectations (just as they develop linguistic expectations) through prolonged exposure, which ultimately allows them to detect the musical structure of their particular environment. For example, before 5 months of age, infants do not treat the notes of the major scale as relative to each other, but rather as absolute pitches. This means that they cannot recognize a melody if it is played in a different key than the one in which they originally heard it. As early as 5 months, however, infants demonstrate an understanding of relative pitch by recognizing melodies they have heard before, even when these are transposed to new keys (Chang & Trehub, 1977). This awareness of relative pitch develops rather quickly into awareness of context-relevant hierarchical relationships: between 6 and 12 months of age, infant perception of music appears to become “reorganized” to be sensitive to the common musical structures of the environment. In the Western Tonal tradition, this reorganization favors the specific unequal-step patterns of the major scale (for which adults are known to have a preference). At 6 months, infants are able to detect mistunings in melodies based on major and augmented scales equally well. Both of these scales use unequal steps, but augmented scales are rarely used in popular music (Lynch & Eilers, 1992). At 9 months, infants are still able to detect mistunings in melodies based on familiar (major) and unfamiliar unequal-step scales, but they are unable to detect mistunings in a melody based on an equal-step scale (Trehub et al., 1999). By 12 months of age, however, infants are only able to detect mistunings in melodies based on unequal-step major scales (Lynch & Eilers, 1992). This narrowing in perceptual sensitivity demonstrates the relevance of environmentally frequent events in music cognition.
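The contrast between absolute-pitch and relative-pitch coding described above can be illustrated by representing a melody as MIDI note numbers and comparing either the notes themselves or the intervals between successive notes. This is a toy model under that encoding assumption, not a claim about how infants actually represent melodies; the function names are hypothetical, and the melody is the familiar opening of Twinkle, Twinkle, Little Star.

```python
# Toy model: melodies as MIDI note numbers (assumption), one unit = one semitone.


def intervals(melody: list[int]) -> list[int]:
    """Semitone distance from each note to the next (a relative-pitch code)."""
    return [b - a for a, b in zip(melody, melody[1:])]


def transpose(melody: list[int], semitones: int) -> list[int]:
    """Shift every note by the same amount, i.e. play the melody in another key."""
    return [note + semitones for note in melody]


# Opening of "Twinkle, Twinkle, Little Star" in C major (C C G G A A G).
twinkle_c = [60, 60, 67, 67, 69, 69, 67]
twinkle_d = transpose(twinkle_c, 2)                   # the same tune in D major

print(twinkle_c == twinkle_d)                         # False: absolute-pitch code sees two tunes
print(intervals(twinkle_c) == intervals(twinkle_d))   # True: relative-pitch code sees one tune
print(intervals(twinkle_c))                           # [0, 7, 0, 2, 0, -2]
```

A listener relying only on absolute pitch would treat `twinkle_d` as unfamiliar, whereas one relying on intervals would recognize it, which is the pattern attributed to 5-month-olds above.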

This early perceptual sensitivity to the tones of major scales informs later conscious preferences. By the age of five years, children can explicitly judge whether entire melodies are pleasant-sounding. When presented with major melodies containing either no change, an out-of-key change, or an out-of-harmony change to one of the notes, five-year-olds consistently prefer the no-change melodies (Corrigall & Trainor, 2014). At this age, there is not yet an explicit distaste for out-of-harmony changes, as this level of conscious melodic sensitivity takes many years to develop (Trainor & Trehub, 1994); however, electroencephalography data demonstrate that disruptions in key or harmony increase processing demands in 4-year-olds, suggesting that younger children might implicitly understand the irregularity of these structural changes (Corrigall & Trainor, 2014). By third grade, a specific preference for melodies ending on tones of the tonic major triad arises, mirroring the adult preference for consonant harmonies and demonstrating again that environmental exposure informs preferences and expectations (Krumhansl & Keil, 1982).

Because implicit knowledge of more complex melodic structures has been demonstrated before 5 years of age, and sensitivity to major-scale patterns has been demonstrated by 12 months, we selected 14 months as the target age of our study. Known perceptual capabilities make this a reasonable starting point for assessing whether infants form expectations for statistically frequent, major-scale progressions (and whether they can implicitly detect the disruption of these progressions in novel melodies). With that in mind, it is now important to address explicitly how infants use the statistics of their environment to learn about the stimuli they are exposed to.

How do infants use statistics to make generalizations and learn from their environment?

One of the strategies infants employ to extract patterns, and eventually meaning, from a stimulus-rich environment is the tracking of transitional probabilities between individual stimuli (in other words, they determine how likely it is that stimulus x is followed by stimulus y in a particular domain). Infants then compute the relative strengths of these probabilities and use them to guide their expectations for future stimulus sequences (Saffran, Aslin, & Newport, 1996). Formally, this is referred to as Associative Learning. In this type of learning, associations between sequential stimuli are strengthened as the learner accumulates evidence confirming a particular association. The use of Associative Learning strategies by infants has been demonstrated in both linguistic and tonal segmentation tasks (Gerken, 2009).
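The transitional probability described above is commonly written as the frequency of the pair xy divided by the frequency of x (the formulation used by Saffran, Aslin, & Newport, 1996; the notation here is illustrative). For example, if syllable x occurs 100 times and is followed by y on 75 of those occasions:

```latex
\[
  TP(y \mid x) = \frac{\operatorname{freq}(xy)}{\operatorname{freq}(x)},
  \qquad
  TP(y \mid x) = \frac{75}{100} = 0.75
\]
```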

Evidence that infants implicitly compute something like transitional probabilities in language comes from studies of word segmentation: the process of extracting single words from the continuous streams of speech that constitute language input (Gerken, 2009). In natural speech, word boundaries are often not marked by breaks (or silences) in the acoustic stimulus; in fact, there are sometimes longer pauses in the middle of words than between words (Ohala, 2017). This forces language learners to rely on other cues to determine where a word begins and ends. Multiple studies (e.g. Aslin, Saffran, & Newport, 1998; Saffran, Aslin, & Newport, 1996) have indicated that infants as young as 8 months old are able to use the statistical frequencies between sequential syllables to determine word boundaries. That is, infants recognize that if two syllables frequently occur next to each other, they are likely part of the same word when heard sequentially in a speech stream. Conversely, if two syllables are rarely heard next to each other, they likely span a word boundary when heard sequentially in a speech stream.

Subsequent research on infant perception of tone sequences demonstrated that this statistical segmentation strategy is not limited to linguistic input. In their 1999 study, Saffran, Johnson, Aslin, & Newport showed that 8-month-old infants are able to learn “tone words” (each tone word being a sequence of three pitches) from a continuous stream of pitches whose boundaries were marked only by transitional probabilities. This suggests that the infant participants used the statistics of sequential tone relationships to assign the correct boundaries to the stimuli. While segmenting tone words is a relatively low-level analysis of musical stimuli (infants are simply marking units, not forming rules for any sort of hierarchical relationships or dependencies), these results are important in demonstrating that statistical learning mechanisms can be applied to the musical domain.

This set of word and tone segmentation research suggests that statistical inferences can be used to extract low-level meaning from two different types of auditory stimuli. An important question remains, though: can infants use transitional probabilities embedded in everyday exemplars of more complex (and hierarchically derived) rules to form expectations for the occurrence of those rules? The previously mentioned research on past-tense over-regularization in 16-month-olds suggests that high-frequency exposure to the addition of ‘-ed’ to verbs (to mark the past tense) has allowed infants to associate this rule with all verbs, even those that require an irregular past-tense form (Figueroa & Gerken, 2016). In the musical domain, learning of major scale relationships and of pitch sequences based on a familiar melody (Twinkle, Twinkle, Little Star) has been demonstrated and can be reasonably linked to high-frequency exposure (Lynch & Eilers, 1992; Trehub, Schellenberg, & Kamenetsky, 1999). However, no work has yet explored infants’ ability to apply statistically frequent musical progressions to expectations for more complex novel stimuli, which provided further motivation for this study.

The Present Study

The purpose of the present study was twofold: to determine whether 14-month-olds form statistical associations that they can use to inform melodic expectations in novel stimuli, and to further assess whether Associative Learning is a domain-general learning mechanism for more complex, hierarchically derived rules. We specifically asked whether infants are able to learn the statistically frequent ^7→^1 progression (from everyday musical exposure) and use that association to inform their musical expectations, similar to how they are able to compute frequencies in language.

Using twelve of the most highly constraining stimuli from the research of Fogel, Rosenberg, Lehman, Kuperberg, & Patel (2015), we created two sets of melodies: melodies that end on the composed tonic (as would be expected from leading-tone progressions in Western Tonal music), and melodies that end on the “deceptive” sixth scale degree. Using the Head-Turn Preference Procedure, we aimed to determine whether infant participants had specific listening preferences for these stimuli. Importantly, a significantly different average listening time between the stimulus sets would indicate two things: discrimination of the stimuli, and heightened interest in the stimulus that is listened to for longer. Based on the frequent nature of the ^7→^1 progression and infant knowledge of major scales, we hypothesized that 14-month-olds have associative knowledge of ^7→^1 and are able to form expectations for these endings. Consequently, we predicted that their listening times for the two stimulus sets would differ significantly. (Discrimination of the stimuli would imply that infants notice the change between them that disrupts their expectations.) We could not, however, predict the direction of the difference in listening time. Because the expected melodies are thought to be more aesthetically pleasing from the standpoint of Western Tonal music, it was possible that infants would prefer them. In contrast, the unexpected melodies are surprising, and infants could have been more interested in what is new.


Methods

Participants

Sixteen infants (7 females and 9 males) between the ages of 13.6 and 14.9 months (with

an average age of 14.1 months) were included in the data analysis of this study. All of the infants

were born at gestational ages of at least 37 weeks and with a birth weight of 5.5 pounds or

greater. No participants were at risk for a language delay. Eight participants had significant

foreign language exposure (FLE); foreign languages they were exposed to included Spanish,

German, and Dutch. Data from four additional infants were excluded because of fussiness, parental interference, or technical difficulties.

Auditory Stimuli

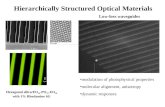

For this study, we used 12 of the most highly-constraining melodic stems from the

research of Fogel and colleagues (2015) as the auditory stimuli (composed by Jason C.

Rosenberg; Figure 1). The cloze probability of these melodies, that is, the proportion of adult participants who predicted the correct final note, ranged from 0.76 to 1. (These high cloze probabilities reflect strong agreement about what the final note should be, which led us to infer that these melodies would be the most accessible to infants.)
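Because cloze probability is simply a proportion, it can be computed directly from a list of responses. The sketch below is illustrative only: it assumes each adult's prediction is recorded as a note name, and the response list is hypothetical, not data from Fogel and colleagues (2015).

```python
from collections import Counter

def cloze_probability(predictions, target):
    """Proportion of respondents whose predicted final note equals the target note."""
    if not predictions:
        raise ValueError("need at least one prediction")
    return Counter(predictions)[target] / len(predictions)

# Hypothetical responses from 25 adults for one melody whose composed final note is C.
responses = ["C"] * 19 + ["E"] * 3 + ["G"] * 3
print(cloze_probability(responses, "C"))  # 0.76
```

A melody with a cloze probability of 1 would be one for which every respondent predicted the same final note.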

These 12 melodies are written in six of the twelve possible major keys (C, E-flat, A-flat, G, D, and F-sharp), and each key was used twice. All are in common time (4/4): each measure contains four beats, and the quarter note receives one beat. Two versions of each melody were created: one with the expected, composed final note (^1) and one with an unexpected final note (^6). The sixth scale degree was chosen as the unexpected ending because, when a melody leads toward ^1 through the leading tone, moving to ^6 instead is considered by music theorists to be unexpected, "deceptive" motion (Laitz, 2008). During the


modification of each melody to its unexpected form, the unexpected final note was placed above the preceding leading tone to preserve the contour of the original melody. This placement created a large interval between the penultimate and final notes, which made the ending even more unexpected: as noted previously, listeners tend to expect small intervals between successive notes (Schellenberg, 1997).
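The size of the two final intervals can be made concrete with MIDI note numbers. This is a sketch under stated assumptions: a major scale with the semitone pattern 2-2-1-2-2-2-1, an original melody that ends by rising from the leading tone to the tonic just above it, and an unexpected ^6 placed at the nearest ^6 above the leading tone. The helper names are hypothetical.

```python
# Semitone offsets of scale degrees ^1..^7 above the tonic in a major key.
MAJOR_DEGREES = {1: 0, 2: 2, 3: 4, 4: 5, 5: 7, 6: 9, 7: 11}

# Pitch classes (C = 0) of the six keys used in the stimuli.
KEYS = {"C": 0, "E-flat": 3, "A-flat": 8, "G": 7, "D": 2, "F-sharp": 6}

def degree_pitch(tonic_midi, degree):
    """MIDI number of a scale degree in the octave starting at tonic_midi."""
    return tonic_midi + MAJOR_DEGREES[degree]

def ending_intervals(tonic_midi):
    """Semitone leaps from the leading tone (^7) to the expected and unexpected endings."""
    leading_tone = degree_pitch(tonic_midi, 7)
    expected = degree_pitch(tonic_midi, 1) + 12    # tonic just above the leading tone
    unexpected = degree_pitch(tonic_midi, 6) + 12  # nearest ^6 above the leading tone
    return expected - leading_tone, unexpected - leading_tone

for name, pc in KEYS.items():
    tonic = 60 + pc  # tonic in the octave starting at middle C (MIDI 60)
    print(name, ending_intervals(tonic))  # (1, 10) in every key
```

Under these assumptions, the expected ending is a one-semitone step in every key, while the unexpected ending is a 10-semitone leap (a minor seventh), which is consistent with the large-interval point above.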

Audio files of the stimuli were created with Finale software. The melodies were transcribed into the program and rendered with a grand piano sound at a tempo of 120 beats per minute. Two blocks of six melodies (one in each key) were created, with the melodies in each block presented in a randomized order; average cloze probability did not differ significantly between blocks. Within a block, consecutive melodies were separated by a quarter rest lasting 500 milliseconds. The first block lasted 41 seconds, and the second lasted 38 seconds. Finally, two versions of each randomized block were generated: one in which all melodies ended on their expected final pitches, and one in which all melodies ended on their unexpected final pitches. The stimulus blocks (with their expected endings) are shown in Figure 1. These Finale files were exported as .aiff sound files, which were then imported into the experimental control program.
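The timing values above follow from the tempo. As a back-of-the-envelope sketch, assuming each six-melody block contains five inter-melody rests and no silence before the first or after the last melody, the durations work out as follows:

```python
TEMPO_BPM = 120
BEATS_PER_MEASURE = 4  # common time

quarter_s = 60 / TEMPO_BPM                 # one beat = one quarter note
measure_s = quarter_s * BEATS_PER_MEASURE  # one 4/4 measure
half_note_s = 2 * quarter_s                # length of a half note

def mean_melody_seconds(block_seconds, n_melodies=6, rest_s=quarter_s):
    """Average melody length in a block, assuming rests only between melodies."""
    return (block_seconds - (n_melodies - 1) * rest_s) / n_melodies

print(quarter_s, measure_s, half_note_s)  # 0.5 2.0 1.0
print(round(mean_melody_seconds(41), 2), round(mean_melody_seconds(38), 2))  # 6.42 5.92
```

Under these assumptions, a quarter note (and therefore the quarter rest) lasts 500 ms, a measure lasts 2 seconds, and an average melody lasts roughly six seconds.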

Figure 1: Melodic Stimulus Blocks. Transcription of the auditory stimuli (12 melodies in total, in Stimulus Set 1 and Stimulus Set 2), shown here with their expected endings.

Apparatus and Procedure

All trials of this experiment took place within a soundproof booth. The booth housed three flashing lights (one on the front wall and one each on the left and right walls) and two speakers (one each on the left and right walls; see Figure 2). Infant listening times were assessed using the Head-Turn Preference Procedure (Nelson et al., 1995). Because infants tend to look toward what they are listening to, this procedure uses looking time as a measure of listening time. Each trial began when the light on the front wall began flashing. After the participant directed their gaze to this light (as signaled by the experimenter), either the left or the right light began flashing. Once the participant visually fixated on the newly flashing light by turning their head at least 30 degrees toward it, the melodic stimulus began to play. The participant could continue listening to the stimulus for as long as their gaze remained on the corresponding light; once they looked away for longer than two seconds, playback stopped and the cycle repeated with the flashing of the light on the front wall. Each participant heard a total of eight trials: each of the four melodic blocks (two melody sets, each with expected and unexpected endings) was played once in a randomized order, and the four blocks were then repeated in another randomized order. The order was randomized to avoid a confound with the side from which the stimulus played, and it was managed by the experimental control program. The experimenter observed the participant's looking behavior on a video monitor in a separate room and recorded it on the computer's keypad; the control program converted this input into timed head-turn responses.
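The trial logic described above can be sketched in a few lines. This is a hypothetical illustration, not the experimental control program: the block labels, the random side assignment, and the gaze-event format (a time-ordered list of (time in seconds, looking or not) changes) are all assumptions. The sketch builds the eight-trial schedule and computes one trial's looking time under the two-second look-away rule.

```python
import random

# Hypothetical labels for the four blocks (2 melody sets x 2 ending types).
BLOCKS = ["set1-expected", "set1-unexpected", "set2-expected", "set2-unexpected"]
LOOK_AWAY_LIMIT_S = 2.0

def trial_schedule(rng):
    """Eight trials: each block once in random order, then again in a new random order."""
    first, second = BLOCKS[:], BLOCKS[:]
    rng.shuffle(first)
    rng.shuffle(second)
    # Side of presentation chosen at random for each trial (an assumption).
    return [(block, rng.choice(["left", "right"])) for block in first + second]

def looking_time(events, trial_end):
    """Total looking time for one trial.

    events: time-ordered (time_s, is_looking) changes, starting at playback onset.
    The trial stops at the first look-away lasting longer than LOOK_AWAY_LIMIT_S,
    or at trial_end (the end of the audio block). Brief look-aways under the limit
    are not added to the looking time (an assumption).
    """
    total = 0.0
    boundaries = events[1:] + [(trial_end, None)]
    for (t, looking), (t_next, _) in zip(events, boundaries):
        if looking:
            total += t_next - t
        elif t_next - t > LOOK_AWAY_LIMIT_S:
            break  # look-away exceeded the limit: playback stops
    return total

schedule = trial_schedule(random.Random(1))
# Looks 0-5 s, away 5-6 s, looks 6-9 s, then away until the 41-s block ends.
print(looking_time([(0.0, True), (5.0, False), (6.0, True), (9.0, False)], 41.0))  # 8.0
```

Shuffling two independent copies of the block list guarantees that every block is heard exactly once in each half of the session.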

Throughout the test phase, the participant was seated on their caregiver's lap. The caregiver listened to music of their own through ear-covering headphones and was instructed not to point or otherwise direct the child's attention. Participants who completed all eight trials without fussing out or encountering technical difficulties were included in the final analysis.

Figure 2: Head-Turn Preference Procedure. Diagram of the Head-Turn Preference Procedure (HPP) set-up (Nelson et al., 1995).

Data

In keeping with standard procedures for the HPP paradigm, looking times (for an individual participant) shorter than two seconds, or more than 2.5 standard deviations above the mean, were excluded from data analysis.
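A minimal sketch of this exclusion rule, offered as an illustration rather than the analysis script actually used. It assumes the mean and the sample standard deviation are computed over whatever set of looking times is passed in (the text does not fix whether that is one participant's trials or the whole sample), and the example data are hypothetical.

```python
from statistics import mean, stdev

MIN_LOOK_S = 2.0   # minimum valid looking time
SD_CUTOFF = 2.5    # upper cutoff in standard deviations above the mean

def exclude_outliers(looking_times):
    """Keep looking times of at least 2 s and no more than 2.5 SD above the mean."""
    m = mean(looking_times)
    s = stdev(looking_times) if len(looking_times) > 1 else 0.0  # sample SD (assumption)
    upper = m + SD_CUTOFF * s
    return [t for t in looking_times if MIN_LOOK_S <= t <= upper]

# Hypothetical looking times (s): one too short, one very long.
kept = exclude_outliers([1.5] + [7.0] * 18 + [30.0])
print(len(kept))  # 18
```

Here the 1.5-s value falls below the two-second minimum, and the 30-s value lies more than 2.5 standard deviations above the mean, so both are dropped.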

Table 1: Average Looking Times

| Group | Expected (E) mean looking time (s) | Unexpected (U) mean looking time (s) | N | p |
|---|---|---|---|---|
| All | 7.36 (3.20) | 7.95 (2.12) | 16 | 0.472 |
| Female | 7.74 (2.05) | 7.18 (2.80) | 7 | 0.555 |
| Male | 7.07 (2.26) | 8.56 (3.52) | 9 | 0.250 |
| FLE | 7.07 (2.70) | 7.15 (1.94) | 8 | 0.941 |
| No FLE | 7.66 (1.48) | 8.76 (4.09) | 8 | 0.391 |

Table 1: Average looking times for infant participants (standard deviations in parentheses), with the significance of the difference between expected and unexpected looking times as determined by a paired t-test.

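Table 2: ANOVA

| Gender | Expected (E) average looking time (s) | Unexpected (U) average looking time (s) |
|---|---|---|
| Female | 7.74 | 7.18 |
| Male | 7.07 | 8.56 |

Interaction (gender × trial type): F = 1.682, p = 0.216

Table 2: Output of a two-way ANOVA considering gender and melodic trial type.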

Chart 1: Mean looking times (s) for expected-ending and unexpected-ending trials for each of the 16 individual participants.

Chart 2: Difference between unexpected and expected looking times (unexpected minus expected, in seconds) for each of the 16 participants.

Chart 3: Average looking times for expected-ending and unexpected-ending trials in five groups of participants (All, Females, Males, FLE, Non-FLE), with looking times weighted by the number of participants in each group. Error bars reflect standard error.

Chart 4: Average difference between unexpected and expected looking times (s) for five participant groups (All, Female, Male, FLE, No FLE). Error bars reflect standard error.

Results

As mentioned earlier, we hypothesized that infants can process the statistical frequency of ^7→^1 in Western Tonal music, and we therefore predicted that infants would discriminate expected and unexpected melodic trials. Although average looking times were longer overall for the unexpected stimulus blocks (Table 1 and Chart 3), the difference in looking times was not significant.

Using a two-tailed paired t-test, we compared mean looking times for the two trial types and found no significant difference (t(15) = -0.737, p = 0.472 > 0.05). To check for effects within subgroups of the sample, we performed four additional t-tests comparing expected and unexpected looking times: one each for the female, male, FLE, and non-FLE participants. It should be noted that, due to the smaller sample size for each


of these subgroups (N = 7, 9, 8, and 8, respectively), the associated tests have less statistical power.
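To show what the paired t-test computes, here is a self-contained sketch: it forms each infant's expected-minus-unexpected difference, tests whether the mean difference is zero with n - 1 degrees of freedom (15 for 16 infants), and obtains the two-tailed p-value by numerically integrating the Student t density with Simpson's rule. The input values in the example are made up; the study's per-infant data are not reproduced here. In practice, a statistics library routine such as SciPy's `scipy.stats.ttest_rel` would normally be used instead.

```python
import math
from statistics import mean, stdev

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p(t, df, steps=2000):
    """P(|T| >= |t|), using Simpson's rule on the density from 0 to |t| (steps is even)."""
    b = abs(t)
    if b == 0:
        return 1.0
    h = b / steps
    area = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    return max(0.0, 1 - 2 * area)

def paired_t_test(expected, unexpected):
    """Return (t, df, p) for the within-infant differences expected - unexpected."""
    if len(expected) != len(unexpected):
        raise ValueError("each infant needs both an expected and an unexpected value")
    diffs = [e - u for e, u in zip(expected, unexpected)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1, two_tailed_p(t, n - 1)

# Made-up looking times (s) for four infants, for illustration only.
t, df, p = paired_t_test([1, 2, 3, 4], [2, 2, 5, 5])
print(round(t, 3), df)  # -2.449 3
```

A negative t, as in the reported t = -0.737, simply means that looking times were longer on average for the unexpected trials.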

As with the entire sample, no significant differences between looking times were found for any of the four subgroups (see Table 1); however, a few trends are worth noting. First, infants with exposure to at least one foreign language showed the weakest discrimination between trial types (p = 0.941): their mean looking times for expected and unexpected trials were nearly identical (7.07 s and 7.15 s). Second, there are hints of a difference in melodic processing between genders. The female subgroup is the only subgroup with longer looking times for expected-ending trials (Chart 4), and the male subgroup showed the strongest discrimination between expected and unexpected melodies (p = 0.250). These apparent gender differences motivated further analysis of this subset; consequently, we performed a two-way ANOVA on looking times with gender and trial type (expected vs. unexpected endings) as factors. As shown in Table 2, the interaction between gender and trial type was not significant (F = 1.682, p = 0.216 > 0.05); however, the small male and female sample sizes reduce the statistical power of this analysis.
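The interaction tested by the ANOVA asks whether the unexpected-minus-expected difference depends on gender. The sketch below computes that difference-of-differences from the cell means in Table 2. It is descriptive only and does not reproduce the F test, which requires the per-infant data.

```python
# Cell means (s) from Table 2.
cell_means = {
    ("female", "expected"): 7.74,
    ("female", "unexpected"): 7.18,
    ("male", "expected"): 7.07,
    ("male", "unexpected"): 8.56,
}

def preference(gender):
    """Unexpected minus expected mean looking time for one gender."""
    return cell_means[(gender, "unexpected")] - cell_means[(gender, "expected")]

female_diff = preference("female")  # negative: longer looking to expected endings
male_diff = preference("male")      # positive: longer looking to unexpected endings
interaction_contrast = male_diff - female_diff
print(f"female {female_diff:+.2f} s, male {male_diff:+.2f} s, contrast {interaction_contrast:.2f} s")
# female -0.56 s, male +1.49 s, contrast 2.05 s
```

The opposite signs of the two gender differences are what an interaction would capture; with the present sample sizes, however, this contrast was not statistically reliable.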

Discussion

Based on these results, we cannot conclude that 14-month-old infants as a group are able to discriminate expected and unexpected melodic endings. This result admits at least two interpretations. One is that infants are not yet forming specific expectations for ^7→^1 progressions in Western Tonal music. Taken at face value, this would suggest that, at this age, associative learning mechanisms are not applied to hierarchical melodic stimuli in the way that they are used to extract information from novel sentences. Ultimately, this would imply that this type of associative learning is not used in a domain-general manner. However, several considerations argue against accepting this conclusion uncritically.

A second interpretation concerns the fact that we could not predict which type of melody infants would listen to longer: the more "settled" expected melodies or the more surprising unexpected melodies. Our findings show that most girls listened longer to the expected melodies, while most boys listened longer to the unexpected melodies. Unfortunately, given the small number of infants of each gender, neither of these opposing preferences was statistically significant. While a sample of 16 participants is standard in infant research (given the time-consuming nature of recruiting and testing infants), more participants could help clarify what appear to be emerging trends. It is possible that, with 16 male and 16 female participants, each gender would show a statistically reliable but opposite preference. Such a finding would indicate that both genders discriminate the melodies based on their likelihood but prefer different endings. Additionally, boys in this study showed stronger discrimination of the melodies (Table 1; Chart 4). It would be interesting if, with a larger sample, only boys showed significant discrimination of the melodic sets, as there is emerging evidence that girls are developmentally ahead of boys in language acquisition and production (Balter, 2013).

There may also be a relationship between language exposure and music processing. Exposure to musical traditions other than Western Tonal music might be more likely in households that speak multiple languages, which may influence music perception in such a way that ^7→^1 is less salient to, or less expected by, these infants. In the future, controlling for language and music exposure would be an important improvement to the study design. It should also be noted that most of the FLE group was male (6 of the 8 FLE infants). This is interesting because, as shown in Table 1, the FLE group showed the weakest discrimination between ending types, whereas the male group showed the strongest. For these reasons, it would be useful to increase the overall sample size and work to disentangle these overlapping factors.

If we ultimately find that, even with more participants, no group of infants shows significant discrimination, one avenue to explore is the complexity of the novel stimuli used in this experiment: the complicated stimuli we used might have made the discrimination task harder to process. As in many common nursery rhymes, the major key of each melody in this stimulus set is established within the first measure by outlining the tonic triad; in principle, this should allow listeners to anticipate the expected ^7→^1 progression of each melody with relative ease, setting the stage for disruption by the unexpected endings. However, the unexpected endings we chose are subtle, because the sixth scale degree still legally belongs to the key of each melody. Perceiving the "unexpectedness" of the progression ^7→^6 is a particularly sophisticated task, in that it draws on harmonic expectations that often do not develop until later in childhood (Corrigall & Trainor, 2009). At 14 months, listeners may have formed expectations, through Associative Learning, for ^7 leading to ^1, but these expectations may not yet be stronger or more clearly defined than expectations for ^7 leading to ^6. In a future version of this study, it would be interesting to see whether 14-month-old infants can discriminate melodies ending with ^7→^1 from melodies ending with ^7 followed by an "illegal" note (one that does not exist in the key of the melody). Successful discrimination here might imply that infants can form melodic expectations through associative analysis, but that more information and exposure are needed to firmly distinguish the expected nature of this association from other legal progressions.
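The distinction between a "legal" ending such as ^6 and an "illegal" one comes down to key membership, which can be checked mechanically. A small sketch, assuming pitch classes numbered 0 to 11 with C = 0, sharp spellings for the chromatic notes, and the 2-2-1-2-2-2-1 major-scale pattern; the function names are hypothetical.

```python
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitones above the tonic for ^1..^7
NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def major_scale(tonic_pc):
    """Set of pitch classes belonging to the major key on tonic_pc."""
    return {(tonic_pc + s) % 12 for s in MAJOR_STEPS}

def is_in_key(pc, tonic_pc):
    """True if pitch class pc is diatonic ("legal") in the major key on tonic_pc."""
    return pc % 12 in major_scale(tonic_pc)

def out_of_key_pitches(tonic_pc):
    """Names of pitch classes outside the key: candidate "illegal" endings."""
    scale = major_scale(tonic_pc)
    return [NAMES[pc] for pc in range(12) if pc not in scale]

print(is_in_key(9, 0))        # A is ^6 in C major -> True
print(out_of_key_pitches(0))  # ['C#', 'D#', 'F#', 'G#', 'A#']
```

Any of the five out-of-key pitch classes could, under these assumptions, serve as an "illegal" ending for a melody in that key.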


In addition to the complicated endings of these stimuli, the melodies as a whole may have been too long or complex to accurately assess infant knowledge of the final ^7→^1 progression. Participants listened to each melodic block for an average of just under 8 seconds, which is less than the length of two melodies. Because overall looking times were so short, participants likely did not listen long enough to react to the unexpected endings. In future iterations of this study, it could be useful to simplify the stimuli (while maintaining their sentence-like lengths) so that participant interest is maintained for a more substantial portion of each block; simplification could take the form of smoother contours and rhythms, for example. To draw attention to (and assess knowledge of) the melodic endings more effectively, it might also be useful to lengthen the final, target pitch of each melody to a half note. In Western Tonal melodies, note length is often correlated with completion, and the current quarter-note length of our endings might not sound maximally conclusive (Schellenberg, Adachi, Purdy, & McKinnon, 2002).

Ideally, with these improvements to the experimental design, a clearer picture would emerge of 14-month-olds' knowledge of ^7→^1 as a statistically frequent progression, and firmer evidence could then be contributed to the domain-generality/domain-specificity debate. If, even with these improved novel stimuli, infants are still unable to discriminate expected and unexpected endings, several methodological extensions could be useful. A simple first step would be to raise the participant age to determine when these implicit expectations begin to form; based on linguistic research, 16 months seems a reasonable starting point. It might also be interesting to analyze EEG recordings of infant neural activity during melodic exposure to see whether an early right-anterior negativity (ERAN) or a late bilateral-frontal negativity appears. These components respectively reflect the violation of sound expectancy and the increased processing demands of integrating unexpected notes into a melodic context (similar to the P600 in syntactic processing), and their presence would indicate implicit knowledge of the ^7→^1 progression beyond what the Head-Turn Preference Procedure can assess (Koelsch, Gunter, Friederici, & Schröger, 2000). It could also be worthwhile to ask whether 14-month-old participants can learn the traditionally conclusive nature of the ^7→^1 progression in an experimental setting (if environmental exposure to Western Tonal music is in fact not sufficient). One might accomplish this with a "melodic segmentation task," similar to the word and tone segmentation tasks mentioned earlier. After a habituation phase of many melodies concluding on ^7→^1, we would assess whether infants can segment a continuous stream of melodies using this concluding progression, by playing individual melodic sequences (from the original stream) that either do or do not end on ^7→^1. If infants do use ^7→^1 to segment the stream, we would expect a difference in listening time between individual melodies that have this ending and those that do not.
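The "statistically frequent" character of ^7→^1 that such a task relies on can be expressed as a transitional (conditional) probability, in the spirit of statistical-learning studies such as Aslin, Saffran, and Newport (1998). The sketch below estimates P(next degree | current degree) from adjacent note pairs; the melodies, written as scale-degree sequences, are invented for illustration and are not the study's stimuli.

```python
from collections import Counter

def transitional_probabilities(melodies):
    """Estimate P(next degree | current degree) from adjacent pairs within each melody."""
    pair_counts = Counter()
    first_counts = Counter()
    for melody in melodies:
        for a, b in zip(melody, melody[1:]):
            pair_counts[(a, b)] += 1
            first_counts[a] += 1
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}

# Hypothetical melodies as scale degrees (1-7); each ends with 7 -> 1.
corpus = [
    [1, 3, 5, 4, 2, 7, 1],
    [5, 6, 7, 1],
    [3, 2, 1, 7, 6, 5, 7, 1],
]
tp = transitional_probabilities(corpus)
print(tp[(7, 1)], tp[(7, 6)])  # 0.75 0.25
```

In this toy corpus, ^7 is followed by ^1 three times out of four, so a learner tracking these statistics would come to expect ^1 after ^7.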

Ultimately, this initial analysis of infant melodic processing suggests that 14-month-olds may not yet have formed expectations for the statistically frequent ^7→^1 progression. However, improvements and expansions to our experimental design would allow us to determine more conclusively the specific expectations that these infants do possess.


Acknowledgements

An enormous thank you goes to Dr. Gerken for her unyielding support of both my dream to design this study and my (at times) incessant question-asking. I also send a huge amount of

gratitude to Megan Figueroa, who has been the embodiment of strength, brilliance, and kindness

in research (and life) that I so needed during my time at the UA. Finally, thanks and love to my

mom and dad, who pushed me to college’s bigger stage and believed in my ability to finish this

project (even when I wasn’t always sure I would).


References

Aslin, R. N., Saffran, J. R., & Newport, E. L. (1998). Computation of conditional probability statistics by 8-month-old infants. Psychological Science, 9(4), 321-324.

Bain, R. (2003). The harmonic series. Retrieved from http://in.music.sc.edu/fs/bain/atmi02/hs/hs.pdf

Balter, M. (2013). 'Language gene' more active in young girls than boys. Retrieved from http://www.sciencemag.org/news/2013/02/language-gene-more-active-young-girls-boys

Bubic, A., Von Cramon, D. Y., & Schubotz, R. I. (2010). Prediction, cognition and the brain. Frontiers in Human Neuroscience, 4, 25.

Carlsen, J. C. (1981). Some factors which influence melodic expectancy. Psychomusicology: A Journal of Research in Music Cognition, 1(1), 12.

Chang, H. W., & Trehub, S. E. (1977). Auditory processing of relational information by young infants. Journal of Experimental Child Psychology, 24(2), 324-331.

Corrigall, K. A., & Trainor, L. J. (2009). Effects of musical training on key and harmony perception. Annals of the New York Academy of Sciences, 1169(1), 164-168.

Corrigall, K. A., & Trainor, L. J. (2014). Enculturation to musical pitch structure in young children: Evidence from behavioral and electrophysiological methods. Developmental Science, 17(1), 142-158.

Cross, I. (2001). Music, cognition, culture, and evolution. Annals of the New York Academy of Sciences, 930(1), 28-42.

Figueroa, M., & Gerken, L. (2016). Rethinking past tense overregularization. The Symposium on Research in Child Language Disorders. Madison, WI.

Fodor, J. A. (1985). Precis of the modularity of mind. Behavioral and Brain Sciences, 8(01), 1-5.

Fogel, A. R., Rosenberg, J. C., Lehman, F. M., Kuperberg, G. R., & Patel, A. D. (2015). Studying musical and linguistic prediction in comparable ways: The melodic cloze probability method. Frontiers in Psychology, 6.

Gerken, L. (2009). Language development. San Diego, CA: Plural Publishing.

Hannon, E. E., & Trainor, L. J. (2007). Music acquisition: Effects of enculturation and formal training on development. Trends in Cognitive Sciences, 11(11), 466-472.

Hirschfeld, L. A., & Gelman, S. A. (1994). Mapping the mind: Domain specificity in cognition and culture. Cambridge University Press.

Husa, K. (1968). Music for Prague 1968.

Kedar, Y., Casasola, M., & Lust, B. (2006). Getting there faster: 18- and 24-month-old infants' use of function words to determine reference. Child Development, 77(2), 325-338.

Koelsch, S., Gunter, T., Friederici, A. D., & Schröger, E. (2000). Brain indices of music processing: "Nonmusicians" are musical. Journal of Cognitive Neuroscience, 12(3), 520-541.

Krumhansl, C. L., & Keil, F. C. (1982). Acquisition of the hierarchy of tonal functions in music. Memory & Cognition, 10(3), 243-251.

Laitz, S. G. (2008). The complete musician: An integrated approach to tonal theory, analysis, and listening (Vol. 1). Oxford University Press, USA.

Luria, A. R., Tsvetkova, L. S., & Futer, D. S. (1965). Aphasia in a composer. Journal of the Neurological Sciences, 2(3), 288-292.

Lu-Ting, H., & Kuo-huang, H. (1982). On Chinese scales and national modes. Asian Music, 14(1), 132-154.

Lynch, M. P., & Eilers, R. E. (1992). A study of perceptual development for musical tuning. Perception & Psychophysics, 52(6), 599-608.

Moore, A. (1995). The so-called 'flattened seventh' in rock. Popular Music, 14(02), 185-201.

Nelson, D. G. K., Jusczyk, P. W., Mandel, D. R., Myers, J., Turk, A., & Gerken, L. (1995). The head-turn preference procedure for testing auditory perception. Infant Behavior and Development, 18(1), 111-116.

Nooteboom, S. (1997). The prosody of speech: Melody and rhythm. The Handbook of Phonetic Sciences, 5, 640-673.

Ohala, D. (2017). Nature and history of psycholinguistics [PowerPoint]. Retrieved from University of Arizona D2L site.

Patel, A. D., Gibson, E., Ratner, J., Besson, M., & Holcomb, P. J. (1998). Processing syntactic relations in language and music: An event-related potential study. Journal of Cognitive Neuroscience, 10(6), 717-733.

Patel, A. D. (2003). Language, music, syntax and the brain. Nature Neuroscience, 6(7), 674-681.

Povel, D. J. (1996). Exploring the elementary harmonic forces in the tonal system. Psychological Research, 58(4), 274-283.

Rohrmeier, M. A., & Koelsch, S. (2012). Predictive information processing in music cognition. A critical review. International Journal of Psychophysiology, 83(2), 164-175.

Saffran, J. R., Aslin, R. N., & Newport, E. L. (1996). Statistical learning by 8-month-old infants.

Saffran, J. R., Johnson, E. K., Aslin, R. N., & Newport, E. L. (1999). Statistical learning of tone sequences by human infants and adults. Cognition, 70(1), 27-52.

Saffran, J. R., & Thiessen, E. (2009). Domain-general learning capacities. In E. Hoff & M. Shatz (Eds.), Blackwell handbook of language development (pp. 68-82). John Wiley & Sons.

Schellenberg, E. G., Adachi, M., Purdy, K. T., & McKinnon, M. C. (2002). Expectancy in melody: Tests of children and adults. Journal of Experimental Psychology: General, 131(4), 511.

Schmuckler, M. A. (1989). Expectation in music: Investigation of melodic and harmonic processes. Music Perception: An Interdisciplinary Journal, 7(2), 109-149.

Slevc, L. R., Rosenberg, J. C., & Patel, A. D. (2009). Making psycholinguistics musical: Self-paced reading time evidence for shared processing of linguistic and musical syntax. Psychonomic Bulletin & Review, 16(2), 374-381.

Sternberg, R. J. (1989). Domain-generality versus domain-specificity: The life and impending death of a false dichotomy. Merrill-Palmer Quarterly (1982-), 115-130.

Taylor, W. L. (1953). "Cloze procedure": A new tool for measuring readability. Journalism Bulletin, 30(4), 415-433.

Tillmann, B., Poulin-Charronnat, B., & Bigand, E. (2014). The role of expectation in music: From the score to emotions and the brain. Wiley Interdisciplinary Reviews: Cognitive Science, 5(1), 105-113.

Trainor, L. J., & Trehub, S. E. (1994). Key membership and implied harmony in Western tonal music: Developmental perspectives. Attention, Perception, & Psychophysics, 56(2), 125-132.

Trehub, S. E., Cohen, A. J., Thorpe, L. A., & Morrongiello, B. A. (1986). Development of the perception of musical relations: Semitone and diatonic structure. Journal of Experimental Psychology: Human Perception and Performance, 12(3), 295.

Trehub, S. E., & Trainor, L. (1998). Singing to infants: Lullabies and play songs. Advances in Infancy Research, 12, 43-78.

Trehub, S. E., Schellenberg, E. G., & Kamenetsky, S. B. (1999). Infants' and adults' perception of scale structure. Journal of Experimental Psychology: Human Perception and Performance, 25(4), 965.

Trehub, S. E. (2001). Musical predispositions in infancy. Annals of the New York Academy of Sciences, 930(1), 1-16.

Trehub, S. E., & Hannon, E. E. (2006). Infant music perception: Domain-general or domain-specific mechanisms? Cognition, 100(1), 73-99.

Wade, B. C. (2004). Thinking musically: Experiencing music, expressing culture (p. xxiii180). New York: Oxford University Press.