Practical attacks on the i>clicker classroom response system

Thomas Hebb

Tufts University

Dec 29, 2016

Abstract

As embedded computing technology has become more affordable, a number of products have emerged that attempt to enhance traditional lecture-based academic courses by giving instructors digital means of soliciting student participation. One such product is the i>clicker, which allows instructors to pose multiple-choice questions during a lecture and see the responses of students in real time. In this paper, I present the results of a security audit of the protocols and software that make up the i>clicker system, with special focus on attacks that can be carried out by an average student without access to specialized hardware.

1 Introduction

In recent years, professors at a number of colleges and universities, including Tufts, have integrated classroom response systems into their lectures. These systems enable instructors to collect and aggregate students’ responses to questions in real time. Each student purchases an RF remote, often called a “clicker,” which has, at minimum, buttons marked A through E that the student can use to privately respond to multiple-choice questions. The clickers report responses over radio to a USB base station, which in turn sends the responses to software running on the instructor’s computer. The software allows the instructor to aggregate and display responses of everyone in a class, associate students with their clickers, and optionally track attendance and responses for grading purposes.

When used effectively, clickers can benefit both a professor and their students. Consider a professor who writes a problem on the board and wants to gauge how well their students understand how to solve it. In a traditional lecture, one or two students might raise their hands and answer. But students who volunteer answers are likely to be those with the best understanding of the problem. To judge the class’s understanding as a whole, the professor ideally wants to collect answers from all the students. One way to do this is to present a set of possible answers and ask for a show of hands for each choice. But this method makes each student’s answer public knowledge and can encourage students to go with the majority opinion even if they disagree with or don’t understand it. Clickers solve the problem by allowing the professor to collect responses privately and display them in an aggregate, anonymized form. Some professors keep responses entirely anonymous, using them only to gauge understanding, while others count the presence or correctness of a student’s responses towards that student’s grade.

It should be clear why, in the latter case, the security model of a classroom response system is an important concern. A system with poor attribution or confidentiality guarantees can be exploited by a student to improve their own grade (by sniffing and copying correct answers of others) or to sabotage the grades of others (by transmitting spoofed, incorrect answers attributed to a victim). Prior research into the i>clicker system, one of the most popular classroom response systems and the focus of this paper, has shown its protocol to be entirely unauthenticated, allowing those with sufficient hardware to indiscriminately sniff and spoof responses [2]. However, the hardware required to do this is nontrivial: one must have either a clicker unit and means to reprogram it or a generic, reprogrammable radio that can receive and transmit on the frequencies used by i>clicker remotes. The time and effort needed to obtain and configure this hardware is likely not worth the small grade improvement a malicious student could obtain, especially since clicker responses generally make up a small (< 10%) portion of a course’s overall grade.

One might assume that, in courses where students’ clicker responses don’t affect their grades at all, the security of the clicker system is irrelevant since students have no motivation to sniff or spoof clicker responses. This assumption is incorrect. In this paper, I present two new vulnerabilities in the i>clicker system, both of which can be exploited by a student with only a laptop computer and used to gain remote code execution on the instructor’s computer. Additionally, I provide a proof-of-concept exploit for one of the vulnerabilities.

2 To the Community

I chose this topic to serve as a case study of how the most prominent security vulnerabilities in a system are often not the most serious. In the case of clickers, it’s natural to assume that sniffing and spoofing students’ responses is the most an attacker can do after breaking the system. However, as I show, flaws in the implementation of the i>clicker application software expose much higher-value vulnerabilities to attackers: vulnerabilities which have nothing at all to do with the intended purpose of the system. Specifically, vulnerabilities are present which make remote code execution much easier. Thus, the introduction of i>clicker into a network allows attackers to gain control of previously-secure computers, potentially using the compromised systems as footholds to exfiltrate information or leverage previously-unexploitable vulnerabilities to gain even higher privilege levels.

Cases like these highlight the need both for improved security auditing of vendors by corporate and academic IT departments and for application-level sandboxing at the OS level: a compromised application should not be able to read arbitrary data belonging to users who run it, nor should it be able to spread itself to network mounts and removable drives that it doesn’t normally require access to; yet on modern Windows and Linux systems, both are all too possible.

3 Discoveries

The i>clicker system consists of three components: the remotes (clickers), the base station with which the remotes communicate over RF, and the application software with which the base station communicates over USB.

To audit each of these components, I took a bottom-up approach: I started by analyzing what happens at the lowest layer of each system, and used that knowledge to figure out what the higher layers do. For each hardware component, this meant removing the casing from a unit and identifying the microcontrollers powering it, finding those microcontrollers’ datasheets, and, if possible, extracting the programs running on those microcontrollers. For the application software, this meant running it on an open operating system (Linux) and analyzing the network and USB traffic it generated.

The clicker units turned out to have almost no attack surface. The units are entirely self-contained, and the only way to reprogram one is to disassemble it and connect a specialized programmer to the programming port (Figure 1). Even with a programmer, the existing software on the unit can’t be read due to security features integrated into the microcontroller,¹ so an attacker would have to write entirely new software for the unit by tracing connections on the PCB and referencing publicly-available datasheets. This is a significant amount of work for not much reward, as all a compromised clicker can affect is the system’s radio communications. As previously discussed, sniffing and spoofing radio communications gives an attacker little to no benefit in most situations.

¹ This was not the case for the (now-obsolete) hardware version studied by Gourlay et al. [2].

Figure 1: i>clicker+ PCB, showing NXP MC9S08QE8 microcontroller (left) and programming port (center bottom)

The other two components of the system, the base station and the application software, were much more promising. The base station connects to a computer over USB, which means that an attack can conceivably be carried out with no specialized hardware. As it turns out, the base station exposes an unprotected USB firmware update mechanism, so any attacker who gains brief physical access to a base station can reprogram it with malicious firmware which attempts to infect any computer subsequently connected to that base station. This attack is described in Section 3.2.

The application software is the most high-value target, as an attacker who compromises it immediately gains remote code execution. Unfortunately, this sensitivity is not reflected in the software’s implementation: the software also falls to a poorly-implemented update mechanism. Any attacker who can intercept a victim’s network traffic (i.e., any attacker on the same network as their victim) can supply a forged software update and plant arbitrary code on the victim’s computer. This attack is described in Section 3.1.

3.1 Forged software update

The i>clicker software is capable of updating itself. The update process is initiated by a user clicking “Check for Update” in the software’s Help menu. Once begun, the process is vulnerable to a man-in-the-middle attack: the software uses HTTP to download an XML file which indicates if an update is available. If one is, the XML file contains the URL of a zip (for Windows) or tar (for Linux and macOS) archive containing the update. The software then downloads that archive, checks its size and checksum against values from the XML file, and, if they match, replaces the existing installation with its contents. As HTTP is an unauthenticated protocol, an attacker can intercept the software’s request for the XML file and provide a forged file pointing to an archive that contains a malicious payload. If the payload is contained in the application’s main executable, it will automatically run when the software restarts itself after updating.
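To see why the size and checksum comparison offers no protection against this attack, consider the following minimal sketch. It models the check entirely in memory and performs no network access. The manifest field names (`url`, `size`, `sha256`), the use of SHA-256, the example URLs, and the function names are all assumptions made for illustration; the real XML schema and checksum algorithm are not described here.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Manifest:
    """Hypothetical stand-in for the update XML file (field names assumed)."""
    url: str
    size: int
    sha256: str


def make_manifest(url: str, archive: bytes) -> Manifest:
    """Describe an archive. No secret is involved, so anyone can do this."""
    return Manifest(url, len(archive), hashlib.sha256(archive).hexdigest())


def update_is_accepted(manifest: Manifest, archive: bytes) -> bool:
    """Mimic the described check: compare size and checksum to the manifest."""
    return (len(archive) == manifest.size
            and hashlib.sha256(archive).hexdigest() == manifest.sha256)


genuine = b"genuine update archive"
forged = b"archive with attacker payload"

# Against the vendor's manifest, only the vendor's archive passes.
vendor_manifest = make_manifest("http://updates.example/app.zip", genuine)
print(update_is_accepted(vendor_manifest, genuine))   # True
print(update_is_accepted(vendor_manifest, forged))    # False

# An attacker who controls the HTTP response replaces the manifest as well,
# so the forged archive is checked against values the attacker chose.
attacker_manifest = make_manifest("http://attacker.example/app.zip", forged)
print(update_is_accepted(attacker_manifest, forged))  # True
```

The comparison catches accidental corruption in transit, but because the reference values arrive over the same unauthenticated channel as the archive, it cannot distinguish a genuine update from a forged one.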

For an attacker to exploit this vulnerability using traditional man-in-the-middle methods (ARP and DNS spoofing), they must be on the same network segment as the victim. This is a reasonable assumption to make, as any student attempting to attack an instructor’s computer will almost certainly be connected to the same wireless network as the instructor during the lectures where i>clickers are used.

I had originally planned to use the existing Evilgrade [1] framework to implement a proof-of-concept exploit for this vulnerability. However, I found the framework to be lacking in documentation and to have poor core code quality. Additionally, it couldn’t repack archives on-the-fly, so I would have had to hardcode a payload for the exploit. Because of these limitations, I instead wrote my own Python script [3] to exploit the vulnerability. My script runs a web server which emulates the real i>clicker update server. By redirecting requests to update.iclickergo.com:80 to the script, an attacker can install a malicious payload on their victim’s computer.

Admittedly, this attack is hampered by the fact that it requires user action: the operator of the i>clicker software must click the “Check for Update” button and agree to the update. However, with a bit of social engineering, this is only a small obstacle. In the situation where the attacker is a student conducting a man-in-the-middle attack during a class, the student can (1) stop forwarding packets, (2) wait for the inevitable symptoms of a missing internet connection to appear, and (3) interject with a helpful comment to the effect of “Excuse me! This happened in my last class, and updating the i>clicker software fixed it.” As most school IT departments strongly encourage students and instructors to keep their software patched and up-to-date, most instructors will probably attempt an update without suspicion. Once the malicious payload is installed, the student can begin forwarding packets again. From the instructor’s point of view, the update completed successfully and had the expected effect. They likely won’t give it a second thought.

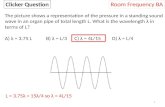

3.2 Malicious base station firmware

Although it involves hardware instead of software, this attack is actually quite similar to the previous one. The i>clicker base station contains two microcontrollers, shown in Figure 2: the first is an Intel 8052 microcontroller contained within the USB controller IC, which manages USB communications and the base’s LCD display; the second is an Atmel AVR microcontroller, which manages radio communications. i>clicker provides a utility to update the firmware of the base station. By analyzing the network traffic of this utility, I found that it downloads unsigned, unencrypted firmware images for both microcontrollers. Unfortunately, another man-in-the-middle attack is likely infeasible, as the base station updater is a separate program which most instructors won’t bother installing. However, the fact that the firmware images aren’t authenticated in any way suggests another attack: an attacker with physical access can use the same USB protocol that the firmware update utility uses to install malicious firmware on the USB controller IC. The IC can be configured in firmware to present itself as any type of USB device [6]. An attacker could, for instance, make it emulate a USB keyboard, send keystrokes to launch a terminal, and run arbitrary commands on any computer it’s connected to. There is significant prior work that further develops attacks possible with USB access to a victim’s computer; see, for example, Kamkar [4].

4 Defenses

Both of these attacks can be prevented by someone who’s aware of their existence: a savvy user can ensure that they never run updates on an untrusted network and that they never forfeit physical control of their base station. However, as I said in Section 2, the underlying issue is that software is often deployed without being audited at even a cursory level for security vulnerabilities: if IT departments more thoroughly screened technology used on their networks, the two vulnerabilities I found would likely have been fixed (or at least mitigated) a long time ago.

Figure 2: i>clicker Base PCB, showing TI TAS1020B USB controller IC (left center), associated firmware EEPROM (center), and Atmel ATMEGA16A (top)

These attacks also show security weaknesses of modern desktop operating systems: the first attack, for example, works only if the target machine will execute the malicious payload installed by the flawed update process and allow it to access or modify sensitive data. Modern Windows and Linux systems, alas, allow both of these to happen: no code signing or sandboxing is enforced for processes running within a single user session without elevated privileges. So a malicious binary can access and modify every file belonging to the compromised user, including files on mounted network shares and session cookies for websites saved by the user’s browser. I suspect that the situation is better on macOS, which by default requires applications to be signed. Default security policies such as these are crucial to limiting the damage that can be caused by poorly-written and insecure software.

The second attack is harder to mitigate in a generic way: USB was designed as a single protocol for a wide variety of different devices; as such, USB devices are allowed to expose any functionality they desire. One way operating systems can prevent malicious devices from silently taking control is by prompting the user to authorize each device upon first connection. This brings its own set of problems, however: for one, an exception would have to be made for input devices, as a user can’t respond to the prompt for a mouse or keyboard without being able to use that mouse or keyboard! Additionally, users would likely just accept the prompts without reading them, as infamously happened with Windows Vista’s UAC prompts.
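The first-connection prompt and its input-device exception can be sketched as a small policy model. This is an illustration only, not a description of how any existing operating system behaves: the `Device` and `AuthorizationPolicy` types, the decision strings, the vendor and product IDs, and the assumption that the unmodified base station exposes a vendor-specific interface are all hypothetical. The class codes 0x03 (HID) and 0xFF (vendor-specific) are the standard USB-IF values.

```python
from dataclasses import dataclass, field

HID = 0x03              # USB-IF class code: human interface device
VENDOR_SPECIFIC = 0xFF  # USB-IF class code: vendor-defined interface


@dataclass(frozen=True)
class Device:
    """Identity of a device as the host sees it (hypothetical model)."""
    vendor_id: int
    product_id: int
    interface_classes: frozenset


@dataclass
class AuthorizationPolicy:
    approved: set = field(default_factory=set)
    have_input_device: bool = False

    def approve(self, dev: Device) -> None:
        """Record that the user authorized this exact device identity."""
        self.approved.add(dev)
        if HID in dev.interface_classes:
            self.have_input_device = True

    def decide(self, dev: Device) -> str:
        if dev in self.approved:
            return "allow"
        if HID in dev.interface_classes and not self.have_input_device:
            # Exception: with no input device, nobody could answer a prompt.
            self.approve(dev)
            return "allow"
        return "prompt"


policy = AuthorizationPolicy()
keyboard = Device(0x1111, 0x0001, frozenset({HID}))
base = Device(0x2222, 0x0002, frozenset({VENDOR_SPECIFIC}))
reflashed = Device(0x2222, 0x0002, frozenset({HID}))  # same IDs, now a keyboard

print(policy.decide(keyboard))   # allow (first input device)
print(policy.decide(base))       # prompt
policy.approve(base)
print(policy.decide(base))       # allow
print(policy.decide(reflashed))  # prompt
```

Because the approval is keyed on the interface classes as well as the IDs, a base station that reappears claiming to be a keyboard triggers a fresh prompt. The sketch also makes the weaknesses noted above concrete: the input-device exception admits any HID device plugged into a machine that has none yet, and the whole scheme depends on users reading the prompt.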

USB-based attacks have entered the spotlight in recent years, notably in the form of BadUSB [5], which refers to a set of vulnerabilities in USB flash drive controller chips that let attackers reprogram them to act as different device types, just as attackers can reprogram the i>clicker base.

5 Summary

In this paper, I presented two new attacks on the i>clicker classroom response system, each allowing a malicious student in a course to achieve remote code execution on their instructor’s computer. Neither attack used any novel techniques; had the i>clicker software been engineered with security in mind, neither exploitable vulnerability should have existed. These vulnerabilities highlight the need for proprietary products to undergo security audits before being deployed on computers with access to sensitive information and also show the security benefits of OS-level sandboxing and signature verification.

References

[1] Francisco Amato. Evilgrade. GitHub. Software. url: https://github.com/infobyte/evilgrade (visited on 12/17/2016).

[2] Derek Gourlay et al. Security Analysis of the i>clicker Audience Response System. Term Project Report. University of British Columbia, Dec. 7, 2010. url: https://courses.ece.ubc.ca/cpen442/term_project/reports/2010/iclicker.pdf (visited on 12/19/2016).

[3] Thomas Hebb. Clickjacker. GitHub. Software. url: https://github.com/tchebb/clickjacker (visited on 12/29/2016).

[4] Samy Kamkar. USBdriveby. Dec. 17, 2014. url: http://samy.pl/usbdriveby/ (visited on 12/20/2016).

[5] Karsten Nohl, Sascha Krißler, and Jakob Lell. BadUSB. On accessories that turn evil. Talk at PacSec 2014. url: https://srlabs.de/wp-content/uploads/2014/11/SRLabs-BadUSB-Pacsec-v2.pdf (visited on 12/20/2016).

[6] TAS1020B USB Streaming Controller Data Manual. Texas Instruments. May 2011. 119 pp. url: http://www.ti.com/lit/gpn/tas1020b (visited on 10/11/2016).
