Robotics · phase sequence that uses these pattern recognition algorithms for the...


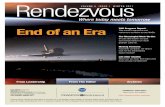

SPIE’s International Technical Group Newsletter

ROBOTICS AND MACHINE PERCEPTION MARCH 2000


Pattern recognition for goal-based rover navigation

The baseline ’03/’05 Mars Sample Return missions require the return of a science rover to the lander for the transfer of sample cache containers to a Mars Ascent Vehicle or MAV. Along these lines, the newest mission guidelines for the Mars Sample Return call for a science rover to descend from the lander using ramps, acquire core samples from as far away as hundreds of meters from the lander, return to the lander, and then ascend the ramps to deposit these samples in the MAV. The return operation requires tracking and docking techniques for the development of necessary integrated rover capabilities key to the lander rendezvous operation. The science rover must autonomously recognize, track, and precisely rendezvous with the lander from distances as far away as hundreds of meters. The Sample Return Rover, or the SRR, is a rover prototype that was originally developed for the rapid retrieval of samples collected by longer ranging mobile science systems, and the return of these samples to an Earth ascent vehicle.

We have developed a multifeature fusion algorithm that integrates the outputs of horizontal line and wavelet-based visual area-of-interest operators [1] for lander detection from significant distances. The horizontal line detection algorithm is used to localize possible lander candidates based on detection of the lander deck. The wavelet transform is then performed on an acquired image and a texture signature is extracted in a local window of the wavelet coefficient space for each of the lander candidates found above. The multifeature fusion algorithm eliminates the false positives in this process arising from features in the surrounding terrain. This technique is coupled with a 3D visual terminal guidance algorithm that can extract and utilize cooperative features of the lander to iteratively and accurately estimate the range and pose of the structure, and then steer the rover to the bottom of the ramps.

We have tested, in the arroyo at JPL, a three-phase sequence that uses these pattern recognition algorithms for the lander-rendezvous portion of the Mars Sample Return missions. The first phase is the long-range traverse, in which the SRR initiates a search for the lander using images acquired by the goal camera

continued on p. 3

VOL. 9, NO. 1 MARCH 2000

Figure 1. Horizontal line and wavelet derived features used for fusion to obtain lander heading from long distances (~125 meters). (Left) Canny derived edge features. (Middle) Wavelet coefficients with a 2 level transform. (Right) Detected lander labeled by boxed area.

Figure 2. Truss structure features used for mid-range navigation. (Left) Original image. (Middle) Edge features. (Right) Truss structure features.

Newsletter now available on-line

Beginning with this issue, Technical Group members will be offered the option to receive the Robotics and Machine Perception Newsletter in an electronic format. An e-mail notice is being sent to all group members advising you of the web site location for this issue and asking you to choose between the electronic or printed version for future issues. If you have not yet received this e-mail message, then SPIE does not have your correct e-mail address in our database. To receive future issues of this newsletter in the electronic format please send your e-mail address to [email protected] with the word ROBOTICS in the subject line of the message and the words "Electronic version" in the body of the message.

If you prefer to continue to receive the newsletter in the printed format, but want to send your correct e-mail address for our database, include the words "Print version preferred" in the body of your message.


Editorial

Modular robotics and distributed systems

Modular robotics is an emerging area of research focused on the configuration of robotic resources into reconfigurable structures and control systems. Recent work has aimed at developing a range of standard off-the-shelf mechatronic modules that can be plugged together to create diverse robot manipulator configurations. The CMU Reconfigurable Modular Manipulator System (RMMS) [1] is a representative example of research in this area.

The recent conference on Sensor Fusion and Decentralized Control in Robotics Systems [2] featured a number of sessions that addressed this important area and highlighted some of its most significant developments. Of particular note was the number of real systems that have now been fielded in order to investigate the practical feasibility of both configurable and reconfigurable systems. Many of these were only simulations in the eyes of the researchers a couple of years ago. Now they offer insights into a new era, where industry can expect robotics solutions that speak more directly to their needs.

The emergence of the modular systems approach in robotics, and indeed the development of layered robot architectures and cooperative robotics systems, has drawn the attention of the robotics community to message passing and networking models for communicating between local and distributed entities. We should take particular note, therefore, of the release of the Jini™ specification [3] from Sun Microsystems and the entertainment and media industry development of the HAVi specification [4] for home networking. These developments aim at providing integration of devices distributed across industrial, office and home networks.

The key issues of concern to all of these areas are the modelling and management of resources, the integration of resources into systems that perform useful functions, and the development of software toolkits to reflect and support these activities. Robotics can contribute to these developments, which offer in turn the potential for robotics and artificial intelligence technologies to penetrate new application areas in industry and the home.

Gerard McKee
Robotics and Machine Perception Technical Group Chair
The University of Reading, [email protected]

References
1. C. J. J. Paredis and P. K. Khosla, Synthesis Methodology for Task Based Reconfiguration of Modular Manipulator Systems, Proc. 6th Int’l Symp. Robotics Research: ISRR, 1993.
2. Proc. SPIE 3839, 1999.
3. The Jini™ Technology Specification, Sun Microsystems, at http://www.sun.com/jini/specs/.
4. HAVi: Home Audio/Video Interoperability. Information and specifications at http://www.havi.org/.

Self-repairing mechanical system

We have proposed the concept of a self-repairable machine made up of homogeneous mechanical units. Each unit is an active robotic element with some mobility to allow it to change its local connections, an ability that allows a collection of units to metamorphose into a desired shape through local cooperation (self-assembly). When some of the system units are damaged, they can be detected via communication error and then eliminated, after which spare units are brought in so that the shape can be reassembled (self-repair).

This kind of system can be used as a primary structure in space or deep-sea applications because it is able to change its shape and functionality according to environmental changes. It can also be used as a flexible robotic manipulator that can change its configuration according to required workspace, or change into various types of walking machine to cope with unknown terrain. Ordinary mechanical systems with fixed configurations cannot achieve this versatility.

We have developed several of these systems and verified their self-assembly and self-repair capabilities [1,2]. The first prototype system is made up of 2D units that connect with other units through electromagnetic interaction (see Figure 1). By switching the polarity of the electromagnets, the units can change their local situation. Figure 2 shows the basic reconfiguration procedure. Repeating these local processes, a group of the units can change its global shape.

Each unit has an onboard CPU (Z80) that controls its motion. It also has digital communication channels to adjacent units, thus allowing the full collective to work as a distributed intelligent system. We have developed a homogeneous distributed algorithm to allow the units to form a target shape and then repair the shape when the system contains a faulty unit. Here, “homogeneous” means that each unit is driven by an identical program and data set. In other words, the units do not know their role in the system at the initial time, so they have to differentiate into their appropriate roles through cooperation via inter-unit communication.

A 3D unit system has also been developed (see Figure 3). This fully mechanical system has one DC motor that rotates six orthogonal arms, with a drive connection mechanism at the end of each arm. The motion of the unit is rather slow (it takes 60s to change connection points), which is due to the mechanical connection be-

Figure 1. Two dimensional electromagnetic unit system.

Figure 2. Basic procedures of self-assembly/self-repair.


used to compute the heading direction. This operation is shown in Figure 2, where the original image is shown on the left, the edge features in the middle, and the visually extracted truss structure features on the right.
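The mid-range estimate described here (average spacing of the parallel truss lines for distance, their center of mass for heading) can be sketched with a pinhole camera model. This is a minimal sketch only: the pinhole model, the known physical spacing of the truss members, the focal length in pixels, and every function and parameter name are assumptions made for illustration, not details given in the article.

```python
import math

def estimate_range_and_heading(pairs, focal_px, image_center_x, member_spacing_m):
    """Estimate range and heading from detected parallel line pairs.

    pairs: list of (x_left_px, x_right_px), the image columns of the two
           lines in each detected parallel pair (assumed input format).
    """
    # Average pixel spacing between the two lines of each pair.
    spacings = [abs(right - left) for left, right in pairs]
    mean_spacing_px = sum(spacings) / len(spacings)

    # Pinhole model: pixel spacing = focal_px * real spacing / range.
    range_m = focal_px * member_spacing_m / mean_spacing_px

    # Center of mass of the pairs gives the bearing to the structure.
    centroid_x = sum((left + right) / 2.0 for left, right in pairs) / len(pairs)
    heading_rad = math.atan2(centroid_x - image_center_x, focal_px)
    return range_m, heading_rad

# Hypothetical numbers: two pairs, 500 px focal length, 0.4 m member spacing.
range_m, heading_rad = estimate_range_and_heading(
    [(300.0, 320.0), (400.0, 420.0)],
    focal_px=500.0, image_center_x=320.0, member_spacing_m=0.4)
```

A positive heading here means the structure lies to the right of the optical axis, so the rover would steer right to center it.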

During the third phase, prior to climbing the ramps, the SRR uses a distinctive pattern of stripes on the ramps to position itself within 10cm of the base of the ramps. Once the SRR is within about 5m, it circles around the lander. The close-range ramp alignment algorithm includes three major steps: feature extraction, feature matching, and pose estimation. The features are the six stripes that were deliberately arranged on the ramps so that any two stripes have a unique combination spatially and topologically. This unique configuration greatly reduces uncertainty and computational complexity. An edge detection algorithm (Canny edge detection) is applied first, then all straight-line segments are extracted. In order to find the stripes, we first look for the ramps, which are defined by a set of long, straight, and nearly parallel lines.

With a single detected stripe in the image, an affine transformation can be constructed based on its four corners. If this match is correct, the transformation can help to find other matches. Because there are relatively few stripes (six on the ramps), an exhaustive search is used to pick the best match. Once the matches are found, the pose and orientation are estimated by using the outside corners of the stripes. A minimum of four stripes is used for safe navigation. This operation is shown in Figure 3, where the original image is shown on the left, and the visually extracted features are shown on the right.
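The matching step above (fit an affine transformation to the four corners of one stripe, then check how many other stripes it explains, over all pairings) can be sketched as follows. This is an illustrative sketch under stated assumptions: stripes are given as four corners in a consistent order, the fit is an ordinary least-squares fit, and the scoring rule, tolerance, and all names are hypothetical. Pose estimation from the outside corners is not covered.

```python
from itertools import product

def solve3(m, r):
    """Solve a 3x3 linear system m * p = r by Cramer's rule."""
    def det(a):
        return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
                - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
                + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    d = det(m)
    out = []
    for k in range(3):
        mk = [row[:] for row in m]
        for i in range(3):
            mk[i][k] = r[i]
        out.append(det(mk) / d)
    return out

def fit_affine(src, dst):
    """Least-squares affine map taking src corners onto dst corners."""
    rows = [(x, y, 1.0) for x, y in src]
    m = [[sum(p[i] * p[j] for p in rows) for j in range(3)] for i in range(3)]
    ru = [sum(p[i] * u for p, (u, _) in zip(rows, dst)) for i in range(3)]
    rv = [sum(p[i] * v for p, (_, v) in zip(rows, dst)) for i in range(3)]
    return solve3(m, ru), solve3(m, rv)

def apply_affine(t, pt):
    (a, b, c), (d, e, f) = t
    x, y = pt
    return (a * x + b * y + c, d * x + e * y + f)

def center(quad):
    return (sum(p[0] for p in quad) / 4.0, sum(p[1] for p in quad) / 4.0)

def best_match(model, detected, tol=1.0):
    """Try every (model stripe, detected stripe) pairing; keep the one whose
    transform places the most model stripes on top of detected stripes."""
    det_centers = [center(q) for q in detected]
    best_hits, best_pair = 0, None
    for i, j in product(range(len(model)), range(len(detected))):
        t = fit_affine(model[i], detected[j])
        hits = 0
        for quad in model:
            cx, cy = apply_affine(t, center(quad))
            if any((cx - dx) ** 2 + (cy - dy) ** 2 <= tol ** 2
                   for dx, dy in det_centers):
                hits += 1
        if hits > best_hits:
            best_hits, best_pair = hits, (i, j)
    return best_hits, best_pair

def rect(x0, w):
    return [(x0, 0.0), (x0 + w, 0.0), (x0 + w, 4.0), (x0, 4.0)]

# Hypothetical ramp layout: six stripes with irregular spacing.
model = [rect(x0, 1.0) for x0 in (0.0, 3.0, 7.0, 12.0, 18.0, 25.0)]
true_t = ((2.0, 0.0, 10.0), (0.0, 2.0, 5.0))
detected = [[apply_affine(true_t, p) for p in quad] for quad in model]
hits, pairing = best_match(model, detected)
```

With only six stripes, the search evaluates at most 36 pairings, which is why an exhaustive search is affordable here.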

Using the SRR, we have demonstrated pattern recognition algorithms for the lander return phase of the planned Mars Sample Return missions. The SRR autonomously acquired the lander from 125m away in an outdoor environment using multifeature fusion of line and texture features, traversed the intervening distance using features visually derived from the lander truss structure, and aligned itself with the base of the ramps prior to climbing using precise pattern matching for determining rover pose and orientation.
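The fusion of line and texture features mentioned above can be illustrated with a toy example in which candidate windows (as a horizontal line detector might propose them) are kept only if their wavelet texture signature is close to a reference signature for the lander. This sketch substitutes a one-level Haar transform and a mean-absolute-detail signature for the two-level transform and fractal signature the authors cite; the window size, tolerance, reference values, and function names are all assumptions.

```python
def haar_details(window):
    """One-level 2D Haar transform of an even-sized window.
    Returns the three detail subbands as flat lists."""
    lh, hl, hh = [], [], []
    for i in range(0, len(window), 2):
        for j in range(0, len(window[0]), 2):
            a, b = window[i][j], window[i][j + 1]
            c, d = window[i + 1][j], window[i + 1][j + 1]
            lh.append((a + b - c - d) / 4.0)  # responds to horizontal edges
            hl.append((a - b + c - d) / 4.0)  # responds to vertical edges
            hh.append((a - b - c + d) / 4.0)  # responds to diagonal detail
    return lh, hl, hh

def texture_signature(window):
    """Mean absolute detail coefficient per subband."""
    return tuple(sum(abs(v) for v in band) / len(band)
                 for band in haar_details(window))

def crop(image, top, left, size):
    return [row[left:left + size] for row in image[top:top + size]]

def fuse(image, candidates, reference, size=4, tol=0.2):
    """Keep line-detector candidates whose texture matches the reference."""
    kept = []
    for top, left in candidates:
        sig = texture_signature(crop(image, top, left, size))
        if max(abs(s - r) for s, r in zip(sig, reference)) <= tol:
            kept.append((top, left))
    return kept

# Toy image: left half has horizontal banding, right half vertical banding.
image = [
    [0, 0, 0, 0, 0, 1, 0, 1],
    [1, 1, 1, 1, 0, 1, 0, 1],
    [0, 0, 0, 0, 0, 1, 0, 1],
    [1, 1, 1, 1, 0, 1, 0, 1],
]
reference = (0.5, 0.0, 0.0)  # assumed signature of the lander texture
kept = fuse(image, candidates=[(0, 0), (0, 4)], reference=reference)
```

The second candidate is rejected because its detail energy sits in a different subband, which is the sense in which texture removes false positives left by the line detector.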

This research was carried out by the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration.

Yang Cheng and Terry Huntsberger
Jet Propulsion Laboratory
California Institute of Technology
4800 Oak Grove Drive
Pasadena, CA 91109, USA
E-mail: {yang.cheng, terry.huntsberger}@jpl.nasa.gov

Reference
1. F. Espinal, T. Huntsberger, B. D. Jawerth, and T. Kubota, Wavelet-based fractal signature analysis for automatic target recognition, Optical Engineering 37 (1), p. 166, January, 1998.

Figure 3. Ramp pattern features used for close-range navigation. (Left) Original image. (Right) Detected ramp pattern features.

Pattern recognition

ing very stiff. We have built four units to verify the reconfiguration functionality. Homogeneous software for self-assembly and self-repair for 3D systems has also been developed in simulation.

We are now working on new systems. One of them is a homogeneous modular system capable of self-assembly, self-repair, and also motion generation as a robotic system [3]. As part of a lattice system (such as those described previously), the modules can metamorphose into various configurations without outside help, then function as a robotic system to generate a group motion such as walking or wall climbing. The other is a miniaturized unit system. By using a shape memory alloy (SMA) actuator, we have built a small (4cm) 2D unit [4].

Satoshi Murata
Mechanical Engineering Laboratory
AIST, MITI
1-2 Namiki, Tsukuba, 305-8564 Japan
Phone: +81-298-58-7125
Fax: +81-298-58-7091
E-mail: [email protected]

References
1. S. Murata, H. Kurokawa, and S. Kokaji, Self-assembling Machine, Proc. IEEE Int’l. Conf. Robotics and Automation, pp. 441-448, 1994.
2. S. Murata, et al., A 3-D Self-reconfigurable Structure, Proc. IEEE Int’l. Conf. Robotics and Automation, pp. 432-439, 1998.
3. S. Murata, et al., Self-reconfigurable Modular Robotic System, Intl. Workshop on Emergent Synthesis, IWES ’99, pp. 113-118, 1999.
4. E. Yoshida, et al., Miniaturized Self-reconfigurable System using Shape Memory Alloy, Proc. IEEE/RSJ Int. Conf. on Intelligent Robots and Systems: IROS99, pp. 1579-1585, 1999.

Figure 3. Three dimensional mechanical unit system.

(continued from cover)

mounted on its arm. A raster grid search pattern is implemented by servoing the arm. The images are analyzed using the multifeature fusion algorithm that has been tuned to the specific characteristics of the lander. This operation is shown in Figure 1, where the outputs of the horizontal line algorithm and the wavelet-based area-of-interest operator are shown on the left and in the middle, and the final targeting is shown on the right. The rover was 125m from the lander at this point. After the SRR visually acquires the lander, the traverse is started with visual reacquisition of the lander every 5m. This mode of operation continues until the SRR is within 25m of the lander based on fusion of wheel odometry data and visual angle sizing of the lander.
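As a rough illustration of the hand-off logic in this phase, the sketch below estimates range from the apparent angular width of the lander, blends it with a wheel-odometry estimate, and lists the ranges at which the lander would be reacquired. The lander width, the equal weighting of the two estimates, and all function names are assumptions made for the example; the article does not give the actual fusion rule.

```python
import math

LANDER_WIDTH_M = 2.0  # assumed lander width; not stated in the article

def range_from_angle(angular_width_rad, width_m=LANDER_WIDTH_M):
    """Range at which an object of known width subtends the given angle."""
    return width_m / (2.0 * math.tan(angular_width_rad / 2.0))

def fuse_range(odometry_m, visual_m, visual_weight=0.5):
    """Weighted average of the two range estimates (weights are assumed)."""
    return (1.0 - visual_weight) * odometry_m + visual_weight * visual_m

def select_phase(range_m):
    """Phase boundaries taken from the text: 25 m and 5 m."""
    if range_m > 25.0:
        return "long-range"
    if range_m > 5.0:
        return "mid-range"
    return "close-range"

def reacquisition_ranges(start_m, step_m=5.0, handoff_m=25.0):
    """Ranges at which the lander is visually reacquired during phase one."""
    ranges = []
    r = start_m
    while r > handoff_m:
        r = max(r - step_m, handoff_m)
        ranges.append(r)
    return ranges

# Example: the lander subtends the angle of a 2 m object seen from 125 m.
theta = 2.0 * math.atan(1.0 / 125.0)
visual = range_from_angle(theta)
fused = fuse_range(odometry_m=123.0, visual_m=visual)
stops = reacquisition_ranges(fused)
```

In this example the traverse ends at the 25 m hand-off, where `select_phase` switches to the mid-range behavior.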

During the second phase, the SRR traverses from 25m to a ramp standoff location of 5m. Since the relative distance is more important than absolute position during this phase, we use parallel line features visually extracted from the lander truss structure. In order to correctly distinguish the truss structure itself, the lander deck is detected first, which appears as a set of nearly level lines. Any parallel lines below the deck are considered as part of the truss structure. The average distance between the parallel pairs is then used to estimate the distance, and the center of mass of these parallel lines is


I-Cubes: A modular self-reconfigurable bipartite system

Statically stable gaits currently available for mobile robots include using wheels, treads, and similar devices that limit the robot’s locomotion capabilities. A robot on wheels is usually incapable of climbing a set of stairs, or moving over large obstacles. Recent trends in autonomous mobile robot research have tried to find solutions for this problem by designing robots with different gait mechanisms that can provide locomotion in non-ideal environments. These efforts are aimed at slowly emerging applications that use small inexpensive robots to accomplish tasks in unstructured environments and narrow spaces.

The I-Cubes (or ICES-Cubes) [1] project comes out of a vision of a modular self-reconfigurable group of robots comprised of two module types with different characteristics. When combined as a single entity, sufficient modules will be capable of self-reconfiguring into defined shapes, which in turn will provide a new type of locomotion gait that may be combined with other capabilities. A large group of modules that can change its shape according to the locomotion, manipulation or sensing task at hand will then be capable of transforming into a snake-like robot to travel inside an air duct or tunnel, a legged robot to move on unstructured terrain, a climbing robot that can climb walls or move over large obstacles, a flexible manipulator for space applications, or an extending structure to form a bridge.

Designing identical elements for a modular system has several advantages over large and complex robotic systems. The units can be mass-produced, and their homogeneity can provide faster production at a lower cost. A large system consisting of many elements is less prone to mechanical and electrical failures, since it would be capable of replacing nonfunctioning elements by removing them from the group and reconfiguring its elements. Homogeneous groups of modules that are capable of self-reconfiguring into different shapes also provide a manufacturing solution at the design phase where identical elements are considered, while providing a modular system that can be rearranged for different tasks.

To obtain the advantages listed above, a modular system must have several essential properties, such as geometric, physical, and mechanical compatibility among individual modules. Several design issues need to be considered for a modular self-reconfiguring system to be truly autonomous.

I-Cubes are a bipartite robotic system, which is a collection of independently controlled mechatronic modules (links) and passive connection elements (cubes). Links are capable of connecting to and disconnecting from the faces of the cubes. Using this property, they can move themselves from one cube to another. In addition, while attached to a cube on one end, links can move a cube attached to the other end (Figure 1). We envision that all active (link) and passive (cube) modules are capable of permitting power and information flow to their neighboring modules. A group of links and cubes in fact forms a dynamic 3-D graph where the links are the edges, and the cubes are the nodes. When the links move, the structure and shape of this graph changes.
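The graph view described above, with cubes as nodes and links as edges, can be sketched as a small data structure in which moving one end of a link rewires the graph. The class and method names are hypothetical, and the sketch ignores geometry, power, and communication; it only shows how a link motion changes the graph structure.

```python
class ICubeGraph:
    """Cubes are the nodes and links are the edges of the graph."""

    def __init__(self):
        self.cubes = set()
        self.links = {}  # link name -> [cube at one end, cube at other end]

    def add_cube(self, cube):
        self.cubes.add(cube)

    def add_link(self, link, cube_a, cube_b):
        self.links[link] = [cube_a, cube_b]

    def move_link_end(self, link, from_cube, to_cube):
        """Detach one end of a link from from_cube and attach it to to_cube.
        The other end stays attached, as in a link transferring between cubes."""
        if to_cube not in self.cubes:
            raise ValueError("unknown cube")
        ends = self.links[link]
        if from_cube not in ends:
            raise ValueError("link is not attached to that cube")
        ends[ends.index(from_cube)] = to_cube

    def edges(self):
        return sorted(tuple(sorted(ends)) for ends in self.links.values())

    def neighbors(self, cube):
        out = set()
        for a, b in self.links.values():
            if a == cube:
                out.add(b)
            if b == cube:
                out.add(a)
        return out

g = ICubeGraph()
for name in ("A", "B", "C"):
    g.add_cube(name)
g.add_link("L1", "A", "B")
g.add_link("L2", "B", "C")
before = g.edges()
g.move_link_end("L1", "B", "C")  # L1 now joins A and C
after = g.edges()
```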

This self-reconfiguring system has the following properties:

• Elements can be independently controlled; only the cube attached to the moving end of a link is affected by link motions.

• All elements have the same characteristics and are mechanically/computationally compatible, i.e., any link can connect to any cube.

• The 3-D graph formed by the elements fits a cubic lattice to guarantee interlocking of neighboring elements.

• Active elements have sufficient degrees of freedom to complete motions in three-dimensional space.

Since all the actuation for self-reconfiguration (with the exception of the attachment mechanism) is provided by the links, cubes can be used to provide computation, sensing and power resources. If the modules are designed to exchange power and information, the cubes can be equipped with on-board batteries, microprocessors and sensing modules to become the “brains” of the system. Furthermore, it is possible to remove some of the attachment points on the cubes to provide these modules with different and faster gaits, such as wheeled locomotion. Specifically, we envision small

Figure 1. (a) A link transferring from one cube to another. (b) Two links exchanging a cube.

continued on p. 7


Modular robotics development at MMS

Modular Motion Systems Inc (MMS) in Palos Verdes, California, is engaged in the development and marketing of modular robotic equipment and control software for industrial and research applications. The company, working with many local organizations providing manufacturing services, holds foreign and domestic patents covering basic modular robotic concepts and offers a wide range of robotic modules, software for application programming, simulation and runtime operation, application engineering services, and customer support.

The MMS system offers a family of robotic modules that allow application engineers or researchers to build manipulator systems that fit their needs. There are two types of MMS module: joints and links, both provided in a range of sizes (5, 7, 10, 14 and 20cm diameter). The joints are the active elements of the system, and come in two types: rotary joints and linear joints. These active joints are connected together with passive link modules that provide the structural geometry of manipulator configurations (see Figure 1). The user may configure as many degrees of freedom (i.e., joints), in any arrangement (set by the links), as the application requires.

The configurations are programmed and operated by a single desktop PC control computer (ACP) which offers the user a programming environment called the Model Manager. This presents an array of graphical programming tools for online and off-line operations. Central to the system architecture, the Model Manager embodies software designs that host the integration of several machine intelligence technologies, including computer vision, planning, simulation and multi-agent cooperation.

The joint modules are completely self-contained. Each has its own control processor to decode motion commands, perform kinematic solution calculations, generate precise trajectory interpolations, and manage power flow to the DC servomotor. Each joint also has an on-board power amplifier driving the servomotor in pulse-width-modulated (PWM) mode, generating high power levels with high positioning accuracy and very low levels of waste heat. The only connection required for each module, 48V dc with RF communication superimposed, is made automatically as the modules are assembled. As seen in Figure 2, there are no external cables.

The modular architecture offers many advantages in practice. The original objective was to provide a cost-effective means of building either simple or complex systems that are not commercially available. Substantial cost savings have been discovered, however, when building standard robot configurations like PUMA or SCARA units. These advantages derive from the use of much simpler system building blocks, i.e. the 1-DOF modules, which are easy to manufacture and maintain.

Simple MMS configurations might include one degree-of-freedom (DOF) arrangements to steer a vehicle or control a plant process, two DOF arrangements to gimbal a camera or antenna, or three or four DOF systems to perform simple repetitive tasks. While hard automation devices that require engineering and fabrication might be more appropriate for some long term applications, rapid deployment and easy reuse of modules make MMS more cost effective for shorter term needs.

Complex MMS configurations, on the other hand, can be assembled and deployed at much lower cost than would be spent to develop a special purpose design. Such applications as multi-legged walkers, branching arms with multiple end-effectors, mobile platforms hosting diverse activities, or multiple arms working in coordination on a single task, are all examples of complex systems that might be deployed at much lower cost with a modular system. In addition, the modular architecture makes maintenance quicker and easier. Even the standard factory configurations can be serviced in minutes instead of hours with a simple module replacement; the Model Manager control software knows when and where problems may exist.

Several design improvements are in development for the next generation of MMS units. The new module interconnect latch is practically impossible to attach incorrectly and cannot loosen from activity. The new electrical interconnect scheme associated with the new latch is simpler and more reliable. The use of fiber composite materials is further reducing the cost and weight of the link modules. Every module configuration requires a unique kinematic solution: a set of equations translating Cartesian end-effector position into joint angles. An algorithm based on Fuzzy Associative Memory (FAM) methods has been developed to automate this procedure. Most important, the Model Manager control software is continuously expanding to include more services. It has also been rehosted from Lisp to a Java platform and the simulation functions now use Java3D.
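To make the idea of a kinematic solution concrete, the sketch below gives the standard closed-form solution for the simplest non-trivial case, a planar arm with two rotary joints. It is not the FAM-based method mentioned above, which the article does not describe; the function names and link lengths are chosen only for the example.

```python
import math

def two_link_ik(x, y, l1, l2, flip_elbow=False):
    """Joint angles (theta1, theta2) that place the end-effector at (x, y)."""
    d2 = x * x + y * y
    c2 = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= c2 <= 1.0:
        raise ValueError("target out of reach")
    s2 = math.sqrt(1.0 - c2 * c2)
    if flip_elbow:
        s2 = -s2  # the mirror-image elbow configuration
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2

def two_link_fk(theta1, theta2, l1, l2):
    """Forward kinematics, used here to check the inverse solution."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

t1, t2 = two_link_ik(1.0, 1.0, l1=1.0, l2=1.0)
check = two_link_fk(t1, t2, 1.0, 1.0)
```

Each added joint or change of link geometry changes these equations, which is why a reconfigurable system benefits from generating them automatically.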

More detailed information is available through the web address below and the reference given [1].

William Schonlau
Modular Motion Systems
31107 Marne Drive
Rancho Palos Verdes, CA 90275
E-mail: [email protected]
http://www.mms-robots.com

Reference
1. William Schonlau, MMS: a modular robotic system and model-based control architecture, Proc. SPIE 3839, pp. 289-296, 1999.

Figure 1. The two MMS Module types.

Figure 2. Modules assembled into PUMA configuration.


PolyBot and Proteo: Two modular reconfigurable robot systems

Modular robotic systems are composed of modules that can be disconnected and reconnected in different arrangements to form a new system enabling new functionalities. Self-reconfigurability arises when the system can rearrange its own modules. We are interested in leveraging the repeated use of one or two module types giving a large variety of configurations of increasing complexity without a high cost in design. The resulting systems promise to be versatile (by adapting their shape to the task), robust (through redundancy), and low cost (via batch fabrication) [1-4].

After prototyping several module types over the past eight years to prove the basic concepts [4,6], as well as more recent development work [2,3,7,8], it is clear that the feasibility of these systems has been established. While these projects have involved the construction of only tens of modules at the most, we are now interested in exploring issues that arise as the numbers of modules are increased to hundreds or thousands while maintaining a small number of module types. We are exploring two basic systems: one called PolyBot, the most recent system under development, which involves snake-like or octopus-like structures and motions; and another called Proteo, which can form rigid structures (somewhat like autonomous bricks) that can rearrange themselves into different shapes.

PolyBot

PolyBot is comprised of two types of modules, one called a segment, which contains most of the functionality, the other called a node. It is easiest to visualize the modules as cube-shaped. Segments have two connection plates (for mechanical, communications, and power coupling), and one rotational degree of freedom. Each segment contains a low-speed high-torque motor, a Motorola PowerPC 555 microcontroller, motor current and position sensing, and proximity sensing. The node is rigid with six connection plates (one on each side of the cube). It serves two purposes: it allows configurations other than serial chains, and the space inside the cube can house such things as more powerful computing resources and batteries.

An earlier project, Polypod, demonstrated three different classes of locomotion (a tumbling motion, an earthworm-like gait, and a caterpillar-like gait) with the 11 modules that were constructed. In addition, another five classes of locomotion were simulated [9]. Its successor, PolyBot, has demonstrated another four classes of locomotion (a rolling-track, a sinusoidal snake-like gait, a platform with up to six legs, and a four-legged spider) with up to 32 modules connected together. In addition, PolyBot has demonstrated distributed manipulation of paper, boxes and tennis balls [10]. These demonstrations show the versatility of modular systems.
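A sinusoidal snake-like gait of the kind listed above is commonly produced by driving each joint in a chain with the same sine wave, delayed by a fixed phase from one module to the next. The sketch below shows that generic scheme for a chain of one-degree-of-freedom segments; it is not taken from the PolyBot controller, and the amplitude, period, and phase step are assumed values.

```python
import math

def snake_joint_angles(n_modules, t, amplitude_rad=0.5, period_s=2.0,
                       phase_step_rad=math.pi / 4):
    """Joint angle for each module in the chain at time t (seconds).
    A travelling wave moves down the chain as t increases."""
    omega = 2.0 * math.pi / period_s
    return [amplitude_rad * math.sin(omega * t - i * phase_step_rad)
            for i in range(n_modules)]

angles_start = snake_joint_angles(8, 0.0)
angles_later = snake_joint_angles(8, 2.0)  # one full period later
```

With a phase step of π/4, eight modules span exactly one wavelength, so the body shape repeats every eight modules.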

In addition to particular tasks and configurations, we have demonstrated reconfiguration from a loop to a snake and from a snake into a four-legged spider configuration. This last reconfiguration involved 24 modules and was guided by a human teleoperator. The spider configuration is shown in Figure 1. A similar reconfiguration sequence was simulated with 180 modules [11].

Proteo

Proteo is the name of a system that uses substrate or lattice reconfiguration. The motions of the modules are along one-dimensional paths, stopping only at positions that form a lattice. In addition, each module can only move itself relative to one neighbor. These constraints ease the computational problems of inverse and forward kinematics and self-collision detection by restricting the motions to be local and discrete [12].

The current design of Proteo involves a rhombic dodecahedron (RD) shape which packs in a face-centered-cubic fashion. Actuation would occur by the rotation of the RD about an edge into one of the 12 adjacent positions. In contrast to PolyBot, the Proteo modules were intended to be simpler and smaller since they need little sensing, and only discrete actuation. In practice, it is turning out to be difficult to construct.
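The 12 adjacent positions of a face-centered-cubic packing can be enumerated directly if lattice sites are taken as integer triples whose coordinates sum to an even number. The sketch below uses that standard coordinate convention and adds a simplified move rule (a module may move to an empty adjacent site that still touches another module). The move rule and the function names are assumptions for illustration and ignore collisions during the rotation itself.

```python
from itertools import permutations

def fcc_neighbor_offsets():
    """The 12 nearest-neighbor offsets of an FCC lattice: all
    arrangements of (+/-1, +/-1, 0)."""
    offsets = set()
    for a in (1, -1):
        for b in (1, -1):
            offsets.update(permutations((a, b, 0)))
    return sorted(offsets)

def is_lattice_site(p):
    """FCC sites in this convention have an even coordinate sum."""
    return sum(p) % 2 == 0

def neighbors(p):
    return [tuple(c + d for c, d in zip(p, o)) for o in fcc_neighbor_offsets()]

def free_moves(module, occupied):
    """Empty adjacent sites that remain in contact with another module."""
    others = occupied - {module}
    moves = []
    for site in neighbors(module):
        if site in occupied:
            continue
        if any(site in neighbors(o) for o in others):
            moves.append(site)
    return moves

occupied = {(0, 0, 0), (1, 1, 0)}
moves = free_moves((1, 1, 0), occupied)
```

Because every move lands on one of a fixed set of sites, planning reduces to a discrete search over lattice positions rather than continuous kinematics.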

Since one module can only move itself (or, equivalently, one neighbor) to an adjacent position, the functionality is essentially the reconfiguration of the conglomerate shape. We have been exploring the planning problem of forming arbitrary shapes [13], and have developed and simulated six algorithms. The latest is the combination of two methods: the first is a goal-ordered method in which every module attempts to reach the closest available goal position in the target configuration according to a preplanned order; and the second is a simulated heat flow method in which module motion is directed by the flow of heat which is generated at goal positions and propagated between modules. This system has proven to be able to successfully plan the motion of up to 600 modules with only local communications and state knowledge between modules [14] (see Figure 2).

Future work

By the end of year 2001 we hope to have demonstrated 200 PolyBot modules performing autonomous reconfiguration and locomotion. We should also have demonstrated Proteo modules reconfiguring into multiple shapes as well as simulating thousands of modules. While the modules will each fit inside a cube of 5cm on a side by the 2001 deadline, the long term goal is to shrink the modules down as far as possible while increasing the numbers past the thousands: perhaps to millions.

This work is funded in part by the Defense Advanced Research Projects Agency (DARPA) contract # MDA972-98-C-0009. Contributors to this effort include Sunil Agrawal, Arancha Casal, David Duff, Craig Eldershaw, Rob Gorbet, Leo Guibas, Maia Hoeberechts, Sam Homans, Kea Kissner, An Thai Nguyen, Kimon Roufas, John Suh, Kevin Wooley, and Ying Zhang.

Figure 1. PolyBot with 25 modules in a four-legged spider configuration.

Figure 2. Proteo simulation with 441 modules in a teacup formation.

Mark Yim
Xerox Palo Alto Research Center
3333 Coyote Hill Rd
Palo Alto, CA 94304
E-mail: [email protected]

References
1. T. Fukuda and S. Nakagawa, Dynamically Reconfigurable Robotic System, Proc. IEEE Int’l Conf. on Robotics and Automation, pp. 1581-1586, 1988.
2. S. Murata, H. Kurokawa, E. Yoshida, K. Tomita, and S. Kokaji, A 3D Self-Reconfigurable Structure, Proc. IEEE Int’l Conf. on Robotics and Automation, pp. 432-439, May 1998.
3. A. Pamecha, C. Chiang, D. Stein, and G.S. Chirikjian, Design and Implementation of Metamorphic Robots, Proc. ASME Design Eng. Tech. Conf. and Computers in Eng. Conf., Irvine, California, August 1996.
4. M. Yim, New Locomotion Gaits, Proc. IEEE Int’l Conf. on Robotics and Automation, pp. 2508-2514, May 1994.
5. M. Yim, A Reconfigurable Modular Robot with Many Modes of Locomotion, Proc. of JSME Int’l Conf. on Advanced Mechatronics, pp. 283-288, 1993.
6. M. Yim, D.G. Duff, and K.D. Roufas, PolyBot: A Modular Reconfigurable Robot, accepted to Proc. of the IEEE Intl. Conf. on Robotics and Automation, San Francisco, 2000.
7. D. Rus and M. Vona, Self-reconfiguration Planning with Compressible Unit Modules, Proc. IEEE Int’l Conf. on Robotics and Automation, pp. 2513-2520, May 1999.
8. P. Will, A. Castano, and W.-M. Shen, Robot modularity for self-reconfiguration, Proc. SPIE 3839, pp. 236-245, September 1999.
9. http://www.parc.xerox.com/modrobots (online since 1997)
10. M. Yim, J. Reich, and A.A. Berlin, Two Approaches to Distributed Manipulation, Distributed Manipulation, K. Bohringer and H. Choset (eds.), Kluwer Academic Publishing, 2000.
11. http://robotics.stanford.edu/users/mark/multimode.html (online since 1994)
12. A. Casal and M. Yim, Self-Reconfiguration Planning for a Class of Modular Robots, Proc. SPIE 3839, pp. 246-257, September 1999.
13. A.T. Nguyen, L.J. Guibas, and M. Yim, Controlled Module Density Helps Reconfiguration Planning, accepted 4th Int’l Workshop on Algorithmic Foundations of Robotics: WAFR, 2000.
14. Y. Zhang, M. Yim, J. Lamping, and E. Mao, Distributed Control for 3D Shape Metamorphosis, submitted Autonomous Robots, special issue on self-reconfigurable robots, 1999.

I-Cubes
continued from p. 4

groups of robots that can reposition themselves to form a group capable of changing its gait in order to move over obstacles that single elements cannot overcome. Similar scenarios that require reconfiguration include climbing stairs and traversing pipes.

Path planning for modular self-reconfigurable systems has proven to be difficult at best, due to the total number of spatial configurations for a given number of modules: the possibilities grow exponentially with this number. In addition to the large number of configurations, motion-planning algorithms need to be able to consider multiple sequences of these configurations in order to find the one that will lead from a given initial position/shape to a final one. We are currently testing heuristic and knowledge-based algorithms to find intelligent solutions that can be implemented in real time.

Cem Ünsal
Carnegie Mellon University
Institute for Complex Engineered Systems (ICES)
5000 Forbes Avenue
Pittsburgh, PA 15213-3890
E-mail: [email protected]
http://www.cs.cmu.edu/~unsal/research/ices/cubes.html

Reference
1. C. Ünsal, H. Kiliççöte, and P.K. Khosla, I(CES)-Cubes: A Modular Self-Reconfigurable Bipartite Robotic System, Proc. SPIE 3839, pp. 258-269, 1999.

The CMU MultiRobot Lab
continued from p. 12

walls, roads, opponent robots, and obstacles. All of these objects may be included in a simulation by editing an easily understandable human-readable description file.

TeamBots supports the low-cost platform in simulation and in hardware (Figure 2).

Multirobot formations
The potential-field approach is a well-known strategy for robot navigation. In this paradigm, repulsive and attractive fields are associated with important objects in the environment (e.g. goal locations or obstacles to avoid). To navigate, the robot computes the value of the vectors corresponding to each relevant field, then combines them (usually by summation) to compute a movement vector based on its current position. The result is emergent navigational behavior reflecting numerous constraints and/or intentions encoded in the robot’s task-solving behavior.

We have extended the mechanism to multiple robots so that the potential field impacting a robot’s path is shaped by the presence of team or opponent robots. We call these potential functions social potentials. This approach provides an elegant means for specifying team strategies in tasks like foraging, soccer and cooperative navigation.6 This work is continuing as we seek to formalize and carefully analyze the various types of potentials appropriate for various multirobot tasks.

Figure 3. A formation of four simulated robots navigates using the social potentials approach, initially developed at Georgia Tech and extended at the MultiRobot Lab.

Tucker Balch
The Robotics Institute
Carnegie Mellon University
5000 Forbes Avenue
Pittsburgh, PA 15217
Phone: 412/268-1780
E-mail: [email protected]
http://www.cs.cmu.edu/~trb

References
1. http://www.cs.cmu.edu/~multirobotlab
2. S. Lenser and M. Veloso, Sensor Resetting Localization, IEEE Int’l Conf. Robotics and Automation, 2000, to appear.
3. T. Balch, Hierarchic Social Entropy: An Information Theoretic Measure of Robot Group Diversity, Autonomous Robots 8 (3), to appear.
4. http://www.cs.cmu.edu/~coral/minnow
5. http://www.teambots.org
6. T. Balch, Social Potentials for Scalable Multi-Robot Formations, IEEE Int’l Conf. Robotics and Automation 2000, to appear.
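The potential-field computation described under Multirobot formations (one vector per relevant field, combined by summation) can be sketched in a few lines of Python. This is a generic illustration and not TeamBots code: the function names, the unit-strength attraction, and the linear fall-off of the repulsive field inside an assumed `radius` are choices made only for this example. A social potential would be added the same way, as one more field centered on a teammate or opponent.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def attract(pos: Vec, goal: Vec, gain: float = 1.0) -> Vec:
    """Constant-magnitude pull toward a goal (zero when already there)."""
    dx, dy = goal[0] - pos[0], goal[1] - pos[1]
    d = math.hypot(dx, dy)
    if d == 0:
        return (0.0, 0.0)
    return (gain * dx / d, gain * dy / d)


def repel(pos: Vec, obstacle: Vec, radius: float = 2.0, gain: float = 1.0) -> Vec:
    """Push away from an obstacle, fading linearly to zero at `radius`."""
    dx, dy = pos[0] - obstacle[0], pos[1] - obstacle[1]
    d = math.hypot(dx, dy)
    if d == 0 or d >= radius:
        return (0.0, 0.0)
    strength = gain * (radius - d) / radius
    return (strength * dx / d, strength * dy / d)


def movement_vector(pos: Vec, goals: List[Vec], obstacles: List[Vec]) -> Vec:
    """Combine all field vectors at the robot's position by summation."""
    fields = [attract(pos, g) for g in goals] + [repel(pos, o) for o in obstacles]
    return (sum(f[0] for f in fields), sum(f[1] for f in fields))


# Goal straight ahead on the x axis, one obstacle just to the robot's left.
step = movement_vector((0.0, 0.0), goals=[(10.0, 0.0)], obstacles=[(0.0, 1.0)])
```

Here the robot is pulled along +x by the goal and nudged toward -y by the obstacle, so the summed vector steers it forward and slightly away from the obstacle.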


Modelling modular robotics systems
Robotic systems can be created by combining the functionality of a set of separate system components such as sensors, effectors, and computational resources. The effort of the programmer in these circumstances is often spent in making explicit the inherent functionality that can emerge when these components are combined. Benefits, at the very least in terms of saved programming effort, can be achieved if an automated system can reason about these interactions. In order to achieve this, the functionality provided by the resources must be described in a manner that allows reasoning to be performed about their suitability for a particular task and the consequences of their interaction with other resources. At a more practical level, resources must also be provided with facilities that support their interconnection, including considerations of data requirements and appropriate control structures.

The MARS model, developed at the University of Reading, addresses these issues. Its chief contribution is the introduction of an explicit, declarative description of the individual resources and their target configuration in a modular robot system. A reasoning model exploits this description to identify and resolve the consequences, both beneficial and detrimental, of the interactions between the resources. MARS exploits annotations, semantic descriptions added to source code as comments, to repackage the software defining the robotics resources as ‘modules’, the basic unit the model recognizes. MARS identifies physical (sensors and effectors) and non-physical (algorithmic) modules, and provides notation for describing both the modules and the physical, data, and control relationships between them. A configuration defining a modular robotics system comprises an itemized list of these relationships.

The model resolves consequences through the introduction of additional modules to produce a specification that is the basis for realizing the target robot system. It extracts the annotations from the source code and generates a module, with source code, for the corresponding modular robot system. This programming model has been implemented in an environment called DEIMOS.

The practical benefit of the approach represented by MARS is the ability to move swiftly from specification to implementation without requiring a programmer either to glue the components together or to articulate the inherent functions that can emerge when modules interact.

Exploiting object-oriented concepts
MARS exploits object-oriented concepts in its reasoning. The most important example of this is in the inheritance of motion functionality. Central to this is the notion that sensors and end-effectors have an inherent potential for motion in six degrees of freedom, even though they may not be able to express that motion directly. The MARS model allows these components (and tools in general) to inherit motion functionality from the effectors on which they are mounted. When, for example, a camera is mounted on a pan-tilt head, it can inherit the pan and tilt motions from the head. If the latter is in turn mounted on a mobile base, the camera can also inherit the motion functionality of the base. This includes the inheritance of similar rotational functions from the pan-tilt head (pan) and the mobile base (rotate).

The MARS model also identifies data and control consequences associated, respectively, with distributing data to multiple client modules and arbitrating between multiple control commands converging on a single effector. These provide scope for a range of control architectures, including centralized, decentralized, hybrid and agent-based architectures.

We can illustrate functional inheritance in the MARS model using the mobile camera example above. For each of the three components, a corresponding MARS module is defined. We will denote these, respectively, as the Camera module, the PanTiltHead module and the MobilePlatform module. Each has a well-defined interface: the Camera module for grabbing images, and the PanTiltHead and MobilePlatform modules for pan-tilt motions and drive-rotate motions respectively. We assume that the vertical axes of rotation for the pan and rotate motions of the latter are coincident (Figure 1). The configuration definition for the system is then trivial, and defined as follows:

    @configuration
    @modules Camera, PanTiltHead, MobilePlatform
    Camera is_mounted_on PanTiltHead
    PanTiltHead is_mounted_on MobilePlatform

The definition identifies the modules involved in the configuration and their physical relationship. The MARS reasoning model then identifies the inherited functionality available to the camera from the two modules on which it is mounted. The specification that makes this explicit is defined as follows:

    @specification
    @modules Camera, PanTiltHead, MobilePlatform, InheritanceNode1
    Camera is_mounted_on PanTiltHead
    PanTiltHead is_mounted_on MobilePlatform
    Camera uses_motion_from InheritanceNode1 Rotn_X, Rotn_Y, Trans_Z
    InheritanceNode1 {
        Rotn_X: PanTiltHead
        Rotn_Y: PanTiltHead, MobilePlatform
        Trans_Z: MobilePlatform
        resolve Rotn_Y: UseOnly PanTiltHead
    }

The InheritanceNode in the specification collects together the components of the functional inheritance and specifies how the rotational motions required by the camera should be resolved between the head and the base. In this case it is simply resolved in favor of the head. Other resolution strategies are possible. This specification now forms the basis, using tools within the DEIMOS software environment, for the creation of source code for the composite system. The MARS model is still in early stages of development. Further research will evaluate the scope of the model and will investigate extensions to enhance its capability to deal with diverse robotics and computational systems.
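The reasoning step behind the specification above, walking the is_mounted_on chain and collecting the motions each carrier offers, can be mimicked in a short Python sketch. This is not DEIMOS or MARS code: the dictionaries, the function names, and the "nearest carrier wins" rule are assumptions chosen so that the result matches the InheritanceNode1 entries in the example, where Rotn_Y is resolved in favor of the head.

```python
from typing import Dict, List

# Hypothetical encoding of the configuration: module -> module it is mounted on.
MOUNTED_ON: Dict[str, str] = {
    "Camera": "PanTiltHead",
    "PanTiltHead": "MobilePlatform",
}

# Hypothetical motion capabilities, using the motion names from the example.
MOTIONS: Dict[str, List[str]] = {
    "Camera": [],
    "PanTiltHead": ["Rotn_X", "Rotn_Y"],
    "MobilePlatform": ["Rotn_Y", "Trans_Z"],
}


def inherited_motions(module: str, mounted_on: Dict[str, str],
                      motions: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Map each motion to the carriers that can provide it, nearest first."""
    providers: Dict[str, List[str]] = {}
    seen = {module}
    carrier = mounted_on.get(module)
    while carrier is not None and carrier not in seen:  # guard against cycles
        seen.add(carrier)
        for motion in motions.get(carrier, []):
            providers.setdefault(motion, []).append(carrier)
        carrier = mounted_on.get(carrier)
    return providers


def resolve_use_nearest(providers: Dict[str, List[str]]) -> Dict[str, str]:
    """Assumed resolution strategy: keep only the nearest carrier per motion."""
    return {motion: sources[0] for motion, sources in providers.items()}


node = inherited_motions("Camera", MOUNTED_ON, MOTIONS)
resolved = resolve_use_nearest(node)
```

With these inputs, `node` lists both the head and the base as sources of Rotn_Y, and `resolved` keeps only the head, mirroring the `resolve Rotn_Y: UseOnly PanTiltHead` line. Other strategies, such as preferring the base or splitting a rotation between the two, would only change `resolve_use_nearest`.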

G. T. McKee and J. A. Fryer
The University of Reading, UK.
E-mail: [email protected]

References
1. J. A. Fryer and G. T. McKee, Resource Modelling and Combination in Modular Robotics Systems, Proc. IEEE Int’l Conf. Robotics and Automation, pp. 3167-3172, Leuven, Belgium, 1998.

Figure 1. Modular robot system.


The IRIS Laboratory: 3D imaging and data fusion at the University of Tennessee
The Imaging, Robotics, and Intelligent Systems (IRIS) Laboratory, in the University of Tennessee’s Department of Electrical and Computer Engineering, performs research in 3D imaging and data fusion. Applications of this research include mapping of large facilities for robotic navigation and automated construction of virtual environments for real-time simulation. IRIS Lab core technologies are classified into the three areas illustrated in Figure 1 and described below.

Scene building
To acquire usable data, accurate sensor characterization is obviously important. In many scenarios, sensor placement must be addressed to ensure that the captured data is as accurate and complete as possible. Data sets captured from different viewpoints, perhaps with different sensor modalities, must be registered to a common coordinate system. Once registered, the data can be fused to improve the information available from a single sensor or to capture additional information, such as texture or thermal characteristics. The final outcome of this task is a dense lattice of 3D, multimodal data that describes the scene geometry and other spatial and spectral characteristics.

Scene description
Once constructed, a scene generally requires further processing to be of practical use. Objects of interest might need to be segmented from other objects, clutter, and/or background. After segmentation, objects may be modeled to aid in manipulation, recognition, and/or visualization. The amount of data captured in the scene building process often exceeds the needs or capabilities of the application, thereby necessitating data reduction. On the other hand, data enhancement may be required for the examination of small details. A multiresolution analysis of the data can benefit the solution of each of these problems. Referring to Figure 1, note that information flows in both directions between Scene Building and Scene Description. In many cases, intermediate scene description results can be used to modify the operation of the scene building module.

Data visualization
Current visualization activities are focused on examining the results from scene building and scene description. As these results are often used for virtual reality (Vr) or to visualize reality (vR), research must account for varying visualization requirements. The objective is to provide appropriate data so that “event” dependent visualization can be achieved. Example events might include viewpoint, hardware limitations, constant frame rate, and/or object specific interest.

Figure 1. IRIS Lab core technologies.

Figure 2. 3D scene automatically reconstructed from unregistered range and video.

The IRIS Lab is presently supported by the U.S. Department of Energy (DOE) through the University Research Program in Robotics (URPR) and by the U.S. Army’s Tank-automotive & Armaments Command (TACOM) through the National Automotive Center (NAC) and the Automotive Research Center (ARC).

Mongi A. Abidi and Jeffery R. Price
328 Ferris Hall
University of Tennessee
Knoxville, TN 37996-2100, USA
E-mail: {abidi, jrp}@utk.edu
http://www.iristown.engr.utk.edu

References
1. C. Gourley, C. Dumont, and M. Abidi, Enhancement of 3D models for fixed rate interactivity during display, Proc. IASTED Int’l Conf. Robotics and App., pp. 174-179, October 1999.
2. L. Wong, C. Dumont, and M. Abidi, Next best view system in a 3D object modeling task, Proc. IEEE Int’l Symp. Computational Intelligence in Robotics and Automation: CIRA’99, pp. 306-311, November 1999.
3. F. Boughorbal, D. Page, and M. Abidi, Automatic reconstruction of large 3D models of real environments from unregistered data sets, Proc. SPIE 3958, 2000.
4. H. Gray, C. Dumont, and M. Abidi, Integration of multiple range and intensity image pairs using a volumetric method to create textured 3D models, Proc. SPIE 3966, 2000.
5. E. Juarez, C. Dumont, and M. Abidi, Object modeling in multiple-object 3D scenes using deformable simplex meshes, Proc. SPIE 3958, 2000.
6. M. Toubin, D. Page, C. Dumont, F. Truchetet, and M. Abidi, Multi-resolution wavelet analysis for simplification and visualization of multi-textured meshes, Proc. SPIE 3960, 2000.
7. Y. Zhang, Y. Sun, H. Sari-Sarraf, and M. Abidi, Impact of intensity edge map on segmentation of noisy range images, Proc. SPIE 3958, 2000.

Figure 3. Mesh reduction using a multiresolution wavelet analysis: (a) original terrain mesh; (b) mesh after reduction.


Mobile robots: Part of a smart environment
Smart environments consist of numerous agents perceiving the environment, communicating with other systems and humans, reasoning about changes to the environment, and performing those changes accordingly. A key characteristic for a system operating in a smart environment is “situatedness”: a concept that was introduced to the fields of AI and robotics in the 1980s. A situated system is in tight interaction with its environment: analyzing the situation at hand and calculating appropriate responses in a timely fashion. This is the opposite of the traditional approach to intelligent systems, which emphasized computationally expensive symbolic models and planning. In our research, the concept of situatedness is extended to the field of mobile computing. In addition to a robot, a situated system can be a mobile phone, a wearable computer, a handheld navigational aid for the elderly, or some other mobile information system. In all these mobile devices serving a user, situatedness conveys the idea that the operation of the device depends on the state of the user and the state of the local environment.

Intelligent mobile robots
The general goal of our research in this area is to develop components for the next generation of intelligent robots that will operate in our normal living environment and cooperate with human beings and other machines. These robots are an essential part of the smart environment.

We have developed a control architecture for a mobile robot operating in a dynamic environment. An early version of the control system, PEMM, was applied to the control of an intelligent and skilled paper roll manipulator.1,2 The research resulted in a practical, full-sized implementation capable of handling paper rolls in warehouses and harbors. A key characteristic of the more recent version of the control architecture, Samba, is its ability both to reason about actions based on task constraints and to react quickly to unexpected events in the environment.3,4 That is, the architecture is goal-oriented and situated.

Samba
Samba continuously produces reactions to all the important objects in the environment. These reactions are represented as action maps. An action map describes, for each possible action, how advantageous it is from the perspective of behaving towards the object in a certain way (e.g. avoiding or chasing). The preferences are shown by assigning a weight to each action. By combining action maps, an action producing a satisfactory reaction to several objects simultaneously can be found. Figure 1 shows two robots and an action map for the robot in front to catch the ball. The ridge describes the actions by which the robot reaches the ball. The actions that would result in a collision with the other robot before the ball is reached are inhibited.
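As a rough illustration of combining action maps, the Python sketch below represents a map as a dictionary from action to weight, with negative weights standing for inhibited actions. The three discrete actions, the weight values, and the combination rule (per action, keep the weight with the largest absolute value) are assumptions made for this example only; the published Samba papers should be consulted for the actual representation and combination method.

```python
from typing import Dict, List

ActionMap = Dict[str, float]


def combine(maps: List[ActionMap]) -> ActionMap:
    """Assumed rule: for each action keep the weight of largest magnitude,
    so a strong inhibition overrides a weaker preference."""
    combined: ActionMap = {}
    for action_map in maps:
        for action, weight in action_map.items():
            if action not in combined or abs(weight) > abs(combined[action]):
                combined[action] = weight
    return combined


def best_action(action_map: ActionMap) -> str:
    """Pick the action with the highest combined weight."""
    return max(action_map, key=action_map.get)


# Hypothetical maps: going straight reaches the ball fastest,
# but would collide with the other robot first.
catch_ball = {"left": 0.2, "straight": 0.9, "right": 0.6}
avoid_robot = {"left": 0.0, "straight": -1.0, "right": 0.0}

combined = combine([catch_ball, avoid_robot])
choice = best_action(combined)
```

In this toy case the collision inhibits "straight", and the robot takes the next-best way of reaching the ball instead.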

Recently, our main activities have been in developing the Samba control architecture and techniques for vision-based environment modeling. We have applied the control architecture in playing simulated soccer, and participated in the first and second world championships of robotic soccer, RoboCup, held in Nagoya in 1997 and Paris in 1998.5,6 We are also trying to build a mobile robot to support the independent living of the elderly and disabled. In this work, we have demonstrated the use of a teleoperated mobile robot for domestic help.

Vision-based environment modeling
In vision-based environment modeling, a technique is being developed that combines 3D information provided by structured lighting with the 2D information provided by intensity images.7 As an application, the creation of the model of a building for virtual reality applications is considered. This model is created while a robot is moving inside the target building. Because the model will have 3D range and 2D (color) intensity information, it is possible to include versatile information about the structures inside the building such as surface colors and materials. Figure 2 shows an image taken by the color range scanner developed in our laboratory. This image is a combination of range and reflectance images.

Interacting with mobile robots and smart environments
In developing a mobile robot capable of executing complex tasks in a dynamic environment, we selected the approach of first building a teleoperated robot and then gradually shifting tasks from the human to the robot. This approach enables complex tasks to be performed as soon as the teleoperation is operational. This speeds up the research on human-robot interaction, as complex interactions can be analyzed at an early stage of the research. Furthermore, in many applications, human operators can be left in the control loop to analyze the data collected by the robot or to solve tasks that are too difficult for it.

In the future, interaction between humans, mobile robots and other devices will become increasingly important as machines will, more and more, operate as assistants in our everyday life. Because of this, there is a need for a general-purpose technique that we can use to interact with robots and other embedded systems in smart environments. We have developed a technique, based on a mobile code paradigm, that allows humans to use a single handheld control device to interact with mobile robots and ubiquitous embedded systems.8

Juha Röning and Jukka Riekki
Infotech Oulu and Department of Electrical Engineering
University of Oulu
PO Box 4500
FIN-90014, Finland
E-mail: [email protected]
http://www.ee.oulu.fi/research/isg/

References
1. T. Heikkilä and J. Röning, PEM-Modelling: A Framework for Designing Intelligent Robot Control, J. Robotics and Mechatronics 4 (5), pp. 437-444, 1992.
2. P. Vähä, T. Heikkilä, J. Röning, and J. Okkonen, Machine of the Future: An Intelligent Paper Roll Manipulator, Mechatronics: Mechanics-Electronics Control 4 (8), pp. 861-877, 1994.
3. J. Riekki and J. Röning, Reactive Task Execution by Combining Action Maps, IEEE/RSJ Int’l Conf. on Intelligent Robots and Systems: IROS’97, pp. 224-230, Grenoble, France, 8-12 September 1997.
4. J. Riekki, Reactive task execution of a mobile robot, Acta Univ. Oul. C 129 (PhD Thesis), University of Oulu, Oulu, Finland, 1999. http://herkules.oulu.fi/isbn9514251318/
5. J. Riekki and J. Röning, Playing Soccer by Modifying and Combining Primitive Reactions, RoboCup-97: Robot Soccer World Cup I, Lecture Notes in Artificial Intelligence 1395, H. Kitano (ed.), pp. 74-87, Springer-Verlag, Nagoya, 1997.
6. J. Riekki, J. Pajala, A. Tikanmäki, and J. Röning, CAT Finland: Executing primitive tasks in parallel, RoboCup-98: Robot Soccer World Cup II, Lecture Notes in Artificial Intelligence 1604, M. Asada and H. Kitano (eds.), Springer-Verlag, pp. 396-401, 1999.
7. J. Haverinen and J. Röning, A 3-D Scanner Capturing Range and Color, Proc. Scandinavian Symposium on Robotics, pp. 144-147, Oulu, Finland, 14-15 October 1999.
8. J. Röning and K. Kangas, Controlling Personal Robots and Smart Environments, Proc. SPIE 3837, pp. 26-39, 1999.

Figure 1. An action map and two robots catching the ball.

Figure 2. A combination of range and reflectance images.


Here’s an easy way to reach your colleagues around the world, instantly. SPIE’s Robotics Listserv is an automated e-mail server that connects you to a network of engineers, scientists, vendors, entrepreneurs, and service providers.

SPIE’s Robotics Listserv has its roots in the well-established and active Robotics & Machine Perception Technical Group, and is yet another way to use SPIE’s technical resources.

To join the Robotics Listserv, send an e-mail message to [email protected] with the words subscribe info-robo in the message body.

For detailed instructions, as well as information about other online SPIE services, such as Abstracts Online, Employment Services, and Technical Programs Online, send a message to [email protected] with the word help in the message body.

Robotics & Machine Perception
The Robotics & Machine Perception newsletter is published semi-annually by SPIE—The International Society for Optical Engineering for its International Technical Group on Robotics & Machine Perception.

Technical Group Chair: Gerard McKee
Managing Editor: Linda DeLano
Technical Editor: Sunny Bains
Advertising Sales: Bonnie Peterson

Articles in this newsletter do not necessarily constitute endorsement or the opinions of the editors or SPIE. Advertising and copy are subject to acceptance by the editors.

SPIE is an international technical society dedicated to advancing engineering, scientific, and commercial applications of optical, photonic, imaging, electronic, and optoelectronic technologies. Its members are engineers, scientists, and users interested in the development and reduction to practice of these technologies. SPIE provides the means for communicating new developments and applications information to the engineering, scientific, and user communities through its publications, symposia, education programs, and online electronic information services.

Copyright ©2000 Society of Photo-Optical Instrumentation Engineers. All rights reserved.

SPIE—The International Society for Optical Engineering, P.O. Box 10, Bellingham, WA 98227-0010 USA. Phone: (1) 360/676-3290. Fax: (1) 360/647-1445.

European Office: Contact SPIE International Headquarters.

In Japan: c/o O.T.O. Research Corp., Takeuchi Bldg. 1-34-12 Takatanobaba, Shinjuku-ku, Tokyo 160, Japan. Phone: (81 3) 3208-7821. Fax: (81 3) 3200-2889. E-mail: [email protected]

In Russia/FSU: 12, Mokhovaja str., 121019, Moscow, Russia. Phone/Fax: (095) 202-1079. E-mail: [email protected].

Want it published? Submit work, information, or announcements for publication in future issues of this newsletter to Sunny Bains at [email protected] or at the address above. Articles should be 600 to 800 words long. Please supply black-and-white photographs or line art when possible. Permission from all copyright holders for each figure and details of any previous publication should be included. All materials are subject to approval and may be edited. Please include the name and number of someone who can answer technical questions. Calendar listings should be sent at least eight months prior to the event.

This newsletter is printed as a benefit of membership in the Robotics & Machine Perception Technical Group. Technical group membership allows you to communicate and network with colleagues worldwide.

Technical group member benefits include a semi-annual copy of the Robotics & Machine Perception newsletter, SPIE’s monthly newspaper, OE Reports, membership directory, and discounts on SPIE conferences and short courses, books, and other selected publications related to robotics.

SPIE members are invited to join for the reduced fee of $15. If you are not a member of SPIE, the annual membership fee of $30 will cover all technical group membership services. For complete information and an application form, contact SPIE.

Send this form (or photocopy) to:
SPIE • P.O. Box 10
Bellingham, WA 98227-0010 USA
Phone: (1) 360/676-3290
Fax: (1) 360/647-1445
E-mail: [email protected]
Anonymous FTP: spie.org

Please send me
❏ Information about full SPIE membership
❏ Information about other SPIE technical groups
❏ FREE technical publications catalog

Join the Technical Group...and receive this newsletter

Membership Application

Please Print ❏ Prof. ❏ Dr. ❏ Mr. ❏ Miss ❏ Mrs. ❏ Ms.

First Name, Middle Initial, Last Name ______________________________________________________________________________

Position _____________________________________________ SPIE Member Number ____________________________________

Business Affiliation ____________________________________________________________________________________________

Dept./Bldg./Mail Stop/etc. _______________________________________________________________________________________

Street Address or P.O. Box _____________________________________________________________________________________

City/State ___________________________________________ Zip/Postal Code ________________ Country __________________

Telephone ___________________________________________ Telefax _________________________________________________

E-mail Address/Network ________________________________________________________________________________________

Technical Group Membership fee is $30/year, or $15/year for full SPIE members.

❏ Robotics & Machine Perception
Total amount enclosed for Technical Group membership $ __________________

❏ Check enclosed. Payment in U.S. dollars (by draft on a U.S. bank, or international money order) is required. Do not send currency. Transfers from banks must include a copy of the transfer order.

❏ Charge to my: ❏ VISA ❏ MasterCard ❏ American Express ❏ Diners Club ❏ Discover

Account # ___________________________________________ Expiration date ___________________________________________

Signature ___________________________________________________________________________________________________(required for credit card orders)



The CMU MultiRobot Lab
In the MultiRobot Lab at CMU, we are interested in building and studying teams of robots that operate in dynamic and uncertain environments.1 Our research focuses specifically on issues of multiagent communication, cooperation, sensing and learning. We experiment with and test our theories in simulation and on a number of real robot testbeds.

Cooperative multirobot behaviors and localization
Planning for real robots to act in dynamic and uncertain environments is a challenging problem. A complete model of the world is not viable, and an integration of deliberation and behavior-based control is most appropriate for goal achievement and uncertainty handling. In recent work, we have successfully integrated perception, planning, and action for Sony quadruped legged robots (Figure 1).2

The quadruped legged robots running our software are fully autonomous with onboard vision, localization and agent behavior. Our perception algorithm automatically categorizes objects by color in real time. The output of the image processing step is provided to our Sensor Resetting Localization (SRL) algorithm (an extension of Monte Carlo Localization). SRL is robust to movement modelling errors and to limited computational power. The system can estimate the position of a robot on a 2m by 4m field to within 5cm.

Our team of robots was entered in the RoboCup-99 robot soccer world championship. They lost only one game and placed third overall.

Behavioral diversity
Behavioral diversity provides an effective means for robots on a team to divide a task between them; robots can specialize by assuming different roles on the team. In earlier work we developed a quantitative measure of the extent of behavioral diversity in a team, and we have used this measure in experimental evaluations of robot groups.3 One result of our research in behavioral diversity is data indicating that the usefulness of diversity depends on the team task. For instance, in soccer simulations, teams using heterogeneous behaviors perform best, while in robot foraging experiments, groups using homogeneous behaviors work best. We are continuing this work by investigating the appropriate level of diversity for various tasks and hardware platforms.

Figure 1. Sony quadruped robots. Robust vision-based localization software has been developed for these robots at the MultiRobot Lab.
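To give a feel for what a quantitative diversity measure can look like, the Python sketch below computes the plain Shannon entropy of how a team's robots are distributed over behavior classes. This is a simplified stand-in, not the hierarchic measure cited above: the function name `social_entropy`, the role labels, and the assumption that each robot has already been assigned to exactly one behavior class are all introduced for this example.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def social_entropy(roles: Sequence[Hashable]) -> float:
    """Shannon entropy (in bits) of the distribution of robots over roles.

    0.0 means every robot behaves the same way; higher values mean the
    team is spread more evenly over more distinct behaviors.
    """
    total = len(roles)
    if total == 0:
        return 0.0
    counts = Counter(roles)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


# Hypothetical four-robot teams.
homogeneous = ["forager", "forager", "forager", "forager"]
two_roles = ["attacker", "attacker", "defender", "defender"]
all_different = ["goalie", "defender", "midfield", "attacker"]

scores = [social_entropy(t) for t in (homogeneous, two_roles, all_different)]
```

A score like this makes it possible to compare teams on a single axis, for example when checking whether more diverse teams do better at soccer but worse at foraging.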

Hardware and software for multirobot research
Because we are interested in the behavior of large numbers of cooperating robots, it is important to address the scalability of multirobot systems. Robot cost and reliability, and software portability and scalability, are crucial to the goal of scalable multirobot systems. To reduce robot cost and to improve reliability we have designed a low-cost mobile robot platform using only commercial-off-the-shelf components (Figure 2). The Minnow robot is a fully autonomous indoor vehicle equipped with color vision, a Linux-based computer with hard disk, and wireless Ethernet. The entire robot costs less than $3000.4 This low cost-per-robot will enable us to scale up to five or ten robots over the next year.

Of course, scalable robot software is just as important as hardware. To provide for software reuse and portability we have developed TeamBots, our multirobot development environment, in Java.5 To our knowledge, this is the first substantial robot control platform written in Java. One of the most important features of the TeamBots environment is that it supports prototyping in simulation of the same control systems that can be run on mobile robots. This is especially important for multirobot systems research because debugging, or even running, a multirobot system is often a great challenge. A simulation prototyping environment can be a great help.

The TeamBots simulation environment is extremely flexible. It supports multiple heterogeneous robot hardware running heterogeneous control systems. Complex (or simple) experimental environments can be designed with

continued on p. 7

Figure 2. The low-cost Minnow robot platform (above). A simulation of the Minnow (right).