PART THREE

cha1873X_p03.qxd 3/29/05 12:19 PM Page 216


LINEAR ALGEBRAIC EQUATIONS

PT3.1 MOTIVATION

In Part Two, we determined the value $x$ that satisfied a single equation, $f(x) = 0$. Now, we deal with the case of determining the values $x_1, x_2, \ldots, x_n$ that simultaneously satisfy a set of equations

$$
\begin{aligned}
f_1(x_1, x_2, \ldots, x_n) &= 0 \\
f_2(x_1, x_2, \ldots, x_n) &= 0 \\
&\;\;\vdots \\
f_n(x_1, x_2, \ldots, x_n) &= 0
\end{aligned}
$$

Such systems can be either linear or nonlinear. In Part Three, we deal with linear algebraic equations that are of the general form

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= b_1 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= b_2 \\
&\;\;\vdots \\
a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= b_n
\end{aligned}
\tag{PT3.1}
$$

where the $a$'s are constant coefficients, the $b$'s are constants, and $n$ is the number of equations. All other equations are nonlinear. Nonlinear systems were discussed in Chap. 6 and will be covered briefly again in Chap. 9.

PT3.1.1 Noncomputer Methods for Solving Systems of Equations

For small numbers of equations (n ≤ 3), linear (and sometimes nonlinear) equations can be solved readily by simple techniques. Some of these methods will be reviewed at the beginning of Chap. 9. However, for four or more equations, solutions become arduous and computers must be utilized. Historically, the inability to solve all but the smallest sets of equations by hand has limited the scope of problems addressed in many engineering applications.

Before computers, techniques to solve linear algebraic equations were time-consuming and awkward. These approaches placed a constraint on creativity because the methods were often difficult to implement and understand. Consequently, the techniques were sometimes overemphasized at the expense of other aspects of the problem-solving process such as formulation and interpretation (recall Fig. PT1.1 and accompanying discussion).


The advent of easily accessible computers makes it possible and practical for you to solve large sets of simultaneous linear algebraic equations. Thus, you can approach more complex and realistic examples and problems. Furthermore, you will have more time to test your creative skills because you will be able to place more emphasis on problem formulation and solution interpretation.

PT3.1.2 Linear Algebraic Equations and Engineering Practice

Many of the fundamental equations of engineering are based on conservation laws (recall Table 1.1). Some familiar quantities that conform to such laws are mass, energy, and momentum. In mathematical terms, these principles lead to balance or continuity equations that relate system behavior as represented by the levels or response of the quantity being modeled to the properties or characteristics of the system and the external stimuli or forcing functions acting on the system.

As an example, the principle of mass conservation can be used to formulate a model for a series of chemical reactors (Fig. PT3.1a). For this case, the quantity being modeled is the mass of the chemical in each reactor. The system properties are the reaction characteristics of the chemical and the reactors’ sizes and flow rates. The forcing functions are the feed rates of the chemical into the system.

In Part Two, you saw how single-component systems result in a single equation that can be solved using root-location techniques. Multicomponent systems result in a coupled set of mathematical equations that must be solved simultaneously. The equations are coupled because the individual parts of the system are influenced by other parts. For example, in Fig. PT3.1a, reactor 4 receives chemical inputs from reactors 2 and 3. Consequently, its response is dependent on the quantity of chemical in these other reactors.

FIGURE PT3.1 Two types of systems that can be modeled using linear algebraic equations: (a) lumped variable system that involves coupled finite components and (b) distributed variable system that involves a continuum.

When these dependencies are expressed mathematically, the resulting equations are often of the linear algebraic form of Eq. (PT3.1). The x’s are usually measures of the magnitudes of the responses of the individual components. Using Fig. PT3.1a as an example, $x_1$ might quantify the amount of mass in the first reactor, $x_2$ might quantify the amount in the second, and so forth. The a’s typically represent the properties and characteristics that bear on the interactions between components. For instance, the a’s for Fig. PT3.1a might be reflective of the flow rates of mass between the reactors. Finally, the b’s usually represent the forcing functions acting on the system, such as the feed rate in Fig. PT3.1a. The applications in Chap. 12 provide other examples of such equations derived from engineering practice.

Multicomponent problems of the above types arise from both lumped (macro-) and distributed (micro-) variable mathematical models (Fig. PT3.1). Lumped variable problems involve coupled finite components. Examples include trusses (Sec. 12.2), reactors (Fig. PT3.1a and Sec. 12.1), and electric circuits (Sec. 12.3). These types of problems use models that provide little or no spatial detail.

Conversely, distributed variable problems attempt to describe spatial detail of systems on a continuous or semicontinuous basis. The distribution of chemicals along the length of an elongated, rectangular reactor (Fig. PT3.1b) is an example of a continuous variable model. Differential equations derived from the conservation laws specify the distribution of the dependent variable for such systems. These differential equations can be solved numerically by converting them to an equivalent system of simultaneous algebraic equations. The solution of such sets of equations represents a major engineering application area for the methods in the following chapters. These equations are coupled because the variables at one location are dependent on the variables in adjoining regions. For example, the concentration at the middle of the reactor is a function of the concentration in adjoining regions. Similar examples could be developed for the spatial distribution of temperature or momentum. We will address such problems when we discuss differential equations later in the book.

Aside from physical systems, simultaneous linear algebraic equations also arise in a variety of mathematical problem contexts. These result when mathematical functions are required to satisfy several conditions simultaneously. Each condition results in an equation that contains known coefficients and unknown variables. The techniques discussed in this part can be used to solve for the unknowns when the equations are linear and algebraic. Some widely used numerical techniques that employ simultaneous equations are regression analysis (Chap. 17) and spline interpolation (Chap. 18).

PT3.2 MATHEMATICAL BACKGROUND

All parts of this book require some mathematical background. For Part Three, matrix notation and algebra are useful because they provide a concise way to represent and manipulate linear algebraic equations. If you are already familiar with matrices, feel free to skip to Sec. PT3.3. For those who are unfamiliar or require a review, the following material provides a brief introduction to the subject.


PT3.2.1 Matrix Notation

A matrix consists of a rectangular array of elements represented by a single symbol. As depicted in Fig. PT3.2, [A] is the shorthand notation for the matrix and $a_{ij}$ designates an individual element of the matrix.

A horizontal set of elements is called a row and a vertical set is called a column. The first subscript i always designates the number of the row in which the element lies. The second subscript j designates the column. For example, element $a_{23}$ is in row 2 and column 3.

The matrix in Fig. PT3.2 has n rows and m columns and is said to have a dimension of n by m (or n × m). It is referred to as an n by m matrix.
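The subscript convention above carries over to code with one caveat: many languages count from 0 rather than 1. The following is a minimal Python sketch, assuming a matrix stored as a list of rows; the helper names `dimensions` and `element` and the numeric values are illustrative only and do not come from the text.

```python
# A 3 by 4 matrix stored as a list of rows (values chosen arbitrarily so that
# each entry spells out its own row and column number).
A = [
    [11, 12, 13, 14],
    [21, 22, 23, 24],
    [31, 32, 33, 34],
]


def dimensions(matrix):
    """Return (n, m): the number of rows and the number of columns."""
    return len(matrix), len(matrix[0])


def element(matrix, i, j):
    """Return a_ij using the text's 1-based row and column subscripts."""
    return matrix[i - 1][j - 1]


n, m = dimensions(A)
print(n, m)              # 3 4
print(element(A, 2, 3))  # 23
```

The only design choice here is the `i - 1`, `j - 1` shift, which keeps the calling code in the same 1-based notation as the equations.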

Matrices with row dimension n = 1, such as

$$
[B] = \begin{bmatrix} b_1 & b_2 & \cdots & b_m \end{bmatrix}
$$

are called row vectors. Note that for simplicity, the first subscript of each element is dropped. Also, it should be mentioned that there are times when it is desirable to employ a special shorthand notation to distinguish a row matrix from other types of matrices. One way to accomplish this is to employ special open-topped brackets, as in $\lfloor B \rfloor$.

Matrices with column dimension m = 1, such as

$$
[C] = \begin{bmatrix} c_1 \\ c_2 \\ \vdots \\ c_n \end{bmatrix}
$$

are referred to as column vectors. For simplicity, the second subscript is dropped. As with the row vector, there are occasions when it is desirable to employ a special shorthand notation to distinguish a column matrix from other types of matrices. One way to accomplish this is to employ special brackets, as in {C}.

FIGURE PT3.2 A matrix (the original figure labels row 2 and column 3).

$$
[A] = \begin{bmatrix}
a_{11} & a_{12} & a_{13} & \cdots & a_{1m} \\
a_{21} & a_{22} & a_{23} & \cdots & a_{2m} \\
\vdots & \vdots & \vdots & & \vdots \\
a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nm}
\end{bmatrix}
$$

Matrices where n = m are called square matrices. For example, a 4 by 4 matrix is

$$
[A] = \begin{bmatrix}
a_{11} & a_{12} & a_{13} & a_{14} \\
a_{21} & a_{22} & a_{23} & a_{24} \\
a_{31} & a_{32} & a_{33} & a_{34} \\
a_{41} & a_{42} & a_{43} & a_{44}
\end{bmatrix}
$$

The diagonal consisting of the elements $a_{11}$, $a_{22}$, $a_{33}$, and $a_{44}$ is termed the principal or main diagonal of the matrix.

Square matrices are particularly important when solving sets of simultaneous linear equations. For such systems, the number of equations (corresponding to rows) and the number of unknowns (corresponding to columns) must be equal for a unique solution to be possible. Consequently, square matrices of coefficients are encountered when dealing with such systems. Some special types of square matrices are described in Box PT3.1.


Box PT3.1 Special Types of Square Matrices

There are a number of special forms of square matrices that are important and should be noted:

A symmetric matrix is one where $a_{ij} = a_{ji}$ for all i’s and j’s. For example,

$$
[A] = \begin{bmatrix} 5 & 1 & 2 \\ 1 & 3 & 7 \\ 2 & 7 & 8 \end{bmatrix}
$$

is a 3 by 3 symmetric matrix.

A diagonal matrix is a square matrix where all elements off the main diagonal are equal to zero, as in

$$
[A] = \begin{bmatrix}
a_{11} & & & \\
 & a_{22} & & \\
 & & a_{33} & \\
 & & & a_{44}
\end{bmatrix}
$$

Note that where large blocks of elements are zero, they are left blank.

An identity matrix is a diagonal matrix where all elements on the main diagonal are equal to 1, as in

$$
[I] = \begin{bmatrix}
1 & & & \\
 & 1 & & \\
 & & 1 & \\
 & & & 1
\end{bmatrix}
$$

The symbol [I] is used to denote the identity matrix. The identity matrix has properties similar to unity.

An upper triangular matrix is one where all the elements below the main diagonal are zero, as in

$$
[A] = \begin{bmatrix}
a_{11} & a_{12} & a_{13} & a_{14} \\
 & a_{22} & a_{23} & a_{24} \\
 & & a_{33} & a_{34} \\
 & & & a_{44}
\end{bmatrix}
$$

A lower triangular matrix is one where all elements above the main diagonal are zero, as in

$$
[A] = \begin{bmatrix}
a_{11} & & & \\
a_{21} & a_{22} & & \\
a_{31} & a_{32} & a_{33} & \\
a_{41} & a_{42} & a_{43} & a_{44}
\end{bmatrix}
$$

A banded matrix has all elements equal to zero, with the exception of a band centered on the main diagonal:

$$
[A] = \begin{bmatrix}
a_{11} & a_{12} & & \\
a_{21} & a_{22} & a_{23} & \\
 & a_{32} & a_{33} & a_{34} \\
 & & a_{43} & a_{44}
\end{bmatrix}
$$

The above matrix has a bandwidth of 3 and is given a special name: the tridiagonal matrix.
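The definitions in Box PT3.1 translate directly into element-by-element tests. The sketch below is one possible Python rendering, assuming square matrices stored as lists of rows; all function names are hypothetical, and `bandwidth` assumes the band is measured symmetrically about the main diagonal, which matches the tridiagonal case (bandwidth 3) described in the box.

```python
def is_symmetric(a):
    """True if a_ij == a_ji for every pair of subscripts."""
    n = len(a)
    return all(a[i][j] == a[j][i] for i in range(n) for j in range(n))


def identity(n):
    """Build the n by n identity matrix [I]."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def is_upper_triangular(a):
    """True if every element below the main diagonal is zero."""
    n = len(a)
    return all(a[i][j] == 0 for i in range(n) for j in range(i))


def is_lower_triangular(a):
    """True if every element above the main diagonal is zero."""
    n = len(a)
    return all(a[i][j] == 0 for i in range(n) for j in range(i + 1, n))


def bandwidth(a):
    """Width of the narrowest band centered on the diagonal holding all nonzeros."""
    n = len(a)
    half = max(
        (abs(i - j) for i in range(n) for j in range(n) if a[i][j] != 0),
        default=0,
    )
    return 2 * half + 1


symmetric = [[5, 1, 2], [1, 3, 7], [2, 7, 8]]  # the symmetric example from the box
tridiagonal = [[2, 1, 0, 0], [1, 2, 1, 0], [0, 1, 2, 1], [0, 0, 1, 2]]  # arbitrary values

print(is_symmetric(symmetric))           # True
print(is_upper_triangular(identity(4)))  # True
print(is_lower_triangular(identity(4)))  # True
print(bandwidth(tridiagonal))            # 3
```

A diagonal matrix passes both triangular tests, which is why the identity matrix is reported as upper and lower triangular at the same time.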


PT3.2.2 Matrix Operating Rules

Now that we have specified what we mean by a matrix, we can define some operating rules that govern its use. Two n by m matrices are equal if, and only if, every element in the first is equal to the corresponding element in the second, that is, [A] = [B] if $a_{ij} = b_{ij}$ for all i and j.

Addition of two matrices, say, [A] and [B], is accomplished by adding corresponding terms in each matrix. The elements of the resulting matrix [C] are computed,

$$
c_{ij} = a_{ij} + b_{ij}
$$

for i = 1, 2, . . . , n and j = 1, 2, . . . , m. Similarly, the subtraction of two matrices, say, [E] minus [F], is obtained by subtracting corresponding terms, as in

$$
d_{ij} = e_{ij} - f_{ij}
$$

for i = 1, 2, . . . , n and j = 1, 2, . . . , m. It follows directly from the above definitions that addition and subtraction can be performed only between matrices having the same dimensions.

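Matrix addition is commutative:

[A] + [B] = [B] + [A]

Addition is also associative, that is,

([A] + [B]) + [C] = [A] + ([B] + [C])

Subtraction by itself is neither commutative nor associative, but a difference [E] − [F] can always be treated as the sum of [E] and the negative of [F], to which both properties apply.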

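The multiplication of a matrix [A] by a scalar g is obtained by multiplying every element of [A] by g, as in

$$
[D] = g[A] = \begin{bmatrix}
g a_{11} & g a_{12} & \cdots & g a_{1m} \\
g a_{21} & g a_{22} & \cdots & g a_{2m} \\
\vdots & \vdots & & \vdots \\
g a_{n1} & g a_{n2} & \cdots & g a_{nm}
\end{bmatrix}
$$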
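The element-by-element rules for addition, subtraction, and scalar multiplication can be sketched in a few lines of Python. This is only an illustration under the assumption that matrices are stored as lists of rows; the function names and the sample values are not from the text.

```python
def _check_same_dimensions(a, b):
    """Addition and subtraction require matrices of identical dimensions."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("matrices must have the same dimensions")


def mat_add(a, b):
    """c_ij = a_ij + b_ij"""
    _check_same_dimensions(a, b)
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def mat_sub(e, f):
    """d_ij = e_ij - f_ij"""
    _check_same_dimensions(e, f)
    return [[x - y for x, y in zip(re, rf)] for re, rf in zip(e, f)]


def scalar_mul(g, a):
    """Multiply every element of [A] by the scalar g."""
    return [[g * x for x in row] for row in a]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

print(mat_add(A, B))                   # [[6, 8], [10, 12]]
print(mat_sub(A, B))                   # [[-4, -4], [-4, -4]]
print(scalar_mul(2, A))                # [[2, 4], [6, 8]]
print(mat_add(A, B) == mat_add(B, A))  # True
```

The dimension check raises an error instead of silently truncating, which mirrors the rule that these operations are defined only for matrices of the same size.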

The product of two matrices is represented as [C] = [A][B], where the elements of [C] are defined as (see Box PT3.2 for a simple way to conceptualize matrix multiplication)

$$
c_{ij} = \sum_{k=1}^{n} a_{ik} b_{kj} \tag{PT3.2}
$$

where n = the column dimension of [A] and the row dimension of [B]. That is, the $c_{ij}$ element is obtained by summing the products of corresponding elements from the ith row of the first matrix, in this case [A], and the jth column of the second matrix [B].

According to this definition, multiplication of two matrices can be performed only if the first matrix has as many columns as the number of rows in the second matrix. Thus, if [A] is an n by m matrix, [B] could be an m by l matrix (with this labeling, the shared inner dimension that Eq. (PT3.2) sums over is m). For this case, the resulting [C] matrix would have the dimension of n by l. However, if [B] were an l by m matrix, the multiplication could not be performed. Figure PT3.3 provides an easy way to check whether two matrices can be multiplied.


Box PT3.2 A Simple Method for Multiplying Two Matrices

Although Eq. (PT3.2) is well suited for implementation on a computer, it is not the simplest means for visualizing the mechanics of multiplying two matrices. What follows gives more tangible expression to the operation.

Suppose that we want to multiply [X] by [Y] to yield [Z],

$$
[Z] = [X][Y] = \begin{bmatrix} 3 & 1 \\ 8 & 6 \\ 0 & 4 \end{bmatrix}
\begin{bmatrix} 5 & 9 \\ 7 & 2 \end{bmatrix}
$$

A simple way to visualize the computation of [Z] is to raise [Y], as in

$$
\begin{array}{rl}
 & \begin{bmatrix} 5 & 9 \\ 7 & 2 \end{bmatrix} \leftarrow [Y] \\[6pt]
[X] \rightarrow \begin{bmatrix} 3 & 1 \\ 8 & 6 \\ 0 & 4 \end{bmatrix} & \begin{bmatrix} \;?\; \end{bmatrix} \leftarrow [Z]
\end{array}
$$

Now the answer [Z] can be computed in the space vacated by [Y]. This format has utility because it aligns the appropriate rows and columns that are to be multiplied. For example, according to Eq. (PT3.2), the element $z_{11}$ is obtained by multiplying the first row of [X] by the first column of [Y]. This amounts to adding the product of $x_{11}$ and $y_{11}$ to the product of $x_{12}$ and $y_{21}$, as in

$$
\begin{array}{rl}
 & \begin{bmatrix} 5 & 9 \\ 7 & 2 \end{bmatrix} \\[6pt]
\begin{bmatrix} 3 & 1 \\ 8 & 6 \\ 0 & 4 \end{bmatrix} & \begin{bmatrix} 3 \times 5 + 1 \times 7 = 22 & \phantom{00} \\ & \\ & \end{bmatrix}
\end{array}
$$

Thus, $z_{11}$ is equal to 22. Element $z_{21}$ can be computed in a similar fashion, as in

$$
\begin{array}{rl}
 & \begin{bmatrix} 5 & 9 \\ 7 & 2 \end{bmatrix} \\[6pt]
\begin{bmatrix} 3 & 1 \\ 8 & 6 \\ 0 & 4 \end{bmatrix} & \begin{bmatrix} 22 & \phantom{00} \\ 8 \times 5 + 6 \times 7 = 82 & \\ & \end{bmatrix}
\end{array}
$$

The computation can be continued in this way, following the alignment of the rows and columns, to yield the result

$$
[Z] = \begin{bmatrix} 22 & 29 \\ 82 & 84 \\ 28 & 8 \end{bmatrix}
$$

Note how this simple method makes it clear why it is impossible to multiply two matrices if the number of columns of the first matrix does not equal the number of rows in the second matrix. Also, note how it demonstrates that the order of multiplication matters (that is, matrix multiplication is not commutative).

FIGURE PT3.3

$$
[A]_{n \times m} \, [B]_{m \times l} = [C]_{n \times l}
$$

Interior dimensions (m and m) are equal; multiplication is possible. Exterior dimensions (n and l) define the dimensions of the result.


If the dimensions of the matrices are suitable, matrix multiplication is associative,

([A][B])[C] = [A]([B][C])

and distributive,

[A]([B] + [C]) = [A][B] + [A][C]

or

([A] + [B])[C] = [A][C] + [B][C]

However, multiplication is not generally commutative:

[A][B] ≠ [B][A]
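As a small worked illustration, with two 2 by 2 matrices chosen arbitrarily for this purpose (they do not come from the text), applying Eq. (PT3.2) in both orders gives different results:

```latex
[A][B] =
\begin{bmatrix} 1 & 2 \\ 3 & 4 \end{bmatrix}
\begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}
=
\begin{bmatrix} 2 & 1 \\ 4 & 3 \end{bmatrix},
\qquad
[B][A] =
\begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}
\begin{bmatrix} 1 & 2 \\ 3 & 4 \end{bmatrix}
=
\begin{bmatrix} 3 & 4 \\ 1 & 2 \end{bmatrix}
```

Here postmultiplying by [B] swaps the columns of [A], whereas premultiplying by [B] swaps its rows.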

That is, the order of multiplication is important.

Figure PT3.4 shows pseudocode to multiply an n by m matrix [A] by an m by l matrix [B] and store the result in an n by l matrix [C]. Notice that, instead of the inner product being directly accumulated in [C], it is collected in a temporary variable, sum. This is done for two reasons. First, it is a bit more efficient, because the computer need determine the location of $c_{i,j}$ only n × l times rather than n × l × m times. Second, the precision of the multiplication can be greatly improved by declaring sum as a double precision variable (recall the discussion of inner products in Sec. 3.4.2).

Although multiplication is possible, matrix division is not a defined operation. However, if a matrix [A] is square and nonsingular, there is another matrix $[A]^{-1}$, called the inverse of [A], for which

$$
[A][A]^{-1} = [A]^{-1}[A] = [I] \tag{PT3.3}
$$

Thus, the multiplication of a matrix by the inverse is analogous to division, in the sense that a number divided by itself is equal to 1. That is, multiplication of a matrix by its inverse leads to the identity matrix (recall Box PT3.1).

The inverse of a 2 by 2 square matrix can be represented simply by

$$
[A]^{-1} = \frac{1}{a_{11}a_{22} - a_{12}a_{21}}
\begin{bmatrix} a_{22} & -a_{12} \\ -a_{21} & a_{11} \end{bmatrix}
\tag{PT3.4}
$$
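Equation (PT3.4) is short enough to code directly. The sketch below is one hedged Python rendering: it assumes integer or `Fraction` entries so that the check against Eq. (PT3.3) is exact, and the names `inverse_2x2` and `matmul_2x2` as well as the sample matrix are illustrative choices, not part of the text.

```python
from fractions import Fraction


def inverse_2x2(a):
    """Invert a 2 by 2 matrix with Eq. (PT3.4); fail if it is singular."""
    (a11, a12), (a21, a22) = a
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("matrix is singular; no inverse exists")
    scale = Fraction(1) / det  # exact 1/det for integer or Fraction entries
    return [[a22 * scale, -a12 * scale],
            [-a21 * scale, a11 * scale]]


def matmul_2x2(a, b):
    """Multiply two 2 by 2 matrices using Eq. (PT3.2)."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


A = [[3, 2], [-1, 2]]  # arbitrary nonsingular example
A_inv = inverse_2x2(A)

# Both products in Eq. (PT3.3) should reproduce the identity matrix.
print(matmul_2x2(A, A_inv) == [[1, 0], [0, 1]])  # True
print(matmul_2x2(A_inv, A) == [[1, 0], [0, 1]])  # True
```

Using exact fractions sidesteps round-off in the check; with floating-point entries the products would only approximate the identity.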


FIGURE PT3.4

    SUBROUTINE Mmult (a, b, c, m, n, l)
      DOFOR i = 1, n
        DOFOR j = 1, l
          sum = 0.
          DOFOR k = 1, m
            sum = sum + a(i,k) · b(k,j)
          END DO
          c(i,j) = sum
        END DO
      END DO
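The pseudocode of Fig. PT3.4 can be transcribed almost line for line into Python. The version below is a sketch under the assumption that matrices are lists of rows; the name `mmult` and the explicit dimension check are additions for illustration. It is exercised on the [X] and [Y] matrices of Box PT3.2.

```python
def mmult(a, b):
    """Multiply an n by m matrix by an m by l matrix, following Fig. PT3.4."""
    rows_a, inner = len(a), len(a[0])
    if len(b) != inner:
        raise ValueError("columns of the first matrix must equal rows of the second")
    cols_b = len(b[0])
    c = [[0] * cols_b for _ in range(rows_a)]
    for i in range(rows_a):
        for j in range(cols_b):
            total = 0  # temporary accumulator, as in the pseudocode's `sum`
            for k in range(inner):
                total += a[i][k] * b[k][j]
            c[i][j] = total  # store once per element of [C]
    return c


X = [[3, 1], [8, 6], [0, 4]]
Y = [[5, 9], [7, 2]]

print(mmult(X, Y))  # [[22, 29], [82, 84], [28, 8]]

try:
    mmult(Y, X)  # 2 by 2 times 3 by 2: interior dimensions differ
except ValueError as err:
    print("cannot multiply:", err)
```

Python floats are already double precision, so the accumulator needs no special declaration here; the temporary variable is kept mainly to mirror the structure of the pseudocode.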


Similar formulas for higher-dimensional matrices are much more involved. Sections in Chaps. 10 and 11 will be devoted to techniques for using numerical methods and the computer to calculate the inverse for such systems.

Two other matrix manipulations that will have utility in our discussion are the transpose and the trace of a matrix. The transpose of a matrix involves transforming its rows into columns and its columns into rows. For example, for the 4 × 4 matrix,

$$
[A] = \begin{bmatrix}
a_{11} & a_{12} & a_{13} & a_{14} \\
a_{21} & a_{22} & a_{23} & a_{24} \\
a_{31} & a_{32} & a_{33} & a_{34} \\
a_{41} & a_{42} & a_{43} & a_{44}
\end{bmatrix}
$$

the transpose, designated $[A]^T$, is defined as

$$
[A]^T = \begin{bmatrix}
a_{11} & a_{21} & a_{31} & a_{41} \\
a_{12} & a_{22} & a_{32} & a_{42} \\
a_{13} & a_{23} & a_{33} & a_{43} \\
a_{14} & a_{24} & a_{34} & a_{44}
\end{bmatrix}
$$

In other words, the element $a_{ij}$ of the transpose is equal to the $a_{ji}$ element of the original matrix.

The transpose has a variety of functions in matrix algebra. One simple advantage is that it allows a column vector to be written as a row. For example, if

$$
\{C\} = \begin{Bmatrix} c_1 \\ c_2 \\ c_3 \\ c_4 \end{Bmatrix}
$$

then

$$
\{C\}^T = \lfloor c_1 \;\; c_2 \;\; c_3 \;\; c_4 \rfloor
$$

where the superscript T designates the transpose. For example, this can save space when writing a column vector in a manuscript. In addition, the transpose has numerous mathematical applications.
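In code, the transpose amounts to regrouping the stored rows by column. A minimal Python sketch follows, assuming a list-of-rows representation in which a column vector is an n by 1 matrix; the function name and sample values are illustrative.

```python
def transpose(a):
    """Return the transpose: element (i, j) of the result is a[j][i]."""
    return [list(column) for column in zip(*a)]


A = [[1, 2, 3],
     [4, 5, 6]]          # 2 by 3
C = [[1], [2], [3], [4]]  # 4 by 1 column vector

print(transpose(A))  # [[1, 4], [2, 5], [3, 6]]
print(transpose(C))  # [[1, 2, 3, 4]]
```

Transposing twice returns the original matrix, which is a quick sanity check for any implementation.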

The trace of a matrix is the sum of the elements on its principal diagonal. It is designated as tr [A] and is computed as

$$
\operatorname{tr}[A] = \sum_{i=1}^{n} a_{ii}
$$

The trace will be used in our discussion of eigenvalues in Chap. 27.

The final matrix manipulation that will have utility in our discussion is augmentation. A matrix is augmented by the addition of a column (or columns) to the original matrix. For example, suppose we have a matrix of coefficients:

$$
[A] = \begin{bmatrix}
a_{11} & a_{12} & a_{13} \\
a_{21} & a_{22} & a_{23} \\
a_{31} & a_{32} & a_{33}
\end{bmatrix}
$$


We might wish to augment this matrix [A] with an identity matrix (recall Box PT3.1) to yield a 3-by-6-dimensional matrix:

$$
[A] = \left[\begin{array}{ccc|ccc}
a_{11} & a_{12} & a_{13} & 1 & 0 & 0 \\
a_{21} & a_{22} & a_{23} & 0 & 1 & 0 \\
a_{31} & a_{32} & a_{33} & 0 & 0 & 1
\end{array}\right]
$$

Such an expression has utility when we must perform a set of identical operations on two matrices. Thus, we can perform the operations on the single augmented matrix rather than on the two individual matrices.
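Both the trace and augmentation are one-liners once a matrix is stored as a list of rows. The following Python sketch is illustrative only: the function names and the numeric coefficient matrix are assumptions made for the example.

```python
def trace(a):
    """Sum of the elements on the principal diagonal of a square matrix."""
    return sum(a[i][i] for i in range(len(a)))


def identity(n):
    """Build the n by n identity matrix."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def augment(a, b):
    """Append the columns of b to the right of a, row by row."""
    if len(a) != len(b):
        raise ValueError("both matrices must have the same number of rows")
    return [row_a + row_b for row_a, row_b in zip(a, b)]


A = [[2, 1, 0],
     [1, 3, 1],
     [0, 1, 4]]  # arbitrary 3 by 3 coefficients

print(trace(A))  # 9
print(augment(A, identity(3)))
# [[2, 1, 0, 1, 0, 0], [1, 3, 1, 0, 1, 0], [0, 1, 4, 0, 0, 1]]
```

Because `augment` builds new rows, the original matrix is left unchanged and can still be used on its own.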

PT3.2.3 Representing Linear Algebraic Equations in Matrix Form

It should be clear that matrices provide a concise notation for representing simultaneous linear equations. For example, Eq. (PT3.1) can be expressed as

$$
[A]\{X\} = \{B\} \tag{PT3.5}
$$

where [A] is the n by n square matrix of coefficients,

$$
[A] = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots & & \vdots \\
a_{n1} & a_{n2} & \cdots & a_{nn}
\end{bmatrix}
$$

{B} is the n by 1 column vector of constants,

$$
\{B\}^T = \lfloor b_1 \;\; b_2 \;\; \cdots \;\; b_n \rfloor
$$

and {X} is the n by 1 column vector of unknowns:

$$
\{X\}^T = \lfloor x_1 \;\; x_2 \;\; \cdots \;\; x_n \rfloor
$$

Recall the definition of matrix multiplication [Eq. (PT3.2) or Box PT3.2] to convince yourself that Eqs. (PT3.1) and (PT3.5) are equivalent. Also, realize that Eq. (PT3.5) is a valid matrix multiplication because the number of columns, n, of the first matrix [A] is equal to the number of rows, n, of the second matrix {X}.

This part of the book is devoted to solving Eq. (PT3.5) for {X}. A formal way to obtain a solution using matrix algebra is to multiply each side of the equation by the inverse of [A] to yield

$$
[A]^{-1}[A]\{X\} = [A]^{-1}\{B\}
$$

Because $[A]^{-1}[A]$ equals the identity matrix, the equation becomes

$$
\{X\} = [A]^{-1}\{B\} \tag{PT3.6}
$$

Therefore, the equation has been solved for {X}. This is another example of how the inverse plays a role in matrix algebra that is similar to division. It should be noted that this is not a very efficient way to solve a system of equations. Thus, other approaches are employed in numerical algorithms. However, as discussed in Chap. 10, the matrix inverse itself has great value in the engineering analyses of such systems.
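To make Eq. (PT3.6) concrete, the sketch below solves a 2 by 2 system by forming the inverse from Eq. (PT3.4) and multiplying it by {B}. It is an illustration rather than a recommended solver: the function name and the sample system are arbitrary choices, and integer coefficients are assumed so that exact fractions can be used.

```python
from fractions import Fraction


def solve_2x2_by_inverse(a, b):
    """Return {X} = [A]^-1 {B} for a 2 by 2 system with integer coefficients."""
    (a11, a12), (a21, a22) = a
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("coefficient matrix is singular")
    # Inverse from Eq. (PT3.4), kept as exact fractions.
    inv = [[Fraction(a22, det), Fraction(-a12, det)],
           [Fraction(-a21, det), Fraction(a11, det)]]
    # Matrix-vector product [A]^-1 {B}.
    return [inv[i][0] * b[0] + inv[i][1] * b[1] for i in range(2)]


# Sample system:  3*x1 + 2*x2 = 18
#                  -x1 + 2*x2 = 2
A = [[3, 2], [-1, 2]]
B = [18, 2]

x = solve_2x2_by_inverse(A, B)
print([str(v) for v in x])  # ['4', '3']
```

Substituting x1 = 4 and x2 = 3 back into both equations confirms the result (12 + 6 = 18 and −4 + 6 = 2).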

Finally, we will sometimes find it useful to augment [A] with {B}. For example, if n = 3, this results in a 3-by-4-dimensional matrix:

$$
[A] = \left[\begin{array}{ccc|c}
a_{11} & a_{12} & a_{13} & b_1 \\
a_{21} & a_{22} & a_{23} & b_2 \\
a_{31} & a_{32} & a_{33} & b_3
\end{array}\right]
\tag{PT3.7}
$$

Expressing the equations in this form is useful because several of the techniques for solving linear systems perform identical operations on a row of coefficients and the corresponding right-hand-side constant. As expressed in Eq. (PT3.7), we can perform the manipulation once on an individual row of the augmented matrix rather than separately on the coefficient matrix and the right-hand-side vector.
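The benefit of the augmented form can be seen in a single row operation. The Python sketch below subtracts a multiple of one row from another so that a chosen coefficient becomes zero, and the right-hand-side constant is updated automatically because it travels in the same row. The function name, the sample system, and the use of exact fractions are assumptions for illustration; the full elimination procedure is the subject of Chap. 9 and is not reproduced here.

```python
from fractions import Fraction


def eliminate(aug, pivot_row, target_row, col):
    """Zero out aug[target_row][col] using a multiple of the pivot row.

    Returns a new augmented matrix; assumes aug[pivot_row][col] is nonzero.
    """
    factor = Fraction(aug[target_row][col]) / Fraction(aug[pivot_row][col])
    new_row = [t - factor * p for t, p in zip(aug[target_row], aug[pivot_row])]
    result = [list(row) for row in aug]
    result[target_row] = new_row
    return result


# Augmented matrix for:  3*x1 + 2*x2 = 18
#                         -x1 + 2*x2 = 2
aug = [[3, 2, 18],
       [-1, 2, 2]]

reduced = eliminate(aug, pivot_row=0, target_row=1, col=0)
print([str(v) for v in reduced[1]])  # ['0', '8/3', '8']

# The second row now reads (8/3)*x2 = 8, so x2 follows directly.
x2 = reduced[1][2] / reduced[1][1]
print(x2)  # 3
```

One pass over the row handles the coefficients and the constant together, which is exactly the convenience the augmented matrix is meant to provide.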

PT3.3 ORIENTATION

Before proceeding to the numerical methods, some further orientation might be helpful. The following is intended as an overview of the material discussed in Part Three. In addition, we have formulated some objectives to help focus your efforts when studying the material.

PT3.3.1 Scope and Preview

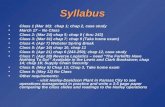

Figure PT3.5 provides an overview for Part Three. Chapter 9 is devoted to the most fundamental technique for solving linear algebraic systems: Gauss elimination. Before launching into a detailed discussion of this technique, a preliminary section deals with simple methods for solving small systems. These approaches are presented to provide you with visual insight and because one of the methods, the elimination of unknowns, represents the basis for Gauss elimination.

After the preliminary material, “naive” Gauss elimination is discussed. We start with this “stripped-down” version because it allows the fundamental technique to be elaborated on without complicating details. Then, in subsequent sections, we discuss potential problems of the naive approach and present a number of modifications to minimize and circumvent these problems. The focus of this discussion will be the process of switching rows, or partial pivoting.

Chapter 10 begins by illustrating how Gauss elimination can be formulated as an LU decomposition solution. Such solution techniques are valuable for cases where many right-hand-side vectors need to be evaluated. It is shown how this attribute allows efficient calculation of the matrix inverse, which has tremendous utility in engineering practice. Finally, the chapter ends with a discussion of matrix condition. The condition number is introduced as a measure of the loss of significant digits of accuracy that can result when solving ill-conditioned matrices.

FIGURE PT3.5 Schematic of the organization of the material in Part Three: Systems of Linear Algebraic Equations. The schematic lists PT3.1 Motivation, PT3.2 Mathematical background, and PT3.3 Orientation; Chapter 9, Gauss Elimination (9.1 Small systems, 9.2 Naive Gauss elimination, 9.3 Pitfalls, 9.4 Remedies, 9.5 Complex systems, 9.6 Nonlinear systems, 9.7 Gauss-Jordan); Chapter 10, LU Decomposition and Matrix Inversion (10.1 LU decomposition, 10.2 Matrix inverse, 10.3 System condition); Chapter 11, Special Matrices and Gauss-Seidel (11.1 Special matrices, 11.2 Gauss-Seidel, 11.3 Libraries and packages); Chapter 12, Engineering Case Studies (12.1 Chemical engineering, 12.2 Civil engineering, 12.3 Electrical engineering, 12.4 Mechanical engineering); and the Epilogue (PT3.4 Trade-offs, PT3.5 Important formulas, PT3.6 Advanced methods).

The beginning of Chap. 11 focuses on special types of systems of equations that have broad engineering application. In particular, efficient techniques for solving tridiagonal systems are presented. Then, the remainder of the chapter focuses on an alternative to elimination methods called the Gauss-Seidel method. This technique is similar in spirit to the approximate methods for roots of equations that were discussed in Chap. 6. That is, the technique involves guessing a solution and then iterating to obtain a refined estimate. The chapter ends with information related to solving linear algebraic equations with software packages and libraries.
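The guess-and-iterate idea can be previewed with a short sketch. The Python code below is a bare-bones version of a Gauss-Seidel style iteration, not the algorithm as developed in Chap. 11: the function name, the zero initial guess, the absolute-change stopping test, and the sample system are all assumptions made for illustration, and the example system was chosen to be diagonally dominant so that the iteration settles down.

```python
def gauss_seidel(a, b, x0=None, tol=1e-10, max_iter=100):
    """Iteratively refine a guess for the solution of [A]{X} = {B}.

    Each unknown is recomputed from its own equation using the most
    recent values of the other unknowns. Assumes nonzero diagonal entries.
    """
    n = len(b)
    x = list(x0) if x0 is not None else [0.0] * n
    for _ in range(max_iter):
        largest_change = 0.0
        for i in range(n):
            others = sum(a[i][j] * x[j] for j in range(n) if j != i)
            new_value = (b[i] - others) / a[i][i]
            largest_change = max(largest_change, abs(new_value - x[i]))
            x[i] = new_value  # updated value is used immediately
        if largest_change < tol:
            return x
    raise RuntimeError("iteration did not converge within max_iter sweeps")


# Sample system:  4*x1 +   x2 = 6
#                 2*x1 + 5*x2 = 12     (exact solution: x1 = 1, x2 = 2)
A = [[4.0, 1.0], [2.0, 5.0]]
B = [6.0, 12.0]

x = gauss_seidel(A, B)
print([round(v, 6) for v in x])  # [1.0, 2.0]
```

Unlike elimination, the iteration never modifies [A], which hints at why such methods are attractive for large, sparse systems.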

Chapter 12 demonstrates how the methods can actually be applied for problem solving. As with other parts of the book, applications are drawn from all fields of engineering.

Finally, an epilogue is included at the end of Part Three. This review includes discussion of trade-offs that are relevant to implementation of the methods in engineering practice. This section also summarizes the important formulas and advanced methods related to linear algebraic equations. As such, it can be used before exams or as a refresher after you have graduated and must return to linear algebraic equations as a professional.

PT3.3.2 Goals and Objectives

Study Objectives. After completing Part Three, you should be able to solve problems involving linear algebraic equations and appreciate the application of these equations in many fields of engineering. You should strive to master several techniques and assess their reliability. You should understand the trade-offs involved in selecting the “best” method (or methods) for any particular problem. In addition to these general objectives, the specific concepts listed in Table PT3.1 should be assimilated and mastered.

TABLE PT3.1 Specific study objectives for Part Three.

1. Understand the graphical interpretation of ill-conditioned systems and how it relates to the determinant.
2. Be familiar with terminology: forward elimination, back substitution, pivot equation, and pivot coefficient.
3. Understand the problems of division by zero, round-off error, and ill-conditioning.
4. Know how to compute the determinant using Gauss elimination.
5. Understand the advantages of pivoting; realize the difference between partial and complete pivoting.
6. Know the fundamental difference between Gauss elimination and the Gauss-Jordan method and which is more efficient.
7. Recognize how Gauss elimination can be formulated as an LU decomposition.
8. Know how to incorporate pivoting and matrix inversion into an LU decomposition algorithm.
9. Know how to interpret the elements of the matrix inverse in evaluating stimulus response computations in engineering.
10. Realize how to use the inverse and matrix norms to evaluate system condition.
11. Understand how banded and symmetric systems can be decomposed and solved efficiently.
12. Understand why the Gauss-Seidel method is particularly well suited for large, sparse systems of equations.
13. Know how to assess diagonal dominance of a system of equations and how it relates to whether the system can be solved with the Gauss-Seidel method.
14. Understand the rationale behind relaxation; know where underrelaxation and overrelaxation are appropriate.

Computer Objectives. Your most fundamental computer objectives are to be able to solve a system of linear algebraic equations and to evaluate the matrix inverse. You will want to have subprograms developed for LU decomposition of both full and tridiagonal matrices. You may also want to have your own software to implement the Gauss-Seidel method.

You should know how to use packages to solve linear algebraic equations and find the matrix inverse. You should become familiar with how the same evaluations can be implemented on popular packages such as Excel and MATLAB software as well as with software libraries.
