
Journal of the Korean Society of Surveying, Geodesy, Photogrammetry and Cartography, Vol. 33, No. 3, 173-179, 2015
http://dx.doi.org/10.7848/ksgpc.2015.33.3.173

Object-oriented Classification of Urban Areas Using Lidar and Aerial Images

Lee, Won Hee 1)

Abstract

In this paper, object-based classification of urban areas based on a combination of information from lidar and aerial images is introduced. High-resolution images are frequently used in automatic classification, which makes use of the spectral characteristics of the features under study. However, pixel-based classification of urban areas can be difficult, since building colors vary and building shadows can obscure building segmentation. If building boundaries can be extracted from lidar, this information could therefore improve the accuracy of urban area classification. In the data processing stage, the lidar data and the aerial image are co-registered into the same coordinate system, and a local maxima filter is used to segment buildings in the lidar data; the segmented points are then converted into an image containing only building information. Next, multiresolution segmentation is performed using a scale parameter, color and shape factors, a compactness factor, and a layer weight, and the resulting objects are classified using a class hierarchy. The results indicate that lidar can provide useful additional data when combined with high-resolution images in the object-oriented hierarchical classification of urban areas.

Keywords : Object-oriented Classification, Lidar, Aerial Image, eCognition, Image Segmentation, Hierarchical Classification


ISSN 1598-4850 (Print), ISSN 2288-260X (Online), Original article

Received 2015. 05. 19, Revised 2015. 06. 12, Accepted 2015. 06. 25

1) Member, School of Convergence & Fusion System Engineering, Kyungpook National University (E-mail: [email protected])

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

1. Introduction

Remote sensing imagery from a large variety of airborne and spaceborne platforms provides valuable data for mapping, environmental monitoring, and other applications (Aplin and Smith, 2008; Csatho et al., 1998; Serpico and Roli, 1995). However, most applications rely on pixel-based image processing, which yields unsatisfactory results for high-resolution images (Rego and Koch, 2003).

High-resolution images contain rich contextual information, such as spatial and spectral information, but pixel-based classification has faced substantial difficulties in utilizing this contextual information (Haala and Walter, 1999). Object-oriented classification, which combines various types of information, is therefore used to overcome these difficulties by classifying objects of various sizes with varying spatial and spectral features (Fraser et al., 2005; Gupta and Bhadauria, 2014). For each image object, meaningful statistics are calculated in an expanded, uncorrelated feature space using the observed shapes and features (Onishi, 2004).

Object-oriented hierarchical classification has been used for the efficient classification of forest cover types (McRoberts and Tomppo, 2007). In addition, tree species have been classified from IKONOS images using object-oriented classification (Hájek, 2005). Principal component analysis (PCA) can be used in pre-processing to reduce redundant data.

Object-oriented classification has been applied in the automated detection of urban changes (Doxani et al., 2012; Walter, 2004), in object recognition (Csatho et al., 1999), and in the classification of shaded urban areas (Zhou et al., 2009). Classification results have been compared with geographic information system (GIS) data, raising the possibility of using them to revise GIS databases. Another GIS revision approach, using remote sensing data with eCognition, can be found in Benz et al. (2004), in which the authors linked remote sensing data and GIS through multiresolution, object-oriented fuzzy analysis. A hierarchical network of image objects can provide a strong link to real-world objects.

This paper shows that lidar data can supplement the aerial image with information about building boundaries in object-oriented classification. A local maxima filter was used to extract lidar points on buildings, which were then combined with the aerial imagery. Fuzzy logic membership values were adopted for the accuracy assessment of the classification.

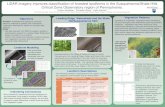

2. Study Area and Dataset

Ocean City, located in the northern part of the coastal area of Maryland, U.S., was selected as the test area since it contains a combination of buildings, roads, coast, and sea. This varied terrain is suitable for classification combining lidar and aerial imagery.

The dataset consists of stereo images and lidar data. An RC20 camera was used to acquire panchromatic aerial images; the camera model is UA9II 3043, and its calibrated focal length is 152.74 mm. The Airborne Topographic Mapper (ATM) laser system was used to acquire digital elevation data; it achieves a vertical accuracy of better than 10 cm. Fig. 1 shows an aerial image of the study area with the lidar data (red) overlaid.

3. Object-oriented Classification

Classification is the process of assigning classes to pixels from remote sensing data. In an agricultural scene, for example, each pixel could be assigned the class wheat, rye, barley, or fallow. This is important for remote sensing and GIS work, since a classified image is much easier to incorporate than the original unclassified image. In this study, the fuzzy classification method is used. Fuzzy logic is a mathematical approach to quantifying uncertain statements.

The basic idea is to replace the two strictly logical statements, “yes” and “no,” with a continuous range of values between 0 and 1, where 0 means “exactly no” and 1 means “exactly yes.” All values between 0 and 1 represent a more or less certain state of “yes” and “no.” Thus, fuzzy logic is able to emulate human thinking and can even take linguistic rules into account. Fuzzy classification systems are well suited to handling the ambiguity involved in extracting information from remote sensing data. Parameter and model uncertainties are considered using fuzzy sets defined by membership functions. Instead of the binary “true” and “false,” multivalued fuzzy logic allows transitions between “true” and “false.” Additionally, the logical operations “and” and “or” can be realized more or less strictly. The membership degree for each land cover or land use class is given for each object. This enables a detailed performance analysis and gives insight into the class mixture of each image object, which is a major advantage of fuzzy classification. In this study, a fuzzy nearest neighbor classifier is implemented. Because it classifies image objects, formed by grouping neighboring pixels into meaningful elements, rather than individual pixels, the approach is called object-oriented classification.
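The fuzzy concepts above can be made concrete with a small Python sketch. Minimum and maximum are the strict forms of fuzzy “and” and “or,” while an arithmetic mean is one softer alternative. The nearest neighbor membership function is a hypothetical parametrization, membership = slope^(d²), where d is the feature-space distance from an object to the nearest training sample of a class, normalized per feature; eCognition's own nearest neighbor function may be defined differently, and all names here are illustrative.

```python
import math


def fuzzy_and(*memberships):
    # Strict "and": the conjunction is only as true as its weakest term
    return min(memberships)


def fuzzy_or(*memberships):
    # Strict "or": the disjunction is as true as its strongest term
    return max(memberships)


def fuzzy_and_mean(*memberships):
    # Softer "and": every term contributes to the result
    return sum(memberships) / len(memberships)


def nn_membership(features, samples, feature_std, slope=0.2):
    """Membership of one image object in one class (hypothetical form).

    features: feature vector of the object (e.g. mean brightness, lidar layer value)
    samples: feature vectors of that class's training samples
    feature_std: per-feature scale used to normalize distances
    membership = slope ** (d ** 2): 1 at d = 0, equal to `slope` at d = 1.
    """
    best_d2 = math.inf
    for sample in samples:
        d2 = sum(((f - s) / sd) ** 2 for f, s, sd in zip(features, sample, feature_std))
        best_d2 = min(best_d2, d2)  # keep the squared distance to the nearest sample
    return slope ** best_d2
```

Computing `nn_membership` once per class gives each object the list of class memberships that fuzzy classification relies on.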

Fig. 1. Aerial image and lidar (red) for the study area

The proposed method integrates information from lidar and aerial images for the object-oriented classification of urban areas. MATLAB was used for local maxima filtering and for the conversion from point data to images. ERDAS IMAGINE was used for the coordinate transformation and for the multiresolution segmentation needed to implement object-oriented classification. The method follows five major steps. First, lidar points on buildings are extracted using the local maxima filter. Second, the building segmentation data from the lidar points are converted into images. Third, the coordinate systems are unified. Fourth, multiresolution segmentation and classification are performed. The final step is accuracy assessment.
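Step four relies on multiresolution segmentation, which the abstract ties to a scale parameter, color and shape factors, a compactness factor, and layer weights. The Python sketch below shows how these parameters are commonly described as combining into the cost of merging two adjacent regions in eCognition-style region merging: a weighted color heterogeneity term plus a shape term split into compactness and smoothness, with a merge allowed while the cost stays below the square of the scale parameter. The `Region` fields and function names are hypothetical, the caller is assumed to supply the statistics of the merged region, and the weights are example values rather than the settings used in this study.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Region:
    n: int                  # number of pixels in the region
    std: Sequence[float]    # standard deviation of pixel values in each layer
    perimeter: float        # border length l of the region
    bbox_perimeter: float   # perimeter b of the region's bounding box


def _shape_terms(r: Region):
    # Size-weighted compactness (l / sqrt(n)) and smoothness (l / b) terms
    return r.n * r.perimeter / math.sqrt(r.n), r.n * r.perimeter / r.bbox_perimeter


def fusion_cost(o1, o2, merged, layer_weights, color_weight=0.9, compactness=0.5):
    """Increase in heterogeneity if regions o1 and o2 are merged into `merged`."""
    # Color: growth of the size-weighted standard deviation, summed over weighted layers
    h_color = sum(
        w * (merged.n * sm - (o1.n * s1 + o2.n * s2))
        for w, sm, s1, s2 in zip(layer_weights, merged.std, o1.std, o2.std)
    )
    (cm, smm), (c1, sm1), (c2, sm2) = _shape_terms(merged), _shape_terms(o1), _shape_terms(o2)
    h_cmpct = cm - (c1 + c2)
    h_smooth = smm - (sm1 + sm2)
    h_shape = compactness * h_cmpct + (1 - compactness) * h_smooth
    # The shape factor is 1 - color_weight
    return color_weight * h_color + (1 - color_weight) * h_shape


def should_merge(cost, scale):
    # Merging continues only while the cost stays below the squared scale parameter
    return cost < scale ** 2
```

A larger scale parameter tolerates more heterogeneity and therefore yields larger image objects, while raising `compactness` favors compact objects over smooth-bordered ones.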

To provide information on building locations, lidar points on buildings must be extracted from the original lidar data. The basic concept is that lidar points on buildings are higher than neighboring ground points. The local maxima filter extracts points that lie above a threshold given by the minimum height within the filter window plus a threshold value. In this paper, the threshold value was 6 m, which is roughly equivalent to the height of a two-story building or house. The filter size was 30 m by 30 m; because this exceeds the size of the largest building, a window placed on a building also covers surrounding ground, which makes it an appropriate size for detecting only points on buildings. However, a large filter can cause extraction errors, because the real ground may be tilted and may not be at sea level. A large filter could therefore extract a point on a hill as a building point and, in low-lying areas, neighboring ground points could be detected as building points.

Fig. 2 illustrates the basic concept of the local maxima fi lter.
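The filtering rule can be sketched in Python with NumPy; the paper's own implementation was written in MATLAB and is not reproduced here. The sketch assumes a square window centered on each point and a brute-force neighbor search, which is adequate for small tiles; a spatial index would be needed for a full lidar strip. The 30 m window and 6 m threshold are the values given above, and the function name is hypothetical.

```python
import numpy as np


def local_maxima_filter(points, window=30.0, height_threshold=6.0):
    """Flag lidar points that are likely to lie on buildings.

    points: (N, 3) array of x, y, z coordinates in metres.
    A point is flagged when its height exceeds the minimum height inside a
    window x window square centred on it by more than height_threshold.
    """
    pts = np.asarray(points, dtype=float)
    xy, z = pts[:, :2], pts[:, 2]
    half = window / 2.0
    is_building = np.zeros(len(pts), dtype=bool)
    for i in range(len(pts)):
        # Square window centred on point i (brute force over all points)
        in_window = np.all(np.abs(xy - xy[i]) <= half, axis=1)
        local_min = z[in_window].min()  # lowest point nearby, assumed to be ground
        # On sloping terrain this minimum can sit well below the local ground,
        # which is the source of the errors discussed above
        is_building[i] = z[i] > local_min + height_threshold
    return is_building
```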

Fig. 3 shows an aerial image with lidar points on a building, together with a large-scale aerial image and the lidar points on the buildings of the commercial area of Ocean City.

The building segmentation data are point data, which cannot be processed by eCognition. Therefore, the point data are converted into an image with the same resolution and dimensions as the aerial image, so that the two can be used together directly.

The ground size of a single pixel in the aerial image is about 0.09 m. Because lidar points are not dense enough to represent a whole building as a polygon, each building point is expanded when it is converted to image format: an area of 11 × 11 pixels around the point (about 1 m × 1 m, since 11 × 0.09 m ≈ 1 m) is classed as a building area. This assumption is required to provide the building boundary information.
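The conversion from building points to an image layer can be sketched as follows. This Python sketch assumes a north-up aerial image without rotation whose upper-left corner has map coordinates `origin`, with 0.09 m pixels; `half_width=5` yields the 11 × 11 pixel square around each point described above. The function name and arguments are hypothetical.

```python
import numpy as np


def points_to_building_mask(points_xy, shape, origin, pixel_size=0.09, half_width=5):
    """Burn building points into a binary image aligned with the aerial image.

    points_xy: iterable of (x, y) map coordinates of building points
    shape: (rows, cols) of the aerial image
    origin: (x, y) map coordinates of the image's upper-left corner (north-up assumed)
    half_width=5 marks an 11 x 11 pixel square (about 1 m at 0.09 m pixels)
    """
    rows, cols = shape
    x0, y0 = origin
    mask = np.zeros(shape, dtype=np.uint8)
    for x, y in points_xy:
        col = int(np.floor((x - x0) / pixel_size))
        row = int(np.floor((y0 - y) / pixel_size))  # rows increase southwards
        r0, r1 = max(row - half_width, 0), min(row + half_width + 1, rows)
        c0, c1 = max(col - half_width, 0), min(col + half_width + 1, cols)
        if r0 < r1 and c0 < c1:  # skip squares that fall entirely outside the image
            mask[r0:r1, c0:c1] = 1
    return mask
```

Because the mask shares the aerial image's grid, it can be loaded as an additional layer alongside the aerial image for segmentation and classification.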

Fig. 4 shows the image of building-segmented lidar data in the commercial area.

Fig. 2. Local maxima filter

Fig. 3. Aerial images and lidar points (red) on buildings

Fig. 4. Aerial image and building-segmented lidar data


4. Results and Discussion

4.1 Classification of commercial and residential areas

The test commercial area contains two buildings, roads, a parking lot, a white impervious surface, a grey impervious surface, shadow, sand, and trees. Thus, the upper level of the class hierarchy (i.e., the parent classes) consists of impervious surfaces and non-impervious surfaces. The detailed class hierarchy is shown in Fig. 5. The class hierarchy of the residential area differs somewhat from that of the commercial area, since white and grey impervious surfaces do not exist in the residential area; instead, colored impervious surfaces there are categorized into white- and grey-roofed houses, as shown in Fig. 5. The class hierarchy is the framework of object-oriented classification that creates the knowledge base for a given classification, and it reduces the complexity and redundancy of the classification. Fig. 6 shows the classification of an aerial image and building segmentation data from lidar in the commercial area.

Both the buildings and the building facades have lidar points, but their pixel values in the aerial image differ. Therefore, the buildings and the building facades were assigned to different classes. In addition, the “building facade” and “tree” classes have similar characteristics, since both contain lidar points and have similar pixel values in the aerial image.

Fig. 7 shows the classification of an aerial image and building segmentation data from lidar in the residential area.

Fig. 5. Class hierarchies for commercial and residential areas: (a) commercial area, (b) residential area

Fig. 6. Classification of an aerial image and building segmentation data from lidar in the commercial area

Fig. 7. Classification of an aerial image and building segmentation data from lidar in the residential area: (a) original image, (b) image only, (c) image + lidar

4.2 Accuracy assessment

Classified image objects are not simply assigned to a single class; each object carries a detailed list of its membership values for every class in the class hierarchy. An image object is assigned to the class with the highest membership value, as long as this value is equal to or greater than the minimum membership value. A detailed analysis of the accuracy after classification is therefore an important issue.


When fuzzy classification methods are used, objects can belong to several classes with different degrees of membership if the class descriptions overlap. Thus, to evaluate the reliability or stability of the classes, it is necessary to examine the different degrees of membership of the classified objects.
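Section 4.2 states that an object takes the class with the highest membership value only if that value reaches the minimum membership value. The following Python sketch illustrates that rule; it also reports the gap between the best and second-best memberships as one simple indicator of how stable the assignment is. The dictionary input, the function name `assign_class`, and the default threshold of 0.1 are hypothetical choices for illustration, not eCognition's interface.

```python
def assign_class(memberships, min_membership=0.1):
    """Pick the class with the highest membership for one image object.

    memberships: dict mapping class name -> membership value in [0, 1]
    Returns (class or None if unclassified, best membership, stability), where
    stability is the gap between the best and second-best memberships.
    """
    ranked = sorted(memberships.items(), key=lambda item: item[1], reverse=True)
    best_class, best = ranked[0]
    second = ranked[1][1] if len(ranked) > 1 else 0.0
    stability = best - second  # a small gap means the object fits two classes almost equally
    if best < min_membership:
        return None, best, stability  # below the minimum membership: leave unclassified
    return best_class, best, stability
```

An object with memberships of 0.9 for “Building” and 0.6 for “Trees, elevator top, or building facade” is assigned to “Building,” but the modest gap flags it as a mixed object worth inspecting.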

Tables 1 to 4 list the number of objects and the mean, standard deviation, minimum, and maximum fuzzy membership values of each class for the commercial and residential areas. The fuzzy membership values range from 0 (maximum ambiguity) to 1 (maximum certainty). The membership function of each class is provided by the eCognition software.

The overall accuracies reach 96.37% and 97.54% in the commercial and residential areas, respectively, as shown in Tables 2 and 4. The overall accuracies of the pixel-based classification using only aerial imagery are 76.47% and 71.15% in the commercial and residential areas, respectively, as shown in Tables 1 and 3. Combining the aerial image with lidar therefore improved the overall accuracy by 19.90 and 26.39 percentage points, because the lidar data supply the aerial image with supplementary information about building areas.
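The per-class statistics in Tables 1 to 4 (number of objects and the mean, standard deviation, minimum, and maximum membership) can be reproduced from a list of classified objects with a few lines of Python. This is a sketch under two assumptions: each object contributes its final membership value to its assigned class, and the population standard deviation is used. The paper does not state which convention eCognition applies, and the function name is hypothetical.

```python
import statistics
from collections import defaultdict


def membership_table(objects):
    """Summarise fuzzy membership values per class.

    objects: iterable of (class_name, membership) pairs, one per classified object
    Returns {class_name: {"objects", "mean", "std", "min", "max"}}.
    """
    by_class = defaultdict(list)
    for class_name, membership in objects:
        by_class[class_name].append(membership)
    table = {}
    for class_name, values in by_class.items():
        table[class_name] = {
            "objects": len(values),
            "mean": statistics.fmean(values),
            "std": statistics.pstdev(values),  # population standard deviation (assumed)
            "min": min(values),
            "max": max(values),
        }
    return table
```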

Table 1. Statistics for all objects in the classification using image only in the commercial area

| Class | Objects | Mean | Std. Dev. | Minimum | Maximum |
|---|---:|---:|---:|---:|---:|
| Building | 136 | 0.7160 | 0.2487 | 0.3747 | 0.9158 |
| Shadow | 27 | 0.8152 | 0.1090 | 0.6818 | 1 |
| Trees, elevator top, or building facade | 69 | 0.6812 | 0.1983 | 0.3814 | 1 |
| White sand | 10 | 1.000 | 0.0000 | 1.0000 | 1 |
| Road and parking lot | 134 | 0.8514 | 0.1647 | 0.5649 | 1 |
| Grey impervious surface | 110 | 0.7431 | 0.1857 | 0.5176 | 1 |
| White impervious surface | 47 | 0.7525 | 0.2751 | 0.5820 | 1 |

Overall accuracy: 0.7647

Table 2. Statistics for all objects in the object-oriented classification in the commercial area

| Class | Objects | Mean | Std. Dev. | Minimum | Maximum |
|---|---:|---:|---:|---:|---:|
| Building | 116 | 0.9822 | 0.0588 | 0.5707 | 1 |
| Shadow | 38 | 0.9976 | 0.0043 | 0.9818 | 1 |
| Trees, elevator top, or building facade | 75 | 0.8940 | 0.1983 | 0.4014 | 1 |
| White sand | 8 | 1.000 | 0.0000 | 1.0000 | 1 |
| Road and parking lot | 120 | 0.9980 | 0.0059 | 0.9562 | 1 |
| Grey impervious surface | 122 | 0.9512 | 0.1152 | 0.6042 | 1 |
| White impervious surface | 52 | 0.9423 | 0.1231 | 0.5820 | 1 |

Overall accuracy: 0.9637

Table 3. Statistics for all objects in the classification using image only in the residential area

| Class | Objects | Mean | Std. Dev. | Minimum | Maximum |
|---|---:|---:|---:|---:|---:|
| White-roofed house | 28 | 0.6194 | 0.2595 | 0.4577 | 1 |
| Shadow | 15 | 0.8024 | 0.1557 | 0.6432 | 1 |
| Grassland | 225 | 0.7249 | 0.1957 | 0.4922 | 1 |
| Waterbody | 31 | 0.7118 | 0.1491 | 0.4555 | 1 |
| Road | 91 | 0.7057 | 0.1947 | 0.4172 | 1 |
| Grey-roofed house or trees | 44 | 0.6822 | 0.2487 | 0.4860 | 1 |

Overall accuracy: 0.7115

Table 4. Statistics for all objects in the object-oriented classification in the residential area

| Class | Objects | Mean | Std. Dev. | Minimum | Maximum |
|---|---:|---:|---:|---:|---:|
| White-roofed house | 31 | 0.9832 | 0.0403 | 0.8334 | 1 |
| Shadow | 11 | 0.9877 | 0.0264 | 0.9062 | 1 |
| Grassland | 205 | 0.9885 | 0.0367 | 0.7707 | 1 |
| Waterbody | 26 | 0.9131 | 0.1242 | 0.9103 | 1 |
| Road | 82 | 0.9854 | 0.0446 | 0.546 | 1 |
| Grey-roofed house or trees | 36 | 0.9124 | 0.2131 | 0.718 | 1 |

Overall accuracy: 0.9754

5. Conclusion

The experiment shows that combining information from aerial images and lidar improves the accuracy of object-oriented classification: the overall accuracy rose from 76.47% to 96.37% in the commercial area and from 71.15% to 97.54% in the residential area. Object-oriented classification can perform automated classification using specialized membership functions. A local maxima filter was used to extract lidar points on buildings, and these point data were converted to an image for processing in eCognition. The image of the building-segmented lidar data has the same resolution and dimensions as the aerial image and supplements it with information on building boundaries. Finally, fuzzy logic membership values were adopted for the accuracy assessment of the classification. Lidar offers accurate position and height information but contains less information on the geometric shapes of features. Thus, unlike other object-oriented classification studies, which usually use lidar DEM data, this study used the extracted building points to provide supplementary data on the geometric shapes of features.

References

Aplin, P. and Smith, G. (2008), Advances in object-based image classification, International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Vol. 37, Part B7, pp. 725-728.

Benz, U., Hofmann, P., Willhauck, G., Lingenfelder, I., and Heynen, M. (2004), Multiresolution, object-oriented fuzzy analysis of remote sensing data for GIS ready information, ISPRS Journal of Photogrammetry & Remote Sensing, Vol. 58 (3-4), pp. 239-258.

Csatho, B., Krabill, W., Lucas, J., and Schenk, T. (1998), A multisensor data set of an urban and coastal scene, International Archives of Photogrammetry and Remote Sensing, Vol. 32 (3/2), pp. 588-592.

Csatho, B., Schenk, T., Lee, D., and Filin, S. (1999), Inclusion of multispectral data into object recognition, International Archives of Photogrammetry and Remote Sensing, Vol. 32 (7-4-3 W6), pp. 53-61.

Doxani, G., Karantzalos, K., and Tsakiri-Strati, M. (2012), Monitoring urban changes based on scale-space filtering and object-oriented classification, International Journal of Applied Earth Observation and Geoinformation, Vol. 15, pp. 38-48.

Fraser, R.H., Abuelgasim, A., and Latifovic, R. (2005), A method for detecting large-scale forest cover change using coarse spatial resolution imagery, Remote Sensing of Environment, Vol. 95, pp. 414-427.

Gupta, N. and Bhadauria, H.S. (2014), Object based information extraction from high resolution satellite imagery using eCognition, International Journal of Computer Science Issues, Vol. 11, Issue 3, No. 2, pp. 139-144.

Haala, N. and Walter, V. (1999), Classification of urban environments using LIDAR and color aerial image, International Archives for Photogrammetry and Remote Sensing, Vol. 32 (7-4-3W6), pp. 76-82.

Hájek, F. (2005), Object-Oriented Classification of Remote Sensing Data for the Identification of Tree Species Composition, The National Agency for Agricultural Research under a Project Code QG50097, Department of Forestry Management, Forestry and Environment CUA Prague.

McRoberts, R.E. and Tomppo, E.O. (2007), Remote sensing support for national forest inventories, Remote Sensing of Environment, Vol. 110, pp. 412-419.

Onishi, N. (2004), Approach to hierarchical forest cover type classification with object-oriented method, The 25th Asian Conference on Remote Sensing, Chiang Mai, Thailand, pp. 1701-1704.

Rego, F. and Koch, B. (2003), Automatic classification of land cover with high resolution data of the Rio de Janeiro city Brazil comparison between pixel and object classification, Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 27-29 June, Regensburg, Germany.

Serpico, S. and Roli, F. (1995), Classification of multisensor remote sensing images by structured neural networks, IEEE Transactions on Geoscience and Remote Sensing, Vol. 33, No. 3, pp. 562-577.

Walter, V. (2004), Object-based classification of remote sensing data for change detection, ISPRS Journal of Photogrammetry & Remote Sensing, Vol. 58, pp. 225-238.

Zhou, W., Huang, G., Troy, A., and Cadenasso, M. (2009), Object-based land cover classification of shaded areas in high spatial resolution imagery of urban areas: a comparison study, Remote Sensing of Environment, Vol. 113 (8), pp. 1769-1777.