
UNIVERSITY OF ENGINEERING AND TECHNOLOGY TAXILA

RESEARCH PROJECT PROPOSAL PROFORMA

DEPARTMENT OF CIVIL ENGINEERING

PhD Student Particulars No.: ………………... Date: …………………...

Name: Muhammad Shahid Regd No:

Date of registration: 08F-UET/PhD-CP-13 Status: Full Time

Research Supervisor:

Title of Research Project Proposal

Vehicle Future Position Augmentation over Wind Screen: Head-up-Display and Eye Tracking Framework.

Requested Amount

150,000/-

Tentative Starting Date

January, 2011

Duration

1 Year

The PhD Research Committee Meeting held on _____________ at ______________,

[1]. Recommends the approval of the research project proposal

[2]. Rejects the research project proposal

[3]. Recommends the approval with following suggestions

……………………………………………………………………………………

……………………………………………………………………………………

……………………………………………………………………………………

1. Name: Dr. Tabassam Nawaz Signature: _______________________

(PhD Research Supervisor)

3. Name: Prof. Dr. M. Iram Baig Signature: _______________________

(PhD Research Committee Member)

4. Name: Dr. Samiuddin Signature: _______________________

(PhD Research Committee Member)

5. Name: Dr. Hafiz Adnan Habib Signature: _______________________

(PhD Research Committee Member)

FACULTY RESEARCH PROJECT PROPOSAL SUBMITTED TO DIRECTOR OF ADVANCED STUDIES, RESEARCH AND TECHNOLOGICAL DEVELOPMENT, UNIVERSITY OF ENGINEERING & TECHNOLOGY TAXILA

(COVER PAGE)

Name of Sponsoring Agency/Department/Organization: Grant No: ______________

Faculty: Telecommunication & Information Engineering

Department: Computer Engineering

Area of Specialization: Computer Vision & Image Processing

Title of Research Project Proposal

Vehicle Future Position Augmentation over Wind Screen: Head-up-Display and Eye Tracking Framework

Requested Amount: 150,000/-

Tentative Start Date: January 2011

Duration: 1 Year

| Investigator | Name | Designation | Highest Degree | Degree Date | Institution |
|---|---|---|---|---|---|
| Principal Investigator | Dr. Tabassam Nawaz | Associate Professor (Chairman SED) | Ph.D. | August 2008 | UET Taxila |
| Co-Investigator | Muhammad Shahid | PhD Scholar | MSc | March 2007 | NUST, Rwp. |
| Co-Investigator | | | | | |
| Co-Investigator | | | | | |

Signature: ______________ (Principal Investigator) ______________ (Co-Investigator) ______________ (Co-Investigator) ______________ (Co-Investigator)

| Endorsements | Chairman of Department | Dean of Faculty |
|---|---|---|
| Name | Dr. M. Iram Baig | Prof. Dr. Adeel Akram |
| Signature with Date | | |

CONTENTS

1. TITLE AND PROJECT SUMMARY

2. PROJECT DESCRIPTION

2.1 Literature Review

2.2 Project and Program Objectives

2.3 Project Management Design

2.4 Organization and Management Plan

2.5 Work Schedule Plan

2.6 Budget Description

2.7 Utilization

3. CITED REFERENCES

4. CURRICULUM VITAE

5. UNDERTAKING

Project Title

Vehicle Future Position Augmentation over Wind Screen: Head-up-Display and Eye Tracking Framework.

Principal Investigator

Dr. Tabassam Nawaz

Summary of Proposed Work (Use Additional Sheets if Necessary)

This TR&D proposal aims at the development of a novel augmented reality system for vehicle driver assistance. The system assists the driver up to twenty-five meters ahead of the vehicle. Two lines are drawn on the windscreen by a tiny projector inside the vehicle. Each line extends over the windscreen so that it appears to be physically drawn on the road, marking the width of the vehicle on either side. The knowledge base holds pre-stored information about the vehicle width, which can be adjusted for different vehicles.

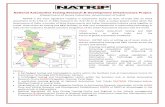

Technical challenge lies in determining the position and shapes of lines to be projected on wind

screen, as shown in Figure 1. Lines can’t be fixed as these lines are projection of road

coordinates to the human eye while passing through wind screen. Lines drawn over wind screen

may be considered as zoomed version of image being developed in human eye. Viewer/driver

head position determines the position of these lines over wind screen. Change in position of face

alters the position of lines on wind screen. Position of face is captured through a camera inside

vehicle focusing the driver.

Figure 1: Proposed Framework
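The geometry described above can be illustrated with a short sketch. Assuming a vehicle-fixed frame (x to the right, y up, z forward, road surface at y = 0, all in meters), a flat road and a planar windscreen, each guide-line point on the road is drawn where the sight line from the driver's eye to that point crosses the windscreen plane. The function names, the frame convention and the numeric values are illustrative assumptions, not part of the proposed system's specification.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def project_to_windscreen(eye: Vec3, road_point: Vec3,
                          plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Intersect the sight line eye -> road_point with the (assumed planar) windscreen."""
    d = _sub(road_point, eye)
    denom = _dot(plane_normal, d)
    if abs(denom) < 1e-9:
        return None  # sight line is parallel to the windscreen
    t = _dot(plane_normal, _sub(plane_point, eye)) / denom
    if not 0.0 < t < 1.0:
        return None  # windscreen is not between the eye and the road point
    return (eye[0] + t * d[0], eye[1] + t * d[1], eye[2] + t * d[2])


def guide_lines(eye: Vec3, vehicle_width: float, plane_point: Vec3,
                plane_normal: Vec3, near: float = 3.0, far: float = 25.0,
                steps: int = 11) -> List[List[Vec3]]:
    """Sample the left and right vehicle-width lines on the road and project them."""
    lines = []
    for x in (-vehicle_width / 2.0, vehicle_width / 2.0):
        pts = []
        for i in range(steps + 1):
            z = near + (far - near) * i / steps
            p = project_to_windscreen(eye, (x, 0.0, z), plane_point, plane_normal)
            if p is not None:
                pts.append(p)
        lines.append(pts)
    return lines


if __name__ == "__main__":
    # Simplification: a vertical windscreen plane at z = 0.8 m; a tilted
    # windscreen only needs a different plane_normal.
    windscreen = ((0.0, 0.0, 0.8), (0.0, 0.0, 1.0))
    for eye in ((0.0, 1.2, 0.0), (0.1, 1.2, 0.0)):  # head moved 10 cm right
        print(eye, project_to_windscreen(eye, (0.9, 0.0, 10.0), *windscreen))
```

Moving the eye by 10 cm shifts the drawn point on the windscreen by several centimeters, which is why the face and eye tracking described in the following sections is needed before the lines can be drawn.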

During the last few years, head-up displays have been installed in top-end vehicles, and this trend has influenced more automotive manufacturers to include head-up displays in their vehicles. In automobiles, head-up displays are currently used to show status information such as speed, fuel level and temperature. This TR&D proposal is a novel application of head-up displays. Such a system can be used directly as a driver assistance system, and wide deployment can be expected as head-up displays become common in automobiles. The system can also be a major extension to driver training systems.

This TR&D proposal seeks funding for the equipment required for the experimental setup, final software application development, embedded system development and the human resources involved. My PhD dissertation focused on the development of a video-based real-time system, which involved algorithm development, development of a testing prototype, coding and testing of algorithms, and finally publication of the work in IEEE Transactions on Consumer Electronics. I have a good understanding of video and imaging systems and am determined to execute this TR&D project successfully.

2. PROJECT DESCRIPTION

2.1 Literature Review

This section is divided into six parts:

2.1.1 Augmented Reality

2.1.2 Display

2.1.2.1 Video see-through

2.1.2.2 Optical see-through

2.1.2.3 Projective

2.1.3 Display positioning

2.1.3.1 Head-worn

2.1.3.2 Hand-held

2.1.3.3 Spatial

2.1.4 Tracking sensors and approaches

2.1.4.1 Modeling environments

2.1.4.2 User movement tracking

2.1.4.2(i) Face Detection

- Face Detection Challenges

- Literature Survey

2.1.4.2(ii) Eye-Gaze Tracking

- Eye-Gaze Challenges

- Literature Survey

2.1.5 Coordinate systems

2.1.5.1 Five frames of reference

2.1.6 Camera Calibration

2.1.6.1 Calibration methods and models

2.1.6.2 Camera model

2.1.1 Augmented Reality

Augmented Reality (AR) is a visualization paradigm that supplements the user's senses (typically vision) with computer-generated information superimposed on the user's view [1]. AR focuses on presenting information for enhanced situational awareness and perception in a temporally and spatially structured manner, allowing users to view, browse, filter, search and interact with data in the real world [2][3]. Schmalstieg et al. [1] state that AR is the natural progression of cartography (map drawing). AR techniques can also remove (occlude) existing objects from reality; such techniques are commonly known as mediated or diminished reality [4].

Augmenting the real world with computer-generated graphics provides users with an experience not possible in either the real or the virtual world alone. AR resides between virtuality (virtual environments) and reality on Milgram and Kishino's Reality-Virtuality continuum [5].

Reality-Virtuality (RV) Continuum, Courtesy of Milgram [15]

The field of modern AR has existed for well over a decade [4], with origins going back to the 1960s, when Sutherland (1968) and his students at Harvard University and the University of Utah developed a head-mounted three-dimensional display; Sutherland had earlier developed Sketchpad (1963), a man-machine graphical communication system. AR systems are defined by their ability to spatially integrate virtual objects into the physical world in real time, immersing the user in an information-rich, interactive environment [1].

AR can be applied to any human sense, although vision is most commonly augmented. Most applications use head-mounted displays (HMDs) to display spatially registered computer-generated images over the real world for the user to see [6]. AR is useful in any situation where additional information is required. Known application areas of AR include medicine, manufacturing, construction, automotive maintenance, environmental management, tourism, entertainment, architecture, the military, and the aerospace and automobile industries. In many critical applications, such as medical operations and military simulations, it has been found advantageous to conduct simulations within safe augmented environments that are physically accurate and visually realistic.

In automotive and aircraft AR applications, the virtual graphics shown on the head-up display (HUD) are typically not aligned with real objects, resulting in a weak link between virtuality and reality. A real sense of the virtual and real worlds meeting is achieved only when the graphics are spatially aligned with real-world objects, which requires accurate real-time tracking of the user's position and orientation [7]. The diversity of applications to which AR has been applied makes a consensual definition difficult. Rather than giving a strict definition, Azuma [4] characterizes AR as a computer system that combines real and virtual objects, is interactive in real time, and is registered in two- or three-dimensional space. While this characterization is not perfect, it is generally inclusive of all augmented reality derivatives. In the context of vehicle future position augmentation over the windscreen, we adopt this definition and treat the application as an area of augmented reality in which augmentation is driven by a pre-stored knowledge base of vehicle dimensions and by determination of the user's position and orientation (and therefore of the user's view of the surrounding environment). Augmented reality has been identified as an excellent user interface for this application, as it augments the user's senses with additional information about the vehicle's future position.

2.1.2 Display

Of all the modalities of human sensory input, sight, sound and touch are the senses that AR systems currently apply most commonly. Since the proposed research is limited to sight, this section focuses only on visual displays.

There are basically three ways to present augmented reality visually. Closest to virtual reality is video see-through, where the virtual environment is replaced by a video feed of reality and the AR overlay is superimposed on the digitized images. The second, optical see-through, leaves the real-world perception untouched and displays only the AR overlay by means of transparent mirrors and lenses. The third approach is to project the AR overlay onto the real objects themselves, resulting in projective displays. True 3D displays for the masses are still far off, although the system in [8] already achieves 1000 dots per second in true 3D free space using plasma in the air. The three techniques may be applied at varying distances from the viewer: head-mounted, hand-held and spatial.

2.1.2.1 Video see-through

Besides being the cheapest and easiest to implement, this display technique offers several other advantages: (1) it can mediate or remove objects from reality, including removing fiducial markers or placeholders or replacing them with virtual objects; (2) it makes it easy to match the brightness and contrast of virtual objects to the real environment, which matters when computer-generated content has to blend smoothly into a static outdoor scene whose light conditions must be evaluated; (3) it allows tracking of head movement for better registration; and (4) it makes it possible to match the perception delays of the real and virtual worlds. Issues in video see-through include low resolution of reality, a limited field of view, and user disorientation due to parallax (eye offset), caused by the camera being positioned at a distance from the viewer's true eye location, which requires significant adjustment effort from the viewer.
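As a minimal sketch of advantage (2), assuming grayscale frames stored as nested lists of intensities (0 to 255) and a per-pixel alpha mask for the overlay, the overlay can be rescaled to the mean brightness of the camera image before alpha compositing. Matching only the mean brightness is a deliberately crude stand-in for real light-estimation methods, and the function name is hypothetical.

```python
from typing import List

Image = List[List[float]]


def blend_overlay(frame: Image, overlay: Image, alpha: Image,
                  match_brightness: bool = True) -> Image:
    """Alpha-composite a virtual overlay onto a grayscale camera frame."""
    gain = 1.0
    if match_brightness:
        scene = [v for row in frame for v in row]
        covered = [overlay[y][x]
                   for y in range(len(frame))
                   for x in range(len(frame[0]))
                   if alpha[y][x] > 0]
        if covered and sum(covered) > 0:
            # Scale the overlay so its mean equals the mean scene brightness.
            gain = (sum(scene) / len(scene)) / (sum(covered) / len(covered))
    out = []
    for y, row in enumerate(frame):
        out_row = []
        for x, background in enumerate(row):
            foreground = min(255.0, overlay[y][x] * gain)
            a = alpha[y][x]
            out_row.append(a * foreground + (1.0 - a) * background)
        out.append(out_row)
    return out
```

With `match_brightness=False` the function reduces to ordinary alpha compositing, which makes it easy to compare the two results on the same frame.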

2.1.2.2 Optical see-through

Optical see-through techniques with beam-splitting holographic optical elements (HOEs) may be applied in head-worn displays, hand-held displays and spatial setups, where the AR overlay is mirrored either from a planar screen or through a curved screen. These displays not only leave the real-world resolution intact, they also have the advantage of being cheaper, safer and parallax-free (no eye offset due to camera positioning). Optical techniques are safer because users can still see when power fails, making them ideal for military and medical purposes. However, other input devices such as cameras are required for interaction and registration. Challenges in combining the virtual objects holographically through transparent mirrors and lenses include reduced brightness and contrast of both the images and the real-world perception.
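The loss of contrast can be made concrete with a simple additive model of a beam-splitting combiner, assumed here for illustration: the viewer sees the real world attenuated by the combiner's transmittance T plus the display light scaled by its reflectance R, so the overlay's contrast against the background is (T·L_world + R·L_display) / (T·L_world). The T, R and luminance values in the usage lines are illustrative, not measured.

```python
def perceived_luminance(world: float, display: float,
                        transmittance: float, reflectance: float) -> float:
    """Luminance (cd/m^2) reaching the eye through an idealized optical combiner."""
    return transmittance * world + reflectance * display


def overlay_contrast(world: float, display: float,
                     transmittance: float, reflectance: float) -> float:
    """Ratio of overlay-plus-background luminance to background-only luminance."""
    background = transmittance * world
    if background <= 0.0:
        return float("inf")  # completely dark background
    return perceived_luminance(world, display, transmittance, reflectance) / background


if __name__ == "__main__":
    for label, world in (("bright daylight", 10000.0), ("night", 10.0)):
        print(label, round(overlay_contrast(world, 10000.0, 0.7, 0.2), 2))
```

Under this model the same display luminance yields a far smaller contrast ratio against a bright scene than against a dark one, which is why daytime readability is a central design constraint for a windscreen overlay.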

2.1.2.3 Projective

These displays have the advantage that they do not require special eyewear, thus accommodating the user's eyes during focusing, and they can cover large surfaces for a wide field of view. Projection surfaces may range from flat, plain-colored walls to complex scale models. Zhou et al. [9] list multiple pico projectors that are lightweight and low in power consumption for better integration. However, as with optical see-through displays, other input devices are required for (indirect) interaction. Also, in most applications the projectors need to be calibrated each time the environment or the distance to the projection surface changes (crucial in mobile setups). Fortunately, calibration may be automated using cameras, e.g. in a multi-walled Cave Automatic Virtual Environment (CAVE) with irregular surfaces [10]. Furthermore, this type of display is limited to indoor use only, due to the low brightness and contrast of the projected images. Occlusion or mediation of objects is also quite poor, but for head-worn projectors this may be improved by covering surfaces with retro-reflective material. Objects and instruments covered in this material reflect the projection directly back toward the light source, which is close to the viewer's eyes, thus not interfering with the projection.
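For a planar projection surface (a simplifying assumption for a windscreen), camera-assisted projector calibration can reduce to estimating a 3×3 homography from four point correspondences, for example four projected markers located by the camera. The sketch below solves the standard eight-unknown linear system with Gaussian elimination; fitting it with desired windscreen coordinates as `src` and projector pixels as `dst` gives the mapping needed to draw at a chosen windscreen location. All function names are hypothetical, and a curved windscreen would need a denser correspondence model.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(A: Matrix, b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a square system A x = b."""
    n = len(A)
    M = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate correspondences (e.g. three collinear points)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography_from_4_points(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 homography H (with H[2][2] fixed to 1) mapping each src point onto dst."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h6 x + h7 y + 1) = h0 x + h1 y + h2, rearranged to be linear in h.
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(H: Matrix, x: float, y: float) -> Point:
    """Map (x, y) through H, including the perspective division."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)
```

Recalibration after the projector or seat position changes then amounts to re-detecting the four markers and calling `homography_from_4_points` again.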

2.1.3 Display positioning

AR displays may be classified into three categories based on their position between the viewer

and the real environment: head-worn, hand-held, and spatial.

2.1.3.1 Head-worn

Visual displays attached to the head include the video/optical see-through head-mounted display (HMD), the virtual retinal display (VRD) and the head-mounted projective display (HMPD). A current drawback of head-worn displays is that they have to be connected to graphics computers such as laptops, whose limited battery life restricts mobility.

2.1.3.2 Hand-held

This category includes hand-held video/optical see-through displays as well as hand-held projectors. Although this category of displays is bulkier than head-worn displays, it is currently the best workaround for introducing AR to a mass market, due to low production costs and ease of use. For instance, hand-held video see-through AR acting as a magnifying glass may be based on existing consumer products such as mobile phones (Möhring et al.).

2.1.3.3 Spatial

Displays in the last category are placed statically within the environment and include screen-based video see-through displays, spatial optical see-through displays, and projective displays such as the windshield in automobile applications. These techniques lend themselves well to presentations and exhibitions of varying size with limited interaction. Early ways of creating AR were based on conventional screens (computer or television) showing a camera feed with an AR overlay. This technique is commonly applied in sports television, where environments such as swimming pools and race tracks are well defined and easy to augment. Head-up displays (HUDs) in military cockpits and in the aerospace and automobile industries are a form of spatial optical see-through display and are expected to become a standard feature of future cars, projecting navigational directions onto the windshield.

2.1.4 Tracking sensors and approaches

Before an AR system can display virtual objects in a real environment, the system must be able (1) to sense the environment and (2) to track the viewer's (relative) movement, preferably with six degrees of freedom (6DOF): three variables (x, y and z) for position and three angles (yaw, pitch and roll) for orientation. Some model of the environment must exist to allow tracking for correct AR registration. Furthermore, most environments have to be prepared before an AR system is able to track 6DOF movement, and not all tracking techniques work in all environments. To this day, determining the orientation of a user is still a complex problem with no single best solution.
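A 6DOF pose is commonly stored as a rotation matrix built from the three angles plus a translation vector. The sketch below assumes the Z-Y-X convention (yaw about z, then pitch about y, then roll about x); other conventions order the rotations differently, so the convention has to be fixed before combining outputs from different trackers. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix3 = List[List[float]]
Vec3 = Tuple[float, float, float]


def rotation_from_ypr(yaw: float, pitch: float, roll: float) -> Matrix3:
    """R = Rz(yaw) * Ry(pitch) * Rx(roll); angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def apply_pose(R: Matrix3, t: Sequence[float], p: Sequence[float]) -> Vec3:
    """Map point p from the tracked (e.g. head) frame into the reference frame: R p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
```

A head tracker reporting (x, y, z, yaw, pitch, roll) for the driver could, for example, use `apply_pose` to express an eye position measured in head coordinates in the vehicle frame.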

2.1.4.1 Modeling environments

Both tracking and registration techniques rely on environmental models. For instance, to annotate external objects on the windshield, an AR system needs to know where they are located relative to the user's current position and field of view. Sometimes the annotations themselves may be occluded according to the environmental model. For instance, when an annotated building is occluded by other objects, the annotation should point to the non-occluded parts only. Since the Vehicle Future Position Overlay uses an optical see-through head-up display technique and derives its overlay from pre-stored vehicle dimensions rather than from annotated external objects, environmental modeling will be of little concern.

2.1.4.2 User movement tracking

AR tracking devices must have higher accuracy, a wider input variety and bandwidth, and longer ranges than those used in virtual reality. Registration accuracy depends not only on the geometrical model but also on the distance of the objects to be annotated (in the case of vehicle future position augmentation, the vehicle path). The further away an object is, (i) the less impact errors in position tracking have and (ii) the more impact errors in orientation tracking have on the overall misregistration [18].
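Both effects follow from simple geometry, sketched here under the assumption of a small, purely lateral error: a position error e seen from distance d subtends an angle of about atan(e/d), which shrinks as d grows, while an orientation error θ displaces the overlay laterally by d·tan θ, which grows with d. The 1 cm and 0.5° values below are illustrative only.

```python
import math


def angular_error_from_position(position_error_m: float, distance_m: float) -> float:
    """Angular misregistration (rad) caused by a lateral position tracking error."""
    return math.atan2(position_error_m, distance_m)


def offset_from_orientation(orientation_error_rad: float, distance_m: float) -> float:
    """Lateral misregistration (m) at distance_m caused by an orientation error."""
    return distance_m * math.tan(orientation_error_rad)


if __name__ == "__main__":
    for d in (2.0, 10.0, 25.0):
        print(f"{d:5.1f} m: "
              f"{math.degrees(angular_error_from_position(0.01, d)):.3f} deg from 1 cm, "
              f"{offset_from_orientation(math.radians(0.5), d):.3f} m from 0.5 deg")
```

At 25 m, a 0.5° orientation error already displaces the annotation by more than 20 cm, while the angular effect of a 1 cm position error becomes negligible.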

Tracking is usually easier in indoor settings than in outdoor settings, as the tracking devices do not have to be completely mobile and wearable or cope with shock, abuse, weather, etc. Instead, the indoor environment is easily modeled and prepared, and conditions such as lighting and temperature may be controlled. Currently, unprepared outdoor environments still pose tracking problems with no single best solution.

In the case of vehicle future position augmentation, user tracking involves a two-step approach: (1) face detection and (2) eye tracking and gaze estimation.

2.1.4.2(i) Face Detection

This section introduces work by other researchers on the problem of face detection, which is the first step of user detection in the vehicle future position augmentation application.

Face detection is a vital component of several applications: it is the first step of any automated face recognition or eye-gaze tracking system, and it can also be used as part of surveillance and security systems, general object detection, video encoding, facial feature tracking, human tracking, etc. in diverse industrial domains. Face detection has been addressed in computer vision mainly over the last few decades and has been under thorough investigation by many researchers, with the aim of producing efficient algorithms that can detect faces under different lighting conditions, scales, locations, orientations (upright, rotated) and poses (frontal, profile), and in complex backgrounds. Even today, this problem has not been fully solved and more research in this field is still required.

Face detection techniques employ several cues, e.g. skin color (for faces in color images and videos), motion (for faces in videos), facial/head shape, facial appearance, or a combination of these. Most successful face detection algorithms use appearance-based models. A general face detection process scans an input image at all possible locations and scales with a sub-window. Face detection is then posed as classifying the pattern in each sub-window as either face or non-face. The face/non-face classifier is learned from face and non-face training examples using statistical learning methods.
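The scanning procedure just described can be sketched as follows. The face/non-face classifier is passed in as a callable because it is whatever the statistical learning stage produces; the 24-pixel base window, 1.25 scale factor and 10% step are illustrative defaults, not values from this proposal, and the function names are hypothetical.

```python
from typing import Callable, Iterator, List, Tuple

Window = Tuple[int, int, int]  # (x, y, size) of a square sub-window


def sliding_windows(width: int, height: int, base: int = 24,
                    scale: float = 1.25, step_frac: float = 0.1) -> Iterator[Window]:
    """Yield every sub-window position at every scale that fits in the image."""
    size = base
    while size <= min(width, height):
        step = max(1, int(size * step_frac))  # larger windows use larger steps
        for y in range(0, height - size + 1, step):
            for x in range(0, width - size + 1, step):
                yield (x, y, size)
        size = max(size + 1, int(round(size * scale)))  # always grow, so the loop ends


def detect_faces(width: int, height: int,
                 is_face: Callable[[int, int, int], bool], **kwargs) -> List[Window]:
    """Keep the sub-windows that the (externally trained) classifier labels as faces."""
    return [w for w in sliding_windows(width, height, **kwargs) if is_face(*w)]
```

Increasing `scale` or `step_frac` reduces the number of windows classified, trading some localization accuracy for speed.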

a) Face Detection Challenges

It is particularly hard to build robust classifiers that can detect faces in different imaging situations and face conditions, even though this seems very easy for the human visual system. In fact, the object “face” is hard to define because of its large variability, which depends on the identity of the person, the lighting conditions, the psychological context of the person, etc. The main challenge in detecting faces is to find a classifier that can discriminate faces from all other possible images. The first problem is to find a model that can encompass all possible states of faces. The main sources of variation in faces are the following.

Global face attributes. Some attributes are common to every face. A face is, globally, an object that can be approximated by an ellipse, but there are thin faces, rounder faces, etc. Skin color can also differ greatly from one person to another.

Pose of the face. The position of the person relative to the camera used to acquire the image can totally change the view of the face: the frontal view, the profile view, all intermediate positions, upside down, etc.

Facial expression. Face appearance depends highly on the affective state of the person. The facial features of a smiling face can be far from those of an indifferent or sad one. Faces are non-rigid objects, which considerably limits the number of applicable detection methods.

Presence of added objects. Face detection must cope with objects commonly found on a face, such as glasses, which change one of the main characteristics of faces (the darkness of the eyes), and natural facial features such as mustaches, beards or hair, which can occlude part of the face.

Image conditions. Face appearance varies greatly with the lighting conditions; the type and intensity of illumination and the characteristics of the acquisition system need to be taken into account.

Background composition. The background is one of the main factors explaining the difficulty of face detection. Even though it is quite easy to build systems that detect faces on uniform backgrounds, most applications need to detect faces under any background condition, meaning that the background can be textured and highly variable.

In this context, various approaches have been taken to detect faces in images. There are two main face detection approaches:

Image-based methods, which are built from a set of examples and use a sliding window to perform the detection.

Geometry-based methods, which take into account the geometric particularities of face structures.

b) Literature Survey:

The problem of face detection goes back to the early 1970s. At that time, the overall focus was on detecting human faces in simple settings, typically a passport-like photo with a uniform background, uniform lighting and frontal faces without any in-plane or out-of-plane rotation. Research on the subject was rather simple and only gained attention in the early 1990s, when more in-depth research began applying different algorithms to the problem. Researchers began to address detecting faces in complex backgrounds at different scales and rotation angles, introducing statistical methods and neural networks for face detection in cluttered scenes, as well as new methods for extracting features from images. These include active contours, which delineate the outlines of possible objects in complex images, and deformable templates or active shape models and their variants, which can be learned from a training set to identify the locations of pre-labeled feature points; such models can then be used to test unseen feature points and check whether they lie within the boundary of the deformable template. Several statistical methods can be used to build such models, including principal component analysis (PCA), which helps to reduce the dimensionality of the input training data set. The face detection approaches are as follows.

Feature-based methods for face detection

Feature-based face detection involves several stages, each depending on the results of the previous stage. First, a low-level analysis segments image features based on pixel values; the features extracted are generally the edges in the scene. Along with edge detection, gray-level or color pixel information can be used in this initial analysis. The features obtained in the first stage are analyzed in a further stage to reduce the number of low-level features and to extract those related to facial features, using knowledge of face geometry. Several methods can be applied at this stage. Leung [11] adopted a random search for facial features, mapping them to the relative positions of facial feature components with a flexible graph matching technique. Benn et al. [12] use information about the shape and densities of the eyes compared to the surrounding areas for face detection. Kroon [13] addresses the issues of using the eyes for face matching. Active shape models, deformable templates and point distribution models, which use labeled training samples to statistically learn the fitting parameters and limits, can also be used to extract the final set of facial features and decide whether a face is present or absent.

View-based methods for face detection

View-based methods attempt to detect the human face without relying on knowledge of its geometry. They treat face detection as a pattern recognition problem with two separate classes, faces and non-faces, implicitly extracting the knowledge embedded in the face to create a proper boundary between face and non-face samples in the two-dimensional pixel space of the image. This approach requires training to produce the final classifier, which is applied at all positions and all scales, where windows are extracted and possibly resampled (rescaled). This exhaustive search can be sped up by increasing the scan and/or scaling steps to reduce the total number of scanned windows. Algorithms used in the view-based approach include principal component analysis (PCA), neural networks, support vector machines (SVMs) and other statistical methods that can learn from examples.
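PCA, mentioned above both for building shape models and as a view-based technique, can be sketched in a few lines: center the training vectors, form their covariance matrix, and find its leading eigenvector by power iteration. This minimal illustration computes only the first component; an eigenface-style detector would keep several components of flattened face images, usually computed with a numerical library. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def mean_center(data: Sequence[Sequence[float]]) -> Tuple[Matrix, List[float]]:
    """Subtract the per-dimension mean from every sample."""
    n, d = len(data), len(data[0])
    mu = [sum(row[j] for row in data) / n for j in range(d)]
    return [[row[j] - mu[j] for j in range(d)] for row in data], mu


def covariance(centered: Matrix) -> Matrix:
    """Sample covariance matrix of mean-centered data."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def top_component(cov: Matrix, iters: int = 200) -> List[float]:
    """Leading eigenvector of a covariance matrix by power iteration."""
    d = len(cov)
    v = [1.0] * d  # assumes this start vector is not orthogonal to the answer
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # no variance in the data
        v = [x / norm for x in w]
    return v


def project(point: Sequence[float], mu: Sequence[float], v: Sequence[float]) -> float:
    """One-dimensional PCA coordinate of a point along component v."""
    return sum((p - m) * c for p, m, c in zip(point, mu, v))
```

Projecting each sample onto the leading components replaces a long pixel vector with a few coefficients, which is the dimensionality reduction the text refers to.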

Neural Network-Based Face Detection

Rowley [14] researched a view-based approach to detecting faces in still images and showed that the face detection problem can be solved effectively by using neural networks to detect frontal and non-frontal faces with different poses and rotation angles. The main problem in using machine learning to detect faces was that faces in images vary considerably with lighting conditions, pose, occlusion and facial expression, and compensating for these variations was important for the learning process. It was also essential to choose a representative set of non-faces, a set that could grow indefinitely, so training used a bootstrap method [15]. Baluja [16] studied how to detect faces that are rotated in the image plane: assuming the current window is a face window, an initial neural network estimates the rotation angle of the face, the window is rotated to obtain an upright frontal face image, and another neural network then determines the presence or absence of a face.

Hausdorff Distance-Based Face Detection

The Hausdorff distance [17] can be used effectively to measure the similarity of two binary images, and several researchers have used it. Jesorsky et al. [18] presented a model-based approach to face detection that uses an edge model designed to represent a wide variety of faces. This model is matched against an edge image of a candidate face computed with the Sobel operator; the edge image is obtained with a threshold that can be changed to take varying illumination conditions into consideration.
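The classic (symmetric) Hausdorff distance between two sets of edge points, together with a brute-force search over translations of an edge model, can be sketched as follows. Practical detectors often use more robust variants and also search over scale, which this sketch omits; the function names are hypothetical.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float]


def directed_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Largest distance from a point of a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance between two point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


def best_offset(model: Sequence[Point], edges: Sequence[Point],
                offsets: Iterable[Tuple[int, int]]) -> Tuple[int, int]:
    """Translation of the edge model that best matches the image edge points."""
    def shifted(dx: int, dy: int) -> List[Point]:
        return [(x + dx, y + dy) for x, y in model]
    return min(offsets, key=lambda o: hausdorff(shifted(*o), edges))
```

A small Hausdorff distance at the best offset indicates a likely face at that position; the threshold on that distance plays the same role as the adjustable threshold mentioned above.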

Face detection using a Boosted Cascade of Simple Features

This method detects objects extremely rapidly and is comparable to the best real-time face detection systems. Viola and Jones [19] introduced a new image representation called the integral image, which allows fast computation of the features used by their detection algorithm. The second step is an AdaBoost-based learning algorithm, trained on the relevant object class, that selects a minimal set of features to represent the object. The third step introduces a series of classifiers of increasing complexity; this cascade rapidly discards most non-object windows at early stages, allowing more time to be spent in the final stages to yield higher detection rates, and thus improves both accuracy and processing time for the object detection task. Viola and Jones presented this framework for object detection, motivated by face detection, and their research showed good results for real-time face detection at high frame rates.
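The three ingredients described above can be sketched in miniature: an integral image, a two-rectangle Haar-like feature computed from four table lookups per rectangle, and a cascade that rejects a window as soon as one stage's score falls below that stage's threshold. The stage structure and thresholds are hypothetical placeholders; in the actual method they are learned with AdaBoost.

```python
from typing import Callable, List, Sequence, Tuple

Image = Sequence[Sequence[float]]
Integral = List[List[float]]


def integral_image(img: Image) -> Integral:
    """ii[y][x] = sum of img over rows < y and columns < x (one-pixel zero border)."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Integral, x: int, y: int, w: int, h: int) -> float:
    """Sum of the w-by-h rectangle with top-left corner (x, y), in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: Integral, x: int, y: int, w: int, h: int) -> float:
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


# A weak learner is (feature function, feature threshold, vote weight);
# a stage is (list of weak learners, stage threshold).
WeakLearner = Tuple[Callable[[Integral, int, int], float], float, float]
Stage = Tuple[List[WeakLearner], float]


def cascade_accepts(ii: Integral, x: int, y: int, stages: List[Stage]) -> bool:
    """Run the window at (x, y) through the cascade, stage by stage."""
    for weak_learners, stage_threshold in stages:
        score = sum(alpha for feature, thr, alpha in weak_learners
                    if feature(ii, x, y) >= thr)
        if score < stage_threshold:
            return False  # early rejection: later, costlier stages never run
    return True
```

Because `rect_sum` costs the same for any rectangle size, features can be evaluated at every scale without rescaling the image, which is what makes the exhaustive window scan affordable.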

Real-time 2D face detection using Color Ratios and K-Means Clustering

Byrd et al. [20] presented an algorithm for detecting faces in real-time MPEG videos. After the MPEG stream is converted into a sequence of JPEG files, the application becomes a still-image face detection problem solved in two phases. The first phase is a skin-region detection classifier: images passed to this classifier are searched for skin regions, and images that do not contain skin regions are discarded. Images with skin regions are passed to a K-means face segmentation clustering unit, which further investigates the skin regions to match a human face.

Component-based Face Detection

Heisele et al. [21] used two support vector machine classifiers. The first detects the components of a potential face in the current image window; the second checks whether the geometric configuration of these components matches that of a face. Such a component-based approach is more robust to occlusion than approaches that depend on the whole face.

FloatBoost Learning and Statistical Face Detection

Li and Zhang [22] proposed a method to improve the performance of AdaBoost, achieving a lower error rate while requiring fewer weak classifiers than AdaBoost. AdaBoost learns a sequence of weak classifiers which, when combined, form a strong classifier of higher accuracy. In the Viola-Jones work, which focused primarily on frontal face views, AdaBoost-based learning consists mainly of three stages: the first identifies a minimal set of features out of a huge number of candidate features, the second learns weak classifiers to identify each of these features, and the third combines these weak classifiers into a strong classifier. This hierarchy leads to one of the best real-time frontal face detection systems.
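For reference, plain discrete AdaBoost with one-feature decision stumps can be sketched as below. This is not FloatBoost: Li and Zhang's method additionally backtracks to remove weak classifiers that no longer help, which the sketch omits. Labels are assumed to be +1 (face) and -1 (non-face), and the feature vectors stand in for hypothetical numeric features such as Haar-like responses.

```python
import math
from typing import List, Sequence, Tuple

Stump = Tuple[float, int, float, int]  # (alpha, feature index, threshold, polarity)


def stump_predict(x: Sequence[float], f: int, thr: float, polarity: int) -> int:
    return polarity if x[f] >= thr else -polarity


def train_stump(X, y, w) -> Tuple[float, int, float, int]:
    """Exhaustively pick the stump with the lowest weighted error."""
    best = None
    for f in range(len(X[0])):
        for thr in sorted({x[f] for x in X}):
            for polarity in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict(xi, f, thr, polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, f, thr, polarity)
    return best


def adaboost(X, y, rounds: int = 10) -> List[Stump]:
    n = len(X)
    w = [1.0 / n] * n
    ensemble: List[Stump] = []
    for _ in range(rounds):
        raw_err, f, thr, pol = train_stump(X, y, w)
        err = min(max(raw_err, 1e-10), 1.0 - 1e-10)  # keep log() finite
        alpha = 0.5 * math.log((1.0 - err) / err)
        ensemble.append((alpha, f, thr, pol))
        if raw_err == 0.0:
            break  # a perfect weak learner: further rounds add nothing
        # Increase the weight of misclassified samples, decrease the rest.
        w = [wi * math.exp(-alpha * yi * stump_predict(xi, f, thr, pol))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def predict(ensemble: List[Stump], x: Sequence[float]) -> int:
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, f, thr, pol) for alpha, f, thr, pol in ensemble)
    return 1 if score >= 0 else -1
```

Each selected stump corresponds to one chosen feature, so the boosting rounds perform the feature selection and the weighted vote forms the strong classifier.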

Support Vector Regression and Classification Based Multi-view Face Detection and Recognition

The approach of Li et al. [23] involves constructing multiple face detectors, each for a specific view of the face, and makes use of the symmetry of the face. First, however, it applies support vector regression to estimate the pose of the face. This estimate is crucial for choosing which detector to apply, which improves both accuracy and computation time; applying a separate detector for each pose yields a better detection rate than methods applied to any pose. Further improvements could be made when applying this algorithm to video by using pose-change smoothing strategies to improve performance on real-time image sequences.

Facial feature detection using Haar classifier

Wilson and Fernandez [24] attempted to improve the performance of the Viola-Jones detection method by reducing the area that needs to be examined, thus reducing computation time. Instead of using the intensity value of each pixel alone, the method uses the change in contrast values between groups of adjacent regions. These regions are rectangles that can be resized to scale the Haar-like features and detect objects of different sizes.
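The rectangle sums behind Haar-like features are usually computed with an integral image (summed-area table), the device Viola and Jones used to evaluate a rectangle of any size in constant time. The sketch below assumes a grayscale image stored as a nested list of numbers and shows one two-rectangle feature; the function names are hypothetical and this is not the implementation of [24].

```python
def integral_image(img):
    """ii[r][c] = sum of img over rows < r and cols < c (with a zero border)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row_sum = 0
        for c in range(w):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of any rectangle in O(1) using four look-ups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_vertical(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: upper half minus lower half.
    Large magnitude at a horizontal edge, e.g. a dark eye band above brighter cheeks;
    the sign tells which half is brighter."""
    half = height // 2
    return (rect_sum(ii, top, left, half, width)
            - rect_sum(ii, top + half, left, half, width))
```

Scaling the feature only changes `height` and `width`; the cost stays at eight look-ups regardless of size, which is what makes multi-scale search affordable.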

2.1.4.2(ii) Eye-Gaze Tracking:

Another, more specific task after locating the positions of faces is to recognize what a person is looking at, commonly known as eye-gaze tracking. There is an obvious difference between the head pose/orientation and the view direction of the eyes. A person can face a specific object, a monitor for instance, and follow several activities on the screen, such as the mouse pointer, by moving the eyes while the head remains static. In many application domains, knowledge of the view direction of the eyes is more important than the orientation of the head (or face). However, measurements relying on the eyes and on the head are usually related to each other. A typical example in the automotive context that uses the view angle is measuring the duration of distraction when the driver operates the infotainment system while driving. Another demanding application is the correct perspective projection of a 3-dimensional scene in a virtual reality environment, or highlighting an area within the field of view.

a) Eye-Gaze Detection Challenges:

In eye detection, it is essential that eye models be sufficiently expressive to account for the large variability in the appearance and dynamics of the eye, while also being sufficiently constrained to be computationally efficient. The appearance of eye regions shares commonalities across race, illumination, and viewing angle. On the other hand, a relatively small variation in viewing angle, even for the same subject, can cause significant changes in appearance. Despite active research, eye detection and tracking remains a very challenging task due to several unique issues, including (1) occlusion of the eye by the eyelids, (2) the degree of openness of the eye, and (3) variability in size, reflectivity, or head pose.

b) Eye-Gaze Survey:

Eye gaze is defined as the line of sight of a person and represents that person's focus of attention. Eyes and their movements play an important role in expressing a person's desires, needs, cognitive processes, emotional states, and interpersonal relations. The importance of eye movements to an individual's perception of and attention to the visual world is implicitly acknowledged, since they are the means through which we gather the information needed to navigate the visual world and identify its properties. Robust, nonintrusive eye detection and tracking is therefore crucial for the development of human-computer interaction, attentive user interfaces, and the understanding of human affective states.

Research in eye detection and tracking focuses on two areas: eye localization in the image and gaze estimation. Eye detection has three aspects: (1) detecting the existence of eyes, (2) accurately interpreting eye positions in the images, and (3) tracking the detected eyes from frame to frame in the case of video. The eye position is commonly measured using the pupil or iris center. The detected eyes are used to estimate and track where a person is looking in 3D or, alternatively, to determine the 3D line of sight. This process is called gaze estimation. In the subsequent discussion, we use the terms "eye detection" and "gaze tracking" to differentiate the two: eye detection refers to eye localization in the image, while gaze tracking refers to estimating gaze paths.

Several techniques have been investigated for effective eye-gaze tracking, including scleral search coil contact lenses, electro-oculography (EOG), video-oculography, and head-mounted eye trackers. Since our concern is limited to video-oculography, i.e. video-based eye trackers, this review is restricted to that domain of eye and gaze tracking.

Eye Detection

The eye image may be characterized by the intensity distribution of the pupil, iris, and cornea, as well as by their shapes. Ethnicity, viewing angle, head pose, color, texture, lighting conditions, the position of the iris within the eye socket, and the state of the eye (i.e., open/closed) are issues that heavily influence the appearance of the eye. The intended application and the available image data lead to different prior eye models.

The prior model representation is often applied at different positions, orientations, and scales to reject false candidates. Shape-based methods can be subdivided into fixed-shape [25][26][27] and deformable-shape [28][29] methods. These methods are constructed either from local point features of the eye and face region or from their contours. The pertinent features may be edges, eye corners, or points selected based on specific filter responses; the limbus and pupil are commonly used features. While shape-based methods use a prior model of eye shape and surrounding structures, appearance-based methods rely on models built directly on the appearance of the eye region. The appearance-based (holistic) approach conceptually relates to template matching: it constructs an image patch model and performs eye detection through model matching using a similarity measure. Appearance-based methods can be further divided into intensity-based and subspace-based methods. Intensity-based methods [30] use the intensity or filtered intensity image directly as a model, while subspace methods [31] assume that the important information in the eye image is contained in a lower-dimensional subspace. Hybrid methods combine feature, shape, and appearance approaches to exploit their respective benefits.
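The intensity-based idea of matching an image patch model with a similarity measure can be illustrated with zero-mean normalized cross-correlation (NCC) template matching. This is a minimal sketch, assuming grayscale images stored as nested Python lists and an eye template that is already available; NCC is a generic technique, not the specific method of [30], and the function names are hypothetical.

```python
import math


def ncc(patch, template):
    """Zero-mean normalized cross-correlation of two equally sized 2-D lists, in [-1, 1]."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    da = [v - ma for v in a]
    db = [v - mb for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    # A perfectly flat patch carries no structure; score it as uncorrelated.
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0


def match_template(image, template):
    """Slide the template over the image; return (best score, (row, col) of top-left corner)."""
    th, tw = len(template), len(template[0])
    best = (-2.0, (0, 0))
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best[0]:
                best = (score, (r, c))
    return best
```

Because the mean is subtracted and the result normalized, the score is insensitive to uniform brightness and contrast changes, which partly addresses the illumination variability discussed above; it does not handle changes in scale or rotation, which is why the model is usually applied at several scales and orientations.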

Gaze Estimation

The primary task of gaze estimation is to determine the user's focus of attention. Gaze can be expressed either as the gaze direction or as the point of regard (PoR); gaze modeling consequently focuses on the relation between the image data and the point of regard or gaze direction. Basic categories of eye movements include saccades and fixations. A fixation occurs when the gaze rests on a small predefined area, typically within 2-5 degrees of central vision, for a minimum amount of time, usually at least 80-100 ms. Saccades are fast, jump-like rotations of the eye between two fixated areas that bring objects of interest into the central few degrees of the visual field. Smooth pursuit movements are a further category describing the eye following a moving object. Saccadic eye movements have been extensively investigated for a wide range of applications, including the detection of fatigue/drowsiness, human vision studies, diagnosis of neurological disorders, and sleep studies. Fixations are often analyzed in vision science, neuroscience, and psychological studies to determine a person's focus and level of attention. Eye positions are restricted to a subset of anatomically possible positions described by Listing's and Donders' laws [32]. According to Donders' law, the gaze direction uniquely determines the eye orientation, and this orientation is independent of the previous positions of the eye. Listing's law describes the valid subset of eye positions as those that can be reached from the so-called primary position through a single rotation about an axis perpendicular to the primary gaze direction.
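The fixation definition above (gaze resting within a small area for at least roughly 80-100 ms) maps onto the dispersion-threshold identification (I-DT) algorithm, a common way of separating fixations from saccades in recorded gaze samples. This is a minimal sketch, assuming time-stamped gaze samples already expressed in degrees of visual angle; the dispersion measure (horizontal plus vertical extent), the thresholds, and the function names are assumptions for illustration.

```python
def dispersion(window):
    """Dispersion = horizontal extent + vertical extent of the gaze samples (degrees)."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt_fixations(samples, max_dispersion_deg, min_duration_ms):
    """Dispersion-threshold identification (I-DT).

    samples: time-ordered list of (t_ms, x_deg, y_deg) gaze samples.
    Returns fixations as (start_ms, end_ms, centroid_x, centroid_y);
    samples not assigned to a fixation are treated as saccade samples.
    """
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Smallest window starting at i that spans the minimum fixation duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # not enough data left for another fixation
        if dispersion(samples[i:j + 1]) <= max_dispersion_deg:
            # Grow the window while the samples stay tightly clustered.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion_deg:
                j += 1
            window = samples[i:j + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            i = j + 1
        else:
            i += 1  # first sample belongs to a saccade; slide the window forward
    return fixations
```

With the figures quoted above one might call `idt_fixations(samples, 2.0, 100)`; suitable thresholds depend on the tracker's noise and sampling rate and would have to be tuned for the in-vehicle setup.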

People move their heads when using a gaze tracker. A person's gaze is determined by the head pose (position and orientation) and the eyeball orientation. A person can change gaze direction by rotating the eyeball (and consequently also the pupil) while keeping the head stationary. Similarly, a person can change gaze direction by moving the head while keeping the eye stationary relative to the head. Usually, a person moves the head to a comfortable position before orienting the eye. Head pose therefore determines the coarse-scale gaze direction, while the eyeball orientation determines the local, detailed gaze direction. Gaze estimation therefore needs to model (either directly or implicitly) both head pose and pupil/iris position. Ensuring head pose invariance in gaze tracking, as required for vehicle future position augmentation, is important and constitutes a challenging research topic. Head pose invariance may be obtained through various hardware configurations and prior knowledge of the geometry and the cameras. Information on head pose is rarely used directly in gaze models; it is more common to incorporate it implicitly, either through the mapping function or through the use of glints (reflections on the cornea).
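The decomposition above, with head pose giving the coarse direction and eyeball orientation the fine direction, can be written as rotating the eye-in-head gaze vector by the head rotation. This is a minimal sketch under stated assumptions: yaw and pitch only (no head roll, no torsion), angles in radians, and a head frame with x to the right, y up and z forward (a left-handed frame chosen so that positive yaw turns right and positive pitch looks up). The head position only shifts the origin of the gaze ray and is omitted; all function names are hypothetical.

```python
import math


def rot_y(a):
    """Yaw: rotation about the vertical (y) axis; turns +z toward +x."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_x(a):
    """Pitch: rotation about the lateral (x) axis; turns +z toward +y (up)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def eye_direction(yaw, pitch):
    """Unit gaze vector in head coordinates for eye-in-head yaw/pitch."""
    return [math.sin(yaw) * math.cos(pitch), math.sin(pitch), math.cos(yaw) * math.cos(pitch)]


def world_gaze(head_yaw, head_pitch, eye_yaw, eye_pitch):
    """Head pose gives the coarse direction; eye-in-head angles refine it."""
    R_head = matmul(rot_y(head_yaw), rot_x(head_pitch))
    return matvec(R_head, eye_direction(eye_yaw, eye_pitch))
```

For example, turning the head 30 degrees right while rotating the eyes 30 degrees left yields a gaze straight ahead, illustrating why eye orientation alone, or head pose alone, is insufficient.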

All gaze estimation methods need to determine a set of parameters through calibration, including:

- camera calibration: determining the intrinsic camera parameters;

- geometric calibration: determining the relative locations and orientations of the different units in the setup, such as the camera, light sources, and monitor;

- personal calibration: estimating the cornea curvature and the angular offset between the visual and optical axes; and

- gaze mapping calibration: determining the parameters of the eye-gaze mapping functions.

A system where the camera parameters and geometry are known is termed fully calibrated.
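For the gaze mapping calibration step, a widely used choice in video-based trackers is a second-order polynomial that maps an eye feature vector, for example the pupil-glint vector (u, v), to display coordinates, fitted by least squares over a grid of calibration targets. The sketch below assumes that polynomial form, a 9-point calibration grid and hypothetical function names; it illustrates the idea and is not the mapping chosen for this project.

```python
def poly_terms(u, v):
    """Second-order polynomial terms of the eye feature vector (u, v)."""
    return [1.0, u, v, u * v, u * u, v * v]


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense linear system."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            factor = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= factor * M[k][c]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        x[k] = (M[k][n] - sum(M[k][c] * x[c] for c in range(k + 1, n))) / M[k][k]
    return x


def fit_axis(uv, targets):
    """Least squares via the normal equations (F^T F) a = F^T t for one screen axis."""
    F = [poly_terms(u, v) for u, v in uv]
    m = len(F[0])
    FtF = [[sum(f[i] * f[j] for f in F) for j in range(m)] for i in range(m)]
    Ftt = [sum(f[i] * t for f, t in zip(F, targets)) for i in range(m)]
    return solve(FtF, Ftt)


def calibrate(uv, screen_xy):
    """uv: eye features at the calibration targets; screen_xy: the known target positions."""
    ax = fit_axis(uv, [p[0] for p in screen_xy])
    ay = fit_axis(uv, [p[1] for p in screen_xy])
    return ax, ay


def map_gaze(model, u, v):
    """Map a new eye feature vector to an estimated point of regard."""
    ax, ay = model
    f = poly_terms(u, v)
    return sum(a * t for a, t in zip(ax, f)), sum(a * t for a, t in zip(ay, f))
```

Six coefficients per axis need at least six well-spread targets; a 3 x 3 grid is a common compromise between calibration effort and robustness. Such a mapping is valid only for the head position at which it was calibrated, which is exactly the head pose invariance problem raised above.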

Desirable attributes of a gaze tracker include minimal intrusiveness and obstruction, allowance for free head movement while maintaining high accuracy, an easy and flexible setup, and low cost. A more detailed description of eye tracker requirements is given by Scott and Findlay [33].

Until recently, standard eye tracking systems were intrusive, requiring, for example, a reflective white dot placed directly onto the eye or a number of electrodes attached around the eye. Headrests, bite-bars, or head-mounted eye trackers were common approaches to cope with significant head movements. Head movements are typically tracked using a magnetic head tracker, another camera, or additional illuminators, and the head and eye information is fused to produce gaze estimates. Compared to the early systems, video-based gaze trackers have now evolved to the point where the user is allowed much more freedom of head movement while maintaining good accuracy (0.5 degree or better). As reviewed in this section, recent studies show that using specific reflections from the cornea makes gaze tracking relatively easy and enables stable, head pose invariant gaze estimation.

2.1.5 Coordinate Systems:

a) Raster-oriented frame, using row and column coordinates starting at [0, 0] at the top left.

b) Cartesian coordinate frame with [0, 0] at the lower left.

c) Cartesian coordinate frame with [0, 0] at the image center.

d) Relationship of the pixel center point [x, y] to the area element sampled in array element I[i, j].
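Converting between the raster frame a) and the Cartesian frames b) and c) is a matter of flipping the vertical axis and shifting the origin. This is a minimal sketch, assuming pixel centers at integer indices and an image of rows x cols pixels; the function names are hypothetical.

```python
def raster_to_lower_left(r, c, rows):
    """Raster (row down from top, col right) -> Cartesian (x right, y up), origin at lower-left pixel."""
    return c, rows - 1 - r


def lower_left_to_raster(x, y, rows):
    return rows - 1 - y, x


def raster_to_centered(r, c, rows, cols):
    """Raster -> Cartesian with the origin at the image center (may fall between pixels)."""
    return c - (cols - 1) / 2, (rows - 1) / 2 - r


def centered_to_raster(x, y, rows, cols):
    return (rows - 1) / 2 - y, x + (cols - 1) / 2
```

For a 640 x 480 image, the top-left pixel [0, 0] becomes (0, 479) in the lower-left frame and (-319.5, 239.5) in the centered frame; the half-pixel offsets arise because an even-sized image has no pixel exactly at its center.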

2.1.5.1 Five Frames of Reference

Reference frames are needed in order to perform either qualitative or quantitative analysis of 3D scenes. Five frames of reference are commonly used for general problems in 3D scene analysis.

Figure: Five coordinate frames for 3D analysis

i) Pixel Coordinate Frame I:

Many things about a scene can be determined by analyzing the image in terms of rows and columns only. Using only image I, however, and no other information, we cannot determine which object is actually larger in 3D or whether objects are on a collision course.

ii) Object Coordinate Frame O:

An object coordinate frame is used to model ideal objects in both computer graphics and

computer vision. The object coordinate frame is needed in order to inspect an object, e.g. to

check if a particular hole is in a proper position relative to other holes or corners.

iii) Camera Coordinate Frame C:

The camera coordinate frame is often needed for an "egocentric" (camera-centric) view, e.g. to represent whether an object is directly in front of the sensor, moving away, etc.

iv) Real Image Coordinate Frame F:

Camera and real image coordinates are real numbers, usually in the same units as the world coordinates, such as inches or mm. 3D points project to the real image plane at coordinates $[x_f, y_f, f]$, where $f$ is the focal length; $x_f$ and $y_f$ are related to pixel coordinates through the pixel size and the pixel position of the optical axis in the image.
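The projection onto the real image plane and the conversion to pixel coordinates can be sketched as follows. This assumes an ideal pinhole camera without lens distortion, camera coordinates with x to the right, y up and z along the optical axis, a known pixel size, and the optical axis piercing the image at pixel (r0, c0); all names are hypothetical.

```python
def project_to_image_plane(point_c, f):
    """Camera-frame point (X, Y, Z) -> real image coordinates (x_f, y_f) on the plane z = f."""
    X, Y, Z = point_c
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    return f * X / Z, f * Y / Z


def image_plane_to_pixel(xf, yf, pixel_w, pixel_h, r0, c0):
    """Real image coordinates (same units as f) -> raster (row, col).
    Rows grow downward while y_f grows upward, hence the minus sign."""
    return r0 - yf / pixel_h, c0 + xf / pixel_w
```

For instance, with f = 8 mm a point 1000 mm in front of the camera and 100 mm to its right lands at x_f = 0.8 mm, i.e. 80 pixels right of the principal point for 0.01 mm pixels. The division by Z is why, as noted for the pixel frame, image measurements alone cannot tell a small near object from a large distant one.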

v) World Coordinate Frame W:

The world coordinate frame is needed to relate objects in 3D, e.g. to determine whether a runner is far off a base or whether the runner and the second baseman will collide.

2.1.6 Camera Calibration

Camera calibration, in the context of three-dimensional machine vision, is the process of determining the internal camera geometric and optical characteristics (intrinsic parameters) and/or the 3-D position and orientation of the camera frame relative to a certain world coordinate system (extrinsic parameters). For applications that need to infer 3-D information from 2-D image coordinates, e.g. automatic assembly, three-dimensional metrology, robot calibration, and vehicle guidance, it is essential to calibrate the camera either on-line or off-line. In many cases, the overall system performance strongly depends on the accuracy of the camera calibration. Camera calibration has been widely studied in photogrammetry and computer vision, and as a result several calibration techniques have been proposed. According to Weng et al., these techniques can be classified into three categories:

nonlinear minimization

These techniques typically produce very accurate calibration results, but they are

computationally intensive.

closed-form solutions

Closed-form solutions are obtained simply by solving a set of linear equations, but the result is not as accurate as can be achieved with nonlinear methods. The appropriate trade-off between accuracy and computational cost depends on the requirements of the application.

two-step methods

Two-step methods involve a direct solution for most of the camera parameters and an iterative

solution for the other parameters. Explicit camera calibration means the process of computing

the physical camera parameters, like the image center, focal length, position and orientation, etc.

Most of the calibration procedures proposed in the literature belong to this category. However, in

some specific cases, the physical explanation for the camera parameters is not required. An

implicit calibration procedure can then be used where the parameters do not have any physical

meaning.

Usually, the problem of camera calibration is to compute the camera parameters based on a number of control points whose mutual positions are known and whose image positions are measured. The control point structure may be three-dimensional or coplanar. In the case of a 3-D structure, the camera parameters can be solved from a single image. However, an accurate 3-D target that covers the entire object space is quite difficult to manufacture and maintain. Coplanar targets are more convenient, but not all camera parameters can be solved from a single view, so some constraints on the parameters must be applied. Another possibility is to capture several images from different viewpoints and solve for all intrinsic and extrinsic parameters simultaneously; however, problems may arise with highly correlated parameters. An approach that differs considerably from traditional calibration methods is self-calibration, where the camera parameters are estimated from an unknown scene structure and only a reference distance is needed to scale the parameters into correct units. Several calibration procedures can be found in the literature, but typically the error characteristics of these procedures are not considered in much detail. Various error sources affect the calibration procedure, and these errors are typically inherent in the image formation process. Some of them are observable as a systematic component of the fitting residual, but there are also certain errors that cannot be determined from the residual.

2.1.6.1 Calibration Methods and Models

The nature of the application and the required accuracy can dictate which of two basic

underlying functional models should be adopted:

A camera model based on perspective projection, where the implication is that the interior orientation (IO) is stable (at least for a given focal length setting) and that all departures from collinearity, linear and non-linear, can be accommodated. This collinearity equation-based model generally requires five or more point correspondences within a multi-image network and, due to its non-linear nature, requires approximate parameter values for the least-squares bundle adjustment in which the calibration parameters are recovered.

A projective camera model supporting projective rather than Euclidean scene

reconstruction. Such a model, characterized by the Essential matrix and Fundamental matrix

models, can accommodate variable and unknown focal lengths, but needs a minimum of 6 - 8

point correspondences to facilitate a linear solution, which is invariably quite unstable.

Nonlinear image coordinate perturbations such as lens distortion are not easily dealt with in

such models.

Further criteria can also be used to classify camera calibration methods:

Implicit versus explicit models. The photogrammetric approach, with its explicit physically

interpretable calibration model, is contrasted against implicit models used in structure from

motion algorithms which correct image point positions in accordance with alignment

requirements of a real projective mapping.

Methods using 3D rather than planar point arrays. Whereas some CV methods and

photogrammetric self-calibration can handle both cases – with appropriate network

geometry – models such as the Essential matrix cannot accommodate planar point arrays.

Point-based versus line-based methods. Point-based methods are more popular in photogrammetry, the only line-based approach of note being plumb-line calibration, which yields lens distortion parameters but not the IO.

A more specific classification can be made according to the parameter estimation and

optimization technique employed:

Linear techniques are quite simple and fast, but generally cannot handle lens distortion and need a control point array of known coordinates. They can include closed-form solutions, but usually simplify the camera model, leading to low-accuracy results. The well-known direct linear transformation (DLT), which is essentially equivalent to an Essential matrix approach, exemplifies such a technique.

Non-linear techniques, such as the extended collinearity equation model that forms the basis of the self-calibrating bundle adjustment, are the most familiar to photogrammetrists. A rigorous and accurate modeling of the camera IO and lens distortion parameters is provided through an iterative least-squares estimation process.

A combination of linear and non-linear techniques where a linear method is employed to

recover initial approximations for the parameters, after which the orientation and

calibration are iteratively refined. This two-stage approach has in most respects been

superseded for accurate camera calibration by the bundle adjustment formulation above,

which is also implicitly a two-stage process.
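The linear DLT approach mentioned above can be illustrated with its classic 11-parameter form, in which the twelfth parameter is fixed to 1 and the remaining ones are found by linear least squares from at least six non-coplanar control points of known coordinates. This is a minimal sketch under those assumptions: it uses normal equations and plain Gaussian elimination only to stay self-contained (an SVD on normalized coordinates is numerically preferable with real pixel data), it ignores lens distortion exactly as the text notes, and it fails if the world origin lies on the camera's principal plane, where the fixed parameter would be zero. Function names are hypothetical.

```python
def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense linear system."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            factor = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= factor * M[k][c]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        x[k] = (M[k][n] - sum(M[k][c] * x[c] for c in range(k + 1, n))) / M[k][k]
    return x


def dlt_calibrate(world, image):
    """world: [(X, Y, Z)] control points; image: [(u, v)] measured image points.
    Needs >= 6 non-coplanar points. Returns L1..L11 with L12 fixed to 1."""
    rows, rhs = [], []
    for (X, Y, Z), (u, v) in zip(world, image):
        # u * (L9 X + L10 Y + L11 Z + 1) = L1 X + L2 Y + L3 Z + L4, rearranged to be linear in L
        rows.append([X, Y, Z, 1.0, 0.0, 0.0, 0.0, 0.0, -u * X, -u * Y, -u * Z])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, 0.0, X, Y, Z, 1.0, -v * X, -v * Y, -v * Z])
        rhs.append(v)
    # Linear least squares via the normal equations (A^T A) L = A^T b.
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(11)] for i in range(11)]
    Atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(11)]
    return solve(AtA, Atb)


def dlt_project(L, X, Y, Z):
    """Project a world point with DLT parameters L1..L11 (L12 = 1)."""
    d = L[8] * X + L[9] * Y + L[10] * Z + 1.0
    return ((L[0] * X + L[1] * Y + L[2] * Z + L[3]) / d,
            (L[4] * X + L[5] * Y + L[6] * Z + L[7]) / d)
```

In the combined linear/non-linear scheme described above, parameters obtained this way would serve as the initial approximations that an iterative least-squares refinement then improves, typically while also estimating lens distortion.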

2.1.6.2 Camera Model

The object and camera coordinates are typically given in a right-handed Cartesian coordinate system, as illustrated in the figure below, where the camera projection is approximated with a pinhole model. The pinhole model is based on the principle of collinearity: each point in object space is projected by a straight line through the projection center onto the image plane. The origin of the camera coordinate system is at the projection center, located at (X0, Y0, Z0) with respect to the object coordinate system, and the z-axis of the camera frame is perpendicular to the image plane. The rotation is represented using Euler angles ω, φ and κ, which define a sequence of three elementary rotations around the x-, y- and z-axes, respectively. The rotations are performed clockwise, first around the x-axis, then around the y-axis (already rotated once), and finally around the z-axis (rotated twice during the previous stages).
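The rotation sequence and pinhole projection described above can be sketched as follows, assuming one common convention, R = Rz(κ) Ry(φ) Rx(ω) with angles in radians, where R maps object-space offsets from the projection center into the camera frame, and the computer-vision form x = f x_c / z_c of the collinearity equations. Sign conventions (clockwise versus counter-clockwise rotations, and the negative focal length used in many photogrammetric texts) vary between sources, so these choices are assumptions rather than the exact model used in this project; function names are hypothetical.

```python
import math


def rotation_matrix(omega, phi, kappa):
    """R = Rz(kappa) @ Ry(phi) @ Rx(omega), angles in radians."""
    so, co = math.sin(omega), math.cos(omega)
    sp, cp = math.sin(phi), math.cos(phi)
    sk, ck = math.sin(kappa), math.cos(kappa)
    return [
        [cp * ck, so * sp * ck - co * sk, co * sp * ck + so * sk],
        [cp * sk, so * sp * sk + co * ck, co * sp * sk - so * ck],
        [-sp, so * cp, co * cp],
    ]


def collinearity_project(point, center, angles, f):
    """Object point -> image-plane coordinates with a pinhole (collinearity) model.
    center = (X0, Y0, Z0) is the projection center; angles = (omega, phi, kappa)."""
    R = rotation_matrix(*angles)
    d = [p - c for p, c in zip(point, center)]
    # Express the point in camera coordinates, then divide by its depth along z.
    x, y, z = (sum(R[i][j] * d[j] for j in range(3)) for i in range(3))
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    return f * x / z, f * y / z
```

Because R is orthonormal, its transpose maps camera-frame directions back into object space, which is how a gaze or viewing ray measured in a camera frame would be expressed in vehicle or world coordinates.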

Figure: Object and camera coordinate systems

2.2 Project and Program Objectives

(Describe the project aims in terms of theoretical, experimental and socio-

economic aspects. Clearly lay down major and minor milestones to be achieved

during the execution of the project. List down these objectives in explicit terms)

2.3 Project Management Design

(Narrate the total duration of the project. Then divide the total period into various phases, such as:)

Phase Activity Description Duration

1 Literature Review 2 Months

2 Simulation Setup Design 2 Months

3 Development of dataset 1 Month

4 Algorithm Coding & Result Formulation 4 Months

5 Publication & Report Writing 3 Months

2.4 Organization and Management

Human Resource Required:

1. Principal Investigator

2. Co-Principal Investigator

2.5 Project Work Schedule

Phase

1

2

3

4

5

2.6 Project Detailed Budget

2.6.1 Human Resources

Senior Investigators (List by Name)   Level of Effort (No. of Months, Part Time)   Rate/Month   Total (Rs)

Principal Investigator --- --- ---

Co Investigator --- --- ---

Research Assistants and Other Personnel (put number in brackets)

a. ( ) Postgraduate Students ---

b. ( ) Undergraduate Students ---

c. ( ) Lab Technicians ---

d. ( ) Other Professionals ---

e. ( ) Secretaries & Labourers ---

2.6.2 Capital Expenses

Experimental Equipment

(Provide Details)

1. IR Camera Rs. 20000

2. Pico Projector Rs. 55000

3. TI Based Video Processing Board Rs. 75000

2.6.3 Materials and Supplies

a. Materials (Specify)

b. Supplies

c. Stationery __________________________________________

d. Others _____________________________________________

2.6.4 Books, Research Literature

a. Books & Literature (Journal Papers)

b. Internet Services Charges_____________________________ Rs. ………………

2.6.5 Other Expenses

a. Field Work/Collection of Data__________________________Rs. ………………

b. Travel _____________________________________________ Rs. ………………

c. Publication Charges

TOTAL PROJECT COST: Rs. 150000/-

2.7 Utilization

The proposed TR&D project has four major motivations:

To enhance the functionality of driver training and simulation systems in a real-time environment.

To assist drivers in real driving situations by displaying real-time information.

To train drivers to keep to their lane with maximum safety.

To improve overall safety for drivers, vehicles and pedestrians.

Driver training and simulation systems are mainly indoor systems. This framework will help in conducting training in real time, as trainees will receive assistance while driving on the road.

Drivers will receive assistance in real time, as they can see their vehicle's future occupancy of the road area on the windscreen. In parallel, the system will help novice drivers gain experience gradually. The system is also especially useful in crowded areas, where pedestrians and other vehicles are in close proximity.

3. CITED REFERENCES

[1] Schmalstieg, D and Reitmayr, G (2007) ‘The World as a User Interface: AR for Ubiquitous

Computing’, In Gartner, G., Cartwright, W., and Peterson, M. (eds.), Location Based Services

and TeleCartography, Springer, pp. 369-392

[2] Behringer, R (1999) ‘Registration for Outdoor Augmented Reality Applications Using Computer

Vision Techniques and Hybrid Sensors’, In Proceedings of IEEE Virtual Reality, IEEE

Computer Society, pp. 244-251.

[3] Ledermann, F and Schmalstieg, D (2005) ‘APRIL: A High-Level Framework for Creating

Augmented Reality Presentations’, In IEEE Virtual Reality, pp. 187-194.

[4] Azuma, R, Baillot, Y, Behringer, R, Feiner, S et al. (2001) ‘Recent Advances in Augmented

Reality’, IEEE Computer Graphics and Applications, 21(6), pp. 34-47.

[5] Milgram, P and Kishino, F (1994) ‘A taxonomy of mixed reality visual displays’, IEICE

Transactions on Information Systems, E77-D(12), pp. 1321-1329.

[6] Reitmayr, G (2004) ‘On software design for augmented reality’, Vienna University of Technology.

[7] Dix, A, Finlay, J, Abowd, G and Beale, R (1998) Human-Computer Interaction, 2nd ed. Prentice Hall.

[8] D. Schmalstieg, A. Fuhrmann, and G. Hesina. Bridging multiple user interface dimensions with

augmented reality. In [3], pp. 20–29.

[9] F. Zhou, H. B.-L. Duh, and M. Billinghurst. Trends in augmented reality tracking, interaction and display: A review of ten years of ISMAR. In M. A. Livingston, O. Bimber, and H. Saito, editors, ISMAR'08: Proc. 7th Int'l Symp. on Mixed and Augmented Reality, pp. 193–202, Cambridge, UK, Sep. 15-18 2008. IEEE CS Press. ISBN 978-1-4244-2840-3.

[10] J. Rekimoto. Transvision: A hand-held augmented reality system for collaborative design. In VSMM'96: Proc. Virtual Systems and Multimedia, pp. 85–90, Gifu, Japan, 1996. Int'l Soc. Virtual Systems and Multimedia.

[11] Leung, T. K., Burl, M. C., and Perona, P. (1995). Finding faces in cluttered scenes using random

labeled graph matching. In Proceedings of the Fifth international Conference on Computer

Vision (June 20 - 23, 1995). ICCV. IEEE Computer Society, Washington, DC, p.637.

[12] Benn, D. E., Nixon, M. S. and Carter, J. N. (1999) Extending Concentricity Analysis by

Deformable Templates for Improved Eye Extraction. In: Proceedings of the Second International

Conference on Audio- and Video-Based Biometric Person Authentication.

[13] Kroon, B., Hanjalic, A., and Maas, S. M. 2008. Eye localization for face matching: is it always

useful and under what conditions?. In Proceedings of the 2008 international Conference on

Content-Based Image and Video Retrieval (Niagara Falls, Canada, July 07 - 09, 2008). CIVR '08.

ACM, New York, NY, 379-388.

[14] H.A. Rowley, S. Baluja, and T. Kanade, “Neural network-based face detection” ,IEEE

Transactions on Pattern Analysis and Machine Intelligence, 20(1):23–38,1998.

[15] Sung, K. and Poggio, T. (1998), Example-based Learning for View-based Human Face Detection, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 20, No. 1, January 1998.

[16] Baluja, S. (1997). Face detection with in-plane rotation: Early concepts and preliminary results, Justsystem Pittsburgh Research Center, online available at: http://www.cs.cmu.edu/~baluja/papers/baluja.face.in.plane.ps.gz

[17] Huttenlocher, D. P., Klanderman, G. A. and Rucklidge, W. J. (1993), Comparing Images Using the Hausdorff Distance, IEEE Transactions on Pattern Analysis and Machine Intelligence.

[18] Jesorsky, O., Kirchberg, K. and Frischholz, R. (2001), Robust Face Detection Using the Hausdorff Distance, In Proc. Third International Conference on Audio- and Video-based Biometric Person Authentication, Springer, Halmstad, Sweden, 6–8 June 2001, Lecture Notes in Computer Science, LNCS-2091, pp. 90–95.

[19] Viola, P. and Jones, M. (2004), Rapid Object Detection Using a Boosted Cascade of Simple Features, Mitsubishi Electric Research Laboratories, IEEE Computer Society Conference on Computer Vision and Pattern Recognition, May 2004, [Online]: http://www.merl.com/reports/docs/TR2004-043.pdf [10/03/2008]

[20] Byrd, R. and Balaji, R. 2006. Real time 2-D face detection using color ratios and K-mean

clustering. In Proceedings of the 44th Annual Southeast Regional Conference (Melbourne,

Florida, March 10 - 12, 2006). ACM-SE 44. ACM, New York, NY, 644-648.

[21] Heisele, B. et al. (2002) Component-based Face Detection, [online], Available: http://cbcl.mit.edu/projects/cbcl/publications/ps/cvpr2001-1.pdf [02-Oct-2008].

[22] Li, S. Z. and Zhang, Z. (2004), FloatBoost Learning and Statistical Face Detection, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 26, No. 9, September 2004.

[23] Li, Y., Gong, S. and Liddell, H. (2000) Support vector regression and classification based multi-view face detection and recognition, Proc. Fourth IEEE International Conference on Automatic Face and Gesture Recognition, 2000, pp. 300–305.

[24] Wilson, P. I. and Fernandez, J. (2006). Facial feature detection using Haar classifiers. J. Comput.

Small Coll. 21, 4 (Apr. 2006), 127-133.

[25] D.W. Hansen and A.E.C. Pece, “Eye Tracking in the Wild,” Computer Vision and Image

Understanding, vol. 98, no. 1, pp. 182-210, Apr. 2005.

[26] J. Daugman, “The Importance of Being Random: Statistical Principles of Iris Recognition,”

Pattern Recognition, vol. 36, no. 2, pp. 279-291, 2003.

[27] K.-N. Kim and R.S. Ramakrishna, “Vision-Based Eye-Gaze Tracking for Human Computer

Interface,” Proc. IEEE Int’l Conf. Systems, Man, and Cybernetics, 1999.

[28] J.P. Ivins and J. Porrill, “A Deformable Model of the Human Iris for Measuring Small 3-

Dimensional Eye Movements,” Machine Vision and Applications, vol. 11, no. 1, pp. 42-51, 1998

[29] A. Yuille, P. Hallinan, and D. Cohen, “Feature Extraction from Faces Using Deformable

Templates,” Int’l J. Computer Vision, vol. 8, no. 2, pp. 99-111, 1992

[30] P.W. Hallinan, “Recognizing Human Eyes,” Geometric Methods in Computer Vision, pp. 212-

226, SPIE, 1991.

[31] J. Huang and H. Wechsler, “Eye Detection Using Optimal Wavelet Packets and Radial Basis

Functions (RBFs),” Int’l J. Pattern Recognition and Artificial Intelligence, vol. 13, no. 7, 1999.

[32] D. Tweed and T. Vilis, “Geometric Relations of Eye Position and Velocity Vectors during

Saccades,” Vision Research, vol. 30, no. 1, pp. 111-127, 1990.

[33] D. Scott and J. Findlay, “Visual Search, Eye Movements and Display Units,” human factors

report, 1993.

UNDERTAKING

I/We hereby agree to undertake the research work of the project detailed below:

Title of Research Project Proposal

Vehicle Future Position Augmentation over Wind Screen: Head-up-Display and Eye Tracking

Framework

Name of Sponsoring Agency/Department/Organization:

---------------------------------------------------------------------------------------------------------------------

Awarded to me/us vide Notification/Grant No: ……………………… Dated: ……………………

I/We also undertake to submit and present reports on the progress of the work and audited

statements of the approved expenditures incurred.

At the termination of the project, I/We would submit the final report on the research project to

the competent authority/sponsoring agency.

Further, I/We have not submitted this project to any other sponsoring agency for funding.

Principal Investigator: Dr. Tabassam Nawaz, Associate Professor, Software Engineering Department, UET Taxila

Co-Investigator: Mr. Muhammad Shahid, PhD Scholar, Computer Engineering Department, UET Taxila

Signature with date

Endorsements

Chairman of Department: Dr. M. Iram Baig

Dean of Faculty: Prof. Dr. Adeel Akram

Signature with date