Milestone 4


Introduction

This document outlines the procedures and results of our initial usability testing of our motorcoach safety widget design, a mock-up of which is shown below.

Steelbeam Software, Inc.

Steelbeam Software

Boltri, Eversman, Hovanes, Ray

Milestone 4

Contents

Introduction

Study Protocol

Demographics

Results

Appendices

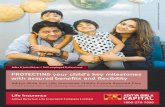

Mock-up of the safety widget as it would appear on a motorcoach carrier's website, www.greyhound.com (widget outlined in red)

Study Protocol

Heuristic Evaluation

This evaluation was performed internally by Steelbeam Software team members, who evaluated the system against the following 10 heuristics outlined by Jakob Nielsen (useit.com):

1. Visibility of system status

2. Match between system and the real world

3. User control and freedom

4. Consistency and standards

5. Error prevention

6. Recognition rather than recall

7. Flexibility and efficiency of use

8. Aesthetic and minimalist design

9. Help users recognize, diagnose, and recover from errors

10. Help and documentation

We used the following 0 to 4 scale (Nielsen, useit.com) to rate the severity of usability problems:

0 = I don't agree that this is a usability problem at all

1 = Cosmetic problem only: need not be fixed unless extra time is available on project

2 = Minor usability problem: fixing this should be given low priority

3 = Major usability problem: important to fix, so should be given high priority

4 = Usability catastrophe: imperative to fix this before product can be released

DOT Score Panel

Cognitive Walkthrough

The cognitive walkthrough was also performed internally by Steelbeam Software. We defined the following series of tasks that our user would perform:

Step 1: User examines the overall DOT score and the scores associated with each BASIC. User clicks on “More Information” to get more info specific to the carrier.

Step 2: User displays the User Reviews tab and can view the FullTank gauge.

Step 3: User views all of the reviews.

Step 4: User finds several reviews particularly helpful and decides to “like” them.

Step 5: User wants to write a review of her own about a past experience with the company and submits her review.

We then asked the following 4 questions:

• Will the user try to achieve the right effect?

• Will the user notice that the correct action is available?

• Will the user associate the correct action with the effect that the user is trying to achieve?

• If the correct action is performed, will the user see that progress is being made towards their goal?

User Reviews Panel

Our testing site at the Miller Learning Center

Think-aloud Evaluation

For this testing technique, we wanted a variety of participants to test our system, so we provided an incentive by offering a free chicken sandwich upon completion of the test. Potential participants were approached at random.

The following steps outline the procedure by which they tested the system:

Step 1: Participant fills out consent form (Appendix A1). Tester asks for permission to capture video and audio.

Step 2: Tester explains the general use of the system and informs participant of the steps that will be completed during the test.

Step 3: Participant completes 9 general demographics questions (Appendix C1).

Step 4: Participant begins test under the direction of the tester.

Step 5: Participant verbalizes thought process while completing a series of tasks, as outlined on the Task Worksheet (Appendix B).

Step 6: After completing the series of tasks, the participant completes a reflective questionnaire (Appendix C2).

Step 7: Tester thanks participant for their time and rewards them with a chicken sandwich.

Retrospective Testing Interview

For this testing technique, potential participants were again chosen at random and offered a chicken sandwich as an incentive to complete the testing.

The following steps outline the procedure by which they tested the system:

Step 1: Participant fills out consent form (Appendix A2). Tester asks for permission to capture video and audio.

Step 2: Tester explains the general use of the system and informs participant of the steps that will be completed during the test.

Step 3: Participant completes 9 general demographics questions (Appendix C1).

Step 4: Participant begins test under the direction of the tester.

Step 5: Participant completes a series of tasks, as outlined on the Task Worksheet (Appendix B).

Step 6: After completing the series of tasks, the participant completes a reflective questionnaire (Appendix C2).

Step 7: Participant discusses his/her experience using the system with the tester.

Step 8: Tester thanks participant for their time and rewards them with a chicken sandwich.

Demographics

Our evaluations and surveys took place in the Miller Learning Center on the campus of the University of Georgia. As a result, the variety of participants we could attract at such a location was somewhat limited. However, as stated in previous design iterations, our system was primarily designed for our target demographic of younger users (18–35) who have regular access to the internet.

We conducted evaluations with ten individuals, seven male and three female, all between the ages of 18 and 29. Most participants were full-time students; two were part-time students and two were in the workforce. Participants' incomes fell predominantly in the less-than-$20,000 bracket, with only one individual reporting an income over $20,000. Participants reported spending an average of 22.7 hours online per week, as illustrated in the graph below.

Half of our randomly selected participants had never traveled by motorcoach, with the remaining five averaging between 1 and 5 rides in the past year. When asked how they prefer to book motorcoach travel (a hypothetical question for those who had never traveled by motorcoach), participants predominantly answered “via the Internet,” with “phone” and “friend/family” also receiving votes (see graph below).

The next question followed from the previous one by asking which device participants use, or would use, when booking a motorcoach online. Responses favored the computer/tablet, with only one vote indicating that the participant does not travel.

We realize that some of the correlations drawn from this study are not necessarily statistically valid because of the sample size: only ten individuals were interviewed. However, the time constraints placed on us as part-time researchers in a classroom setting required this limitation in the interest of completing the study. It is also worth noting that, although only ten participants took part, many questions received similar responses from as many as nine of the ten participants, a consistency that should not be ignored.
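
To put the “nine out of ten” figure in perspective, the sketch below computes a Wilson score interval, a standard confidence interval for a binomial proportion. It was not part of our original analysis; the function name wilson_interval and the choice of z = 1.96 (roughly 95% confidence) are our own illustrative assumptions.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion.

    Illustrative only: z = 1.96 corresponds to roughly 95% confidence.
    """
    p_hat = successes / n
    z2 = z * z
    denom = 1 + z2 / n
    # Center is pulled toward 0.5, which keeps the interval sensible for small n.
    center = (p_hat + z2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z2 / (4 * n * n))
    return center - half_width, center + half_width


low, high = wilson_interval(9, 10)
print(f"9 of 10 agreeing: 95% interval ~ {low:.2f} to {high:.2f}")
```

For nine of ten, this gives roughly 0.60 to 0.98. Even the lower bound still indicates a majority, which supports the point above, but the width of the range shows how imprecise a ten-person sample is.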

Results

Heuristic Evaluation

The following table lists the results of our internal heuristic evaluation.

Problem no. | Problem | Severity | Heuristic no.
--- | --- | --- | ---
1 | There is nothing to inform the user that there are mouse-over tooltips for the BASIC categories, and it takes several seconds of hovering for the tip to appear | 1 | 1
2 | On the User Reviews screen, it may not be clear that the number under the gauge is the overall average of reviews | 2 | 1
3 | Page name for “See all reviews” is “Rate your motorcoach carrier” | 0 | 1
4 | BASIC categories don't use the most intuitive wording | 3 | 2
5 | “Full tank” may be confusing | 1 | 2
6 | “FMCSA Safety Info” at the top of review pages: users may not know what FMCSA is | 3 | 2
7 | If users want to see all the reviews and then leave a review, they have to go back to the applet; they should be able to do so from the “all reviews” screen | 3 | 3
8 | No way to undo a helpful-review vote | 1 | 3
9 | In the confirmation screen for a helpful vote, a better label for the button might be “back to all reviews” | 0 | 3
10 | If the user submits a review by mistake, there is no way to edit or delete it | 3 | 3
11 | On reviews, you vote that you find the review “helpful,” while on the confirmation screen, you have “liked” the review | 1 | 4
12 | “Score” vs. “overall score”: users might wonder if there is a difference | 2 | 4
13 | If the user enters a blank review, they receive an error | 4 | 5
14 | Frequent users may not want to see a confirmation screen every time they vote a review as helpful | 3 | 7
15 | Exact time isn't really necessary for reviews; perhaps include only the date | 0 | 8
16 | Perhaps provide a way to collapse a review | 1 | 8
17 | Name label seems cluttered next to the “helpful” box | 1 | 8
18 | A visual representation of each review score (e.g., stars) would be a nice addition | 1 | 8
19 | Error message for a blank review is “HTTP Status 500” | 4 | 9
20 | The FMCSA Safety Info link just goes to the main page, which might not be useful to many users | 2 | 10
21 | No way to get to the “submit a rating” page from the “view all ratings” page | 2 | 3, 7
22 | Response page after “liking” a review could get annoying; it could instead be a response message placed at the top of the screen | 0 | 8, 2
23 | A single user can “like” a review as many times as they want, which could easily result in abuse | 3 | 2, 3
24 | A single user can leave as many reviews as he/she would like, which could also result in abuse | 3 | 2, 3
25 | The score on the user rating site could be a little easier to read | 2 | 2, 7, 8
26 | Some inconsistency of naming: user review or rating? | 0 | 4
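
As a sketch of how the table can be turned into a prioritized fix list, the Python below encodes each row as (problem no., severity, heuristic numbers), selects everything rated 3 or 4 on the severity scale, and counts how often each heuristic was cited. The threshold of 3 follows the scale's wording (“important to fix”); the names PROBLEMS and must_fix are our own hypothetical choices, not part of the original evaluation.

```python
from collections import Counter

# Rows of the heuristic evaluation table: (problem no., severity 0-4, heuristic numbers).
PROBLEMS = [
    (1, 1, [1]), (2, 2, [1]), (3, 0, [1]), (4, 3, [2]), (5, 1, [2]),
    (6, 3, [2]), (7, 3, [3]), (8, 1, [3]), (9, 0, [3]), (10, 3, [3]),
    (11, 1, [4]), (12, 2, [4]), (13, 4, [5]), (14, 3, [7]), (15, 0, [8]),
    (16, 1, [8]), (17, 1, [8]), (18, 1, [8]), (19, 4, [9]), (20, 2, [10]),
    (21, 2, [3, 7]), (22, 0, [8, 2]), (23, 3, [2, 3]), (24, 3, [2, 3]),
    (25, 2, [2, 7, 8]), (26, 0, [4]),
]


def must_fix(problems, threshold=3):
    """Problem numbers rated at or above `threshold`, most severe first.

    On Nielsen's scale, 3 = major (high priority) and 4 = catastrophe.
    Ties in severity are ordered by problem number.
    """
    selected = [p for p in problems if p[1] >= threshold]
    selected.sort(key=lambda p: (-p[1], p[0]))
    return [p[0] for p in selected]


severity_counts = Counter(sev for _, sev, _ in PROBLEMS)
heuristic_counts = Counter(h for _, _, hs in PROBLEMS for h in hs)

print("Problems per severity:", dict(sorted(severity_counts.items())))
print("Fix first:", must_fix(PROBLEMS))
print("Most-cited heuristics:", heuristic_counts.most_common(3))
```

With the rows above, this yields nine problems rated 3 or 4, led by the two catastrophes (problems 13 and 19, both about the blank-review error), and shows that heuristics 2 and 3 (seven citations each) and 8 (six) are cited most often.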

Cognitive Walkthrough

We evaluated each of the 5 steps using the 4 questions outlined in the Study Protocol section of this document. Our results were as follows:

Step 1: User examines the overall DOT score and the scores associated with each BASIC. User clicks on “More Information” to get more info specific to the carrier.

The user should be able to complete this task; however, there may be some confusion about what each of the BASIC categories means.

Step 2: User displays the User Reviews tab and can view the FullTank gauge.

This step might be difficult to complete: the User Reviews tab is somewhat hard to find, and it is not obvious that it opens a different window. Also, while the FullTank gauge presents the user review information in a sleek, visual format, it may not be entirely intuitive and may need explanation that is currently lacking.

Step 3: User views all of the reviews.

This step is easy, as long as the user successfully completes the previous step, which takes the user to the User Reviews panel of the widget. However, there may be some confusion, as the “See all Reviews” button opens another window in the user's browser rather than in the widget itself.

Step 4: User finds several reviews particularly helpful and decides to give them a “thumbs up.”

This step follows the format of other review sites by allowing a user to give a review he/she finds helpful a “thumbs up.” However, the user may be given too much feedback: each time a user gives a review a “thumbs up,” he/she is directed to a confirmation page that is more of an annoyance than a help.

Step 5: User wants to write a review of her own about a past experience with the company and submits her review.

This task may be difficult to perform because the only way to navigate to the page where one can leave a review is through the widget interface. There should be a button that allows the user to leave a review from the “See all Reviews” page.

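KLM Evaluation

This predictive evaluation, based on the Keystroke-Level Model (KLM), was conducted on the tasks listed in the Task Worksheet (Appendix B), using the following operator times:

H (home hand on mouse or keyboard) = 0.40 s

P (point with the mouse) = 1.10 s

B (mouse button press/release) = 0.20 s

M (mental preparation) = 1.35 s

K (keystroke) = 0.28 s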

Find overall DOT safety score:

Home on mouse – H

Point to the DOT Score box (tool-tip score explanation appears) – P

Predictive Time = H + P = 1.50 s

Find safety score for Driver Fitness:

Home on mouse – H

Point to “Driver Fitness” text in box (tool-tip score explanation appears) – P

Predictive Time = H + P = 1.50 s

Click More Information button:

Home on mouse – H

Point to the More Information button – MP

Click on mouse button (press/release) – B

Predictive Time = H + M + P + B = 3.05 s

Find the overall user rating for the carrier:

Home on mouse – H

Point to User Reviews tab – MP

Click on mouse button (press/release) – B

Point to user ratings box – P

Predictive Time = H + M + 2P + B = 4.15 s

View the reviews that have been left by users and find the review left by Beth:

Home on mouse – H

Point to See All Reviews button – MP

Click on mouse button (press/release) – B

Point to reviews web page scroll bar – P

Click on mouse button (press) – B

Drag mouse cursor in a downward motion to scroll the webpage for Beth's review – P

Release mouse button when review is found – B

Predictive Time = H + M + 3P + 3B = 5.65 s

Find the review voted most helpful:

Home on mouse – H

Point to reviews web page scroll bar – P

Click on mouse button (press) – B

Drag mouse cursor to scroll the webpage for the most helpful review – P

Release mouse button when review is found – B

Predictive Time = H + 2P + 2B = 3.00 s

Give a review a helpful vote (i.e., thumbs up):

Home on mouse – H

Point to reviews web page scroll bar – P

Click on mouse button (press) – B

Drag mouse cursor to scroll webpage for review on which to cast vote – MP

Release mouse button when review is found – B

Point to thumbs up button – P

Click on the thumbs up button to give the review a helpful vote (press/release) – B

Predictive Time = H + M + 3P + 3B = 5.65 s

Leave a user review:

Home on mouse – H

Point at the Leave a Review button located on the system – MP

Click on mouse button (press/release) – B

Point to the user name textbox on the Leave a Review webpage – P

Click on mouse button (press/release) – B

Home on keyboard – H

Type 6-letter name into textbox – 6K

Home on mouse – H

Point to the review description textbox on the Leave a Review webpage – P

Click on mouse button (press/release) – B

Home on keyboard – H

Type 31-character description into textbox – 31K

Home on mouse – H

Point to Submit button – MP

Click on mouse button (press/release) – B

Predictive Time = 5H + 2M + 4P + 4B + 37K = 20.26 s
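
To make the arithmetic above easy to re-check, here is a small Python sketch that sums the listed operator times for each task's operator sequence. The operator values are the ones given above. The task labels are shortened, and the compact notation (for example “6K” for six keystrokes, or “MP” for mental preparation followed by pointing) is our own hypothetical shorthand for the listed steps, not the input format of any KLM tool.

```python
# Operator times in seconds, as listed in the KLM evaluation above.
OPERATOR_TIMES = {"H": 0.40, "P": 1.10, "B": 0.20, "M": 1.35, "K": 0.28}


def klm_time(sequence: str) -> float:
    """Sum operator times for a space-separated KLM operator sequence.

    A token may start with a repeat count ("6K" = six keystrokes) and may
    chain operators ("MP" = mental preparation, then point). The count
    applies to every operator in the token (an assumption of this sketch).
    """
    total = 0.0
    for token in sequence.split():
        digits = ""
        while token and token[0].isdigit():
            digits += token[0]
            token = token[1:]
        count = int(digits) if digits else 1
        total += count * sum(OPERATOR_TIMES[op] for op in token)
    # Round to hundredths to strip floating-point noise from the sums.
    return round(total, 2)


TASKS = {
    "Find overall DOT safety score": "H P",
    "Find safety score for Driver Fitness": "H P",
    "Click More Information button": "H MP B",
    "Find the overall user rating": "H MP B P",
    "Find Beth's review": "H MP B P B P B",
    "Find the review voted most helpful": "H P B P B",
    "Give a review a helpful vote": "H P B MP B P B",
    "Leave a user review": "H MP B P B H 6K H P B H 31K H MP B",
}

for name, seq in TASKS.items():
    print(f"{name}: {klm_time(seq):.2f} s")
```

Running it reproduces the predictive times listed above. It also shows that the 37 keystrokes (10.36 s) account for about half of the 20.26 s predicted for leaving a review.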

More photos from the testing site at the Miller Learning Center.

Reflective Questionnaire Results

Selected results from the Ease of Tasks questions on the Reflective Questionnaire (Appendix C2)

Discussion of Results

Overall, we are pleased with our prototype based on the evaluation results. User feedback was generally positive, and our cognitive walkthrough showed that users' needs are satisfied for many tasks. However, the design has some flaws: our heuristic evaluation revealed several usability issues, and user feedback raised further problems. The results of all of these evaluations are discussed below.

The Ease of Tasks graph (above) shows the average reported ease of completing the first 7 tasks (see Appendix B).

Our heuristic evaluation revealed some errors and omissions fairly quickly. Some of the most glaring problems involve error handling: when users leave a blank review, they receive an intimidating error screen that offers no remedies or “ways out.” Although that was the worst problem, we noticed several other fairly severe errors. One, later confirmed by user testing, is that users cannot leave a review from the review overview page.

Another problem with reviews is that users cannot edit or delete a review. One issue we noticed that did not come up in user testing is that users can leave multiple reviews and like a review multiple times, which could easily result in abuse. Finally, some inconsistencies in terminology could be confusing. For example, we use both “helpful” and “like” to describe a review a user considers good, and we use “score” in one context but “overall score” in another to mean the same thing.

The results from our questionnaires revealed some problems that did not come up during the cognitive walkthrough or heuristic evaluation. The most common complaint from users was that our visual design was not very appealing. Because our own evaluations focused on functionality errors, this feedback about the visual aspects of the program was very helpful.

Another of the most common complaints concerned something we had noticed before user testing: navigation. Users had difficulty finding some pages, and a few complained that there were too many screens. Of the different screens, users liked the DOT score window the most, finding that it provided a lot of clear, accessible information in a small space. The biggest criticism of this window was that it was difficult to translate the scores into meaningful information. The component users liked least varied, but their answers generally concerned the review screens. In addition to the navigation issues, users disliked the format of the reviews page: reviews were too cluttered, and it was hard to find the relevant data. When we asked users how difficult they found each task, their answers confirmed some of these criticisms. For example, users rated finding the overall user rating, which required navigating to a new screen, as more difficult than other tasks.

We performed 3 retrospective interviews, which for the most part confirmed the results of our questionnaire. Regarding the DOT scores, participants appreciated the bold, large font used for the overall score and found the BASIC scores (in a smaller font) helpful and easy to understand as well. One participant noted that it might help to display the maximum possible score: nowhere did the system say “out of 10,” although participants assumed it.

When participants selected the “More Information” button, they generally disliked the DOT website they were taken to and preferred the DOT rating on our system when looking up government data. However, when asked whether they preferred DOT scores or user reviews, all participants said they would prefer reading user reviews.

Each participant's experience with the FullTank design for user reviews was favorable; however, one individual noted that the terms “rating” and “review” were used inconsistently, which made it difficult to understand what the evaluation was asking them to look up. On the “See all reviews” page, participants found the user reviews effective and simple to understand, but each participant noted that the design looked very plain, with its white/black colors and overall bland look, a view that closely matched the results of the questionnaire. Participants had no trouble leaving their own review either, though the plainness of the aesthetic design came up again. Overall, participants found the system effective at displaying the information, and most of the downsides concerned how the system looked rather than how it functioned.

Based on these tests, we could make several improvements if we were to redesign our prototype. One of the biggest would be to iron out the navigation issues. Some criticisms could be addressed by something as simple as adding a button; others, such as the complaint that there were too many screens, might require extensive brainstorming to resolve.

Another improvement would be to the visual design of the system. If this application were being developed professionally, we might consider hiring a graphic designer to help with its aesthetic appeal. Even without a designer, we could add more color to our review page to convey the information more effectively, and perhaps use a font scheme that users find more modern (one criticism we received was that the font looked dated). One simple improvement would be to unify our terminology. In addition to the inconsistencies we noticed during the heuristic evaluation, users had trouble distinguishing the terms “user ratings” and “user reviews,” something we did not pick up on during our non-user evaluations. Finally, if we had more time, we might make some general refinements to the review system: we would look at how to make the layout more effective, and we would eliminate the possibility of abuse through leaving multiple reviews or liking a review more than once.

At its core, though, we still believe our idea provides a unique, effective solution for getting more information about motorcoach safety to users.

Appendices

CONSENT FORM FOR RETROSPECTIVE TESTING INTERVIEW

I, _________________________________, agree to participate in a usability test of a Motorcoach Safety Information System (“System”), designed by Mark Boltri, Dillon Eversman, Melissa Hovanes, and Nathan Ray as part of a team project for the Human-Computer Interaction class (CSCI 4800) at the University of Georgia, taught by Chris Plaue.

I understand that my participation is voluntary. I can refuse to participate or stop taking part at any time without giving any reason, and without penalty or loss of benefits to which I am otherwise entitled. I can ask to have all of the information about me returned to me, removed from the research records, or destroyed.

The purpose of this testing is to receive feedback from potential users about the various aspects of the System. If I volunteer to take part in the testing, I will be asked to do the following things:

1) Fill out a brief demographics questionnaire, which should take about a minute.

2) Use the System to perform a series of tasks, which will be provided by the designers.

3) Complete a verbal interview in which I will explain what steps I took to complete the tasks performed in procedure 2.

4) Fill out a post-testing questionnaire, which will ask for my opinions about various aspects of the System. This should take about 5 to 10 minutes.

No discomforts, stresses, or risks to my physical or psychological well-being are expected as a result of the testing procedures. For my participation, I will receive a chicken sandwich, the corresponding value of which is approximately $3.

The results of my participation will be anonymous. No individually identifiable information about me will be collected during the course of the testing. The designers will answer any further questions about the usability tests, now or at any time during the course of the testing.

I understand that I am agreeing by my signature on this form to take part in this usability test. I will receive this consent form for my records.

Names of Designers / Signatures / Date

Mark Boltri _______________________

Dillon Eversman _______________________

Melissa Hovanes _______________________

Nathan Ray _______________________ __________

Name of Participant / Signature / Date

_________________________ _______________________ __________

Please sign both copies, keep one and return one to the researcher.

CONSENT FORM FOR THINK-ALOUD EVALUATION

I, _________________________________, agree to participate in a usability test of a Motorcoach Safety Information System (“System”), designed by Mark Boltri, Dillon Eversman, Melissa Hovanes, and Nathan Ray as part of a team project for the Human-Computer Interaction class (CSCI 4800) at the University of Georgia, taught by Chris Plaue.

I understand that my participation is voluntary. I can refuse to participate or stop taking part at any time without giving any reason, and without penalty or loss of benefits to which I am otherwise entitled. I can ask to have all of the information about me returned to me, removed from the research records, or destroyed.

The purpose of this testing is to receive feedback from potential users about the various aspects of the System. If I volunteer to take part in the testing, I will be asked to do the following things:

1) Fill out a brief demographics questionnaire, which should take about a minute.

2) Use the System to perform a series of tasks, which will be provided by the designers. While performing these tasks, I will explain out loud what I am doing as well as any thoughts I am having about the System. This should take about 5 to 10 minutes.

3) Fill out a post-testing questionnaire, which will ask for my opinions about various aspects of the System. This should take about 5 to 10 minutes.

No discomforts, stresses, or risks to my physical or psychological well-being are expected as a result of the testing procedures. For my participation, I will receive a chicken sandwich, the corresponding value of which is approximately $3.

The results of my participation will be anonymous. No individually identifiable information about me will be collected during the course of the testing. The designers will answer any further questions about the usability tests, now or at any time during the course of the testing.

I understand that I am agreeing by my signature on this form to take part in this usability test. I will receive this consent form for my records.

Names of Designers / Signatures / Date

Mark Boltri _______________________

Dillon Eversman _______________________

Melissa Hovanes _______________________

Nathan Ray _______________________ __________

Name of Participant / Signature / Date

_________________________ _______________________ __________

Please sign both copies, keep one and return one to the researcher.

Appendix A: Consent Forms

A1: Think-aloud Evaluation

A2: Retrospective Testing

Participant ______

1. In the box below, write down the overall DOT safety score for Greyhound Lines.

2. Write down the safety score for driver fitness.

3. Get more information about Greyhound's DOT safety score and check the box below.

4. Write down what the overall user rating is for the carrier.

5. View all reviews that have been left by users and check the box below.

6. Find the review written by Beth and write down the score for that review.

7. Write down the reviewer's name for the review that has been voted most helpful.

8. Find the review that you find most helpful and give that review a helpfulness vote (thumbs up). Check the box below.

9. Leave a review of your own. For the name, use the name of your favorite fictional character; for the score, pick a value between 3 and 7; and for the review itself, write up to a sentence in the text area relating to bus safety and quality. Submit your review and check the box below.

Appendix B: Task Worksheet

Appendix C: Questionnaire

1. Are you male or female?

Male

Female

2. Which category below includes your age?

17 or younger

18-20

21-29

30-39

40-49

50-59

60 or older

3. What is the highest level of school you have completed or the highest degree you have received?

Less than high school degree

High school degree or equivalent (e.g., GED)

Some college but no degree

Associate degree

Bachelor degree

Graduate degree

C1: Demographics Questions

4. Which of the following categories best describes your employment status?

Student, studying full time

Student, studying part time

Employed, working 1-39 hours per week

Employed, working 40 or more hours per week

Not employed, looking for work

Not employed, NOT looking for work

Retired

Disabled, not able to work

5. What is your annual income?

Less than $20,000

$20,000 to $34,999

$35,000 to $49,999

$50,000 to $74,999

$75,000 to $99,999

$100,000 to $149,999

$150,000 or More

6. In a typical week, how many hours do you spend online?

_____________ hours

7. In the past year, how many times did you ride on a motorcoach (i.e. charter bus)?

I did not ride on a motorcoach last year

1 time

2-3 times

3-5 times

5 or more times

8. When booking travel services (hotel, airline, rental car, motorcoach or charter bus) what resources do you use? Check all that apply.

Internet

Phone

Travel Agent

Friend or Family Member

I don't make reservations/I book services at the service desk

I don't travel

Other (please specify) ____________________________________________________________

9. If you use the Internet to book travel service, what device do you most use when making a reservation/buying a ticket? Select 1 answer.

Laptop/Desktop Computer

Smartphone

Tablet

I do not make reservations online

Other (please specify) ____________________________________________________________

10. Which part of the system did you like the most? Select 1 answer.

DOT Score Window

User Review Window

See All Reviews Webpage

Leave A Review Interface

11. Which part of the system did you like the least? Select 1 answer.

DOT Score Window

User Review Window

See All Reviews Webpage

Leave A Review Interface

12. Explain your reasons for your answers to Questions 10 and 11, above.

________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________

13. On a scale of 1 to 5, 1 being the worst and 5 being the best, give your overall impression of the system’s aesthetic design.

1 (Not Aesthetically Pleasing) 2 3 4 5 (Aesthetically Pleasing)

14. How easy or difficult was it to navigate between different parts of the system on a scale of 1 to 5, with 1 being the most difficult and 5 being the easiest?

1 (Very Difficult to Navigate) 2 3 4 5 (Easy to Navigate)

15. Rank the following components of the system from most informative to least informative, with 1 being the most informative and 5 being the least informative, using each number only once.

______ Overall safety score

______ Safety score breakdown by BASICS categories

______ The web page visited by the More Information button

______ Overall user review score

______ Individual reviews from different users

ranking from 1 to 5

C2: Reflective Questionnaire

16. What suggestions would you have to improve the visual design of the program?

________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________

17. Rate the difficulty level of performing the following tasks:

1 (Very Difficult) 2 3 4 5 (Very Easy)

Write down the overall DOT safety score for Greyhound Lines.

Write down the safety score for driver fitness.

Get more information about Greyhound's DOT safety score.

Write down what the overall user rating is for the carrier.

View all reviews that have been left by users.

Find the review written by Beth and write down the score for that review.

Write down the name and score for the review that has been voted most helpful.


To watch screen-capture videos from our think-aloud evaluations and to see more photos from the testing site, visit our website.

Mark Boltri

Melissa Hovanes

Dillon Eversman

Nathan Ray

Steelbeam Software, Inc.