Lecture 3. Directory-based Cache Coherence


Prof. Taeweon Suh
Computer Science Education

Korea University

COM503 Parallel Computer Architecture & Programming

Korea Univ

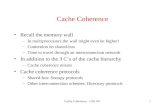

Scalability Problem with Bus

• Bus-based machines are essentially based on broadcasting via the bus
• Bus bandwidth becomes a limitation as the number of processors increases
  - Bandwidth = #wires × clock frequency
  - Wire length grows with #processors
  - Load (capacitance) grows with #processors
  - Longer wires and higher load both limit the achievable clock frequency
• A snoopy bus could delay memory accesses


Distributed Shared Memory

• Goal: scale up machines
  - Distribute memory
  - Use a scalable point-to-point interconnection providing:
    - Bandwidth that scales linearly with #nodes
    - Memory access latency that grows sub-linearly with #nodes

[Figure: nodes P1 … Pn, each with a cache ($), memory (Mem), and a communication assist (CA), connected by a scalable interconnection network. CA: Communication Assist]

Distributed Shared Memory

• Important issue
  - How to provide caching and coherence in hardware on a machine with physically distributed memory, without the benefit of a globally snoopable interconnect such as a bus

• Not only must the memory access latency and bandwidth scale well, but so must the protocols used for coherence, at least up to the scales of practical interest

• The most common approach for full hardware support for cache coherence: Directory-based cache coherence


A Scalable Multiprocessor with Directories

[Figure: nodes P1 … Pn, each with a cache ($), a communication assist (CA), memory, and a directory, connected by a scalable interconnection network]

• Scalable cache coherence is typically based on the concept of a directory
  - Maintain the state of each memory block explicitly in a place called a directory
  - The directory entry for a block is co-located with the block in main memory
    - Its location can be determined from the block address
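To make "its location can be determined from the block address" concrete, here is a minimal Python sketch of two common ways to map a physical address to its home node. The block size, node count, per-node memory size, and the function names `home_contiguous`, `home_interleaved`, and `directory_index` are hypothetical; a real machine fixes its own mapping, and some interleave at a coarser granularity.

```python
# Hypothetical parameters; the lecture does not fix any of these values.
BLOCK_SIZE = 64            # bytes per cache block
NUM_NODES = 16             # nodes in the machine
MEM_PER_NODE = 1 << 30     # bytes of main memory per node (1 GiB)


def home_contiguous(addr: int) -> int:
    """Each node owns one contiguous range, so the home is given by the high-order bits."""
    return addr // MEM_PER_NODE


def home_interleaved(addr: int) -> int:
    """Consecutive blocks are spread round-robin over the nodes (low-order block bits)."""
    return (addr // BLOCK_SIZE) % NUM_NODES


def directory_index(addr: int) -> int:
    """Index of the block's directory entry inside its home node (contiguous mapping)."""
    return (addr % MEM_PER_NODE) // BLOCK_SIZE


addr = 0x4000_0440
print(home_contiguous(addr), directory_index(addr), home_interleaved(addr))  # prints: 1 17 1
```

Either way, the home is a pure function of the address, so finding the directory entry needs neither a search nor a broadcast.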


2-level Cache Coherent Systems

• An approach that is (or was?) popular takes the form of a 2-level protocol hierarchy
  - Each node of the machine is itself a multiprocessor
  - The caches within a node are kept coherent by an inner protocol
  - Coherence across the nodes is maintained by an outer protocol
• A common organization
  - Inner protocol: snooping protocol
  - Outer protocol: directory-based protocol


ccNUMA

• Main memory is physically distributed, so the cost of a memory access depends on which node's memory a processor accesses
  - Architectures of this type are often called cache-coherent, non-uniform memory access (ccNUMA)

• More generally, systems that provide a shared address space programming model with physically distributed memory and coherent replication are called distributed shared memory (DSM) systems


DSM (NUMA) Machine Examples

• Nehalem-based systems with QPI

[Figure: Nehalem-based Xeon 5500 system. QPI: QuickPath Interconnect]
Source: http://www.qdpma.com/systemarchitecture/SystemArchitecture_QPI.html

Directory-based Approach

• Three important functions upon a cache miss
  - Finding out enough information about the state of the memory block
  - Locating other copies if needed (e.g., to invalidate them)
  - Communicating with the other copies (e.g., obtaining data from them, or invalidating or updating them)
• In snoopy protocols, all three functions are performed by the broadcast on the bus
• Broadcasting in DSM machines generates a large amount of traffic
  - On a p-node machine, there are at least p network transactions on every miss
  - So, it does not scale
• A simple directory approach
  - Look up the directory to find out the state of the block in other caches
  - The locations of the copies are also found from the directory
  - The copies are communicated with using point-to-point network transactions in an arbitrary interconnection network, without resorting to broadcast


Terminology

• Home node is the node in whose main memory the block is allocated
• Dirty node is the node that has a copy of the block in its cache in modified (dirty) state
  - The home node and the dirty node for a block may be the same
• Owner node is the node that currently holds the valid copy of a block and must supply the data when needed
  - This is either the home node (when the block is not in dirty state in a cache) or the dirty node
• Exclusive node is the node that has a copy of the block in its cache in an exclusive (dirty or clean) state
• Local node (or requesting node) is the node containing the processor that issues a request for the block
• Blocks whose home is the requesting processor's node are called locally allocated (or local blocks), whereas all others are called remotely allocated (or remote blocks)


A Directory Structure

• A natural way to organize a directory
  - Maintain the directory entry together with the block in main memory at its home
• A simple organization of the directory entry for a block is a bit vector of n presence bits (one for each of the n nodes) together with state bits
  - For simplicity, let's assume that there is only one state bit (dirty)

[Figure: P1 … Pn, each with a CA, a cache ($), memory, and a directory, on a scalable interconnection network; each directory entry consists of n presence bits and a dirty bit]

A Directory Structure (Cont.)

• If the dirty bit is ON, then only one node (the dirty node) should be caching that block and only that node’s presence bit should be ON

• A read miss can be serviced by looking up the directory to see which node has a dirty copy of the block or if the block is valid in main memory at home

• A write miss can be serviced by looking up the directory as well to see which nodes are the sharers that must be invalidated
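A minimal Python sketch of the bit-vector entry described above, assuming the n presence bits are packed into an integer and there is a single dirty bit. The class and method names are hypothetical; `consistent()` checks the rule that a dirty block has exactly one presence bit ON, and `sharers()` is what a write miss would consult.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DirectoryEntry:
    """Bit-vector directory entry: n presence bits plus a single dirty bit."""
    n: int                 # number of nodes
    presence: int = 0      # bit j set => node j may hold a copy of the block
    dirty: bool = False    # set => one node holds a modified copy

    def sharers(self) -> List[int]:
        """Nodes whose presence bit is ON (the targets of invalidations on a write miss)."""
        return [j for j in range(self.n) if (self.presence >> j) & 1]

    def consistent(self) -> bool:
        """If the dirty bit is ON, exactly one presence bit may be ON."""
        return not self.dirty or bin(self.presence).count("1") == 1

    def owner(self) -> Optional[int]:
        """The dirty node if the block is dirty; None means home memory holds the valid copy."""
        if not self.dirty:
            return None
        assert self.consistent(), "dirty bit ON requires exactly one presence bit ON"
        return self.sharers()[0]


clean = DirectoryEntry(n=8, presence=0b0010_0101)   # nodes 0, 2, 5 share a clean copy
dirty = DirectoryEntry(n=8, presence=1 << 3, dirty=True)
print(clean.sharers(), clean.owner(), dirty.owner())  # prints: [0, 2, 5] None 3
```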

Basic Operation

[Figure: basic operation of the directory protocol, in two panels each showing the home node]

A Read Miss (at node i) Handling

• If the dirty bit is OFF
  - The home CA obtains the block from main memory, supplies it to the requestor in a reply transaction, and turns the ith presence bit (presence[i]) ON
• If the dirty bit is ON
  - The home CA responds to the requestor with the identity of the node whose presence bit is ON (i.e., the owner node)
  - The requestor then sends a request network transaction to that owner node
  - At the owner, the cache changes its state to shared and supplies the block both to the requesting node and to main memory at the home node
  - The requestor node stores the block in its cache in shared state
  - At memory, the dirty bit is turned OFF and presence[i] is turned ON
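The read-miss steps can be sketched in Python as below. This is a simplified model, not a real controller: presence bits are kept as a Python set, each cache line is a `[state, value]` pair, the names `Machine`, `Entry`, and `read_miss` are hypothetical, and the message counter assumes the strict request/reply flow described above (no forwarding, no races).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

SHARED, DIRTY = "S", "M"


@dataclass
class Entry:
    presence: Set[int] = field(default_factory=set)  # nodes whose presence bit is ON
    dirty: bool = False


@dataclass
class Machine:
    memory: Dict[int, int]                 # block -> value held in home memory
    directory: Dict[int, Entry]            # block -> directory entry at the home
    caches: Dict[int, Dict[int, List]] = field(default_factory=dict)  # node -> block -> [state, value]
    messages: int = 0                      # network transactions generated so far


def read_miss(m: Machine, i: int, block: int) -> int:
    """Service a read miss from node i; return the value read."""
    e = m.directory[block]
    m.messages += 1                        # request: node i -> home
    if not e.dirty:
        value = m.memory[block]            # home memory has a valid copy
        m.messages += 1                    # data reply: home -> node i
    else:
        (owner,) = e.presence              # dirty => exactly one presence bit is ON
        m.messages += 2                    # reply with owner id, then request: node i -> owner
        line = m.caches[owner][block]
        line[0] = SHARED                   # owner's copy becomes shared
        value = line[1]
        m.memory[block] = value            # owner also updates memory at the home
        m.messages += 2                    # data: owner -> node i and owner -> home
        e.dirty = False
    e.presence.add(i)                      # presence[i] ON
    m.caches.setdefault(i, {})[block] = [SHARED, value]
    return value


m = Machine(memory={0: 10}, directory={0: Entry({2}, dirty=True)}, caches={2: {0: [DIRTY, 99]}})
print(read_miss(m, 1, 0), m.memory[0], sorted(m.directory[0].presence), m.messages)
# prints: 99 99 [1, 2] 5
```

The clean case costs two transactions, while the dirty case costs five in this strict request/reply model, which is the latency the optimizations later in the lecture target.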


A Write Miss (at node i) Handling

• If the dirty bit is OFF
  - Main memory has a clean copy of the data
  - Invalidation request transactions must be sent to all nodes j for which presence[j] is ON
    - The home node supplies the block to the requesting node (node i) together with the presence bit vector
    - The CA at the requestor sends invalidation requests to the required nodes and waits for acknowledgment transactions from them
  - The directory entry is cleared, leaving only presence[i] and the dirty bit ON
  - The requestor places the block in its cache in dirty state
• If the dirty bit is ON
  - The block is first delivered from the dirty node (whose presence bit is ON) using network transactions
    - The cache in the dirty node changes its state to invalid, and then the block is supplied to the requesting processor
  - The requesting processor places the block in its cache in dirty state
  - The directory entry in the home node is updated so that only presence[i] and the dirty bit are ON (the old dirty node's presence bit is cleared)
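A similar hypothetical sketch for the write miss. It assumes the same kind of simplified model (a set of sharers, `[state, words]` cache lines), with blocks made of several words so that the fetched data is merged with the write; acknowledgements are implied by the loop completing rather than modeled as separate messages.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

INVALID, SHARED, DIRTY = "I", "S", "M"


@dataclass
class Entry:
    presence: Set[int] = field(default_factory=set)  # nodes whose presence bit is ON
    dirty: bool = False


@dataclass
class Machine:
    memory: Dict[int, List[int]]           # block -> words held in home memory
    directory: Dict[int, Entry]            # block -> directory entry at the home
    caches: Dict[int, Dict[int, list]] = field(default_factory=dict)  # node -> block -> [state, words]


def write_miss(m: Machine, i: int, block: int, offset: int, value: int) -> List[int]:
    """Node i writes `value` to word `offset` of `block`; return the nodes that were invalidated."""
    e = m.directory[block]
    invalidated: List[int] = []
    if not e.dirty:
        # Home has a clean copy: it sends the block and the presence vector to node i.
        words = list(m.memory[block])
        # Node i's CA invalidates every other sharer and waits for one ack from each.
        for j in sorted(e.presence - {i}):
            m.caches[j][block][0] = INVALID
            invalidated.append(j)
    else:
        # The block is fetched from the dirty node, which invalidates its own copy.
        (owner,) = e.presence
        line = m.caches[owner][block]
        words = list(line[1])
        line[0] = INVALID
        invalidated.append(owner)
    # Only presence[i] and the dirty bit remain ON; home memory is now stale.
    e.presence = {i}
    e.dirty = True
    words[offset] = value
    m.caches.setdefault(i, {})[block] = [DIRTY, words]
    return invalidated


m = Machine(memory={0: [1, 2]}, directory={0: Entry({1, 2, 3})},
            caches={n: {0: [SHARED, [1, 2]]} for n in (1, 2, 3)})
print(write_miss(m, 1, 0, 1, 7))   # prints: [2, 3]  (node 1 upgrades; 2 and 3 invalidated)
print(write_miss(m, 2, 0, 0, 9))   # prints: [1]     (dirty copy fetched from node 1)
print(m.caches[2][0])              # prints: ['M', [9, 7]]
```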


Block Replacement Issues in Cache

• Replacement of a dirty block in node i
  - The dirty data being replaced is written back to main memory in the home node
  - The directory is updated to turn off the dirty bit and presence[i]
• Replacement of a shared block in node i
  - A message may or may not be sent to the directory in the home node to turn off the corresponding presence bit
    - If the message was sent, an invalidation is not sent to this node the next time the block is written
    - This message is called a replacement hint (whether it is sent or not does not affect the correctness of the protocol or the execution)
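The two replacement cases can be sketched as follows, again with a hypothetical, simplified model (`Machine`, `Entry`, `evict`, and `invalidation_targets` are illustrative names). The sketch shows why the replacement hint is optional: without it, the presence bit simply stays ON and a later write sends one unnecessary invalidation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

SHARED, DIRTY = "S", "M"


@dataclass
class Entry:
    presence: Set[int] = field(default_factory=set)  # nodes whose presence bit is ON
    dirty: bool = False


@dataclass
class Machine:
    memory: Dict[int, int]
    directory: Dict[int, Entry]
    caches: Dict[int, Dict[int, list]] = field(default_factory=dict)  # node -> block -> [state, value]


def evict(m: Machine, i: int, block: int, send_hint: bool) -> None:
    """Node i replaces `block` from its cache."""
    state, value = m.caches[i].pop(block)
    e = m.directory[block]
    if state == DIRTY:
        m.memory[block] = value           # write back to home memory
        e.dirty = False                   # turn off the dirty bit ...
        e.presence.discard(i)             # ... and presence[i]
    elif state == SHARED and send_hint:
        e.presence.discard(i)             # replacement hint: presence[i] OFF
    # Shared block without a hint: presence[i] stays ON, so a later write sends node i a
    # harmless extra invalidation (it no longer holds the block, but still acknowledges).


def invalidation_targets(m: Machine, writer: int, block: int) -> List[int]:
    """Nodes a write miss by `writer` would have to invalidate."""
    return sorted(m.directory[block].presence - {writer})


m = Machine(memory={0: 1}, directory={0: Entry({1, 2})},
            caches={1: {0: [SHARED, 1]}, 2: {0: [SHARED, 1]}})
evict(m, 1, 0, send_hint=False)
evict(m, 2, 0, send_hint=True)
print(invalidation_targets(m, 3, 0))   # prints: [1]  (stale bit for node 1, none for node 2)
```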


Scalability of Directory-based Protocol

• The main goal of using directory protocols is to allow cache coherence to scale beyond the number of processors that can be sustained by a bus
  - It is important to understand the scalability of directory protocols in terms of both performance and storage overhead for directory information
• The major performance scaling issues for a protocol are how the latency and bandwidth demands scale with the number of processors used
  - The bandwidth demands are governed by the number of network transactions generated per miss (multiplied by the frequency of misses), and latency by the number of these transactions that are in the critical path of the miss
  - These quantities are affected both by the directory organization and by how well the flow of network transactions is optimized in the protocol


Reducing Latency

[Figure: two panels, Baseline and Optimized (adopted in Stanford DASH). RemRd: Remote Read request]

Race Conditions

• What if multiple requests for a block are in transit?
• Solutions
  - The directory controller can serialize transactions for a block through an additional status bit (busy or lock bit)
  - If the busy bit is set and the home node receives another request for the same block, there are 2 options:
    - Reject incoming requests for as long as the busy bit is set, that is, NACK (negative acknowledgement) the requests
    - Queue incoming requests: bandwidth is saved and request latency is kept to a minimum, but design complexity increases (queue overflow)
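A minimal sketch of home-side serialization with a busy bit, comparing the two options above. The `HomeController` class, its policy names, and the fixed `queue_capacity` are assumptions made for illustration; when the queue fills, the sketch falls back to a NACK, which is one possible answer to the queue-overflow problem rather than the only one.

```python
from collections import deque
from typing import Deque, Dict, Optional, Set


class HomeController:
    """Per-block busy bits at the home node.

    policy="nack":  reject requests to a busy block (the requestor must retry).
    policy="queue": buffer them, NACKing only when the finite queue overflows.
    """

    def __init__(self, policy: str = "nack", queue_capacity: int = 4) -> None:
        if policy not in ("nack", "queue"):
            raise ValueError(policy)
        self.policy = policy
        self.queue_capacity = queue_capacity
        self.busy: Set[int] = set()                   # blocks whose busy bit is set
        self.waiting: Dict[int, Deque[int]] = {}      # block -> queued requestors

    def request(self, block: int, requestor: int) -> str:
        if block not in self.busy:
            self.busy.add(block)                      # set busy bit, start the transaction
            return "ACCEPT"
        if self.policy == "queue":
            q = self.waiting.setdefault(block, deque())
            if len(q) < self.queue_capacity:
                q.append(requestor)
                return "QUEUED"
        return "NACK"                                 # rejected (or queue overflow)

    def complete(self, block: int) -> Optional[int]:
        """The current transaction on `block` finished; return the next requestor to serve, if any."""
        q = self.waiting.get(block)
        if q:
            return q.popleft()                        # busy bit stays set for that requestor
        self.busy.discard(block)                      # clear busy bit
        return None


nack = HomeController("nack")
print(nack.request(0, 1), nack.request(0, 2))                         # prints: ACCEPT NACK
queue = HomeController("queue", queue_capacity=1)
print(queue.request(0, 1), queue.request(0, 2), queue.request(0, 3))  # prints: ACCEPT QUEUED NACK
print(queue.complete(0), queue.complete(0))                           # prints: 2 None
```

With NACKs, every rejected requestor retries over the network; with a queue, those retries disappear, but the home must store pending requests and still needs an overflow rule.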


Issue with Turning Off Busy Bit

• When should the busy bit be turned off?
  - Turn off the busy bit before the home responds to the requestor (local node)?
• Race condition example
  - In (c), what if a RemRd arrives at the local node before the UpgrAck reaches it?
• This race condition occurs if subtransactions follow physically different paths
• One solution
  - Send a NACK to the RemRd request

[Figure: busy-bit race condition example. RemRd: Remote Read request]

Scalability of Directory-based Protocol

• Storage is affected only by how the directory information is organized
  - For the simple bit vector organization, the number of presence bits needed grows linearly with:
    - #nodes: p bits per memory block for p nodes
    - Main memory size: one bit vector per memory block
  - This can lead to a potentially large storage overhead for the directory

• Examples
  - With a 64-byte block size and 64 processors, what is the directory overhead as a fraction of non-directory storage (i.e., data)?
    - 64 bits for each 64-byte block: 64 b / 64 B = 64 / 512 = 1/8 = 12.5%
  - What if there are 256 processors with the same block size?
    - 256 bits for each 64-byte block: 256 b / 64 B = 1/2 = 50%!
  - What if there are 1024 processors?
    - 1024 bits for each 64-byte block: 1024 b / 64 B = 2 = 200%!!!
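The overhead arithmetic above as a small Python function. It counts presence bits only, as the examples do; the optional `state_bits` argument (an addition for illustration) shows how a dirty bit would add slightly more.

```python
def bit_vector_overhead(nodes: int, block_bytes: int, state_bits: int = 0) -> float:
    """Directory bits per block divided by data bits per block (full bit vector).

    state_bits defaults to 0 to match the examples, which count presence bits only.
    """
    return (nodes + state_bits) / (block_bytes * 8)


for p in (64, 256, 1024):
    print(p, f"{bit_vector_overhead(p, 64):.1%}")
# prints:
# 64 12.5%
# 256 50.0%
# 1024 200.0%
```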


Alternative Directory Organizations

• Fortunately, there are many other ways to organize directory information that improve the scalability of directory storage

• The different organizations naturally lead to different high-level protocols with different ways of addressing the protocol functions


Alternative Directory Organizations

• The 2 major classes of alternatives for finding the source of the directory information for a block:
  - Hierarchical directory schemes
  - Flat directory schemes


Hierarchical Directory Schemes

• A hierarchical scheme organizes the processors as the leaves of a logical tree (which need not be binary)
• An internal node stores the directory entries for the memory blocks local to its children
  - A directory entry essentially indicates which of its children's subtrees are caching the block, and whether any subtrees outside it (i.e., not among its children) are caching the block
  - Finding the directory entry of a block involves a traversal of the tree until the entry is found
  - Inclusion is maintained between level k and level k+1 directory nodes, where the root is at the highest level
  - In the worst case, you may have to go to the root to find the directory entry
• These organizations are not popular, since their latency and bandwidth characteristics tend to be much worse than those of flat schemes


Flat, Memory-Based Directory Schemes

• The bit vector organization is the most straightforward way to store directory information in a flat, memory-based scheme

• Performance characteristics on writes
  - The number of network transactions per invalidating write grows only with the number of actual sharers
  - Because the identities of all sharers are available at the home, invalidations can be sent in parallel
    - The number of fully serialized network transactions in the critical path is thus not proportional to the number of sharers, reducing latency

• The main disadvantage of the bit vector organization is storage overhead


Flat, Memory-Based Directory Schemes

• 2 ways to reduce the overhead
  - Increase the cache block size
  - Put multiple processors (rather than just one) in a node that is visible to the directory protocol (that is, use a 2-level protocol)
• Example: with 4-processor nodes and 128-byte cache blocks, the directory memory overhead for a 256-processor machine is 6.25%
  - 256 / 4 = 64 nodes, so 64 presence bits per 128-byte (1024-bit) block: 64 / 1024 = 6.25%
• However, these methods reduce the overhead by only a small constant factor
  - Total directory storage is still proportional to P × (P × m)
    - P is #nodes
    - m is #memory blocks in local memory
    - P × m is #total memory blocks in the machine
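The 4-processor-node example and the P × (P × m) growth can be checked with two hypothetical helper functions; the value m = 2^20 blocks per node in the usage is an arbitrary assumption.

```python
def clustered_overhead(processors: int, procs_per_node: int, block_bytes: int) -> float:
    """Full bit-vector overhead when the directory tracks nodes, not individual processors."""
    nodes = processors // procs_per_node      # one presence bit per node
    return nodes / (block_bytes * 8)


def total_directory_bits(P: int, m: int) -> int:
    """P presence bits for each of the P * m memory blocks in the machine."""
    return P * (P * m)


print(f"{clustered_overhead(256, 4, 128):.2%}")                                  # prints: 6.25%
print(total_directory_bits(128, 1 << 20) // total_directory_bits(64, 1 << 20))  # prints: 4
```

Doubling the node count (with local memory per node fixed) quadruples the total directory storage, which is why clustering and larger blocks only buy a constant factor.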


Reducing Directory Overhead

• Reducing the directory width
  - Directory width is reduced by using limited pointer directories
    - Most of the time, only a few caches have a copy of a block when the block is written
    - Limited pointer schemes maintain a fixed number of pointers, each pointing to a node that currently caches a copy of the block
  - Each pointer takes log2 P bits of storage for P nodes
    - For example, for a machine with 1024 nodes, each pointer needs 10 bits, so even having 100 pointers (1000 bits) uses less storage than a full bit vector (1024 bits)
  - These schemes need some kind of backup or overflow strategy for the situation where more than i readable copies are cached but the directory can keep track of only i copies precisely
• Reducing the directory height
  - Directory height can be reduced by organizing the directory itself as a cache
    - Only a small fraction of the memory blocks will actually be present in caches at any given time, so most of the directory entries would be unused anyway
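A sketch of both ideas: the first two functions reproduce the pointer-width arithmetic above, and `SparseDirectory` is a hypothetical directory organized as a small LRU cache of entries. Two assumptions are built in: entries are replaced in LRU order, and evicting an entry requires invalidating the copies it tracked, since nothing else records where they are.

```python
from collections import OrderedDict
from typing import Set


def pointer_bits(P: int) -> int:
    """Bits per pointer: ceil(log2 P) for P >= 2 nodes."""
    return (P - 1).bit_length()


def limited_pointer_bits(P: int, i: int) -> int:
    """Width of an entry holding i pointers (state bits ignored)."""
    return i * pointer_bits(P)


print(pointer_bits(1024), limited_pointer_bits(1024, 100), 1024)  # prints: 10 1000 1024


class SparseDirectory:
    """Hypothetical directory cache holding at most `capacity` entries (LRU replacement)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: "OrderedDict[int, Set[int]]" = OrderedDict()  # block -> sharer nodes

    def add_sharer(self, block: int, node: int) -> Set[int]:
        """Record `node` as a sharer of `block`; return nodes to invalidate due to an eviction."""
        victims: Set[int] = set()
        if block in self.entries:
            self.entries.move_to_end(block)             # mark entry most recently used
        else:
            if len(self.entries) == self.capacity:
                _, victims = self.entries.popitem(last=False)  # evict least recently used
            self.entries[block] = set()
        self.entries[block].add(node)
        return victims


d = SparseDirectory(capacity=2)
d.add_sharer(0, 1)
d.add_sharer(1, 2)
print(d.add_sharer(2, 3))   # prints: {1}  (block 0's entry evicted; node 1 must be invalidated)
```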


Limited Pointer Directories

• Assume that directory can track i copies In other words, directory can keep i number of pointers

• Pointer overflow handling options If there are more than i sharers, broadcast from i+1th request Victimize (Invalidate) one pointer and use it for the new node Let software handle overflow (used by MIT Alewife)

• When hardware pointers are exhausted, a trap to a software handler is taken

• Handler allocates a new pointer in regular (non-directory) memory• Software handler is needed

When the number of nodes exceeds the number of hardware pointers available

When a block is modified and some pointers have been allocated in memory
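A hypothetical Python model of a limited-pointer entry with the three overflow options listed above. The "software" policy only mimics the idea (extra pointers kept in an ordinary list, a counter for traps); it is not Alewife's actual handler, and "oldest pointer first" for victimization is an assumed choice.

```python
from typing import List


class LimitedPointerEntry:
    """Directory entry with i hardware pointers and a selectable overflow policy."""

    def __init__(self, i: int, policy: str) -> None:
        if policy not in ("broadcast", "victimize", "software"):
            raise ValueError(policy)
        self.i, self.policy = i, policy
        self.pointers: List[int] = []      # hardware pointers
        self.overflow: List[int] = []      # "software" pointers kept in regular memory
        self.broadcast = False             # set on overflow under the broadcast policy
        self.traps = 0                     # software handler invocations

    def add_sharer(self, node: int) -> List[int]:
        """Record a new reader; return nodes that must be invalidated right away."""
        if node in self.pointers or node in self.overflow or self.broadcast:
            return []
        if len(self.pointers) < self.i:
            self.pointers.append(node)
        elif self.policy == "broadcast":
            self.broadcast = True          # sharer identities are no longer tracked precisely
        elif self.policy == "victimize":
            victim = self.pointers.pop(0)  # oldest pointer is reused
            self.pointers.append(node)
            return [victim]
        else:
            self.traps += 1                # trap: handler stores the pointer in memory
            self.overflow.append(node)
        return []

    def write_targets(self, writer: int, all_nodes: List[int]) -> List[int]:
        """Nodes to invalidate when `writer` writes the block."""
        if self.broadcast:
            return [n for n in all_nodes if n != writer]
        if self.overflow:
            self.traps += 1                # handler must also walk the pointers in memory
        return [n for n in self.pointers + self.overflow if n != writer]


nodes = list(range(8))
for policy in ("broadcast", "victimize", "software"):
    e = LimitedPointerEntry(i=2, policy=policy)
    evicted = [v for n in (1, 2, 3) for v in e.add_sharer(n)]
    targets = e.write_targets(writer=0, all_nodes=nodes)
    print(policy, evicted, targets, e.traps)
# prints:
# broadcast [] [1, 2, 3, 4, 5, 6, 7] 0
# victimize [1] [2, 3] 0
# software [] [1, 2, 3] 2
```

Broadcast keeps hardware simple but over-invalidates, victimization can evict data that is still in use, and software handling is precise but slow on the overflow path.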


Flat, Cache-Based Directory Schemes

• There is still a home main memory for the block
  - However, the directory entry at the home node does not contain the identities of all sharers, but only a pointer to the first sharer in the list plus a few state bits
    - This pointer is called the head pointer for the block
  - The remaining nodes caching that block are joined together in a distributed, doubly-linked list
• A cache that contains a copy of the block also contains pointers to the next and previous caches that have a copy
  - They are called the forward and backward pointers


A Read Miss Handling

• The requesting node sends a network transaction to the home memory to find out the identity of the head node

• If the head pointer is null (no sharers), the home replies with data

• If the head pointer is not null, the requestor must be added to the list of sharers
  - The home responds to the requestor with the head pointer
  - The requestor sends a message to the head node, asking to be inserted at the head of the list and hence to become the new head node
    - The head pointer at home now points to the requestor
    - The forward pointer of the requestor's cache entry points to the old head node
    - The backward pointer of the old head node points to the requestor
  - The data for the block is provided by the home if it has the latest copy, or otherwise by the head node, which always has the latest copy
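The list insertion on a read miss can be sketched for a single block as follows. `Link`, `SharingList`, and `home_valid` are hypothetical names; the messages between home, requestor, and old head are collapsed into direct updates, and real protocols such as SCI add many transient states that this sketch omits.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Link:
    fwd: Optional[int] = None     # next sharer (toward the tail)
    bwd: Optional[int] = None     # previous sharer (toward the head); None at the head


@dataclass
class SharingList:
    """Cache-based directory state for ONE block: home head pointer plus per-cache links."""
    head: Optional[int] = None
    home_valid: bool = True       # does home memory hold the latest copy?
    links: Dict[int, Link] = field(default_factory=dict)   # node -> its cache entry's pointers

    def read_miss(self, requestor: int) -> str:
        """Insert `requestor` at the head of the list; return who supplies the data."""
        old_head = self.head                        # home replies with the head pointer
        self.links[requestor] = Link(fwd=old_head)  # requestor's forward -> old head
        self.head = requestor                       # home head pointer -> requestor
        if old_head is not None:
            self.links[old_head].bwd = requestor    # old head's backward -> requestor
        if old_head is None or self.home_valid:
            return "home"
        return f"node {old_head}"                   # the old head has the latest copy

    def sharers(self) -> List[int]:
        """Walk the list from the head via forward pointers."""
        out, node = [], self.head
        while node is not None:
            out.append(node)
            node = self.links[node].fwd
        return out


s = SharingList()
for n in (5, 7, 2):
    s.read_miss(n)
print(s.sharers(), s.links[7])   # prints: [2, 7, 5] Link(fwd=5, bwd=2)
```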


A Write Miss Handling

• The writer obtains the identity of the head node from the home

• It then inserts itself into the list as the head node
  - If the writer was already in the list as a sharer and is now performing an upgrade, it is deleted from its current position in the list and inserted as the new head
• The rest of the distributed linked list is traversed node by node via network transactions to find and invalidate the cached blocks
  - If a block that is written is shared by three nodes A, B, and C, the home only knows about A, so the writer sends an invalidation message to it; the identity of the next sharer B can only be known once A is reached, and so on
  - Acknowledgements for these invalidations are sent to the writer

• If the data for the block is needed by the writer, it is provided by either the home or the head node as appropriate
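A self-contained sketch of the write miss on a cache-based list for one block. It removes an upgrading writer from its current position, walks the remaining list one sharer at a time, and leaves the writer as the sole head. The names are hypothetical, and each loop iteration stands for one serialized invalidation/acknowledgement round trip, so the length of the returned list is the number of serialized rounds.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Link:
    fwd: Optional[int] = None
    bwd: Optional[int] = None


@dataclass
class SharingList:
    head: Optional[int] = None
    links: Dict[int, Link] = field(default_factory=dict)   # node -> its cache entry's pointers

    @classmethod
    def from_order(cls, nodes: List[int]) -> "SharingList":
        """Build a list whose head is nodes[0]."""
        s = cls(head=nodes[0] if nodes else None)
        for k, n in enumerate(nodes):
            s.links[n] = Link(fwd=nodes[k + 1] if k + 1 < len(nodes) else None,
                              bwd=nodes[k - 1] if k > 0 else None)
        return s

    def unlink(self, node: int) -> None:
        """Remove `node` from its current position (used for an upgrade)."""
        link = self.links.pop(node)
        if link.bwd is None:
            self.head = link.fwd
        else:
            self.links[link.bwd].fwd = link.fwd
        if link.fwd is not None:
            self.links[link.fwd].bwd = link.bwd

    def write_miss(self, writer: int) -> List[int]:
        """Make `writer` the head and sole sharer; return invalidated nodes in traversal order."""
        if writer in self.links:
            self.unlink(writer)
        invalidated: List[int] = []
        node = self.head
        while node is not None:
            # The next sharer's identity is learned only when this one is reached.
            invalidated.append(node)
            node = self.links.pop(node).fwd
        self.head = writer
        self.links = {writer: Link()}
        return invalidated


s = SharingList.from_order([10, 11, 12])      # A=10 (head), B=11, C=12
print(s.write_miss(99), s.head)               # prints: [10, 11, 12] 99
u = SharingList.from_order([10, 11, 12])
print(u.write_miss(11))                       # prints: [10, 12]  (upgrade by B)
```

With a full bit vector the same three invalidations could be sent in parallel from the requestor; here the three round trips happen one after another.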


Pros and Cons of Flat, Cache-based Schemes

• Cons
  - Latency: the invalidation messages of a write are serialized (one sharer after another), so their number lies in the critical path
• Pros
  - The directory overhead is small
  - The linked list records the order in which accesses were made to memory for the block, thus making it easier to provide fairness in a protocol
  - Sending invalidations is not centralized at the home but rather distributed among sharers
    - Thus reducing the bandwidth demands placed on a particularly busy home CA


IEEE 1596-1992 SCI

• Scalable Coherent Interface (SCI)
  - Manipulating insertion into and deletion from distributed linked lists can lead to complex protocol implementations
  - These complexity issues have been greatly alleviated by the formalization and publication of a standard for a cache-based directory organization and protocol: IEEE 1596-1992 Scalable Coherent Interface (SCI)
  - Several commercial machines use this protocol
    - Sequent NUMA-Q, 1996
    - Convex Exemplar, Convex Computer Corp., 1993
    - Data General, 1996


Correctness Issues

• Read Section 8.4 in Culler's book
  - Serialization to a location for coherence
  - Serialization across locations for sequential consistency
  - Deadlock, livelock, and starvation


Example: Write Atomicity


• A common solution for write atomicity in an invalidation-based scheme is for the current owner of a block (the main memory or the processor holding the dirty copy in its cache) to provide the appearance of atomicity by not allowing access to the new value by any process until all invalidation acknowledgements for the write have returned
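A minimal owner-side sketch of this idea, assuming the owner answers early reads with a NACK (so the requestor retries) rather than buffering them. The class name and the NACK choice are hypothetical.

```python
from typing import Set, Union


class OwnerWithPendingWrite:
    """Owner-side sketch: the new value stays invisible until every invalidation is acked."""

    def __init__(self, new_value: int, sharers: Set[int]) -> None:
        self.new_value = new_value
        self.pending_acks = set(sharers)       # sharers that may still hold the old value

    def receive_ack(self, node: int) -> None:
        self.pending_acks.discard(node)

    def serve_read(self) -> Union[int, str]:
        """Reply to a read from any other node: NACK (retry later) until all acks are in."""
        if self.pending_acks:
            return "NACK"
        return self.new_value


owner = OwnerWithPendingWrite(new_value=1, sharers={2, 3})
print(owner.serve_read())       # prints: NACK
owner.receive_ack(2)
print(owner.serve_read())       # prints: NACK
owner.receive_ack(3)
print(owner.serve_read())       # prints: 1
```

Because no node can observe the new value while any sharer might still read the old one, all processors see the write as happening at a single point in time.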