Hyperspectral Unmixing Via Sparse Regression Optimization ... · the multiple signal classification...

-

José M. Bioucas-Dias

Instituto de Telecomunicações

Instituto Superior Técnico

Technical University of Lisbon

Portugal

Hyperspectral Unmixing Via Sparse Regression

Optimization Problems and Algorithms

MAHI, Nice 2012

Joint work with Mário A. T. Figueiredo

-

Outline

Introduction to hyperspectral unmixing

Hyperspectral unmixing via sparse regression

Recovery guarantees; convex and nonconvex solvers

Improving hyperspectral sparse regression: structured sparsity, dictionary pruning

Solving convex sparse hyperspectral unmixing and related convex inverse problems with ADMM

Concluding remarks

-

Hyperspectral imaging (and mixing)

-

Linear mixing model (LMM)

Incident radiation interacts with only one component (checkerboard-type scenes)

Hyperspectral linear

unmixing Estimate

-

Hyperspectral unmixing

Alunite Kaolinite Kaolinite #2

VCA [Nascimento, B-D, 2005]

AVIRIS of Cuprite,

Nevada, USA

R – ch. 183 (2.10 μm)

G – ch. 193 (2.20 μm)

B – ch. 207 (2.34 μm)

-

NIR tablet imaging

[Lopes et al., 2010]

-

Spectral linear unmixing (SLU)

Subject to the LMM:

ANC: abundance nonnegativity constraint

ASC: abundance sum-to-one constraint

SLU is a blind source separation (BSS) problem

Given N spectral vectors of dimension m:

Determine:

The mixing matrix M (endmember spectra)

The fractional abundance vectors
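A minimal sketch of data generated under the LMM, useful for trying out the unmixing ideas on the following slides. The function name `simulate_lmm`, the random endmember matrix, and the noise level are assumptions made for illustration; they are not taken from the talk.

```python
import numpy as np

def simulate_lmm(M, n_pixels, noise_std=0.0, seed=0):
    """Draw abundances on the probability simplex and mix them linearly."""
    rng = np.random.default_rng(seed)
    m, p = M.shape
    # Dirichlet(1, ..., 1) is uniform over the simplex, so ANC and ASC hold by construction.
    alpha = rng.dirichlet(np.ones(p), size=n_pixels).T   # p x N abundance vectors
    noise = noise_std * rng.standard_normal((m, n_pixels))
    Y = M @ alpha + noise                                  # m x N spectral vectors
    return Y, alpha

# Hypothetical mixing matrix: 50 bands, 3 endmembers with nonnegative spectra.
M = np.abs(np.random.default_rng(1).standard_normal((50, 3)))
Y, alpha = simulate_lmm(M, n_pixels=200, noise_std=0.01)
```

Each column of `Y` is one observed spectral vector; the blind problem is to recover both `M` and `alpha` from `Y` alone.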

-

Geometrical view of SLU

probability simplex ( )

(p-1)-simplex

Inferring M is equivalent to inferring the vertices of the simplex

-

Minimum volume simplex (MVS)

Pure pixels: MVS works

No pure pixels: MVS works

No pure pixels: MVS does not work

[B-D et al., IEEE JSTARS, 2012]

-

Sparse regression-based SLU

Unmixing: given y and A, find the sparsest solution of y = Ax

Advantage: sidesteps endmember estimation

Disadvantage: Combinatorial problem !!!

Key observation: Spectral vectors can be expressed as linear

combinations of a few pure spectral signatures obtained from a

(potentially very large) spectral library

[Iordache, B-D, Plaza, 11, 12]

-

Sparse reconstruction/compressive sensing

Key result: a sparse signal is exactly recoverable from an

underdetermined linear system of equations in a computationally

efficient manner via convex/nonconvex programming

[Candes, Romberg, Tao, 06], [Candes,Tao, 06], [Donoho, Tao, 06], [Blumensath, Davies, 09]

is the unique solution of if

is the solution of the optimization problem

NP-hard
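The formulas on this slide did not survive extraction. With y the observed spectrum, A the library, and x the abundance vector, the NP-hard problem is usually stated as below. This is the standard textbook form, assumed here rather than copied from the slide.

```latex
(P_0):\quad \min_{x}\ \|x\|_0 \quad \text{subject to} \quad Ax = y,
\qquad \|x\|_0 = \#\{\, i : x_i \neq 0 \,\}.
```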

-

Sparse reconstruction/compressive sensing

Optimization strategies to cope with P0 NP-hardness

Convex relaxation

(BP – Basis Pursuit) [Chen et al., 2001]

(BPDN – BP denoising)

(LASSO) [Tibshirani, 1996]

Approximation algorithms

Bayesian CS [Ji et al., 2008]

CoSaMP – Compressive Sampling Matching Pursuit [Needell, Tropp, 2009]

IHT – Iterative Hard Thresholding [Blumensath, Davies, 09]

GDS – Gradient Descent Sparsification [Garg, Khandekar, 2009]

HTP – Hard Thresholding Pursuit [Foucart, 10]

MP – Message Passing [Vila, Schniter, 2012]
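Of the approximation algorithms named on this slide, IHT is the shortest to write down: a gradient step on the data-fit term followed by keeping the k largest entries. The sketch below is a plain fixed-step variant; the step size, iteration count, and the Gaussian test problem are assumptions for illustration, not settings from the talk.

```python
import numpy as np

def iht(A, y, k, n_iter=200):
    """Iterative hard thresholding: gradient step, then keep the k largest entries."""
    n = A.shape[1]
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # conservative step size (assumption)
    x = np.zeros(n)
    for _ in range(n_iter):
        z = x + step * A.T @ (y - A @ x)      # gradient step on 0.5*||y - Ax||^2
        keep = np.argsort(np.abs(z))[-k:]     # indices of the k largest magnitudes
        x = np.zeros(n)
        x[keep] = z[keep]                     # hard threshold to a k-sparse vector
    return x

rng = np.random.default_rng(0)
m, n, k = 100, 200, 5
A = rng.standard_normal((m, n)) / np.sqrt(m)  # Gaussian sensing matrix
x_true = np.zeros(n)
x_true[rng.choice(n, k, replace=False)] = rng.standard_normal(k)
y = A @ x_true
x_hat = iht(A, y, k)
```

With this step size the residual norm never increases from one iteration to the next, which is why it is a safe default; faster variants normalize the step adaptively.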

-

Exact recovery of sparse vectors

Many SR algorithms ensure exact recovery provided that:

This condition is satisfied for random matrices provided that

Algorithm   BP      HTP   CoSaMP   GDS   IHT
Ratio       9.243   9     27.08    18    12

(from [Foucart, 10])

Recovery guarantees: linked with the restricted isometry property (RIP)

Restricted isometry constant:

-

Example: Gaussian matrices; signed signals

[Figure: signal-to-reconstruction error, SRE (dB), versus ||x||0 (number of active columns of A); BPDN and EM-GM-AMP curves at SNR = 25 dB and SNR = 100 dB]

Algorithms: BPDN (SUnSAL) [B-D, Figueiredo, 2010]; EM-BG-AMP [Vila, Schniter, 2012]

-

Example: Gaussian matrices; non-negative signals

[Figure: signal-to-reconstruction error, SRE (dB), versus ||x||0 (number of active columns of A); BPDN and EM-GM-AMP curves at SNR = 25 dB and SNR = 100 dB]

Algorithms: BPDN (SUnSAL); EM-BG-AMP

-

Hyperspectral libraries

Hyperspectral libraries exhibit poor RI constants

(mutual coherence close to 1) [Iordache, B-D, Plaza, 11, 12]

Illustration:

[Figure: library signatures, normalized and mean removed]

[Figure: BP guarantee for p = 10]
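The mutual coherence mentioned on this slide is the largest absolute normalized inner product between two distinct columns of A. A minimal sketch follows; the smooth sinusoidal "library" is a hypothetical stand-in for real spectral signatures (it is not USGS data) and only illustrates how strongly correlated columns push the coherence towards 1.

```python
import numpy as np

def mutual_coherence(A):
    """Largest absolute normalized inner product between distinct columns of A."""
    An = A / np.linalg.norm(A, axis=0, keepdims=True)  # unit-norm columns
    G = np.abs(An.T @ An)                              # absolute Gram matrix
    np.fill_diagonal(G, 0.0)                           # ignore self-products
    return G.max()

rng = np.random.default_rng(0)
gauss = rng.standard_normal((200, 300))                # Gaussian reference matrix
# Hypothetical stand-in for a library: smooth, strongly correlated signatures.
t = np.linspace(0.0, 1.0, 200)[:, None]
freqs = np.linspace(0.5, 1.5, 300)[None, :]
smooth = 1.0 + 0.1 * np.sin(2 * np.pi * t * freqs)
mu_gauss = mutual_coherence(gauss)
mu_smooth = mutual_coherence(smooth)
```

Comparing `mu_gauss` with `mu_smooth` shows the gap between the random matrices for which recovery guarantees are usually stated and a dictionary of smooth spectra.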

-

Example: Hyperspectral library; non-negative signals

Illustration:

[Figure, two panels labelled "min 10" and "min 4": signal-to-reconstruction error, SRE (dB), versus ||x||0 (number of active columns of A, from 1 to 10); BPDN and EM-GM-AMP curves at 100 dB and 30 dB]

Algorithms: BPDN (SUnSAL); EM-BG-AMP

-

Phase transition curves

[Figure: phase transition curves, undersampling factor versus fractional sparsity, for Gaussian matrices and the USGS library; BPDN and EM-BG-AMP; SNR = ∞ and SNR = 30 dB]

(enough for many hyperspectral applications)

Results linked with the k-neighborliness property of polytopes [Donoho, Tanner, 2005]

-

Real data – AVIRIS Cuprite

[Iordache, B-D, Plaza, 11, 12]

-

Sparse reconstruction of hyperspectral data: Summary

Bad news: Hyperspectral libraries have poor RI constants

Surprising fact: Convex programs (BP, BPDN, LASSO, …) yield much better empirical performance than non-convex state-of-the-art competitors

Good news: Hyperspectral mixtures are highly sparse, very often p ≤ 5

Directions to improve hyperspectral sparse reconstruction

Structured sparsity + subspace structure

(pixels in a given data set share the same support)

Spatial contextual information

(pixels belong to an image)

-

Beyond l1 pixelwise regularization

Rationale: introduce new sparsity-inducing regularizers to

counter the sparse regression limits imposed by the high

coherence of the hyperspectral libraries.

Let’s rewrite the LMM as

image band

regression coefficients

of pixel j

-

Constrained total variation sparse regression (CTVSR)

[Iordache, B-D, Plaza, 11]

total variation of the i-th band

Related work: [Zhao, Wang, Huang, Ng, Plemmons, 12]
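The optimization problem on this slide was lost in extraction. A sketch of the form such a problem usually takes, assuming the matrix notation Y ≈ AX introduced on the previous slide, with x^i the i-th row of X (one abundance band) and hypothetical regularization weights λ and λ_TV:

```latex
\min_{X \ge 0}\ \tfrac{1}{2}\,\|AX - Y\|_F^2
  \;+\; \lambda\,\|X\|_{1,1}
  \;+\; \lambda_{TV} \sum_{i} \mathrm{TV}\!\left(x^{i}\right),
\qquad \|X\|_{1,1} = \sum_{i,j} |x_{ij}|.
```

The first regularizer promotes sparsity pixel by pixel; the second penalizes differences between neighbouring pixels within each abundance band. The exact weighting and the TV definition (isotropic or anisotropic) should be taken from the cited paper.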

-

Illustrative examples with simulated data: SUnSAL-TV

Original data cube

Original abundance of EM5

SUnSAL estimate

SUnSAL-TV estimate

-

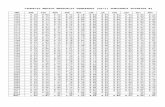

multiple measurements share the same support

[Turlach, Venables, Wright, 2004] [Iordache, B-D, Plaza, 11, 12]

Constrained collaborative sparse regression (CCSR)

Theoretical guarantees (superiority of multichannel): the probability of recovery failure decays exponentially in the number of channels. [Eldar, Rauhut, 11]
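Collaborative sparsity is commonly imposed with a mixed ℓ2,1 norm, the sum of the Euclidean norms of the rows of X, so that whole rows (library members) are switched off across all pixels at once. Assuming that regularizer, its proximity operator is a row-wise vector soft threshold. The function name `prox_l21` and the toy matrix are illustrative.

```python
import numpy as np

def prox_l21(X, tau):
    """Row-wise vector soft threshold: prox of tau * sum_i ||X[i, :]||_2."""
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    # Shrink each row towards zero; rows with norm below tau vanish entirely.
    scale = np.maximum(1.0 - tau / np.maximum(norms, 1e-12), 0.0)
    return scale * X

X = np.array([[3.0, 4.0],    # row norm 5: shrunk but kept
              [0.1, 0.0],    # row norm 0.1: zeroed out
              [0.0, 0.0]])   # already a null row
Z = prox_l21(X, tau=1.0)
```

Because the threshold acts on row norms rather than on single entries, a library member is either active for all pixels or removed for all of them, which is the shared-support behaviour described on the slide.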

-

Illustrative examples with simulated data: CSUnSAL

[Figure, three panels: original x; without collaborative sparseness; with collaborative sparseness]

-

Multiple measurements

The multiple measurement vector (MMV) problem

- number of non-null rows of

MMV has a unique solution iff

[Feng, 1997], [Chen, Huo, 2006], [Davies, Eldar, 2012]

MMV gain

If , the above bound is achieved using the multiple signal classification (MUSIC) algorithm

-

Endmember identification with MUSIC

(noiseless measurements) Y=AX

MUSIC algorithm

1) Compute , the first p eigenvectors of

2) Compute and set

with
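The two MUSIC steps can be sketched as follows for the noiseless case Y = AX. The formulas on the slide were lost, so this is one standard reading of MUSIC rather than the author's exact procedure: estimate the signal subspace from the sample correlation matrix of Y, then keep the library columns with the smallest projection error onto its orthogonal complement. The function name and the random library are assumptions.

```python
import numpy as np

def music_select(A, Y, p):
    """Rank library columns by their distance to the estimated signal subspace."""
    R = Y @ Y.T / Y.shape[1]                    # sample correlation matrix
    eigvals, eigvecs = np.linalg.eigh(R)        # eigenvalues in ascending order
    E = eigvecs[:, -p:]                         # p dominant eigenvectors
    An = A / np.linalg.norm(A, axis=0)          # unit-norm library columns
    resid = An - E @ (E.T @ An)                 # component outside the subspace
    errors = np.linalg.norm(resid, axis=0)      # normalized projection errors
    detected = np.sort(np.argsort(errors)[:p])  # indices of the p smallest errors
    return detected, errors

rng = np.random.default_rng(0)
m, n, p, N = 50, 100, 5, 500
A = rng.standard_normal((m, n))                 # hypothetical library
support = np.sort(rng.choice(n, p, replace=False))
X = np.zeros((n, N))
X[support] = rng.dirichlet(np.ones(p), size=N).T  # abundances uniform over the simplex
Y = A @ X                                       # noiseless measurements
detected, errors = music_select(A, Y, p)
```

In this noiseless setting the active columns have projection errors at machine precision while the others do not, which is the gap shown in the plots on the next slide.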

-

Examples (simulated data)

A – USGS (≥ 3°), p = 10, N = 5000, X uniform over the simplex

[Figure, two panels: SNR = ∞, and Gaussian iid noise with SNR = 25 dB; in both, a gap separates the detected endmembers from the remaining library members]

-

Examples (simulated data)

[Figure: colored noise, SNR = 25 dB]

cause of the large projection errors: poor identification of the signal subspace

cure: identify the signal subspace

-

Signal subspace identification

[Figure: colored noise, SNR = 25 dB (incorrect signal subspace)]

[Figure: colored noise, SNR = 25 dB (signal subspace identified with HySime, [BD, Nascimento, 2008])]

-

Proposed MUSIC – Collaborative SR algorithm

MUSIC-CSR algorithm [B-D, 2012]

1) Estimate the signal subspace using, e.g., the HySime algorithm.

2) Compute and define the index set

3) Solve the collaborative sparse regression optimization [B-D, Figueiredo, 2012]

Related work: CS-MUSIC [Kim, Lee, Ye, 2012] (N < k and iid noise)

-

MUSIC – CSR results

A – USGS (≥ 3°), Gaussian shaped noise, SNR = 25 dB, k = 5, m = 300,

[Figure: accumulated abundances, with the true endmembers marked]

MUSIC-CSR: SNR = 11.7 dB, computation time ≃ 10 sec

CSR: SNR = 0 dB, computation time ≃ 600 sec

-

Results with CUPRITE

• size: 350x350 pixels

• spectral library: 302 materials (minerals) from the USGS library

• spectral bands: 188 out of 224 (noisy bands were removed)

• spectral range: 0.4 – 2.5 μm

• spectral resolution: 10 nm

• “validation” map: Tetracorder***

-

Results with real data

Note: Good spatial distribution of the endmembers

Processing times: 2.6 ms/pixel using the full library; 0.22 ms/pixel using the pruned library with 40 members

-

Convex optimization problems in SLU

Constrained least squares (CLS)

Constrained sparse regression (CSR)

Fully constrained least squares (FCLS)

ANC ASC

-

Convex optimization problems in SLU

Constrained basis pursuit (CBP)

CBP denoising (CBPDN)

Constrained total variation (CTV)

Constrained collaborative sparse regression (CCSR)
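The problem statements on these two slides were lost in extraction. A sketch of the forms these names usually denote for a single pixel y and library A, assumed from common usage rather than recovered from the slides (λ and δ are hypothetical regularization and noise-level parameters):

```latex
\begin{aligned}
\text{CLS:}   &\quad \min_{x}\ \tfrac12\|Ax-y\|_2^2
              && \text{s.t. } x \ge 0 \ \text{(ANC)} \\
\text{FCLS:}  &\quad \min_{x}\ \tfrac12\|Ax-y\|_2^2
              && \text{s.t. } x \ge 0,\ \mathbf{1}^T x = 1 \ \text{(ANC + ASC)} \\
\text{CSR:}   &\quad \min_{x}\ \tfrac12\|Ax-y\|_2^2 + \lambda\|x\|_1
              && \text{s.t. } x \ge 0 \\
\text{CBP:}   &\quad \min_{x}\ \|x\|_1
              && \text{s.t. } Ax = y,\ x \ge 0 \\
\text{CBPDN:} &\quad \min_{x}\ \|x\|_1
              && \text{s.t. } \|Ax-y\|_2 \le \delta,\ x \ge 0
\end{aligned}
```

CTV and CCSR are the multi-pixel counterparts, adding a total variation term or a row-sparsity term on the abundance matrix X.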

-

Convex optimization problems in SLU

Structure of the optimization problems:

convex functions

convex set

Source of difficulties: large scale ( ); nonsmoothness

Line of attack: alternating direction method of multipliers (ADMM)

[Glowinski, Marrocco, 75], [Gabay, Mercier, 76]

-

Alternating Direction Method of Multipliers (ADMM)

Unconstrained (convex) optimization problem:

ADMM [Glowinski, Marrocco, 75], [Gabay, Mercier, 76]

Interpretations: variable splitting + augmented Lagrangian + NLBGS;

Douglas-Rachford splitting on the dual [Eckstein, Bertsekas, 92];

split-Bregman approach [Goldstein, Osher, 08]
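The iteration itself did not survive extraction. In the variable splitting plus augmented Lagrangian reading, and assuming the unconstrained problem has the form min f1(x) + f2(Gx) with penalty parameter μ > 0 and scaled multiplier d, ADMM is commonly written as:

```latex
\begin{aligned}
x_{k+1} &\in \arg\min_{x}\ f_1(x) + \tfrac{\mu}{2}\,\|Gx - u_k - d_k\|_2^2 \\
u_{k+1} &\in \arg\min_{u}\ f_2(u) + \tfrac{\mu}{2}\,\|Gx_{k+1} - u - d_k\|_2^2 \\
d_{k+1} &= d_k - \left(Gx_{k+1} - u_{k+1}\right)
\end{aligned}
```

The symbols u, d, and μ are notation chosen for this sketch. The two minimizations are taken one after the other rather than jointly, which is the nonlinear block Gauss-Seidel (NLBGS) step named in the interpretation above.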

-

A Cornerstone Result on ADMM [Eckstein, Bertsekas, 1992]

Consider the problem $\min_x f_1(x) + f_2(Gx)$

Let $f_1$ and $f_2$ be closed, proper, and convex, and let $G$ have full column rank.

Let $(x_k)$ be the sequence produced by ADMM with $\mu > 0$; then, if the problem has a solution, say $x^*$, the sequence $x_k$ converges to $x^*$.

The theorem also allows for inexact minimizations, as long as the errors are absolutely summable.

Convergence rate: $O(1/k)$ [He, Yuan, 2011]

-

ADMM for two or more functions [Figueiredo, B-D, 09]

Consider a more general problem

(proper, closed, convex functions; arbitrary matrices)

There are many ways to write as

We adopt:

Another approach in [Goldfarb, Ma, 09, 11]

-

Applying ADMM to more than two functions

Conditions for easy applicability:

inexpensive matrix inversion

inexpensive proximity operators

-

Constrained sparse regression (CSR)

Problem

Equivalent formulation

indicator of the first orthant

Template:

Mapping:

Proximity operators:

Matrix inversion can be precomputed (typical sizes 200~300 x 500~1000)

Spectral unmixing by split augmented Lagrangian (SUnSAL) [B-D, Figueiredo, 2010]

Related algorithm (split-Bregman view) in [Szlam, Guo, Osher, 2010]
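A compact sketch in the spirit of this slide, assuming the CSR problem is min 0.5*||Ax - y||^2 + lam*||x||_1 subject to x >= 0 and using a single splitting x = u. It is not the published SUnSAL code: the function name, the default penalty `mu`, and the iteration count are assumptions. It does show the two ingredients the slide points to, a matrix inverse computed once and closed-form proximity operators.

```python
import numpy as np

def csr_admm(A, y, lam, mu=1.0, n_iter=300):
    """ADMM sketch for min 0.5*||Ax - y||^2 + lam*||x||_1 subject to x >= 0."""
    n = A.shape[1]
    # The system matrix never changes, so its inverse is computed once.
    B = np.linalg.inv(A.T @ A + mu * np.eye(n))
    Aty = A.T @ y
    u = np.zeros(n)
    d = np.zeros(n)
    for _ in range(n_iter):
        x = B @ (Aty + mu * (u + d))            # quadratic subproblem
        u = np.maximum(x - d - lam / mu, 0.0)   # soft threshold, then projection onto x >= 0
        d = d - (x - u)                         # scaled multiplier update
    return u                                    # u is feasible by construction

# Toy check with A = I, where the solution is max(y - lam, 0) entry by entry.
y = np.array([1.0, -1.0, 0.05])
x_hat = csr_admm(np.eye(3), y, lam=0.1)
```

Returning `u` rather than `x` guarantees a nonnegative output at every iteration. For a real library, a Cholesky or eigenvalue factorization would be a more stable choice than an explicit inverse.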

-

Concluding remarks

Sparse regression framework, used with care, yields effective hyperspectral unmixing results.

Critical factors:

High mutual coherence of the hyperspectral libraries

Non-linear mixing and noise

Acquisition and calibration of hyperspectral libraries

Favorable factors:

Hyperspectral mixtures are highly sparse

ADMM is a very flexible and efficient tool for solving the hyperspectral sparse regression optimization problems

-

References

J. Bioucas-Dias, A. Plaza, N. Dobigeon, M. Parente, Q. Du, P. Gader, and J. Chanussot,

"Hyperspectral unmixing overview: geometrical, statistical, and sparse regression-based

approaches", IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing,

vol. 5, no. 2, pp. 354-379, 2012

S. Chen, D. Donoho, and M. Saunders, “Atomic decomposition by basis pursuit,” SIAM Rev., vol.

43, no. 1, pp. 129–159, 2001.

M. V. Afonso, J. Bioucas-Dias, and M. Figueiredo, “An augmented Lagrangian approach to the

constrained optimization formulation of imaging inverse problems”, IEEE Transactions on Image

Processing, vol. 20, no. 3, pp. 681-695, 2011.

J. Bioucas-Dias and M. Figueiredo, “Alternating direction algorithms for constrained sparse

regression: Application to hyperspectral unmixing”, in 2nd IEEE GRSS Workshop on

Hyperspectral Image and Signal Processing-WHISPERS'2010, Reykjavik, Iceland, 2010.

D. Gabay and B. Mercier, “A dual algorithm for the solution of nonlinear variational problems via

finite-element approximations,” Comput. Math. Appl., vol. 2, pp. 17–40, 1976.

-

M.-D. Iordache, J. Bioucas-Dias, and A. Plaza, "Sparse unmixing of hyperspectral data",

IEEE Transactions on Geoscience and Remote Sensing, vol. 49, no. 6, pp. 2014-2039, 2011.

E. Esser, “Applications of Lagrangian-based alternating direction methods and connections to split-

Bregman,” Comput. Appl. Math., Univ. California, Los Angeles, Tech. Rep. 09–31, 2009.

R. Glowinski and A. Marrocco, “Sur l’approximation, par éléments finis d’ordre un, et la

résolution, par pénalisation-dualité, d’une classe de problèmes de Dirichlet non linéaires,” Rev.

Française d’Automat. Inf. Recherche Opérationnelle, vol. 9, pp. 41–76, 1975.

T. Goldstein and S. Osher, “The split Bregman algorithm for regularized problems,” Comput.

Appl. Math., Univ. California, Los Angeles, Tech. Rep. 08–29, 2008.

J. Eckstein and D. Bertsekas, “On the Douglas-Rachford splitting method and the proximal point

algorithm for maximal monotone operators”, Math. Progr., vol. 55, pp. 293-318, 1992.

B. He and X. Yuan, “On convergence rate of the Douglas-Rachford operator splitting method”,

2011.

-

J. Nascimento and J. Bioucas-Dias, "Vertex component analysis: a fast algorithm to unmix

hyperspectral data", IEEE Transactions on Geoscience and Remote Sensing, vol. 43, no. 4, pp. 898-910,

2005.

M.-D. Iordache, J. Bioucas-Dias, and A. Plaza, "Hyperspectral unmixing with sparse group lasso",

in IEEE International Geoscience and Remote Sensing Symposium (IGARSS'11), Vancouver,

Canada, 2011.

M.-D. Iordache, J. Bioucas-Dias and A. Plaza, "Total variation spatial regularization for sparse

hyperspectral unmixing", IEEE Transactions on Geoscience and Remote Sensing, vol. PP, no. 99,

pp. 1-19, 2012.

C. Li, T. Sun, K. Kelly, and Y. Zhang, “A Compressive Sensing and Unmixing Scheme for

Hyperspectral Data Processing”, IEEE Transactions on Image Processing, 2011

G. Martin, J. Bioucas-Dias, and A. Plaza, “Hyperspectral coded aperture (HYCA): a new

technique for hyperspectral compressive sensing”, IEEE International Geoscience and

Remote Sensing Symposium (IGARSS'12), Munich, Germany, 2012

-

B. Turlach, W. Venables, and S. Wright, “Simultaneous variable selection,” Technometrics,

vol. 47, no. 3, pp. 349–363, 2005.

R. Tibshirani, “Regression shrinkage and selection via the lasso”, Journal of the Royal Statistical

Society, Series B (Methodological), vol. 58, no. 1, pp. 267-288, 1996.

X. Zhao, F. Wang, T. Huang, M. Ng and R. Plemmons, “Deblurring and Sparse Unmixing For

Hyperspectral Images”, Preprint, May 2012.

Q. Zhang, R. Plemmons, D. Kittle, D. Brady, S. Prasad, “Joint segmentation and reconstruction of

hyperspectral data with compressed measurements”, Applied Optics, 2011.

P. Sprechmann, I. Ramirez, G. Sapiro, and Y. Eldar, “Collaborative hierarchical sparse

modeling”, IEEE 4th Annual Conference on Information Sciences and Systems (CISS),

pp. 1-6, 2010.