Human Emotion Detection and Recognition from Still Images

Zareena

Student, VTU RC, Mysore

E-mail ID- [email protected]

Abstract

Extracting and understanding emotion is of high importance for interaction between humans and machine communication systems. The most expressive way humans display emotion is through facial expressions. Facial expression is an important channel of human communication and can be applied in many real-world applications. This paper presents and implements a method for detecting and recognizing facial expressions and emotion from a still image. The main objective of this paper is the real-time implementation of a facial emotion recognition system.

Keywords: Emotion Recognition, Face Recognition,

Bezier curve.

1. INTRODUCTION

Recognition and analysis of human facial expression and emotion have attracted a lot of interest in the past few decades, and they have been researched extensively in neuroscience, cognitive science, computer science and engineering [2]. This research focuses not only on improving human-computer interfaces, but also on improving the actions a computer takes in response to feedback from the user. While user feedback has traditionally been given through a keyboard or mouse, smartphones and cameras now enable a system to see and watch the user’s activities, which makes intelligent interaction easier for the user. Humans interact with each other not only through speech, but also through gestures, to emphasize a certain part of the speech and to display emotions. The emotions of the user are displayed by visual, vocal and other physiological means [3]. There are many ways to display human emotion, and the most natural one is facial expression.

This paper proposes and implements a scheme that automatically segments an input still image and recognizes facial emotion by detecting a color-based facial feature map and classifying emotion with a simple curve. The motivation of this paper is to study the effect of facial landmarks and to implement an efficient algorithm for recognizing facial emotion from still images. The paper is organized as follows: Section 2 reviews related work on emotion recognition through facial expression analysis. Section 3 presents the proposed system, which uses two main steps to recognize and classify facial emotion from a still image. Experimental results are shown in Section 4, and Section 5 summarizes the study and its future work.

2. RELATED WORKS

A number of recent papers address automatic affect analysis and recognition of human emotion [6]. Research on recognizing emotion through facial expression was pioneered by Ekman [4], who approached the problem from a psychological perspective. Cohen et al. [3] proposed an architecture of Hidden Markov Models for automatically segmenting and recognizing human facial expressions from video sequences. Koda et al. [10] investigated a method for facial expression recognition for a human speaker using thermal image processing and a speech recognition system. They improved the speech recognition system to save thermal images just before and just when speaking the phonemes of the first and last vowels, through intentional facial expressions of five emotion categories. Ioannou et al. [8] suggested the extraction of appropriate facial features and the consequent recognition of the user’s emotional state in a way that could be robust to facial expression variations among different users. They extracted facial animation parameters defined according to the ISO MPEG-4 standard, together with appropriate confidence measures of the estimation accuracy. However, most of this research recognizes emotion from videos by extracting frames. Several prototype systems have been published that can recognize deliberately produced action units in either frontal-view face images [14] or profile-view face images [13]. These systems employ different approaches, including expert rules and machine learning methods such as neural networks, and use either feature-based or appearance-based image representation. Valstar et al. [17] employ probabilistic, statistical and ensemble learning techniques, which seem to be particularly suitable for automatic action unit recognition from face image sequences.

Zareena, Int.J.Computer Technology & Applications,Vol 5 (3),1097-1101

IJCTA | May-June 2014 Available [email protected]

1097

ISSN:2229-6093

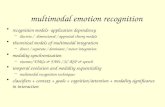

3. PROPOSED METHOD

The proposed method for recognition of facial expression and emotion is composed of two major steps: the first detects and analyzes the facial area in the original input image, and the second verifies the facial emotion from characteristic features in the region of interest. A block diagram of the proposed method is depicted in Figure 1.

Fig 1: Proposed System Flowchart.

In the first step, face detection, the proposed method locates and detects a face in a color still image based on the skin color and the regions of the eyes and mouth. The algorithm first extracts the skin color pixels by initialized spatial filtering, based on the result of the lighting compensation. Then, the method estimates the face position and the facial regions for the eyes and mouth using a feature map.

After obtaining the region of interest, we extract points of the feature map to apply a Bezier curve to the eyes and mouth.

3.1. System Architecture

There are 10 basic building blocks of the emotion recognition system, as listed below:

Live Streaming: Image acquisition.

Skin Color Segmentation: Discriminates between face and non-face parts.

Face Detection: Locates the position of the face in the given image frame using skin pixels.

Eye Detection: Identifies the position of the eyes in the image frame.

Lip Detection: Determines the lip coordinates on the face.

Longest Binary Pattern: Skin and non-skin pixels are converted to white and black pixels, respectively.

Bezier Curve Algorithm: Applies the Bezier curve equation to the facial feature points.

Emotion Detection: Emotion is detected by pattern matching using values from the database.

Database: Stores values derived from the Bezier curve equation.

Output Display: Renders the output of the detection phase on screen.

Fig 2: System Architecture.

3.1.1. Live Streaming

Live streaming is the fundamental step of image acquisition in real time, where image frames are received using streaming media. In this stage, the application receives images from a built-in webcam or an external video camera device. The streaming continues until an input image frame is acquired.

3.1.2. Skin Color Segmentation

Skin color segmentation discriminates between skin and non-skin pixels. It permits face detection to focus on the reduced skin areas rather than the entire image frame. Skin segmentation has proved to be instrumental because regions containing skin can be located quickly by exploring pixels that have skin color. Before performing skin color segmentation, we first enhance the contrast of the image, which involves separating the brightest and darkest image areas. Next, the largest connected region is found to identify potential candidate faces. Then the probability that this largest connected area is a face is determined and is used in subsequent steps for emotion detection.
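The segmentation and largest-region steps described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: the paper does not state its skin test, so `is_skin` uses a commonly cited RGB rule of thumb as an assumed stand-in, and the image layout (a list of rows of `(r, g, b)` tuples), the 4-connectivity and all function names are likewise assumptions.

```python
from collections import deque

def is_skin(r, g, b):
    """Rule-of-thumb RGB skin test (assumed stand-in, not the paper's filter)."""
    return (r > 95 and g > 40 and b > 20
            and max(r, g, b) - min(r, g, b) > 15
            and abs(r - g) > 15 and r > g and r > b)

def skin_mask(image):
    """image: list of rows of (r, g, b) tuples -> mask of 1 (skin) / 0 (non-skin)."""
    return [[1 if is_skin(*px) else 0 for px in row] for row in image]

def largest_region(mask):
    """Return the set of (row, col) cells of the largest 4-connected region of 1s."""
    h = len(mask)
    w = len(mask[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    best = set()
    for y in range(h):
        for x in range(w):
            if mask[y][x] != 1 or seen[y][x]:
                continue
            # Breadth-first flood fill from an unvisited skin pixel.
            region, queue = set(), deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                region.add((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) > len(best):
                best = region
    return best
```

Calling `largest_region(skin_mask(image))` yields the candidate face area; deciding how likely that area is to be a face is left out because the paper gives no formula for it.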

[Fig 1 block labels: Step 1 (Detection); Step 2 (Recognition); Colour Space Conversion; Min-Max Normalization; Morphology-Based Operation; Colour-based Feature Map Detection; Classification of Facial Emotion; Computing Hausdorff Distance; Apply Bezier Curve; Big Connected Operation]

[Fig 2 block labels: Live Streaming; Input Image Frame; Bezier Curve Algorithm; Eye Detection; Lip Detection; Skin Colour Segmentation; Face Detection; Longest Binary Pattern; Output Display; Emotion Detection; Database]

3.1.3. Face Detection

Face detection can be considered a specific case of object-class detection, which finds the size and location of objects of a given class within an input image. In this step, the face is detected by identifying facial features while ignoring all non-face elements. To perform face detection, the image is first converted into binary form and scanned for the forehead. The method then searches for the maximum width of continuous white pixels until it reaches the eyebrows. Finally, the face is cropped so that its height is 1.5 times its width.
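A rough sketch of the cropping rule, under simplifying assumptions: the binary image is a list of rows of 0/1 values with 1 for white, the first row containing a white pixel is treated as the forehead, and the widest white run is searched over all rows below it because the eyebrow row is not known here. The names `longest_white_run` and `face_box` are illustrative only.

```python
def longest_white_run(row):
    """Return (start, length) of the longest run of 1s in a row of 0/1 values."""
    best_start, best_len, start = 0, 0, None
    for x, v in enumerate(list(row) + [0]):  # sentinel 0 closes a trailing run
        if v == 1 and start is None:
            start = x
        elif v != 1 and start is not None:
            if x - start > best_len:
                best_start, best_len = start, x - start
            start = None
    return best_start, best_len

def face_box(mask):
    """Return (top, left, height, width) of a crop whose height is 1.5 x width."""
    h = len(mask)
    # First row that contains any white pixel, taken here as the forehead.
    top = next((y for y, row in enumerate(mask) if 1 in row), None)
    if top is None:
        return None
    runs = [longest_white_run(row) for row in mask[top:]]
    left, width = max(runs, key=lambda r: r[1])
    height = min(int(1.5 * width), h - top)  # clip the crop to the image
    return top, left, height, width
```

The 1.5 ratio comes from the text; clipping the height to the image bounds is an added safeguard.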

3.1.4. Eye Detection

For eye detection too, the RGB image is converted into a binary image, which is then scanned from W/4 (where W is the image width) across the remaining width in order to find the middle position between the eyes. Next, we determine the upper position of the two eyebrows. For the left eye the search ranges from W/8 to mid (the middle position between the eyes), and for the right eye from mid to W - W/8. We then place continuous black pixels vertically in order to connect the eyes and eyebrows. To achieve this for the left eye, vertical black pixel lines are placed between mid/2 and mid/4, and for the right eye they are placed between {mid + (W - mid)/4} and {mid + 3*(W - mid)/4}. A further search is done for black pixel lines, horizontally, from the mid position to the starting position, so as to locate the right side of the left eye. To detect the left side of the right eye, we scan from the starting position to the mid position of the black pixels within the upper and lower positions of the right eye. The left side of the left eye is simply the starting width of the image, and the right side of the right eye is the ending width. Using these positions, we cut the left side, the right side, and the upper and lower positions of each eye from the previous RGB image.
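The column ranges quoted above are easy to get wrong by one term, so a small sketch may help. It only computes the ranges for the eye search and for the right-eye connector lines; integer division and the function names are assumptions, and `mid` is taken as already found.

```python
def eye_search_ranges(width, mid):
    """Column ranges (start, end) searched for the left and right eye.

    width: image width W in pixels; mid: column midway between the eyes.
    """
    left_eye = (width // 8, mid)
    right_eye = (mid, width - width // 8)
    return left_eye, right_eye

def right_eye_connector_range(width, mid):
    """Columns between which vertical black lines join the right eye and eyebrow."""
    return (mid + (width - mid) // 4, mid + 3 * (width - mid) // 4)
```

For a 160-pixel-wide face with `mid = 80`, the left eye is searched in columns 20 to 80 and the right eye in columns 80 to 140.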

3.1.5. Lip Detection

Another important feature for analyzing emotions is the lip. It tells us whether the shape of the lips is plain, slightly curved, broadly curved, pouted, or parted. This module outputs a lip box whose dimensions can contain the entire lip and some part of the nose.

3.1.6. Longest Binary Pattern

It is extremely important that the area of interest be made smooth so that the exact emotion can be detected. We therefore convert the skin pixels in that box to white pixels and the rest to black. Then, to find a particular region, say the lip, we find the biggest connected region of a single colour, here the longest sequence of black pixels. This results in the longest binary pattern.
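One way to read this step is as a search for the longest horizontal run of black pixels inside the binarized box. The sketch below follows that reading, which is an interpretation rather than the paper's stated procedure; the box layout (list of rows), the `is_skin` predicate passed in by the caller, and the function names are assumptions.

```python
def binarize(box, is_skin):
    """Map skin pixels to 1 (white) and all other pixels to 0 (black)."""
    return [[1 if is_skin(px) else 0 for px in row] for row in box]

def longest_black_run(binary):
    """Return (row, start_col, length) of the longest horizontal run of 0s."""
    best = (0, 0, 0)
    for y, row in enumerate(binary):
        start = None
        for x, v in enumerate(list(row) + [1]):  # sentinel 1 closes a trailing run
            if v == 0 and start is None:
                start = x
            elif v != 0 and start is not None:
                if x - start > best[2]:
                    best = (y, start, x - start)
                start = None
    return best
```

In a lip box, the returned row and span would mark the dark line between the lips, whose end points can then serve as feature points for curve fitting.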

3.1.7. Bezier Curve Algorithm

The most notable applications of the Bezier curve include interpolation, approximation, curve fitting, and object representation. The aim of the algorithm is to find points between each pair of neighbouring points and to repeat this on the new points until no further iteration is possible. The resulting points trace out the curve. The well-known Bezier equation is an exact formulation of this idea.
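The repeated-interpolation idea is usually known as de Casteljau's algorithm, and for four control points it agrees with the closed-form cubic Bezier equation. The sketch below shows both; the function names and the tuple representation of points are illustrative assumptions, and the paper does not say which form its implementation uses.

```python
def de_casteljau(points, t):
    """Evaluate a Bezier curve at t in [0, 1] by repeated linear interpolation."""
    pts = [tuple(float(c) for c in p) for p in points]
    while len(pts) > 1:
        # Replace each neighbouring pair by the point a fraction t along it.
        pts = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
               for p, q in zip(pts, pts[1:])]
    return pts[0]

def cubic_bezier(p0, p1, p2, p3, t):
    """B(t) = (1-t)^3 P0 + 3(1-t)^2 t P1 + 3(1-t) t^2 P2 + t^3 P3."""
    u = 1 - t
    return tuple(u**3 * a + 3 * u**2 * t * b + 3 * u * t**2 * c + t**3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))
```

With `t = 0.5` the interpolation step takes exact midpoints, which matches the description in the text; sampling `t` over a grid in [0, 1] gives the points of the fitted eye or lip curve.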

3.1.8. Emotion Detection

The next step after detecting the features is to recognize the emotion. For this, the entire Bezier curve is converted into larger regions. These static curves are then compared with the values already present in the database. The nearest matching pattern is picked and the related emotion is given as output. If the input does not match any pattern in the database, the average height for each emotion in the database is computed, and a decision is made on that basis.
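Section 4 names the Hausdorff distance as the similarity measure between curve segments, so nearest-pattern matching can be sketched with it. This is an illustration under assumptions: curves are lists of sampled `(x, y)` points, the database is a plain dictionary from an emotion label to one stored curve, and the average-height fallback mentioned above is not modelled.

```python
import math

def directed_hausdorff(a, b):
    """Largest distance from a point of a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)

def hausdorff(a, b):
    """Symmetric Hausdorff distance between two point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))

def nearest_emotion(curve, database):
    """Return the label whose stored curve is closest to the input curve.

    database: dict mapping an emotion label to a list of (x, y) points.
    """
    return min(database, key=lambda label: hausdorff(curve, database[label]))
```

A flat lip curve stored as "neutral" and an upturned one stored as "smile" would then be told apart by which stored curve lies closer to the sampled input curve.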

3.1.9. Database

The database contains existing values that are later used in comparisons to find the closest matching emotion to be displayed as output.

3.1.10. Output Display

Once the comparison is done, the output contains the emotion portrayed in the input.

4. EXPERIMENTAL RESULTS

The expressions smile, sad, surprise and neutral are considered in the face recognition experiment. The faces with expressions are compared against a model face database consisting of neutral faces. All the face images are normalized using some parameters. The Bezier points are interpolated over the principal lines of the facial features. The points of each curve form adjacent curve segments. The Hausdorff distance is calculated based on the curve segments. The facial emotion is then decided by measuring the similarity between faces. The ground truth set for estimating the performance of the algorithm is provided with the categories in the experiments, and a decision is counted as correct if it belongs to the correct category. Figure 3 shows how the control points move across different subjects and expressions (e.g., neutral and smile) and how facial features are tracked with a Bezier curve and interpolation of the extracted feature points. To categorize facial emotion, we first need to determine the expressions from the movements of facial control points. Ekman et al. [4] produced a system for describing visually distinguishable facial movements, called the Facial Action Coding System (FACS), which is based on an enumeration of all action units (AUs). There are 46 AUs in FACS that account for changes in facial expression, and combination rules of the AUs are used to define emotion-specific expressions. The rules for facial emotion are created from basic AUs of FACS, and the recognition decision is determined from these rules, as given in Table 1.

Table 1: Facial Emotion Recognition

| Emotion | Movement of AU |
|---|---|
| Smile | Eye opening is narrowed, mouth is opening, and lip corners are pulled obliquely |
| Sad | Eye is tightened closed, and lower lip corner depressor |
| Surprise | Eye and mouth are opened, upper eyelid raiser, and mouth stretch |

Fig 3: Examples of images for three subjects from the sample database: neutral facial emotion in the first row, and smiling (happy) in the bottom row.

5. CONCLUSION AND FUTURE WORKS

In this paper, a simple approach to facial expression recognition is presented and implemented. The paper builds on the best of the existing approaches to face recognition, thus limiting the latency in response. Moreover, it takes a further step to improve the emotion detection technique by using a cubic Bezier curve implementation, which is more adaptive and is of great importance in various other fields such as robotics, computer graphics, automation and animation. The system works well for faces with different shapes, complexions and skin tones, and senses basic emotional expressions. Although it is unable to discover compound or mixed emotions, the system accurately detects the emotion of a single face; however, this accuracy drops markedly for multiple faces. The system can be further upgraded to include multiple face detection. This would be a big step in the areas of Human Computer Interaction, Image Processing, Animation, Automation and Robotics.

REFERENCES

[1] Bezier curve. Wikipedia, 2013. http://en.wikipedia.org/wiki/Bezier_curve.

[2] R. Adolphs, “Recognizing Emotion from Facial Expressions: Psychological and Neurological Mechanisms”, Behavioral and Cognitive Neuroscience Reviews, 2002.

[3] I. Cohen, A. Garg, and T. S. Huang, “Emotion Recognition from Facial Expressions using Multilevel HMM”, In Proc. of the 2000 NIPS Workshop on Affective Computing, Denver, Colorado, USA, December 2000.

[4] P. Ekman and W. Friesen, “Facial Action Coding System (FACS): Investigator’s Guide”, Consulting Psychologists Press, 1978.

[5] R. C. Gonzalez, R. E. Woods, and S. L. Eddins, “Digital Image Processing using MATLAB”, Prentice Hall, 2004.

[6] H. Gunes and M. Piccardi, “Automatic Temporal Segment Detection and Affect Recognition from Face and Body Display”, IEEE Transactions on Systems, Man, and Cybernetics - Part B, 39(1):64–84, 2009.

[7] R. L. Hsu, M. Abdel-Mottaleb, and A. K. Jain, “Face Detection in Color Images”, IEEE Transactions on Pattern Analysis and Machine Intelligence, 2002.

[8] S. V. Ioannou, A. T. Raouzaiou, V. A. Tzouvaras, T. P. Mailis, K. C. Karpouzis, and S. D. Kollias, “Emotion Recognition through Facial Expression Analysis based on a Neurofuzzy Network”, Neural Networks, 18(4):423–435, 2005.

[9] S. H. Kim and Y. J. Ahn, “An Approximation of Circular Arcs by Quartic Bezier Curves”, Computer-Aided Design, 39(6):490–493, 2007.

[10] Y. Koda, Y. Yoshitomi, M. Nakano, and M. Tabuse, “Facial Expression Recognition for Speaker using Thermal Image Processing and Speech Recognition System”, In Proc. of the 18th IEEE (RO-MAN’09), Toyama, Japan, pages 955–960. IEEE.

[11] Y. H. Lee, T. K. Han, Y. S. Kim, and S. B. Rhee, “An Efficient Algorithm for Face Recognition in Mobile Environments”, Asian Journal of Information Technology, 4(8):796–802, 2005.

[12] M. J. Lyons, M. Kamachi, and J. Gyoba, “Japanese Female Facial Expressions (JAFFE)”, Database of digital images, 1997.

[13] M. Pantic and I. Patras, “Dynamics of facial expression: Recognition of facial actions and their temporal segments from face profile image sequences”, IEEE Transactions on Systems, Man and Cybernetics, 36(2):433–449, 2006.

[14] M. Pantic and L. Rothkrantz, “Facial Action Recognition for Facial Expression Analysis from Static Face Images”, IEEE Transactions on Systems, Man and Cybernetics - Part B, 34(3):1449–1461, 2004.

[15] M. R. Rahman, M. A. Ali, and G. Sorwar, “Finding Significant Points for Parametric Curve Generation Techniques”, Journal of Advanced Computations, 2(2), 2008.

[16] S. R. Buss, “3D Computer Graphics - A Mathematical Introduction with OpenGL”, Cambridge University Press, 2003.

[17] N. Sebe, I. Cohen, T. Gevers, and T. S. Huang, “Multimodal Approaches for Emotion Recognition: A Survey”, Society of Photo Optical, January 2005.

[18] C. Thomas and G. Giraldi, “A New Ranking Method for Principal Components Analysis and its Application to Face Image Analysis”, Image and Vision Computing, 28(6):902–913, 2010.
