Spectral imaging for measuring biochemicals in plant material

Gerrit Polder

Thesis

for the purpose of obtaining the degree of doctor at the Technische Universiteit Delft,

by authority of the Rector Magnificus prof. dr. ir. J.T. Fokkema, chairman of the Board for Doctorates,

to be defended in public on Thursday 28 October 2004 at 10:30

by

Gerrit POLDER

electrical engineer, born in Dirksland.

-

This thesis has been approved by the promotor:

Prof. dr. I.T. Young

Composition of the doctoral committee:

Rector Magnificus, chairman
Prof. dr. I.T. Young, Technische Universiteit Delft, promotor
Dr. ir. G.W.A.M. van der Heijden, Plant Research International
Prof. dr. S.M. de Jong, Universiteit van Utrecht
Prof. dr. O. van Kooten, Wageningen Universiteit
Prof. dr. A.J.W.G. Visser, Wageningen Universiteit
Prof. dr. S. de Vries, Technische Universiteit Delft
Dr. M.F. van Wordragen, Agrotechnology & Food Innovations
Prof. dr. ir. L.J. van Vliet, Technische Universiteit Delft, reserve member

This work was partially supported by the Dutch Ministry of Economic Affairs, through their Innovation-Driven Research Programme (IOP Beeldverwerking), project number IBV98004.

Advanced School for Computing and Imaging

This work was carried out in the ASCI graduate school. ASCI dissertation series number 105.

ISBN: 90-9018537-2

© 2004, Gerrit Polder. All rights reserved.

-

For Margriet

-

Contents

1. Introduction
  1.1 Problem statement
  1.2 Optical properties of food
  1.3 Spectral image analysis
  1.4 Imaging spectroscopy
  1.5 Overview of the thesis

2. Calibration and characterization of imaging spectrographs
  2.1 Introduction
  2.2 System setup
  2.3 Characterization and calibration methods
    2.3.1 Noise sources
    2.3.2 Sensitivity and SNR
    2.3.3 Spectral calibration
    2.3.4 Spectral and spatial resolutions
    2.3.5 Calculation spectral reflectance
  2.4 Results
    2.4.1 Noise sources
    2.4.2 Sensitivity and SNR
    2.4.3 Spectral calibration
    2.4.4 Spectral and spatial resolution
  2.5 Discussion

3. Spectral image analysis for measuring ripeness of tomatoes
  3.1 Introduction
  3.2 Imaging spectrometry
  3.3 Color invariance
    3.3.1 The reflection model
    3.3.2 Color constancy
    3.3.3 Normalization
  3.4 Linear discriminant analysis
  3.5 Experiment: Image recording of tomatoes
  3.6 Data analysis
    3.6.1 Image preprocessing
    3.6.2 Linear discriminant analysis
  3.7 Results
  3.8 Discussion

4. Measuring surface distribution of carotenes and chlorophyll in ripening tomatoes using imaging spectrometry
  4.1 Introduction
  4.2 Materials and methods
    4.2.1 Tomato samples
    4.2.2 HPLC analysis
    4.2.3 Imaging spectrometry
    4.2.4 Data preprocessing
    4.2.5 Partial least squares regression
  4.3 Results and discussion
    4.3.1 HPLC analysis
    4.3.2 Spectroscopic data and preprocessing
    4.3.3 Predicting concentrations with PLS regression
    4.3.4 Distribution of compounds
  4.4 Conclusion

5. Tomato sorting using independent component analysis on spectral images
  5.1 Introduction
  5.2 Independent component analysis
    5.2.1 Description
    5.2.2 Preprocessing: whitening and dimension reduction
    5.2.3 FastICA
  5.3 ICA estimation on spectral images of tomatoes
  5.4 Results and discussion
  5.5 Conclusions

6. Detection of Fusarium in single wheat kernels using spectral imaging
  6.1 Introduction
  6.2 Material and methods
    6.2.1 Material
    6.2.2 Spectral Imaging
    6.2.3 Recording system
    6.2.4 TaqMan real-time PCR analysis
    6.2.5 Image preprocessing
    6.2.6 Spectral preprocessing
    6.2.7 Partial least squares regression
    6.2.8 Fuzzy c-means clustering
  6.3 Results and discussion
    6.3.1 Light absorption and wavelength ratio
    6.3.2 Partial least squares regression
    6.3.3 Fuzzy c-means clustering
  6.4 Conclusion

7. Visualization of spectral images
  7.1 Introduction
  7.2 Spectral based representation
  7.3 Image based representation
  7.4 Feature based representation
  7.5 Combining spectral, image and feature based representation
    7.5.1 Combination of image and spectral display
    7.5.2 Combination of image and feature display
    7.5.3 Volume visualization techniques applied to spectral images
  7.6 Discussion

8. General discussion
  8.1 Spectral information
    8.1.1 Spectral classifiers
    8.1.2 Spectral data reduction
  8.2 Combining spectral and spatial classifiers
    8.2.1 Sequential spectral and spatial classifiers
    8.2.2 Parallel spectral and spatial classifiers
    8.2.3 Integrated spectral and spatial classifiers
  8.3 Spectral imaging in future applications

Summary

Summary in Dutch (Samenvatting)

Curriculum vitae

Acknowledgements (Dankwoord)

-

Chapter 1. Introduction

1.1 Problem statement

The demand for high-quality fruits and other plant material is increasing. New consumer demands are aimed at taste, ripeness, and health-promoting compounds. These criteria are often related to the presence, absence and spatial distribution patterns of specific compounds such as chlorophyll, carotenes, plant phenolics, fatty acids and sugars. Another important criterion is the absence of mycotoxins produced by certain fungi. All these biochemicals can be measured with analytical chemistry equipment or molecular methods, but such measurements are destructive, expensive and slow. Image analysis offers an alternative for fast and non-destructive determination of these compounds. However, the compounds often cannot be visualized in a (filtered) grey-value or RGB image.

Among others, builders of fruit sorting machines are interested in a non-destructive, quantitative, fast method for measuring quality-related compounds in plant material. This would be a tremendous step forward in sorting plant material into quality classes.

Furthermore, measuring specific quality compounds in this way allows quality control throughout the production and transportation chain of plant material. It can also be used for monitoring plant material during storage, allowing better just-in-time delivery to consumers.

An objective measure of the quality of plant material offers Dutch growers a possibility to distinguish themselves from other growers. Growers in the Netherlands generally offer high-quality plant material, but this is difficult to reward if it cannot be objectively measured.

Fast, non-destructive methods for selection of specific biochemicals are also useful in plant breeding programs. This is especially true for the pharmaceutical and fine-chemical industries, since they increasingly use plants as a source for environmentally friendly and sustainable production of biochemicals. This requires, however, fast and objective criteria to screen and breed plant material for the presence or absence of specific compounds.

The goal of this study is to visualize and quantify the spatial distribution of specific biochemicals in plant material using spectral imaging.

Figure 1.1: Incident light on the tissue cells of food products results in specular reflectance, diffuse reflectance, (diffuse) transmittance and absorbance. These strongly depend on the object material and wavelength.

1.2 Optical properties of food

Optical properties of objects in general are based on reflectance, transmittance, absorbance, and scatter of light by the object. The ratio of light reflected from a surface patch to the light falling onto that patch is often referred to as the bi-directional reflectance distribution function (BRDF) [11]. The BRDF depends on the incident angle of the light source with respect to the surface normal and the material properties of the object. Since there is actually a family of such reflectance distributions, one for each source incidence angle, the BRDF is a very complex function. Material properties vary from perfect diffuse reflection in all directions (Lambertian surface) to specular reflection mirrored about the surface normal.

The physical structure of plant tissues is by nature very complex. Figure 1.1 gives a broad outline of the possible interactions of light with plant tissue. Incident light which is not directly reflected interacts with the structure of the different cells and the biochemicals within the cells. These interactions are wavelength dependent. How light energy penetrates into the tissue depends upon factors like the optical density of the tissue and the layers below the tissue, the intensity and spectrum of the light source, and the angle of incidence. The diffuse reflectance is responsible for the color of the product. The more cells are involved in reflectance, the more useful is the chemometric information which can be extracted from the reflectance spectra. Instead of measuring the diffuse reflectance, it is also possible to measure the transmittance. In that case the chemometric information of the whole interior of the object can be determined, but depending on the size and optical density of the product, very high incident light intensities are needed. Also, spatial information will be disturbed by the scattering of light in the object.

Abbott [1] gives a nice overview of quality measurement methods for fruits and vegetables, including optical and spectroscopic techniques. According to Birth [3], when harvested food such as fruits or seeds is exposed to light, about 4% of the incident light is reflected at the outer surface, causing specular reflection; the exact fraction depends on the kind of product and the wavelength of the light. The remaining 96% of incident light is transmitted through the surface into the cellular structure of the product, where it is scattered by the small interfaces within the tissue or absorbed by cellular constituents.

1.3 Spectral image analysis

Image analysis, or machine vision, has been applied successfully in agricultural applications for more than 20 years [8, 16, 24, 34]. Applications can be found in all stages of the food production chain. Examples are image analysis for variety testing [37], seed sorting [2, 6, 15, 35, 41], fruit harvesting [13, 19, 23, 29, 32, 40] and fruit sorting [7, 10, 12, 25, 26, 33]. The first applications made use of a monochrome grey-value camera. In these cameras a pixel (picture element) has a single value which is proportional to the sum of the incoming light over the wavelength spectrum from 400 to 900 nm, depending on the sensitivity of the sensor and the possible use of infrared cutoff filters. Although color images were not yet available, color was the main parameter used for classification. Sometimes optical filters were used to select a specific portion of the electromagnetic spectrum. Sarkar and Wolfe [25, 26] discussed the use of computer vision in tomato sorting. In their study color was estimated from the observed grey level at four positions on the fruit surface.

When the RGB color camera was introduced in agricultural machine vision applications, Choi et al. [5] used a color camera for automatic sorting of tomatoes. Liao et al. [18] used color images for corn kernel hardness classification. Shearer and Payne [28] applied color machine vision for sorting bell peppers. Noordam et al. described a system for high-speed potato grading and quality inspection using a color vision system [22]. In an RGB color image, a pixel contains three values, corresponding to the light intensity in the red, green and blue parts of the electromagnetic spectrum. The filters used in an RGB color camera are designed to produce color images which are suitable for human interpretation. For machine vision applications, however, filtering other bands of the electromagnetic spectrum might be more informative than RGB filters. For instance, narrow-band filters around 670 nm give information about the amount of chlorophyll in the measured object [20]. A combination of narrow-band images at different wavelengths can be applied successfully to measure several compounds.
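The difference between a grey-value pixel, an RGB pixel and a narrow-band measurement can be illustrated with a minimal Python sketch. It treats every pixel value as the incoming spectrum weighted by a sensitivity curve and summed over wavelength. The Gaussian sensitivity curves, their centers and widths, and the toy spectrum with a dip near 670 nm are all assumptions chosen for illustration; they are not the characteristics of any camera used in this thesis.

```python
import numpy as np

# Wavelength axis in nm (assumed 1 nm sampling over the 400 to 900 nm range).
wavelengths = np.arange(400, 901)

def gaussian_band(center_nm, fwhm_nm):
    """Hypothetical Gaussian sensitivity curve with a peak value of 1."""
    sigma = fwhm_nm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return np.exp(-0.5 * ((wavelengths - center_nm) / sigma) ** 2)

def band_response(spectrum, sensitivity):
    """Pixel value: spectrum weighted by the sensitivity, summed over wavelength."""
    return float(np.sum(spectrum * sensitivity))

# Toy reflectance spectrum with an absorption dip near 670 nm (illustrative only).
spectrum = 0.6 - 0.4 * gaussian_band(670, 30)

# Monochrome camera with a flat sensitivity: one value per pixel.
grey = band_response(spectrum, np.ones(wavelengths.size))

# RGB camera: three broad bands (assumed centers for red, green and blue).
rgb = [band_response(spectrum, gaussian_band(c, 80)) for c in (610, 540, 460)]

# Narrow-band filters: one on the absorption dip, one next to it.
narrow_670 = band_response(spectrum, gaussian_band(670, 10))
narrow_750 = band_response(spectrum, gaussian_band(750, 10))
```

In this toy setting the narrow band at 670 nm responds clearly less than the band at 750 nm, whereas the broad bands average the dip out to a large extent.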

In order to imitate the human visual system, a color camera grabs images in three bands. For machine vision applications, however, using more wavelength bands can significantly improve performance. Recently, equipment has become available which makes it possible to capture images in up to 300 wavelength bands. In this case we speak of spectral images [38], multispectral (≤10 bands) images or hyperspectral (>10 bands) images. Chen et al. [4] give an overview of recent developments of hardware and software for machine vision, with emphasis on multispectral and hyperspectral imaging for modern food inspection. Noordam et al. described the prospects for the application of multispectral imaging to inline control of latent defects and diseases on French fries [21].

Figure 1.2: A spectral image is a three-dimensional data cube with two spatial dimensions (x and y) and one spectral dimension (λ), representing the reflection spectrum at every pixel. [Plot: relative reflection (0 to 1) versus wavelength (450 to 750 nm) for one pixel of the spectral image.]
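The data-cube view of a spectral image can be made concrete with a short Python sketch. The array sizes, the (y, x, λ) axis order and the random values standing in for measured reflectance are assumptions for illustration only.

```python
import numpy as np

# Hypothetical cube dimensions: 4 rows (y), 5 columns (x), 300 bands.
n_y, n_x, n_bands = 4, 5, 300
wavelengths = np.linspace(450.0, 750.0, n_bands)  # nm, range as in Figure 1.2

# Synthetic stand-in for measured reflectance values.
rng = np.random.default_rng(0)
cube = rng.random((n_y, n_x, n_bands))

# Spectrum at one pixel: fix y and x, keep the whole spectral axis.
pixel_spectrum = cube[2, 3, :]

# Image at one wavelength: pick the band closest to 670 nm, keep y and x.
band_index = int(np.argmin(np.abs(wavelengths - 670.0)))
band_image = cube[:, :, band_index]
```

Slicing along the spectral axis yields a spectrum per pixel, while slicing along the two spatial axes yields a grey-value image per band.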

1.4 Imaging spectroscopy

We can also regard spectral image analysis as an extension of spectroscopy. Spectroscopy is the study of light as a function of wavelength that has been transmitted, emitted, reflected or scattered from an object. Chemical bonds within the fruit absorb light energy at specific wavelengths. The variety of absorption processes and their wavelength dependency allows us to derive information about the chemistry of the object.

There is a vast literature on spectroscopic methods to measure biochemicals in food and on their relationship to maturity and quality. Among many others, applications are described for peaches [14, 30], apples [17, 36] and tomatoes [31]. Abbott [1] gives a nice overview of quality measurement methods for fruits and vegetables, including optical and spectroscopic techniques.

Besides measuring food quality, imaging spectroscopy is also used for measuring crops in the field. Schut [27] explored the application of this technique to characterize grass swards with respect to growth monitoring, detection of stress, and assessment of dry matter yield, clover content, nutrient content, feeding value, heterogeneity and production capacity. West et al. [39] review recent developments in the use of optical methods, including imaging spectroscopy, for detecting foliar disease in field crops, and discuss the practicality and limitations of using optical disease detection systems for crop protection in precision pest management.

Conventional spectroscopy gives one spectrum, which is measured on a specific spot or is the result of an integrated measurement over the whole fruit. Extending this to imaging spectroscopy gives a spectrum at each pixel of the image. This makes it possible to analyze the spatial relationship of the chemistry of the object.
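The contrast between an integrated measurement and a per-pixel measurement can be sketched in a few lines of Python. The tiny 2 x 2 pixel cube with three bands and the band ratio used as a per-pixel quantity are hypothetical and serve only to show what is lost by integration.

```python
import numpy as np

# Synthetic 2 x 2 pixel object with 3 bands; left and right columns differ.
cube = np.array([
    [[0.2, 0.5, 0.8], [0.6, 0.5, 0.4]],
    [[0.2, 0.5, 0.8], [0.6, 0.5, 0.4]],
])  # shape (y, x, band)

# Conventional spectroscopy integrated over the object: a single spectrum.
integrated_spectrum = cube.mean(axis=(0, 1))

# Imaging spectroscopy: a per-pixel quantity (here a simple band ratio) keeps position.
ratio_map = cube[:, :, 2] / cube[:, :, 0]
```

The integrated spectrum is the same whether the two kinds of tissue are separated or mixed, while the ratio map shows where each one is located.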

As a result of the twofold background of spectral imaging, different names have been used in this field. The best-known terms are spectral imaging, hyperspectral imaging, imaging spectrometry and imaging spectroscopy. Although these terms differ in their literal meaning (i.e., spectroscopy = seeing, spectrometry = measuring, and hyperspectral = extremely many bands), their significance to the image analysis community is the same: the acquisition of images in hundreds of registered, contiguous spectral bands such that for each picture element of an image it is possible to derive a complete reflectance spectrum [9, 38]. Figure 1.2 shows how a pixel of a spectral image represents the reflection spectrum at a certain position.

1.5 Overview of the thesis

In our research we use an experimental setup incorporating an imaging spectrograph. The overall characterization of this system is described in chapter 2. The strong and weak points are identified and this information is used in the experiments described in the rest of the thesis. Traditionally, color image analysis is often used in fruit sorting applications, for instance to classify ripeness. In chapter 3 color image analysis is compared with spectral image analysis for sorting tomatoes of different maturity. In order to obtain comparable classification results over a large range of illumination conditions, the reflectance needs to be made invariant to the light source and object geometry. This aspect is also investigated in chapter 3, using the Shafer reflection model. In chapter 4 the surface distribution of lycopene, lutein, β-carotene, chlorophyll-a and chlorophyll-b in tomatoes of different maturity is predicted from spectral images. In this supervised system the relation between the compound concentrations measured with HPLC and the spectral images is analyzed using partial least squares (PLS) regression. Calibration of spectral images using chemical reference measurements, as described in chapter 4, is time consuming and expensive and hampers practical applications. In chapter 5 the possibility of unsupervised in-line calibration using Independent Component Analysis (ICA) is investigated. Chapter 6 describes the application of spectral imaging to measure the contamination of wheat kernels with Fusarium fungi. Spectral imaging sensors provide images with a large number of contiguous spectral channels per pixel. Visualization of these huge data sets is not straightforward. There are three principal ways in which spectral data can be presented: as spectra, as an image and in feature space. Chapter 7 describes several visualization methods and their suitability in the different steps of the research cycle. Chapter 8 gives a general discussion. Some questions which are not answered in the previous chapters are partially answered by performing some small additional experiments. Implications for practical use of spectral imaging in food sorting applications are described in relation to the objectives of this thesis.

References

[1] J.A. Abbott. Quality measurement of fruits and vegetables. Postharvest Biology and Technology, 15:207-225, 1999.

[2] A.G. Berlage, T.M. Cooper, and R.A. Carone. Seed sorting by machine vision. Agricultural Engineering, 65(10):14-17, 1984.

[3] G.S. Birth. How light interacts with foods. In J.J. Gaffney Jr., editor, Quality Detection in Foods, pages 6-11, St. Joseph, MI, 1976. American Society for Agricultural Engineering.

[4] Y.R. Chen, K. Chao, and M.S. Kim. Machine vision technology for agricultural applications. Computers and Electronics in Agriculture, 36:173-191, 2002.

[5] K.H. Choi, G.H. Lee, Y.J. Han, and J.M. Bunn. Tomato maturity evaluation using color image analysis. Transactions of the ASAE, 38(1):171-176, 1995.

[6] S.R. Draper and A.J. Travis. Preliminary observations with a computer based system for analysis of the shape of seeds and vegetative structures. Journal of the National Institute of Agricultural Botany, 16(3):387-395, 1984.

[7] R. Gardiner, D. Parry, and M. Hall. Sorting fruit using image processing. In C.F. Osborne and I.D. Svalbe, editors, Automatic Vision Technology, page C5, Victoria, Australia, 1987. Chisholm Institute of Technology.

[8] C.A. Glasbey and G.W. Horgan. Image analysis in agricultural research. In A. Stein, T.H. Jetten, and A.G.T. Schut, editors, Forum of Methodology, number 23 in Quantitative Approaches in Systems Analysis, pages 43-54, 2001.

[9] A.F.H. Goetz, G. Vane, J.E. Solomon, and B.N. Rock. Imaging spectrometry for earth remote sensing. Science, 228:1147-1153, 1985.

[10] G.L. Graf. Automatic Detection of Surface Blemishes on Apples using Digital Image Processing. PhD thesis, Cornell University, Ithaca NY, 1982.

[11] B.K.P. Horn. Robot Vision. The MIT Press, Cambridge, MA, 1986.

[12] M. Johnson. Automation in citrus sorting and packing. In Proc. AgriMation. I. Conference and Exposition, page 63, Chicago, 1985. Chicago Press.

[13] N. Kawamura, K. Namikawa, T. Fujuira, and M. Ura. Study of fruit harvesting robot and its application on other works. In Proceedings of International Symposium on Agricultural Machinery and International Cooperation in High Technology Era, pages 132-138. University of Tokyo, April 1987.

[14] S. Kawano, H. Watanabe, and M. Iwamoto. Determination of sugar content in intact peaches by near-infrared spectroscopy with fiber optics in interactance mode. Journal of the Japanese Society for Horticultural Science, 61(2):445-451, 1992.

[15] P.D. Keefe and S.R. Draper. The isolation of carrot embryos and their measurement by machine vision for the prediction of crop uniformity. Journal of Horticultural Science, 61(4):497-502, 1986.

[16] G.A. Kranzler. Applying digital image processing in agriculture. Agricultural Engineering, 66(3):11-13, 1985.

[17] J. Lammertyn, A. Peirs, J. De Baerdemaeker, and B. Nicolai. Light penetration properties of NIR radiation in fruit with respect to non-destructive quality assessment. Postharvest Biology and Technology, 18(2):121-132, 2000.

[18] K. Liao, J.F. Reid, M.R. Paulsen, and E.E. Shaw. Corn kernel hardness classification by color segmentation. In American Society of Agricultural Engineers, page 14pp, 1991.

[19] W.F. McLure. Agricultural robotics in Japan: a challenge for US Agricultural Engineers. In Proc. 1st Int. Conf. Robotics and Machines, page 76, St. Joseph, MN, 1984.

[20] P.M. Mehl, K. Chao, M. Kim, and Y.R. Chen. Detection of defects on selected apple cultivars using hyperspectral and multispectral image analysis. Applied Engineering in Agriculture, 18(2):219-226, 2002.

[21] J.C. Noordam, W.H.A.M. van den Broek, and L.M.C. Buydens. Perspective of inline control of latent defects and diseases on French Fries with multispectral imaging. In Yud Ren Chen and George E. Meyer, editors, SPIE, Food Safety and Agricultural Monitoring, volume 5271, Providence, Rhode Island, USA, 2003.

[22] J.C. Noordam, G.W. Otten, A.J.M. Timmermans, and B. van Zwol. High-speed potato grading and quality inspection based on a color vision system. In Kenneth W. Tobin, editor, SPIE, Machine Vision and its Applications, volume 3966, pages 206-220, San Jose, California, 2000.

[23] E.A. Parrish Jr. and A.K. Gobsel. Pictorial pattern recognition applied to fruit harvesting. Transactions of the ASAE, 20:822, 1977.

[24] T.V. Price and C.F. Osborne. Computer imaging and its application to some problems in agriculture and plant science. Critical Reviews in Plant Sciences, 9(3):235-266, 1990.

[25] N. Sarkar and R.R. Wolfe. Computer vision based system for quality separation of fresh market tomatoes. Transactions of the ASAE, 28(5):1714-1718, 1985.

[26] N. Sarkar and R.R. Wolfe. Feature extraction techniques for sorting tomatoes by computer vision. Transactions of the ASAE, 28(3):970-979, 1985.

[27] A.G.T. Schut. Imaging spectroscopy for characterisation of grass swards. PhD thesis, Wageningen University, 2003.

[28] S.A. Shearer and F.A. Payne. Color and defect sorting of bell peppers using machine vision. Transactions of the ASAE, 33(6):2045-2050, 1990.

[29] P.W. Sites and M.J. Delwiche. Computer vision to locate fruit on a tree. Transactions of the ASAE, 31(1):257-263, 1988.

[30] D.C. Slaughter. Nondestructive determination of internal quality in peaches and nectarines. Transactions of the ASAE, 38(2):617-623, 1995.

[31] D.C. Slaughter, D. Barrett, and M. Boersig. Nondestructive determination of soluble solids in tomatoes using near infrared spectroscopy. Journal of Food Science, 61(4):695-697, 1996.

[32] D.C. Slaughter and R.C. Harrell. Color vision in robotic fruit harvesting. Transactions of the ASAE, 30(4):1144-1148, 1987.

[33] R.W. Taylor, G.E. Rehkugler, and J.A. Throop. Apple bruise detection using a digital line scan camera system. In Agricultural Electronics 1983 and Beyond, volume 2, page 652. ASAE Publication 9-84, 1983.

[34] R.D. Tillett. Image analysis for agricultural processes: A review of potential opportunities. Journal of Agricultural Engineering Research, 50(4):247-258, 1991.

[35] A.J. Travis and S.R. Draper. A computer based system for the recognition of seed shape. Seed Science and Technology, 13:813-820, 1986.

[36] B.L. Upchurch, J.A. Throop, and D.J. Aneshansley. Influence of time, bruise-type, and severity on near-infrared reflectance from apple surfaces for automatic bruise detection. Transactions of the ASAE, 37(5):1571-1575, 1994.

[37] G.W.A.M. van der Heijden. Applications of image analysis in plant variety testing. PhD thesis, Delft University of Technology, 1995.

[38] F.D. van der Meer and S.M. de Jong, editors. Imaging Spectrometry, Basic Principles and Prospective Applications, volume 4 of Remote Sensing and Digital Image Processing. Kluwer Academic Publishers, Dordrecht/Boston/London, 2001.

[39] J.S. West, C. Bravo, R. Oberti, D. Lemaire, D. Moshou, and H.A. McCartney. The potential of optical canopy measurement for targeted control of field crop diseases. Annual Review of Phytopathology, 41:593-614, 2003.

[40] A.D. Whittaker, G.E. Miles, O.R. Mitchell, and L.D. Gaultney. Fruit location in a partially occluded image. Transactions of the ASAE, 30(3):591-596, 1987.

[41] I. Zayas, F.S. Lai, and Y. Pomeranz. Discrimination between wheat classes and varieties by image analysis. Cereal Chemistry, 63(1):52-56, 1986.

-

Chapter 2. Calibration and characterization of imaging spectrographs ¹

Abstract

Spectrograph-based spectral imaging systems provide images with a large number of contiguous spectral channels per pixel. This chapter describes the calibration and characterization of such systems.

The relation between pixel position and measured wavelength has been determined using three different wavelength calibration sources. Results indicate that for spectral calibration, a source with very narrow peaks, such as a HgAr source, is preferred to narrow-band filters. A third-order polynomial model gives an appropriate fit for the pixel-to-wavelength mapping. The signal-to-noise ratio (SNR) is determined per wavelength. In the blue part of the spectrum, the SNR is lower than in the green and red parts. This is due to a decreased quantum efficiency of the sensor, a smaller transmission coefficient of the spectrograph, and the low output power of the illuminant. Increasing the amount of blue light by using an additional fluorescent tube with a special coating considerably increases the SNR.
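A third-order polynomial pixel-to-wavelength mapping of the kind mentioned in the abstract can be sketched as follows. This is a minimal illustration, not the calibration procedure of the chapter: the listed values are rounded Hg and Ar emission lines, while the 1024-pixel axis, the "true" dispersion coefficients and the simulated peak positions are assumptions made up so that the example is self-contained.

```python
import numpy as np

# Hg and Ar emission lines in nm (rounded), used as calibration references.
line_wavelengths = np.array([404.66, 435.83, 546.07, 696.54, 763.51, 811.53])

# Hypothetical "true" dispersion of a 1024-pixel spectral axis, highest power first.
# It is only used to simulate where the peaks would land on the sensor.
true_coeffs = np.array([-1e-8, 2e-5, 0.45, 390.0])
pixels = np.arange(1024)
true_wavelengths = np.polyval(true_coeffs, pixels)

# Simulated peak positions (fractional pixels); in practice these come from peak detection.
peak_pixels = np.interp(line_wavelengths, true_wavelengths, pixels)

# Third-order polynomial fit: wavelength as a function of pixel position.
fit_coeffs = np.polyfit(peak_pixels, line_wavelengths, deg=3)
residuals = line_wavelengths - np.polyval(fit_coeffs, peak_pixels)

# Wavelength assigned to every pixel on the spectral axis.
wavelength_axis = np.polyval(fit_coeffs, pixels)
```

With real data the residuals indicate how well the cubic model describes the spectrograph; here they are close to zero because the peaks were simulated from a cubic in the first place.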

Furthermore, the spatial and spectral resolution of the system have been determined in relation to the wavelength. These can be used to choose appropriate binning factors to decrease the image size without losing information. In our case this could reduce the image size by a factor of 60 or more.
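How binning reduces the image size can be sketched with a small Python function. The function name `bin_frame`, the frame size and the binning factors are hypothetical, and averaging blocks in software is an assumption; a camera may instead sum the pixels on the sensor.

```python
import numpy as np

def bin_frame(frame, spatial_factor, spectral_factor):
    """Average blocks of a (spatial, spectral) frame; a trailing remainder is dropped."""
    n_x, n_l = frame.shape
    n_x_b = n_x // spatial_factor
    n_l_b = n_l // spectral_factor
    trimmed = frame[: n_x_b * spatial_factor, : n_l_b * spectral_factor]
    blocks = trimmed.reshape(n_x_b, spatial_factor, n_l_b, spectral_factor)
    return blocks.mean(axis=(1, 3))

# Hypothetical 12 x 20 frame with easily checked values.
frame = np.arange(12 * 20, dtype=float).reshape(12, 20)
binned = bin_frame(frame, spatial_factor=3, spectral_factor=4)

# Size reduction equals the product of the two binning factors.
reduction = frame.size / binned.size
```

The reduction factor is the product of the spatial and spectral binning factors, so the factors chosen from the measured resolutions directly determine how much smaller the spectral image becomes.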

2.1 Introduction

A grey-value image typically represents the light intensity over a part of the electromagneticspectrum collected into a single band and a color image represents the intensity over the red,green and blue part of the spectrum in three bands. If an image consists of 4-15 bands, theimage is often referred to as a multi-spectral image. As this number increases, it is often

1 This chapter was originally published as: G. Polder, G.W.A.M. van der Heijden, L.C.P. Keizer, and I.T. Young. Calibration and characterization of imaging spectrographs. Journal of Near Infrared Spectroscopy, 11(3):193–210, 2003.


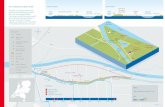

[Figure labels: axes x, y and λ; stepwise movement of the object]

Figure 2.1: A spectral image is built up by moving the object line by line, hence creating a sequence of (x, λ) images. Rotating over the x-axis results in an (x, y) spectral image with a spectrum at each pixel.

referred to as a hyper-spectral image (Landgrebe [11]). When the light intensity of the spectrum is recorded in a large number (> 50) of small contiguous bands (< 10 nm) of the spectrum, resulting in a spectrum per pixel, we prefer the term spectral image. Current techniques offer two basic approaches to spectral imaging: (1) acquiring a sequence of two-dimensional images at different wavelengths, or (2) acquiring a sequence of line images where for each pixel on the line a complete spectrum is captured. The first approach is implemented by employing a rotating filter wheel or a tunable filter in front of a monochrome camera. This approach is preferable if the number of bands needed is limited and the object can be held still in front of the camera during recording. Gat discusses the different tunable filter techniques [5]. Romier et al. [17] describe an imaging spectrometer based on an acousto-optic tunable filter for remote sensing applications. This system has a full-width half-maximum (FWHM) spectral resolution of 2-4 nm but, due to the sinc form of the system's transmission characteristics, several small side lobes were present in the spectrum. The second approach requires an imaging spectrograph coupled to a monochrome matrix camera. One dimension of the camera (spatial axis) records the line pixels and the other dimension (spectral axis) the spectral information for each pixel. To acquire a complete spectral image, i.e. a three-dimensional (x, y, λ) array, either the object or the spectrograph needs to be translated in small steps, so that at each step an (x, λ) slice can be measured, until the whole object has been scanned. Martinsen et al. [12] describe a system where the slit of the spectrograph is moved, and the object is placed at a fixed position. Other systems have a fixed spectrograph where the objects are moved. This approach is well suited to a conveyor belt system, using the camera as a line-scan camera. Figure 2.1 shows the acquisition of a spectral image using this approach.

The imaging spectrograph ImSpector (Spectral Imaging Ltd, Oulu, Finland) uses a prism-grating-prism (PGP) dispersive element and transmission optics [1], hence creating a straight optical path [8, 9]. This makes it possible to use the spectrograph in combination with normal cameras and lenses. We have used this ImSpector in our research [7, 14, 15] and found that calibration and characterization of the system was necessary.

The imaging spectrograph system consists of four main components: the spectrograph itself, the camera containing the two-dimensional light sensor, the translation stage, and


the illumination source. Each of these components has its own characteristics that influence the total accuracy of the system. To characterize the total system performance, it is important to measure and optimize all parameters which influence the quality of the obtained spectral image. Burke [2] gives a good overview of system characterization methods for two-dimensional image acquisition. Mullikin et al. [13] describe methods for charge-coupled-device (CCD) camera characterization. Neither publication takes into account special aspects of spectral imaging systems. Stokman et al. [23] describe some aspects of calibration of an imaging spectrograph related to color measurements. Johansson et al. [10] describe the design and performance of an imaging spectroscopic system used on a microscope. This system incorporated two ImSpectors for vis/NIR work and a reflective grating system for the UV.

Illumination is very important in spectroscopic imaging applications. Since the imaging spectrograph acts as a line scan camera, line lighting with a uniform spatial distribution is an important consideration. Fibre optic line lighting devices with a cylindrical collimator lens are efficient and affordable. The spectral power distribution of the lamp is an important factor. A smooth and stable emission spectrum is needed. Even better might be an emission spectrum which is the inverse of the spectral sensitivity of the image sensor. Such illumination sources do not exist, but it is possible to modify the spectral emission by filtering the light source. Martinsen et al. [12] used a high pass filter to compensate for the reduced dynamic range of their CCD sensor at higher wavelengths. A disadvantage of this approach is that the total sensitivity is decreased, resulting in the need for a longer integration time, which introduces more noise, or a wider diaphragm, which decreases the focal depth and the sharpness. Local emission peaks create requirements for extra dynamic range in the camera. Fluorescent tubes are generally not suitable for spectral imaging, due to the excitation of mercury or neon vapor, which gives narrow peaks with very high intensity compared to the intensity of the fluorescence. These high intensity peaks may induce blooming, making it impossible to measure proper reflectance in a large area around the wavelength of these peaks.

Several characteristics of the spectrograph are given in the manufacturer's datasheets. The ImSpector User's Manuals and application notes [19, 20, 21, 22] give basic information on calibrating the system. For research applications, however, thorough verification of the performance of the integrated system is required. Total performance is limited by a combination of all possible error sources of the system components.

The aim of this chapter is to describe how to characterize a spectrograph-based spectral imaging system accurately. The following properties will be investigated: the impact of various sources of noise (dark current, read-out noise, photon noise), the signal-to-noise ratio (SNR) per wavelength, spectral calibration (relation between wavelength and pixel position) and the spectral and spatial resolution of the system. In the next section the overall system setup is described. In section 2.3, the theory of calibration and characterization is described. The results of our system are given in section 2.4, and discussed in the final section.


[Diagram labels: camera sensor array, BFL, ImSpector (lens, PGP element, lens, slit), objective lens, object plane, fibre optic line illuminator, translation table with stepper motor]

Figure 2.2: Diagram of the system. PGP = prism-grating-prism, BFL = back focal length.

2.2 System setup

Three systems are studied in these experiments. The first one is for measurements between 400 and 700 nm (vis), the second one between 430 and 900 nm (vis-NIR), and the third is in the near infrared between 900 and 1750 nm (NIR). Figure 2.2 depicts the overall system diagram. Three ImSpectors were used: an ImSpector V7 spectrograph, with a range of 400 to 710 nm and a slit width of 13 µm, an ImSpector V9 with a range from 430 to 900 nm and a slit width of 50 µm, and an ImSpector N17 with a range from 900 to 1750 nm, with a slit width of 80 µm. According to the published characteristics of the ImSpectors, these slit widths correspond with a spectral resolution of 1 nm, 4.5 nm and 13 nm respectively. Table 2.1 tabulates the characteristics of the three systems used in this experiment as given by the


manufacturers.

Table 2.1: Specifications of the three systems used in this experiment. The wavelength range is determined by the PGP element, the spectral resolution by the slit width, and the number of bands depends on both factors. The ImSpector optics determine the spatial resolution, denoted here as Modulation Transfer Function (MTF) in line pairs per mm. The y resolution is determined by the translation table.

| System | I | II | III |
|---|---|---|---|
| ImSpector type | V7 | V9 | N17 |
| wavelength range [nm] | 400-710 | 430-900 | 900-1750 |
| slit width [µm] | 13 | 50 | 80 |
| spectral resolution [nm] | 1 | 4.5 | 13 |
| number of bands | 310 | 104 | 65 |
| MTF [lp mm⁻¹] | 15 | 15 | 15 |
| Camera | PMI-1400EC | PMI-1400EC | SU128-1.7RT |
| pixel size [µm] | 6.8 × 6.8 | 6.8 × 6.8 | 60 × 60 |
| y resolution [mm] | 0.05 | 0.05 | 0.05 |

Two cameras were used; for the V7 and V9 this was a Qimaging PMI-1400EC (Burnaby, Canada) Peltier-cooled camera with a Kodak KAF-1400EC class II CCD sensor (Eastman Kodak, Rochester, USA). The CCD size is 1320 pixels along the spatial axis and 1035 pixels along the spectral axis of the system. Since the number of pixels in the spectral direction is larger than the number of distinguishable wavelength bands according to the specifications, the pixels can be binned with a factor of 1035/310 ≈ 3. In CCD sensors, the quantum efficiency of silicon falls to less than 5% at 1000-1050 nm [24]. Therefore for the N17, a Sensors Unlimited SU128-1.7RT camera (Princeton NJ, USA) was used. This camera is equipped with a SU128-1.7T1 Indium Gallium Arsenide array sensor, which is sensitive between 900 and 1700 nm. The size of the sensor is 128 × 128 pixels. To match the spectral resolution of the spectrograph, the pixels in the spectral direction can be binned using a factor 128/65 ≈ 2. As on-chip binning is not possible with this camera, this is done in software.
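The software binning mentioned above can be sketched as follows. This is a minimal illustration, not the code used in the thesis: the function name `bin_spectral_axis`, the choice of axis 0 as the spectral axis, and the synthetic 128 × 128 frame are all assumptions made for the example.

```python
import numpy as np

def bin_spectral_axis(frame, factor):
    """Sum groups of `factor` adjacent pixels along the spectral axis (assumed axis 0)."""
    n_spectral, n_spatial = frame.shape
    n_bands = n_spectral // factor            # an incomplete trailing group is dropped
    trimmed = frame[: n_bands * factor, :]
    return trimmed.reshape(n_bands, factor, n_spatial).sum(axis=1)

# Hypothetical 128 x 128 frame standing in for one line image of the NIR camera.
frame = np.arange(128 * 128, dtype=np.int64).reshape(128, 128)
binned = bin_spectral_axis(frame, 2)
print(binned.shape)
```

Summing rather than averaging keeps the total signal per band, which mirrors what on-chip binning would do; averaging would be an equally valid choice if the original value range has to be preserved.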

The spectral sensitivity for optical sensors is largely determined by the quantum efficiency (QE). The QE denotes the probability that a photon of a certain wavelength will create a photoelectron. The PMI-1400EC is coated with a very thin layer of a so-called wavelength converter. This coating absorbs light in the blue part of the spectrum (300-500 nm) and emits light at a longer wavelength, where the CCD is more sensitive. When using thin coatings on the sensor, in spectral imaging applications, optical interference phenomena can occur when light of a specific wavelength interferes with the film coating on the sensor


[Plot: QE [electron/photon] versus wavelength [nm] for the PMI-1400EC and the SU128-1.7RT]

Figure 2.3: The quantum efficiency (QE) of the PMI-1400EC and the SU128-1.7RT, as given by each camera manufacturer.

[10]. This effect, sometimes called etaloning, can also occur with thinned back-illuminated CCD sensors. When selecting a camera, this effect needs to be considered. The sensors used in our experiment were not hampered by this problem. Figure 2.3 shows the QE given by the respective manufacturer of each camera.

The observed spectrum of a sample also depends on the spectral power distribution of the light source. Two Dolan-Jenner PL900 illuminators, with 150 W Quartz Tungsten halogen lamps, are used in combination with the V7 and V9. These lamps have a relatively smooth spectrum between 400 and 2000 nm. Glass fiber optic line arrays of 0.02 inch × 6 inch aperture (Dolan-Jenner) and cylinder lenses (Dolan-Jenner) for the line arrays were used for illuminating the scene. For the test with the SNR an additional blue fluorescent tube was used (Arcadia Marine Blue Actinic). The glass fiber and rod lens combination shows an absorption peak at 1400 nm, which disturbs the measurements in the NIR region. Therefore the illumination used with the N17 system consisted of a 1000 W tungsten halogen flood light, without fiber optic light guides. This illumination has a smooth spectral emission in the NIR wavelengths, but since there is no focussing of light it costs more energy to have the same amount of light at the object.

The camera spectrograph combination acts as a line scan camera, where the two-dimensional image contains spatial information in one dimension and spectral information in the other. By scanning the object using a linear translation stage (Lineairtechniek Lt1-Sp5-C8-600), the second spatial dimension was incorporated, resulting in a three-dimensional data cube of {x, y, λ}. The movement is 5 mm rev⁻¹, with a resolution of 30 µm, maximum


speed 250 mm s⁻¹ and total travel length of 600 mm. A stepper motor and controller (Ever Elettronica, Italy, type: SDHWA 120) drives the translation table. The controller is connected to the computer using an RS232 interface. The software to control the translation table and frame grabber, to construct the spectral images, and to save and display them has all been developed locally in a single, integral computer program written in Java (http://www.ph.tn.tudelft.nl/polder/isaac.html).

2.3 Characterization and calibration methods

2.3.1 Noise sources

Images acquired with a camera will contain noise from a variety of sources. Since noise is a stochastic phenomenon, there can be no direct compensation. Noise sources that play a role in scientific cameras are: photon noise, thermal noise, readout noise and quantization noise. These noise sources are only determined by the camera and have nothing to do with the ImSpector and the lens system.

Photon noise

Photon noise is caused by the stochastic process of photons hitting the sensor surface. Photons arrive at purely random instances at the sensor, obeying Poisson statistics [16]. A detected photon creates a photo-electron in the sensor. These photo-electrons are accumulated in potential wells. When a potential well is read out, the photo-electrons are counted. According to Mullikin [13], the theoretical maximum SNR is 10 log10(Nc), where Nc is the full well capacity of the sensor pixels. This theoretical maximum will be reached when other noise sources are negligible. According to the specifications of the PMI-1400EC camera Nc ≈ 45,000 e⁻, yielding a maximum SNR of 46 dB. For the SU128-1.7RT Nc > 10 Me⁻, yielding a theoretical maximum SNR of 70 dB. In practice the SNR of the SU128-1.7RT will never reach this theoretical maximum, since the SNR of this camera is limited by other factors such as the dark current, readout noise, and quantization noise.

Thermal noise

Thermal noise, also known as dark current, refers to electron-hole pairs created by internal thermal vibrations in the sensor instead of external photons. The production rate of thermal-electrons is a function of temperature and also follows a Poisson distribution. For the PMI-1400EC the dark current was measured by keeping the shutter of the camera closed. Integration times were 50, 100, 500, 1000, 5000, and 10000 ms. The dark current of the SU128-1.7RT was measured at its fixed exposure time of 16.38 ms. Thermal noise can be reduced by cooling the camera. The PMI-1400EC is standard cooled to about 30 °C below ambient temperature. The SU128-1.7RT operates at room temperature (18 °C). Thermal noise is not equal for all pixels in the sensor. Due to impurities in the semiconductor material a minority of the pixels generate thermal-electrons at a much faster rate than the others. These pixels are often called hot-pixels. Cooling the camera reduces the impact of these


pixels by the same amount as the average dark current. Since the positions of the hot-pixels are fixed, this abnormality cannot be described as stochastic. It is possible to restore the images based on the knowledge of the positions of the hot-pixels.

Readout noise

Readout noise is electronic noise induced by the charge-transfer process of the sensor. It is additive, Gaussian distributed and independent of the signal [24]. Readout noise is measured at the PMI-1400EC's readout speeds of 500 kHz, 1 MHz, 4 MHz and 8 MHz. The SU128-1.7RT does not have selectable readout speeds, therefore the readout noise could not be distinguished from the thermal noise without manipulating the ambient temperature.

Quantization noise

The pixel amplitudes are quantized using an analog-digital converter, in the camera or the frame grabber. Most systems use linear quantization in which the range is divided into equal intervals. The SNR due to quantization noise is SNR = 6b + 11 dB, where b is the number of bits [2]. The PMI-1400EC has a resolution of 12 bits, resulting in a negligible quantization SNR of 83 dB. The SU128-1.7RT in our experiment was used in the analog video mode, with 8 bits quantization. The accompanying SNR is 59 dB, which is less than the photon noise described in section 2.3.1.
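The two theoretical SNR limits quoted in this section can be checked with a few lines of arithmetic. The sketch below only evaluates the formulas from the text (10 log10 of the full well capacity, and 6 dB per bit plus 11 dB); the function names are illustrative and not part of any camera software.

```python
import math

def photon_limited_snr_db(full_well_electrons):
    """Maximum SNR of a photon-noise-limited pixel: 10 * log10(Nc)."""
    return 10.0 * math.log10(full_well_electrons)

def quantization_snr_db(bits):
    """Quantization SNR rule quoted in the text: 6 dB per bit plus 11 dB."""
    return 6.0 * bits + 11.0

# Full well capacities and bit depths as stated in the text.
pmi_photon = photon_limited_snr_db(45_000)   # PMI-1400EC
su_photon = photon_limited_snr_db(10e6)      # SU128-1.7RT (lower bound on Nc)
pmi_quant = quantization_snr_db(12)
su_quant = quantization_snr_db(8)
print(round(pmi_photon, 1), round(su_photon, 1), pmi_quant, su_quant)
```

For the 8-bit video mode the quantization limit lies below the photon limit, which is the comparison made in the last sentence above.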

2.3.2 Sensitivity and SNR

The sensitivity of the sensor chip is wavelength dependent. For a normal silicon-based CCD, the sensitivity in the blue part of the spectrum is low and in the red part high. This effect of blue insensitivity is even worse when illuminants like Tungsten halogen are used. These illuminants have poor emission in the blue part of the spectrum.

The camera sensitivity S is given by Mullikin et al. [13] as:

$$S = QE \cdot \tau_c \cdot G \cdot F \qquad (2.1)$$

where QE is the quantum efficiency of the sensor (given by the manufacturer), $\tau_c$ is the transmission coefficient of the camera window, G is the conversion factor from electrons into A/D converter units, and F is the sensor's fill factor. In our case we have to extend this formula to take into account the wavelength dependence:

$$S_\lambda = QE_\lambda \cdot \tau_{c,\lambda} \cdot \tau_{s,\lambda} \cdot \tau_{l,\lambda} \cdot G \cdot F \qquad (2.2)$$

where $\tau_{s,\lambda}$ is the diffraction efficiency of the spectrograph's grating. According to the manufacturer [9] it varies from 40 to 60% over the whole wavelength range of the ImSpectors. $\tau_{l,\lambda}$ is the transmittance of the objective lens, which in practice is estimated at 70 - 80%, depending on the wavelength and the quality of the lens. $\tau_{c,\lambda}$ is the transmission coefficient of the camera window at wavelength λ. For the KAF-1400 the $\tau_{c,\lambda}$ is assumed to be 100 % over all wavelengths and F is 100 %. The quantum efficiency of the SU128-1.7T1 is > 0.75 from 1000 to 1600 nm (Figure 2.3), the $\tau_{c,\lambda}$ is assumed to be 100 % over all wavelengths


and F is 100 %. Assuming a photon noise limited system following a Poisson distribution, the gain can be calculated by:

$$G = \frac{\mathrm{var}(I)}{\bar{I}} \qquad (2.3)$$

where I is the image intensity and $\bar{I}$ its mean. Since the SU128-1.7RT is not photon noise limited, this formula only holds for the PMI-1400EC camera. Image intensities I are measured by taking spectral images of the six neutral patches of the MacBeth colorchecker [6], which have CIE Y values of 90.0, 59.1, 36.2, 19.8, 9.0, and 3.1. The observed signal-to-noise ratio (SNR) can be calculated as:

$$\mathrm{SNR} = 10 \log_{10}\left(\frac{\bar{I}^2}{\mathrm{var}(I)}\right) \qquad (2.4)$$

The observed SNR is determined by the quantum efficiency and the sensitivity as described above. Furthermore the noise sources described in section 2.3.1 play a role.
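As an illustration of equations 2.3 and 2.4, the sketch below estimates gain and SNR from the pixels of a uniformly lit patch. It is a hedged example on simulated data: the Poisson-distributed electron counts, the gain of 0.1 ADU per electron and the function name `gain_and_snr` are assumptions for the demonstration, and any dark offset is assumed to have been subtracted beforehand.

```python
import numpy as np

def gain_and_snr(patch):
    """Return (gain, SNR in dB) of a uniform patch, following equations 2.3 and 2.4."""
    mean = patch.mean()
    var = patch.var(ddof=1)                   # sample variance of the pixel values
    gain = var / mean                         # equation 2.3, photon-noise-limited case
    snr_db = 10.0 * np.log10(mean ** 2 / var) # equation 2.4
    return float(gain), float(snr_db)

# Simulated patch: hypothetical mean of 20000 photo-electrons per pixel,
# converted to A/D units with a hypothetical gain of 0.1 ADU per electron.
rng = np.random.default_rng(0)
true_gain = 0.1
electrons = rng.poisson(lam=20000, size=(200, 200))
patch = true_gain * electrons

gain, snr_db = gain_and_snr(patch)
print(gain, snr_db)
```

With pure photon noise the estimated SNR approaches 10 log10 of the mean electron count, so the simulated patch should give roughly 43 dB; read-out and dark-current noise in a real camera lower this value.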

2.3.3 Spectral calibration

Detector position tolerance in the camera and non-linearity in the spectrograph dispersion cause small deviations in the wavelength scale of the image. To calibrate the systems, images were acquired with spectral light sources with peaks at precisely known wavelengths. For the V7 and V9 systems three light sources were used:

1. a Mercury Argon (HgAr) source.

2. a Philips TLD58W fluorescent lamp.

3. a halogen source with seven narrow band interference filters (CVI Laser Corporation FS40) with a FWHM bandwidth of 40 nm, central wavelengths of 400 - 700 nm, in steps of 50 nm, in front of the spectrograph lens.

The HgAr source is commonly used for the calibration of spectrophotometers. The emission lines produced by HgAr sources are related to the orbital energies of the atoms, making them extremely stable. A drawback of this source is that the total radiated energy is very low. Illuminating the sensor directly instead of measuring the reflection of this source on a white diffuse surface gave the maximum number of distinguishable peaks. For sources 2 and 3, we used reflection of the illuminant on a white tile of PTFE-plastic with a spectral reflectance of over 0.98 from 400 to 2000 nm (TOP Sensor Systems WS-2). For the N17 we used the well-known absorption peaks of Polyethylene (PE) and Polystyrene (PS) material [4]. Taking pixels of the recorded images at the center of the spatial axis gives a one-dimensional array with the spectral profile of the illuminant. The positions of the peaks of the HgAr source were found using a peak detection algorithm in Matlab (version 5.3). For the V9, with a spectral resolution of more than 8 pixels, the centers of the peaks were found this way. The spectrum of the V7 system, with a spectral resolution of 2-3 pixels, was interpolated to a sample spacing eight times finer than the original using a spline interpolation routine. For


the spectra obtained with the CVI filters, the center of the peak was obtained as the position halfway between the points of intersection of the FWHM with the curve. The peak positions of PE and PS were visually matched with the spectra obtained by an accurate, standardized high resolution spectrophotometer. Linear regression has been used to correlate the pixel positions with the wavelengths. First-, second- and third-order polynomial models have been fitted.
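The polynomial regression described above can be sketched with NumPy. This is an illustration rather than the original Matlab analysis: the peak list is the set of HgAr wavelengths and V7 pixel positions as read from Table 2.4, the use of `numpy.polynomial.Polynomial.fit` is a choice made for this example, and the error measure is a plain root mean square of the residuals, which may differ from the residual mean square error reported in Table 2.5.

```python
import numpy as np
from numpy.polynomial import Polynomial

# HgAr peaks for the V7 system, as read from Table 2.4:
# (wavelength [nm], pixel position on the spectral axis).
peaks = np.array([
    (404.66, 38.90), (435.84, 141.50), (546.08, 485.00),
    (576.96, 578.25), (579.07, 584.70), (696.54, 934.70),
    (706.72, 964.90), (714.70, 988.40), (727.29, 1025.70),
])
wavelength, pixel = peaks[:, 0], peaks[:, 1]

def fit_pixel_to_wavelength(order):
    """Least-squares polynomial of the given order mapping pixel -> wavelength."""
    model = Polynomial.fit(pixel, wavelength, deg=order)
    residual = wavelength - model(pixel)      # actual minus predicted, in nm
    rms = float(np.sqrt(np.mean(residual ** 2)))
    return model, rms

rms = {order: fit_pixel_to_wavelength(order)[1] for order in (1, 2, 3)}
for order, value in rms.items():
    print(f"order {order}: RMS residual {value:.3f} nm")
```

`Polynomial.fit` rescales the pixel axis internally, which keeps the third-order fit well conditioned even though pixel positions run past 1000.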

2.3.4 Spectral and spatial resolutions

Spectral resolution is largely linearly dependent on the size of the slit placed before the PGP element [19]. A small slit corresponds with high resolution, but has the disadvantage of a decreased amount of light on the individual sensor pixel.

The ImSpector spectrograph has been adjusted for standard C-mount back focal length (17.53 mm). It is important to put the sensor detector surface exactly at this distance, otherwise it is not possible to achieve the best possible spectral focus, resulting in a spectral resolution which is worse than that determined by the slit size. For the V7 and V9 system the spectral resolution is determined by recording a laser line of 670 nm with a spectral linewidth 0.5 nm. The FWHM is measured from the images and the back focal length is adjusted for the optimal FWHM. Due to the massive body of the PMI-1400EC camera and the construction of its C-mount adapter ring, modification of the ImSpector adapter rings was required to enable proper adjustment.

The spatial resolution is different for the x and y direction. In the x direction, which is perpendicular to the scan direction of the system, the resolution is limited by the numerical aperture of the lens and the ImSpector, and the resolution and size of the image sensor. In the y direction the resolution also depends on the slit width of the ImSpector and the step size and resolution of the stepper table. These values are given in Table 2.1 for the equipment used in our experiment. Note that the resolution of the stepper table is given for the object plane, where the other values are for the sensor plane. The resolution of the stepper table in the sensor plane can be calculated by dividing the value from the table by the magnification of the object lens. The spatial resolution in both directions is determined by calculating the step response of a recorded step edge. A simple method for spatial characterization is the distance between the 10 and 90 % points of the step edge (Burke [2]). A second method is to determine the FWHM of the derivative of the step edge. The derivative can adequately be estimated by using b-spline interpolation with a factor of eight and subsequent convolution with a Gaussian derivative with σ = 1.5 (Mullikin [13]). Another method is to image a set of targets (bars and spaces) with a known resolution. The spatial frequency at the limiting resolution is the charted frequency at which the apparent contrast between the bars and spaces is still discernible. We used the USAF 1951 test chart.
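The first two edge-based measures can be sketched as follows. This is a simplified illustration under stated assumptions: the edge profile is synthetic (a Gaussian-blurred step with a blur of 2 pixels, sampled at 1/8 pixel as if it had already been interpolated), and the derivative is taken with a plain finite difference (`np.gradient`) instead of the b-spline interpolation and Gaussian derivative filter described in the text. All function names are hypothetical.

```python
from math import erf, sqrt
import numpy as np

def crossing(x, y, level):
    """Linearly interpolated position where y first reaches `level` (y rising)."""
    i = int(np.argmax(y >= level))            # first sample at or above the level
    if i == 0:
        return float(x[0])
    frac = (level - y[i - 1]) / (y[i] - y[i - 1])
    return float(x[i - 1] + frac * (x[i] - x[i - 1]))

def edge_width_10_90(x, edge):
    """Distance between the 10 % and 90 % points of a rising step edge."""
    norm = (edge - edge.min()) / (edge.max() - edge.min())
    return crossing(x, norm, 0.9) - crossing(x, norm, 0.1)

def fwhm_of_derivative(x, edge):
    """FWHM of the finite-difference derivative of a rising step edge."""
    deriv = np.gradient(edge, x)
    peak = int(np.argmax(deriv))
    half = deriv[peak] / 2.0
    left = crossing(x[: peak + 1], deriv[: peak + 1], half)
    # The falling flank is reversed so that it can be treated as a rising one.
    right = crossing(x[peak:][::-1], deriv[peak:][::-1], half)
    return right - left

# Synthetic edge: ideal step blurred by a Gaussian with sigma = 2 pixels.
sigma = 2.0
x = np.arange(0.0, 40.0, 0.125)
edge = np.array([0.5 * (1.0 + erf((xi - 20.0) / (sigma * sqrt(2.0)))) for xi in x])

width = edge_width_10_90(x, edge)
fwhm = fwhm_of_derivative(x, edge)
print(width, fwhm)
```

For a Gaussian blur both measures are proportional to sigma (about 2.56 sigma for the 10-90 % distance and about 2.35 sigma for the FWHM), so on real data the two methods should give comparable but not identical numbers.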

2.3.5 Calculation of spectral reflectance

As described in the previous sections, the intensity of the measured spectra is dependent on the spectrum of the light source, the quantum efficiency of the camera, readout speed, shutter time and temperature of the sensor. These factors are not constant. For instance the


spectrum of the light source varies in time due to aging. Dark current noise and hot pixels depend on the shutter time and sensor temperature. Using the Shafer [18] reflection model and assuming a matte object surface, the spectral image can be made independent of all these factors using equation 2.5 (Chapter 3).

$$R = \frac{I - B}{W - B} \qquad (2.5)$$

where R is the color constant reflection value at wavelength λ, I is the original measured spectral reflection value, W is the spectral radiation of the illuminant, obtained by measuring the reflection of a perfect isotropic diffuser, and B is the black reference.

This calculation, and thus the measurement of W and B, needs to be done at a regular interval, at least as fast as the drift in the disturbing factors.
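Equation 2.5 translates directly into array arithmetic. The sketch below is a minimal example, assuming the raw cube is stored as (y, x, λ) and the white and black references as (x, λ) line images; the array layout, the function name `reflectance` and the guard against a zero denominator are assumptions added for the illustration.

```python
import numpy as np

def reflectance(raw, white, dark, eps=1e-12):
    """Apply R = (I - B) / (W - B) per pixel and wavelength (equation 2.5)."""
    raw = np.asarray(raw, dtype=float)
    white = np.asarray(white, dtype=float)
    dark = np.asarray(dark, dtype=float)
    numerator = raw - dark
    denominator = white - dark
    # Where white and dark coincide no reflectance can be computed; return 0 there.
    valid = np.abs(denominator) > eps
    return np.divide(numerator, denominator,
                     out=np.zeros_like(numerator), where=valid)

# Hypothetical references: dark level 100 ADU, white level 3100 ADU,
# for 4 spatial pixels and 3 wavelength bands; the cube has 2 scan lines.
dark = np.full((4, 3), 100.0)
white = np.full((4, 3), 3100.0)
raw = np.full((2, 4, 3), 1600.0)

R = reflectance(raw, white, dark)
print(R[0, 0, 0])
```

Because the references are line images, NumPy broadcasting applies the same W and B to every scan line, which matches a setup where the references are recorded once per measurement session.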

2.4 Results

2.4.1 Noise sources

Two aspects of both the readout noise and thermal noise were observed for the images obtained with the PMI-1400EC camera. Since the relevant settings regarding these noise sources cannot be varied in the SU128-1.7RT, only data for the PMI-1400EC is given in this section. When the readout speed varies, a dark band is seen at the side of the sensor where the charge is transferred out. At higher readout speeds this band is wider and more intense (Figure 2.4).

With higher integration times pixels in the upper right corner of the CCD show higher values. This effect might be caused by heat leakage from the preamplifier electronics on the chip, which is situated close to this corner on the sensor chip (Figure 2.5).

In the middle of the chip no systematic effects are seen. Random noise, however, can be observed.

Table 2.2: Standard deviation (σ) of the whole image for the different integration times [ms] and readout speeds.

| readout speed | 50 | 100 | 500 | 1000 | 5000 | 10000 |
|---|---|---|---|---|---|---|
| 500 kHz | 3.27 | 3.28 | 3.36 | 3.40 | 8.44 | 17.16 |
| 1 MHz | 3.55 | 3.58 | 3.64 | 3.66 | 8.37 | 17.02 |
| 4 MHz | 3.76 | 3.75 | 3.78 | 3.86 | 8.53 | 17.03 |
| 8 MHz | 3.69 | 3.62 | 3.69 | 3.84 | 8.57 | 16.96 |


Figure 2.4: Readout levels (left) and readout noise (right) of all pixels. The readout speed is 500 kHz (a,b), 1 MHz (c,d), 4 MHz (e,f), and 8 MHz (g,h). The integration time is 50 ms. The range in the left image is 15 ADU, the range in the right image is 3 ADU.


Figure 2.5: Dark current levels (a,c,e) and noise (b,d,f) of all pixels. The integration time is 100 (a,b), 1000 (c,d) and 10000 ms (e,f). The readout speed is 500 kHz. The range in the left image is 40 ADU, the range in the right image is 3 ADU.

Table 2.3: Standard deviation (σ) of the center of the image for the different integration times [ms] and readout speeds.

| readout speed | 50 | 100 | 500 | 1000 | 5000 | 10000 |
|---|---|---|---|---|---|---|
| 500 kHz | 3.02 | 3.03 | 3.11 | 3.13 | 3.52 | 4.53 |
| 1 MHz | 3.16 | 3.17 | 3.24 | 3.25 | 3.77 | 4.54 |
| 4 MHz | 3.11 | 3.1 | 3.15 | 3.19 | 3.61 | 4.44 |
| 8 MHz | 2.98 | 2.94 | 3.01 | 3.05 | 3.5 | 4.57 |


[Plot: black level noise [dB] versus sensor temperature [°C] for integration times of 1 s, 5 s and 10 s]

Figure 2.6: Dark current noise as function of the sensor temperature at different integration times.

Table 2.2 shows the dark current noise of the whole image for different integration times and readout speeds. Table 2.3 shows this noise for a 400 × 400 pixels region in the middle of the chip. From Table 2.2 it is clear that with increased integration time the hot spot in the corner severely increases the noise of the image. Higher readout speeds do not contribute much to the noise, but from the observed images it can be seen that with higher readout speed the disturbing band broadens. On the other hand the amplitude of the band is only 15 (from 4096) ADUs in the worst case, and since it is a systematic effect, the images can easily be corrected by either subtracting the dark current image, or by bicubic interpolation of the surrounding pixels. Subtracting the dark current image assumes equal gain for hot pixels and other pixels. This was proven to be true for the PMI-1400EC as long as the hot pixels were not saturated. From Table 2.3 we learn that random noise increases with longer integration times, and readout speed does not have a significant effect on the random noise.
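Both corrections mentioned above can be sketched in a few lines. This is an illustrative simplification: the dark image is simply subtracted, and flagged hot pixels are replaced by the mean of their unflagged 8-neighbours rather than by the bicubic interpolation named in the text. The function names, the hot-pixel mask and the synthetic frame are assumptions for the example.

```python
import numpy as np

def subtract_dark(frame, dark):
    """Remove the systematic dark-current pattern (assumes equal gain for all pixels)."""
    return np.asarray(frame, dtype=float) - np.asarray(dark, dtype=float)

def repair_hot_pixels(image, hot_mask):
    """Replace flagged pixels by the mean of their unflagged 8-neighbours."""
    img = np.asarray(image, dtype=float)
    valid = ~hot_mask
    padded_img = np.pad(np.where(valid, img, 0.0), 1, mode="constant")
    padded_valid = np.pad(valid.astype(float), 1, mode="constant")
    rows, cols = img.shape
    total = np.zeros_like(img)
    count = np.zeros_like(img)
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue                      # skip the pixel itself
            total += padded_img[1 + dr: 1 + dr + rows, 1 + dc: 1 + dc + cols]
            count += padded_valid[1 + dr: 1 + dr + rows, 1 + dc: 1 + dc + cols]
    repaired = img.copy()
    fix = hot_mask & (count > 0)              # only repair where a valid neighbour exists
    repaired[fix] = total[fix] / count[fix]
    return repaired

# Synthetic 5 x 5 frame with one hot pixel at a known position.
frame = np.full((5, 5), 10.0)
frame[2, 2] = 1000.0
hot_mask = np.zeros((5, 5), dtype=bool)
hot_mask[2, 2] = True

repaired = repair_hot_pixels(frame, hot_mask)
print(repaired[2, 2])
```

Neighbour averaging is the cheaper of the two options and does not rely on the equal-gain assumption, but unlike dark subtraction it discards whatever signal the hot pixel still carried.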

Another point of interest is the dependency of the noise on the sensor temperature. The PMI-1400EC is cooled to about 30 °C below ambient temperature, so the sensor temperature varies with the room temperature. Figure 2.6 shows the relation between the sensor temperature and the black level noise at integration times of 1, 5 and 10 seconds. From this graph we learn that for integration times > 1 second, the black level noise increases when the sensor temperature increases. When the integration time is 1 second this is only significant for sensor temperatures > 5 °C. To minimize noise induced by the readout process, or the preamplifier heat leakage, the noise was calculated over a region of 700 × 500 pixels in the center of the image.


2.4.2 Sensitivity and SNR

In Figure 2.7 the SNR of spectral images of the six neutral patches from the Macbeth color checker are plotted as a function of wavelength using the V7 system. The integration time was 120 ms and the readout speed 500 kHz. The patches were illuminated with a Quartz halogen lamp (Figure 2.7a) and with a combination of a halogen lamp and a blue fluorescent tube (Arcadia Marine Blue Actinic) (Figure 2.7b). Note that in Figure 2.7a, the SNR in the blue part of the spectrum is much lower than in Figure 2.7b. For the V9 and N17 system similar results were obtained.

2.4.3 Spectral calibration

Table 2.4 shows the measured peaks for the different calibration sources and systems.

For all peaks their spectral pixel locations were estimated based on the first-, second- and third-order polynomial fit through the pixel positions on the λ axis. Table 2.5 shows the residual mean square error of these fits for all the combinations.

The residuals of the regression for the three systems are plotted in Figures 2.8, 2.9 and 2.10. From these graphs and Table 2.5 it is clear that the third order fit of the HgAr source gives the best result for system I and II. The TLD fluorescence lamp has too few peaks to fit a third-order polynomial. Calibration with the narrow band CVI filters performs worse because the center of each filter is less precisely defined than the HgAr emission peaks. For system III (N17) only one calibration source was available. The total observed wavelength range for each system is given in Table 2.6.

The measurements of Table 2.4 were done at the center of the spatial axis of the ImSpector. Due to aberrations in the grating the spectral lines are slightly bent across the spatial axis. Figure 2.11 shows this effect for the V7 system. The amount of bending is wavelength dependent. In our case this bending was 1.3 nm at a wavelength of 670 nm. Therefore for optimal calibration the procedure needs to be carried out at all pixels along the spatial axis.

2.4.4 Spectral and spatial resolution

Spectral resolution of the three systems was measured using a laser line of 670 nm for system I and II. For system III a 1357 nm peak of the HgAr calibration source was used. Binning on the cameras was turned off. The results are given in Table 2.7.

The measured spectral resolutions were in accordance with the ImSpector specifications (Table 2.1). In order to investigate how sensitive the resolution is to small errors in the back focal length, the FWHM resolution of the V7 and V9 ImSpector was measured at different back focal distances. Figure 2.12 shows the result. From this figure we see that a 10% error in back focal distance doubles (V9) or triples (V7) the spot size.

Spatial resolution measurements for the V7 and V9 system were carried out with a Nikon 35 mm lens in front of the ImSpector. The lens aperture was f/11 and the distance to the target 550 mm. This results in an image width of 132 mm. To investigate the wavelength dependency of the spatial resolution the images were analyzed at three different


[Figure 2.7, two panels: (a) SNR, quartz halogen illumination, for CIE Y = 90, 59, 36, 20, 9 and 3; (b) SNR, halogen and blue illumination, for CIE Y = 90, 36 and 9. Horizontal axis: wavelength [nm], 350 to 750; vertical axis: SNR [dB], 25 to 50.]

Figure 2.7: Signal-to-noise ratio of spectral images from the six neutral patches of the Macbeth ColorChecker. In (a) only quartz halogen illumination is used; (b) shows the result for halogen plus blue illumination for three of the six patches.


Table 2.4: Detected peaks for the different calibration sources: HgAr (mercury argon calibration source), TLD58W (Philips fluorescent lamp), CVI narrow-band interference filters, and polyethylene (PE) and polystyrene (PS) reflection.

HgAr      TLD58W    CVI       PS        PE        Pixel position
(λ [nm])  (λ [nm])  (λ [nm])  (λ [nm])  (λ [nm])  V7  V9  N17

402.9 73.5
404.66 38.90
435.84 435.84 141.50 39.0
546.08 546.08 485.00 269.0
455.4 194.0 77.5
500.0 350.0 177.5
554.3 514.5 287.0
576.96 578.25
578.02 333.0
579.07 584.70
598.2 644.5 371.5
612.00 612.00 399.0
649.8 796.0 470.5
696.54 934.70 565.0
696.8 939.0 558.0
706.72 964.90 584.5
706.72 587.0
714.70 988.40 600.0
727.29 1025.70 624.5
738.40 645.5
750.93 669.5
763.51 694.5
772.40 711.5
794.82 754.5
801.05 766.5
810.95 786.0
826.45 815.5
841.64 845.0
852.14 861.0
866.80 893.0
910.8 (a) 918.0
912.30 981.0
922.45 1000.0
1144 41
1192 47
1210 51
1414 84
1682 121

(a) 910.8 nm is the second-order peak of the 455.4 nm filter.


[Figure 2.8, three panels: HgAr V7, TLD V7 and CVI V7. Horizontal axis: wavelength [nm]; vertical axis: residual (actual - predicted) [nm]; curves: first-, second- and third-order fit.]

Figure 2.8: Residual plot of the first-, second- and third-order linear fit for the three calibration sources of system I (V7).


[Figure 2.9, three panels: HgAr V9, TLD V9 and CVI V9. Horizontal axis: wavelength [nm]; vertical axis: residual (actual - predicted) [nm]; curves: first-, second- and third-order fit.]

Figure 2.9: Residual plot of the first-, second- and third-order linear fit for the three calibration sources of system II (V9).


Table 2.5: The residual mean square error of the first-, second- and third-order polynomial fit for the three calibration sources for all systems. N is the number of discernible peaks.

System     Source       First order  Second order  Third order  N
I (V7)     HgAr          5.01         0.76          0.18         9
           TLD58W        2.27         0.08          0            4
           CVI filters   8.88         8.72          7.49         7
II (V9)    HgAr          7.83         3.01          0.79        19
           TLD58W        6.81         0.84          0.77         5
           CVI filters  20.15         1.72          1.68         7
III (N17)  Polystyrene  17.98         8.75          4.64         5

[Figure 2.10, one panel: Polystyrene N17. Horizontal axis: wavelength [nm], 1100 to 1700; vertical axis: residual (actual - predicted) [nm]; curves: first-, second- and third-order fit.]

Figure 2.10: Residual plot of the first-, second- and third-order linear fit for the calibration of system III (N17).

Table 2.6: Observed wavelength range of the three systems.

System     Range [nm]
I (V7)     393 - 730
II (V9)    418 - 940
III (N17)  800 - 1747


[Figure 2.11: x-λ image with a bent spectral line and a straight horizontal reference line marked.]

Figure 2.11: An x-λ image of the spectrum of the Arcadia blue fluorescent tube, recorded by the V7 system. In the image a slight bending of the discrete spectral lines can be observed.

[Figure 2.12: curves for the V7 and the V9. Horizontal axis: back focal length [mm], 17 to 23; vertical axis: FWHM [nm], 0 to 16.]

Figure 2.12: Spectral resolution (FWHM) as function of the back focal distance.


Table 2.7: Resolution measurements of the three systems for λ, x and y. The values for x and y are given for the sensor plane. The wavelength [nm] at which each measurement was done is given in brackets.

                     System
                     I (V7)       II (V9)      III (N17)
λ  FWHM [nm]         0.9 (670)    3.1 (670)    14.8 (1357)
x  FWHM [µm]         25.8 (500)   36 (500)     78 (1000)
                     23.1 (600)   17.7 (700)   108 (1300)
                     16.3 (700)   23.8 (900)   150 (1600)
   10-90% [µm]       68 (500)     54.4 (500)   120 (1000)
                     47.6 (600)   34 (700)     120 (1300)
                     40.8 (700)   61.2 (900)   180 (1600)
   USAF [lp mm^-1]   38 (500)     15 (500)     5.4 (1000)
                     38 (600)     38 (700)     5.4 (1300)
                     47 (700)     26 (900)     4.3 (1600)
y  FWHM [µm]         25.2 (500)   47.6 (500)   108 (1000)
                     17 (600)     47.6 (700)   96 (1300)
                     16.3 (700)   57.8 (900)   96 (1600)
   10-90% [µm]       54.4 (500)   54.4 (500)   120 (1000)
                     34 (600)     54.5 (700)   120 (1300)
                     40.8 (700)   61.2 (900)   120 (1600)
   USAF [lp mm^-1]   33 (500)     15 (500)     5.4 (1000)
                     42 (600)     15 (700)     5.4 (1300)
                     42 (700)     15 (900)     5.4 (1600)

wavelengths: 500, 600 and 700 nm for the V7 and 500, 700 and 900 nm for the V9. The spatial resolution in the x direction was measured with a black-to-white step transition. The spatial resolution was obtained by calculating the FWHM of the derivative of the B-spline interpolated grey levels of this step edge (Figure 2.13). The 10-90% points and the spatial frequency using the USAF 1951 test chart were also measured. Unfortunately the 10-90% method is disturbed by noise at the low and high values of the step edge, giving a less reliable value than the FWHM method. This is even more the case in the lower region of the spectrum, where the system is less sensitive. The test chart images were interpreted by human observation and are therefore also less accurate. Figure 2.14 shows monochromatic images at 650 nm of a part of the test target captured with the V7 and V9 ImSpector.
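The two edge-based measures can be sketched on a synthetic step edge. This is a simplified illustration, not the procedure used for Table 2.7: central differences and linear interpolation stand in for the B-spline interpolation, and the function names, the Gaussian-blurred edge and its width `sigma` are assumptions. Results are in pixels.

```python
import math
import numpy as np


def edge_fwhm(x, level):
    """FWHM of the derivative of a dark-to-bright step edge, in units of x."""
    deriv = np.gradient(level, x)
    peak = int(np.argmax(deriv))
    half = deriv[peak] / 2.0
    i = peak
    while i > 0 and deriv[i - 1] > half:           # walk left to half maximum
        i -= 1
    j = peak
    while j < len(x) - 1 and deriv[j + 1] > half:  # walk right to half maximum
        j += 1
    if i == 0 or j == len(x) - 1:
        raise ValueError("edge is not fully inside the profile")
    # Linear interpolation of both half-maximum crossings.
    left = x[i - 1] + (half - deriv[i - 1]) / (deriv[i] - deriv[i - 1]) * (x[i] - x[i - 1])
    right = x[j] + (deriv[j] - half) / (deriv[j] - deriv[j + 1]) * (x[j + 1] - x[j])
    return right - left


def edge_10_90(x, level):
    """Distance between the 10% and 90% points; assumes a rising, noise-free edge."""
    lo, hi = level.min(), level.max()
    x10 = np.interp(lo + 0.1 * (hi - lo), level, x)
    x90 = np.interp(lo + 0.9 * (hi - lo), level, x)
    return x90 - x10


# Synthetic edge: ideal step blurred by a Gaussian with sigma = 2 pixels.
sigma = 2.0
x = np.arange(31.0)
level = np.array([1000.0 * (1.0 + math.erf((v - 15.0) / (sigma * math.sqrt(2.0))))
                  for v in x])

fwhm = edge_fwhm(x, level)
width = edge_10_90(x, level)
print(f"FWHM = {fwhm:.2f} pixels, 10-90% width = {width:.2f} pixels")
```

The 10-90% measure depends on the estimated low and high plateau levels (`lo` and `hi`), which is where noise enters; the FWHM depends only on the shape of the derivative around its peak.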

Spatial resolution measurements for the N17 system were performed with an Electrophysics 25 mm lens in front of the ImSpector. The lens aperture was f/8 and the distance to


[Figure 2.13: step edge and its derivative, with the FWHM indicated. Horizontal axis: x position [pixel], 0 to 30; vertical axis: level [ADU].]

Figure 2.13: FWHM, determined by taking the derivative of a step edge recorded with system I (V7).

[Figure 2.14, two panels: ImSpector V7 and ImSpector V9. Horizontal axis: x-axis [pixels]; vertical axis: y-axis [0.1 mm steps].]

Figure 2.14: Monochromatic images of part of the USAF test target at 650 nm captured with the V7 and the V9 ImSpector.


[Figure 2.15: curves for step sizes of 0.05, 0.1, 0.2 and 0.4 mm. Horizontal axis: y position [mm], 0 to 2.5; vertical axis: amplitude.]

Figure 2.15: Intensity values of a small piece of the USAF chart captured with system I (V7). Step sizes in the y direction were 0.05 - 0.4 mm.

the target 400 mm. This results in an image width of 100 mm. The images were analyzed at wavelengths of 1000, 1300 and 1600 nm. The results are given in Table 2.7. From Table 2.7 we also see that both the x and y resolution depend on the wavelength.

In the y direction the spatial resolution was measured at different step sizes of the translation table. The measured values are also given in Table 2.7. Figure 2.15 shows the measured intensities using the V7 system as function of the y position. For step sizes of 0.05 and 0.1 mm the spatial resolution was the same as in the x direction. For step sizes over 0.1 mm the resolution is limited by the step size, as the data are then under-sampled.
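The effect of the step size can be illustrated with the sampling limit alone: resolving one line pair takes at least two samples, so a scan with step size s cannot carry spatial frequencies above 1/(2s) in the object plane. The sketch below evaluates this bound for the step sizes of Figure 2.15. The function name is hypothetical, and the bound ignores the optical resolution, which sets the limit at the smaller step sizes.

```python
def nyquist_limit(step_mm):
    """Highest spatial frequency [lp/mm] that sampling every step_mm can carry."""
    if step_mm <= 0:
        raise ValueError("step size must be positive")
    return 1.0 / (2.0 * step_mm)


# Step sizes of the translation table used for Figure 2.15.
limits = {step: nyquist_limit(step) for step in (0.05, 0.1, 0.2, 0.4)}
for step, limit in limits.items():
    print(f"step {step:.2f} mm: at most {limit:.2f} lp/mm in the object plane")
```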

2.5 Discussion

From Figures 2.8 to 2.10 and Table 2.5 we can conclude that the third-order polynomial term contributes significantly to the fit only for the HgAr calibration source. For the other two calibration sources a second-order fit is sufficient. Due to the large number of well-defined narrow peaks, the HgAr calibration source is the most precise. It also gives the best fit, which implies that we found the most suitable model using this calibration source. A drawback of the HgAr source is its low-energy radiation: for a per-column calibration the HgAr source has to be scanned along the slit dimension. A good alternative is to use the excitation peaks of a fluorescent tube, whose high energy allows illumination of a whole line. This makes it possible to do a per-column spectral calibration. A disadvantage of the fluorescent


tube is the low number of available peaks, which makes a higher-order fit less reliable. This source might be ideal, however, for checking the calibration of a spectrograph while it is in operation. The wavelength stability of the fluorescent tube is the same as that of the HgAr source as long as only the excitation peaks are used. The emission spectra of the phosphors are very sensitive to ambient temperature changes and are not suitable for spectral calibration [2]. The CVI filters are also sensitive to changes in environment, with temperature and humidity being the most critical factors [3].

From Figure 2.11 we learned that the spectrometer optics induce a slight bending of the spectral lines. A per-column spectral calibration is therefore needed.

Standard halogen lighting has low energy in the blue part of the spectrum, which considerably decreases the SNR at short wavelengths, even though a blue-enhanced sensor was used. Additional fluorescent illumination reduces this problem. However, the excitation peaks of fluorescent tubes can seriously hamper the reflection measurements around the peaks. The marine blue tube we used in combination with the halogen light source showed only two clear excitation peaks. One peak is inside the main emission spectrum and cannot be filtered out. The other one is at 546 nm and could easily be filtered out. Alternatives are xenon bulbs and flash lights. Xenon bulbs are expensive, however, and show only marginal improvement in the blue part of the spectrum. Xenon flash lights provide high power and a reasonable spectrum, but are less flexible and their intensity can vary between flashes. High-power blue LEDs are still not powerful enough, but this might change in the near future. The glass fibre light guide and cylindrical lens used in the VIS systems disturb the spectral power distribution in the NIR range. For this reason the N17 uses a halogen flood light. The drawback of this illumination scheme is the loss of energy and the heat production, since focusing of the light is quite difficult. All illumination systems show variations in intensity and spectral power distribution with time. The main reason is aging of the illuminant. For proper spectral measurements, therefore, images need to be corrected with a recording of a stable white or grey reference with a constant reflectance percentage over all wavelengths. This correction needs to be done at regular time intervals.

The narrow slit (13 µm) in the V7 gives a spectral resolution better than 1 nm. It also optimises the spatial resolution, although it considerably reduces the amount of light. A trade-off should therefore be made between light intensity and spectral/spatial resolution with respect to the slit size. A sensitive scientific camera like the PMI-1400EC can be used to capture images of reasonable quality under low light conditions.

Noise sources and total SNR of such cameras can be characterized. In our situation readout noise showed a slight systematic pattern, most prominently at high readout speed. This can easily be corrected and has only a marginal effect compared with other noise sources. Therefore the highest readout speed can be used in our system. Dark current was only noticeable at integration times of more than 1 second. At longer integration times there was also a systematic effect in one corner of the sensor, caused by heat leakage of the on-chip electronics. For accurate imaging, this corner should be discarded at long integration times. Due to drift in the dark current caused by possible changes in temperature, readout speed or shutter speed, the correction for these noise sources also needs to be performed regularly.
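The reference and dark corrections discussed above are commonly combined into a single per-pixel normalisation. The sketch below shows one usual form of it under stated assumptions: the function name, the array layout and the synthetic numbers are hypothetical, and the formula is the standard two-point correction rather than a procedure quoted from this chapter.

```python
import numpy as np


def to_reflectance(raw, white, dark, reference_reflectance=1.0):
    """Convert raw counts to reflectance using dark and reference recordings.

    raw, white and dark are arrays of identical shape (for example x by
    wavelength); reference_reflectance is the known reflectance of the
    white or grey reference, assumed constant over all wavelengths.
    """
    raw, white, dark = (np.asarray(a, dtype=float) for a in (raw, white, dark))
    span = white - dark
    ratio = np.full(raw.shape, np.nan)  # NaN where the reference gave no signal
    np.divide(raw - dark, span, out=ratio, where=span > 0)
    return reference_reflectance * ratio


# Synthetic check: uneven illumination, constant dark offset, 90% grey reference.
illumination = np.linspace(200.0, 3000.0, 8).reshape(2, 4)
dark = np.full((2, 4), 100.0)
white = dark + 0.9 * illumination   # recording of the grey reference
raw = dark + 0.5 * illumination     # object with 50% reflectance

reflectance = to_reflectance(raw, white, dark, reference_reflectance=0.9)
print(reflectance)
```

Because both the illuminant and the dark current drift, the `white` and `dark` recordings have to be refreshed at regular intervals for the result to stay valid.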

-

36 References