G-MPSoC: Generic Massively Parallel Architecture on FPGA€¦ · effectiveness, these solutions are...

Transcript of G-MPSoC: Generic Massively Parallel Architecture on FPGA€¦ · effectiveness, these solutions are...

G-MPSoC: Generic Massively Parallel Architecture on FPGA

HANA KRICHENEUniversity of Lille 1

INRIA Lille Nord EuropeLille - FranceENIS School

CES LaboratorySfax - Tunisia

MOUNA BAKLOUTIMOHAMED ABID

ENIS SchoolCES LaboratorySfax - Tunisia

[email protected]@enis.rnu.tn

PHILIPPE MARQUETJEAN-LUC DEKEYSER

University of Lille 1INRIA Lille Nord Europe

Lille - [email protected]@univ-lille1.fr

Abstract: Nowadays, recent intensive signal processing applications are evolving and are characterized by thediversity of algorithms (filtering, correlation, etc.) and their numerous parameters. Having a flexible and pro-grammable system that adapts to changing and various characteristics of these applications reduces the designcost. In this context, we propose in this paper Generic Massively Parallel architecture (G-MPSoC). G-MPSoC is aSystem-on-Chip based on a grid of clusters of Hardware and Software Computation Elements with different size,performance, and complexity. It is composed of parametric IP-reused modules: processor, controller, accelerator,memory, interconnection network, etc. to build different architecture configurations. The generic structure of G-MPSoC facilitates its adaptation to the intensive signal processing applications requirements. This paper presentsG-MPSoC architecture and details its different components. The FPGA-based implementation and the experimen-tal results validate the architectural model choice and show the effectiveness of this design.

Key–Words: SoC, FPGA, MPP, Generic architecture, parallelism, IP-reused

1 IntroductionThe intensive signal processing applications are in-creasingly oriented to specialized hardware accel-erators, which allow rapid treatment for specifictasks. However, these applications are characterizedby repetitive tasks performing multiple data, whichrequire massive parallelism for their efficient exe-cution. To achieve high performance required bythese applications, many massively parallel System-on-Chips are proposed [1, 7, 5, 2]. Despite theireffectiveness, these solutions are still dedicated tospecific applications and it is generally difficult tomake future changes to adapt new applications. Toaddress this problem, this paper proposes a novelGeneric Massively Parallel System-on-Chip, namedG-MPSoC. This system, based on modular struc-ture, allows building different configurations to covera wide range of intensive signal processing appli-cations. The architectural model of G-MPSoC canintegrate software homogeneous computation unitsor hardware(accelerator)/software(processor) hetero-geneous computation units. This generic feature al-lows the best partition of the specific tasks on thecomputational resources. To implement this system,the FPGA platform is targeted to exploit its reconfig-urable structure, which facilitates the test of differentG-MPSoC configurations with rapid re-design or cir-

cuit layout modifications. In this work, Xilinx Virtex6ML605 board is used to implement the G-MPSoC ar-chitecture and to evaluate the experimental results.

The remainder of the paper is structured as fol-lows: Section 2 discusses some related works; sec-tion 3 describes the proposed architecture and its ex-ecution model; the implementation methodology onFPGA board is detailed in Section 4; then, the exper-imental results are discussed in Section 5; and finally,Section 6 concludes the paper and proposes some per-spectives.

2 Related workNowadays, the digital embedded systems migrate to-ward the massively parallel on-Chip design, due to itsprovided high performance. Among these systems,we note the General-Purpose Processing on Graph-ics Processing Units (GP-GPU) [2, 24], which is amassively parallel System-on-Chip based on a hy-brid model between vector processing and hardwarethreading. It allows high performance by hiding thememory latency [1]. But, it loses efficiency with thelarge branch divergence between executing threads [3]when data dependency is demanded in intensive sig-nal (image, sound, motion...) processing applications.In this gap, Platform 2012 (P2012) [1] is positioned

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 456 Volume 14, 2015

with highly coupled building blocks based on clus-ter of extensible processors varying from 1 to 16 andshared the same memory. Despite its high scalabil-ity, this architecture still specialized for limited rangeof applications such as multi-modal sensor fusion andimage understanding. To provide more flexibility, aheterogeneous extension to the P2012 platform is pro-posed with He-P2012 [6] architecture. In He-P2012,the clusters of PEs used in P2012 are tightly cou-pled with hardware accelerators. All of them sharethe same data memory. With this architecture, a pro-gramming model is proposed, allowing the dispatch ofHardware and Software tasks with the same delay. En-large the initial software platform by additional hard-ware blocks can increase the design complexity withmultiple communication interfaces. Others architec-tures have adopted the same strategy of massivelyparallel shared memory architecture, such as STMSTHORM [1], Kalray MPPA [7], Plurality HAL [8],the NVIDIA Fermi GPU [9], etc. Although their pro-vided high performance, sharing the same memoryunit can limit the system bandwidth and cause somedata access congestion, which limit the system scal-ability. The autonomous control structure for mas-sively parallel System-on-Chip is proposed with theMPPA [4] architecture and Event-Driven MassivelyParallel Fine-Grained Processor Array [25], execut-ing in MIMD fashion. They provide more flexibil-ity with asynchronous execution than the previouscentralized and synchronous architectures. But, theuse of a completely decentralized processing structuremakes the control task of data transfer between inde-pendent computation units difficult to achieve.

To overcome the limits of the existing proposedmassively parallel architectures on-chip, we define aGeneric Massively Parallel SoC (G-MPSoC), allow-ing the execution of wide range of intensive signalprocessing applications. To meet the high perfor-mance requirements of these applications, this genericarchitecture can have different configurations goingfrom simple homogeneous structure to clustered het-erogeneous structure communicated through regularNetwork-on-Chip (NoC) and controlled by hierarchi-cal master-slave control structure [14]. This design isimplemented into FPGA platform, which allows rapidreconfiguration of the architecture according to thedesigner needs. This reconfigurability based on pro-grammable logic elements facilitates the system scal-ability.

In the next section, we detail the major compo-nents of G-MPSoC.

3 Generic Massively Parallel Ar-chitecture based on SynchronousCommunication AsynchronousComputation

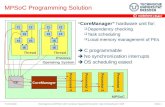

3.1 G-MPSoC architecture overviewThe G-MPSoC architecture is composed of a Mas-ter Controller Unit (MCU) connected to its sequen-tial instructions memory, called MCU-memory, anda grid of Slave Control Units (SCUs). Each SCU isconnected to a cluster of 16 Computation Elements(CEs), known collectively as Node. The CE can be aSoftware Processing Element (PE) or a Hardware spe-cialized Accelerator-IP. Each CE is connected to itslocal instructions and data memories, called Mi mem-ory. The parameter i is relative to the CE number. TheMCU and SCUs grid are connected through a bus withsingle hierarchical level and the SCUs are connectedtogether through neighbourhood interconnection net-work. Fig. 1 shows the hardware implementation ofthe G-MPSoC. Bellow, we present the design of theG-MPSoC components.

MCU Memory

MCU

Nodes grid

Global Control

SCU (0,0)

SCU (1,0)

SCU (2,0)

SCU (0,1)

SCU (1,1)

SCU (2,1)

SCU (0,2)

SCU (1,2)

SCU (2,2)

M0

M1

M15

CE0

CE1

CE15

M0

M1

M15

CE0

CE1

CE15

M0

M1

M15

CE0

CE1

CE15

M0

M1

M15

CE0

CE1

CE15

M0

M1

M15

CE0

CE1

CE15

M0

M1

M15

CE0

CE1

CE15

M0

M1

M15

CE0

CE1

CE15

M0

M1

M15

CE0

CE1

CE15

M0

M1

M15

CE0

CE1

CE15

Memory Connection CE Connection Network Connection

Figure 1: G-MPSoC architecture

3.1.1 MCUThe MCU is the first execution and control level inG-MPSoC. It is a simple processor, which fetches anddecodes program instructions, executes sequential in-structions and broadcasts mask activity and parallelcontrol instructions to SCUs. In a parallel execution,MCU remains in the idle state and controls the endsignal to resume the main program execution. In G-MPSoC, the MCU is based on modified FC16 [10],which is a stack processor with short 16-bits instruc-tions. It is open source and fully implemented inVHDL language, which allows rapid prototyping in

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 457 Volume 14, 2015

FPGA with instructions set simple to expand. Somespecific instructions are added to the FC16 instruc-tions set, mainly:

• Mask instructionsTo activate the involved nodes in the parallel ex-ecution, the MCU executes mask instructions, aspresented in table 1, and broadcasts the maskmap and mask code to all SCUs. The mask iscoded in 32 bits, to address an array of (16x16)nodes.

Table 1: Mask instructionsinstruction OpCode Mask

CodeDefinition

selbf 0x0080 (000) Activate SCUs se-lected by the mask.

selbfand 0x0081 (001) Activate SCUs in theintersection of the cur-rent mask and previousone.

selbfor 0x0082 (010) Activate SCUs in theunion of the currentmask and previousone.

selbfxor 0x0083 (011) Activate SCUs in theunion of the currentmask and previous oneexcept the intersectionpart.

• Broadcast instructionsCoded in 32 bits, the broadcast instructions iden-tify which area executes parallel control instruc-tions (table 2). Once the mask is mapped into theSCUs grid and when the broadcast instruction isexecuted, the MCU fetches the parallel controlinstruction, and then broadcasts it to all selectedSCUs followed by the broadcast code.

Table 2: Mask instructionsinstruction OpCode Mask

CodeDefinition

brdbf 0x0084 (100) Broadcast parallelcontrol instructions toactive SCUs.

brdbfb 0x0085 (101) Broadcast parallelcontrol instructions toinactive SCUs.

brdall 0x0086 (111) Broadcast parallelcontrol instructions toall the SCUs.

• Wait end instructionWhen executing this instruction, the MCU re-

mains in idle state until the end of the parallel ex-ecution. It is a synchronising instruction, whichdepends on the end-execution signal value.

The MCU is connected to the SCUs grid through abidirectional bus to communicate the parallel controlinstructions from MCU to SCUs and the computationresults from CEs to MCU via SCUs. The end signalconnects all SCUs to inform the MCU of the end ofthe parallel execution.

3.1.2 SCUs arrayThe second execution and control level in G-MPSoCis presented by the SCUs array. Each SCU controls:the local node activity, the autonomous parallel execu-tion in the CEs, the end execution and the synchronouscommunication in the interconnection network. Asshown in fig. 2, it is composed of four modules, whereeach one of them independently performs a specificcontrol function, as detailed in [14].

SCU_ Activity

scu_inst

scu_code

xnet_com

ce_inst

simd_scac

r_w

Dir

Local_Control

E

CMD(15:0)

SCU_ORTREE

A

xnet_a

SLCU_COM

COM_Control

SCU_ROUTER

CO

M

D

A

CO

M

CO

M

ins

t

data_rce

SCU

CE

Inte

rface

ortree end

Netw

ork

Inte

rfac

e

MC

U in

terf

ace

go

ISCU(15:0)

scu_data

Figure 2: SCU design

• SCU Activity moduleThis module receives the mask activity and theparallel control instruction from the MCU. Then,according to the mask code and broadcast code,it sets the activity-flag and transfers the paral-lel control instruction to the Local Control mod-ule, respectively. We notice that the use ofSCU Activity module in G-MPSoC architectureallows the sub-netting of the SCUs grid, whichoptimizes the data flow transfer and increases theparallel broadcast domains.

• Local Control moduleAfter sub-netting the network, the parallel con-trol instructions are broadcast to a Local-Control

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 458 Volume 14, 2015

module. This module prepares the communica-tion phase and controls the CEs execution. Themain sub-module in Local-Control is the Instruc-tion Decoder, which decodes the instructions re-ceived from the SCU Activity module. These in-structions are coded in 32 bits, as detailed in [14]:the first 16 bits represent the control micro-instruction (CMD) and the last 16 bits represent:the address of the parallel instructions block, thesingle parallel SIMD instruction (P INST) or thevalue of the communicated data.

• SCU COM moduleThe SCU components in G-MPSoC are con-nected in two-dimensional neighbourhood inter-connection network via the SCU-COM mod-ule. It allows the SCU to communicate withits 8 neighbours using only 6 connections. TheSCU-COM is composed of COM Control andSCU router sub-modules. The COM Controlsub-module manages the data transfer accord-ing to the communication instructions. TheSCU router sub-module itself is composed oftwo routers, as presented in the fig. 3.

Local Direction S E

MUX

DEMUX

(a)

(b)

Figure 3: Architecture of the SCU-Router(a) R-SCUXnet - (b) R-Xnet

All the communications take place in the samedirection, so there is no messages congestion inthe same data transfer port. This feature allowsthe simple design of the routing element:

– R-SCUXnet manages directions and dis-tances of communications. It is composedof a couple of 4:1 mux/demux, allowingsynchronous data transfer.

– R-Xnet allows simultaneous connection ofany pair of non-occupied ports according tothe given direction.

Table 3: X-net directionsDirection Code R-SCUXnet R-Xnet

↖ North West 0 0 ↖ 0 ↖↑ North 1 0 ↖ 1 ↗↗ North East 2 1 ↗ 1 ↗→ East 3 1 ↗ 2 ↘↘ South East 4 2 ↘ 2 ↘↓ South 5 2 ↘ 3 ↙↙ South West 6 3 ↙ 3 ↙← West 7 3 ↙ 0 ↖

Each SCU-Router can take 4 different directions,allowing the data transfer in 8 directions, as de-tailed in table 3.

When the COM-Control decodes the communi-cation instructions, it orders the COM-Router toopen the selected ports of the couple (R-Xnet,R-SCUXnet) to achieve the data transfer in thespecified direction. A COM-Control handles thecommunication requests and transfers data fromthe local SCU to the neighbour SCU in a givendirection. Once the communication is estab-lished, data will be stored in R COM register tobe used by the requester CE.

• OR Tree moduleThe barrier synchronization is a high latency op-eration in massively parallel systems. Severalsystems have implemented either dedicated bar-rier networks [12] or provided hardware supportwithin existing data networks [13]. The OR-Tree is a mechanism of global OR, checking thestate of the system parallel processing. It is com-posed of a tree of ”OR” gates, which comparesthe end execution signals of all the CEs in pairs.It is a barrier synchronization that allows the con-trollers to know if all activated CEs finished thecomputation. The G-MPSoC supports a hierar-chical OR Tree structure. The first level is in theSCU component to test the end execution in clus-ter of CEs and the second one is in the SCUs gridto test the end execution in all nodes of the sys-tem.

The modular structure of the SCU and the indepen-dent execution of the control functions allow the au-tonomous parallel computation while performing theparallel communication.

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 459 Volume 14, 2015

3.1.3 X-net: neighbourhood interconnection net-work

To maintain efficient execution in embedded systemsand high performance through a selective broadcast,we propose an on-chip regular neighbourhood inter-connection network inspired from the network usedin MP-1 and MP-2 MasPar [22] machines: called X-net Network-on-Chip. The X-net network uses vari-ous configurations according to the data-parallel algo-rithms needs. Therefore, we define different bus sizes(1 bit, 4 bits or 16 bits) and different network topolo-gies 1D (linear and ring) and 2D (mesh and torus).To change from one configuration to another, the de-signer has to use specific parameters (topology andbus size values) to establish the appropriate connec-tions. When the network parameters are selected, theX-net network is generated with the chosen configu-ration. To achieve the re-usability and reconfigurabil-ity, the X-net network directly connects each CE withits 8 nearest neighbours in bidirectional ports throughthe SCU component. In some cases, the extremityconnections in X-net network are wrapped around toform a torus topology, which is used to facilitate thematrix computation algorithms. All SCUs have thesame direction controls. In fact, each SCU can simul-taneously send a data to the northern neighbour andcan receive another data from its southern neighbour.The X-Net uses a bit-state signal to identify nodes thatparticipate in communication. Inactive SCUs can beused as pipeline stages to achieve distant communica-tion. This data transfer through networks occurs with-out conflicts and is achieved by COM S and COM Rinstructions that allow all the SCUs to communicatewith their neighbours in a given direction at a givendistance.

3.1.4 Cluster of CEsThe cluster of CEs is the third execution level in G-MPSoC. It allows the parallel execution through eitherhomogeneous CEs, using the same Software SW-CE,or heterogeneous CEs with different Software SW-CEand Hardware HW-CE.

• SW-CE: Processor Element (PE)Each PE executes its own instructions blockindependently from the others PEs. This au-tonomous execution requires the integration ofa local instruction memory and a local ProgramCounter (PC) in each SW-CE. When the SCUorders the parallel computation, all active SW-CEs start the execution of their local instructionsblocks at the same address on different data.

• HW-CE: IP AcceleratorSome specific functions performed by the pro-

cessor can be assigned to dedicated hardwaremodules, called IP accelerators. It allows exe-cuting a specific function more efficiently thanwith any processor. Regardless of the function toachieve, HW-CE is only sensitive to the triggersignal sent by the SCU controller. Once received,the HW-CE begins the execution, independentlyof the others CEs in the cluster.

The external interface of the CE is the same whateverthe nature of the component (HW-CE or SW-CE) toallow the rapid integration of the CE component in theG-MPSoC architecture. Each CE in the cluster is con-nected to its own data register R CEi located in SCUto store the intermediate results, needed for the com-munication process, and the final result that will betransmitted to the MCU. Each cluster of CEs is con-trolled by its SCU and only performs when receivesthe trigger signal.

3.1.5 Memories• Sequential memory: MCU-memory

The MCU is connected to the program memory.It includes sequential instructions to be executedby MCU and parallel control instructions to bebroadcast to SCUs. The data are stored in thestack of MCU processor.

• Parallel distributed memories: Mi memoryEach SW-CE is connected to its own local Mi

memory. It is divided into an instructions mem-ory, including parallel instructions blocks, and adata memory, including parallel data. Dependingon the SW-CE nature, the designer chooses to in-clude or not the data memory. For example, inthe case where SW-CE is a stack processor, theuse of data memory is not necessary.

The G-MPSoC architecture is designed as para-metric and reconfigurable system, which is able to tar-get various applications through customized architec-ture. Indeed, the number of the nodes (SCUs, CEsand memory size) is parametric in G-MPSoC, allow-ing the easiest scalability of the system. All thesenodes are connected via a reconfigurable interconnec-tion network, which can have one topology among4 (2D (mesh, torus) and 1D (linear, ring)), 1 direc-tion among 8 (N, S, E, O, NE, NO, SE and SO)and 1 distance ranging between 1 and 16. To facili-tate the modification and the reuse of the componentsthat constitute the architecture, a generic implementa-tion is defined, by which several G-MPSoC configu-rations can be built. To configure a G-MPSoC systemfrom a top level design, the designer defines the en-tities of the modules that will be used in the system,

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 460 Volume 14, 2015

specifies their parameters and interconnects them to-gether. Indeed, G-MPSoC instance can range from asimple configuration composed of a MCU connectedto a parametric grid of SCUs, where each SCU isconnected to a single CE, to a complex configurationwhere SCUs are interconnected via a reconfigurableneighbourhood network and each SCU is connectedto a cluster of heterogeneous CEs. Therefore, froma generic architecture a tailored solution can be built,according to the application requirements, in terms ofresources: computation, memorization and communi-cation.

3.2 Execution model of G-MPSoC3.2.1 Synchronous Communication• One-to-all communication: broadcast with mask

The broadcast with mask [11] is a techniqueof dividing a network into two or more sub-networks, where the execution of different in-structions is autonomous. This mechanism startsby activating the SCUs involved in the executionaccording to the mask sent by the MCUs. EachSCU in the grid has a unique number composedof the reference couple (X,Y). This number rep-resents its position relative to X line and Y col-umn. The mask codes set the activity flag of eachSCU. All active SCUs execute control instruc-tions, while the others remain in an idle state.

• Collective regular communicationThe communication in G-MPSoC is defined bythe temporal and spatial regularity of its datatransfer. It is regular, by which all the nodeshave the same degree of connection with theirneighbours and synchronously communicate inthe same direction and distance. This regularcommunication is managed by Send and Receiveinstructions. It is synchronously performed froma single control flow and locally controlled by theslave controllers. Such communication is simpleto design without the overhead of synchroniza-tion mechanism.

3.2.2 Asynchronous ComputationThe master-slave control mechanism [14] is basedon two control levels: the first one (MCU) executessequential instructions and sends parallel control in-structions to the second control level: SCUs. Thesecond level controls the parallel communication inthe interconnection network and the parallel executionin the cluster of CEs. Each CE executes its instruc-tions stream asynchronously from the others, whilethe SCUs manage the synchronous data transfer in the

network. The master-slave control mechanism pro-vides a flexible parallel execution with the use of mul-tiple control flows globally synchronized. This flexi-bility increases the system scalability.

4 G-MPSoC implementationThe choice of the G-MPSoC implementation onFPGA is justified by the flexibility and the re-configurability of this device. It allows the implemen-tation of generic architecture that is effectively tai-lored to the application requirements. The number ofnodes (SCU controllers + CEs calculators) and the lo-cal memories size are parametric. Indeed, a targetedapplication can need many SCUs with many heteroge-neous CEs using short memories, or a small amount ofSCUs and CEs with large memories. This architectureis implemented with VHDL language and targets theXilinx Virtex6 ML605 FPGA [15]. To evaluate the G-MPSoC performance, we use the ISim simulator andthe ISE synthesis Xilinx tools.

The G-MPSoC configuration includes a singleMCU and a grid of nodes. Each node is a hierarchi-cal unit that contains a SCU and its cluster of CEs.Each CE is another hierarchical unit that contains anIP-accelerator or a PE connected to its local mem-ory. Another intermediate hierarchical level, connect-ing several SCUs, has been defined to facilitate therouting process. All processors, used in G-MPSoC ar-chitecture, have a pre-existent FPGA implementation(FC16 [10], HoMade [16]...). We add some signalsto be adapted to the CE generic interface and someinstructions like: the wait GO instruction, to wait forthe trigger signal sent from the SCU, and the end CEinstruction, to inform the SCU of the end of the paral-lel computation. MCU is a modified FC16 processor,where mask, broadcast and wait end instructions areadded. Its interface with the SCUs grid is modified tosupport 32 bits buses and 3 bits mask/broadcast codebus. The IP-accelerator can be a predefined Xilinx IPor an implemented IP using the RTL description. Thememories modules are implemented using the exist-ing FPGA blocks memories.

The connections between the components usedin this design require several signals that consume alarge area. To increase the system scalability, it isnecessary to optimize the use of these signals withoutaffecting the processing. We have proposed to breakall final result connections from SCUs to MCU andwe have only kept the closest signals to MCUs (i.e.the SCUs in the first column). For the others, shiftoperations are performed to transfer the final result tothe SCUs in the first column. This method allows thedecrease of the system area occupancy approximately

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 461 Volume 14, 2015

about 6.26%.

The Synthesis results predict the maximum fre-quency of a given configuration, ranged around88.212MHz This frequency is relative to the usedprocessors frequency (FC16 151.404MHz, HoMade94.117MHz) and the longest critical path in the de-sign. A good place&route of the designed compo-nents is necessary to reduce the length of this criticalpath and to accelerate the signal propagation.

Table 4 gives the resources occupancy for the G-MPSoC components on the targeted FPGA. Depend-ing on the used CE size, G-MPSoC configuration caninclude 100 nodes with FC16 processor, 49 nodeswith HoMade processor or more than 256 nodes withmuladd accelerator. This number of component canbe increased with the use of another FPGA generationwith more provided hardware resources to reach thedefined architecture with 256 nodes and 16 CEs percluster (i.e. 4096 CEs). The table 4 shows the powerconsumption of these modules. For all of them, thepower characteristics are reported as around 400mW.This low power consumption makes the choice of theintegrated CE only depending on size and speed. Forsimple operation, it is better to integrate specializedaccelerator IPs than processors, in order to raise thearchitecture size and to accelerate the parallel execu-tion. Table 4 also shows that the control structurein G-MPSoC system does not clutter the occupiedarea. In particular, the SCU module contains four sub-modules that not require too much logic elements, asshown in table 5. Thereby, the total area-cost of G-MPSoC system does not burst with the use of master-slave control structure. In fact, based on the workin [14], the experimental results show that for 100nodes with clusters of a single CE (FC16 processor)connected to 4KB local memory, the grid of SCUs oc-cupy about 38% of the total consumed on-chip logicarea. For an array of 16 SCUs, it is around 16%. Infact, if the number of G-MPSoC nodes increases, thearea occupancy linearly increases, but the incrementalcost of adding SCU functionality to G-MPSoC controlsystem quickly becomes small.

Table 5: SCU sub-modules: synthesis result on FPGAVirtex6 ML605

Sub-module LUTs RegistersActivity controller 4 2Parallel execution controller 352 80Communication controller 101 50End execution controller (ORTree) 3 0

5 Experimental resultsThe generic feature of G-MPSoC is ensured notonly by the modular and parametric structure of itsdifferent components, but also by its hierarchical-distributed control, the diversity and the heterogene-ity of its processing units, and the parametricity of itsinterconnection network. In this section and throughseveral benchmarks, we validate the generic featureof the architecture and show the effectiveness of theirimplementation in terms of flexibility, scalability andhigh performance (execution time and bandwidth). Totest these benchmarks, we designed the G-MPSoC ar-chitecture with VHDL language and targeted the Xil-inx Virtex6-ML605 FPGA as prototyping platform.

5.1 Benchmark 1: Red-Black checkerboardMaster-slave control in G-MPSoC architecture isbased on a hierarchical-distributed structure, whichallows having several parallel processing areas se-lected by the sub-netting mechanism. Thereby, theMCU can manage these areas and can switch fromone to another using different activity masks, duringthe execution of a parallel program. This flexibility toswitch between the processing areas allows the simul-taneous execution of both conditional structure blocks(if...then...else...). This feature facilitates the activityprocess and avoids having idle processing nodes whenexecuting the conditional structure in massively paral-lel architecture.

To highlight this feature and its impact on the pro-posed architecture performance, we tested the Red-Black checkerboard application with the broadcast-with-mask [11] and traditional one-to-all [19] meth-ods. This application is used to solve partial differen-tial equations (Laplace equation with Dirichlet bound-ary conditions, Poisson-Boltzmann Equation, etc), us-ing massively parallel systems. It is based on the divi-sion of parallel processing nodes into red and black ar-eas, where all the same colour area are performing thesame instructions block. The code below is composedof a set of the added mask/broadcast instructions and a”lit” FC16 instruction [10] to map the red-black maskinto the processing grid, and then broadcast the paral-lel instruction to trigger the execution of the first in-structions block. Using the inverted mask, the secondarea can be activated and the execution of the secondinstructions block can be triggered.

The code of the broadcast-with-mask, detailed inlisting 1, is implemented with only 6 instructions tosubnet the (16× 16) grid into red-black checkerboardas shown in fig. 4. Therefore, the nodes with the samecolour have the same control flux and execute thesame instructions blocks, independently of the others

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 462 Volume 14, 2015

Table 4: G-MPSoC components: synthesis result on FPGA Virtex 6 ML605LUTs Slice registers DSP48E1s FPGA occupancy Fmax(Mhz) Power(W)

CE FC16 1132 206 - <1% 151.404 0.462HoMade 3560 493 1 2% 94.117 0.509

muladd accelerator 26 19 1 <1% 624.220 0.436Control module MCU 1139 201 - <1% 153.520 0.483

SCU 420 132 - <1% 256.937 0.536

nodes in the neighbour sub-network.

Listing 1: Broadcast-with-mask code for Red-Blackcheckerboard

l i t 0xAAAAAAAA / / f i n d mask A (2 c y c l e s )s e l b f / / send mask A (1 c y c l e )l i t 0 x55555555 / / f i n d mask B (2 c y c l e s )s e l b f o r / / send mask B (1 c y c l e )l i t 0 x06000010 / / f i n d p a r a l l e l c o n t r o l

i n s t r u c t i o n (2 c y c l e s )b r d b f / / send p a r a l l e l c o n t r o l

i n s t r u c t i o n (1 c y c l e )

As shown in listing 1, the red-black mask can berapidly mapped into a grid of (16 × 16) nodes in 6clock cycles, unlike the one-to-all method [19], thatneeds several clock cycles to map this mask into theprocessing nodes. With the one-to-all method, theremust be a relationship between identity and the ac-tivity bit to activate the nodes with peer identities anddisable the ones with odd identities, or to enable nodeswith identity lower than a specific value and disablethe others. So that to map the Red-Black mask into agrid of (16 × 16) nodes, 16 operations are required: 8for odds and 8 for peers, executed in 20 clock cycles.Thus, we notice that the broadcast-with-mask methodallows the rapid grid sub-netting on several processingareas, twice shorter than with the traditional one-to-allmethod.

Nodes grid

Red/Black mask

1 0 1 0 1 0

0 1 0 1 0 1

Mask A Mask B

1 0 1 0 1 0

0 1 0 1 0 1

Figure 4: Red-Black mask

The parallel instructions broadcast using thesetwo different activity control methods requires dif-ferent implementations of the control structure in G-MPSoC. The synthesis result given by ISE tool [17]and presented in table 6 shows the large bandwidth(∼12% higher than the one-to-all) provided by theG-MPSoC using broadcast-with-mask despite the ad-ditional consumed logic elements. This can be ex-plained by the fact that the proposed control struc-ture for parallel activity and parallel broadcast man-agement is based on local controllers (SCUs), whichoptimize the data flow transfer.

620

640

660

680

700

720

740

760

780

800

820

840

4 16 64 256

Ban

dw

idth

(M

B/s

)

CEs

one-to-all model

broadcast-with-mask model

Figure 5: Influence of broadcast models on bandwidth

Fig. 5 shows that an increasing number of nodesintegrated in the G-MPSoC architecture leads to ahigher gap between the bandwidth provided by thebroadcast-with-mask method and one-to-all method.This result shows the importance of broadcast-with-mask technique in the massively parallel systems scal-ability, without causing bottlenecks. However, the tra-ditional one-to-all method is recommended with smallsystems because of its efficiency in terms of area costand bandwidth. Thereby, the designer has to make theright choice between the system size and the broad-cast technique to ensure the high performance neededfor the parallel execution.

5.2 Benchmark 2: Matrix multiplicationThe processing units (CEs) in the G-MPSoC architec-ture can have several forms. They can be homogenouswith the same type of processors or heterogeneouswith clusters of different processors or clusters of dif-

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 463 Volume 14, 2015

Table 6: Synthesis results on FPGA Virtex6 of G-MPSoC with different activity control methodsFPGA occupancy Performance

LUTs Slice registers memories Fmax(Mhz) Bandwidth(MB/s) Power(W)Broadcast with mask 257747 54% 410904% 102567% 205.999 786 0.421Broadcast one-to-all 242768 51% 410564% 102567% 182.846 697 0.447

ferent processors and accelerators. Each one performsa specific function or blocks of parallel instructions.

To highlight the genericity and the flexibility ofthe CEs, we have tested the matrix multiplication ex-ample with two different G-MPSoC configurationswith a grid of (8×8) nodes, where each node is com-posed of:

• Conf.1: SCU + software CE (FC16 processor).

• Conf.2: SCU + software CE (FC16 processor) +hardware CE (muladd accelerator to perform themultiplication and the addition operations).

The matrix multiplication is one of the basic com-putational kernels in many data parallel applications.It is presented by the equation (1):

cij =n∑

k=1

aikbkj avec 1 ≤ i, j ≤ n (1)

To perform (8×8) matrix multiplication into sys-tem with (8×8) nodes, the execution needs 16 multi-plications, 16 additions and 30 communications. TheFC16 processor performs the multiplication in 19clock cycles [10], whereas muladd accelerator per-forms the multiplication in one cycle. That is why theconfiguration based on clusters of (FC16 + muladd)is more efficient than the architecture based on onlySW-CE (FC16). We notice that the conf.2 is the mostsuitable for matrix multiplication.

The generic feature of G-MPSoC architecturalmodel allows changing the system structure from ahomogeneous configuration to a heterogeneous con-figuration according to the performance provided bythe CEs and the algorithm requirements. The use ofparametric modules significantly facilitates the gener-ation of processing nodes with rapid modification ofsystem configuration. Thereby, the G-MPSoC systemis flexible, scalable and quickly adapts to applicationschanges.

5.3 Benchmark 3: Parallel SummingTo validate the flexible communication in G-MPSoCarchitecture, we chose the summing application [18]using a grid of 4×4 nodes. The aim of this appli-cation is to test the synchronous communication in

G-MPSoC system with different directions and dis-tances and using the Send/Receive instructions. Thisapplication is performed via the X-net network, us-ing the neighbourhood/distant communications andthe broadcast-with-mask method to facilitate the net-work sub-netting, as shown in fig. 6.

Figure 6: Summing application steps

Performing the Summing application, in tradi-tional SIMD system, needs several clock cycles, espe-cially for node activity step and communication step.In addition, doing distant communication in [18] re-quires external link. Therefore, the use of the X-netnetwork and the broadcast-with-mask mechanism im-proves the communication performance in G-MPSoCarchitecture, where X-net communication costs d (dis-tance) cycles: the delay of data transfer betweensource and destination (1 cycle for neighbour commu-nication).

0

25000

50000

75000

100000

125000

150000

175000

200000

4 16 64 256 4 16 64 256 4 16 64 256 4 16 64 256

mesh torus mesh torus

bus (16+1 bits) bus (1bit)

FPG

A r

eso

urc

es

number of PEs (Topology/bus size)

LUTs

Registres

Figure 7: FPGA occupancy with different size of G-MPSoC configurations

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 464 Volume 14, 2015

0

50

100

150

200

250

300

350

400

450

500

550

600

4 16 64 256 4 16 64 256 4 16 64 256 4 16 64 256

mesh torus mesh torus

bus size (16+1) bits bus size 1 bit

Ban

dw

idth

(M

B/s

)

CEs number

Figure 8: Influence of CEs number and bus sizes onbandwidth

Fig. 7 and fig. 8 present synthesis and bandwidthresults on different system topologies and bus sizes.We note a compromise between area and bandwidth.Indeed, the configuration integrating the X-net inter-connect with (16+1) bits buses gives efficient datatransfer with large bandwidth, but it occupies a largechip area (2 times higher), as shown in fig. 7. In ad-dition, if the number of the nodes is multiplied by afactor of 16, the bandwidth decreases by factor of 2and the FPGA area increases by a factor of 4, whichis an acceptable rate.

0

50

100

150

200

250

300

0 2 6 10 14

Lat

ency

(cy

cle)

distance

bus(16+1)bits

bus(4+1)bits

bus 1 bit

Figure 9: Influence of buses size on communicationdelay

We have also tested the communication delaywith the use of the different bus sizes. Fig. 9 showsthat the communication time is 17 times higher with1-bit bus than with 17-bits bus. Despite this tediouscommunication, 1-bit data transfer allows the use ofrelatively simple buses with low hardware cost, whichincrease system scalability. These previous experi-mental results show the efficiency of the integration ofreconfigurable interconnection network in G-MPSoC.Depending on the application needs, the designer canselect the network parameters, which guarantee theless communication delay.

5.4 Benchmark 4: FIR filterThe digital Finite Impulse Response (FIR) [21] fil-ters are widely used in digital signal processing toreduce certain undesired aspects. A FIR structure isdescribed by the differential equation (2):

y(n) =N−1∑k=0

h(k)× x(n− k) (2)

It is a linear equation, where X represents the in-put signal and Y presents the output signal. The orderof the filter is given by the parameter N, and H(k)represents the filter coefficients. The number of in-puts and outputs data is equal to n. To emphasize theadvantage of communication networks in the execu-tion of data-parallel programs, we have implementedthe FIR filter with two methods:1st method: 2D configurationThis method is inspired from the work presentedin [20]. It is an implementation of FIR filter as de-fined in its equation (2). The system is composed ofa MCU, a grid of (4×4) nodes (SCU + PE) and anX-net network in torus topology. We assume that theFIR system takes 16 H(k) parameters and 64 X(n)inputs. The algorithm is described as the followingsteps:

1. Data initialization: each H(k) is stored in PE(i,j)local data memory according to the followingfunction: k = 4 × i + j and all X(n) valuesare stored in each PE memory.

2. Multiplication of all the inputs with H(k) in eachPE(i,j).

3. Communication: as shown in fig. 10, all PEs doWest communication to send first multiplicationelement to their neighbour; then only PEs in thelast column do North-communication.

4. Addition of this new value with the second localmultiplication value in each PE(i,j).

5. Repeat 4) until obtain all the results which willbe stored in PE(0,0) memory.

To perform this FIR filter algorithm, (64×2) com-munications are required. The multiplication and theaddition operations are performed in parallel.

2nd method: 1D configurationThis method is inspired from the work presentedin [23]. In this implementation, the system is com-posed of a MCU, 16 slaves and X-net network inlinear topology. We also assume that the FIR sys-tem takes 16 H(k) parameters and 64 X(n) inputs.All H(k) are stored in MCU and sent to PE(i) when

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 465 Volume 14, 2015

PE 00 PE 01 PE 02 PE 03

PE 10 PE 11 PE 12 PE 13

PE20 PE 21 PE 22 PE 23

PE30 PE31 PE32 PE33

PE 20

PE 30 PE 31 PE 32 PE 33

Figure 10: FIR filter implementation in 2D-configuration

Table 7: 16-order FIR with inputs implementationthe in-puts (n)

Com execution time (cycle) on SIMD mode

G-MPSoCMethod 1

G-MPSoCMethod 2

ESCA reconf-SIMDon-chip

8 126 72 255 33216 270 144 351 74464 1134 576 1158 -

they are needed in the algorithm. The input X(n)are shifted inter-slaves using West-communication, aspresented in fig. 11. The n outputs are calculated us-ing multiplication and addition instructions, in the al-ternative way. To do this algorithm, only 63 commu-nications are required but the algorithm is not totallyparallel.

PE00 PE01 PE02 PE03 PE012 PE013 PE014 PE015MCU

h(15)

h(14)

h(13)

h(12)...

h(3)

h(2)

h(1)

h(0)

0

0

0

0...

x(0)...

x(47)

0

0

0

0...

x(1)...

x(48)

0

0

0

0...

x(2)...

x(49)

0

0

0

0...

x(3)...

x(50)

0

0

0

x(0)...

x(12)...

x(59)

0

0

x(0)

x(1)...

x(13)...

x(60)

0

x(0)

x(1)

x(2)...

x(14)...

x(61)

x(0)

x(1)

x(2)

x(3)...

x(15)...

x(62)

Figure 11: FIR filter implementation in 1D-configuration

Experimental results

The experimental results in table 7 show the timeneeded for data transfer in FIR filter application.As expected, the G-MPSoC architecture allows morerapid processing than both reconfigurable SIMD ar-chitecture on-Chip [23] and ESCA [20] architectures.We deduce that G-MPSoC architecture based on lin-ear interconnection topology is the most effective forthe FIR application.

Depending on the application needs, the designercan select the most appropriate network configurationto his system. The different topologies that can sup-port the X-net offer diverse choice of 1D or 2D con-figurations as well as at the size of the interconnectbus, to ensure data transfer rapidly and with low cost.

6 ConclusionThis paper presents a new generation of massivelyparallel System-on-Chip based on generic structure,called G-MPSoC platform. It is a configurable sys-tem, composed of clusters of hardware and/or soft-ware CEs, locally controlled by a grid of SCUs andglobally orchestrated by the MCU. All the CEs cancommunicate between each other via the SCUs com-ponents that are connected through regular X-net in-terconnection network. G-MPSoC architecture is en-tirely described in VHDL Language, in order to al-low rapid prototyping and testing, using the synthe-sis and simulation tools. The execution model of G-MPSoC is detailed to highlight the advantages of thesynchronous communication, based on broadcast withmask structure and regular communication network,and asynchronous computation, based on master-slavecontrol structure. An FPGA Hardware implementa-tion of G-MPSoC platform is also presented in thispaper and validated through several parallel applica-tions. Different configurations are tested going froma simple homogeneous structure to a complex hetero-geneous structure. All configurations tested differentX-net network topologies, different data buses sizesand different memories sizes. In this work, we de-fine a generic massively parallel system on chip thatis able to be quickly adapted to the applications re-quirements.

The next work is to define a new weakly coupledmassively parallel execution model for G-MPSoCplatform, based on Synchronous Communication andAsynchronous Computation. This model is classifiedbetween the synchronous centralized SIMD modeland the asynchronous decentralized MIMD model. Ittakes advantages of these two models to allow moreperformance needed for the execution of nowadays in-tensive processing applications.

References:

[1] D. Melpignano, L. Benini, , E. Flamand, et al.Platform 2012, a many-core computing accel-erator for embedded SoCs: performance eval-uation of visual analytics applications. Proc.Int. Conf. Design Automation Conference, NewYork, USA, 2012, pp. 1137–1142.

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 466 Volume 14, 2015

[2] SIMD < SIMT < SMT: parallelism in NVIDIAGPUs. http://yosefk.com/blog/simd-simt-smt-parallelism-in-nvidia-gpus.html

[3] PU-based Image Analysis on Mobile Devices.http://arxiv.org/pdf/1112.3110v1.pdf.

[4] D.B. Thomas, L. Howes and W. Luk. A Com-parison of CPUs, GPUs, FPGAs, and Mas-sively Parallel Processor Arrays for RandomNumber Generation. Proc. Int. Conf. Field Pro-grammable Gate Arrays, New York, USA, 2009,pp. 63–72.

[5] F. Hannig, V. Lari, S. Boppu, et al. InvasiveTightly-Coupled Processor Arrays: A Domain-Specific Architecture/Compiler Co-Design Ap-proach, ACM transactions on Embedded Sys-tems, 13, 2014, pp. 133:1-133:29.

[6] F. Conti, C. Pilkington, A. Marongiu, et al. He-P2012: Architectural Heterogeneity Explorationon a Scalable Many-Core Platform. Proc. Int.Conf. Application-specific Systems, Architec-tures and Processors, Zurich, Swiss, 2014, pp.114-120.

[7] Many-core Kalray MPPA, http://www.kalray.eu[8] The HyperCore Processor,

http://www.plurality.com/hypercore.html[9] Next Generation CUDA Compute

Architecture: Fermi WhitePaper,http://i.dell.com/sites/doccontent/shared-content/data-sheets/en/Documents/NVIDIA-Fermi-Compute-Architecture-Whitepaper-en.pdf

[10] R.E. Haskell and D.M. Hanna, A VHDL ForthCore for FPGAs, Journal of Microprocessorsand Microsystems, 29, 2009, pp. 115-125.

[11] H. Krichene, M. Baklouti, Ph. Marquet, et al.Broadcast with mask on Massively Parallel Pro-cessing on a Chip. Proc. Int. Conf. High Per-formance Computing and Simulation, Madrid,Spain, 2012, pp. 275–280.

[12] C.E. Leiserson, Z.S. Abuhamdeh, D.C. Douglas,et al. The network architecture of the connec-tion machine CM-5, Journal of Parallel and Dis-tributed Computing, 33, 1996, pp. 145-158.

[13] S.L. Scott. Synchronization and communicationin the T3E multiprocessor. Proc. Int. Conf. Ar-chitectural Support for Programming Languagesand Operating Systems, New York, USA, 1996,pp. 26–36.

[14] H. Krichene, M. Baklouti, Ph. Marquet, et al.Master-Slave Control structure for massivelyparallel System-on-Chip. Proc. Int. Conf. Eu-romicro Conference on Digital System Design,Santander, Spain, 2013, pp. 917–924.

[15] ML605 Hardware User Guide - UG534 (v1.8),http://www.xilinx.com/support/documentation/boards and kits/ug534.pdf.

[16] HoMade processor,https://sites.google.com/site/homadeguide/home.

[17] Xilinx website, https://www.xilinx.com.[18] M. Leclercq and P.Y. Aquilanti, X-Net network

for MPPSoC, Master thesis, University of Lille1, 2006.

[19] M. Baklouti, A rapid design method of a mas-sively parallel System on Chip: from modelingto FPGA implementation. PhD thesis, Universityof Lille 1 & University of Sfax, 2010.

[20] P. Chen, K. Dai, D. Wu, et al. Parallel Algo-rithms for FIR Computation Mapped to ESCAArchitecture. Proc. Int. Conf. Information Engi-neering, Beidaihe, China, 2010, pp. 123–126.

[21] S.M. Kuo and W.S. Gan, Digital Signal Proces-sors Architectures, implementation and applica-tion. Prentice Hall, 2005.

[22] J.R. Nickolls, The design of the MasPar MP-1: a cost effective massively parallel computer,IEEE Computer Society International Confer-ence, Compcon Spring, San Francisco, USA,1990, pp. 25–28.

[23] J. Andersson, M. Mohlin and A. Nilsson, A re-configurable SIMD architecture on-chip. MasterThesis in Computer System Engineering - Tech-nical Report, School of Information Science,Computer and Electrical Engineering - HalmstadUniversity, 2006.

[24] Sh. Raghav, A. Marongiu and D. Atienza, GPUAcceleration for Simulating Massively ParallelMany-Core Platforms. Journal of Parallel andDistributed Systems, 26, 2015, pp. 1336–1349.

[25] D. Walsh and P. Dudek , An Event-Driven Mas-sively Parallel Fine-Grained Processor Array.Proc. Int. Conf. Circuits and Systems (ISCAS),Lisbon, Portugal, 2015, pp. 1346–13496.

WSEAS TRANSACTIONS on CIRCUITS and SYSTEMSHana Krichene, Mouna Baklouti, Mohamed Abid

Philippe Marquet, Jean-Luc Dekeyser

E-ISSN: 2224-266X 467 Volume 14, 2015