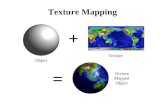


Face Recognition at-a-Distance using Texture and Sparse-Stereo Reconstruction

Ham Rara, Aly Farag, Shireen Elhabian, Asem Ali, William Miller, Thomas Starr and Todd Davis

Abstract— We propose a framework for face recognition at a distance based on texture and sparse-stereo reconstruction. We develop a 3D acquisition system that consists of two CCD stereo cameras mounted on pan-tilt units with adjustable baseline. We first detect the facial region and extract its landmark points, which are used to initialize the face alignment algorithm. The fitted mesh vertices, generated from the face alignment process, provide point correspondences between the left and right images of a stereo pair; stereo-based reconstruction is then used to infer the 3D information of the mesh vertices. We perform experiments regarding the use of different features extracted from these vertices for face recognition. The local patches around the landmark points are also well-suited for Gabor-based and LBP-based recognition. The cumulative match characteristic (CMC) curves, which are generated using the proposed framework, confirm the feasibility of the proposed work for long-distance recognition of human faces.

I. INTRODUCTION

There has been a considerable increase in biometrics applications recently, due to advances in technology and higher security demands. Biometric recognition systems use unique human signatures (face, iris, fingerprint, gait, etc.) to automatically identify or verify certain individuals [1].

Biometric sensing modalities can be classified into three categories: contact, contactless, and at-a-distance. The distance between the user and the sensor that is required to get an acceptable biometric sample determines the classification [1]. Contact devices (e.g., fingerprint, palm) need active cooperation from the user since they require physical contact with the sensor. Contactless devices do not require physical contact from the user; however, sensors belonging to this category require a short distance, in the range of 1 cm to 1 m, entailing some degree of cooperation from the user. Iris capturing devices and touchless fingerprint sensors are examples of this category.

Biometric systems capable of acquiring data at-a-distance are very suitable for integrated surveillance/identity tasks since active cooperation from the target may not be required. Gait recognition and newly developed iris recognition systems fall under this category. The main focus of this paper is to develop an at-a-distance biometric modality based on face recognition, because the human face (aside from gait) is the most accessible signature for these applications.

Face recognition is a challenging problem that has been an attractive area of research for the past three decades [2]. The face recognition problem is formulated as: given a still image, it is required to identify or verify one or more persons in the scene using a stored database of face images. The main theme of solutions provided by different researchers involves detecting one or more faces from the given image, followed by facial feature extraction that can be used for recognition.

H. Rara, A. Farag, S. Elhabian, A. Ali, W. Miller and T. Starr are with the CVIP Lab, University of Louisville, Louisville, KY, USA {hmrara01,aafara01}@louisville.edu

T. Davis is with EWA Government Systems, Inc., Bowling Green, Kentucky, USA [email protected]

Fig. 1. Camera-lens-tripod portable unit with rechargeable batteries. Two such portable units comprise our stereo system and are connected wirelessly to a server, where processing and analysis are done.

Depending on the distance between the target user and camera, face recognition systems can be categorized into three types: near-distance, middle-distance, and far-distance. The last case is referred to as face recognition at-a-distance (FRAD) [3]. Cameras in near-distance face recognition systems can easily capture high-resolution and stable images, whereas image quality may become a major issue in FRAD systems. This is in addition to the fact that the performance of many state-of-the-art face recognition algorithms suffers in the presence of lighting and pose variations, among many other factors [4].

Recently, there has been interest in face recognition at-a-distance. Yao, et al. [5] created a face video database, acquired from long distances and at high magnifications, both indoors and outdoors, under uncontrolled surveillance conditions. Medioni, et al. [6] presented an approach to identify non-cooperative individuals at a distance by inferring 3D shape from a sequence of images.

Due to the current lack of FRAD databases (as well as facial stereo datasets), we built our own passive stereo acquisition setup in [7]. We initially captured images from 30 subjects at various distances (3, 15, and 33 meters) indoors. Shape from sparse-stereo reconstruction was used to identify subjects, with acceptable results. We increased the number of subjects to 60 in [8] and captured images at 30 m and 50 m, outdoors. In addition to sparse-stereo reconstruction, we performed dense reconstruction to aid in recognition. The face alignment approach in [7][8] is the simultaneous Active Appearance Model (AAM) fitting of [9][10].

978-1-4244-7580-3/10/$26.00 ©2010 IEEE

For this work, we increased our number of subjects to 97 and captured images at 80 m. Recently developed face alignment methods (e.g., Constrained Local Models (CLM)) are used to improve on our AAM implementation. We also make use of texture from the stereo pair images for recognition. This work is part of our long-term project to create a real FRAD system capable of identifying subjects at distances as far as 1000 meters in an unconstrained setting.

The paper is organized as follows: Section II describes the system setup, Section III discusses face detection and alignment, Section IV covers the features used for recognition together with the experimental results, and Section V presents the conclusions.

II. SYSTEM SETUP

To achieve our goal and due to the lack of facial stereo databases, we built our own passive stereo acquisition setup to acquire a stereo database. Our setup consists of a stereo pair of high-resolution cameras (with telephoto lenses) in an adjustable wide-baseline configuration.

Specifically, our system consists of two CCD cameras mounted on pan-tilt units (PTUs) on two separate tripods. Each camera (Canon EOS 50D) is fitted with a lens (Canon EF Telephoto 800 mm) and produces an image of 4752×3168 resolution. The camera-lens-tripod unit is packaged compactly into a single portable unit, as shown in Fig. 1. Two such portable units comprise our stereo system and are connected wirelessly to a server, where processing and analysis are done. Flexible baselines for stereo reconstruction can be achieved by positioning the two units appropriately relative to each other.

Since the system parameters (i.e., baseline B (meters), focal length f (mm), pan angle φ (degrees), and scale factor of the cameras kα (pixel/mm)) are known, the scene point (x, y, z) can be reconstructed from its projections p and q in the left and right images, respectively, by triangulation. The necessary equations and sample values of the system parameters can be found in [7]. Fig. 2 shows sample captured images of our setup at various distances.
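As a rough illustration of how the listed parameters enter the triangulation, the sketch below handles only the simplified parallel-axis case (pan angle of zero, pixel coordinates measured from each principal point). It is not the converging-camera formulation of [7]; the function name and the numeric values in the usage note are hypothetical.

```python
def triangulate_parallel(p, q, baseline_m, focal_mm, k_px_per_mm):
    """Reconstruct (x, y, z) in meters from matched pixels p (left) and q (right).

    Assumption: idealized parallel-axis rig (no pan), so depth follows from
    horizontal disparity alone. p and q are (u, v) pixel coordinates relative
    to the principal point of each image.
    """
    f_px = focal_mm * k_px_per_mm            # focal length expressed in pixels
    disparity = p[0] - q[0]                  # horizontal shift between the views
    if disparity <= 0:
        raise ValueError("non-positive disparity: cannot triangulate")
    z = f_px * baseline_m / disparity        # depth from similar triangles
    x = p[0] * z / f_px                      # back-project the left-image pixel
    y = p[1] * z / f_px
    return x, y, z
```

For example, with a hypothetical 1 m baseline, f = 800 mm and k = 200 pixel/mm, a disparity of 2000 pixels corresponds to a depth of 80 m, which shows why a long focal length and a wide baseline are needed at such ranges.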

III. FACE DETECTION AND ALIGNMENT

Face recognition systems typically follow the detection-alignment-recognition pipeline, which divides the task into detecting faces, aligning them by locating facial feature points, and finally performing recognition based on the alignment results [2].

Fig. 2. Illustration of captured images: (a) 15-meter, (b) 30-meter, (c) 50-meter, and (d) 80-meter.

A. Face Detection

Face detection, in this work, involves detecting the facial region, as well as the eyes and mouth locations (e.g., Fig. 3(a)). It starts with identifying the possible facial regions in the input image, using a combination of the Viola-Jones detector [11] and the skin detector of [12]. The face is then divided into four equal parts to establish a geometrical constraint on the face. The face landmarks are then identified using variants of the Viola-Jones detector, i.e., the face detector is replaced with the corresponding facial landmark detector (e.g., an eye detector) [13]. False detections are then removed by taking into account the geometrical structure of the face (i.e., expected facial feature locations).

Fig. 3. (a) Sample of face and facial feature detection, (b) 68-vertex mesh used in face alignment.

B. Face Alignment

Our previous work in [7][8] uses the simultaneous Active Appearance Model (AAM) version of [10] to perform face alignment. We use a 68-vertex facial mesh similar to that in Fig. 3(b). AAMs belong to the class of methods employing a generative model together with image templates of object appearance. However, such algorithms suffer when the target object has a large appearance variation from the training set, due to changes caused by illumination conditions and non-rigid motion.

Recently, Constrained Local Models (CLM) [14] and its variants [15][16] have been found to outperform leading holistic approaches such as AAMs. CLMs retain the shape model of AAMs but, instead of using the whole facial region, use only patches (local regions) around facial feature points as image templates. For this paper, the Exhaustive Local Search (ELS) variant [17] of CLM is used. We extend the framework of [17] to include the Local Binary Pattern (LBP) method for local feature extraction. We also constrain the fitting aspect of the classical Elastic Bunch Graph Matching (EBGM) approach [18] using the framework of [17]. These two approaches will be compared to the previously used AAMs and the Laplacian version of [17] for face alignment experiments using our acquired database.

C. Exhaustive Local Search (ELS)

This section discusses the ELS variant [17] of the Constrained Local Model (CLM) approach for face alignment. To better understand the ELS approach, we first discuss the holistic gradient descent alignment approach.

Given a template T(z) and a source image Y(z′), the goal is to find the best alignment between them, such that z′ is the warped image pixel position of z, i.e., z′ = W(z; p), where p refers to the warp parameters. z is the concatenation of individual pixel coordinates x_i = (x_i, y_i), i.e., z = [x_1, ..., x_n].

The warp function W(z; p) for ELS is defined as W(z; p) = Jp + z_0. This is a linear model, which is also known as the Point Distribution Model (PDM) in [19]. Procrustes analysis is applied to all shape training samples to estimate the base shape z_0. Principal component analysis (PCA) is used to obtain the shape eigenvectors J. The first four eigenvectors of J are forced to correspond to similarity variation (translation, scale, rotation) [9]. The warp update for the holistic alignment approach is of the form

$$\Delta p = R\,[\,Y(z') - T(z)\,] \tag{1}$$

where R is the update matrix. Optimizing for the holistic warp update ∆p can lead to solution divergence if the target image contains a large appearance variation from the training set. The ELS approach instead searches within N local neighborhoods to find the N best translation updates ∆x_i, and then constrains all the local updates with the Jacobian matrix, J = ∂W(z; 0)/∂p. Once the local pixel updates, ∆z = [∆x_1, ..., ∆x_n], are obtained, the global warp update ∆p can be estimated by a weighted least-squares optimization,

$$\Delta p = (J^{T} W J)^{-1} J^{T} W \, \Delta z \tag{2}$$

where W is defined as a diagonal weighting matrix (not to be confused with the warp function W(z; p)), and J has one column per warp parameter, consistent with W(z; p) = Jp + z_0. To find the optimal local update ∆x_i, an exhaustive local search, using various distance measures (depending on the local feature), is performed within a local neighborhood.
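The weighted least-squares step can be sketched with NumPy as follows. This is a minimal illustration, assuming J is stored as a 2n × m array whose columns are the shape eigenvectors and that the diagonal of the weighting matrix is passed as a vector; the function names are hypothetical and not taken from [17].

```python
import numpy as np

def global_warp_update(J, delta_z, weights):
    """Solve dp = (J^T W J)^{-1} J^T W dz for a diagonal weighting matrix W."""
    J = np.asarray(J, dtype=float)            # shape (2n, m)
    dz = np.asarray(delta_z, dtype=float)     # stacked local updates, shape (2n,)
    w = np.asarray(weights, dtype=float)      # diagonal of W, shape (2n,)
    JtW = J.T * w                             # J^T W without building W explicitly
    return np.linalg.solve(JtW @ J, JtW @ dz)

def warp(J, p, z0):
    """Linear PDM warp W(z; p) = J p + z0."""
    return np.asarray(J, dtype=float) @ np.asarray(p, dtype=float) + np.asarray(z0, dtype=float)
```

With a toy basis that only allows translation of two points, the update reduces to a weighted average of the local displacements, which is the sense in which the shape model constrains the independent local searches.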

D. Local Features

1) Gabor Wavelets: This work follows the Elastic Bunch Graph Matching (EBGM) framework of [18], which makes use of the concept of a jet. A jet describes a small patch of gray-level values in an image I(x) around a given pixel x. It is based on a wavelet transform, defined as a convolution with a family of Gabor kernels. There are a total of 40 kernels, derived from five distinct frequencies and eight orientations. The resulting jet is then a set of 40 complex coefficients (after convolution) obtained for one image point.

2) Local Binary Patterns (LBP): The LBP operator, first introduced in [20], is a powerful texture descriptor reported to be robust to illumination variations. The original operator labels the pixels of an image by thresholding the 3×3 neighborhood of each pixel with the center value and considering the result as a binary number. At a given pixel position (x_c, y_c), the decimal form of the resulting 8-bit word is

$$LBP(x_c, y_c) = \sum_{n=0}^{7} s(i_n - i_c)\, 2^{n} \tag{3}$$

where i_c corresponds to the gray-level value of the center pixel (x_c, y_c), i_n to the gray-level values of the eight surrounding pixels, and the function s(x) is a unit-step function. The operator is invariant to monotonic changes in grayscale and can resist illumination variations as long as the absolute gray-level value differences are not badly affected. The authors in [21] extended the LBP operator to circular neighborhoods (of different radius sizes) and introduced the concept of uniform patterns.

The notation $LBP^{u2}_{P,R}$ used in this paper refers to the extended LBP operator in a (P, R) neighborhood, with only uniform patterns considered.
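The basic 3×3 operator in (3) can be illustrated with a short Python sketch. It assumes the common convention s(x) = 1 for x ≥ 0 and an arbitrary but fixed neighbor ordering; the circular, uniform-pattern extension of [21] is not covered, and the function name is hypothetical.

```python
def lbp_3x3(image, xc, yc):
    """Basic 8-bit LBP code at interior pixel (xc, yc) of a 2-D list of gray levels."""
    # Neighbor offsets (dx, dy); the ordering only permutes the bits of the code.
    offsets = [(-1, -1), (0, -1), (1, -1), (1, 0),
               (1, 1), (0, 1), (-1, 1), (-1, 0)]
    ic = image[yc][xc]
    code = 0
    for n, (dx, dy) in enumerate(offsets):
        i_n = image[yc + dy][xc + dx]
        if i_n - ic >= 0:            # unit step, assumed to be 1 at zero
            code += 1 << n           # contributes 2^n to the sum in (3)
    return code
```

Because only the sign of each difference is used, applying any increasing gray-level mapping (for example, doubling every value and adding an offset) leaves the code unchanged, which is the monotonic invariance mentioned above.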

E. Exhaustive Local Search using Gabor Jets

Measuring the similarity between two jets, J and J′, is crucial to ELS using Gabor jets. The equation for the similarity measure is

$$S_D(J, J', d) = \frac{\sum_{j=0}^{N} a_j a'_j \cos\left(\phi_j - (\phi'_j + d \cdot k_j)\right)}{\sqrt{\sum_{j=0}^{N} a_j^{2} \, \sum_{j=0}^{N} a'^{2}_j}} \tag{4}$$

where a_j refers to the magnitude, φ_j denotes the phase, k_j is a vector defined in [18], and d is the unknown displacement. To estimate the displacement d, we use the method in [18], where d has a closed-form solution based on maximizing the similarity function S_D in (4) in its Taylor expansion. This approach is otherwise known as DEPredictiveIter in [22].

Once the displacement vector d is computed, we can use it as the local update ∆z in (2), and the global warp update ∆p can then be determined easily.
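To make (4) concrete, the following Python sketch evaluates the similarity for a given displacement. It does not implement the closed-form displacement estimate of [18][22]. The wave-vector parameterization (magnitudes 2^{-(ν+2)/2}·π and orientations μπ/8) is the one commonly quoted for EBGM and should be treated as an assumption here; both function names are hypothetical.

```python
import math

def wave_vectors(n_freq=5, n_orient=8):
    """Wave vectors k_j for a 5 x 8 Gabor family (assumed EBGM-style parameters)."""
    ks = []
    for nu in range(n_freq):
        k_mag = 2 ** (-(nu + 2) / 2) * math.pi
        for mu in range(n_orient):
            ang = mu * math.pi / n_orient
            ks.append((k_mag * math.cos(ang), k_mag * math.sin(ang)))
    return ks

def jet_similarity(a, phi, a2, phi2, k, d=(0.0, 0.0)):
    """Phase-sensitive similarity S_D of (4) between jets (a, phi) and (a2, phi2)."""
    num = 0.0
    for aj, pj, bj, qj, kj in zip(a, phi, a2, phi2, k):
        shift = d[0] * kj[0] + d[1] * kj[1]          # d . k_j
        num += aj * bj * math.cos(pj - (qj + shift))
    den = math.sqrt(sum(x * x for x in a) * sum(x * x for x in a2))
    return num / den
```

A jet compared with itself at zero displacement scores 1, and a phase offset caused by a small shift is compensated when the matching d is supplied, which is what the displacement estimation exploits.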

F. Exhaustive Local Search using LBPs

The authors in [23] noticed that LBP alone cannot describe the velocity of local variation. To solve this problem, they extended the LBP approach to include the gradient magnitude image, in addition to the original image. Furthermore, in order to retain spatial information, the local region around each landmark point is divided into small regions from which LBP histograms are extracted and concatenated into a single feature histogram representing the local features.

To get this histogram, we extract a rectangular region of size 21 × 21, centered at the landmark point. A low-pass Gaussian filter is applied to reduce noise. The gradient magnitude image is then generated using Sobel filters (h_x and h_y), i.e., $|\nabla I| = \sqrt{(h_x \otimes I)^2 + (h_y \otimes I)^2}$. The original image, as well as the gradient magnitude image, is divided into four regions. We then build five histograms corresponding to the whole image and the four regions, and concatenate the results for all cases, to get the final LBP histogram. This process is illustrated in Fig. 4.

Fig. 4. LBP histogram generation. No averaging is done with the histograms; they are concatenated on top of each other to form the feature vector.

During search, the LBP histogram corresponding to each point located in the search region of size 9 × 9 is built according to the above process. The similarity between the testing point histogram H and the template histogram H′ is calculated using the chi-square statistic

$$\chi^2(H, H') = \sum_i \frac{(H_i - H'_i)^2}{H_i + H'_i} \tag{5}$$

The closer the histograms are, the smaller the value of χ². The landmark point is moved to the search point where the LBP histogram is closest to the template histogram. This automatically generates the local update ∆z in (2), and the global warp update ∆p can be easily determined afterwards.
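The search step can be sketched in a few lines of Python. The sketch assumes histograms are plain lists of non-negative counts and that the caller has already built one histogram per candidate offset in the search region; the names `chi_square` and `best_local_update` are hypothetical, and bins that are empty in both histograms are skipped to avoid division by zero.

```python
def chi_square(h, h_ref):
    """Chi-square statistic of (5) between two histograms of equal length."""
    total = 0.0
    for a, b in zip(h, h_ref):
        if a + b > 0:                         # skip bins empty in both histograms
            total += (a - b) ** 2 / (a + b)
    return total

def best_local_update(candidates, template_hist):
    """Return the offset (dx, dy) whose histogram is closest to the template.

    candidates maps each offset in the search region to its LBP histogram.
    """
    return min(candidates,
               key=lambda off: chi_square(candidates[off], template_hist))
```

The returned offset plays the role of one local translation update; stacking the offsets of all landmarks gives the vector that the constrained update in (2) takes as input.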

There are differences between the implementation of [23] and this work. In this paper, instead of using a circular region around the landmark point, we utilize a rectangular region. We do not average any histograms during any step of the process (e.g., feature extraction and search), unlike in [23], since it is likely that averaging destroys local information. The search region is also a rectangular region, instead of a normal profile. Lastly, we use the global warp update computed in (2) to constrain the local displacements.

G. Experimental Results

The criterion for the effectiveness of automated face alignment methods is the distance between the resulting points and the manually labeled ground truth. The distance metric [14] used in this work is

$$m_e = \frac{1}{ns} \sum_{i=1}^{n} d_i \tag{6}$$

where d_i is the Euclidean point-to-point error for each individual feature location and s is the ground-truth inter-ocular distance between the left and right eye pupils. For this work, n = 53 (out of the 68 vertices) since facial features located on the boundary of the face are ignored.
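A minimal Python sketch of the error measure in (6), together with the cumulative fraction that a curve such as the one in Fig. 5 plots, is given below. Points are assumed to be (x, y) tuples in matching order, and both function names are hypothetical.

```python
import math

def alignment_error(fitted, ground_truth, interocular):
    """Mean point-to-point error normalized by the inter-ocular distance, as in (6)."""
    n = len(ground_truth)
    total = sum(math.hypot(p[0] - g[0], p[1] - g[1])
                for p, g in zip(fitted, ground_truth))
    return total / (n * interocular)

def cumulative_fraction(errors, threshold):
    """Fraction of test images whose error m_e does not exceed the threshold."""
    return sum(e <= threshold for e in errors) / len(errors)
```

Evaluating `cumulative_fraction` over a range of thresholds yields one cumulative distribution per alignment method; a curve that reaches 1.0 at a smaller threshold indicates more accurate fitting.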

Fig. 5. Cumulative distribution of the point-to-point measure (m_e) using various face alignment methods.

Fig. 5 shows the cumulative distribution of the point-to-point measure (m_e) using various face alignment methods. It can be seen that (CLM+Gabor) and (CLM+LBP) both outperform our previous AAM implementation. The (CLM+Laplacian) of [17] does not perform as well as the former two. Between (CLM+Gabor) and (CLM+LBP), the (CLM+LBP) curve reaches 1.0 much earlier than that of (CLM+Gabor), leading to the conclusion that (CLM+LBP) has the best performance. However, this comes with a catch because face alignment using (CLM+LBP) is considerably slower than (CLM+Gabor), owing to its exhaustive search nature (while the Gabor version has the DEPredictiveIter [22] component that can predict the best solution in closed form).

IV. RECOGNITION

A. Features

1) Sparse-Stereo Reconstruction: If the automated facial alignment methods discussed in Sec. III-B are applied to both images of the stereo pair, what we get are sparse correspondences that can be used to reconstruct the 3D positions of the facial landmarks. Our previous work in [7][8] has shown that 2D projections (to the x-y plane) of the 3D sparse reconstructions (after frontal pose normalization, i.e., rigidly registering our unknown-pose sparse 3D points to a 3D model that is known to be frontal) can provide decent classification via a 2D version of the Procrustes distance. Fig. 6 shows sparse-stereo reconstruction results of three subjects, visualized with the x-y, x-z, and y-z projections, after rigid alignment to one of the subjects. Notice that in the x-y projections, the similarity (or difference) of 2D shapes coming from the same (or different) subject is visually enhanced. In particular, Subject 1 (probe) is visually more similar to Subject 1 (gallery) than to Subject 2 (gallery) in the x-y projection. This similarity (or difference) is not obvious in the other projections. This is the main reason behind the use of x-y projections as features.
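One common way to compute a 2D Procrustes dissimilarity is sketched below in Python, using complex numbers to represent planar points. It returns the squared full Procrustes distance, 1 − |⟨a, b⟩|², between the centered, unit-norm shapes; whether this matches the exact variant used in [7][8] is an assumption, and the function names are hypothetical.

```python
def project_xy(points_3d):
    """Drop the z coordinate of pose-normalized 3-D landmarks."""
    return [(x, y) for x, y, _ in points_3d]

def procrustes_distance_2d(shape_a, shape_b):
    """Squared full Procrustes distance between two 2-D shapes with matching vertices."""
    def normalize(points):
        z = [complex(x, y) for x, y in points]
        mean = sum(z) / len(z)
        z = [v - mean for v in z]                        # remove translation
        norm = sum(abs(v) ** 2 for v in z) ** 0.5
        return [v / norm for v in z]                     # remove scale
    a, b = normalize(shape_a), normalize(shape_b)
    inner = sum(u * v.conjugate() for u, v in zip(a, b))
    # |inner| is the correlation left after the best in-plane rotation.
    return max(0.0, 1.0 - abs(inner) ** 2)
```

The value is zero for shapes that differ only by translation, scale, and in-plane rotation, and grows as the landmark configurations diverge, so a probe can be assigned to the gallery shape with the smallest value.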

Fig. 6. Visualization of sparse-stereo reconstruction results.

2) Texture: Although we only perform sparse reconstruction in this work, the holistic texture from the left image, as well as the local patches around the detected landmark points, are still available.

For this work, the local patches around the landmark points are well-suited for EBGM-based and LBP-based recognition using the local features discussed in Sec. III-D. The similarity measures for EBGM (4) and LBP (5) are now used for recognition between gallery and probe. For comparison purposes, the classical Principal Component Analysis (PCA) approach is used to classify the holistic texture; this serves as a benchmark for more advanced texture methods.

B. Experimental Results

1) Experimental Setup: Our current database consists of 97 subjects, with a gallery at 3 meters and five different probe sets: 3-meter and 15-meter indoors, together with 30-meter, 50-meter, and 80-meter outdoors. Fig. 2 illustrates the captured images (left image of the stereo pair) at different ranges.

The features discussed in Sec. IV-A are now used for recognition. No training is required for recognition using sparse-stereo reconstruction, Gabor, and LBP features (i.e., the similarity distance is computed directly between the probe and each gallery instance, and the pair with the smallest distance is chosen as the match). For PCA, the face space is determined by the gallery of 97 subjects at the 3-meter range and the similarity function used is the L2 norm.
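The training-free matching rule and the way a CMC curve is derived from it can be sketched in Python as follows. The data layout (one dictionary of gallery distances per probe) and the function names are assumptions made for illustration.

```python
def rank_of_true_match(distances, true_id):
    """1-based rank of the true gallery identity for one probe.

    distances maps each gallery identity to its distance from the probe.
    """
    ordered = sorted(distances, key=distances.get)   # smallest distance first
    return ordered.index(true_id) + 1

def cmc_curve(all_distances, true_ids, max_rank):
    """Fraction of probes whose true match appears within the top k, for k = 1..max_rank."""
    ranks = [rank_of_true_match(d, t) for d, t in zip(all_distances, true_ids)]
    return [sum(r <= k for r in ranks) / len(ranks)
            for k in range(1, max_rank + 1)]
```

The first entry of the returned list is the rank-1 recognition rate, i.e., the fraction of probes for which the smallest-distance gallery entry is the correct subject.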

Three quick observations can be garnered from Figs. 7, 8(a)-10(a). Texture methods perform well indoors (3 m, 15 m), as expected. However, they drop slightly in outdoor settings (30 m, 50 m, 80 m), due to illumination variations and farther distances. In outdoor settings, Gabor and LBP-based recognition are better than the PCA benchmark. Recognition using sparse reconstructions is relatively quick to compute and still gives acceptable results.

Fig. 7. Cumulative match characteristic (CMC) curves for the (a) 3-meter and (b) 15-meter indoor probe sets.

Fig. 8. Cumulative match characteristic (CMC) curves of the (a) single-classifier and (b) multi-classifier architectures for the 30-meter probe set.

2) Multiple-Classifier Architecture: These three features for recognition are good candidates for a recognition system using a multi-classifier architecture. Fig. 11 shows the multi-classifier design. In this architecture, the top n (e.g., n = 6) candidates from the sparse reconstruction classifier are submitted to both the Gabor and LBP classifiers, because the similarity function (Procrustes) for sparse reconstructions is relatively quick to compute. The final result uses the sum rule of decision fusion [24], weighted by the rank-1 accuracy of each method at a specific range. Notice that the recognition rate of the multi-classifier approach is 100% at every distance range in Figs. 8(b)-10(b).
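The cascade can be sketched in Python as below. This is only an illustration under stated assumptions: classifier outputs are taken to be similarity scores already normalized to a common range, the weights are the rank-1 accuracies supplied by the caller, and which classifiers enter the sum is left to the caller. The function names and the dictionary layout are hypothetical.

```python
def shortlist(distances, n):
    """Top-n gallery candidates from the fast sparse-reconstruction classifier."""
    return sorted(distances, key=distances.get)[:n]

def fuse_scores(similarities, rank1_accuracy):
    """Weighted sum-rule fusion over the shortlisted candidates.

    similarities   : {classifier: {candidate_id: normalized similarity}}
    rank1_accuracy : {classifier: weight, e.g. rank-1 accuracy at this range}
    Returns the winning candidate and the fused score of every candidate.
    """
    fused = {}
    for name, scores in similarities.items():
        for cand, s in scores.items():
            fused[cand] = fused.get(cand, 0.0) + rank1_accuracy[name] * s
    return max(fused, key=fused.get), fused
```

Only the shortlisted candidates need to be scored by the slower Gabor and LBP classifiers, which is the practical benefit of placing the Procrustes-based classifier first.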

Fig. 9. Cumulative match characteristic (CMC) curves of the (a) single-classifier and (b) multi-classifier architectures for the 50-meter probe set.

Fig. 10. Cumulative match characteristic (CMC) curves of the (a) single-classifier and (b) multi-classifier architectures for the 80-meter probe set.

Fig. 11. Schematic diagram of the multi-classifier architecture.

V. CONCLUSIONS AND FUTURE WORKS

We have studied the use of texture and sparse-stereo reconstruction in the context of long-distance face recognition. Experiments using sophisticated facial feature localization methods are performed. These same local features are used for recognition later in the process. With our database of images, we have illustrated the effectiveness of relatively straightforward algorithms, especially when combined in a multi-classifier manner.

A continuing goal of this project is to further increase the database size and include images from as far as 1000 meters under challenging imaging conditions. As more challenging scenarios are encountered later on, additional novel and existing approaches will be utilized.

REFERENCES

[1] S.Z. Li, B. Schouten, and M. Tistarelli, "Biometrics at a Distance: Issues, Challenges, and Prospects", in Handbook of Remote Biometrics: for Surveillance and Security (eds: Massimo Tistarelli, Stan Z. Li, and Rama Chellappa), Springer, 1st edition (August 6, 2009), pp. 3-22.

[2] W. Zhao, R. Chellappa, P.J. Phillips, and A. Rosenfeld, "Face recognition: A literature survey", in ACM Comput. Surv., 35(4), 2003, pp. 399-458.

[3] M. Ao, D. Yi, Z. Lei, and S.Z. Li, "Face Recognition at a Distance: System Issues", in Handbook of Remote Biometrics: for Surveillance and Security (eds: Massimo Tistarelli, Stan Z. Li, and Rama Chellappa), Springer, 1st edition (August 6, 2009), pp. 155-168.

[4] P.J. Phillips, P.J. Flynn, T. Scruggs, K.W. Bowyer, J. Chang, K. Hoffman, J. Marques, J. Min, and W. Worek, "Overview of the face recognition grand challenge", in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2005.

[5] Y. Yao, B.R. Abidi, N.D. Kalka, N.A. Schmid, and M.A. Abidi, "Improving long range and high magnification face recognition: Database acquisition, evaluation, and enhancement", in Comput. Vis. Image Underst., 111(2), 2008, pp. 111-125.

[6] G. Medioni, J. Choi, C.H. Kuo, and D. Fidaleo, "Identifying noncooperative subjects at a distance using face images and inferred three-dimensional face models", in IEEE Trans. Sys. Man Cyber. Part A, 39(1), 2009, pp. 12-24.

[7] H. Rara, S. Elhabian, A. Ali, M. Miller, T. Starr, and A. Farag, "Face recognition at-a-distance based on sparse-stereo reconstruction", in IEEE CVPR Biometrics Workshop, 2009, pp. 27-32.

[8] H. Rara, S. Elhabian, A. Ali, T. Gault, M. Miller, T. Starr, and A. Farag, "A framework for long distance face recognition using dense- and sparse-stereo reconstruction", in ISVC 09: Proceedings of the 5th International Symposium on Advances in Visual Computing, Springer-Verlag, 2009, pp. 774-783.

[9] I. Matthews and S. Baker, "Active Appearance Models Revisited", in International Journal of Computer Vision (IJCV), 60(2), 2004, pp. 135-164.

[10] R. Gross, I. Matthews, and S. Baker, "Generic vs. Person Specific Active Appearance Models", in Image and Vision Computing, 23(11), 2005, pp. 1080-1093.

[11] P. Viola and M.J. Jones, "Robust real-time face detection", in International Journal of Computer Vision (IJCV), 2004, pp. 151-173.

[12] M. Jones and J. Rehg, "Statistical color models with application to skin detection", in International Journal of Computer Vision (IJCV), 2002, pp. 81-96.

[13] M. Castrillon-Santana, O. Deniz-Suarez, L. Anton-Canals, and J. Lorenzo-Navarro, "Face and Facial Feature Detection Evaluation: Performance Evaluation of Public Domain Haar Detectors for Face and Facial Feature Detection", in VISAPP, 2008.

[14] D. Cristinacce and T. Cootes, "Feature Detection and Tracking with Constrained Local Models", in 17th British Machine Vision Conference, 2006, pp. 929-938.

[15] U. Paquet, "Convexity and Bayesian constrained local models", in IEEE Computer Vision and Pattern Recognition, 2009, pp. 1193-1199.

[16] Y. Wang, S. Lucey, and J. Cohn, "Enforcing Convexity for Improved Alignment with Constrained Local Models", in IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2008.

[17] Y. Wang, S. Lucey, and J. Cohn, "Non-Rigid Object Alignment with a Mismatch Template Based on Exhaustive Local Search", in IEEE Workshop on Non-rigid Registration and Tracking through Learning - NRTL, 2007.

[18] L. Wiskott, J. Fellous, N. Kruger, "Face Recognition by Elastic Bunch Graph Matching", in IEEE Transactions on Pattern Analysis and Machine Intelligence, 1997, pp. 775-779.

[19] T.F. Cootes, C.J. Taylor, D.H. Cooper, and J. Graham, "Active shape models - their training and application", in Computer Vision and Image Understanding, 61, 1995.

[20] T. Ojala, M. Pietikainen, and D. Harwood, "A comparative study of texture measures with classification based on feature distributions", in Pattern Recognition, 29, 1996, pp. 51-59.

[21] T. Ojala, M. Pietikainen, and T. Maenpaa, "Multiresolution gray-scale and rotation invariant texture classification with local binary patterns", in IEEE Transactions on Pattern Analysis and Machine Intelligence, 24, 2002, pp. 971-987.

[22] D. Bolme, "Elastic Bunch Graph Matching (Masters Thesis)", in CSU Technical Report, 2003.

[23] X. Huang, S. Li, and Y. Wang, "Shape localization based on statistical method using extended local binary pattern", in Third International Conference on Image and Graphics (ICIG), 2004, pp. 184-187.

[24] A. Ross and A. Jain, "Information fusion in biometrics", in Pattern Recognition Letters, 24(13), 2003, pp. 2115-2125.