Example: The Dishonest Casino Hidden Markov Models The dishonest casino...

Transcript of Example: The Dishonest Casino Hidden Markov Models The dishonest casino...

1

Hidden Markov Models

Durbin and Eddy, chapter 3

Example: The Dishonest Casino

Game: 1. You bet $1 2. You roll 3. Casino player rolls 4. Highest number wins $2

The casino has two dice: Fair die P(1) = P(2) = P(3) = P(5) = P(6) = 1/6 Loaded die P(1) = P(2) = P(3) = P(5) = 1/10 P(6) = 1/2 Casino player switches between fair and loaded die (not too often, and not for too long)

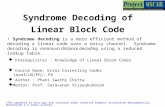

The dishonest casino model

FAIR LOADED

0.05

0.1

0.9 0.95

P(1|F) = 1/6 P(2|F) = 1/6 P(3|F) = 1/6 P(4|F) = 1/6 P(5|F) = 1/6 P(6|F) = 1/6

P(1|L) = 1/10 P(2|L) = 1/10 P(3|L) = 1/10 P(4|L) = 1/10 P(5|L) = 1/10 P(6|L) = 1/2

Question # 1 – Evaluation

GIVEN:

A sequence of rolls by the casino player 1245526462146146136136661664661636616366163616515615115146123562344

QUESTION:

How likely is this sequence, given our model of how the casino works?

This is the EVALUATION problem in HMMs

Prob = 1.3 x 10-35

Question # 2 – Decoding

GIVEN: A sequence of rolls by the casino player 1245526462146146136136661664661636616366163616515615115146123562344

QUESTION:

What portion of the sequence was generated with the fair die, and what portion with the loaded die?

This is the DECODING question in HMMs

FAIR LOADED FAIR

Question # 3 – Learning

GIVEN: A sequence of rolls by the casino player 1245526462146146136136661664661636616366163616515615115146123562344 QUESTION:

How does the casino player work: How “loaded” is the loaded die? How “fair” is the fair die? How often does the casino player change from fair to loaded, and back?

This is the LEARNING question in HMMs

2

The dishonest casino model

FAIR LOADED

0.05

0.1

0.9 0.95

P(1|F) = 1/6 P(2|F) = 1/6 P(3|F) = 1/6 P(4|F) = 1/6 P(5|F) = 1/6 P(6|F) = 1/6

P(1|L) = 1/10 P(2|L) = 1/10 P(3|L) = 1/10 P(4|L) = 1/10 P(5|L) = 1/10 P(6|L) = 1/2

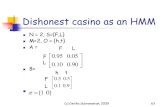

Definition of a hidden Markov model • Alphabet Σ = { b1, b2,…,bM } • Set of states Q = { 1,...,K } (K = |Q|)

• Transition probabilities between any two states

aij = transition probability from state i to state j

ai1 + … + aiK = 1, for all states i

• Initial probabilities a0i

a01 + … + a0K = 1

• Emission probabilities within each state

ek(b) = P( xi = b | πi = k) ek(b1) + … + ek(bM) = 1

K

1

…

2

Hidden states and observed sequence

At time step t, πt denotes the (hidden) state in the Markov chain

xt denotes the symbol emitted in state πt

A path of length N is: π1, π2, …, πN An observed sequence

of length N is: x1, x2, …, xN

An HMM is “memory-less”

At time step t, the only thing that affects the next state is the current State, πt P(πt+1 = k | “whatever happened so far”) = P(πt+1 = k | π1, π2, …, πt, x1, x2, …, xt) = P(πt+1 = k | πt)

K

1

…

2

A parse of a sequence Given a sequence x = x1……xN, A parse of x is a sequence of states π = π1, ……, πN

1

2

K

…

1

2

K

…

1

2

K

…

…

…

…

1

2

K

…

x1 x2 x3 xK

2

1

K

2

Likelihood of a parse Given a sequence x = x1…… ,xN and a parse π = π1, ……,πN,

How likely is the parse (given our HMM)?

P(x, π) = P(x1, …, xN, π1, ……, πN)

= P(xN, πN | x1…xN-1, π1, ……, πN-1) P(x1…xN-1, π1, ……, πN-1) = P(xN, πN | πN-1) P(x1…xN-1, π1, ……, πN-1)

= … = P(xN, πN | πN-1) P(xN-1, πN-1 | πN-2)……P(x2, π2 | π1) P(x1, π1)

= P(xN | πN) P(πN | πN-1) ……P(x2 | π2) P(π2 | π1) P(x1 | π1) P(π1)

= a0π1 aπ1π2……aπN-1πN eπ1(x1)……eπN(xN)

1 2

K …

1 2

K …

1 2

K …

… …

…

1 2

K …

x1 x2 x3 xK

2 1

K

2

!=

"=

N

iixea

iii1

)(1 ###

3

Example: the dishonest casino

What is the probability of a sequence of rolls x = 1, 2, 1, 5, 6, 2, 1, 6, 2, 4 and the parse

π = Fair, Fair, Fair, Fair, Fair, Fair, Fair, Fair, Fair, Fair?

(say initial probs a0,Fair = ½, a0,Loaded = ½) ½ × P(1 | Fair) P(Fair | Fair) P(2 | Fair) P(Fair | Fair) … P(4 | Fair) = ½ × (1/6)10 × (0.95)9 = 5.2 × 10-9

Example: the dishonest casino

So, the likelihood the die is fair in all this run is 5.2 × 10-9 What about π = Loaded, Loaded, Loaded, Loaded, Loaded, Loaded, Loaded,

Loaded, Loaded, Loaded?

½ × P(1 | Loaded) P(Loaded|Loaded) … P(4 | Loaded) = ½ × (1/10)8 × (1/2)2 (0.9)9 = 4.8 × 10-10

Therefore, it is more likely that the die is fair all the way, than loaded all

the way

Example: the dishonest casino

Let the sequence of rolls be: x = 1, 6, 6, 5, 6, 2, 6, 6, 3, 6 And let’s consider π = F, F,…, F

P(x, π) = ½ × (1/6)10 × (0.95)9 = 5.2 × 10-9

(same as before) And for π = L, L,…, L: P(x, π) = ½ × (1/10)4 × (1/2)6 (0.9)9 = 3.02 × 10-7 So, the observed sequence is ~100 times more likely if a loaded die

is used

Clarification of notation P[ x | M ]: The probability that sequence x was

generated by the model

The model is: architecture (#states, etc) + parameters θ = aij, ei(.)

So, P[x | M] is the same as P[ x | θ ], and P[ x ], when the architecture, and the parameters, respectively, are implied

Similarly, P[ x, π | M ], P[ x, π | θ ] and P[ x, π ] are the same when the architecture, and the parameters, are implied

In the LEARNING problem we write P[ x | θ ] to emphasize that we are seeking the θ* that maximizes P[ x | θ ]

What we know Given a sequence x = x1,…,xN and a parse π = π1,…,πN,

we know how to compute how likely the parse is:

P(x, π)

1 2

K …

1 2

K …

1 2

K …

… …

…

1 2

K …

x1 x2 x3 xK

2 1

K

2

What we would like to know

1. Evaluation

GIVEN HMM M, and a sequence x, FIND Prob[ x | M ]

2. Decoding

GIVEN HMM M, and a sequence x, FIND the sequence π of states that maximizes P[ x, π | M ]

3. Learning

GIVEN HMM M, with unspecified transition/emission probs., and a sequence x,

FIND parameters θ = (ei(.), aij) that maximize P[ x | θ ]

4

Problem 2: Decoding

Find the best parse of a sequence

Decoding

GIVEN x = x1x2,…,xN We want to find π = π1, …, πN, such that P[ x, π ] is maximized π* = argmaxπ P[ x, π ]

Maximize a0π1 eπ1(x1) aπ1π2…aπN-1πN eπN(xN) We can use dynamic programming!

Let Vk(i) = max{π1,…, π i-1} P[x1…xi-1, π1, …, πi-1, xi, πi = k] = Probability of maximum probability path ending at state πi = k

1

2

K …

1

2

K …

1

2

K …

…

…

…

1

2

K …

x1 x2 x3 xN

2

1

K

2

Decoding – main idea Inductive assumption: Given

Vk(i) = max{π1,…, π i-1} P[x1…xi-1, π1, …, πi-1, xi, πi = k]

What is Vr(i+1)? Vr(i+1) = max{π1,…, πi}P[ x1…xi, π1, …, πi, xi+1, πi+1 = r ] = max{π1,…, πi}P(xi+1, πi+1= r | x1…xi,π1,…, πi) P[x1…xi, π1,…, πi]

= max{π1,…, πi}P(xi+1, πi+1= r | πi ) P[x1…xi-1, π1, …, πi-1, xi, πi]

= maxk [P(xi+1, πi+1= r | πi = k) max{π1,…, π i-1}P[x1…xi-1,π1,…,πi-1, xi,πi=k]]

= maxk er(xi+1) akr Vk(i)

= er(xi+1) maxk akr Vk(i)

The Viterbi Algorithm Input: x = x1,…,xN Initialization: V0(0) = 1 (0 is the imaginary first position)

Vk(0) = 0, for all k > 0

Iteration: Vj(i) = ej(xi) × maxk akj Vk(i-1)

Ptrj(i) = argmaxk akj Vk(i-1)

Termination: P(x, π*) = maxk Vk(N)

Traceback: πN* = argmaxk Vk(N)

πi-1* = Ptrπi (i)

The Viterbi Algorithm Input: x = x1,…,xN Initialization: V0(0) = 1 (0 is the imaginary first position)

Vk(0) = 0, for all k > 0

Iteration: Vj(i) = ej(xi) × maxk akj Vk(i-1)

Ptrj(i) = argmaxk akj Vk(i-1)

Termination: P(x, π*) = maxk ak0Vk(N) (with an end state)

Traceback: πN* = argmaxk Vk(N)

πi-1* = Ptrπi (i)

The Viterbi Algorithm

Similar to “aligning” a set of states to a sequence Time:

O(K2N) Space:

O(KN)

x1 x2 x3 …………xi-1…xi…………………….xN State 1

j

K

Vj(i)

5

The edit graph for the decoding problem

• The Decoding Problem is essentially finding a longest path in a directed acyclic graph (DAG)

Viterbi Algorithm – a practical detail

Underflows are a significant problem

P[ x1,…., xi, π1, …, πi ] = a0π1 aπ1π2,…,aπi eπ1(x1),…,eπi(xi)

These numbers become extremely small – underflow Solution: Take the logs of all values

Vr(i) = log ek(xi) + maxk [ Vk(i-1) + log akr ]

Example Let x be a sequence with a portion that has 1/6 6’s, followed by a portion with ½ 6’s

x = 123456123456…12345 6626364656…1626364656

Then, it is not hard to show that the optimal parse is:

FFF…………………...F LLL………………………...L

Example Observed Sequence: x = 1,2,1,6,6 P(x) = 0.0002195337 Best 8 paths: LLLLL 0.0001018 FFFFF 0.0000524 FFFLL 0.0000248 FFLLL 0.0000149 FLLLL 0.0000089 FFFFL 0.0000083 LLLLF 0.0000018 LFFFF 0.0000017

Conditional Probabilities given x

Position F L 1 0.5029927 0.4970073 2 0.4752192 0.5247808 3 0.4116375 0.5883625 4 0.2945388 0.7054612 5 0.2665376 0.7334624

Problem 1: Evaluation

Finding the probability a sequence is generated by the

model

Generating a sequence by the model Given a HMM, we can generate a sequence of length n as follows: 1. Start at state π1 according to probability a0π1 2. Emit letter x1 according to probability eπ1(x1)

3. Go to state π2 according to probability aπ1π2

4. … until emitting xn

1

2

K …

1

2

K …

1

2

K …

…

…

…

1

2

K …

x1 x2 x3 xn

2

1

K

2 0

e2(x1)

a02

6

The Forward Algorithm

want to calculate P(x) = probability of x, given the HMM (x = x1,…,xN )

Sum over all possible ways of generating x:

P(x) = Σall paths π P(x, π) To avoid summing over an exponential number of paths π,

define

fk(i) = P(x1,…,xi, πi = k) (the forward probability)

The Forward Algorithm – derivation

the forward probability: fk(i) = P(x1…xi, πi = k)

= Σπ1…πi-1 P(x1…xi-1, π1,…, πi-1, πi = k) ek(xi)

= Σr Σπ1…πi-2 P(x1…xi-1, π1,…, πi-2, πi-1 = r) ark ek(xi)

= Σr P(x1…xi-1, πi-1 = r) ark ek(xi)

= ek(xi) Σr fr(i-1) ark

The Forward Algorithm A dynamic programming algorithm: Initialization:

f0(0) = 1 ; fk(0) = 0, for all k > 0

Iteration:

fk(i) = ek(xi) Σr fr(i-1) ark Termination:

P(x) = Σk fk(N)

The Forward Algorithm If our model has an “end” state: Initialization:

f0(0) = 1 ; fk(0) = 0, for all k > 0

Iteration:

fk(i) = ek(xi) Σr fr(i-1) ark Termination:

P(x) = Σk fk(N) ak0 Where, ak0 is the probability that the terminating state is k (usually = a0k)

Relation between Forward and Viterbi VITERBI

Initialization:

V0(0) = 1 Vk(0) = 0, for all k > 0

Iteration:

Vj(i) = ej(xi) maxk Vk(i-1) akj Termination:

P(x, π*) = maxk Vk(N)

FORWARD

Initialization: f0(0) = 1 fk(0) = 0, for all k > 0

Iteration:

fl(i) = el(xi) Σk fk(i-1) akl

Termination:

P(x) = Σk fk(N)

The most probable state Given a sequence x, what is the most likely state

that emitted xi? In other words, we want to compute P(πi = k | x)

Example: the dishonest casino Say x = 12341623162616364616234161221341 Most likely path: π = FF……F

However: marked letters more likely to be L

7

Motivation for the Backward Algorithm

We want to compute P(πi = k | x), We start by computing

P(πi = k, x) = P(x1…xi, πi = k, xi+1…xN) = P(x1…xi, πi = k) P(xi+1…xN | x1…xi, πi = k) = P(x1…xi, πi = k) P(xi+1…xN | πi = k)

Then, P(πi = k | x) = P(πi = k, x) / P(x) = fk(i) bk(i) / P(x)

Forward, fk(i) Backward, bk(i)

The Backward Algorithm – derivation

Define the backward probability:

bk(i) = P(xi+1…xN | πi = k)

= Σπi+1…πN P(xi+1,xi+2, …, xN, πi+1, …, πN | πi = k)

= Σr Σπi+2…πN P(xi+1,xi+2, …, xN, πi+1 = r, πi+2, …, πN | πi = k)

= Σr er(xi+1) akr Σπi+2…πN P(xi+2, …, xN, πi+2, …, πN | πi+1 = r)

= Σr er(xi+1) akr br(i+1)

The Backward Algorithm A dynamic programming algorithm for bk(i): Initialization:

bk(N) = 1, for all k Iteration:

bk(i) = Σr er(xi+1) akr br(i+1) Termination:

P(x) = Σr a0r er(x1) br(1)

The Backward Algorithm In case of an “end” state: Initialization:

bk(N) = ak0, for all k Iteration:

bk(i) = Σr er(xi+1) akr br(i+1) Termination:

P(x) = Σr a0r er(x1) br(1)

Computational Complexity

What is the running time, and space required for Forward, and Backward algorithms?

Time: O(K2N) Space: O(KN)

Useful implementation technique to avoid underflows - Rescaling at each position by multiplying by a constant

Scaling

• Define:

• Recursion:

• Choosing scaling factors such that Leads to:

Scaling for the backward probabilities:

8

Posterior Decoding

We can now calculate fk(i) bk(i) P(πi = k | x) = ––––––– P(x)

Then, we can ask What is the most likely state at position i of sequence x ?

Using Posterior Decoding we can now define:

= argmaxk P(πi = k | x)

Posterior Decoding

• For each state,

Posterior Decoding gives us a curve of probability of state for each position, given the sequence x

That is sometimes more informative than Viterbi path π*

• Posterior Decoding may give an invalid sequence of states

Why?

Viterbi vs. posterior decoding

• A class takes a multiple choice test. • How does the lazy professor construct the

answer key? • Viterbi approach: use the answers of the best

student • Posterior decoding: majority vote

Viterbi, Forward, Backward

VITERBI Initialization:

V0(0) = 1 Vk(0) = 0, for all k > 0

Iteration: Vr(i) = er(xi) maxk Vk(i-1) akr Termination: P(x, π*) = maxk Vk(N)

FORWARD Initialization:

f0(0) = 1 fk(0) = 0, for all k > 0

Iteration:

fr(i) = er(xi) Σk fk(i-1) akr

Termination:

P(x) = Σk fk(N) ak0

BACKWARD Initialization:

bk(N) = ak0, for all k Iteration:

br(i) = Σk er(xi+1) akr bk(i+1) Termination:

P(x) = Σk a0k ek(x1) bk(1)

A+ C+ G+ T+

A- C- G- T-

A modeling Example

CpG islands in DNA sequences

Methylation & Silencing

• Methylation Addition of CH3in C-

nucleotides Silences genes in

region

• CG (denoted CpG) often mutates to TG, when methylated

• Methylation is inherited during cell division

9

CpG Islands

CpG nucleotides in the genome are frequently methylated

C → methyl-C → T Methylation often suppressed around genes, promoters

→ CpG islands

CpG Islands

• In CpG islands: CG is more frequent Other dinucleotides (AA, AG, AT…) have different frequencies

Problem: Detect CpG islands

A model of CpG Islands – Architecture

A+ C+ G+ T+

A- C- G- T-

CpG Island

Non CpG Island

A model of CpG Islands – Transitions How do we estimate parameters of the

model? Emission probabilities: 1/0 1. Transition probabilities within CpG

islands

Established from known CpG islands

2. Transition probabilities within non-CPG islands

Established from non-CpG islands

+ A C G T A .180 .274 .426 .120

C .171 .368 .274 .188

G .161 .339 .375 .125

T .079 .355 .384 .182

- A C G T A .300 .205 .285 .210

C .233 .298 .078 .302

G .248 .246 .298 .208

T .177 .239 .292 .292

A model of CpG Islands – Transitions • What about transitions between (+) and (-) states? • Their probabilities affect

Avg. length of CpG island

Avg. separation between two CpG islands

q X Y

1-p

1-q

p

Length distribution of X region:

P[LX = 1] = 1-p P[LX = 2] = p(1-p) … P[LX= k] = pk-1(1-p) E[LX] = 1/(1-p) Geometric distribution, with

mean 1/(1-p)

A model of CpG Islands – Transitions No reason to favor exiting/entering (+) and (-) regions at a particular nucleotide

• Estimate average length LCPG of a CpG island:

LCPG = 1/(1-p) ⇒ p = 1 – 1/LCPG

• For each pair of (+) states: akr ← p × akr+

• For each (+) state k, (-) state r:

akr=(1-p)(a0r-)

• Do the same for (-) states

A problem with this model: CpG islands don’t have a geometric length distribution This is a defect of HMMs – a price we pay for ease of analysis &

efficient computation

A+ C+ G+ T+

A- C- G- T-

1–p 1-q

10

Using the model Given a DNA sequence x,

The Viterbi algorithm predicts locations of CpG islands Given a nucleotide xi, (say xi = A)

The Viterbi parse tells whether xi is in a CpG island in the most likely parse

Using the Forward/Backward algorithms we can calculate

P(xi is in CpG island) = P(πi = A+ | x)

Posterior Decoding can assign locally optimal predictions

of CpG islands = argmaxk P(πi = k | x)

Posterior decoding

Results of applying posterior decoding to a part of human chromosome 22

Posterior decoding Viterbi decoding

Sliding window (size = 100) Sliding widow (size = 200)

11

Sliding window (size = 300) Sliding window (size = 400)

Sliding window (size = 600) Sliding window (size = 1000)

Modeling CpG islands with silent states What if a new genome comes? • Suppose we just sequenced the porcupine

genome

• We know CpG islands play the same role in this genome

• However, we have no known CpG islands for porcupines

• We suspect the frequency and characteristics of CpG islands are quite different in porcupines

How do we adjust the parameters in our model?

LEARNING

12

Two learning scenarios • Estimation when the “right answer” is known

Examples:

GIVEN: a genomic region x = x1…xN where we have good (experimental) annotations of the CpG islands

GIVEN: the casino player allows us to observe him one evening as he changes the dice and produces 10,000 rolls

• Estimation when the “right answer” is unknown

Examples:

GIVEN: the porcupine genome; we don’t know how frequent are the CpG islands there, neither do we know their composition

GIVEN: 10,000 rolls of the casino player, but we don’t see when he changes dice

GOAL: Update the parameters θ of the model to maximize P(x|θ)

When the right answer is known Given x = x1…xN for which π = π1…πN is known, Define:

Akr = # of times k → r transition occurs in π Ek(b) = # of times state k in π emits b in x

The maximum likelihood estimates of the parameters are:

Akr Ek(b) akr = ––––– ek(b) = ––––––– Σi Aki Σc Ek(c)

When the right answer is known

Intuition: When we know the underlying states, the best estimate is the average frequency of transitions & emissions that occur in the training data

Drawback:

Given little data, there may be overfitting: P(x|θ) is maximized, but θ is unreasonable 0 probabilities – VERY BAD

Example:

Suppose we observe: x = 2 1 5 6 1 2 3 6 2 3 5 3 4 2 1 2 1 6 3 6 π = F F F F F F F F F F F F F F F L L L L L Then: aFF = 14/15 aFL = 1/15 aLL = 1 aLF = 0 eF(4) = 0; eL(4) = 0

Pseudocounts

Solution for small training sets:

Add pseudocounts Akr = # times k→r state transition occurs + tkr Ek(b) = # times state k emits symbol b in x + tk(b)

tkr, tk(b) are pseudocounts representing our prior belief Larger pseudocounts ⇒ Strong prior belief Small pseudocounts (ε < 1): just to avoid 0 probabilities

Pseudocounts

Akr + tkr Ek(b) + tk(b) akr = ––––––– ek(b) = –––––––––– Σi Aki + tkr Σc Ek(c) + tk(b)

Pseudocounts

Example: dishonest casino

We will observe player for one day, 600 rolls

Reasonable pseudocounts: t0F = t0L = tF0 = tL0 = 1; tFL = tLF = tFF = tLL = 1; tF(1) = tF(2) = … = tF(6) = 20 (strong belief fair is fair) tL(1) = tL(2) = … = tL(6) = 5 (wait and see for loaded)

Above numbers arbitrary – assigning priors is an art

13

When the right answer is unknown

We don’t know the actual Akr, Ek(b) Idea: • Initialize the model • Compute Akr, Ek(b) • Update the parameters of the model, based on Akr, Ek(b) • Repeat until convergence

Two algorithms: Baum-Welch, Viterbi training

When the right answer is unknown

Given x = x1…xN for which the true π = π1…πN is unknown,

EXPECTATION MAXIMIZATION (EM) 1. Pick model parameters θ 2. Estimate Akr, Ek(b) (Expectation) 3. Update θ according to Akr, Ek(b) (Maximization) 4. Repeat 2 & 3, until convergence

Estimating new parameters To estimate Akr: At each position of sequence x, find probability that transition k→r is used: P(πi = k, πi+1 = r, x1…xN) Q P(πi = k, πi+1 = r | x) = –––––––––––––––––––– = –––––

P(x) P(x) Q = P(x1…xi, πi = k, πi+1 = r, xi+1…xN) = P(πi+1 = r, xi+1…xN | πi = k) P(x1…xi, πi = k) = P(πi+1 = r, xi+1xi+2…xN | πi = k) fk(i) = P(xi+2…xN | πi+1 = r) P(xi+1 | πi+1 = r) P(πi+1 = r | πi = k) fk(i) = br(i+1) er(xi+1) akr fk(i)

fk(i) akr er(xi+1) br(i+1) So: P(πi = k, πi+1 = r | x, θ) = ––––––––––––––––––

P(x | θ)

Estimating new parameters fk(i) akr er(xi+1) br(i+1) P(πi = k, πi+1 = r | x, θ) = –––––––––––––––––– P(x | θ)

Summing over all positions gives the expected number of times the transition is used:

fk(i) akr er(xi+1) br(i+1)

Akr = Σi P(πi = k, πi+1 = r | x, θ) = Σi ––––––––––––––––– P(x | θ)

k r

xi+1

akr

er(xi+1)

br(i+1) fk(i)

x1………xi-1 xi+2………xN

xi

Estimating new parameters Emission probabilities:

Σ {i | xi = b} fk(i) bk(i) Ek(b) = ––––––––––––––––––––––

P(x | θ) When you have multiple sequences: sum over

them.

The Baum-Welch Algorithm Initialization:

Pick the best-guess for model parameters (or random) Iteration:

1. Forward 2. Backward 3. Calculate Akr, Ek(b) + pseudocounts 4. Calculate new model parameters akr, ek(b) 5. Calculate new log-likelihood P(x | θ) Likelihood guaranteed to increase (EM)

Repeat until P(x | θ) does not change much

14

The Baum-Welch Algorithm

Time Complexity: # iterations × O(K2N)

• Guaranteed to increase the log likelihood P(x | θ) • Not guaranteed to find globally best parameters:

Converges to local optimum • Too many parameters / too large model: Overfitting

Alternative: Viterbi Training

Initialization: Same Iteration:

1. Perform Viterbi, to find π* 2. Calculate Akr, Ek(b) according to π* + pseudocounts 3. Calculate the new parameters akr, ek(b)

Until convergence Comments:

Convergence is guaranteed – Why? Does not maximize P(x | θ) Maximizes P(x | θ, π*) In general, worse performance than Baum-Welch

HMM variants

Higher-order HMMs

• How do we model “memory” of more than one time step?

First order HMM: P(πi+1 = r | πi = k) (akr) 2nd order HMM: P(πi+1 = r | πi = k, πi -1 = j) (ajkr) …

• A second order HMM with K states is equivalent to a first order HMM with K2 states

state H state T

aHT(prev = H) aHT(prev = T)

aTH(prev = H) aTH(prev = T)

state HH state HT

state TH state TT

aHHT

aTTH

aHTT aTHH aTHT

aHTH

Note that not all transitions are allowed!

Modeling the Duration of States

Length distribution of region X:

E[LX] = 1/(1-p)

• Geometric distribution, with mean 1/(1-p)

This is a significant disadvantage of HMMs Several solutions exist for modeling different length distributions

X Y

1-p

1-q

p q

Solution 1: Chain several states

X Y

1-p

1-q

p

q X X

Disadvantage: Still very inflexible LX = C + geometric with mean 1/(1-p)

15

Solution 2: Negative binomial distribution

Suppose the duration in X is m, where During first m – 1 turns, exactly n – 1 arrows to next state are followed In the mth turn, an arrow to next state is followed

m – 1

P(LX = m) = n – 1 (1 – p)n pm-n

X

p

X X

p

1 ‒ p 1 ‒ p

p

…… Y

1 ‒ p

n copies of state X:

Example: genes in prokaryotes

• EasyGene: a prokaryotic gene-finder (Larsen TS, Krogh A)

• Codons are modeled with 3 looped triplets of states (negative binomial with n = 3)

Solution 3: Duration modeling Upon entering a state: 1. Choose duration d, according to probability distribution 2. Generate d letters according to emission probs 3. Take a transition to next state according to transition

probs Disadvantage: Increase in complexity:

Time: O(D2) Space: O(D)

where D = maximum duration of state