Dynamic View Dependent Isosurface Extraction

Yarden Livnat, Charles Hansen

UUSCI-2003-004

Scientific Computing and Imaging Institute, University of Utah

Salt Lake City, UT 84112 USA

2002

Abstract:

We present a dynamic view-dependent isosurface extraction method. Our approach is based on the three-stage approach we first suggested in WISE [15], i.e., front-to-back traversal, software pruning based on visibility, and final rendering using the graphics hardware. In this work we re-examine the WISE method and similar view-dependent extraction methods, and propose modifications that reduce the extraction time by a factor of 5 to 10. In particular, we suggest using a bottom-up approach for rendering occluding triangles, replacing the traditional marching cubes triangulation with triangle fans, and using point-based rendering for sub-pixel sections of the isosurface.

1 Introduction

The availability of inexpensive yet powerful desktop computers, together with parallel supercomputers at only a few locations, has led to the development of remote visualization techniques. This paradigm enables a scientist to perform very large simulations on a remote supercomputer while visualizing and investigating the results on a local desktop. Remote isosurface visualization of large datasets poses a special challenge due to the limited bandwidth of the intermediate network.

In response to this challenge, current research efforts aim to simplify the geometry of the isosurface after it is extracted and before it is rendered or transmitted over a network [2, 3]. In effect, the aim of such methods is to reduce the complexity of rendering an isosurface to sub-linear complexity with respect to the size of the original isosurface. However, such methods do not address the initial challenge of extracting and constructing the isosurface.

In this paper, we present a dynamic view-dependent isosurface extraction method that is based on the three-stage approach we suggested in WISE [15], i.e., front-to-back traversal, software pruning based on visibility, and final rendering using the graphics hardware. We begin with a review of earlier work on isosurface extraction in Section 2. In Section 3 we identify the bottlenecks in the WISE approach and propose several modifications and additions. We present experimental results and conclude with future work in Section 4.

2 Previous work

Early work [17, 9, 23, 7, 24, 11, 22, 12] on isosurface extraction focused mainly on complete isosurface extraction and thus exhibits a worst-case time complexity of O(n), where n is the size of the data. In 1996, Cignoni et al. [6] presented an optimal isosurface extraction method based on the span space of Livnat et al. [16]. However, as the size of datasets grows, new challenges arise. First, the size of a dataset is often larger than the available memory. Second, the size of the extracted isosurface can overwhelm even the most advanced graphics systems. The first challenge, the size of the data, is addressed by out-of-core isosurface extraction methods [4, 5, 1], which aim at maintaining the data on disk and loading only the relevant sections of it at a time. Another research effort, view-dependent isosurface extraction, targets the size of the extracted isosurface by extracting only the visible portion of the isosurface.

View-dependent isosurface extraction was first introduced by Livnat and Hansen [15]. Their approach is based on a front-to-back traversal of the data while maintaining a virtual framebuffer of all the extracted triangles. The virtual framebuffer is used during the traversal to cull sections of the dataset that are hidden from the given view point by closer parts of the isosurface. Livnat and Hansen also proposed a particular implementation for the virtual framebuffer and for performing visibility queries against this framebuffer. Their method, termed WISE, is based on shear-warp factorization [13] and Greene's coverage masks [10]. Gao and Shen [8] presented a distributed parallel view-dependent approach that uses multi-pass occlusion culling with an emphasis on load balancing.

A ray casting approach was presented by Parker et al. [20, 19]. This approach lends itself very well to large shared-memory machines due to the parallel nature of ray casting. An additional benefit of the ray casting approach is the ability to generate global illumination effects such as shadows. Liu et al. [14] also used ray casting, but instead of calculating the intersection of each ray with the isosurface, their rays are used to identify active cells. These active cells are then used as seeds for the more traditional isosurface propagation method.

Zhang et al. [25] presented a parallel out-of-core view-dependent extraction method. In this approach, sections of the data are distributed to the various processors. For a given isovalue, each processor uses ray casting to generate an occlusion map. The maps are then merged and redistributed to all the processors. Using this global occlusion map, each processor extracts its visible portion of the isosurface. They noted that updating the occlusion map during the traversal is expensive even when using a hierarchical occlusion map, and thus opted not to update the occlusion maps and to rely on the first occlusion map approximation.

3 SAGE

The WISE algorithm provides a particular implementation of the view-dependent approach, and its performance demonstrated the potential benefits of such an approach. The two most prominent weaknesses of current view-dependent methods that are based on the WISE approach are the ratio of triangle intersections per screen cell and the fill rate of the screen tiles hierarchy.

In the following we present a new approach to view-dependent isosurface extraction that aims at addressing these weaknesses. This approach is based on the WISE method and the lessons learned from it, and is thus termed SAGE. Our new approach presents four new features:

1. Bottom-up updates to the framebuffer hierarchy while maintaining top-down queries.

2. Scan conversion of multiple triangles at once, i.e., the use of concave polygons.

3. Replacing sub-pixel [meta-] cells with points and normals.

4. Fast estimation of the screen bounding box of a given [meta-] cell.

3.1 A Bottom Up Approach

Current view-dependent isosurface extraction methods [15, 8] that use a virtual framebuffer employ a hierarchical structure in order to accelerate visibility queries. However, if the triangles are small, then each update (rendering of a triangle) of the hierarchy requires a deep traversal of the hierarchy. Such traversals are expensive, yet each generally adds only a small incremental change.

To alleviate the problem of projecting many small triangles down the hierarchical tile structure, we employ a bottom-up approach, as shown in Figure 1. Using this approach, the contribution of a small triangle is limited to only a small neighborhood in the hierarchy, i.e., a few tiles at the lowest level.

Figure 1: Bottom-up and top-down usage in SAGE. (Diagram labels: "Polygons: scan and update"; "Meta cells: visibility check".)

The bottom-up approach is realized by projecting the triangles directly onto the bottom level, which is at the screen resolution. Only the tiles that are actually changed by the projection of the triangle are checked further to see whether they cause changes up the hierarchy. Since the contribution of the triangle is assumed to be small, its effect up the hierarchy will also be minimal.
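The bottom-up update and the top-down query can be illustrated with a minimal C++ sketch. It is not the SAGE implementation: the `CoveragePyramid` class, its quadtree layout, and the per-tile counter of full children are assumptions made for illustration, and the screen is assumed to be a square with a power-of-two side. `coverPixel` writes at screen resolution and climbs only while a tile becomes completely covered, while `isCovered` answers a rectangle query from the root down.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical coverage pyramid (illustration only, not the SAGE data structure).
// Level 0 is the screen resolution; each tile at level l > 0 has four children.
class CoveragePyramid {
public:
    explicit CoveragePyramid(int levels) : levels_(levels) {
        assert(levels >= 1);
        for (int l = 0; l < levels; ++l) {
            const std::size_t s = static_cast<std::size_t>(sideAt(l));
            count_.push_back(std::vector<std::uint8_t>(s * s, 0));
        }
    }

    int side() const { return sideAt(0); }

    // Bottom-up update: mark one pixel and climb only while a tile becomes full.
    // Returns the number of hierarchy levels that were modified.
    int coverPixel(int x, int y) {
        assert(x >= 0 && y >= 0 && x < side() && y < side());
        int touched = 0;
        for (int l = 0; l < levels_; ++l) {
            std::uint8_t& c = count_[l][index(l, x >> l, y >> l)];
            if (c == need(l)) break;  // the pixel was already covered
            ++c;
            ++touched;
            if (c < need(l)) break;   // tile changed but is not full yet
        }
        return touched;
    }

    // Top-down query: is every pixel of [x0,x1) x [y0,y1) covered?
    bool isCovered(int x0, int y0, int x1, int y1) const {
        return query(levels_ - 1, 0, 0, x0, y0, x1, y1);
    }

private:
    int sideAt(int l) const { return 1 << (levels_ - 1 - l); }
    static int need(int l) { return l == 0 ? 1 : 4; }  // full children required
    std::size_t index(int l, int tx, int ty) const {
        return static_cast<std::size_t>(ty) * sideAt(l) + tx;
    }
    bool query(int l, int tx, int ty, int x0, int y0, int x1, int y1) const {
        const int size = 1 << l;  // tile edge length in pixels
        const int px = tx << l, py = ty << l;
        if (px >= x1 || py >= y1 || px + size <= x0 || py + size <= y0)
            return true;          // tile lies outside the queried rectangle
        if (count_[l][index(l, tx, ty)] == need(l)) return true;  // full tile
        if (l == 0) return false; // an uncovered pixel inside the rectangle
        for (int dy = 0; dy < 2; ++dy)
            for (int dx = 0; dx < 2; ++dx)
                if (!query(l - 1, 2 * tx + dx, 2 * ty + dy, x0, y0, x1, y1))
                    return false;
        return true;
    }

    int levels_;
    std::vector<std::vector<std::uint8_t>> count_;
};
```

On a 4x4 screen (`CoveragePyramid p(3)`), covering the first three pixels of a 2x2 tile modifies two levels each, and the fourth pixel modifies three, because only then does the tile become full and report to its parent. This is the sense in which a small triangle affects only a small neighborhood of the hierarchy.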

3.2 Scan Conversion of Concave Polygons

One of the disadvantages of a top-down approach based on the hierarchical tiles is that the algorithm is restricted to convex polygons. In the WISE algorithm, this restriction forced the projection to proceed one triangle at a time.

To alleviate this restriction, the SAGE algorithm employs a scan conversion algorithm that simultaneously projects a collection of triangles and concave polygons. The use of the scan conversion algorithm is made particularly simple in SAGE by the bottom-up update approach. The projected triangles and polygons are scan-converted at screen resolution at the bottom level of the tile hierarchy before the changes are propagated up the hierarchy. Applying the scan conversion in a top-down fashion would have made the algorithm unnecessarily complex.

Additional acceleration can be achieved by eliminating redundant edges, projecting each vertex only once per cell, and using triangle strips or fans. To achieve these goals, the marching cubes lookup table is first converted into a triangle fan format. The usual marching cubes lookup table contains a list of the triangles (three vertices each) per case.

Wise Sage

3 Triangles9 Edges

3 Triangles9 Edges

1 Polygon5 Edges1 Double Edge

1 Polygon5 Edges

3 Triangles9 Edges

1 Polygon (convex)5 Edges

View direction Image

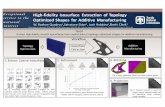

Figure 2: Comparison between WISE and SAGE.

A comparison of the WISE and the SAGE algorithms with respect to the number of polygons and edges that are projected onto the hierarchical tiles is shown in Figure 2.
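The edge counts quoted for Figure 2 can be reproduced with a short C++ sketch. The helper names `fanToTriangles` and `boundaryEdges` are hypothetical and the code does not touch the actual marching cubes table; it only shows that merging a fan of three triangles into one polygon removes the shared interior edges, so 9 projected edges become 5.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>
#include <vector>

using Triangle = std::array<int, 3>;  // three vertex indices
using Edge = std::pair<int, int>;     // undirected edge, smaller index first

// Expand a triangle fan (hub vertex first) into individual triangles.
std::vector<Triangle> fanToTriangles(const std::vector<int>& fan) {
    assert(fan.size() >= 3);
    std::vector<Triangle> tris;
    for (std::size_t i = 1; i + 1 < fan.size(); ++i)
        tris.push_back(Triangle{fan[0], fan[i], fan[i + 1]});
    return tris;
}

// Edges used by exactly one triangle form the outline of the merged polygon;
// edges shared by two triangles are interior and need not be scan-converted.
std::vector<Edge> boundaryEdges(const std::vector<Triangle>& tris) {
    std::map<Edge, int> uses;
    for (const Triangle& t : tris) {
        for (int k = 0; k < 3; ++k) {
            const int a = t[k], b = t[(k + 1) % 3];
            const Edge e(std::min(a, b), std::max(a, b));
            ++uses[e];
        }
    }
    std::vector<Edge> outline;
    for (const auto& kv : uses)
        if (kv.second == 1) outline.push_back(kv.first);
    return outline;
}
```

For the fan `{0, 1, 2, 3, 4}` this yields three triangles with nine edges in total when projected one by one, but an outline of only five edges when projected as a single polygon.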

3.3 Rendering Points

Another potential saving is achieved by using points with normals to represent triangles or [meta-] cells that are smaller than a single pixel. The use of points in isosurface visualization was first proposed as the Dividing Cubes method by Lorensen and Cline. Pfister et al. [21] used points to represent surface elements (surfels) for efficient rendering of complex geometry. The surfels method employs a pre-process sampling stage to create an octree-based surfel representation of a given geometry. During rendering the surfels are projected onto the z-buffer and shaded based on their attributes, such as normal and texture. Special attention is given to the removal of holes and to correct visibility.

Figure 3: Rendering points. The left image was extracted based on the current view point. The right image shows a closeup of the same extracted geometry.

The use of point rendering is an improvement over the WISE algorithm, as the exact location of each screen pixel center is known during scan conversion and the visibility tests. In contrast, WISE operated on a warped version of the final image, and thus the exact location of each pixel was not easily obtained. Whenever a non-empty [meta-] cell is determined to have a size of less than two pixels and its projection covers the center of a pixel, it is represented by a single point. Note that the bounding box can be almost two pixels wide (high) and still cover only a single pixel center. Referring to Figure 4, we require,

    if ( right - left < 2 ) {
        int l = trunc(left);
        if ( left - l >= 0.5 ) l++;
        if ( l + 0.5 < right && right < l + 1.5 ) {
            // create a point
        } else {
            // ignore [meta-] cell
        }
    }

and similarly for the bounding box height.
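The test can be wrapped in a small function to try it on concrete extents. The following C++ sketch mirrors the fragment above for one axis; the `Footprint` enum and the function name `classifyExtent` are hypothetical, and non-negative screen coordinates with pixel centers at integer + 0.5 are assumed.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical result type for the one-dimensional test.
enum class Footprint { TooLarge, Point, Ignore };

// Classify the horizontal extent [left, right] of a projected bounding box.
// Mirrors the fragment in the text; apply the same test to the vertical extent.
Footprint classifyExtent(double left, double right) {
    assert(0.0 <= left && left <= right);  // assumption: non-negative coordinates
    if (right - left >= 2.0) return Footprint::TooLarge;  // not a point candidate
    int l = static_cast<int>(std::trunc(left));
    if (left - l >= 0.5) ++l;              // first pixel center that may be covered
    if (l + 0.5 < right && right < l + 1.5)
        return Footprint::Point;           // create a point
    return Footprint::Ignore;              // the text's else branch
}
```

For example, the extent [0.6, 2.4] is almost two pixels wide yet contains only the center at 1.5, so it is classified as a point, whereas [0.6, 1.4] contains no pixel center and is ignored.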

Figure 3 shows an example in which some of the projected cells are small enough that they can be rendered as points. On the left is the image as seen by the user, while on the right is a closeup view of the same extracted geometry (i.e., the user zoomed in but did not re-extract the geometry based on the new view point). Notice that much of the image on the left is represented as points. Points are not only useful in accelerating the rendering of a large isosurface but also assist in remote visualization, since less geometry needs to be transferred over the network.

Figure 4: Pixel center and the projected bounding box (extent from left to right) for three cases, A, B, and C. A point is created for cases A and B but not for C.

3.4 Fast Estimates of a Bounding Box of a Projected Cell

The use of the visibility tests adds an overhead to the extraction process that should be minimized. Approximating the screen area covered by a meta-cell rather than computing it exactly can accelerate the meta-cell visibility tests. In general, the projection of a meta-cell on the screen has a hexagonal shape with non-axis-aligned edges. We reduce the complexity of the visibility test by using the axis-aligned bounding box of the cell projection on the screen, as seen in Figure 5. This bounding box is an overestimate of the actual coverage and thus will not misclassify a visible meta-cell, though the opposite is possible.

Figure 5: Perspective projection of a meta-cell, the covered area, and its bounding box. (Diagram labels: eye, screen, meta-cell, bounding box of the meta-cell projection.)

The problem is how to find this bounding box quickly. The simplest approach is to project each of the eight vertices of each cell onto the screen and compare them. This process involves eight perspective projections and either two sorts (x and y) or 16 to 32 comparisons.

The solution in SAGE is to approximate the bounding box as follows. Let P be the center of the current meta-cell in object space. Assuming the size of the meta-cell is (dx, dy, dz), we define the eight vectors

$$D = \left(\pm\frac{dx}{2},\ \pm\frac{dy}{2},\ \pm\frac{dz}{2},\ 0\right)$$

The eight corner vertices of the cell can be represented as

$$V = P + D = P + (\pm D_x,\ \pm D_y,\ \pm D_z,\ 0)$$

where $D_x = dx/2$, $D_y = dy/2$, and $D_z = dz/2$. Applying the viewing matrix M to a vertex V amounts to:

$$VM = (P + D)M = PM + DM$$

After the perspective projection, the x screen coordinate of the vertex is:

$$\frac{[VM]_x}{[VM]_w} = \frac{[PM]_x + [DM]_x}{[PM]_w + [DM]_w}$$

To find the bounding box of the projected meta-cell we need to find the minimum and maximum of these projections over the eight vertices in both x and y. Alternatively, we can overestimate these extrema such that we may classify a non-visible cell as visible but not the opposite. Overestimating can thus lead to more work but will not introduce errors.

The maximum x screen coordinate can be estimated as follows,

$$\begin{aligned}
\max\left(\frac{[VM]_x}{[VM]_w}\right)
&\le \frac{\max([PM]_x + [DM]_x)}{\min([PM]_w + [DM]_w)} \\
&\le \frac{[PM]_x + \max([DM]_x)}{\min([PM]_w + [DM]_w)} \\
&\le \frac{[PM]_x + [D^+M^+]_x}{\min([PM]_w + [DM]_w)}
\end{aligned}$$

where we define the + operator to mean taking the absolute value of each element of the vector or matrix.

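Assuming that the meta-cells are always in front of the screen we have

$$V_z > 0 \;\Rightarrow\; P_z - D^+_z > 0 \;\Rightarrow\; [PM]_z - [D^+M^+]_z > 0$$

thus the maximum is bounded from above by

$$\max \frac{[VM]_x}{[VM]_w} \le
\begin{cases}
\dfrac{[PM]_x + [D^+M^+]_x}{[PM]_w - [D^+M^+]_w} & \text{if } [PM]_x + [D^+M^+]_x \ge 0 \\[2ex]
\dfrac{[PM]_x + [D^+M^+]_x}{[PM]_w + [D^+M^+]_w} & \text{otherwise}
\end{cases}$$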

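Similarly, the extent on the minimum side can be overestimated by bounding the minimum x screen coordinate from below,

$$\min \frac{[VM]_x}{[VM]_w} \ge
\begin{cases}
\dfrac{[PM]_x - [D^+M^+]_x}{[PM]_w + [D^+M^+]_w} & \text{if } [PM]_x - [D^+M^+]_x \ge 0 \\[2ex]
\dfrac{[PM]_x - [D^+M^+]_x}{[PM]_w - [D^+M^+]_w} & \text{otherwise}
\end{cases}$$

The top and bottom of the bounding box are computed similarly.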

The complete procedure for estimating the bounding box (see Figure 6) requires only two vector-matrix multiplications, two divisions, four multiplications, four comparisons, and six additions.
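The procedure of Figure 6 can be written as a minimal C++ sketch and checked against the straightforward projection of all eight corners. The type and function names (`Vec4`, `Mat4`, `Box`, `estimateBox`, `exactBox`) are hypothetical. The sketch assumes the row-vector convention V M used in the derivation, a half-size vector D with w = 0 as in the definition in this section, and a cell that lies entirely in front of the eye, so that every denominator is positive.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <limits>

using Vec4 = std::array<double, 4>;
using Mat4 = std::array<Vec4, 4>;  // row vectors: (V M)[j] = sum_i V[i] * M[i][j]

struct Box { double left, right, bottom, top; };

Vec4 mul(const Vec4& v, const Mat4& m) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) r[j] += v[i] * m[i][j];
    return r;
}

// M+: element-wise absolute value of M, precomputed once per view.
Mat4 absMatrix(const Mat4& m) {
    Mat4 a = m;
    for (Vec4& row : a)
        for (double& e : row) e = std::fabs(e);
    return a;
}

// Compute(t, f1, f2) from Figure 6.
double scaleBySign(double t, double f1, double f2) {
    return t > 0 ? t * f1 : t * f2;
}

// Over-estimated screen bounding box of a cell with center P (w = 1)
// and half-size D = (dx/2, dy/2, dz/2, 0).
Box estimateBox(const Vec4& P, const Vec4& D, const Mat4& M, const Mat4& Mabs) {
    const Vec4 PM = mul(P, M);
    const Vec4 DM = mul(D, Mabs);
    assert(PM[3] - DM[3] > 0);  // assumption: the whole cell is in front of the eye
    const double fFar = 1.0 / (PM[3] + DM[3]);
    const double fNear = 1.0 / (PM[3] - DM[3]);
    Box b;
    b.right = scaleBySign(PM[0] + DM[0], fNear, fFar);
    b.left = scaleBySign(PM[0] - DM[0], fFar, fNear);
    b.top = scaleBySign(PM[1] + DM[1], fNear, fFar);
    b.bottom = scaleBySign(PM[1] - DM[1], fFar, fNear);
    return b;
}

// Reference: project all eight corners and take the extrema.
Box exactBox(const Vec4& P, const Vec4& D, const Mat4& M) {
    const double inf = std::numeric_limits<double>::infinity();
    Box b{inf, -inf, inf, -inf};
    for (int k = 0; k < 8; ++k) {
        Vec4 v = P;
        for (int a = 0; a < 3; ++a) v[a] += ((k >> a) & 1) ? D[a] : -D[a];
        const Vec4 s = mul(v, M);
        const double x = s[0] / s[3], y = s[1] / s[3];
        b.left = std::min(b.left, x);
        b.right = std::max(b.right, x);
        b.bottom = std::min(b.bottom, y);
        b.top = std::max(b.top, y);
    }
    return b;
}
```

For any matrix and cell that satisfy the stated assumption, the box returned by `estimateBox` should contain the one returned by `exactBox` (up to floating-point rounding). When the cell is axis-aligned with the view the two coincide, and a rotated view makes the estimate strictly larger.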

4 Results

To evaluate the performance of the SAGE method, we compare it to the performance of the branch-on-need (BON) octree [24], NOISE [16], and WISE [15] methods. We also compare the performance of the three acceleration modifications we propose, namely scan conversion of concave polygons, bounding box estimation, and point-based rendering. The experiments were done using the head and legs sections of the Visible Female dataset [18]. The head dataset has dimensions of 512x512x208 for a total of 104 MB, and the legs dataset is 512x512x617 for a total of 308 MB.

4.1 SAGE vs. Other Extraction Methods

The test cases included (see Figure 7) a normal view of each dataset, a closeup of a small section, and a distant view. Each test was performed twice, using the isovalues corresponding to skin (600.5) and bone (1224.5). The tests were selected such that each reflects different characteristics. For the Visible Woman dataset, the skin isosurface has large contiguous areas of coverage, whereas the isosurfaces for the bone exhibit complex structures with many holes and cavities. The closeup test demonstrates one of the benefits of view-dependent isosurface extraction when only a small section of the isosurface is needed. In contrast, the distant tests show examples where not even the entire visible isosurface should be extracted, but rather only the portion that is visible with regard to the resolution of the screen. The size of the objects on the screen, in the case of the distant view, also corresponds to their size when the full dataset (see Figure 3) is used.

    Precompute M+:
        M = ViewMatrix
        M+[i,j] = |M[i,j]|

    Compute( t, f1, f2 )
        return t > 0 ? t * f1 : t * f2

    FindBoundingBox( cell )
        P = center of cell
        D = ( dx/2, dy/2, dz/2, 0 )
        PM = P * M
        DM = D * M+
        ffar  = 1 / (PMw + DMw)
        fnear = 1 / (PMw - DMw)
        right  = Compute( PMx + DMx, fnear, ffar )
        left   = Compute( PMx - DMx, ffar, fnear )
        top    = Compute( PMy + DMy, fnear, ffar )
        bottom = Compute( PMy - DMy, ffar, fnear )

Figure 6: Procedure for (over)estimating the bounding box of a projected cell.

Figure 7: Example of the three views: (a) distant, (b) normal, (c) closeup.

The experiments were done on an SGI Onyx2 (using a single CPU). Table 1 and Table 2 list the results for these tests.

In all the tests the view-dependent approaches, WISE and SAGE, consistently reduced the number of extracted polygons. The SAGE algorithm generated far fewer triangles than the WISE method (except for one case), by factors of up to 70 (1.5%) for the distant cases, 1.5 for the closeup cases, and up to 7 for the normal view cases. With respect to the extraction time, the SAGE method consistently outperformed WISE by factors of 3 to 7, and in one case by up to a factor of 50.

Table 1: Visible Woman Head Dataset

| isovalue | view | method | extraction time (sec) | number of polygons | view time (sec) |
|---|---|---|---|---|---|
| Skin | any | Octree | 10.9 | 1,430,824 | 2.6 |
| Skin | any | NOISE | 10.2 | | |
| Skin | distant | WISE | 35.1 | 292,242 | 0.6 |
| Skin | distant | SAGE | 3.4 | 18,645 | <0.1 |
| Skin | normal | WISE | 35.8 | 344,628 | 0.6 |
| Skin | normal | SAGE | 4.4 | 195,408 | 0.3 |
| Skin | closeup | WISE | 4.6 | 43,222 | 0.1 |
| Skin | closeup | SAGE | 0.6 | 36,939 | <0.1 |
| Bone | any | Octree | 17.0 | 2,207,592 | 4.6 |
| Bone | any | NOISE | 14.6 | | |
| Bone | distant | WISE | 13.9 | 271,075 | 0.5 |
| Bone | distant | SAGE | 4.1 | 12,747 | <0.1 |
| Bone | normal | WISE | 32.7 | 278,735 | 0.7 |
| Bone | normal | SAGE | 4.5 | 153,617 | 0.2 |
| Bone | closeup | WISE | 10.6 | 84,599 | 0.2 |
| Bone | closeup | SAGE | 1.5 | 67,808 | 0.2 |

These results depend on the depth complexity of the full isosurface as well as the visible portion of the isosurface and its footprint on the screen.

4.2 SAGE Acceleration Modification

The key feature of the SAGE algorithm is the use of the bottom-up rendering approach. In addition, SAGE utilizes three acceleration modifications that distinguish it from its predecessor, WISE: scan conversion of concave polygons, bounding box approximation, and point-based rendering. Table 3 and Table 4 show the advantage of these three modifications. The experiments were done using the same datasets, on a 1.7 GHz Pentium 4 Linux machine with 768 MB of memory and a GeForce4 graphics card.

The results show that using point-based rendering, when appropriate, consistently accelerates the rendering by a factor of 10. This result is especially appealing for isosurface extraction from very large datasets, where many meta-cells project onto the same pixel on the screen. Using scan conversion of concave polygons shows a consistent reduction of about 30% in the extraction time. The bounding box algorithm had the least impact and in general reduces the extraction time by 7-9%. It is interesting to note that for the distant test cases the bounding box estimation performed worse than the full projection by about 1% if point-based rendering was not used. However, when combined with point-based rendering, the bounding box estimation consistently reduced the rendering time by more than 30%.

Another interesting point is that SAGE performed best when only a small section of the isosurface is visible (closeup) or when the isosurface is far away and point-based rendering can be used. This is especially intriguing when one considers that in the first case the isosurface covers most if not all of the image (i.e., the scan conversion has to touch each and every pixel), while in the latter case the isosurface covers only a very small fraction of the final image.

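Table 2: Visible Woman Legs Dataset

| isovalue | view | method | extraction time (sec) | number of polygons | view time (sec) |
|---|---|---|---|---|---|
| Skin | any | Octree | 33.4 | 3,264,755 | 6.2 |
| Skin | any | NOISE | 27.1 | | |
| Skin | distant | WISE | 37.5 | 968,073 | 2.0 |
| Skin | distant | SAGE | 0.7 | 14,917 | <0.1 |
| Skin | normal | WISE | 70.4 | 935,784 | 1.8 |
| Skin | normal | SAGE | 12.1 | 122,229 | 0.2 |
| Skin | closeup | WISE | 16.6 | 364,394 | 0.80 |
| Skin | closeup | SAGE | 5.7 | 234,607 | 0.51 |
| Bone | any | Octree | 18.9 | 2,328,940 | 4.7 |
| Bone | any | NOISE | 16.3 | | |
| Bone | distant | WISE | 18.0 | 412,530 | 0.9 |
| Bone | distant | SAGE | 0.5 | 6,099 | <0.1 |
| Bone | normal | WISE | 33.3 | 406,262 | 0.9 |
| Bone | normal | SAGE | 6.8 | 503,545 | 0.1 |
| Bone | closeup | WISE | 9.6 | 180,270 | 0.5 |
| Bone | closeup | SAGE | 3.8 | 122,262 | 0.3 |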

SAGE performs the worst when the isosurface is large enough that the proposed point-based rendering is not used but the triangles it generates are very small. This issue should be further investigated in future research.

5 Acknowledgments

This work was supported in part by grants from the DOE ASCI, the DOE AVTC, the NIH NCRR, and by the National Science Foundation under Grants 9977218 and 9978099. The authors would like to thank Blythe Nobleman and Chris Johnson for their contribution.

References

[1] C. L. Bajaj, V. Pascucci, D. Thompson, and X. Y. Zhang. Parallel accelerated isocontouring for out-of-core visualization. In Proceedings of the 1999 IEEE symposium on Parallel visualization and graphics, pages 97–104. ACM Press, 1999.

[2] Martin Bertram, Mark A. Duchaineau, Bernd Hamann, and Kenneth I. Joy. Bicubic subdivision-surface wavelets for large-scale isosurface representation and visualization. In Proceedings of Visualization 2000, pages 389–396, October 2000.

[3] Martin Bertram, Daniel E. Laney, Mark A. Duchaineau, Charles D. Hansen, Bernd Hamann, and Kenneth I. Joy. Wavelet representation of contour sets. In Proceedings of Visualization 2001, pages 303–310, October 2001.

[4] Yi-Jen Chiang and Claudio T. Silva. I/O optimal isosurface extraction. In Roni Yagel and Hans Hagen, editors, IEEE Visualization ’97, pages 293–300, 1997.

[5] Yi-Jen Chiang, Cláudio T. Silva, and William J. Schroeder. Interactive out-of-core isosurface extraction. In Proceedings of the conference on Visualization ’98, pages 167–174. IEEE Computer Society Press, 1998.

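Table 3: Visible Woman Head Dataset

| isovalue | view | Scan. | BBox Est. | Points | Extract (msec) | Draw (msec) | Tri. (points) |
|---|---|---|---|---|---|---|---|
| Skin | octree | | | | 1,493 | 576 | 1.430M |
| Skin | distant | reg | no | no | 1,590 | 2 | 13,610 |
| Skin | distant | new | no | no | 1,084 | 2 | 13,610 |
| Skin | distant | new | yes | no | 1,100 | 2 | 13,610 |
| Skin | distant | - | no | yes | 159 | 0.5 | (5,751) |
| Skin | distant | - | yes | yes | 112 | 0.5 | (5,751) |
| Skin | normal | reg | no | - | 1,857 | 80 | 191,483 |
| Skin | normal | new | no | - | 1,294 | 80 | 191,483 |
| Skin | normal | new | yes | - | 1,205 | 80 | 191,483 |
| Skin | closeup | reg | no | - | 155 | 3 | 22,628 |
| Skin | closeup | new | no | - | 102 | 3 | 22,628 |
| Skin | closeup | new | yes | - | 99 | 3 | 22,628 |
| Bone | octree | | | | 2,667 | 894 | 2.207M |
| Bone | distant | reg | no | no | 1,820 | 1.4 | 9,736 |
| Bone | distant | new | no | no | 1,326 | 1.4 | 9,736 |
| Bone | distant | new | yes | no | 1,279 | 1.4 | 9,736 |
| Bone | distant | - | no | yes | 169 | 0.3 | (4,289) |
| Bone | distant | - | yes | yes | 115 | 0.3 | (4,289) |
| Bone | normal | reg | no | - | 2,062 | 70 | 167,949 |
| Bone | normal | new | no | - | 1,512 | 70 | 167,949 |
| Bone | normal | new | yes | - | 1,380 | 70 | 167,949 |
| Bone | closeup | reg | no | - | 615 | 25 | 57,669 |
| Bone | closeup | new | no | - | 432 | 25 | 57,669 |
| Bone | closeup | new | yes | - | 437 | 25 | 57,669 |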

[6] P. Cignoni, C. Montani, E. Puppo, and R. Scopigno. Optimal isosurface extraction from irregular volume data. In Proceedings of IEEE 1996 Symposium on Volume Visualization. ACM Press, 1996.

[7] R. S. Gallagher. Span filter: An optimization scheme for volume visualization of large finite element models. In Proceedings of Visualization ’91, pages 68–75. IEEE Computer Society Press, Los Alamitos, CA, 1991.

[8] Jinzhu Gao and Han-Wei Shen. Parallel view-dependent isosurface extraction using multi-pass occlusion culling. In Parallel and Large Data Visualization and Graphics, pages 67–74. IEEE Computer Society Press, Oct 2001.

[9] M. Giles and R. Haimes. Advanced interactive visualization for CFD. Computing Systems in Engineering, 1(1):51–62, 1990.

[10] Ned Greene. Hierarchical polygon tiling with coverage masks. In Computer Graphics, Annual Conference Series, pages 65–74, August 1996.

[11] T. Itoh and K. Koyamada. Isosurface generation by using extrema graphs. In Visualization ’94, pages 77–83. IEEE Computer Society Press, Los Alamitos, CA, 1994.

[12] T. Itoh, Y. Yamaguchi, and K. Koyamada. Volume thinning for automatic isosurface propagation. In Visualization ’96, pages 303–310. IEEE Computer Society Press, Los Alamitos, CA, 1996.

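Table 4: Visible Woman Legs Dataset

| isovalue | view | MC. | BBox Est. | Pts | Extract (msec) | Draw (msec) | Tri. (points) |
|---|---|---|---|---|---|---|---|
| Skin | octree | | | | 11,407 | 1,832 | 3.874M |
| Skin | distant | reg | no | no | 4,688 | 8 | 32,618 |
| Skin | distant | new | no | no | 3,306 | 8 | 32,618 |
| Skin | distant | new | yes | no | 3,376 | 8 | 32,618 |
| Skin | distant | - | no | yes | 475 | 2 | (14,900) |
| Skin | distant | - | yes | yes | 300 | 2 | (14,900) |
| Skin | normal | reg | no | - | 4,826 | 63 | 150,818 |
| Skin | normal | new | no | - | 3,331 | 63 | 150,818 |
| Skin | normal | new | yes | - | 3,058 | 63 | 150,818 |
| Skin | closeup | reg | no | - | 976 | 42 | 102,103 |
| Skin | closeup | new | no | - | 683 | 42 | 102,103 |
| Skin | closeup | new | yes | - | 638 | 42 | 102,103 |
| Bone | octree | | | | 2,381 | 1,044 | 2.2329M |
| Bone | distant | reg | no | no | 2,593 | 3 | 12,976 |
| Bone | distant | new | no | no | 1,841 | 3 | 12,976 |
| Bone | distant | new | yes | no | 1,705 | 3 | 12,976 |
| Bone | distant | - | no | yes | 274 | 0.3 | (6,333) |
| Bone | distant | - | yes | yes | 180 | 0.3 | (6,333) |
| Bone | normal | reg | no | - | 2,593 | 30 | 76,931 |
| Bone | normal | new | no | - | 1,818 | 30 | 76,931 |
| Bone | normal | new | yes | - | 1,608 | 30 | 76,931 |
| Bone | closeup | reg | no | - | 1,101 | 39 | 95,710 |
| Bone | closeup | new | no | - | 775 | 39 | 95,710 |
| Bone | closeup | new | yes | - | 742 | 39 | 95,710 |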

[13] Philippe G. Lacroute. Fast volume rendering using shear-warp factorization of the viewing transformation. Technical report, Stanford University, September 1995.

[14] Zhiyan Liu, Adam Finkelstein, and Kai Li. Progressive view-dependent isosurface propagation. In Proceedings of Vissym’2001, 2001.

[15] Y. Livnat and C. Hansen. View dependent isosurface extraction. In Visualization ’98, pages 175–180. ACM Press, October 1998.

[16] Y. Livnat, H. Shen, and C. R. Johnson. A near optimal isosurface extraction algorithm using the span space. IEEE Trans. Vis. Comp. Graphics, 2(1):73–84, 1996.

[17] W. E. Lorensen and H. E. Cline. Marching cubes: A high resolution 3D surface construction algorithm. Computer Graphics, 21(4):163–169, July 1987.

[18] National Library of Medicine. The visible human project, 1986. http://www.nlm.nih.gov/research/visible/visible_human.html.

[19] S. Parker, M. Parker, Y. Livnat, P.-P. Sloan, C. Hansen, and P. Shirley. Interactive ray tracing for volume visualization. IEEE Transactions on Visualization and Computer Graphics, 1999.

[20] S. Parker, P. Shirley, Y. Livnat, C. Hansen, and P.-P. Sloan. Interactive ray tracing for isosurface rendering. In Visualization ’98, pages 233–238. IEEE Computer Society Press, October 1998.

[21] Hanspeter Pfister, Matthias Zwicker, Jeroen van Baar, and Markus Gross. Surfels: Surface elements as rendering primitives. In Kurt Akeley, editor, Siggraph 2000, Computer Graphics Proceedings, pages 335–342. ACM Press / ACM SIGGRAPH / Addison Wesley Longman, 2000.

[22] H. Shen and C. R. Johnson. Sweeping simplices: A fast iso-surface extraction algorithm for unstructured grids. Proceedings of Visualization ’95, pages 143–150, 1995.

[23] J. Wilhelms and A. Van Gelder. Octrees for faster isosurface generation. Computer Graphics, 24(5):57–62, November 1990.

[24] J. Wilhelms and A. Van Gelder. Octrees for faster isosurface generation. ACM Transactions on Graphics, 11(3):201–227, July 1992.

[25] Xiaoyu Zhang, Chandrajit Bajaj, and Vijaya Ramachandran. Parallel and out-of-core view-dependent isocontour visualization using random data distribution. In D. Ebert, P. Brunet, and I. Navazo, editors, Joint Eurographics - IEEE TCVG Symposium on Visualization (VisSym-02), pages 1–10, 2002.