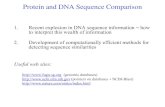


DeepSol: A Deep Learning Framework for Sequence-Based Protein Solubility Prediction

Sameer Khurana¹, Reda Rawi², Khalid Kunji³, Gwo-Yu Chuang², Halima Bensmail³, and Raghvendra Mall³

¹Computer Science and Artificial Intelligence Lab, Massachusetts Institute of Technology, Cambridge, MA, USA

²Vaccine Research Center, National Institute of Allergy and Infectious Diseases, National Institute of Health, Bethesda, MD, USA

³Qatar Computing Research Institute, Hamad Bin Khalifa University, Doha, Qatar

March 12, 2018

Abstract

Motivation: Protein solubility plays a vital role in pharmaceutical research and production yield. For a given protein, the extent of its solubility can represent the quality of its function, and is ultimately defined by its sequence. Thus, it is imperative to develop novel, highly accurate in silico sequence-based protein solubility predictors.

Methods: In this work we propose DeepSol, a novel Deep Learning based protein solubility predictor. The backbone of our framework is a Convolutional Neural Network (CNN) that exploits k-mer structure and additional sequence and structural features extracted from the protein sequence.

Results: DeepSol outperformed all known sequence-based state-of-the-art solubility prediction methods and attained an accuracy of 0.77 and a Matthews correlation coefficient of 0.55. The superior prediction accuracy of DeepSol allows screening for sequences with enhanced production capacity and more reliable prediction of the solubility of novel proteins.

Availability: DeepSol’s best performing models and results are publicly deposited at https://doi.org/10.5281/zenodo.1162886.

Contact: [email protected] & [email protected]

1 Introduction

The heterologous expression of proteins in standard host cells, such as Escherichia coli, often renders the proteins insoluble, reducing their manufacturability [1]. However, the exploration of structural and functional proteomics requires proteins to be produced in soluble form [2]. Thus, solubility of proteins is crucial for production of proteins in both the pharmaceutical and research setting. Enhancement in protein solubility is usually attained by the use of weak promoters or strong denaturants followed by refolding [3], lower temperatures [4], or optimization of other expression conditions [5] such as codon optimization [6].

The primary structure plays a major role in determining the solubility of a protein. Previous studies [4, 7, 8, 9, 10, 11, 12] indicated that several sequence-based features, such as the extent of charged and turn-forming amino acid residues, the level of hydrophobic stretches, protein folding and the length of the protein sequence, exhibit a strong association with protein solubility.

[Figure 1a, flowchart. Data preparation: PROSO II full data set; test set; redundancy reduction with CD-HIT (90% similarity within train, 30% similarity between train and test); training and validation sets; DeepSol training set (42% soluble, 58% insoluble). Additional features: Group 1, direct information from the protein sequence (sequence length, molecular weight, fraction of turn-forming residues, hydropathicity index, aliphatic index, absolute charge); Group 2, structural information derived from the protein sequence with SCRATCH (3-state and 8-state secondary structure classification, fraction of exposed residues (ER), fraction of ER × hydrophobicity of ER). Model: Deep Learning classification; model selection of the best classifier on the validation set; DeepSol solubility prediction; primary classifier performance on the independent test set.]

(a) DeepSol development flowchart.

(b) The Deep Learning module is expanded to outline our proposed DeepSol architectures. Model setting 1 (DeepSol S1) corresponds to the setting where the continuous feature representation ($h$) of the raw input sequence is the output of the $K$ convolution and global max pooling blocks. Convolution blocks are given by the set of triplets $\{(f_k, q_k, a_k)\}_{k=1}^{K}$, where $f_k$ is the convolution filter size, $q_k$ is the number of convolutional filters and $a_k$ is the activation function of the block (see Figure 2 for details of the convolution block). In model setting 2 (DeepSol S2), we concatenate $h$ with 57 sequence and structural features (biological features, referred to as $b$) extracted using third-party bioinformatics tools, represented by the “orange” line. In model setting 3 (DeepSol S3), we transform the biological features using a feed-forward neural network to get $b_p$ before concatenating with $h$, as shown by the “blue” line.

Figure 1: DeepSol overall framework.


Several machine learning based bioinformatics tools have been developed for protein solubility prediction to determine the soluble proteins in silico and omit the expensive trial and error procedures involved in wet-labs. These sequence-based predictors include PaRSnIP [13], PROSO II [14], CCSOL [15], SOLpro [5], PROSO [16], RPSP [9] and the Scoring Card Method (SCM) [17]. The majority of these tools use a support vector machine (SVM) [18, 19] as the core discriminative model on biologically relevant handcrafted features from the protein sequences to distinguish between the soluble and insoluble proteins. The recently proposed technique, PaRSnIP, uses a gradient boosting machine (GBM) [20] on a dataset of over 8,000 mono-, di- and tri-peptide frequency based features along with an additional 57 sequence and structural features. PaRSnIP identified that higher fractions of exposed residues positively correlate with protein solubility and that tri-peptide stretches containing multiple histidines negatively correlate with protein solubility. PROSO II utilizes a Parzen window model with a modified Cauchy kernel and a two-level logistic classifier. CCSOL uses an SVM classifier and identifies coil/disorder, hydrophobicity, β-sheet and α-helix propensities as primary differentiating features. SOLpro also uses a two-stage SVM to build the protein solubility predictor. The PROSO tool uses an SVM with an RBF (radial basis function) kernel [19] and a second-level Naive Bayes classifier. RPSP performs classification with a standard Gaussian distribution to distinguish soluble proteins from insoluble ones. Finally, the SCM method uses a scoring card, utilizing only dipeptide composition to estimate solubility scores of sequences.

The majority of these tools perform a two-stage classification using a plethora of sequence-based features including mono-, di- and tri-peptide frequencies, i.e. over 8,000 features. In the first stage, these methods perform a feature selection task to reduce the risk of over-fitting, followed by the discriminative classification step in the second stage. On the other hand, PaRSnIP relies on the feature importance scores from the GBM model to identify and prune the non-essential features.

In this study, our goal is to build a single-stage protein solubility prediction system that outperforms all existing sequence-based tools. We used Deep Learning models to identify the relationship between the k-mer structure in the input protein sequence and the solubility of the protein. We used Convolutional Neural Networks (CNNs) [21] to exploit the k-mer structure. CNNs construct non-linear high-dimensional k-mer vector spaces. These vector spaces enable the model to encode more information about the k-mer structure, necessary for predicting protein solubility, than just the k-mer frequencies as encoded in the feature vectors of previous machine learning techniques. Our work is inspired by the recent success of several research groups using Deep Learning to model protein sequences for secondary structure [22, 23] and residue-residue contact prediction [24]. The main contributions of this paper are:

1. Our Deep Learning model can be applied directly to raw protein sequences without any extensive feature engineering, which is the hallmark of previous works. Applied directly to raw sequences, our model (DeepSol S1) attains comparable performance to the current state-of-the-art protein solubility predictor, PaRSnIP. Apart from mono-, di- and tri-peptide frequency features, both PaRSnIP and PROSO II use additional biological features extracted from the protein sequences using various external feature extraction toolkits. However, in a Deep Learning framework, discriminative features are extracted from the raw input sequence while optimizing the model for predicting solubility. Hence, the feature representations learned by a Deep Learning model encode not just k-mer frequencies but also higher-level abstract structural features that are necessary for solubility prediction.

2. Our proposed framework simplifies the solubility prediction work-flow for bioinformatics researchers by obviating the need for extensive feature engineering. By including the 57 biological features used in PaRSnIP, the Deep Learning model outperforms all the state-of-the-art sequence-based protein solubility predictors.

3. Our DeepSol models are publicly available, permitting future extensions.

| Sequence features | Structural features |
|---|---|
| Sequence length (1) | 3-state secondary structure (3) |
| Molecular weight (1) | 8-state secondary structure (8) |
| Fraction turn-forming residues (1) | Fraction of exposed residues (0% - 95% cutoffs) (20) |
| Aliphatic index (1) | Fraction of exposed residues × hydrophobicity of exposed residues (0% - 95% cutoffs) (20) |
| Average hydropathicity (1) | |
| Absolute charge (1) | |

Table 1: 57 Additional Sequence & Structural Features for DeepSol

On an independent test set [1], we showed that our predictor, DeepSol, is at least 3.5% more accurate than PaRSnIP and at least 15% more accurate than the second-best solubility predictor, PROSO II. Furthermore, DeepSol was superior to all current sequence-based protein solubility predictors on several other quality metrics, including Matthews correlation coefficient (MCC), selectivity and gain for soluble proteins, and sensitivity for insoluble proteins.

2 Methods

2.1 Overview

The problem of protein solubility prediction is a binary classification problem. We learn a mapping function that takes as input some parametrization, $x$, of a protein sequence and outputs a score in the range $[0, 1] \subset \mathbb{R}$, i.e. $t : x \to [0, 1]$, where $t$ is the mapping function. In this work, $t$ is a Convolutional Neural Network (CNN), a sparse variation of a feed-forward Neural Network architecture that exploits the co-occurrence patterns in the input. A protein sequence is parametrized by a sequence of vectors, $x = (x_1, x_2, \ldots, x_L)$, where $x_l$ is the one-hot encoded vector [25], i.e. a binary vector of length 21 (20 for amino acids and 1 for gap) with 1 bit active for the $l$th amino acid in the protein sequence. This is a common type of word or character encoding that is well known and widely used in natural language processing (NLP) applications [26]. Here $L$ represents the fixed length of the protein sequence, i.e. $L = 1{,}200$.
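The parametrization above can be illustrated with a minimal sketch. The residue ordering, the `-` gap symbol, the mapping of unknown residues to the gap column, and the choice to pad short sequences with the gap symbol are all assumptions made for illustration; the text only fixes the vector length (21) and the sequence length (1,200).

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # 20 standard residues; this ordering is an assumption
GAP = "-"                              # hypothetical gap symbol, also used for padding here
ALPHABET = AMINO_ACIDS + GAP           # 21 symbols in total
INDEX = {symbol: i for i, symbol in enumerate(ALPHABET)}
MAX_LEN = 1200                         # fixed sequence length L from the text


def one_hot_encode(sequence, max_len=MAX_LEN):
    """Return a max_len x 21 list of 0/1 rows, one row per sequence position."""
    if len(sequence) > max_len:
        raise ValueError("sequence longer than max_len")
    padded = sequence.upper() + GAP * (max_len - len(sequence))
    rows = []
    for symbol in padded:
        row = [0] * len(ALPHABET)
        # Unknown symbols (e.g. X) fall back to the gap column in this sketch.
        row[INDEX.get(symbol, INDEX[GAP])] = 1
        rows.append(row)
    return rows


x = one_hot_encode("MKTAYIAK")
print(len(x), len(x[0]))  # 1200 21
```

Each row has exactly one active bit, so multiplying this matrix by an embedding matrix simply selects one embedding row per position.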

2.2 Data Partitioning

We used an initial training dataset consisting of 58,689 soluble and 70,954 insoluble protein sequences originally compiled in [14]. We then performed two major preprocessing steps, as utilized in PaRSnIP [13], to avoid any unwanted bias and to ensure heterogeneity of sequences within the training set. Similar to previous work [14, 15], we first used CD-HIT [27, 28] to decrease sequence redundancy within the training data with a maximum sequence identity of 90%. Second, we pruned out all sequences from the training set that had a sequence identity ≥ 30% to any sequence in the independent test set to prevent any bias caused by homologous sequences. The final training data included 28,972 soluble and 40,448 insoluble proteins.

The independent test set consisted of 1,000 soluble and 1,001 insoluble protein sequences first collected by [1]. We employed this test set as a benchmark for a comprehensive comparison of several state-of-the-art sequence-based protein solubility predictors.

2.3 Data Representation

Deep Learning Model Input: The raw protein sequence was used as the input to the CNN. We did not perform any explicit feature engineering; the CNN was allowed to learn feature representations that best encode the information essential for solubility prediction.

Additional Features: We used two sets of biological features (see Table 1). First, sequence-based features, such as the length of the sequence, molecular weight, and absolute charge, were estimated along with features like the aliphatic index (AI), the average hydropathicity (GRAVY), and the fraction of turn-forming residues. Second, structural features predicted from the protein sequence using SCRATCH [29] were used. We determined 3- and 8-state secondary structure (SS) using SCRATCH to calculate the fraction of residues belonging to each class for a given protein sequence. Additionally, we estimated the fraction of exposed residues (FER) at different relative solvent accessibility (RSA) cutoffs. We used 20 different RSA cutoffs ranging from 0% to 95% with an interval of 5%. We also multiplied the FER by the hydrophobicity indices of the exposed residues. In total, we included 57 sequence and structural features in addition to the feature representations obtained from the CNN to enhance the predictive capability of DeepSol.
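The cutoff grid and the feature count can be made concrete with a small sketch. The per-residue RSA values below are invented, the input format is hypothetical (it is not SCRATCH's output format), and treating a residue as exposed when its RSA is strictly greater than the cutoff is an assumption; the hydrophobicity-weighted variant is not implemented here.

```python
RSA_CUTOFFS = list(range(0, 100, 5))  # 0%, 5%, ..., 95%: 20 cutoffs


def fraction_exposed(rsa_values, cutoff):
    """Fraction of residues whose RSA (in percent) exceeds the cutoff (assumed rule)."""
    exposed = sum(1 for rsa in rsa_values if rsa > cutoff)
    return exposed / len(rsa_values)


def fer_features(rsa_values):
    """One FER value per RSA cutoff."""
    return [fraction_exposed(rsa_values, cutoff) for cutoff in RSA_CUTOFFS]


# Hypothetical per-residue RSA predictions (percent) for a 5-residue toy protein.
toy_rsa = [2.0, 30.0, 55.0, 80.0, 97.0]
fer = fer_features(toy_rsa)

# Feature count following Table 1: 6 sequence features, 3 + 8 secondary
# structure fractions, 20 FER values and 20 FER x hydrophobicity values.
n_features = 6 + 3 + 8 + len(RSA_CUTOFFS) + len(RSA_CUTOFFS)
print(len(fer), n_features)  # 20 57
```

Because the cutoffs increase, the FER values are non-increasing along the grid, which is a cheap sanity check on any real implementation.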

2.4 Model

DeepSol consists of a CNN with multiple convolution blocks that maps the raw protein sequence to a fixed-dimensional continuous feature vector representation (Fig 1b). The model is trained in a supervised learning setting (see Section 2.5) to ensure that the feature vector is discriminative for the classification task.

The input protein sequence, $x \in \mathbb{R}^{L \times 21}$, where 21 is the size of the amino acid symbol dictionary, is transformed to a fixed vector $h_{new} \in \mathbb{R}^{d'}$ by performing the following transformations on $x$:

1. Embed: $x$ is projected to a dense continuous vector space by performing the transformation $E = xW_e$, where $W_e \in \mathbb{R}^{21 \times e}$ is the embedding weight matrix, $e$ corresponds to the embedding dimension and $E \in \mathbb{R}^{L \times e}$ is the embedding matrix.

2. Multi-convolution-pooling: The embedding matrix, $E$, is convolved with $K$ parallel convolution blocks (see Figure 1b). Convolution blocks are represented by a set of triplets $\{(f_k, q_k, a_k)\}_{k=1}^{K}$, where $f_k$ is the convolution filter size, $q_k$ is the number of convolution filters in convolution block $k$ and $a_k$ is the activation function associated with that convolution block. We perform one-dimensional convolution along the protein sequence length, $L$ (see Figure 2 for the description of a convolution block). Convolution blocks output a set of feature maps, $\{Z_k \in \mathbb{R}^{(L - f_k + 1) \times q_k}\}_{k=1}^{K}$. A convolution block $k$ can be expressed using the following mathematical equation:

$$Z_k(m, q) = a_k\left(\sum_{i=0}^{e} \sum_{j=0}^{f_k} C(i, j, q) \times E(i, m + j)\right) \tag{1}$$

where $q = 1, \ldots, q_k$, $C \in \mathbb{R}^{e \times f_k \times q_k}$ is the weight tensor that contains all the $q_k$ convolution filters in that convolution block, $E(i, m + j)$ denotes component $i$ of the embedding at sequence position $m + j$, and $a_k$ is the activation function; we use the rectified linear unit (ReLU) [30] as the activation. $Z_k(m, q)$ is the $(m, q)$th element of the feature map $Z_k$. The weight tensor $C$ is learned during the training phase. After obtaining each feature map, $Z_k$, we carry out a global max pooling operation. Max pooling reduces the number of features during the training phase, which helps prevent over-fitting. This operation leads to a vector, $h_k$, of dimension $q_k$. The vector $h_k$ is obtained by:

$$h_k = [\max Z_k(:, 1);\ \max Z_k(:, 2);\ \ldots;\ \max Z_k(:, q_k)]$$

Finally, we concatenate all such $h_k$ for $k = 1, \ldots, K$ to get:

$$h = [h_1; h_2; \ldots; h_K]$$

We next describe the additional steps required to obtain the protein solubility prediction from the model.

3. Biological Feature Concatenation: Three different experimental settings have been applied:

• DeepSol S1: We simply use $h$ for classification without the additional biological features, i.e. $h_{new} = h$.

• DeepSol S2: We concatenate the biological feature vector $b$ with $h$ from the previous stage, to get $h_{new} = [h; b]$.

• DeepSol S3: We transform the biological features using a feed-forward neural network with $p$ hidden units to obtain the feature vector $b_p \in \mathbb{R}^{p}$ and then concatenate it with $h$, to get $h_{new} = [h; b_p]$.

Here $h_{new} \in \mathbb{R}^{d'}$, and all model settings are illustrated in Figure 1b.


4. Fully Connected Layer: We pass $h_{new}$ through a fully connected hidden layer having $fc$ hidden units, to get $F_c = \mathrm{ReLU}(h_{new} W_{fc})$, where ReLU represents a rectified linear activation unit and $W_{fc} \in \mathbb{R}^{d' \times fc}$ is the weight matrix associated with the fully connected layer.

5. Sigmoid Decision Unit: Finally, each of the 2 output units gives a score between 0 and 1, as illustrated by the equations:

$$P(y = 1 \mid x) = \frac{1}{1 + \exp(-F_c W_o)}, \qquad P(y = 0 \mid x) = 1 - P(y = 1 \mid x)$$

Here $W_o \in \mathbb{R}^{fc \times 2}$ represents the output weight matrix.
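The five steps above can be traced end to end with a small NumPy sketch. This is not the authors' implementation: the weights are random, the dimensions are toy values, the weight tensor is stored as `(f, e, q)` rather than `(e, f, q)`, and a single sigmoid unit with weight vector `wo` stands in for the two-unit output layer (the second probability is its complement). All function and variable names are hypothetical.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def conv_block(E, C):
    """One convolution block: 1-D valid convolution along the sequence, then ReLU.

    E has shape (L, e); C has shape (f, e, q). Returns Z_k with shape (L - f + 1, q).
    """
    f = C.shape[0]
    n_positions = E.shape[0] - f + 1
    # windows[m] is the (f, e) slice of E that starts at sequence position m.
    windows = np.stack([E[m:m + f] for m in range(n_positions)])
    return relu(np.einsum("mfe,feq->mq", windows, C))


def forward(x, We, filters, Wfc, wo, b=None):
    """Return P(y = 1 | x) for a one-hot input x of shape (L, 21)."""
    E = x @ We                                            # step 1: embed
    h = np.concatenate([conv_block(E, C).max(axis=0)      # step 2: convolve and
                        for C in filters])                #         global max pool
    h_new = h if b is None else np.concatenate([h, b])    # step 3: S1 or S2
    Fc = relu(h_new @ Wfc)                                # step 4: fully connected
    return 1.0 / (1.0 + np.exp(-(Fc @ wo)))               # step 5: sigmoid score


# Toy dimensions, far smaller than the paper's (L = 1,200, e = 50).
rng = np.random.default_rng(0)
L, e, fc = 30, 4, 6
filter_specs = [(3, 2), (5, 3)]                   # (f_k, q_k) pairs
x = np.eye(21)[rng.integers(0, 21, size=L)]       # random one-hot sequence
We = rng.normal(size=(21, e))
filters = [rng.normal(size=(f, e, q)) for f, q in filter_specs]
b = rng.normal(size=57)                           # stand-in for the biological features
d_s1 = sum(q for _, q in filter_specs)            # dimension of h
p_s1 = forward(x, We, filters, rng.normal(size=(d_s1, fc)), rng.normal(size=fc))
p_s2 = forward(x, We, filters, rng.normal(size=(d_s1 + 57, fc)), rng.normal(size=fc), b=b)
print(p_s1, p_s2)
```

The DeepSol S3 setting would differ only in passing `b` through one more dense layer before the concatenation.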

Figure 2: Sample convolution block. Here we illustrate how the convolution operation is performed on the embedding matrix $E$ corresponding to a given protein sequence. ‘∗’ refers to the convolution operator. $E$ is convolved with the $q_k$ filters. Each convolution filter outputs a vector $z_k$ that is passed through the non-linear activation function $a_k$; these outputs are combined to form the final feature map $Z_k$.

2.5 Training

The DeepSol model is trained to classify protein sequences into two classes, soluble or insoluble, using the binary cross entropy objective function depicted below:

$$CE = -\sum_{n=1}^{N} \Big[ y_n \ln P(y_n = 1 \mid x_n) + (1 - y_n) \ln\big(1 - P(y_n = 1 \mid x_n)\big) \Big]$$

Here $x_n$ represents the $n$th protein sequence, $y_n$ represents its corresponding soluble or insoluble class label and $N$ represents the total number of proteins in our training set. The models are trained for several epochs using the Adam optimizer [31], which depends on various hyper-parameters such as:

1. Learning rate: The step size that the optimizer should take in the parameter space while updating the model parameters.

2. Batch Size: The number of training examples to consider before updating the parameters.

3. Maximum Epochs: The total number of iterations over the training set.

4. Early Stopping Patience: The number of epochs to wait before stopping the model training, given that the validation loss does not improve.

We preset the learning rate to 0.01, the maximum number of epochs to 50 and the early stopping patience to 10; the optimal value of the batch size, tuned on the cross-validation set, was found to be 64.
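The objective and the stopping rule can be sketched without any Deep Learning library. The functions below are illustrative assumptions: the clipping constant `eps` and the exact patience rule (stop once the validation loss has failed to improve for `patience` consecutive epochs) are common conventions, not details given in the text.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """CE = -sum_n [ y_n ln p_n + (1 - y_n) ln(1 - p_n) ], with p_n = P(y_n = 1 | x_n)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total


def epochs_run(val_losses, max_epochs=50, patience=10):
    """Number of epochs executed before early stopping or the epoch limit."""
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, waited = loss, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return min(len(val_losses), max_epochs)


# Two maximally uncertain predictions cost 2 * ln 2 in total.
print(binary_cross_entropy([1, 0], [0.5, 0.5]))

# Validation loss improves for two epochs and then stalls: training stops at epoch 12.
print(epochs_run([1.0, 0.9] + [0.95] * 20))  # 12
```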

2.6 Evaluation Metrics

The performance of DeepSol was comprehensively compared with several bioinformatics tools using evaluation metrics such as prediction accuracy and Matthews correlation coefficient (MCC). We evaluated additional quality metrics like sensitivity, selectivity and gain for each class, as described in [13].
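As a rough illustration of these metrics, the sketch below computes them from confusion-matrix counts. Accuracy and MCC follow their standard definitions. Taking selectivity as the fraction of predictions for a class that are correct, and gain as selectivity divided by that class's share of the data, is an assumption here; it is roughly consistent with the values reported in Table 2, but the definitions themselves are given in [13], not in this text. The counts are hypothetical.

```python
import math


def binary_metrics(tp, fn, tn, fp):
    """Per-class metrics with soluble as the positive and insoluble as the negative class."""
    n = tp + fn + tn + fp
    sel_sol = tp / (tp + fp)  # assumed: correct soluble calls / all soluble calls
    sel_ins = tn / (tn + fn)  # assumed: correct insoluble calls / all insoluble calls
    return {
        "accuracy": (tp + tn) / n,
        "mcc": (tp * tn - fp * fn)
        / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)),
        "sensitivity_soluble": tp / (tp + fn),
        "sensitivity_insoluble": tn / (tn + fp),
        "selectivity_soluble": sel_sol,
        "selectivity_insoluble": sel_ins,
        "gain_soluble": sel_sol / ((tp + fn) / n),    # assumed: selectivity / class fraction
        "gain_insoluble": sel_ins / ((tn + fp) / n),
    }


# Hypothetical counts for a test set of 1,000 soluble and 1,001 insoluble proteins.
metrics = binary_metrics(tp=650, fn=350, tn=881, fp=120)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

On a nearly balanced test set such as this one, a gain of 2.0 would correspond to perfect selectivity, so gain values are easiest to read relative to that ceiling.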

3 Results

3.1 Hyper-Parameter Tuning

Our Deep Learning models (DeepSol S1, DeepSol S2, DeepSol S3) for solubility prediction involved multiple hyper-parameters. To tune the hyper-parameters, we performed stratified 10-fold cross-validation. The hyper-parameters were tuned using a grid search procedure. The parameters and the range of values tested were as follows:

1. Sequence Length: The length of all protein sequences in the training, validation and test set was fixed to $L = 1{,}200$, which is the length of the longest protein sequence in the dataset. Sequences shorter than 1,200 residues were padded with zeros.

2. Embedding Dimension $e$: We tested the settings $e \in \{50, 64, 100\}$. We found $e = 50$ to be the optimal value after tuning on the validation sets, which gives us the embedding matrix $E \in \mathbb{R}^{1{,}200 \times 50}$.

3. Convolution Filter Settings: For DeepSol S1, we tested three settings, given by the set of triplets $(f_k, q_k, a_k)$ (see Section 2 for explanation of notation). We drop $a_k$ because it is fixed to ReLU for each triplet: 1) $A = \{(3, 64), (7, 64), (11, 128)\}$, 2) $B = \{(3, 64), (5, 64), (7, 64), (11, 128), (13, 128), (15, 128)\}$ and 3) $C = \{(2, 64), (3, 64), \ldots, (15, 128)\}$. Filter sizes ($f_k \in \{2, 3, \ldots, 15\}$) are used to extract local contexts from the protein sequence given the window size. This corresponds to the notion of a “biological word” [32], i.e. a combination of amino acid residues or an amino acid k-mer. For smaller filter sizes ($f_k \le 10$), we used $q_k = 64$, while for larger filters ($f_k > 10$), we used $q_k = 128$.

Subsequent to the multi-convolution layer, we performed global max-pooling to select the maximum value from each feature map. Max-pooling resulted in $q_k$ values, each produced from a distinct convolution filter. We concatenated the values obtained after max-pooling to form a compact vector representation, $h$. In the case of setting A, $h_{new} = h$ and $h_{new} \in \mathbb{R}^{d'}$, where $d' = 256$. The dimension of $h_{new}$, i.e. $d'$, can be calculated using the formula $d' = \sum_{k=1}^{K} q_k + |b|$, where $b = \{\}$ for DeepSol S1, $b \in \mathbb{R}^{57}$ for DeepSol S2 and $b_p \in \mathbb{R}^{p}$ for DeepSol S3.

4. Fully Connected Layer Dimension ($fc$): After the multi-convolution layer gave us a vector representation $h_{new}$, we passed it through a final fully connected feed-forward layer comprising $fc$ neurons/units. In our experiments, the values of $fc$ tested were $fc \in \{64, 128, 256\}$.
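The dimension formula for $d'$ can be checked with a few lines of arithmetic. The sketch assumes that setting C contains every filter size from 2 to 15, which the elided set and the stated filter-size range suggest but do not spell out; the helper names are hypothetical.

```python
def filter_setting(sizes):
    """(f_k, q_k) pairs using the stated rule: 64 filters if f_k <= 10, else 128."""
    return [(f, 64 if f <= 10 else 128) for f in sizes]


SETTINGS = {
    "A": filter_setting([3, 7, 11]),
    "B": filter_setting([3, 5, 7, 11, 13, 15]),
    "C": filter_setting(range(2, 16)),  # assumption: all sizes 2..15
}


def hidden_dim(setting, extra=0):
    """d' = sum of q_k over the blocks, plus the size of any concatenated feature vector."""
    return sum(q for _, q in SETTINGS[setting]) + extra


def feature_map_shape(f, q, seq_len=1200):
    """Shape of Z_k for a valid 1-D convolution: (L - f_k + 1, q_k)."""
    return (seq_len - f + 1, q)


print(hidden_dim("A"))             # 256, matching the value stated for setting A
print(hidden_dim("B", extra=57))   # 633 for DeepSol S2 with the 57 biological features
print(hidden_dim("C", extra=256))  # 1472 for DeepSol S3 with p = 256
print(feature_map_shape(3, 64))    # (1198, 64)
```

Global max pooling collapses the first axis of each feature map, which is why only the $q_k$ values enter the sum.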

The mean performance of the DeepSol models for different settings on the cross-validation sets is shown in supplementary figure 1. The settings B and C performed better than A, and smaller values of $fc$ were preferred over larger values. The best performing setting was C.

Next, for DeepSol S2 and DeepSol S3, we added biological features to the feature representation $h$ that the multi-convolution-pooling layer constructed. In the DeepSol S2 setup, we concatenated the biological features directly, while for DeepSol S3, we passed the biological features through a single-layer feed-forward neural network. The hidden dimension of the neural network was tuned on the validation set and the optimal value was found to be 64. For testing the performance of DeepSol S2 and DeepSol S3, we primarily tested with settings B and C. A comprehensive comparison of the three DeepSol models is provided in supplementary table 1.

The best setting for DeepSol S2 was found to be B and the optimal value of $fc$ was 64. Similarly, the best setting for DeepSol S3 was C and the optimal hidden dimension for the feed-forward neural network to transform the biological features was $p = 256$. The best value of $fc$ for DeepSol S3 was 64.

The final fully connected layer for all DeepSol models ($fc$) was connected to the output layer, which had two neurons, each corresponding to a class. We dropped 20% of the weights connecting any two layers in each architecture to avoid over-fitting. Each model was run for a maximum of 20 epochs with an early stopping criterion: if no improvement was observed in validation accuracy for 10 consecutive epochs, the model building procedure was stopped to prevent over-fitting.

Additional architectures were built (other than DeepSol S1, S2 and S3) using multi-layered and multi-filtered convolutional features, but their predictive performance was inferior to a model with a single layer of multi-filtered convolutional features. Detailed analysis of these models is provided in the supplementary material.

[Figure 3 panels: (a) Precision-Recall Curve; (b) ROC Curve; (c) Accuracy vs. probability threshold curve.]

Figure 3: Comparison of proposed DeepSol models with PaRSnIP and PROSO II on the independent test set. DeepSol S1, S2 and S3 correspond to the best DeepSol model for model setting 1 (S1), model setting 2 (S2) and model setting 3 (S3), respectively. Comparison was performed with respect to Area Under Precision-Recall curve (AUPR) (Figure 3a) and Area Under Receiver Operating curve (AUC) (Figure 3b). We illustrate how accuracy varies as a function of the cutoff which acts as a threshold to discriminate between the soluble and the insoluble class for all these bioinformatics tools (Figure 3c).

3.2 DeepSol Performance

The prediction performance of DeepSol was assessed using an independent test set reported by [1]. We compared DeepSol with the solubility predictors PaRSnIP, PROSO II, CCSOL, SOLpro, PROSO, RPSP and SCM. A comprehensive comparison of the DeepSol models with the best available sequence-based protein solubility predictor, PaRSnIP, and the second-best predictor, PROSO II, is showcased in Figure 3.

DeepSol S2 and S3 outperformed state-of-the-art methods like PaRSnIP and PROSO II with respect to several quality metrics, such as accuracy, selectivity and sensitivity. On the other hand, the performance of DeepSol S1, which used only CNN-based features and no additional biological features, was comparable to PaRSnIP and better than PROSO II but worse than DeepSol S2 and DeepSol S3 (see Figure 3 and Table 2). DeepSol S2 emerged as the best solubility predictor for 5 evaluation metrics, yielding a prediction accuracy of 0.77, which was 3.5% better than PaRSnIP (0.74) and 19% better than PROSO II (0.64). DeepSol S2 achieved an MCC value of 0.55, which was at least 15% better than that obtained by either PaRSnIP (0.48) or PROSO II (0.34) (see Figures 3a and 3b, and Table 2).

DeepSol S2 attained a much higher performance than PaRSnIP with respect to selectivity for soluble (0.84) and sensitivity for insoluble (0.88) proteins (0.76 and 0.78 for PaRSnIP, respectively). However, DeepSol S3 performed the best with respect to selectivity for insoluble (0.73) and gain for insoluble (1.46) proteins. The only metric for which PaRSnIP outperformed the DeepSol models was sensitivity for soluble proteins (0.70), where DeepSol S3 (0.69) was competitive with PaRSnIP. Finally, we assessed the performance of the DeepSol models using different probability cutoffs (see Figure 3c). The best performance for all bioinformatics tools (except PROSO II, for which it was 0.6) was achieved using a threshold of 0.5, which was expected, as the training and the test sets were fairly balanced (see Table 2). We performed an additional comparison highlighting the superiority of the three DeepSol models over PROSO II using 10-fold cross-validation on the training set (see supplementary table 5). Moreover, we used 10-fold cross-validation on the training set to illustrate the stability of the results obtained by the DeepSol models for various evaluation metrics (see supplementary figure 2). This stability is supported by the small amount of variance in the boxplots corresponding to various evaluation metrics for the different DeepSol models (see supplementary figure 2).

| Methods | Accuracy | MCC | Selectivity (Soluble) | Selectivity (Insoluble) | Sensitivity (Soluble) | Sensitivity (Insoluble) | Gain (Soluble) | Gain (Insoluble) |
|---|---|---|---|---|---|---|---|---|
| DeepSol S1 | 0.73 | 0.46 | 0.75 | 0.71 | 0.69 | 0.77 | 1.50 | 1.42 |
| DeepSol S2 | **0.77** | **0.55** | **0.84** | 0.72 | 0.65 | **0.88** | **1.69** | 1.44 |
| DeepSol S3 | **0.77** | 0.54 | 0.81 | **0.73** | 0.69 | 0.84 | 1.63 | **1.46** |
| PaRSnIP | 0.74 | 0.48 | 0.76 | 0.72 | **0.70** | 0.78 | 1.52 | 1.45 |
| PROSO II | 0.64 | 0.34 | 0.67 | 0.68 | 0.69 | 0.66 | 1.33 | 1.35 |
| CCSOL | 0.54 | 0.08 | 0.54 | 0.54 | 0.51 | 0.57 | 1.09 | 1.08 |
| SOLpro | 0.60 | 0.20 | 0.62 | 0.58 | 0.51 | 0.69 | 1.24 | 1.17 |
| PROSO | 0.58 | 0.16 | 0.58 | 0.57 | 0.54 | 0.62 | 1.17 | 1.15 |
| RPSP | 0.52 | 0.03 | 0.52 | 0.51 | 0.44 | 0.59 | 1.03 | 1.02 |
| SCM | 0.60 | 0.21 | 0.65 | 0.57 | 0.42 | 0.77 | 1.30 | 1.14 |

Table 2: Prediction performance of DeepSol compared to 7 protein solubility predictors on the independent test set. Best-performing value for each metric in bold. Performance values for the majority of predictors were obtained from [1]. Here DeepSol S1, S2 and S3 correspond to the best DeepSol model for the 1st, 2nd and 3rd model settings.

Additionally, we built a deep feed-forward neural network classifier based on the exact set of features used in PaRSnIP. We optimized the number of layers, the number of hidden neurons in each layer and the amount of dropout at each layer for good generalization performance, and reduced the risk of over-fitting by performing 10-fold cross-validation. The optimal model, hereafter referred to as DL WPF (Deep Learning With PaRSnIP Features), has 3 feed-forward layers with 128 hidden neurons each and a dropout of 20%. We could not use CNNs, as the local contextual features (mono-, di- and tri-peptide frequencies) from the raw protein sequences were engineered explicitly for PaRSnIP. DL WPF achieved an accuracy of 0.70 and an MCC value of 0.39 on the independent test set. For DL WPF, both accuracy and MCC were much lower than those obtained from PaRSnIP and DeepSol S2, indicating that a feed-forward neural network built on PaRSnIP features was not as capable as either PaRSnIP (GBM) or DeepSol S2 (CNN) for protein solubility prediction.

By using score distribution plots, we were able to compare DL WPF with PaRSnIP and the best proposed approach, DeepSol S2, to identify whether the improvement came from the training algorithm or from the selection of features (see Figure 4a). The plots illustrated empirically that the score distributions for the 3 methods did not follow a normal distribution. Hence, we used the Mann-Whitney-Wilcoxon (MWW) test [33] to compare the pairwise score distributions for each class. We found that the score distributions of DeepSol S2 vs. DL WPF, DL WPF vs. PaRSnIP and DeepSol S2 vs. PaRSnIP were all different (p-value < 0.01) in the case of the insoluble class, and the difference between the mean scores for each of the paired score distributions (DeepSol S2 vs. DL WPF and DL WPF vs. PaRSnIP) was statistically significant (p-value < 0.01) for the soluble class. Moreover, from the violin plot, we observed that the score distributions followed very different density distributions for the 3 methods, particularly in the case of the insoluble class (see Figure 4a). This indicated that the underlying mechanisms used by these models for classifying the insoluble class differed. Both DL WPF and PaRSnIP used the same set of features, but PaRSnIP built more accurate gradient boosted trees, resulting in a score distribution that contrasted with the one obtained from DL WPF for both the soluble and the insoluble class. Additionally, at the optimal cutoff of 0.5, DL WPF had a greater density of scores below the threshold θ for the soluble class, resulting in lower accuracy than DeepSol S2 and PaRSnIP.
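For readers unfamiliar with the test, the following is a minimal pure-Python sketch of a two-sided Mann-Whitney-Wilcoxon comparison between two score samples. It uses the normal approximation without tie or continuity corrections, which is a simplification and not necessarily the procedure used in this study; in practice an established routine such as `scipy.stats.mannwhitneyu` would be preferable. The score lists are invented.

```python
import math


def mann_whitney_u(a, b):
    """Return (U statistic for sample a, two-sided p-value from a normal approximation)."""
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # Extend j over the run of values tied with pooled[i].
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    n1, n2 = len(a), len(b)
    rank_sum_a = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u_a = rank_sum_a - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u_a - mean_u) / sd_u
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return u_a, p_value


# Invented scores from two hypothetical predictors on the same class.
scores_method_1 = [0.10, 0.20, 0.30]
scores_method_2 = [0.70, 0.80, 0.90]
u, p = mann_whitney_u(scores_method_1, scores_method_2)
print(u, p)
```

With samples this small the normal approximation is crude; it becomes reasonable for test sets on the scale of the roughly 1,000 proteins per class used here.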

Interestingly, DeepSol S2 was the only method that was ≥ 99% confident while predicting both the soluble (score = 1) and the insoluble (score = 0) class for several test set proteins (see Figure 4a). Another interesting observation was that DeepSol S2 and PaRSnIP had a similar score distribution for the soluble class (p-value = 0.74), while the mean score for DeepSol S2 (0.24) was significantly lower than the mean score for PaRSnIP (0.32) in the case of the insoluble class. Lower score values are better for the insoluble class, where the actual class label is 0, and higher score values are better for the soluble class, where the actual class label is 1.

[Figure 4 panels: violin plots of the score distribution (y-axis) by true class (x-axis: Insoluble, Soluble), annotated with pairwise p-values.]

(a) Comparison of score distributions of DeepSol S2, DL WPF & PaRSnIP for both insoluble and soluble test set proteins.

(b) Comparison of score distributions of DeepSol S1, S2 and S3 for both insoluble and soluble test set proteins.

Figure 4: Comparison of score distributions for various protein solubility predictors. PaRSnIP and DeepSol S2 have nearly similar score distributions (no statistical significance) for the soluble class, but these are very different for the insoluble class. However, in Figure 4b, only DeepSol S2 and DeepSol S3 have similar shapes for score distributions in the cases of both the soluble and the insoluble class. The violin plot corresponding to each method for each class can efficiently estimate the density of the scores, with thickness proportional to the density. Here the “dark red” colored diamond represents the mean for each score distribution, p represents the p-value and 2e-16 is equivalent to $2 \times 10^{-16}$.

Similarly, we showed empirically that the score distributions for the 3 DeepSol model settings did not follow the normal distribution (see Figure 4b). Hence, we performed the MWW test between pairwise distributions (DeepSol S1 vs. DeepSol S2, DeepSol S2 vs. DeepSol S3, DeepSol S3 vs. DeepSol S1) for both the insoluble and the soluble class. The difference between the mean scores for each paired score distribution was statistically significant for both the insoluble and the soluble class. At the optimal prediction cutoff of 0.5, DeepSol S1 had a higher density of scores ≥ 0.5 for the insoluble class and a higher density of scores ≤ 0.5 for the soluble proteins, resulting in lower predictive accuracy and MCC in comparison to DeepSol S2 and DeepSol S3. Moreover, from the violin plot, we observed that the score density distribution of DeepSol S1 was very different from that of DeepSol S2 and DeepSol S3 in the case of both the insoluble and the soluble class (see Figure 4b). Though the underlying mechanism used by all DeepSol models was CNN-based Deep Learning, DeepSol S2 and DeepSol S3 benefit similarly from the additional 57 biological features, resulting in more accurate models (having almost identical density distributions for both classes) in comparison to DeepSol S1 (see Table 2).

4 Discussion

Novel in silico, sequence-based protein solubility predictors with high prediction accuracy are highly sought after. In this study, we introduce DeepSol, a solubility predictor that uses Deep Learning, in particular convolutional neural networks, together with an additional set of biological features that represent sequence as well as structural properties of proteins. DeepSol (S2 and S3) outperformed, to the best of our knowledge, all existing sequence-based solubility predictors by at least 3.5% in accuracy and 15% in MCC.

Interestingly, the performance of DeepSol S1, which extracts local contextual feature vectors using “biological” words of different lengths (f_k ∈ {2, 3, ..., 15}) from just the raw protein sequence, is comparable with PaRSnIP and much better than SVM-based tools like PROSO II, CCSOL and SOLpro with respect to various evaluation metrics. PaRSnIP uses 8,477 features including mono-, di- and tri-peptide frequencies along with an additional 57 biological features mentioned in Table 1. The authors of PaRSnIP illustrated that k-mer peptide frequencies (where k ∈ {1, 2, 3}) play a major role in predicting the solubility of a protein. The comparable performance of DeepSol S1 therefore suggests that it not only captures mono-, di- and tri-peptide frequencies through the local contextual feature vector, but can also capture additional informative higher order interactions amidst the amino acid residues by means of the convolutional features (f_k ∈ {4, 5, ..., 15}) and higher order abstract structural features such as protein folds [34] (see Table 2). It was shown in [34] that CNNs can be used to accurately predict protein folds, and in a recent review on protein solubility [12], the authors emphasize that sequence-based features such as k-mer frequencies and structural features such as protein folding play a vital role in protein solubility.
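The figure of 8,477 features quoted above is consistent with 20 mono-, 400 di- and 8,000 tri-peptide frequencies plus the 57 biological features. The Python sketch below checks this arithmetic and shows one plausible way to compute explicit k-mer frequencies. It is an illustration only, not PaRSnIP's implementation; the function names and the toy sequence are hypothetical.

```python
from collections import Counter
from itertools import product

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard residues


def kmer_frequencies(sequence, k):
    """Relative frequency of every possible k-mer over the 20 residues.

    k-mers containing non-standard residues count towards the window
    total but get no entry of their own in the returned dictionary.
    """
    windows = max(len(sequence) - k + 1, 1)
    counts = Counter(sequence[i:i + k] for i in range(len(sequence) - k + 1))
    return {
        "".join(kmer): counts.get("".join(kmer), 0) / windows
        for kmer in product(AMINO_ACIDS, repeat=k)
    }


def explicit_feature_count(max_k=3, extra_features=57):
    """Number of explicit features: all k-mers for k = 1..max_k plus extras."""
    return sum(len(AMINO_ACIDS) ** k for k in range(1, max_k + 1)) + extra_features


toy_sequence = "MKVLAAGIVK"  # hypothetical sequence, for illustration only
print(explicit_feature_count())                     # 20 + 400 + 8000 + 57
print(len(kmer_frequencies(toy_sequence, 2)))       # one entry per dipeptide
print(kmer_frequencies(toy_sequence, 1)["K"])       # frequency of lysine
```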

One of the limitations of PaRSnIP is that it explicitly calculates the peptide frequency for different values of k. Hence, for larger values of k, the computational complexity explodes (i.e. 20^k features), whereas CNNs can capture these higher order local interactions with relative ease using multiple parallel non-linear convolution filters. However, PaRSnIP has the ability to provide relative variable importance for each feature, a trait currently missing in the DeepSol architecture.
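To make the scaling argument concrete, the sketch below contrasts the 20^k explicit k-mer features with the number of weights in a single 1D convolution filter of width k, which grows only linearly in k. The embedding dimension used here is a hypothetical value chosen for illustration, and a single filter is of course not equivalent to enumerating all k-mers; the number of filters per width is a separate modelling choice.

```python
def explicit_kmer_features(k, alphabet_size=20):
    """Number of distinct k-mers over the amino acid alphabet."""
    return alphabet_size ** k


def conv_filter_weights(k, embedding_dim):
    """Weights in one 1D convolution filter of width k, plus one bias term."""
    return k * embedding_dim + 1


EMBEDDING_DIM = 50  # hypothetical value, not taken from the paper

for k in (1, 2, 3, 5, 10, 15):
    print(
        f"k={k:2d}  explicit k-mers={explicit_kmer_features(k):.3e}  "
        f"weights per filter={conv_filter_weights(k, EMBEDDING_DIM)}"
    )
```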

DeepSol S2 and S3 models have score means which are closer to each other for both soluble and insoluble classes in comparison to DeepSol S1 (see Figure 4b). This is expected as they both greatly benefit from complementary information incorporated in these models through the additional 57 explicit sequence and structural features. Both DeepSol S2 and DeepSol S3 achieve superior performance for each class (see Table 2 and Figure 4b). This indicates that the local contextual feature vectors learnt via a multi-filter CNN are complemented by sequence and structural features such as fraction of exposed residues (FER), secondary structure features obtained from SCRATCH, hydrophobicity indices of exposed residues, etc., and that the two sets of features do not confound each other. It was shown in PaRSnIP [13] that several of these additional explicit features also play a major role in protein solubility prediction. Moreover, as in DeepSol S1, both DeepSol S2 and DeepSol S3 capture discriminative higher order local interactions amidst the amino acid residues by means of the different convolution filter sizes (f_k ∈ {4, 5, ..., 15}), thereby making their predictive performance superior.

Finally, the primary reason for the enhanced performance of DeepSol models is the choice of a state-of-the-art machine learning technique, convolutional neural networks (CNN). The CNN framework exploits the k-mer structure in the input protein sequence using a set of parallel convolution filters of varying sizes and can efficiently capture abstract structural features such as protein folds [34]. It can inherently capture the non-linear relationships between the local contextual feature vector and the dependent vector (solubility classification), while preventing over-fitting using dropout on the weights, leading to good generalization performance. It overcomes the limitations faced by two-stage classifiers which have a separate step for feature selection. Moreover, the predictive performance of DeepSol models (DeepSol S2 and S3) is significantly boosted by the addition of 57 sequence and structural features extracted from protein sequences using bioinformatics tools (e.g. SCRATCH), which complement the local contextual feature vector obtained from the multi-filter CNN.
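The idea of parallel convolution filters of varying sizes producing one local contextual feature vector can be sketched in plain Python as follows. This is a toy illustration, not the DeepSol architecture: it assumes a one-hot input encoding, random untrained filter weights, a ReLU non-linearity, max-over-time pooling, and simple concatenation with the 57 additional features, all of which are assumptions made here for clarity.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def one_hot(sequence):
    """Encode each residue as a 20-dimensional indicator vector."""
    return [[1.0 if aa == ref else 0.0 for ref in AMINO_ACIDS] for aa in sequence]


def conv1d_max(encoded, filt):
    """Slide one filter of width len(filt) over the sequence, apply ReLU,
    and keep the maximum activation (max-over-time pooling)."""
    k = len(filt)
    best = 0.0  # ReLU outputs are never negative
    for start in range(len(encoded) - k + 1):
        window = encoded[start:start + k]
        activation = sum(
            w * x
            for row_w, row_x in zip(filt, window)
            for w, x in zip(row_w, row_x)
        )
        best = max(best, activation)
    return best


def random_filter(k, rng):
    """Untrained filter of width k; a real model would learn these weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in AMINO_ACIDS] for _ in range(k)]


def local_context_vector(sequence, filter_widths, filters_per_width, seed=0):
    """One pooled value per filter, over all widths in parallel."""
    rng = random.Random(seed)
    encoded = one_hot(sequence)
    return [
        conv1d_max(encoded, random_filter(k, rng))
        for k in filter_widths
        for _ in range(filters_per_width)
    ]


toy_sequence = "MKVLAAGIVKMKVLAAGIVK"  # hypothetical sequence
cnn_features = local_context_vector(toy_sequence, range(2, 16), filters_per_width=2)
extra_features = [0.0] * 57  # placeholder for the 57 additional features
combined = cnn_features + extra_features  # concatenation is an assumption
print(len(cnn_features), len(combined))
```

A filter whose weights are the one-hot encoding of a specific k-mer responds most strongly where that k-mer occurs, which is the sense in which such filters can act as learned k-mer detectors.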

In conclusion, we propose a novel Deep Learning based solubility predictor, DeepSol, that primarily utilizes features extracted from the raw protein sequence using CNNs. The performance of DeepSol is boosted by additional sequence and structural features extracted from the protein sequence. DeepSol overcomes limitations such as the need for a two-stage classifier with a separate step for feature selection and outperforms all existing sequence-based solubility predictors with respect to various evaluation metrics like accuracy, MCC, selectivity and gain for soluble and sensitivity for insoluble proteins.

The capability (sensitivity) of DeepSol S1 (69%) and DeepSol S3 (69%) to correctly identify soluble proteins in the independent test set is comparable to that of sequence-based methods like PaRSnIP (70%) and PROSO II (69%). However, for identifying insoluble proteins, DeepSol S2 (88%) and DeepSol S3 (84%) are 10 and 6 percentage points more sensitive than PaRSnIP (78%) respectively, and 22 and 18 percentage points more sensitive than PROSO II (66%) respectively. Hence, DeepSol can be used, for example, to pre-reject in silico initial targets that are likely to be insoluble, and thus can help to reduce the production cost.

References

[1] C. C. H. Chang, J. Song, B. T. Tey, and R. N. Ramanan. Bioinformatics approaches for improved recombinant protein production in Escherichia coli: protein solubility prediction. Briefings in Bioinformatics, 15(6):953–962, nov 2014.

[2] Wen-Ching Chan, Po-Huang Liang, Yan-Ping Shih, Ueng-Cheng Yang, Wen-chang Lin, and Chun-Nan Hsu. Learning to predict expression efficacy of vectors in recombinant protein production. BMC bioinformatics, 11(1):S21, 2010.

[3] Gregory D Davis, Claude Elisee, Denton M Newham, and Roger G Harrison. New fusion protein systems designed to give soluble expression in Escherichia coli. Biotechnology and bioengineering, 65(4):382–388, 1999.

[4] Susan Idicula-Thomas and Petety V Balaji. Understanding the relationship between the primary structure of proteins and its propensity to be soluble on overexpression in Escherichia coli. Protein science : a publication of the Protein Society, 14(3):582–92, mar 2005.

[5] Christophe N Magnan, Arlo Randall, and Pierre Baldi. SOLpro: accurate sequence-based prediction of protein solubility. Bioinformatics (Oxford, England), 25(17):2200–7, sep 2009.

[6] Bastiaan A van den Berg, Marcel JT Reinders, Marc Hulsman, Liang Wu, Herman J Pel, Johannes A Roubos, and Dick de Ridder. Exploring sequence characteristics related to high-level production of secreted proteins in Aspergillus niger. PLoS One, 7(10):e45869, 2012.

[7] Jean-Denis Pedelacq, Emily Piltch, Elaine C Liong, Joel Berendzen, Kim Chang-Yub, Beom-Seop Rho, Min S Park, Thomas C Terwilliger, and Geoffrey S Waldo. Engineering soluble proteins for structural genomics. Nature biotechnology, 20(9):927, 2002.

[8] G D Davis, C Elisee, D M Newham, and R G Harrison. New fusion protein systems designed to give soluble expression in Escherichia coli. Biotechnology and bioengineering, 65(4):382–8, nov 1999.

[9] D L Wilkinson and R G Harrison. Predicting the solubility of recombinant proteins in Escherichia coli. Biotechnology (Nature Publishing Company), 9(5):443–8, may 1991.

[10] D Christendat, A Yee, A Dharamsi, Y Kluger, A Savchenko, J R Cort, V Booth, C D Mackereth, V Saridakis, I Ekiel, G Kozlov, K L Maxwell, N Wu, L P McIntosh, K Gehring, M A Kennedy, A R Davidson, E F Pai, M Gerstein, A M Edwards, and C H Arrowsmith. Structural proteomics of an archaeon. Nature structural biology, 7(10):903–9, oct 2000.

[11] P Bertone, Y Kluger, N Lan, D Zheng, D Christendat, A Yee, A M Edwards, C H Arrowsmith, G T Montelione, and M Gerstein. SPINE: an integrated tracking database and data mining approach for identifying feasible targets in high-throughput structural proteomics. Nucleic acids research, 29(13):2884–98, jul 2001.

[12] Kyle Trainor, Aron Broom, and Elizabeth M Meiering. Exploring the relationships between protein sequence, structure and solubility. Current Opinion in Structural Biology, 42:136–146, 2017.

[13] Reda Rawi, Raghvendra Mall, Khalid Kunji, Chen-Hsiang Shen, Peter D Kwong, and Gwo-Yu Chuang. PaRSnIP: Sequence-Based Protein Solubility Prediction using Gradient Boosting Machine. Bioinformatics, page btx662, 2017.

[14] Pawel Smialowski, Gero Doose, Phillipp Torkler, Stefanie Kaufmann, and Dmitrij Frishman. PROSO II - a new method for protein solubility prediction. FEBS Journal, 279(12):2192–2200, jun 2012.

[15] Federico Agostini, Michele Vendruscolo, and Gian Gaetano Tartaglia. Sequence-based prediction of protein solubility. Journal of Molecular Biology, 421(2-3):237–241, aug 2012.

[16] P. Smialowski, A. J. Martin-Galiano, A. Mikolajka, T. Girschick, T. A. Holak, and D. Frishman. Protein solubility: sequence based prediction and experimental verification. Bioinformatics, 23(19):2536–2542, oct 2007.

[17] Hui-Ling Huang, Phasit Charoenkwan, Te-Fen Kao, Hua-Chin Lee, Fang-Lin Chang, Wen-Lin Huang, Shinn-Jang Ho, Li-Sun Shu, Wen-Liang Chen, and Shinn-Ying Ho. Prediction and analysis of protein solubility using a novel scoring card method with dipeptide composition. BMC bioinformatics, 13 Suppl 1:S3, 2012.

[18] Corinna Cortes and Vladimir Vapnik. Support-vector networks. Machine Learning, 20(3):273–297, 1995.

[19] Johan A. K. Suykens, Tony Van Gestel, Jos De Brabanter, Bart De Moor, and Joos Vandewalle. Least Squares Support Vector Machines. World Scientific Publishing Co. Pte. Ltd., 2002.

[20] Jerome H. Friedman. Greedy function approximation: A gradient boosting machine. The Annals of Statistics, 29(5):1189–1232, oct 2001.

[21] Yann LeCun, Yoshua Bengio, et al. Convolutional networks for images, speech, and time series. The handbook of brain theory and neural networks, 3361(10):1995, 1995.

[22] Sheng Wang, Jian Peng, Jianzhu Ma, and Jinbo Xu. Protein secondary structure prediction using deep convolutional neural fields. Scientific reports, 6, 2016.

[23] Zhen Li and Yizhou Yu. Protein secondary structure prediction using cascaded convolutional and recurrent neural networks. arXiv preprint arXiv:1604.07176, 2016.

[24] Sheng Wang, Siqi Sun, Zhen Li, Renyu Zhang, and Jinbo Xu. Accurate de novo prediction of protein contact map by ultra-deep learning model. PLoS computational biology, 13(1):e1005324, 2017.

[25] David Harris and Sarah Harris. Digital design and computer architecture. Morgan Kaufmann, 2010.

[26] Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S Corrado, and Jeff Dean. Distributed representations of words and phrases and their compositionality. In Advances in neural information processing systems, pages 3111–3119, 2013.

[27] Weizhong Li and Adam Godzik. CD-HIT: a fast program for clustering and comparing large sets of protein or nucleotide sequences. Bioinformatics (Oxford, England), 22(13):1658–9, jul 2006.


[28] Limin Fu, Beifang Niu, Zhengwei Zhu, Sitao Wu, and Weizhong Li. CD-HIT: accelerated for clustering the next-generation sequencing data. Bioinformatics (Oxford, England), 28(23):3150–2, dec 2012.

[29] Christophe N Magnan and Pierre Baldi. SSpro/ACCpro 5: almost perfect prediction of protein secondary structure and relative solvent accessibility using profiles, machine learning and structural similarity. Bioinformatics (Oxford, England), 30(18):2592–7, sep 2014.

[30] Bing Xu, Naiyan Wang, Tianqi Chen, and Mu Li. Empirical evaluation of rectified activations in convolutional network. arXiv preprint arXiv:1505.00853, 2015.

[31] Diederik Kingma and Jimmy Ba. ADAM: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

[32] Ehsaneddin Asgari and Mohammad RK Mofrad. Continuous distributed representation of biological sequences for deep proteomics and genomics. PloS one, 10(11):e0141287, 2015.

[33] Henry B Mann and Donald R Whitney. On a test of whether one of two random variables is stochastically larger than the other. The annals of mathematical statistics, pages 50–60, 1947.

[34] Jie Hou, Badri Adhikari, and Jianlin Cheng. DeepSF: deep convolutional neural network for mapping protein sequences to folds. arXiv preprint arXiv:1706.01010, 2017.
