Cluster Attention Contrast for Video Anomaly...

Transcript of Cluster Attention Contrast for Video Anomaly...

Cluster Attention Contrast for Video Anomaly Detection

Ziming Wang∗[email protected]

ADSPLAB, School of ECE, PekingUniversity

ShenZhen, China

Yuexian Zou∗†[email protected]

ADSPLAB, School of ECE, PekingUniversity

ShenZhen, China

Zeming [email protected]

Harbin Institute of TechnologyHarbin, China

ABSTRACTAnomaly detection in videos is commonly referred to as the dis-crimination of events that do not conform to expected behaviors.Most existing methods formulate video anomaly detection as anoutlier detection task and establish normal concept by minimizingreconstruction loss or prediction loss on training data. However,these methods performances suffer drops when they cannot guar-antee either higher reconstruction errors for abnormal events orlower prediction errors for normal events. To avoid these problems,we introduce a novel contrastive representation learning task, Clus-ter Attention Contrast, to establish subcategories of normality asclusters. Specifically, we employ multi-parallel projection layersto project snippet-level video features into multiple discriminatefeature spaces. Each of these feature spaces is corresponding to acluster which captures distinct subcategory of normality, respec-tively. To acquire the reliable subcategories, we propose the ClusterAttention Module to draw the cluster attention representation of eachsnippet, then maximize the agreement of the representations fromthe same snippet under random data augmentations via momentumcontrast. In this manner, we establish a robust normal concept with-out any prior assumptions on reconstruction errors or predictionerrors. Experiments show our approach achieves state-of-the-artperformance on benchmark datasets.

CCS CONCEPTS•Computingmethodologies→Computer vision; Scene anom-aly detection.

KEYWORDSvideo anomaly detection, cluster attention contrast, contrastivelearning

ACM Reference Format:Ziming Wang, Yuexian Zou, and Zeming Zhang. 2020. Cluster AttentionContrast for Video Anomaly Detection. In Proceedings of the 28th ACM

∗Also with Peng Cheng Laboratory.†Corresponding author ([email protected]).

Permission to make digital or hard copies of all or part of this work for personal orclassroom use is granted without fee provided that copies are not made or distributedfor profit or commercial advantage and that copies bear this notice and the full citationon the first page. Copyrights for components of this work owned by others than theauthor(s) must be honored. Abstracting with credit is permitted. To copy otherwise, orrepublish, to post on servers or to redistribute to lists, requires prior specific permissionand/or a fee. Request permissions from [email protected] ’20, October 12–16, 2020, Seattle, WA, USA© 2020 Copyright held by the owner/author(s). Publication rights licensed to ACM.ACM ISBN 978-1-4503-7988-5/20/10. . . $15.00https://doi.org/10.1145/3394171.3413529

𝒇𝒒(#) 𝒇𝒌(#)

𝒈𝒌(#)𝒈𝒒(#)

𝒉𝒊𝟏𝒒 , 𝒉𝒊𝟐

𝒒 , ⋯ , 𝒉𝒊𝑲𝒒 𝒉𝒊𝟏𝒌 , 𝒉𝒊𝟐𝒌 , ⋯ , 𝒉𝒊𝑲𝒌

Representations in K feature spaces

Cluster attention representation 𝒛𝒊𝒌𝒛𝒊𝒒

𝒕~𝜯 𝒕,~𝜯

Maximize agreement

"𝒙𝒊𝒒 "𝒙𝒊𝒌𝒙𝒊

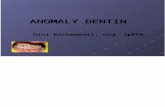

Figure 1: Themotivation of our work. The high-quality clus-ter attention representation of a snippet should be similarto the same sample under random data augmentation, anddistinguishable from other samples.

International Conference on Multimedia (MM ’20), October 12–16, 2020, Seat-tle, WA, USA. ACM, New York, NY, USA, 9 pages. https://doi.org/10.1145/3394171.3413529

1 INTRODUCTIONAnomaly detection in videos is an important task because of itsextensive applications in intelligent surveillance, activity recogni-tion, evidence investigation, etc. Since anomalous events are rarelyseen in common environments, anomalies are often defined as be-havioral or appearance patterns different from usual patterns inprevious work [2, 3]. It is extremely challenging because of the am-biguity and complexity of anomaly definition in real applications.Obviously, it is almost infeasible to collect all examples of abnormalevents and perform traditional supervised learning methods. There-fore, for a typical setting for anomaly detection tasks, only normalscenarios are given in the training data. Based on this definition,most recently anomaly detection approaches [3, 6, 27, 30, 38, 53, 60]are based on outlier detection and learn a model of normality fromtraining videos. During inference, events are labeled as abnormal ifthey deviate from the normality model. These methods for model-ing normal concept can be categorized into two types, namely thereconstruction-based framework [12, 13, 35, 53] and the prediction-based framework [37, 61]. They both regard events that do not fitinto the normality models as abnormal, but their performancessuffer when they cannot guarantee either higher reconstruction

errors for abnormal events or lower prediction errors for normalevents. However, these methods do not explicitly distinguish be-tween normal conditions that differ greatly. For example, on thesidewalks on campus, there are both normal when no one to passby or when a large number of pedestrians pass by after school, butthe two conditions have completely different motion patterns.

Different from previous methods, we propose a novel contrastiverepresentation learning task, Cluster Attention Contrast, to obtainmultiple subcategories of normality. We appreciate the diversity ofnormal conditions and exploit these gaps to establish a robust nor-mal concept. We propose to employ a contrastive learning paradigmto establish reliable subcategories of normality as clusters. Specifi-cally, we employ multi-parallel projection layers to project snippet-level video features into multiple discriminate feature spaces. Eachof these feature spaces is corresponding to a cluster which cap-tures distinct subcategory of normality, respectively. To acquirethe reliable subcategories, we propose the Cluster Attention Moduleand strive to draw the optimal cluster attention representation ofeach snippet by maximizing the agreement of the representationsfrom the same snippet under random data augmentations. Duringinference, for each test snippet, the highest similarity between itssubcategory-specific representations and corresponding centersis regarded as its regularity score. We detect anomalies based onthis intuition: if a sample is far away from the centers in all corre-sponding feature spaces, we can determine that an anomaly hasoccurred.

Our contribution is summarized as follows:• We introduce a novel contrastive representation learningtask, Cluster Attention Contrast, to establish a robust normalconcept for video anomaly detection task. As far as we know,this is the first work in the related field.• We propose an effective contrastive learning frameworkfor video anomaly detection. Our method achieves state-of-the-art results on two benchmark datasets: CUHK Avenue,ShanghaiTech Campus, where it reaches 87.0% and 79.3%frame-level AUC score, respectively.

We organize the paper as follows. We present related work inSection 2 and describe our approach in Section 3. We conductthe video anomaly detection experiments and elaborate on theimplementation details in Section 4. We draw our conclusions inSection 5.

2 RELATEDWORKVideo Anomaly Detection. As one of the most challenging prob-lems, anomaly detection in videos has been extensively studiedfor many years. Video Anomaly Detection is commonly formal-ized as an outlier detection task, most the research addresses theproblem under the assumption that anomalies are rare or unseen,and behaviors deviating from normal patterns are supposed to beanomalous. Researchers attempt to encode regular patterns viaa variety of statistic models, e.g., the social force model [38], themixture of dynamic models on texture [30], Hidden Markov Mod-els on video volumes [16, 28], the Markov Random Field uponspatial-temporal domain [27], Gaussian process modeling [6, 29],and identify anomalies as outliers. With the great success of deeplearning, the recent video anomaly detection work can be classified

into deep reconstruction methods and deep prediction methods.Hasan et al. [13] employed two autoencoders, one is learned onconventional handcrafted features, while another is learned in anend-to-end fashion with a fully convolutional network. On theother hand, Liu et al. [32] proposed a future frame prediction net-work for anomaly detection. What more closely related to our workare methods [6, 7, 11, 34, 34, 43] that learn a dictionary of atomsrepresenting normal events during training, they utilize sparse rep-resentation to construct a dictionary for normal behavior and detectanomalies as the ones with high reconstruction error. The maindifference between these methods and our work is that we do notsparsely encode features to evaluate reconstruction loss but directlyjudge the occurrence of anomalies by the similarities between itssubcategory-specific representations and corresponding centers.

Deep Clustering. Over the years, many methods have been de-veloped for deep clustering. Some earlier deep clustering methodsdivided representation learning and clustering into two stages, drawon SAE [4, 15] or its variants [36, 39, 49, 58]to extract intermediatefeatures, and then employ k-means [30, 36] or spectral cluster-ing [30]. In contrast to them, some studies[9, 52, 57, 62]integratethe two stages into a unified framework. At the same time, somemethods [17, 19, 54] directly use the convolutional neural network(CNN) or multi-layer perceptron (MLP) for representation learningby designing specific loss functions instead of the reconstructionloss. Furthermore, Semantic deep clustering methods have recentlygained more attention for clustering. IIC [25] directly trains a clas-sification network by maximizing the mutual information betweenoriginal data and their transformations. However, the computationof mutual information requires an immense batch size in the train-ing process, which is challenging to apply on large images. Themost similar method to our approach is DMC[18], which performsclustering by aligning the cluster centers of two modal features.DMC[18] uses pretrained image features to guide the learning andclustering of speech features in a teacher-student way. Besides, itneeds to be clarified that our approach is distinguished from theconcept of deep subspace clustering methods[24, 59]. The maindifference is our method directly projects the original space intoa lower-dimensional feature space instead of using a subset of theoriginal space dimensions.

Contrastive Representation Learning. Contrastive represen-tation learning makes use of internal patterns of data and devisespredictive tasks to train a model. Many pretext tasks have beenproposed: counting the objects[40], predicting the context [10], fill-ing in missing parts of an image [42], or recovering colors fromgrayscale images [58]. As for videos, self-supervision strategiesinvolve: predicting future [47], leveraging temporal continuity viatracking [50, 51], or preserving the equivariance of egomotion[23, 41, 63]. CMC[46] presented a contrastive learning frameworkthat enables the learning of unsupervised representations frommultiple views of a dataset. The principle of maximization of mu-tual information enables the learning of powerful representations.These methods can be thought of as building dynamic dictionaries.SimCLR[5] verified the impact of different types of data augmenta-tion and project heads on contrastive learning and pointed out con-trastive learning benefits from larger mini-batch sizes and longertraining compared to its supervised counterpart. At the same time,contrastive learning benefits from deeper and wider networks like

Project Layers

Cluster Attention Module

⋯

Pretrained Feature Extractor

LayerNorm

𝑧𝑧𝑖𝑖𝑞𝑞 𝑧𝑧𝑖𝑖𝑘𝑘

Cluster Attention Module

encoder q encoder k

Maximize agreement

𝑡𝑡~𝛵𝛵 𝑡𝑡′~𝛵𝛵⋯ ⋯⋯

Project Layers Project Layers Project Layers ⋯

Pretrained Feature Extractor

LayerNorm

Project Layers Project Layers

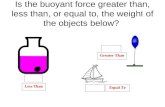

Figure 2: Overview of the Cluster Attention Contrast task.We perform two random data augmentation transformations on theinput snippet, and send them to the two encoders, respectively. We regard the output of encoder q and the output of encoderk as a positive pair if they originate from the same snippet, and otherwise as a negative sample pair. Finally, we employ acontrastive loss to maximize agreement the cluster attention representation of the positive pairs. Best viewed in color.

supervised learning. MoCo[14] present Momentum Contrast as away of building large and consistent dictionaries for unsupervisedlearning with a contrastive loss. Considering that video featureextraction requires a considerable amount of computing resources,we use the momentum contrast to train our network to obtain betterperformance with a small mini-batch size.

3 PROPOSED METHOD3.1 OverviewOur method proposes to establish a robust normal concept withoutany prior assumptions on reconstruction errors or prediction errors.Unlike previous works, we introduce an instance discriminationpretext task, Cluster Attention Contrast, to obtain reliable subcat-egories of normality, which is the core contribution of this work.As illustrated in Figure 2, our model consists of two fully symmet-ric encoders. Each encoder mainly consists of three components:a pretrained feature extractor, a parallel projection layers group,and a Cluster Attention Module. We employ multi-parallel pro-jection layers to project snippet-level video features into multiplediscriminative feature spaces for capturing diverse normal patterns.We introduce the Cluster Attention Module to connect the ClusterAttention Contrast task with the Video Anomaly Detection task.

Specifically, we first construct the input of encoders by a tem-poral cuboid using a sliding window technique. We stack 𝑇 = 8frames together as a snippet. It should be noted, all training andinference phases are performed on such snippets. During Training,for each snippet as illustrated in Figure 2, we draw two random dataaugmentations on the snippet and send them to the two encoders, re-spectively. In each encoder, we first use a pretrained action classifierto extract snippet-level features and perform a layer normalization

to improve regularization. Then we employ 𝐾 parallel projectionlayers to project snippet-level video features into 𝐾 discriminatefeature spaces. In this work, 𝐾 is a manually pre-fixed parameter.Each of these 𝐾 feature spaces is corresponding to a cluster whichcaptures distinct subcategory of normality. In Cluster AttentionModule, we redraw the original snippet-level feature through thelinear combination of its 𝐾 subcategory-specific representations.The linear combination weight is obtained by the similarity be-tween the feature’s subcategory-specific representations and thecorresponding cluster centers. After this redrawn snippet-level fea-ture passes through a project head 𝐻 , we can obtain the output ofour encoder, i.e., the cluster attention representation.

The cluster attention representation is the link between the VideoAnomaly Detection task and our contrastive representation learn-ing task. On the one hand, for our instance discrimination pretexttask, a high-quality cluster attention representation should satisfythe requirement to agree on the same sample under random dataaugmentation and to distinguish from different samples. On theother hand, due to this representation is obtained by subcategory-specific representations through our cluster attention mechanism,we need reliable projected feature spaces to support it. We uti-lize those 𝐾 feature spaces and corresponding cluster centers toconstruct a robust normal concept for Video Anomaly Detectiontask.

To estimate the cluster centers while training, we modify an EM-like[8] optimization mechanism: alternately updating the clustercenter and the model parameters, which makes a soft assignmentbased on the posterior probabilities and converges to a local opti-mum. It is worth noting that ourmethod only calculates the centroidof features in determining feature space as the cluster center insteadof introducing an additional clustering task.

Algorithm 1 Cluster Center EstimatingInput:

temperature 𝜏𝑐 , number of clusters 𝐾 , feature queue {𝒖𝑖 }𝑀𝑖=1structure of project layers

{𝑝𝑞

1 , 𝑝𝑞

2 , . . . , 𝑝𝑞

𝐾

},

{𝑝𝑘1 , 𝑝

𝑘2 , . . . , 𝑝

𝑘𝐾

}Initialize the 𝑠𝑞

𝑖 𝑗= 𝑠𝑘

𝑖 𝑗= 0.

for 𝑣 ∈ {𝑞, 𝑘} dofor all 𝑖 = 1 to𝑀 , 𝑗 = 1 to 𝐾 do

ℎ𝑣𝑖 𝑗

= 𝑝𝑣𝑗(𝑢𝑖 )

𝑤𝑣𝑖 𝑗

= softmax(𝑠𝑣𝑖 𝑗

)𝑐𝑣𝑗=∑𝑀𝑖=1𝑤

𝑣𝑖 𝑗ℎ𝑣𝑖 𝑗# update centers

𝑠𝑣𝑖, 𝑗

= ℎ𝑣⊤𝑖 𝑗𝑐𝑣𝑗/(𝜏𝑐

ℎ𝑣𝑖 𝑗 𝑐𝑣𝑗 ) # update similarityend for

end forreturn cluster centers for each encoder,{𝑐𝑞

1 , 𝑐𝑞

2 , . . . , 𝑐𝑞

𝐾|𝑐𝑞𝑗∈ 𝑅N

},

{𝑐𝑘1 , 𝑐

𝑘2 , . . . , 𝑐

𝑘𝐾|𝑐𝑘𝑗∈ 𝑅N

}We elaborate in section 3.2 on estimating the center over the

features through a differentiable approach, and introduce theClusterAttention Module and Cluster Attention Contrast task in section 3.3.

3.2 ClusteringEstablish Subcategories of Normality as Clusters. For simplic-ity, consider the task of obtaining the center of the subcategory𝑗 specific feature space of the encoder 𝑞, which can be expressedas 𝑐𝑞

𝑗. The simplest idea is selecting the centroid data point of all

features in current subcategory specific feature space as its center.Consider all the features in subcategory 𝑗 specific feature space:{ℎ𝑞

1𝑗 , ℎ𝑞

2𝑗 , . . . , ℎ𝑞

𝑀 𝑗|ℎ𝑞𝑖 𝑗∈ 𝑅N

}and an alternative center 𝑐𝑞

𝑗, hence,

the objective function can be formulated as,

minF (𝑐𝑞𝑗) =

𝑀∑𝑖=1

𝑑

(ℎ𝑞

𝑖 𝑗, 𝑐𝑞

𝑗

)(1)

where 𝑑(ℎ𝑞

𝑖 𝑗, 𝑐𝑞

𝑗

)means the distance between current point and the

alternative center. However, simply introducing Eq.(1) into the deepnetworks will make it difficult to optimize by gradient descent, asthe minimization function in Eq.(1) is a hard assignment of whethera data point is the center or not, which is not differentiable. Inspiredby DMC[18], we transform the hard assignment in Eq.(1) to a softassignment problem and be a differentiable one. We regard thecenter of each cluster as a linear combination of the features incurrent subcategory specific feature space, and the weight of eachfeature in the center can be obtained by the following formula,

𝑤𝑞

𝑖 𝑗=

𝑒−𝑑𝑞

𝑖 𝑗/𝜏𝑐∑𝑀

𝑖=1 𝑒−𝑑𝑞

𝑖 𝑗/𝜏𝑐

= softmax(𝑠𝑞

𝑖 𝑗

)(2)

where 𝑠𝑞𝑖 𝑗

= −𝑑(ℎ𝑞

𝑖 𝑗, 𝑐𝑞

𝑗

)/𝜏𝑐 means the similarity between cur-

rent point and the alternative center.Unlike DMC[18], we propose to use 𝑠𝑖, 𝑗 = ℎ⊤𝑖 𝑗𝑐 𝑗/

(𝜏𝑐

ℎ𝑖 𝑗 𝑐 𝑗 )as the similarity measure, which can flexibly control the softnessof assignment by adjusting clustering temperature hyperparameter

𝜏𝑐 . In our early experiments, we found simply introducing a cosineproximity as similarity measure may make the cluster centers tendto a simple mean average of related features. Therefore, we intro-duce the temperature 𝜏𝑐 to control the softness of the assignment.Experimental results show setting a slightly harder assignment thanDMC[18] can achieve better performance. Then, the minimizationoperation in Eq.(1) is approximatively computed as,

𝑐𝑞

𝑗=

𝑀∑𝑖=1

𝑤𝑞

𝑖 𝑗ℎ𝑞

𝑖 𝑗(3)

However, there remains another problem that the computationof 𝑠𝑖 depends on the current center 𝑐 which makes it difficult to getthe direct update rules for the centers. we choose the same way asDMC[18] to alternatively update the coefficient 𝑠𝑞 (𝑟 )

𝑖and center

𝑐𝑞 (𝑟+1)𝑗

, Eq.(3) is modified into,

𝑐𝑞 (𝑟+1)𝑗

=

𝑀∑𝑖=1

𝑤𝑞 (𝑟 )𝑖 𝑗

ℎ𝑞

𝑖 𝑗(4)

ConsistentCentersUse aQueue.Considering thatmini-batchgradient descent is usually used for optimization during training,which result in incoherent center representation due to the limitedmini-batch size. To address this problem, we propose to use a fea-ture queue to maintain the features from several previous batches.The introduction of the feature queue decouples the number ofsubcategory-specific representations involved in clustering fromthe mini-batch size. The new centers can benefit from the featurequeue by performing clustering on more projected features. Ourqueue size can be much larger than a typical mini-batch size andbe flexibly and independently set as a hyperparameter. We showthe complete cluster center estimating process in Algorithm 1.

AvoidingTrivial Solutions. Furthermore, trivial solutionsmayappear when the cluster centers are not constrained. For example,in an extreme case, all cluster centers are highly similar, whichis not guaranteed to obtain distinguished subcategories. This willresult in all the feature spaces focus on a few patterns and losethe remaining essential information for the normal concept. Toovercome this problem, we recommend attaching constraints toalign the centers obtained by the two encoders. In practice, weregard the cluster centers in the encoder q as the query, while thecluster centers in the encoder k as the key. The encoder q andencoder k are designed to have the same structure, and the clustercenters obtained from the corresponding project layers in the twoencoders are considered as positive pairs. Then we employ theInfoNCE[48] loss to force the 𝑐𝑞 is similar to its positive key 𝑐𝑘+and dissimilar to the other keys:

𝐿𝐷 =1𝐾

𝐾∑𝑖=0− log

exp(𝑐𝑞

𝑖· 𝑐𝑘𝑗+/𝜏

)∑𝐾𝑗=0 exp

(𝑐𝑞

𝑖· 𝑐𝑘𝑗/𝜏) (5)

Another typical trivial solution may appear in other clusteringmethod is that the vast majority of samples are assigned to a fewclusters, but did not appear in our experiments. This is possiblebecause this type of trivial solution does not contribute to mini-mizing the loss of the contrastive representation learning task weproposed in the next section.

Algorithm 2 Cluster Attention ContrastInput:

mini-batch size 𝑁 , structure of T ,structure of encoder 𝑞, encoder 𝑘 .

Initialize the queues and cluster centers.for sampled mini-batch {𝑥𝑖 }𝑁𝑖=1 do

for all 𝑖 ∈ {1, . . . , 𝑁 } dodraw two augmentation functions 𝑡 ∼ T , 𝑡 ′ ∼ T𝑥𝑞

𝑖= 𝑡 (𝑥𝑖 ), 𝑥𝑘𝑖 = 𝑡 ′ (𝑥𝑖 )

𝒉𝑞

𝑖= 𝑓𝑞

(𝑥𝑞

𝑖

), 𝒉𝑘𝑖 = 𝑓𝑘

(𝑥𝑘𝑖

)𝑧𝑞

𝑖= 𝑔𝑞

(𝒉𝑞

𝑖, 𝒄𝑞

), 𝑧𝑘𝑖= 𝑔𝑘

(𝒉𝑘𝑖 , 𝒄

𝑘)

end forupdate encoder network 𝑞 to minimize 𝐿(W) in Eq.(9).momentum update encoder network 𝑘 parameters.update key queue and feature queues.

update cluster centers{𝑐𝑞

𝑗

}𝐾𝑗=1

,{𝑐𝑘𝑗

}𝐾𝑗=1

use algorithm 1.

end forreturn encoder network 𝑞

Once we have obtained the current clustering center in eachfeature space, we can perform Cluster Attention Contrast accordingto the similarity between the subcategory-specific representationsand its corresponding centers.

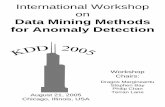

3.3 Cluster Attention ContrastCluster Attention Module. In section 3.2, we have obtained thecluster centers in 𝐾 feature spaces, the next problem to be solvedis the approach to redraw the original feature using its 𝐾 projectedfeatures and corresponding centers. A naive idea is to directly for-malize the 𝐾 similarity scores between the subcategory-specificrepresentations and corresponding centers as the final representa-tion of each original snippet. However, this scheme is not ideal inour early experiments. We speculate that this manner may resultin losing the abundant information in features and confuse ourinstance discrimination pretext task.

In this work, we propose to represent the original snippet-levelfeatures in a similar way as estimating the soft-assignment centerin the 𝐾 feature spaces. We redraw the snippet-level video fea-tures through a linear combination of its 𝐾 projected features. Thelinear combination weight is evaluated by the similarity betweenthe subcategory-specific representations and their correspondingcenters. The higher the similarity score measures the larger theproportion occupies in the final representation. The above processcan be formulated as,

𝑤𝑟𝑖 𝑗 =𝑒−𝑑𝑖 𝑗 /𝜏𝑧∑𝐾𝑗=1 𝑒

−𝑑𝑖 𝑗 /𝜏𝑧= softmax

(𝑠𝑖 𝑗

)(6)

where 𝑠𝑖, 𝑗 = ℎ⊤𝑖 𝑗𝑐 𝑗/(𝜏𝑧

ℎ𝑖 𝑗 𝑐 𝑗 ) measures the similarity betweencurrent point and the corresponding center. 𝜏𝑧 is a manually set

𝐾×128×1

𝐾×1

ℋ

𝑧!

Linear

ℎ!

𝐶

Softmax

Scale

LayerNorm

L2Norm

Clustering

𝐾×1×128

128×𝐾

ℎ!"

128×𝑘

𝑘×𝑘

ℋ#

𝑧#

Linear

ℎ#

𝑐#

Softmax

Scale

LayerNorm

L2Norm

Clustering

𝑘×128

128×𝑘

&ℎ#

128×𝑘

𝑘×𝑘

ℋ#

𝑧#

Linear

ℎ#

𝑐#

Softmax

Scale

LayerNorm

L2Norm

Clustering

𝑘×128

128×𝑘

&ℎ#

Figure 3: Our Cluster Attention Module Design. ’⊗’ denotesmatrix multiplication.

hyperparameter. Then the linear combination of the subcategory-specific representations is regarded as our redrawn feature,

𝑟𝑖 =

𝐾∑𝑗=1

𝑤𝑟𝑖 𝑗ℎ𝑖 𝑗 (7)

Finally, previous work[5] have empirically shown that usingan additional projection before the contrastive losses can improveperformance by suppressing the loss of information and our earlyexperiment concluded consistent with them. Hence, we use anadditional projection head 𝑧𝑖 = H (𝑟𝑖 ) at the end of in our laterexperimental settings. After the redrawn features pass through theproject head, we can obtain the output of the Cluster AttentionModule, i.e., the cluster attention representation. We show thecomplete Cluster Attention Module design in Figure 3.

Momentum Contrast Training. Since Cluster Attention Mod-ule is the final component of our encoders, the cluster attentionrepresentation is the final output of our model. Next, follow theclassic contrastive learning paradigm [5], we consider the outputof encoder q and the output of encoder k as a positive pair if theyoriginate from the same snippet, and otherwise as a negative sam-ple pair. Then we employ the InfoNCE[48] loss to force the positivepairs are similar and the negative pairs are dissimilar,

𝐿𝐶 =1𝑁

𝑁∑𝑖=0− log

exp(𝑧𝑞

𝑖· 𝑧𝑘𝑗+/𝜏

)∑𝑃𝑗=0 exp

(𝑧𝑞

𝑖· 𝑧𝑘𝑗/𝜏) (8)

By incorporating the constraint on the clusters, the total lossfunction is:

𝐿(W) = 𝛼1𝐿𝐶 + 𝛼2𝐿𝐷 + ∥W∥𝐹 (9)whereW represents model weights, 𝛼𝑖 (𝑖 = 1, 2) are hyperparame-ters to weight the losses. However, the contrastive learning para-digm commonly consumes lots of resources particularly with re-spect to video feature extraction. It brings great challenges to model

training. Inspired by MoCo[14], we recommend performing mo-mentum contrast training to address our proposed Cluster AttentionContrast task. In practice, we maintain a queue during training,store the keys of the latest several optimization steps, and contin-uously update the queue as the training process. In addition, tomaintain the key representations’ consistency in the key queue,we follow MoCo[14] to employ momentum update on the parame-ters of encoder k during training, which is an exponential movingaverage of the encoder q parameters1.

Formally, denoting the parameters of encoder q as \q and param-eters of encoder k as \k, we update \k as:

\k ←𝑚\k + (1 −𝑚)\q (10)where𝑚 ∈ [0, 1) is a momentum coefficient.We show the complete Cluster Attention Contrast algorithm in

Algorithm 2.

4 EXPERIMENTSIn this section, we evaluate our proposed method as well as the func-tionalities of different components on two publicly available anom-aly detection datasets, including the CUHK Avenue dataset[34],and the ShanghaiTech Campus dataset[35].

4.1 Datasets and Evaluation MetricHere we briefly introduce the datasets used in our experiments.

CUHK Avenue dataset. It contains 16 training videos and 21testing ones with a total of 47 abnormal events, including throwingobjects, loitering, and running. The size of people may changebecause of the camera position and angle.

The ShanghaiTech Campus dataset. ShanghaiTech Campusdataset is a challenging anomaly detection dataset. It contains 330training videos and 107 testing ones, which is taken in 13 differ-ent scenes with various camera angles and illumination. Abnor-mal activities are diverse and realistic, which include appearanceanomalies like bicycles, skateboards, motorbikes, cars, strollers, andmotion anomalies such as fighting, chasing, pushing, jumping, etc.

Evaluation Metric. In most previous works[13, 32, 34, 35, 61],a popular evaluation metric is to calculate the Receiver OperationCharacteristic (ROC) by gradually changing the threshold of regularscores. Then the Area Under Curve (AUC) is cumulated to a scalarfor performance evaluation. A higher value indicates better anomalydetection performance. In this paper, following the work[13, 34, 35],we leverage frame-level AUC for performance evaluation.

4.2 Implementation DetailsData Augmentation for Contrastive Representation Learn-ing. For data augmentation, we use: (1) random multiscale cropand resize, (2) random horizontal flip, (3) random color distortions,(4) random rotation, and (5) random grayscale for contrastive rep-resentation learning. For each training snippet which stacking 8frames, we first uniformly oversample each video frame with the“8-crop” augment2 and resize the crops to size 256 × 256. Then we

1For detailed explanations about Momentum Contrast, please refer to section 3.2 inMoCo.[14].2"8-crop" refers to cropping the images into the four corners and four additionalpositions near the center.

(a) Raw snippet

(b) Group crop and resize

(c) Group horizontal flip

(d) Group color distortions

(e) Group rotation

(f) Group grayscale

Figure 4: Data augmentation for contrastive representationlearning. Best viewed in color and zoomed in.

randomly perform the data augmentations on these 8-frame-group.The data augmentations used in encoder k and encoder q are sam-pled from the same family of augmentations. Some examples areshown in Figure 4.

Pretrained Feature Extractor. Considering the contrastivelearning paradigm commonly requires a larger mini-batch sizeand more training steps than supervised learning, we chose a lightand fast action classifier, PAN[56], as our feature extractor. PAN[56]designed a concise motion cue called Persistence of Appearance(PA), which focuses on modeling the small displacements of motionboundaries by calculating the pixel-wise differences between twoadjacent frames in the feature space. We choose BN-Inception[20]pretrained on Kinetics-400[26] as the backbone, and extract featuresfrom its global_pool layer. We freeze the weights of PA layer inall training phase. As mentioned above, all training and inferencephases are performed on the snippets temporally stacking 8 frames,which means our snippet consists of two typical video segmentslength in PAN[56]. We strongly recommend performing layer nor-malization on the extracted features to improve regularization.

Project Layers. For each feature space, we employ two linearlayers to project the PAN[56] features to a 128-dimensional rep-resentation. One layer directly projects the PAN[56] features to128-dimensional by a fully-connected layer, while the other onefirstly performs a timescale max pooling, then projects the featureto 128-dimensional through another fully-connected layer. Finally,we weight sum the two 128-dimensional vector through a manuallyset parameter as the projected representation.

Training.We train our models using an SGD[44] optimizer withthe default mini-batch size of 128. The SGD weight decay is 1𝑒 − 4and the SGD momentum is 0.9. We employ Shuffling Batch Normal-ization reported in [14] to avoid the information leakage caused byBatch Normalization[20]. We train models for 100 epochs with theinitial learning rate of 0.015. We freeze the weights of pretrainedfeature extractor in early 10 epochs for warmup. Hyperparameters𝛼1, 𝛼2 were empirically set to 1, 1𝑒 − 3, respectively. The defaultclustering temperature 𝜏𝑐 and cluster attention temperature 𝜏𝑧 areboth empirically set to 0.5, The best empirical setting for m in Eq.10is 0.999. The temperature 𝜏 of InfoNCE losses are set to 0.2 (in

Table 1: AUC(%) of various methods on the CUHK Avenueand ShanghaiTech Campus datasets. All methods are listedby the published year. Missing results due to the lack of re-ports.

Methods CUHK Avenue ShanghaiTech Campus

Lu et al.[34] 80.9 -ConvAE [13] 80.0 60.9s-RNN [35] 81.7 68.0STAE [29] 80.9 -Liu et al.[32] 85.1 72.8Liu et al.[33] 84.4 -

Ionescu et al. [22] 88.9 -Abati et al.[1] - 72.5AnoPCN [55] 86.2 73.6our method 87.0 79.3

Table 2: Ablation Studies on the CUHK Avenue Dataset.

MoCo Training Cluster Attention Feature Queue AUC(%)

68.4✓ 75.5✓ ✓ 86.1✓ ✓ ✓ 87.0

𝐿𝐶 ), 1.0 (in 𝐿𝐷 ), respectively. Our method introduces two types ofqueues to help accomplish the contrastive representation learningtask. The default size of the feature queue is 16384 and those of thekey queue is 8192.

Inference. During inference, for each test samples, the highestsimilarity between its 𝐾 feature space representations and corre-sponding centers will be regarded as its regularity score. To main-tain comparability with training data, we also oversample eachvideo snippet with the “8-crop” augment and resize the crops to256 × 256-pixel. We take the lowest regularity score evaluated onthe cropped snippets as the regularity score of the original snip-pet. The intuitive idea is that once a position in video detects theirregularity, we believe an abnormality has occurred in the currentsnippet. Finally, we employ a 1 − 𝐷 Gaussian filter to temporallysmooth the frame-level regularity scores.

4.3 Comparison with Existing MethodsTable 1 compares our method with a series of state-of-the-art meth-ods [1, 13, 22, 29, 32–35, 55] on the CUHK Avenue and the Shang-haiTech Campus Datasets. Since ShanghaiTech is published in 2017,some early methods have not been evaluated (or reproduced) on it.Besides, some methods, example as Ionescu et al.[22], didnot reportthe other missing results in their paper.

On the ShanghaiTech Campus Dataset, our method establishes anew state-of-the-art performance at 79.3% frame-level AUC. Com-parison with the existing deep reconstruction methods and deepprediction methods, our work provides an absolute gain of +5.7% interms of frame-level AUC, which demonstrating the improvementsin our work is appreciable. Notably, Ionescu et al.[21] applying a

ShanghaiTech Campus 01_0134ShanghaiTech Campus 01_0134

ShanghaiTech Campus 03_0035

CUHK Avenue 05

CUHK Avenue 13

ShanghaiTech Campus 05_0017

CUHK Avenue 03

CUHK Avenue 05

Figure 5: Regularity score curves evolved along time on fourtest samples. Light red shaded regions represent groundtruth temporal segments of the abnormal events.

pretrained SSD detector[31] on each frame, and implement theirmethod on detected objects. For fairness, our method did not com-pare with their approach.

On the CUHK Avenue dataset, our method achieves compara-ble performance with the state-of-the-art methods. Our methodhas a significant improvement over the existing methods basedon establishing normal behavior dictionaries, which indicates theeffectiveness of our introduced contrastive learning pretext task.Our method is slightly inferior to the work of Ionescu et al.[22].They employ a two-stage algorithm based on k-means clusteringand one-class Support Vector Machines (SVM) [45] to eliminateoutliers. In contrast, our method does not have additional SVMclassifiers and achieves comparable performance to their reportedresults.

4.4 DiscussionAblation Study. We conduct ablation study of each component ofour method on the CUHK Avenue Dataset. Table 2 present the ab-lation results. These experimental results demonstrate the methodwe proposed can significantly improve the performance. Firstly,

72

74

76

78

80

82

84

86

88

1 2 5 10 16 20

w/ cluster constraint w/o cluster constraint

Figure 6: Frame-level AUC(%) scores on the CUHK AvenueDataset with different numbers of clusters in {1, 2, 5, 10, 16,20}.

Figure 7: Failed cases. The bounding box in each subfigureindicates the location of anomaly. Best viewed in color andzoomed in.

without any components present in Table 2, the anomaly detectionperformance fails at a frame-level AUC round to 0.68. Figure 6 andthe second column in Table 2 indicate the effect of the number of𝐾 feature spaces on the performance. The number of 𝐾 = 1 (orwithout Cluster Attention) means we do not perform the ClusterAttention process but directly use a single projected representationfor instance discrimination pretext task. These experimental resultssupport our motivation for employing multi-parallel projection lay-ers to capture distinct subcategories of normality. Besides, when thenumber of 𝐾 feature spaces gradually increases with an additionalconstraint on the clusters, the improvement of performance vali-dates the effectiveness of our proposed cluster constraint. Finally, itis obvious that increasing the size of the feature queue can improvethe quality of clustering, since more samples lead to estimating amore reliable centroid. The ablation result involved in the featurequeue concluded consistent with this proposition.

Visualization and Analysis. Figure 5 depicts the frame-levelregularity score curves of our method on four test videos. The or-ange curve represents the regularity score obtained by our model,while the light red shaded regions represent ground truth temporalsegments of the abnormal events. Figure 5 indicates that the reg-ularity scores obtained by our model are strongly correlated withthe ground truth on these samples, which demonstrates the effec-tiveness of our approach. Figure 7 shows some of the failed cases ofour method on the ShanghaiTech Campus Dataset. We noticed thata considerable number of our failed cases contain abnormal objectsaway from the camera, which reveals that our method requires toexplore improving the performance of detecting small abnormalobjects.

5 CONCLUSIONIn this paper, we explore addressing video anomaly detection taskby a contrastive representation learning pretext task. We intro-duce a novel instance discrimination pretext task, Cluster AttentionContrast, to obtain reliable subcategories of normality as clusters.Experiments on benchmark datasets demonstrate our approach iseffective and interpretable. The Cluster Attention Contrast task canhelp to establish reliable and discriminate feature spaces for videoanomaly detection. By utilizing the similarity between subcategory-specific representations and corresponding centers, the proposedmethod achieves state-of-the-art performance on benchmark datasets.In this manner, we established a robust normal concept without anyprior assumptions on reconstruction errors or prediction errors.

In the future, we would like to explore the following directionsto improve this work. Firstly, we will explore to improve the perfor-mance of detecting small abnormal objects. Furthermore, we willdesign a more reliable method for determining the number of 𝐾feature spaces in encoders automatically. Finally, we will exploredifferent distance measures to obtain a more suitable one for ClusterAttention Contrast task.

ACKNOWLEDGMENTSThis paper was partially supported by the IER foundation (No. HT-JD-CXY-201904) and ShenzhenMunicipal Development and ReformCommission (Disciplinary Development Program for Data Scienceand Intelligent Computing). Special acknowledgments are given toAoto-PKUSZ Joint Lab for its support.

REFERENCES[1] Davide Abati, Angelo Porrello, Simone Calderara, and Rita Cucchiara. 2018.

Latent Space Autoregression for Novelty Detection. 2019 IEEE/CVF Conferenceon Computer Vision and Pattern Recognition (CVPR) (2018), 481–490.

[2] Amit Adam, Ehud Rivlin, Ilan Shimshoni, and David Reinitz. 2008. Robust Real-Time Unusual Event Detection using Multiple Fixed-Location Monitors. IEEETransactions on Pattern Analysis and Machine Intelligence 30 (2008), 555–560.

[3] Borislav Antic and Björn Ommer. 2011. Video parsing for abnormality detection.2011 International Conference on Computer Vision (2011), 2415–2422.

[4] Yoshua Bengio, Pascal Lamblin, Dan Popovici, and Hugo Larochelle. 2006. GreedyLayer-Wise Training of Deep Networks. In NIPS.

[5] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey E. Hinton. 2020.A Simple Framework for Contrastive Learning of Visual Representations. ArXivabs/2002.05709 (2020).

[6] Kai-Wen Cheng, Yie-Tarng Chen, and Wen-Hsien Fang. 2015. Video anomalydetection and localization using hierarchical feature representation and Gauss-ian process regression. 2015 IEEE Conference on Computer Vision and PatternRecognition (CVPR) (2015), 2909–2917.

[7] Yang Cong, Junsong Yuan, and Ji Liu. 2011. Sparse reconstruction cost forabnormal event detection. CVPR 2011 (2011), 3449–3456.

[8] A. Dempster, N. Laird, and D. Rubin. 1977. Maximum likelihood estimation fromincomplete data via the em algorithm.

[9] Kamran Ghasedi Dizaji, Amirhossein Herandi, Cheng Deng, Tom Weidong Cai,and HengHuang. 2017. Deep Clustering via Joint Convolutional Autoencoder Em-bedding and Relative Entropy Minimization. 2017 IEEE International Conferenceon Computer Vision (ICCV) (2017), 5747–5756.

[10] Carl Doersch, Abhinav Gupta, and Alexei A. Efros. 2015. Unsupervised VisualRepresentation Learning by Context Prediction. 2015 IEEE International Confer-ence on Computer Vision (ICCV) (2015), 1422–1430.

[11] Jayanta K. Dutta and Bonny Banerjee. 2015. Online Detection of AbnormalEvents Using Incremental Coding Length. In AAAI.

[12] Yachuang Feng, Yuan Yuan, and Xiaoqiang Lu. 2016. Deep Representation forAbnormal Event Detection in Crowded Scenes. Proceedings of the 24th ACMinternational conference on Multimedia (2016).

[13] Mahmudul Hasan, Jonghyun Choi, Jan Neumann, Amit K. Roy-Chowdhury, andLarry S. Davis. 2016. Learning Temporal Regularity in Video Sequences. 2016 IEEEConference on Computer Vision and Pattern Recognition (CVPR) (2016), 733–742.

[14] Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross B. Girshick. 2019.Momentum Contrast for Unsupervised Visual Representation Learning. ArXivabs/1911.05722 (2019).

[15] Geoffrey E. Hinton and Ruslan Salakhutdinov. 2006. Reducing the dimensionalityof data with neural networks. Science 313 5786 (2006), 504–7.

[16] Timothy M. Hospedales, Shaogang Gong, and Tao Xiang. 2009. A Markov Clus-tering Topic Model for mining behaviour in video. 2009 IEEE 12th InternationalConference on Computer Vision (2009), 1165–1172.

[17] Chih-Chung Hsu and Chia-Wen Lin. 2018. CNN-Based Joint Clustering andRepresentation Learning with Feature Drift Compensation for Large-Scale ImageData. IEEE Transactions on Multimedia 20 (2018), 421–429.

[18] Di Hu, Feiping Nie, and Xuelong Li. 2018. Deep Multimodal Clustering forUnsupervised Audiovisual Learning. 2019 IEEE/CVF Conference on ComputerVision and Pattern Recognition (CVPR) (2018), 9240–9249.

[19] Weihua Hu, Takeru Miyato, Seiya Tokui, Eiichi Matsumoto, and MasashiSugiyama. 2017. Learning Discrete Representations via Information MaximizingSelf-Augmented Training. ArXiv abs/1702.08720 (2017).

[20] Sergey Ioffe and Christian Szegedy. 2015. Batch Normalization: Accelerating DeepNetwork Training by Reducing Internal Covariate Shift. ArXiv abs/1502.03167(2015).

[21] Radu Tudor Ionescu, Fahad Shahbaz Khan, Mariana-Iuliana Georgescu, and LingShao. 2019. Object-Centric Auto-Encoders and Dummy Anomalies for AbnormalEvent Detection in Video. 2019 IEEE/CVF Conference on Computer Vision andPattern Recognition (CVPR) (2019), 7834–7843.

[22] Radu Tudor Ionescu, Sorina Smeureanu, Marius Popescu, and Bogdan Alexe.2019. Detecting Abnormal Events in Video Using Narrowed Normality Clusters.2019 IEEE Winter Conference on Applications of Computer Vision (WACV) (2019),1951–1960.

[23] Dinesh Jayaraman and Kristen Grauman. 2017. Learning Image RepresentationsTied to Egomotion from Unlabeled Video. International Journal of ComputerVision 125 (2017), 136–161.

[24] Pan Ji, Tong Zhang, Hongdong Li, Mathieu Salzmann, and Ian D. Reid. 2017.Deep Subspace Clustering Networks. In NIPS.

[25] Xu Ji, Andrea Vedaldi, and João F. Henriques. 2018. Invariant Information Clus-tering for Unsupervised Image Classification and Segmentation. 2019 IEEE/CVFInternational Conference on Computer Vision (ICCV) (2018), 9864–9873.

[26] Will Kay, João Carreira, Karen Simonyan, Brian Zhang, Chloe Hillier, SudheendraVijayanarasimhan, Fabio Viola, Tim Green, Trevor Back, Apostol Natsev, MustafaSuleyman, and Andrew Zisserman. 2017. The Kinetics Human Action VideoDataset. ArXiv abs/1705.06950 (2017).

[27] Jaechul Kim and Kristen Grauman. 2009. Observe locally, infer globally: A space-time MRF for detecting abnormal activities with incremental updates. In CVPR.

[28] Louis Kratz and Ko Nishino. 2009. Anomaly detection in extremely crowdedscenes using spatio-temporal motion pattern models. 2009 IEEE Conference onComputer Vision and Pattern Recognition (2009), 1446–1453.

[29] Nannan Li, Xinyu Wu, Huiwen Guo, Dan Xu, Yongsheng Ou, and Yen-Lun Chen.2015. Anomaly Detection in Video Surveillance via Gaussian Process. Int. J.Pattern Recognit. Artif. Intell. 29 (2015), 1555011:1–1555011:25.

[30] Weixin Li, Vijay Mahadevan, and Nuno Vasconcelos. 2014. Anomaly Detectionand Localization in Crowded Scenes. IEEE Transactions on Pattern Analysis andMachine Intelligence 36 (2014), 18–32.

[31] Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott E. Reed,Cheng-Yang Fu, and Alexander C. Berg. 2016. SSD: Single Shot MultiBox Detector.In ECCV.

[32] Wen Liu, Weixin Luo, Dongze Lian, and Shenghua Gao. 2018. Future FramePrediction for Anomaly Detection - A New Baseline. 2018 IEEE/CVF Conferenceon Computer Vision and Pattern Recognition (2018), 6536–6545.

[33] Yusha Liu, Chun-Liang Li, and Barnabás Póczos. 2018. Classifier Two SampleTest for Video Anomaly Detections. In BMVC.

[34] Cewu Lu, Jianping Shi, and Jiaya Jia. 2013. Abnormal Event Detection at 150FPS in MATLAB. 2013 IEEE International Conference on Computer Vision (2013),2720–2727.

[35] Weixin Luo, Wen Liu, and Shenghua Gao. 2017. A Revisit of Sparse CodingBased Anomaly Detection in Stacked RNN Framework. 2017 IEEE InternationalConference on Computer Vision (ICCV) (2017), 341–349.

[36] Jonathan Masci, Ueli Meier, Dan C. Ciresan, and Jürgen Schmidhuber. 2011.Stacked Convolutional Auto-Encoders for Hierarchical Feature Extraction. InICANN.

[37] Jefferson Ryan Medel and Andreas E. Savakis. 2016. Anomaly Detection in VideoUsing Predictive Convolutional Long Short-Term Memory Networks. ArXivabs/1612.00390 (2016).

[38] Ramin Mehran, Alexis Oyama, and Mubarak Shah. 2009. Abnormal crowdbehavior detection using social force model. 2009 IEEE Conference on ComputerVision and Pattern Recognition (2009), 935–942.

[39] Mehdi Noroozi and Paolo Favaro. 2016. Unsupervised Learning of Visual Repre-sentations by Solving Jigsaw Puzzles. In ECCV.

[40] Mehdi Noroozi, Hamed Pirsiavash, and Paolo Favaro. 2017. RepresentationLearning by Learning to Count. 2017 IEEE International Conference on ComputerVision (ICCV) (2017), 5899–5907.

[41] Deepak Pathak, Ross B. Girshick, Piotr Dollár, Trevor Darrell, and Bharath Hari-haran. 2017. Learning Features by Watching Objects Move. 2017 IEEE Conferenceon Computer Vision and Pattern Recognition (CVPR) (2017), 6024–6033.

[42] Deepak Pathak, Philipp Krähenbühl, Jeff Donahue, Trevor Darrell, and Alexei A.Efros. 2016. Context Encoders: Feature Learning by Inpainting. 2016 IEEEConference on Computer Vision and Pattern Recognition (CVPR) (2016), 2536–2544.

[43] Huamin Ren, Weifeng Liu, Søren I. Olsen, Sergio Escalera, and Thomas B. Moes-lund. 2015. Unsupervised Behavior-Specific Dictionary Learning for AbnormalEvent Detection. In BMVC.

[44] Herbert E. Robbins. 2007. A Stochastic Approximation Method. Annals ofMathematical Statistics 22 (2007), 400–407.

[45] Bernhard Schölkopf, John C. Platt, John Shawe-Taylor, Alexander J. Smola, andRobert C. Williamson. 2001. Estimating the Support of a High-DimensionalDistribution. Neural Computation 13 (2001), 1443–1471.

[46] Yonglong Tian, Dilip Krishnan, and Phillip Isola. 2019. Contrastive MultiviewCoding. ArXiv abs/1906.05849 (2019).

[47] Hanh Tran and David C. Hogg. 2017. Anomaly Detection using a ConvolutionalWinner-Take-All Autoencoder. In BMVC.

[48] Aäron van den Oord, Yazhe Li, and Oriol Vinyals. 2018. Representation Learningwith Contrastive Predictive Coding. ArXiv abs/1807.03748 (2018).

[49] Pascal Vincent, Hugo Larochelle, Isabelle Lajoie, Yoshua Bengio, and Pierre-Antoine Manzagol. 2010. Stacked Denoising Autoencoders: Learning UsefulRepresentations in a Deep Network with a Local Denoising Criterion. J. Mach.Learn. Res. 11 (2010), 3371–3408.

[50] Xiaolong Wang and Abhinav Gupta. 2015. Unsupervised Learning of VisualRepresentations Using Videos. 2015 IEEE International Conference on ComputerVision (ICCV) (2015), 2794–2802.

[51] Xiaolong Wang, Kaiming He, and Abhinav Gupta. 2017. Transitive Invariancefor Self-Supervised Visual Representation Learning. 2017 IEEE InternationalConference on Computer Vision (ICCV) (2017), 1338–1347.

[52] Junyuan Xie, Ross B. Girshick, and Ali Farhadi. 2016. Unsupervised Deep Em-bedding for Clustering Analysis. In ICML.

[53] Dan Xu, Yan Yan, Elisa Ricci, and Nicu Sebe. 2017. Detecting anomalous eventsin videos by learning deep representations of appearance and motion. Comput.Vis. Image Underst. 156 (2017), 117–127.

[54] Jianwei Yang, Devi Parikh, and Dhruv Batra. 2016. Joint Unsupervised Learningof Deep Representations and Image Clusters. 2016 IEEE Conference on ComputerVision and Pattern Recognition (CVPR) (2016), 5147–5156.

[55] Muchao Ye, Xiaojiang Peng,Weihao Gan,WeixinWu, and YuQiao. 2019. AnoPCN:Video Anomaly Detection via Deep Predictive Coding Network. Proceedings ofthe 27th ACM International Conference on Multimedia (2019).

[56] Can Zhang, Yue-Xian Zou, Guang Chen, and Lei Gan. 2019. PAN: PersistentAppearance Network with an Efficient Motion Cue for Fast Action Recognition.Proceedings of the 27th ACM International Conference on Multimedia (2019).

[57] Junjian Zhang, Chun-Guang Li, Chong You, Xianbiao Qi, Honggang Zhang, JunGuo, and Zhouchen Lin. 2019. Self-Supervised Convolutional Subspace ClusteringNetwork. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition(CVPR) (2019), 5468–5477.

[58] Richard Zhang, Phillip Isola, and Alexei A. Efros. 2016. Colorful Image Coloriza-tion. ArXiv abs/1603.08511 (2016).

[59] Tong Zhang, Pan Ji, Mehrtash Harandi, Richard I. Hartley, and Ian D. Reid. 2018.Scalable Deep k-Subspace Clustering. ArXiv abs/1811.01045 (2018).

[60] Bin Zhao, Fei-Fei Li, and Eric P. Xing. 2011. Online detection of unusual eventsin videos via dynamic sparse coding. CVPR 2011 (2011), 3313–3320.

[61] Yiru Zhao, Bing Deng, Chen Shen, Yau-Wen Liu, Hongtao Lu, and Xian-ShengHua. 2017. Spatio-Temporal AutoEncoder for Video Anomaly Detection. Pro-ceedings of the 25th ACM international conference on Multimedia (2017).

[62] Pan Zhou, Yunqing Hou, and Jiashi Feng. 2018. Deep Adversarial SubspaceClustering. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition(2018), 1596–1604.

[63] Tinghui Zhou, Matthew Brown, Noah Snavely, and David G. Lowe. 2017. Unsu-pervised Learning of Depth and Ego-Motion from Video. 2017 IEEE Conferenceon Computer Vision and Pattern Recognition (CVPR) (2017), 6612–6619.