Chapter 2: Inferences in Regression and Correlation Analysis

Lecture 14, October 26, 2006, Psychology 790

-

Overview: Today's Lecture, Schedule

Introductory Example

Building on Our Model

Parameter Testing

Interval Estimation of Predictions

ANOVA Table

Descriptive Measures

Wrapping Up

Today's Lecture

Inferences (aka hypothesis tests and confidence intervals) for:

$\beta_1$

$\beta_0$

$\hat{Y}$

ANOVA tables for inferences.

-

Our New Schedule

| Date | Topic | Chapter |
|---|---|---|
| 10/26 | Inferences in regression and correlation analysis | K 2 |
| 10/31 | Diagnostics and remedial measures | K 3 |
| 11/2 | No Class - No Lab | |
| 11/4 | Simultaneous inferences and other topics | K 4 |
| 11/7 | Matrix algebra | K 5 |
| 11/9 | Multiple regression I | K 6 |
| 11/14 | Multiple regression II | K 7 |
| 11/16 | Regression models for quantitative and qualitative predictors | K 8 |
| 11/21 | No Class | |
| 11/23 | No Class - No Lab | |
| 11/28 | Building the regression model I | K 9 |
| 11/30 | Building the regression model II | K 10 |
| 12/5 | Building the regression model III (Final Handed Out) | K 11 |
| 12/7 | Open | |

-

Introductory Example

-

Snow Geese

From Weisberg (1985, p. 102):

Aerial survey methods are regularly used to estimate the number of snow geese in their summer range areas west of Hudson Bay in Canada. To obtain estimates, small aircraft fly over the range and, when a flock of geese is spotted, an experienced person estimates the number of geese in the flock. To investigate the reliability of this method of counting, an experiment was conducted in which an airplane carrying two observers flew over 45 flocks, and each observer made an independent estimate of the number of birds in each flock. Also, a photograph of the flock was taken so that an exact count of the number of birds in the flock could be made (data by Cook and Jacobsen, 1978).

-

Snow Geese

-

Hudson Bay

-

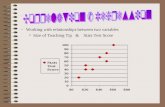

Regression Analysis

Using the first observer in the plane, we consider the relationship between this person's count and that from the photograph:

[Scatter plot of photo count (vertical axis, 0 to 400) against observer 1 count (horizontal axis, 0 to 500).]

-

Building on the Simple Linear Regression Model

-

The Model

As seen previously...

The linear regression model (for observation $i = 1, \ldots, N$):

$$Y_i = \beta_0 + \beta_1 X_i + \epsilon_i$$

$\beta_0$ is the mean of the population when $X$ is zero: the $Y$ intercept.

$\beta_1$ is the slope of the line, the amount of increase in $Y$ brought about by a unit increase in $X$ (from $X$ to $X + 1$).

$\epsilon_i$ is the random error, specific to each observation.

-

Let's talk about $\epsilon$

Before we go any further, we need to talk about this crazy $\epsilon$ thing.

What do we know?

We know it is a random variable.

We know it is random error.

We know it has a mean of 0.

We know it has a variance of $\sigma^2$.

We can compute the regression line no matter the distribution of the error term ($\epsilon$).

However, we must have a distribution in order to do hypothesis testing.

-

Our First Assumption

With any statistical test, you must have a distribution.

Recall how we find critical values, p-values, etc...

Because we only know the mean and the variance of our $\epsilon$, we must make an assumption about the distribution of $\epsilon$.

For most of this class, we will assume the following about the error terms:

$$\epsilon_i \sim N(0, \sigma^2)$$

-

Our First Assumption

We are going to use this assumption for the rest of the year.

It is a common theme to any regression model or general linear model.

So, why assume that the error terms are normally distributed?

With our new assumption of normal error terms, we can now do the following:

We now know the conditional distribution of $Y_i$, namely $Y_i \sim N(\beta_0 + \beta_1 X_i, \sigma^2)$, which allows us to compute CIs.

We can now do great things with our $\beta$s, namely compute confidence intervals and perform hypothesis tests.
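To make the conditional distribution concrete, here is a small simulation sketch in Python. The intercept and slope are the rounded estimates from the geese example later in this lecture, treated here as if they were the true $\beta_0$ and $\beta_1$; the error standard deviation of 44.4 is a stand-in chosen for illustration. All three values are assumptions, not part of the model slide.

```python
import random
import statistics

# Illustrative values only (assumptions made for this sketch).
BETA0 = 26.65   # intercept
BETA1 = 0.88    # slope
SIGMA = 44.4    # error standard deviation

def simulate_y(x, n_draws, seed=0):
    """Draw Y = beta0 + beta1 * x + eps, with eps ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    return [BETA0 + BETA1 * x + rng.gauss(0.0, SIGMA) for _ in range(n_draws)]

draws = simulate_y(x=30, n_draws=20000)
sample_mean = statistics.mean(draws)
sample_sd = statistics.stdev(draws)

# The sample mean should sit near beta0 + beta1 * 30 and the sample SD near sigma.
print(round(sample_mean, 1), round(sample_sd, 1))
```

At a fixed $X$, the simulated $Y$ values scatter around the line with the same spread $\sigma$ everywhere, which is exactly what the normal-error assumption says.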

-

Let's Put Our Assumption to Good Use

So what's next?

Tests for the parameters, both $\beta_0$ and $\beta_1$.

Confidence intervals for the mean response $E(Y_i)$.

Prediction intervals for a single new observation.

Confidence bands for our regression line.

We are going to take a second approach to testing the parameters by using an ANOVA approach.

Talk about descriptive measures of the linear model, namely $r$ and $r^2$.

-

Parameter Testing

-

Parameter Testing

So let us begin with the parameter of most interest to us in our regression equation, the slope, $\beta_1$.

We are going to use our regular old hypothesis test that we learned, but we are going to change everything to relate to our $\beta_1$.

Ok, last class we found out how to obtain a parameter estimate, $\hat{\beta}_1$ (now called $b_1$: drop the hat, the book only uses it for $\hat{Y}$).

We need to know the distribution of that estimate in order to perform a test.

This is where our assumption comes into play.

-

Parameter Testing - Test Statistic

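Given our assumption of normal error terms, it follows that:

$$b_1 \sim N(\beta_1, \sigma^2(b_1)) \quad \text{where} \quad \sigma^2(b_1) = \frac{\sigma^2}{\sum (x_i - \bar{x})^2}$$

So we have the distribution of $b_1$; now we need a test statistic for our hypothesis test.

$$T = \frac{b_1 - \beta_1}{s(b_1)} \sim t(n-2) \quad \text{where} \quad s(b_1) = \sqrt{\frac{MSE}{\sum (x_i - \bar{x})^2}}$$

Where do the degrees of freedom come from?

In regression, we lose one degree of freedom for each parameter we estimate.

In this case we have two, $\beta_0$ and $\beta_1$, hence $n - 2$.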
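The formulas above can be traced step by step in a short Python sketch. The function name `slope_test` and the four-point data set are made up for illustration (they are not the geese data), and the least-squares formulas for $b_0$ and $b_1$ are the usual ones from the previous lecture.

```python
import math

def slope_test(x, y):
    """Return b0, b1, MSE, s(b1), and T for H0: beta1 = 0 in simple linear regression."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx                      # least-squares slope
    b0 = y_bar - b1 * x_bar             # least-squares intercept
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    mse = sse / (n - 2)                 # two estimated parameters, so n - 2 df
    s_b1 = math.sqrt(mse / sxx)         # estimated standard error of b1
    t_stat = (b1 - 0.0) / s_b1          # T under H0: beta1 = 0
    return b0, b1, mse, s_b1, t_stat

# Hypothetical toy data, chosen so the arithmetic is easy to check by hand.
x = [0, 1, 2, 3]
y = [1, 3, 4, 7]
b0, b1, mse, s_b1, t_stat = slope_test(x, y)
print(b0, b1, mse, s_b1, t_stat)
```

With only four points the statistic is compared against $t(2)$; the point of the sketch is the order of the computations, not the toy result.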

-

Parameter Testing - 5 Step Process

1. First we need a null and an alternative hypothesis. We usually just want to test if there is any slope at all to the line:

$H_0: \beta_1 = 0$

$H_A: \beta_1 \neq 0$

2. Type I error rate ($\alpha$): set prior to the experiment.

3. Test statistic: computed using SAS; find it on the output.

4. Then decide to reject or fail to reject.

5. Interpret your results.

A significant $b_1$ means there is a significant linear relationship between variables $X$ and $Y$.

-

CODE:

/* Point the libref geese at the folder holding the data set. */
libname geese 'C:\data files\';

/* Regress the photo count on the first observer's count. */
proc glm data=geese.geese;
  model photo=observer1;
run;

OUTPUT:

Geese Example

The GLM Procedure

Dependent Variable: photo   photo count

Source        DF    Sum of Squares    Mean Square    F Value    Pr > F
Model          1       254769.4600    254769.4600     129.20    <.0001
...
observer1      1       254769.4600    254769.4600     129.20    <.0001

Parameter     Estimate       Standard Error    t Value    Pr > |t|
Intercept     26.64957256    8.61448190           3.09    0.0035
observer1      0.88255688    0.07764394          11.37    <.0001

-

Hypothesis Test

Estimated model: $\hat{Y} = 26.65 + 0.88X$

$H_0: \beta_1 = 0$

$H_A: \beta_1 \neq 0$

$\alpha = .05$

Test statistic: $T = 11.37$ (43 df), $p < 0.0001$

Reject the null.

We can conclude that there is a significant linear association between the observer count and the true number of geese.
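As a quick check on where the 11.37 comes from, the sketch below recomputes the test statistic from the slope estimate and standard error printed in the SAS parameter table. The variable names are illustrative; $n = 45$ is the number of flocks in the study.

```python
# Values copied from the SAS parameter table for the geese example.
b1 = 0.88255688      # slope estimate for observer1
s_b1 = 0.07764394    # standard error of the slope
n = 45               # flocks in the study
df = n - 2           # two estimated parameters

t_stat = (b1 - 0.0) / s_b1   # test of H0: beta1 = 0
print(f"T = {t_stat:.2f} on {df} df")
```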

-

Confidence Interval

Again, the concept of a confidence interval for $\beta_1$ is the same as for the other parameters we learned about: it is a band within which we are confident our true parameter falls.

Our formula in this case is:

$$b_1 \pm t(n-2, \alpha/2)\, s(b_1)$$

Of course...why compute it yourself when you can use SAS?

-

CI in SAS

SAS will do the CI for you!

Code:

proc glm data=geese.geese;

model photo=observer1 / clparm;

run;

Parameter Output Portion:

Parameter     Estimate       Standard Error    t Value    Pr > |t|    95% Confidence Limits
Intercept     26.64957256    8.61448190           3.09    0.0035      9.27681411    44.02233100
observer1      0.88255688    0.07764394          11.37    <.0001      ...
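The slope's confidence limits are not shown in the output above, but they can be worked out by hand. A minimal Python sketch, assuming only the numbers printed in the table: the intercept's limits are $b_0 \pm t \cdot s(b_0)$, so their half-width recovers the critical value $t(43, .025)$, which is then applied to the slope. The resulting slope interval is computed here, not copied from SAS.

```python
# Intercept row of the SAS output: estimate, standard error, 95% limits.
b0, s_b0 = 26.64957256, 8.61448190
b0_lower, b0_upper = 9.27681411, 44.02233100

# The limits are b0 -/+ t_crit * s(b0), so the half-width recovers t_crit.
t_crit = (b0_upper - b0_lower) / 2 / s_b0

# Apply the same critical value to the slope row.
b1, s_b1 = 0.88255688, 0.07764394
b1_lower = b1 - t_crit * s_b1
b1_upper = b1 + t_crit * s_b1

print(f"t_crit = {t_crit:.4f}")
print(f"95% CI for beta1: ({b1_lower:.3f}, {b1_upper:.3f})")
```

The interval excludes zero, which agrees with rejecting $H_0: \beta_1 = 0$ at $\alpha = .05$.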

-

What about poor old $\beta_0$?

Unfortunately for $\beta_0$, it really isn't that useful for us in terms of hypothesis testing.

Why? Well, what would we be testing?

We would be testing if the mean of $Y$ at $X = 0$ is equal to some value.

First of all, this doesn't usually make sense to do.

Second, this test is only valid if the range of $X$ includes 0.

Subnote: the regression equation is only valid over the range of $X$ values contained in your sample, not outside of those values.

-

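Let's Test $\beta_0$ Anyway

Let's take pity on $\beta_0$ and show how it can be done.

$$b_0 \sim N(\beta_0, \sigma^2(b_0)) \quad \text{where} \quad \sigma^2(b_0) = \sigma^2 \left( \frac{1}{n} + \frac{\bar{x}^2}{\sum (x_i - \bar{x})^2} \right)$$

$$T = \frac{b_0 - \beta_0}{s(b_0)} \sim t(n-2) \quad \text{where} \quad s(b_0) = \sqrt{MSE \left( \frac{1}{n} + \frac{\bar{x}^2}{\sum (x_i - \bar{x})^2} \right)}$$

The CI follows suit:

$$b_0 \pm t(n-2, \alpha/2)\, s(b_0)$$

Or use SAS!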

-

Hypothesis Test - From Example Again

$H_0: \beta_0 = 0$

$H_A: \beta_0 \neq 0$

$\alpha = .05$

Test statistic: $T = 3.09$ (43 df), $p = 0.0035$

Reject the null.

We can conclude that the mean count of geese actually present when no geese were observed is not zero.

Confidence interval: (9.277, 44.022).

We are 95% confident that the true mean number of geese present when none were observed is between 9.277 and 44.022.
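The test and the interval tell the same story: a two-sided test at $\alpha = .05$ rejects $\beta_0 = 0$ exactly when 0 lies outside the 95% confidence interval. A small Python sketch using the intercept estimate and standard error from the SAS output (variable names are illustrative):

```python
# Intercept estimate and standard error from the SAS parameter table.
b0, s_b0 = 26.64957256, 8.61448190
# 95% confidence limits reported for the intercept.
ci_lower, ci_upper = 9.277, 44.022

t_stat = (b0 - 0.0) / s_b0                   # test of H0: beta0 = 0
zero_in_ci = ci_lower <= 0.0 <= ci_upper     # duality check against the CI

print(f"T = {t_stat:.2f}; 0 inside the 95% CI: {zero_in_ci}")
```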

-

A Note When Making Inferences on $\beta$s

What happens when you have deviations from normality?

The hypothesis tests are fairly robust to minor deviations from normality.

We will talk later about how to test the normality assumption of $\epsilon$ (like, next class)...

Power: the formula for power is in the book and it is very messy. Anyone interested can ask me about it later.

-

Interval Estimation of Predictions

-

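The distribution of $E(Y)$

Before we begin any talk about a CI, we always need to start with the distribution of the estimator; that is what determines our CI.

So our new parameter is $E(Y_h)$, the mean of $Y$ at $X = X_h$. Its estimator $\hat{Y}_h = b_0 + b_1 X_h$ satisfies:

$$\hat{Y}_h \sim N\left(\beta_0 + \beta_1 X_h,\ \sigma^2 \left( \frac{1}{n} + \frac{(X_h - \bar{x})^2}{\sum (x_i - \bar{x})^2} \right)\right)$$

Replacing $\sigma^2$ with MSE gives the estimated standard error $s(\hat{Y}_h)$, and $(\hat{Y}_h - E(Y_h)) / s(\hat{Y}_h)$ is distributed $t(n-2)$, so the CI is:

$$\hat{Y}_h \pm t(n-2, \alpha/2)\, s(\hat{Y}_h)$$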

-

From our example...

We want to build a 95% CI for the predicted value at an observed count of 30 geese.

First, we need to find $\hat{Y}$ for $X = 30$.

$\hat{Y}_{30} = 26.65 + 0.8826(30) = 53.13$

Next, we find our standard error:

$s(\hat{Y}_h) = \ldots$

Then we get tired and use:

proc glm data=geese.geese;

model photo=observer1 / clm;

run;

-

From our example...

The SAS System 00:05 Wednesday, October 25, 2006

The GLM Procedure

Observation   Observed   Predicted   Residual   95% Confidence Limits for Mean Predicted Value

1 56.0000000 70.7774166 -14.7774166 57.0286892 84.5261440

2 38.0000000 48.7134946 -10.7134946 33.5445777 63.8824115

3 25.0000000 53.1262790 -28.1262790 38.3130930 67.9394650

4 48.0000000 57.5390634 -9.5390634 43.0480025 72.0301243

5 38.0000000 48.7134946 -10.7134946 33.5445777 63.8824115

6 22.0000000 44.3007102 -22.3007102 28.7447619 59.8566584

7 22.0000000 37.2402551 -15.2402551 21.0056060 53.4749042

8 42.0000000 56.6565065 -14.6565065 42.1038195 71.2091935

9 34.0000000 44.3007102 -10.3007102 28.7447619 59.8566584

10 14.0000000 35.4751414 -21.4751414 19.0602616 51.8900211

11 30.0000000 48.7134946 -18.7134946 33.5445777 63.8824115

12 9.0000000 35.4751414 -26.4751414 19.0602616 51.8900211

13 18.0000000 39.8879258 -21.8879258 23.9159209 55.8599306

14 25.0000000 44.3007102 -19.3007102 28.7447619 59.8566584

15 62.0000000 61.9518478 0.0481522 47.7470198 76.1566758

16 26.0000000 53.1262790 -27.1262790 38.3130930 67.9394650

17 88.0000000 92.8413386 -4.8413386 79.4769408 106.2057365

18 56.0000000 57.5390634 -1.5390634 43.0480025 72.0301243

19 11.0000000 34.5925845 -23.5925845 18.0860992 51.0990698

20 66.0000000 75.1902010 -9.1902010 61.6074334 88.7729686

.

.

.
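To connect the listing back to the formula $\hat{Y}_h \pm t(n-2, \alpha/2)\, s(\hat{Y}_h)$, the Python sketch below computes the fitted value at $X = 30$ from the full-precision estimates and compares it with the rows of the listing that show the same predicted value (observations 3 and 16). The critical value 2.0167 is an assumption here: it is the $t(43, .025)$ value implied by the intercept's confidence limits in the earlier output.

```python
# Full-precision estimates from the SAS parameter table.
b0, b1 = 26.64957256, 0.88255688
y_hat_30 = b0 + b1 * 30

# Limits listed for the rows whose predicted value equals y_hat_30.
lower, upper = 38.3130930, 67.9394650
center = (lower + upper) / 2          # should reproduce the fitted value
half_width = (upper - lower) / 2      # equals t_crit * s(Y-hat_h)

t_crit = 2.0167                       # assumed t(43, .025), see lead-in
s_yhat = half_width / t_crit          # implied standard error of the mean response

print(f"fitted value at X = 30: {y_hat_30:.4f}")
print(f"interval center: {center:.4f}, implied s(Y-hat): {s_yhat:.3f}")
```

The interval is centered on the fitted value, and the standard error backed out this way is the quantity left as "..." on the previous slide.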

-

Intervals for Y

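The past interval was for the mean of $Y$ given $X$.

What about an interval for a single observation?

So our new target is $Y_h$, a single new observation at $X = X_h$. The point prediction is still $\hat{Y}_h$, but the variance of the prediction error now includes an extra $\sigma^2$ for the new observation's own error term:

$$Y_h - \hat{Y}_h \sim N\left(0,\ \sigma^2 \left( 1 + \frac{1}{n} + \frac{(X_h - \bar{x})^2}{\sum (x_i - \bar{x})^2} \right)\right)$$

With $\sigma^2$ replaced by MSE to give $s(\text{pred})$, the ratio $(Y_h - \hat{Y}_h) / s(\text{pred})$ is distributed $t(n-2)$, so the prediction interval is:

$$\hat{Y}_h \pm t(n-2, \alpha/2)\, s(\text{pred})$$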

-

From our example...

We want to build a 95% prediction interval for a single new flock with an observed count of 30 geese.

First, we need to find $\hat{Y}$ for $X = 30$.

$\hat{Y}_{30} = 26.65 + 0.8826(30) = 53.13$

Next, we find our standard error:

$s(\text{pred}) = \ldots$

Then we get tired and use:

proc glm data=geese.geese;

model photo=observer1 / cli;

run;

-

From our example...

The SAS System 00:05 Wednesday, October 25, 2006

The GLM Procedure

Observation   Observed   Predicted   Residual   95% Confidence Limits for Individual Predicted Value

1 56.0000000 70.7774166 -14.7774166 -19.8244321 161.3792653

2 38.0000000 48.7134946 -10.7134946 -42.1147142 139.5417034

3 25.0000000 53.1262790 -28.1262790 -37.6431980 143.8957560

4 48.0000000 57.5390634 -9.5390634 -33.1784009 148.2565277

5 38.0000000 48.7134946 -10.7134946 -42.1147142 139.5417034

6 22.0000000 44.3007102 -22.3007102 -46.5929365 135.1943568

7 22.0000000 37.2402551 -15.2402551 -53.7720040 128.2525142

8 42.0000000 56.6565065 -14.6565065 -34.0708222 147.3838352

9 34.0000000 44.3007102 -10.3007102 -46.5929365 135.1943568

10 14.0000000 35.4751414 -21.4751414 -55.5694398 126.5197225

11 30.0000000 48.7134946 -18.7134946 -42.1147142 139.5417034

12 9.0000000 35.4751414 -26.4751414 -55.5694398 126.5197225

13 18.0000000 39.8879258 -21.8879258 -51.0778503 130.8537018

14 25.0000000 44.3007102 -19.3007102 -46.5929365 135.1943568

15 62.0000000 61.9518478 0.0481522 -28.7203344 152.6240300

16 26.0000000 53.1262790 -27.1262790 -37.6431980 143.8957560

17 88.0000000 92.8413386 -4.8413386 2.2970146 183.3856626

18 56.0000000 57.5390634 -1.5390634 -33.1784009 148.2565277

19 11.0000000 34.5925845 -23.5925845 -56.4685573 125.6537262

20 66.0000000 75.1902010 -9.1902010 -15.3866120 165.7670140

.

.

.
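The individual limits are far wider than the mean limits because $s^2(\text{pred}) = MSE + s^2(\hat{Y}_h)$. The Python sketch below checks this with the rows whose predicted value is 53.1262790, using the mean limits from the earlier listing and the individual limits from this one. The critical value 2.0167 is an assumption (the $t(43, .025)$ value implied by the intercept's confidence limits), and MSE is approximated from the rounded F value in the ANOVA table.

```python
t_crit = 2.0167   # assumed t(43, .025), see lead-in

# Limits for the rows with predicted value 53.1262790.
mean_lower, mean_upper = 38.3130930, 67.9394650      # mean predicted value
ind_lower, ind_upper = -37.6431980, 143.8957560      # individual predicted value

s_mean = (mean_upper - mean_lower) / 2 / t_crit      # implied s(Y-hat_h)
s_pred = (ind_upper - ind_lower) / 2 / t_crit        # implied s(pred)

# s^2(pred) = MSE + s^2(Y-hat_h), so the difference should be close to MSE.
mse_implied = s_pred ** 2 - s_mean ** 2
mse_from_anova = 254769.4600 / 129.20                # MSR / F, with F rounded

print(f"s(Y-hat) = {s_mean:.2f}, s(pred) = {s_pred:.2f}")
print(f"implied MSE = {mse_implied:.1f}, MSE from ANOVA = {mse_from_anova:.1f}")
```

Almost all of the prediction interval's width comes from the error variance itself, which is why collecting more flocks would narrow the mean interval but barely change the individual one.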

-

Plots of Intervals

-

ANOVA table

-

What's up with that ANOVA table?

As you notice on all your regression output, you get these ANOVA tables.

The ANOVA table just refers to a partitioning of the variance.

ANOVA = Analysis of Variance

We have a total amount of variability present in our data.

What the ANOVA table does is tell us which portion of the variance can be accounted for by our model and which portion is just random error.

Just as a theoretical note, we want our model to capture as much of the variance as possible. This means that we are accounting for changes in our data with our model.

-

ANOVA table

The table shows the partitioning of the total variance, SSTO, into its two parts: sum of squares regression, SSR, and sum of squares error, SSE.

Formally, each deviation from the mean splits into a regression part (which builds SSR) and an error part (which builds SSE):

$$Y_i - \bar{Y} = (\hat{Y}_i - \bar{Y}) + (Y_i - \hat{Y}_i)$$

We then also partition the degrees of freedom. We have a total df of $n - 1$, an SSR df of 1, and an SSE df of $n - 2$ (just like in our test statistic for $\beta_1$).
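Squaring both sides of the decomposition and summing over observations gives the sums of squares themselves; for a least-squares fit the cross-product term sums to zero, so the pieces add exactly. A short worked version, with the degrees of freedom filled in for the geese data ($n = 45$ flocks):

```latex
\begin{align*}
\sum_{i=1}^{n} (Y_i - \bar{Y})^2
  &= \sum_{i=1}^{n} (\hat{Y}_i - \bar{Y})^2 + \sum_{i=1}^{n} (Y_i - \hat{Y}_i)^2 \\
\text{SSTO} &= \text{SSR} + \text{SSE} \\
n - 1 &= 1 + (n - 2) \\
44 &= 1 + 43 \qquad (n = 45)
\end{align*}
```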

-

Let's go over the ANOVA table

The GLM Procedure

Dependent Variable: photo   photo count

Source        DF    Sum of Squares    Mean Square    F Value    Pr > F
Model          1       254769.4600    254769.4600     129.20    <.0001
...

-

Our new test for $\beta_1$

We start in the same way as before:

$H_0: \beta_1 = 0$

$H_A: \beta_1 \neq 0$

$\alpha = 0.05$

Test statistic: this is where things change. Instead of our t-test, we can compute an F test using our ANOVA table.

$F(1, 43) = 129.20$, $p < 0.0001$

Then the rest is the same:

Reject the null.

We can conclude that there is a significant linear association between the observer count and the true number of geese.
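The two tests agree because, in simple linear regression, the F statistic for the model is the square of the t statistic for the slope. A quick Python check using the numbers from the SAS output (variable names are illustrative):

```python
# Slope estimate and standard error from the SAS parameter table.
t_stat = 0.88255688 / 0.07764394

f_from_t = t_stat ** 2     # F(1, n - 2) equals T squared
f_reported = 129.20        # F value printed in the ANOVA table

print(f"T^2 = {f_from_t:.2f}, reported F = {f_reported:.2f}")
```

So with a single predictor the ANOVA approach gives the same p-value as the t-test; the F test earns its keep once there are several predictors.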

-

Descriptive Measures in Regression

-

Correlation - r

The correlation is the measure of linear association between the two variables.

It is very much related to the slope in a regression model.

If you recall, the equation for $b_1$ can be expressed in terms of $r$: $b_1 = r \, (s_Y / s_X)$.

It ranges from -1 to +1, with the extremes indicating perfect linear association.

If $r = 0$ there is no linear relationship between $X$ and $Y$.

-

Squared Correlation

As you can guess, $r^2$ is not only computed for you, but it is also the square of the correlation coefficient, $r$.

It is computed in terms of our ANOVA table as follows:

$$r^2 = \frac{SSR}{SSTO}$$

Ok, great, now what does it mean conceptually?

It is a measure of the proportion of variance accounted for by our model.

So, I mentioned earlier that we want to account for as much total variance as possible. This lets us know how much.

In our example, we had an $r^2 = 0.750$. This means that 75% of the total variance in the true number of geese is accounted for by the observed number of geese.
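The 0.750 can be rebuilt from the ANOVA quantities shown earlier. The Python sketch below assumes only the model sum of squares, the F value, and the 43 error degrees of freedom; because F is rounded to two decimals in the output, MSE, SSE, and SSTO here are approximations rather than the values SAS printed.

```python
import math

ssr = 254769.4600     # model sum of squares from the ANOVA table
f_value = 129.20      # F value from the ANOVA table (rounded)
df_error = 43         # n - 2 with n = 45 flocks

mse = ssr / f_value           # F = MSR / MSE and MSR = SSR / 1
sse = mse * df_error          # approximate error sum of squares
ssto = ssr + sse              # SSTO = SSR + SSE
r_squared = ssr / ssto
r = math.sqrt(r_squared)      # positive root, since the slope is positive

print(f"r^2 = {r_squared:.3f}, r = {r:.3f}")
```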

-

Coefficient of Determination

Our $r^2$ measure is not an all-powerful, all-knowing thing. It does have its limitations:

A high $r^2$ does not mean that useful predictions can be made by the model: we may still have a high error variance, and our prediction bands may be wide.

A high $r^2$ does not indicate that the straight line is a "good fit": you may get a high $r^2$ even though the data are curvilinear in nature, so your linear model is not appropriate.

A low $r^2$ does not mean $X$ and $Y$ are unrelated: their relationship just might not be linear.
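The last limitation is easy to demonstrate. In the Python sketch below, $Y$ is an exact function of $X$ (a parabola), yet $r^2$ is zero because the relationship has no linear component. The data and the helper `r_squared` are made up for illustration.

```python
def r_squared(x, y):
    """Squared Pearson correlation between two equal-length lists."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    syy = sum((yi - y_bar) ** 2 for yi in y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return sxy ** 2 / (sxx * syy)

# Hypothetical data: Y is completely determined by X, but not linearly.
x = [-2, -1, 0, 1, 2]
y = [xi ** 2 for xi in x]

print(r_squared(x, y))
```

A scatter plot would reveal the curve immediately, which is one reason to look at the data before trusting any single summary number.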

-

Final Thought

Hypothesis testing in regression is something that is often used, and becomes fairly routine.

The use of confidence intervals for parameters is also fairly straightforward.

Perhaps the most informative portion of today's lecture was the idea of mean and individual prediction intervals.

These intervals are important in considering where new observations will fall: prediction.

-

Next Time

Kutner Chapter 3.

Regression diagnostics.

Checking assumptions.

Worrying about data.

Practical statistics.
