C-CBPM: Collective Context Based Privacy Model

1

M VENKATA SWAMY,

University of Arkansas at Little Rock, AR, USA

NITIN AGARWAL,

University of Arkansas at Little Rock, AR, USA

SRINI RAMASWAMY,

Industrial Software Systems, ABB Corporate Research, Bangalore, India.

Existing privacy models, although persuasive, are essentially based on user, role, or service identification. Such models cannot automatically adjust the privacy needs of consumers or organizations to the context in which the data is accessed. In this work, we present a Context Based Privacy Model (CBPM) that leverages the context in which the information content is accessed. We extend CBPM to Collective CBPM (C-CBPM), which introduces the concepts of donation and adoption for privacy configuration and grounds the analysis in the notions of collective intelligence and trust computation. We demonstrate the efficacy of C-CBPM by implementing it in application domains such as humanitarian assistance and disaster relief (HADR) operations. CBPM is also implemented as a framework that assists in comparing and extending existing role- or service-based privacy and access control models.

General Terms: Privacy, Context-based, Role based, Access Control, Information Flow,

Trust, Collective CBPM, Community based, Context aware privacy, Collective wisdom,

HADR, Social media, Crowdsourcing.

1. INTRODUCTION

The advent of social media (such as Twitter, Facebook, and blogs), owing to its openness and easy accessibility, has dramatically changed the landscape of communication and participation. Social media websites (e.g., Facebook, Myspace, Twitter), social health websites (e.g., PatientsLikeMe), location-aware social applications (e.g., FourSquare), and similar services hold a vast ocean of user-generated content (UGC), including non-sensitive as well as sensitive demographic, geographic, financial, or health-related information. Although these websites provide custom privacy policies, the policies are intricate, change frequently, and assume that users have the necessary technical knowledge, essentially placing the onus of privacy on the users. Further, each website has its own privacy specifications, making it challenging even for proficient users (let alone naïve or new users) to cope.

1 Authors’ addresses:

M VENKATA SWAMY, College of Engineering and Information Technology, University of Arkansas at Little

Rock, AR, USA. E-mail: [email protected]; NITIN AGARWAL, College of Engineering and Information Technology, University of Arkansas at Little

Rock, AR, USA. E-mail: [email protected]; and

SRINI RAMASWAMY, Industrial Software Systems, ABB Corporate Research, Bangalore, India, Email: [email protected].


As a result, users may unknowingly grant access to their data, leading to grave privacy concerns. Additionally, social applications that leverage user participation and collaboration, commonly known as crowdsourcing, essentially aggregate UGC, placing individuals at considerable risk. Advanced socio-technical systems, especially mobile social computing applications, are no longer esoteric; rather, they are part of the daily lives of billions of people worldwide.

Several studies (Chai et al., 2010; Liu et al., 2009; Liu et al., 2008; Nau and Mannes, 2009; Subrahmanian and Kruglanski, 2008; Nau and Wilkenfield, 2007) have demonstrated that social media is a lucrative data source for understanding the cultural dynamics of geopolitical regions. However, the very characteristics that make social media and mobile devices desirable have created grave concerns regarding the privacy of participants’ information, putting their lives at considerable risk. Social media has inspired human-centric computing that acquires cooperative intelligence from users to achieve high usability (Gross, 2010), and well-designed context-aware devices are a source of ambient intelligence in social media (Buchmayr and Kurchl, 2010).

Despite the privacy concerns, social media is certainly the most opportune environment for crowdsourcing applications (Howe, 2006). Crowdsourcing applications such as Ushahidi’s maps leverage community participation to achieve their goals. Several DoD medical care and outreach programs (such as DARPA-BAA-10-062 Healing Heroes) also seek to leverage social media platforms to provide a premier social networking environment for service members. The Navy/Marine Corps Intelligence Activities (MCIA) used Facebook, blogs, YouTube, Flickr, and Twitter (OSINT) extensively to deploy HADR packages and operate missions to save lives and get crucial aid to thousands of Haitians in the weeks after the crisis (Clarke, 2010). MCIA monitored social media to find the locations of trapped bodies or of people in need of food, medical supplies, etc. Further, MCIA used information from social media to help deploy food drops and monitor progress. However, Haitians regarded this humanitarian food drop as an act of disrespect towards their community, apparently feeling treated like “dogs”, and did not accept the food packets. It was only when a tweet reflected the overall public sentiment regarding the situation that MCIA immediately reevaluated its strategy and started dropping food and supplies to USAID workers first, who then distributed them to the Haitians (Clarke, 2010). This demonstrates the immense value of social media to the DoD in HADR operations by providing real-time monitoring capabilities.

All the HADR missions (whether the Haiti earthquake, the Chile earthquake, or the Pakistan flooding) had one thing in common: reliance upon crowdsourcing that leverages social media. The success of such operations depends considerably upon the volume of user participation. In various incidents, however, the security of USAID workers and even of government and non-government organizations was jeopardized due to vulnerabilities in the privacy of information. According to a report issued by the London-based Overseas Development Institute2, an average of 95 aid workers were killed each year between 2006 and 2009, an increase of roughly 44% from an average of about 66 each year between 2003 and 2006. The rate of kidnappings of aid workers accelerated even more quickly, from about 18 a year between 2003 and 2006 to about 57 a year between 2006 and 2009, an increase of over 200%. Amid such rampant criminal activity, HADR workers may not feel comfortable sharing information. Further, inter-agency information sharing, collaboration, planning, and execution of shared missions necessitate selective transparency on the basis of trust between organizations. Unless strong privacy controls and measures are in place, participating organizations or personnel could find themselves at considerable risk, leading to user attrition and hence affecting the success of HADR crowdsourcing missions. The problem is analogous to the generals’ communication problem in warfare; here, however, we address what to share (communicate) rather than how to share (communicate).

Although many social media services provide privacy policies to users, these policies are intricate, assume that users have the necessary technical knowledge, and essentially place the onus of privacy on the users. As a result, users may unknowingly grant access to their data, leading to grave privacy concerns. Communications in social media entail not just data privacy but also the user’s privacy (Adams et al., 2001). Furthermore, each service imposes its own privacy policies, making it challenging for users to keep up. Recently, data and information warehouses in industry have faced increasingly stringent federal regulations3, forcing them to constantly modify their privacy policies. Expecting users to continuously educate themselves about these policies places them at considerable risk, exacerbating the problem. k-TTP (trusted third party) is one flexible privacy mechanism that leverages community-based knowledge to generate privacy policies (Gilburd et al., 2004). Other work proposes a security agent that distributes standard security policies to each user in a framework such as Facebook (Maximilien et al., 2009).

State-of-the-art privacy models (LaPadula and Bell, 1996; Brewer and Nash, 1989; Foley, 1990; McLean, 1990; Braghin et al., 2008) are essentially based on either user or role identification and focus on the information content. Such models cannot automatically adjust the privacy needs of consumers or organizations to the context in which the data is accessed. A context can be defined based on an established trust relation between organizations, affiliation to an organization, affiliation to a community, relations with family members, friendship ties, secure or non-secure access, etc. We propose a Context Based Privacy Model (CBPM) that automatically identifies the context of the information, borrowing concepts from object-oriented methodology and machine learning. Considering the numerous pieces of information that each individual provides, such as name, telephone number, e-mail address, age, gender, items purchased online, and social interactions, as well as the number of contexts created, the CBPM matrix could quickly become huge

2 http://www.odi.org.uk/resources/download/3250.pdf, retrieved on 16 August 2010

3 http://www.nytimes.com/2010/10/23/technology/23google.html, retrieved on October 23, 2010


and unmanageable. We address this problem by leveraging intelligent, scalable, adaptive, and robust machine learning algorithms to compress the matrix, making it more amenable. Further, CBPM is independent of any particular social web service or mobile social computing application, making it a global approach to controlling privacy policies. However, creating the CBPM matrix could be extremely taxing for naïve users (the usability problem). A naïve user may also delegate his/her access rights to others to define policies, which raises further privacy issues (Moniruzzaman and Barker, 2011). We therefore explore a collective CBPM that introduces the concept of donation, where consultants can voluntarily contribute their privacy settings to the community, and naïve users can choose to adopt such matrices depending on a combination of one or more characteristics of the donor, including affiliation, trust, social ties, structure, role, and expertise level (Agarwal et al., 2008). Just as people use growing online virtual communities for business to gain great value in return, it is reasonable to use the same communities to derive a user’s privacy policies. The proposed model allows users to define their own contexts, as in the real world, and to adopt donations from the community, lowering apprehension and fostering user participation. The key contributions of this work are as follows:

- We demonstrate the need for contextual privacy and introduce a context-aware privacy model that goes beyond the realm of user- or role-based models;
- We propose an incremental, scalable, evolutionary, efficient, and global privacy model that is independent of social media and platform;
- We identify challenges with the proposed model and develop a solution, a collective context-aware privacy model that leverages notions of collective intelligence, allowing donation and adoption of context privacy configurations;
- We demonstrate the model’s capability to quantify and assess notions of trust associated with interactions observed through the donation and adoption mechanisms, enabling selective transparency;
- We compare the proposed model with existing privacy and access control models and demonstrate its capability as a framework to implement and extend these existing models; and
- We highlight the importance of context-aware privacy in application domains such as humanitarian assistance and disaster relief (HADR) operations, and demonstrate how the proposed model can be implemented in these domains to address privacy concerns.

Next, we discuss the proposed model and illustrate it with examples. The paper is organized as follows: Section 2 highlights the motivation for the proposed model with the help of an illustrative example; Section 3 discusses the context based privacy model in detail; Section 4 addresses the challenges with CBPM by extending the model to Collective CBPM; Section 5 compares CBPM with existing access control models and demonstrates the capability of CBPM as a framework to implement these models; Section 6 describes potential applications of the proposed model; and Section 7 presents conclusions and future research directions.

2. MOTIVATION

Privacy has gained a lot of traction, especially with the advent of social media. We all worry about invasion of privacy. Often, people infringe on their own privacy, either directly by releasing confidential information or indirectly through inferences assembled from released chunks of information. According to Nissenbaum (2009), “Information collected from an individual should not be disclosed to any one without consent of the owner of the information”. In Nissenbaum’s view, “privacy is neither a right to secrecy nor a right to control but a right to appropriate flow of personal information” (Nissenbaum, 2009). Privacy of an individual’s information is more than the desire to control access (Baker and Shapiro, 2003). Proper access control makes an industry more vigorous and simultaneously enables it to comply with precepts set by internal or governing bodies. Increasing administrative agreements and the need for economical and flexible service access by industries call for advancing towards fine-grained access control, which cannot be accomplished through either user identity or role identity alone. Traditional access control mechanisms are inadequate for protecting resources such as information by restricting unauthorized access to sensitive information, and thus they undermine privacy. An ideal privacy model requires context, which goes beyond user and role identity. One such circumstance is discussed in Example 1.

Example 1: Heavy monsoon rains caused devastating floods in Pakistan in July 2010. At one point, approximately one-fifth of Pakistan's total land area was underwater4. According to reports5, the floods directly affected about 20 million people, mostly through destruction of property, livelihood, and infrastructure, with a death toll of close to 2,000.

By the end of August, the Relief Web Financial Tracking service indicated that worldwide donations for humanitarian assistance had reached $687 million6. Many organizations and charities, such as CARE International, the Red Cross, World Vision, Save the Children, Humanity First, USAID, the UN, FAO, UNICEF, and WFP, responded immediately with humanitarian assistance7. Three radio operators were hired, and radio training sessions took place at the UNHCR premises in Peshawar for all UN agencies that expressed interest. Security remained a challenge, and the importation and licensing of telecommunications equipment continued to be a constraint. Sahana Foundation8 deployed its open source disaster

4 http://www.bbc.co.uk/news/world-south-asia-10984477, retrieved on August 16, 2010

5 http://www.reliefweb.int/rw/rwb.nsf/db900SID/LSGZ-89GD7W?OpenDocument, retrieved on November 12, 2010

6 http://fts.unocha.org/reports/daily/ocha_R24_E15913___1008270203.pdf, retrieved on August 12, 2011

7 http://www.coe-dmha.org/pakistan2010.html, retrieved on October 23, 2010

8 http://www.pakistan.sahanafoundation.org/eden/default/index, retrieved on August 20, 2010


management platform, which manages volunteers and mobilizes resources to locations in need.

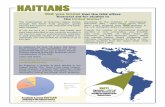

Figure 1. Pakistan floods HADR operations abstract view.

Due to political unrest, AfPak terrorist operations, and other offenses by furious and desperate survivors9, dangerous incidents and threats against HADR personnel and the UN system in the field were reported despite UNICEF’s increased security measures10. Pakistan deployed its special forces to the valley and other potential target areas to secure HADR operations11. As a preventive step against the increased threats, HADR agencies prefer to conceal some information about their personnel. The following instances demonstrate the utility of the proposed model in reducing the vulnerability of HADR personnel and operations.

9 http://www.mirror.co.uk/news/top-stories/2010/08/10/pakistan-flood-disaster-could-be-worse-than-the-boxing-day-tsunami-kashmir-earthquake-and-haiti-earthquake-combined-115875-22476903/, retrieved on August 12, 2010

10 http://www.irinnews.org/Report/90541/PAKISTAN-Securing-aid-delivery, retrieved on September 22, 2010

11 http://www.cbn.com/cbnnews/world/2010/August/Report-Taliban-Kill-3-Christian-Aid-Workers/, retrieved on August 30, 2010


User-Agency: Alex, a HADR volunteer for UNICEF, is comfortable sharing his location with UNICEF but uncomfortable sharing it with local news and media agencies. As part of daily reporting procedures, UNICEF is required to share HADR personnel information (such as e-mail, age, phone number, field location, etc.) with local government bodies and news/media agencies. In many instances, this information can be used to track HADR personnel. There is a need for a privacy model that considers the context and allows information to be shared selectively (a.k.a. selective transparency).

Agency-Agency: As part of coordination management, agencies such as USAID and UNICEF may share information related to their respective HADR operations. However, an illegitimate party can intercept this information and learn the location of HADR personnel or of a planned food drop by a US Air Force/Navy/Army helicopter, putting the lives of HADR personnel or the entire operation at risk. It is very common for organizations with conflicting interests to communicate to establish a mission. A context-aware privacy model could enable USAID and UNICEF to identify which part of the information to share, for example, the resources being delivered instead of the location.

User-User: Alex wants to obtain resources, say medicines, by disclosing his location to UNICEF. At the same time, he does not want to share his location with a fellow colleague, say Bob. When Bob requests Alex’s location, the trust relationship, in other words the context, is “fellow colleague”, whereas UNICEF is in the “authority” context.

Malicious user: Impostors participating in HADR operations could submit false information to the Sahana disaster management system, endangering mission success. The proposed model can help validate information coming into the military from social media channels by leveraging the trust relationships learned from the model data.

Information Aggregation: Broadcast media (such as press media, telemedia, and net media) also collect information from everyone involved in the disaster. Many inferences can be derived from the promulgated information, which can be used to track HADR operations.

Figure 1 depicts some possible attacks in the Pakistan flood recovery scenario. These scenarios are examples where a context-aware privacy system is required, and many more such instances arise in real situations. In the absence of a context-aware privacy system, HADR personnel would share none of their details with others, and their affiliations would follow the same policy. Yet it is well known that relief operations cannot succeed without proper coordination among the various parties.

In addition to the above example, increasing administrative agreements and the need for economical and flexible service access by industries indicate the need to advance towards fine-grained access control. Such access control cannot be accomplished through conventional user or role identity. Traditional access control mechanisms are inadequate in restricting unauthorized access to sensitive information and thus undermine privacy; an ideal privacy model requires context, which goes beyond user and role identity. The proposed model achieves information privacy by considering the context of the requester, as illustrated in Example 1. A preliminary version of the model was implemented in Martha et al. (2010); here, we discuss the model in detail and address the underlying challenges.

3. CONTEXT BASED PRIVACY MODEL

The proposed Context Based Privacy Model (CBPM) achieves information privacy in situations such as the HADR operations illustrated in Example 1. In this section, we explain in detail how context is incorporated to achieve information privacy. We start by describing the terminology and notation used in CBPM.

3.1. Notations and Terminology

In this section, we define the terms used in CBPM, such as data element, object, security region, context, and data set. The terminology used in the following discussion is listed in Table 1.

Table 1. List of terms used in Context Based Privacy Model (CBPM).

| Term | Meaning | Symbol |
|---|---|---|
| Object | An entity that holds an individual’s information | O |
| Data element | An atomic piece of information | d |
| Data set | Set of data elements having identical privacy policies | D |
| Security Region | Set of data elements | SR |
| Public | A security region in which data elements are accessible to everyone | o |
| Private | A security region in which access to data elements is exclusive to owners | c |
| Protected | A security region in which data elements are accessible for specific requests | p |

Definition 1. Data element (di): A data element is an atomic piece of information, such as a name, account number, contact ID, or age. Each data element has its own privacy policy.

Definition 2. Object (O): An object is an entity that holds an individual’s information in the form of data elements; in other words, an object is a container of data elements.

Definition 3. Security Region (SR): Data elements are classified into three mutually exclusive sets, referred to as Security Regions (SR).


The security regions are public (o), private (c), and protected (p). The properties of data sets in a security region are similar to access control over members of a class in object-oriented methodology. Data elements of a data set in the public security region are accessible to everyone, while the data elements of a data set in the private region are accessible only to the owner of the object. By default, every data set is placed in the private security region to keep privacy stringent. For other data sets, the data elements are neither accessible to everyone nor exclusive to the owner; rather, access is defined based on the context. Such data sets are placed in the protected security region. Figure 2 shows the distribution of data sets of arbitrary objects among the three security regions. Here, objects O1, O2, and O3 are shown as rectangular boxes with corresponding private, public, and protected security regions. The public, private, and protected security regions are mutually exclusive, i.e., a data element can be part of only one region. Implementing privacy for data elements in the public and private regions is trivial because they are accessible either to everyone or to no one but the owner. Data elements in the protected region, however, are accessible only in specific contexts and warrant special treatment, which is the focus of this work. Objects can make data elements accessible to external entities, also called subjects, such as processes or users. For this reason, we define context as an abstract state of a subject.

Figure 2. Objects Sharing Data Sets through Access Control.
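The public/private/protected split mirrors the access control over class members in object-oriented methodology mentioned above. The following Python sketch encodes the three regions and the resulting access decision. The names `SecurityRegion`, `DataSet`, and `can_access` are hypothetical, the `context_allows` flag stands in for the CBPM lookup described below, and letting the owner always read protected elements is our assumption rather than something the model specifies.

```python
from dataclasses import dataclass, field
from enum import Enum


class SecurityRegion(Enum):
    """The three mutually exclusive security regions (symbols o, c, p)."""
    PUBLIC = "o"      # accessible to everyone
    PRIVATE = "c"     # accessible only to the owner of the object
    PROTECTED = "p"   # access depends on the requester's context


@dataclass
class DataSet:
    """Hypothetical container; new data sets start in the private region."""
    elements: set = field(default_factory=set)
    region: SecurityRegion = SecurityRegion.PRIVATE


def can_access(region: SecurityRegion, is_owner: bool,
               context_allows: bool = False) -> bool:
    """Decide access to a data set in the given security region.

    `context_allows` stands in for the CBPM lookup that protected data
    sets need; it is ignored for the public and private regions.
    """
    if region is SecurityRegion.PUBLIC:
        return True
    if region is SecurityRegion.PRIVATE:
        return is_owner
    # Protected region. Assumption: the owner always keeps access.
    return is_owner or context_allows
```

Only the protected branch consults context, which is why the rest of the model concentrates on that region.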

Definition 4. Context (C): A context is a set of attributes that describes the state of a user (requester).

A context is a set of attributes, such as role, age, or the status of the requesting process, that identifies a requesting subject. Context expresses the status of a requesting subject more richly than role identity or user identity. For example, an Internet browser, mail, messenger, or social computing application can each be denoted as a context. All requests for data coming from subjects using an Internet browser fall under the Internet browser context; requests from mail or messenger fall under the mail or messenger context; similarly, requests coming from a social computing application (such as FarmVille or FourSquare) fall under the social computing application context. The access permission on a data set of an object is hence decided based on the context of the request, so a process’s or user’s access changes with context. This is referred to as Contextual Privacy. We assume that the context of the requester can be determined from information collected from the request. The access permission for a request is then determined by looking up the requester’s context in CBPM, which maintains a table indicating each context’s permissions on each divisible part of information (i.e., data elements). Data elements can share the same privacy policy, and such data elements can form a data set.
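The model only assumes that a requester's context can be derived from the request and then looked up in a permission table. The sketch below illustrates that flow for the application-based contexts in the browser/mail/messenger example above. The `Request` fields, the client labels, the `CLIENT_TO_CONTEXT` mapping, and the sample permissions are all hypothetical, and denying unknown contexts is our choice, in line with the private-by-default rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Hypothetical request metadata used to infer the requester's context."""
    requester: str
    client: str          # e.g. "browser", "mail", "messenger", "social_app"
    data_element: str


# Hypothetical mapping from requesting application to context label.
CLIENT_TO_CONTEXT = {
    "browser": "internet_browser",
    "mail": "mail",
    "messenger": "messenger",
    "social_app": "social_computing_application",
}

# Illustrative per-context permissions on data elements (1 = allowed).
PERMISSIONS = {
    "internet_browser": {"name": 1, "email": 0},
    "mail": {"name": 1, "email": 1},
}


def determine_context(request: Request) -> str:
    """Infer the context from request metadata; unknown clients map to 'unknown'."""
    return CLIENT_TO_CONTEXT.get(request.client, "unknown")


def is_permitted(request: Request) -> bool:
    """Look up the requester's context; missing entries default to 'not allowed'."""
    context = determine_context(request)
    return PERMISSIONS.get(context, {}).get(request.data_element, 0) == 1
```

The same requester can thus be granted an element through one application and refused it through another, which is the contextual behavior described above.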

Definition 5. Data set (D): A data set is a collection of data elements that all exhibit an identical privacy policy.

Thereby, the data elements of all objects are combined to form one or more mutually exclusive data sets. Initially, each data element is assigned to its own data set, so there are as many singleton data sets as data elements. For example, a data element dj of an object is assigned to a new data set Dj = {dj}. Data sets with identical privacy policies are then aggregated in a bottom-up hierarchical fashion.
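A minimal Python sketch of the initial singletons and their aggregation follows. It assumes each data element's privacy policy is a tuple of 0/1 permissions, one per context, and it merges in a single pass by grouping identical permission tuples; this flat grouping stands in for, and is simpler than, the bottom-up hierarchical aggregation described above. The function names are hypothetical.

```python
from collections import defaultdict


def build_singletons(elements):
    """Initially each data element d_j forms its own data set D_j = {d_j}."""
    return [frozenset([d]) for d in elements]


def merge_identical(singletons, policy):
    """Merge data sets whose permission tuples over all contexts are identical.

    `policy` maps each data element to a tuple of 0/1 bits (one per context).
    Returns a dict from permission tuple to the merged data set.
    """
    merged = defaultdict(set)
    for data_set in singletons:
        (d,) = data_set              # a singleton holds exactly one element
        merged[policy[d]] |= data_set
    return {bits: frozenset(ds) for bits, ds in merged.items()}


# Illustrative policies over three contexts.
policy = {"name": (1, 1, 0), "email": (0, 1, 0),
          "phone": (0, 1, 0), "age": (1, 1, 0)}
data_sets = merge_identical(build_singletons(policy), policy)
```

Here the four singletons collapse into two data sets, {name, age} and {email, phone}, so the matrix needs two columns instead of four.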

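Table 2. CBPM for identified contexts for {D1, D2, D3, D4, D5, D6, D7} data sets.

| Contexts \ Data Sets | D1 | D2 | D3 | D4 | D5 | D6 | D7 |
|---|---|---|---|---|---|---|---|
| C1 | | | | | | | |
| C2 | | | | | | | |
| … | | | | | | | |
| Cm | | | | | | | |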

Authorized access to data sets for each context is stored in a CBPM matrix, which we denote by M. Suppose there are m contexts and n data sets; their permissions can be tabulated as shown in Table 2. Table 2 has m rows, each representing the access permissions of one identified context, and its columns represent the data sets of all objects. Note that the CBPM matrix is a binary matrix. For a data set Dj and a context Ci, the access is defined as

$$M(i,j) = (C_i, D_j) \in \{0, 1\}, \qquad 1 \le i \le m,\; 1 \le j \le n.$$

If M(i,j) is 0, the privacy policy does not permit data set Dj to be accessed in context Ci; likewise, 1 means that the data set is accessible to Ci. For contexts C1, C2, …, Cm, the permissions for a data set Dj can be extracted as

$$M(:,j) = \bigcup_{i=1}^{m} (C_i, D_j).$$

Similarly, the permissions for a context Ci over n data sets are

$$M(i,:) = \bigcup_{j=1}^{n} (C_i, D_j).$$
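Since M is binary, it can be held as a list of rows of 0/1 values. The sketch below extracts M(:,j) and M(i,:) as plain Python lists. The matrix values are illustrative (Table 2 leaves them unspecified), the function names are hypothetical, and Python's 0-based indices are shifted by one from the 1-based i and j in the text.

```python
# Illustrative 3 x 4 CBPM matrix: rows are contexts C1..C3,
# columns are data sets D1..D4; 1 = accessible, 0 = not permitted.
M = [
    [1, 0, 1, 0],  # C1
    [1, 1, 0, 0],  # C2
    [0, 0, 0, 1],  # C3
]


def column(matrix, j):
    """M(:, j): permissions of every context on data set D_(j+1)."""
    return [context_row[j] for context_row in matrix]


def row(matrix, i):
    """M(i, :): permissions of context C_(i+1) on every data set."""
    return list(matrix[i])


def accessible(matrix, i, j):
    """True when M(i, j) = 1."""
    return matrix[i][j] == 1


# The matrix must be binary.
assert all(v in (0, 1) for r in M for v in r)
```

For instance, `column(M, 0)` gives the permissions of C1, C2, and C3 on D1, and `accessible(M, 2, 3)` checks whether C3 may read D4.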


Next, we explain how CBPM is implemented. Because it could be taxing for naïve users to initialize context matrices, we also introduce a collective context based privacy model that allows users to adopt privacy policies donated by the community, enabling naïve users to follow expert or trustworthy users (discussed in more detail in Section 4).

3.2. Implementation of CBPM

In this section, we discuss how CBPM can be realized. CBPM involves three phases.

Phase 1. Identify or define contexts, and identify data elements.

Phase 2. Construct the matrix by creating a row for each context identified in the previous phase. As discussed previously, there is a column for each singleton data set in all objects, so there are as many columns as data elements in the objects. The matrix is therefore very large, and it is reduced in the next phase.

Phase 3. Apply a reduction strategy to make the CBPM matrix amenable. Combining identical columns reduces the number of data sets. Further, this reduction strategy can be implemented as an incremental process during construction of the CBPM matrix, avoiding re-computation every time the matrix changes.

Next, we discuss in detail how the above three phases are implemented. We start with a matrix with no data sets and keep adding data elements to it. Whenever a new data element dz is added to an object Oi, its permission set is compared with the existing data sets (columns) in the object. If a matching data set Dj is found, the data element dz is included in it. Mathematically,

$$D_j = D_j \cup \{d_z\}.$$

If there is no matching data set, the data element becomes the only element of a new data set {dz}, which is added to the matrix as a new column. The algorithm for CBPM implementation is presented in Algorithm 1.

identify_data_elements() is a function that identifies the atomic parts of data, and define_contexts() is a function that defines sets of attributes as contexts. Both are part of Phase 1 and are presented in Algorithm 1.

As part of Phase 2, the CBPM matrix is constructed for each identified context and data element (Algorithm 2). The function add_element_to_matrix is called for each data element and adds that data element to the matrix.

Algorithm 1: Pseudocode for Phase 1
Procedure:
1: function CBPM()
2: {
3: {d1, d2, ..., dx} = identify_data_elements();
4: {c1, c2, ..., cm} = define_contexts();
5: construct_matrix();
6: }
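The following Python sketch mirrors Algorithms 1 to 3. Because the paper does not specify how Phase 1 identifies data elements or defines contexts, the sketch takes their results as arguments (`contexts`, and `policies`, which maps each data element to its tuple of per-context permission bits); passing the element's policy and the matrix explicitly to `add_element_to_matrix` is likewise an assumption. Each column is a dict holding a permission tuple and the set of data elements that share it.

```python
def find_element_in_column(policy, column):
    """Does the element's permission tuple match this column's?"""
    return column["policy"] == policy


def include_column_in_matrix(element, policy, matrix):
    """Append the singleton data set {d} as a new column."""
    matrix.append({"policy": policy, "elements": {element}})


def add_element_to_matrix(element, policy, matrix):
    """Algorithm 3: join the matching data set, else open a new column."""
    for column in matrix:
        if find_element_in_column(policy, column):
            column["elements"].add(element)   # D_j = D_j ∪ {d}
            return
    include_column_in_matrix(element, policy, matrix)


def construct_matrix(policies):
    """Algorithm 2: add every data element to an initially empty matrix."""
    matrix = []
    for element, policy in policies.items():
        add_element_to_matrix(element, policy, matrix)
    return matrix


def cbpm(contexts, policies):
    """Algorithm 1, with Phase 1's outputs supplied by the caller."""
    for element, policy in policies.items():
        if len(policy) != len(contexts):
            raise ValueError(f"{element}: need one permission bit per context")
    return construct_matrix(policies)


contexts = ["UNICEF", "colleague", "media"]
policies = {"name": (1, 1, 1), "location": (1, 0, 0),
            "phone": (1, 0, 0), "email": (1, 1, 0)}
matrix = cbpm(contexts, policies)
```

The four data elements end up in three columns, with location and phone sharing the column (1, 0, 0), so no separate merging pass is needed.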

Phase 3 reduces the size of the matrix, which initially has as many columns as data elements. There are two possible ways to reduce the matrix size. The first is offline merging, in which the complete matrix is constructed and columns with identical values are then combined. This approach is not efficient for large, dynamic CBPM matrices, since both the contexts (rows) and the data elements (columns) of the matrix can grow. The other approach is online merging, presented in Algorithm 3. It constructs the matrix on the fly, adding each data element in such a way that no further merging operations are needed. While a data element is added to the matrix, its values are compared with the existing columns in the matrix (function find_element_in_column()), and it is included in the matching column, i.e., the appropriate data set (Algorithm 3, Line 6). If no matching column is found, the data element becomes a singleton data set (a new column) in the matrix through a call to include_column_in_matrix(). This avoids the merging operation, which is resource intensive.
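Algorithm 3 compares a new element with every existing column, so placing one element costs O(n × m) bit comparisons for n columns and m contexts. A common alternative, swapped in here and not part of the paper, is to index columns by their permission tuple in a hash map, which reduces the match to one lookup of an m-bit key. The sketch also shows one way to cope with a growing number of contexts: a new row is added with every existing data set defaulting to "not allowed", which keeps distinct columns distinct. The class and method names are hypothetical.

```python
class CBPMMatrix:
    """Online CBPM construction with columns indexed by permission tuple."""

    def __init__(self, contexts):
        self.contexts = list(contexts)
        self.columns = {}  # permission tuple -> set of data elements

    def add_element(self, element, policy):
        """Place a data element in its matching data set or a new singleton."""
        policy = tuple(policy)
        if len(policy) != len(self.contexts):
            raise ValueError("need one permission bit per context")
        # setdefault returns the matching data set, or creates an empty one.
        self.columns.setdefault(policy, set()).add(element)

    def add_context(self, context):
        """Add a row; every existing data set is 'not allowed' (0) there."""
        self.contexts.append(context)
        self.columns = {policy + (0,): elements
                        for policy, elements in self.columns.items()}

    def data_sets(self):
        return list(self.columns.values())


m = CBPMMatrix(["UNICEF", "colleague"])
m.add_element("location", (1, 0))
m.add_element("phone", [1, 0])
m.add_element("name", (1, 1))
m.add_context("media")
```

Granting the new context access to only some elements of an existing data set would split that column; handling such splits is left out of this sketch.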

Algorithm 2: Pseudo code for Phase 2
Procedure:
function construct_matrix()
{
  for all d in {d1, d2, ..., dx} do
    add_element_to_matrix(d);
  end for
}

Algorithm 3: Pseudo code for Phase 3
Procedure:
1: function add_element_to_matrix(d)
2: {
3:   for all columns cm of matrix M do
4:     if find_element_in_column(d, cm) then
5:       // d has the same policy values as the data set cm
6:       cm = cm ∪ {d};
7:       return;
8:     end if
9:   end for
10:  include_column_in_matrix({d}, M); // no match: d becomes a singleton data set
11: }

Once the matrix is constructed, it can be used to extract the policy for a given context accessing a data element. Pseudo code to extract policies from a given CBPM matrix is shown in Algorithm 4. Algorithm 4 receives the requested data element and the requester's context. It searches the set of data sets for the matching data set through the search_dataset function. The get_index function gets the index of the requester's context in the access control matrix. The permission set (column) of the data set is retrieved through the get_column function, and the access permission is the bit of that permission set at the context's index, obtained by the get_bit function. The algorithm is executed for every request. Next, we discuss the challenges involved in CBPM.
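To make the lookup in Algorithm 4 concrete, here is a small Python sketch that uses the reduced HADR matrix of Table 8 as sample data. The in-memory layout (a dictionary from member tuples to permission columns) and the deny-by-default answer for unknown elements or contexts are assumptions; the latter mirrors the default "not allowed" matrix discussed in Section 3.3.

```python
from typing import Dict, List, Optional, Tuple

# Sample data: the reduced matrix of Table 8 (rows = contexts, columns = data sets).
CONTEXTS: List[str] = ["UNICEF", "Staff from UNICEF", "Red Cross", "Local Police"]
ACCESS_MATRIX: Dict[Tuple[str, ...], List[int]] = {
    ("age", "allergies"): [1, 0, 1, 0],
    ("location", "email"): [1, 0, 0, 0],
    ("name", "affiliation"): [1, 1, 0, 1],
    ("blood group",): [0, 0, 1, 0],
}


def search_dataset(data_element: str) -> Optional[Tuple[str, ...]]:
    """Find the data set (merged column) that contains the requested element."""
    for members in ACCESS_MATRIX:
        if data_element in members:
            return members
    return None


def process_request(data_element: str, context: str) -> bool:
    """Algorithm 4 sketch: return the permission bit for (context, data set)."""
    dataset = search_dataset(data_element)
    if dataset is None or context not in CONTEXTS:
        return False                          # assumed deny-by-default
    context_index = CONTEXTS.index(context)   # get_index
    column = ACCESS_MATRIX[dataset]           # get_column
    return column[context_index] == 1         # get_bit


print(process_request("blood group", "Red Cross"))  # True
print(process_request("email", "Local Police"))     # False
```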

3.3. Challenges of CBPM

The CBPM approach assumes that each user owns a single access matrix that defines his/her access policies for the different contexts identified by the user. Creating a new matrix from scratch could be taxing for the user, especially for a naïve user; we call this the usability problem¹². As a possible solution, we could initialize each user with a default matrix in which the policy for all data elements is "zero", i.e., "not allowed", and users can then refine this matrix. However, not every user will be able or willing to refine it. So, as another possible solution, we propose a community-based collective intelligence approach (Agarwal et al., 2010) to initialize the CBPM matrix. We call this the Collective Context Based Privacy Model, or C-CBPM. Next, we describe C-CBPM, which leverages theories and concepts from collective intelligence to address the scalability and usability challenges mentioned above.

4. COLLECTIVE CONTEXT BASED PRIVACY MODEL

To address the challenges of the Context Based Privacy Model (CBPM) discussed in Section 3.3, in this section we extend CBPM to the Collective Context Based Privacy Model (C-CBPM). The main idea of C-CBPM is to generate a CBPM matrix for a user with the support of the community: existing users voluntarily help naïve users harden their information sharing. The terminology used in the C-CBPM model is described in Section 4.1.

4.1. Notations and Terminology

The notations used in the following discussion are listed in Table 3.

12 The usability problem, i.e., a naïve user's lack of experience in building a CBPM matrix, shares some issues with the cold-start problem.

Algorithm 4: Pseudo code for Extraction of Policies
Input: data_element, context, access_matrix
Output: bool access_permission
Uses: search_dataset, get_index, get_column, get_bit
Procedure:
function process_request(data_element, context, access_matrix)
{
  dataset = search_dataset(data_element, access_matrix);
  context_index = get_index(context, access_matrix);
  column = get_column(dataset, access_matrix);
  return get_bit(context_index, column);
}


Table 3. Notations in Collective-CBPM.

Notation | Meaning
$M_u$ | CBPM matrix of user u
$M_u(i,:)$ | i-th row of the CBPM matrix (context policy of user u)
$M_u(:,j)$ | j-th column of the CBPM matrix (data set policy of user u)

Next we define the concepts used in C-CBPM.

Definition 6. Donation: Donation is the process of sharing one's CBPM matrix with a requester. Donation is voluntary, and a user can select only part of his/her matrix for donation.

Definition 7. Adoption: A user can request donation matrices from other users and use the donations to construct his/her own matrix. Like donation, adoption is selective: a user can adopt part or all of a donation matrix.

Definition 8. Donor (D): A donor is a user who donates a CBPM matrix. The donor is asked to select the rows and columns that he/she wishes to donate, and holds the privilege to donate all rows and columns; in other words, a donor can donate his/her entire CBPM matrix.

Definition 9. Adopter (A): An adopter is a user who requests CBPM matrices from donors. The adopter has to provide his/her credentials. A new CBPM matrix is constructed for the adopter by selectively aggregating the donated matrices.

4.2. Description

Users can donate their privacy policies by sharing all or part of their CBPM matrix with the community. Naïve or new users can adopt part of these privacy settings or completely imitate what donors share, thereby addressing the cold-start problem. Naïve users can decide which matrices to adopt based on a combination of one or more characteristics of the donor, including affiliation, trust, social ties, structure, and role. Donors who are ready to donate drop their CBPM matrices into the C-CBPM donation pool.

A donor posts all or part of his/her CBPM matrix, selecting beforehand the parts (rows and columns) that can be donated. We assume that some donations are available in C-CBPM for the naïve user. Adoption of a matrix from C-CBPM involves three phases, which we discuss next in detail.

Phase 1: Requesting Donations: An adopter who needs a CBPM matrix initiates the process by broadcasting a request to the available donations, along with his/her credentials. The credentials could include affiliation, community, location, etc.

Phase 2: Processing Requests: Upon receiving a request, C-CBPM authorizes it by examining the adopter's credentials. Authorized requests are granted all or part of the valid CBPM matrices.

Phase 3: Aggregation: The adopter receives responses from C-CBPM containing CBPM matrices. The received matrices are aggregated to construct a new CBPM matrix. A default matrix is created when no donation is available.

Figure 3. Collective CBPM.

The aggregation phase is discussed in detail below. The sequence diagram of the C-CBPM process is depicted in Figure 4.

Figure 4. Sequence Diagram for C-CBPM.

The adopter is asked to pick sub-matrices (parts of donation matrices) from the received donation matrices. The adopter's selections are translated into masks that are applied to the donation matrices to extract the sub-matrices relevant to the adopter's selection. Note that there is one mask for each donation matrix. Essentially, a mask nullifies all values in a matrix except the selected cells. For example, if $M_A$ is a donation matrix from user A, its masked matrix $\bar{M}_A$ is

$$\bar{M}_A(i,j) = \begin{cases} M_A(i,j) & \text{if the user chooses the policy for context } i \text{ and data set } j,\\ 0 & \text{otherwise.} \end{cases}$$

In this way, only the selected elements of the donation matrices are considered for adoption. The aggregation of the masked matrices is defined as the '+OR' operation. For two binary matrices $M_A$ and $M_B$,

$$(M_A +_{OR} M_B)(i,j) = \begin{cases} 0 & \text{if } M_A(i,j) = M_B(i,j) = 0,\\ 1 & \text{otherwise.} \end{cases}$$

Since '+OR' is the element-wise logical OR, it is closed over binary matrices, commutative, and associative (as well as idempotent); hence the order in which donations are aggregated does not matter. The donation matrices are aggregated using this operation to obtain the final adoption matrix. For a user X who adopts donation matrices from users A, B and C, the aggregated adoption matrix is obtained as follows:

$$M_X^{\text{new}} = M_X +_{OR} \bar{M}_A +_{OR} \bar{M}_B +_{OR} \bar{M}_C \qquad (1)$$

where $M_X$ is X's own current matrix and $\bar{M}_A$, $\bar{M}_B$, and $\bar{M}_C$ are the masked matrices for users A, B and C, respectively. Note that there is one masked matrix for each donation. The matrix $M_X^{\text{new}}$ becomes the new CBPM matrix for user X. Because C-CBPM is a collective approach, it depends on community donations, which leads to significant challenges. The next subsection addresses the challenges in C-CBPM.
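Masking and the '+OR' aggregation of Equation (1) can be sketched in plain Python, with binary matrices as nested lists (rows are contexts, columns are data sets). The helper names and the two 2x2 donations in the example are hypothetical; the adopter takes context row 0 from donor A and row 1 from donor B, starting from an all-zero default matrix.

```python
from typing import List

Matrix = List[List[int]]      # rows = contexts, columns = data sets, entries 0/1
Selection = List[List[bool]]  # True where the adopter picked the donor's policy


def apply_mask(donation: Matrix, selected: Selection) -> Matrix:
    """Masked matrix: keep the selected cells of a donation, zero out the rest."""
    return [[value if keep else 0 for value, keep in zip(row, sel_row)]
            for row, sel_row in zip(donation, selected)]


def or_add(a: Matrix, b: Matrix) -> Matrix:
    """The +OR operation: a cell is 0 only if it is 0 in both matrices."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("matrices must have the same shape")
    return [[0 if x == 0 and y == 0 else 1 for x, y in zip(ra, rb)]
            for ra, rb in zip(a, b)]


def adopt(own: Matrix, *masked: Matrix) -> Matrix:
    """Equation (1): fold +OR over the adopter's matrix and all masked donations."""
    result = own
    for m in masked:
        result = or_add(result, m)
    return result


default = [[0, 0], [0, 0]]  # default 'not allowed' matrix of a new user
m_a = apply_mask([[1, 0], [1, 1]], [[True, True], [False, False]])  # row 0 from A
m_b = apply_mask([[0, 1], [1, 0]], [[False, False], [True, True]])  # row 1 from B
print(adopt(default, m_a, m_b))  # [[1, 0], [1, 0]]
```

Because '+OR' is commutative and associative, the loop in `adopt` may visit the donations in any order.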

4.3. Challenges Involved in C-CBPM

In this section, we first briefly state the challenges of C-CBPM and then propose solutions to address them. The challenges are:

1. Too many donations: Since every user can donate his/her CBPM matrix, a naïve user could be overwhelmed by the number of donations to adopt from.

2. Malicious donors: Some donors may try to game the system by posing as legitimate consultants and pushing spurious CBPM matrices which, upon adoption, might jeopardize the privacy of the adopter.

3. Privacy of the CBPM matrix in a collective computation setting: It may seem that sharing one's CBPM matrix, i.e., one's privacy settings, jeopardizes the privacy of that matrix. Donations are therefore kept anonymous to untrustworthy users.

To address the challenges, we leverage the notions of trust (Agarwal and Liu,

2009) and expert identification models (Agarwal et al., 2008). Next, we

describe the evaluation of trust associated with donations.

Computation of Trust: Donors donate their matrices for adoption. The more their matrices are adopted, the more trust they earn as recognition of their donations, and their profiles are associated with the corresponding trust values. Users who are especially active in donating privacy matrices, and whose matrices are widely adopted, are called "Expert Donors" or "Consultants".


Figure 5. Trust evolution: A typical scenario.

Next, we describe how trust is evaluated for each donation. The trust value of a user is calculated from the number of users who adopted a CBPM matrix from that user. Further, a combination of one or more characteristics of a donor, including affiliation, social ties, structure, role, and expertise level (Agarwal et al., 2008), can also be used to estimate trust. The trust value of a user is initialized to 0 and evolves over time.

A typical scenario of donation and adoption is presented in Figure 5. A directed edge from one user (A) to another user (X) indicates that A donated his/her matrix to X; that is, edges point in the direction of donation. Each such directed edge, or donation, is associated with a trust value denoted $t(A,X)$, which represents how much user X trusts user A. Trust values always lie in $[-1, 1]$:

$$-1 \le t(A,X) \le 1$$

The trust values are used to classify users into three categories, defined as follows:

o Trusted: Donors whose trust value, observed from historical evidence, has already reached the required threshold.
$$X \text{ trusts } A \iff t(A,X) = 1$$

o Yet to be trusted: Donors that are either new (a new donor starts at $t(A,X) = 0$) or do not yet have enough adoptions to earn the required trust. Adopting donations from these donors exposes the adopter to the accompanying risk.
$$X \text{ has less trust in } A \text{ if } 0 \le t(A,X) < 1$$

o Non-trusted: Donors that are compromised or proven untrustworthy, i.e., for whom enough historical evidence has accumulated to show that their trust is negative. Their donations are rejected outright.
$$X \text{ regards } A \text{ as a malicious user if } t(A,X) < 0$$
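The three categories can be expressed as a small Python function, with a filter that rejects non-trusted donations outright. This is a sketch: the function names are hypothetical, and treating a brand-new donor with t = 0 as "yet to be trusted" is an assumption drawn from the description of new donors and the initial trust value of 0.

```python
from typing import List, Tuple


def trust_category(t: float) -> str:
    """Map a trust value t(A, X) in [-1, 1] to one of the three donor categories."""
    if not -1.0 <= t <= 1.0:
        raise ValueError("trust values lie in [-1, 1]")
    if t == 1.0:
        return "trusted"
    if t >= 0.0:  # assumption: new donors (t = 0) count as yet to be trusted
        return "yet to be trusted"
    return "non-trusted"


def admissible(donations: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Reject donations from non-trusted donors outright; keep the rest."""
    return [(donor, t) for donor, t in donations if trust_category(t) != "non-trusted"]


print(admissible([("A", 1.0), ("B", 0.4), ("C", -0.3)]))  # [('A', 1.0), ('B', 0.4)]
```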

Trust values can be learned over several donations and adoptions using the models proposed in our earlier work on trust (Agarwal and Liu, 2009) and expert identification (Agarwal et al., 2008). A donor's trust rises when a user accepts his/her donation and degrades otherwise. Next, we describe how donation matrices are aggregated by incorporating the corresponding trust values.
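The rule "trust rises on acceptance and degrades otherwise" can be illustrated with a simple additive update clipped to [-1, 1]. This is only a hypothetical stand-in: the fixed `step` of 0.25 and the function names are assumptions, not the learning models of the cited trust and expert-identification work.

```python
from typing import Iterable


def update_trust(t: float, accepted: bool, step: float = 0.25) -> float:
    """Raise trust on an accepted donation, lower it on a rejected one, clip to [-1, 1].

    The fixed additive step is a hypothetical choice for illustration only.
    """
    t = t + step if accepted else t - step
    return max(-1.0, min(1.0, t))


def trust_after(history: Iterable[bool], start: float = 0.0, step: float = 0.25) -> float:
    """Replay accept (True) / reject (False) outcomes from the initial trust of 0."""
    t = start
    for accepted in history:
        t = update_trust(t, accepted, step)
    return t


print(trust_after([True, True, True]))  # 0.75
print(trust_after([True] * 6))          # 1.0 (clipped at the upper bound)
print(trust_after([False]))             # -0.25
```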

Trust Aware Donation Matrix Aggregation: We now make use of these trust values in C-CBPM. Assume an adopter X has received matrices from more than one donor. The adopter is asked to select sub-matrices (rows and/or columns) of the donated matrices for adoption, and a new matrix is built from the selected sub-matrices. Conflicts at a cell during aggregation are resolved through trust values; the aggregation relies on the trust value of every ordered pair of users, since trust is not necessarily symmetric. Once the trust values are calculated, the donation matrices are aggregated. Each masked matrix is multiplied by its associated trust value and then quantized: $t(A,X)\bar{M}_A$ is obtained by multiplying each element of the masked matrix $\bar{M}_A$ by $t(A,X)$. The matrix elements are then quantized to 1 or 0 by comparing them with a threshold. We denote quantization by $[\,\cdot\,]$, and $\mu$ is the quantization threshold, which can be determined experimentally. The quantized value for context $i$ and data set $j$ is defined as

$$[t(A,X)\bar{M}_A](i,j) = \begin{cases} 1 & \text{if } t(A,X)\,\bar{M}_A(i,j) > \mu,\\ 0 & \text{otherwise.} \end{cases}$$

Quantization transforms $t(A,X)\bar{M}_A$ into a binary matrix, and such a quantized matrix is calculated for each donation. For $\mu \ge 0$, a donation from a donor with negative trust is quantized to all zeros and therefore contributes nothing. Equation (1) is now rewritten by replacing the masked matrices with the quantized matrices, and the final aggregation matrix is obtained using the following formula:

$$M_X^{\text{new}} = M_X +_{OR} [t(A,X)\bar{M}_A] +_{OR} [t(B,X)\bar{M}_B] +_{OR} [t(C,X)\bar{M}_C]$$
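A minimal Python sketch of trust-aware aggregation: each masked donation is scaled by its trust value, quantized against the threshold mu, and then combined with '+OR'. The trust values (1.0, 0.3, -0.8) and the two values of mu in the example are hypothetical, chosen only to show how raising mu filters out a partially trusted donor while a negatively trusted donor never contributes.

```python
from typing import List, Tuple

Matrix = List[List[int]]


def quantize(masked: Matrix, trust: float, mu: float) -> Matrix:
    """[t(A,X) M_A]: 1 where trust * cell exceeds the threshold mu, else 0."""
    return [[1 if trust * cell > mu else 0 for cell in row] for row in masked]


def trust_aware_adopt(own: Matrix, donations: List[Tuple[float, Matrix]], mu: float) -> Matrix:
    """+OR the adopter's matrix with every quantized, trust-weighted masked donation."""
    result = [row[:] for row in own]
    for trust, masked in donations:
        q = quantize(masked, trust, mu)
        result = [[1 if a or b else 0 for a, b in zip(r, rq)] for r, rq in zip(result, q)]
    return result


own = [[0, 0, 0]]
donations = [(1.0, [[1, 0, 0]]),   # trusted donor A
             (0.3, [[0, 1, 0]]),   # yet-to-be-trusted donor B
             (-0.8, [[0, 0, 1]])]  # non-trusted donor C
print(trust_aware_adopt(own, donations, mu=0.5))  # [[1, 0, 0]]
print(trust_aware_adopt(own, donations, mu=0.2))  # [[1, 1, 0]]
```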

Next, we explain how trust-aware aggregation addresses the challenges mentioned earlier.

Addressing the Challenges: The received donations are ranked by the trust values of their donors. Matrices from donors with low trust values are discarded, so that only valid, trusted donations are picked for adoption from among too many donations. Malicious donors are discarded as well, since they are associated with low trust values. The remaining challenge is to protect the CBPM matrix from information breach. Donations are voluntary and are kept anonymous to safeguard the privacy of the consultants, i.e., the donors of the CBPM matrices. Donations are obtained voluntarily, and only from users who permit it, to avoid any kind of information breach in terms of policies.
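Ranking and discarding donations, which addresses the "too many donations" and "malicious donors" challenges, might look like the following Python sketch. The cut-off `min_trust` (0.0 by default) and the optional cap `max_count` are hypothetical parameters; the text only states that low-trust donations are discarded.

```python
from typing import List, Optional, Tuple


def select_donations(donations: List[Tuple[str, float]],
                     min_trust: float = 0.0,
                     max_count: Optional[int] = None) -> List[Tuple[str, float]]:
    """Rank (donor, trust) pairs by trust and discard low-trust or malicious donors."""
    kept = [d for d in donations if d[1] > min_trust]  # drops negative-trust donors
    kept.sort(key=lambda d: d[1], reverse=True)        # most trusted first
    return kept if max_count is None else kept[:max_count]


pool = [("A", 0.9), ("B", -0.5), ("C", 0.4), ("D", 1.0)]
print(select_donations(pool, max_count=2))  # [('D', 1.0), ('A', 0.9)]
```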

As presented in the next section, CBPM can also serve as a framework for implementing existing user- and role-based privacy models, such as the Bell-LaPadula model (LaPadula and Bell, 1996), the Chinese (Ethical) Wall model (Brewer and Nash, 1989), and the Information Flow model (Foley, 1990; McLean, 1990; Braghin et al., 2008).


5. RELATED WORK

We consider four access control models that provide confidentiality and thereby help assure privacy.

1. Bell-LaPadula Model: Objects are classified into four sensitivity levels: Top Secret, Secret, Confidential and Unclassified. A subject can access objects only at the levels permitted by his/her security clearance. The model cannot restrict illegal information flow within a sensitivity level (LaPadula and Bell, 1996; Francesco and Martini, 2007). There are also situations in which write access to higher-level objects causes information leakage, known as a covert channel.

2. Ethical Wall Model: The main objective of this model is to avoid conflict-of-interest problems: no two entities in the same conflict-of-interest class exchange information (Brewer and Nash, 1989), so the model acts as a wall between the objects of each such class. The model does not help in situations where mutual cooperation among objects in a conflict-of-interest class is needed. The Chinese Wall model can be generalized in terms of the lattice-based Bell-LaPadula model, but such a generalization also provides no facility to exchange information among objects in a class.

3. Information Flow Model: Access to an object is granted to a subject only if the subject guarantees that the information will not reach unauthorized entities through it (Foley, 1990; McLean, 1990; Braghin et al., 2008; Chou, 2004). In this way, the flow of information is constrained. The model is complex to implement in real time, because a trace of the information is needed to make each decision.

4. Context-Based Access Control: The subject's context, rather than the security level of the subject or object, plays the major role (Kapsalis et al., 2006; Hong et al., 2009). This approach is widely used in firewalls for stateful filtering of network traffic. Context depends on many parameters, such as time, location, and domain. Context-aware systems take advantage of a consultant's context to analyze the activities at each layer of communication. In this paper, we extend the model to information flow to provide privacy.

Another access control mechanism is Role Based Access Control (RBAC), which uses the role of the requesting entity to decide whether to grant or revoke access (Goncalves and Maranda, 2008). However, identifying the role of the requester is not sufficient for making decisions in all cases. Tbahriti et al. proposed a privacy model for data in a Data as a Service (DaaS) environment, where a policy can be defined for a combination of a data object and a service (Tbahriti et al., 2011). Rather than user or role identity, in this paper we use contexts to control information access. Although context-aware systems are complex, they have been found effective in network traffic monitoring. There are also techniques that address privacy concerns in databases by inserting dummy information (a virtual database) to prevent disclosure of sensitive information to certain contexts (Jindal and Kiran, 2010). To address the complexity of implementing contextual privacy, we disseminate information into atomic data elements according to their privacy policies. Such dissemination allows fine-grained privacy policies to be applied to each atomic piece of information. Inspired by the k-anonymity protection mechanism (Sweeney, 2002), we restrict a data element in a context if the number of trusted-community votes for accessing that element is less than a given threshold (analogous to k in the k-anonymity approach).
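The vote threshold can be illustrated with a short Python sketch. It assumes, hypothetically, that a "vote" is a trusted donor whose matrix allows the (context, data element) pair, with each donor matrix stored as a dictionary keyed by that pair; the donors and the value k = 2 are made up for the example.

```python
from typing import Dict, List, Tuple

# Assumed representation: each trusted donor's matrix maps (context, data element) -> 0/1.
DonorMatrix = Dict[Tuple[str, str], int]


def community_allows(trusted: List[DonorMatrix], context: str, element: str, k: int) -> bool:
    """Allow access only if at least k trusted donors allow it (analogous to k in k-anonymity)."""
    votes = sum(1 for m in trusted if m.get((context, element), 0) == 1)
    return votes >= k


donors = [
    {("Red Cross", "blood group"): 1, ("Local Police", "email"): 0},
    {("Red Cross", "blood group"): 1},
    {("Red Cross", "blood group"): 0, ("Local Police", "email"): 1},
]
print(community_allows(donors, "Red Cross", "blood group", k=2))  # True (2 votes)
print(community_allows(donors, "Local Police", "email", k=2))     # False (1 vote)
```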

All the above privacy models require the data owner or administrator to define policies or access controls, whereas C-CBPM inherits the knowledge (policies) of a user's neighbors to automate the specification of privacy policies. We compare C-CBPM with the discussed models in the next section.

5.1. C-CBPM As A Framework: Extending Existing Models

We realize existing access control models with C-CBPM, whose extended features enable the implementation of other models. We discuss the implementation of each model in detail below.

5.1.1 Bell-LaPadula Model

C-CBPM has sufficient facilities to realize the Bell-LaPadula model. Since the Bell-LaPadula model has no notion of a piece of information (i.e., a data element) within an object, each object is a single data set. The sensitivity label of each object is used to build the CBPM matrix, and the context of the consultant, or subject, is defined as its sensitivity label. Therefore, the CBPM matrix comprises sensitivity labels as rows and data sets as columns. Table 4 shows the constructed CBPM matrix. The values are filled in so that the matrix obeys the NRU (No Read Up) and NWD (No Write Down) rules; assuming that granted access covers both reading and writing, the only cells satisfying both rules are those where subject and object share the same level:

$$M(C_i, D_j) = \begin{cases} 1 & \text{if } C_i \text{ and } O_j \text{ have the same sensitivity label},\\ 0 & \text{otherwise,} \end{cases}$$

where $O_j$ is the object that forms data set $D_j$.

Table 4. CBPM matrix for Bell-LaPadula Model.

Contexts \ Data sets D1 D2 D3 D4 D5

Top Secret

Confidential

Unclassified

Although the Bell-LaPadula model can be implemented through CBPM, this direct implementation still inherits the shortcomings of the Bell-LaPadula model mentioned in the related work section. The CBPM model itself, however, is not limited to this encoding and can effectively address these shortcomings.
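The same-label rule for Bell-LaPadula can be checked mechanically. The Python sketch below builds the matrix and verifies that no granted cell permits reading up or writing down; the sensitivity labels assigned to D1 through D5 are hypothetical, and the rank ordering follows the four levels listed in the related work.

```python
from typing import Dict, List

RANK = {"Unclassified": 0, "Confidential": 1, "Secret": 2, "Top Secret": 3}


def blp_matrix(contexts: List[str], object_labels: Dict[str, str]) -> List[List[int]]:
    """One row per clearance context, one column per object; 1 only for equal labels."""
    return [[1 if object_labels[d] == c else 0 for d in object_labels] for c in contexts]


def obeys_nru_nwd(contexts: List[str], object_labels: Dict[str, str],
                  matrix: List[List[int]]) -> bool:
    """Check that no granted cell lets a subject read up or write down."""
    for i, c in enumerate(contexts):
        for j, d in enumerate(object_labels):
            if matrix[i][j] == 1:
                subject, obj = RANK[c], RANK[object_labels[d]]
                read_up = obj > subject
                write_down = obj < subject
                if read_up or write_down:
                    return False
    return True


contexts = ["Top Secret", "Confidential", "Unclassified"]
labels = {"D1": "Top Secret", "D2": "Confidential", "D3": "Unclassified",
          "D4": "Top Secret", "D5": "Confidential"}  # hypothetical labels
m = blp_matrix(contexts, labels)
print(m)  # [[1, 0, 0, 1, 0], [0, 1, 0, 0, 1], [0, 0, 1, 0, 0]]
print(obeys_nru_nwd(contexts, labels, m))  # True
```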

5.1.2 Ethical Wall Model

The ethical wall model can be realized using C-CBPM by interpreting classes of interest as contexts, with one row for each class of interest. The CBPM matrix is filled so that no subject (context = class of interest) gets access to an object from its own class of interest, just as an object in a class of interest cannot access another object in the same class:

$$M(C_i, D_j) = \begin{cases} 1 & \text{if } C_i \text{ and } O_j \text{ are from different classes of interest},\\ 0 & \text{otherwise.} \end{cases}$$


Table 5. CBPM matrix for Ethical Wall Model.

Contexts \ Data sets D1 D2 D3 D4 D5 D6

Financial Institution

Oil Industry

Stock Broker

Medical and Insurance Inc.

By disseminating an object's information into data elements and labeling each data element with a class of interest, the CBPM realization of the ethical wall model allows non-critical information to be shared among entities within a class of interest, which the original model does not support. Such an extension, however, should not violate privacy policies.

5.1.3 Information Flow Model

To realize the Information Flow Model using C-CBPM, contexts are defined as groups of connected entities: each group of connected entities is a context. A connection can be any channel that supports information exchange (for example, a telephone). There is no line of information flow between a group and the other groups, and within a group a data element is accessible to all or none. One instance of such a scenario is presented in Table 6.

Table 6. CBPM matrix for Information Flow Model.

Contexts \ Data sets D1 D2 D3 D4 D5 D6

Faculty

Students

Staff

This example assumes that faculty, staff and students in a university do not share critical information across these three groups; they therefore form three connected groups. The groups can also be identified by applying appropriate graph techniques, such as finding connected components, to an information flow chart.
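Identifying the groups from an information flow chart amounts to finding the connected components of an undirected graph. The breadth-first search below is one standard way to do that; the entity names and channels are hypothetical, and each returned group would become one context row of the CBPM matrix.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Set, Tuple


def flow_groups(entities: Iterable[str], channels: Iterable[Tuple[str, str]]) -> List[List[str]]:
    """Return the connected components of an undirected information-flow graph."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for a, b in channels:        # each channel lets information flow both ways
        graph[a].add(b)
        graph[b].add(a)
    seen: Set[str] = set()
    groups: List[List[str]] = []
    for start in entities:
        if start in seen:
            continue
        seen.add(start)
        group, queue = [], deque([start])
        while queue:             # breadth-first search from start
            u = queue.popleft()
            group.append(u)
            for v in graph[u]:
                if v not in seen:
                    seen.add(v)
                    queue.append(v)
        groups.append(sorted(group))
    return groups


people = ["prof_a", "prof_b", "student_x", "student_y", "clerk_1"]
links = [("prof_a", "prof_b"), ("student_x", "student_y")]
print(flow_groups(people, links))
# [['prof_a', 'prof_b'], ['student_x', 'student_y'], ['clerk_1']]
```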

6. POTENTIAL APPLICATION AREAS

We demonstrate one of the many potential application areas of C-CBPM using hypothetical parameters: we develop a CBPM matrix with arbitrary values for a few hypothetical contexts and data sets.

HADR: As discussed in Section 2, the privacy of HADR staff can be achieved using the C-CBPM model. Continuing with Example 1, we construct the CBPM matrix for a HADR volunteer, Alex. Alex owns data elements such as age, location, name, affiliation, email, blood group, and allergies, and grants access to each data element depending on the requester's context. Table 7 shows a hypothetical CBPM matrix.

Table 7. CBPM matrix for Alex from UNICEF.

Contexts \ Data sets | {age} | {location} | {name} | {affiliation} | {email} | {blood group} | {allergies}
UNICEF | 1 | 1 | 1 | 1 | 1 | 0 | 1
Staff from UNICEF | 0 | 0 | 1 | 1 | 0 | 0 | 0
Red Cross | 1 | 0 | 0 | 0 | 0 | 1 | 1
Local Police | 0 | 0 | 1 | 1 | 0 | 0 | 0

From Table 7, the data elements 'age' and 'allergies' have the same policy, i.e., the same column values, so they are combined. The process is carried out for the other columns as well: (age, allergies), (location, email), and (name, affiliation) have identical values, so each pair is combined. The reduced CBPM matrix is shown in Table 8.

Table 8. Final CBPM matrix for Alex from UNICEF.

Contexts \ Data sets | {age, allergies} | {location, email} | {name, affiliation} | {blood group}
UNICEF | 1 | 1 | 1 | 0
Staff from UNICEF | 0 | 0 | 1 | 0
Red Cross | 1 | 0 | 0 | 1
Local Police | 0 | 0 | 1 | 0

The above example shows that merging identical columns can significantly reduce the size of a CBPM matrix: here, seven data elements are reduced to four data sets.
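The reduction from Table 7 to Table 8 can be reproduced with the short Python sketch below, which groups the columns of the complete matrix by their values (the offline merging strategy described earlier, applicable here because the whole table is available). The dictionary layout and the function name are illustrative assumptions.

```python
from typing import Dict, List, Tuple

CONTEXTS = ["UNICEF", "Staff from UNICEF", "Red Cross", "Local Police"]
# Columns of Table 7: one 0/1 bit per context, in the order of CONTEXTS.
TABLE_7: Dict[str, Tuple[int, ...]] = {
    "age": (1, 0, 1, 0),
    "location": (1, 0, 0, 0),
    "name": (1, 1, 0, 1),
    "affiliation": (1, 1, 0, 1),
    "email": (1, 0, 0, 0),
    "blood group": (0, 0, 1, 0),
    "allergies": (1, 0, 1, 0),
}


def merge_identical_columns(table: Dict[str, Tuple[int, ...]]) -> Dict[Tuple[int, ...], List[str]]:
    """Group data elements whose columns (policies across all contexts) are identical."""
    merged: Dict[Tuple[int, ...], List[str]] = {}
    for element, column in table.items():
        merged.setdefault(column, []).append(element)
    return merged


for column, elements in merge_identical_columns(TABLE_7).items():
    print(elements, column)
# ['age', 'allergies'] (1, 0, 1, 0)
# ['location', 'email'] (1, 0, 0, 0)
# ['name', 'affiliation'] (1, 1, 0, 1)
# ['blood group'] (0, 0, 1, 0)
```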

7. CONCLUSION

As social life gradually moves onto the Internet through the social web and mobile social computing applications, there is an increasing need for avant-garde privacy models that deal with the challenges of new types of information sources. These sources create a vast ocean of data with intricate access requirements and constraints, forcing us to think beyond existing content-centric user- or role-based privacy models. The concomitant privacy issues of the World Wide Web, and even more so of social media, have affected various applications such as healthcare delivery. Other applications that leverage extensive user participation and collaboration, or crowdsourcing, such as humanitarian assistance and disaster relief (HADR) operations, are significantly affected too.

The proposed context based privacy model (CBPM) is incremental, scalable, evolutionary, efficient, and global, and it is independent of any particular social media site or platform. It allows users to define their own contexts, as they do in the real world, which lowers users' apprehensions and fosters their participation, with far-reaching implications for applications that struggle with data privacy and information security challenges. The CBPM matrix could pose scalability challenges due to numerous data elements and contexts, so we proposed an incremental reduction strategy to keep the CBPM matrix manageable.


Further, we extended the proposed model to Collective-CBPM (C-CBPM), which provides hassle-free privacy policies for naïve or new users. C-CBPM leverages notions of trust and collective intelligence, allowing context privacy configurations to be donated to and adopted from the community. The interactions observed through the donation and adoption mechanism enable the model to learn, assess, and quantify the trust between various users or organizations, facilitating selective transparency. The model dynamically adapts to the domain of its users, so a user's privacy is not compromised regardless of whether the donor pool consists of conservative or liberal users. The proposed model is also compared with existing privacy and access control models, and its capability as a framework to implement and extend these models is demonstrated. We also demonstrate how the proposed model can be applied in domains such as humanitarian assistance and disaster relief (HADR) operations to address privacy concerns.

We envisage that this research will further our understanding of the individual and collective socio-technical behavioral changes induced by the advancement of technology, which creates new synergies and capabilities. Data from this research will be made publicly available because of its potential value for various interdisciplinary research endeavors, especially in human-computer interaction, game theory, political communication, social network analysis and mining, and social computing, among others.

References

Yassine, A. and Shirmohammadi, S. (2009). A business privacy model for virtual communities. International Journal of Web Based Communities, 5(2), 313-335.

Adams, A. and Sasse, M. A. (2001). Privacy in multimedia communications: Protecting users, not just data. In People and Computers XV: Interactions without Frontiers, Joint Proceedings of HCI 2001 and IHM 2001, pp. 49-69. Springer-Verlag.

Agarwal, N., Liu, H., Tang, L., and Yu, P. (2008). Identifying influential bloggers in a community. 1st International Conference on Web Search and Data Mining (WSDM08), pp. 207-218, California.

Agarwal, N. and Liu, H. (2009). Trust in blogosphere. In L. Liu and M. T. Özsu (Eds.), Encyclopedia of Database Systems, pp. 3187-3191. Springer.

Agarwal, N., Galan, M., Liu, H., and Subramanya, S. (2010). WisColl: Collective wisdom based blog clustering. Journal of Information Science, 180(1), 39-61.

Baker, C. R. and Shapiro, B. (2003). Information technology and the social construction of information privacy: Reply. Journal of Accounting and Public Policy, 22(3), 287-290.

Braghin, C., Cortesi, A., and Focardi, R. (2008). Information flow security in boundary ambients. Information and Computation, pp. 460-489. Elsevier.

Brewer, D. F. C. and Nash, M. J. (1989). The Chinese wall security policy. IEEE Symposium on Research in Security and Privacy, pp. 206-214.


Buchmayr, M. and Kurschl, W. (2010). A survey on situation-aware ambient intelligence systems. Journal of Ambient Intelligence and Humanized Computing, 2, 175-183.

Chai, S., Salerno, J., and Mabry, P. (2010). Third International Conference on Social Computing, Behavioral Modeling, and Prediction, SBP 2010, Bethesda, MD, USA, March 30-31, 2010. Springer.

Chou, S. (2004). Dynamic adaptation to object state change in an information flow control model. Journal of Information and Software Technology, 46(11), 729-737.

Clarke, C. (2010). Intelligence collection through social media. Navy Imagery Insider, p. 4.

Foley, S. (1990). Secure information flow using security groups. Computer Security Foundations Workshop III, pp. 62-72.

Francesco, N. and Martini, L. (2007). Instruction-level security analysis for information flow in stack-based assembly languages. Journal of Information and Computation, 205(9), 1334-1370.

Gilburd, B., Schuster, A., and Wolff, R. (2004). k-TTP: A new privacy model for large-scale distributed environments. Proceedings of the Tenth ACM SIGKDD, pp. 563-568.

Goncalves, G. and Maranda, A. (2008). Role engineering: From design to evolution of security schemes. Journal of Systems and Software, 81(8), 1306-1326.

Gross, T. (2008). Cooperative ambient intelligence: Towards autonomous and adaptive cooperative ubiquitous environments. International Journal of Autonomous and Adaptive Communications Systems (IJAACS), 1(suppl. 2), 270-278.

Hong, J., Suh, E., and Kim, S. (2009). Context-aware systems: A literature review and classification. Journal of Expert Systems with Applications, 36(4), 8509-8522.

Howe, J. (2006). The rise of crowdsourcing. Wired, Issue 14.06, June 2006.

Kapsalis, V., Hadellis, L., Karelis, D., and Koubias, S. (2006). A dynamic context-aware access control architecture for e-services. Journal of Computers & Security, 25(7), 507-521.

Thomas, K., Grier, C., and Nicol, D. M. (2010). unFriendly: Multi-party privacy risks in social networks. In M. J. Atallah and N. J. Hopper (Eds.), Proceedings of the 10th International Conference on Privacy Enhancing Technologies (PETS'10), pp. 236-252. Springer-Verlag, Berlin, Heidelberg.

LaPadula, L. J. and Bell, D. E. (1996). Secure computer systems: A mathematical model. Journal of Computer Security, 4, 239-263.

Sweeney, L. (2002). k-anonymity: A model for protecting privacy. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, 10(5), 557-570.

Liu, H., Salerno, J., and Young, M. (2008). Proceedings of Social Computing, Behavioral Modeling, and Prediction (SBP08). Arizona: Springer.


Liu, H., Salerno, J., and Young, M. (2009). Proceedings of Social Computing and Behavioral Modeling (SBP09). Arizona: Springer.

Martha, V., Ramaswamy, S., and Agarwal, N. (2010). CBPM: Context based privacy model. 2nd International Symposium on Privacy and Security Applications (PSA-10), held in conjunction with the IEEE International Conference on Privacy, Security, Risk. Minneapolis: IEEE.

Maximilien, E. M., Grandison, T., Liu, K., Sun, T., Richardson, D., and Guo, S. (2009). Enabling privacy as a fundamental construct for social networks. International Conference on Computational Science and Engineering (CSE '09), vol. 4, pp. 1015-1020, 29-31 Aug. 2009.

McLean, J. (1990). Security models and information flow. IEEE Symposium on Research in Security and Privacy, pp. 180-187.

Moniruzzaman, M. and Barker, K. (2011). Delegation of access rights in a privacy preserving access control model. Ninth Annual International Conference on Privacy, Security and Trust (PST 2011), pp. 124-133, 19-21 July 2011.

Nau, D. and Mannes, A. (2009). Proceedings of the Third International Conference on Computational Cultural Dynamics (ICCCD-09). Maryland: The AAAI Press.

Nau, D. and Wilkenfeld, J. (2007). Proceedings of the First International Conference on Computational Cultural Dynamics (ICCCD-07). Maryland: The AAAI Press.

Nissenbaum, H. (2009). Privacy in Context. Stanford: Stanford Law & Politics.

Jindal, R. and Kiran, C. (2010). Dual layer privacy model for hidden databases. International Journal of Computer Applications, 1(13), 22-25. Foundation of Computer Science.

Subrahmanian, V. S. and Kruglanski, A. (2008). Proceedings of the Second International Conference on Computational Cultural Dynamics (ICCCD-08). Maryland: The AAAI Press.

Tbahriti, S.-E., Mrissa, M., Medjahed, B., Ghedira, C., Barhamgi, M., and Fayn, J. (2011). Privacy-aware DaaS services composition. In A. Hameurlain, S. W. Liddle, K.-D. Schewe, and X. Zhou (Eds.), DEXA (1), pp. 202-216. Springer.