Bachelor Thesis Automatic Speech Recognition for … Thesis Automatic Speech Recognition for...

Transcript of Bachelor Thesis Automatic Speech Recognition for … Thesis Automatic Speech Recognition for...

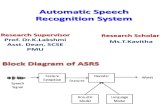

Bachelor Thesis

Automatic Speech Recognition forElectro-Larynx Speech

comparing different feature extraction methods

conducted at theSignal Processing and Speech Communications Laboratory

Graz University of Technology, Austria

byGabriel Hulser, 1031473

Felix Rothmund, 1073077

Supervisors:Dipl.-Ing. Anna Katharina Fuchs

Dipl.-Ing. Dr. techn. Martin Hagmuller

Graz, February 11, 2015

Abstract

The perfomance of Automatic Speech Recognition (ASR) systems has improved a lot over thelast years due to higher computational power. With ASR making its way into the consumer areaand being used more and more in daily life it should also be available for people with speechdisorders. Furthermore ASR offers the possibility to objectively quantify speech intelligibilityand could therefore help to improve the quality of electrolarynx (EL) speech. In previouswork at the SPSC Lab, EL speech has been applied to a recognition system designed for healthyspeech using Mel Frequency Cepstral Coefficients (MFCC). In this Bachelor thesis we integratedPerceptual Linear Prediction (PLP) into an existing ASR System and evaluated the use of PLPfeatures in comparison to MFCC feature extraction. We found that while comparable resultscan be obtained for healthy speech, PLP feature extraction generally led to a lower recognitionrate for EL speech.

Kurzfassung

Die Genauigkeit von automatischer Spracherkennung (ASR) hat sich aufgrund der steigendenRechenleistung in den letzten Jahren erheblich verbessert. Da ASR deshalb immer mehr imConsumerbereich Anwendung findet und von vielen Menschen im taglichen Leben verwendetwird, sollte diese Technologie auch fur Menschen mit Sprechstorungen zuganglich gemacht wer-den. Desweiteren bietet ASR die Moglichkeit, Sprachverstandlichkeit objektiv zu beurteilenund konnte somit dazu beitragen, die Qualitat von Elektrolarynx-Sprache (EL) zu verbessern.In einer fruheren Arbeit am SPSC wurden Mel Frequency Cepstral Coefficients (MFCC) furdie Erkennung von EL-Sprache getestet. In dieser Arbeit binden wir Perceptual Linear Pre-diction (PLP) in den existierenden Spracherkenner ein und vergleichen diese mit der MFCC-Merkmalsextraktion. Wahrend fur gesunde Sprache vergleichbare Ergebnisse erzielt werdenkonnen, fuhrt PLP fur Elektrolarynx-Sprache im Allgemeinen zu geringeren Worterkennungsraten.

ASR for EL Speech

Contents

1 Introduction 71.1 Glossary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2 Automatic Speech Recognition 92.1 Signal Preparation and Feature Extraction . . . . . . . . . . . . . . . . . . . . . . 9

2.1.1 Mel Frequency Cepstral Coefficients (MFCC) . . . . . . . . . . . . . . . . 102.1.2 Perceptual Linear Prediction (PLP) . . . . . . . . . . . . . . . . . . . . . 112.1.3 PLP with RASTA Filtering . . . . . . . . . . . . . . . . . . . . . . . . . . 12

2.2 Hidden Markhov Model (HMM) . . . . . . . . . . . . . . . . . . . . . . . . . . . 132.3 HTK . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

3 Experimental Setup 163.1 The ELHE Database - Corpus Description . . . . . . . . . . . . . . . . . . . . . . 163.2 The SPSC ASR Engine . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

3.2.1 Including the ELHE Speech Corpus . . . . . . . . . . . . . . . . . . . . . 173.2.2 Generating the Grammar and Transcription files . . . . . . . . . . . . . . 183.2.3 Implementation of the PLP Feature Extraction . . . . . . . . . . . . . . . 193.2.4 Running the Recognizer . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.3 Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

4 Experimental Results and Discussion 214.1 Training with healthy speech (HE) . . . . . . . . . . . . . . . . . . . . . . . . . . 214.2 Training with electrolarynx speech (EL) . . . . . . . . . . . . . . . . . . . . . . . 224.3 Training with healthy and electrolarynx speech (ELHE) . . . . . . . . . . . . . . 22

5 Conclusion 24

February 11, 2015 – ii –

ASR for EL Speech

1Introduction

Automatic speech recognition (ASR) systems are normally designed for healthy speech. Apply-ing disordered speech to those systems without further adjustments will lead to low recognitionrates. As automatic speech recognition is more and more becoming part of daily life throughmobile phones, entertainment devices, smart appliances etc., the technology should be madeaccessible to as many people as possible.Furthermore, speech recognition software could be used in speech training tools for patients withspeech disorders as it allows an objective quantitative measurement of intelligibility [1].

For laryngectomy patients, one possible way to regain their ability to speak is using an electro-larynx (EL). The EL is a medical device that replaces the function of the vocal folds by creatinglow-frequency vibrations in the hypopharynx. The device is usually battery powered and heldto the patients neck (see figure 1.1). Most devices produce constant pitch vibrations between100-200Hz which results in unnatural sounding speech and often low intelligibility [2].

Figure 1.1: The handheld electrolarynx [2]

In previous work at the Signal Processing and Speech Communication Laboratory (SPSC), ELspeech has been applied to a speech recognition system designed for normal, healthy speechusing Mel Frequency Cepstral Coefficients (MFCC) [1].For this thesis we integrated the implementation of Perceptual Linear Prediction (PLP) as

February 11, 2015 – 7 –

1 Introduction

proposed by Dan Ellis [3] into the existing ASR engine at the SPSC Lab and evaluated therecognizer’s performance for the different feature extraction methods.

In chapter 2 we provide a short introduction, explaining the different feature extraction methodsand the statistical model used in our experiments.In chapter 3 we explain the experimental setup, including the database and recognition softwarewe used. In chapter 4 we state and discuss our results. In chapter 5 we summarise our findings.

A short description of this project can be found on the SPSC Lab’s website: https://www.spsc.tugraz.at/student_projects/automatic-speech-recognition-electro-laynx-speech

1.1 Glossary

ASR Automatic Speech Recognition (chapter 2)DCT Discrete Cosine TransformDFT Discrete Fourier Transform

EL Electrolaryx (chapter 1)FFT Fast Fourier TransformHE Healthy

HMM Hidden Markov Model (chapter 2.2)

HTK Hidden Markov Toolkit (chapter 2.3)

MFCC Mel Frequency Cepstral Coefficients (chapter 2.1.1)

LP Linear Prediction (chapter 2.1.2)

PLP Perceptual Linear Prediction (chapter 2.1.2)

RASTA Relative Spectra (chapter 2.1.3)

February 11, 2015 – 8 –

ASR for EL Speech

2Automatic Speech Recognition

ASR systems convert speech into text. The basic idea is to extract a set of features from aspeech signal and to use those along with the transcription to create a statistical model. Thetranscription of unknown speech can then be derived from this model.Mechanisms used to transform a speech signal into a set of compact parameter vectors includeLinear Prediction (LP), PLP and the calculation of MFCCs. As for the statistical modeling,continuous-speech recognition systems with large vocabulary often make use of Hidden MarkovModels (HMMs). Other approaches include Neural Networks and Dynamic Time Warping.ASR systems can be speaker-dependent or speaker-independent. Speaker-dependent systemsinclude a speaker into the training to improve the performance of the system for this specificspeaker. This will improve the recognizer’s performance a lot but is often not possible [4].

2.1 Signal Preparation and Feature Extraction

To be able to build a sophisticated statistical model, a compact representation of the speechsignal is required. Regardless of the methods used to obtain these compact parameter vectors,the signal is divided into overlapping segments typically between 10 and 40 ms (see figure 2.1).The segments are then multiplied by a windowing function w[n]. Usually a hamming window isused:

w[n] = 0.54− 0.46 · cos(

2π(n− 1)

N − 1

)(2.1)

February 11, 2015 – 9 –

2 Automatic Speech Recognition

Figure 2.1: Segmentation of a speech signal [4]

2.1.1 Mel Frequency Cepstral Coefficients (MFCC)

To obtain the MFCCs the frequency axis is warped around the mel scale to simulate the frequencyresponse of the human auditory system. The mel scale (see figure 2.2) is a psychoacousticalscale of pitch perception. It ranges from 0 to 4000 and is commonly computed according to thefollowing formula as defined in the HTKBook [5]:

m = 2595 · log10(1 +f

700) (2.2)

After the signal is transformed into the frequency domain by using the Fast Fourier Transform(FFT) the magnitude coefficients are weighted by a filterbank representing the mel scale (seefigure 2.3).

Figure 2.2: Mel and bark scale (normalized)

February 11, 2015 – 10 –

2 Automatic Speech Recognition

Figure 2.3: Mel scale filterbank [5]

The MFCCs are then obtained by transforming the filterbank amplitudes mj into the cepstraldomain by using the discrete cosine transform (DCT). The DCT is similar to the Discrete FourierTransform (DFT) but uses only cosine functions to represent the signal. With N representingthe number of filterbank channels the coefficients are calculated according to

ci =

√2

N

N∑j=1

mjcos

(iπ

N(j − 0.5)

)(2.3)

Figure 2.4 shows the basic structure of the MFCC method.

speech

Segmentation Hamming |FFT|log Mel scale DCT

MFCC

Figure 2.4: MFCC Feature Extraction

2.1.2 Perceptual Linear Prediction (PLP)

PLP coefficients are obtained by first grouping the frequency bins into critical bands accordingto the bark scale. This step is similar to the frequency warping in mel cepstral analysis. Thebark scale (see figure 2.2) ranges from 1 to 24 and represents the critical bandwidths of hearing.In our PLP implementation the following formula as proposed by Hermansky[6] is used:

b = 6 · asinh(f

600) (2.4)

In the next step the signal is weighted by a simulated equal loudness curve and compressed bytaking the cubic root to approximate the intensity loudness power law.Hermansky [6] proposes the following formula for the equal loudness filter:

E[ω] =ω4(ω2 + 56.8 · 106)

(ω2 + 6.3 · 106)2(ω2 + 0.38 · 109)(2.5)

According to the intensity loudness power law the perceived loudness is equivalent to the cubicroot of the intensity. This can be written as:

y[n] = x[n]0.33 (2.6)

February 11, 2015 – 11 –

2 Automatic Speech Recognition

After the signal has been transformed back into the time domain using the Inverse DiscreteFourier Transform (IDFT) the linear prediction (LP) coefficients are calculated. Linear predic-tion analysis tries to find a good estimation of a sample s(n) based on the linear combinationof the previous samples s(n− 1), s(n− 2), ..., s(n− p).

s(n) = e(n) +

p∑i=1

ai · s(n− i) (2.7)

A good choice of the prediction coefficients ai results in a low prediction error e(n). In LPCanalysis the vocal tract is modeled by an all-pole filter with the following transfer function:

H[z] =1∑p

i=0 aiz−i

(2.8)

To solve the resulting matrix and obtain the parameters ai, typically the Levinson-Durbinrecursion is used. The resulting coefficients ai are then converted to cepstral coefficients.

2.1.3 PLP with RASTA Filtering

The PLP method can be expanded by adding a RASTA-Filter. The basic idea of RASTA-processing is to remove slowly varying frequency components, as the human hearing is relativelyunsensitive to slow changes in speech signals. This is done by transforming the critical-bandpower spectrum into the logarithmic spectrum and then applying a bandpass filter to eachfrequency band. In the logarithmic spectral domain nonlinearities as caused by the recordingequipment or in our case by the EL-device are represented by an additive constant and can thusbe easily filtered. The used filter has the following transfer function:

H[z] = 0.1z4 · 2 + z−1 − z−3 − 2z−4

1− 0.98z−4(2.9)

In the next step the filtered speech is transformed back into the spectral domain and the featurevector is then derived as in conventional PLP[7].Figure 2.5 shows the basic steps for the calculation of PLP and RASTA-PLP features.

speech

Segmentation Hamming FFT Bark scalerasta

processing

EqualLoudness

Curve

IntensityLoudness

PowerIDFT

LinearPrediction

FourierTransform

Cepstral Coefficients

Figure 2.5: PLP Feature Extraction with optional rasta processing

February 11, 2015 – 12 –

2 Automatic Speech Recognition

Figure 2.6 shows the spectral representation of the different feature vectors. It shows thefeatures of the sentence ”Einst stritten sich Nordwind und Sonne” in the spectral domain,spoken by a female speaker using an electrolarynx. The first spectrum is that of the unprocessedspeech signal sampled at 16 kHz from 0 to 8000 Hz. The other three show the respectivecompact feature vectors with reduced spectral resolution. What can be observed is that theconstant spetral components caused by the electrolarynx are most effectively suppressed byusing RASTA-PLP.

Figure 2.6: Spectra of different FE methods

2.2 Hidden Markhov Model (HMM)

Two utterances of the same word will never sound exactly the same but share similarities. Forexample one can easily distinguish a voiced phone from an unvoiced phone but each utterancewill sound different depending on the context, the speaker and the environment. How the speechsignal will look like can only be said with a certain probability [4]. As it is impossible for anyhuman speaker to exactly reproduce an utterance phonetically, speech has to be regarded as astochastic sequence of symbols.In a stochastic process the probability to reach a specific state depends on the previous (andfollowing) states in a non-deterministic way. The probability distribution can be described witha statistical model. If the probability distribution for future states depends on the current stateonly, then this process can be called a Markov process.The so called Hidden Markov Model (HMM) is a Markov process where the system state is notfully observable. The state can not be precisely determined and is thus called hidden state,

February 11, 2015 – 13 –

2 Automatic Speech Recognition

but correlates with the observation. Most modern speech recognition systems with sub-wordrecognition rely on a HMM of some sort.To build the statistical model, we regard speech as a sequence of states. Each state will be heldfor a certain time before the next state is reached. For every discrete time interval a probabilityto change into another state can be given. In most ASR systems these models are linear modelsextended with alterative paths and skipping states depending on the use case.For the recognition process, we assume that the sequence of observed speech vectors corre-sponding to each word or sub-word is generated by a Markov Model. For each time unit t anobservable speech vector ot is generated from the output probability density bi(ot) (see figure2.7). We get the observed output vector O = o1, o2, ..., oT , however the actual underlying statesequence X = x1, x2, x3, ..xT stays unknown.The joint probability is computed by summing the product of the transition probabilities aijand the output probabilities bt over all possible state sequences X = x1, x2, ..., xT :

P (O) =∑X

ax0x1

T∏t=1

bxt(ot)axtxt+1 (2.10)

For continuous speech input several linear HMMs are used in sequence. Each model in thesequence corresponds to the respective symbol (word or sub-word).

1 2 3 4 5 6

observationsequence

O:

o1 o2 o3 o4 o5 o6 o7 o8 o9

a12

a22

a23

a33

a34

a44

a45

a55

a56

b2(o1)b2(o2) b3(o3)

b3(o4)

b4(o5)

b4(o6)

b4(o7)

b5(o8)

b5(o9)

Figure 2.7: Linear Hidden Markov Model and the observable output sequence O = o1, o2, ..., o9

2.3 HTK

The Hidden Markov Model Toolkit (HTK) is a software implementation of the HMM primarilyused for speech processing sytems. It was developed by the Machine Intelligence Laboratory ofthe Cambridge University and is now held by Microsoft. The source code is available online foropen use upon registration [8]. The Toolkit contains a number of useful tools for data prepa-ration, speech analysis and HMM modelling, including tools to generate and edit grammar anddictionary files necessary for speech recognition and synthesis. As shown in figure 2.8 building arecognizer involves four main processing steps: data preparation, training, testing and analysis.Data preparation involves converting the speech data and the associated transcription into thecorrect format for further processing with HTK. There are also tools available to record speechand manually annotate the transcriptions.In training the data is used to build a set of HMMs. HTK offers tools to specify the topology

February 11, 2015 – 14 –

2 Automatic Speech Recognition

Figure 2.8: The fundamentals of HTK [5]

and the characteristics of the HMM. For the actual training process it is possible to adjust thecalculation of the models depending on the size of the database and the kind of transcriptionand label files available.For the recognition, word networks, a pronounciation dictionary and the HMMs are needed.The recognizer converts the word network to a sub-word network and matches the respectiveHMM to each sub-word instance. HTK includes tools to create simple bigram word networksbut also allows higher level grammar.To evaluate the recognizer’s performance, an analysis tool is available. It compares the referencetranscriptions of known speech input with the generated output transcription using a simple al-gorithm that counts substitution, deletion and insertion errors [5].

February 11, 2015 – 15 –

ASR for EL Speech

3Experimental Setup

3.1 The ELHE Database - Corpus Description

The Austrian German parallel electrolarynx speech and healthy speech corpus (ELHE) consistsof both healthy and electrolarynx speech recorded at the SPSC Lab’s recording studio [9]. Fourhealthy male (02M, 04M, 05M and 06M) and three healthy female speakers (01F, 03F and 07F)have been recording up to 503 utterances with healthy speech and again with an EL device.According to [10] there are no significant perceptual differences between EL speech produced bya patient and a healthy person. The sentences are the same for all speakers but half as manyfor the speakers 03F and 06M. Additionally 35 utterances by a patient were recorded (08M).For our experiments we use the same 60 sentences per speaker for testing the ASR engine’sperformance and the rest for training the HMMs. Note that no sentences of 08M are used forthe training process. All 35 utterances are used for testing.

Training Testingkind speaker # of sentences kind speaker # of sentencesHE 01F 443 HE 01F 60

02M 443 02M 6003F 187 03F 6004M 443 04M 6005M 443 05M 6006M 187 06M 6007F 443 07F 60

EL 01F 443 EL 01F 6002M 443 02M 6003F 187 03F 6004M 443 04M 6005M 443 05M 6006M 187 06M 6007F 443 07F 6008M 0 08M 35

Table 3.1: Number of utterances per speaker used for training and testing

February 11, 2015 – 16 –

3 Experimental Setup

3.2 The SPSC ASR Engine

The SPSC Lab’s ASR engine was set up by Juan A. Morales-Cordovilla during his work atTUGraz from June 2012 to December 2014.In this overview we will describe our approach on using the ASR Engine for our experiments.For a more detailed documentation refer to [11].The SPSC Lab’s ASR Engine is built in three main parts, namely the speech database consistingof the recorded speech material and its transcription, the front end used for feature extractionand data preparation implemented in Matlab and the recognition back end implemented withHTK.

3.2.1 Including the ELHE Speech Corpus

The speech data used for testing and training is located in /Speechdata/. In order to use newspeech recordings as a speech database for the ASR System the following folder structure hasto be maintained:/Database_name/Database_kind/Speaker_ID/basename.wav. Note that /Database_kind/ in-dicates whether the folder is used for training or testing. The following picture shows thestructure of the ELHE Database. The ASR Engine also allows to maintain a seperate smallerDatabase for test purposes next to the database with all utterances. To differentiate the two,either _All or _25 is appended to the Database_name, so they can be easily selected via theparameter DBSize.

Speechdata

ELHE

TestEL

01F

02M

03F

...

TestHE

01F

02M

03F

...

TrainEL

01F

02M

03F

...

TrainHE

01F

02M

03F

...

Figure 3.1: Structure of speech data

As our database is relatively small, we chose not to use a seperate smaller Database, so wemoved the recordings in the respective structure (see 3.1) to Speechdata/ELHE_All.To run the recogniser all the subfolders (individual speakers) needed for the experiment have tobe listed at AsrEngine/Recogniser/RecStages/DataBDir.sh. For the different training sce-narios, we had to select which folders and subfolders to use by listing them here.For training with the combined database (TrainEL and TrainHE) we also had to provide thepaths at AsrEngine/Recogniser/RecStages/TrainGen/Train.sh where the file $TempFListis generated. (Note: This is normally handled by the parameter $KTrain, but since we workedwith a combined database we had to do it manually.)

In addition to the wav files two lists with the filenames are needed to select which utter-ances to use for training and testing. The lists have to be placed in AsrEngine/Recognizer/

Recstages/TrainGen/TrainDir/Database_name/Lists/TrListAll_All.txt for training andin AsrEngine/Recognizer/Recstages/TestGen/TestDir/Database_name/Lists/WoTeListAll_

February 11, 2015 – 17 –

3 Experimental Setup

All.txt for testing. The single entries consist of the folder the wav-file is located in and thebasename without extension.In our case the list for Training looks like this:

01F/01 FNS00201

01F/01 FNS00202

01F/01 FNS00203

01F/01 FNS00204

01F/01 FNS00205

...

TrListAll_ All. txt

3.2.2 Generating the Grammar and Transcription files

For generating the transcriptions the following files had to be placed at AsrEngine/ExtraFun/Transcription/Trans/ELHE:

� TrListAll_All.txt

� WoTeListAll_All.txt

� StBNOrtTDic.txt

StBNOrtTDic.txt is the dictionary with all the basenames and the corresponding ortographictranscriptions including umlauts and punctuation characters.In AsrEngine/extraFun/Trascription/Trans/ a Matlab function called ELHETrans.m is re-quired. This can be done by copying one of the existing functions and renaming it. For example:Copy FBKGEDIRHATrans.m then name it ELHETrans.m.Inside the function the necessary code has to be uncommented. E.g. for training the code belowFor Training is needed.In Trans.m at AsrEngine/extraFun/Trascription/ the function is called, so there the namealso has to be ELHETrans.m.Trans.m can now be executed, the resulting files are located in AsrEngine/extraFun/Trascription/

Trans/Res.For training the following output files have to be placed at AsrEngine/Recognizer/Recstages/TrainGen/TrainDir/ELHE:

� Quests.txt

� MonoPhList.txt

� MonoPhSpList.txt

� TrMlfPhTrans.txt

� TrMlfPhTransSp.txt

For testing we copied the following output files to AsrEngine/Recognizer/Recstages/TestGen/TestDir/ELHE:

� WoW2AUDic.txt

� WoWList.txt

� WoMlfWTrans.txt

� WoBiSlfGr.txt

� WoSentSlfGr.txt

February 11, 2015 – 18 –

3 Experimental Setup

3.2.3 Implementation of the PLP Feature Extraction

For the PLP feature extraction we created a Matlab function AsrEngine/MatlabFE/JuanFE/

BaseFE/FEPLP.m based on the function .../FEBase.m which is an implementation of the MFCCfeature extraction method. The implementation of our FEPLP.m is based on the implementationby Dan Ellis [3] .The functions FEPLP.m and FEBase.m are called depending on the $TrOpt2 (PLP or MFCC)inside the function FEEmbBase.m. This Parameter $TrOpt2 can easily be accessed via the mainShell-script AllRec.sh and multiple options can be selected in one go for parallel processing.Note that if additional functions are added to the Front End, the paths have to be added tothe working directory by adding the paths in AsrEngine/MatlabFE/JuanFE/Recognizer/Fun/

AddSMPath.m.We also included the option to do RASTA-filtering. However, this has to be done manually(dorasta=1 in FEPLP.m) as we did not implement another training paramter for this option.The Front End has to compiled again after switching this parameter.

3.2.4 Running the Recognizer

The main tool for running the recognizer on the grid system is AllRec.sh which is located at/AsrEngine/Recogniser/. Before we could start using the system, we had to revise some pathsincluding username, the location of the ASR engine and the speech database. For training theHMMs with both EL and HE speech we set the following parameters in AllRec.sh:

################ Reconition Options ##################

set FEK = FEEmbBase # FE Kind (see Matlab FE)

set SDBase = ELHE # Speech DataBase

set DBSize = All # DataBase Size (25 or All)

set KTrain = Clean # Kind of Train (irrelevant for ELHE database)

set SpDep = 0 # 0 or 1, Speaker In- or Dependent

set Repr = Cep # Kind of Representation (Cep or Spe)

set Stages = (FETr Tr) # Recognition Stages: (FETr, Tr, FETe)

################ FE and Test Parameters ###############

set FE1 = (0) # Sentence Kind: All, Read, Sponteneous (0,1,2)

set FE2 = (MFCC PLP) # FE Kind for FEEmbBase

set FE3 = (x) # just for reference

set FE4 = (ELHE) # just for reference

set TE1 = (0) # Wo or Ph recognition (0,1). FEMatlab options

set TE2 = (0) # Word Penalty recognition (-20, 0 ,20)

As mentioned before, we didn’t implement RASTA-PLP as an additional feature extractionmethod but simply added the transformation and filtering to our PLP function in Matlab andcompiled the FEDir once again. We then set the parameter FE3 to Rasta to make sure we havethe correct reference for testing and not to overwrite the HMMs created with PLP features.

################ FE and Test Parameters ###############

set FE1 = (0) # Sentence Kind: All, Read, Sponteneous (0,1,2)

set FE2 = (PLP) # FE Kind for FEEmbBase

set FE3 = (Rasta) # just for reference

set FE4 = (ELHE) # just for reference

set TE1 = (0) # Wo or Ph recognition (0,1). FEMatlab options

set TE2 = (0) # Word Penalty recognition (-20, 0 ,20)

February 11, 2015 – 19 –

3 Experimental Setup

We started training in parallel using the command ./AllRec.sh p. After the programm isfinished it is possible to get the log files to check for warnings and errors using ./AllRec.sh e.For testing we first had to indicate which HMMs to use. This is done in the function TEParam.m

at /AsrEngine/MatlabFE/JuanFE/Recognizer/Fun/. Here we simply copied the string con-taining the feature extraction and test parameters for example 0PLPRastaELHE. Logically it wasnecessary to compile the FEDir after editing the test tarameters. For future experiments wewould recommend that this is done automatically to avoid compiling the Front End for everytraining scenario. After setting the parameter Stages to (FETe) we ran the feature extractionof the test material using once again ./AllRec.sh p. Following we obtained the results by using./AllRec.sh r.

The biggest part of the necessary adjustments for different experiments, was to specify thesubfolders of the speech database at respective places in the code (AllRec.sh, DataBDir.sh,Train.sh). As this can be unituitive and time consuming we suggest that the paths are onlystated at one part in the code and then beeing forwarded to the other parts. It would alsobe beneficial to the clearness and comprehensibility of the programm to store the dictionaryin the database folder together with the audio files and generate the word lists, grammar andtranscription files automatically.

3.3 Experiments

To evaluate the performance of PLP features in comparison to MFCC features we trained therecognizer with healthy speech only, EL speech only and the combined database. We evaluatedeach training scenario with both healthy and EL speech. All training and testing was performedusing all of the three discussed feature extraction methods, namely MFCC, PLP and RASTA-PLP.All speakers except for 08M were included in the training process.For the feature extraction we used a frame length of 32 ms, a frame shift of 10 ms, 26 triangularfilters for bark and mel spectrum, 13 MFCCs and a model order of 12 for the LP. Also deltaand delta-delta features were appended. We used a bigram language model derived from thetest sentences with low perplexity.

February 11, 2015 – 20 –

ASR for EL Speech

4Experimental Results and Discussion

In tables 4.1, 4.2 and 4.3 the word accuracy rate WAcc is listed for the different speakers andtraining scenarios. The WAcc rate is calculated for each sentence by deducting deletions D,insertions I and subtractions S from the number of words in each sentence N and is given inpercent of N :

WAcc =N − S −D − I

N· 100% (4.1)

4.1 Training with healthy speech (HE)

First we trained the recognizer with HE speech only. Note that all speakers except of speaker08M have been included in the training process.As expected, we obtained very good results for the matched case (HE speech applied to HEmodel) for all three feature extraction methods with an average WAcc of 99.61 % for MFCCfeatures, 99.55% for PLP features and 98.83 % for RASTA-PLP respectively.The performance decreased significantly for the mismatched case (EL speech applied to HEmodel). While we obtained mediocre recognition rates for the MFCC features, the recognitionrates are especially low for PLP features (0 - 2.28 %). Only speaker 08M stands out withrelatively good 20.66 %. RASTA-filtering significantly improves the performance of the PLPfeatures, but the MFCC features still outperform the RASTA-PLP features.As already established in earlier experiments [1], the speaker 03F stands out with poor perfor-mance. This is because she is the least experienced with the EL device and produces the leastintelligible speech. On the other hand, speaker 02M and the EL patient 08M score relativelywell, being the most experienced with the EL device.

February 11, 2015 – 21 –

4 Experimental Results and Discussion

Training with HE

FE-kind MFCC PLP RASTA-PLP

speaker EL HE EL HE EL HE

01F 51.90 99.54 1.83 99.39 28.92 99.70

02M 59.82 99.85 1.83 99.70 55.40 99.85

03F 3.96 100.00 0.76 100.00 3.96 98.93

04M 23.29 99.85 0.91 99.70 14.00 99.09

05M 14.16 98.17 0.00 99.09 11.72 98.63

06M 19.48 100.00 2.28 99.85 19.33 99.54

07F 15.83 99.85 2.28 99.09 1.07 96.04

08M 57.85 - 20.66 - 31.82 -

average 35.18 99.61 3.81 99.55 20.78 98.83

Table 4.1: Word Accuracy Rate WAcc in percent for HE training

4.2 Training with electrolarynx speech (EL)

For this experiment we trained the recognizer with EL speech only. Again, all speakers exceptof speaker 08M have been included in the training process.The recognition rates for the matched case (EL speech applied to EL model) are still very goodfor the MFCC features with a WAcc of over 90% but significantly lower for the PLP features(75.59%). Surprisingly, when applying RASTA-filtering the results slightly decrease.The results we obtained for the mismatched case (HE speech applied to EL model) were low forall three feature extraction methods, especially for the PLP features.

Training with EL

FE-kind MFCC PLP RASTA-PLP

speaker EL HE EL HE EL HE

01F 95.89 11.26 86.76 1.98 84.17 4.57

02M 99.54 7.15 95.13 0.91 91.48 3.81

03F 74.58 11.87 40.79 2.44 51.75 4.57

04M 95.89 38.51 88.74 2.28 82.95 9.13

05M 94.82 4.11 87.06 0.91 80.82 5.02

06M 96.19 3.81 82.50 1.98 72.60 3.81

07F 95.74 13.85 83.26 1.52 77.78 6.24

08M 78.93 - 40.50 - 19.01 -

average 91.45 12.94 75.59 1.72 70.07 5.31

Table 4.2: Word Accuracy Rate WAcc in percent for EL training

4.3 Training with healthy and electrolarynx speech (ELHE)

Finally we trained the recognizer using the combined database. Testing with healthy speechwe obtained very good results, comparable to those obtained when training with only healthyspeech. When testing with electrolarynx speech the rates are still reasonably high with up to87.44% using MFCC and 83.26% using PLP. RASTA filtering did not improve the performanceof PLP feature extraction. For all training scenarios the speaker-independent case (08M) isparticularly interesting. Naturally, one would expect speaker 08M to perform worse than the

February 11, 2015 – 22 –

4 Experimental Results and Discussion

Training with ELHE

FE-kind MFCC PLP RASTA-PLP

speaker EL HE EL HE EL HE

01F 95.28 99.09 91.93 98.48 84.93 97.56

02M 97.56 98.78 98.17 99.24 94.22 98.02

03F 63.77 99.54 54.64 100.00 45.21 93.46

04M 95.28 100.00 93.00 100.00 84.78 97.56

05M 94.52 98.93 90.11 99.09 81.13 93.91

06M 90.56 99.54 90.87 99.54 79.91 98.93

07F 96.04 98.02 79.15 99.54 77.17 91.32

08M 66.53 - 68.18 - 35.95 -

average 87.44 99.13 83.26 99.41 72.91 95.82

Table 4.3: Word Accuracy Rate WAcc in percent for ELHE training

other speakers included in the training. This is true for the matched case (Training with EL).However for the mismatched case (Training with HE), he outperforms the other speakers byaround 20% when using the PLP features. This might be due to the fact that this speaker is apatient and has the most experience with an EL device and therefore produces the most naturalsounding speech. He also uses a different EL device than the other speakers.

February 11, 2015 – 23 –

ASR for EL Speech

5Conclusion

In this thesis we compared different feature extraction methods for the use in ASR systemsapplied to EL speech. In particular, we evaluated the performance of PLP features with andwithout RASTA processing compared to MFCC features.For this we used the ASR system developed and maintained at SPSC Lab and the parallel ELHEdatabase.We found out that MFCC feature extraction generally led to higher recognition rates than PLP.While comparable results can be achieved for matched training and testing, the perfomance ofPLP features significantly decreases for mismatched cases. Also RASTA filtering did not consis-tently improve the perfomance of the recogniser, although slightly better results were obtainedfor the mismatched cases.Even though there is a great mismatch between the two domains, high word accuracy rates canbe achieved using the combined corpus for both MFCC and PLP feature extraction.

February 11, 2015 – 24 –

ASR for EL Speech

Bibliography

[1] A. Fuchs, J. Morales-Cordovilla, and M. Hagmuller, “ASR for electro-laryngeal speech,” inProc. ASRU, Olomouc, Czech Republic, 2013.

[2] J. Bronzino, The biomedical engineering handbook 2, The electrical engineering handbookseries. CRC Press, 2000.

[3] D. P. W. Ellis, “PLP and RASTA (and MFCC, and inversion) in Matlab,”2005, online web resource, accessed in January 2015. [Online]. Available: http://www.ee.columbia.edu/∼dpwe/resources/matlab/rastamat/

[4] I. Rogina, Sprachliche Mensch-Maschine-Kommunikation, 2005.

[5] S. J. Young, D. Kershaw, J. Odell, D. Ollason, V. Valtchev, and P. Woodland, The HTKBook Version 3.4. Cambridge University Press, 2006.

[6] H. Hermansky, “Perceptual linear predictive (PLP) analysis of speech,” J. Acoust. Soc.Am., 1990.

[7] H. Hermansky and N. Morgan, “RASTA Processing of Speech,” IEEE Transactions onSpeech and Audio Processing, 1994.

[8] “HTK Website,” online web resource, accessed in January 2015. [Online]. Available:http://htk.eng.cam.ac.uk/

[9] A. K. Fuchs and M. Hagmuller, “A german parallel electro-larynx speech – healthy speechcorpus,” in 8th International Workshop on Models and Analysis of Vocal Emissions forBiomedical Applications, Firenze University Press. Florence, Italy: Firenze UniversityPress, 12 2013, pp. 55 – 58.

[10] M. Hagmuller, “Speech enhancement for disordered and substitution voices,” Ph.D. Thesis,Graz University of Technology, 2009.

[11] J. Morales Cordovilla and M. Hagmuller, “AsrEngine Documentation,” Signal Processingand Speech Communication Laboratory, Graz University of Technology, Tech. Rep., 2014.

February 11, 2015 – 25 –