arXiv:2011.09631v1 [eess.AS] 19 Nov 2020


UNIVERSAL MELGAN: A ROBUST NEURAL VOCODER FOR HIGH-FIDELITY WAVEFORM GENERATION IN MULTIPLE DOMAINS

Won Jang¹, Dan Lim², Jaesam Yoon¹

¹Kakao Enterprise Corp., Seongnam, Korea
²Kakao Corp., Seongnam, Korea

ABSTRACT

We propose Universal MelGAN, a vocoder that synthesizes high-fidelity speech in multiple domains. To preserve sound quality when the MelGAN-based structure is trained with a dataset of hundreds of speakers, we added multi-resolution spectrogram discriminators to sharpen the spectral resolution of the generated waveforms. This enables the model to generate realistic waveforms for multiple speakers by alleviating the over-smoothing problem in the high-frequency band of the large-footprint model. Because the waveform and spectrogram are discriminated only during training, our structure generates signals close to ground-truth data without reducing the inference speed. The model achieved the best mean opinion score (MOS) in most scenarios using ground-truth mel-spectrograms as input. In particular, it showed superior performance in unseen domains with regard to speaker, emotion, and language. Moreover, in a multi-speaker text-to-speech scenario using mel-spectrograms generated by a transformer model, it synthesized high-fidelity speech with a MOS of 4.22. These results, achieved without external domain information, highlight the potential of the proposed model as a universal vocoder.

Index Terms: waveform generation, MelGAN, universal vocoder, robustness, text-to-speech

1. INTRODUCTION

Vocoders were originally used for speech compression in the field of communication. Recently, vocoders have been utilized in various fields such as text-to-speech[1, 2, 3, 4], voice conversion[5], and speech-to-speech translation[6]. Neural vocoders generate human-like voices using neural networks, instead of using traditional methods that produce audible artifacts [7, 8, 9].

Recently, it has been demonstrated that vocoders exhibit superior performance in generation speed and audio fidelity when trained with single-speaker utterances. However, some models have difficulty generating natural sounds in multiple domains such as speakers, languages, or expressive utterances. The ability of these models can be evaluated by the sound quality when the model is trained on data from multiple speakers and by the sound quality in unseen domains (from an out-of-domain source). A vocoder that can generate high-fidelity audio in various domains, regardless of whether the input has been encountered during training or has come from an out-of-domain source, is usually called a universal vocoder[10, 11].

MelGAN[12] is a vocoder based on generative adversarial networks (GANs)[13]. It is a lightweight model that is robust to unseen speakers but yields lower fidelity than popularly employed models [14, 15, 16]. MelGAN alleviates the metallic sound that occurs mainly in unvoiced and breathy speech segments through multi-scale discriminators that receive waveforms at different scales as inputs. However, it has not been implemented efficiently for learning with multiple speakers, as required for a universal vocoder.

In this study, we propose Universal MelGAN. The audible artifacts in waveforms generated by the original MelGAN appear as an over-smoothing problem, visible as a non-sharp spectrogram. We added multi-resolution spectrogram discriminators to the model to address this problem in the frequency domain. Our multi-scale discriminators enable fine-grained spectrogram prediction by discriminating both waveforms and spectrograms. In particular, they alleviate the over-smoothing problem in the high-frequency band of the large-footprint model, enabling the generation of realistic multi-speaker waveforms.

To evaluate the performance of the proposed model, we compare it with full-band MelGAN (FB-MelGAN)[17] as a baseline and with two other vocoders: WaveGlow[16] and WaveRNN[10]. We designed experiments in both Korean and English for language independence. For evaluation, we prepared multi-speaker utterances that included unseen domain scenarios, such as new speakers, emotions, and languages.

The evaluation results indicate that the proposed model achieved the best mean opinion score (MOS) in most scenarios and efficiently preserved fidelity for unseen speakers. In addition, the evaluations show that the model efficiently preserves the original speech, even in challenging domains such as expressive utterances and unseen languages. In multi-speaker text-to-speech scenarios, our model generates high-fidelity waveforms with a high MOS and outperforms the compared vocoders. These results, obtained without any external domain information, suggest the potential of the proposed model as a universal vocoder.

2. RELATED WORK

MelGAN[12] is a GAN-based lightweight vocoder in which the generator comprises transposed convolution layers for upsampling and a stack of residual blocks for effective conversion. Multiple discriminators are trained with waveforms at different scales to operate in different ranges. Recently, a modified architecture called FB-MelGAN[17] with improved fidelity has been proposed.

WaveGlow[16] can directly maximize the likelihood of data based on a normalizing flow. A chain of flows transforms simple distributions (e.g., isotropic Gaussian) into the desired data distribution. It has been shown[18] that, in terms of speaker generalization, WaveGlow obtained higher objective scores than other models.

WaveRNN[15] is an autoregressive model that generates waveforms using recurrent neural network (RNN) layers. It has been demonstrated that WaveRNN preserves sound quality in unseen domains[10]. The robustness of WaveRNN using speaker representations has been discussed[19].

arXiv:2011.09631v2 [eess.AS] 4 Mar 2021

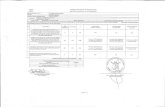

(a) Generator (b) Multi-scale waveform and spectrogram discriminators

Fig. 1. Architecture of Universal MelGAN.

3. DESCRIPTION OF THE PROPOSED MODEL

3.1. FB-MelGAN

FB-MelGAN[17] is used as the baseline in this study. The advantages of FB-MelGAN include pre-training of the generator, an increased receptive field of the residual stacks, and the application of a multi-resolution short-time Fourier transform (STFT) loss[20] as an auxiliary loss. These modifications are effective in achieving better fidelity and training stability.

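The multi-resolution STFT loss is the sum of multiple spectrogram losses calculated with different STFT parameter sets. It comprises the spectral convergence loss $L^m_{sc}(\cdot, \cdot)$ and the log STFT magnitude loss $L^m_{mag}(\cdot, \cdot)$. These objectives are defined as follows:

$$L^m_{sc}(x, \hat{x}) = \frac{\big\| \, |\mathrm{STFT}_m(x)| - |\mathrm{STFT}_m(\hat{x})| \, \big\|_F}{\big\| \, |\mathrm{STFT}_m(x)| \, \big\|_F} \tag{1}$$

$$L^m_{mag}(x, \hat{x}) = \frac{1}{N} \big\| \log |\mathrm{STFT}_m(x)| - \log |\mathrm{STFT}_m(\hat{x})| \big\|_1 \tag{2}$$

$$L_{aux}(G) = \frac{1}{M} \sum_{m=1}^{M} \mathbb{E}_{x, \hat{x}} \left[ L^m_{sc}(x, \hat{x}) + L^m_{mag}(x, \hat{x}) \right] \tag{3}$$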

where $\| \cdot \|_F$ and $\| \cdot \|_1$ denote the Frobenius and L1 norms, and $M$ and $N$ denote the number of STFT parameter sets and the number of elements in the STFT magnitude, respectively. $|\mathrm{STFT}_m(\cdot)|$ denotes the STFT magnitude of the m-th STFT parameter set. The loss is used to minimize distances between the real data $x$ and predicted data $\hat{x} = G(c)$, using the generator $G$. The overall objectives with auxiliary loss are defined as follows:

$$L_G(G, D) = L_{aux}(G) + \frac{\lambda}{K} \sum_{k=1}^{K} \mathbb{E}_{\hat{x}} \left[ (D_k(\hat{x}) - 1)^2 \right] \tag{4}$$

$$L_D(G, D) = \frac{1}{K} \sum_{k=1}^{K} \left( \mathbb{E}_{x} \left[ (D_k(x) - 1)^2 \right] + \mathbb{E}_{\hat{x}} \left[ D_k(\hat{x})^2 \right] \right) \tag{5}$$

where $c$ denotes the mel-spectrogram and $K$ refers to the number of discriminators $D_k$. The balancing parameter $\lambda$ weights the adversarial loss relative to the auxiliary loss so that both are optimized simultaneously.
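As an illustration of Eqs. (1) to (3), the following is a minimal sketch of the multi-resolution STFT loss for a single pair of signals, written with the Python standard library only. The helper names, the Hann window, the magnitude floor `eps`, the toy signals, and the three (FFT size, hop) parameter sets are all assumptions made for this example; they are not the settings used in the paper, and a practical implementation would use an FFT library rather than a naive DFT.

```python
import cmath
import math


def stft_magnitude(x, n_fft, hop, eps=1e-7):
    """Magnitude STFT with a Hann window; returns a list of frames (lists of bins)."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / n_fft) for n in range(n_fft)]
    frames = []
    for start in range(0, len(x) - n_fft + 1, hop):
        seg = [x[start + n] * window[n] for n in range(n_fft)]
        mags = []
        # Naive DFT over the non-negative frequency bins (acceptable for a tiny demo).
        for k in range(n_fft // 2 + 1):
            acc = sum(seg[n] * cmath.exp(-2j * math.pi * k * n / n_fft) for n in range(n_fft))
            mags.append(max(abs(acc), eps))  # floor (an assumption) keeps log() finite
        frames.append(mags)
    return frames


def spectral_convergence(mag_x, mag_y):
    """Eq. (1): Frobenius norm of the difference over the Frobenius norm of the target."""
    num = math.sqrt(sum((a - b) ** 2 for fx, fy in zip(mag_x, mag_y) for a, b in zip(fx, fy)))
    den = math.sqrt(sum(a ** 2 for fx in mag_x for a in fx))
    return num / den


def log_magnitude(mag_x, mag_y):
    """Eq. (2): L1 norm of the log-magnitude difference, divided by the element count N."""
    diffs = [abs(math.log(a) - math.log(b)) for fx, fy in zip(mag_x, mag_y) for a, b in zip(fx, fy)]
    return sum(diffs) / len(diffs)


def aux_loss(x, x_hat, param_sets):
    """Eq. (3) for one (x, x_hat) pair: average over the M STFT parameter sets."""
    total = 0.0
    for n_fft, hop in param_sets:
        mag_x = stft_magnitude(x, n_fft, hop)
        mag_y = stft_magnitude(x_hat, n_fft, hop)
        total += spectral_convergence(mag_x, mag_y) + log_magnitude(mag_x, mag_y)
    return total / len(param_sets)


# Hypothetical toy signals and parameter sets (not the paper's settings).
x = [math.sin(2 * math.pi * 5 * t / 128) for t in range(128)]
x_hat = [0.9 * v for v in x]
PARAM_SETS = [(16, 4), (32, 8), (64, 16)]
loss_same = aux_loss(x, x, PARAM_SETS)      # identical signals give zero loss
loss_diff = aux_loss(x, x_hat, PARAM_SETS)  # a scaled copy gives a positive loss
```

The expectation over data in Eq. (3) would correspond to averaging `aux_loss` over a batch of such pairs.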

3.2. Universal MelGAN: Improvements

We confirmed that FB-MelGAN trained on the utterances of hundreds of speakers generated waveforms of unsatisfactory sound quality, unlike when trained on a single speaker’s utterances. In FB-MelGAN, multi-scale discriminators alleviate this degradation problem. However, in our multi-speaker experiment, metallic sounds were discernible, especially in unvoiced and breathy segments.

To trade inference speed for quality, we increased the size of the hidden channels in the generator by four times and added gated activation units (GAUs)[21] to the last layers of each residual stack. Although the expansion of the nonlinearity improved the average quality for multiple speakers, the over-smoothing problem appeared in the high-frequency band, accompanied by audible artifacts.

We assumed that discriminators in the temporal domain may not be sufficient for a problem in the frequency domain. To solve the problem, we propose multi-resolution spectrogram discriminators that extend the spectrogram discriminator[22]. The objectives for Universal MelGAN are updated as follows:

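$$L'_G(G, D) = L_{aux}(G) + \frac{\lambda}{K + M} \left( \sum_{k=1}^{K} \mathbb{E}_{\hat{x}} \left[ (D_k(\hat{x}) - 1)^2 \right] + \sum_{m=1}^{M} \mathbb{E}_{\hat{x}} \left[ (D^s_m(|\mathrm{STFT}_m(\hat{x})|) - 1)^2 \right] \right) \tag{6}$$

$$L'_D(G, D) = \frac{1}{K + M} \left( \sum_{k=1}^{K} \left( \mathbb{E}_{x} \left[ (D_k(x) - 1)^2 \right] + \mathbb{E}_{\hat{x}} \left[ D_k(\hat{x})^2 \right] \right) + \sum_{m=1}^{M} \left( \mathbb{E}_{x} \left[ (D^s_m(|\mathrm{STFT}_m(x)|) - 1)^2 \right] + \mathbb{E}_{\hat{x}} \left[ D^s_m(|\mathrm{STFT}_m(\hat{x})|)^2 \right] \right) \right) \tag{7}$$

where $D^s_m$ denotes a spectrogram discriminator attached to the multi-resolution STFT module. Each m-th model uses the previously calculated spectrogram to minimize the m-th STFT loss.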
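To make the structure of Eqs. (6) and (7) concrete, the following sketch computes both objectives from lists of discriminator outputs. It treats the K waveform discriminators and the M spectrogram discriminators uniformly, which is how the shared 1/(K+M) factor works. The function names and the example scores are hypothetical, and each discriminator is assumed to return a flat list of scalar scores; only λ = 2.5 is taken from the model settings.

```python
def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


def generator_adv_loss(fake_scores_per_disc):
    """Adversarial term of Eq. (6): E[(D(x_hat) - 1)^2] averaged over all K + M discriminators."""
    return mean([mean([(s - 1.0) ** 2 for s in scores]) for scores in fake_scores_per_disc])


def generator_loss(aux, fake_scores_per_disc, lam=2.5):
    """Eq. (6): auxiliary STFT loss plus the lambda-weighted adversarial term."""
    return aux + lam * generator_adv_loss(fake_scores_per_disc)


def discriminator_loss(real_scores_per_disc, fake_scores_per_disc):
    """Eq. (7): E[(D(x) - 1)^2] + E[D(x_hat)^2] averaged over all K + M discriminators."""
    terms = [
        mean([(r - 1.0) ** 2 for r in real]) + mean([f ** 2 for f in fake])
        for real, fake in zip(real_scores_per_disc, fake_scores_per_disc)
    ]
    return mean(terms)


# Hypothetical scores: two waveform discriminators (K = 2) followed by
# one spectrogram discriminator (M = 1), two outputs each.
fake_scores = [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]
real_scores = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]

g_loss = generator_loss(aux=0.2, fake_scores_per_disc=fake_scores)
d_loss = discriminator_loss(real_scores, fake_scores)
```

Because the spectrogram discriminators only add terms to the training objectives, the generator used at inference time is unchanged, which is consistent with the claim that inference speed is not reduced.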

Fig. 1 shows the architecture of Universal MelGAN. The waveforms generated by the large-footprint generator in Fig. 1(a) are discriminated at multiple scales, for both the waveform and the spectrogram, in Fig. 1(b).


Fig. 2. Comparison of spectrograms of the generated waveforms in the 6 to 12 kHz bands. (Each waveform has a 24 kHz sampling rate.)

In Fig. 2, we compare the high-frequency bands of the generated waveforms. Although the large-footprint model of the baseline produces improved resolution, it still faces an over-smoothing problem, especially in high bands above 9 kHz. The proposed model not only alleviates this problem (red) but also, in some segments, generates harmonic shapes that are not present in the ground truth (yellow).

4. EXPERIMENTS

We designed experiments in both Korean and English. During training, we used reading-style, studio-quality internal datasets with 62 speakers and 265k utterances in Korean. We also used the reading-style open datasets LJSpeech-1.1[23] and LibriTTS (train-clean-360)[24], with 905 speakers and 129k utterances in English. To accurately evaluate the robustness, we prepared test sets that included various seen and unseen domains, as shown in Table 1.

To evaluate the seen domain (i.e., speaker), we considered two scenarios: a speaker with relatively more utterances and a speaker with relatively fewer utterances in the total training set. To evaluate whether the generated waveforms of a speaker trained with fewer utterances also preserved the sound quality well, the training set comprised a relatively large single-speaker set (K1 for Korean, E1 for English) and a multi-speaker set with a small amount of data per speaker (K2 for Korean, E2 for English). Each speaker’s test set was used to evaluate the sound quality.

To evaluate the unseen domains, three scenarios that were not included in the training data were considered: speaker, emotion, and language. We prepared utterances of unseen speakers to evaluate speaker robustness (K3 for Korean, E3 for English). We included emotional utterances with variations such as happiness, sadness, anger, fear, disgust, and sports casting in the evaluation sets (K4 for Korean, E4 for English). Ten unseen languages were used to evaluate language robustness (M1 for both languages). We evaluated the universality of each model using the utterances of these unseen domains.

4.1. Data configurations

All speech samples were resampled to a rate of 24 kHz. We applied a high-pass filter at 50 Hz and normalized the loudness to -23 LUFS. 100-band log-mel-spectrograms covering the 0 to 12 kHz frequency band were extracted using a 1024-point Fourier transform, a 256-sample frame shift, and a 1024-sample frame length. All spectrograms were normalized utterance-wise to have a mean of 0 and a variance of 1.
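The feature settings above can be summarized as a small configuration, together with a sketch of the utterance-wise normalization. The constant names and the `normalize_utterance` helper are hypothetical, and the sketch assumes that the mean and variance are pooled over all frames and mel bands of one utterance; the text does not say whether the statistics are pooled or computed per band.

```python
import math

# Values taken from the data configuration; the names are illustrative.
SAMPLE_RATE = 24_000     # Hz
N_FFT = 1024             # FFT points
HOP_LENGTH = 256         # frame shift in samples
WIN_LENGTH = 1024        # frame length in samples
N_MELS = 100             # mel bands
FMIN, FMAX = 0, 12_000   # Hz

frames_per_second = SAMPLE_RATE / HOP_LENGTH   # 93.75 frames per second
hop_ms = 1000 * HOP_LENGTH / SAMPLE_RATE       # about 10.67 ms per frame


def normalize_utterance(spec):
    """Scale one utterance's log-mel-spectrogram to zero mean and unit variance.

    `spec` is a list of frames, each a list of mel-band values. Statistics are
    pooled over the whole utterance (an assumption, see the note above).
    """
    values = [v for frame in spec for v in frame]
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / len(values)
    std = math.sqrt(var) or 1.0  # guard against a constant spectrogram
    return [[(v - mu) / std for v in frame] for frame in spec]


normalized = normalize_utterance([[1.0, 2.0], [3.0, 4.0]])
```

With these settings, the upper band edge of 12 kHz coincides with the Nyquist frequency of the 24 kHz signal, so the mel features span the full band.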

Table 1. Datasets for evaluation.

Korean datasets

| Index | Name | Remarks |
|-------|------|---------|
| K1 | Internal dataset #1 | Seen: single speaker |
| K2 | Internal dataset #2 | Seen: multi-speaker |
| K3 | Internal dataset #3 | Unseen: speaker |
| K4 | Internal dataset #4 + AICompanion-Emotion¹ | Unseen: emotion |

English datasets

| Index | Name | Remarks |
|-------|------|---------|
| E1 | LJSpeech-1.1[23] | Seen: single speaker |
| E2 | LibriTTS(train-clean-360)[24] | Seen: multi-speaker |
| E3 | LibriTTS(test-clean)[24] | Unseen: speaker |
| E4 | BlizzardChallenge2013[25] | Unseen: emotion |

Multiple language dataset

| Index | Name | Remarks |
|-------|------|---------|
| M1 | CSS10[26] | Unseen: language |

¹ Available at: http://aicompanion.or.kr/nanum/tech/data_introduce.php?idx=45

4.2. Model settings

Settings that are not specified follow the original papers or the implementations listed in the footnotes.

When training WaveGlow[16]² and WaveRNN[10]³, GitHub implementations were used for reproducibility. For WaveGlow, we trained up to 1M steps with all settings the same as those in the implementation. For inference, a noise sampling parameter of σ = 0.6 was used. For WaveRNN, we configured the model to predict 10-bit µ-law samples. Every 100k steps, we reduced the learning rate by half, starting from the initial value of 4e-4, and trained up to 500k steps.

In FB-MelGAN[17], the upsampling rates were set to 8, 8, and 4 to match the hop size of 256. On our dataset, stable training was achieved by reducing the initial learning rate of the discriminators to 5e-5. The batch size was set to 48. We trained up to 700k steps using the Adam optimizer.
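The relation between the upsampling rates and the hop size is simple arithmetic: the generator must emit one hop of samples per mel frame, so the product of the rates has to equal the hop size. A minimal check, with illustrative names:

```python
import math

UPSAMPLE_RATES = (8, 8, 4)   # per-stage upsampling factors from the text
HOP_SIZE = 256               # samples per mel-spectrogram frame

total_upsampling = math.prod(UPSAMPLE_RATES)


def output_samples(n_frames):
    """Number of waveform samples generated from n_frames mel frames."""
    return n_frames * total_upsampling


samples_for_100_frames = output_samples(100)
```

At the 24 kHz sampling rate used here, 100 frames therefore correspond to 25,600 samples, or roughly 1.07 seconds of audio.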

In Universal MelGAN, the channel sizes of the input layer and of the layers inside the three residual stacks in the generator were increased by four times; they were therefore changed to 2048, 1024, 512, and 256, respectively. A GAU was added inside each residual stack, and the channel size of the preceding layer was doubled to maintain the same output size after GAU processing. Each spectrogram discriminator has a structure similar to the waveform discriminator (the same as the discriminator used in FB-MelGAN), as shown in Fig. 1(b). The 1-d convolutions were replaced with 2-d convolutions, and all layers have a channel size of 32, 1 group, and a dilation of 1. The last two layers have a kernel size of 3, and all other layers have a kernel size of 9. During operation, the length of the temporal domain was reduced three times with a stride of 2. Leaky ReLU with α = 0.2 was used for each activation. The balancing parameter λ for all discriminators was set to 2.5. We trained up to 700k steps with the same learning rate, training strategy, batch size, and optimizer as in FB-MelGAN.

² https://github.com/NVIDIA/waveglow
³ https://github.com/bshall/UniversalVocoding

Table 2. MOS results of each model for seen speakers.

Trained with Korean utterances

| Model | Single speaker (K1) | Multi-speaker (K2) |
|-------|---------------------|--------------------|
| WaveGlow | 3.42±0.08 | 3.10±0.07 |
| WaveRNN | 3.97±0.08 | 3.42±0.08 |
| FB-MelGAN | 3.25±0.09 | 2.72±0.09 |
| Ours | 4.19±0.09 | 4.05±0.08 |
| Recordings | 4.33±0.08 | 4.23±0.08 |

Trained with English utterances

| Model | Single speaker (E1) | Multi-speaker (E2) |
|-------|---------------------|--------------------|
| WaveGlow | 3.65±0.15 | 3.27±0.19 |
| WaveRNN | 3.85±0.14 | 3.70±0.15 |
| FB-MelGAN | 3.62±0.16 | 3.37±0.17 |
| Ours | 3.81±0.15 | 3.71±0.15 |
| Recordings | 3.89±0.16 | 3.79±0.16 |
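The Universal MelGAN settings in Section 4.2 involve three pieces of arithmetic that a short sketch can make explicit: the fourfold channel widening, why the layer feeding a GAU needs twice the channels, and how three stride-2 reductions shorten the temporal axis. The sketch assumes the common tanh-sigmoid gate from [21] applied to two halves of the channel vector, and "same"-style padding so that each stride-2 step rounds up; the function names are hypothetical and the paper does not spell out these details.

```python
import math

# Base sizes implied by dividing the stated widened sizes by four.
BASE_CHANNELS = (512, 256, 128, 64)
WIDENED_CHANNELS = tuple(4 * c for c in BASE_CHANNELS)


def gated_activation(channels):
    """tanh(a) * sigmoid(b), where a and b are the two halves of the channel vector."""
    half = len(channels) // 2
    a, b = channels[:half], channels[half:]
    return [math.tanh(u) / (1.0 + math.exp(-v)) for u, v in zip(a, b)]


# The gate halves the channel count, so the preceding layer is widened to 2x.
target_channels = 4                      # hypothetical toy size
pre_gau = [0.5] * (2 * target_channels)  # output of the doubled preceding layer
post_gau = gated_activation(pre_gau)     # back to target_channels values


def reduced_length(n_frames, stride=2, times=3):
    """Temporal length after repeated strided layers (padding assumed to round up)."""
    for _ in range(times):
        n_frames = math.ceil(n_frames / stride)
    return n_frames


frames_after_discriminator = reduced_length(64)
```

Under these assumptions, a 64-frame spectrogram is reduced to 8 frames along the temporal axis by the time it reaches the last discriminator layers.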

5. RESULTS

We conducted MOS assessments in which listeners scored naturalness from 1 (negative) to 5 (positive) for each sample. Ten randomly sampled utterances were prepared for each scenario and model, and 150 scores were collected to calculate the mean and 95% confidence intervals. Fifteen native listeners participated in the Korean evaluation. For the English evaluation, we used a crowdsourced evaluation via Amazon Mechanical Turk, with more than 15 workers from the US for each scenario.
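A MOS entry of the form "mean ± interval" can be computed from the collected scores as sketched below. The sketch assumes the usual normal approximation (z = 1.96) for the 95% confidence interval, which the text does not confirm, and the 150 scores are made up for illustration.

```python
import math
import statistics


def mos_with_ci(scores, z=1.96):
    """Return (mean, half-width) of a normal-approximation confidence interval."""
    mean = statistics.fmean(scores)
    half_width = z * statistics.stdev(scores) / math.sqrt(len(scores))
    return mean, half_width


# Hypothetical ratings: 150 scores, e.g. 10 utterances rated by 15 listeners.
scores = [5] * 60 + [4] * 60 + [3] * 30
mos, ci = mos_with_ci(scores)
report = f"{mos:.2f}±{ci:.2f}"
```

With 150 scores on a five-point scale, the half-width is on the order of 0.1, which is the same order as the intervals reported in the result tables.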

5.1. Seen speakers

These scenarios consist of two evaluation sets for each model: one for a single speaker with a relatively large training set and one for multiple speakers with relatively small training sets.

Table 2 shows that the proposed model scores higher than most models, and that its score difference between the two scenarios is the smallest. This is the first result indicating the robustness of the proposed model, which generates high-fidelity speech regardless of whether the speaker’s utterances were used frequently during training.
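The claim about the smallest score difference can be checked directly from the mean values in Table 2. The short sketch below computes the single-speaker minus multi-speaker gap per model; it uses the reported means only and ignores the confidence intervals, so it is a sanity check rather than a significance test.

```python
# Mean MOS from Table 2 as (single speaker, multi-speaker); recordings omitted.
TABLE2 = {
    "Korean": {
        "WaveGlow": (3.42, 3.10),
        "WaveRNN": (3.97, 3.42),
        "FB-MelGAN": (3.25, 2.72),
        "Ours": (4.19, 4.05),
    },
    "English": {
        "WaveGlow": (3.65, 3.27),
        "WaveRNN": (3.85, 3.70),
        "FB-MelGAN": (3.62, 3.37),
        "Ours": (3.81, 3.71),
    },
}


def score_gaps(rows):
    """Single-speaker minus multi-speaker MOS for each model, rounded to 2 decimals."""
    return {model: round(single - multi, 2) for model, (single, multi) in rows.items()}


gaps = {language: score_gaps(rows) for language, rows in TABLE2.items()}
smallest_gap_model = {language: min(g, key=g.get) for language, g in gaps.items()}
```

For both training languages the smallest gap belongs to the proposed model (0.14 for Korean and 0.10 for English).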

5.2. Unseen domains: speaker, emotion, language

These scenarios comprise evaluation sets of utterances from domains that were never used for training.

Table 3 shows that the results of our model are the closest to the recordings in most scenarios. Note that most models do not maintain their performance on the emotion and language sets relative to their scores on the speaker set. Our model, however, maintains a relatively small score difference. This result indicates that our model can preserve sound quality in various unseen domains.

Audio samples are available at the following URL: https://kallavinka8045.github.io/icassp2021/

Table 3. MOS results of each model for unseen domains.

Trained with Korean utterances

| Model | Speaker (K3) | Emotion (K4) | Language (M1) |
|-------|--------------|--------------|---------------|
| WaveGlow | 3.27±0.07 | 3.11±0.06 | 3.27±0.07 |
| WaveRNN | 3.83±0.08 | 2.46±0.09 | 2.85±0.07 |
| FB-MelGAN | 2.90±0.08 | 2.60±0.09 | 2.71±0.08 |
| Ours | 4.15±0.08 | 3.91±0.08 | 3.67±0.07 |
| Recordings | 4.32±0.08 | 4.31±0.07 | 3.99±0.07 |

Trained with English utterances

| Model | Speaker (E3) | Emotion (E4) | Language (M1) |
|-------|--------------|--------------|---------------|
| WaveGlow | 3.51±0.16 | 3.13±0.18 | 3.35±0.18 |
| WaveRNN | 3.80±0.15 | 3.54±0.16 | 3.41±0.18 |
| FB-MelGAN | 3.48±0.17 | 3.11±0.16 | 3.14±0.18 |
| Ours | 3.80±0.16 | 3.76±0.16 | 3.58±0.18 |
| Recordings | 3.95±0.15 | 4.01±0.16 | 3.71±0.17 |

Table 4. MOS results of multi-speaker text-to-speech and inference speed of each vocoder.

Trained with Korean utterances

| Model | TTS (K1+K2) | Inference speed |
|-------|-------------|-----------------|
| WaveGlow | 3.36±0.06 | 0.058 RTF |
| WaveRNN | 3.06±0.10 | 10.12 RTF |
| FB-MelGAN | 3.43±0.09 | 0.003 RTF |
| Ours | 4.22±0.06 | 0.028 RTF |

5.3. Multi-speaker text-to-speech

To evaluate this scenario, we trained the JDI-T[4] acoustic model with a pitch and energy predictor[2, 3] using a dataset with four speakers (containing K1 and subsets of K2). Each trained vocoder was fine-tuned for 100k steps using pairs of ground-truth waveforms and predicted mel-spectrograms. Note that we prepared the predicted mel-spectrograms of JDI-T by using the text, reference duration, ground-truth pitch, and energy.

Table 4 shows that Universal MelGAN achieved a real-time synthesis rate of 0.028 real-time factor (RTF), with a MOS higher than that of the other models. We used an NVIDIA V100 GPU to compare the inference speed of each model. All figures were measured without any hardware optimizations (e.g., mixed precision) or methods that accelerate inference at the cost of sound quality (e.g., batched sampling[15] or a multi-band generation strategy[27]). This result indicates that the proposed model is able to synthesize high-fidelity waveforms from text in real time.
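The RTF figures in Table 4 can be read as follows, assuming the usual definition of the real-time factor as synthesis time divided by the duration of the generated audio (values below 1 mean faster than real time). The helper names are illustrative; the RTF values are those reported in Table 4.

```python
# RTF values reported in Table 4.
RTF = {"WaveGlow": 0.058, "WaveRNN": 10.12, "FB-MelGAN": 0.003, "Ours": 0.028}


def real_time_factor(synthesis_seconds, audio_seconds):
    """Synthesis time divided by the duration of the audio that was generated."""
    return synthesis_seconds / audio_seconds


def is_real_time(rtf):
    """True when synthesis is faster than playback."""
    return rtf < 1.0


def speedup_over_real_time(rtf):
    """How many seconds of audio are produced per second of computation."""
    return 1.0 / rtf


real_time_models = sorted(name for name, rtf in RTF.items() if is_real_time(rtf))
ours_speedup = speedup_over_real_time(RTF["Ours"])            # roughly 36x real time
ours_ms_per_audio_second = 1000 * RTF["Ours"]                  # 28 ms per second of audio
slowdown_vs_baseline = RTF["Ours"] / RTF["FB-MelGAN"]          # cost of the larger generator
```

Read this way, the proposed model is about nine times slower than FB-MelGAN but still produces one second of audio in roughly 28 ms on the stated GPU, whereas WaveRNN in this configuration is the only model slower than real time.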

6. CONCLUSION

In this study, we proposed Universal MelGAN, a robust neural vocoder for high-fidelity synthesis in multiple domains. We solved the over-smoothing problem that causes a metallic sound by attaching multi-resolution spectrogram discriminators to the model. Our model is stable while generating waveforms with fine-grained spectrograms in large-footprint models. The evaluation results indicate that the proposed model achieved the highest MOS in most seen and unseen domain scenarios. This result demonstrates the universality of the proposed model. For more general use of the model, we will study a lightweight model in the future and apply the multi-band strategy to reduce the complexity while preserving the sound quality.


7. REFERENCES

[1] J. Shen, R. Pang, R. J. Weiss, M. Schuster, N. Jaitly, Z. Yang, Z. Chen, Y. Zhang, Y. Wang, R. Skerry-Ryan, et al., “Natural TTS synthesis by conditioning WaveNet on mel spectrogram predictions,” in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2018, pp. 4779–4783.

[2] Y. Ren, C. Hu, T. Qin, S. Zhao, Z. Zhao, and T.-Y. Liu, “FastSpeech 2: Fast and high-quality end-to-end text-to-speech,” arXiv preprint arXiv:2006.04558, 2020.

[3] A. Lańcucki, “FastPitch: Parallel text-to-speech with pitch prediction,” arXiv preprint arXiv:2006.06873, 2020.

[4] D. Lim, W. Jang, G. O, H. Park, B. Kim, and J. Yoon, “JDI-T: Jointly trained duration informed transformer for text-to-speech without explicit alignment,” arXiv preprint arXiv:2005.07799, 2020.

[5] L.-J. Liu, Z.-H. Ling, Y. Jiang, M. Zhou, and L.-R. Dai, “WaveNet vocoder with limited training data for voice conversion,” in Interspeech, 2018, pp. 1983–1987.

[6] Y. Jia, R. J. Weiss, F. Biadsy, W. Macherey, M. Johnson, Z. Chen, and Y. Wu, “Direct speech-to-speech translation with a sequence-to-sequence model,” arXiv preprint arXiv:1904.06037, 2019.

[7] D. Griffin and J. Lim, “Signal estimation from modified Short-Time Fourier Transform,” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 32, no. 2, pp. 236–243, 1984.

[8] H. Kawahara, I. Masuda-Katsuse, and A. De Cheveigne, “Restructuring speech representations using a pitch-adaptive time-frequency smoothing and an instantaneous-frequency-based f0 extraction: Possible role of a repetitive structure in sounds,” Speech Communication, vol. 27, no. 3-4, pp. 187–207, 1999.

[9] M. Morise, F. Yokomori, and K. Ozawa, “WORLD: A vocoder-based high-quality speech synthesis system for real-time applications,” IEICE Transactions on Information and Systems, vol. 99, no. 7, pp. 1877–1884, 2016.

[10] J. Lorenzo-Trueba, T. Drugman, J. Latorre, T. Merritt, B. Putrycz, R. Barra-Chicote, A. Moinet, and V. Aggarwal, “Towards achieving robust universal neural vocoding,” arXiv preprint arXiv:1811.06292, 2018.

[11] P. Hsu, C. Wang, A. T. Liu, and H. Lee, “Towards robust neural vocoding for speech generation: A survey,” arXiv preprint arXiv:1912.02461, 2019.

[12] K. Kumar, R. Kumar, T. de Boissiere, L. Gestin, W. Z. Teoh, J. Sotelo, A. de Brébisson, Y. Bengio, and A. C. Courville, “MelGAN: Generative adversarial networks for conditional waveform synthesis,” in Advances in Neural Information Processing Systems, 2019, pp. 14910–14921.

[13] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in Neural Information Processing Systems, 2014, pp. 2672–2680.

[14] A. van den Oord, S. Dieleman, H. Zen, K. Simonyan, O. Vinyals, A. Graves, N. Kalchbrenner, A. Senior, and K. Kavukcuoglu, “WaveNet: A generative model for raw audio,” arXiv preprint arXiv:1609.03499, 2016.

[15] N. Kalchbrenner, E. Elsen, K. Simonyan, S. Noury, N. Casagrande, E. Lockhart, F. Stimberg, A. van den Oord, S. Dieleman, and K. Kavukcuoglu, “Efficient neural audio synthesis,” arXiv preprint arXiv:1802.08435, 2018.

[16] R. Prenger, R. Valle, and B. Catanzaro, “WaveGlow: A flow-based generative network for speech synthesis,” in ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2019, pp. 3617–3621.

[17] G. Yang, S. Yang, K. Liu, P. Fang, W. Chen, and L. Xie, “Multi-band MelGAN: Faster waveform generation for high-quality text-to-speech,” arXiv preprint arXiv:2005.05106, 2020.

[18] S. Maiti and M. I. Mandel, “Speaker independence of neural vocoders and their effect on parametric resynthesis speech enhancement,” in ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2020, pp. 206–210.

[19] D. Paul, Y. Pantazis, and Y. Stylianou, “Speaker conditional WaveRNN: Towards universal neural vocoder for unseen speaker and recording conditions,” arXiv preprint arXiv:2008.05289, 2020.

[20] R. Yamamoto, E. Song, and J.-M. Kim, “Parallel WaveGAN: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram,” in ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2020, pp. 6199–6203.

[21] A. Van den Oord, N. Kalchbrenner, L. Espeholt, O. Vinyals, A. Graves, et al., “Conditional image generation with PixelCNN decoders,” in Advances in Neural Information Processing Systems, 2016, pp. 4790–4798.

[22] J. Su, Z. Jin, and A. Finkelstein, “HiFi-GAN: High-fidelity denoising and dereverberation based on speech deep features in adversarial networks,” arXiv preprint arXiv:2006.05694, 2020.

[23] K. Ito et al., “The LJ speech dataset,” https://keithito.com/LJ-Speech-Dataset/, 2017.

[24] H. Zen, V. Dang, R. Clark, Y. Zhang, R. J. Weiss, Y. Jia, Z. Chen, and Y. Wu, “LibriTTS: A corpus derived from LibriSpeech for text-to-speech,” arXiv preprint arXiv:1904.02882, 2019.

[25] S. King and V. Karaiskos, “The Blizzard challenge 2013,” Blizzard Challenge Workshop, 2013.

[26] K. Park and T. Mulc, “CSS10: A collection of single speaker speech datasets for 10 languages,” arXiv preprint arXiv:1903.11269, 2019.

[27] C. Yu, H. Lu, N. Hu, M. Yu, C. Weng, K. Xu, P. Liu, D. Tuo, S. Kang, G. Lei, et al., “DurIAN: Duration informed attention network for multimodal synthesis,” arXiv preprint arXiv:1909.01700, 2019.
