arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera...

Transcript of arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera...

![Page 1: arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera (translation) walking dead (a movie title) takes away the appetite of anyone For this task,](https://reader033.fdocuments.net/reader033/viewer/2022052011/602662283478c505822e015c/html5/thumbnails/1.jpg)

Hierarchical Meta-Embeddings for Code-SwitchingNamed Entity Recognition

Genta Indra Winata, Zhaojiang Lin, Jamin Shin, Zihan Liu, Pascale FungCenter for Artificial Intelligence Research (CAiRE)

Department of Electronic and Computer EngineeringThe Hong Kong University of Science and Technology, Clear Water Bay, Hong Kong

{giwinata,zlinao,jmshinaa,zliucr}@connect.ust.hk,[email protected]

Abstract

In countries that speak multiple main lan-guages, mixing up different languages withina conversation is commonly called code-switching. Previous works addressing thischallenge mainly focused on word-level as-pects such as word embeddings. How-ever, in many cases, languages share com-mon subwords, especially for closely relatedlanguages, but also for languages that areseemingly irrelevant. Therefore, we pro-pose Hierarchical Meta-Embeddings (HME)that learn to combine multiple monolingualword-level and subword-level embeddings tocreate language-agnostic lexical representa-tions. On the task of Named Entity Recogni-tion for English-Spanish code-switching data,our model achieves the state-of-the-art perfor-mance in the multilingual settings. We alsoshow that, in cross-lingual settings, our modelnot only leverages closely related languages,but also learns from languages with differentroots. Finally, we show that combining dif-ferent subunits are crucial for capturing code-switching entities.

1 Introduction

Code-switching is a phenomenon that often hap-pens between multilingual speakers, in which theyswitch between their two languages in conver-sations, hence, it is practically useful to recog-nize this well in spoken language systems (Winataet al., 2018a). This occurs more often for entitiessuch as organizations or products, which motivatesus to focus on the specific problem of Named En-tity Recognition (NER) in code-switching scenar-ios. We show one of the examples as the follow-ing:

• walking dead le quita el apetito a cualquiera

• (translation) walking dead (a movie title)takes away the appetite of anyone

For this task, previous works have mostlyfocused on applying pre-trained word embed-dings from each language in order to representnoisy mixed-language texts, and combine themwith character-level representations (Trivedi et al.,2018; Wang et al., 2018; Winata et al., 2018b).However, despite the effectiveness of such word-level approaches, they neglect the importance ofsubword-level characteristics shared across differ-ent languages. Such information is often hard tocapture with word embeddings or randomly ini-tialized character-level embeddings. Naturally, wecan turn towards subword-level embeddings suchas FastText (Grave et al., 2018) to help this task,which will evidently allow us to leverage the mor-phological structure shared across different lan-guages.

Despite such expected usefulness, there hasnot been much attention focused around usingsubword-level features in this task. This is partlybecause of the non-trivial difficulty of combin-ing different language embeddings in the sub-word space, which arises from the distinct seg-mentation into subwords for different languages.This leads us to explore the literature of Meta-Embeddings (Yin and Schütze, 2016; Muromägiet al., 2017; Bollegala et al., 2018; Coates andBollegala, 2018; Kiela et al., 2018; Winata et al.,2019), which is a method to learn how to combinedifferent embeddings.

In this paper, we propose Hierarchical Meta-Embeddings (HME) 1 which learns how to com-bine different pre-trained monolingual embed-dings in word, subword, and character-level intoa single language-agnostic lexical representationwithout using specific language identifiers. Toaddress the issue of different segmentations, weadd a Transformer (Vaswani et al., 2017) encoder

1The source code is available at https://github.com/gentaiscool/meta-emb

arX

iv:1

909.

0850

4v1

[cs

.CL

] 1

8 Se

p 20

19

![Page 2: arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera (translation) walking dead (a movie title) takes away the appetite of anyone For this task,](https://reader033.fdocuments.net/reader033/viewer/2022052011/602662283478c505822e015c/html5/thumbnails/2.jpg)

self-attn

BPE-level Char-level

�(�)

1�

(�)

2...

encoder encoder encoder

�(�)

1�

(�)

2...

encoder

�(�)

1

en MallWestland

Transformer

Westland_WestlandWestland

Word-level

B-LOCATION

HierarchicalMeta-Embeddings

inputsequence

ConditionalRandomField

HierarchicalMeta-Embeddings

self-attn

meta-representations

Figure 1: Hierarchical Meta-Embeddings (HME) architecture for Named Entity Recognition (NER) task. Left:Transformer-CRF architecture for Named Entity Recognition. Right: HME accept words, BPEs, and charactersinputs.

which learns the important subwords in a givensentence. We evaluate our model on the taskof Named Entity Recognition for English-Spanishcode-switching data, and we use Transformer-CRF, a transformer-based encoder for sequencelabeling based on the implementation of Winataet al. (2019). Our experimental results confirmthat HME significantly outperforms the state-of-the-art system in absolute F1 score. The analysisshows that in the task of English-Spanish mixedtexts not only similar languages like Portuguese orCatalan help, but also seemingly distant languagesfrom Celtic origin also significantly increase theperformance.

2 Related Work

Embeddings Previous works have extensivelyexplored different representations such as word(Mikolov et al., 2013; Pennington et al., 2014;Grave et al., 2018; Xu et al., 2018), subword (Sen-nrich et al., 2016; Heinzerling and Strube, 2018),and character (dos Santos and Zadrozny, 2014;Wieting et al., 2016). Lample et al. (2016) hassuccessfully concatenated character and word em-beddings to their model, showing the potentialof combining multiple representations. Liu et al.(2019) proposed to leverage word and subwordembeddings into the application of unsupervisedmachine translation.

Meta-embeddings Recently, there are studieson combining multiple word embeddings inpre-processing steps (Yin and Schütze, 2016;Muromägi et al., 2017; Bollegala et al., 2018;Coates and Bollegala, 2018). Later, Kiela et al.

(2018) introduced a method to dynamically learnword-level meta-embeddings, which can be ef-fectively used in a supervised setting. Winataet al. (2019) proposed an idea to leverage multi-ple embeddings from different languages to gen-erate language-agnostic meta-representations formixed-language data.

3 Hierarchical Meta-Embeddings

We propose a method to combine word, subword,and character representations to create a mixtureof embeddings. We generate a multilingual meta-embeddings of word and subword, and then, weconcatenate them with character-level embeddingsto generate final word representations, as shownin Figure 1. Let w be a sequence of words withn elements, where w = [w1, . . . , wn]. Eachword can be tokenized into a list of subwordss = [s1, . . . , sm] and a list of characters c =[c1, . . . , cp]. The list of subwords s is gener-ated using a function f ; s = f(w). Function fmaps a word into a sequence of subwords. Fur-ther, let E(w), E(s), and E(c) be a set of word,subword, and character embedding lookup tables.Each set consists of different monolingual embed-dings. Each element is transformed into a embed-ding vector in Rd. We denote subscripts {i,j} aselement and embedding language index, and su-perscripts (w,s,c) as word, subword, and character.

3.1 Multilingual Meta-Embeddings (MME)

We generate a meta-representations by taking thevector representation from multiple monolingualpre-trained embeddings in different subunits such

![Page 3: arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera (translation) walking dead (a movie title) takes away the appetite of anyone For this task,](https://reader033.fdocuments.net/reader033/viewer/2022052011/602662283478c505822e015c/html5/thumbnails/3.jpg)

as word and subword. We apply a projectionmatrix Wj to transform the dimensions from theoriginal space xi,j ∈ Rd to a new shared spacex′

i,j ∈ Rd′ . Then, we calculate attention weightsαi,j ∈ Rd′ with a non-linear scoring function φ(e.g., tanh) to take important information fromeach individual embedding x′

i,j . Then, MME iscalculated by taking the weighted sum of the pro-jected embeddings x′

i,j :

x′i,j = Wj · xi,j , (1)

αi,j =exp(φ(x′

i,j))∑nk=1 exp(φ(x

′i,k))

, (2)

ui =n∑

j=1

αi,jx′i,j . (3)

3.2 Mapping Subwords and Characters toWord-Level Representations

We propose to map subword into word rep-resentations and choose byte-pair encodings(BPEs) (Sennrich et al., 2016) since it has a com-pact vocabulary. First, we apply f to segmentwords into sets of subwords, and then we ex-tract the pre-trained subword embedding vectorsx(s)i,j ∈ Rd for language j.Since, each language has a different f ,

we replace the projection matrix with Trans-former (Vaswani et al., 2017) to learn and com-bine important subwords into a single vector rep-resentation. Then, we create u

(s)i ∈ Rd′ which

represents the subword-level MME by taking theweighted sum of x′(s)

i,j ∈ Rd′ .

x′(s)i,j = Encoder(x(s)

i,j ), (4)

u(s)i =

n∑j=1

αi,jx′(s)i,j . (5)

To combine character-level representations, weapply an encoder to each character.

u(c)i = Encoder(xi) ∈ Rd′ . (6)

We combine the word-level, subword-level, andcharacter-level representations by concatenationuHMEi = (u

(w)i ,u

(s)i ,u

(c)i ), where u

(w)i ∈ Rd′

and u(s)i ∈ Rd′ are word-level MME and BPE-

level MME, and u(c)i is a character embedding.

We randomly initialize the character embeddingand keep it trainable. We fix all subword and wordpre-trained embeddings during the training.

3.3 Sequence Labeling

To predict the entities, we use Transformer-CRF,a transformer-based encoder followed by a Condi-tional Random Field (CRF) layer (Lafferty et al.,2001). The CRF layer is useful to constraint thedependencies between labels.

h = Transformer(u), h′ = CRF(h). (7)

The best output sequence is selected by a forwardpropagation using the Viterbi algorithm.

4 Experiments

4.1 Experimental Setup

We train our model for solving Named EntityRecognition on English-Spanish code-switchingtweets data from Aguilar et al. (2018). There arenine entity labels with IOB format. The training,development, and testing sets contain 50,757, 832,and 15,634 tweets, respectively.

We use FastText word embeddings trained fromCommon Crawl and Wikipedia (Grave et al.,2018) for English (es), Spanish (es), includingfour Romance languages: Catalan (ca), Por-tuguese (pt), French (fr), Italian (it), and a Ger-manic language: German (de), and five Celticlanguages as the distant language group: Bre-ton (br), Welsh (cy), Irish (ga), Scottish Gaelic(gd), Manx (gv). We also add the English TwitterGloVe word embeddings (Pennington et al., 2014)and BPE-based subword embeddings from Heinz-erling and Strube (2018).

We train our model in two different settings: (1)multilingual setting, we combine main languages(en-es) with Romance languages and a Germaniclanguage, and (2) cross-lingual setting, we useRomance and Germanic languages without mainlanguages. Our model contains four layers oftransformer encoders with a hidden size of 200,four heads, and a dropout of 0.1. We use Adam op-timizer and start the training with a learning rate of0.1 and an early stop of 15 iterations. We replaceuser hashtags and mentions with <USR>, emojiwith <EMOJI>, and URL with <URL>. We eval-uate our model using absolute F1 score metric.

4.2 Baselines

CONCAT We concatenate word embeddings bymerging the dimensions of word representations.This method combines embeddings into a high-dimensional input that may cause inefficient com-

![Page 4: arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera (translation) walking dead (a movie title) takes away the appetite of anyone For this task,](https://reader033.fdocuments.net/reader033/viewer/2022052011/602662283478c505822e015c/html5/thumbnails/4.jpg)

Model

Multilingual embeddings Cross-lingual embeddingsmain

languages+ closely-related

languages+ distant

languagesclosely-related

languagesdistant

languagesen-es ca-pt ca-pt-de-fr-it br-cy-ga-gd-gv ca-pt ca-pt-de-fr-it br-cy-ga-gd-gv

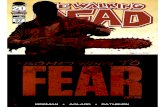

Flat word-level embeddingsCONCAT 65.3 ± 0.38 64.99 ± 1.06 65.91 ± 1.16 65.79 ± 1.36 58.28 ± 2.66 64.02 ± 0.26 50.77 ± 1.55

LINEAR 64.61 ± 0.77 65.33 ± 0.87 65.63 ± 0.92 64.95 ± 0.77 60.72 ± 0.84 62.37 ± 1.01 53.28 ± 0.41

Multilingual Meta-Embeddings (MME) (Winata et al., 2019)Word 65.43 ± 0.67 66.63 ± 0.94 66.8 ± 0.43 66.56 ± 0.4 61.75 ± 0.56 63.23 ± 0.29 53.43 ± 0.37

Hierarchical Meta-Embeddings (HME)+ BPE 65.9 ± 0.72 67.31 ± 0.34 67.26 ± 0.54 66.88 ± 0.37 63.44 ± 0.33 63.78 ± 0.62 60.19 ± 0.63

+ Char 65.88 ± 1.02 67.38 ± 0.84 65.94 ± 0.47 66.1 ± 0.33 61.97 ± 0.6 63.06 ± 0.69 57.5 ± 0.56

+ BPE + Char 66.55 ± 0.72 67.8 ± 0.31 67.07 ± 0.49 67.02 ± 0.16 63.9 ± 0.22 64.52 ± 0.35 60.88 ± 0.84

Table 1: Results (percentage F1 mean and standard deviation from five experiments). Multilingual: with mainlanguages, Cross-lingual: without main languages.

Model F1Trivedi et al. (2018) 61.89Wang et al. (2018) 62.39Wang et al. (2018) (Ensemble) 62.67Winata et al. (2018b) 62.76Trivedi et al. (2018) (Ensemble) 63.76Winata et al. (2019) MME 66.63 ± 0.94

Random embeddings 46.68 ± 0.79

Aligned embeddingsMUSE (es→ en) 60.89 ± 0.37

MUSE (en→ es) 61.49 ± 0.62

Multilingual embeddingsOur approach 67.8 ± 0.31

Our approach (Ensemble)† 69.17Cross-lingual embeddingsOur approach 64.52 ± 0.35

Our approach (Ensemble)† 65.99

Table 2: Comparison to existing works. Ensemble:We run a majority voting scheme from five differentmodels.

putation.

xCONCATi = [xi,1, ...,xi,n]. (8)

LINEAR We sum all word embeddings intoa single word vector with equal weight. Thismethod combines embeddings without consider-ing the importance of each of them.

xLINEARi =

n∑j=0

x′i,j . (9)

Random Embeddings We use randomly initial-ized word embeddings and keep it trainable to cal-culate the lower-bound performance.

Aligned Embeddings We align English andSpanish FastText embeddings using CSLS with

two scenarios. We set English (en) as the sourcelanguage and Spanish (es) (en → es) as the tar-get language, and vice versa (es → en). We runMUSE by using the code prepared by the authorsof Conneau et al. (2017). 2

5 Results & Discussion

In general, from Table 1, we can see that word-level meta-embeddings even without subword orcharacter-level information, consistently performbetter than flat baselines (e.g., CONCAT and LIN-EAR) in all settings. This is mainly because ofthe attention layer which does not require addi-tional parameters. Furthermore, comparing ourapproach to previous state-of-the-art models, wecan clearly see that our proposed approaches allsignificantly outperform them.

From Table 1, in Multilingual setting, whichtrains with the main languages, it is evident thatadding both closely-related and distant languageembeddings improves the performance. Thisshows us that our model is able to leverage thelexical similarity between the languages. This ismore distinctly shown in Cross-lingual setting asusing distant languages significantly perform lessthan using closely-related ones (e.g., ca-pt). Inter-estingly, for distant languages, when adding sub-words, we can still see a drastic performance in-crease. We hypothesize that even though the char-acters are mostly different, the lexical structure issimilar to our main languages.

On the other hand, adding subword inputs to themodel is consistently better than characters. Thisis due to the transfer of the information from thepre-trained subword embeddings. As shown in Ta-

2https://github.com/facebookresearch/MUSE

![Page 5: arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera (translation) walking dead (a movie title) takes away the appetite of anyone For this task,](https://reader033.fdocuments.net/reader033/viewer/2022052011/602662283478c505822e015c/html5/thumbnails/5.jpg)

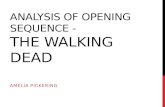

Figure 2: Heatmap of attention over languages from a validation sample. Left: word-level MME, Right: BPE-levelMME. We extract the attention weights from a multilingual model (en-es-ca-pt-de-fr-it).

O

OR

G

LOC

PER

PRO

D

EVEN

T

OTH

ER

GR

OU

P

TITL

E

TIM

E

DE

FR

IT

CA

PT

ES

EN

GLOVE_EN

0.0

0.2

0.4

0.6

0.8

1.0

Figure 3: The average of attention weights for wordembeddings versus NER tags from the validation set.

ble 1, subword embeddings is more effective fordistant languages (Celtic languages) than closely-related languages such as Catalan or Portuguese.

Moreover, we visualize the attention weights ofthe model in word and subword-level to interpretthe model dynamics. From the left image of Fig-ure 2, in word-level, the model mostly chooses thecorrect language embedding for each word, butalso combines with different languages. Withoutany language identifiers, it is impressive to seethat our model learns to attend to the right lan-guages. The right side of Figure 2, which showsattention weight distributions for subword-level,demonstrates interesting behaviors, in which formost English subwords, the model leverages ca,fr, and de embeddings. We hypothesize this is be-cause the dataset is mainly constructed with Span-ish words, which can also be verified from Fig-ure 3 in which most NER tags are classified as es.

6 Conclusion

We propose Hierarchical Meta-Embeddings(HME) that learns how to combine multiplemonolingual word-level and subword-levelembeddings to create language-agnostic represen-tations without specific language information. Weachieve the state-of-the-art results on the task ofNamed Entity Recognition for English-Spanishcode-switching data. We also show that our modelcan leverage subword information very effectivelyfrom languages from different roots to generatebetter word representations.

Acknowledgments

This work has been partially funded byITF/319/16FP and MRP/055/18 of the Inno-vation Technology Commission, the Hong KongSAR Government, and School of EngineeringPh.D. Fellowship Award, the Hong Kong Uni-versity of Science and Technology, and RDC1718050-0 of EMOS.AI. We sincerely thank thethree anonymous reviewers for their insightfulcomments on our paper.

ReferencesGustavo Aguilar, Fahad AlGhamdi, Victor Soto, Mona

Diab, Julia Hirschberg, and Thamar Solorio. 2018.Named entity recognition on code-switched data:Overview of the calcs 2018 shared task. In Proceed-ings of the Third Workshop on Computational Ap-proaches to Linguistic Code-Switching, pages 138–147, Melbourne, Australia. Association for Compu-tational Linguistics.

Danushka Bollegala, Kohei Hayashi, and Ken-IchiKawarabayashi. 2018. Think globally, embed lo-

![Page 6: arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera (translation) walking dead (a movie title) takes away the appetite of anyone For this task,](https://reader033.fdocuments.net/reader033/viewer/2022052011/602662283478c505822e015c/html5/thumbnails/6.jpg)

cally: locally linear meta-embedding of words. InProceedings of the 27th International Joint Con-ference on Artificial Intelligence, pages 3970–3976.AAAI Press.

Joshua Coates and Danushka Bollegala. 2018. Frus-tratingly easy meta-embedding–computing meta-embeddings by averaging source word embeddings.In Proceedings of the 2018 Conference of the NorthAmerican Chapter of the Association for Computa-tional Linguistics: Human Language Technologies,Volume 2 (Short Papers), pages 194–198.

Alexis Conneau, Guillaume Lample, Marc’AurelioRanzato, Ludovic Denoyer, and Hervé Jégou. 2017.Word translation without parallel data. arXivpreprint arXiv:1710.04087.

Edouard Grave, Piotr Bojanowski, Prakhar Gupta, Ar-mand Joulin, and Tomas Mikolov. 2018. Learningword vectors for 157 languages. In Proceedingsof the International Conference on Language Re-sources and Evaluation (LREC 2018).

Benjamin Heinzerling and Michael Strube. 2018.Bpemb: Tokenization-free pre-trained subword em-beddings in 275 languages. In Proceedings of theEleventh International Conference on Language Re-sources and Evaluation (LREC-2018).

Douwe Kiela, Changhan Wang, and Kyunghyun Cho.2018. Dynamic meta-embeddings for improved sen-tence representations. In Proceedings of the 2018Conference on Empirical Methods in Natural Lan-guage Processing, pages 1466–1477.

John D. Lafferty, Andrew McCallum, and FernandoPereira. 2001. Conditional random fields: Prob-abilistic models for segmenting and labeling se-quence data. In ICML.

Guillaume Lample, Miguel Ballesteros, Sandeep Sub-ramanian, Kazuya Kawakami, and Chris Dyer. 2016.Neural architectures for named entity recognition.In Proceedings of the 2016 Conference of the NorthAmerican Chapter of the Association for Computa-tional Linguistics: Human Language Technologies,pages 260–270.

Zihan Liu, Yan Xu, Genta Indra Winata, and PascaleFung. 2019. Incorporating word and subword unitsin unsupervised machine translation using languagemodel rescoring. In Proceedings of the Fourth Con-ference on Machine Translation (Volume 2: SharedTask Papers, Day 1), pages 275–282, Florence, Italy.Association for Computational Linguistics.

Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S Cor-rado, and Jeff Dean. 2013. Distributed representa-tions of words and phrases and their compositional-ity. In Advances in neural information processingsystems, pages 3111–3119.

Avo Muromägi, Kairit Sirts, and Sven Laur. 2017. Lin-ear ensembles of word embedding models. In Pro-ceedings of the 21st Nordic Conference on Compu-tational Linguistics, pages 96–104.

Jeffrey Pennington, Richard Socher, and ChristopherManning. 2014. Glove: Global vectors for wordrepresentation. In Proceedings of the 2014 confer-ence on empirical methods in natural language pro-cessing (EMNLP), pages 1532–1543.

Cícero Nogueira dos Santos and Bianca Zadrozny.2014. Learning character-level representations forpart-of-speech tagging. In ICML.

Rico Sennrich, Barry Haddow, and Alexandra Birch.2016. Neural machine translation of rare words withsubword units. In Proceedings of the 54th AnnualMeeting of the Association for Computational Lin-guistics (Volume 1: Long Papers), volume 1, pages1715–1725.

Shashwat Trivedi, Harsh Rangwani, and Anil Ku-mar Singh. 2018. Iit (bhu) submission for the aclshared task on named entity recognition on code-switched data. In Proceedings of the Third Work-shop on Computational Approaches to LinguisticCode-Switching, pages 148–153.

Ashish Vaswani, Noam Shazeer, Niki Parmar, JakobUszkoreit, Llion Jones, Aidan N Gomez, ŁukaszKaiser, and Illia Polosukhin. 2017. Attention is allyou need. In Advances in neural information pro-cessing systems, pages 5998–6008.

Changhan Wang, Kyunghyun Cho, and Douwe Kiela.2018. Code-switched named entity recognition withembedding attention. In Proceedings of the ThirdWorkshop on Computational Approaches to Linguis-tic Code-Switching, pages 154–158.

John Wieting, Mohit Bansal, Kevin Gimpel, and KarenLivescu. 2016. Charagram: Embedding words andsentences via character n-grams. In Proceedings ofthe 2016 Conference on Empirical Methods in Nat-ural Language Processing, pages 1504–1515.

Genta Indra Winata, Zhaojiang Lin, and Pascale Fung.2019. Learning multilingual meta-embeddings forcode-switching named entity recognition. In Pro-ceedings of the 4th Workshop on RepresentationLearning for NLP (RepL4NLP-2019), pages 181–186.

Genta Indra Winata, Andrea Madotto, Chien-ShengWu, and Pascale Fung. 2018a. Code-switchinglanguage modeling using syntax-aware multi-tasklearning. In Proceedings of the Third Workshopon Computational Approaches to Linguistic Code-Switching, pages 62–67.

Genta Indra Winata, Chien-Sheng Wu, AndreaMadotto, and Pascale Fung. 2018b. Bilingual char-acter representation for efficiently addressing out-of-vocabulary words in code-switching named entityrecognition. In Proceedings of the Third Workshopon Computational Approaches to Linguistic Code-Switching, pages 110–114.

![Page 7: arXiv:1909.08504v1 [cs.CL] 18 Sep 2019 · walking dead le quita el apetito a cualquiera (translation) walking dead (a movie title) takes away the appetite of anyone For this task,](https://reader033.fdocuments.net/reader033/viewer/2022052011/602662283478c505822e015c/html5/thumbnails/7.jpg)

Peng Xu, Andrea Madotto, Chien-Sheng Wu, Ji HoPark, and Pascale Fung. 2018. Emo2vec: Learn-ing generalized emotion representation by multi-task training. In Proceedings of the 9th Workshopon Computational Approaches to Subjectivity, Senti-ment and Social Media Analysis, pages 292–298.

Wenpeng Yin and Hinrich Schütze. 2016. Learningword meta-embeddings. In Proceedings of the 54thAnnual Meeting of the Association for Computa-tional Linguistics (Volume 1: Long Papers), vol-ume 1, pages 1351–1360.