An analysis of Ermakov-Zolotukhin quadrature using kernels

Transcript of An analysis of Ermakov-Zolotukhin quadrature using kernels

HAL Id: hal-03405615https://hal.archives-ouvertes.fr/hal-03405615

Submitted on 27 Oct 2021

HAL is a multi-disciplinary open accessarchive for the deposit and dissemination of sci-entific research documents, whether they are pub-lished or not. The documents may come fromteaching and research institutions in France orabroad, or from public or private research centers.

L’archive ouverte pluridisciplinaire HAL, estdestinée au dépôt et à la diffusion de documentsscientifiques de niveau recherche, publiés ou non,émanant des établissements d’enseignement et derecherche français ou étrangers, des laboratoirespublics ou privés.

An analysis of Ermakov-Zolotukhin quadrature usingkernels

Ayoub Belhadji

To cite this version:Ayoub Belhadji. An analysis of Ermakov-Zolotukhin quadrature using kernels. NeurIPS 2021 - 35thConference on Neural Information Processing Systems, Dec 2021, Virtual-only Conference, Australia.pp.1-17. �hal-03405615�

An analysis of Ermakov-Zolotukhin quadratureusing kernels

Ayoub BelhadjiUniv Lyon, ENS de Lyon

Inria, CNRS, UCBLLIP UMR 5668, Lyon, France

Abstract

We study a quadrature, proposed by Ermakov and Zolotukhin in the sixties, throughthe lens of kernel methods. The nodes of this quadrature rule follow the distributionof a determinantal point process, while the weights are defined through a linearsystem, similarly to the optimal kernel quadrature. In this work, we show howthese two classes of quadrature are related, and we prove a tractable formula of theexpected value of the squared worst-case integration error on the unit ball of anRKHS of the former quadrature. In particular, this formula involves the eigenvaluesof the corresponding kernel and leads to improving on the existing theoreticalguarantees of the optimal kernel quadrature with determinantal point processes.

1 Introduction

Integrals appear in many scientific fields as quantities of interest per se. For example, in statistics,they represent expectations [27], while in mathematical finance, they represent the prices of financialproducts [17]. Unfortunately, integrals that can be written in closed form are exceptional. In general,their values are only known through approximations. For this reason, numerical integration is at theheart of many tasks in applied mathematics and statistics. Among all the possible approximationschemes, quadratures are the most practical since they approximate the integral of a function by afinite mixture of its evaluations. In this work, we focus on quadrature rules that take the form∫

Xf(x)g(x)dω(x) ≈

∑i∈[N ]

wif(xi), (1)

where the nodes xi are independent of f and g, while the weights wi depend only on g. The nodes andthe weights of a quadrature may be seen as degrees of freedom that the practitioner may tune in orderto achieve a given level of approximation error. The design of quadratures gave birth to a rich literaturefrom Gaussian quadrature [15] to Monte Carlo methods [25] to quadratures based on determinantalpoint processes (DPPs) [1]. These latter form a large class of probabilistic models of repulsive randomsubsets that make numerical integration possible in a variety of domains with strong theoreticalguarantees. In particular, central limit theorems with asymptotic convergence rates that scale betterthan the typical Monte Carlo rate O(N−1/2) were proven for several DPP based quadratures: whenthe integrand is a C1 function [1] or even when the integrand is non-differentiable [10]. Moreover,it is possible to design quadrature rules based on DPPs with non-asymptotic guarantees and withrates of convergence that adapt to the smoothness of the integrand. This is the case of the quadratureproposed by Ermakov and Zolotukhin in [14] and recently revisited in [16], and the optimal kernelquadrature [3].

In this work, we study the quadrature rule proposed by Ermakov and Zolotukhin (EZQ) through thelens of kernel methods. We start by comparing the weights of EZQ to the weights of the optimal

35th Conference on Neural Information Processing Systems (NeurIPS 2021).

kernel quadrature (OKQ), and we prove that they both belong to a broader class of quadrature rulesthat we call kernel based interpolation quadrature. Then, we study the approximation quality of EZQin reproducing kernel Hilbert spaces (RKHSs). This is done by proving a general tractable formula ofthe expected value of the squared worst-case integration error for functions that belong to the unit ballof an RKHS when the nodes follow the distribution of a determinantal point process. This formulainvolves principally the eigenvalues of the integral operator, and converges to 0 at a slightly slowerrate than the optimal rate. Interestingly, this analysis yields a better upper bound for the optimalkernel quadrature with DPPs proposed initially in [3]. Comparably to the theoretical guaranteesgiven in [14, 16], our theoretical guarantees are independent of the choice of the test function. Thisfacilitates the comparison of EZQ with other quadratures such as OKQ.

The rest of the article is organized as follows. Section 2 reviews the work of [14] and recall keyconcepts on kernel based quadrature. In Section 3, we present the main results of this work and theirconsequences. A sketch of the proof of the main theorem is given in Section 4. We illustrate thetheoretical results by numerical experiments in Section 5. Finally, we give a conclusion in Section 6.

Notation and assumptions. We use the notation N∗ = Nr {0}. We denote by ω a Borel measuresupported on X , and we denote by L2(ω) the Hilbert space of square integrable real-valued functionson X with respect to ω, equipped with the inner product 〈·, ·〉ω, and the associated norm ‖.‖ω. ForN ∈ N∗, we denote by ω⊗N the tensor product of ω defined on XN . Moreover, we denote by F theRKHS associated to the kernel k : X × X → R that we assume to be continuous and satisfying thecondition

∫X k(x, x)dω(x) < +∞. In particular, we assume the Mercer decomposition

k(x, y) =∑m∈N∗

σmφm(x)φm(y), (2)

to hold, where the convergence is pointwise, and σm and φm are the corresponding eigenvalues andeigenfunctions of the integral operator Σ defined for f ∈ L2(ω) by

Σf(·) =∫Xk(·, y)f(y)dω(y). (3)

We assume that the sequence σ = (σm)m∈N∗ is non-increasing and its elements are non-vanishing,and we assume that the corresponding eigenfunctions φm are continuous. Note that the φm arenormalized: ‖φm‖ω = 1 for m ∈ N∗. In particular, (φm)m∈N∗ is an o.n.b. of L2(ω), and everyelement f ∈ F satisfies ∑

m∈N∗

〈f, φm〉2ωσm

< +∞. (4)

Moreover, for every N ∈ N∗, we denote by EN the eigen-subspace of L2(ω) spanned byφ1, . . . , φN . For any kernel κ : X × X → R, and for x ∈ XN , we define the kernel matrixκ(x) := (κ(xi, xj))i,j∈[N ] ∈ RN×N . Finally, we denote in bold fonts the corresponding kernelmatrices: K(x) for the kernel k, KN (x) for the kernel kN , K⊥N (x) for the kernel k⊥N , κ(x) forthe kernel κ... Similarly, for any function µ : X → R and for x ∈ XN , we define the vector ofevaluations µ(x) := (µ(xi))i∈[N ] ∈ RN .

2 Related work

In the section, we review some results that are relevant to the contribution.

2.1 Ermakov-Zolotukhin quadrature

The quadrature rule proposed by Ermakov and Zolotukhin in [14] deals with integrals that write∫Xf(x)φm(x)dω(x), (5)

where f ∈ L2(ω), and (φm)m∈N∗ is an orthonormal family with respect to the measure ω. Itsconstruction goes as follows. Let N ∈ N∗ and let x ∈ XN such that the matrix

ΦN (x) := (φn(xi))(n,i)∈[N ]×[N ]

2

is non-singular. For n ∈ [N ], define

IEZ,n(f) =∑i∈[N ]

wEZ,ni f(xi), (6)

where wEZ,n := (wEZ,ni )i∈[N ] ∈ RN is given by wEZ,n = ΦN (x)−1en, with en is the vector of

RN with the n-th coordinate is 1 and the rest are 0 1. We can prove easily that this quadrature is exact

∀f ∈ Span(φn)n∈[N ],∑i∈[N ]

wEZ,ni f(xi) =

∫Xf(x)φn(x) dω(x). (7)

A quadrature rule that satisfies (7) is typically called an interpolatory quadrature rule.

Now, if f /∈ Span(φn)n∈[N ], the authors studied the expected value and the variance of IEZ,n(f)

when x = (x1, . . . , xN ) is taken to be a random variable in XN that follows the distribution ofdensity

pDPP(x1, . . . , xN ) :=1

N !Det2 ΦN (x), (8)

with respect to the product measure ω⊗N defined on XN . As it was observed in [16], the nodes ofthe quadrature follow the distribution of the determinantal point process of reference measure ω andmarginal kernel κN defined by κN (x, y) =

∑n∈[N ] φn(x)φn(y). We refer to [20] for further details

on determinantal point processes. Now, we recall the main result of [14].Theorem 1. Let x be a random subset of X that follows the distribution of DPP of kernel κN andreference measure ω. Let f ∈ L2(ω), and n ∈ [N ]. Then

EDPPIEZ,n(f) =

∫Xf(x)φn(x)dω(x), (9)

andVDPPI

EZ,n(f) =∑

m≥N+1

〈f, φm〉2ω. (10)

Theorem 1 shows that the IEZ,n(f) is an unbiased estimator of∫X f(x)φn(x)dω(x), and its variance

depends on the coefficients 〈f, φm〉ω for m ≥ N + 1. Consequently, the expected squared error ofthe quadrature is equal to the variance of IEZ,n(f) and it is given by

EDPP

∣∣∣∣ ∫Xf(x)φn(x)dω(x)−

∑i∈[N ]

wEZ,ni f(xi)

∣∣∣∣2 =∑

m≥N+1

〈f, φm〉2ω. (11)

The identity (11) gives a theoretical guarantee for an interpolatory quadrature rule when the nodesfollow the distribution of the DPP defined by (8). Compared to existing work on the literature[11, 22, 23, 24], (11) is generic and applies to any orthonormal family.

Now, we may observe that the expected squared error in (11) depends strongly on the function f . Thismakes the comparison between EZQ and other quadratures, based on some test function f , tricky: thechoice of f may favor (or disfavor) EZQ. In order to circumvent this difficulty, we suggest to studya figure of merit that is independent of the choice of the function f . This is possible using kernelsthrough the study of the worst-case integration error on the unit ball of an RKHS. The definition ofthis quantity will be recalled in the following section.

2.2 The worst integration error in kernel quadrature

The use of the kernel framework in the context of numerical integration can be tracked back to thework of Hickernell [18, 19], who introduced the use of kernels to the quasi Monte Carlo community.Their use was popularized in the machine learning community by [28, 9]. In this framework, thequality of a quadrature is assessed by the worst-case integration error on the unit ball of an RKHS Fassociated to some kernel k : X × X → R+. This quantity is defined as follows

supf∈F,‖f‖F≤1

∣∣∣ ∫X

f(x)g(x)dω(x)−∑i∈[N ]

wif(xi)∣∣∣. (12)

1The dependency of the vector wEZ,n on x was dropped for simplicity.

3

This quantity reflects how good is the quadrature uniformly on the unit ball of F . Interestingly, thisquantity has a closed formula ∥∥∥∥µg − ∑

i∈[N ]

wik(xi, .)

∥∥∥∥F, (13)

where µg = Σg is the so-called embedding of g in the RKHS F . We shall use in Section 3.2 theequivalent expression (13) of the worst-case integration error, to derive a closed formula of

EDPP supf∈F,‖f‖F≤1

∣∣∣ ∫X

f(x)g(x)dω(x)−∑i∈[N ]

wEZ,ni f(xi)

∣∣∣2. (14)

By now, we observe that the weights wEZ,ni of EZQ are non-optimal in the sense that they do not

minimize (13). By definition, the optimal kernel quadrature for a given configuration x, such thatthe kernel matrixK(x) is non-singular, is the quadrature with nodes the xi and weights the wi thatminimize (13). We specify in Section 3.1 the subtle difference between the quadrature of Ermakovand Zolotukhin and the optimal kernel quadrature. Before that, we review the existing constructionsof the optimal kernel quadrature in the following section.

2.3 The design of the optimal kernel quadrature

The optimal kernel quadrature may be calculated numerically under the assumption that the matrixK(x) is non-singular. Indeed, for a given configuration of nodes x ∈ XN , the square of (13) isquadratic on w and have a unique solution given by wOKQ,g =K(x)−1µg(x)

2. In particular, theoptimal mixture

∑i∈[N ] w

OKQ,gi k(xi, .) takes the same values as µg on the nodes xi: the optimal

mixture interpolates the function µg on the configuration of nodes x. At this level, x is still adegree of freedom and need to be designed. This task was tackled by different approaches. Oneapproach consists on using adhoc designs for which a theoretical analysis of the convergence rateis possible. This is the case of, inter alia, the uniform grid in the periodic Sobolev space [6, 26],higher-order digital nets sequences in tensor products of Sobolev spaces [8], or tensor product ofscaled Hermite roots in the RKHS defined by the Gaussian kernel [22]. Another approach consists onusing a sequential algorithm to build up the configuration x [12, 13, 21, 7]. In general, each step ofthese greedy algorithms requires to solve a non-convex problem and costly approximations must beemployed. Alternatively, random designs, based on determinantal point processes and their mixtures[3, 4], were shown to have strong theoretical guarantees and competitive empirical performances.More precisely, it was shown that if x follows the distribution of DPP of reference measure ω andmarginal kernel κN , and if g ∈ L2(ω) such that ‖g‖ω ≤ 1, then

EDPP

∥∥∥∥µg − ∑i∈[N ]

wOKQ,gi k(xi, .)

∥∥∥∥2F≤ 2σN+1 + 2

( ∑n∈[N ]

|〈g, φn〉ω|)2NrN , (15)

where rN =∑m≥N+1 σm [2] (Theorem 4.8). However, numerical simulations suggest that the l.h.s.

of (15) converges to 0 at the faster rate O(σN+1), which corresponds to the best achievable rateaccording to [4]. This optimal rate was proved to be achieved, under some mild conditions on theeigenvalues σn, using the distribution of continuous volume sampling (CVS) [4]. This distribution isa mixture of determinantal point processes and is closely tied to the projection DPP used in [3] andcomes with the following guarantee

∀g ∈ L2(ω), ECVS

∥∥∥∥µg − ∑i∈[N ]

wOKQ,gi k(xi, .)

∥∥∥∥2F=∑m∈N∗

〈g, φn〉2ωεm(N), (16)

where εm(N) = O(σN+1) for every m ∈ N∗, so that the expected squared worst-integration error ofOKQ under the continuous volume sampling distribution scales as O(σN+1) for every g ∈ L2(ω).

2The dependency of the vector wOKQ,g on x was dropped for simplicity.

4

3 Main results

This section gathers the main contributions of this article. In Section 3.1, we prove that both EZQand OKQ belong to a larger class of quadrature rules called kernel-based interpolation quadrature(KBIQ). In Section 3.2, we prove a close formula of the expected squared worst-case integration errorof EZQ. In Section 3.3, we use Theorem 3 to improve on the existing theoretical guarantees of OKQwith DPPs.

3.1 Kernel-based interpolation quadrature

In this section, we define a new class of quadrature rules that extends both Ermakov-Zolotukhinquadrature and the optimal kernel quadrature. We start by the following observation: the weightswEZ,ni (x) of EZQ, defined in (6), writes as

wEZ,n(x) = ΦN (x)−1en. (17)

By observing that φn(x) = ΦN (x)ᵀen, and κN (x) = ΦN (x)ᵀΦN (x), we prove that

wEZ,n(x) = κN (x)−1φn(x), (18)

Equivalently, we have φn(x) = κN (x)wEZ,n(x). In other words,∑i∈[N ] w

EZ,ni (x)κN (xi, .) takes

the same values as φn on the nodes xi: wEZ,n(x) is the vector resulting of the interpolation of φnby the kernel κN . From this observation, we define kernel-based interpolation quadrature as anextension of EZQ as follows: let γ := (γm)m∈N∗ be a sequence of positive real numbers, and letM ∈ N∗ ∪ {+∞}. Define the kernel κγ,M on X × X by

∀x, y ∈ X , κγ,M (x, y) =

M∑m=1

γmφm(x)φm(y). (19)

Now, starting from a configuration x ∈ XN such that DetκN (x) > 0, we have Detκγ,M (x) > 0 3,and for a given g ∈ L2(ω), we define the vector of weights wγ,M,g(x) ∈ RN by

wγ,M,g(x) = κγ,M (x)−1µγ,Mg (x), (20)

where

µγ,Mg (x) =

M∑m=1

γm〈g, φm〉ωφm(x). (21)

We check again that∑i∈[N ] w

γ,M,gi κγ,M (xi, .) takes the same values as µγ,Mg on the nodes xi: the

mixture interpolates µγ,Mg on the nodes xi. Now, for a given g ∈ L2(ω), the vector of weightswγ,M,g(x) have two degrees of freedom: the sequence γ and the rank of the kernel M . Thesedegrees of freedom may be mixed in a variety of ways to cover a large class of quadrature rules.In particular, we show in Section 3.1.1 that, for any sequence γ, KBIQ is equivalent to EZQ whenM = N , and we show in Section 3.1.2 that KBIQ is equivalent to OKQ when M = +∞ and γ = σ;as summarized in Table 1. We may also consider M to be finite but strictly larger than N . Yet, thetheoretical analysis of these intermediate quadrature rules is beyond the scope of this work.

Quadrature M γ µγ,Mg κγ,M

EZQ N Any∑n∈[N ] γn〈g, φn〉ωφn κγ,N

EZQ N γm = 1 gN :=∑n∈[N ]〈g, φn〉ωφn κN

. . . . . . . . . . . . . . .OKQ +∞ σ µg k

Table 1: An overview of some examples of KBIQ with the corresponding couples (κγ,M ,µγ,Mg ).

3See Appendix D.1. in [3] for a proof.

5

3.1.1 EZQ is a special case of KBIQ

We recover EZQ, as defined in [14, 16], by taking M = N , and γ is defined by γm = 1 for everym ∈ N∗, and g ≡ φn for some n ∈ [N ]. The equivalent definition (20) extends EZQ to the situationwhen g /∈ EN . Even better, we show in the following that wγ,N,g(x) is independent of γ whenM = N . In particular, for any sequence of positive numbers γ we have

∀n ∈ [N ], wγ,N,φn(x) = wEZ,n(x). (22)

Proposition 2. Let g ∈ EN , and let x ∈ XN such that DetκN (x) > 0. Let γ = (γm)m∈N∗ andγ = (γm)m∈N∗ be two sequences of positive numbers. We have

wγ,N,g(x) = wγ,N,g(x). (23)

Thanks to the invariance of wγ,N,g with respect to γ, we simplify the notation and we writewEZ,g instead 4. Moreover, using this invariance, EZQ may be seen as an approximation of OKQwhen g ∈ EN . Indeed, by approximating the kernel matrix K(x) ≈ KN (x) where kN (x, y) =∑

n∈[N ] σnφn(x)φn(y), we have

K(x)−1µg(x) ≈ wEZ,g, (24)

sinceKN (x)−1µg(x) = wEZ,g by Proposition 2. Interestingly, this approximation is reminiscent to

the one used in [23] in the case of the Gaussian kernel.

3.1.2 OKQ is a special case of KBIQ

The optimal kernel quadrature is a special case of KBIQ when M = +∞ and γ = σ. Indeed, in thiscase, we have κγ,M = k, and µγ,Mg = µg , so that

wσ,M,g(x) =K(x)−1µg(x) = wOKQ,g. (25)

In other words, Ermakov-Zolotukhin quadrature and the optimal kernel quadrature are extremeinstances of interpolation based kernel quadrature that correspond to the regimes M = N andM = +∞. As it was shown in Proposition 2, the weights of EZQ depend only on the eigenfunctionsφm and do not depend on the eigenvalues σm. This is to be compared to the weights of OKQ thatdepend simultaneously on the eigenvalues and the eigenfunctions.

3.2 Main theorem

We give in this section the theoretical analysis of the worst case integration error of EZQ under thedistribution of the projection DPP.Theorem 3. Let N ∈ N∗. We have

∀g ∈ EN , EDPP‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F =

∑n∈[N ]

〈g, φn〉2ωrN , (26)

where rN =∑m≥N+1 σm. Moreover,

∀g ∈ L2(ω), EDPP‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F ≤ 4‖g‖2ωrN . (27)

As an immediate consequence of Theorem 3, we have

∀g ∈ L2(ω), EDPP supf∈F,‖f‖F=1

∣∣∣∣ ∫Xf(x)g(x)dω(x)−

∑i∈[N ]

wEZ,gi f(xi)

∣∣∣∣2 = O(rN ). (28)

In other words, the squared worst-case integration error of Ermakov-Zolotukhin quadrature withDPP nodes converges to 0 at the rate O(rN+1). This rate is slower than the rate of convergence of

4In the case g ≡ φn for some n ∈ [N ], we use wEZ,φn or wEZ,n alternatively.

6

EDPPIEZ,n(f)2 given by Theorem 1. Indeed, if f ∈ F then ‖f‖2F =

∑m∈N∗〈f, φm〉2ω/σm < +∞,

and Theorem 1 yieldsVDPPI

EZ,n(f)2 ≤ σN+1‖f‖2F , (29)so that

EDPP

∣∣∣∣ ∫Xf(x)φn(x)dω(x)−

∑i∈[N ]

wEZ,ni f(xi)

∣∣∣∣2 = VDPPIEZ,n(f)2 = O(σN+1). (30)

Now, observe that for some sequences we have σN+1 = o(rN+1). For instance, if σm = m−2s forsome s > 1/2, then rN+1 = O(N1−2s). We conclude that the convergence of EZQ under DPP isslower than the optimal rate O(σN+1), that was observed empirically for OKQ under DPP in [3] andproved theoretically for OKQ under CVS in [4], if we consider the worst-case integration error asa figure of merit. This is to be compared with the theoretical result of [14] that can not predict thedifference in the rate of convergence between EZQ and OKQ: our analysis highlights the interest ofusing kernels when comparing quadratures.

3.3 Improved theoretical guarantees for the optimal kernel quadrature with DPPs

Theorem 3 improves on the existing theoretical guarantees of the optimal kernel quadrature withdeterminantal point processes initially proposed in [3]. This is the purpose of the following result.Theorem 4. Let N ∈ N∗. We have

∀g ∈ L2(ω), EDPP‖µg −∑i∈[N ]

wOKQ,gi k(xi, .)‖2F ≤ 4‖g‖2ωrN . (31)

Compared to the analysis conducted in [3], Theorem 4 offers a sharper upper bound of

EDPP‖µg −∑i∈[N ]

wOKQ,gi k(xi, .)‖2F . (32)

Indeed, the upper bound (31) is dominated by∑Nn=1〈g, φn〉2ωrN comparably to the upper bound (15),

proved in [3], dominated by (∑Nn=1 |〈g, φn〉ω|)2NrN : our bound improves upon (15) by a factor of

N2, since

(

N∑n=1

|〈g, φn〉ω|)2 ≤ NN∑n=1

〈g, φn〉2ω ≤ N‖g‖2ω. (33)

Theorem 4 follows immediately from Theorem 3 by observing that

‖µg −∑i∈[N ]

wOKQ,gi k(xi, .)‖2F ≤ ‖µg −

∑i∈[N ]

wEZ,gi k(xi, .)‖2F . (34)

Table 2 summarizes the theoretical contributions of this work compared to the existing literature.

Quadrature Distribution Theoretical rate Empirical rate ReferenceEZQ DPP O(rN+1) O(rN+1) Theorem 3OKQ DPP N2O(rN+1) O(σN+1) [3]

O(rN+1) O(σN+1) Theorem 4OKQ CVS O(σN+1) O(σN+1) [4]

Table 2: A comparison of the rates given by Theorem 3 and Theorem 4 compared to the existingguarantees in the literature.

We give in the following section, a sketch of the main ideas behind the proof of Theorem 3.

4 Sketch of the proof

The proof of Theorem 3 decomposes into two steps. First, in Section 4.1, we give a decomposition ofthe squared approximation error ‖µg −

∑i∈[N ] w

EZ,gi k(xi, .)‖2F , then, in Section 4.2, we use this

decomposition to prove a closed formula of EDPP‖µg −∑i∈[N ] w

EZ,gi k(xi, .)‖2F .

7

4.1 A decomposition of the approximation error

Let g ∈ EN and let x ∈ XN such that DetκN (x) > 0, we have

‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F = ‖µg‖2F − 2µg(x)

ᵀwEZ,g + wEZ,gᵀK(x)wEZ,g. (35)

The last two terms of the r.h.s of (35) decompose as follows.

Proposition 5. Let g ∈ EN and let x ∈ XN such that DetκN (x) > 0. We have

µg(x)ᵀwEZ,g = ‖µg‖2F , (36)

and

wEZ,gᵀK(x)wEZ,g = ‖µg‖2F + εᵀΦN (x)−1ᵀ

K⊥N (x)ΦN (x)−1ε, (37)

where ε =∑n∈[N ]〈g, φn〉ωen, and k⊥N is the kernel defined by

k⊥N (x, y) =∑

m≥N+1

σmφm(x)φm(y). (38)

The proof of Proposition 5 is detailed in Appendix A.3. Now, by combining (35), (36) and (37), weget

‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F = �

��‖µg‖2F − 2���‖µg‖2F +�

��‖µg‖2F + εᵀΦN (x)−1ᵀ

K⊥N (x)ΦN (x)−1ε.

This proves the following result.

Theorem 6. Let g ∈ EN and let x ∈ XN such that DetκN (x) > 0. We have

‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F = εᵀΦN (x)−1

ᵀ

K⊥N (x)ΦN (x)−1ε, (39)

where ε =∑n∈[N ]〈g, φn〉ωen.

4.2 A tractable formula of the expected approximation error

In the following, we prove a closed formula for EDPP‖µg−∑i∈[N ] w

EZ,gi k(xi, .)‖2F . By Theorem 6,

it is enough to calculateEDPPε

ᵀΦN (x)−1ᵀ

K⊥N (x)ΦN (x)−1ε, (40)

for ε ∈ RN . For this purpose, observe thatK⊥N (x) =∑m≥N+1 σmφm(x)φm(x)ᵀ, so that

εᵀΦN (x)−1ᵀ

K⊥N (x)ΦN (x)−1ε =∑

m≥N+1

σmεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε. (41)

Therefore, the calculation of 40 boils down to the calculation of

EDPPεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε, (42)

for m ≥ N + 1. This is the purpose of the following result.

Theorem 7. Let ε =∑n∈[N ] εnen, ε =

∑n∈[N ] εnen ∈ RN , and m ≥ N + 1. Then

EDPPεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε =∑n∈[N ]

εnεn. (43)

In particular,

EDPPεᵀΦN (x)−1

ᵀ

K⊥N (x)ΦN (x)−1ε =∑

m≥N+1

σm∑n∈[N ]

εnεn. (44)

8

10 20 30 50 100log10(N)

7

6

5

4

3

2

log 1

0(Sq

uare

d er

ror)

EZQ1 (N)EZQ10 (N)EZQ20 (N)

rN + 1N + 1

(a) EZQ (s = 2)

10 20 30 50 100log10(N)

7

6

5

4

3

2

log 1

0(Sq

uare

d er

ror)

KBIQ1 (N)KBIQ10 (N)KBIQ20 (N)

rN + 1N + 1

(b) KBIQ (s = 2)

10 20 30 50 100log10(N)

7

6

5

4

3

2

log 1

0(Sq

uare

d er

ror)

OKQ1 (N)OKQ10 (N)OKQ20 (N)

rN + 1N + 1

(c) OKQ (s = 2)

10 20 30 50 100log10(N)

109876543

log 1

0(Sq

uare

d er

ror)

EZQ1 (N)EZQ10 (N)EZQ20 (N)

rN + 1N + 1

(d) EZQ (s = 3)

10 20 30 50 100log10(N)

109876543

log 1

0(Sq

uare

d er

ror)

KBIQ1 (N)KBIQ10 (N)KBIQ20 (N)

rN + 1N + 1

(e) KBIQ (s = 3)

10 20 30 50 100log10(N)

109876543

log 1

0(Sq

uare

d er

ror)

OKQ1 (N)OKQ10 (N)OKQ20 (N)

rN + 1N + 1

(f) OKQ (s = 3)

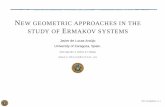

Figure 1: Squared worst-case integration error vs. number of nodes N for EZQ, KBIQ and OKQ inthe Sobolev space of periodic functions of order s ∈ {2, 3}.

We give the proof of Theorem 7 in Appendix A.4. By taking ε = ε =∑n∈[N ]〈g, φn〉ωen in

Theorem 7, we obtain (26). As for (27), it is sufficient to observe that

‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F ≤ 2

(‖µg − µgN ‖2F + ‖µgN −

∑i∈[N ]

wEZ,gi k(xi, .)‖2F

), (45)

where gN =∑n∈[N ]〈g, φn〉ωφn ∈ EN , so that we can apply (26) to gN and we obtain

EDPP‖µgN −∑i∈[N ]

wEZ,gi k(xi, .)‖2F =

∑n∈[N ]

〈g, φn〉2ω∑

m≥N+1

σm ≤ ‖g‖2ω∑

m≥N+1

σm. (46)

The term ‖µg − µgN ‖2F is upper bounded by σN+1‖g‖2ω. We give the details in Appendix A.This concludes the proof of Theorem 3. In the following section, we give numerical experimentsillustrating this result.

5 Numerical experiments

In this section, we illustrate the theoretical results presented in Section 3 in the case of the RKHSassociated to the kernel

ks(x, y) = 1 +∑m∈N∗

1

m2scos(2πm(x− y)), (47)

that corresponds to the periodic Sobolev space of order s on [0, 1] [5], and we take ω to be the uniformmeasure on X = [0, 1]. We compare the squared worst-case integration error of EZQ and OKQ andKBIQ, with M = 2N and γ = σ, for x that follows the distribution of the projection DPP and forg ∈ {e1, e10, e20}. We take N ∈ [5, 100]. Figure 1 shows log-log plots of the squared error w.r.t.N , averaged over 1000 samples for each point, for s ∈ {2, 3}. We observe that the squared error ofEZQ converges to 0 at the exact rate O(rN+1) predicted by Theorem 3, while the squared error ofOKQ converges to 0 at the rate O(σN+1) as it was already observed in [3], which is still better thanthe rate O(rN+1) proved in Theorem 4. Finally, KBIQ (M = 2N and γ = σ) converges to 0 at therate O(σN+1). We conclude that, by taking M = αN with α > 1, KBIQ have practically the sameaveraged error as OKQ (M = +∞). As we have mentioned before, the theoretical analysis of KBIQin the regime when M is finite and strictly larger than N is beyond the scope of this work, and wedefer it for future work.

9

6 Conclusion

We studied the quadrature rule proposed by Ermakov and Zolotukhin through the lens of kernelmethods. We proved that EZQ and OKQ belong to a larger class of quadrature rules that maybe defined through kernel based interpolation. From this new perspective, EZQ may be seen asan approximation of OKQ. Moreover, we studied the expected value of the squared worst-caseintegration error of EZQ when the nodes follow the distribution of a DPP. In particular, we proved thatEZQ converges to 0 at the rate O(rN+1) which is slower than the optimal rate O(σN+1) typicallyobserved for OKQ with DPPs. This work shows the importance of the worst-case integration erroras a figure of merit when comparing quadrature rules. Interestingly, we use our analysis of EZQ toimprove upon the existing theoretical guarantees of OKQ under DPPs. Finally, we illustrated thetheoretical results by some numerical experiments that hint that KBIQ in the regime M > N mayhave similar performances as OKQ. It would be interesting to study this broader class of quadraturesin the future.

Broader impact

This article makes contributions to the fundamentals of numerical integration, and due to its theoreticalnature, the author sees no ethical or immediate societal consequence of this work.

Acknowledgments and Disclosure of Funding

This project was supported by the AllegroAssai ANR project ANR-19-CHIA-0009. The authorwould like to thank the reviewers for their thorough and constructive reviews and would like to thankPierre Chainais and Rémi Bardenet for their constructive feedback on an early version of this work.

10

References[1] R. Bardenet and A. Hardy. Monte Carlo with determinantal point processes. The Annals of

Applied Probability, 30(1):368–417, 2020.

[2] A. Belhadji. Subspace sampling using determinantal point processes. PhD thesis, EcoleCentrale de Lille, 2020.

[3] A. Belhadji, R. Bardenet, and P. Chainais. Kernel quadrature with DPPs. In Advances in NeuralInformation Processing Systems 32, pages 12907–12917. 2019.

[4] A. Belhadji, R. Bardenet, and P. Chainais. Kernel interpolation with continuous volumesampling. Proceedings of the 37th International Coference on International Conference onMachine Learning, 2020.

[5] A. Berlinet and C. Thomas-Agnan. Reproducing kernel Hilbert spaces in probability andstatistics. Springer Science & Business Media, 2011.

[6] B. Bojanov. Uniqueness of the optimal nodes of quadrature formulae. Mathematics of computa-tion, 36(154):525–546, 1981.

[7] F. X. Briol, C. Oates, M. Girolami, and M. Osborne. Frank-Wolfe Bayesian quadrature:Probabilistic integration with theoretical guarantees. In Advances in Neural InformationProcessing Systems, pages 1162–1170, 2015.

[8] F. X. Briol, C. J. Oates, M. Girolami, M. A. Osborne, D. Sejdinovic, et al. Probabilisticintegration: A role in statistical computation? Statistical Science, 34(1):1–22, 2019.

[9] Y. Chen, M. Welling, and A. Smola. Super-samples from kernel herding. In Proceedings ofthe Twenty-Sixth Conference on Uncertainty in Artificial Intelligence, UAI’10, pages 109–116,Arlington, Virginia, United States, 2010. AUAI Press.

[10] J. F. Coeurjolly, A. Mazoyer, and P. O. Amblard. Monte carlo integration of non-differentiablefunctions on [0, 1]ι, ι = 1, . . . , d, using a single determinantal point pattern defined on [0, 1]d.arXiv preprint arXiv:2003.10323, 2020.

[11] P. J. Davis and P. Rabinowitz. Methods of numerical integration. 1984. Comput. Sci. Appl.Math, 1984.

[12] S. De Marchi. On optimal center locations for radial basis function interpolation: computationalaspects. Rend. Splines Radial Basis Functions and Applications, 61(3):343–358, 2003.

[13] S. De Marchi, R. Schaback, and H. Wendland. Near-optimal data-independent point locationsfor radial basis function interpolation. Advances in Computational Mathematics, 23(3):317–330,2005.

[14] S. M. Ermakov and V. Zolotukhin. Polynomial approximations and the monte-carlo method.Theory of Probability & Its Applications, 5(4):428–431, 1960.

[15] C. F. Gauss. Methodus nova integralium valores per approximationem inveniendi. apvdHenricvm Dieterich, 1815.

[16] G. Gautier, R. Bardenet, and M. Valko. On two ways to use determinantal point processes formonte carlo integration. In Advances in Neural Information Processing Systems, volume 32,2019.

[17] P. Glasserman. Monte Carlo methods in financial engineering, volume 53. Springer Science &Business Media, 2013.

[18] F. J. Hickernell. Quadrature error bounds with applications to lattice rules. SIAM Journal onNumerical Analysis, 33(5):1995–2016, 1996.

[19] F. J. Hickernell. A generalized discrepancy and quadrature error bound. Mathematics ofcomputation, 67(221):299–322, 1998.

11

[20] J. B. Hough, M. Krishnapur, Y. Peres, and B. Virág. Determinantal processes and independence.Probability surveys, 3:206–229, 2006.

[21] F. Huszár and D. Duvenaud. Optimally-weighted herding is Bayesian quadrature. In Proceedingsof the Twenty-Eighth Conference on Uncertainty in Artificial Intelligence, UAI’12, pages 377–386. AUAI Press, 2012.

[22] T. Karvonen, C. J. Oates, and M. Girolami. Integration in reproducing kernel Hilbert spaces ofgaussian kernels. arXiv preprint arXiv:2004.12654, 2020.

[23] T. Karvonen and S. Särkkä. Gaussian kernel quadrature at scaled Gauss-Hermite nodes. BITNumerical Mathematics, pages 1–26, 2019.

[24] J. Ma, V. Rokhlin, and S. Wandzura. Generalized gaussian quadrature rules for systems ofarbitrary functions. SIAM Journal on Numerical Analysis, 33(3):971–996, 1996.

[25] N. Metropolis and S. Ulam. The Monte Carlo method. Journal of the American statisticalassociation, 44(247):335–341, 1949.

[26] E. Novak, M. Ullrich, and H. Wozniakowski. Complexity of oscillatory integration for univariatesobolev spaces. Journal of Complexity, 31(1):15–41, 2015.

[27] C. P. Robert and G. Casella. Monte Carlo statistical methods. Springer, 2004.

[28] A. Smola, A. Gretton, L. Song, and B. Schölkopf. A Hilbert space embedding for distributions.In International Conference on Algorithmic Learning Theory, pages 13–31. Springer, 2007.

12

A Detailed proofs

A.1 Proof of Proposition 2

By definition, we have

∀x1, x2 ∈ X , κγN (x1, x2) =∑n∈[N ]

γnφn(x1)φn(x2), (48)

therefore∀x1, x2 ∈ X , κγN (x1, x2) =

∑n∈[N ]

ρnφn(x1)γnφn(x2), (49)

where∀n ∈ [N ], ρn =

γnγn. (50)

Then∀x ∈ XN , κγN (x) = ΦρN (x)ᵀΦγN (x) , (51)

whereΦρN (x) = (ρnφn(xi))(n,i)∈[N ]×[N ] ∈ RN×N , (52)

andΦγN (x) = (γnφn(xi))(n,i)∈[N ]×[N ] ∈ RN×N . (53)

Moreover, by definition of µγg , we have

∀x ∈ X , µγg (x) =∑n∈[N ]

γn〈g, φn〉ωφn(x), (54)

therefore∀x ∈ X , µγg (x) =

∑n∈[N ]

γn〈g, φn〉ωρnφn(x), (55)

so that∀x ∈ XN , µγg (x) = ΦρN (x)ᵀα, (56)

whereα = (γn)n∈[N ] ∈ RN . (57)

Combining (51) and (56), we prove that for any x ∈ XN such that DetκN (x) > 0, we have

wγ,N,g(x) = κγN (x)−1µγg (x)

= ΦγN (x)−1 ΦρN (x)−1ᵀ

µγg (x)

= ΦγN (x)−1 ΦρN (x)−1ᵀ

ΦρN (x)ᵀα

= ΦγN (x)−1 α. (58)

A.2 Useful results

We gather in this section some results that will be useful in the following proofs.

A.2.1 A useful lemma

We prove in the following a lemma that we will use in Section A.3.

Lemma 8. Let x ∈ XN such that DetκN (x) > 0. For n, n′ ∈ [N ], define

τn,n′(x) =√σn√σn′φn(x)

ᵀKN (x)−1φn′(x). (59)

Then∀n, n′ ∈ [N ], τn,n′(x) = δn,n′ . (60)

13

Proof. We have

KN (x) = Φ√σ

N (x)ᵀΦ√σ

N (x), (61)

whereΦ√σ

N (x) = (√σnφn(xi))(n,i)∈[N ]×[N ]. (62)

Let n, n′ ∈ [N ]. We have √σnφn(x)

ᵀ = eᵀnΦσN (x), (63)

and √σn′φn′(x) = ΦσN (x)ᵀen′ . (64)

Therefore

τn,n′(x) = eᵀnΦσN (x)

(ΦσN (x)ᵀΦσN (x)

)−1ΦσN (x)ᵀen′ (65)

= eᵀnΦσN (x)ΦσN (x)−1ΦσN (x)ᵀ−1

ΦσN (x)ᵀen′ (66)= eᵀnen′ (67)= δn,n′ . (68)

A.2.2 A borrowed result

We recall in the following a result proven in [16] that we will use in Section A.4.

Proposition 9. [Theorem 1 in [16]] Let x be a random subset of X that follows the distribution ofDPP of kernel κN and reference measure ω. Let f ∈ L2(ω), and n, n′ ∈ [N ] such that n 6= n′. Then

CovDPP(IEZ,n(f), IEZ,n′(f)) = 0. (69)

A.3 Proof of Proposition 5

Let x ∈ XN such that the condition DetκN (x) > 0 is satisfied, and let g ∈ EN . We start by theproof of (36). By definition of µg , we have

µg(x) =∑n∈[N ]

σn〈g, φn〉ωφn(x), (70)

so thatµg(x) =

∑n∈[N ]

σn〈g, φn〉ωφn(x). (71)

Proposition 2 yieldswEZ,g =KN (x)−1µg(x). (72)

Therefore

wEZ,gᵀµg(x) = µg(x)KN (x)−1µg(x)

=∑

n,n′∈[N ]

σnσn′〈g, φn〉ω〈g, φn′〉ωφn(x)ᵀKN (x)−1φn′(x)

=∑n∈[N ]

σn〈g, φn〉2ωτn,n(x)

+∑

n,n′∈[N ]n 6=n′

√σnσn′〈g, φn〉ω〈g, φn′〉ωτn,n′(x), (73)

where τn,n′(x) is defined by

τn,n′(x) =√σn√σn′φn(x)

ᵀKN (x)−1φn′(x). (74)

14

Now, Lemma 8 yields ∑n∈[N ]

σn〈g, φn〉2ωτn,n(x) =∑n∈[N ]

σn〈g, φn〉2ω

= ‖µg‖2F , (75)

and ∑n,n′∈[N ]n 6=n′

√σn√σn′〈g, φn〉ω〈g, φn′〉ωτn,n′(x) = 0. (76)

Combining (73), (75) and (76), we obtain

wEZ,gᵀµg(x) = ‖µg‖2F . (77)

We move now to the proof of (37). We have by the Mercer decomposition

K(x) =

+∞∑m=1

σmφm(x)φm(x)ᵀ (78)

=

N∑m=1

σmφm(x)φm(x)ᵀ +

+∞∑m=N+1

σmφm(x)φm(x)ᵀ. (79)

Moreover, observe that

KN (x) =

N∑m=1

σmφm(x)φm(x)ᵀ, (80)

and

K⊥N (x) =

+∞∑m=N+1

σmφm(x)φm(x)ᵀ. (81)

ThereforeK(x) =KN (x) +K⊥N (x), (82)

so thatwEZ,gᵀK(x)wEZ,g = wEZ,gᵀKN (x)wEZ,g + wEZ,gᵀK⊥N (x)wEZ,g. (83)

In order to evaluate wEZ,gᵀKN (x)wEZ,g , we use Proposition 2, and we get

wEZ,g =KN (x)−1µg(x), (84)

so that

wEZ,gᵀKN (x)wEZ,g = wEZ,gᵀKN (x)KN (x)−1µg(x)

= wEZ,gᵀµg(x)

= ‖µg‖2F . (85)

Finally, by definitionwEZ,g = ΦN (x)−1ε, (86)

where ε =∑n∈[N ]〈g, φn〉ωen. Therefore

wEZ,gᵀKN (x)⊥wEZ,g = εᵀΦN (x)−1ᵀ

KN (x)⊥ΦN (x)−1ε. (87)

A.4 Proof of Theorem 7

Let m ∈ N∗ such that m ≥ N + 1. We prove that

∀ε, ε ∈ RN , EDPPεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε =∑n∈N

εnεn. (88)

15

For this purpose, let ε, ε ∈ RN , and observe that

εᵀΦN (x)−1ᵀ

φm(x) =∑n∈[N ]

εneᵀnΦN (x)−1

ᵀ

φm(x) (89)

=∑n∈[N ]

εnwEZ,nφm(x) (90)

=∑n∈[N ]

εnIEZ,n(φm). (91)

andφm(x)ᵀΦN (x)−1ε =

∑n∈[N ]

εnIEZ,n(φm). (92)

Therefore

εᵀΦN (x)−1ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε =∑n∈[N ]

∑n′∈[N ]

εnεn′IEZ,n(φm)IEZ,n′(φm), (93)

then

EDPPεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε =∑n∈[N ]

∑n′∈[N ]

εnεn′EDPPIEZ,n(φm)IEZ,n′(φm).

(94)Now, for n, n′ ∈ [N ],

EDPPIEZ,n(φm) =

∫Xφm(x)φn(x)dω(x) = 0, (95)

andEDPPI

EZ,n′(φm) =

∫Xφm(x)φn′(x)dω(x) = 0. (96)

ThereforeEDPPI

EZ,n(φm)IEZ,n′(φm) = CovDPP(IEZ,n(φm), IEZ,n′(φm)). (97)

Now, by Proposition 9, we have CovDPP(IEZ,n(φm), IEZ,n′(φm)) = δn,n′ , so that

EDPPIEZ,n(φm)IEZ,n′(φm) = δn,n′ , (98)

and

EDPPεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε =∑n∈[N ]

∑n′∈[N ]

εnεn′EDPPIEZ,n(φm)IEZ,n′(φm)

=∑n∈[N ]

∑n′∈[N ]

εnεn′δn,n′

=∑n∈[N ]

εnεn. (99)

Now, for ε ∈ RN and m ∈ N∗ define Yε,m by

Yε,m = σmεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε. (100)

We haveEDPPYε,m = σm

∑n∈[N ]

ε2n, (101)

and the Yε,m are non-negative since

Yε,m = σm(εᵀΦN (x)−1ᵀ

φm(x))2 ≥ 0, (102)

moreover,+∞∑

m=N+1

EDPPYε,m < +∞. (103)

16

Therefore, by Beppo Levi’s lemma

EDPP

+∞∑m=N+1

Yε,m =

+∞∑m=N+1

EDPPYε,m

=∑n∈[N ]

ε2n

+∞∑m=N+1

σm. (104)

Now, in general for m ∈ N∗ such that m ≥ N + 1, we have

σmεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε ≤ 1

2(Yε,m + Yε,m), (105)

so that for M ≥ N + 1, we haveM∑

m=N+1

σmεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε ≤ 1

2(

+∞∑m=N+1

Yε,m +

+∞∑m=N+1

Yε,m). (106)

Therefore, by dominated convergence theorem we conclude that

EDPP

+∞∑m=N+1

σmεᵀΦN (x)−1

ᵀ

φm(x)φm(x)ᵀΦN (x)−1ε =

+∞∑m=N+1

σm∑n∈[N ]

εnεn. (107)

A.5 Proof of Theorem 3

Let g ∈ EN , and denote ε =∑n∈[N ]〈g, φn〉ωen. Combining Theorem 6 and Theorem 7, we obtain

EDPP‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F =

∑m≥N+1

σm∑n∈[N ]

ε2n. (108)

Now let g ∈ L2(ω), we have

‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F = ‖µg − µgN + µgN −

∑i∈[N ]

wEZ,gi k(xi, .)‖2F (109)

≤ 2(‖µg − µgN ‖2F + ‖µgN −

∑i∈[N ]

wEZ,gi k(xi, .)‖2F

), (110)

where gN =∑n∈[N ]〈g, φn〉ωφn ∈ EN .

Now, observe thatµγ,Ng = µγ,NgN , (111)

so thatwEZ,g = wEZ,gN . (112)

Therefore‖µg −

∑i∈[N ]

wEZ,gi k(xi, .)‖2F ≤ 2

(‖µg − µgN ‖2F + ‖µgN −

∑i∈[N ]

wEZ,gNi k(xi, .)‖2F

). (113)

Now, we have

‖µg − µgN ‖2F =∑

m≥N+1

σm〈g, φm〉2ω

≤ σN+1

∑m≥N+1

〈g, φm〉2ω

≤ rN+1‖g‖2ω. (114)Moreover, by (26) we have

EDPP‖µgN −∑i∈[N ]

wEZ,gNi k(xi, .)‖2F =

∑n∈[N ]

〈g, φn〉2ωrN+1

≤ ‖g‖2ωrN+1. (115)Combining (113), (114) and (115), we obtain

‖µg −∑i∈[N ]

wEZ,gi k(xi, .)‖2F ≤ 4‖g‖2ωrN+1. (116)

17