A Guide to Digital Television Systems and Measurements · 2017. 8. 7. · (Section 3), system...

A Guide to Digital Television Systems and Measurements

Copyright ® 1997, Tektronix, Inc. All rights reserved.

Acknowledgment

Authored by David K. Fibush with contributions from: Bob Elkind, Kenneth Ainsworth

Some text and figures used in this booklet reprinted with permission from Designing Digital Systems published by Grass Valley Products.

Contents

1. Introduction

2. Digital Basics
    Component and Composite (analog)
    Sampling and Quantizing
    Digital Video Standards
    Serial Digital Video
    Rate Conversion – Format Conversion

3. Digital Audio
    AES/EBU Audio Data Format
    Embedded Audio

4. System Hardware and Issues
    Cable Selection
    Connectors
    Patch Panels
    Terminations and Loop-throughs
    Digital Audio Cable and Connector Types
    Signal Distribution, Reclocking
    System Timing

5. Monitoring and Measurements

6. Measuring the Serial Signal
    Waveform Measurements
    Measuring Serial Digital Video Signal Timing

7. Definition and Detection of Errors
    Definition of Errors
    Quantifying Errors
    Nature of the Studio Digital Video Transmission Systems
    Measuring Bit Error Rates
    An Error Measurement Method for Television
    System Considerations

8. Jitter Effects and Measurements
    Jitter in Serial Digital Signals
    Measuring Jitter

9. System Testing
    Stress Testing
    SDI Check Field

10. Bibliography
    Articles and Books
    Standards

11. Glossary

1. Introduction

The following major topics are covered in this booklet:

1. A definition of digital television including digital basics, video standards, conversions between video signal forms, and digital audio formats and standards.

2. Considerations in selecting transmission system passive components such as cable, connectors and related impedance matching concerns are discussed as well as continued use of the passive loop-through concept. Much of the transmission system methods and hardware already in place may be used for serial digital.

3. New and old systems issues that are particularly important for digital video are discussed, including equalization, reclocking, and system timing.

4. Test and measurement of serial digital signals can be characterized in three different aspects: usage, methods, and operational environment. Types of usage include: designer’s workbench, manufacturing quality assurance, user equipment evaluation, system installation and acceptance testing, system and equipment maintenance, and perhaps most important, operation.

5. Several measurement methods are covered in detail, including serial waveform measurements, definition and detection of errors, jitter effects and measurements, and system testing with special test signals.

New methods of test and measurement are required to determine the health of the digital video transmission system and the signals it carries. Analysis methods for the program video and audio signals are well known. However, measurements for the serial form of the digitized signal differ greatly from those used to analyze the familiar baseband video signal.

This guide addresses many topics of interest to designers and users of digital television systems. You’ll find information on embedded audio (Section 3), system timing issues (Section 4), and system timing measurements (Section 6). Readers who are already familiar with digital television basics may wish to start with Section 4, System Hardware and Issues. Discussion of specific measurement topics begins in Section 6.

2. Digital Basics

Component and Composite (analog)

Image origination sources, such as cameras and telecines, internally produce color pictures as three, full bandwidth signals – one each for Green, Blue and Red. Capitalizing on human vision not being as acute for color as it is for brightness level, television signals are generally transformed into luminance and color difference signals as shown in Figure 2-1. Y, the luminance signal, is derived from the GBR component colors, based on an equation such as:

Y = 0.59G + 0.30R + 0.11B

Figure 2-1. Component signals.

The color difference signals operate at reduced bandwidth, typically one-half of the luminance bandwidth. In some systems, specifically NTSC, the color difference signals have even lower and unequal bandwidths. Component signal formats and voltage levels are not standardized for 525-line systems, whereas there is an EBU document (EBU N-10) for 625-line systems. Details of component analog systems are fully described in another booklet from Tektronix, Solving the Component Puzzle.

It’s important to note that signal level values in the color difference domain allow combinations of Y, B-Y, R-Y that will be out of legal gamut range when converted to GBR. Therefore, there’s a need for gamut checking of color difference signals when making operational adjustments for either the analog or digital form of these signals.

Most television signals today are two field-per-frame “interlaced.” A frame contains all the scan lines for a picture, 525 lines in 30 frame/second applications and 625 lines in 25 frame/second applications. Interlacing increases the temporal resolution by providing twice as many fields per second, each one with only half the lines. Every other picture line is represented in the first field and the others are filled in by the second field.

Another way to look at interlace is that it’s bandwidth reduction. Display rates of 50 or more presentations per second are required to eliminate perceived flicker. By using two-field interlace, the bandwidth for transmission is reduced by a factor of two. Interlace does produce artifacts in pictures with high vertical information content. However, for television viewing, this isn’t generally considered a problem when contrasted to the cost of twice the bandwidth. In computer display applications, the artifacts of interlace are unacceptable so progressive scanning is used, often with frame rates well above 60 Hz. As television (especially high definition) and computer applications become more integrated there will be a migration from interlace to progressive scanning for all applications.

Further bandwidth reduction of the television signal occurs when it’s encoded into composite PAL or NTSC as shown in Figure 2-2. NTSC is defined for studio operation by SMPTE 170M, which formalizes and updates the never fully approved RS 170A. Definitions for PAL and NTSC (as well as SECAM) can be found in ITU-R (formerly CCIR) Report 624. Where each GBR signal may have a 6 MHz bandwidth, color difference signals would typically have Y at 6 MHz and each color difference signal at 3 MHz; however, a composite signal is one channel of 6 MHz or less. The net result is a composite 6 MHz channel carrying color fields at a 60 per second rate that in its uncompressed, progressive scan form, would have required three 12 MHz channels for a total bandwidth of 36 MHz. So, data compression is nothing new; digital just makes it easier.

Figure 2-2. Composite encoding. (Block diagram: R-Y and B-Y, each 0-1.5 MHz, are quadrature modulated at subcarrier frequency to form chroma, 2-5 MHz, which is added to Y, 0-5 MHz, to give composite video, 0-5 MHz.)

For composite NTSC signals, there are additional gamut considerations when converting from the color difference domain. NTSC transmitters will not allow 100 percent color amplitude on signals with high luminance level (such as yellow). This is because the transmitter carrier will go to zero for signals greater than about 15 percent above 1 volt. Hence, there’s a lower gamut limit for some color difference signals to be converted to NTSC for RF transmission.

As part of the encoding process, sync and burst are added as shown in Figure 2-3. Burst provides a reference for decoding back to components. The phase of burst with respect to sync edge is called SCH phase, which must be carefully controlled in most studio applications. For NTSC, 7.5 IRE units of setup is added to the luminance signal. This poses some conversion difficulties, particularly when decoding back to component. The problem is that it’s relatively easy to add setup; but removing it when the amplitudes and timing of setup in the composite domain are not well controlled can result in black level errors and/or signal disturbances at the ends of the active line.
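The luminance equation and the gamut remark earlier in this section can be illustrated with a short sketch. It assumes normalized signals (0 to 1 for G, B and R) and unscaled B-Y and R-Y differences; the function names are hypothetical and chosen only for this example.

```python
# Luminance weights taken from the equation Y = 0.59G + 0.30R + 0.11B.
W_G, W_R, W_B = 0.59, 0.30, 0.11


def to_color_difference(g, b, r):
    """Convert normalized G, B, R (0..1) to Y, B-Y, R-Y (unscaled, an assumption)."""
    y = W_G * g + W_R * r + W_B * b
    return y, b - y, r - y


def to_gbr(y, b_y, r_y):
    """Invert the conversion: recover G, B, R from Y, B-Y, R-Y."""
    b = y + b_y
    r = y + r_y
    g = (y - W_R * r - W_B * b) / W_G
    return g, b, r


def in_gbr_gamut(y, b_y, r_y, tolerance=1e-6):
    """True when the color difference triple converts to G, B, R all within 0..1."""
    return all(-tolerance <= c <= 1.0 + tolerance for c in to_gbr(y, b_y, r_y))


# 100% yellow (G = R = 1, B = 0) is a legal color.
yellow = to_color_difference(1.0, 0.0, 1.0)
print(yellow, in_gbr_gamut(*yellow))

# A legal-looking Y with an excessive B-Y needs B > 1, so it is out of gamut.
print(in_gbr_gamut(0.5, 0.6, 0.0))
```

The second case shows why gamut checking is needed: each of Y, B-Y and R-Y is within its own range, yet the combination has no valid GBR equivalent.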

Sampling and Quantizing

The first step in the digitizing process is to “sample” the continuous variations of the analog signal as shown in Figure 2-4. By looking at the analog signal at discrete time intervals, a sequence of voltage samples can be stored, manipulated and later, reconstructed.

In order to recover the analog signal accurately, the sample rate must be rapid enough to avoid missing important information. Generally, this requires the sampling frequency to be at least twice the highest analog frequency. In the real world, the frequency is a little higher than twice. (The Nyquist Sampling Theorem says that the interval between successive samples must be equal to or less than one-half of the period of the highest frequency present in the signal.)

The second step in digitizing video is to “quantize” by assigning a digital number to the voltage levels of the sampled analog signal – 256 levels for 8-bit video, 1024 for 10-bit video, and up to several thousand for audio.

To get the full benefit of digital, 10-bit processing is required. While most current tape machines are 8-bit, SMPTE 125M calls out 10-bit as the interface standard. Processing at less than 10 bits may cause truncation and rounding artifacts, particularly in electronically generated pictures. Visible defects will be revealed in the picture if the quantization levels are too coarse (too few levels). These defects can appear as “contouring” of the picture. However, the good news is that random noise and picture details present in most live video signals actually help conceal these contouring effects by adding a natural randomness to them. Sometimes the number of quantizing levels must be reduced; for example, when the output of a 10-bit processing device feeds an 8-bit recorder. In this case, contouring effects are minimized by deliberately adding a small amount of random noise (dither) to the signal. This technique is known as randomized rounding.
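The 10-bit to 8-bit reduction described above can be sketched as follows. This is a minimal illustration, not the method used by any particular recorder: the dither here is assumed to be a uniformly distributed random value of 0 to 3 added before the two least significant bits are dropped, and the function names are hypothetical.

```python
import random


def truncate_10_to_8(code10):
    """Drop the two least significant bits with no dither."""
    return code10 >> 2


def randomized_round_10_to_8(code10, rng):
    """Add uniform dither of 0..3 (an assumed distribution), then drop two bits."""
    return min((code10 + rng.randrange(4)) >> 2, 255)


rng = random.Random(1)   # fixed seed so the demonstration is repeatable
level = 514              # a flat 10-bit level equal to 128.5 in 8-bit units

plain = [truncate_10_to_8(level) for _ in range(10_000)]
dithered = [randomized_round_10_to_8(level, rng) for _ in range(10_000)]

print(sum(plain) / len(plain))        # truncation always gives the same code
print(sum(dithered) / len(dithered))  # dithered codes average close to 128.5
```

Truncation maps every sample of the flat level to the same 8-bit code, which is what produces contouring on a shallow ramp. With dither the individual samples vary between adjacent codes, but their average preserves the original level.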

Digital Video StandardsAlthough early experimentswith digital technology werebased on sampling the com-posite (NTSC or PAL) signal,it was realized that for thehighest quality operation,component processing wasnecessary. The first digitalstandards were component.Interest in composite digitalwas revived when Ampexand Sony announced a com-posite digital recording for-mat, which became known as

Figure 2-3. Color difference and composite waveforms.

Figure 2-4. Sampling an analog signal.

page 5

signals CB and CR, which arescaled versions of the signals B-Y and R-Y.The sampling structuredefined is known as “4:2:2.”This nomenclature is derivedfrom the days when multi-ples of NTSC subcarrier werebeing considered for the sam-pling frequency. This ap-proach was abandoned, butthe use of “4” to representthe luminance sampling fre-quency was retained. Thetask force mentioned aboveexamined luminance sam-pling frequencies from12 MHz to 14.3 MHz. Theyselected 13.5 MHz as a com-promise because the sub-multiple 2.25 MHz is a factorcommon to both 525- and625-line system. Someextended definition TV sys-tems use a higher resolutionformat called 8:4:4 which hastwice the bandwidth of 4:2:2.Parallel component digital.Rec. 601 described the sam-pling of the signal. Electricalinterfaces for the data pro-duced by this sampling werestandardized separately bySMPTE and the EBU. Theparallel interface for525/59.94 was defined bySMPTE as SMPTE Standard125M (a revision of the ear-

lier RP-125), and for 625/50by EBU Tech 3267 (a revi-sion of the earlier EBU Tech3246). Both of these wereadopted by CCIR and areincluded in Recommenda-tion 656, the documentdefining the hardware inter-face.The parallel interface useseleven twisted pairs and 25-pin “D” connectors. (Theearly documents specifiedslide locks on the connec-tors; later revisions changedthe retention mechanism to4/40 screws.) This interfacemultiplexes the data wordsin the sequence CB, Y, CR, Y,CB…, resulting in a data rateof 27 Mwords/s. The timingsequences SAV and EAVwere added to each line torepresent Start of ActiveVideo and End of ActiveVideo. The digital active linecontains 720 luminance sam-ples, and includes space forrepresenting analog blankingwithin the active line. Rec. 601 specified eight bitsof precision for the datawords representing the vid-eo. At the time the standardswere written, some partici-pants suggested that this maynot be adequate, and provi-sion was made to expand theinterface to 10-bit precision.Ten-bit operation has indeedproved beneficial in manycircumstances, and the latestrevisions of the interfacestandard provide for a 10-bitinterface, even if only eightbits are used. Digital-to-ana-log conversion range is cho-sen to provide headroomabove peak white and foot-room below black as shownin Figure 2-5. Quantizing lev-els for black and white areselected so the 8-bit levelswith two “0”s added willhave the same values as the10-bit levels. Values 000 to003 and 3FF to 3FC arereserved for synchronizing

D-2. Initially, these machineswere projected as analogin/out devices for use inexisting analog NTSC andPAL environments; digitalinputs and outputs were pro-vided for machine-to-ma-chine dubs. However, thepost-production communityrecognized that greateradvantage could be taken ofthe multi-generation capabil-ity of these machines if theywere used in an all digitalenvironment.Rec. 601. RecommendationITU-R BT.601 (formerly CCIRRecommendation 601) is nota video interface standard,but a sampling standard. Rec.601 evolved out of a jointSMPTE/EBU task force todetermine the parameters fordigital component video forthe 525/59.94 and 625/50television systems. Thiswork culminated in a seriesof tests sponsored by SMPTEin 1981, and resulted in thewell known CCIR Recom-mendation 601. This docu-ment specified the samplingmechanism to be used forboth 525 and 625 line sig-nals. It specified orthogonalsampling at 13.5 MHz forluminance, and 6.75 MHzfor the two color difference

Figure 2-5. Luminance quantizing.

Excluded

Peak

Black

Excluded

766.3 mv

700.0 mv

0.0 mv

-51.1 mv

3FF

3AC

040

000

11 1111 1111

11 1010 1100

00 0100 0000

00 0000 0000

page 6

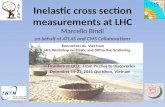

purposes. Similar factorsdetermine the quantizing val-ues for color difference sig-nals as shown in Figure 2-6.Figure 2-7 shows the locationof samples and digital wordswith respect to an analoghorizontal line. Because thetiming information is carriedby EAV and SAV, there is noneed for conventional syn-chronizing signals, and thehorizontal intervals (and theactive line periods during thevertical interval) may beused to carry ancillary data.The most obvious applica-tion for this data space is tocarry digital audio, and doc-uments are being preparedby SMPTE to standardize theformat and distribution ofthe audio data packets.Rec. 601/656 is a well proventechnology with a full rangeof equipment available forproduction and post produc-tion. Generally, the parallelinterface has been super-seded by a serial implemen-tation, which is far morepractical in larger installa-tions. Rec. 601 provides allof the advantages of both dig-ital and component opera-tion. It’s the system of choicefor the highest possible qual-ity in 525- or 625-linesystems.Parallel composite digital.The composite video signalis sampled at four times the(NTSC or PAL) subcarrierfrequency, giving nominalsampling rates of 14.3 MHzfor NTSC and 17.7 MHz forPAL. The interface is stan-dardized for NTSC as SMPTE244M and, at the time ofwriting, EBU documentationis in process for PAL. Bothinterfaces specify ten-bit pre-cision, although D-2 and D-3machines record only eightbits to tape. Quantizing ofthe NTSC signal (shown inFigure 2-8) is defined with amodest amount of headroomabove 100% bars, a smallfootroom below sync tip andthe same excluded values asfor component.PAL composite digital hasbeen defined to minimize

quantizing noise by using a maximum amount of the available digital range. As can be seen in Figure 2-9, the peak analog values actually exceed the digital dynamic range, which might appear to be a mistake. Because of the specified sampling axis, reference to subcarrier and the phase of the highest luminance level bars (such as yellow), the samples never exceed the digital dynamic range. The values involved are shown in Figure 2-10.

Figure 2-6. Color difference quantizing.

Level           Voltage      Hex    Binary
Excluded        399.2 mV     3FF    11 1111 1111
Max Positive    350.0 mV     3C0    11 1100 0000
Black           0.0 mV       200    10 0000 0000
Max Negative    -350.0 mV    040    00 0100 0000
Excluded        -400.0 mV    000    00 0000 0000

Figure 2-7. Digital horizontal line. (COMPOSITE: full analog line digitized. COMPONENT: active line digitized, EAV/SAV added. After the last active picture sample, EAV is carried as the words 3FF, 000, 000, XYZ. Before the first active picture sample, SAV is carried as 3FF, 000, 000, XYZ at word numbers 1712 to 1715, and the data then resumes as Cb, Y, Cr, Y at word numbers 0 to 3.)

Figure 2-8. NTSC quantizing.

Level          Voltage      Hex    Binary
Excluded       998.7 mV     3FF    11 1111 1111
100% Chroma    937.7 mV     3CF    11 1100 1111
Peak White     714.3 mV     320    11 0010 0000
Blanking       0.0 mV       0F0    00 1111 0000
Sync Tip       -285.7 mV    010    00 0001 0000
Excluded       -306.1 mV    000    00 0000 0000

Figure 2-9. PAL quantizing.

Level         Voltage      Hex    Binary
Excluded      913.1 mV     3FF    11 1111 1111
Peak White    700.0 mV     34C    11 0100 1100
Blanking      0.0 mV       100    01 0000 0000
Sync Tip      -300.0 mV    004    00 0000 0100
Excluded      -304.8 mV    000    00 0000 0000

Figure 2-10. PAL yellow bar sampling. (100% yellow bar: luminance level 0.620 V, peak of the analog signal 0.934 V, excluded values 0.9095 to 0.9131 V, highest sample 0.886 V. Samples are taken at the U+V, -U+V, -U-V and U-V points, with the bar’s subcarrier at +167° relative to reference subcarrier.)

Like the component interface, the composite digital active line is long enough to accommodate the analog active line and the analog blanking edges. Unlike the component interface, the composite interface transmits a digital representation of conventional sync and burst during the horizontal blanking interval. A digital representation of vertical sync and equalizing pulses is also transmitted over the composite interface.

Composite digital installations provide the advantages of digital processing and interface, and particularly the multi-generation capabilities of digital recording. However, there are some limitations. The signal is composite and does bear the footprint of NTSC or PAL encoding, including the narrow-band color information inherent to these coding schemes. Processes such as chroma key are generally not satisfactory for high quality work, and separate provision must be made for a component derived keying signal. Some operations, such as digital effects, require that the signal be converted to component form for processing, and then re-encoded to composite. Also, the full excursion of the composite signal has to be represented by 256 levels on 8-bit recorders. Nevertheless, composite digital provides a more powerful environment than analog for NTSC and PAL installations and is a very cost effective solution for many users.

As with component digital, the parallel composite interface uses a multi-pair cable and 25-pin “D” connectors. Again, this has proved to be satisfactory for small and medium sized installations, but practical implementation of a large system requires a serial interface.

16:9 widescreen component digital signals. Many television markets around the world are seeing the introduction of delivery systems for widescreen (16:9 aspect ratio) pictures. Some of these systems, such as MUSE in Japan and the future ATV system in the USA, are intended for high-definition images. Others, such as PALplus in Europe, will use 525- or 625-line pictures. Domestic receivers with 16:9 displays have been introduced in many markets, and penetration will likely increase significantly over the next few years. For most broadcasters, this creates the need for 16:9 program material.

Two approaches have been proposed for the digital representation of 16:9 525- and 625-line video. The first method retains the sampling frequencies of the Rec. 601 standard for 4:3 images (13.5 MHz for luminance). This “stretches” the pixel represented by each data word by a factor of 1.33 horizontally, and results in a loss of 25 percent of the horizontal spatial resolution when compared to Rec. 601 pictures. For some applications, this method still provides adequate resolution, and it has the big advantage that much

existing Rec. 601 equipment can be used.

A second method maintains spatial resolution where pixels (and data words) are added to represent the additional width of the image. This approach results in 960 luminance samples for the digital active line (compared to 720 samples for a 4:3 picture, and to 1920 samples for a 16:9 high definition picture). The resulting sampling rate for luminance is 18 MHz. This system provides the same spatial resolution as Rec. 601 4:3 images; but existing equipment designed only for 13.5 MHz cannot be used. The additional resolution of this method may be advantageous when some quality overhead is desired for post production or when up-conversion to a high-definition system is contemplated.

SMPTE 267M provides for both the 13.5 MHz and 18 MHz systems. Because 16:9 signals using 13.5 MHz sampling are electrically indistinguishable from 4:3 signals, they can be conveyed by the SMPTE 259M serial interface at 270 Mb/s. It’s planned that SMPTE 259M (discussed below) will be revised to provide for serial transmission of 18 MHz sampled signals, using the same algorithm, at a data rate of 360 Mb/s.
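The sampling and data rate figures quoted in this section follow from simple arithmetic, sketched below with exact fractions. The two horizontal line rates are standard values assumed for the example, since they are not stated in the text, and the helper names are hypothetical.

```python
from fractions import Fraction

# Values quoted in the text.
LUMA_4X3_HZ = Fraction(13_500_000)      # Rec. 601 luminance sampling rate
COMMON_FACTOR_HZ = Fraction(2_250_000)  # sub-multiple common to both systems
ACTIVE_LUMA_4X3 = 720                   # luminance samples per digital active line

# Assumed line rates (standard values, not given in this guide).
LINE_HZ_525 = Fraction(4_500_000, 286)  # about 15,734.27 Hz for 525/59.94
LINE_HZ_625 = Fraction(15_625)          # 625/50


def words_per_second(luma_hz):
    """4:2:2 multiplex: Y at the full rate plus CB and CR at half rate each."""
    return luma_hz + 2 * (luma_hz / 2)


def serial_bit_rate(luma_hz, bits_per_word=10):
    """Bit rate when every multiplexed word is sent as a 10-bit serial word."""
    return words_per_second(luma_hz) * bits_per_word


def widescreen(value):
    """Scale a 4:3 quantity by the ratio of 16:9 to 4:3 picture width (4/3)."""
    return value * Fraction(16, 9) / Fraction(4, 3)


# 13.5 MHz gives a whole number of luminance samples per total line in both systems.
print(LUMA_4X3_HZ / LINE_HZ_525, LUMA_4X3_HZ / LINE_HZ_625)
# 2.25 MHz is an integer multiple of both line rates.
print(COMMON_FACTOR_HZ / LINE_HZ_525, COMMON_FACTOR_HZ / LINE_HZ_625)
# Parallel word rate and serial bit rate for 4:3.
print(words_per_second(LUMA_4X3_HZ), serial_bit_rate(LUMA_4X3_HZ))
# 16:9 at full resolution: active samples, sampling rate, serial bit rate.
luma_16x9_hz = widescreen(LUMA_4X3_HZ)
print(widescreen(ACTIVE_LUMA_4X3), luma_16x9_hz, serial_bit_rate(luma_16x9_hz))
```

The same 4/3 ratio explains the first 16:9 method as well: keeping 720 samples across a picture that is 4/3 as wide leaves each sample covering 1.33 times the width, which is three-quarters of the Rec. 601 horizontal resolution.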

Serial Digital Video

Parallel connection of digital equipment is practical only for relatively small installations, and there’s a clear need for transmission over a single coaxial cable. This is not simple as the data rate is high, and if the signal were transmitted serially without modification, reliable recovery would be very difficult. The serial signal must be modified prior to transmission to ensure that there are sufficient edges for reliable clock recovery, to minimize the low-frequency content of the transmitted signal, and to spread the transmitted energy spectrum so that radio-frequency emission problems are minimized.

In the early 1980s, a serial interface for Rec. 601 signals was recommended by the EBU. This interface used 8/9 block coding and resulted in a bit rate of 243 Mb/s. It did not support ten-bit precision signals, and there were some difficulties in producing reliable, cost effective, integrated circuits. The block coding based interface was abandoned and has been replaced by an interface with channel coding that uses scrambling and conversion to NRZI. The serial interface has been standardized as SMPTE 259M and EBU Tech. 3267, and is defined for both component and composite signals including embedded digital audio.

Conceptually, the serial digital interface is much like a carrier system for studio applications. Baseband audio and video signals are digitized and combined on the serial digital “carrier” as shown in Figure 2-11. (It’s not strictly a carrier system in that it’s a baseband digital signal, not a signal modulated on a carrier.) The bit rate (carrier frequency) is determined by the clock rate of the digital data: 143 Mb/s for NTSC, 177 Mb/s for PAL and 270 Mb/s for Rec. 601 component digital. The widescreen (16:9) component system defined in SMPTE 267M will produce a bit rate of 360 Mb/s.

Figure 2-11. The carrier concept. (Video and audio pass through analog to digital conversion and digital data formatting onto the serial digital video “carrier”: 143 Mb/s NTSC, 177 Mb/s PAL, 270 Mb/s CCIR 601, 360 Mb/s 16:9 component.)

Parallel data representing the samples of the analog signal is processed as shown in Figure 2-12 to create the serial digital data stream. The parallel clock is used to load sample data into a shift register, and a times-ten multiple of the parallel clock shifts the bits out, LSB (least significant bit) first, for each 10-bit data word. If only 8 bits of data are available at the input, the serializer places zeros in the two LSBs to complete the 10-bit word. Component signals do not need further processing as the SAV and EAV signals on the parallel interface provide unique sequences that can be identified in the serial domain to permit word framing. If ancillary data such as audio has been inserted into the parallel signal, this data will be carried by the serial interface. The serial interface may be used with normal video coaxial cable.

Figure 2-12. Parallel-to-serial conversion. (10-bit parallel data, MSB to LSB, is loaded into a shift register at Fclock and shifted out at 10 x Fclock as serial NRZ data. A scrambler, G1(x) = x^9 + x^4 + 1, and an encoder, G2(x) = x + 1, then produce the scrambled NRZI serial output.)

The conversion from parallel to serial for composite signals is somewhat more complex. As mentioned above, the SAV and EAV signals on the parallel component interface provide unique sequences that can be identified in the serial domain. The parallel composite interface does not have such signals, so it is necessary to insert a suitable timing reference signal (TRS) into the parallel signal before serialization. A diagram of the serial digital NTSC horizontal interval is shown in Figure 2-13. The horizontal interval for serial digital PAL would be similar except that the sample location is slightly different on each line, building up two extra samples per field. A three-word TRS is inserted in the sync tip to enable word framing at the serial receiver, which should also remove the TRS from the received serial signal.

The composite parallel interface does not provide for transmission of ancillary data, and the transmission of sync and burst means that less room is available for insertion of data. Upon conversion from parallel to serial, the sync tips may be used. However, the data space in NTSC is sufficient for four channels of AES/EBU digital audio. Ancillary data such as audio may be added prior to serialization, and this would normally be performed by the same co-processor that inserts the TRS.

Figure 2-14. NRZ and NRZI relationship. (Waveforms shown: NRZ data; NRZ all “0”s; NRZ all “1”s; NRZI; NRZI all “highs” or “lows”; NRZI at 1/2 clock frequency, either polarity; and the clock frequency, with data detected on the rising edge.)

Following the serialization of the parallel information the data stream is scrambled by a mathematical algorithm then encoded into NRZI (non-return to zero inverted) by a concatenation of the following two functions:

G1(X) = X^9 + X^4 + 1

G2(X) = X + 1
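A minimal sketch of a self-synchronizing scrambler built from G1(X) may help make the first function concrete. It assumes X stands for a one-bit delay, so that each scrambled bit is the data bit exclusive-ORed with the scrambled bits 4 and 9 clock periods earlier. The tap ordering of a real interface should be taken from SMPTE 259M itself, and the function names here are hypothetical.

```python
def scramble(bits, state=0):
    """Scramble a bit sequence; `state` holds the previous 9 scrambled bits.

    Bit 0 of `state` is the most recent output, so bit 3 is 4 periods old
    and bit 8 is 9 periods old (assumed reading of X^9 + X^4 + 1).
    """
    out = []
    for d in bits:
        s = d ^ ((state >> 3) & 1) ^ ((state >> 8) & 1)
        out.append(s)
        state = ((state << 1) | s) & 0x1FF
    return out


def descramble(bits, state=0):
    """Inverse operation; `state` holds the previous 9 received bits."""
    out = []
    for s in bits:
        out.append(s ^ ((state >> 3) & 1) ^ ((state >> 8) & 1))
        state = ((state << 1) | s) & 0x1FF
    return out


# A single "1" followed by a long run of "0"s still yields many "1"s.
data = [1] + [0] * 30
line = scramble(data)
print(line)

# The receiver recovers the data exactly when it starts in the same state,
assert descramble(line) == data
# and after 9 bits even when it starts in a different state.
assert descramble(line, state=0x1FF)[9:] == data[9:]
```

Because the descrambler state is filled only from received bits, it needs no separate synchronization. This property is why the scheme is called self-synchronizing.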

At the receiver the inverse of this algorithm is used in the deserializer to recover the correct data. In the serial digital transmission system, the clock is contained in the data as opposed to the parallel system where there’s a separate clock line. By scrambling the data, an abundance of transitions is assured as required for clock recovery. The mathematics of scrambling and descrambling lead to some specialized test signals for serial digital systems that are discussed later in this guide.

Figure 2-13. NTSC horizontal interval.

Encoding into NRZI makes the serial data stream polarity insensitive. NRZ (non return to zero, an old digital data tape term) is the familiar circuit board logic level, high is a “1” and low is a “0.” For a transmission system it’s convenient to not require a certain polarity of the signal at the receiver. As shown in Figure 2-14, a data transition is used to represent each “1” and there’s no transition for a data “0.” The result is that it’s only necessary to detect transitions; this means either polarity of the signal may be used. Another result of NRZI encoding is that a signal of all “1”s now produces a transition every clock interval and results in a square wave at one-half the clock frequency. However, “0”s produce no transition, which leads to the need for scrambling. At the receiver, the rising edge of a square wave at the clock frequency would be used for data detection.
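The NRZI properties described above can be checked with a few lines of code. This is a sketch with hypothetical function names; line levels are represented as 0 and 1 and the starting level is an arbitrary assumption.

```python
def nrz_to_nrzi(bits, level=0):
    """Encode: toggle the line level for each "1", hold it for each "0"."""
    out = []
    for b in bits:
        if b:
            level ^= 1
        out.append(level)
    return out


def nrzi_to_nrz(levels, previous=0):
    """Decode: a change of level is a "1", no change is a "0"."""
    out = []
    for lv in levels:
        out.append(lv ^ previous)
        previous = lv
    return out


data = [1, 0, 1, 1, 0, 0, 1, 0]
line = nrz_to_nrzi(data)
inverted = [lv ^ 1 for lv in line]   # the same signal with polarity reversed

# Only transitions matter, so both polarities decode to the same data
# (apart from the first bit, which depends on the assumed starting level).
assert nrzi_to_nrz(line) == data
assert nrzi_to_nrz(inverted)[1:] == data[1:]

print(nrz_to_nrzi([1] * 8))   # alternating levels: a square wave at half the clock rate
print(nrz_to_nrzi([0] * 8))   # a constant level: no transitions for clock recovery
```

The last line shows the case that scrambling is intended to prevent: a long run of “0”s leaves the line with no edges at all.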

Rate Conversion – Format Conversion
When moving between component digital and composite digital in either direction, there are two steps: the actual encoding or decoding, and the conversion of the sample rate from one standard to the other. The digital sample rates for these two formats are different: 13.5 MHz for component digital and 14.3 MHz for NTSC composite digital (17.7 MHz for PAL). This second step is called “rate conversion.” Often the term rate conversion is used to mean both encoding/decoding and resampling of digital rates. Strictly speaking, rate conversion is taking one sample rate and making another sample rate out of it. For our purposes, we’ll use the term “format conversion” to mean both the encode/decode step and resampling of digital rates. The format conversion sequence depends on direction. For component to composite, the usual sequence is rate conversion followed by encoding. For composite to component, the sequence is decoding followed by rate conversion. See Figure 2-15.

It’s much easier to do production in component because it isn’t necessary to wait for every color framing boundary (four fields for NTSC and eight for PAL) to match two pieces of video together; instead, they can be matched every two fields (motion frame). This provides two (NTSC) to four (PAL) times the opportunity. Additionally, component is a higher quality format since luminance and chrominance are handled separately. To the extent possible, origination for production in a component environment should be done in component. A high quality composite-to-component format converter provides a reasonable alternative.

After post-production work, component digital often needs to be converted to composite digital. Sources that are component digital may be converted for input to a composite digital switcher or effects machine. Or a source from a component digital telecine may be converted for input to a composite digital suite. Furthermore, tapes produced in component digital may need to be distributed or archived in composite digital.

The two major contributors to the quality of this process are the encoding or decoding process and the sample rate conversion. If either one is faulty, the quality of the final product suffers. In order to accurately change the digital sample rate, computations must be made between two different sample rates and interpolations must be calculated between the physical location of the source pixel data and the physical location of destination pixel data. Of the 709,379 pixel locations in a PAL composite digital frame, all except one must be mapped (calculated). In order to accomplish this computationally intense conversion, an extremely accurate algorithm must be used. If the algorithm is accurate enough to deal with PAL conversions, NTSC conversions using the same algorithm are simple. To ensure quality video, a sophisticated algorithm must be employed and the hardware must produce precise coefficients and minimize rounding errors.
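The difference in difficulty between NTSC and PAL rate conversion can be seen from the exact ratios of the sample rates. The sketch below assumes composite sampling at four times the color subcarrier, with the commonly quoted subcarrier frequencies (315/88 MHz for NTSC and 4.43361875 MHz for PAL); those exact values are not given in the text above, which only rounds them to 14.3 and 17.7 MHz.

```python
from fractions import Fraction

F_COMPONENT = Fraction(13_500_000)             # component luminance rate, Hz
F_NTSC_4FSC = 4 * Fraction(315_000_000, 88)    # assumed: 4 x NTSC subcarrier
F_PAL_4FSC = 4 * Fraction(443_361_875, 100)    # assumed: 4 x PAL subcarrier

ntsc_ratio = F_NTSC_4FSC / F_COMPONENT         # composite samples per component sample
pal_ratio = F_PAL_4FSC / F_COMPONENT
pal_samples_per_frame = F_PAL_4FSC / 25        # 25 frames per second

print("NTSC ratio:", ntsc_ratio)
print("PAL ratio:", pal_ratio)
print("PAL composite samples per frame:", pal_samples_per_frame)
```

Under these assumptions the NTSC ratio reduces to a small fraction, so the interpolation phases repeat after a few samples, while the PAL ratio only repeats once per frame. That is consistent with the 709,379 pixel locations quoted above.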

Figure 2-15. Format conversion.


AES/EBU Audio Data Format
AES digital audio (also known as AES/EBU) conforms to the specification AES3 (ANSI S4.40) titled “AES recommended practice for digital audio engineering – Serial transmission format for two-channel linearly represented digital audio data.” AES/EBU digital audio is the result of cooperation between the Audio Engineering Society and the European Broadcasting Union.

When discussing digital audio, one of the important considerations is the number of bits per sample. Where video operates with 8 or 10 bits per sample, audio implementations range from 16 to 24 bits to provide the desired dynamic range and signal-to-noise ratio (SNR). The basic formula for determining the SNR for digital audio is:

SNR = (6.02 * n) + 1.76

where “n” is the number of bits per sample.

For a 16-bit system, the maximum theoretical SNR would be (6.02 * 16) + 1.76 = 98.08 dB; for an 18-bit system, the SNR would be 110.12 dB; and for a 20-bit device, 122.16 dB. A well-designed 20-bit ADC probably offers a value between 100 and 110 dB. Using the above formula for an SNR of 110 dB, this system has an equivalent resolution of about 18 bits.

An AES digital audio signal always consists of two channels which may be distinctly separate audio material or stereophonic audio. There’s also a provision for single-channel operation (monophonic) where the second digital data channel is either identical to the first or has data set to logical “0.” Formatting of the AES data is shown in Figure 3-1. Each sample is carried by a sub-frame containing: 20 bits of sample data, 4 bits of auxiliary data (which may be used to extend the sample to 24 bits), 4 other bits of data and a preamble. Two sub-frames make up a frame which contains one sample from each of the two channels.

Frames are further grouped into 192-frame blocks which define the limits of user data and channel status data blocks. A special preamble indicates the channel identity for each sample (X or Y preamble) and the start of a 192-frame block (Z preamble). To minimize the direct-current (DC) component on the transmission line, facilitate clock recovery, and make the interface polarity insensitive, the data is channel coded as biphase-mark. The preambles specifically violate the biphase-mark rules for easy recognition and to ensure synchronization. When digital audio is embedded in the serial digital video data stream, the start of the 192-frame block is indicated by the so-called “Z” bit which corresponds to the occurrence of the Z-type preamble.

The validity bit indicates whether the audio sample bits in the sub-frame are suitable for conversion to an analog audio signal. User data is provided to carry other information, such as time code. Channel status data contains information associated with each audio channel. There are three levels of implementation of the channel status data: minimum, standard, and enhanced. The standard implementation is recommended for use in professional television applications, hence the channel status data will contain information about signal emphasis, sampling frequency, channel mode (stereo, mono, etc.), use of auxiliary bits (extend audio data to 24 bits or other use), and a CRC (cyclic redundancy code) for error checking of the total channel status block.
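The biphase-mark channel code mentioned above can be illustrated with a short Python sketch. It assumes the usual definition of biphase-mark (a transition at every bit boundary, plus a mid-bit transition for a “1”); the function names are hypothetical and the preambles, which deliberately violate these rules, are not modeled.

```python
def biphase_mark_encode(bits, level=0):
    """Return two half-bit cells per data bit."""
    cells = []
    for bit in bits:
        level ^= 1              # transition at the start of every bit
        cells.append(level)
        if bit:
            level ^= 1          # extra mid-bit transition marks a "1"
        cells.append(level)
    return cells


def biphase_mark_decode(cells):
    """A bit is 1 when its two half-bit cells differ."""
    return [cells[i] ^ cells[i + 1] for i in range(0, len(cells), 2)]


data = [1, 0, 0, 1, 1]
cells = biphase_mark_encode(data)
inverted = [1 - c for c in cells]   # opposite wiring polarity
```

Since a bit is decoded only from whether its two half cells differ, the inverted signal decodes to the same data, which is the polarity insensitivity the text refers to. The guaranteed transition at every bit boundary is what helps clock recovery.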

Embedded Audio
One of the important advantages of SDI (Serial Digital Interconnect) is the ability to embed (multiplex) several channels of digital audio in the digital video. This is particularly useful in large systems where separate routing of digital audio becomes a cost consideration and the assurance that the audio is associated with the appropriate video is an advantage. In smaller systems, such as a post production suite, it’s

3. Digital Audio

Figure 3-1. AES audio data formatting.
[Figure: subframe structure (32 bits: preamble X, Y or Z, 4 bits; auxiliary data, 4 bits; sample data, 20 bits; audio sample validity, user bit data, audio channel status and subframe parity) and frame structure (subframe 1 for channel A and subframe 2 for channel B in each frame; frames 0 to 191, with the Z preamble marking the start of the 192-frame block).]

generally more economical to maintain separate audio, thus eliminating the need for numerous mux (multiplexer) and demux (demultiplexer) modules. In developing the SDI standard, SMPTE 259M, one of the key considerations was the ability to embed at least four channels of audio in the composite serial digital signal, which has limited ancillary data space. A basic form of embedded audio for composite video is documented in SMPTE 259M and there’s a significant amount of equipment in the field using this method for both composite and component signals. As engineers were using the SMPTE 259M specifications for their designs, it became clear that a more definitive document was needed. A draft proposed standard is being developed for embedded audio which includes such things as distribution of audio samples within the available composite digital ancillary data space, the ability to carry 24-bit audio, methods to handle non-synchronous clocking and clock frequencies other than 48 kHz, and specifications for embedding audio in component digital video.

Ancillary data space. Composite digital video allows ancillary data only in its serial form and then only in the tips of synchronizing signals. Ancillary data is not carried in parallel composite digital as parallel data is simply a digital representation of the analog signal. Figures 3-2 and 3-3 show the ancillary data space available in composite digital signals which includes horizontal sync tip, vertical broad pulses, and vertical equalizing pulses.

A small amount of the horizontal sync tip space is reserved for TRS-ID (timing reference signal and identification) which is required for digital word framing in the deserialization process. Since composite digital ancillary data is only available in the serial domain, it consists of 10-bit words, whereas both composite and component parallel interconnects may be either 8 or 10 bits. A summary of composite digital ancillary data space for NTSC is shown in Table 3-1. Thirty frames/second will provide 9.87 Mb/second of ancillary data which is enough for four channels of embedded audio with a little capacity left over. PAL has slightly more ancillary data space due to the higher sample rate; however

Figure 3-2. Composite ancillary data space.
[Figure: composite frame showing active video surrounded by horizontal (H) and vertical (V) blanking, with the ancillary data space and TRS-ID marked along the scan direction; not to scale.]

Figure 3-3. NTSC available ancillary data words.
[Figure, not to scale: TRS-ID occupies words 790 - 794 in each case. H-sync (all lines except vertical sync): ANC data, 55 words, words 795 - 849. Vertical equalizing pulses only: ANC data, 21 words each, words 795 - 815 and 340 - 360. Vertical sync lines only: ANC data, 376 words each, words 795 - 260 and 340 - 715.]

Table 3-1. NTSC Digital Ancillary Data Space

Pulse Tip      Words    Per Frame     Total
Horizontal        55          507    27,885
Equalizing        21           24       504
Broad            376           12     4,512
Total 10-bit words/frame             32,901
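The totals in Table 3-1 and the 9.87 Mb/second figure can be checked with a few lines of arithmetic. In this sketch the per-pulse word counts come from the table; the audio payload estimate (three 10-bit words per 48 kHz sample, packet overhead ignored) is an assumption added for comparison.

```python
# (ancillary words per pulse tip, pulse tips per frame), from Table 3-1
ANC_SPACE = {
    "horizontal": (55, 507),
    "equalizing": (21, 24),
    "broad": (376, 12),
}
WORD_BITS = 10
FRAME_RATE = 30   # the round figure used in the text


def anc_words_per_frame(space=ANC_SPACE):
    return sum(words * tips for words, tips in space.values())


def anc_bit_rate(space=ANC_SPACE):
    return anc_words_per_frame(space) * WORD_BITS * FRAME_RATE


def basic_audio_payload_rate(channels=4, fs=48_000, words_per_sample=3):
    # Assumption: three 10-bit words per sample, no packet overhead counted.
    return channels * fs * words_per_sample * WORD_BITS


print(anc_words_per_frame(), anc_bit_rate(), basic_audio_payload_rate())
```

The comparison is only indicative, since not all of the ancillary space may be used for audio and each packet carries overhead words.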

embedded audio for digital PAL is rarely used.

There’s considerably more ancillary data space available in component digital video as shown in Figure 3-4. All of the horizontal and vertical blanking intervals are available except for the small amount required for EAV (end of active video) and SAV (start of active video) synchronizing words.

Figure 3-4. Component ancillary data space.

The ancillary data space has been divided into two types: HANC (horizontal ancillary data) and VANC (vertical ancillary data), with the SMPTE defining the use of HANC and the EBU defining the use of VANC. Word lengths are 10-bit for HANC specifically so it can carry embedded audio in the same format as for composite digital and 8-bit for VANC specifically so it can be carried by D-1 VTRs (on those vertical interval lines that are recorded). Total data space is shown in Table 3-2. Up to 16 channels of embedded audio are specified for HANC in the proposed standard and there’s room for significantly more data.

Table 3-2. Component Digital Ancillary Data Space

525 line       Words    Per Frame      Total    Rate Mb/s
HANC             268          525    140,700         42.2
VANC            1440           39     56,160         13.5
Total words                          196,860         55.7

625 line       Words    Per Frame      Total    Rate Mb/s
HANC             282          625    176,250         44.1
VANC            1440           49     70,560         14.1
Total words                          246,810         58.2

Not all of the VANC data space is available. For instance, the luminance samples on one line per field are reserved for DVITC (digital vertical interval time code) and the chrominance samples on that line may be devoted to video index. Also, it would be wise to avoid using the vertical interval switch line and perhaps the subsequent line, where data might be lost due to relock after the switch occurs.

Ancillary data formatting. Ancillary data is formatted into packets prior to multiplexing it into the video data stream as shown in Figure 3-5. Each data block may contain up to 255 user data words provided there’s enough total data space available to include five (composite) or seven (component) words of overhead. For composite digital, only the vertical sync (broad) pulses have enough room for the full 255 words. Multiple data packets may be placed in individual ancillary data spaces, thus providing a rather flexible data communications channel.

Figure 3-5. Ancillary data formatting.
[Figure: packet layout in order: Data Header (1 word, 3FC, for composite; 3 words, 000 3FF 3FF, for component), Data ID (1 word), Data Block Number (1 word), Data Count (1 word), User Data (255 words maximum), Check Sum (1 word).]

At the beginning of each data packet is a header using word values that are excluded for digital video data and reserved for synchronizing purposes. For composite video, a single header word of 3FCh is used whereas for component video, a three-word header 000h 3FFh 3FFh is used. Each type of data packet is identified with a different Data ID word. Several different Data ID words are defined to organize the various data packets used for embedded audio. The Data Block Number (DBN) is an optional counter that can be used to provide sequential order to ancillary data packets, allowing a receiver to determine if there’s missing data. As an example, with embedded audio the DBN may be used to detect the occurrence of a vertical interval switch, thereby allowing the receiver to process the audio data to remove the likely transient “click” or “pop.” Just prior to the data is the Data Count word indicating the amount of data in the packet. Finally, following the data is a checksum which is used to detect errors in the data packet.

Basic embedded audio. Embedded audio defined in SMPTE 259M provides four channels of 20-bit audio data sampled at 48 kHz with the sample clock locked to the television signal. Although specified in the composite digital part of the standard, the same method is also used for component digital video.

This basic embedded audio corresponds to Level A in the proposed embedded audio standard. Other levels of operation provide more channels, other sampling frequencies, and additional information about the audio data. Basic embedded audio data packet formatting derived from AES audio is shown in Figure 3-6.

Two AES channel-pairs are shown as the source; however it’s possible for each of the four embedded audio channels to come from a different AES signal (specifically implemented in some D-1 digital VTRs). The Audio Data Packet contains one or more audio samples from up to four audio channels. 23 bits (20 audio bits plus the C, U, and V bits) from each AES sub-frame are mapped into three 10-bit video words (X, X+1, X+2) as shown in Table 3-3.

Figure 3-6. Basic embedded audio.
[Figure: two AES channel-pairs (AES 1 and AES 2) feed the embedded channels, for example AES 1 channel A to channel 1, AES 1 channel B to channel 2 and AES 2 channel A to channel 3. From each 32-bit AES subframe, 20 + 3 bits of data are mapped into 3 ANC words (x, x+1, x+2). The audio data packet consists of Data Header (composite, 3FC; component, 000 3FF 3FF), Data ID, Data Blk Num, Data Count, the sample words and Check Sum.]

Table 3-3. Embedded Audio Bit Distribution

Bit    X           X + 1      X + 2
b9     not b8      not b8     not b8
b8     aud 5       aud 14     Parity
b7     aud 4       aud 13     C
b6     aud 3       aud 12     U
b5     aud 2       aud 11     V
b4     aud 1       aud 10     aud 19 (msb)
b3     aud 0       aud 9      aud 18
b2     ch bit-1    aud 8      aud 17
b1     ch bit-2    aud 7      aud 16
b0     Z-bit       aud 6      aud 15

Bit-9 is always not Bit-8 to ensure that none of the excluded word values (3FFh-3FCh or 003h-000h) are used. The Z-bit is set to “1” corresponding to the first frame of the 192-frame AES block. Channels of embedded audio are essentially independent (although they’re always transmitted in pairs) so the Z-bit is set to a “1” in each channel even if derived from the same AES source. C, U, and V bits are mapped from the AES signal; however the parity bit is not the AES parity bit. Bit-8 in word X+2 is even parity for bits 0-8 in all three words.

There are several restrictions regarding distribution of the audio data packets although there’s a “grandfather clause” in the proposed standard to account for older equipment that may not observe all the restrictions. Audio data packets are not transmitted in the horizontal ancillary data space following the normal vertical interval switch as defined in RP 168. They’re also not transmitted in the ancillary data space designated for error detection checkwords defined in RP 165. For composite digital video, audio data packets are not transmitted in equalizing pulses. Taking into account these restrictions “data should be distributed as evenly as possible throughout the video field.” The reason for this last statement is to minimize receiver buffer size which is an important issue for transmitting 24-bit audio in composite digital systems. For basic, Level A, this results in either three or

four audio samples per channel in each audio data packet.

Extended embedded audio. Full-featured embedded audio defined in the proposed standard includes:
• Carrying the 4 AES auxiliary bits (which may be used to extend the audio samples to 24 bits)
• Allowing non-synchronous clock operation
• Allowing sampling frequencies other than 48 kHz
• Providing audio-to-video delay information for each channel
• Documenting Data IDs to allow up to 16 channels of audio in component digital systems
• Counting “audio frames” for 525 line systems.
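Before looking at the extended packets, it may help to see the basic three-word mapping of Table 3-3 written out. The Python sketch below is an illustration under stated assumptions: the function name is hypothetical, the two channel bits are taken as a 2-bit channel number with its low bit in b1 and high bit in b2, and the parity bit is chosen so that bits 0-8 of the three words together contain an even number of ones.

```python
def pack_audio_sample(audio20, channel, z, v, u, c):
    """Map one 20-bit sample plus Z, V, U, C into words X, X+1, X+2."""
    x0 = (z & 1) | ((channel & 0b11) << 1) | ((audio20 & 0x3F) << 3)  # aud 0-5 in b3-b8
    x1 = (audio20 >> 6) & 0x1FF                                       # aud 6-14 in b0-b8
    x2 = ((audio20 >> 15) & 0x1F) | (v << 5) | (u << 6) | (c << 7)    # aud 15-19, V, U, C

    ones = sum(bin(word).count("1") for word in (x0, x1, x2))
    x2 |= (ones & 1) << 8                   # b8 of X+2: even parity

    words = []
    for word in (x0, x1, x2):
        b8 = (word >> 8) & 1
        words.append(word | ((b8 ^ 1) << 9))   # b9 is always not b8
    return words


EXCLUDED = set(range(0x000, 0x004)) | set(range(0x3FC, 0x400))
silence = pack_audio_sample(0x00000, channel=0, z=0, v=0, u=0, c=0)
full = pack_audio_sample(0xFFFFF, channel=3, z=1, v=1, u=1, c=1)
```

Because b9 is always the complement of b8, neither an all-zeros nor an all-ones sample can produce one of the excluded word values.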

To provide these features, two additional data packets are defined. Extended Data Packets carry the 4 AES auxiliary bits formatted such that one video word contains the auxiliary data for two audio samples as shown in Figure 3-7. Extended data packets must be located in the same ancillary data space as the associated audio data packets and must follow the audio data packets.

The Audio Control Packet (shown in Figure 3-8) is transmitted once per field in the second horizontal ancillary data space after the vertical interval switch point. It contains information on audio frame number, sampling frequency, active channels, and relative audio-to-video delay of each channel. Transmission of audio control packets is optional for 48 kHz synchronous operation and required for all other modes of operation (since it contains the information as to what mode is being used).

Audio frame numbers are an artifact of 525 line, 29.97 frame/second operation. In that system there are exactly 8008 audio samples in exactly five frames, which means there’s a non-integer number of samples per frame. An audio frame sequence is the number of frames for an integer number of samples (in this case five) and the audio frame number indicates where in the sequence a particular frame belongs. This is important when switching between sources because certain equipment (most notably digital VTRs) requires consistent synchronous operation to prevent buffer over/under

Figure 3-7. Extended embedded audio.
[Figure: an audio data packet (Data ID 2FF; 1FD, 1FB, 2F9 for the other groups) carrying words x, x+1, x+2 for AES 1 ch A (channel 1), AES 1 ch B (channel 2) and AES 2 ch A (channel 3), followed by an extended data packet (Data ID 1FE; 2FC, 2FA, 1F8 for the other groups) whose AUX words carry the auxiliary 4 bits. Each packet has Data Header (composite, 3FC; component, 000 3FF 3FF), Data ID, Data Blk Num, Data Count and Check Sum.]

Figure 3-8. Audio control packet formatting.
[Figure: packet fields shown: Data Header (composite, 3FC; component, 000 3FF 3FF), Data ID (DID), Data block number (DBN), Data count (DC), Audio frame number for channels 1 & 2 and channels 3 & 4 (AF 1-2, AF 3-4), Sampling Frequency (RATE), Active Channels (ACT), ± Delay (audio-video) words DELA0-2, DELB0-2, DELC0-2 and DELD0-2 (ch 1 (&2), ch 2 ≠ 1, ch 3 (&4), ch 4 ≠ 3), two Reserved Words (RSRV) and Checksum (CHK SUM).]

Table 3-4. Data IDs For Up To 16-channel Operation

           Audio       Audio Data    Extended       Audio Control
           Channels    Packet        Data Packet    Packet
Group 1    1-4         2FF           1FE            1EF
Group 2    5-8         1FD           2FC            2EE
Group 3    9-12        1FB           2FA            2ED
Group 4    13-16       2F9           1F8            1EC
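The 10-bit Data ID values in Table 3-4 appear to follow a simple pattern: an 8-bit identifier in b0-b7, even parity over those bits in b8, and b9 set to not b8. That rule is inferred here from the table values and from the “Bit-9 is always not Bit-8” convention; it is not stated explicitly in this section, so treat the sketch below (with its hypothetical function name) as an assumption.

```python
def data_id_word(value8):
    """Build a 10-bit Data ID from an 8-bit identifier (assumed rule)."""
    value8 &= 0xFF
    b8 = bin(value8).count("1") & 1     # even parity over b0-b7
    b9 = b8 ^ 1                         # b9 is the complement of b8
    return (b9 << 9) | (b8 << 8) | value8


# 8-bit identifiers for groups 1 to 4, read from the low byte of each table entry
AUDIO_DATA = [0xFF, 0xFD, 0xFB, 0xF9]
EXTENDED_DATA = [0xFE, 0xFC, 0xFA, 0xF8]
AUDIO_CONTROL = [0xEF, 0xEE, 0xED, 0xEC]

for name, ids in (("audio", AUDIO_DATA), ("extended", EXTENDED_DATA),
                  ("control", AUDIO_CONTROL)):
    print(name, [format(data_id_word(v), "03X") for v in ids])
```

Under this rule the group 1 audio data packet ID is 2FF, in agreement with the Data ID shown in Figure 3-7.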

flow. Where frequent switching is planned, receiving equipment can be designed to add or drop a sample following a switch in the four out of five cases where the sequence is broken. The challenge in such a system is to detect that a switch has occurred. This can be facilitated by use of the data block number in the ancillary data format structure and by including an optional frame counter with the unused bits in the audio frame number word of the audio control packet.

Audio delay information contained in the audio control packet uses a default channel-pair mode. That is, delay-A (DELA0-2) is for both channel 1 and channel 2 unless the delay for channel 2 is not equal to channel 1. In that case, the delay for channel 2 is located in delay-C. Sampling frequency must be the same for each channel in a pair, hence the data in “ACT” provides only two values, one for channels 1 and 2 and the other for channels 3 and 4.

In order to provide for up to 16 channels of audio in component digital systems the embedded audio is divided into audio groups corresponding to the basic four-channel operation. Each of the three data packet types is assigned four Data IDs as shown in Table 3-4.

Receiver buffer size. In component digital video, the receiver buffer in an audio demultiplexer is not a critical issue since there’s much ancillary data space available and few lines excluding audio ancillary data. The case is considerably different for composite digital video due to the exclusion of data in equalizing pulses and, even more important, the data packet distribution required for extended audio. For this reason the proposed standard requires a receiver buffer of 64 samples per channel with a grandfather clause of 48 samples per channel to warn designers of the limitations in older equipment. In the proposed standard, Level A defines a sample distribution allowing use of a 48 sample-per-channel receiver buffer while other levels generally require the use of the specified 64 sample buffer. Determination of buffer size is analyzed in this section for digital NTSC systems; however, the result is much the same for digital PAL systems.

Synchronous sampling at 48 kHz provides exactly 8008 samples in five frames which is 8008 ÷ (5 x 525) = 3.051 samples per line. Therefore most lines may carry three samples per channel while some should carry four samples per channel. For digital NTSC, each horizontal sync tip has space for 55 ancillary data words. Table 3-5 shows how those words are used for four-channel, 20-bit and 24-bit audio (overhead includes header, data ID, data count, etc.). Since it would require 14 more video words to carry one additional sample per channel for four channels of 24-bit audio and that would exceed the 55-word space, there can be no more than three samples per line of 24-bit audio.
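The word budget behind this argument can be reproduced with a small calculation. The sketch assumes what the text and the rows of Table 3-5 state: five overhead words per packet, three video words per 20-bit sample, and one extended-data word for every two samples when 24-bit audio is carried. The function name is hypothetical.

```python
import math
from fractions import Fraction

SYNC_TIP_WORDS = 55     # ancillary words available in each NTSC horizontal sync tip
OVERHEAD = 5            # header, data ID, data block number, data count, checksum

samples_per_line = Fraction(8008, 5 * 525)   # about 3.051


def sync_tip_words_needed(samples_per_channel, bits, channels=4):
    samples = samples_per_channel * channels
    total = OVERHEAD + 3 * samples                   # audio data packet
    if bits == 24:
        total += OVERHEAD + math.ceil(samples / 2)   # extended data packet
    return total


for samples, bits in ((3, 20), (4, 20), (3, 24), (4, 24)):
    need = sync_tip_words_needed(samples, bits)
    print(samples, bits, need, "fits" if need <= SYNC_TIP_WORDS else "too large")
```

With these assumptions, four samples per channel fit in a sync tip only for 20-bit audio, which is why the 24-bit distribution is limited to three samples per line.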

Table 3-5. Maximum Samples Per NTSC Sync Tip

4-Channels              20-bit Audio             24-bit Audio
                        3-samples    4-samples   3-samples
Audio Data
  Overhead                  5            5           5
  Sample data              36           48          36
Extended Data
  Overhead                                           5
  Auxiliary data                                     6
TOTAL                      41           53          52

A receiver sample buffer is needed to store samples necessary to supply the continuous output required during the time of the equalizing pulses and other excluded lines (the vertical interval switch line and the subsequent line). When only 20-bit audio is required the “even distribution of samples” dictates that some horizontal lines carry four samples per channel, resulting in a rather modest buffer size of 48 samples or less depending on the type of buffer. Using only the first broad pulse of each vertical sync line, four or five samples in each broad pulse will provide a satisfactory, even distribution. This is known as Level A. However, if 24-bit audio is used or if the sample distribution is to allow for 24-bit audio even if not used, there can be no more than three samples per horizontal ancillary data space for a four-channel system. The first broad pulse of each vertical sync line is required to carry 16 or 17 samples per channel to get the best possible “even distribution.” This results in a buffer requirement of 80 samples per channel, or less, depending on the type of buffer. To meet the 64 sample-per-channel buffer, a smart buffer is required.

There are two common types of buffer that are equivalent for the purpose of understanding requirements to meet the proposed standard. One is the FIFO (first in first out) buffer and the other is a circular buffer. Smart buffers have the following synchronized load abilities:
• FIFO Buffer – hold off “reads” until a specific number of samples are in the buffer (requires a counter) AND neither read nor write until a specified time (requires vertical sync).
• Circular Buffer – set the read address to be a certain number of samples after the write address at a specified time (requires vertical sync).

Using a smart buffer the minimum sizes are 24 samples per channel for 20-bit operation and 40 samples per channel for 24-bit operation. Because of the different requirements for the two types of sample distribution, the minimum smart buffer size for either number of bits per sample is 57 samples. Therefore, a 64 sample-per-channel smart buffer should be adequate.

In the case of a not-so-smart buffer, read and write addresses for a circular buffer must be far enough apart to handle either a build up of 40 samples or a depletion of 40 samples. This means the buffer size must be 80 samples for 24-bit audio or for automatic operation of both sample sizes. Therefore, a 64 sample-per-channel smart buffer is required to meet the specifications of the proposed standard.

Systemizing AES/EBU audio. Serial digital video and audio are becoming commonplace in production and post-production facilities as well as television stations. In many cases, the video and audio are married sources, and it may be desirable to keep them together and treat them as a single serial digital data stream. This has, for one example, the advantage of being able to keep the signals in the digital domain and switch them together with a serial digital video routing switcher. In the occasional instances where it’s desirable to break away some of the audio sources, the digital audio can be demultiplexed and switched separately via an AES/EBU digital audio routing switcher.

At the receiving end, after the multiplexed audio has passed through a serial digital routing switcher, it may be necessary to extract the audio from the video so that editing, audio sweetening, or other processing can be accomplished. This requires a demultiplexer that strips off the AES/EBU audio from the serial digital video. The output of a typical demultiplexer has a serial digital video BNC as well as connectors for the two-stereo-pair AES/EBU digital audio signals.


Cable Selection
The best grades of precision analog video cables exhibit low losses from very low frequencies (near DC) to around 10 MHz. In the serial digital world, cable losses in this portion of the spectrum are of less consequence but still important. It’s in the higher frequencies associated with transmission rates of 143, 177, 270, or 360 Mb/s where losses are considerable. Fortunately, the robustness of the serial digital signal makes it possible to equalize these losses quite easily. So when converting from analog to digital, the use of existing, quality cable runs should pose no problem.

The most important characteristic of coaxial cable to be used for serial digital is its loss at 1/2 the clock frequency of the signal to be transmitted. That value will determine the maximum cable length that can be equalized by a given receiver. It’s also important that the frequency response loss in dB be approximately proportional to 1/√f down to frequencies below 5 MHz. Some of the common cables in use are the following:

PSF 2/3           UK
Belden 8281       USA and Japan
F&G 1.0/6.6       Germany

These cables all have excellent performance for serial digital signals. Cable manufacturers are seizing the opportunity to introduce new, low-loss, foam dielectric cables specifically designed for serial digital. One example of an alternative video cable is Belden 1505A which, although thinner, more flexible, and less expensive than 8281, has higher performance at the frequencies that are more critical for serial digital signals. A recent development specifically for serial digital video is Belden 1694A with lower loss than 1505A.
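The relationship between loss at half the clock frequency and usable cable length can be sketched as follows. The equalizer range and loss-per-100-m figures used in the example call are purely hypothetical placeholders, not data for any cable named above; real values should come from the receiver and cable data sheets.

```python
SERIAL_RATES_MBPS = (143, 177, 270, 360)


def half_clock_mhz(rate_mbps):
    """Frequency at which cable loss is specified for a given serial rate."""
    return rate_mbps / 2


def max_cable_length_m(equalizer_range_db, loss_db_per_100m):
    """Length at which cable loss at half clock equals the equalizer range."""
    return 100.0 * equalizer_range_db / loss_db_per_100m


for rate in SERIAL_RATES_MBPS:
    print(rate, "Mb/s -> check cable loss at", half_clock_mhz(rate), "MHz")

# Hypothetical numbers: a receiver able to equalize 30 dB, a cable losing 10 dB per 100 m
example_length = max_cable_length_m(equalizer_range_db=30.0, loss_db_per_100m=10.0)
```

This ignores connectors, patch panels and any margin a designer would normally keep, so it gives an upper bound rather than a recommended run length.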

Connectors
Until recently, all BNC connectors used in television had a characteristic impedance of 50 ohms. BNC connectors of the 75-ohm variety were available, but were not physically compatible with 50-ohm connectors. The impedance “mismatch” to coax is of little consequence at analog video frequencies because the wavelength of the signals is many times longer than the length of the connector. But with serial digital’s high data rate (and attendant short wavelengths), the impedance of the connector must be considered. In general, the length of a simple BNC connector doesn’t have significant effect on the serial digital signal. Several inches of 50-ohm connection, such as a number of barrels or short coax, would have to be used for any noticeable effect. In the specific case of a serial transmitter or receiver, the active devices associated with the chassis connector need significant impedance matching. This could easily include making a 50-ohm connector look like 75 ohms over the frequency band of interest. Good engineering practice tells us to avoid impedance mismatches and use 75-ohm components wherever possible and that rule is being followed in the development of new equipment for serial digital.

Patch Panels
The same holds true for other “passive” elements in the system such as patch panels. In order to avoid reflections caused by impedance discontinuities, these elements should also have a characteristic impedance of 75 ohms. Existing 50-ohm patch panels will probably be adequate in many instances, but new installations should use 75-ohm patch panels. Several patch panel manufacturers now offer 75-ohm versions designed specifically for serial digital applications.

Terminations and Loop-throughs
The serial digital standard specifies transmitter and receiver return loss to be greater than 15 dB up to 270 MHz. That is, terminations should be 75 ohms with no significant reactive component to 270 MHz. Clearly this frequency is related to component digital, hence a lower frequency might be suitable for NTSC and that may be considered in the future. As can be seen by the rather modest 15 dB specification, return loss is not a critical issue in serial digital video systems. It’s more important at short cable lengths where the reflection could distort the signal rather than at long lengths where the reflected signal receives more attenuation.

Most serial digital receivers are directly terminated in order to avoid return loss problems. Since the receiver active circuits don’t look like 75 ohms, circuitry must be included to provide a good resistive termination and low return loss. Some of today’s equipment does not provide return loss values meeting the specification for the higher frequencies. This hasn’t caused any known system problems; however, good engineering practice should prevail here as well.

Active loop-throughs are very common in serial digital equipment because they’re relatively simple and have signal regeneration qualities similar to a reclocking distribution amplifier. Also, they provide isolation between input and output. However, if the equipment power goes off for any reason, the connection is broken. Active loop-throughs also have the same need for care in circuit impedance matching as mentioned above.
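To put the 15 dB figure in perspective, return loss can be computed from the load impedance with the standard reflection coefficient formula. The sketch below is a generic transmission-line calculation, not something taken from the serial digital standard itself, and the function name is hypothetical.

```python
import math


def return_loss_db(z_load, z0=75.0):
    """Return loss in dB of a load on a line of characteristic impedance z0.

    z_load may be a real or complex impedance in ohms.
    """
    gamma = abs((z_load - z0) / (z_load + z0))   # reflection coefficient magnitude
    if gamma == 0:
        return math.inf                          # perfect match, nothing reflected
    return -20.0 * math.log10(gamma)


rl_50_ohm = return_loss_db(50.0)                 # purely resistive 50-ohm load
rl_reactive = return_loss_db(complex(75.0, 20.0))  # 75 ohms with some reactance
```

A purely resistive 50-ohm termination on a 75-ohm line comes out just under 14 dB, slightly short of the 15 dB requirement, which illustrates why the termination should be 75 ohms with no significant reactive component.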

4. System Hardware and Issues


Passive loop-throughs are both possible and practical. Implementations used in serial digital waveform monitors have return loss greater than 25 dB up to the clock frequency. This makes it possible to monitor the actual signal being received by the operational equipment or unit-under-test and not substitute the monitor receiver.

The most important use of passive loop-throughs is for system diagnostics and fault finding where it’s necessary to observe the signal in the troubled path. If a loop-through is to be used for monitoring, it’s important that it be a passive loop-through for two reasons. First, serial transmitters with multiple outputs usually have separate active devices on each output, therefore monitoring one output doesn’t necessarily indicate the quality of another output as it did in the days of resistive fan-out of analog signals. Second, when an active loop-through is used, loss of power in the monitoring device will turn off the signal. This would be disastrous in an operational situation. If an available passive loop-through isn’t being used, it’s important to make sure the termination is 75 ohms with no significant reactive component to at least the clock frequency of the serial signal. That old, perhaps “precision,” terminator in your tool box may not do the job for serial digital.

Digital Audio Cable and Connector Types

AES/EBU digital audio has raised some interesting questions because of its characteristics. In professional applications, balanced audio has traditionally been deemed necessary in order to avoid hum and other artifacts. Usually twisted, shielded, multiconductor audio cable is used. The XLR connector was selected as the connector of choice and is used in almost all professional applications. When AES/EBU digital audio evolved, it was natural to assume that the traditional analog audio transmission cable and connectors could still be used. The scope of AES3 covers digital audio transmission of up to 100 meters, which can be handled adequately with shielded, balanced twisted-pair interconnection.

Because AES/EBU audio has a much wider bandwidth than analog audio, cable must be selected with care. To meet the AES3 specification, source and load impedances must both be 110 ohms. The standard has no definition for a bridging load, although loop-through inputs, as used in analog audio, are theoretically possible. Improperly terminated cables may cause signal reflections and subsequent data errors.

The relatively high frequencies of the AES/EBU signals cannot travel over twisted-pair cables as easily as analog audio. Capacitance and high-frequency losses cause high-frequency rolloff. Eventually, signal edges become so rounded and amplitude so low that the receivers can no longer tell the “1”s from the “0”s, and the data can no longer be recovered. Typically, cable lengths are limited to a few hundred feet. XLR connectors are also specified.

Since AES/EBU digital audio has frequencies up to about 6 MHz, there have been suggestions to use unbalanced coaxial cable with BNC connectors for improved performance in existing video installations and for transmissions beyond 100 meters. There are two committees defining the use of BNC connectors for AES/EBU digital audio. The Audio Engineering Society has developed AES3-ID and SMPTE is developing a similar recommended practice. These recommendations will use a 75-ohm unbalanced signal instead of 110-ohm balanced and reduce the 3- to 10-volt signal to 1 volt. The resulting signal now has the same characteristics as analog video, and traditional analog video distribution amplifiers, routing switchers, and video patch panels can be used. The cost of making up coaxial cable with BNCs is also less than that of multiconductor cable with XLRs. Tests have indicated that EMI emissions are also reduced with coaxial distribution.
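As a rough check on the "about 6 MHz" figure quoted above, the arithmetic can be sketched as follows. This is an illustrative calculation only. It assumes the usual AES3 frame structure of two 32-bit subframes per sample period and biphase-mark channel coding, neither of which is restated in this section, and the function name aes3_rates is hypothetical.

```python
def aes3_rates(sample_rate_hz, bits_per_subframe=32, subframes_per_frame=2):
    """Return (data bit rate, biphase-mark cell rate) in Hz for an AES3 stream.

    Assumes one frame of two 32-bit subframes per audio sample period and
    biphase-mark coding, which uses two half-bit cells per data bit.
    """
    bit_rate = sample_rate_hz * bits_per_subframe * subframes_per_frame
    cell_rate = 2 * bit_rate  # two half-bit cells per data bit
    return bit_rate, cell_rate


if __name__ == "__main__":
    for fs in (32_000, 44_100, 48_000):
        bit_rate, cell_rate = aes3_rates(fs)
        print(f"{fs} Hz sampling: {bit_rate / 1e6:.3f} Mb/s, "
              f"{cell_rate / 1e6:.3f} M cells/s")
```

At 48 kHz sampling this gives 3.072 Mb/s of data and 6.144 million cells per second, which is presumably the origin of the roughly 6 MHz bandwidth figure and the reason ordinary analog audio cable needs to be chosen with care.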

Signal Distribution, Reclocking

Although a video signal may be digital, the real world through which that signal passes is analog. Consequently, it’s important to consider the analog distortions that affect a digital signal. These include frequency response rolloff caused by cable attenuation, phase distortion, noise, clock jitter, and baseline shift due to AC coupling. While a digital signal will retain the ability to communicate its data despite a certain degree of distortion, there’s a point beyond which the data will not be recoverable. Long cable runs are the main cause of signal distortion. Most digital equipment provides some form of equalization and regeneration at all inputs in order to compensate for cable runs of varying lengths. Considering the specific case of distribution amplifiers and routing switchers, there are several approaches that can be used: wideband amplifier, wideband digital amplifier, and regenerating digital amplifier.

Taking the last case first, regeneration of the digital signal generally means recovering data from an incoming signal and retransmitting it with a clean waveform using a stable clock source. Regeneration of a digital signal allows it to be transmitted farther and sustain more analog degradation than a signal which already has accumulated some analog distortions. Regeneration generally uses the characteristics of the incoming signal, such as extracted clock, to produce the output. In serial digital video, there are two kinds of regeneration: serial and parallel.

Serial regeneration is simplest. It consists of cable equalization (necessary even for cable lengths of a few meters or less), clock recovery, data recovery, and retransmission of the data using the recovered clock. A phase locked loop (PLL) with an LC (inductor/capacitor) or RC (resistor/capacitor) oscillator regenerates the serial clock frequency, a process called reclocking.

Parallel regeneration is more complex. It involves three steps: deserialization; parallel reclocking, usually using a crystal-controlled timebase; and serialization.

Each form of regeneration can reduce jitter outside its PLL bandwidth, but jitter within the loop bandwidth will be reproduced and may accumulate significantly with each regeneration. (For a complete discussion of jitter effects and measurements see Section 8.) A serial regenerator will have a loop bandwidth on the order of several hundred kilohertz to a few megahertz. A parallel regenerator will have a much narrower bandwidth, on the order of several hertz. Therefore, a parallel regenerator can reduce jitter to a greater extent than a serial regenerator, at the expense of greater complexity. In addition, the inherent jitter in a crystal-controlled timebase (parallel regenerator) is much less than that of an LC or RC oscillator timebase (serial regenerator). Serial regeneration obviously cannot be performed an unlimited number of times because of cumulative PLL oscillator jitter and the fact that the clock is extracted from the incoming signal. Serial regeneration can typically be performed several dozen times before a parallel regeneration is necessary. Excessive jitter buildup eventually causes a system to fail; jitter acceptable to a serial receiver must still be considered and handled from a system standpoint, as discussed later.

Regeneration, where a reference clock is used to produce the output, can be performed an unlimited number of times and will eliminate all the jitter built up in a series of regeneration operations. This type of regeneration happens in major operational equipment such as VTRs, production switchers (vision mixers), or special effects units that use external references to perform their digital processing. So the bottom line is, regeneration (or reclocking) can be perfect, only limited by economic considerations.

A completely different approach to signal distribution is the use of wideband analog routers, but there are many limitations. It’s true that wideband analog routers (100 MHz plus) will pass a 143 Mb/s serial digital signal. However, they’re unlikely to have the performance required for 270 or 360 Mb/s. With routers designed for wideband analog signals, the 1/√f frequency response characteristics will adversely affect the signal. Receivers intended to work with SMPTE 259M signals expect to see a signal transmitted from a standard source and attenuated by coaxial cable with frequency response losses. Any deviation from 6 dB/octave rolloff over a bandwidth of 1 MHz up to the clock frequency will cause improper operation of the automatic equalizer in the serial receiver. In general, this will be a significant problem because the analog router is designed to have flat response up to a certain bandwidth and then roll off at some slope that may, or may not, be 6 dB/octave. It’s the difference between the in-band and out-of-band response that causes the problem.

In between these two extremes are wideband serial digital routers that don’t reclock the signal. Such non-reclocking routers will generally perform adequately at all clock frequencies for the amount of coax attenuation given in their specifications. However, that specification will usually be shorter than for reclocking routers.

System Timing

The need to understand, plan, and measure signal timing has not been eliminated in digital video; it has just moved to a different set of values and parameters. In most cases, precise timing requirements will be relaxed in a digital environment and will be measured in microseconds, lines, and frames rather than nanoseconds. In many ways, distribution and timing are becoming simpler with digital processing because devices that have digital inputs and outputs can lend themselves to automatic input timing compensation. However, mixed analog/digital systems place additional constraints on the handling of digital signal timing.

Relative timing of multiple signals with respect to a reference is required in most systems. At one extreme, inputs to a composite analog production switcher must be timed to the nanosecond range so there will be no subcarrier phase errors. At the other extreme, most digital production switchers allow relative timing between input signals in the range of one horizontal line. Signal-to-signal timing requirements for inputs to digital video equipment can be placed in three categories:

1. Digital equipment with automatic input timing compensation.
2. Digital equipment without automatic input timing compensation.
3. Digital-to-analog converters without automatic timing compensation.

In the first case, the signals must be in the range of the automatic compensation, generally about one horizontal line. For system installation and maintenance it’s important to measure the relative time of such signals to ensure they’re within the automatic timing range with enough headroom to allow for any expected changes. Automatic timing adjustments are a key component for digital systems, but they cannot currently be applied to a serial data stream because of the high frequencies involved; the signal must be converted back to the parallel format for the adjustable delay. Therefore, automatic input timing is not available in all equipment.

For the second case, signals are nominally in time, as would be expected at the input to a routing switcher. SMPTE recommended practice RP 168, which applies to both 525- and 625-line operation, defines a timing window on a specific horizontal line where a vertical interval switch is to be performed. See Figure 4-1, which shows the switching area for field 1. (Field 2 switches on line 273 for 525 and line 319 for 625.) The window size is 10 µs, which would imply an allowable difference in time between the two signals of a few microseconds. A preferred timing difference is generally determined by the reaction of downstream equipment to the vertical interval switch. In the case of analog routing switchers, a very tight tolerance is often maintained based on subcarrier phase requirements. For serial digital signals, microseconds of timing difference may be acceptable. At the switch point, clock lock of the serial signal will be recovered in one or two digital words (10 to 20 serial clock periods). However, word framing will most likely be lost, causing the signal to assume some random value until the next synchronizing data (EAV) resets the word framing and produces full synchronization of the signal at the receiver. A signal with a random value for part of a line is clearly not suitable on-line to a program feed; however, downstream equipment can be designed to blank the undesired portion of the line. In the case where the undesired signal is not eliminated, the serial signal timing to the routing switcher will have to be within one or two nanoseconds so the disturbance doesn’t occur. Clearly, the downstream blanking approach is preferred.

Finally, the more difficult case of inputs to DACs without timing compensation may require the same accuracy as today’s analog systems, in the nanosecond range. This will be true where further analog processing is required, such as in analog production switchers (vision mixers). Alternatively, if the analog signal is simply a studio output, this high accuracy may not be required.

There’s a need for a variety of adjustable delay devices to address these new timing requirements. These include a multiple-line delay device and a frame delay device. A frame delay device, of course, would result in a video delay that could potentially create some annoying problems with regard to audio timing and the passing of time code through the system. Whenever audio and video signals are processed through different paths, a potential exists for differential audio-to-video delay. The differential delay caused by embedding audio is insignificant (under 1 ms). However, potential problems occur where frame delays are used and the video and audio follow separate paths.
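To give a sense of scale for the differential audio-to-video delay discussed above, the following sketch converts a number of frame delays into milliseconds. It is illustrative only. It assumes nominal frame rates of 30/1.001 frames per second for 525-line systems and 25 frames per second for 625-line systems, which this section does not state, and the names FRAME_RATE and video_delay_ms are hypothetical.

```python
from fractions import Fraction

# Assumed nominal frame rates (not given in the text above).
FRAME_RATE = {
    "525": Fraction(30000, 1001),  # about 29.97 frames per second
    "625": Fraction(25),           # 25 frames per second
}


def video_delay_ms(system, frames):
    """Video delay in milliseconds introduced by `frames` frame delays."""
    return float(Fraction(frames) / FRAME_RATE[system] * 1000)


if __name__ == "__main__":
    for system in ("525", "625"):
        for frames in (1, 2):
            delay = video_delay_ms(system, frames)
            print(f"{system}-line, {frames} frame(s): {delay:.2f} ms")
```

Under these assumptions a single frame delay is roughly 33 ms (525-line) or 40 ms (625-line), well above the sub-millisecond delay attributed to embedding audio, which is why separate audio and video paths need attention once frame delays are involved.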

Figure 4-1. Vertical interval switching area. (Diagram: line-number scales for 525-line and 625-line systems, Field 1, each with the switching area marked from 25 µs to 35 µs.)
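To put the lock-recovery figure from the System Timing discussion (one or two digital words, that is, 10 to 20 serial clock periods) into time units, the conversion can be sketched as below. This is a minimal illustration using the nominal serial rates mentioned in this guide (143, 270, and 360 Mb/s); the function name serial_clock_periods_ns is hypothetical.

```python
def serial_clock_periods_ns(periods, bit_rate_mbps):
    """Duration in nanoseconds of `periods` serial clock (bit) periods.

    One serial clock period is 1 / bit_rate; with the rate in Mb/s this is
    1000 / bit_rate_mbps nanoseconds.
    """
    return periods * 1000.0 / bit_rate_mbps


if __name__ == "__main__":
    for rate in (143, 270, 360):  # nominal serial rates in Mb/s
        one_word = serial_clock_periods_ns(10, rate)
        two_words = serial_clock_periods_ns(20, rate)
        print(f"{rate} Mb/s: {one_word:.1f} to {two_words:.1f} ns")
```

At 270 Mb/s this works out to roughly 37 to 74 ns, which is very short compared with the 10 µs switching window, so it is the loss of word framing until the next EAV, rather than clock recovery, that dominates the disturbance after a switch.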

Test and measurement of serial digital signals can be characterized in three different aspects: usage, methods, and operational environment. Types of usage would include: designer’s workbench, manufacturing quality assurance, user equipment