
Research Article

A DDoS Attack Detection Method Based on Hybrid Heterogeneous Multiclassifier Ensemble Learning

Bin Jia,1 Xiaohong Huang,1 Rujun Liu,2 and Yan Ma1

1 Information and Network Center, Institute of Network Technology, Beijing University of Posts and Telecommunications, Beijing 100876, China

2 School of CyberSpace Security, Beijing University of Posts and Telecommunications, Beijing 100876, China

Correspondence should be addressed to Bin Jia; jb_qd2010@bupt.edu.cn

Received 22 October 2016; Accepted 11 January 2017; Published 15 March 2017

Academic Editor: Jun Bi

Copyright © 2017 Bin Jia et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

The explosive growth and increasing diversity of Internet traffic have brought new and severe challenges to DDoS attack detection. To obtain a higher True Negative Rate (TNR), accuracy, and precision and to guarantee the robustness, stability, and universality of the detection system, in this paper we propose a DDoS attack detection method based on hybrid heterogeneous multiclassifier ensemble learning and design a heuristic detection algorithm based on Singular Value Decomposition (SVD) to construct our detection system. Experimental results show that our detection method is excellent in TNR, accuracy, and precision. Therefore, our algorithm has good detection performance for DDoS attacks. Through comparisons with Random Forest, $k$-Nearest Neighbor ($k$-NN), and Bagging, which comprise the component classifiers, when the three algorithms are used alone with SVD and without SVD (un-SVD), it is shown that our model is superior to the state-of-the-art attack detection techniques in system generalization ability, detection stability, and overall detection performance.

1. Introduction

The explosive growth and increasing diversity of Internet traffic have brought new and severe challenges to the detection of network attack behavior. Some traditional detection methods and techniques cannot meet the need for efficient and exact detection given the diversity and complexity of attack traffic in high-speed network environments, especially for DDoS attacks.

A Distributed Denial of Service (DDoS) attack is launched by remote-controlled Zombies. It is implemented by forcing a kidnapped computer or by consuming its resources, such as CPU cycles, memory, and network bandwidth. Moreover, Palmieri et al. [1] described an attack that exploits computing resources to waste energy and to increase costs. With networks migrating to cloud computing environments, the rate of DDoS attacks is growing substantially [2]. For DDoS attack detection, research in recent years mainly includes the following.

In 2014, Luo et al. [3] developed a mathematical model to estimate the combined impact of the DDoS attack pattern and the network environment on the attack effect by capturing the adjustment behaviors of the victim TCPs' congestion window. In 2015, Xiao et al. [4] presented an effective detection approach based on CKNN ($K$-nearest neighbors traffic classification with correlation analysis) and a grid-based method named r-polling to detect DDoS attacks. The methods can reduce the training data involved in the calculation and can exploit correlation information of the training data to improve the classification accuracy and to reduce the overhead caused by the density of training data. In 2016, Saied et al. [5] designed a model to detect and mitigate known and unknown DDoS attacks in real-time environments. They chose an Artificial Neural Network (ANN) algorithm to detect DDoS attacks based on specific characteristic features that separate DDoS attack traffic from normal traffic.

However, the existing detection methods still suffer from low True Negative Rate (TNR), accuracy, and precision. Moreover, their methods and models are homogeneous, so robustness, stability, and universality are difficult to guarantee. To address these problems, in this paper we propose a DDoS attack detection method based on hybrid heterogeneous multiclassifier ensemble learning.

Hindawi, Journal of Electrical and Computer Engineering, Volume 2017, Article ID 4975343, 9 pages, https://doi.org/10.1155/2017/4975343

Ensemble learning accomplishes the learning task by constructing and combining multiple individual classifiers. An ensemble of individual classifiers of the same type is homogeneous, and such an individual classifier is known as a "base classifier" or "weak classifier." An ensemble can also contain different types of individual classifiers, in which case it is heterogeneous. In a heterogeneous ensemble, the individual classifiers are generated by different learning algorithms and are called "component classifiers." Research on homogeneous base classifiers rests on a key hypothesis that the errors of the base classifiers are independent of each other. However, for actual attack traffic detection, this is apparently impossible. In addition, the accuracy and the diversity of individual classifiers conflict in nature. When the accuracy is very high, increasing the diversity becomes extremely difficult. Therefore, to obtain robust generalization ability, the individual classifiers ought to be both accurate and different.

An overwhelming majority of classifier ensembles are currently constructed based on the homogeneous base classifier model, which has been shown to obtain relatively good classification performance. However, according to some theoretical analyses, the smaller the error correlation between every two individual classifiers, the smaller the error of the ensemble system. At the same time, an increase in the error of a classifier has a negative effect on the ensemble. Therefore, the ensemble learning model for homogeneous individual classifiers cannot satisfy the need for higher ensemble performance [6].

According to the different diversity measures in [7], the larger the value of diversity, the larger the difference among classifiers and the higher the classification precision. From the above analysis, heterogeneous multiclassifier ensemble learning has better generalization performance than homogeneous multiclassifier ensemble learning.

This paper makes the following contributions. (i) To the best of our knowledge, this is the first attempt to apply the heterogeneous multiclassifier ensemble model to DDoS attack detection, and we provide the system model and its formulation. (ii) We design a heuristic detection algorithm based on Singular Value Decomposition (SVD) to construct the heterogeneous DDoS detection system, and we conduct thorough numerical comparisons between our method and several well-known machine learning algorithms with SVD and without SVD (un-SVD).

The rest of this paper is organized as follows. Section 2 describes the system model and the related problem formulation. Section 3 presents the DDoS detection algorithm in the heterogeneous classification ensemble learning model. Our experimental results and analysis are discussed in Section 4. In Section 5, we conclude this paper.

2. System Model and Problem Formulation

2.1. System Model. The classification learning model based on Rotation Forest and SVD aims at building accurate and diverse individual component classifiers. Here the model is used for DDoS attack detection. Rodríguez et al. [8] applied Rotation Forest and the Principal Component Analysis (PCA) algorithm to extract feature subsets and to reconstruct a full feature set for each component classifier in the ensemble model. However, PCA has some weaknesses. For instance, PCA sees all samples as a whole and searches for an optimal linear map projection on the premise of minimum mean-square error. Nonetheless, categorical attributes are ignored, and the ignored projection direction is likely to include some important divisible information. To overcome these shortcomings, in this paper we use SVD in place of PCA.

There are two key points in constructing a heterogeneous multiclassifier ensemble learning model [9]. The first is the selection of individual classifiers. The second is the assembly of individual classifiers. We discuss the two issues as follows.

(i) Firstly, classifiers in an ensemble should be different from each other; otherwise there is no gain in combining them. These differences cover diversity, orthogonality, and complementarity [10]. Secondly, Kuncheva and Rodríguez [11] proposed static classifier selection and dynamic classifier selection. The difference between the two methods is whether or not evaluation of competence is implemented during the classification. Because of the characteristic of prejudged competence, the dynamic classifier selection approach is better than the static classifier selection. Last but not least, powerful generalization ability is necessary in the heterogeneous classification ensemble model. The correlation between every two individual classifiers should be as low as possible, and the error of every individual classifier itself ought to be small. The relation between the error rate of the integrated system and the correlation of individual classifiers is given [6] by

$$E = \left(\frac{1 + \rho (N - 1)}{N}\right)\bar{E} + E_{\text{Optimal Bayes}}, \tag{1}$$

where $E$ denotes the Ensemble Generalization Error (EGE), $\bar{E}$ denotes the mean of the individual generalization errors, and $E_{\text{Optimal Bayes}}$ denotes the False Recognition Rate (FRR) obtained by using the Bayesian rules when all conditional probabilities are known. Here $\rho$ is the correlation between the individual classifiers and $N$ is their number. Therefore, the above formula shows that the ensemble error is smaller when individual differences are bigger and individual errors are smaller.
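To make the effect of the correlation term in (1) concrete, the following minimal sketch evaluates the formula for a few values of $\rho$. The function name `ensemble_error` and the numbers used are illustrative assumptions, not values from the experiments in this paper.

```python
def ensemble_error(mean_error, rho, n_classifiers, bayes_error=0.0):
    """Evaluate Eq. (1): E = ((1 + rho*(N - 1)) / N) * mean_error + bayes_error.

    All argument names are hypothetical; they mirror E-bar, rho, N and
    E_OptimalBayes in the text.
    """
    if n_classifiers < 1:
        raise ValueError("n_classifiers must be at least 1")
    factor = (1.0 + rho * (n_classifiers - 1)) / n_classifiers
    return factor * mean_error + bayes_error


# Illustrative values only: three classifiers with a mean error of 0.1.
for rho in (0.0, 0.5, 1.0):
    print(rho, ensemble_error(mean_error=0.1, rho=rho, n_classifiers=3))
```

With fully correlated classifiers ($\rho = 1$) the ensemble gains nothing over the mean individual error, while uncorrelated classifiers ($\rho = 0$) divide that error by $N$.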

(ii) The majority voting method is chosen as our strategy for combining all component classifiers. For the prediction label of every record in the testing data set, we choose the label that receives more than half of the votes as the final predicted outcome. The majority voting is given by

119867(119909) = 119875119894 if119905sum119894=1

ℎ119895119894 (119909) gt 12119898sum119897=1

119905sum119894=1

ℎ119897119894 (119909) (2)

Journal of Electrical and Computer Engineering 3

Primitive trainingdata set

Disjoint trainingdata subset

partition

Componentclassier 1

Componentclassier 2

Componentclassier k

Classicationresult 1

Classicationresult 2

Classicationresult k

Voting system basedon majority voting

method

Final classicationdetection result

Heterogeneous multiclassier detection module

Training subset 1

Testing subset 2

Training subset k

Testing subset k

Training subset 2

Testing subset 1

Primitive testing data set

New testing datasubset partition

Rotation matrixbased on SVD

Data set pretreatment moduleClassication result

acquisition module

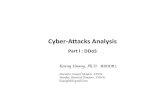

Figure 1 Hybrid heterogeneous multiclassifier ensemble classification model

where the 119898-dimension vector (ℎ1119894 (119909) ℎ2119894 (119909) ℎ119898119894 (119909)) isdenoted as prediction output ℎ119894 is on the sample 119909 and ℎ119895119894 (119909)is the output on the class label 119875119894

As shown in Figure 1, the proposed hybrid heterogeneous multiclassifier ensemble classification model includes three primary modules: the Data Set Pretreatment Module, the Heterogeneous Multiclassifier Detection Module, and the Classification Result Acquisition Module. In the Data Set Pretreatment Module, the primitive training data set is first split into $k$ disjoint training data subsets based on SVD. The new training data subsets are generated by the linearly independent base transformation. Secondly, the primitive testing data set is also split into $k$ data subsets corresponding to the features of the new training data subsets. Finally, the new testing data subsets are generated by Rotation Forest. In the Heterogeneous Multiclassifier Detection Module, the $k$ new training data subsets and testing data subsets are used as the input of the $k$ component classifiers, and the $k$ classification detection results are obtained. In the Classification Result Acquisition Module, the voting system votes on the $k$ results based on the majority voting method, and the final classification detection result is obtained.
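The majority voting rule of (2), as used by the Classification Result Acquisition Module, can be sketched as follows. This is a minimal illustration that assumes each component classifier casts exactly one vote per record; the function names are hypothetical.

```python
from collections import Counter


def majority_vote(votes):
    """Return the label that receives more than half of the votes, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    # Eq. (2): the winning label needs strictly more than half of all votes.
    return label if count > len(votes) / 2 else None


def vote_records(per_classifier_predictions):
    """Combine k lists of predictions (one list per classifier) record by record."""
    return [majority_vote(list(votes)) for votes in zip(*per_classifier_predictions)]
```

For example, `vote_records([["DDoS", "Normal"], ["DDoS", "DDoS"], ["Normal", "Normal"]])` labels the first record "DDoS" and the second "Normal". Returning `None` when no label exceeds half of the votes is a design choice of this sketch; with two classes and an odd number of classifiers that case cannot occur.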

2.2. Problem Formulation. We assume that an $m \times n$ matrix denotes a training data set, where $m$ represents the number of network traffic records and $n$ represents the number of characteristic attributes in a record. In addition, $D_i$ denotes the $i$th classifier in the heterogeneous ensemble. Here all component classifiers can be trained in parallel. The features of the training data set are split into $k$ disjoint subsets. Each feature subset contains $f$ ($f = [n/k]$) features. The SVD method is applied to every subset.

Suppose that $C$ is the $m \times f$ training subset matrix, $U$ is an $m \times m$ square matrix, and $V$ is an $f \times f$ square matrix. Here the columns of $U$ are orthogonal eigenvectors of $CC^T$ and the columns of $V^T$ are orthogonal eigenvectors of $C^TC$. In addition, $r$ is the rank of the matrix $C$. The SVD is given by

$$C = U \Sigma V^T, \tag{3}$$

where $\Sigma$ is an $m \times f$ matrix with $\Sigma_{ii} = \sqrt{\lambda_i}$, and the values of $\Sigma_{ii}$ are arranged in descending order. The values at all other locations are zero.

Then the singular values and the eigenvectors are computed by

$$(C^T C)\, v_i = \lambda_i v_i, \tag{4}$$

$$\sigma_i = \sqrt{\lambda_i}, \tag{5}$$

where $v_i$ represents the eigenvector and $\sigma_i$ represents the singular value.

The new training data subset $C'$, all of whose features are linearly independent, is obtained by

$$C' = C V^T. \tag{6}$$
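The relations in (3) to (6) can be checked numerically with a short NumPy sketch. It assumes that the matrix the text writes as $V^T$ is the one whose columns are the eigenvectors of $C^TC$ (NumPy's `svd` returns this matrix transposed, as `Vh`), and that the subset has at least as many records as features. The function name `svd_transform` is hypothetical.

```python
import numpy as np


def svd_transform(C):
    """Return the transformed subset, the eigenvector matrix and the singular values.

    Assumption: C has shape (m, f) with m >= f, so that Vh is f x f.
    """
    # The reduced SVD avoids building the m x m matrix U for large m.
    _, s, Vh = np.linalg.svd(C, full_matrices=False)
    eigvecs = Vh.T            # columns are eigenvectors of C^T C, Eq. (4)
    C_new = C @ eigvecs       # base transformation of Eq. (6)
    return C_new, eigvecs, s  # s[i] = sqrt(lambda_i), Eq. (5), in descending order
```

The columns of the returned `C_new` are mutually orthogonal, which is one way to see that the transformed features are linearly independent when $C$ has full column rank.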

So far, the singular values and the eigenvectors of the corresponding eigenvalues have been obtained by SVD for every subset. Next, the rotation matrix is obtained. It is denoted by

$$R_C = \begin{bmatrix} V_1 & [0] & \cdots & [0] \\ [0] & V_2 & \cdots & [0] \\ \vdots & \vdots & \ddots & \vdots \\ [0] & [0] & \cdots & V_k \end{bmatrix}, \tag{7}$$

where $V_i$ ($i = 1, 2, \ldots, k$) represents the eigenvector set of $C^TC$ for the $i$th training data subset $C$ in the main diagonal position, and null vectors are in the other positions.

The primitive testing data set $C_{Tp}$ is also split into $k$ data subsets corresponding to the features of the new training data subsets. To get the new testing data set $C_T$, the linearly independent base transformation is similarly applied by

$$C_T = C_{Tp} R_C. \tag{8}$$
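A minimal NumPy sketch of the block-diagonal rotation matrix in (7) and its use in (8) is given below. It assumes that the $n$ features are split into $k$ contiguous subsets and that every subset has at least as many records as features; both choices, and the function names, are assumptions of this illustration rather than details stated in the text.

```python
import numpy as np


def build_rotation_matrix(blocks):
    """Place the square matrices V_1..V_k on the main diagonal, zeros elsewhere."""
    n = sum(b.shape[0] for b in blocks)
    R = np.zeros((n, n))
    start = 0
    for b in blocks:
        size = b.shape[0]
        R[start:start + size, start:start + size] = b
        start += size
    return R


def rotation_from_training(C, k):
    """Run SVD on each of k contiguous feature subsets of C and assemble R_C."""
    subsets = np.array_split(np.arange(C.shape[1]), k)
    blocks = []
    for cols in subsets:
        _, _, Vh = np.linalg.svd(C[:, cols], full_matrices=False)
        blocks.append(Vh.T)  # columns are eigenvectors of the subset's C^T C
    return build_rotation_matrix(blocks)
```

Given a testing matrix `C_Tp` with the same feature order, the new testing data set of (8) is then `C_Tp @ rotation_from_training(C, k)`.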

One of the keys to good ensemble performance is diversity. There are several ways to inject diversity into an ensemble; the most common is the use of sampling [12]. To adequately consider the differentiation and complementarity between different classification algorithms, in this paper we select three typical machine learning algorithms as individual component classifiers: Bagging [13], Random Forest [14], and $k$-Nearest Neighbor ($k$-NN) [15]. Firstly, Bagging is the best-known representative of parallel ensemble learning methods. It employs "Random Sampling" in sampling the data set, and the algorithm focuses mainly on decreasing variance. Secondly, "Random Feature Selection" is further introduced in the training process for Random Forest. In constructing an individual decision tree, Bagging uses a "Deterministic Decision Tree," where all features in a node are considered in choosing the splitting attribute. However, a "Stochastic Decision Tree" is used in Random Forest, where only an attribute subset is considered. Thirdly, $k$-NN classifies by measuring the distance between the feature values of two samples. It usually employs the Euclidean distance given by

$$d = \sqrt{(x_1 - y_1)^2 + (x_2 - y_2)^2 + \cdots + (x_n - y_n)^2}, \tag{9}$$

where $(x_1, x_2, \ldots, x_n)$ and $(y_1, y_2, \ldots, y_n)$ represent two different sample records, each of which has $n$ features.
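As an illustration of how the distance in (9) drives a $k$-NN decision, the following minimal sketch classifies a query record by a plain vote among its nearest training records. The function names and the toy records in the usage note are assumptions of this example, not data from the experiments.

```python
import math
from collections import Counter


def euclidean(x, y):
    """Euclidean distance of Eq. (9) between two records with n features each."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def knn_predict(train_X, train_y, query, k=3):
    """Label the query with the most common label among its k nearest records."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: euclidean(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]
```

For instance, with training records `[(0, 0), (0, 1), (5, 5), (6, 5)]` labeled `["Normal", "Normal", "DDoS", "DDoS"]`, the query `(5, 6)` is labeled "DDoS" for `k=3`, because two of its three nearest records are attack records.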

In addition, a statistical test should be employed to eliminate the bias in the comparison of the tested algorithms. In our model, we use statistical normalization [16] as the statistical testing method. Its purpose is to convert data derived from any normal distribution to the standard normal distribution, so that 99.9% of the samples of the attribute are scaled into $[-3, 3]$. The statistical normalization is defined by

$$x_i = \frac{v_i - \mu}{\sigma}, \tag{10}$$

where $\mu$ is the mean of the $n$ values for a given attribute and $\sigma$ is its standard deviation. The mean and the standard deviation are given by

$$\mu = \frac{1}{n} \sum_{i=1}^{n} v_i, \tag{11}$$

$$\sigma = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (v_i - \mu)^2}. \tag{12}$$
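Equations (10) to (12) amount to a z-score with the population standard deviation. A minimal sketch for a single attribute follows; mapping a constant attribute to zeros is an assumption of this example, since the text does not say how $\sigma = 0$ is handled.

```python
import math


def statistical_normalize(values):
    """Apply Eq. (10) to one attribute, using mu and sigma from Eqs. (11), (12)."""
    n = len(values)
    mu = sum(values) / n
    sigma = math.sqrt(sum((v - mu) ** 2 for v in values) / n)
    if sigma == 0:
        # Assumption: a constant attribute carries no information, map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]
```

For the values `[2, 4, 4, 4, 5, 5, 7, 9]`, the mean is 5 and the standard deviation is 2, so the first value maps to -1.5 and the last to 2.0.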

3. A Detection Algorithm in Heterogeneous Classification Ensemble Model

In this section, we first describe the DDoS attack detection process in the heterogeneous multiclassifier ensemble model, and then the detection algorithm based on SVD is presented.

Firstly, the primitive training data set and the testing data set are each split into $k$ disjoint data subsets by the same feature fields. Secondly, the new training data subsets and the new testing data subsets are obtained by the linearly independent base transformation based on SVD and the Rotation Forest method. Thirdly, every new training data subset and every new testing data subset are normalized by the statistical normalization in a batch manner, and then they are input into the corresponding component classifier for learning and classification. The $k$ classification detection results are acquired. Finally, the voting system votes on the $k$ results based on the majority voting method and outputs the final classification detection result. Here we propose a heuristic algorithm to maintain stronger generalization and sufficient complementarity.

The classification detection algorithm is shown as follows.

Input:

$C$: a training data set, the $m \times n$ matrix

$C_{Tp}$: a testing data set, the $l \times n$ matrix

$k$: the number of data subsets and the number of component classifiers

Step 1: Split all features into $k$ subsets $F_i$ ($i = 1, 2, \ldots, k$); each feature subset contains $f$ ($f = [n/k]$) features.

Step 2: For $i = 1$ to $k$ do

Step 3: Apply SVD on the training subset, the $m \times f$ matrix.

Step 4: Get the linearly independent column eigenvector matrix $V^T$.

Step 5: Get the new training data subset $C'$ ($C' = C V^T$).

Step 6: Get the new testing data subset $C'_T$ ($C'_T = C_T V^T$).

Step 7: $C'$ and $C'_T$ are normalized by the statistical normalization in a batch manner.

Step 8: $C'$ and $C'_T$ are input into the component classifier.

Step 9: Get the classification label.

Step 10: End for

Step 11: Input the results of the $k$ component classifiers into the voting system based on the majority voting method.

Output:

The final label of a testing data record, label = {Normal, DDoS}.

In our algorithm, we use the parallelization principle to select the component classifiers. Under this principle, the component classifiers have no strong dependencies on each other. In addition, we select the Bagging, Random Forest, and $k$-NN algorithms as the component classifiers. In Bagging, the CART algorithm is used as the base learner of our heterogeneous classification ensemble model.
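Steps 1 to 11 can be sketched end to end in NumPy as follows. This is an illustration under several assumptions that the listing leaves open: features are split into contiguous subsets, component classifier $i$ is trained only on transformed subset $i$, the testing subset is normalized with the training subset's mean and standard deviation, and classifiers are any objects offering `fit` and `predict`. The `NearestCentroid` class is a hypothetical stand-in used only to keep the example self-contained; it is not one of the component classifiers of this paper.

```python
import numpy as np
from collections import Counter


class NearestCentroid:
    """Hypothetical stand-in classifier with fit/predict (not from the paper)."""

    def fit(self, X, y):
        y = np.asarray(y)
        self.labels_ = sorted(set(y.tolist()))
        self.centroids_ = np.array([X[y == c].mean(axis=0) for c in self.labels_])
        return self

    def predict(self, X):
        d = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return [self.labels_[i] for i in d.argmin(axis=1)]


def detect(C, y, C_test, classifiers):
    """Return one voted label per testing record, following Steps 1-11."""
    k = len(classifiers)
    subsets = np.array_split(np.arange(C.shape[1]), k)        # Step 1 (contiguous split assumed)
    all_votes = []
    for cols, clf in zip(subsets, classifiers):               # Step 2
        _, _, Vh = np.linalg.svd(C[:, cols], full_matrices=False)  # Step 3
        V = Vh.T                                              # Step 4: eigenvector columns
        train_new = C[:, cols] @ V                            # Step 5
        test_new = C_test[:, cols] @ V                        # Step 6
        mu = train_new.mean(axis=0)                           # Step 7 (training statistics assumed)
        sigma = train_new.std(axis=0)
        sigma[sigma == 0] = 1.0
        train_new = (train_new - mu) / sigma
        test_new = (test_new - mu) / sigma
        clf.fit(train_new, y)                                 # Step 8
        all_votes.append(list(clf.predict(test_new)))         # Step 9
    # Step 11: most common label per record; with two classes and odd k this is a majority.
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*all_votes)]
```

Any classifier objects with the same `fit`/`predict` interface could be passed in `classifiers`, for example scikit-learn's `BaggingClassifier`, `RandomForestClassifier`, and `KNeighborsClassifier` for the three component classifiers named above.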

4. Experimental Results and Analysis

In this section, we discuss how to apply the heterogeneous classification ensemble model to detecting DDoS attack traffic. We first present the data set and the data pretreatment method used in our experiments. Then the experimental results are given, and we analyze them and make comparisons with the homogeneous models based on the three selected algorithms with SVD and without SVD (un-SVD). The computer environment used to run our experiments is listed in Table 1.


Table 1: Computer experimental condition.

| CPU | Memory | Hard disk | OS | MATLAB |
|---|---|---|---|---|
| Intel Xeon CPU E5-2640 v2 @ 2.00 GHz 2.00 GHz (2 processors) | 32 GB | 2 TB | Windows Server 2008 R2 Enterprise | R2013a (8.1.0.604) 64-bit (win64) |

In this paper, we use the well-known Knowledge Discovery and Data mining (KDD) Cup 1999 data set [17, 18] to verify our detection model. This data set has been widely applied to research and evaluate network intrusion detection methods [19, 20]. The KDD CUP 1999 data set comprises about five million network traffic records, and it provides a training subset of 10 percent of the network records and a testing subset. Every record of the data set is described by four types of features: TCP connection basic features (9 features), TCP connective content features (13 features), time-based network traffic statistical characteristics (9 features), and host-based network traffic statistical characteristics (10 features). All 41 features in the four types are shown in Table 2.

In addition, the KDD CUP 1999 data set covers four main categories of attack: DoS, R2L, U2R, and Probing. Because the traffic records of "neptune" and "smurf" for DoS account for more than 99% and 96% in the abovementioned training subset and testing subset, respectively, we choose these two DoS types for evaluating our algorithm and comparing it with the three well-known existing machine learning algorithms in this paper.

4.1. Data Pretreatment. Firstly, because the training subset of 10 percent and the "corrected" testing subset in the KDD CUP 1999 data set include hundreds of thousands of network records, the hardware configuration of our server cannot handle the computation needed to process these data sets. Here we use the built-in "random ()" method in MySQL to randomly select one in every ten records of the abovementioned training subset and testing subset as our data sets. The data sets used in our experiments are listed in Table 3.

Secondly, each network traffic record in KDD CUP 1999 includes information that has been separated into 41 features plus 1 class label [21], and 3 of the features are nonnumeric. In our experiments, we transform these three features into the numeric type. The conversion process is as follows.

(i) TCP, UDP, and ICMP in the "protocol_type" feature are marked as 1, 2, and 3, respectively.

(ii) The 70 kinds of "service" for the destination host are sorted by their percentage in the training subset of 10 percent. We take the top three types, which are ecr_i, private, and http. The three types account for over 90%. The ecr_i, private, http, and all other types are marked as 1, 2, 3, and 0, respectively.

(iii) The "SF" is marked as 1, and the other ten false connection statuses are marked as 0 in the "flag" feature.

Table 2: All 41 features in the four types.

| Feature type | Features (number) |
|---|---|
| TCP connection basic features | (1) duration, (2) protocol_type, (3) service, (4) flag, (5) src_bytes, (6) dst_bytes, (7) land, (8) wrong_fragment, (9) urgent |
| TCP connective content features | (10) hot, (11) num_failed_logins, (12) logged_in, (13) num_compromised, (14) root_shell, (15) su_attempted, (16) num_root, (17) num_file_creations, (18) num_shells, (19) num_access_files, (20) num_outbound_cmds, (21) is_hot_login, (22) is_guest_login |
| Time-based network traffic statistical characteristics | (23) count, (24) srv_count, (25) serror_rate, (26) srv_serror_rate, (27) rerror_rate, (28) srv_rerror_rate, (29) same_srv_rate, (30) diff_srv_rate, (31) srv_diff_host_rate |
| Host-based network traffic statistical characteristics | (32) dst_host_count, (33) dst_host_srv_count, (34) dst_host_same_srv_rate, (35) dst_host_diff_srv_rate, (36) dst_host_same_src_port_rate, (37) dst_host_srv_diff_host_rate, (38) dst_host_serror_rate, (39) dst_host_srv_serror_rate, (40) dst_host_rerror_rate, (41) dst_host_srv_rerror_rate |
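The three conversion rules (i) to (iii) can be sketched as a small lookup. The dictionary and function names are hypothetical, and the sketch assumes the raw records spell the values as `tcp`, `udp`, `icmp`, `ecr_i`, `private`, `http`, and `SF`.

```python
# Codes from rule (i): protocol type to 1, 2, 3.
PROTOCOL_CODES = {"tcp": 1, "udp": 2, "icmp": 3}
# Codes from rule (ii): the top three services; every other service maps to 0.
SERVICE_CODES = {"ecr_i": 1, "private": 2, "http": 3}


def encode_record(protocol_type, service, flag):
    """Return the numeric codes for the three nonnumeric features of a record."""
    return (
        PROTOCOL_CODES[protocol_type.lower()],
        SERVICE_CODES.get(service, 0),
        1 if flag == "SF" else 0,  # rule (iii): "SF" is 1, any other status is 0
    )
```

For example, `encode_record("icmp", "ecr_i", "SF")` yields `(3, 1, 1)`, while a record with service `smtp` and flag `S0` over TCP yields `(1, 0, 0)`.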


Table 3: Data sets used in our experiments.

| Category | Training data set | Testing data set |
|---|---|---|
| Normal | 9728 | 6059 |
| DoS | 38800 | 22209 |

4.2. Fivefold Cross-Validation. Cross-validation is an effective statistical technique to ensure the robustness of a model. In this paper, to improve the reliability of the experimental data and to verify the availability of our model, a fivefold cross-validation approach is used in our experiments.

The training data set is randomly split into five parts. In turn, we take out one part as the actual training data set and use the other four parts together with the testing data set as the final testing data set. The aforementioned statistical normalization method in Section 2.2 is employed as the data statistical test.
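The split described above differs from the usual fivefold scheme in that one part is used for training and the remaining four parts join the testing data. A minimal sketch of that reading follows; the function name, the fixed seed, and the round-robin assignment of shuffled records to parts are assumptions of this example.

```python
import random


def fivefold_splits(train_records, test_records, folds=5, seed=0):
    """Yield (actual_train, final_test) pairs, one per fold."""
    shuffled = list(train_records)
    random.Random(seed).shuffle(shuffled)
    # Deal the shuffled records into `folds` parts of (nearly) equal size.
    parts = [shuffled[i::folds] for i in range(folds)]
    for i in range(folds):
        actual_train = parts[i]
        others = [r for j, part in enumerate(parts) if j != i for r in part]
        yield actual_train, others + list(test_records)
```

Each of the five iterations therefore trains on one fifth of the training data set and tests on the other four fifths plus the whole testing data set.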

4.3. Evaluation Index. Deciding whether network traffic is normal or an attack is a binary classification problem, and we need some evaluation indexes to evaluate it. In this paper, we use three typical indexes to measure our detection model: TNR, accuracy, and precision. Here TNR denotes the proportion of normal samples that are correctly recognized as normal samples in the testing data set. It reflects how reliably the detection model discerns normal samples. Accuracy denotes the proportion of correctly classified samples among all samples in the testing data set. It reflects the ability to differentiate normal samples from attack samples. Precision denotes the proportion of true attack samples among all samples recognized as attacks by the detection model in the testing data set. TNR, accuracy, and precision are formulated as follows:

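$$\text{TNR} = \frac{\text{TN}}{\text{FP} + \text{TN}}, \tag{13}$$

$$\text{Accuracy} = \frac{\text{TP} + \text{TN}}{\text{TP} + \text{FP} + \text{TN} + \text{FN}}, \tag{14}$$

$$\text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}}. \tag{15}$$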

The performance of a classification detection model is evaluated by the counts of records for the normal samples and the attack samples. The matrix built from these counts is called the confusion matrix [22]. It is listed in Table 4, where

(1) TP (True Positive) is the number of attacks correctly classified as attacks;

(2) FP (False Positive) is the number of normal records incorrectly classified as attacks;

(3) TN (True Negative) is the number of normal records correctly classified as normal records;

(4) FN (False Negative) is the number of attacks incorrectly classified as normal records.

Table 4: Confusion matrix.

| | Predicted DoS | Predicted normal | Total |
|---|---|---|---|
| Original DoS | TP | FN | P |
| Original normal | FP | TN | N |
| Total | P′ | N′ | P + N (P′ + N′) |
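The counts of Table 4 and the indexes of (13) to (15) can be computed with a few lines. In this minimal sketch, the function names and the label string `"DoS"` are assumptions, and a ratio with a zero denominator is reported as 0.0, which is a choice of this example rather than something the text specifies.

```python
def confusion_counts(actual, predicted, attack_label="DoS"):
    """Count TP, FP, TN, FN with the attack class treated as positive."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == attack_label:
            if a == attack_label:
                tp += 1
            else:
                fp += 1
        else:
            if a == attack_label:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def _ratio(numerator, denominator):
    # Assumption: report 0.0 when the denominator is zero.
    return numerator / denominator if denominator else 0.0


def evaluate(tp, fp, tn, fn):
    """Return TNR, accuracy and precision as defined in Eqs. (13)-(15)."""
    return {
        "TNR": _ratio(tn, fp + tn),
        "Accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "Precision": _ratio(tp, tp + fp),
    }
```

For a toy run with three attack records (two detected) and two normal records (one misclassified as an attack), the counts are TP = 2, FP = 1, TN = 1, FN = 1, giving a TNR of 0.5, an accuracy of 0.6, and a precision of 2/3.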

4.4. Experimental Results and Discussion. In this section, our heterogeneous detection model is compared with Random Forest, $k$-NN, and Bagging, which comprise the component classifiers, when the three algorithms are used by themselves. Our comparisons are made with SVD and without SVD (un-SVD), respectively.

We chose the threshold values based on past experience and on many experiments. In this paper, we finally select eight threshold values to evaluate the performance of our model. Experimental results demonstrate that the TNR, accuracy, and precision of our model are excellent and that the model is more stable than the three individual algorithms in TNR, accuracy, and precision.

In Figure 2(a), our detection model is compared for TNR with Random Forest, $k$-NN, and Bagging when each of them is processed by SVD. It is shown that the TNRs of our model are about 99.4% for different threshold values. However, the TNRs of Random Forest, $k$-NN, and Bagging are about 99.8%, 99.1%, and 17.8%, respectively. Therefore, the TNR of our model is very close to the TNR of Random Forest, and it is superior to the TNRs of $k$-NN and Bagging.

In Figure 2(b), our detection model is compared for TNR with Random Forest, $k$-NN, and Bagging when each of them constructs its component classifiers alone by un-SVD. It is shown that the TNRs of Random Forest and Bagging are both about 99.9%. The TNR of $k$-NN falls from 99.6% to 98.1% when the threshold value ranges from 25 to 200. The experimental results demonstrate that the TNR of our model is close to the TNRs of Random Forest and Bagging, and it is superior to the TNR of $k$-NN. In addition, the TNR of $k$-NN is relatively unstable as the threshold value changes.

In Figure 3(a), our detection model is compared for accuracy with Random Forest, $k$-NN, and Bagging when each of them is processed by SVD. It is shown that the accuracies of our model are about 99.8%. However, the accuracies of Random Forest, $k$-NN, and Bagging are about 99.9%, 21.2%, and 82.3%, respectively. Therefore, the accuracy of our model is very close to the accuracy of Random Forest, and it is superior to the accuracies of $k$-NN and Bagging.

In Figure 3(b), our detection model is compared for accuracy with Random Forest, $k$-NN, and Bagging when each of them constructs its component classifiers alone by un-SVD. It is shown that the accuracies of Random Forest and Bagging are both about 99.9%. The accuracy of $k$-NN falls from 99.89% to 88.48% when the threshold value ranges from 25 to 75. The experimental results demonstrate that the accuracy of our model is close to the accuracies of Random Forest and Bagging, and it is superior to the accuracy of $k$-NN. In addition, the accuracy of $k$-NN is relatively unstable as the threshold value changes.

Figure 2: TNR for comparing our model with the other algorithms. (a) TNR comparison based on SVD. (b) TNR comparison based on un-SVD. Each panel plots TNR (%) against the threshold value (25 to 200) for our model, Random Forest, $k$-NN, and Bagging.

Figure 3: Accuracy for comparing our model with the other algorithms. (a) Accuracy comparison based on SVD. (b) Accuracy comparison based on un-SVD. Each panel plots accuracy (%) against the threshold value (25 to 200) for our model, Random Forest, $k$-NN, and Bagging.

In Figure 4(a), our detection model is compared for precision with Random Forest, $k$-NN, and Bagging when each of them is processed by SVD. It is shown that the precisions of our model are about 99.84%. However, the precisions of Random Forest, $k$-NN, and Bagging are about 99.9%, 0, and 81.6%, respectively. Therefore, the precision of our model is very close to the precision of Random Forest, and it is superior to the precisions of $k$-NN and Bagging.

In Figure 4(b), our detection model is compared for precision with Random Forest, $k$-NN, and Bagging when each of them constructs its component classifiers alone by un-SVD. It is shown that the precisions of Random Forest and Bagging are both about 99.98%. The precision of $k$-NN falls from 99.9% to 99.5% when the threshold value ranges from 25 to 75. The experimental results demonstrate that the precision of our model is close to the precisions of Random Forest and Bagging, and it is superior to the precision of $k$-NN. In addition, the precision of $k$-NN is relatively unstable as the threshold value changes.

Figure 4: Precision for comparing our model with the other algorithms. (a) Precision comparison based on SVD. (b) Precision comparison based on un-SVD. Each panel plots precision (%) against the threshold value (25 to 200) for our model, Random Forest, $k$-NN, and Bagging.

5. Conclusions

Efficient and exact DDoS attack detection is a key problem given the diversity and complexity of attack traffic in the high-speed Internet environment. In this paper, we study the problem from the perspective of hybrid heterogeneous multiclassifier ensemble learning. Moreover, to obtain stronger generalization and more sufficient complementarity, we propose a heterogeneous detection system model, and we construct the component classifiers of the model based on the Bagging, Random Forest, and $k$-NN algorithms. In addition, we design a detection algorithm based on SVD in the heterogeneous classification ensemble model. Experimental results show that our detection method is excellent and very stable in TNR, accuracy, and precision. Therefore, our algorithm and model have good detection performance for DDoS attacks.

Competing Interests

The authors declare that they have no competing interests.

Acknowledgments

The work in this paper is supported by the Joint Funds of National Natural Science Foundation of China and Xinjiang (Project U1603261).

References

[1] F. Palmieri, S. Ricciardi, U. Fiore, M. Ficco, and A. Castiglione, “Energy-oriented denial of service attacks: an emerging menace for large cloud infrastructures,” The Journal of Supercomputing, vol. 71, no. 5, pp. 1620–1641, 2015.

[2] Q. Yan, F. R. Yu, Q. X. Gong, and J. Q. Li, “Software-defined networking (SDN) and distributed denial of service (DDoS) attacks in cloud computing environments: a survey, some research issues, and challenges,” IEEE Communications Surveys & Tutorials, vol. 18, no. 1, pp. 602–622, 2016.

[3] J. Luo, X. Yang, J. Wang, J. Xu, J. Sun, and K. Long, “On a mathematical model for low-rate shrew DDoS,” IEEE Transactions on Information Forensics and Security, vol. 9, no. 7, pp. 1069–1083, 2014.

[4] P. Xiao, W. Y. Qu, H. Qi, and Z. Y. Li, “Detecting DDoS attacks against data center with correlation analysis,” Computer Communications, vol. 67, pp. 66–74, 2015.

[5] A. Saied, R. E. Overill, and T. Radzik, “Detection of known and unknown DDoS attacks using artificial neural networks,” Neurocomputing, vol. 172, pp. 385–393, 2016.

[6] S. S. Mao, L. Xiong, L. C. Jiao, S. Zhang, and B. Chen, “Isomerous multiple classifier ensemble via transformation of the rotating forest,” Journal of Xidian University, vol. 41, no. 5, pp. 48–53, 2014.

[7] L. I. Kuncheva and C. J. Whitaker, “Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy,” Machine Learning, vol. 51, no. 2, pp. 181–207, 2003.

[8] J. J. Rodríguez, L. I. Kuncheva, and C. J. Alonso, “Rotation forest: a new classifier ensemble method,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 28, no. 10, pp. 1619–1630, 2006.

[9] Z. L. Fu and X. H. Zhao, “Dynamic combination method of classifiers and ensemble learning algorithms based on classifiers combination,” Journal of Sichuan University (Engineering Science Edition), vol. 43, no. 2, pp. 58–65, 2011.

[10] L. I. Kuncheva, “That elusive diversity in classifier ensembles,” in Proceedings of the 1st Iberian Conference on Pattern Recognition and Image Analysis (ibPRIA ’03), pp. 1126–1138, Springer, Mallorca, Spain, June 2003.

[11] L. I. Kuncheva and J. J. Rodríguez, “Classifier ensembles with a random linear oracle,” IEEE Transactions on Knowledge and Data Engineering, vol. 19, no. 4, pp. 500–508, 2007.

[12] J. F. Díez-Pastor, J. J. Rodríguez, C. García-Osorio, and L. I. Kuncheva, “Random balance: ensembles of variable priors classifiers for imbalanced data,” Knowledge-Based Systems, vol. 85, pp. 96–111, 2015.

[13] L. Breiman, “Bagging predictors,” Machine Learning, vol. 24, no. 2, pp. 123–140, 1996.

[14] L. Breiman, “Random forests,” Machine Learning, vol. 45, no. 1, pp. 5–32, 2001.

[15] D. T. Larose, “k-nearest neighbor algorithm,” in Discovering Knowledge in Data: An Introduction to Data Mining, pp. 90–106, 2nd edition, 2005.

[16] W. Wang, X. Zhang, S. Gombault, and S. J. Knapskog, “Attribute normalization in network intrusion detection,” in Proceedings of the 10th International Symposium on Pervasive Systems, Algorithms, and Networks (I-SPAN ’09), pp. 448–453, IEEE, Kaohsiung, Taiwan, December 2009.

[17] R. Lippmann, J. W. Haines, D. J. Fried, J. Korba, and K. Das, “The 1999 DARPA off-line intrusion detection evaluation,” Computer Networks, vol. 34, no. 4, pp. 579–595, 2000.

[18] J. McHugh, “Testing intrusion detection systems: a critique of the 1998 and 1999 DARPA intrusion detection system evaluations as performed by Lincoln Laboratory,” ACM Transactions on Information and System Security (TISSEC), vol. 3, no. 4, pp. 262–294, 2000.

[19] K. C. Khor, C. Y. Ting, and S. Phon-Amnuaisuk, “A cascaded classifier approach for improving detection rates on rare attack categories in network intrusion detection,” Applied Intelligence, vol. 36, no. 2, pp. 320–329, 2012.

[20] P. Prasenna, R. K. Kumar, A. V. T. R. Ramana, and A. Devanbu, “Network programming and mining classifier for intrusion detection using probability classification,” in Proceedings of the International Conference on Pattern Recognition, Informatics and Medical Engineering (PRIME ’12), pp. 204–209, IEEE, Tamilnadu, India, March 2012.

[21] C. Bae, W. C. Yeh, M. A. M. Shukran, Y. Y. Chung, and T. J. Hsieh, “A novel anomaly-network intrusion detection system using ABC algorithms,” International Journal of Innovative Computing, Information and Control, vol. 8, no. 12, pp. 8231–8248, 2012.

[22] C. Guo, Y. Zhou, Y. Ping, Z. Zhang, G. Liu, and Y. Yang, “A distance sum-based hybrid method for intrusion detection,” Applied Intelligence, vol. 40, no. 1, pp. 178–188, 2014.


their methods or models are homogeneous, so robustness, stability, and universality are difficult to guarantee. To address the abovementioned problems, in this paper we propose a DDoS attack detection method based on hybrid heterogeneous multiclassifier ensemble learning.

Ensemble learning accomplishes a learning task by constructing and combining multiple individual classifiers. An ensemble is homogeneous when all individual classifiers are of the same type; such an individual classifier is known as a “base classifier” or “weak classifier.” An ensemble can also contain different types of individual classifiers, in which case it is heterogeneous. In a heterogeneous ensemble, the individual classifiers are generated by different learning algorithms and are called “component classifiers.” Research on homogeneous base classifiers rests on the key hypothesis that the errors of the base classifiers are independent of each other. For actual attack traffic detection, however, this independence evidently cannot hold. In addition, the accuracy and the diversity of individual classifiers conflict by nature: when the accuracy is very high, increasing the diversity becomes extremely difficult. Therefore, to achieve robust generalization ability, the individual classifiers ought to be both accurate and different from one another.

The overwhelming majority of classifier ensembles are currently constructed on the homogeneous base classifier model, which has been shown to obtain relatively good classification performance. However, according to some theoretical analyses, the smaller the error correlation between every two individual classifiers, the smaller the error of the ensemble system. At the same time, when the error of an individual classifier increases, it has a negative effect on the ensemble. Therefore, the ensemble learning model for homogeneous individual classifiers cannot satisfy the need for higher ensemble performance [6].

According to the different diversity measures in [7], the larger the diversity value, the larger the difference among classifiers and the higher the classification precision. From the above analysis, heterogeneous multiclassifier ensemble learning has better generalization performance than homogeneous multiclassifier ensemble learning.

This paper makes the following contributions. (i) To the best of our knowledge, this is the first attempt to apply the heterogeneous multiclassifier ensemble model to DDoS attack detection, and we provide the system model and its formulation. (ii) We design a heuristic detection algorithm based on Singular Value Decomposition (SVD) to construct the heterogeneous DDoS detection system, and we conduct thorough numerical comparisons between our method and several well-known machine learning algorithms, with SVD and without SVD (un-SVD).

The rest of this paper is organized as follows. Section 2 describes the system model and the related problem formulation. Section 3 presents the DDoS detection algorithm in the heterogeneous classification ensemble learning model. Our experimental results and analysis are discussed in Section 4. In Section 5, we conclude this paper.

2. System Model and Problem Formulation

2.1. System Model. The classification learning model based on Rotation Forest and SVD aims at building accurate and diverse individual component classifiers. Here the model is used for DDoS attack detection. Rodríguez et al. [8] applied Rotation Forest and the Principal Component Analysis (PCA) algorithm to extract feature subsets and to reconstruct a full feature set for each component classifier in the ensemble model. However, PCA has some weaknesses. For instance, PCA treats all samples as a whole and searches for an optimal linear projection under the premise of minimum mean-square error, while ignoring categorical attributes. The discarded projection directions are likely to include important discriminative information. To overcome these shortcomings, in this paper we use SVD in place of PCA.

There are two key points in constructing a heterogeneous multiclassifier ensemble learning model [9]. The first is the selection of individual classifiers. The second is the assembly of individual classifiers. We discuss the two issues as follows.

(i) Firstly, classifiers in an ensemble should be different from each other; otherwise there is no gain in combining them. These differences cover diversity, orthogonality, and complementarity [10]. Secondly, Kuncheva and Rodríguez [11] proposed static classifier selection and dynamic classifier selection. The two methods differ in whether or not the evaluation of competence is performed during classification. Because of the characteristic of prejudged competence, the dynamic classifier selection approach is better than static classifier selection. Last but not least, powerful generalization ability is necessary in the heterogeneous classification ensemble model. The individual classifiers should be as uncorrelated with each other as possible, and the error of every individual classifier should be small. The relation between the error rate of the integrated system and the correlation of the individual classifiers is given [6] by

$$E = \frac{1 + \rho (N - 1)}{N}\,\bar{E} + E_{\text{Optimal Bayes}}, \tag{1}$$

where $E$ denotes the Ensemble Generalization Error (EGE), $\bar{E}$ denotes the mean of the individual generalization errors, $\rho$ is the error correlation among the individual classifiers, $N$ is the number of individual classifiers, and $E_{\text{Optimal Bayes}}$ denotes the False Recognition Rate (FRR) obtained by using the Bayesian rules when all conditional probabilities are known. The formula shows that the ensemble error is smaller when the differences among individuals are larger (that is, $\rho$ is smaller) and the individual errors are smaller.
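As a small numerical illustration of equation (1), the following sketch evaluates the ensemble error for a few correlation values. It reads $\rho$ as the error correlation and $N$ as the number of classifiers; the function name and the input values are hypothetical and are not taken from the experiments in this paper.

```python
def ensemble_error(mean_error, rho, n_classifiers, bayes_error=0.0):
    """Equation (1): E = (1 + rho*(N - 1)) / N * mean_error + bayes_error."""
    if n_classifiers < 1:
        raise ValueError("n_classifiers must be at least 1")
    factor = (1.0 + rho * (n_classifiers - 1)) / n_classifiers
    return factor * mean_error + bayes_error


# Hypothetical setting: three classifiers with a mean individual error of 0.06.
for rho in (0.0, 0.5, 1.0):
    print(rho, round(ensemble_error(0.06, rho, 3), 4))
```

With uncorrelated errors ($\rho = 0$) the mean error is divided by $N$, while fully correlated errors ($\rho = 1$) give no gain over a single classifier.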

(ii) The majority voting method is chosen as our combination strategy for all component classifiers. For the prediction label of every record in the testing data set, we choose the label that receives more than half of the votes as the final prediction. Majority voting is given by

$$H(x) = P_j \quad \text{if } \sum_{i=1}^{t} h_i^j(x) > \frac{1}{2} \sum_{l=1}^{m} \sum_{i=1}^{t} h_i^l(x), \tag{2}$$

where the $m$-dimensional vector $(h_i^1(x), h_i^2(x), \ldots, h_i^m(x))$ denotes the prediction output of classifier $h_i$ ($i = 1, \ldots, t$) on the sample $x$, and $h_i^j(x)$ is the output of $h_i$ on the class label $P_j$.

Figure 1: Hybrid heterogeneous multiclassifier ensemble classification model. [Diagram omitted. Data set pretreatment module: the primitive training data set is partitioned into disjoint training data subsets, a rotation matrix based on SVD is derived, and the primitive testing data set is partitioned into new testing data subsets, giving training subsets 1 to k and testing subsets 1 to k. Heterogeneous multiclassifier detection module: component classifiers 1 to k produce classification results 1 to k. Classification result acquisition module: a voting system based on the majority voting method outputs the final classification detection result.]
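The majority voting rule can be sketched as follows, assuming each component classifier emits one crisp label per record, so that each output $h_i^j(x)$ is either 0 or 1 and the double sum on the right-hand side equals the number of classifiers. The function name is hypothetical, and returning None when no label has a strict majority is a choice made for this sketch, not something stated in the paper.

```python
from collections import Counter


def majority_vote(labels):
    """Return the label holding more than half of the votes, else None."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    # Strict majority: the winning label needs more than half of all votes.
    return label if count > len(labels) / 2 else None


print(majority_vote(["DDoS", "Normal", "DDoS"]))
```

With an odd number of classifiers and two classes (Normal and DDoS), a strict majority always exists, so the None branch only matters for even-sized or multiclass ensembles.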

As shown in Figure 1, the proposed hybrid heterogeneous multiclassifier ensemble classification model includes three primary modules: the Data Set Pretreatment Module, the Heterogeneous Multiclassifier Detection Module, and the Classification Result Acquisition Module. In the Data Set Pretreatment Module, the primitive training data set is first split into $k$ disjoint training data subsets based on SVD, and the new training data subsets are generated by the linearly independent base transformation. Secondly, the primitive testing data set is also split into $k$ data subsets corresponding to the features of the new training data subsets. Finally, the new testing data subsets are generated by Rotation Forest. In the Heterogeneous Multiclassifier Detection Module, the $k$ new training data subsets and testing data subsets are used as the input of the $k$ component classifiers, which yield $k$ classification detection results. In the Classification Result Acquisition Module, the voting system votes on the $k$ results based on the majority voting method, and the final classification detection result is obtained.

2.2. Problem Formulation. We assume that an $m \times n$ matrix denotes the training data set, where $m$ is the number of network traffic records and $n$ is the number of characteristic attributes in a record. In addition, $D_i$ denotes the $i$th classifier in the heterogeneous ensemble. All component classifiers can be trained in parallel. The features of the training data set are split into $k$ disjoint subsets, each containing $f$ ($f = [n/k]$) features. The SVD method is applied to every subset.

Suppose that $C$ is the $m \times f$ training subset matrix, $U$ is an $m \times m$ square matrix, and $V$ is an $f \times f$ square matrix. The columns of $U$ are orthogonal eigenvectors of $CC^T$, and the columns of $V^T$ are orthogonal eigenvectors of $C^TC$. In addition, $r$ is the rank of the matrix $C$. The SVD is given by

$$C = U \Sigma V^T, \tag{3}$$

where $\Sigma$ is an $m \times f$ matrix with $\Sigma_{ii} = \sqrt{\lambda_i}$, the values $\Sigma_{ii}$ are arranged in descending order, and all other entries are zero.

The singular values and the eigenvectors are then computed by

$$(C^T C)\, v_i = \lambda_i v_i, \tag{4}$$

$$\sigma_i = \sqrt{\lambda_i}, \tag{5}$$

where $v_i$ represents the eigenvector and $\sigma_i$ represents the singular value.

The new training data subset $C'$, whose features are all linearly independent, is obtained by

$$C' = C V^T. \tag{6}$$

So far, the singular values and the eigenvectors of the corresponding eigenvalues have been obtained by SVD for every subset. Next, the rotation matrix is obtained. It is denoted by

$$R_C = \begin{bmatrix} V_1 & [0] & \cdots & [0] \\ [0] & V_2 & \cdots & [0] \\ \vdots & \vdots & \ddots & \vdots \\ [0] & [0] & \cdots & V_k \end{bmatrix}, \tag{7}$$

where $V_i$ ($i = 1, 2, \ldots, k$), the eigenvector set of $C^TC$ for the $i$th training data subset $C$, lies on the main diagonal, and null vectors occupy the other positions.

The primitive testing data set $C_{Tp}$ is also split into $k$ data subsets corresponding to the features of the new training data subsets. To get the new testing data set $C_T$, the linearly independent base transformation is likewise applied:

$$C_T = C_{Tp} R_C. \tag{8}$$
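The construction in equations (3) to (8) can be illustrated with a short NumPy sketch. It rests on several assumptions: the feature columns are grouped contiguously, the data are random stand-ins rather than network records, and the projection multiplies each subset by the matrix whose columns are its right singular vectors, which is one reading of the notation $C' = CV^T$ used in the text. The function name `svd_rotation` is hypothetical.

```python
import numpy as np


def svd_rotation(train, k):
    """Build a block-diagonal rotation matrix from per-subset SVDs.

    train: (m, n) array whose n feature columns are split into k contiguous
    subsets (an assumption; the grouping rule is not given in the text).
    """
    n = train.shape[1]
    rotation = np.zeros((n, n))
    for cols in np.array_split(np.arange(n), k):
        # numpy returns V^T; the columns of V are eigenvectors of C^T C.
        _, _, vt = np.linalg.svd(train[:, cols], full_matrices=True)
        rotation[np.ix_(cols, cols)] = vt.T
    return rotation


rng = np.random.default_rng(0)
train = rng.normal(size=(20, 6))   # stand-in for the training matrix
test = rng.normal(size=(5, 6))     # stand-in for the testing matrix
R = svd_rotation(train, k=3)
new_train = train @ R              # rotated training features
new_test = test @ R                # the same rotation is reused for testing
```

Because each diagonal block is orthogonal, the whole rotation matrix is orthogonal, and the rotated training columns within one subset are mutually orthogonal.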

One of the keys to good ensemble performance is diversity. There are several ways to inject diversity into an ensemble; the most common is the use of sampling [12]. To take adequate account of the differences and complementarity among classification algorithms, in this paper we select three typical machine learning algorithms as individual component classifiers: Bagging [13], Random Forest [14], and k-Nearest Neighbor (k-NN) [15]. Firstly, Bagging is the best-known representative of parallel ensemble learning methods. It employs “Random Sampling” when sampling the data set, and the algorithm focuses mainly on decreasing variance. Secondly, Random Forest further introduces “Random Feature Selection” into the training process. When constructing an individual decision tree, Bagging uses a “Deterministic Decision Tree,” in which all features at a node are considered when choosing the splitting attribute, whereas Random Forest uses a “Stochastic Decision Tree,” in which only a subset of attributes is considered. Thirdly, k-NN classifies by measuring the distance between the feature values of different records. It usually employs the Euclidean distance, given by

$$d = \sqrt{(x_1 - y_1)^2 + (x_2 - y_2)^2 + \cdots + (x_n - y_n)^2}, \tag{9}$$

where $(x_1, x_2, \ldots, x_n)$ and $(y_1, y_2, \ldots, y_n)$ represent two different sample records, each with $n$ features.
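To make the role of equation (9) concrete, the sketch below computes the Euclidean distance and uses it in a bare-bones k-NN prediction. The helper names and the toy two-feature records are hypothetical; this is an illustration of the distance-based idea, not the k-NN implementation used in the experiments.

```python
import math
from collections import Counter


def euclidean(x, y):
    """Equation (9): straight-line distance between two n-feature records."""
    if len(x) != len(y):
        raise ValueError("records must have the same number of features")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def knn_predict(train_records, train_labels, query, k=3):
    """Label a query by the most common label among its k nearest records."""
    nearest = sorted(zip(train_records, train_labels),
                     key=lambda pair: euclidean(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Toy records with two features each (hypothetical values).
records = [(0.0, 0.0), (0.1, 0.2), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["Normal", "Normal", "DDoS", "DDoS", "DDoS"]
print(euclidean((0, 0), (3, 4)))                      # 5.0
print(knn_predict(records, labels, (4.9, 5.0), k=3))  # DDoS
```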

In addition, a statistical test should be employed to eliminate bias in the comparison of the tested algorithms. In our model, we use statistical normalization [16] as the statistical testing method. Its purpose is to convert data derived from any normal distribution into the standard normal distribution; under this method, 99.9% of the samples of an attribute are scaled into $[-3, 3]$. The statistical normalization is defined by

$$x_i = \frac{v_i - \mu}{\sigma}, \tag{10}$$

where $\mu$ is the mean of the $n$ values of a given attribute and $\sigma$ is its standard deviation. The mean and the standard deviation are given by

$$\mu = \frac{1}{n} \sum_{i=1}^{n} v_i, \tag{11}$$

$$\sigma = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (v_i - \mu)^2}. \tag{12}$$
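Equations (10) to (12) amount to a z-score with the population standard deviation (division by $n$). A minimal sketch for one attribute column follows; the function name is hypothetical, and mapping a constant attribute to zeros is an assumption made here to avoid division by zero, since the text does not cover that case.

```python
import math


def statistical_normalize(values):
    """Equations (10)-(12): z-score using the population standard deviation."""
    n = len(values)
    mu = sum(values) / n
    sigma = math.sqrt(sum((v - mu) ** 2 for v in values) / n)
    if sigma == 0:
        # Constant attribute: assumed here to map every value to 0.
        return [0.0] * n
    return [(v - mu) / sigma for v in values]


print(statistical_normalize([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]))
# [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```

In the example the mean is 5 and the standard deviation is 2, so every value is shifted by 5 and halved.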

3. A Detection Algorithm in Heterogeneous Classification Ensemble Model

In this section, we first describe the DDoS attack detection process in the heterogeneous multiclassifier ensemble model, and then the detection algorithm based on SVD is presented.

Firstly, the primitive training data set and the testing data set are each split into $k$ disjoint data subsets by the same feature fields. Secondly, the new training data subsets and the new testing data subsets are obtained by the linearly independent base transformation based on SVD and the Rotation Forest method. Thirdly, every new training data subset and every new testing data subset is normalized by statistical normalization in a batch manner and then input into a component classifier for learning and classification, which yields $k$ classification detection results. Finally, the voting system votes on the $k$ results based on the majority voting method and outputs the final classification detection result. Here we propose a heuristic algorithm to maintain strong generalization and sufficient complementarity.

The classification detection algorithm is as follows.

Input:

$C$: a training data set, an $m \times n$ matrix.
$C_{Tp}$: a testing data set, an $l \times n$ matrix.
$k$: the number of data subsets and the number of component classifiers.

Step 1. Split all features into $k$ subsets $F_i$ ($i = 1, 2, \ldots, k$); each feature subset contains $f$ ($f = [n/k]$) features.

Step 2. For $i = 1$ to $k$ do

Step 3. Apply SVD to the training subset, an $m \times f$ matrix.

Step 4. Get the linearly independent column eigenvector matrix $V^T$.

Step 5. Get the new training data subset $C'$ ($C' = C V^T$).

Step 6. Get the new testing data subset $C'_T$ ($C'_T = C_T V^T$).

Step 7. Normalize $C'$ and $C'_T$ by statistical normalization in a batch manner.

Step 8. Input $C'$ and $C'_T$ into the component classifier.

Step 9. Get the classification label.

Step 10. End for

Step 11. Input the results of the $k$ component classifiers into the voting system based on the majority voting method.

Output:

The final label of a testing data record, label = {Normal, DDoS}.

In our algorithm, we use the parallelization principle to select the component classifiers; under this principle, the component classifiers have no strong dependencies on one another. We select the Bagging, Random Forest, and k-NN algorithms as the component classifiers. In Bagging, the CART algorithm is used as the base learner of our heterogeneous classification ensemble model.
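Step 1 of the algorithm can be sketched as a plain partition of feature indices. The text gives only $f = [n/k]$ and does not say how leftover features are treated when $n$ is not divisible by $k$ (as with 41 features and 3 classifiers), so handing the remainder to the first subsets is an assumption of this sketch, as is the function name.

```python
def split_features(n_features, k):
    """Step 1: partition feature indices 0..n-1 into k disjoint subsets.

    Each subset gets f = n // k features; leftover features go to the first
    subsets one at a time (an assumption, not stated in the paper).
    """
    if not 1 <= k <= n_features:
        raise ValueError("k must be between 1 and n_features")
    f, extra = divmod(n_features, k)
    subsets, start = [], 0
    for i in range(k):
        size = f + (1 if i < extra else 0)
        subsets.append(list(range(start, start + size)))
        start += size
    return subsets


subsets = split_features(41, 3)
print([len(s) for s in subsets])  # [14, 14, 13]
```

Each index list can then select the columns of the training and testing matrices that are passed through Steps 3 to 9 for one component classifier.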

4. Experimental Results and Analysis

In this section, we discuss how to apply the heterogeneous classification ensemble model to detecting DDoS attack traffic. We first present the data set and the data pretreatment method used in our experiments. Then the experimental results are given, and we analyze them and make comparisons with the homogeneous models based on the three selected algorithms, with SVD and without SVD (un-SVD). The computer environment used to run our experiments is listed in Table 1.

Table 1: Computer experimental condition.

CPU: Intel Xeon CPU E5-2640 v2 @ 2.00 GHz, 2.00 GHz (2 processors)
Memory: 32 GB
Hard disk: 2 TB
OS: Windows Server 2008 R2 Enterprise
MATLAB: R2013a (8.1.0.604), 64-bit (win64)

In this paper, we use the well-known Knowledge Discovery and Data mining (KDD) Cup 1999 data set [17, 18] to verify our detection model. This data set has been widely applied to research and evaluate network intrusion detection methods [19, 20]. The KDD CUP 1999 data set comprises about five million network traffic records, and it provides a training subset containing 10 percent of the network records and a testing subset. Every record of the data set is described by four types of features: TCP connection basic features (9 features), TCP connection content features (13 features), time-based network traffic statistical characteristics (9 features), and host-based network traffic statistical characteristics (10 features). All 41 features in the four types are shown in Table 2.

In addition, the KDD CUP 1999 data set covers four main categories of attack: DoS, R2L, U2R, and Probing. Because the traffic records of “neptune” and “smurf” for DoS account for more than 99% and 96% of the abovementioned training subset and testing subset, respectively, we choose these two DoS types for evaluating our algorithm and comparing it with the three well-known existing machine learning algorithms in this paper.

4.1. Data Pretreatment. Firstly, because the 10 percent training subset and the “corrected” testing subset of the KDD CUP 1999 data set each include hundreds of thousands of network records, the hardware configuration of our server cannot handle the computation needed to process them in full. We therefore use the built-in “random ()” method in MySQL to randomly select one in every ten records of these training and testing subsets as our data sets. The data sets used in our experiments are listed in Table 3.

Secondly, each network traffic record in KDD CUP 1999 includes information that has been separated into 41 features plus 1 class label [21], and 3 of the features are nonnumeric. In our experiments, we transform these three features into numeric type. The conversion process is as follows:

(i) TCP, UDP, and ICMP in the “protocol_type” feature are marked as 1, 2, and 3, respectively.

(ii) The 70 kinds of “service” for the destination host are sorted by their percentage in the 10 percent training subset. The top three types are ecr_i, private, and http, which together account for over 90%. The ecr_i, private, http, and all other types are marked as 1, 2, 3, and 0, respectively.

(iii) “SF” is marked as 1 and the other ten false connection statuses are marked as 0 in the “flag” feature.

Table 2: All 41 features in the four types.

TCP connection basic features
(1) duration, (2) protocol_type, (3) service, (4) flag, (5) src_bytes, (6) dst_bytes, (7) land, (8) wrong_fragment, (9) urgent

TCP connection content features
(10) hot, (11) num_failed_logins, (12) logged_in, (13) num_compromised, (14) root_shell, (15) su_attempted, (16) num_root, (17) num_file_creations, (18) num_shells, (19) num_access_files, (20) num_outbound_cmds, (21) is_hot_login, (22) is_guest_login

Time-based network traffic statistical characteristics
(23) count, (24) srv_count, (25) serror_rate, (26) srv_serror_rate, (27) rerror_rate, (28) srv_rerror_rate, (29) same_srv_rate, (30) diff_srv_rate, (31) srv_diff_host_rate

Host-based network traffic statistical characteristics
(32) dst_host_count, (33) dst_host_srv_count, (34) dst_host_same_srv_rate, (35) dst_host_diff_srv_rate, (36) dst_host_same_src_port_rate, (37) dst_host_srv_diff_host_rate, (38) dst_host_serror_rate, (39) dst_host_srv_serror_rate, (40) dst_host_rerror_rate, (41) dst_host_srv_rerror_rate
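The three conversion rules (i) to (iii) can be sketched as a small lookup-based encoder. The dictionary and function names are hypothetical, and the lowercase protocol spellings and the example flag value "S0" are assumptions about how the raw records are written.

```python
PROTOCOL_CODES = {"tcp": 1, "udp": 2, "icmp": 3}
SERVICE_CODES = {"ecr_i": 1, "private": 2, "http": 3}


def encode_record(protocol_type, service, flag):
    """Map the three nonnumeric KDD Cup 1999 features to integers."""
    return (
        PROTOCOL_CODES[protocol_type.lower()],  # rule (i)
        SERVICE_CODES.get(service, 0),          # rule (ii): other services -> 0
        1 if flag == "SF" else 0,               # rule (iii)
    )


print(encode_record("icmp", "ecr_i", "SF"))  # (3, 1, 1)
print(encode_record("tcp", "smtp", "S0"))    # (1, 0, 0)
```

An unknown protocol raises a KeyError in this sketch, which makes unexpected input visible instead of silently assigning a code.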

Table 3: Data sets used in our experiments.

Category    Training data set    Testing data set
Normal      9728                 6059
DoS         38800                22209

4.2. Fivefold Cross-Validation. Cross-validation is an effective statistical technique to ensure the robustness of a model. In this paper, to improve the reliability of the experimental data and to verify the availability of our model, a fivefold cross-validation approach is used in our experiments.

The training data set is randomly split into five parts. In turn, we take out one part as the actual training data set and use the other four parts together with the testing data set as the final testing data set. The statistical normalization method described in Section 2.2 is employed as the data statistical test.
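The splitting scheme can be sketched as below. The sketch follows the wording of the text literally (one part for training, the remaining four parts plus the testing data set for evaluation), which differs from the more common convention of training on four parts; the function name, the fixed seed, and the integer stand-ins for records are assumptions.

```python
import random


def fivefold_rounds(train_records, test_records, seed=0):
    """Yield (train_part, evaluation_set) pairs for the five rounds."""
    shuffled = list(train_records)
    random.Random(seed).shuffle(shuffled)
    parts = [shuffled[i::5] for i in range(5)]  # five near-equal disjoint parts
    for i, part in enumerate(parts):
        held_out = [r for j, p in enumerate(parts) if j != i for r in p]
        yield part, held_out + list(test_records)


rounds = list(fivefold_rounds(range(10), range(100, 104)))
print(len(rounds), len(rounds[0][0]), len(rounds[0][1]))  # 5 2 12
```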

4.3. Evaluation Index. Deciding whether network traffic is normal or an attack is a binary classification problem, and we need evaluation indexes to assess it. In this paper, we use three typical indexes to measure our detection model: TNR, accuracy, and precision. TNR denotes the proportion of normal samples in the testing data set that are correctly recognized as normal. It reflects how reliably the detection model discerns normal samples. Accuracy denotes the ratio of the number of correctly classified samples to the total number of samples in the testing data set. It reflects the ability to differentiate normal samples from attack samples. Precision denotes the proportion of true attack samples among all samples recognized as attacks by the detection model in the testing data set. TNR, accuracy, and precision are formulated as follows:

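$$\text{TNR} = \frac{\text{TN}}{\text{FP} + \text{TN}}, \tag{13}$$

$$\text{Accuracy} = \frac{\text{TP} + \text{TN}}{\text{TP} + \text{FP} + \text{TN} + \text{FN}}, \tag{14}$$

$$\text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}}. \tag{15}$$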

The performance of a classification detection model is evaluated by the counts of records for the normal samples and the attack samples. These counts form the confusion matrix [22], which is listed in Table 4, where

(1) TP (True Positive) is the number of attacks correctly classified as attacks,

(2) FP (False Positive) is the number of normal records incorrectly classified as attacks,

(3) TN (True Negative) is the number of normal records correctly classified as normal records,

(4) FN (False Negative) is the number of attacks incorrectly classified as normal records.

Table 4: Confusion matrix.

                   Predicted DoS    Predicted normal    Total
Original DoS       TP               FN                  P
Original normal    FP               TN                  N
Total              P′               N′                  P + N (P′ + N′)
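The three indexes in equations (13) to (15) can be computed directly from the four confusion-matrix counts. The sketch below uses hypothetical counts that are not taken from the experiments; returning NaN for an empty denominator is a choice made here, since the text does not define the indexes in that case.

```python
def detection_metrics(tp, fp, tn, fn):
    """Return (TNR, accuracy, precision) from confusion-matrix counts."""
    total = tp + fp + tn + fn
    tnr = tn / (fp + tn) if (fp + tn) else float("nan")
    accuracy = (tp + tn) / total if total else float("nan")
    precision = tp / (tp + fp) if (tp + fp) else float("nan")
    return tnr, accuracy, precision


# Hypothetical counts, not taken from the paper's experiments.
tnr, accuracy, precision = detection_metrics(tp=90, fp=5, tn=95, fn=10)
print(tnr, accuracy, precision)
```

A classifier that labels every record as normal has TP + FP = 0, so its precision is undefined; such a case may be plotted as zero in a results figure.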

4.4. Experimental Results and Discussion. In this section, our heterogeneous detection model is compared with Random Forest, k-NN, and Bagging, which make up its component classifiers, when each of the three algorithms is used by itself. Our comparisons are made with SVD and without SVD (un-SVD), respectively.

We chose the threshold values based on past experience together with many experiments. In this paper, we finally select eight threshold values to evaluate the performance of our model. Experimental results demonstrate that the TNR, accuracy, and precision of our model are excellent and that the model is more stable than the three individual algorithms in TNR, accuracy, and precision.

In Figure 2(a), Random Forest, k-NN, and Bagging are each processed by SVD, and our detection model is compared with them for TNR. The TNR of our model is about 99.4% for the different threshold values, whereas the TNRs of Random Forest, k-NN, and Bagging are about 99.8%, 99.1%, and 17.8%, respectively. The TNR of our model is therefore very close to that of Random Forest and superior to those of k-NN and Bagging.

In Figure 2(b), Random Forest, k-NN, and Bagging each construct their component classifiers alone without SVD (un-SVD), and our detection model is compared with them for TNR. The TNRs of Random Forest and Bagging are both about 99.9%. The TNR of k-NN falls from 99.6% to 98.1% as the threshold value goes from 25 to 200. The experimental results demonstrate that the TNR of our model is close to those of Random Forest and Bagging and superior to that of k-NN. In addition, the TNR of k-NN is relatively unstable as the threshold value changes.

In Figure 3(a), Random Forest, k-NN, and Bagging are each processed by SVD, and our detection model is compared with them for accuracy. The accuracy of our model is about 99.8%, whereas the accuracies of Random Forest, k-NN, and Bagging are about 99.9%, 21.2%, and 82.3%, respectively. The accuracy of our model is therefore very close to that of Random Forest and superior to those of k-NN and Bagging.

In Figure 3(b), Random Forest, k-NN, and Bagging each construct their component classifiers alone without SVD (un-SVD), and our detection model is compared with them for accuracy. The accuracies of Random Forest and Bagging are both about 99.9%. The accuracy of k-NN falls from 99.89% to 88.48% as the threshold value goes from 25 to 75. The experimental results demonstrate that the accuracy of our model is close to those of Random Forest and Bagging and superior to that of k-NN. In addition, the accuracy of k-NN is relatively unstable as the threshold value changes.

Figure 2: TNR for comparing our model with the other algorithms. (a) TNR comparison based on SVD. (b) TNR comparison based on un-SVD. [Plots omitted; x-axis: threshold value (25 to 200); y-axis: TNR (%); curves: our model, Random Forest, k-NN, Bagging.]

[Figure 3: two line plots of accuracy (%) versus threshold value (25 to 200) for our model, Random Forest, k-NN, and Bagging. (a) Accuracy comparison based on SVD. (b) Accuracy comparison based on un-SVD.]

Figure 3: Accuracy for comparing our model with the other algorithms.

In Figure 4(a), Random Forest, k-NN, and Bagging are each processed by SVD, and our detection model is compared with them in terms of precision. The precision of our model is about 99.84%, whereas the precisions of Random Forest, k-NN, and Bagging are about 99.9%, 0, and 81.6%, respectively. Therefore, the precision of our model is very close to that of Random Forest and is superior to those of k-NN and Bagging.

In Figure 4(b), Random Forest, k-NN, and Bagging each construct their component classifiers alone without SVD (un-SVD), and our detection model is compared with them in terms of precision. The precisions of Random Forest and Bagging are both about 99.98%. The precision of k-NN falls from 99.9% to 99.5% as the threshold value increases from 25 to 75. The experimental results demonstrate that the precision of our model is close to those of Random Forest and Bagging and is superior to that of k-NN. In addition, the precision of k-NN is relatively unstable as the threshold value changes.
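The three measures compared in Figures 2 to 4 can be computed from confusion-matrix counts. The following is a minimal sketch assuming the standard definitions, TNR = TN/(TN + FP), accuracy = (TP + TN)/(TP + TN + FP + FN), and precision = TP/(TP + FP). The function name `detection_metrics` and the counts are hypothetical and are not taken from the experiments.

```python
def detection_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Return TNR, accuracy, and precision as fractions in [0, 1].

    A ratio whose denominator is zero is reported as 0.0 (an assumption
    made here so that the function never raises on degenerate counts).
    """
    total = tp + tn + fp + fn
    return {
        # Share of actual negatives that were classified as negative.
        "tnr": tn / (tn + fp) if (tn + fp) else 0.0,
        # Share of all records that were classified correctly.
        "accuracy": (tp + tn) / total if total else 0.0,
        # Share of predicted positives that are actual positives.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
    }


# Hypothetical counts for illustration only.
metrics = detection_metrics(tp=90, tn=95, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

With this convention, a classifier that never predicts the positive class is reported with a precision of 0 regardless of its TNR.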


[Figure 4: two line plots of precision (%) versus threshold value (25 to 200) for our model, Random Forest, k-NN, and Bagging. (a) Precision comparison based on SVD. (b) Precision comparison based on un-SVD.]

Figure 4: Precision for comparing our model with the other algorithms.

5. Conclusions

Efficient and exact DDoS attack detection is a key problem, given the diversity and complexity of attack traffic in the high-speed Internet environment. In this paper, we study the problem from the perspective of hybrid heterogeneous multiclassifier ensemble learning. Moreover, to obtain stronger generalization and more sufficient complementarity, we propose a heterogeneous detection system model and construct its component classifiers based on the Bagging, Random Forest, and k-NN algorithms. In addition, we design a detection algorithm based on SVD for the heterogeneous classification ensemble model. Experimental results show that our detection method achieves high and very stable TNR, accuracy, and precision. Therefore, our algorithm and model have good detection performance for DDoS attacks.

Competing Interests

The authors declare that they have no competing interests.

Acknowledgments

The work in this paper is supported by the Joint Funds of National Natural Science Foundation of China and Xinjiang (Project U1603261).

References

[1] F. Palmieri, S. Ricciardi, U. Fiore, M. Ficco, and A. Castiglione, "Energy-oriented denial of service attacks: an emerging menace for large cloud infrastructures," The Journal of Supercomputing, vol. 71, no. 5, pp. 1620–1641, 2015.

[2] Q. Yan, F. R. Yu, Q. X. Gong, and J. Q. Li, "Software-defined networking (SDN) and distributed denial of service (DDoS) attacks in cloud computing environments: a survey, some research issues, and challenges," IEEE Communications Surveys & Tutorials, vol. 18, no. 1, pp. 602–622, 2016.

[3] J. Luo, X. Yang, J. Wang, J. Xu, J. Sun, and K. Long, "On a mathematical model for low-rate shrew DDoS," IEEE Transactions on Information Forensics and Security, vol. 9, no. 7, pp. 1069–1083, 2014.

[4] P. Xiao, W. Y. Qu, H. Qi, and Z. Y. Li, "Detecting DDoS attacks against data center with correlation analysis," Computer Communications, vol. 67, pp. 66–74, 2015.

[5] A. Saied, R. E. Overill, and T. Radzik, "Detection of known and unknown DDoS attacks using artificial neural networks," Neurocomputing, vol. 172, pp. 385–393, 2016.

[6] S. S. Mao, L. Xiong, L. C. Jiao, S. Zhang, and B. Chen, "Isomerous multiple classifier ensemble via transformation of the rotating forest," Journal of Xidian University, vol. 41, no. 5, pp. 48–53, 2014.

[7] L. I. Kuncheva and C. J. Whitaker, "Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy," Machine Learning, vol. 51, no. 2, pp. 181–207, 2003.

[8] J. J. Rodríguez, L. I. Kuncheva, and C. J. Alonso, "Rotation forest: a new classifier ensemble method," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 28, no. 10, pp. 1619–1630, 2006.

[9] Z. L. Fu and X. H. Zhao, "Dynamic combination method of classifiers and ensemble learning algorithms based on classifiers combination," Journal of Sichuan University (Engineering Science Edition), vol. 43, no. 2, pp. 58–65, 2011.

[10] L. I. Kuncheva, "That elusive diversity in classifier ensembles," in Proceedings of the 1st Iberian Conference on Pattern Recognition and Image Analysis (ibPRIA '03), pp. 1126–1138, Springer, Mallorca, Spain, June 2003.

[11] L. I. Kuncheva and J. J. Rodríguez, "Classifier ensembles with a random linear oracle," IEEE Transactions on Knowledge and Data Engineering, vol. 19, no. 4, pp. 500–508, 2007.


[12] J. F. Díez-Pastor, J. J. Rodríguez, C. García-Osorio, and L. I. Kuncheva, "Random balance: ensembles of variable priors classifiers for imbalanced data," Knowledge-Based Systems, vol. 85, pp. 96–111, 2015.

[13] L. Breiman, "Bagging predictors," Machine Learning, vol. 24, no. 2, pp. 123–140, 1996.

[14] L. Breiman, "Random forests," Machine Learning, vol. 45, no. 1, pp. 5–32, 2001.

[15] D. T. Larose, "k-nearest neighbor algorithm," in Discovering Knowledge in Data: An Introduction to Data Mining, pp. 90–106, 2nd edition, 2005.

[16] W. Wang, X. Zhang, S. Gombault, and S. J. Knapskog, "Attribute normalization in network intrusion detection," in Proceedings of the 10th International Symposium on Pervasive Systems, Algorithms, and Networks (I-SPAN '09), pp. 448–453, IEEE, Kaohsiung, Taiwan, December 2009.

[17] R. Lippmann, J. W. Haines, D. J. Fried, J. Korba, and K. Das, "The 1999 DARPA off-line intrusion detection evaluation," Computer Networks, vol. 34, no. 4, pp. 579–595, 2000.

[18] J. McHugh, "Testing intrusion detection systems: a critique of the 1998 and 1999 DARPA intrusion detection system evaluations as performed by Lincoln Laboratory," ACM Transactions on Information and System Security (TISSEC), vol. 3, no. 4, pp. 262–294, 2000.

[19] K. C. Khor, C. Y. Ting, and S. Phon-Amnuaisuk, "A cascaded classifier approach for improving detection rates on rare attack categories in network intrusion detection," Applied Intelligence, vol. 36, no. 2, pp. 320–329, 2012.

[20] P. Prasenna, R. K. Kumar, A. V. T. R. Ramana, and A. Devanbu, "Network programming and mining classifier for intrusion detection using probability classification," in Proceedings of the International Conference on Pattern Recognition, Informatics and Medical Engineering (PRIME '12), pp. 204–209, IEEE, Tamilnadu, India, March 2012.

[21] C. Bae, W. C. Yeh, M. A. M. Shukran, Y. Y. Chung, and T. J. Hsieh, "A novel anomaly-network intrusion detection system using ABC algorithms," International Journal of Innovative Computing, Information and Control, vol. 8, no. 12, pp. 8231–8248, 2012.

[22] C. Guo, Y. Zhou, Y. Ping, Z. Zhang, G. Liu, and Y. Yang, "A distance sum-based hybrid method for intrusion detection," Applied Intelligence, vol. 40, no. 1, pp. 178–188, 2014.



[Figure 1: block diagram with three modules. Data set pretreatment module: the primitive training data set is partitioned into disjoint training subsets 1 to k, a rotation matrix based on SVD is built, and the primitive testing data set is partitioned into new testing subsets 1 to k. Heterogeneous multiclassifier detection module: component classifiers 1 to k produce classification results 1 to k. Classification result acquisition module: a voting system based on the majority voting method gives the final classification detection result.]

Figure 1: Hybrid heterogeneous multiclassifier ensemble classification model.

where the $m$-dimensional vector $(h_i^1(x), h_i^2(x), \ldots, h_i^m(x))$ denotes the prediction output of $h_i$ on the sample $x$, and $h_i^j(x)$ is the output on the class label $P_i$.

As shown in Figure 1, the proposed hybrid heterogeneous multiclassifier ensemble classification model includes three primary modules: the Data Set Pretreatment Module, the Heterogeneous Multiclassifier Detection Module, and the Classification Result Acquisition Module. In the Data Set Pretreatment Module, the primitive training data set is first split into $k$ disjoint training data subsets based on SVD, and the new training data subsets are generated by a linearly independent base transformation. Secondly, the primitive testing data set is also split into $k$ data subsets corresponding to the features of the new training data subsets. Finally, the new testing data subsets are generated by Rotation Forest. In the Heterogeneous Multiclassifier Detection Module, the $k$ new training data subsets and testing data subsets are used as the input of the $k$ component classifiers, which produce $k$ classification detection results. In the Classification Result Acquisition Module, the voting system votes on the $k$ results based on the majority voting method, and the final classification detection result is obtained.
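The last step of the model, majority voting over the $k$ classification results, can be illustrated with a short sketch. It assumes NumPy and integer class labels starting at 0. The function name `majority_vote`, the tie-breaking rule (smallest label wins), and the vote matrix are illustrative choices, not the authors' implementation.

```python
import numpy as np


def majority_vote(predictions: np.ndarray) -> np.ndarray:
    """Combine k classifiers' labels into one label per sample.

    predictions has shape (k, n_samples) and holds integer class labels
    >= 0. Ties go to the smallest label, because argmax returns the
    first maximum (an assumption; the text does not specify tie-breaking).
    """
    n_classes = int(predictions.max()) + 1
    # counts[c, s] = number of classifiers that voted class c for sample s
    counts = np.stack(
        [(predictions == c).sum(axis=0) for c in range(n_classes)]
    )
    return counts.argmax(axis=0)


# Hypothetical outputs of k = 3 component classifiers on 4 records.
votes = np.array([
    [0, 1, 1, 0],  # component classifier 1
    [0, 1, 0, 0],  # component classifier 2
    [1, 1, 1, 0],  # component classifier 3
])
final = majority_vote(votes)
print(final)
```

Using an odd number of component classifiers avoids ties in the two-class case.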

2.2. Problem Formulation. We assume that an $m \times n$ matrix denotes a training data set, where $m$ represents the number of network traffic records and $n$ represents the number of characteristic attributes in a record. In addition, $D_i$ denotes the $i$th classifier in the heterogeneous ensemble. Here, all component classifiers can be trained in parallel. The full feature set of the training data set is split into $k$ disjoint subsets. Each feature subset contains $f$ ($f = [n/k]$) features. The SVD method is applied to every split subset.
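The feature split and per-subset SVD described above can be sketched as follows. This is a minimal illustration assuming NumPy. The function name `svd_rotate_subsets`, the random assignment of columns to subsets, the seed, and the use of the right singular vectors as the rotation are assumptions made for the example and may differ from the authors' algorithm.

```python
import numpy as np


def svd_rotate_subsets(X: np.ndarray, k: int, seed: int = 0):
    """Split the n feature columns of X (m x n) into k disjoint subsets
    and rotate each subset onto its right singular vectors.

    Returns a list of (column_indices, rotation, rotated_subset) tuples.
    """
    m, n = X.shape
    rng = np.random.default_rng(seed)
    # Disjoint partition of the column indices. Random assignment is an
    # assumption; the text only states that the subsets are disjoint.
    groups = np.array_split(rng.permutation(n), k)
    result = []
    for cols in groups:
        sub = X[:, cols]
        # sub = U @ diag(s) @ vt; the rows of vt are orthonormal.
        _, _, vt = np.linalg.svd(sub, full_matrices=False)
        result.append((cols, vt, sub @ vt.T))
    return result


# Hypothetical data: m = 8 records, n = 6 features, k = 3 subsets (f = 2).
X = np.random.default_rng(0).normal(size=(8, 6))
rotated = svd_rotate_subsets(X, k=3)
for cols, vt, new_subset in rotated:
    print(sorted(cols.tolist()), new_subset.shape)
```

Each rotated subset could then be passed to its own component classifier. Reusing the stored rotation on the matching columns of the testing data is one plausible reading of the pretreatment step.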